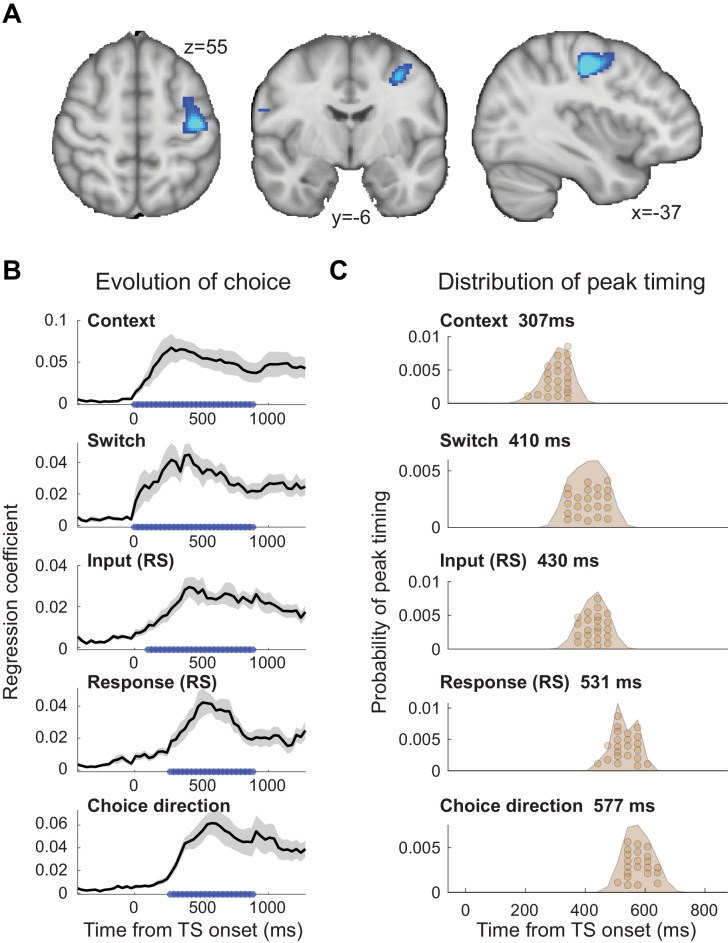

Figure 2. The evolution of choice in human premotor cortex.

(A) Dorsal premotor cortex (PMd) was the a priori region of interest for this study. Indeed, source localization of a contrast between trials containing any input or response adaptation and trials containing no adaptation revealed a cluster involving M1 and left PMd (group contrast, whole-brain cluster-level FWE-corrected at p<0.05 after initial thresholding at p<0.001; PMd peak coordinate: x=−37, y=−6, z=55). Importantly, this contrast was agnostic and orthogonal to any differences between input and response adaptation, relevant and irrelevant input adaptation, or pre- vs. post-training effects (including any timing differences, as beamforming was performed in the time window [−250, 750] ms). (B) The time-resolved nature of magnetoencephalography (MEG), combined with a selective suppression of different choice features, allowed us to track the evolution of the choice on a millisecond timescale. Coefficients from a linear regression performed on data from left PMd demonstrate an early representation of context (motion or colour) and switch (attended dimension same as or different from the test stimulus [TS]) from ~8 ms (the sliding window centred on 8 ms contains 150 ms of data, from [−67, 83] ms). The representation of inputs, as indexed using repetition suppression (RS; whether or not the input feature, colour or motion, was repeated), emerged from around 108 ms. Finally, the motor response, again indexed using RS (same finger used to respond to the adaptation stimulus [AS] and the TS or not), and TS choice direction (left or right, unrelated to RS) were processed from 275 ms. *p<0.001; error bars denote SEM across participants; black line denotes group average. (C) The distribution of individual peak times across the 22 participants directly reflects this evolution of the choice process. In particular, it shows significant differences in the processing of input and response, consistent with premotor cortex transforming sensory inputs into a motor response.
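The sliding-window regression described in (B) can be illustrated with a minimal sketch. This is not the authors' analysis code: the function names (`window_bounds`, `sliding_window_betas`), the averaging of the signal within each window, the intercept term, and the synthetic data are all assumptions made for illustration. Only the 150 ms window width and the [−250, 750] ms time range come from the caption.

```python
import numpy as np

def window_bounds(centre_ms, width_ms=150.0):
    """Return (start, end) of a window of width_ms centred on centre_ms."""
    half = width_ms / 2.0
    return centre_ms - half, centre_ms + half

def sliding_window_betas(data, design, times, centres, width_ms=150.0):
    """Hypothetical helper: per-window linear regression coefficients.

    data    : (n_trials, n_times) source time courses from one region
    design  : (n_trials, n_regressors) trial-wise regressors
    times   : (n_times,) sample times in ms
    centres : window centres in ms
    Returns an (n_centres, n_regressors) array of coefficients.
    """
    # Assumption: an intercept column is included and then discarded.
    X = np.column_stack([np.ones(design.shape[0]), design])
    betas = np.empty((len(centres), design.shape[1]))
    for i, centre in enumerate(centres):
        start, end = window_bounds(centre, width_ms)
        mask = (times >= start) & (times <= end)
        # Assumption: the signal is averaged across the window per trial.
        y = data[:, mask].mean(axis=1)
        coef, *_ = np.linalg.lstsq(X, y, rcond=None)
        betas[i] = coef[1:]
    return betas

# Synthetic demonstration: regressor 0 affects the signal from 100 ms onward,
# regressor 1 has no effect at any time.
rng = np.random.default_rng(0)
times = np.arange(-250.0, 751.0, 1.0)
design = rng.choice([-1.0, 1.0], size=(200, 2))
data = 0.1 * rng.standard_normal((200, times.size))
data[:, times >= 100] += 2.0 * design[:, [0]]

betas = sliding_window_betas(data, design, times, centres=[-100.0, 400.0])
print(window_bounds(8.0))  # window centred on 8 ms
print(betas)
```

With a 150 ms window, a centre of 8 ms spans −67 to 83 ms, matching the interval given in the caption. Because each coefficient pools data from the whole window, an effect can appear at a window centre earlier than its true onset, which is why the caption reports the window extent alongside the ~8 ms estimate.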