Abstract

Previous work has shown that total variation superiorization (TVS) improves reconstructed image quality in proton computed tomography (pCT). The structure of the TVS algorithm has evolved since then and this work investigated if this new algorithmic structure provides additional benefits to pCT image quality. Structural and parametric changes introduced to the original TVS algorithm included: (1) inclusion or exclusion of TV reduction requirement, (2) a variable number, N, of TV perturbation steps per feasibility-seeking iteration, and (3) introduction of a perturbation kernel 0 < α < 1. The structural change of excluding the TV reduction requirement check tended to have a beneficial effect for 3 ≤ N ≤ 6 and allows full parallelization of the TVS algorithm. Repeated perturbations per feasibility-seeking iterations reduced total variation (TV) and material dependent standard deviations for 3 ≤ N ≤ 6. The perturbation kernel α, equivalent to α = 0.5 in the original TVS algorithm, reduced TV and standard deviations as α was increased beyond α = 0.5, but negatively impacted reconstructed relative stopping power (RSP) values for α > 0.75. The reductions in TV and standard deviations allowed feasibility-seeking with a larger relaxation parameter λ than previously used, without the corresponding increases in standard deviations experienced with the original TVS algorithm. This work demonstrates that the modifications related to the evolution of the original TVS algorithm provide benefits in terms of both pCT image quality and computational efficiency for appropriately chosen parameter values.

Keywords: feasibility-seeking algorithms, image reconstruction, perturbations, proton computed tomography (pCT), superiorization, total variation superiorization (TVS)

I. Introduction

Proton computed tomography (pCT) is a relatively new imaging modality that has been developed from early beginnings [1], [2], [3], [4] towards a recent preclinical realization of a pCT scanner [5], [6], [7]; a comprehensive review of pCT development can be found in [8]. The main motivation for pCT has been to improve the accuracy of proton therapy dose planning by providing more accurate maps of relative stopping power (RSP) with respect to water, which describes how protons lose energy in human tissues relative to water. The same method can also be used to image the patient immediately before treatment to verify the accuracy of the treatment plan about to be delivered. Proton therapy, like therapy with other heavy charged particles, e.g., carbon ions, is very susceptible to changes in tissue RSP, and small differences of a few percent, both random and systematic, can lead to range errors exceeding the desired limit of 1-2 mm [9]. Thus, the planner of proton and ion therapy must increase margins around the target, which leads to unwanted exposure of normal tissues to high dose.

The faithful reconstruction of proton RSP maps, in terms of accuracy and reproducibility, is an important part of the successful clinical implementation of pCT. The approach that has been selected as the most promising in recent years, although technologically demanding, is to track individual protons through the patient and to predict their most likely path (MLP) [10], [11] in addition to measuring the energy loss of each proton and converting it to water-equivalent pathlength (WEPL). This has led to pCT reconstruction algorithms that are based on solving large and sparse linear equation systems, where each equation has the linear combination of intersection lengths of tracked protons through individual object voxels and the unknown RSP of those voxels on the left-hand side of the equation and the measured WEPL on the right-hand side of the equation. A solution of such large systems can be found with algorithms using projections onto convex sets and solving them iteratively as shown previously [12]. The noise content of the reconstructed images depends on many factors, such as the thickness of the object, the number of protons used in the image formation, and the details of the iterative algorithm, such as the number of iterations performed and the relaxation parameter chosen.

The superiorization method (SM) is another relatively recent development that has found its place between feasibility-seeking and constrained optimization in medical physics applications [13]. The superiorization method has also been tested as a technique to improve the image quality, in particular the noise properties, of pCT images when combined with the diagonally-relaxed orthogonal projections (DROP) algorithm [14]. Superiorization reduces, not necessarily minimizes, the value of a target function while seeking constraints-compatibility. This is done by taking a solely feasibility-seeking algorithm, analyzing its perturbation resilience, and proactively perturbing its iterates accordingly to steer them toward a feasible point with reduced value of the target function. When the perturbation steps are computationally efficient, this enables generation of a superior result with approximately the same computation time and efficiency (computational cost) as that of the original feasibility-seeking algorithm.

The mathematical principles of the SM over general consistent “problem structures” with the notion of bounded perturbation resilience were formulated in [15]. The framework of the SM was extended to the inconsistent case by using the notion of strong perturbation resilience in [16], [17]. In [17], the efficacy of the SM was also demonstrated by comparing it with the performance of the projected subgradient method for constrained minimization problems.

A comprehensive overview of the state of the art and current research on superiorization appears in our continuously updated bibliography Internet page, which currently contains 82 items [18]. Research works in this bibliography include a variety of reports ranging from new applications to new mathematical results for the foundation of superiorization. A special issue entitled: “Superiorization: Theory and Applications” of the journal Inverse Problems appeared in [19].

Recently published works also attest to the advantages of the superiorization methodology in x-ray CT image reconstruction. These include reconstruction of CT images from sparse-view and limited-angle polyenergetic data [20], statistical tomographic image reconstruction [21], CT with total variation and with shearlets [22], and superiorization-based multi-energy CT image reconstruction [23].

In this work, we report on improvements in noise properties (total variation, standard deviation of regions of interest in different materials) and computational efficiency when applying novel modifications of superiorization to pCT reconstruction.

II. Motivation

Iterative projection methods seeking feasible solutions have been shown to be an effective image reconstruction technique for pCT [24], but reconstructed images exhibit local RSP fluctuations that cannot be removed by the reconstruction process alone. Inelastic electronic and nuclear events result in a statistical distribution of energy loss and, consequently, of the WEPL values calculated from measurements. These statistical variations in WEPL manifest in the reconstructed image as correlated localized fluctuations in the reconstructed RSP values. Although iterative reconstruction algorithms [12] are less sensitive to these variations than reconstruction transform methods, such as filtered backprojection (FBP) [25], [26], there is a propagation and amplification of WEPL uncertainty with successive iterations. Hence, although accuracy tends to increase with each iteration, as reconstruction nears convergence, updates of the solution from subsequent iterations are increasingly dominated by growing fluctuations. Thus, beyond a certain number of iterations, image quality begins to degrade, placing a limit on the maximum number of useful iterations and preventing steady-state convergence. WEPL uncertainty is inherent in the physical process and cannot be avoided, but techniques have been developed to reduce fluctuations and limit their propagation during iterative reconstruction. Given the amplification of uncertainty in the iterative process, any reduction in local RSP variations may lead to improved convergence behavior and, therefore, increase the accuracy of reconstructed RSP values. Therefore, the current work focused on further reduction of the noise content in the overall image as well as in certain regions of defined RSP.

The two measures that were adopted to quantify the prevalence and magnitude of these RSP fluctuations are total variation (TV) and standard deviation. For an introduction to TV for image analysis see, e.g., [27]. Standard deviation is a commonly used measure of variability around the mean in statistics. In image analysis, it is often employed to characterize the amount of fluctuation present in a region of interest that is known to contain a homogeneous material. These measures provide a basis for comparing the effectiveness of techniques developed to address the noise problem in iterative image reconstruction. Total variation superiorization (TVS) is a technique for reducing image noise content without reducing the sharpness of edges at material boundaries. TV superiorization consists of repeated steepest descent steps of TV interlaced between iterations of a feasibility-seeking algorithm.
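As an illustration of the TV measure, the following is a minimal sketch that assumes the common isotropic discrete form of TV for a two-dimensional image (the sum over pixels of the local gradient magnitude). The precise definition used in this work is the one given in [27]; the function name `total_variation` is hypothetical and is not taken from the reconstruction code.

```python
import numpy as np


def total_variation(image: np.ndarray) -> float:
    """Isotropic discrete TV of a 2D image (assumed form, see [27]).

    Each pixel contributes the Euclidean norm of its forward differences
    along the two image axes; the last row and column are skipped because
    they have no forward neighbor.
    """
    d_rows = image[1:, :-1] - image[:-1, :-1]  # forward difference along axis 0
    d_cols = image[:-1, 1:] - image[:-1, :-1]  # forward difference along axis 1
    return float(np.sqrt(d_rows**2 + d_cols**2).sum())
```

A homogeneous image has zero TV, whereas a single sharp edge contributes in proportion to its length and height, which is why TV responds to both noise and material boundaries.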

In pCT reconstruction, feasibility-seeking tends to accentuate RSP variations present due to WEPL uncertainty. Whereas this sharpens edges between different material regions, it also results in an amplification of RSP fluctuations during iterative image reconstruction. Performing TV reduction steps between consecutive feasibility-seeking iterations slows the growth of RSP variations. This permits more feasibility-seeking iterations before fluctuations grow to dominate updates of the solution. Hence, although the reduction in TV is itself an important aspect of TVS, another important aspect is the increased number of useful iterations made possible by the reduction in the amplification of RSP fluctuations.

III. Methods

A. TVS Algorithms

The efficacy of TVS for image reconstruction in pCT has been demonstrated in previous work [14]. In recent years, the algorithmic structure of the superiorization method has undergone some evolution in ways that offer several potential benefits in pCT. The details of this evolution can be found in the Appendix of [28], titled “The algorithmic evolution of superiorization”. In addition, there were certain aspects of the original TVS algorithm, here referred to as OTVS, that had been proposed but were not previously investigated in its application to pCT.

With the new version of the TVS algorithm, here referred to as NTVS, we investigated both the structural changes and aspects previously not investigated of the OTVS algorithm. The notation and other algorithmic details of the NTVS algorithm can be found in Appendices A and B. The definition of the OTVS algorithm investigated here and in previous work is provided in this notation in Section I of the Supplemental Materials1 of this manuscript.

B. NTVS Algorithm

The NTVS algorithm investigated in this work combines properties that were scattered among previous works on TVS in x-ray CT, see the Appendix of [28]. These properties, listed next, have not previously been combined in a single algorithm, as we do here, for either x-ray CT or pCT.

Exclusion of the TV reduction verification step (step (14) of the NTVS algorithm in Appendix B).

Usage of powers of the perturbation kernel α to control the step-sizes βk in the TV perturbation steps.

Incorporation of the user-chosen integer N (step (8) of the NTVS algorithm in Appendix B) that specifies the number of TV perturbation steps between consecutive feasibility-seeking iterations.

Incorporation of a new formula for calculating the power $\ell_k$, $\ell_k = \mathrm{rand}(k, \ell_{k-1})$, used to calculate the step-size $\beta_k = \alpha^{\ell_k}$ at iteration k of feasibility-seeking (step (6) of the NTVS algorithm in Appendix B).

The step verifying the reduction of TV (step (10) of the OTVS algorithm; see Section I of the Supplemental Materials1) is not time consuming, but such decision-controlled branches present their own challenges with respect to computational efficiency. Although there are technically a few computations with data dependencies (e.g., norm calculations), in each case, these can either be rearranged/reformulated or simply repeated separately to generate data independent calculations, making parallel computation of the algorithm possible. Hence, if the branching introduced by the TV reduction verification can be removed without compromising image quality, the NTVS algorithm can be incorporated into the existing parallelization scheme, providing up to a 30% reduction in sequential operation count (computation time) and eliminating the repeated perturbations until a reduced TV is achieved (computation time and efficiency). This change is similar to, but distinct from, the investigations performed by Penfold et al. [14] in developing the OTVS algorithm for application in pCT reconstruction, in which they found that the computationally expensive feasibility proximity check step of the classical TVS algorithm [29], [30], [31] could safely be removed. Inclusion or exclusion of such checks not only affects computational efficiency but can also have a significant impact on image quality. Hence, the formulation of the NTVS algorithm presented in this work permits exclusion of the TV reduction check by demonstrating the surprising result that its removal has a positive impact on reconstructed image quality in addition to its computational benefits. The algorithm representing NTVS with the TV reduction requirement included is defined in Appendix B, but this is provided only for reference purposes and is not intended for use.

The OTVS algorithm, initializing TVS with β0 = 1 and simply halving the perturbation magnitude each time through the TV perturbation loop, prevented access to one of the most influential variables of TVS: the perturbation kernel α. With the magnitude of the perturbations given by $\beta_k = \alpha^{\ell_k}$, convergence is maintained by requiring 0 < α < 1. The primary purpose of α is to control the rate at which βk converges to zero. In OTVS, β0 = 1 and α = 0.5 result in a relatively modest initial perturbation and a rapidly decreasing β such that little to no perturbation is applied after the first few feasibility-seeking iterations. Hence, OTVS perturbations applied after subsequent feasibility-seeking iterations are unlikely to have a meaningful impact on the amplification of RSP variations. This results in an overall under-utilization of TV perturbations. Thus, NTVS provides direct control of α, and its performance for various values of α was investigated in this work.
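To make the dependence of the decay rate on α concrete, the short sketch below counts how many successive multiplications by α are needed before the step-size, starting from 1, falls below a given threshold. The function name and the threshold value are illustrative assumptions, not quantities used in this work.

```python
def steps_until_below(alpha: float, threshold: float) -> int:
    """Smallest exponent l such that alpha**l < threshold, for 0 < alpha < 1."""
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must satisfy 0 < alpha < 1")
    exponent, beta = 0, 1.0
    while beta >= threshold:
        beta *= alpha      # one more perturbation step: beta = alpha**exponent
        exponent += 1
    return exponent


# Hypothetical threshold of 1e-3, chosen only for illustration.
halving = steps_until_below(0.5, 1e-3)    # OTVS-like halving of the step-size
slower = steps_until_below(0.95, 1e-3)    # largest alpha investigated in this work
```

With α = 0.5 the step-size drops below 10^-3 after 10 steps (0.5^10 ≈ 0.00098), whereas with α = 0.95 more than 100 steps are required, which illustrates why perturbations remain meaningful for many more iterations as α approaches 1.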

With the ability to increase the perturbation kernel α, larger reductions in TV can be generated; this also produces slower-decaying perturbations, which may not be desired. Alternatively, larger reductions in TV can also be generated by applying perturbations multiple times per feasibility-seeking iteration without increasing the magnitude of individual perturbations. Hence, NTVS introduces a variable N controlling the number of repetitions of TV perturbations between feasibility-seeking iterations.

Since the exponent ℓ increases after each of the N applied perturbations, reducing the perturbation coefficient $\beta_k = \alpha^{\ell}$, an increase in N causes the perturbation magnitude to converge to zero earlier in reconstruction. To preserve meaningful perturbations in later iterations, the exponent ℓ is adjusted between feasibility-seeking iterations by decreasing it to a random integer between its current (potentially large) value and the (potentially much smaller) iteration number k, i.e., $\ell_k = \mathrm{rand}(k, \ell_{k-1})$. This update was suggested and justified in [32, page 38], [33, page 36] and subsequently used in [34] for maximum likelihood expectation maximization (MLEM) algorithms and in the linear superiorization (LinSup) algorithm [28, Algorithm 4]. Although the decrease of ℓk is random within a bounded range, on average, the corresponding perturbation coefficient β experiences a sizeable increase. For N = 1, the difference between ℓk−1 and k is only nonzero when the TV reduction requirement is included and at least one perturbation did not reduce TV; when the TV reduction requirement is excluded, ℓk−1 and k are always equal and, therefore, ℓk is never decreased. Since ℓk is incremented after each of the N perturbations, the random decrease in ℓk becomes increasingly important as N increases. This random decrease slows the rate at which βk converges towards zero while preserving the convergence property, given that the iteration number k, which increases sequentially, is set as the lower limit.
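The exponent bookkeeping described above can be sketched as follows for the case in which the TV reduction requirement is excluded. This is a minimal illustration under stated assumptions, not the NTVS algorithm of Appendix B: iterations are assumed to be indexed from k = 0, the exponent is assumed to start at 0, and the names `exponent_schedule` and `step_size` are hypothetical.

```python
import random


def exponent_schedule(iterations: int, n_perturbations: int, seed=None):
    """Exponents used for each perturbation, grouped by feasibility-seeking iteration.

    Assumes the TV reduction requirement is excluded, so the exponent is
    incremented exactly once per perturbation.
    """
    rng = random.Random(seed)
    ell = 0  # assumed initial exponent
    schedule = []
    for k in range(iterations):
        # ell_k = rand(k, ell_{k-1}): random integer in the closed range [k, ell]
        ell = rng.randint(k, ell)
        used = []
        for _ in range(n_perturbations):
            used.append(ell)  # this perturbation uses beta = alpha**ell
            ell += 1
        schedule.append(used)
    return schedule


def step_size(alpha: float, ell: int) -> float:
    """Perturbation coefficient beta = alpha**ell."""
    return alpha**ell
```

For `n_perturbations = 1` the schedule reduces to ℓk = k, in agreement with the observation that ℓk is never decreased in that case; for larger N the random draw pulls the exponent back towards k at the start of each feasibility-seeking iteration.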

C. Input Data Sets

The preliminary investigations of the NTVS algorithm were performed using a simulated pCT data set to quantify the variations generated by the random decrease in ℓk between feasibility-seeking iterations (step (6) of the NTVS algorithm in Appendix B). The simulated data set of the Catphan® CTP404 phantom module (The Phantom Laboratory Incorporated, Salem, NY, USA) was generated using the simulation toolkit Geant4 [35] and contained approximately 120 million proton histories. The definitive investigations were then performed for two experimental data sets: (1) a scan obtained with an experimental pCT scanner [5] containing approximately 250 million proton histories of the same Catphan® CTP404 phantom and (2) an experimental pCT scan of a pediatric anthropomorphic head phantom (model HN715, CIRS, Norfolk, VA, USA) containing approximately the same number of proton histories. All pCT data sets were generated with the phantom rotating on a fixed horizontal proton beam line producing a cone beam (simulated data set) or a rectangular field using a magnetically wobbled beam spot (experimental data sets). The simulated data set was generated with 90 fixed angular step intervals of 4 degrees ranging from 0 to 356 degrees, and the experimental data sets were generated from a continuous range of projection angles between 0 and 360 degrees.

The Catphan® CTP404 phantom is a 15 cm diameter by 2.5 cm tall cylinder composed of an epoxy material with an RSP ≈ 1.144; because this value was not known at the time when the simulated data set was created, it was explicitly set to RSP = 1.0 (water) in the Geant4 simulation. The theoretical and experimentally measured RSP of the materials of the phantom are listed in Table I. The phantom has three geometric types of contrasting material inserts embedded with the centers of each arranged in evenly spaced circular patterns of varying diameter d as follows:

d = 30 mm: five acrylic spheres of diameter 2, 4, 6, 8, and 10 mm, with the center of each lying on a circular cross section midway along the phantom’s axis.

mm: four 3 mm diameter rods, 3 × air and 1 × Teflon, running the length of the phantom.

d = 120 mm: eight 12.2 mm diameter cylindrical holes, six filled with materials of known composition2 and two left empty (air-filled), running the length of the phantom.

TABLE I:

RSP of the material inserts for the simulated and experimental Catphan® CTP404 data sets

| | Air | PMP | LDPE | Epoxy | Polystyrene | Acrylic | Delrin | Teflon |
|---|---|---|---|---|---|---|---|---|
| Simulated | 0.0013 | 0.877 | 0.9973 | 1.024 | 1.0386 | 1.155 | 1.356 | 1.828 |
| Experimental | 0.0013 | 0.883 | 0.979 | 1.144 | 1.024 | 1.160 | 1.359 | 1.79 |

In the experimental data, the acrylic spheres (RSP ≈ 1.160) cannot be discerned from the surrounding epoxy material, which has nearly the same RSP (≈ 1.144).

D. Data Preprocessing and Implementation Details of Image Reconstruction

Details of the pCT data preprocessing, calibration, and image reconstruction have been presented previously [36], [37]. For the purposes of this work, feasibility-seeking was performed using the DROP algorithm of [38] with blocks containing 3200 (simulated data set) and 25,600 (experimental data set) proton histories. The smaller block-size was chosen for the simulated data set, which had only about half as many histories as the larger experimental data sets and thus more noise. In general, smaller block sizes further accentuate noise during the reconstruction, and would potentially benefit more from NTVS. The intent of the preliminary investigation with the simulated data set was twofold: (1) to provide a larger opportunity for improvement with NTVS to better assess its benefits for more noisy data sets and (2) to quantify the magnitude of random variations in performance arising from the random decreases in ℓk. The random number generator used to determine the random decrease in ℓk between feasibility-seeking iterations was assigned a random seed based on the Julian time at execution, yielding a different set of random decreases in ℓk each time reconstruction is performed.

The experimental data set, on the other hand, was used to determine the impact of NTVS in a realistic reconstruction scenario. The block-size was still chosen from the smaller end of an acceptable range of block-sizes because, although smaller blocks are more sensitive to noise, they also provide a greater material differentiation capability and an opportunity to assess the maximum potential benefits of NTVS for realistic experimental data sets.
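For readers unfamiliar with block-iterative projection methods, the following is a minimal dense-matrix sketch of a single block update, assuming the standard form of DROP in which the summed row projections of a block are scaled component-wise by the inverse of the number of block rows that intersect each voxel. It is not the GPU implementation used in this work; the function name and the dense representation are assumptions made for illustration, and [38] remains the authoritative description of the algorithm.

```python
import numpy as np


def drop_block_update(x, a_block, b_block, relaxation):
    """One DROP-style update of image vector x using one block of histories.

    a_block: (histories, voxels) array of intersection lengths.
    b_block: (histories,) array of measured WEPL values.
    """
    row_norms_sq = np.einsum("ij,ij->i", a_block, a_block)
    residuals = b_block - a_block @ x
    # Skip rows with zero norm (histories that intersect no voxel).
    valid = row_norms_sq > 0
    weights = np.zeros_like(residuals, dtype=float)
    weights[valid] = residuals[valid] / row_norms_sq[valid]
    # Sum of the individual orthogonal projection steps over the block.
    update = a_block.T @ weights
    # Diagonal relaxation: divide by the number of rows touching each voxel.
    counts = np.count_nonzero(a_block, axis=0)
    scale = 1.0 / np.maximum(counts, 1)
    return x + relaxation * scale * update
```

The component-wise scaling is what distinguishes this update from a plain sum of projections: a voxel traversed by many histories in the block receives their averaged correction, so larger blocks do not produce proportionally larger updates.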

Image reconstruction was performed within a 20 × 20 × 5 cm³ volume with each voxel representing a volume of 1.0 × 1.0 × 2.5 mm³, yielding a 200 × 200 image matrix for each slice.
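The image dimensions follow directly from the stated volume and voxel sizes, as the short calculation below shows. The variable names are illustrative only; the number of slices is derived from the stated dimensions rather than quoted from the text.

```python
# Reconstruction volume and voxel size, both expressed in millimetres.
volume_mm = (200.0, 200.0, 50.0)   # 20 x 20 x 5 cm
voxel_mm = (1.0, 1.0, 2.5)

# Number of voxels along each axis.
grid_shape = tuple(round(v / s) for v, s in zip(volume_mm, voxel_mm))

# Total number of unknown RSP values in the linear system.
total_voxels = grid_shape[0] * grid_shape[1] * grid_shape[2]
```

Under these dimensions each slice is a 200 × 200 matrix and the 5 cm axial extent corresponds to 20 slices of 2.5 mm thickness, i.e., 800,000 unknowns in total.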

E. Reconstruction Parameter Space

The following describes the choices for reconstruction parameters that were systematically investigated in this work. Note that for the purpose of the investigations performed in this work, each of the following parameters of the parameter space remained constant for the duration of a particular reconstruction.

Inclusion or exclusion of TV reduction requirement: The primary structural change to the OTVS algorithm is the option to exclude the requirement that a perturbation reduces image TV, thereby eliminating the need to calculate and compare image TV before and after perturbations. Hence, NTVS was investigated with and without this check.

The number of TV perturbations per feasibility-seeking iteration: After initial investigations with increasing N, results were found to degrade as N increased beyond N ≈ 10. Therefore, in this work, the values of N chosen were between 1 and 12, in increments of 1.

The perturbation kernel coefficient: Since the configuration of the OTVS algorithm effectively used α = 0.5 and the resulting perturbations did not negatively affect RSP accuracy [14], this work investigated only α ≥ 0.5 to determine how large α can be set without affecting RSP accuracy. The values of α investigated in this work were α = 0.5, 0.65, 0.75, 0.85, and 0.95.

The choice of relaxation parameter in the feasibility-seeking algorithm: In previous unpublished work λ = 0.0001 yielded optimal results for a block-size containing 3200 proton histories; increasing λ beyond this value results in increased standard deviations. To investigate the interaction between TVS parameters and λ and determine if NTVS is capable of reducing the increase in standard deviations, the values of λ investigated in this work were λ = 0.0001, 0.00015, and 0.0002.
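The parameter space described above can be enumerated as in the sketch below. It assumes that every combination of the four parameters forms one candidate reconstruction; the text states only that the parameters were investigated systematically, so the full Cartesian product and the dictionary keys are assumptions made for illustration.

```python
from itertools import product

TV_CHECK_OPTIONS = (True, False)           # TV reduction requirement included/excluded
N_VALUES = tuple(range(1, 13))             # perturbations per feasibility-seeking iteration
ALPHA_VALUES = (0.5, 0.65, 0.75, 0.85, 0.95)
LAMBDA_VALUES = (0.0001, 0.00015, 0.0002)  # relaxation parameter


def parameter_grid():
    """All parameter combinations, each held fixed for one reconstruction."""
    return [
        {"tv_check": check, "n": n, "alpha": alpha, "relaxation": lam}
        for check, n, alpha, lam in product(
            TV_CHECK_OPTIONS, N_VALUES, ALPHA_VALUES, LAMBDA_VALUES
        )
    ]
```

Under this assumption the grid contains 2 × 12 × 5 × 3 = 360 combinations per data set.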

IV. Computational Hardware and Performance Analysis

Image reconstruction was executed on a single node of a compute cluster, with input data read from a local solid state drive and the bulk of the computation performed in parallel on a single NVIDIA K40 GPU. The parallel computational efficiency of the DROP algorithm increases with the number of histories per block since this permits better GPU utilization, but even with only 3200 histories per block, the total computation time from reading of input data from disk through the writing of reconstructed images to disk was, at most, about 6 minutes (for k = 12 feasibility-seeking iterations and N = 12 perturbation steps).

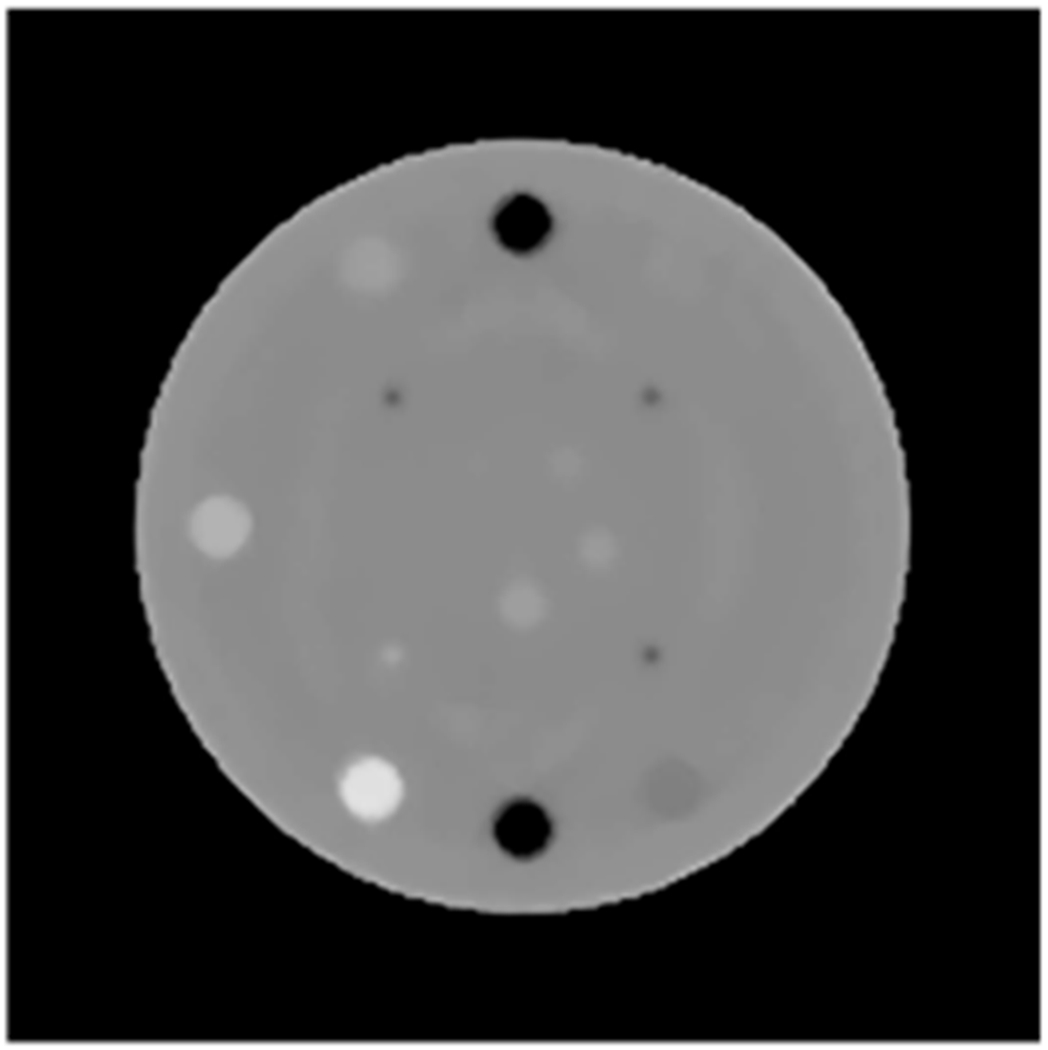

The central slices of the CTP404 phantom containing the spherical inserts have the most complicated material composition and represent the greatest challenge to reconstruction. Consequently, the data acquired for protons passing through these slices will have a greater variance in paths and WEPL values, which manifests in the corresponding slices of the reconstructed images as an increase in noise. Hence, analysis of these slices provides a better basis for comparing the NTVS and OTVS algorithms. Since the coplanar centers of the spherical inserts lie in the central slice, the comparative analyses performed in this work focused on this slice. A representative reconstruction of this slice is shown in Figure 1.

Fig. 1:

Representative reconstruction of the central slice of the CTP404 phantom from simulated data.

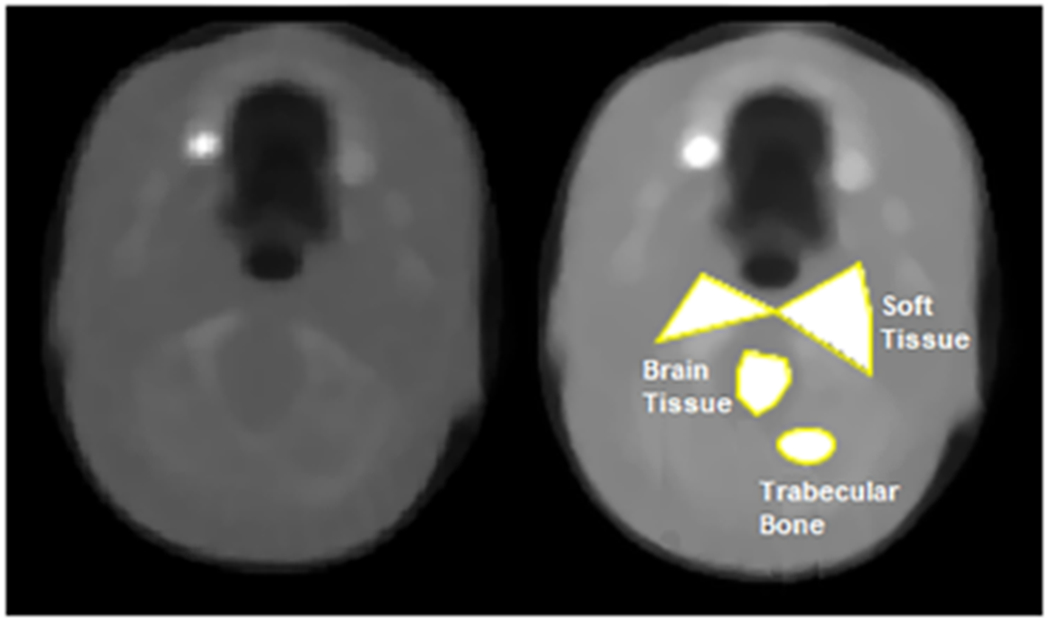

The image analysis program ImageJ2 1.51r [39] was used to perform quantitative analyses of reconstructed image quality. The cylindrical material inserts of the CTP404 phantom were analyzed by selecting a 7 mm diameter circular region of interest (ROI) centered within the boundary of each insert and measuring the mean and standard deviation in reconstructed RSP of the voxels within the ROI selection. The polygon and oval selection tools were used to measure the mean and standard deviation in reconstructed RSP within the more realistically complicated ROIs of the HN715 phantom. Although the finer structure of the HN715 phantom makes it difficult to select an ROI of a single material, particularly for the brain tissue, ROIs were chosen from regions composed primarily of the material of interest and a minimal number of voxels of disparate material; the analyzed ROIs are shown shaded and labeled in Figure 2.

Fig. 2:

Representative reconstruction of the slice of the pediatric head phantom containing the analyzed regions of interest (left); the analyzed regions of interest are filled in white and labeled in the image on the right.

RSP error was calculated as the percentage difference between the mean measured RSP in an ROI and the RSP (a) defined for the material in the Geant4 simulation for the simulated data and (b) based on experimental material RSP investigations for the experimental data sets; the theoretical RSP used in the analyses of each material ROI of the (a) CTP404 and (b) HN715 phantoms are listed in Tables I and II, respectively. In accordance with [27], total variation was calculated as the sum of local variations over all voxels of the entire image for both the TV reduction requirement (when included) and the analysis of reconstructed images.
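The ROI measurements and the RSP error defined above can be reproduced outside ImageJ with a few lines of NumPy, as in the sketch below. The function names are hypothetical, the error is assumed to be a signed percentage relative to the reference RSP, and the use of the sample standard deviation (`ddof=1`) is an assumption rather than a documented detail of the analysis.

```python
import numpy as np


def circular_roi_mask(shape, center_rc, diameter_mm, pixel_mm=1.0):
    """Boolean mask of pixels whose centers lie within a circular ROI."""
    rows, cols = np.ogrid[: shape[0], : shape[1]]
    radius_px = 0.5 * diameter_mm / pixel_mm
    dist_sq = (rows - center_rc[0]) ** 2 + (cols - center_rc[1]) ** 2
    return dist_sq <= radius_px**2


def roi_statistics(image, mask):
    """Mean and sample standard deviation of reconstructed RSP within the ROI."""
    values = image[mask]
    return float(values.mean()), float(values.std(ddof=1))


def rsp_error_percent(measured_mean, reference_rsp):
    """Signed percentage difference between measured and reference RSP."""
    return 100.0 * (measured_mean - reference_rsp) / reference_rsp
```

For the 1.0 mm in-plane voxel size used here, a 7 mm diameter ROI selected in this way covers 37 voxels.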

TABLE II:

RSP of the tissue/bone regions of interest analyzed in the pediatric head phantom.

| | Soft Tissue | Brain Tissue | Trabecular Bone |
|---|---|---|---|
| Experimental | 1.037 | 1.047 | 1.108 |

V. Results

In the following, we present results from an investigation of the multi-parameter space, including potential interactions between parameters, first for the preliminary investigation with the simulated CTP404 data set and then for the definitive investigation with the experimental CTP404 and HN715 data sets. Note that each data point on the following plots represents a separate, complete reconstruction with the corresponding combination of reconstruction and superiorization parameters held fixed throughout the reconstruction.

A. Simulated CTP404 Data Set

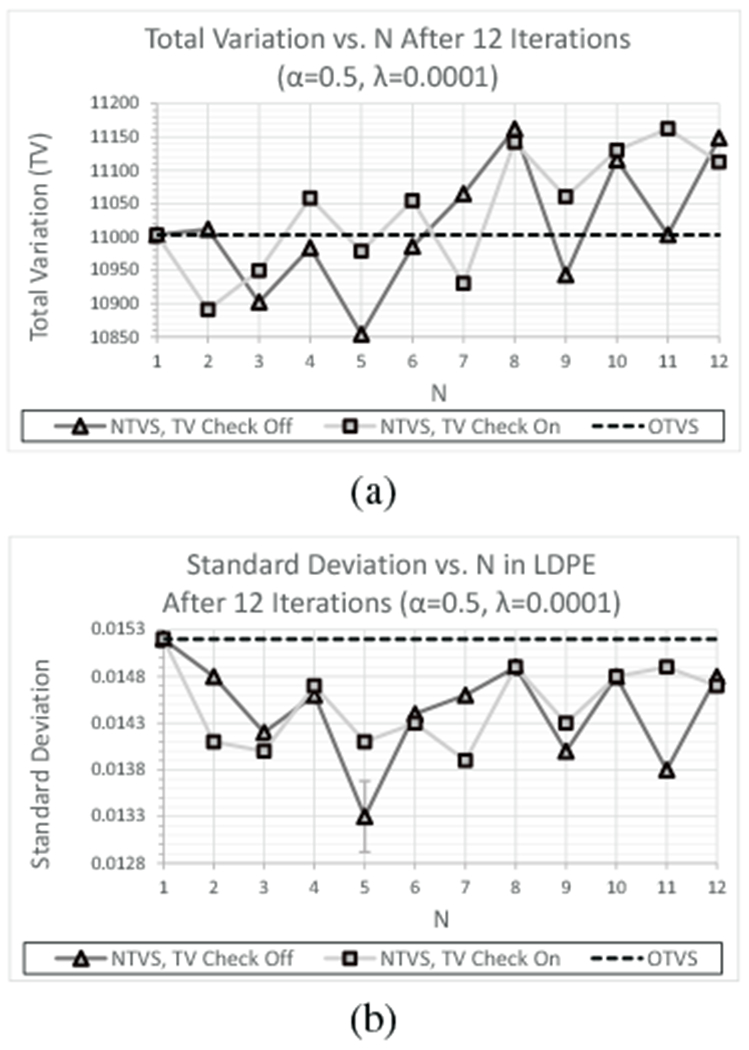

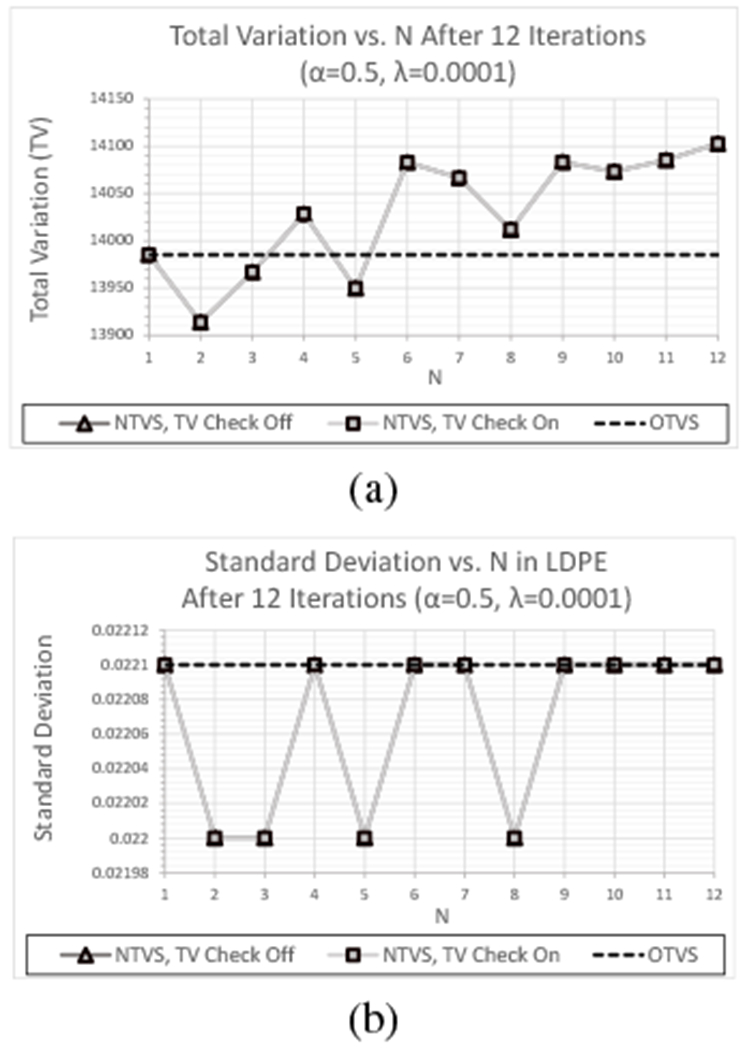

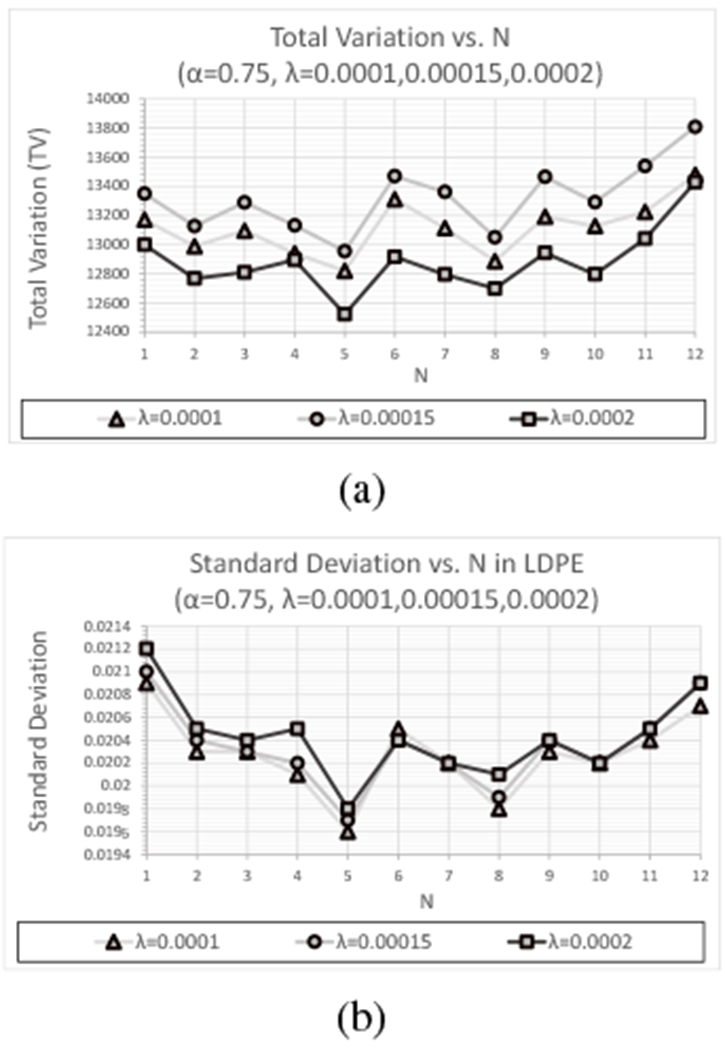

The preliminary investigations with the simulated CTP404 data set demonstrated that the random decrease in ℓk results in random variations in both the overall TV of reconstructed images and the standard deviations within the individual material inserts. However, the benefits of NTVS for 3 ≤ N ≤ 6 were consistently larger by multiple standard deviations than the random variations. Figures 3(a) and 3(b) show a comparison of overall TV and standard deviation within the LDPE insert, respectively, obtained after 12 feasibility-seeking iterations with λ = 0.0001 using OTVS (horizontal line) and NTVS with α = 0.5 and including/excluding the TV reduction requirement (grey/black curves, respectively). Interested readers may find a more detailed analysis of the preliminary investigations with the simulated data set in Section II of the Supplemental Materials3 of this manuscript.

Fig. 3:

(a) TV and (b) standard deviation (LDPE) as a function of N after 12 feasibility-seeking iterations for the simulated CTP404 data set using OTVS and NTVS including and excluding the TV reduction requirement with λ = 0.0001 and α = 0.5. The error bar at N = 5 denotes the variation in standard deviation (σ = 0.00038) between 8 repetitions of reconstruction with N = 5.

To determine whether the observed fluctuations were due to random or systematic variations, an analysis of 8 separate reconstructions with N = 5, α = 0.5, λ = 0.0001, and the TV reduction requirement excluded was performed. The standard deviation obtained within the LDPE insert varied between reconstructions with a standard deviation of σLDPE = 0.00038 (shown as an error bar on the point at N = 5 in Figure 3(b)); similar variations were also obtained within the ROI of the other materials. Note that the standard deviation obtained within the LDPE insert at N = 5 with the TV reduction requirement excluded was nearly 2σLDPE less than that obtained with the requirement included and just under 4σLDPE less than that obtained with OTVS. In addition, the standard deviation obtained with N = 5 was at least 1.5σLDPE less than that obtained with any other value of N.

These differences are large enough to conclude that the observed fluctuations in standard deviation as a function of N were primarily systematic variations inherent to the NTVS algorithm, arising due to its interaction with feasibility-seeking, and not the product of random variations. Further support for this conclusion was the observance of similar fluctuations for each combination of α and λ, with differences in the magnitude of TV and standard deviations but with similarly shaped curves as a function of N; for each combination of α and λ, maximal benefits of NTVS were obtained with the TV reduction requirement excluded and 3 ≤ N ≤ 6.

B. Experimental CTP404 Data Set

The experimental CTP404 data set was then used to reconstruct images for the same combination of parameter values from the reconstruction parameter space as for the simulated data set.

1) Number of TVS steps (N):

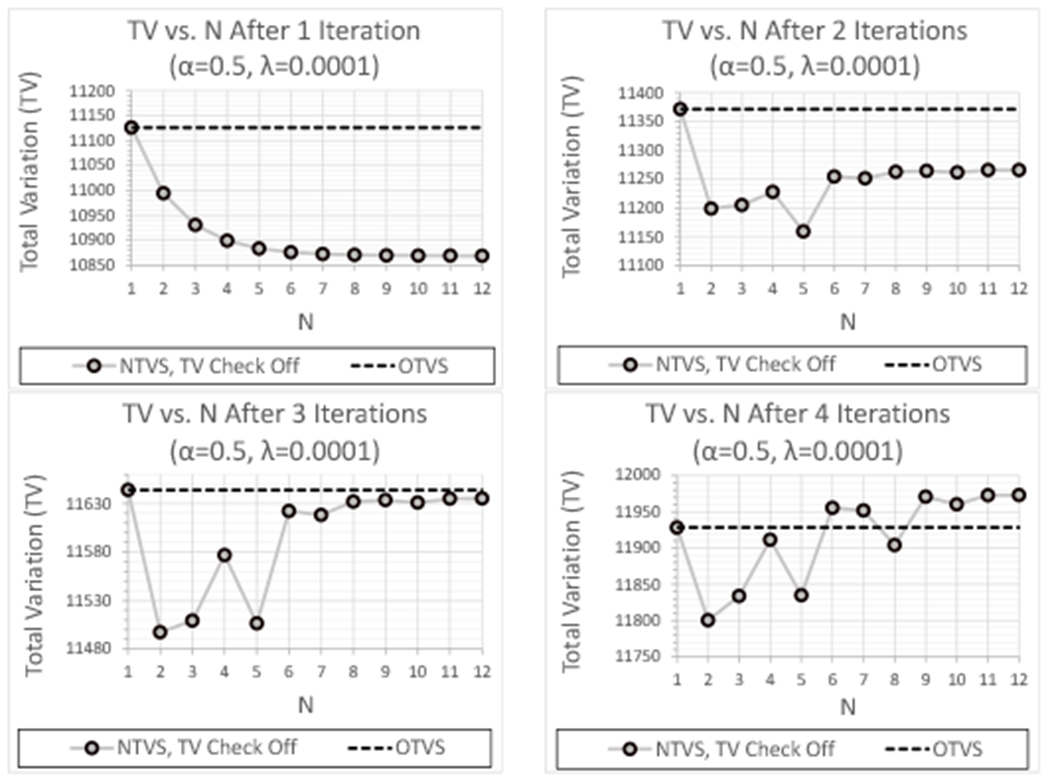

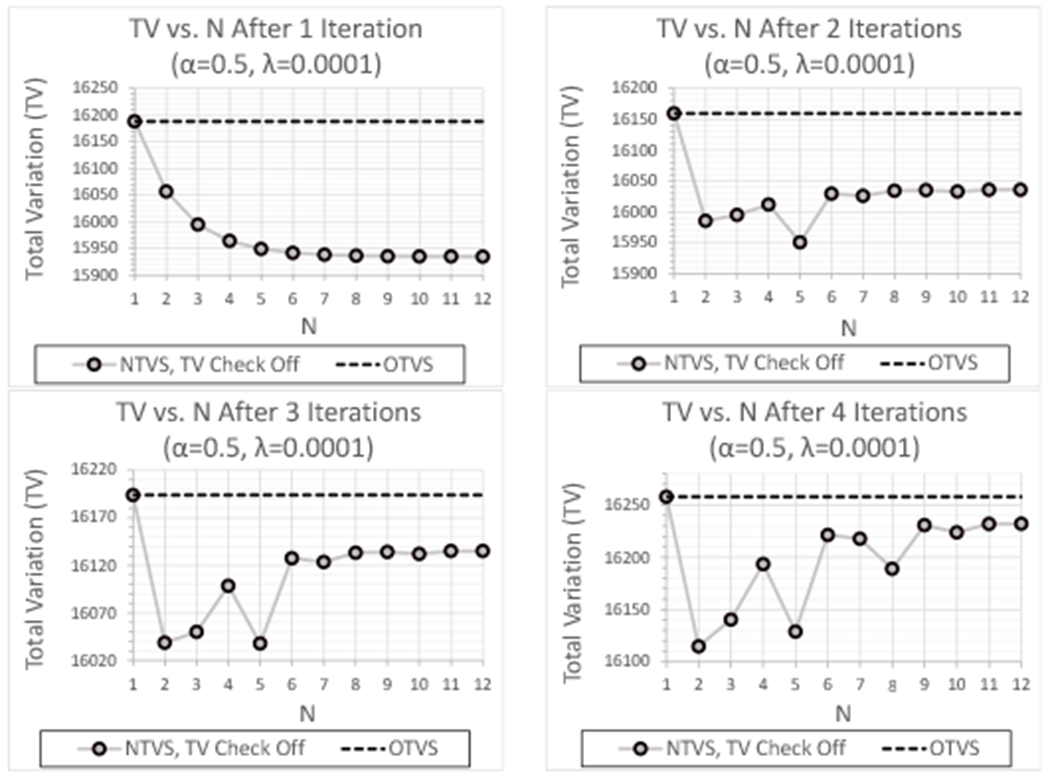

The number of TV perturbations per feasibility-seeking iteration, N, was again varied sequentially between 1 and 12 for the experimental CTP404 data set; Figure 4 shows plots of TV as a function of N for each of the first four feasibility-seeking iterations for the case where the TV reduction requirement is excluded. TV did not fluctuate as much as it did with the simulated data, but the same general trend can be seen: increasing N results in a monotonic reduction in TV for the first iteration, but as subsequent feasibility-seeking iterations are performed on the resulting image, the reductions in TV obtained by increasing N reverse, and TV eventually exceeds that obtained with OTVS. As with the simulated data, a consistent benefit was obtained by performing 3 ≤ N ≤ 6 TVS perturbations per feasibility-seeking iteration, but increasing N to N ≥ 7 results in a perturbed image that lies at a less advantageous point in the solution space for feasibility-seeking.

Fig. 4:

TV as a function of N after each of the first 4 feasibility-seeking iterations for the experimental CTP404 data set using OTVS and NTVS (TV reduction requirement excluded) with λ = 0.0001 and α = 0.5.

2). Inclusion/Exclusion of TV Reduction Requirement:

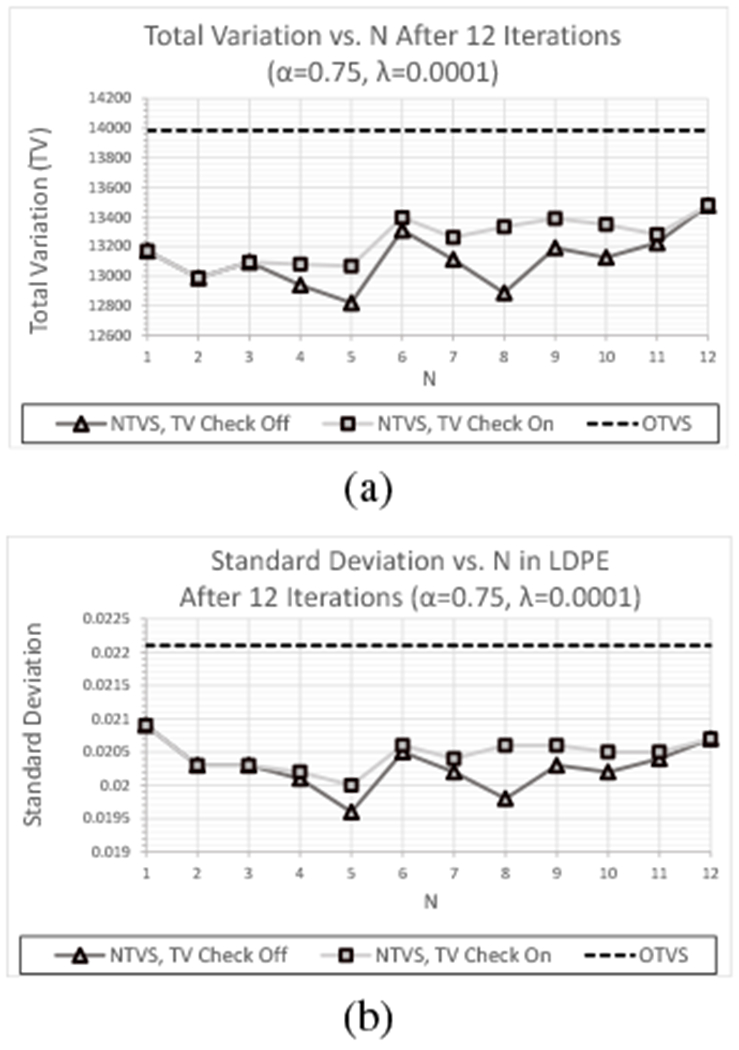

An investigation that isolated the impact of the exclusion of the TV reduction requirement on NTVS results was also performed for the experimental CTP404 data set. Figures 5(a) and (b) show a comparison of TV and standard deviation, respectively, for inclusion and exclusion of the TV reduction requirement in reconstructions of the experimental data set with α = 0.5. In this case, the difference in TV and standard deviation is not large enough to discern between the lines representing inclusion and exclusion of the TV reduction requirement; this occurred for each value of λ and, in the case of standard deviation, within the ROI of each material. However, for α = 0.75, the exclusion of the TV reduction requirement consistently resulted in smaller TV and standard deviation, as demonstrated by Figures 6(a) and (b); similar trends were also seen for other values of λ and in the other cylindrical material inserts.

Fig. 5:

(a) TV and (b) standard deviation as a function of N after 12 feasibility-seeking iterations for the experimental CTP404 data set using the OTVS algorithm and the NTVS algorithm including and excluding the TV reduction requirement with λ = 0.0001 and α = 0.5 (note that the 2 NTVS curves overlap).

Fig. 6:

(a) TV and (b) standard deviation as a function of N after 12 feasibility-seeking iterations for the experimental CTP404 data set using the OTVS algorithm and the NTVS algorithm including and excluding the TV reduction requirement with λ = 0.0001 and α = 0.75.

The scale of these plots also provides a better perspective on the reductions obtained with the larger α = 0.75 compared to those obtained with OTVS, indicating a consistent and sizeable reduction in TV and standard deviation for every value of N investigated, including those with N ≥ 7. These results demonstrate that the 50% increase in α resulted in a reduction in TV and standard deviation with approximately the same magnitude as the largest difference in TV and standard deviation obtained with varying N. In particular, the difference between the standard deviation obtained with OTVS and 3 ≤ N ≤ 6 was more than twice as large as the maximum difference between results within this range of N, a trend that was also consistently seen within the ROIs of the other materials.

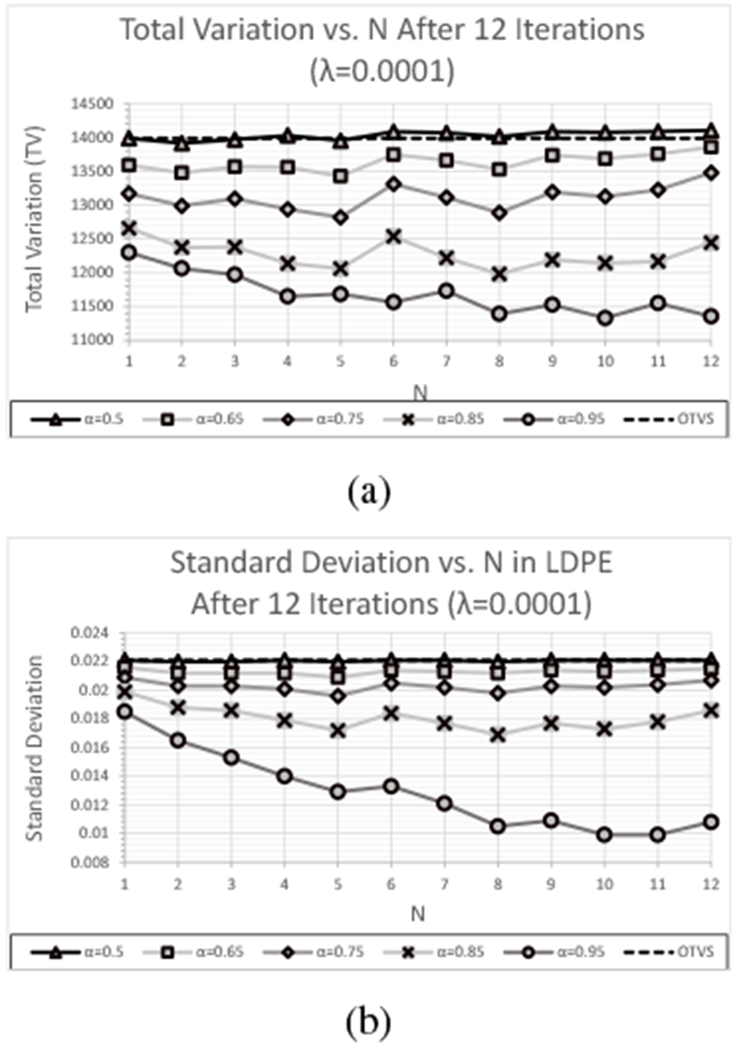

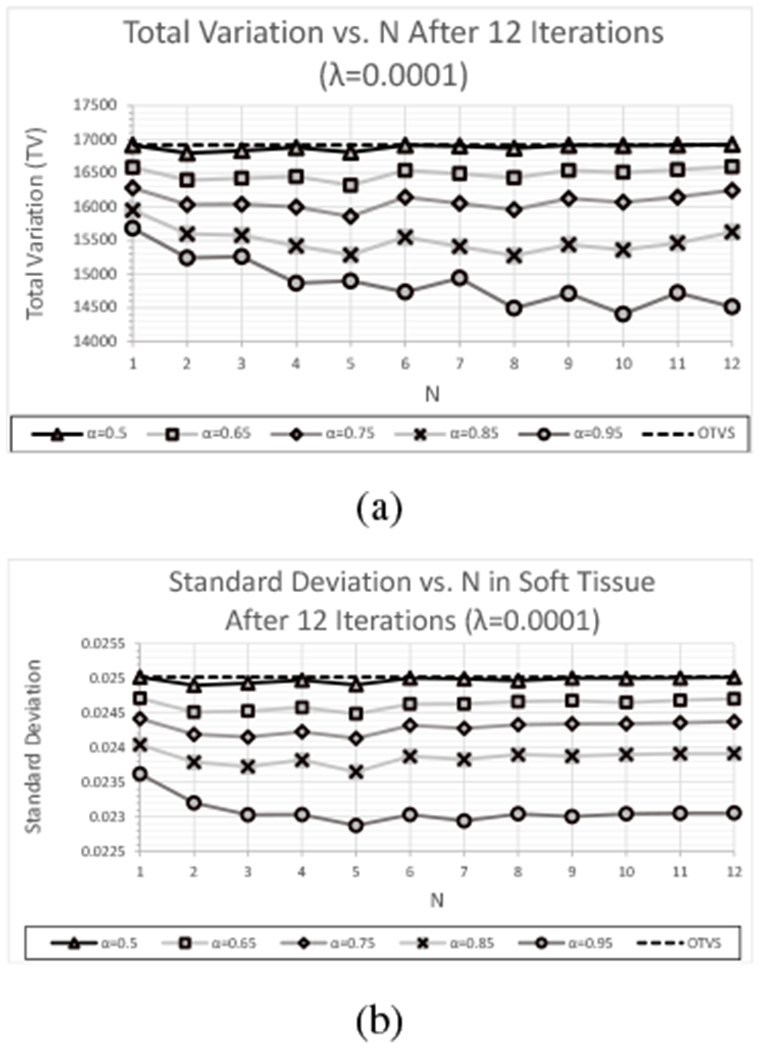

3). Perturbation Kernel (α):

Increasing α produces larger perturbations and results in the perturbation magnitude βk converging to zero more slowly. Thus, one can expect a larger reduction of TV and standard deviations for larger values of α. Figures 7(a) and (b), which show plots of TV and standard deviation as a function of N for λ = 0.0001 and with the TV reduction requirement excluded for reconstructions of the experimental data set, demonstrate this effect. Although these figures do not exhibit as strong a dependence on α or N as was observed with the simulated data, they do indicate the same general trend of decreasing TV and standard deviation as α increases, with the difference growing larger as α increases in steps of 0.1. Notice that increasing α beyond α ≈ 0.75 begins to have a significant impact on the reconstructed RSP and, hence, the RSP error. The direction in which the reconstructed RSP is driven (i.e., whether it increases or decreases) is unpredictable, as demonstrated by the fact that an increasing α reduced the RSP error in the Delrin insert for the simulated data set, whereas Figure 8 shows that an increasing α increased the RSP error in this insert for the experimental data. In fact, for the experimental data set, increasing α beyond 0.75 resulted in an increase in RSP error within every cylindrical material insert.

Fig. 7:

(a) TV and (b) standard deviation (LDPE) as a function of N after 12 feasibility-seeking iterations for the experimental CTP404 data set using OTVS and NTVS (TV reduction requirement excluded) with λ = 0.0001 and varying α.

Fig. 8:

RSP error (Delrin) for each value of α as a function of N after 12 feasibility-seeking iterations for the experimental CTP404 data set using OTVS and NTVS (TV reduction requirement excluded) with λ = 0.0001.

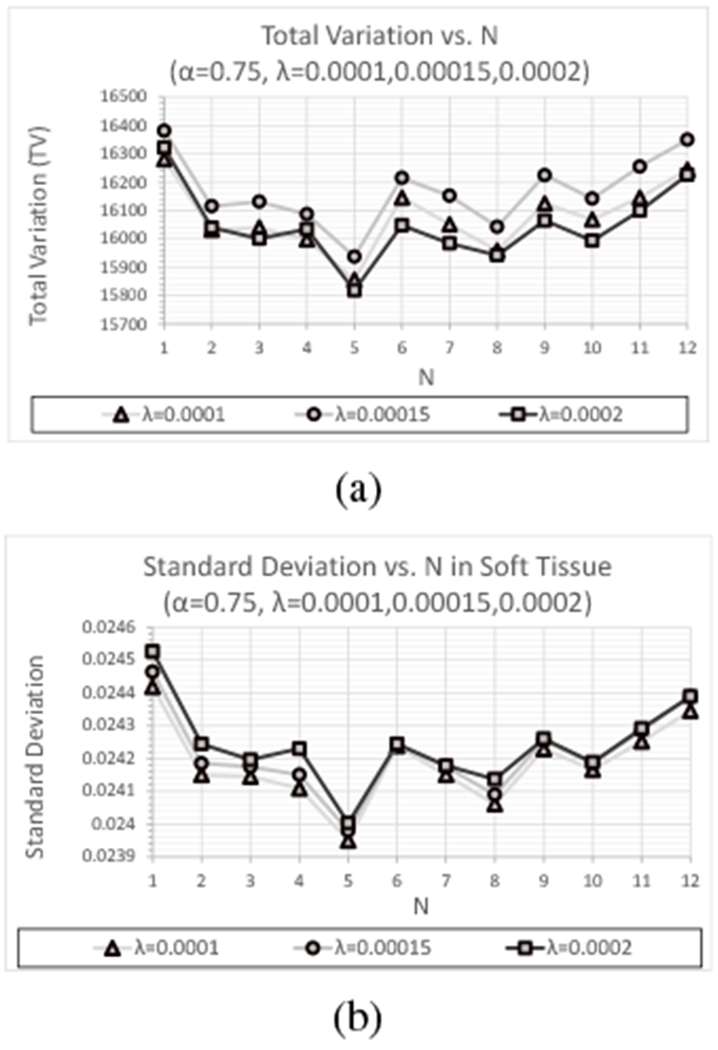

4). Relaxation Parameter (λ):

Comparisons of TV and standard deviation as a function of N for varying relaxation parameter λ are shown in Figures 9(a) and (b) for α = 0.75. As with the simulated data, the results for λ = 0.0001 after k = 12 feasibility-seeking iterations are shown and the number of iterations k was chosen for λ = 0.00015, 0.0002 such that these reconstructions had converged to the same point (i.e., reached approximately the same RSP); this occurred at k = 8 for λ = 0.00015 and k = 6 for λ = 0.0002 for the experimental data as it did for the simulated data.

Fig. 9:

(a) TV and (b) standard deviation (soft tissue) as a function of N for λ = 0.0001, k = 12; λ = 0.00015, k = 8; and λ = 0.0002, k = 6 iterations, respectively, and α = 0.75 for the experimental CTP404 data set.

Figure 9(a) indicates that, for each value of N, increasing λ results in a reduction in TV. This trend demonstrates the benefit of performing reconstruction with as few iterations as is necessary to obtain an acceptable level of convergence since a side effect of feasibility-seeking is a consistent increase in TV. On the other hand, the plot of standard deviation in Figure 9(b) indicates that, unlike with the simulated data, an increase in λ results in a slight increase in standard deviation in the LDPE insert. For both TV and standard deviation, optimal results were obtained with N = 5 for each value of λ and within each material insert, with nearly identical behavior as a function of both N and λ seen in each insert.

C. Experimental HN715 Pediatric Head Phantom Data Set

The experimentally acquired data for the pediatric head phantom was reconstructed using the same set of parameter value combinations as those used to reconstruct the simulated and experimental CTP404 phantom data sets. This phantom provides a considerably different material composition and internal structure to determine the impact these properties have on the behavior of the NTVS algorithm and the combination of parameter values that produce maximal benefit.

1). Number of TVS steps (N):

Figure 10 shows plots of TV as a function of N for the first four feasibility-seeking iterations for the case where λ = 0.0001 and the TV reduction requirement is excluded. These results are very similar to those of the experimental CTP404 phantom, particularly for N ≤ 6, but unlike for both the simulated and experimental CTP404 data sets, the benefits of NTVS do not degrade as quickly for N ≥ 7 and continue to outperform OTVS for all values of N. However, the optimal values of N after 4 feasibility-seeking iterations occur at N = 2 and N = 5 for all 3 data sets.

Fig. 10:

TV as a function of N after each of the first 4 feasibility-seeking iterations for the experimental HN715 data set using OTVS and NTVS (TV reduction requirement excluded) with λ = 0.0001 and α = 0.5.

As previously noted, repeated reconstructions with the same value of N yield variations in TV and standard deviation. Again, the difference in TV and standard deviation as a function of N is seen to be a property of the algorithm and its relationship with feasibility-seeking and not the result of the random variations arising from random increases in ℓk. The objectives of feasibility-seeking and TVS are somewhat opposed; feasibility-seeking tends to amplify noise, thereby increasing TV, while each TVS perturbation may drive the solution to a more or less feasible solution. The resulting push back and forth begins to produce small differences in TV between successive values of N after the first two feasibility-seeking iterations and these subsequently increase as each additional feasibility-seeking iteration amplifies the resulting differences. Simultaneously, TV perturbations and updates applied in feasibility-seeking both decrease in magnitude as k increases, diminishing their ability to counteract the impact of a previous, less optimal solution. Hence, a solution that is less optimal after the first few iterations will rarely overcome its performance deficit and will far more often become increasingly suboptimal, particularly if parameter values are held fixed and not adapted based on performance as in the present case. Hence, values of N that yield a larger reduction in TV early in reconstruction also experience a lesser amplification of noise at each feasibility-seeking iteration, resulting in a compounding effect that accounts for the relatively large differences in TV between similar values of N.

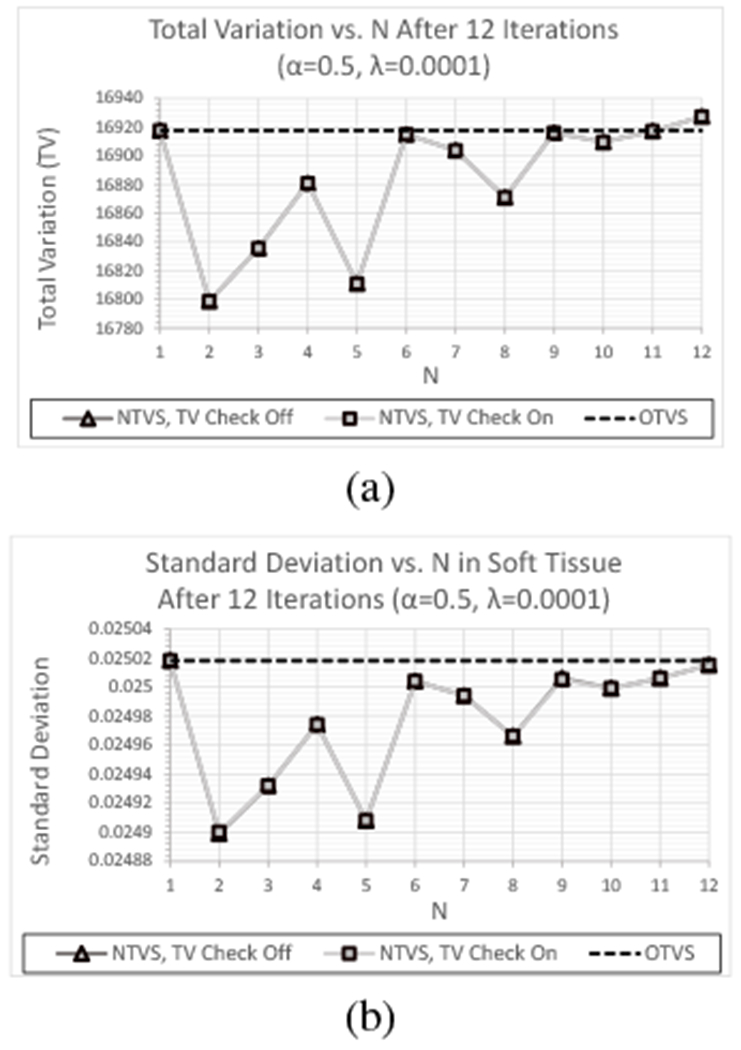

As can be seen in Figure 11, showing the TV and standard deviation within the soft tissue ROI as a function of N after all 12 feasibility-seeking iterations for λ = 0.0001 and α = 0.5, NTVS including and excluding the TV reduction requirement both yield larger reductions in TV and standard deviation for every value of N except for the slight increase in TV obtained with N = 12. Repeating these reconstructions with α = 0.75 consistently yields images with significantly larger reductions in both TV and standard deviation for every value of N, with similar standard deviation results obtained for every material ROI. These results also demonstrate that the smallest reductions in TV and standard deviation obtained with N = 1 and N = 12 were approximately 50% larger than the largest difference between varying values of N and more than twice as large for 3 ≤ N ≤ 6.

Fig. 11:

(a) TV and (b) standard deviation as a function of N after 12 feasibility-seeking iterations for the experimental HN715 data set using the OTVS algorithm and the NTVS algorithm including and excluding the TV reduction requirement with λ = 0.0001 and α = 0.5 (note that the 2 NTVS curves overlap).

2). Inclusion/Exclusion of TV Reduction Requirement:

Comparisons of TV and standard deviation as a function of N after 12 feasibility-seeking iterations are shown for OTVS and NTVS including and excluding the TV reduction requirement in Figures 11(a) and (b), respectively. These are shown for λ = 0.0001 and α = 0.5, which makes the reduction in βk with NTVS equivalent to that of OTVS. As with the experimental CTP404 data set, the difference in TV and standard deviation between the results with and without the TV reduction requirement was not discernible for α = 0.5 and was, again, independent of the value of λ and the material of the ROI. On the other hand, for α = 0.75, exclusion of the TV reduction requirement consistently yielded a larger reduction in TV and standard deviation for each value of N, as seen in Figures 12(a) and (b), respectively. Again, this was seen for all λ and, in the case of the standard deviation, within the ROI of each material. As with the previous data sets, all subsequent analyses for this data set were performed using the NTVS algorithm with the TV reduction requirement excluded (as defined in Appendix B).

Fig. 12:

(a) TV and (b) standard deviation as a function of N after 12 feasibility-seeking iterations for the experimental HN715 data set using the OTVS algorithm and the NTVS algorithm including and excluding the TV reduction requirement with λ = 0.0001 and α = 0.75.

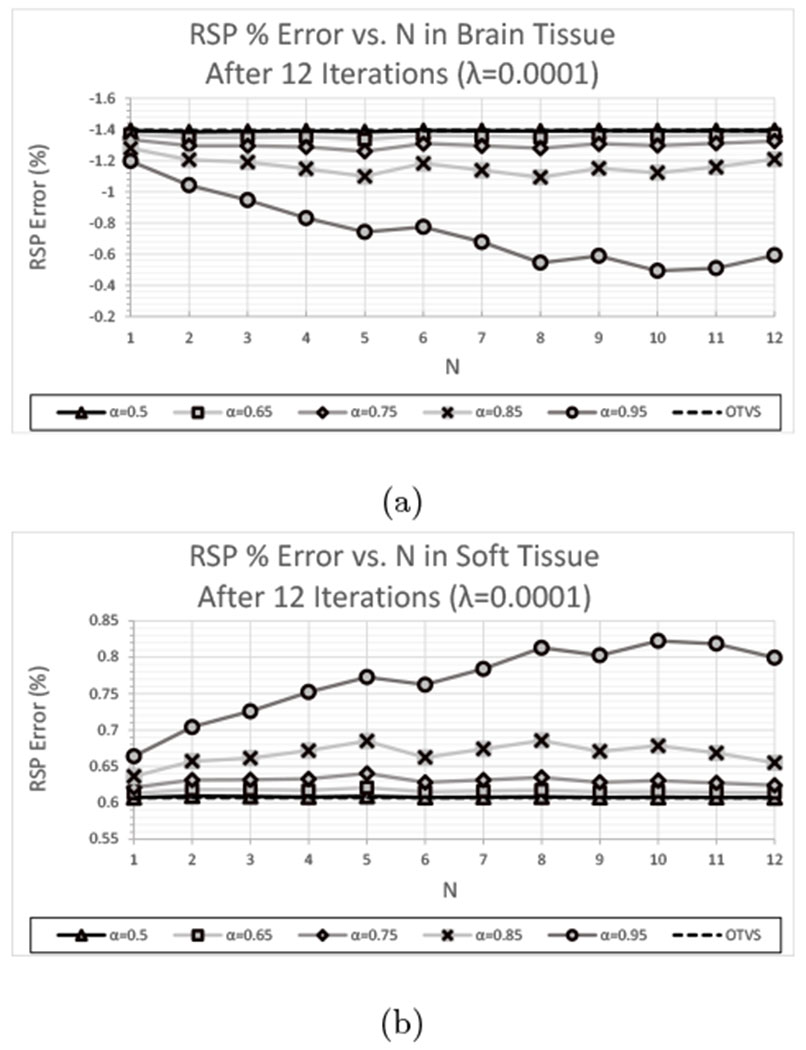

3). Perturbation Kernel (α):

Plots of TV and standard deviation within the soft tissue ROI as a function of N for λ = 0.0001 and with the TV reduction requirement excluded are shown for each value of α in Figure 13 for the HN715 data set; as with the other data sets, the standard deviation results for the ROIs of the other materials showed a similar trend as a function of α. As with the simulated and experimental CTP404 data sets, TV and standard deviation decreased as α increased, but the standard deviation was less sensitive to the value of N than observed with the CTP404 data sets.

Fig. 13:

(a) TV and (b) standard deviation (soft tissue) as a function of N after 12 feasibility-seeking iterations for the experimental HN715 data set using OTVS and NTVS (TV reduction requirement excluded) with λ = 0.0001 and varying α.

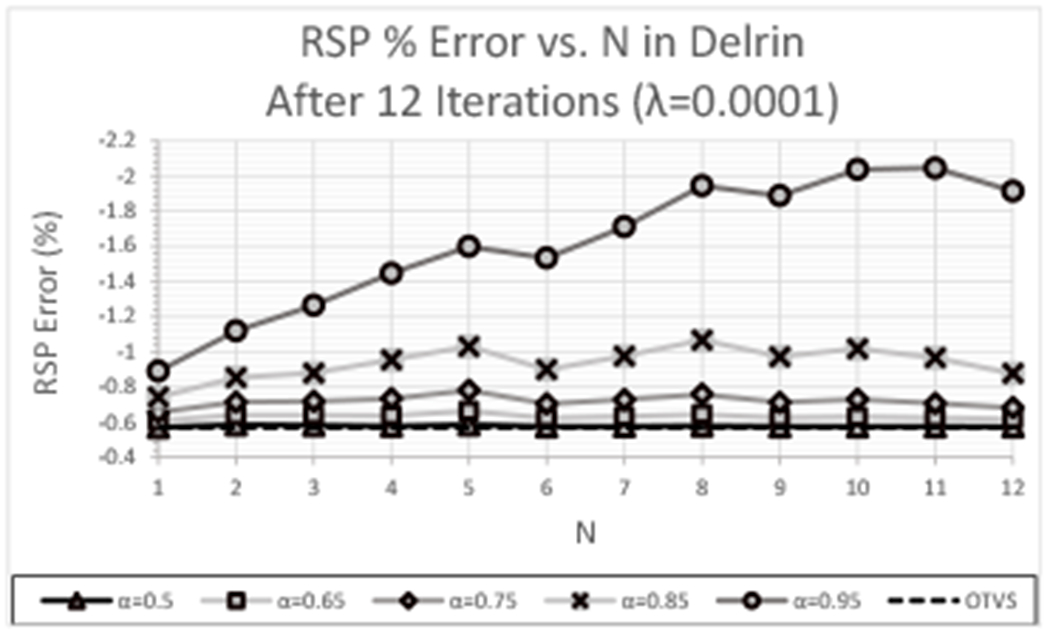

Figure 14 once again demonstrates the impact that values of α > 0.75 had on reconstructed RSP error within the different material inserts. Unlike the RSP reconstructed from the experimental CTP404 data set, the RSP reconstructed from the experimental HN715 data set was driven in unpredictable directions depending on the particular material insert (as it was with the simulated CTP404 data set), improving accuracy within some material inserts while decreasing accuracy in others.

Fig. 14:

RSP error in the (a) brain tissue and (b) soft tissue ROIs as a function of N after 12 feasibility-seeking iterations for the experimental HN715 data set using OTVS and NTVS (TV reduction requirement excluded) with λ = 0.0001 and varying α.

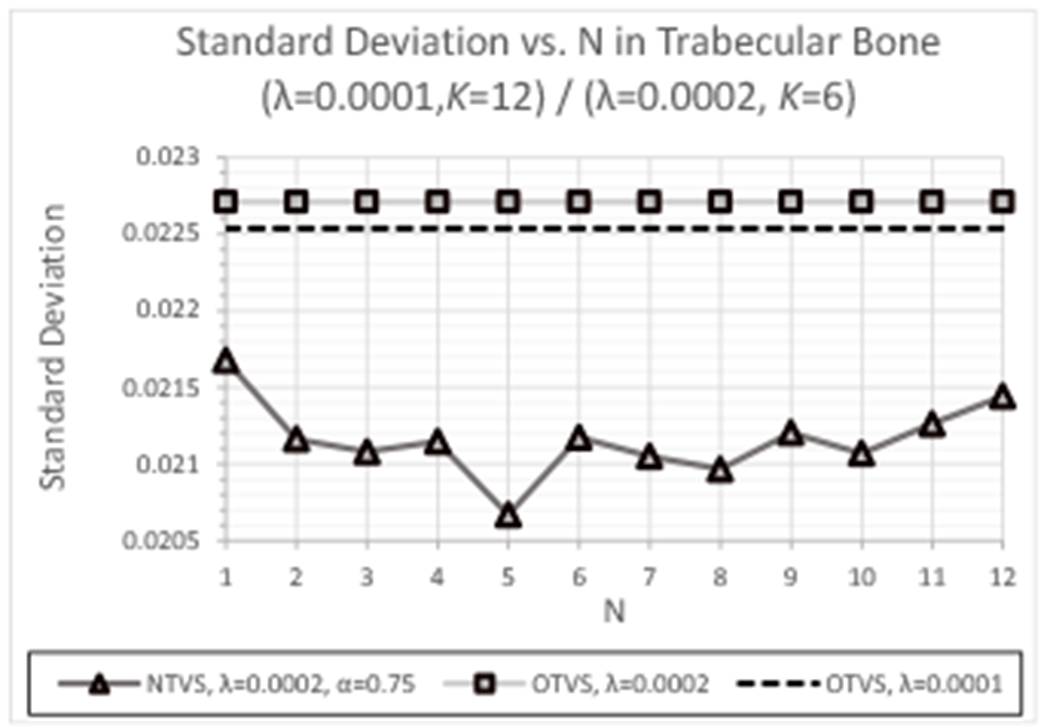

4). Relaxation Parameter (λ):

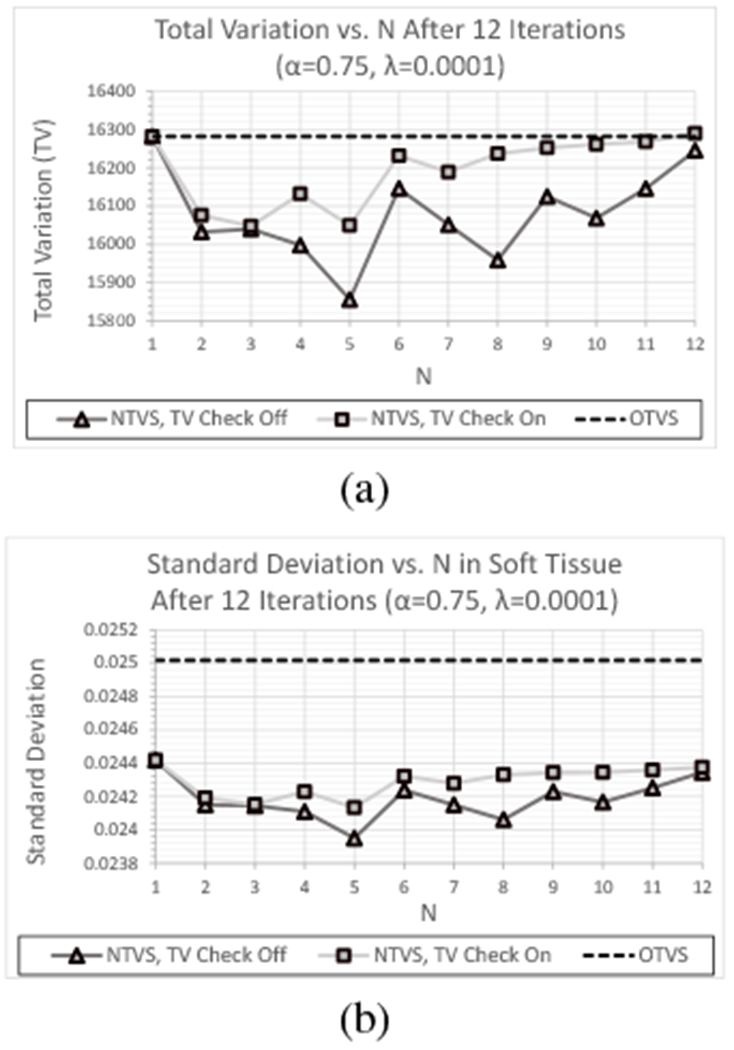

Figures 15(a) and (b) show comparisons of TV and standard deviation within the ROI of soft tissue, respectively, as a function of N for varying relaxation parameter λ with α = 0.75 and excluding the TV reduction requirement. Plots of standard deviation for the ROI of other materials displayed the same dependence on N and λ. The number of feasibility-seeking iterations k for λ = 0.00015 and λ = 0.0002 was again chosen such that these reconstructions reached the same point in convergence as the λ = 0.0001 reconstructions yielded after 12 feasibility-seeking iterations; this resulted in the same feasibility-seeking iteration numbers k = 8 and k = 6 for λ = 0.00015 and λ = 0.0002, respectively, as for the simulated and experimental CTP404 data sets.

Fig. 15:

(a) TV and (b) standard deviation (soft tissue) as a function of N for λ = 0.0001, k = 12; λ = 0.00015, k = 8; and λ = 0.0002, k = 6 iterations, respectively, and for α = 0.75 for the experimental HN715 data set.

As was seen with the CTP404 data sets, increasing λ consistently yielded larger reductions in TV for each value of N. The standard deviation obtained within the ROI of soft tissue was similar to those obtained with the experimental CTP404 data set, demonstrating a slight increase in standard deviation as λ increases for each value of N. However, the results for 3 ≤ N ≤ 6 are consistently better than those obtained with N = 1 for NTVS and with OTVS for the less noise sensitive λ = 0.0001 (see Figure 5).

The benefit of NTVS in permitting reconstruction with larger λ can be seen by considering the plots of standard deviation within the ROI of the trabecular bone for λ = 0.0002 as compared to OTVS for λ = 0.0001 and λ = 0.0002, as shown in Figure 16. The λ = 0.0001 solution with OTVS yields a noticeably smaller standard deviation for the same level of RSP accuracy, a trend that has previously been encountered for different phantom data sets, leading to the choice of λ = 0.0001 for reconstructions for block sizes up to 250,000 histories. However, using NTVS with α = 0.75, the λ = 0.0002 solution yields smaller standard deviations than those obtained with OTVS and λ = 0.0001 for all values of N and with negligible differences in standard deviation obtained with NTVS and λ = 0.0001 for many values of N (particularly at the often optimal N = 5; see Figure 15(b)). The larger reduction in TV also obtained with λ = 0.0002 leads to the conclusion that reconstruction with λ = 0.0002 is now an appropriate choice made possible by introduction of the NTVS algorithm.

Fig. 16:

Standard deviation in the ROI of trabecular bone as a function of N after 6 feasibility-seeking iterations for λ = 0.0002 as compared to OTVS with λ = 0.0001 and λ = 0.0002.

VI. Discussion

In this work, we have investigated the impact of the innovative changes made to the original version of the DROP-TVS algorithm (OTVS) on both a cylindrical phantom with material inserts (simulated and experimental data sets) and an anthropomorphic head phantom closely resembling a human head (experimental data set). The changes in noise measures (TV and standard deviation) introduced by the modifications of the OTVS algorithm that led to the NTVS algorithm are admittedly small: improvements were less than 5% for most parameter variations investigated, and in some instances no improvement was seen. Nevertheless, we feel one can learn from these small improvements, and they are expected to be proportionally larger with noisier data sets such as those produced by very low fluences or fluence-modulated pCT methods [40], [41]. We thus feel that it is worthwhile to share the experience gained with the algorithmic structures introduced into NTVS and the fact that a repeated TV check is not required and can even lead to inferior results.

The reconstruction parameter space investigated in this work contained 360 parameter value combinations (2 {TV check on/off} · 5 {alpha} · 3 {lambda} · 12 {N}), requiring 360 separate reconstructions for each of the investigated data sets. The benefit of NTVS was dependent on the number of perturbations per feasibility-seeking iteration, N, with the largest benefit consistently attained for 3 ≤ N ≤ 6, typically optimal with N = 5. For N ≥ 7, these benefits decreased as the number of feasibility-seeking iterations, k, increased except for α ≥ 0.85, but these benefits were negated by the fact that the RSP error with these α is affected in an unpredictable and often counterproductive direction. This can be understood as the effect that a larger N has on the magnitude of perturbations βk as k increases. With ℓk increasing by 1 after each of the N perturbations, increasing N results in $\beta_k = \alpha^{\ell_k}$ decreasing more quickly as k increases. Hence, for larger N, meaningful perturbations persist for a smaller number of feasibility-seeking iterations unless α is close to 1.0, in which case perturbations can decrease too slowly and result in inappropriately large perturbations as reconstruction nears convergence.
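The dependence of the perturbation magnitude on N described above can be illustrated with a minimal Python sketch. It rests on a simplifying assumption that is not part of the NTVS definition: the exponent ℓ starts at 0 and only increments by 1 after each perturbation, so the random re-selection of ℓk between feasibility-seeking iterations is ignored. The function names `beta_schedule` and `iterations_above`, and the threshold used to call a perturbation "meaningful", are hypothetical and chosen only for illustration.

```python
def beta_schedule(alpha, N, K):
    """Perturbation magnitudes alpha**ell for K iterations of N perturbations each.

    Simplifying assumption (not the full NTVS rule): ell starts at 0 and is
    incremented by 1 after every perturbation; the random reset of ell between
    feasibility-seeking iterations is ignored.
    """
    ell = 0
    schedule = []
    for _ in range(K):
        row = []
        for _ in range(N):
            row.append(alpha ** ell)
            ell += 1
        schedule.append(row)
    return schedule


def iterations_above(alpha, N, K, threshold):
    """Count iterations whose first perturbation magnitude is still >= threshold."""
    return sum(1 for row in beta_schedule(alpha, N, K) if row[0] >= threshold)
```

Under this simplification, calling `iterations_above` with the same α and threshold but a larger N returns a smaller count, while raising α toward 1 for a fixed N returns a larger count, which mirrors the qualitative argument made in the text.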

A remarkable finding of our investigations was a fluctuating reduction of TV and standard deviation as a function of N for all three data sets, which persisted for each of the 30 different parameter value combinations for each value of N. It was important to determine if these fluctuations were an inherent and reproducible characteristic of NTVS or if the random decrease in ℓk or some other aspect of TVS or feasibility-seeking accounted for this observation. Hence, reconstructions were performed repeatedly (8 times) for N = 5 and the same parameter value combination from the reconstruction parameter space to determine the variation in reconstructed images between independent reconstructions. This analysis demonstrated that the bounded randomness of ℓ did not produce large enough variations to account for the observed fluctuations. In general terms, the fluctuations are the result of the opposing objectives and resulting effects on TV of the alternating applications of TVS and feasibility-seeking.

The inclusion of the TV reduction requirement results in the image being perturbed with perturbations of successively smaller magnitude due to the resulting increment of ℓk each time a perturbation fails to decrease the image TV. Since a failure of this requirement almost always happens during the early feasibility-seeking iterations while it is far from convergence, the resulting increase in ℓk after each failure results in all subsequent perturbations having a smaller perturbation magnitude for each of the N perturbation steps of the remaining K – k iterations. By excluding the TV reduction requirement, the magnitude of subsequent perturbations is preserved throughout the remainder of reconstruction. Hence, although a perturbation applied early in feasibility-seeking may temporarily increase TV slightly, the cumulative effect of larger perturbations throughout the remainder of reconstruction usually results in a larger overall reduction in TV and, consequently, standard deviation. For the range 3 ≤ N ≤ 6, the removal of the TV reduction requirement produced at least comparable and often superior TV and standard deviation results for both the simulated and experimental data sets, particularly with α = 0.75 as seen most clearly with the experimental data sets.

Removing the TV reduction requirement also improves computation time by eliminating the conditional branch that prevents full parallelization of the superiorization algorithm and eliminating repeated perturbations until an improved TV is achieved. There are global calculations within the TVS algorithm, such as the ℓ2 (discrete-space) norm used to normalize perturbation vectors, which act as a bottleneck in an explicit and direct implementation. However, such data dependencies can be eliminated by performing these calculations in each thread rather than communicating these from a central location. Hence, there are no real data dependencies and the parallelization made possible by removing the conditional branch reduces NTVS computation time by up to 30% (estimated based on a count of the reduced number of sequential computational operations).

An appealing aspect of NTVS is the added ability to control the perturbation kernel α, which was previously held constant in OTVS at a value of α = 0.5. Increasing the perturbation kernel α yields larger reductions in TV and standard deviations. However, it was found that as α increased beyond α ≈ 0.75, perturbations began to affect reconstructed RSP values in an unpredictable and region-dependent manner. The direction in which the RSP was driven was shown not to be an inherent property of the phantom geometry and/or composition, as demonstrated by the observation that the RSP within the Delrin insert of the CTP404 phantom was driven in opposite directions for the simulated and experimental data sets. Thus, we suggest using α = 0.75, as this maximized the benefits that an increasing α has on TV and standard deviation while avoiding the unpredictable and potentially negative impact of larger α values.

Another benefit of NTVS is that it allows feasibility-seeking to be performed with a larger relaxation parameter λ than was appropriate with OTVS (λ = 0.0001, k = 12). It was found that with NTVS, the same RSP error can be obtained with λ = 0.0002 after performing k = 6 feasibility-seeking iterations without producing larger standard deviations, as previously experienced in practice with OTVS, which led to the choice of λ = 0.0001 in previously published work with the simulated data set [42], [36]. Arriving at an acceptable solution in k = 6 feasibility-seeking iterations also offers substantial computational benefit. As mentioned previously, feasibility-seeking increases TV at each feasibility-seeking iteration k. Since reconstruction with a larger λ reaches the same point in convergence at an earlier iteration k while perturbations are still larger, an image with a smaller TV can be obtained by performing fewer iterations.

In the work presented here, each of the TVS parameter values was held fixed throughout a reconstruction. One possible direction to explore in future work is investigating how parameter values can be varied during reconstruction to produce greater benefits with NTVS.

Another interesting question to explore is if the diminishing benefits for N ≥ 7 are due to an excessive use of TVS per feasibility-seeking iteration or if this is simply a consequence of βk decreasing too quickly as a function of k, perhaps resulting in an under-utilization of TVS at larger values of k. Note that the value of the perturbation kernel α determines not only the initial perturbation magnitude βk(k = 1), but also the rate at which βk decreases after each perturbation.

One would like the ability to control the initial perturbation magnitude βk (N = 1) as a function of k while independently determining the rate at which βk decreases between each of the N perturbations per feasibility-seeking iteration. This is not possible with $\beta_k = \alpha^{\ell}$ since the value of ℓ implicitly depends on both n and k. Hence, an interesting direction to explore is the introduction of another parameter γ that independently controls the rate at which perturbation magnitude decreases as a function of k; the parameter α would then control only the rate at which βk,n decreases between each of the n = 1, 2, …, N perturbation steps. This makes βk,n an explicit rather than an implicit function of n and k, eliminating the need to randomly increase ℓ between feasibility-seeking iterations to prevent perturbation magnitude decreasing too quickly as a function of k. By reformulating the perturbation magnitude as $\beta_{k,n} = \alpha^{n}\gamma^{f(k)}$, with 0 < α, γ < 1 and f(k) chosen such that $\lim_{k\to\infty} f(k) = \infty$ (e.g., f(k) = k), the rate at which βk,n decreases as a function of n and k can then be controlled independently while preserving the superiorization requirement that $\lim_{k\to\infty} \beta_{k,n} = 0$.
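As a hedged illustration of this proposed (and here untested) reformulation, the following Python sketch evaluates a perturbation magnitude of the form α^n · γ^f(k). The function name `beta_kn` and the default choice f(k) = k are assumptions made only for this example; no claim is made about which values of γ or which f(k) would be beneficial in practice.

```python
def beta_kn(k, n, alpha, gamma, f=lambda k: k):
    """Perturbation magnitude alpha**n * gamma**f(k).

    alpha controls the decay across the n = 1, ..., N perturbation steps within
    one feasibility-seeking iteration; gamma and f control the decay across
    iterations k. Both kernels must lie strictly between 0 and 1 so that the
    magnitude tends to 0 as f(k) grows without bound.
    """
    if not (0.0 < alpha < 1.0 and 0.0 < gamma < 1.0):
        raise ValueError("alpha and gamma must lie strictly between 0 and 1")
    return alpha ** n * gamma ** f(k)
```

With this form, the decay between perturbation steps (through α) and the decay between feasibility-seeking iterations (through γ and f) can be set separately, and no random adjustment of an exponent is involved.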

VII. Conclusions

The investigations performed in this work demonstrate that the modifications implemented by the NTVS algorithm provide clear advantages over the OTVS algorithm in terms of both quality and computational cost. Future work should include investigating whether varying parameters during reconstruction or controlling the decrease of the perturbation magnitude independently during iterations and repeated perturbation steps can further increase the advantages of the NTVS algorithm.

Supplementary Material

Acknowledgment

We greatly appreciate the constructive comments of three anonymous reviewers, which helped us to significantly improve this paper. The research in proton CT was supported by the National Institute of Biomedical Imaging and Bioengineering (NIBIB) of the National Institutes of Health (NIH) and the National Science Foundation (NSF) award number R01EB013118, and the United States - Israel Binational Science Foundation (BSF) grant no. 2009012, and is currently supported by BSF grant no. 2013003. The content of this paper is solely the responsibility of the authors and does not necessarily represent the official views of NIBIB or NIH. The support of UT Southwestern and State of Texas through a Seed Grants in Particle Therapy award is gratefully acknowledged.

Appendix A Definition of Terms

The list below defines the terms and mathematical notation used in describing the OTVS and NTVS algorithms:

- k : overall cycle #, i.e., k-th iteration of feasibility-seeking and TV perturbations.

- K : total # of cycles, i.e., total # of iterations of feasibility-seeking and TV perturbations.

- n : TV perturbation step #, 1 ≤ n ≤ N.

- xk : image vector x at cycle k.

- x0 : initial iterate of image reconstruction.

- N : # of TV perturbation steps per feasibility-seeking iteration.

- α : perturbation kernel, 0 < α < 1.

- ℓk : perturbation kernel exponent.

- βk,n : perturbation coefficient $\beta_{k,n} = \alpha^{\ell_k}$ at TV perturbation step n and feasibility-seeking iteration k.

- ϕ : the target function to which superiorization is applied; here, ϕ = TV, the total variation of the image vector.

- ϕ(xk,n) : TV of image vector xk,n at TV perturbation step n and feasibility-seeking iteration k.

- vk,n : normalized non-ascending perturbation vector for ϕ at xk,n, written vk,n = ϕ′(xk,n) in Appendix B.

- PT : projection operator representative of an iterative feasibility-seeking algorithm.

Appendix B NTVS Algorithm

A pseudocode definition of the NTVS algorithm is written as follows:

    set k = 0
    set ℓ_{−1} = 0
    set x_0 to the initial iterate
    while k < K do
        set n = 0
        set ℓ_k = rand(k, ℓ_{k−1})
        set x_{k,n} = x_k
        while n < N do
            set v_{k,n} = ϕ′(x_{k,n})
            set β_{k,n} = α^(ℓ_k)
            set x_{k,n+1} = x_{k,n} + β_{k,n} v_{k,n}
            set n = n + 1
            set ℓ_k = ℓ_k + 1
        end while
        set x_{k+1} = PT(x_{k,N})
        set k = k + 1
    end while

FOR REFERENCE ONLY: A pseudocode definition of the NTVS algorithm with the TV reduction requirement included:

    set k = 0
    set ℓ_{−1} = 0
    set x_0 to the initial iterate
    while k < K do
        set n = 0
        set ℓ_k = rand(k, ℓ_{k−1})
        set x_{k,n} = x_k
        while n < N do
            set v_{k,n} = ϕ′(x_{k,n})
            set β_{k,n} = α^(ℓ_k)
            set loop = true
            while loop do
                set z_{k,n} = x_{k,n} + β_{k,n} v_{k,n}
                if ϕ(z_{k,n}) ≤ ϕ(x_{k,n}) then
                    set x_{k,n} = z_{k,n}
                    set loop = false
                end if
                set ℓ_k = ℓ_k + 1
            end while
            set n = n + 1
        end while
        set x_{k+1} = PT(x_{k,N})
        set k = k + 1
    end while
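For readers who wish to experiment with the structure of the two listings above, a minimal Python sketch is given here. It is not the reconstruction code used in this work and makes several assumptions that should be read as such: `project` is a hypothetical placeholder for the feasibility-seeking operator PT; ϕ is taken to be an anisotropic TV of a 2D array with a sign-based subgradient; rand(k, ℓk−1) is interpreted as a uniformly random integer between k and ℓk−1 inclusive; and, in the variant with the TV reduction requirement, the perturbation magnitude is recomputed from the updated exponent on every retry, with a hypothetical `max_tries` cap so that the loop always terminates.

```python
import numpy as np


def tv(x):
    """Anisotropic total variation of a 2D array (an illustrative choice of phi)."""
    return np.abs(np.diff(x, axis=0)).sum() + np.abs(np.diff(x, axis=1)).sum()


def tv_nonascending(x):
    """Normalized negative subgradient of tv at x (zero array if it vanishes)."""
    g = np.zeros_like(x, dtype=float)
    sy = np.sign(np.diff(x, axis=0))
    sx = np.sign(np.diff(x, axis=1))
    g[:-1, :] -= sy
    g[1:, :] += sy
    g[:, :-1] -= sx
    g[:, 1:] += sx
    norm = np.linalg.norm(g)
    return -g / norm if norm > 0 else g


def ntvs(x0, project, K, N, alpha, rng=None, check_tv=False, max_tries=50):
    """Sketch of K cycles of N TV perturbations followed by project().

    check_tv=False follows the listing without the TV reduction requirement;
    check_tv=True retries a perturbation with a smaller magnitude until TV does
    not increase (or max_tries is reached, an assumption of this sketch).
    """
    rng = np.random.default_rng() if rng is None else rng
    x = np.asarray(x0, dtype=float).copy()
    ell_prev = 0
    for k in range(K):
        lo, hi = sorted((k, ell_prev))
        ell = int(rng.integers(lo, hi + 1))  # assumed meaning of rand(k, ell_{k-1})
        for _ in range(N):
            v = tv_nonascending(x)
            if not check_tv:
                x = x + alpha ** ell * v
                ell += 1
            else:
                for _ in range(max_tries):
                    z = x + alpha ** ell * v
                    ell += 1  # exponent grows after every attempt, accepted or not
                    if tv(z) <= tv(x):
                        x = z
                        break
        x = project(x)  # feasibility-seeking step (placeholder for PT)
        ell_prev = ell
    return x
```

A call such as `ntvs(image, project, K=12, N=5, alpha=0.75)` would correspond to one of the parameter combinations discussed in this work, provided that a suitable `project` callable is supplied by the user.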

Footnotes

supplemental materials are available in the supplementary files /multimedia tab

2×air, acrylic, polymethylpentene (PMP), low density polyethylene (LDPE), Teflon®, Delrin®, and polystyrene


References

- [1].Cormack A and Koehler A, “Quantitative proton tomography: preliminary experiments,” Physics in Medicine & Biology, vol. 21, no. 4, pp. 560–569, 1976. [Online]. Available: http://stacks.iop.org/0031-9155/21/i=4/a=007 [DOI] [PubMed] [Google Scholar]

- [2].Hanson KM, Bradbury JN, Cannon TM, Hutson RL, Laubacher DB, Macek R, Paciotti MA, and Taylor CA, “The application of protons to computed tomography,” IEEE Transactions on Nuclear Science, vol. 25, no. 1, pp. 657–660, February 1978. [Google Scholar]

- [3].Hanson KM, “Proton computed tomography,” IEEE Transactions on Nuclear Science, vol. 26, no. 1, pp. 1635–1640, February 1979. [Google Scholar]

- [4].Hanson KM, Bradbury JN, Cannon TM, Hutson RL, Laubacher DB, Macek RJ, Paciotti MA, and Taylor CA, “Computed tomography using proton energy loss,” Physics in Medicine & Biology, vol. 26, no. 6, pp. 965–983, 1981. [Online]. Available: http://stacks.iop.org/0031-9155/26/i=6/a=001 [DOI] [PubMed] [Google Scholar]

- [5].Johnson RP, Bashkirov VA, Coutrakon G, Giacometti V, Karbasi P, Karonis NT, Ordoñez C, Pankuch M, Sadrozinski HF-W, Schubert KE, and Schulte RW, “Results from a prototype proton-CT head scanner,” Conference on the Application of Accelerators in Research and Industry, CAARI 2016, 30 October - 4 November 2016, Ft. Worth, TX, USA, Jul 2017. [Online]. Available: https://arxiv.org/pdf/1707.01580 [Google Scholar]

- [6].Bashkirov VA, Johnson RP, Sadrozinski HF-W, and Schulte RW, “Development of proton computed tomography detectors for applications in hadron therapy,” Nuclear Instruments & Methods in Physics Research Section A: Accelerators, Spectrometers, Detectors and Associated Equipment, vol. 809, pp. 120–129, 2016. [Online]. Available: http://www.sciencedirect.com/science/article/pii/S0168900215009274 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [7].Bruzzi M, Civinini C, Scaringella M, Bonanno D, Brianzi M, Carpinelli M, Cirrone G, Cuttone G, Presti DL, Maccioni G, Pallotta S, Randazzo N, Romano F, Sipala V, Talamonti C, and Vanzi E, “Proton computed tomography images with algebraic reconstruction,” Nuclear Instruments & Methods in Physics Research Section A: Accelerators, Spectrometers, Detectors and Associated Equipment, vol. 845, pp. 652–655, May 2017, Proceedings of the Vienna Conference on Instrumentation 2016. [Online]. Available: http://www.sciencedirect.com/science/article/pii/S0168900216304454 [Google Scholar]

- [8].Johnson RP, “Review of medical radiography and tomography with proton beams,” Reports on Progress in Physics, vol. 81, no. 1, p. 016701, 2018. [Online]. Available: http://stacks.iop.org/0034-4885/81/i=1/a=016701 [DOI] [PubMed] [Google Scholar]

- [9].Paganetti H, “Range uncertainties in proton therapy and the role of Monte Carlo simulations,” Physics in Medicine & Biology, vol. 57, no. 11, pp. R99–R117, May 2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [10].Schulte RW, Penfold SN, Tafas J, and Schubert KE, “A maximum likelihood proton path formalism for application in proton computed tomography,” Medical Physics, vol. 35, pp. 4849–4856, November 2008. [DOI] [PubMed] [Google Scholar]

- [11].Fekete C-AC, Doolan P, Dias MF, Beaulieu L, and Seco J, “Developing a phenomenological model of the proton trajectory within a heterogeneous medium required for proton imaging,” Physics in Medicine & Biology, vol. 60, no. 13, pp. 5071–5082, 2015. [Online]. Available: http://stacks.iop.org/0031-9155/60/i=13/a=5071

- [12].Penfold SN, Schulte RW, Censor Y, Bashkirov VA, McAllister SA, Schubert KE, and Rosenfeld AB, “Block-iterative and string-averaging projection algorithms in proton computed tomography image reconstruction,” in Biomedical Mathematics: Promising Directions in Imaging, Therapy Planning and Inverse Problems, Censor Y, Jiang M, and Wang G, Eds., The Huangguoshu International Interdisciplinary Conference. Madison, WI, USA: Medical Physics, 2010, pp. 347–367.

- [13].Herman GT, Garduño E, Davidi R, and Censor Y, “Superiorization: An optimization heuristic for medical physics,” Medical Physics, vol. 39, no. 9, pp. 5532–5546, 2012. [Online]. Available: https://doi.org/10.1118/1.4745566

- [14].Penfold SN, Schulte RW, Censor Y, and Rosenfeld AB, “Total variation superiorization schemes in proton computed tomography image reconstruction,” Medical Physics, vol. 37, pp. 5887–5895, 2010.

- [15].Censor Y, Davidi R, and Herman GT, “Perturbation resilience and superiorization of iterative algorithms,” Inverse Problems, vol. 26, p. 065008, June 2010.

- [16].Censor Y, “Weak and strong superiorization: Between feasibility-seeking and minimization,” Analele Stiintifice ale Universitatii Ovidius Constanta, Seria Matematica, vol. 23, pp. 41–54, October 2014.

- [17].Censor Y, Davidi R, Herman GT, Schulte RW, and Tetruashvili L, “Projected subgradient minimization versus superiorization,” Journal of Optimization Theory and Applications, vol. 160, no. 3, pp. 730–747, March 2014. [Online]. Available: https://doi.org/10.1007/s10957-013-0408-3

- [18].Censor Y, “Superiorization and perturbation resilience of algorithms: A bibliography compiled and continuously updated.” [Online]. Available: http://math.haifa.ac.il/yair/bib-superiorization-censor.html

- [19].Censor Y, Herman GT, and Jiang M, “Superiorization: Theory and applications,” Special Issue of Inverse Problems, vol. 33, no. 4, p. 040301, 2017. [Online]. Available: http://stacks.iop.org/0266-5611/33/i=4/a=040301

- [20].Humphries T, Winn J, and Faridani A, “Superiorized algorithm for reconstruction of CT images from sparse-view and limited-angle polyenergetic data,” Physics in Medicine & Biology, vol. 62, no. 16, pp. 6762–6783, 2017. [Online]. Available: http://stacks.iop.org/0031-9155/62/i=16/a=6762

- [21].Helou E, Zibetti M, and Miqueles E, “Superiorization of incremental optimization algorithms for statistical tomographic image reconstruction,” Inverse Problems, vol. 33, no. 4, p. 044010, 2017. [Online]. Available: http://stacks.iop.org/0266-5611/33/i=4/a=044010

- [22].Garduño E and Herman GT, “Computerized tomography with total variation and with shearlets,” Inverse Problems, vol. 33, no. 4, p. 044011, 2017. [Online]. Available: http://stacks.iop.org/0266-5611/33/i=4/a=044011

- [23].Yang Q, Cong W, and Wang G, “Superiorization-based multi-energy CT image reconstruction,” Inverse Problems, vol. 33, no. 4, p. 044014, 2017. [Online]. Available: http://stacks.iop.org/0266-5611/33/i=4/a=044014

- [24].Penfold SN and Censor Y, “Techniques in iterative proton CT image reconstruction,” Sensing and Imaging, vol. 16, no. 1, October 2015. [Online]. Available: https://doi.org/10.1007/s11220-015-0122-3

- [25].Bracewell R and Riddle A, “Inversion of fan beam scans in radio astronomy,” Astrophysical Journal, vol. 150, pp. 427–434, 1967.

- [26].Ramachandran G and Lakshminarayanan A, “Three dimensional reconstructions from radiographs and electron micrographs: Application of convolution instead of Fourier transforms,” Proceedings of the National Academy of Sciences, USA, vol. 68, pp. 2236–2240, 1971.

- [27].Chambolle A, Caselles V, Novaga M, Cremers D, and Pock T, “An introduction to total variation for image analysis,” in Theoretical Foundations and Numerical Methods for Sparse Recovery, De Gruyter, 2010.

- [28].Censor Y, “Can linear superiorization be useful for linear optimization problems?” Inverse Problems, vol. 33, no. 4, p. 044006, 2017. [Online]. Available: http://stacks.iop.org/0266-5611/33/i=4/a=044006

- [29].Herman GT and Davidi R, “Image reconstruction from a small number of projections,” Inverse Problems, vol. 24, no. 4, p. 045011, 2008. [Online]. Available: http://stacks.iop.org/0266-5611/24/i=4/a=045011

- [30].Davidi R, Herman GT, and Censor Y, “Perturbation-resilient block-iterative projection methods with application to image reconstruction from projections,” International Transactions in Operational Research, vol. 16, no. 4, pp. 505–524, 2009. [Online]. Available: https://onlinelibrary.wiley.com/doi/abs/10.1111/j.1475-3995.2009.00695.x

- [31].Butnariu D, Davidi R, Herman GT, and Kazantsev IG, “Stable convergence behavior under summable perturbations of a class of projection methods for convex feasibility and optimization problems,” IEEE Journal of Selected Topics in Signal Processing, vol. 1, no. 4, pp. 540–547, December 2007.

- [32].Langthaler O, “Incorporation of the superiorization methodology into biomedical imaging software,” Salzburg University of Applied Sciences, Salzburg, Austria, and the Graduate Center of the City University of New York, NY, USA, Marshall Plan Scholarship Report, September 2014, 76 pages.

- [33].Prommegger B, “Verification and evaluation of superiorized algorithms used in biomedical imaging: Comparison of iterative algorithms with and without superiorization for image reconstruction from projections,” Salzburg University of Applied Sciences, Salzburg, Austria, and the Graduate Center of the City University of New York, NY, USA, Marshall Plan Scholarship Report, October 2014, 84 pages.

- [34].Havas C, “Revised implementation and empirical study of maximum likelihood expectation maximization algorithms with and without superiorization in image reconstruction,” Salzburg University of Applied Sciences, Salzburg, Austria, and the Graduate Center of the City University of New York, NY, USA, Marshall Plan Scholarship Report, October 2016, 49 pages.

- [35].Agostinelli S, Allison J, Amako K et al., “Geant4 - a simulation toolkit,” Nuclear Instruments & Methods in Physics Research Section A: Accelerators, Spectrometers, Detectors and Associated Equipment, vol. 506, no. 3, pp. 250–303, 2003. [Online]. Available: http://www.sciencedirect.com/science/article/pii/S0168900203013688

- [36].Schultze BE, Karbasi P, Giacometti V, Plautz TE, Schubert KE, and Schulte RW, “Reconstructing highly accurate relative stopping powers in proton computed tomography,” in Proceedings of the IEEE Nuclear Science Symposium & Medical Imaging Conference (NSS/MIC) 2015, Oct 2015, pp. 1–3.

- [37].Giacometti V, Bashkirov VA, Piersimoni P, Guatelli S, Plautz TE, Sadrozinski HF-W, Johnson RP, Zatserklyaniy A, Tessonnier T, Parodi K, Rosenfeld AB, and Schulte RW, “Software platform for simulation of a prototype proton CT scanner,” Medical Physics, vol. 44, no. 3, pp. 1002–1016, 2017. [Online]. Available: https://doi.org/10.1002/mp.12107

- [38].Censor Y, Elfving T, Herman GT, and Nikazad T, “On diagonally relaxed orthogonal projection methods,” SIAM Journal on Scientific Computing, vol. 30, no. 1, pp. 473–504, 2008. [Online]. Available: https://doi.org/10.1137/050639399

- [39].Rueden CT, Schindelin J, Hiner MC, DeZonia BE, Walter AE, Arena ET, and Eliceiri KW, “ImageJ2: ImageJ for the next generation of scientific image data,” BMC Bioinformatics, vol. 18, no. 1, p. 529, November 2017. [Online]. Available: https://doi.org/10.1186/s12859-017-1934-z

- [40].Dedes G, Angelis LD, Rit S, Hansen D, Belka C, Bashkirov VA, Johnson RP, Coutrakon G, Schubert KE, Schulte RW, Parodi K, and Landry G, “Application of fluence field modulation to proton computed tomography for proton therapy imaging,” Physics in Medicine & Biology, vol. 62, no. 15, pp. 6026–6043, 2017. [Online]. Available: http://stacks.iop.org/0031-9155/62/i=15/a=6026

- [41].Dedes G, Johnson RP, Pankuch M, Detrich N, Pols WMA, Rit S, Schulte RW, Parodi K, and Landry G, “Experimental fluence modulated proton computed tomography by pencil beam scanning,” Medical Physics, vol. 45, pp. 3287–3296, May 2018.

- [42].Schultze BE, Witt M, Censor Y, Schubert KE, and Schulte RW, “Performance of hull-detection algorithms for proton computed tomography reconstruction,” in Infinite Products of Operators and Their Applications, ser. Contemporary Mathematics, Reich S and Zaslavski A, Eds., vol. 636. American Mathematical Society, 2015, pp. 211–224.
