Abstract

Purpose:

Electronic clinical decision support (CDS) for treatment of community-acquired pneumonia (ePNa) is associated with improved guideline adherence and decreased mortality. How rural providers respond to CDS developed for urban hospitals could shed light on extending CDS to resource-limited settings.

Methods:

ePNa was deployed into 10 rural and critical access hospital emergency departments (EDs) in Utah and Idaho in 2018. We reviewed pneumonia cases identified through ICD-10 codes after local deployment to measure ePNa utilization and guideline adherence. ED providers were surveyed to assess quantitative and qualitative aspects of satisfaction.

Findings:

ePNa was used in 109/301 patients with pneumonia (36%, range 0%−67% across hospitals) and was associated with appropriate antibiotic selection (93% vs 65%, P < .001). Fifty percent of survey recipients responded, 87% were physicians, 87% were men, and the median ED experience was 10 years. Mean satisfaction with ePNa was 3.3 (range 1.7–4.8) on a 5-point Likert scale. Providers with a favorable opinion of ePNa were more likely to favor implementation of additional CDS (P = .005). Satisfaction was not associated with provider type, age, years of experience or experience with ePNa. Ninety percent of respondents provided qualitative feedback. The most common theme in high and low utilization hospitals was concern about usability. Compared to high utilization hospitals, low utilization hospitals more frequently identified concerns about adaptation for local needs.

Conclusions:

ePNa deployment to rural and critical access EDs was moderately successful and associated with improved antibiotic use. Concerns about usability and adapting ePNa for local use predominated the qualitative feedback.

Keywords: care process, decision support, emergency department, pneumonia

Community-acquired pneumonia (CAP) is among the most common reasons for emergency department (ED) visits, hospitalizations, and death for adults in the United States.1,2 With almost 20% of Americans living in rural areas,3 CAP care needs to be optimized in rural hospitals. Optimizing care at rural hospitals will be achieved, in part, through implementation of electronic clinical decision support (CDS). Despite research showing improved clinical outcomes when using CDS, penetrance of CDS for pneumonia care into rural (RH) and critical access hospitals (CAH) is incomplete, and its absence is associated with lower quality measures.4 Assessing the response of rural providers to adopting CDS is important to extending the use of CDS in settings beyond where it was initially developed.

In 2018, Intermountain Healthcare (a large, integrated, non-profit health system based in Utah) deployed its electronic CDS tool (ePNa) for the diagnosis and treatment of pneumonia to 10 RH and CAH within its network. Originally deployed into 4 medium to large urban hospitals in 2011, ePNa use in those hospitals was associated with lower mortality and decreased use of broad spectrum antibiotics among patients treated in the ED for CAP.5, 6 However, how ePNa would perform when repurposed for rural settings and how it would be received by providers with unique demands and roles was uncertain.

Methods

Description of Clinical Decision Support Tool

Derivation and features of this clinical decision support tool (ePNa) have previously been published.7 No comparable electronic CDS relevant to pneumonia has been published.8 ePNa incorporates the Five Rights Framework for CDS9 by integrating a pneumonia detection system with a management tool that delivers information to ED clinicians. The system utilizes presenting symptoms, physical exam, and laboratory and radiographic findings extracted from the electronic medical record (EMR) to detect patients with potential pneumonia. The clinical management system of ePNa measures severity of illness to recommend the safest disposition for each patient (outpatient, inpatient, or intensive care), appropriate antibiotics, and indicated microbiology studies. Disposition thresholds and decision-making have previously been validated.10–12 Decision logic was initially adapted from the 2007 Infectious Disease Society of America (IDSA)/American Thoracic Society (ATS) pneumonia treatment guideline.13 New IDSA/ATS guidelines14 became available in October 2019 and updates are now reflected in ePNa decision logic.

Setting and Deployment Methods

We included all 10 rural and critical access hospitals in Idaho and Utah operated by Intermountain Healthcare (Table 1). ePNa was deployed into each hospital in 3 phases for both operational and methodologic reasons. Physicians and advanced practice providers (APPs) caring for patients in the ED of these hospitals were first introduced to ePNa in a didactic, interactive session. Use was reinforced with 1-page “cheat sheets” posted prominently in each ED. Providers then received one-on-one instruction on how to use ePNa during their clinical shifts using a test patient and a clinical coach. Providers also met with an ED nurse educator who instructed them on ePNa. In addition, we identified “clinical champions” to encourage ePNa use among their peers. Regular feedback was provided in the format of case reviews sent to the clinical leaders and champions of each ED for review and local distribution. Data sent to each facility included patient identifier, attending of record, whether the ePNa tool was used, whether individual patients received guideline-concordant best practice pneumonia care, and, if the ePNa tool was not used, how its use might have changed management. Case reviewers were also careful to note where deviation from ePNa recommendations reflected appropriate clinical judgement.

Table 1.

Hospital Characteristics

| Hospital | Admissions | ED visits |
|---|---|---|
| RH1 | 3,221 | 18,012 |
| RH2 | 2,124 | 11,453 |
| RH3 | 549 | 5,090 |
| RH4 | 1,139 | 7,348 |
| RH5 | 1,987 | 10,655 |
| RH6 | 543 | 6,061 |
| CAH1 | 826 | 6,223 |
| CAH2 | 216 | 1,699 |
| CAH3 | 332 | 2,460 |
| CAH4 | 319 | 2,687 |

Hospital admissions and ED visits during 2018. RH = Rural Hospital, CAH = Critical Access Hospital

Case Reviews

Patients with pneumonia for case review and utilization assessment were identified retrospectively by ICD-10 codes (A48.1, B01.2, J10.0, J11.0, J85.1, J12.*, J16.*, J18.*) and a concurrent chest imaging order in the ED. Reviews were done by the coauthors at regular intervals from April 2018 through November 2019 after each hospital’s implementation date. Reviewers could not be blinded as access to the chart was required for review and ePNa use is automatically documented in the ED providers’ notes.
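The case-identification rule above can be sketched in code. This is a minimal illustration only, not the authors' query: the function names are hypothetical, and treating the non-wildcard codes as exact matches is an assumption.

```python
from fnmatch import fnmatchcase

# ICD-10 patterns listed in the case-review criteria; "*" matches any suffix.
# Assumption: codes written without "*" (e.g. J10.0) are matched exactly.
PNEUMONIA_ICD10_PATTERNS = [
    "A48.1", "B01.2", "J10.0", "J11.0", "J85.1",
    "J12.*", "J16.*", "J18.*",
]


def is_pneumonia_code(code: str) -> bool:
    """Return True if an ICD-10 code matches one of the listed patterns."""
    normalized = code.strip().upper()
    return any(fnmatchcase(normalized, p) for p in PNEUMONIA_ICD10_PATTERNS)


def eligible_for_review(icd10_codes, has_ed_chest_imaging_order: bool) -> bool:
    """A visit qualifies if it has a matching code AND a chest imaging order in the ED."""
    return bool(has_ed_chest_imaging_order) and any(
        is_pneumonia_code(c) for c in icd10_codes
    )
```

For example, a visit coded `J18.9` with a chest imaging order in the ED would be pulled for review, while the same code without an imaging order would not.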

In any given period, charts for all patients meeting the above criteria at an individual hospital were pulled for review. We excluded patients with a primary diagnosis other than pneumonia (eg, heart failure) at the time of ED presentation, based upon the judgement of the reviewing clinician with deference to the clinical judgement of the local ED provider (exclusion required convincing evidence of an alternative diagnosis). ePNa was considered “utilized” if the physician completed the full CDS pathway in the ED. Utilization was calculated as the percentage of patients with pneumonia for whom ePNa was completed. Determination of the appropriateness of antibiotic spectrum was based on the Drug Resistance in Pneumonia (DRIP) score,15 and dose and duration were based on the 2007 IDSA/ATS guidelines13 and Intermountain institutional policies in place prior to ePNa rollout. Disposition between hospital and outpatient care was adjudicated based on electronic CURB score, PaO2:FiO2, and/or presence of a significant parapneumonic effusion. Inpatient disposition was adjudicated based on the severe CAP criteria, with a severe CAP score <3 implying general floor, a score of 3 left to clinician discretion, and a score >3 implying intensive care unit.10, 16 In 7 of the hospitals, admission to intensive care required transfer to a different facility.
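As a rough sketch of two of the rules described above, the snippet below computes utilization and maps a severe CAP score to the adjudicated inpatient level. The function names are hypothetical, and the sketch covers only the thresholds stated in the text; it does not model the electronic CURB, PaO2:FiO2, or effusion criteria, for which no cutoffs are given here.

```python
def utilization_percent(completed: int, pneumonia_cases: int) -> float:
    """Percentage of pneumonia patients for whom the full ePNa pathway was completed."""
    if pneumonia_cases <= 0:
        raise ValueError("pneumonia_cases must be positive")
    return 100.0 * completed / pneumonia_cases


def inpatient_level(severe_cap_score: int) -> str:
    """Map a severe CAP score to the inpatient level used for adjudication."""
    if severe_cap_score < 3:
        return "general floor"
    if severe_cap_score == 3:
        return "clinician discretion"
    return "intensive care unit"
```

With the counts reported in the Results (109 completed pathways among 301 reviewed pneumonia cases), `utilization_percent(109, 301)` rounds to 36.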

Survey

Physicians and APPs received an email invitation to complete the anonymous survey with up to 3 reminders if they were an ED provider at 1 of the included hospitals during August 2019. Participation was incentivized by offering a total of 7 $100 gift certificates that were randomly awarded after survey completion. Our survey was adapted from a prior, validated, 31-question survey that was used to assess ePNa use at 4 urban hospitals within Intermountain Healthcare.17 The survey was built and administered through REDCap (Vanderbilt University) by a study author who anonymized participant responses prior to review by other authors. The survey collected data on provider gender, experience, work location, and satisfaction with the ePNa tool across domains of ePNa use and function (complete survey available in supplement). Satisfaction with the ePNa tool was assessed on a 5-point Likert scale.

Institutional Review Board

The Intermountain Healthcare institutional review board approved all aspects of this work (IRB 1050688); survey respondents consented in their response to the first survey question.

Statistical Analysis

Provider satisfaction was compared across demographic domains based on responses to Likert-scale questions. We used principal components analysis to check whether our satisfaction questions were unidimensional (ie, loaded onto the same component). We ran a reliability analysis of questions loading onto the same component using Cronbach’s Alpha as a measure of internal consistency. Internally consistent questions loading onto the same component were aggregated into a single score representing satisfaction.
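For readers unfamiliar with the reliability step, Cronbach’s Alpha can be computed from item-level responses as k/(k − 1) × (1 − sum of item variances / variance of the total score). The sketch below is illustrative only; the function name and the toy data are assumptions, not the study’s analysis code or survey data.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores.

    Uses sample variances: alpha = k/(k-1) * (1 - sum(item variances) / variance(totals)).
    """
    if len(responses) < 2:
        raise ValueError("need at least 2 respondents")
    k = len(responses[0])
    if k < 2:
        raise ValueError("need at least 2 items")
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Toy data (hypothetical): 3 respondents answering 3 Likert items identically,
# which gives perfectly consistent items.
toy = [[1, 1, 1], [2, 2, 2], [3, 3, 3]]
alpha = cronbach_alpha(toy)
```

Values close to 1 indicate that the items move together, which is the justification for averaging them into a single satisfaction score.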

Student’s t-test, one-way ANOVA and Pearson correlation were used to evaluate differences in satisfaction based on demographics. The Chi-squared test was used to assess dichotomous process outcomes from case reviews. All analyses are considered exploratory for targeting further interventions and for modifying the tool, thus no adjustments were made for multiple comparisons.

Qualitative data were divided into responses from hospitals with consistently high utilization (RH 2 and 6) and all others. Responses were reviewed by 1 author and coded into unique themes then grouped into unifying themes. Coding was reviewed by a second author who independently assessed themes and groupings. Disagreement was adjudicated by the 2 coding authors. If a comment had more than 1 theme, each was coded and tabulated separately.

Results

Patient Case Reviews

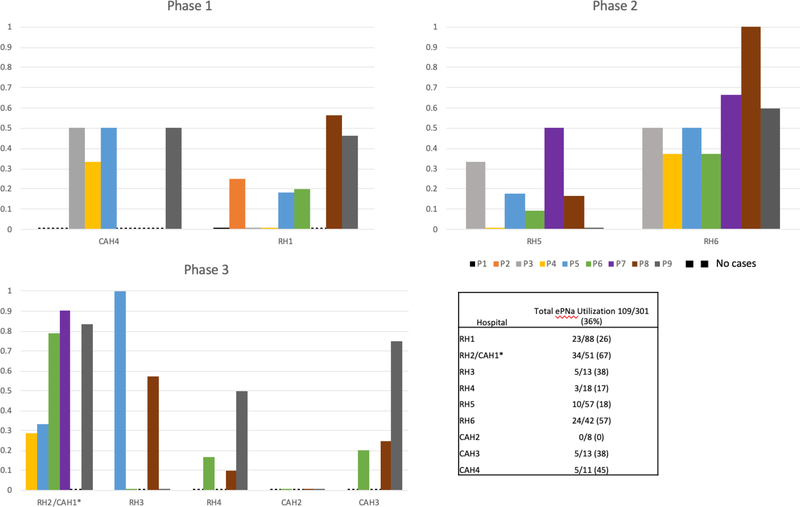

Three hundred fifteen cases were reviewed and 14 excluded for a primary diagnosis other than pneumonia being more likely on presentation. Overall utilization of ePNa by ED providers was 36% (109/301). Utilization varied greatly both between hospitals and between time periods at the same hospital (Figure 1). Results of the individual case review are shown in Table 2. Use of ePNa was associated with greater probability of appropriate antibiotic use (93% vs 65%, P < .001), but not guideline-concordant disposition (95% vs 92%, P = .22) or a diagnosis other than pneumonia being more likely on review (5% vs 7%, P = .35).

Figure 1: ePNa Utilization Rates By Hospital. Aggregate and Individual Assessment Periods.

Phase 1: first group of facilities where ePNa was deployed; Phase 2: second group; Phase 3: third group. * results for these two hospitals are pooled as their EDs are covered by the same group of providers.

Table 2.

Summary of Case Reviews

| Case Reviews | Tool Used (n=109), n (%) | Not Used (n=192), n (%) |
|---|---|---|
| Guideline Concordant Disposition | 104 (95) | 176 (92) |
| Alternative Diagnosis More Likely | 5 (5) | 14 (7) |
| Appropriate Antibiotics | 101 (93) | 125 (65) |

Guideline-concordant disposition means the disposition agreed with the ePNa recommendation. Alternative diagnosis more likely means the clinical reviewer felt that an alternative diagnosis to pneumonia was more likely. Appropriate antibiotics were determined according to DRIP score.
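The antibiotic comparison can be checked with a Pearson chi-squared test on the 2×2 counts in Table 2. The sketch below is a minimal illustration, assuming no continuity correction (the text does not say whether one was applied); the function name is hypothetical.

```python
from math import erfc, sqrt


def chi_squared_2x2(a: int, b: int, c: int, d: int):
    """Pearson chi-squared test (1 df, no continuity correction) for the table

        [[a, b],
         [c, d]]

    Returns (statistic, p_value).
    """
    n = a + b + c + d
    denominator = (a + b) * (c + d) * (a + c) * (b + d)
    if denominator == 0:
        raise ValueError("a row or column total is zero")
    statistic = n * (a * d - b * c) ** 2 / denominator
    # For 1 degree of freedom, the upper-tail probability is erfc(sqrt(x / 2)).
    p_value = erfc(sqrt(statistic / 2))
    return statistic, p_value


# Appropriate antibiotics: 101 of 109 with ePNa used vs 125 of 192 without.
stat, p = chi_squared_2x2(101, 109 - 101, 125, 192 - 125)
```

For these counts the statistic is about 28 on 1 degree of freedom, consistent with the reported P < .001.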

Survey Demographics

Survey invitations were sent to 62 rural providers. Of the 31 (50%) who responded, 27 (87%) were physicians, 3 (10%) were physician assistants, and 1 (3%) was a nurse practitioner. Of these, 87% were male, 45% worked in multiple EDs, and 48% worked in clinics affiliated with the same hospital and/or provided inpatient care at those same facilities. Complete demographic data for respondents are listed in Table 3. Seventy-seven percent of respondents reported a history of managing patients with ePNa: 23% of all respondents reported ePNa use between 1 and 5 times, 10% reported use 6–10 times, 19% reported use 11–15 times, 6% reported use 16–20 times, and 19% reported use more than 20 times. Twenty-nine percent of respondents reported they were “beginner” users, 29% “intermediate” users, 16% “veteran” users, and 3% “expert” users.

Table 3.

Demographics and ePNa Experience Among Survey Respondents

| Characteristic | % (n) |
|---|---|
| Male | 87% (27) |
| Age groups | |
| 31–40 | 45% (14) |
| 41–50 | 23% (7) |
| 51–60 | 26% (8) |
| 61–70 | 6% (2) |
| Provider Type | |
| Physician | 87% (27) |
| Physician Assistant | 10% (3) |
| Nurse Practitioner | 3% (1) |
| ED Experience, years; median (IQR) | 10 (6–17.5) |
| Work in more than 1 ED | 45% (14) |
| Work outside of ED | 48% (15) |
| % of Time with dual role as admitting clinician; median (IQR) | 6 (0–50) |
| Have used ePNa | 77% (24) |
| Self-Reported Experience Level | |
| Beginner | 29% (9) |
| Intermediate | 29% (9) |
| Veteran | 16% (5) |
| Expert | 3% (1) |
| Number of Patients Managed | |
| 1–5 | 23% (7) |
| 6–10 | 10% (3) |
| 11–15 | 19% (6) |
| 16–20 | 6% (2) |
| More than 20 | 19% (6) |

IQR: Inter-Quartile Range

Satisfaction Score

In the principal components analysis, satisfaction questions loaded onto the same component with coefficient values 0.42 or higher. With the exception of 1 question (Q23, see supplement), all questions had coefficient loading values ranging from 0.54 to 0.90. The Cronbach’s Alpha value of questions loading onto the satisfaction component was 0.92, indicating excellent internal consistency. Having established which questions are a valid and reliable measure of satisfaction, we aggregated them into a single score representing satisfaction.

User Satisfaction

Overall satisfaction averaged 3.3 (standard deviation [SD] 0.8) on a scale of 1–5, with higher scores indicating greater satisfaction (Table 4). Providers who wanted more CDS were more satisfied than those who did not (mean 3.6, SD 0.6 vs 2.7, SD 0.6; P = .005). Between the 2 hospitals with consistently high ePNa utilization (RH2 and RH6), satisfaction differed significantly (2.5 vs 3.8, P = .003).

Table 4.

Satisfaction Scores, Detail

| Category | Mean (SD) | P value |
|---|---|---|
| Overall Satisfaction | 3.3 (0.75) | |
| Desire more CDS | | |
| Yes | 3.6 (0.64) | |
| No | 2.7 (0.65) | .005 |
| Provider type | | |
| Physician | 3.3 (0.79) | |
| APP | 3.5 (0.36) | .69 |
| Level of experience (self-reported) | | |
| Beginner | 3.0 (0.67) | |
| Intermediate | 3.2 (0.78) | |
| Veteran | 3.6 (0.49) | |
| Expert | 4.8 (n/a) | .09 |
| # of patients managed (self-reported) | | .83 |
| Years of Experience | | .53 |
| Age Groups | | .49 |
| % of time with additional duties | | .45 |
| Time to receive radiology report | | .74 |
| Clinicians who work elsewhere | | .60 |
| EDs with high utilization | | |
| RH6 | 3.8 (0.62) | |
| RH2/CAH1 | 2.5 (0.56) | .003 |
| High vs Low Utilization EDs | | .42 |

SD: Standard Deviation

There were no significant differences in satisfaction between physicians and APPs (P = .69), age groups (P = .49), number of patients managed (P = .83), and between physicians who only work in the ED and those who work elsewhere (P = .60). The association between satisfaction and years worked was not significant (P = .53).

The effect of self-reported experience with ePNa on satisfaction was not significant (P = .09); however, the small sample size likely left this comparison underpowered. Notably, mean satisfaction rose with each level of self-reported experience (Table 4).

Qualitative Responses

Ninety percent (28/31) of survey respondents responded to the 5 questions eliciting free-text input about tool use. The most common theme among responses from both high and low utilization hospitals was “improve usability” (eg, “This needs to be streamlined and work more smoothly” and “Still would like to have less ‘clicking’”). The next most common themes were “unrecognized opportunity to use tool” and “utilize more inputs” for the high utilization hospitals and “adapt for local needs” for the low utilization hospitals. Further results are shown in Table 5.

Table 5.

Summary of Themes in Survey Responses

| Theme | Count |
|---|---|
| High Utilization Hospitals | |
| Improve usability | 7 |
| Did not recognize pneumonia | 3 |
| Take more information into account | 3 |
| Eliminate tool | 1 |
| Improve accuracy | 1 |
| Loss of autonomy | 1 |
| Distrust of management motives | 1 |
| Low Utilization Hospitals | |
| Improve usability | 8 |
| Adapt for local use | 5 |
| Unrecognized opportunity to use tool | 3 |
| Improve accuracy | 2 |
| Tool part of flawed EMR | 2 |
| Eliminate tool | 1 |
| Doesn’t improve current workflow | 1 |

EMR: Electronic Medical Record

When asked about additional CDS clinicians would like to implement, responses from high utilization facilities indicated that cardiovascular syndromes were of greatest interest, followed by infectious etiologies. Low utilization facilities indicated that infectious etiologies were of greatest interest, followed by cardiovascular syndromes. Complete data on individual survey questions are included in the supplement.

Discussion

Deploying CDS to settings beyond where it was originally designed and validated poses unique challenges and opportunities. While a “cut-and-paste” approach may reduce the total resources associated with developing and implementing CDS, the needs of local providers and their unique work circumstances may ultimately determine whether CDS is truly scalable or remains limited to its origin ecosystems.

In our effort to extend ePNa, we found it was utilized in 36% of reviewed encounters from the 10 rural and critical access hospitals involved in this effort, lower than the 63% observed in the 4 original urban hospitals.5 Utilization tended to increase over time, especially at 3 hospitals (RH2, CAH1, and RH6) where the last 3 assessments averaged 80% use of the tool. The difference in guideline-concordant antibiotic use between ePNa use and non-use (93% vs 65%, 28 percentage points) was greater than observed in urban hospitals.5 Guideline-concordant antibiotic prescribing has been shown to play an important role in improving outcomes13 and preventing complications of treatment,18 emphasizing the potential positive impacts beyond guideline adherence. Diagnostic accuracy and guideline-concordant disposition were lower without ePNa use, although these differences did not reach statistical significance. Whether ePNa deployment across Intermountain hospitals was associated with improved clinical outcomes is being investigated and will be the subject of a future report.

Survey responses came from ED providers with median experience of 10 years. These rural providers differ fundamentally from physicians who cover urban tertiary/quaternary EDs in that 48% of rural providers performed other clinical roles at the same rural institution outside of the ED. That is, they served as ED clinicians, primary care providers, and hospitalists, often in the same 24-hour period. Qualitative feedback elicited on our provider survey likely reflects the impact of diverse roles but also general concerns with CDS implementation (with usability as the most common concern). The fact that low utilization hospitals had more concerns about adaptation of ePNa for local use is notable. While this may reflect misalignment between the stated objectives for using the tool and providers’ perceived local needs, whether better implementation approaches, leadership structure, or education would have led to improved use is unclear and is not addressed by our survey instrument. Changes to ePNa being actively investigated that may impact clinician perceptions of tool value include incorporation of real-time artificial intelligence for image interpretation. However, further encroachment of technology may not be seen as an advantage by at least several of the providers we surveyed.

Despite differences in adaptation, overall satisfaction with ePNa among clinicians was fair to good (3.3 on a 5-point Likert scale, Table 4) with no differences by demographic features of providers, provider type, or experience. The most significant difference in clinician satisfaction with ePNa use was between those who did and did not want additional CDS technology. Whether this was a result of positive experience with the ePNa tool or a pre-conditioned belief based on other knowledge or experience is unclear. Surprisingly, among hospitals with high utilization (RH2, CAH1, and RH6), satisfaction varied significantly (Table 4). This implies that local use was driven by many factors outside of physician satisfaction that are key to extending the use of CDS. While we did not formally evaluate additional factors, anecdotally the involvement of clinical champions and local leadership appeared important to successful CDS rollout at these locations.

It is interesting to note that Intermountain Healthcare’s experience with a universally applied evidence-based guideline for community-acquired pneumonia had its origins in our rural hospitals.19 Originally, this rural guideline was challenged in its adaptation to the urban environment. Over subsequent years, the guideline evolved in urban EDs and was incorporated into the EMR with a heavy focus on the workflows and decisions in tertiary settings. Somewhat ironically, we are learning again the lessons of implementation in varied clinical and cultural settings as the transition back to our rural hospitals is attempted.

Limitations

This analysis is limited by our 50% survey response rate (31/62), though the high degree of internal consistency in the satisfaction questions and the limited number of high-frequency themes suggest we may have captured the critical factors affecting rural clinician satisfaction with CDS use. In addition, ePNa was adapted from Intermountain’s legacy EMR to function within Cerner (Cerner Corporation, Kansas City, MO); another EMR may perform differently. The veteran and expert users who completed the survey may have been ED physicians familiar with ePNa from prior experience in urban Utah hospitals before moving to a rural area. Finally, the small and rural hospitals included in this survey reflect the experience of clinicians in 1 system and 2 states and may not be generalizable to other rural populations or health systems, although there is limited literature addressing our question in this population.

Conclusion

Clinical decision support developed in urban hospitals for the treatment of CAP is portable to rural and critical access hospitals with common implementation science techniques and may improve processes of care. A better user interface, more sensitivity to perceived local needs, and integration with local processes are critical to clinician buy-in and utilization. Further study is needed to understand the impact of this CDS on patient outcomes.

Acknowledgements:

Intermountain Office of Research, Intermountain rural and critical access providers, and the ePNa development, implementation and education team.

Funding: Intermountain Office of Research and National Institutes of Health Ruth L. Kirschstein National Research Service Award 5T32HL105321.

Supporting Information

Pneumonia Decisions Support Tool (ePNa) Survey (pdf of REDCap questions)

Rural ePneumonia Decision Support Survey

Rural ePneumonia Decision Support Survey Text Responses

References

- 1. Kochanek K MS, Xu J, Arias E. Technical Notes Addendum for Supplemental Tables of “Deaths: Final Data for 2017”. National Vital Statistics Reports. 2019;68(9):1–77.

- 2. U.S. Department of Health and Human Services, Centers for Disease Control and Prevention, National Center for Health Statistics. National Hospital Ambulatory Medical Care Survey: 2017 Emergency Department Summary Tables. National Hospital Ambulatory Medical Care Survey. 2017.

- 3. AHA. Rural Report: Challenges Facing Rural Communities and the Roadmap to Ensure Local Access to High Quality, Affordable Care. 2019.

- 4. Mitchell J, Probst J, Brock-Martin A, Bennett K, Glover S, Hardin J. Association between clinical decision support system use and rural quality disparities in the treatment of pneumonia. J Rural Health. Spring 2014;30(2):186–195.

- 5. Dean NC, Jones BE, Jones JP, et al. Impact of an Electronic Clinical Decision Support Tool for Emergency Department Patients With Pneumonia. Ann Emerg Med. November 2015;66(5):511–520.

- 6. Webb BJ, Sorensen J, Mecham I, et al. Antibiotic Use and Outcomes After Implementation of the Drug Resistance in Pneumonia Score in ED Patients With Community-Onset Pneumonia. Chest. November 2019;156(5):843–851.

- 7. Dean N, Vines C, Rubin J, et al. Implementation of real-time electronic clinical decision support for emergency department patients with pneumonia across a healthcare system. AMIA Annu Symp Proc. 2019:353–362.

- 8. Mecham ID, Vines C, Dean NC. Community-acquired pneumonia management and outcomes in the era of health information technology. Respirology. November 2017;22(8):1529–1535.

- 9. Campbell R. The five “rights” of clinical decision support. J AHIMA. 2013;84(10):42–47.

- 10. Brown SM, Jones JP, Aronsky D, Jones BE, Lanspa MJ, Dean NC. Relationships among initial hospital triage, disease progression and mortality in community-acquired pneumonia. Respirology. November 2012;17(8):1207–1213.

- 11. Sanz FD N; Dickerson J; Jones B; Knox D; Fernandez-Fabrellas E; Chiner E; Briones ML; Cervera A; Agular MC; Blanquer J. Accuracy of PaO2/FiO2 calculated from SpO2 for severity assessment in ED patients with pneumonia. Respirology. 2015;20(5):813–818.

- 12. Jones BE, Jones J, Bewick T, et al. CURB-65 pneumonia severity assessment adapted for electronic decision support. Chest. July 2011;140(1):156–163.

- 13. Mandell LA, Wunderink RG, Anzueto A, et al. Infectious Diseases Society of America/American Thoracic Society consensus guidelines on the management of community-acquired pneumonia in adults. Clin Infect Dis. March 1 2007;44 Suppl 2:S27–72.

- 14. Metlay JP, Waterer GW, Long AC, et al. Diagnosis and Treatment of Adults with Community-acquired Pneumonia. An Official Clinical Practice Guideline of the American Thoracic Society and Infectious Diseases Society of America. Am J Respir Crit Care Med. October 1 2019;200(7):e45–e67.

- 15. Webb BJ, Dascomb K, Stenehjem E, et al. Derivation and Multicenter Validation of the Drug Resistance in Pneumonia Clinical Prediction Score. Antimicrob Agents Chemother. May 2016;60(5):2652–2663.

- 16. Brown SM, Jones BE, Jephson AR, Dean NC. Validation of the Infectious Disease Society of America/American Thoracic Society 2007 guidelines for severe community-acquired pneumonia. Crit Care Med. December 2009;37(12):3010–3016.

- 17. Jones BE, Collingridge DS, Vines CG, et al. CDS in a Learning Health Care System: Identifying Physicians’ Reasons for Rejection of Best-Practice Recommendations in Pneumonia through Computerized Clinical Decision Support. Appl Clin Inform. January 2019;10(1):1–9.

- 18. Webb BJ, Sorensen J, Jephson A, Mecham I, Dean NC. Broad-spectrum antibiotic use and poor outcomes in community-onset pneumonia: a cohort study. Eur Respir J. July 2019;54(1).

- 19. Dean NC, Silver MP, Bateman KA, James B, Hadlock CJ, Hale D. Decreased Mortality after Implementation of a Treatment Guideline for Community-acquired Pneumonia. The American Journal of Medicine. 2001;110:451–457.
