Abstract

Individuals with bilateral cochlear implants (BiCIs) rely mostly on interaural level difference (ILD) cues to localize stationary sounds in the horizontal plane. Independent automatic gain control (AGC) in each device can distort this cue, resulting in poorer localization of stationary sound sources. However, little is known about how BiCI listeners perceive sound in motion. In this study, 12 BiCI listeners’ spatial hearing abilities were assessed for both static and dynamic listening conditions when the sound processors were synchronized by applying the same compression gain to both devices as a means to better preserve the original ILD cues. Stimuli consisted of band-pass filtered (100–8000 Hz) Gaussian noise presented at various locations or panned over an array of loudspeakers. In the static listening condition, the distance between two sequentially presented stimuli was adaptively varied to arrive at the minimum audible angle, the smallest spatial separation at which the listener can correctly determine whether the second sound was to the left or right of the first. In the dynamic listening condition, participants identified if a single stimulus moved to the left or to the right. Velocity was held constant and the distance the stimulus traveled was adjusted using an adaptive procedure to determine the minimum audible movement angle. Median minimum audible angle decreased from 17.1° to 15.3° with the AGC synchronized. Median minimum audible movement angle decreased from 100° to 25.5°. These findings were statistically significant and support the hypothesis that synchronizing the AGC better preserves ILD cues and results in improved spatial hearing abilities. However, restoration of the ILD cue alone was not enough to bridge the large performance gap between BiCI listeners and normal-hearing listeners on these static and dynamic spatial hearing measures.

Keywords: interaural level differences, binaural hearing, signal processing, spatial hearing, spatial resolution, automatic gain control

Introduction

Bilateral cochlear implants (BiCIs) afford listeners improved spatial hearing abilities relative to unilateral cochlear implantation. Compared with normal-hearing (NH) listeners, however, BiCI listeners perform substantially worse on tasks that assess spatial hearing performance (Grantham et al., 2007; Litovsky et al., 2009; Van Hoesel, 2004; Van Hoesel et al., 2002; Van Hoesel & Tyler, 2003). Sound localization is a spatial hearing task that has been studied extensively in individuals with NH and in individuals with hearing loss. In many of these studies, sound is presented from discrete locations in the horizontal plane. The participant indicates the perceived location of the target sound, and an average localization error (e.g., root-mean-square error) is reported. Root-mean-square errors have been reported to range from ∼28° to ∼30° for BiCI listeners and from ∼5° to ∼7° for NH adults (Grantham et al., 2007; Kerber & Seeber, 2012).

However, the stationary nature of the traditional localization tasks used to assess spatial hearing is not representative of an individual’s auditory spatial experience in their day-to-day life. For example, auditory objects can move relative to the listener (e.g., tracking moving vehicles and other important sounds in social settings). In addition, the listener can move relative to stationary auditory objects in their environment.

Early studies evaluating auditory motion perception abilities in NH listeners employed physically moving speakers (e.g., Harris & Sergeant, 1971; Perrott & Musicant, 1977) or amplitude panning to simulate motion (Grantham, 1986). Harris and Sergeant (1971) defined the minimum audible movement angle (MAMA) as the smallest amount of movement required to correctly identify the direction in which an auditory source moves. They found that for a stimulus moving at 2.8° per second, the MAMA was between 2° and 4°. Grantham (1986) and Perrott and Musicant (1977) defined MAMA as the smallest movement required to differentiate a moving sound source from a stationary one. Both groups found a direct relationship between the velocity of the stimulus and MAMA. To illustrate this, Grantham (1986) used a slower velocity stimulus and found MAMAs similar to those observed in this earlier work (i.e., 2°–5°). Perrott and Musicant (1977) observed MAMA to be 8.3° for a velocity of 90° per second. Both groups observed MAMA to be greater than 20° for 360° per second velocities.

More recently, auditory motion perception abilities have been measured in individuals with NH and in BiCI listeners (Moua et al., 2019). Moua et al. (2019) found that BiCI listeners performed more poorly than NH adult listeners in correctly identifying whether a sound source was moving, and if moving, the direction and distance the auditory object traversed. However, they used conventional CI processor technology, which is known to disrupt important cues that BiCI listeners use to localize sound, as explained later.

It is well established that, unlike NH individuals, who use both interaural level differences (ILDs) and interaural timing differences (ITDs) to localize sounds in the horizontal plane, BiCI individuals with no measurable acoustic hearing rely almost entirely on ILD cues. This is because current sound processing strategies do not encode ITD in the carrier and, at best, are not efficient at encoding envelope ITD, as (a) signal envelopes are distorted by independently operating automatic gain controls (AGCs) and by independent electrical stimulation (Dorman et al., 2014; Gifford et al., 2014; Grantham et al., 2007, 2008; Van Hoesel et al., 2002); and (b) independent carriers result in independent temporal quantization (Dieudonné et al., 2020). Furthermore, neural sensitivity to ITD has been shown to be compromised with hearing loss, and for BiCI listeners specifically, ITD sensitivity drops when the stimulation rate is above ∼500 Hz (Kan & Litovsky, 2015).

BiCI recipients employ ILD cues in localization tasks reasonably well. Interference from the head and torso leads to level differences between ears that aid spatial hearing abilities. However, AGC circuits significantly reduce ILD cues, potentially contributing to the deficits seen in the spatial hearing abilities of BiCI listeners when compared with listeners with NH. Grantham et al. (2008) investigated the effect of AGC, which seeks to ensure audibility of soft sounds and comfort of moderate and loud sounds, on ILD perception in BiCI users fitted with the MED-EL COMBI 40+ CI system. The investigators found a 1.9 dB improvement in ILD thresholds for Gaussian noise, bandpass filtered from 100 to 4000 Hz, when the AGC was not active and that ILD thresholds were highly correlated with total error in a horizontal-plane localization task. Dorman et al. (2014) also showed the impact of AGC on ILD for wideband, lowpass-filtered and highpass-filtered signals. Simulated ILDs were calculated by passing wideband, lowpass-filtered, and highpass-filtered signals through a head-related transfer function and to a MATLAB simulation of MED-EL signal processing. For the wideband signals and highpass-filtered signals, ILDs in the range of 15 to 17 dB were reduced to 3 to 4 dB after signal processing, and inverted ILDs were observed for low-frequency channels. The ILDs for the lowpass-filtered signals were reduced from ∼5 dB to 1 to 2 dB. They also showed a correlation between the magnitude of the ILD cue and performance on a horizontal-plane localization task.

ILD cues are further distorted by the fact that the AGCs in bilateral devices operate independently. The ILD cue is preserved when the level of a sound source is under the compression knee-point for both devices. However, if the level of the sound source is above the compression knee-point for both devices, or if the compression knee-point is exceeded in only one device, the ILD cue is reduced or distorted, respectively (Dorman et al., 2014; Van Hoesel et al., 2002). This is due to broadband compression of louder sounds, which leads to similar physical levels at the two ears. As a result, the perception could be one of an auditory image that is shifted toward the center. It is important to note that this perception could depend on an individual’s electrical hearing. For example, the sound image could also shift in the opposite direction if the listener had only low-frequency electrical hearing available.

Programming parameters can distort ILD cues even further. Microphone sensitivity, a programming parameter in the CI programming software that allows audiologists to add gain (in dB) to the input signal, can be adjusted to give the listener access to lower level sounds by mapping them higher in the electrical dynamic range; that is, increasing sensitivity will result in lower level inputs (typically perceived as softer sounds) being stimulated at higher charge. This serves to increase the perceived loudness of all sound inputs below the compression knee-point; however, this parameter change also decreases the input level at which the device goes into compression. Kerber and Seeber (2012) found that changing the microphone sensitivity setting significantly impacted localization accuracy.

In recent years, several methods of providing larger-than-normal ILD cues have been shown to improve localization abilities in hearing aid (HA) users (Moore et al., 2016), simulated bimodal listeners (Dieudonné & Francart, 2018; Francart et al., 2009), bimodal listeners (Francart et al., 2011) and BiCI listeners (Brown, 2018), and to improve speech understanding in complex listening environments in NH listeners and individuals with hearing loss (Kollmeier & Peissig, 1990), simulated bimodal listeners (Dieudonné & Francart, 2018), and BiCI listeners (Brown, 2014).

In another approach, synchronized AGC has been utilized to preserve the ILD cue, rather than to magnify it. In HA users, this has been done but with mixed results. The lack of benefit provided by linked AGC for HA users in Hassager et al. (2017) could be attributed to the participants having access to ITD cues, as benefit with linked AGCs has been observed in HA listeners when speech material is high-pass filtered, removing ITD cues (Strelcyk et al., 2018).

In BiCI listeners, the benefit of synchronized AGC is clearer because, as noted earlier, they rely almost entirely on ILDs for spatial hearing abilities. In these listeners, synchronized AGC has been shown to provide improvements in both localization abilities and speech reception threshold (SRT; Lopez-Poveda et al., 2019; Potts et al., 2019). Improved speech recognition with synchronized AGC has also been observed in spatially separated speech and noise for NH adults (Wiggins & Seeber, 2013), in BiCI simulation (Spencer et al., 2020) and in adults with BiCIs (Lopez-Poveda et al., 2017; Potts et al., 2019).

There are, however, no known evaluations of the impact of ILD cue preservation on spatial hearing tasks involving both static and dynamic stimuli in BiCI listeners, which are truer to an individual’s day-to-day auditory experiences. Thus, the purpose of this investigation was (a) to determine whether synchronized AGC could improve spatial hearing resolution in the horizontal plane for both static and dynamic listening conditions and (b) to investigate the impact of synchronized AGC on physical ILDs from various source azimuths. Twelve BiCI listeners participated in spatial hearing tasks with both synchronized AGCs (research platform) and with their current everyday listening program, which used current commercially approved software and hardware. Following each task, participants rated the difficulty of their experiences. Our hypothesis was that by synchronizing the AGCs we could preserve ILD cues not available in the clinical processing strategy and thus improve performance in both static and dynamic listening tasks.

General Methods

Participants

Participant demographic information is shown in Table 1. Twelve experienced adults with bilateral Advanced Bionics (AB) CIs (Valencia, CA, USA) and bilateral severe-profound sensorineural hearing loss participated in this study, which was conducted in accordance with local university institutional review board approval (IRB number: 131315). Participants provided written informed consent and ranged in age from 37 years to 79 years (M = 60.3, standard deviation = 12.8). Eleven participants were postlingually deafened (one prelingually deafened participant was enrolled) and all had at least 6 months experience with their devices to meet inclusion criteria. Participants were tested acutely with two different programs.

Table 1.

Participant Demographics.

| Participant | Age (years) | Pre/post-lingually deafened | Years of CI use 1st ear | Years of CI use 2nd ear | Sound coding strategy | Mic source |
|---|---|---|---|---|---|---|
| 1 | 50 | Pre | 15.76 | 1.09 | Optima-S | T-Mic2 |
| 2 | 37 | Post | 15.61 | 15.55 | Optima-S | T-Mic2 |
| 3 | 48 | Post | 2.98 | 1.78 | Optima-S | P-Mic |
| 4 | 64 | Post | 4.14 | 2.26 | Optima-S | T-Mic2 |
| 5 | 64 | Post | 6.02 | 2.54 | Optima-S | T-Mic2 |
| 6 | 62 | Post | 4.56 | 2.77 | Optima-S | T-Mic2 |
| 7 | 52 | Post | 3.55 | 3.24 | Optima-S | T-Mic2 |
| 8 | 72 | Post | 14.11 | 6.25 | Optima-S | T-Mic2 |
| 9 | 71 | Post | 7.08 | 5.51 | Optima-P | T-Mic2 |
| 10 | 74 | Post | 3.95 | 1.10 | Optima-S | T-Mic2 |
| 11 | 50 | Post | 12.39 | 12.39 | HiRes-P | T-Mic2 |
| 12 | 79 | Post | 1.48 | 1.05 | Optima-S | T-Mic2 |
| Mean | 60.25 | | 7.63 | 4.63 | | |

Note. CI = cochlear implant.

AGC Characteristics

Two programs were used for this study: the clinical program with independent AGCs and the experimental program with synchronized AGCs. The AB device uses a dual-loop broadband AGC system (Figure 1A), in which two interlocked level estimators, Lslow and Lfast, operating with different time constants are used as the basis for determining the compression gain (see Boyle et al., 2009; Moore & Glasberg, 1988; Moore et al., 1991 for more detail). The attack and release time constants of Lfast are 0.33 ms and 46.2 ms, and those of Lslow are 139 ms and 383 ms, respectively. The dynamic behavior of the AGC is typically dominated by Lslow, except during sudden loud onsets or level increases, when Lfast takes control of the AGC gain and provides near-instantaneous compression to protect the CI user from transient loudness discomfort. Before entering the AGC stage, the audio signal is passed through a pre-emphasis filter with a high-pass characteristic (Figure 1B), which avoids saturation of the AGC and dynamic range by low-frequency environmental noise or the CI user’s own voice. The AGC knee-point (the level above which compression sets in) is defined with respect to the signal level at the output of the pre-emphasis filter and therefore depends on the frequency composition of the audio signal (similar to an A-weighted sound pressure level [SPL]). For white noise, such as the stimuli used for the experiments in this study, the knee-point is approximately 56 dB SPL. For pink noise, which has a higher concentration of energy in lower frequencies, the knee-point is approximately 62 dB SPL. For speech or speech-shaped noise, the knee-point varies depending on the particular speaker characteristics and can easily range from 62 to 67 dB SPL.
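To give a feel for the two sets of time constants, the sketch below passes a 20 dB level step through a generic one-pole attack/release smoother. This is an illustration only: it is not the interlocked dual-loop design described in the cited work, the function name `smooth_level` and the 1 kHz level-update rate are hypothetical, and only the time constants are taken from the text.

```python
import math

def smooth_level(levels_db, fs_hz, attack_s, release_s):
    """Generic one-pole attack/release smoothing of a level track (dB)."""
    a_att = math.exp(-1.0 / (attack_s * fs_hz))
    a_rel = math.exp(-1.0 / (release_s * fs_hz))
    out, state = [], levels_db[0]
    for x in levels_db:
        # Rising input uses the attack coefficient, falling input the release.
        coeff = a_att if x > state else a_rel
        state = coeff * state + (1.0 - coeff) * x
        out.append(state)
    return out

FS = 1000.0                          # hypothetical level-update rate (Hz)
step = [50.0] * 10 + [70.0] * 990    # 20 dB level jump at t = 10 ms
fast = smooth_level(step, FS, attack_s=0.00033, release_s=0.0462)
slow = smooth_level(step, FS, attack_s=0.139, release_s=0.383)
```

Under these assumptions the fast estimator is within about 1 dB of the new level after a single update, whereas the slow estimator has covered only about 63% of the step after 139 ms.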

Figure 1.

The AGC processing of the sound processors. Flow chart of the AGC circuits used in this study (A) and magnitude response of the pre-emphasis filter applied to the audio inputs (B). The contralateral (in gray) audio input and pre-emphasis filter are exclusive to the experimental (synchronized) AGC. AGC = automatic gain control.

The AGC processing of the research sound processors is synchronized by simultaneously supplying audio inputs from both left and right devices. To this end, digital audio signals are bidirectionally transmitted between the left and right research processors in real-time via Phonak’s proprietary Hearing Instrument Body Area Network wireless technology (HIBAN). A common signal is derived as the maximum of the instantaneous sound levels of the two input audio signals after pre-emphasis (Figure 1A). Because the same input level is estimated on both devices from their two respective inputs, the same resultant compression gain is applied to the respective original (ipsilateral) audio signals, and thus the original natural ILD between them is preserved. The research AGC characteristics are mostly identical to those of the clinical program. The only deviation is that the research program uses an infinite compression ratio (CR), compared with a CR of 12:1 in the clinical (independent) AGC program, simply due to technical limitations of the research platform.
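The consequence of linking the gain can be illustrated with a steady-state sketch. It is not the AB implementation: it ignores the dual-loop dynamics and the pre-emphasis filter, assumes a hard knee at the 56 dB SPL white-noise value quoted earlier, and uses hypothetical function names and input levels. It shows only that gains computed independently shrink the ILD, whereas a single gain derived from the louder input leaves the ILD unchanged.

```python
import math

KNEE_DB = 56.0  # assumed hard knee (white-noise value quoted in the text)

def compression_gain_db(level_db, ratio):
    """Static gain (dB) of a hard-knee compressor; ratio may be math.inf."""
    if level_db <= KNEE_DB:
        return 0.0
    excess = level_db - KNEE_DB
    # Above the knee the output rises by excess/ratio, so the gain is negative.
    return excess / ratio - excess

def independent_agc(left_db, right_db, ratio=12.0):
    """Each ear computes its gain from its own input level."""
    return (left_db + compression_gain_db(left_db, ratio),
            right_db + compression_gain_db(right_db, ratio))

def synchronized_agc(left_db, right_db, ratio=math.inf):
    """Both ears apply the gain derived from the louder of the two inputs."""
    gain = compression_gain_db(max(left_db, right_db), ratio)
    return left_db + gain, right_db + gain

# Hypothetical input: 64 dB at the left ear, 76 dB at the right (12 dB ILD).
l_ind, r_ind = independent_agc(64.0, 76.0)
l_syn, r_syn = synchronized_agc(64.0, 76.0)
ild_independent = r_ind - l_ind     # about 1 dB: the ILD is compressed 12:1
ild_synchronized = r_syn - l_syn    # 12 dB: the input ILD is preserved
```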

Device Programming

The clinical, independent AGC program was simply the participant’s current everyday listening program, which was saved to commercially available hardware (Naída CI Q90) using commercially available software (SoundWave 3.0). The experimental, synchronized AGC program was programmed on AB Naída CI Q90 research processors using research software. All clinically available programming parameters were held constant between both programs. For example, if a participant used the T-Mic 2™ mic source for everyday use (n = 11), this setting was maintained. Before testing, appropriate audibility was confirmed by an accredited and licensed audiologist, with aided detection thresholds no worse than 25 dB HL (hearing level) at 250 Hz, 500 Hz, 1000 Hz, 2000 Hz, 3000 Hz, 4000 Hz, and 6000 Hz. Where aided detection needed to be improved, lower stimulation levels (threshold [T] levels) were measured to improve audibility for the respective frequencies, and these stimulation levels were used in both programs. To isolate any differences in performance to the AGC itself, all front-end processing features (e.g., ClearVoice™, SoftVoice, and microphone directionality) were disabled in both programs. In addition, because of the known impact of microphone sensitivity on the level of the acoustic input needed to trigger the AGC, microphone sensitivity was verified to be set at 0 dB in both sound processors and for both programs. Finally, the left and right processors were loudness balanced in “live speech mode” to ensure that the perceptual loudness from each ear was equivalent. If required, a global adjustment to upper stimulation levels (most comfortable [M] levels) was made to achieve balanced loudness between ears.

Program Effects on Steady-State ILD

Figure 2 demonstrates the effect of both programs on ILD (for 70 dBA white noise) as a function of azimuth for various frequency channels. The white noise was filtered with published head-related impulse responses from Kayser et al. (2009) using their behind-the-ear (BTE) microphone recordings (anechoic, 80 cm distance, 5° step size). The signals were then fed to AB’s HiRes Optima sound coding processing simulator. The CI program was set to the default clinical settings (15-channel program, extended low filter setting). The AGC was set to pass-through for the natural ILD (panel A), to clinical independent AGC for the clinical AGC program (panel B), and to research synchronized AGC for the experimental program (panel C). The energy levels (after the AGC had settled to steady state) for each frequency channel were then extracted from the simulator for both devices, and ILD was computed as the difference of the two (right minus left). For visual simplicity, the ILDs are shown as averages over low-frequency channels (Channels 1–5, 238–918 Hz), middle-frequency channels (Channels 6–10, 918–2141 Hz), and high-frequency channels (Channels 11–15, 2141–8054 Hz). As expected, and similar to previous findings, with clinical independent AGC the ILDs are reduced for high-frequency channels and inverted for low-frequency channels, a result of broadband compression (panel B). Experimental synchronized AGC restores the ILDs that are naturally available (panel C). It is worth mentioning that this simulation uses the BTE microphone recordings from the database, whereas all but one participant in this study used the T-Mic 2™ microphone source, which has been shown to have responses somewhere in between those of BTE and in-the-ear microphones and has a higher and more monotonic ILD function than the BTE mics (Mayo & Goupell, 2020).
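The ILD computation described above can be sketched as follows. The channel grouping follows the text; the function names, the choice to average the per-channel ILDs in dB, and the example energies are assumptions made for illustration and are not simulator output.

```python
import math

# Channel groups as described in the text (1-based channel numbers).
GROUPS = {"low": range(1, 6), "mid": range(6, 11), "high": range(11, 16)}

def channel_ild_db(left_energy, right_energy):
    """Per-channel ILD in dB, right minus left, from linear channel energies."""
    return [10.0 * math.log10(r / l) for l, r in zip(left_energy, right_energy)]

def grouped_ild_db(left_energy, right_energy):
    """Average the per-channel ILDs (in dB) within each channel group."""
    ild = channel_ild_db(left_energy, right_energy)
    return {name: sum(ild[c - 1] for c in chans) / len(chans)
            for name, chans in GROUPS.items()}

# Hypothetical steady-state energies for 15 channels.
left = [1.0] * 15
right = [0.5] * 5 + [1.0] * 5 + [10.0] * 5
result = grouped_ild_db(left, right)   # low about -3 dB, mid 0 dB, high 10 dB
```

A negative value in the low group corresponds to the inverted ILD seen in panel B, where the left device carries more energy than the right for a source on the right.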

Figure 2.

Simulated Effects of Clinical Program and Experimental Program on Steady-State ILD as a Function of Sound Source Azimuth for Different Frequency Channels for a 70 dBA White Noise Sound. ILDs (R – L, in dB) are plotted for low-frequency channels (Channels 1–5, blue lines), mid-frequency channels (Channels 6–10, red lines), and high-frequency channels (Channels 11–15, yellow lines). Panel A displays ILDs as a function of sound source azimuth for the natural cue due to the head shadow (no effect of AGC), panel B for the clinical program (independent AGC), and panel C for the experimental program (synchronized AGC). Independent AGC (B) results in reduced ILD cues for high-frequency channels and inverted ILD cues for low-frequency channels. Synchronized AGC (C) results in ILDs identical to the natural cue (A). AGC = automatic gain control; ILD = interaural level difference.

Test Environment

The participants were seated in a comfortable chair in the middle of a 360° array of 64 stationary loudspeakers in a well-lit anechoic chamber. The speakers were positioned at ear level and 1.95 m from the listener. The participants were instructed to face forward (0° azimuth) and not to turn their head during stimulus presentation. Head position was monitored via live video feed to experimenters from outside of the chamber. Participants used a keyboard to indicate their responses.

Subjective Report

At the completion of each task, participants were asked to rate the perceived difficulty of the task. These responses were coded using a 10-point Likert-type scale ranging from 1 (very easy) to 10 (very difficult). Subjective rating is difficult at the beginning of the test procedure, when there is no baseline for comparison. For example, some participants rated the perceived difficulty of the minimum audible angle (MAA) task as high. If they had been randomized to complete the MAA task first, this left little room to move up the scale when rating the difficulty of the MAMA task, which was perceived as, and according to our results is, the more difficult task. For this reason, participants were allowed to adjust their perceived difficulty ratings as they moved through the testing procedure.

Experiment 1: MAA

Auditory spatial resolution under static listening conditions in the horizontal plane was evaluated using the MAA task (Grantham, 1985, 1986; Perrott & Musicant, 1977). MAA is defined as the smallest amount of spatial separation between two sequentially presented sound sources where the listener is able to correctly identify which side (right or left) a second sound originated from, relative to the first.

Stimuli

A Gaussian noise, band-pass filtered from 100 to 8000 Hz, was employed. The stimulus duration was 500 ms including 20-ms cos² ramps. The noise we chose allowed us to compare CI outcomes to the NH data in the classic work in this area by Chandler and Grantham (1992). The signal was always presented at a nominal level of 70 dB SPL, with 10 dB across-trial level roving (±5 dB about the target level) to ensure activation of the AGC. Amplitude panning was used to simulate auditory objects between physical speakers (e.g., Grantham, 1986; Pulkki, 1997; Pulkki & Karjalainen, 2001). In amplitude panning, virtual auditory objects are created by presenting the same signal from adjacent speakers, both of which are the same distance from the listener. By manipulating the relative amplitude of the signal presented from the physical speakers, the listener will perceive a sound from a virtual location in the azimuth where there is no physical sound source.
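The temporal envelope and level rove can be sketched as below. Only the 500 ms duration, the 20 ms ramps, the 70 dB SPL nominal level, and the ±5 dB rove come from the text; the sampling rate, the uniform rove distribution, and the function names are assumptions, and the 100–8000 Hz band-pass filter is omitted for brevity.

```python
import math
import random

def cos2_envelope(n_samples, n_ramp):
    """Amplitude envelope with raised-cosine (cos^2-shaped) onset and offset."""
    env = [1.0] * n_samples
    for i in range(n_ramp):
        g = math.sin(0.5 * math.pi * i / n_ramp) ** 2   # rises from 0 toward 1
        env[i] = g
        env[n_samples - 1 - i] = g
    return env

def roved_level_db(nominal_db=70.0, rove_db=5.0, rng=random):
    """Draw a presentation level uniformly within +/- rove_db of nominal."""
    return nominal_db + rng.uniform(-rove_db, rove_db)

FS = 44100                                   # hypothetical sampling rate (Hz)
n_total = FS * 500 // 1000                   # 500 ms stimulus
n_ramp = FS * 20 // 1000                     # 20 ms ramps
env = cos2_envelope(n_total, n_ramp)
noise = [random.gauss(0.0, 1.0) * g for g in env]   # unfiltered Gaussian noise
level_db = roved_level_db()
```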

Procedure

Prior to experimentation, the listening condition order (synchronized AGC, independent AGC program) and the experiment order (MAA, MAMA) were randomized by the test administrators. Participants were blinded to which program they were listening with. Data collection ensued only after each participant completed several practice runs and their performance had stabilized. No feedback was provided. Thresholds were obtained by presenting two sequential 500 ms stationary stimuli from different positions in the horizontal plane. Participants used the keyboard to indicate whether the second sound was to the right or to the left of the first sound. To measure MAA in as near optimal conditions as possible, an interstimulus interval of 1 s was chosen, as smaller interstimulus interval values have been shown to increase MAA thresholds (Grantham, 1985). The initial stimulus location for each presentation was picked from a normal distribution, such that 95% of the initial locations were between −4° and +4°. The jittering was designed to encourage listeners to compare the locations of the two stimuli, rather than just to concentrate on the position of the second stimulus. However, the amount of jitter was not sufficient to rule out the use of absolute position as a cue.
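The spread of the starting-location jitter follows directly from the stated 95% range. Assuming the distribution is centered on 0° azimuth and that the stated range is the central 95% interval (both assumptions, since the source gives only the range), the implied standard deviation is:

```latex
\[
X \sim \mathcal{N}(0, \sigma^{2}), \qquad
P\left(|X| \le 4^{\circ}\right) = 0.95
\;\Longrightarrow\; 1.96\,\sigma = 4^{\circ}
\;\Longrightarrow\; \sigma \approx 2.04^{\circ}
\]
```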

An initial starting span of 35° was chosen as, in our experience, it allows for most BiCI recipients to easily detect sound source changes in the horizontal plane. An adaptive three-down one-up procedure with 8° and 4° large and small step sizes, respectively, was used to vary the spatial distance between the two stimulus presentations. Small step sizes were applied after two reversals. Runs were terminated after eight reversals and the threshold (in degrees) was calculated as the average of the last six reversals. If eight reversals could not be achieved within 60 presentations, the run was terminated. Two data collection runs were averaged together to obtain an MAA in degrees. If the difference of the threshold (in degrees) between the first two runs was greater than 10°, a third run was completed and averaged with the other two runs.
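The adaptive track described above can be sketched as follows. The starting span, step sizes, reversal counts, and trial limit are taken from the text; the function names, the 0° floor, recording a reversal at the span where the direction changes, and returning `None` for a run that ends without eight reversals are assumptions, because the source does not specify these details.

```python
def run_staircase(respond, start=35.0, big=8.0, small=4.0,
                  max_reversals=8, max_trials=60, floor=0.0):
    """Three-down one-up track; respond(span) returns True when correct."""
    span, correct_run, direction = start, 0, 0
    reversals = []
    for _ in range(max_trials):
        if respond(span):
            correct_run += 1
            if correct_run < 3:
                continue                   # span changes only after 3 correct
            correct_run, move = 0, -1      # three in a row: reduce the span
        else:
            correct_run, move = 0, +1      # any miss: increase the span
        if direction and move != direction:
            reversals.append(span)
            if len(reversals) == max_reversals:
                return sum(reversals[-6:]) / 6.0   # mean of last six reversals
        direction = move
        step = small if len(reversals) >= 2 else big
        span = max(floor, span + move * step)
    return None                            # fewer than eight reversals

def needs_third_run(threshold_1, threshold_2, limit_deg=10.0):
    """A third run is added when the first two differ by more than 10 degrees."""
    return abs(threshold_1 - threshold_2) > limit_deg

# Idealized listener who is always correct at 15 degrees or more.
threshold = run_staircase(lambda span: span >= 15.0)
```

A three-down one-up rule of this kind converges on the span at which roughly 79% of responses are correct, which is why the idealized listener above ends with a threshold just below its 15° cutoff.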

Broadband Dynamic ILD Analysis

Figure 3 shows the ILD as a function of time for the actual physical task (recorded) and the corresponding simulated output of the two test programs. In this example recording, the first stimulus is presented at 0° and the second stimulus at +53° (to the right). A correct response would be scored if the participant indicated the second sound was to the right of the first. The start location was 0°; however, during participant testing the starting location of each presentation varied, as mentioned earlier. The simulator was the same as that described in the General Methods section. Note that when the signal moves to +53°, the physical cue has a broadband ILD of ∼12 dB. The independent AGC (middle column, clinical program) results in a reduced ILD that approaches zero as the AGC settles. In contrast, the synchronized AGC program (right column, experimental program) maintains the physical ILD throughout the time course. For the frequency-dependent ILD, see Figure 2.

Figure 3.

Broadband Levels and ILDs of the Stimulus at the Left and Right Sound Processors as a Function of Time for an Example MAA Trial. The first stimulus is presented at 0° and the second stimulus at +53° (to the right). The left column represents the actual physical cues for the tasks recorded from the clinical processors with the AB Listening Check device (before the AGC in the signal path). Middle and right columns show the simulated output with the clinical program (independent AGC) and the experimental program (synchronized AGC), respectively. The top and middle rows show the sound levels (dBA) of the left and right device, respectively. ILD (R – L) is shown in the bottom row. Vertical dashed lines represent stimulus onset and offset. ILD = interaural level difference; MAA = minimum audible angle.

Results

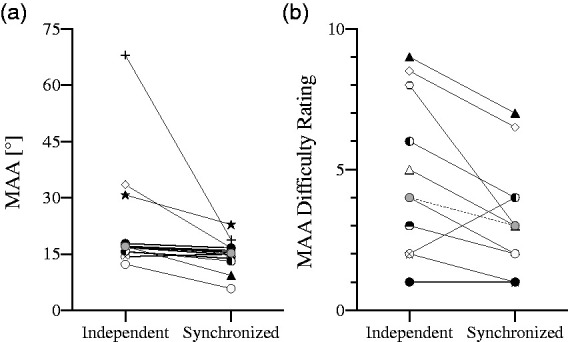

Individual and median (gray filled circles) results for the MAA task for independent AGC and synchronized AGC programs are shown in Figure 4A. A Friedman test was completed with AGC type (i.e., independent, synchronized) as the independent variable and threshold (in degrees) as the dependent variable. There was a significant difference in the MAA thresholds between the independent (Mdn = 17.1°, interquartile range [IQR] = 15.7°–27.5°) and the synchronized (Mdn = 15.3°, IQR = 13.4°–16.7°) AGC conditions, χ²(1) = 8.33, p = .004. These results indicate that AGC type had an effect: with synchronized AGC, the MAA threshold was significantly lower (better).
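With only two conditions and no ties, the Friedman statistic depends solely on how many participants had the lower threshold in each condition. The sketch below computes it from those counts. The 11-versus-1 split used in the example is an assumption chosen because it reproduces the reported statistic; the source reports the test result, not the per-participant directions, and the function name is hypothetical.

```python
import math

def friedman_two_conditions(n_better, n_worse):
    """Friedman chi-square and p value for k = 2 conditions without ties."""
    n, k = n_better + n_worse, 2
    rank_sum_1 = n_better * 1 + n_worse * 2   # rank sums of the two conditions
    rank_sum_2 = n_better * 2 + n_worse * 1
    q = (12.0 / (n * k * (k + 1)) * (rank_sum_1 ** 2 + rank_sum_2 ** 2)
         - 3.0 * n * (k + 1))
    p = math.erfc(math.sqrt(q / 2.0))   # chi-square upper tail for df = 1
    return q, p

# Assumed split: 11 of 12 participants with a lower MAA in one condition.
q, p = friedman_two_conditions(11, 1)   # q is about 8.33, p about .004
```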

Figure 4.

Results from the MAA task. Individual and median (gray filled circles) MAA thresholds with independent and synchronized AGC (panel A). Subjective difficulty of the MAA task for the independent and synchronized AGC programs are shown in panel B. A higher rating indicates the task being perceived as more difficult. Subjective report was not collected from participant 1. MAA = minimum audible angle.

Subjective Results

Individual reported subjective difficulty of the MAA experiment is shown in Figure 4B. Ratings were not collected from Participant Number 1. Wilcoxon matched-pairs signed-rank tests were used to evaluate the perceived difficulty of the task. The perceived difficulty of the MAA task was rated significantly more difficult with the independent AGC (Mdn = 4, IQR = 2–8) than with the synchronized AGC (Mdn = 3, IQR = 1–4), Z = −2.197, p = .028. A Kendall’s tau-b correlation was run to determine the relationship between the magnitude of the subjective benefit and objective improvement with synchronized AGC, which was not statistically significant for MAA (τb = .29, p = .279).

Discussion

In the first experiment, we evaluated auditory spatial resolution under static listening conditions using the MAA task. Our hypothesis was that synchronized AGC that provided the same amount of gain to both ears would preserve ILD cues and improve performance on the task. Median MAA performance was 1.8° better with synchronized AGC as compared with independent AGC; this difference was statistically significant. Considering individual data, poorer performing participants received the most benefit from the synchronized AGC, as the “good” performers appear to have already reached ceiling performance in the independent AGC listening condition (Figure 4A). One possible explanation is that the better performing participants in this study were more sensitive to the onset of the stimulus prior to the AGC reaching full compression. In Figure 3 (middle column, clinical program), the ILD peaks shortly after the onset of the second stimulus; after the AGCs engage, however, the ILD is reduced, distorting any available ILD cue. This could afford the listener access to a perceptible ILD in the interval between the onset of the second stimulus and the engagement of the AGCs. Once the AGCs are fully engaged, the sound image should be perceived more toward the center of the horizontal plane for the listener. This, however, did not appear to compromise the MAA for the good performers among our subjects.

One other possible explanation is that better performing participants were able to rely on the residual high-frequency ILDs and ignore the low-frequency inverted ILDs, although this is less likely due to the relatively large low-frequency ILD reversal and compressed high-frequency ILD (see Figure 2B). Another alternative explanation is that these participants used, or learned to use, monaural spectral cues for this task. Some participants commented on sound quality difference for sounds coming from different angles; for example, individuals reported sounds being perceived as duller or brighter than others.

In Figure 3 (right column, experimental program), there is a clear ILD when the second stimulus is presented off-axis with synchronized AGC. This could be what contributed to the improvement in the subjective difficulty of the task. Median difficulty was reported as lower with synchronized AGC (Mdn = 3) than with independent AGC (Mdn = 4). Subjective benefit of synchronized AGC was observed in 9 of 11 participants; 1 participant reported no change in subjective difficulty, and 1 reported the task to be more difficult with synchronized AGC. The fact that the tasks were subjectively perceived as easier with synchronized AGC suggests that the larger, more stable ILD cue in this condition provided further aid to subjects, in addition to onset ILD cues and monaural spectral cues. It is worth noting that the individuals who benefited the most were the ones who had the poorest performance with the independent AGC program (MAA > 30°). It is possible that these individuals were not able to use the onset ILD cue or monaural spectral cues and that synchronized AGC gave them steady ILD cues that they could utilize (every participant’s MAA was smaller than 30° with synchronized AGC).

It is important to note that the mapping of electrical current levels between threshold and upper stimulation levels is completely linear in the AB system, so there is no additional compressive stage that further distorts the ILD cue. The fact that even with synchronized AGC, where acoustic ILD is preserved, the scores do not improve to NH listeners’ performance levels (in a similar experiment, Chandler and Grantham [1992] reported average MAA of 1.2° for NH individuals) suggests that restored ILD cues, even together with monaural spectral cues, may not be enough to achieve similar accuracy, as NH listeners also have access to ITD cues. In NH listeners, CI simulations impair sound localization by distorting ITD, ILD, and monaural spectral cues (Ausili et al., 2019). A future vocoder study that removes ITD cues to test the static and dynamic spatial hearing abilities of NH listeners might give us more insight into the contributions of ITD and ILD just noticeable differences (JNDs) to this particular task.

Experiment 2: MAMA

Auditory spatial resolution under dynamic listening conditions was evaluated using the MAMA task. The same participants, AGC characteristics, test programs, and test environment from the first experiment were employed.

Stimuli

The same Gaussian noise from the first experiment was employed (i.e., band-pass filtered from 100 to 8000 Hz, presented at 70 dB SPL, with ±5 dB across-trial level roving). Amplitude panning was used to simulate auditory motion without physically moving sound sources (e.g., Grantham, 1986; Pulkki, 1997; Pulkki & Karjalainen, 2001). Dynamic amplitude panning is a commonly used method for creating the illusion of a sound in motion without a motorized or pulley mechanism moving the source. In amplitude panning, the amplitude of the signal is increased at one physical speaker location while the amplitude of the signal at an adjacent speaker is decreased in a similar manner, so that the overall level (at the center of the localization arc, with no listener present) remains constant during panning. The listener perceives sound from a virtual location in azimuth where there is no physical sound source. When this is done in succession across multiple adjacent pairs of speakers over the arc to be simulated, the listener perceives the sound as moving.
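The crossfade between adjacent speakers described above can be sketched as follows. This is a minimal illustration, not the software used in the study: the sine/cosine (constant-power) gain law, the function names `pan_gains` and `sweep_gains`, and the number of speakers and steps are all assumptions made for the example.

```python
import math

def pan_gains(position):
    """Constant-power gains for a virtual source between two adjacent speakers.

    position: 0.0 places the source at speaker A, 1.0 at speaker B.
    The sine/cosine law is an assumed choice that keeps summed power constant.
    """
    theta = position * math.pi / 2.0
    return math.cos(theta), math.sin(theta)

def sweep_gains(n_speakers, n_steps):
    """Per-speaker gains at each time step for a sweep across the whole array."""
    frames = []
    for step in range(n_steps):
        # Fractional speaker index of the virtual source at this step.
        x = step * (n_speakers - 1) / (n_steps - 1)
        pair = min(int(x), n_speakers - 2)   # index of the left speaker of the active pair
        g_a, g_b = pan_gains(x - pair)
        gains = [0.0] * n_speakers
        gains[pair], gains[pair + 1] = g_a, g_b
        frames.append(gains)
    return frames

# Hypothetical example: sweep over 5 speakers in 41 gain updates.
frames = sweep_gains(n_speakers=5, n_steps=41)
```

Only two adjacent speakers are active at any step, and the squared gains always sum to one, which is one way to hold the overall level constant while the virtual source moves.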

In the MAMA task, there is a relationship between target stimulus velocity, stimulus duration, and the distance the stimulus travels. As velocity increases, a shorter stimulus duration is required to travel a given distance or a larger distance is traversed within a given duration. Thus, at higher velocities, temporal factors govern performance on the task, while at lower velocities, spatial resolution is the governing factor and the primary cue is distance traveled (Chandler & Grantham, 1992). As it is spatial resolution that we are interested in, we chose a lower stimulus velocity of 20° per second, as Chandler and Grantham (1992) reported nearly optimal MAMA at this velocity.
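The relationship between velocity, duration, and distance is simple arithmetic, sketched here for the 20° per second velocity used in this experiment. The function name `sweep_duration_s` is hypothetical.

```python
def sweep_duration_s(distance_deg, velocity_deg_per_s=20.0):
    """Stimulus duration (s) needed to travel a given arc at constant velocity."""
    return distance_deg / velocity_deg_per_s

# At 20 deg/s, a 53-degree sweep lasts 2.65 s and a 100-degree sweep lasts 5 s.
short_sweep = sweep_duration_s(53.0)
long_sweep = sweep_duration_s(100.0)
```

Because velocity is fixed, adapting the distance traveled also changes the stimulus duration in direct proportion.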

Procedure

Prior to data collection, each participant completed several practice runs. Data collection ensued only once the participant understood the task and their performance had stabilized. Prior to each presentation, the stimulus starting location was determined in the same fashion as in the first experiment (i.e., starting location of each presentation was normally distributed around a mean of zero and standard deviation of two). After each presentation, participants used a keyboard to indicate which direction the sound swept (left or right). Threshold for a run (in degrees) was obtained by adaptively varying the distance the stimulus traveled using a three-down, one-up tracking procedure (8° and 4° large and small step sizes, respectively). The small step sizes were applied after two reversals. Runs were terminated after eight reversals, and the threshold (in degrees) was calculated as the average of the last six reversals.

In NH listeners, ILD functions (ILD vs. source azimuth) are nonmonotonic, with greater azimuthal variation with increasing frequency. As a result, ILDs increase from 0° to 60°, but for azimuths greater than 60°, the ILD cue becomes less useful (Macaulay et al., 2010). However, even though ILD functions are nonmonotonic (with decreasing magnitude > 60°), it is quite likely that BiCI users are still able to use these cues. Because of this, a run was not terminated until the distance traveled met or exceeded 100°. In addition, if eight reversals could not be achieved within 60 presentations, the run was terminated. Only after multiple runs where a threshold could not be achieved could we confidently say that the participant could not complete the task.
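The tracking and termination rules described in this section can be sketched as below. This is an illustrative reconstruction under stated assumptions, not the study's test software: the starting angle of 40°, the 1° floor, the function name `run_staircase`, and the deterministic simulated listener are all hypothetical.

```python
def run_staircase(respond, start_deg=40.0, max_deg=100.0, max_trials=60):
    """Three-down, one-up track on movement angle.

    respond(angle) returns True for a correct response.
    Returns the threshold in degrees, or None if no threshold was reached.
    """
    angle, correct_streak, direction = start_deg, 0, 0
    reversals = []
    for _ in range(max_trials):
        if respond(angle):
            correct_streak += 1
            if correct_streak < 3:
                continue                       # need three correct in a row to step down
            correct_streak, new_direction = 0, -1
        else:
            correct_streak, new_direction = 0, +1
        if direction and new_direction != direction:
            reversals.append(angle)            # track changed direction at this angle
            if len(reversals) == 8:
                return sum(reversals[-6:]) / 6.0   # mean of the last six reversals
        direction = new_direction
        step = 8.0 if len(reversals) < 2 else 4.0  # small steps after two reversals
        angle = max(1.0, angle + new_direction * step)  # 1-degree floor is an assumption
        if angle >= max_deg:
            return None                        # movement angle reached the 100-degree limit
    return None                                # eight reversals not reached in 60 presentations

# Hypothetical listener who is always correct at 20 degrees or more and wrong below.
threshold = run_staircase(lambda angle: angle >= 20.0)
no_threshold = run_staircase(lambda angle: False)
```

A run that returns `None` corresponds to the cases in which a threshold could not be obtained, either because the track reached 100° or because 60 presentations elapsed first.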

Broadband Dynamic ILD Analysis

Figure 5 shows ILD as a function of time for the actual physical task (recorded) and the corresponding simulated output of the two test programs. In this example recording, the stimulus starts at 0° and sweeps to +53° (rightward moving). During participant testing, the starting location of each presentation was varied, as described in the Procedure section. The same simulator that was used in the analysis of the MAA task was used here. Note that the ILD gradually increases when sweeping from 0° to +53°; however, the independent AGC (middle column, clinical program) has a much-reduced broadband ILD. On the other hand, the synchronized AGC program maintains the physical ILD throughout the time course (right column, experiment program).
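A broadband ILD time course of this kind can be computed from two recorded channels roughly as follows. This sketch is an assumption about the general approach, not the analysis code or the simulator used here: it uses unweighted RMS levels in non-overlapping frames (the figure reports dBA, and A-weighting is omitted), and the function names and frame length are hypothetical.

```python
import math

def frame_levels_db(samples, frame_len):
    """RMS level (dB re full scale) in consecutive non-overlapping frames."""
    levels = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        rms = math.sqrt(sum(x * x for x in frame) / frame_len)
        levels.append(20.0 * math.log10(max(rms, 1e-12)))  # guard against log(0)
    return levels

def ild_over_time(left, right, frame_len):
    """Broadband ILD (right minus left, in dB) for each frame."""
    left_db = frame_levels_db(left, frame_len)
    right_db = frame_levels_db(right, frame_len)
    return [r - l for l, r in zip(left_db, right_db)]

# Hypothetical signals: the right channel has twice the amplitude of the left.
left = [0.5, -0.5] * 50
right = [1.0, -1.0] * 50
ild = ild_over_time(left, right, frame_len=50)
```

With the right channel at twice the amplitude of the left, each frame yields an ILD of about 6 dB, following the R-L sign convention of the figure.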

Figure 5.

Broadband Levels and ILDs of the Stimulus at the Left and Right Sound Processors as a Function of Time for an Example MAMA Trial. The stimulus starts from 0° and sweeps +53° (rightward moving). The left column represents the actual physical cues for the task recorded from the clinical processors with the AB Listening Check device (before the AGC in the signal path). Middle and right columns show simulated output with the clinical program (independent AGC) and the experiment program (synchronized AGC), respectively. The top and middle rows show the sound levels (dBA) of the left and right device, respectively. ILD (R-L) is shown in the bottom row. Vertical dashed lines represent stimulus onset and offset. ILD = interaural level difference; MAMA = minimum audible movement angle.

Results

Individual and median (gray filled circles) MAMA thresholds for the independent AGC and synchronized AGC are shown in Figure 6A. As with MAA, a Friedman test on ranks was completed with AGC type (i.e., independent, synchronized) as the independent variable and MAMA threshold (in degrees) as the dependent variable. For several individuals, an MAMA result could not be obtained. These were participants for whom a threshold could not be determined after 60 presentations of the stimulus, who had several runs that were terminated once the stimulus movement angle reached 100° or greater, or both. For statistical purposes, these individuals were assigned a result of 100°. There was a significant difference in threshold between the independent (Mdn = 100.0, IQR = 33.5–100.0) and the synchronized (Mdn = 25.5, IQR = 20.8–27.7) AGC conditions; χ²(1) = 7.36, p = .006. These results suggest that AGC type did influence auditory motion perception; when a synchronized AGC was used, the MAMA threshold was significantly lower (i.e., better) and individuals were more sensitive to movement of sound in the horizontal plane.
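For readers who want to reproduce this kind of comparison, the sketch below computes a Friedman statistic for two paired conditions. It assumes the usual tie correction, under which the statistic for two conditions reduces to (a − b)²/(a + b), where a and b count the pairs favoring each condition and tied pairs drop out. The function name and the threshold values are hypothetical and are not data from this study.

```python
import math

def friedman_two_conditions(cond_a, cond_b):
    """Tie-corrected Friedman chi-square (df = 1) for two paired conditions."""
    higher_a = sum(a > b for a, b in zip(cond_a, cond_b))
    higher_b = sum(b > a for a, b in zip(cond_a, cond_b))
    untied = higher_a + higher_b
    if untied == 0:
        raise ValueError("all pairs are tied")
    chi2 = (higher_a - higher_b) ** 2 / untied
    # Survival function of a chi-square variable with one degree of freedom.
    p = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p

# Hypothetical thresholds (degrees) for six listeners; 100 marks "could not complete".
independent = [100.0, 100.0, 60.0, 35.0, 100.0, 28.0]
synchronized = [30.0, 26.0, 24.0, 22.0, 100.0, 20.0]
chi2, p = friedman_two_conditions(independent, synchronized)
```

Because the test uses only ranks within each listener, assigning 100° to listeners who could not complete the task affects the result only through which condition ranks higher, not through the size of the difference.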

Figure 6.

Results from the MAMA task. Individual and median (gray filled circles) MAMA thresholds for independent and synchronized AGC (panel A). Subjective difficulty of the MAMA task for the independent and synchronized AGC programs are shown in panel B. A higher rating indicates the task being perceived as more difficult. Note: Subjective report was not collected from participant 1. MAMA = minimum audible movement angle.

Subjective Results

Individual reported subjective difficulty is shown in Figure 6B. Ratings were not collected from Participant Number 1. Wilcoxon matched-pairs signed-rank tests were used to evaluate the perceived difficulty of the task. The MAMA task was perceived as significantly more difficult for the independent AGC (Mdn = 9, IQR = 8–10) when compared with the synchronized AGC listening condition (Mdn = 5, IQR = 3–8), Z = −2.673, p = .008. A Kendall’s tau-b correlation was run to determine the relationship between the magnitude of the subjective benefit and objective improvement with synchronized AGC and was not statistically significant for MAMA (τb = .32, p = .207). The difficulty of the MAMA task with independent AGC (Mdn = 9, IQR = 8–10) was rated significantly higher than that of MAA with the independent AGC (Mdn = 4, IQR = 2–8), Z = −2.814, p = .005. The difficulty of the MAMA task with synchronized AGC (Mdn = 5, IQR = 3–8) was rated significantly higher than that of MAA with the synchronized AGC (Mdn = 3, IQR = 1–4), Z = −2.120, p = .034.

Discussion

In the second experiment, we evaluated auditory spatial resolution under dynamic listening conditions using the MAMA task. Our hypothesis was that synchronized AGC providing the same amount of gain to both ears would preserve ILD cues and improve performance on the task. Median MAMA thresholds improved from 100.0° to 25.5° (M = 75° to 30°) with AGC synchronization, an effect that was statistically significant. While we did observe significant improvement, performance did not approach that of NH individuals (Chandler & Grantham, 1992, reported average MAMA of 5.7° for NH individuals).

The difficulty of this task was evidenced by the fact that half of the participants could not complete the task with independent AGC. In contrast, 11 of 12 participants (91.7%) were able to complete the task with synchronized AGC; the one participant who could not was also unable to complete the task with independent AGC. The reported difficulty of the task with both the independent and synchronized AGCs is displayed in Figure 6B. Participants reported the MAMA task as more difficult than the MAA task; however, they also reported more benefit with synchronized AGC (Mdn = 5) over independent AGC (Mdn = 9).

General Discussion

In the present work, as well as in several other publications, ILD compression was observed as the azimuth of the stimulus becomes increasingly off axis. This effect leads to errors in localization as the auditory image is projected more toward center. Dorman et al. (2014) explain how broadband compression, independent AGC, and the headshadow can cause some lower level signals on the device contralateral to the sound source to receive more amplification. In agreement with these findings, our physical measurements show inverted ILDs in the low-frequency range and compressed high-frequency ILDs (Figure 2B). In Figure 2B, any portion of the frequency range below zero on the horizontal axis is referred to as an inverted ILD, a condition where AGC compression distorts the ILD in such a way that the intensity of the stimulus is greater at the ear contralateral to the sound source. Figure 2B thus illustrates how the headshadow and broadband compression in independently operating AGCs create inverted low-frequency ILDs and compressed high-frequency ILDs. In Figures 3 and 5, evidence of the distorted ILD cue is observed in the clinical program (middle column, independent AGC) during the MAA and MAMA experiments, respectively. To explain further, after the AGCs are engaged in the MAA task, even while the second stimulus is presented at +53° (i.e., toward the right device), the ILD is severely compressed and, in some instances, reduced to 0 in the clinical program (see Figure 3, middle column). A highly compressed, near-zero (or zero) ILD is also observed with the clinical program during the MAMA task (see Figure 5, middle column). With synchronized AGC, we see a more faithful representation of the ILD across the low-, mid-, and high-frequency channels (Figure 2C) and in the time domain (see right columns of Figures 3 and 5).

It is worth noting that the amount of ILD reversal and the zero-crossing frequency of the ILD are not constant but depend on the frequency content of the stimulus. We know that the headshadow creates broadband level differences mostly because of high-frequency content. Therefore, the more the stimulus energy is concentrated in high frequencies, where the headshadow effect is large, the larger the broadband level difference between the ipsilateral and contralateral sides. For independent AGC, this difference in input level results in different broadband gains being applied at each ear, creating ILD distortion and ILD reversal at low frequencies. In contrast, if the broadband stimulus level is dominated by low-frequency content, for which the headshadow effect is small, the broadband level difference is smaller, the discrepancy in AGC gain is smaller, and there is therefore less ILD distortion and low-frequency reversal. The low-frequency ILD reversal may appear large for the stimuli used in this study because the stimulus is white noise, which has most of its energy in high frequencies where the headshadow is large. Less ILD compression and reversal are expected for more low-frequency dominated signals such as pink-, red-, or speech-shaped noise. Also note that the input level estimation for AGC is performed after application of the pre-emphasis filter to the input signal, further emphasizing its high-frequency content and exacerbating the phenomenon of low-frequency ILD reversal as observed in these experiments.
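The mechanism described in the two preceding paragraphs can be illustrated with a simple static model. This is a sketch under stated assumptions, not the AB simulator: the 60 dB kneepoint, the three band levels, the rule that linked devices both take the gain of the louder ear, and all function names are hypothetical. The 12:1 compression ratio is the value mentioned for the independent program in this article.

```python
import math

def broadband_level_db(band_levels_db):
    """Power-sum per-band levels (dB) into a single broadband level (dB)."""
    return 10.0 * math.log10(sum(10.0 ** (lv / 10.0) for lv in band_levels_db))

def agc_gain_db(input_level_db, kneepoint_db=60.0, ratio=12.0):
    """Static compressor gain: 0 dB below the kneepoint, compressive above it."""
    excess = max(0.0, input_level_db - kneepoint_db)
    return -excess * (1.0 - 1.0 / ratio)

def band_ilds_db(left_bands, right_bands, synchronized):
    """Per-band output ILD (right minus left) after a broadband AGC at each ear."""
    g_left = agc_gain_db(broadband_level_db(left_bands))
    g_right = agc_gain_db(broadband_level_db(right_bands))
    if synchronized:
        # Assumed linking rule: both ears apply the gain of the louder ear.
        g_left = g_right = min(g_left, g_right)
    return [(r + g_right) - (l + g_left) for l, r in zip(left_bands, right_bands)]

# Hypothetical source on the right: small low-frequency ILD, large high-frequency ILD.
left = [64.0, 60.0, 55.0]    # low, mid, high band levels at the far ear (dB)
right = [65.0, 66.0, 70.0]   # the same bands at the near ear (dB)

independent = band_ilds_db(left, right, synchronized=False)
linked = band_ilds_db(left, right, synchronized=True)
```

With independent gains, the near ear is attenuated by roughly 6 dB more than the far ear in this example, so the 1 dB low-frequency ILD becomes negative (inverted) and the 15 dB high-frequency ILD shrinks to about 9 dB. With a shared gain, the per-band ILDs pass through unchanged.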

Limitations and Future Directions

As this was an acute study, we did not evaluate the impact of chronic synchronized AGC use. There is always the potential for performance to change following device experience. In addition, while interaction among some front-end processing is known (e.g., microphone sensitivity), a systematic review of the interaction of programming parameters (e.g., noise reduction algorithms, number of active electrodes, etc.) and internal device characteristics (scalar location, insertion depth, etc.) on synchronized AGC benefit should be completed to maximize the benefit of its use. Future work should consider the role of interear asymmetry in outcomes on other domains (e.g., speech understanding) and how this may impact spatial hearing benefit with synchronized AGC. All future work in this area, as well as assessments of clinical implications, should bear in mind the effect of the stimulus spectrum on the ILD resulting from independently operated AGCs.

Not all programming parameters in this study were held constant. Here, we used experimental software and hardware that allowed us to measure after the AGC in the signal pathway. This meant that the CR differed slightly between the independent and synchronized programs. While the independent AGC program that we used for participant testing does add a fraction of a dB of ILD (1/12 of actual), this should make results with the independent AGC at a 12:1 CR better, not worse. Accordingly, we would not expect this decision to affect the results in this manuscript.

The authors would also like to acknowledge that the interpretation of subjective difficulty data on an adaptive task could be viewed as problematic. This is because a task that is designed to converge upon a fixed percent correct should result in about the same level of difficulty near threshold. However, the listeners reported that the task was easier at the beginning of a trial using synchronized AGC as compared with independent AGC conditions. We believe that this was likely driven by the fact that for the independent AGC conditions, even at the starting value of the adaptive track, many listeners were not able to consistently perform the task.

Summary and Conclusions

Previous work has shown that by synchronizing the AGC, localization abilities of BiCI listeners can be improved (e.g., Potts et al., 2019). The purpose of this investigation was to replicate these earlier findings and extend them to include not only static but also dynamic conditions both in terms of psychophysical performance and in terms of the physical cues produced by CI with either synchronized or independent AGCs. We evaluated both static and dynamic auditory spatial resolutions in 12 experienced, bilateral adult cochlear implant recipients with independent and synchronized AGC in their listening devices. The main findings are as follows:

Synchronization of AGC in BiCI recipients resulted in significantly improved horizontal plane static and dynamic spatial hearing abilities.

Synchronization of AGC resulted in better preservation of both static and dynamic horizontal plane ILDs as evidenced by acoustic measurements and AGC simulations.

Synchronization of AGC reduced reported listening difficulty for both static and dynamic spatial hearing in the horizontal plane.

Acknowledgments

The authors would like to express their sincere gratitude to Dean Swan, PhD, for providing hardware and software support and for his valuable scientific input and discussions throughout this study and in the preparation of this manuscript. The authors would also like to thank Dan Ashmead, PhD, for MATLAB programming support and Amy Stein, AuD, and Smita Agrawal, PhD, for their helpful suggestions on preliminary versions of this manuscript. A subset of these data was presented at the Conference on Implantable Auditory Prostheses on July 16, 2019.

Footnotes

Declaration of Conflicting Interests: The authors declared the following potential conflicts of interest with respect to the research, authorship, and/or publication of this article: R. H. G. is on the advisory board for Advanced Bionics, Cochlear, and Frequency Therapeutics. C. C. and P. H. are employed by Advanced Bionics. The remaining authors declare no conflict of interest.

Funding: The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This research was supported by Advanced Bionics, LLC (participant remuneration).

ORCID iDs: Robert T. Dwyer https://orcid.org/0000-0001-8361-9519

René H. Gifford https://orcid.org/0000-0001-6662-3436

References

- Ausili S. A., Backus B., Agterberg M. J. H., van Opstal A. J., van Wanrooij M. M. (2019). Sound localization in real-time vocoded cochlear-implant simulations with normal-hearing listeners. Trends in Hearing, 23, 233121651984733. 10.1177/2331216519847332

- Boyle P. J., Büchner A., Stone M. A., Lenarz T., Moore B. C. J. (2009). Comparison of dual-time-constant and fast-acting automatic gain control (AGC) systems in cochlear implants. International Journal of Audiology, 48(4), 211–221. 10.1080/14992020802581982

- Brown C. A. (2014). Binaural enhancement for bilateral cochlear implant users. Ear and Hearing, 35(5), 580–584. 10.1097/AUD.0000000000000044

- Brown C. A. (2018). Corrective binaural processing for bilateral cochlear implant patients. PLoS One, 13(1), e0187965. 10.1371/journal.pone.0187965

- Chandler D. W., Grantham D. W. (1992). Minimum audible movement angle in the horizontal plane as a function of stimulus frequency and bandwidth, source azimuth, and velocity. The Journal of the Acoustical Society of America, 91(3), 1624–1636. 10.1121/1.402443

- Dieudonné B., Francart T. (2018). Head shadow enhancement with low-frequency beamforming improves sound localization and speech perception for simulated bimodal listeners. Hearing Research, 363, 78–84. 10.1016/j.heares.2018.03.007

- Dieudonné B., Van Wilderode M., Francart T. (2020). Temporal quantization deteriorates the discrimination of interaural time differences. The Journal of the Acoustical Society of America, 148(2), 815–828. 10.1121/10.0001759

- Dorman M. F., Loiselle L., Stohl J., Yost W. A., Spahr A., Brown C., Cook S. (2014). Interaural level differences and sound source localization for bilateral cochlear implant patients. Ear and Hearing, 35(6), 633–640. 10.1097/AUD.0000000000000057

- Francart T., Lenssen A., Wouters J. (2011). Enhancement of interaural level differences improves sound localization in bimodal hearing. The Journal of the Acoustical Society of America, 130(5), 2817–2826. 10.1121/1.3641414

- Francart T., Van den Bogaert T., Moonen M., Wouters J. (2009). Amplification of interaural level differences improves sound localization in acoustic simulations of bimodal hearing. The Journal of the Acoustical Society of America, 126(6), 3209–3213. 10.1121/1.3243304

- Gifford R. H., Grantham D. W., Sheffield S. W., Davis T. J., Dwyer R., Dorman M. F. (2014). Localization and interaural time difference (ITD) thresholds for cochlear implant recipients with preserved acoustic hearing in the implanted ear. Hearing Research, 312, 28–37. 10.1016/j.heares.2014.02.007

- Grantham D. W. (1985). Auditory spatial resolution under static and dynamic conditions. The Journal of the Acoustical Society of America, 77(S1), S50–S50. 10.1121/1.2022378

- Grantham D. W. (1986). Detection and discrimination of simulated motion of auditory targets in the horizontal plane. The Journal of the Acoustical Society of America, 79(6), 1939–1949. 10.1121/1.393201

- Grantham D. W., Ashmead D. H., Ricketts T. A., Haynes D. S., Labadie R. F. (2008). Interaural time and level difference thresholds for acoustically presented signals in post-lingually deafened adults fitted with bilateral cochlear implants using CIS+ processing. Ear and Hearing, PAP(1), 33–44. 10.1097/AUD.0b013e31815d636f

- Grantham D. W., Ashmead D. H., Ricketts T. A., Labadie R. F., Haynes D. S. (2007). Horizontal-plane localization of noise and speech signals by postlingually deafened adults fitted with bilateral cochlear implants. Ear and Hearing, 28(4), 524–541. 10.1097/AUD.0b013e31806dc21a

- Harris J. D., Sergeant R. L. (1971). Monaural/binaural minimum audible angles for a moving sound source. Journal of Speech and Hearing Research, 14(3), 618–629. 10.1044/jshr.1403.618

- Hassager H. G., May T., Wiinberg A., Dau T. (2017). Preserving spatial perception in rooms using direct-sound driven dynamic range compression. The Journal of the Acoustical Society of America, 141(6), 4556–4566. 10.1121/1.4984040

- Kan A., Litovsky R. Y. (2015). Binaural hearing with electrical stimulation. Hearing Research, 322, 127–137. 10.1016/j.heares.2014.08.005

- Kayser H., Ewert S. D., Anem J., Rohdenburg T., Hohmann V., Kollmeier B. (2009). Database of multichannel in-ear and behind-the-ear head-related and binaural room impulse responses. EURASIP Journal on Advances in Signal Processing, 2009(1), 298605. 10.1155/2009/298605

- Kerber S., Seeber B. U. (2012). Sound localization in noise by normal-hearing listeners and cochlear implant users. Ear and Hearing, 33(4), 445–457. 10.1097/AUD.0b013e318257607b

- Kollmeier B., Peissig J. (1990). Speech intelligibility enhancement by interaural magnification. Acta Oto-Laryngologica, 109(sup469), 215–223. 10.1080/00016489.1990.12088432

- Litovsky R. Y., Parkinson A., Arcaroli J. (2009). Spatial hearing and speech intelligibility in bilateral cochlear implant users. Ear and Hearing, 30(4), 419–431. 10.1097/AUD.0b013e3181a165be

- Lopez-Poveda E. A., Eustaquio-Martín A., Fumero M. J., Stohl J. S., Schatzer R., Nopp R., Wolford R. D., Gorospe J. M., Polo R., Gutiérrez Revilla A., Wilson B. S. (2019). Lateralization of virtual sound sources with a binaural cochlear-implant sound coding strategy inspired by the medial olivocochlear reflex. Hearing Research, 379, 103–116. 10.1016/j.heares.2019.05.004

- Lopez-Poveda E. A., Eustaquio-Martín A., Stohl J. S., Wolford R. D., Schatzer R., Gorospe J. M., Santa Cruz Ruiz S., Benito F., Wilson B. S. (2017). Intelligibility in speech maskers with a binaural cochlear implant sound coding strategy inspired by the contralateral medial olivocochlear reflex. Hearing Research, 348, 134–137. 10.1016/j.heares.2017.02.003

- Macaulay E., Hartmann W., Rakerd B. (2010). The acoustical bright spot and mislocalization of tones by human listeners. The Journal of the Acoustical Society of America, 127, 1440–9. 10.1121/1.3294654

- Mayo P. G., Goupell M. J. (2020). Acoustic factors affecting interaural level differences for cochlear-implant users. The Journal of the Acoustical Society of America, 147(4), EL357–EL362. 10.1121/10.0001088

- Moore B. C. J., Glasberg B. R. (1988). A comparison of four methods of implementing automatic gain control (AGC) in hearing aids. British Journal of Audiology, 22(2), 93–104. 10.3109/03005368809077803

- Moore B. C. J., Glasberg B. R., Stone M. A. (1991). Optimization of a slow-acting automatic gain control system for use in hearing aids. British Journal of Audiology, 25(3), 171–182. 10.3109/03005369109079851

- Moore B. C. J., Kolarik A., Stone M. A., Lee Y.-W. (2016). Evaluation of a method for enhancing interaural level differences at low frequencies. The Journal of the Acoustical Society of America, 140(4), 2817–2828. 10.1121/1.4965299

- Moua K., Kan A., Jones H. G., Misurelli S. M., Litovsky R. Y. (2019). Auditory motion tracking ability of adults with normal hearing and with bilateral cochlear implants. The Journal of the Acoustical Society of America, 145(4), 2498–2511. 10.1121/1.5094775

- Perrott D. R., Musicant A. D. (1977). Minimum auditory movement angle: Binaural localization of moving sound sources. The Journal of the Acoustical Society of America, 62(6), 1463. 10.1121/1.381675

- Potts W. B., Ramanna L., Perry T., Long C. J. (2019). Improving localization and speech reception in noise for bilateral cochlear implant recipients. Trends in Hearing, 23, 233121651983149. 10.1177/2331216519831492

- Pulkki V. (1997). Virtual sound source positioning using vector base amplitude panning. Journal of the Audio Engineering Society, 45, 456–466.

- Pulkki V., Karjalainen M. (2001). Localization of amplitude-panned virtual sources. I: Stereophonic panning. Journal of the Audio Engineering Society, 49(9), 739–752.

- Spencer N. J., Tillery K. H., Brown C. A. (2019). The effects of dynamic-range automatic gain control on sentence intelligibility with a speech masker in simulated cochlear implant listening. Ear and Hearing, 40(3), 710–724. 10.1097/AUD.0000000000000653

- Strelcyk O., Zahorik P., Patro C., Kramer M., Derleth R. P. (2018). Effects of binaurally-linked dynamic range compression on word identification by hearing-impaired listeners. International Hearing Aid Research Conference (IHCON), Lake Tahoe, CA.

- Van Hoesel R. J. M. (2004). Exploring the benefits of bilateral cochlear implants. Audiology and Neuro-Otology, 9(4), 234–246. 10.1159/000078393

- Van Hoesel R. J. M., Ramsden R., Odriscoll M. (2002). Sound-direction identification, interaural time delay discrimination, and speech intelligibility advantages in noise for a bilateral cochlear implant user. Ear and Hearing, 23(2), 137–149. 10.1097/00003446-200204000-00006

- Van Hoesel R. J. M., Tyler R. S. (2003). Speech perception, localization, and lateralization with bilateral cochlear implants. The Journal of the Acoustical Society of America, 113(3), 1617–1630. 10.1121/1.1539520

- Wiggins I. M., Seeber B. U. (2013). Linking dynamic-range compression across the ears can improve speech intelligibility in spatially separated noise. The Journal of the Acoustical Society of America, 133(2), 1004–1016. 10.1121/1.4773862