Abstract

Background

Self‐administered survey questionnaires are an important data collection tool in clinical practice, public health research and epidemiology. They are ideal for achieving wide geographic coverage of the target population and for dealing with sensitive topics, and they are less resource‐intensive than other data collection methods. These survey questionnaires can be delivered electronically, which can maximise the scalability and speed of data collection while reducing cost. In recent years, the use of apps running on consumer smart devices (i.e., smartphones and tablets) for this purpose has received considerable attention. However, variation in the mode of delivering a survey questionnaire could affect the quality of the responses collected.

Objectives

To assess the impact that smartphone and tablet apps as a delivery mode have on the quality of survey questionnaire responses compared with any alternative delivery mode: paper, laptop computer, tablet computer (manufactured before 2007), short message service (SMS) and plastic objects.

Search methods

We searched MEDLINE, EMBASE, PsycINFO, IEEEXplore, Web of Science, CABI: CAB Abstracts, Current Contents Connect, ACM Digital, ERIC, Sociological Abstracts, Health Management Information Consortium, the Campbell Library and CENTRAL. We also searched registers of current and ongoing clinical trials such as ClinicalTrials.gov and the World Health Organization (WHO) International Clinical Trials Registry Platform. We also searched the grey literature in OpenGrey, Mobile Active and ProQuest Dissertations & Theses. Lastly, we searched Google Scholar and the reference lists of included studies and relevant systematic reviews. Our searches were last updated on 12 and 13 April 2015.

Selection criteria

We included parallel randomised controlled trials (RCTs), crossover trials and paired repeated measures studies that compared the electronic delivery of self‐administered survey questionnaires via a smartphone or tablet app with any other delivery mode. We included data obtained from participants completing health‐related self‐administered survey questionnaires, both validated and non‐validated. We also included data offered by both healthy volunteers and by those with any clinical diagnosis. We included studies that reported any of the following outcomes: data equivalence; data accuracy; data completeness; response rates; differences in the time taken to complete a survey questionnaire; differences in respondents' adherence to the original sampling protocol; and acceptability to respondents of the delivery mode. We included studies that were published in or after 2007, as devices that became available from that year onwards are compatible with the mobile operating system (OS) framework that focuses on apps.

Data collection and analysis

Two review authors independently extracted data from the included studies using a standardised form created for this systematic review in REDCap. They then compared their forms to reach consensus. Through an initial systematic mapping of the included studies, we identified two settings in which survey completion took place: controlled and uncontrolled. These settings differed in terms of (i) the location where surveys were completed, (ii) the frequency and intensity of sampling protocols, and (iii) the level of control over potential confounders (e.g., type of technology, level of help offered to respondents). We conducted a narrative synthesis of the evidence because a meta‐analysis was not appropriate due to high levels of clinical and methodological diversity. We reported our findings for each outcome according to the setting in which the studies were conducted.

Main results

We included 14 studies (15 records) with a total of 2275 participants, although only 2272 participants were included in the final analyses because data were missing for three participants in one included study.

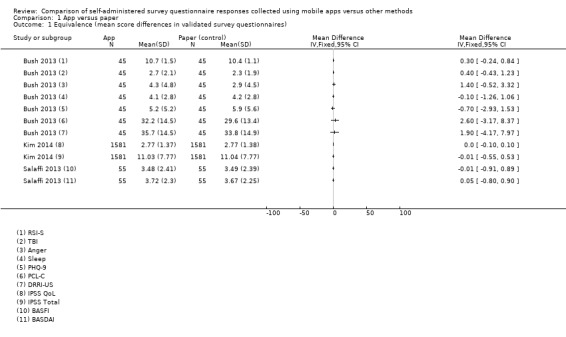

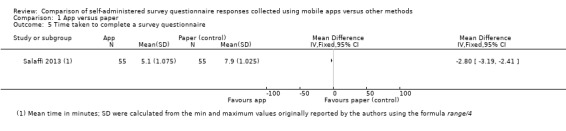

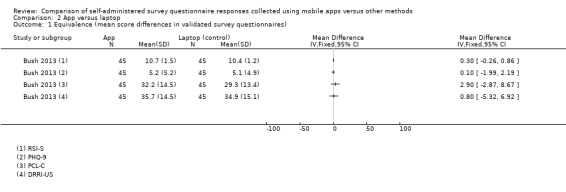

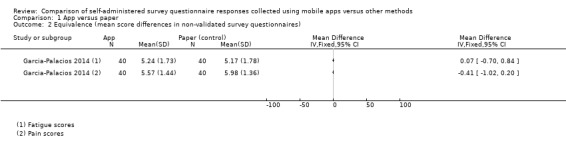

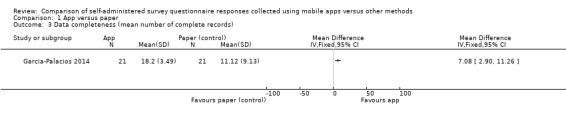

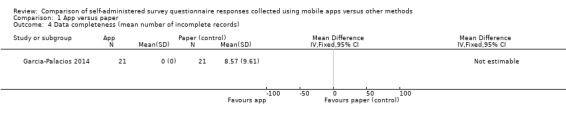

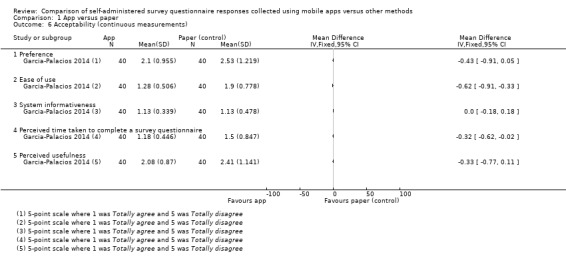

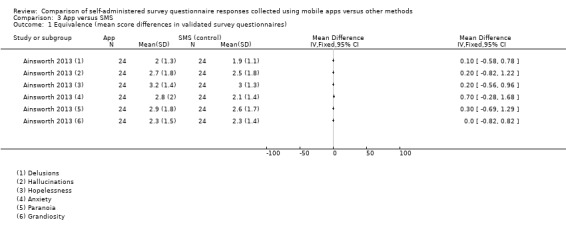

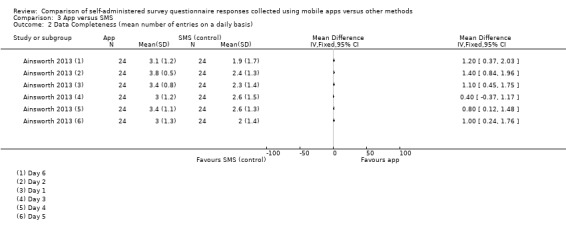

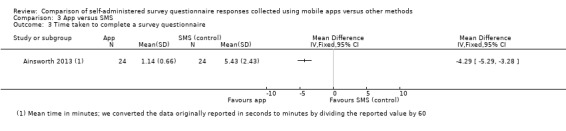

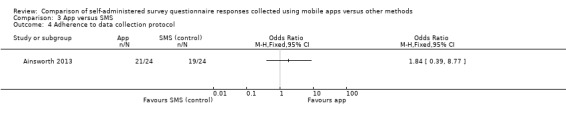

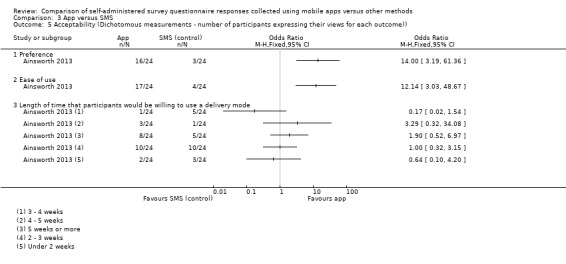

Regarding data equivalence, in both controlled and uncontrolled settings, the included studies found no significant differences in the mean overall scores between apps and other delivery modes, and all correlation coefficients exceeded the recommended thresholds for data equivalence. Concerning the time taken to complete a survey questionnaire in a controlled setting, one study found that an app was faster than paper, whereas the other study did not find a significant difference between the two delivery modes. In an uncontrolled setting, one study found that an app was faster than SMS. Data completeness and adherence to sampling protocols were only reported in uncontrolled settings. Regarding the former, an app was found to result in more complete records than paper, and in significantly more data entries than an SMS‐based survey questionnaire. Regarding adherence to the sampling protocol, apps may be better than paper but no different from SMS. We identified multiple definitions of acceptability to respondents, and the results were inconclusive. These definitions were: preference; ease of use; willingness to use a delivery mode; satisfaction; effectiveness of the system informativeness; perceived time taken to complete the survey questionnaire; perceived benefit of a delivery mode; perceived usefulness of a delivery mode; perceived ability to complete a survey questionnaire; maximum length of time that participants would be willing to use a delivery mode; and reactivity to the delivery mode and its successful integration into respondents' daily routine. Finally, regardless of the study setting, none of the included studies reported data accuracy or response rates.

Authors' conclusions

Our results, based on a narrative synthesis of the evidence, suggest that apps might not affect data equivalence as long as the intended clinical application of the survey questionnaire, its intended frequency of administration and the setting in which it was validated remain unchanged. There were no data on data accuracy or response rates, and findings on the time taken to complete a self‐administered survey questionnaire were contradictory. Furthermore, although apps might improve data completeness, there is not enough evidence to assess their impact on adherence to sampling protocols. None of the included studies assessed how elements of user interaction design, survey questionnaire design and intervention design might influence mode effects. Those conducting research in public health and epidemiology should not assume that mode effects relevant to other delivery modes apply to apps running on consumer smart devices. Those conducting methodological research might wish to explore the issues highlighted by this systematic review.

Keywords: Humans, Cell Phone, Cell Phone/statistics & numerical data, Data Accuracy, Minicomputers, Minicomputers/statistics & numerical data, Mobile Applications, Mobile Applications/statistics & numerical data, Surveys and Questionnaires, Surveys and Questionnaires/standards, Text Messaging, Text Messaging/statistics & numerical data, Time Factors

Plain language summary

Can apps be used for the delivery of survey questionnaires in public health and clinical research?

Background

Survey questionnaires are important tools in public health and clinical research: they offer a convenient way of collecting data from a large number of respondents, are well suited to dealing with sensitive topics, and are less resource intensive than other data collection techniques. The delivery of survey questionnaires via apps running on smartphones or tablets could maximise the scalability and speed of data collection offered by these tools, whilst reducing costs. However, before this technology becomes widely adopted, we need to understand how it could affect the quality of the responses collected. This is particularly important given the impact that data quality can have on the evidence base that supports many public health and healthcare decisions.

Objective

In this Cochrane review, we assessed the impact that using apps to deliver a survey can have on various aspects of the quality of responses. These include response rates, data accuracy, data completeness, time taken to complete a survey questionnaire, and acceptability to respondents.

Methods and results

We searched for studies published between January 2007 and April 2015. We included 14 studies and analysed data from 2272 participants. We did not conduct a meta‐analysis because of differences across the studies. Instead, we describe the results of each study. The studies took place in two types of setting: controlled and uncontrolled. The former refers to research or clinical environments in which healthcare practitioners or researchers were able to better control for potential confounders, such as the location and time of day in which surveys were completed, the type of technology used and the level of help available to respondents to deal with technical difficulties. Uncontrolled settings refer to locations outside these research or clinical environments (e.g., the respondent's home). We found that apps may be equivalent to other delivery modes such as paper, laptops and SMS in both settings. It is unclear if apps could result in faster completion times than other delivery modes. Instead, our findings suggest that factors such as the characteristics of the clinical population, and survey and interface design could moderate the effect on this outcome. Data completeness and adherence to sampling protocols were only reported in uncontrolled settings. Our results indicate that apps may result in more complete datasets, and may improve adherence to sampling protocols compared to paper but not to SMS. There were multiple definitions of acceptability to respondents, which could not be standardised across the included studies. Lastly, none of the included studies reported on response rates or data accuracy.

Conclusion

Overall, there is not enough evidence to make clear recommendations about the impact that apps may have on survey questionnaire responses. Data equivalence may not be affected as long as the intended clinical application of a survey questionnaire and its intended frequency of administration are the same whether or not apps are used. Future research may need to consider how the design of the user interaction, survey questionnaire and intervention may affect data equivalence and the other outcomes evaluated in this review.

Background

Description of the problem or issue

Quantitative survey methods are commonly used in public health research and epidemiology as they enable the collection, through survey questionnaires, of highly structured data that are standardised across collection sites and research studies (Bowling 2005; Boynton 2004; Carter 2000; Groves 2009; Hosking 1995). These data can then be used to make statistical inferences about the population from which the sample of respondents is drawn. As such, these techniques have become the basis of evidence in public health policy development and intervention design. For this reason, careful consideration of the data collection mode is needed to ensure the quality of the data. In this Cochrane review we define quality in relation to survey error: both measurement error (discrepancies between survey questionnaire responses and the true value of the attribute under study) and representational error (discrepancies between statistics estimated on a sample and the corresponding values in the target population) (Groves 2009; Lavrakas 2008).
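To make the two error components concrete, they can be written as follows for the sample mean of a single attribute. The notation is ours and is introduced only for illustration: $y_i$ is respondent $i$'s survey response, $\mu_i$ the true value of the attribute for that respondent, $s$ the sample of $n$ respondents and $U$ the target population of $N$ units. Adding and subtracting the sample mean of the true values splits the total error into the two parts defined above.

```latex
\underbrace{\bar{y}_s - \bar{\mu}_U}_{\text{total error of the sample mean}}
  = \underbrace{\frac{1}{n}\sum_{i \in s} (y_i - \mu_i)}_{\text{measurement error}}
  + \underbrace{\bar{\mu}_s - \bar{\mu}_U}_{\text{representational error}},
\qquad
\bar{y}_s = \frac{1}{n}\sum_{i \in s} y_i,\quad
\bar{\mu}_s = \frac{1}{n}\sum_{i \in s} \mu_i,\quad
\bar{\mu}_U = \frac{1}{N}\sum_{i \in U} \mu_i .
```

In this simplified form, a delivery mode can shift the first term (e.g., through social desirability bias) and the second (e.g., through non‐response that changes who ends up in $s$).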

Data collection mode refers to variation in several aspects of the survey process, namely sampling of and contact with potential respondents, delivery of the survey questionnaire and administration of the survey questionnaire (Bowling 2005; Lavrakas 2008). Regarding the latter, survey questionnaires can be self‐administered or interviewer‐administered (Carter 2000). While both approaches have their merits, self‐administration is usually preferred. Self‐administered survey questionnaires are ideal for achieving wide geographic coverage of the target population and for dealing with sensitive topics, and they are typically less resource‐intensive than interviews (Bowling 2005; Bowling 2009; Carter 2000; Gwaltney 2008). To build on these benefits, and to maximise the scalability and speed of data collection while reducing cost, the electronic delivery of self‐administered survey questionnaires has received considerable attention (Groves 2009; Lampe 1998; Lane 2006; Shih 2009).

Electronic modes of delivery have become commonplace in several research areas such as pain, asthma, tobacco use and smoking cessation (Lane 2006). These modes can vary in the type and degree of technology (e.g., devices and their technical specifications) used to deliver self‐administered survey questionnaires, the channels (i.e., visual or auditory) through which questions and response options are presented to respondents, and in the data entry formats that are supported (Bowling 2005; Groves 2009; Gwaltney 2008; Tourangeau 2000). However, survey questionnaire responses are the product of a complex interaction between the survey questionnaire, the respondent and the delivery mode. Therefore, variation in any of the properties of an electronic delivery mode may introduce some form of survey error or bias (i.e., mode effect) (Bowling 2005; Carter 2000; Coons 2009; Fan 2010; Manfreda 2008; Tourangeau 2000; Wells 2014).

Delivery modes could affect the type of responses given to a survey questionnaire (i.e., measurement error), which can manifest itself as differences in estimates, social desirability bias, acquiescence or extremeness bias, recall effects or response order effects (i.e., primacy and recency effects) (Bowling 2005; Groves 2009). Responses collected via electronic delivery modes, such as computers and personal digital assistants (PDA), have been found to be more accurate and timely than, and equivalent to, those obtained with paper survey questionnaires (Gwaltney 2008; Lane 2006). The evidence concerning recall effects or social desirability bias has been inconclusive (Bowling 2005). Furthermore, primacy effects are more prevalent when response options are presented visually (e.g., in web surveys), whereas recency effects are more common if the options are presented aurally (e.g., using interactive voice response (IVR) systems) (Groves 2009; Lavrakas 2008).

Changes to the delivery mode could also result in representational errors, which are usually defined in terms of sampling error, coverage error and non‐response error (Lavrakas 2008). Compared to other delivery modes (e.g., mail, fax, email, telephone and interactive voice response), web surveys can result in a drop of between 10% and 20% in response rates (Manfreda 2008; Shih 2009). However, this effect is mediated by the content and presentation of the survey questionnaire, sampling methods, type and number of invitations sent, access to technology and (in the case of web surveys) the stage of the survey questionnaire process (Bowling 2005; Fan 2010; Manfreda 2008; Shih 2009). Adherence to sampling protocols appears to be enhanced when using electronic survey questionnaires (Gwaltney 2008; Lane 2006). Additionally, electronic delivery modes tend to result in higher item response rates than paper (Bowling 2005).

Nonetheless, delivery mode effects tend to be mode‐specific, so it should not be assumed that lessons from one mode will apply to all others (Wells 2014). While these effects have been documented for traditional electronic delivery modes (Bowling 2005; Coons 2009; Fan 2010; Gwaltney 2008; Lane 2006), they have not been systematically assessed for current consumer smart devices that are able to support the delivery of self‐administered survey questionnaires.

Description of the methods being investigated

Consumer smart devices, in this case smartphones and tablets, are mobile devices with advanced computing and connectivity capabilities, and with an operating system (OS) framework that focuses on small, distributed software applications (i.e., apps). Mobile OSs provide a platform through which apps are able to access the computational and connectivity capabilities of a device and enable it to perform specialist functions. Apps can be pre‐loaded by the phone manufacturer and distributed as part of the factory settings of the device. Alternatively, third‐party developers can distribute their own apps through marketplaces from which end users can directly download and install them (Aanensen 2009; Wilcox 2012). Smartphones and tablets are also equipped with built‐in sensors that can unobtrusively capture some of the contextual and environmental information surrounding their use.

How these methods might work

Through their computing and connectivity capabilities, and the interfaces offered by apps, consumer smart devices are able to collect complex data and implement complex scoring requirements, thus supporting the delivery of self‐administered survey questionnaires (Aanensen 2009; Link 2014). A potential differentiating factor between consumer smart devices and other electronic modes of delivery is the perceived advantage of being able to conveniently complete survey questionnaires at any time and anywhere, as consumer smart devices are almost always on a person. This could help address certain limitations of the quantitative survey method such as recall bias. This type of survey completion can be further facilitated by the interfaces offered by the app, which could enable user interactions aimed at maximising the quality of responses collected (e.g., increasing data completeness through alerts and reminders, or presentation of a number of questions that is compatible with the usage patterns of consumer smart devices).

Furthermore, the portability, connectivity and ubiquitousness of consumer smart devices have resulted in usage patterns characterised by short, habitual sessions associated with specific contextual or environmental triggers (Adams 2014; Gaggioli 2013; Ishii 2004; Oulasvirta 2012). In addition, the type of activities for which consumer smart devices are used and the nature of the information accessed through them are different when compared to other electronic devices (Ishii 2004). These changes can introduce new forms of mode effects as the context and the setting in which the respondent‐survey interaction takes place can affect the information available to respondents, the salience of certain cues, the speed required to produce responses, the chosen accuracy for responses, and the social influence or norms operating at that particular moment (Tourangeau 2000). These elements will in turn determine the cognitive mechanisms involved in the response generation process, thus affecting the final properties of the responses (Gaggioli 2013; Klasnja 2012; Tourangeau 2000).

The ubiquitousness of consumer smart devices, the number of activities for which they are used and the distribution model of apps have resulted in users experiencing an ever increasing level of familiarity with their devices. For respondents, this may reduce the training requirements needed to complete a survey questionnaire on a consumer smart device. For researchers, these devices may offer a wider target audience and reduce research implementation costs.

Finally, data collected by built‐in sensors can enrich datasets with contextual and environmental information that could assist in the formulation or validation of theoretical models that attempt to explain the survey completion process or certain attributes of interest.

Why it is important to do this review

A systematic review in this area is warranted due to (i) the lack of a comprehensive assessment of the potential mode effects resulting from delivering self‐administered survey questionnaires via apps, (ii) the importance that self‐administered survey questionnaires have in generating the evidence base for public health and epidemiology, and (iii) the number of researchers already using apps for delivering self‐administered survey questionnaires.

Potential limitations of this Cochrane review

One of the potential limitations in this field is the difficulty in teasing out the relative contribution of the delivery mode to changes in survey questionnaire responses. An additional challenge concerns the generalisability and applicability of results given the large number of devices with differing technical specifications and the rapid pace at which technology advances. Moreover, variation in the characteristics of the population, the psychometric properties of a survey questionnaire and access to consumer smart devices across contexts might also affect the generalisability of our findings.

Objectives

To assess the impact that smartphone and tablet apps as a delivery mode have on the quality of self‐administered survey questionnaire responses compared to any alternative delivery mode: paper, laptop computer, tablet computer (manufactured before 2007), short message service (SMS), and plastic objects. The latter refers to a study in which the color analog scale (CAS) was printed on a plastic ruler.

Methods

Criteria for considering studies for this review

Types of studies

The International Society for Pharmacoeconomics and Outcomes Research electronic Patient‐Reported Outcome (ISPOR ePRO) Good Research Practices Task Force Report (Coons 2009) recommends using parallel randomised controlled trials (RCTs) and crossover trials to test for data equivalence between self‐reported measures delivered via different modes. Therefore, we included these study designs. We also included studies using a paired repeated measures study design.

Types of data

We included data obtained from participants completing health‐related self‐administered survey questionnaires, both validated and non‐validated. Although in measurement science it is important to ensure the validity and reliability of the instruments being used, a number of epidemiological studies still use patient‐reported measures whose psychometric properties have not been assessed or are not available. These studies might still provide useful insight into mode effects. However, we did not include data resulting from non‐validated instruments in a meta‐analysis. Instead, we synthesised these data narratively and used the data to inform our discussion.

We also included data offered by both healthy volunteers and by those with any clinical diagnosis. We planned to include the data resulting from individuals who were completing self‐administered surveys as part of a complex self‐management intervention, but we did not identify any studies with these characteristics.

We excluded data that were generated by interviewers, clinicians, caregivers or parents who were completing survey questionnaires on behalf of someone else. We also excluded survey questionnaires that measured consumer behaviour or that were used as part of routine paperwork. We did not exclude studies on the basis of the age, gender or any other socio‐demographic variable of the individuals completing the self‐administered survey questionnaires. However, data generated by individuals aged 18 or younger were analysed separately from data generated by adult participants.

Types of methods

We included studies that used a smartphone or tablet app to deliver survey questionnaires. We only included native apps that had been developed for a particular mobile device platform, or web apps wrapped within a native app (e.g., using a framework such as Adobe® PhoneGap™). We excluded web apps that were rendered on a mobile web browser. We believe that there are important differences in usability between these two types of apps (e.g., responsiveness, user interface design and performance), which could affect respondents' interaction with a survey questionnaire. Only smartphones and tablets that became available in or after 2007 were included, as these devices are compatible with the current mobile OS framework that focuses on apps.

We included studies that compared at least two modes of data collection, one of which was a smartphone or tablet app. Therefore, we compared self‐administered survey questionnaires delivered using an app versus the same survey questionnaire delivered using any other mode (either electronic or paper‐based).

We excluded apps that allowed pictures as a form of data entry. We excluded studies where students, researchers or employees used smartphones or tablets to collect data as part of their daily routines.

Types of outcome measures

Primary outcomes

Equivalence between survey questionnaire responses administered via two different delivery modes. This outcome assesses the changes in the psychometric properties of a survey questionnaire when it is adapted for use with a new delivery mode. We measured equivalence using correlations or measures of agreement (intra‐class correlation (ICC) coefficient, Pearson product‐moment correlations, Spearman rho and weighted Kappa coefficient), comparisons of mean scores between two delivery modes, or both (Gwaltney 2008). We focused on the overall equivalence of a survey questionnaire, as opposed to the equivalence between constructs or individual items (Gwaltney 2008). For ICC, we used 0.70 as the cut‐off point for group comparisons (Gwaltney 2008). For other coefficients, we used 0.60 as the cut‐off point for concluding equivalence (Gwaltney 2008). For studies comparing mean scores, we used the minimally important difference (MID) as an indicator of equivalence (Gwaltney 2008). In addition, since equivalence between alternative delivery modes is a form of test‐retest or alternate‐forms reliability, between‐mode mean differences (MDs) and ICC coefficients were interpreted, whenever possible, in relation to within‐mode test‐retest ICC of the original mode (Coons 2009; Gwaltney 2008).
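As a hedged illustration of how the cut‐offs above could be applied, the Python sketch below computes an ICC for a participants‐by‐modes score table and checks coefficients and mean differences against the stated thresholds. The choice of the Shrout and Fleiss ICC(2,1) form (two‐way random effects, absolute agreement, single measurement), the treatment of a value equal to the cut‐off as meeting it, and all function names are our assumptions; the review does not specify which ICC form the included studies used.

```python
from statistics import mean

ICC_CUTOFF = 0.70    # cut-off for ICC in group comparisons (Gwaltney 2008)
OTHER_CUTOFF = 0.60  # cut-off for Pearson, Spearman, weighted kappa (Gwaltney 2008)


def icc_2_1(scores):
    """ICC(2,1) for an n x k table: one row per participant, one column per mode.

    Assumption: two-way random effects, absolute agreement, single measurement.
    """
    n, k = len(scores), len(scores[0])
    grand = mean(v for row in scores for v in row)
    row_means = [mean(row) for row in scores]
    col_means = [mean(row[j] for row in scores) for j in range(k)]
    ss_total = sum((v - grand) ** 2 for row in scores for v in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between participants
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between modes
    ss_err = ss_total - ss_rows - ss_cols                    # residual
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    return (ms_rows - ms_err) / (
        ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n
    )


def coefficient_equivalent(value, kind):
    """True if a coefficient reaches its cut-off (equal counts as meeting it; an assumption)."""
    cutoff = ICC_CUTOFF if kind == "icc" else OTHER_CUTOFF
    return value >= cutoff


def means_equivalent(app_scores, other_scores, mid):
    """True if the absolute between-mode mean difference is smaller than the MID."""
    return abs(mean(app_scores) - mean(other_scores)) < mid


# Example: the app scores every participant one point higher than paper.
print(icc_2_1([[1, 2], [2, 3], [3, 4]]))  # about 0.667
```

In the example, the rank order of participants is identical across modes (a Pearson correlation would be 1.0), yet the absolute‐agreement ICC falls below the 0.70 cut‐off because it penalises the systematic one‐point offset between modes.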

Data accuracy: comparison of the proportion of errors or problematic items between two modes for delivering the same survey questionnaire.

Data completeness: comparison of the proportion of missing items between two modes for delivering the same survey questionnaire.

Response rates: the number of completed questionnaires divided by the total number of eligible sample units.
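The three primary outcomes above reduce to simple proportions, which can then be compared between delivery modes. The Python sketch below is illustrative only: representing a missing item as `None`, and counting "problematic" items upstream according to whatever rule a given study used, are our assumptions, and the function names are hypothetical.

```python
def _proportion(numerator, denominator):
    """Guarded division shared by the outcome helpers below."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return numerator / denominator


def problematic_item_proportion(n_problematic_items, n_items):
    """Data accuracy: proportion of errors or problematic items (lower is better)."""
    return _proportion(n_problematic_items, n_items)


def missing_item_proportion(items):
    """Data completeness: proportion of missing items (None marks a missing item)."""
    missing = sum(1 for value in items if value is None)
    return _proportion(missing, len(items))


def response_rate(n_completed, n_eligible):
    """Completed questionnaires divided by eligible sample units."""
    return _proportion(n_completed, n_eligible)


# Example: one questionnaire with one of four items left blank,
# and 45 completed questionnaires out of 60 eligible sample units.
print(missing_item_proportion([3, None, 2, 4]))  # 0.25
print(response_rate(45, 60))                     # 0.75
```

A between‐mode comparison is then the difference between the app and comparator proportions for the same survey questionnaire.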

Secondary outcomes

Difference between two delivery modes in the time taken to complete a survey questionnaire.

Differences in respondents' adherence to the original sampling protocol: respondents' adherence to a pre‐specified schedule (both in terms of duration and frequency) of survey completion.
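The review does not prescribe a formula for adherence. As one hypothetical operationalisation, the Python sketch below counts a scheduled prompt as adhered to if a completed entry falls within a fixed window after it; the one‐hour window, the greedy matching of at most one entry per prompt, and the function name are all assumptions for illustration.

```python
from datetime import datetime, timedelta


def adherence(scheduled, completed, window=timedelta(hours=1)):
    """Proportion of scheduled prompts answered within `window` after the prompt.

    Hypothetical rule: entries are matched greedily in time order and each entry
    can satisfy at most one prompt.
    """
    if not scheduled:
        raise ValueError("the sampling protocol must schedule at least one prompt")
    unmatched = sorted(completed)
    answered = 0
    for prompt in sorted(scheduled):
        for index, entry_time in enumerate(unmatched):
            if prompt <= entry_time <= prompt + window:
                answered += 1
                del unmatched[index]  # safe: we stop iterating immediately
                break
    return answered / len(scheduled)


# Example: a twice-daily diary (hypothetical times) with one on-time entry.
prompts = [datetime(2015, 4, 12, 9, 0), datetime(2015, 4, 12, 21, 0)]
entries = [datetime(2015, 4, 12, 9, 20), datetime(2015, 4, 12, 23, 0)]
print(adherence(prompts, entries))  # 0.5
```

The between‐mode difference in adherence would then be computed from the per‐respondent values for each mode.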

Differences between two delivery modes in acceptability to respondents.

Search methods for identification of studies

Electronic searches

We searched MEDLINE (January 2007 ‐ April 2015) using the search strategy outlined in Appendix 1. We adapted this search strategy for use in EMBASE (January 2007 ‐ April 2015) (Appendix 2), PsycINFO (January 2007 ‐ April 2015) (Appendix 3), IEEEXplore (January 2007 ‐ April 2015) (Appendix 4), Web of Science (January 2007 ‐ April 2015) (Appendix 5), CABI: CAB Abstracts (January 2007 ‐ April 2015) (Appendix 6), Current Contents Connect (January 2007 ‐ April 2015) (Appendix 7), ACM Digital (January 2007 ‐ April 2015) (Appendix 8), ERIC (January 2007 ‐ April 2015) (Appendix 9), Sociological Abstracts (January 2007 ‐ April 2015) (Appendix 10), Health Management Information Consortium (January 2007 ‐ April 2015) (Appendix 11), the Campbell Library (January 2007 ‐ April 2015) and CENTRAL (January 2007, Issue 1 ‐ April 2015, Issue 4) (Appendix 12). We also searched registers of current and ongoing clinical trials such as ClinicalTrials.gov (up to April 2015) (Appendix 13) and the World Health Organization (WHO) International Clinical Trials Registry Platform (ICTRP) (up to April 2015) (Ghersi 2009). We conducted our initial searches up to 2 July 2014 (Marcano Belisario 2014). We performed our search update on 12 and 13 April 2015.

We did not exclude any studies based on their language of publication.

We limited our electronic searches to studies published in or after 2007, since the type of devices and the software development and distribution framework that we evaluated in this systematic review did not exist before this year. We documented the search results for each electronic database and included them as Appendix 14 and Appendix 15.

Searching other resources

We searched the grey literature in OpenGrey (up to April 2015), Mobile Active (up to April 2015) and ProQuest Dissertations & Theses (January 2007 ‐ April 2015) (Appendix 16). In addition, we searched Google Scholar (up to April 2015). We also checked the reference lists of included studies and relevant systematic reviews identified through our electronic searches for additional references.

Data collection and analysis

Selection of studies

JMB implemented the search strategies described in Electronic searches and Searching other resources, and both JMB and JJ reviewed the outputs. We imported all the references into EndNote X5 and removed duplicate records of the same reports using the built‐in function offered by this programme.

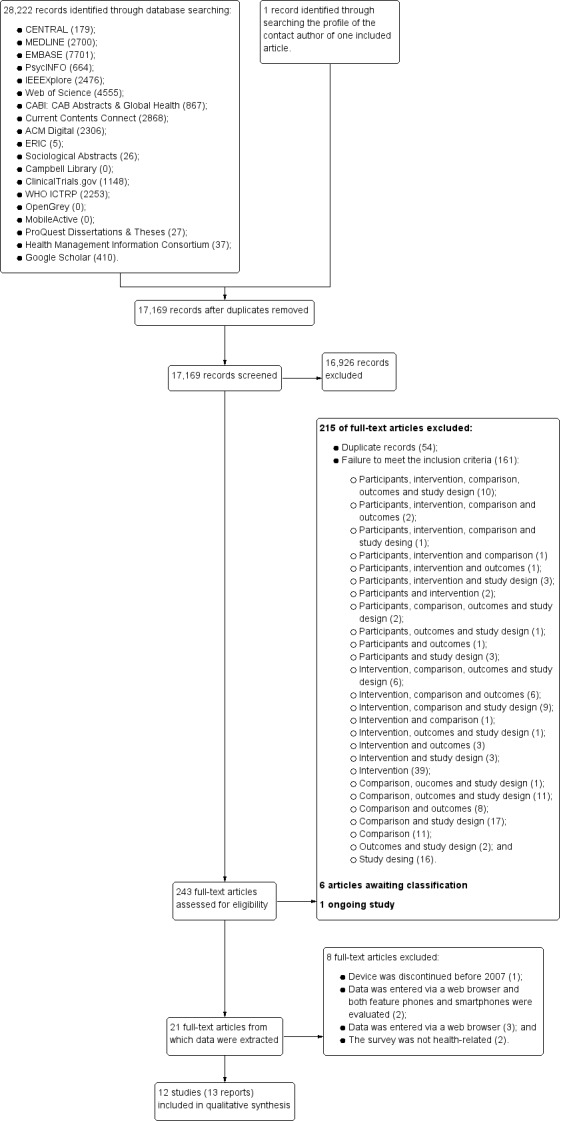

Following the initial searches, JMB (acting as Screener 1) and JJ and BF (both acting as Screener 2) independently examined the titles and abstracts of 17,169 records in order to identify potentially relevant studies. The same screeners then independently screened the full‐text reports of 243 potentially relevant records (54 of which were duplicate records that had not been identified as such by the built‐in function offered by EndNote X5, and 161 of which were excluded) and assessed them for compliance with our inclusion and exclusion criteria.

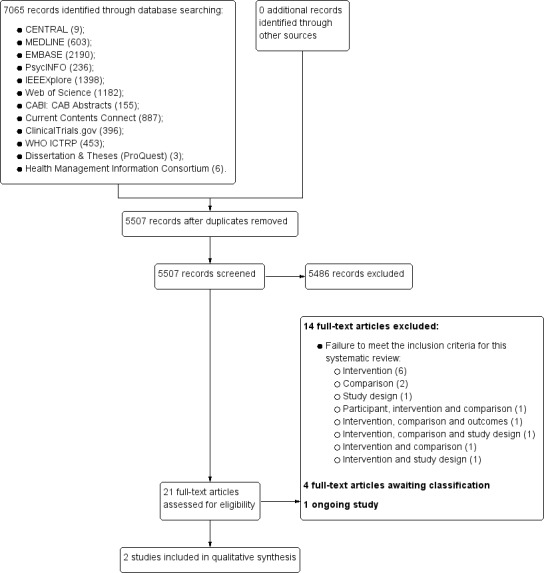

For the search update, JMB and JJ independently examined the titles and abstracts of 5507 records. JMB and JJ then independently screened the full‐text reports of 21 potentially relevant records (14 of which were excluded) and assessed them for compliance with our inclusion and exclusion criteria.

Any disagreements were resolved through discussion between JMB, JJ and BF. If the information presented in the full‐text report was insufficient to make a full assessment, we contacted the study authors to request additional information.

Data extraction and management

JMB and JJ independently extracted data from the included studies using a structured web‐based form in REDCap 2009. We compared the data extraction forms completed by each review author and followed up any discrepancies with reference to the original full‐text report. We contacted authors of studies containing missing or incomplete data in an attempt to obtain the incomplete information.

Where possible, we extracted the following information from each record:

General study details.

Study methods, including study design; inclusion and exclusion criteria; and study setting.

Description and number of participants, including their level of health literacy, age group and health status.

Types of self‐administered survey questionnaires used, as well as the technological platform used to deliver them.

Outcomes: outcomes measured and time points at which they were measured and the numerical results of these measurements.

Study conclusions, advantages and limitations.

We summarised the information extracted in a Characteristics of included studies table.

Assessment of risk of bias in included studies

For parallel RCTs, JMB and JJ independently assessed the risk of bias of each included study using Cochrane's tool for assessing risk of bias in randomised trials (Higgins 2011). Accordingly, we assessed the risk of bias across the following domains:

Random sequence generation.

Allocation concealment.

Blinding of participants and personnel.

Blinding of outcome assessment.

Incomplete outcome data.

Selective outcome reporting.

Other bias (i.e., imbalance of outcome measures at baseline, comparability between the characteristics of the intervention and control groups, and protection against contamination).

For crossover trials, we assessed the risk of bias across the following domains (Higgins 2011):

Suitability of the crossover design.

Evidence of a carry‐over effect.

Whether only first period data were available.

Incorrect statistical analysis.

Comparability of results with those from randomised trials.

We planned to assess the risk of bias for cluster‐randomised controlled trials (cRCTs) across the following domains (Higgins 2011):

Recruitment bias.

Baseline imbalances.

Loss of clusters.

Incorrect analysis.

Comparability with randomised trials.

However, we did not find any cRCTs that met our inclusion criteria.

For each included study, JMB and JJ classified each domain as presenting low, high or unclear risk of bias. We resolved any discrepancies between the two review authors through discussion. We summarised our assessment for each included study in a 'Risk of bias' table (included within the Characteristics of included studies table).

Measures of the effect of the methods

We compared the characteristics of included studies in order to determine the feasibility of performing a meta‐analysis. For continuous outcomes (i.e., comparison of mean scores between delivery modes, data completeness, time taken to complete a survey questionnaire, and acceptability measured on a continuous scale), we calculated the mean difference (MD) and 95% confidence interval (CI). If studies using different measurement scales had been analysed quantitatively, we would have calculated the standardised mean difference (SMD). For dichotomous outcomes (i.e., acceptability measured as a binary outcome), we calculated the odds ratio (OR) and 95% CI. In addition, had the correlation coefficients been analysed quantitatively, we would have calculated a summary correlation coefficient using a weighted linear combination method (Gwaltney 2008).
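The effect measures named above follow standard formulas. The sketch below is a minimal illustration, assuming summary statistics are available for each delivery mode and using a normal approximation for the 95% CI; the function names are hypothetical and this is not the RevMan implementation. Because most included studies used crossover or paired designs, a paired variant based on per‐participant differences is included (with very small samples a t quantile would be preferable to the normal one). The SMD is computed as Hedges' g, the form reported by RevMan.

```python
import math
from statistics import mean, stdev

Z_95 = 1.959963984540054  # two-sided 95% quantile of the standard normal


def mean_difference(m1, sd1, n1, m2, sd2, n2):
    """MD (mode 1 minus mode 2) and 95% CI for two independent groups."""
    md = m1 - m2
    se = math.sqrt(sd1 ** 2 / n1 + sd2 ** 2 / n2)
    return md, (md - Z_95 * se, md + Z_95 * se)


def paired_mean_difference(differences):
    """MD and 95% CI from per-participant differences (crossover or paired data)."""
    md = mean(differences)
    se = stdev(differences) / math.sqrt(len(differences))
    return md, (md - Z_95 * se, md + Z_95 * se)


def hedges_g(m1, sd1, n1, m2, sd2, n2):
    """SMD as Hedges' g: pooled-SD standardised difference times a small-sample correction."""
    sd_pooled = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))
    correction = 1 - 3 / (4 * (n1 + n2) - 9)
    return correction * (m1 - m2) / sd_pooled


def odds_ratio(a, b, c, d):
    """OR and 95% CI from a 2x2 table: a, b = yes/no with mode 1; c, d = yes/no with mode 2.

    Assumes no zero cells; a continuity correction would be needed otherwise.
    """
    or_ = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    log_or = math.log(or_)
    return or_, (math.exp(log_or - Z_95 * se_log), math.exp(log_or + Z_95 * se_log))
```

For example, mean scores of 10 (SD 2) and 8 (SD 2) in two groups of 50 give an MD of 2 with a 95% CI of roughly 1.22 to 2.78.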

Unit of analysis issues

For cRCTs, we planned to obtain data at the individual level; had these data not been available from the study report, we would have requested them directly from the contact author. In that case, we would have conducted a meta‐analysis of individual‐level data using the generic inverse‐variance method in RevMan 2014, which would have accounted for the clustering of data. If individual‐level data had not been accessible, we would have extracted the summary effect measure for each cluster, treated the number of clusters as the sample size and analysed the trial as if it had been individually randomised; this approach, however, would have reduced the statistical power of our analysis. For studies that accounted for clustering in their statistical analysis, we would have extracted the reported effect estimates and used them in our meta‐analysis. However, we did not include any cRCTs.
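The generic inverse‐variance method weights each study's effect estimate by the reciprocal of its variance. The minimal fixed‐effect sketch below assumes each study supplies an effect estimate and its standard error (for a cRCT, an estimate already adjusted for clustering); the `cluster_means` helper illustrates the fallback of collapsing individual responses so that clusters become the unit of analysis. Function names are hypothetical, and the random‐effects variants that RevMan also offers are not shown.

```python
import math

Z_95 = 1.959963984540054  # two-sided 95% quantile of the standard normal


def inverse_variance_pool(estimates, standard_errors):
    """Fixed-effect inverse-variance pooled estimate, its SE and a 95% CI."""
    weights = [1 / se ** 2 for se in standard_errors]
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total
    se_pooled = math.sqrt(1 / total)
    return pooled, se_pooled, (pooled - Z_95 * se_pooled, pooled + Z_95 * se_pooled)


def cluster_means(values_by_cluster):
    """Collapse individual responses to one mean per cluster (clusters become the sample)."""
    return [sum(values) / len(values) for values in values_by_cluster.values()]
```

Two studies with estimates 1.0 and 3.0 and standard errors 1.0 and 2.0 pool to 1.4, because the first study carries four times the weight of the second.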

Dealing with missing data

We attempted to contact the authors of studies with missing or incomplete data to request the missing information; however, we did not receive any replies. Therefore, we used an available case analysis.
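In an available case analysis, each outcome is analysed using every participant who provided data for that outcome, without imputation, so the denominator can differ from one outcome to the next. A minimal sketch, with hypothetical field names and made‐up records (`None` marks a missing response):

```python
from statistics import mean


def available_case_summary(records, outcome):
    """Mean and number of available cases for one outcome, ignoring missing values."""
    values = [r[outcome] for r in records if r.get(outcome) is not None]
    if not values:
        raise ValueError(f"no available cases for {outcome!r}")
    return mean(values), len(values)


# Hypothetical participants; each is missing a different outcome.
records = [
    {"score": 4, "minutes": 12},
    {"score": 6, "minutes": None},
    {"score": None, "minutes": 8},
]
score_summary = available_case_summary(records, "score")
minutes_summary = available_case_summary(records, "minutes")
```

Here each outcome is analysed with two of the three participants, but not the same two.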

Assessment of heterogeneity

For all our outcomes, we assessed the clinical and methodological diversity between included studies qualitatively. We assessed clinical diversity in relation to the type of device and platform used, the intensity of the data collection protocol and the characteristics of the participants. Methodological diversity was assessed in relation to the properties of the survey questionnaire, the study methodology and the outcome definitions. As a result of this assessment, we considered that a meta‐analysis would be appropriate if the included studies analysed the same age group of participants (i.e., adult participants separate from those aged 18 years or under), were using validated survey questionnaires and were using the same comparator (i.e., paper survey questionnaires or SMS).

Only a small number of studies met these criteria for each of our outcomes. In addition, the included studies displayed substantial clinical and methodological diversity, even after taking these criteria into consideration. For these reasons, we neither used a formal statistical test to quantify statistical heterogeneity nor conducted a meta‐analysis.
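The pooling criteria above act as a grouping rule: studies can only be pooled together if they share an age group and a comparator (paper or SMS) and use a validated survey questionnaire. The sketch below applies that rule to a few studies, using attributes described elsewhere in this review; the data structure and field names are hypothetical, and treating composite instruments as not validated is our assumption. The rule ignores outcomes, so a group of two or more studies is necessary but not sufficient for a meta‐analysis.

```python
from collections import defaultdict
from dataclasses import dataclass

POOLABLE_COMPARATORS = {"paper", "SMS"}


@dataclass(frozen=True)
class Study:
    study_id: str
    adult: bool       # False means participants aged 18 years or under
    validated: bool   # assumption: composite and non-validated instruments count as False
    comparator: str


def poolable_groups(studies):
    """Group eligible studies by (age group, comparator)."""
    groups = defaultdict(list)
    for study in studies:
        if study.validated and study.comparator in POOLABLE_COMPARATORS:
            age_group = "adult" if study.adult else "18 or under"
            groups[(age_group, study.comparator)].append(study.study_id)
    return dict(groups)


# A few included studies, with attributes as described in this review.
STUDIES = [
    Study("Ainsworth 2013", adult=True, validated=False, comparator="SMS"),
    Study("Khraishi 2012", adult=True, validated=True, comparator="paper"),
    Study("Newell 2015", adult=True, validated=True, comparator="paper"),
    Study("Sun 2013a", adult=False, validated=True, comparator="paper"),
    Study("Sun 2013b", adult=False, validated=True, comparator="plastic ruler"),
]
```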

Assessment of reporting biases

We conducted a comprehensive search of multiple bibliographic databases and trial registries to minimise the risk of publication bias; this search identified two trials for which no publications are yet available. Since there were fewer than 10 included studies in each of our analyses, we did not assess reporting bias using a funnel plot regression weighted by the inverse of the pooled variance.

We assessed outcome reporting bias as part of the per‐study 'Risk of bias' assessment.
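To show why the 10‐study threshold matters, the sketch below implements Egger's regression test, a widely used funnel‐plot asymmetry test. This is a swapped‐in, closely related technique, not the pooled‐variance‐weighted regression named above: it regresses each study's standardised effect (effect divided by its standard error) on its precision (one divided by the standard error), and an intercept far from zero suggests small‐study effects. With few studies the intercept is estimated too imprecisely to be informative, so the function returns `None` below the threshold. The function name is hypothetical.

```python
import math


def egger_test(effects, standard_errors, min_studies=10):
    """Egger's regression test for funnel-plot asymmetry.

    Ordinary least squares of effect/SE on 1/SE. Returns (intercept, t statistic),
    where t has k - 2 degrees of freedom, or None when k < min_studies.
    """
    k = len(effects)
    if k != len(standard_errors):
        raise ValueError("effects and standard_errors must have the same length")
    if k < min_studies:
        return None
    y = [e / se for e, se in zip(effects, standard_errors)]  # standardised effects
    x = [1 / se for se in standard_errors]                    # precisions
    x_bar, y_bar = sum(x) / k, sum(y) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    s2 = sum(r * r for r in residuals) / (k - 2)
    se_intercept = math.sqrt(s2 * (1 / k + x_bar ** 2 / sxx))
    t_stat = intercept / se_intercept if se_intercept > 0 else float("nan")
    return intercept, t_stat
```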

Data synthesis

Since performing a meta‐analysis was not appropriate, we conducted a narrative synthesis of the evidence. We adapted the framework proposed by Rodgers 2009 to guide this process. We had originally planned to use the Grading of Recommendations Assessment, Development and Evaluation (GRADE) approach to assess the quality of the pooled evidence, the magnitude of effect of the interventions examined and the sum of the available data on the main outcomes, and to produce a 'Summary of findings' table for each of our primary outcomes. However, we did not implement this, because we did not conduct a meta‐analysis.

Subgroup analysis and investigation of heterogeneity

We planned to perform subgroup analyses according to whether the participants were healthy volunteers or had any given clinical diagnosis, and whether or not they were completing survey questionnaires as part of a complex self‐management intervention. We also planned to perform subgroup analyses based on the type of device (i.e., smartphones versus tablets) and the form of data entry, and on whether the survey questionnaires were used for longitudinal data collection or for a single outcome assessment. Finally, we planned to perform a subgroup analysis based on whether or not the study was industry‐funded. However, since a meta‐analysis was not appropriate, we did not perform any of these analyses.

Sensitivity analysis

We planned to conduct a sensitivity analysis if one or more studies were dominant due to their size, if one or more studies had results that differed from those observed in other studies, or if one or more studies had quality issues that may have affected their interpretation as assessed with the Cochrane 'Risk of bias' tool. However, since none of these conditions were met, we did not conduct a sensitivity analysis.

Results

Description of studies

Results of the search

After the initial implementation of our search procedures, we screened the titles and abstracts of 17,169 records. All but one of these (17,168) were identified through the search strategies developed as part of our Electronic searches; the remaining record was identified from the professional profile of one contact author (Fanning 2014). During the initial screening, we excluded 16,926 records and retrieved full‐text reports for 243 potentially includable studies, which we assessed for eligibility. Of these, we excluded 215 records and categorised six as awaiting classification (see Studies awaiting classification and Characteristics of studies awaiting classification), because there was insufficient information available from the reports and our attempts to obtain information from the contact authors were unsuccessful. One further record corresponded to an ongoing trial. We extracted data from the remaining 21 reports; however, we subsequently excluded eight of these records (corresponding to six studies) (Depp 2012; Fanning 2014; Haver 2011; Mavletova 2013; Wells 2014; Woods 2009).

Following the implementation of our search update, we screened the titles and abstracts of 5507 records. During this initial screening, we excluded 5486 records and retrieved full‐text reports for 21 potentially includable studies, which we assessed for eligibility. Of these, we excluded 14 records, categorised four as awaiting classification (as there was insufficient information available from the study reports and our attempts to obtain information from the contact authors were unsuccessful) and identified one record as an ongoing trial. We extracted data from the remaining two reports.

Overall, 15 records (corresponding to 14 studies) met the inclusion criteria (Ainsworth 2013; Brunger 2015; Bush 2013; Garcia‐Palacios 2014; Khraishi 2012; Kim 2014; Lamber 2012; Newell 2015; Salaffi 2013; Schemmann 2013; Sigaud 2014; Stomberg 2012; Sun 2013a; Sun 2013b; see Figure 1 and Figure 2).

Figure 1. PRISMA flow diagram.

Figure 2. PRISMA flow diagram ‐ updated search.
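The screening counts reported for the two search rounds can be checked for internal consistency. The sketch below encodes them (the dictionary keys are hypothetical labels) and verifies that each stage adds up; the figure of zero exclusions after data extraction in the update is inferred from the reported total of 15 included records rather than stated directly.

```python
INITIAL = {
    "screened": 17_169,
    "excluded_on_title_abstract": 16_926,
    "full_text": 243,
    "full_text_excluded": 215,  # 54 undetected duplicates + 161 excluded
    "awaiting_classification": 6,
    "ongoing": 1,
    "extracted": 21,
    "excluded_after_extraction": 8,
}
UPDATE = {
    "screened": 5_507,
    "excluded_on_title_abstract": 5_486,
    "full_text": 21,
    "full_text_excluded": 14,
    "awaiting_classification": 4,
    "ongoing": 1,
    "extracted": 2,
    "excluded_after_extraction": 0,  # inferred: 13 + 2 = 15 included records
}


def included_records(flow):
    """Check that each screening stage adds up; return the number of included records."""
    if flow["screened"] != flow["excluded_on_title_abstract"] + flow["full_text"]:
        raise ValueError("title/abstract stage does not add up")
    full_text_total = (flow["full_text_excluded"] + flow["awaiting_classification"]
                       + flow["ongoing"] + flow["extracted"])
    if flow["full_text"] != full_text_total:
        raise ValueError("full-text stage does not add up")
    return flow["extracted"] - flow["excluded_after_extraction"]


total_included = included_records(INITIAL) + included_records(UPDATE)
```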

Included studies

Of the 15 included records, nine were papers published in peer‐reviewed journals and six were posters, abstracts or both. Two records corresponded to a single study and were included as Khraishi 2012. Two further records also corresponded to a single study; however, because this study evaluated two separate samples using different survey questionnaires, we included it as two separate studies (Sun 2013a; Sun 2013b), with both records contributing data to each.

Overall, we included 14 studies with a total of 2275 participants (2272 participants were analysed, because data were missing for three participants in one included study).

Types of studies

All the included studies were conducted in high‐income countries: Canada (Khraishi 2012; Sun 2013a; Sun 2013b); France (Sigaud 2014); Germany (Schemmann 2013); Italy (Lamber 2012; Salaffi 2013); Republic of Korea (Kim 2014); Spain (Garcia‐Palacios 2014); Sweden (Stomberg 2012); United Kingdom (Ainsworth 2013; Brunger 2015); and the United States of America (USA) (Bush 2013; Newell 2015). Newell 2015 recruited participants from disadvantaged communities in rural areas of the USA.

Lamber 2012, Newell 2015 and Stomberg 2012 conducted randomised controlled trials. Ainsworth 2013, Bush 2013, Garcia‐Palacios 2014, Khraishi 2012, Kim 2014, Salaffi 2013, Schemmann 2013, Sigaud 2014, Sun 2013a and Sun 2013b conducted crossover trials. Brunger 2015 conducted a paired repeated measures study. We had planned to include studies using a cluster‐randomised design, but we did not identify any that met our inclusion criteria. The duration of these trials (including both periods of data collection) ranged from one day (Brunger 2015; Lamber 2012; Newell 2015; Salaffi 2013; Schemmann 2013; Sun 2013a; Sun 2013b) to six months (Sigaud 2014). Washout periods ranged from 30 minutes (Sun 2013a; Sun 2013b) to one week (Ainsworth 2013; Kim 2014).

The main objectives of the included studies (as stated in the study reports) were to compare the psychometric properties of a survey questionnaire when administered using alternative delivery modes (Ainsworth 2013; Brunger 2015; Bush 2013; Garcia‐Palacios 2014; Khraishi 2012; Newell 2015; Salaffi 2013; Schemmann 2013; Sun 2013a; Sun 2013b); to develop a smartphone application for delivering a validated survey questionnaire and demonstrate its validity and reliability (Kim 2014); to demonstrate data equivalence between different delivery modes whilst assessing the impact of patient‐related factors on usability (Lamber 2012); and to evaluate the performance of a new delivery mode for recording patient data (Sigaud 2014; Stomberg 2012).

Finally, three studies mentioned having provided some form of incentive to their participants: Ainsworth 2013 offered GBP 50 worth of phone credit (for those participants on pay‐as‐you‐go plans) plus an additional GBP 30 upon completion of the study; Garcia‐Palacios 2014 offered three weeks of free psychological treatment for fibromyalgia syndrome (six two‐hour group sessions); and Newell 2015 offered a USD 40 gift card to their participants.

Types of data

We categorised the types of data according to the characteristics of the self‐administered survey questionnaires, the characteristics of the target populations, and the setting in which the survey questionnaires were completed.

Characteristics of the self‐administered survey questionnaires

For this dimension, we considered the validation status (i.e., validated, non‐validated, composite instruments and unclear), clinical applications and the type of response scales of each survey questionnaire.

Table 1 provides a summary of the self‐administered survey questionnaires included in this Cochrane review, grouped according to their validation status and clinical application. Overall, nine studies used validated instruments (Brunger 2015; Khraishi 2012; Kim 2014; Lamber 2012; Newell 2015; Salaffi 2013; Schemmann 2013; Sun 2013a; Sun 2013b).

Brunger 2015 used a visual analogue scale (VAS) to measure satiety in participants before and after the consumption of either a high‐energy or a low‐energy drink; these scales were developed following the guidance proposed by Blundell 2010 for the assessment of food consumption. Khraishi 2012 used the Health Assessment Questionnaire (HAQ) (Bruce 2003), a self‐report functional status measure commonly used in rheumatology (although it can be used across diverse clinical disciplines) that collects data on five patient‐related health dimensions: to avoid disability; to be free of pain or discomfort; to avoid adverse treatment effects; to keep treatment costs low; and to postpone death (Bruce 2003). Kim 2014 used the International Prostate Symptom Score (IPSS) (Barry 1992), a symptom index used in the assessment and management of patients with benign prostatic hyperplasia. This eight‐item instrument evaluates the sensation of incomplete bladder emptying, urinary frequency, intermittency, urgency (difficulty postponing urination), strength of the urinary stream, straining and nocturia, together with a single quality‐of‐life item. Lamber 2012 employed the European Organization for Research and Treatment of Cancer Quality of Life Questionnaire‐C30 (EORTC QLQ‐30), which was developed to assess quality of life in patients with cancer (EORTC QLQ‐C30). Newell 2015 used the Center for Epidemiologic Studies Depression Scale (CES‐D) and the Regulatory Focus Questionnaire (RFQ).

The CES‐D (Eaton 2004; Radloff 1977) was developed to measure symptoms of depression across nine dimensions: dysphoria; anhedonia; appetite; sleep; thinking; feelings of worthlessness; fatigue; agitation; and suicidal ideation. The RFQ (Higgins 2001) measures an individual's orientation towards her or his goals. It consists of 11 items, each rated on a five‐point scale, forming two subscales: prevention, which focuses on safety and responsibility, and promotion, which focuses on hopes and accomplishments.

Salaffi 2013 used both the Bath Ankylosing Spondylitis Disease Activity Index (BASDAI) (Garrett 1994) and the Bath Ankylosing Spondylitis Functional Index (BASFI) (Calin 1994). The BASDAI consists of six 10 cm horizontal VAS items measuring the severity of fatigue, spinal and peripheral joint pain, localised tenderness and morning stiffness in patients with ankylosing spondylitis (Garrett 1994). The BASFI is a two‐part questionnaire measuring function in ankylosing spondylitis and patients' ability to perform everyday activities (Calin 1994); the 10 questions in part 2 are rated on a 10‐point scale. Schemmann 2013 used the German version of the short International Hip Outcome Tool (iHOT‐12) (Griffin 2012), a 12‐item scale assessing the quality of life and functional status of patients with hip disorders (Griffin 2012). Finally, Sun 2013a and Sun 2013b used the Faces Pain Scale Revised (FPS‐R) (Hicks 2001) and the Color Analog Scale (CAS) (Bulloch 2009), respectively, to assess post‐surgical pain in a paediatric population.
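For readers unfamiliar with two of these instruments, their standard published scoring rules can be sketched as follows; this review does not report whether the included apps computed these scores. The BASDAI averages the two morning‐stiffness items (severity, and duration expressed on the same 0 to 10 line) and then averages that value with the four other 0 to 10 items. The IPSS sums seven symptom items scored 0 to 5 into a 0 to 35 total, conventionally banded as mild (0 to 7), moderate (8 to 19) or severe (20 to 35), with the quality‐of‐life item (0 to 6) reported separately. Function and parameter names are hypothetical.

```python
def basdai(fatigue, spinal_pain, joint_pain, tenderness, stiffness_severity, stiffness_duration):
    """BASDAI (0-10): mean of four symptom items plus the averaged morning-stiffness pair.

    stiffness_duration is the duration item already expressed on its 0-10 line.
    """
    items = (fatigue, spinal_pain, joint_pain, tenderness, stiffness_severity, stiffness_duration)
    if any(not 0 <= x <= 10 for x in items):
        raise ValueError("each BASDAI item must be on the 0-10 scale")
    stiffness = (stiffness_severity + stiffness_duration) / 2
    return (fatigue + spinal_pain + joint_pain + tenderness + stiffness) / 5


def ipss(symptom_items, quality_of_life):
    """IPSS total (0-35) and severity band from seven 0-5 items; QoL (0-6) kept separate."""
    if len(symptom_items) != 7 or any(not 0 <= s <= 5 for s in symptom_items):
        raise ValueError("IPSS needs seven symptom items scored 0-5")
    if not 0 <= quality_of_life <= 6:
        raise ValueError("the IPSS quality-of-life item is scored 0-6")
    total = sum(symptom_items)
    band = "mild" if total <= 7 else "moderate" if total <= 19 else "severe"
    return total, band, quality_of_life
```

Equivalence between delivery modes can then be assessed on these derived scores as well as on the individual items.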

Table 1. Self‐administered survey questionnaires grouped by validation status and clinical application.

| Validation status | Clinical application | Study ID | Instrument name |
| --- | --- | --- | --- |
| Validated | Functional Status Assessment | Khraishi 2012 | Health Assessment Questionnaire |
| | | Salaffi 2013 | Bath Ankylosing Spondylitis Disease Activity Index |
| | | Salaffi 2013 | Bath Ankylosing Spondylitis Functional Index |
| | | Schemmann 2013 | Short International Hip Outcome Tool (iHOT‐12 ‐ German Version) |
| | Pain Assessment | Sun 2013a | Faces Pain Scale Revised (FPS‐R) |
| | | Sun 2013b | Color Analog Scale (CAS) |
| | Symptom Scores | Kim 2014 | International Prostate Symptom Score (IPSS) |
| | Health‐related Quality of Life | Lamber 2012 | European Organization for Research and Treatment of Cancer Quality of Life Questionnaire‐C30 (EORTC QLQ‐30) |
| | | Kim 2014 | Quality of Life component of the IPSS |
| | Mental Health Assessment | Newell 2015 | Center for Epidemiologic Studies Depression Scale (CES‐D) |
| | Assessment of Individual Differences | Newell 2015 | Regulatory Focus Questionnaire (RFQ) |
| | Food Consumption/Appetite Assessment | Brunger 2015 | Visual Analogue Scales (VAS) designed following the guidance proposed by Blundell 2010 |
| Composite Instruments | Mental Health Assessment | Ainsworth 2013 | Symptom dimensionsᵃ: (i) hopelessness, (ii) depression, (iii) hallucinations, (iv) anxiety, (v) grandiosity, (vi) paranoia and (vii) delusions |
| | | Bush 2013 | Mobile Screenerᵃ: (i) the Post Traumatic Stress Disorder Checklist (PTSD Checklist); (ii) Patient Health Questionnaire ‐ 9 (PHQ‐9); (iii) Revised Suicidal Ideation Scale (R‐SIS); (iv) Deployment Risk and Resilience Inventory‐Unit Support (DRRI‐US); (v) Dimensions of Anger 5 (DAR5); (vi) Sleep Evaluation Scale; and (vii) TBI Self‐Report of Symptoms |
| Non‐validated | Pain Assessment | Stomberg 2012 | Patient‐reported Post‐surgical Pain |
| | Diary | Sigaud 2014 | Treatment Compliance Diary |
| Unclear | Functional Status Assessment | Garcia‐Palacios 2014 | PROMs measuring fatigue |
| | Pain Assessment | Garcia‐Palacios 2014 | PROMs measuring pain |
| | Mental Health Assessment | Garcia‐Palacios 2014 | PROMs measuring mood |

ᵃThe composite instruments used in these studies were derived from previously validated instruments.

Both Ainsworth 2013 and Bush 2013 used composite scales derived from previously validated instruments to conduct mental health assessments. Ainsworth 2013 developed a diagnostic assessment tool from a mobile phone assessment scale that assesses seven symptom dimensions: hopelessness, depression, hallucinations, anxiety, grandiosity, paranoia and delusions. This instrument was delivered using ecological momentary assessment (EMA) methods. Bush 2013 developed their Mobile Screener which incorporated the Post Traumatic Stress Disorder Checklist (PTSD Checklist), Patient Health Questionnaire ‐ 9 (PHQ‐9), Revised Suicidal Ideation Scale (R‐SIS), Deployment Risk and Resilience Inventory‐Unit Support (DRRI‐US), Dimensions of Anger 5 (DAR5), Sleep Evaluation Scale and TBI Self Report of Symptoms.

Sigaud 2014 used a non‐validated diary to monitor the home treatment (recombinant Factor VIII) of patients diagnosed with severe Haemophilia A, whilst Stomberg 2012 used a non‐validated numerical rating scale (NRS) to monitor post‐surgical pain. Garcia‐Palacios 2014 used EMA methods to collect patient‐reported outcome measures (PROMs) of pain, fatigue and mood in a clinical population using NRS items. However, the validation status of these measures was unclear.

In relation to the response scales, Bush 2013 used a categorical scale for part of its survey questionnaire: the Sleep Evaluation Scale consisted of ten items answered as true/false. Otherwise, the included studies used continuous scales, including VAS (Brunger 2015; Salaffi 2013; Sun 2013b), NRS (Garcia‐Palacios 2014; Newell 2015; Salaffi 2013; Stomberg 2012), adjectival or Likert scales (Ainsworth 2013; Bush 2013; Kim 2014; Lamber 2012; Schemmann 2013), and face scales (Sun 2013a).

Population characteristics

We considered the health status and age group of the participants.

Most participants came from clinical populations: rheumatology (Garcia‐Palacios 2014; Khraishi 2012; Salaffi 2013; Schemmann 2013), surgery (Stomberg 2012; Sun 2013a; Sun 2013b), psychiatry (Ainsworth 2013), urology (Kim 2014), oncology (Lamber 2012) and haematology (Sigaud 2014). Only Brunger 2015, Newell 2015 and Bush 2013 recruited participants from a population of healthy adults, with the latter recruiting army personnel. Table 2 provides a summary of the diagnoses and exclusion criteria for each included study.

Table 2. Clinical populations included in this Cochrane review.

| Clinical Domain | Study ID | Diagnosis | Exclusion criteria |
| --- | --- | --- | --- |
| Rheumatology | Garcia‐Palacios 2014 | Fibromyalgia | Severe mental illness; severe sensory impairment |
| | Khraishi 2012 | Psoriatic arthritis; rheumatoid arthritis | Not specified |
| | Salaffi 2013 | Axial spondyloarthritis | Younger than 18 years; mental or physical disability |
| | Schemmann 2013 | Inpatients treated with hip arthroscopy | Not specified |
| Surgery | Stomberg 2012 | Patients undergoing planned vaginal hysterectomy or laparoscopic cholecystectomy | History of alcohol or drug abuse; memory impairments |
| | Sun 2013a | Children in the post‐anaesthetic care unit | Not specified |
| | Sun 2013b | Children in the post‐anaesthetic care unit | Not specified |
| Urology | Kim 2014 | Lower urinary tract symptoms | Cancer; neurologic diseases; uncontrolled hypertension; uncontrolled diabetes; psychiatric disorders; prostatic surgery; liver cirrhosis; and renal failure |
| Oncology | Lamber 2012 | Cancer (not specified) | Not specified |
| Psychiatry | Ainsworth 2013 | Schizophrenia; schizoaffective disorder | Organic or substance‐induced psychoses |
| Haematology | Sigaud 2014 | Severe Haemophilia A treated with Advate® (recombinant Factor VIII) | Not specified |

Concerning age groups, both Sun 2013a and Sun 2013b recruited a paediatric sample of children aged between four and 11 years, and between five and 18 years, respectively. The remaining studies recruited adult participants ranging from 18 to 80 years old.

Setting

Ainsworth 2013, Garcia‐Palacios 2014, Sigaud 2014, and Stomberg 2012 asked participants to complete survey questionnaires in a naturalistic setting. These studies also required a longer and more intensive sampling protocol. The remaining studies asked participants to complete survey questionnaires in a clinical or research setting (Brunger 2015; Bush 2013; Khraishi 2012; Kim 2014; Lamber 2012; Newell 2015; Salaffi 2013; Schemmann 2013; Sun 2013a; Sun 2013b).

Types of method

We categorised the types of methods based on the type of device and platform, functionality offered, human‐machine interaction factors, data collection protocol and additional interventions.

Types of device and platform

Lamber 2012 evaluated the EORTC QLQ‐30 using an app running on both smartphones and tablets. The tablet model was not specified, but the handset was a Nokia N97 running Symbian OS. The report of this study indicated that their app, MobiDay, was developed specifically for smartphones, which suggests that the tablet might not meet our inclusion criteria (see Types of methods in Criteria for considering studies for this review).

Ainsworth 2013, Bush 2013, Garcia‐Palacios 2014, Kim 2014, Sigaud 2014, Stomberg 2012, Sun 2013a and Sun 2013b used apps running on smartphones. Bush 2013 and Stomberg 2012 supported the iPhone (iOS) platform, although the latter also supported Android and Java‐enabled handsets. Ainsworth 2013 used Orange San Francisco handsets running Android OS, and Garcia‐Palacios 2014 used HTC 1 Diamond devices running Windows Mobile OS. Kim 2014, Sigaud 2014, Sun 2013a and Sun 2013b reported using smartphones in their studies but did not specify the models used.

Brunger 2015, Khraishi 2012, Newell 2015, Salaffi 2013 and Schemmann 2013 used apps running on tablets. Khraishi 2012 and Newell 2015 used an iPad (iOS), Brunger 2015 used an iPad mini (iOS), whereas Salaffi 2013 used an Archos 101 tablet running Android OS. However, Schemmann 2013 did not specify the device model.

Functionality

Four studies reported the functionality offered by their apps (Ainsworth 2013; Garcia‐Palacios 2014; Salaffi 2013; Stomberg 2012). The app used in Ainsworth 2013 allowed configuration of the number of questions displayed each day (or the times at which they were displayed), configuration of questions, the creation of multiple question sets, question branching, questionnaire timeout, time stamping of questionnaire entries and complex skip procedures. Garcia‐Palacios 2014 implemented a configurable number of questions displayed each day and time stamping of questionnaire entries, whilst Stomberg 2012 implemented both a configurable number of questions displayed each day and configurable questions. Lastly, Salaffi 2013 supported complex skip procedures and compulsory questions. See Table 3 for additional information.

Table 3. Functionality and human computer interaction of the included apps.

Columns 2 to 7 describe functionality; columns 8 to 13 describe human computer interaction.

| Study ID | Configurable number/times of questions per day | Configurable question sets | Question branching | Questionnaire timeout | Time stamping of data entries | Complex skip procedures | Alerts | Reminders | Questionnaire layout | Data input | Saving data | Audio instructions |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Ainsworth 2013 | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | User‐definable alerts; delivered at semi‐random intervals; snooze alert to be reminded 5 minutes later; one alert for each question set | ‐ | One question per page; navigation through the pages of questions enabled | Continuous slider bar mapped onto a 7‐point Likert scale | Automatic; however, all answers were stored in the handset for downloading at the end of the sampling procedure | ‐ |
| Brunger 2015 | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | One question per page | 100 mm horizontal line; users could select their answer by sliding their finger across the line on the touchscreen | Automatic transfer of data to a secure database via a wireless connection | ‐ |
| Bush 2013 | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ |
| Garcia‐Palacios 2014 | ✓ | ‐ | ‐ | ‐ | ✓ | ‐ | Audio signal indicated that the rating scale should be completed; times could be adjusted to the particular needs of each participant | Audio signal every minute for the next 15 minutes after the initial alert, and then every 15 minutes during the next hour | ‐ | ‐ | ‐ | Enabled |
| Khraishi 2012 | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ |
| Kim 2014 | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | One question per page; navigation through the pages of questions enabled; users were allowed to correct/change previous answers | ‐ | Users had to tap the save button in order to submit their answers; automatic transfer of data | ‐ |
| Lamber 2012 | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | One question per screen | ‐ | Users were allowed to suspend their tasks and to come back to the questionnaire later on | ‐ |
| Newell 2015 | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ |
| Salaffi 2013 | ‐ | ‐ | ‐ | ‐ | ‐ | ✓ | ‐ | ‐ | One question per screen with visual and auditory stimuli | ‐ | Automatic | Voice and text synchronisation; replay buttons for the question stems and the individual response options |
| Schemmann 2013 | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ |
| Sigaud 2014 | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ |
| Stomberg 2012 | ✓ | ✓ | ‐ | ‐ | ‐ | ‐ | Push notifications delivered every 4 hours | SMS reminder if no response was obtained within 13 minutes of the initial alert | One question per screen; question disappeared immediately after an answer was submitted | ‐ | Automatic | ‐ |
| Sun 2013a | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ |
| Sun 2013b | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ |

Human computer interaction

Seven studies reported the human‐machine interaction elements implemented in their apps (Ainsworth 2013; Brunger 2015; Garcia‐Palacios 2014; Kim 2014; Lamber 2012; Salaffi 2013; Stomberg 2012). Ainsworth 2013 allowed respondents to set their own schedule of alerts. These alerts were delivered at semi‐random intervals, and respondents could snooze them for five minutes. Questions were presented one per page, but respondents were able to navigate back and forth between pages. Responses were entered via a continuous slider bar mapped onto a seven‐point Likert scale, and data were saved immediately after a response was entered. Brunger 2015 presented one question per page. Responses were entered via a continuous slider mapped onto a 10 cm horizontal line, with which users interacted directly through the touchscreen, and were transmitted automatically to a secure database via a wireless connection. Garcia‐Palacios 2014 also implemented alerts, but in the form of audio signals. Audio reminders were played if an answer was not entered within the specified time: every minute during the first 15 minutes, and then every 15 minutes for the next hour. This app also featured audio‐recorded instructions. Alerts in Stomberg 2012 took the form of push notifications delivered every four hours, and reminders were sent via SMS if no response was received within 13 minutes of the scheduled time.

Kim 2014, Lamber 2012, Salaffi 2013 and Stomberg 2012 also presented one question per page. However, of these four studies, only Kim 2014 allowed respondents to navigate between pages and to modify previous answers. Respondents in Kim 2014 had to confirm the selected option (by tapping a save button) before their response was saved, whereas data were saved automatically in Lamber 2012, Salaffi 2013 and Stomberg 2012. Furthermore, participants in Lamber 2012 were allowed to stop at any time and resume the survey questionnaire whenever it was convenient for them. Salaffi 2013 implemented voice and text synchronisation, and replay buttons for each question stem and individual response option.

See Table 3 for additional information.
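The reminder rules described for Garcia‐Palacios 2014 and Stomberg 2012 can be expressed as simple schedules. The sketch below assumes that "every 15 minutes for the next hour" means four further reminders at 30, 45, 60 and 75 minutes after the initial alert, and that reminders stop once a response is recorded; both are our reading rather than details confirmed by the study reports as summarised here. Function names are hypothetical.

```python
def tiered_reminder_offsets(fast_step=1, fast_span=15, slow_step=15, slow_span=60):
    """Minutes after the initial alert at which reminders sound.

    Every `fast_step` minutes up to `fast_span`, then every `slow_step` minutes
    for a further `slow_span` minutes (assumed reading of the Garcia-Palacios 2014 rule).
    """
    fast = list(range(fast_step, fast_span + 1, fast_step))
    slow = list(range(fast_span + slow_step, fast_span + slow_span + 1, slow_step))
    return fast + slow


def reminders_fired(offsets, response_minute=None):
    """Reminders actually played if the participant responds at `response_minute`.

    None means the participant never responded, so every reminder is played.
    """
    if response_minute is None:
        return list(offsets)
    return [m for m in offsets if m < response_minute]


def needs_sms_reminder(minutes_to_response, window=13):
    """Stomberg 2012-style rule: send an SMS if there is no response within `window` minutes."""
    return minutes_to_response is None or minutes_to_response > window
```

With the defaults, a participant who never responds hears 19 reminders (15 at one‐minute intervals and four at 15‐minute intervals); one who responds after five minutes hears four.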

Data collection protocol

Most included studies (71%; 10 of 14) sampled participants for one day (Brunger 2015; Bush 2013; Khraishi 2012; Kim 2014; Lamber 2012; Newell 2015; Salaffi 2013; Schemmann 2013; Sun 2013a; Sun 2013b). Within this single day, Brunger 2015 sampled participants 0, 30, 60, 90 and 120 minutes after the consumption of a low‐energy or a high‐energy drink. By contrast, Garcia‐Palacios 2014 required participants to complete survey questionnaires at least three times a day for seven days, and Ainsworth 2013 and Stomberg 2012 at least four times a day for six days. Participants in Sigaud 2014 were asked to keep a diary for three months, although the frequency of entries was not reported.
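These sampling protocols fix the minimum number of scheduled entries per participant, which is the natural denominator when measuring adherence to the original sampling protocol. A minimal sketch, with hypothetical names and a made‐up completion count:

```python
# (minimum prompts per day, days) as reported for the longer protocols
PROTOCOLS = {
    "Garcia-Palacios 2014": (3, 7),
    "Ainsworth 2013": (4, 6),
    "Stomberg 2012": (4, 6),
}
# Brunger 2015: 0, 30, 60, 90 and 120 minutes after the drink
BRUNGER_TIMEPOINTS_MIN = list(range(0, 121, 30))


def minimum_prompts(per_day, days):
    """Minimum number of scheduled entries over the sampling period."""
    return per_day * days


def adherence(completed, scheduled):
    """Proportion of scheduled entries actually completed."""
    if scheduled <= 0:
        raise ValueError("scheduled must be positive")
    return completed / scheduled


scheduled = {study: minimum_prompts(*protocol) for study, protocol in PROTOCOLS.items()}
example_adherence = adherence(18, scheduled["Ainsworth 2013"])  # hypothetical participant
```

The same arithmetic gives 16 scheduled entries for the Stomberg 2012 comparator group (four times a day for four days) against 24 for its app group, which is why adherence is better compared as a proportion than as a raw count.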

Additional interventions

Ainsworth 2013, Newell 2015, Salaffi 2013 and Stomberg 2012 offered training on the use of their app or device. In addition, both Ainsworth 2013 and Stomberg 2012 allowed phone calls during the sampling period, whilst only Stomberg 2012 offered installation of the app by a member of staff. A semi‐structured interview, the Positive and Negative Syndrome Scale (PANSS), was conducted in Ainsworth 2013 before and after the sampling period. These features could have acted as interventions in their own right, influencing the study findings.

Types of comparisons

Type of device & platform

Bush 2013 and Lamber 2012 chose both a laptop and paper as their comparators. However, only Lamber 2012 specified using a MacBook Pro laptop. Since the tablet used in Lamber 2012 is unlikely to match our inclusion criteria, we considered it a comparator. Brunger 2015 used a PDA (iPAQ) as their comparator. Ainsworth 2013 chose SMS as their comparator, delivered via openCDMS (an open source, secure online clinical data management system). Sun 2013b used a version of the CAS printed on a plastic ruler as a comparator. The remaining studies compared an app to paper (Garcia‐Palacios 2014; Khraishi 2012; Kim 2014; Newell 2015; Salaffi 2013; Schemmann 2013; Sigaud 2014; Stomberg 2012; Sun 2013a).

Functionality

Only Ainsworth 2013 reported that its system allowed a configurable number of questions displayed each day, configurable questions, multiple question sets, question branching, questionnaire timeout, time stamping of data entries and skip procedures.

Human computer interaction

Only Ainsworth 2013, Brunger 2015 and Lamber 2012 reported this information in sufficient detail.

In Ainsworth 2013, SMS alerts were delivered at semi‐random intervals. There was one alert for each question and one SMS reminder if no response was received within five minutes. Questions were presented one per SMS and answers were submitted by replying to the SMS (typing a number between one and seven); each subsequent question was delivered once the response to the current question had been submitted. Brunger 2015 presented one question per page on an iPAQ (model not specified). Data input was made through a continuous slider mapped onto a 64 mm horizontal line; users interacted with the device using a stylus. Lamber 2012 developed a Computer‐based Health Evaluation System (CHES) designed to run on a tablet (where users entered their responses with a stylus) and a web‐based application designed to run on a laptop. Patients using the laptop accessed the application via a web browser; this application adapted its graphical user interface to the device characteristics, presenting one question per screen with response options in a drop‐down list.
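The question flow reported for Ainsworth 2013 (one question per SMS, a single reminder after five minutes without a reply, and the next question sent only once the current one has been answered) can be illustrated with a small simulation. This is a hypothetical Python sketch, not the openCDMS implementation: the function and class names, the 30‐minute timeout value and the handling of out‐of‐range replies are our assumptions.

```python
# Hypothetical simulation of the one-question-per-SMS flow reported for Ainsworth 2013.
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

REMINDER_AFTER_MIN = 5.0   # reported: one reminder if no reply within five minutes
TIMEOUT_MIN = 30.0         # ASSUMPTION: hypothetical timeout; no value was reported
VALID_REPLIES = {str(n) for n in range(1, 8)}  # reported: replies are a number from 1 to 7

# A reply is (minutes until the participant replies, reply text); None means no reply.
Reply = Optional[Tuple[float, str]]


@dataclass
class SessionLog:
    answers: List[Optional[int]] = field(default_factory=list)  # None marks a missing answer
    messages_sent: int = 0  # question SMS plus reminder SMS


def simulate_sms_questions(questions: List[str], replies: List[Reply]) -> SessionLog:
    """Send one question per SMS; the next question goes out only after a reply."""
    log = SessionLog()
    for i in range(len(questions)):
        log.messages_sent += 1  # the SMS carrying question i
        reply = replies[i] if i < len(replies) else None
        if reply is None or reply[0] > REMINDER_AFTER_MIN:
            log.messages_sent += 1  # the single reminder SMS
        if reply is None or reply[0] > TIMEOUT_MIN:
            # ASSUMPTION: the prompt closes and unanswered questions are recorded as missing
            log.answers.extend([None] * (len(questions) - i))
            break
        text = reply[1].strip()
        # ASSUMPTION: a reply outside 1-7 is stored as missing rather than re-requested
        log.answers.append(int(text) if text in VALID_REPLIES else None)
    return log
```

For example, a participant who answers the first question after one minute, the second after seven minutes and never answers the third receives five messages (three questions and two reminders), and the third answer is recorded as missing.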

Data collection protocol

The sampling protocols for apps and their comparators were identical, except in Stomberg 2012, where the comparator group was sampled four times a day for four days (compared with four times a day for six days in the intervention group).

Additional interventions

Ainsworth 2013 offered training and phone calls during the sampling period, and administered the PANSS semi‐structured interview before and after the sampling period. Newell 2015 offered training on the use of an iPad to all participants, including those who completed the survey questionnaire using pen‐and‐paper. Salaffi 2013 offered on‐site assistance to their participants.

Types of outcome measures

None of the studies measured data accuracy or response rates.

Eleven of the 14 studies measured data equivalence. Bush 2013, Kim 2014 and Salaffi 2013 compared mean scores (either overall scores or construct scores) between delivery modes and also calculated intraclass correlation coefficients (ICCs). Ainsworth 2013, Garcia‐Palacios 2014, Khraishi 2012 and Newell 2015 used the comparison of mean scores as their only measure of equivalence; Sun 2013a and Sun 2013b compared mean scores and calculated the Pearson correlation coefficient; and Schemmann 2013 used the ICC alone. Brunger 2015 calculated correlation coefficients for each of five questions, but the study report did not specify the type of coefficient.
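Mean‐score comparisons, Pearson correlation and the ICC capture different aspects of equivalence. The Python sketch below (standard library only) computes all three for paired scores from the same participants under two delivery modes. It assumes the two‐way random‐effects, absolute‐agreement, single‐measure ICC(2,1) of Shrout and Fleiss; the included studies did not necessarily use this form, and the function names are illustrative.

```python
# Sketch of three equivalence statistics for paired scores from two delivery modes.
from math import sqrt
from statistics import mean
from typing import Sequence


def mean_difference(app: Sequence[float], comparator: Sequence[float]) -> float:
    """Mean of the paired differences (app minus comparator)."""
    return mean(a - c for a, c in zip(app, comparator))


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation: reflects linear association, not agreement."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def icc_2_1(app: Sequence[float], comparator: Sequence[float]) -> float:
    """ICC(2,1): two-way random effects, absolute agreement, single measurement.
    ASSUMPTION: this ICC form is chosen for illustration only."""
    rows = list(zip(app, comparator))  # one row per participant, one column per mode
    n, k = len(rows), 2
    grand = mean(v for row in rows for v in row)
    row_means = [mean(row) for row in rows]
    col_means = [mean(app), mean(comparator)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between participants
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between delivery modes
    ss_total = sum((v - grand) ** 2 for row in rows for v in row)
    ss_error = ss_total - ss_rows - ss_cols                  # residual
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n)
```

For example, with hypothetical paper scores 1, 2, 3, 4 and app scores 2, 3, 4, 5 (every app score one point higher), the mean difference is 1, Pearson's r is 1.0 and ICC(2,1) is 10/13 (about 0.77): a constant shift between modes leaves the correlation perfect but lowers an absolute‐agreement ICC, which is why a high correlation alone does not establish agreement.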

Ainsworth 2013 and Garcia‐Palacios 2014 measured data completeness. Ainsworth 2013 defined it as the mean number of data entries per day, and Garcia‐Palacios 2014 as the difference in the mean number of complete and incomplete records.
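The two completeness definitions can be made concrete with a short calculation. This is a hypothetical Python sketch: the function names, the input layout (entry counts per participant; records as lists of item answers with None for a missing item) and the reading of the Garcia‐Palacios 2014 measure as per‐participant mean counts of complete and incomplete records are our assumptions.

```python
# Hypothetical sketch of two completeness measures; input layouts are assumptions.
from statistics import mean
from typing import Optional, Sequence, Tuple


def mean_daily_entries(entries_per_participant: Sequence[int], days: int) -> float:
    """Mean number of data entries per participant per day."""
    return mean(n / days for n in entries_per_participant)


def mean_record_counts(
    records_per_participant: Sequence[Sequence[Sequence[Optional[float]]]],
) -> Tuple[float, float]:
    """Mean numbers of complete and incomplete records per participant.
    A record is complete when none of its item answers is missing (None)."""
    complete = [sum(all(a is not None for a in rec) for rec in recs)
                for recs in records_per_participant]
    incomplete = [len(recs) - c for recs, c in zip(records_per_participant, complete)]
    return mean(complete), mean(incomplete)
```

Each measure would be computed separately for the app group and the comparator group, and the between‐mode difference taken as app minus comparator.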

Ainsworth 2013, Khraishi 2012 and Salaffi 2013 compared the mean time taken to complete a survey questionnaire using an app with that of the comparator.

Ainsworth 2013 measured adherence to the data collection protocol, defined as the difference between the two delivery modes in the proportion of individuals who completed at least one third of all possible data points. Sigaud 2014 reported measuring the rate of diary completion, and Stomberg 2012 reported measuring response rates (defined as the proportion of individuals 'responding' to the data entry alerts sent or following the pre‐defined data collection protocol). However, we considered these definitions more compatible with our outcome 'adherence to the data collection protocol' and reported them as such.
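The adherence definition used in Ainsworth 2013 reduces to a simple calculation. In this hypothetical Python sketch, the function names and the input layout (number of completed data points per participant) are our assumptions; the one‐third threshold and the between‐mode difference follow the definition given for that study.

```python
# Sketch of adherence: share of participants completing >= 1/3 of possible data points.
from typing import Sequence


def adherence_proportion(completed: Sequence[int], possible: int) -> float:
    """Proportion of participants who completed at least one third of all possible data points."""
    threshold = possible / 3
    return sum(c >= threshold for c in completed) / len(completed)


def adherence_difference(app_completed: Sequence[int], app_possible: int,
                         comp_completed: Sequence[int], comp_possible: int) -> float:
    """Difference in adherence between delivery modes (app minus comparator).
    Possible data points are given per arm, so protocols may differ between arms."""
    return (adherence_proportion(app_completed, app_possible)
            - adherence_proportion(comp_completed, comp_possible))
```

Taking the number of possible data points separately for each arm lets the same calculation handle protocols that differ between arms, such as Stomberg 2012 (4 × 6 = 24 versus 4 × 4 = 16 scheduled entries, assuming exactly four per day), although that study defined adherence differently.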

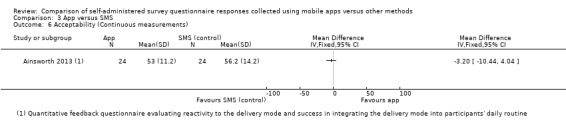

With the exception of Brunger 2015 and Stomberg 2012, all the studies measured acceptability, each using its own custom‐designed questionnaire. Consequently, the definitions of acceptability varied considerably: preference (Ainsworth 2013; Bush 2013; Garcia‐Palacios 2014; Khraishi 2012; Kim 2014; Newell 2015; Salaffi 2013; Schemmann 2013; Sun 2013a; Sun 2013b); ease of use (Ainsworth 2013; Garcia‐Palacios 2014; Khraishi 2012; Lamber 2012; Newell 2015; Schemmann 2013); willingness to use a delivery mode (Kim 2014; Sigaud 2014); satisfaction (Lamber 2012; Newell 2015; Sigaud 2014); effectiveness of system informativeness (Garcia‐Palacios 2014; Lamber 2012; Newell 2015); perceived time taken to complete the survey questionnaire (Garcia‐Palacios 2014; Khraishi 2012); perceived benefit of a delivery mode (Khraishi 2012); perceived usefulness of a delivery mode (Garcia‐Palacios 2014); perceived ability to complete a survey questionnaire (Newell 2015); maximum length of time that participants would be willing to use a delivery mode (Ainsworth 2013); and reactivity to the delivery mode and its successful integration into respondents' daily routine (Ainsworth 2013).

Studies awaiting classification

Ten records are awaiting classification (see the Characteristics of studies awaiting classification table for additional information). For seven of them we need additional information on the model of the device used (Bjorner 2014a; Bjorner 2014b; Burke 2012; Cunha‐Miranda 2014; Nandkeshore 2013; O'Gorman 2014; Schaffeler 2014). In addition, we need to determine if Bjorner 2014a and Bjorner 2014b refer to the same study. We also need additional details on the study design in Benway 2013. Nonetheless, a preliminary analysis of these studies suggests that they would not modify our findings for data equivalence, adherence to sampling protocol and acceptability to respondents.

Moreover, we need the full‐text report of Anand 2015 in order to assess this study against our inclusion and exclusion criteria.

Lastly, although Pfizer 2009 has been completed, no data have yet been published.

Ongoing trials

Khair 2015 is a multi‐centre cluster RCT evaluating whether measures of functional outcome correlate with quality of life measures in boys with haemophilia. Kingston 2014 is an RCT evaluating the feasibility and acceptability (compared to paper) of e‐screening using a wireless‐enabled tablet computer in pregnant and post‐partum women. Additional information can be found in the Characteristics of ongoing studies table.

Excluded studies

Following the initial search, most of the records excluded during full‐text screening were duplicates (54 records). Otherwise, the most common reasons for exclusion were ineligible: i) interventions (39 studies); ii) comparisons and study design (17 studies); iii) study design (16 studies); iv) comparisons, outcomes and study design (11 studies); v) comparisons (11 studies); and vi) participants, interventions, comparisons, outcomes and study design (10 studies). Following the search update, most studies excluded during full‐text screening had ineligible interventions (six studies).

Six studies were excluded during data extraction for the following reasons: the handset was discontinued before 2007 (Woods 2009); data were entered via browsers running on mobile devices, and both feature phones and smartphones were tested in the intervention (Mavletova 2013); data were entered via web browsers running on mobile devices (Depp 2012; Fanning 2014); and the survey was not health‐related (Haver 2011; Wells 2014).

We have listed the additional reasons for exclusion in the Characteristics of excluded studies table.

Risk of bias in included studies

Risk of bias in RCTs

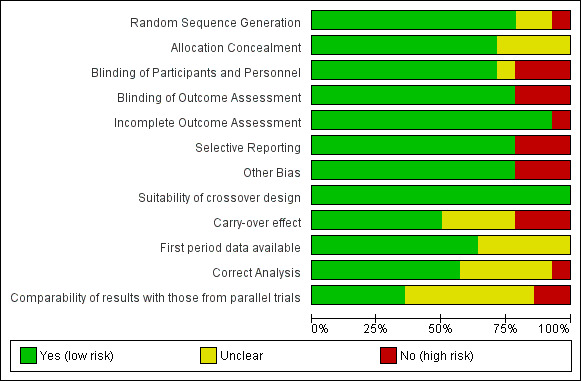

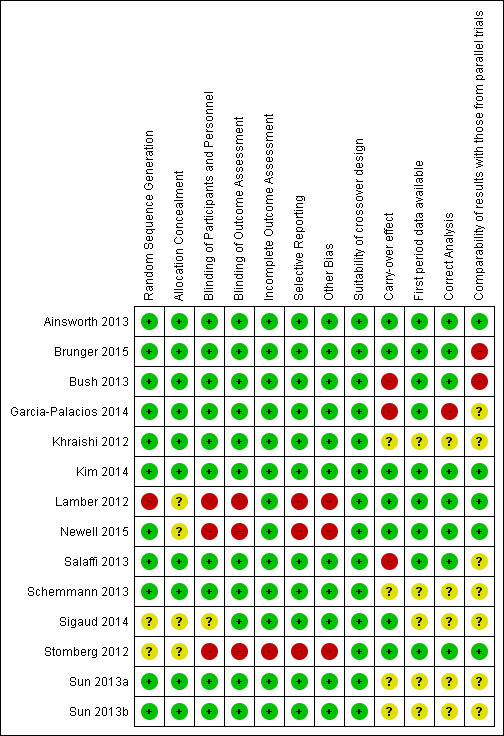

We used Cochrane's 'Risk of bias' assessment tool (Higgins 2011) to assess the risk of bias in Lamber 2012, Newell 2015 and Stomberg 2012 as these were the only studies using an RCT study design (see Figure 3 and Figure 4 for additional information).

Figure 3. Risk of bias graph: review authors' judgements about each risk of bias item presented as percentages across all included studies.

Figure 4. Risk of bias summary: review authors' judgements about each risk of bias item for each included study.

Allocation

Lamber 2012 reported that patients were selected by clinicians and randomly allocated to one of four experimental groups (i.e., mobile, laptop, tablet and pen‐and‐paper), but did not report the method used to generate the random sequence. Moreover, almost half of the participants (47.3%) were allocated to the laptop group. For these reasons, we deemed the risk of selection bias due to random sequence generation as high in this study. Newell 2015 reported conducting computerised randomisation using Qualtrics software; we therefore considered the risk of selection bias due to random sequence generation as low in this study. Participants in Stomberg 2012 were randomly allocated to either the mobile group or the questionnaire group, but the randomisation procedure was not described; we therefore considered the risk of selection bias due to random sequence generation for this study as unclear.

There was insufficient information in the study reports to assess the risk of selection bias due to allocation concealment in Lamber 2012, Newell 2015 and Stomberg 2012 (i.e., unclear risk of bias).

Blinding

We judged the risk of performance bias (due to blinding of participants and personnel) as high for Lamber 2012, Newell 2015 and Stomberg 2012. Although blinding is not possible in these circumstances as the delivery mode is evident, awareness of the delivery modes being offered to other participants could have influenced participants' motivation to complete the self‐administered survey questionnaires. This was evident in Stomberg 2012, as some participants expressed disappointment at being allocated to the paper questionnaire group. Moreover, all the participants in Newell 2015 received a tutorial on the use of an iPad regardless of the delivery mode they were allocated to.

Similarly, we deemed the risk of detection bias (due to non‐blinding of outcome assessment) as high for all three studies. Although it is unclear from the study reports whether or not outcome assessors were blinded to participant allocation, manual data entry (or calculation of overall scores) for responses collected via paper instruments could have introduced detection bias.

Incomplete outcome data

In Lamber 2012 and Newell 2015, all the participants who were initially enrolled completed the intervention and their data were included in the final statistical analysis. For this reason, we judged the risk of attrition bias (due to incomplete outcome data) in these studies as low. In Stomberg 2012, data from three participants were not included in the final analysis, and some participants did not submit any data. Therefore, we judged the risk of attrition bias in this study as high.

Selective reporting

We judged the risk of reporting bias (due to selective reporting) as high in Lamber 2012, Newell 2015 and Stomberg 2012. Lamber 2012 evaluated the impact of patient profile (both clinical and technological) on the usability of the electronic delivery modes, but only for the laptop group, as "this is the only group where enough samples were collected (to assure reliable results)". Participants in Newell 2015 were randomised before completing each of two survey questionnaires (CES‐D and RFQ). Between the two survey questionnaires, participants were asked to complete a clarity/confidence survey questionnaire that was used to assess the acceptability to respondents of the delivery mode. For the analysis of this outcome, however, only participants who completed both survey questionnaires (i.e., CES‐D and RFQ) using the same delivery mode were included. In addition, only participants recruited from the second community were asked to complete the BRIEF health literacy scale and other survey format items. Stomberg 2012 considered response rate as one of their outcomes; however, it was measured as compliance with the original data collection protocol and reported on a day‐by‐day basis. Moreover, the study authors attempted to compare overall pain scores, sometimes by type of surgery performed and sometimes by delivery mode, but the lack of appropriate tables and figures makes it difficult to identify which differences were significant.

Other potential sources of bias

We assessed the risk of other bias as high for Lamber 2012, Newell 2015 and Stomberg 2012. Lamber 2012 did not report the standard deviation (SD) of the usability scores. All the participants in Newell 2015 received a tutorial on how to use an iPad regardless of the delivery mode to which they were allocated, which could have acted as an intervention in its own right. In addition, participants recruited from the second community used an iPad to complete the BRIEF health literacy scale and other survey format items. In Stomberg 2012, participants allocated to the intervention group received training on both pain management and the use of the mobile app; as a result, they could have been more engaged than those assigned to the control group. Finally, the sampling period in this study differed between the two experimental groups (six days for the intervention group and four days for the control group).

Risk of bias in crossover studies

We assessed the risk of bias in Ainsworth 2013, Brunger 2015, Bush 2013, Garcia‐Palacios 2014, Khraishi 2012, Kim 2014, Salaffi 2013, Schemmann 2013, Sigaud 2014, Sun 2013a and Sun 2013b following the recommendations of the Cochrane Handbook for Systematic Reviews of Interventions (Higgins 2011) for crossover trials (see Figure 3 and Figure 4 for additional information).

Suitability of the crossover design

Crossover trials are one of the recommended study designs for assessing data equivalence between alternative delivery modes of the same self‐reported instrument (Coons 2009). For this reason, we judged the risk of bias in this domain as low for all the included studies using this design.

Carry‐over effect