Abstract

A color-encoded single-shot computer-generated Moiré profilometry (CSCGMP) is proposed. Two sinusoidal gratings with a π phase difference are encoded in the red and blue channels, respectively, to form a composite color grating. When this composite color grating is projected onto the measured object, the corresponding color deformed pattern is captured, and two deformed patterns with a π phase difference are separated from its red and blue components. After normalization and subtraction, the AC component of the two separated deformed patterns is extracted. Multiplying this AC component by each of the two AC components of the reference-plane fringe patterns with a π/2 phase difference, which are prepared and saved on the computer in advance, yields two computer-generated Moiré fringes that represent the sine and cosine of the phase modulated by the height of the object relative to the reference plane. The 3D shape of the measured object can then be reconstructed as in standard computer-generated Moiré profilometry. Both simulation and experimental results show the feasibility and validity of the proposed method. Because it is single-shot, it has potential for real-time 3D measurement.

Subject terms: Optics and photonics, Optical techniques, Engineering, Electrical and electronic engineering

Introduction

Nowadays, three-dimensional (3D) measurement1–6 is widely applied in fields such as reverse engineering7,8, 3D printing9, medical treatment10, and precision instrument testing11. 3D measurements can be classified as contact (touchable) and non-contact (untouchable) measurements. Because non-contact measurements do not damage the object, allow portable setups, and offer fast measuring speed12–15, they have attracted much attention.

Among these non-contact measurements, Fourier-transform profilometry (FTP)16, proposed by Takeda, is suitable for fast measurement. However, because the wrapped phase is obtained by filtering out one of the two first-order spectra at the carrier frequency, which contain the 3D information of the measured object, FTP has difficulty achieving high accuracy. Several improved methods have been proposed to address this17–20. Phase measuring profilometry (PMP)21, proposed by Srinivasan, achieves higher accuracy by using several shots. To achieve real-time measurement while retaining the advantages of PMP, a single-shot color phase-shifting technique (CPST)22,23 was proposed, in which three phase-shifting sinusoidal gratings are encoded in the red, green, and blue channels to form the projected color grating. A composite grating phase measuring profilometry (CGPMP) was then proposed24, in which three phase-shifting sinusoidal gratings are modulated by three orthogonal carrier gratings with different frequencies to form the projected composite grating. Although this method can realize real-time measurement, its dynamic range is limited because six or more gratings share at most 256 gray levels. Moiré profilometry (MP) was proposed by Takasaki as early as 197025, but its development has been limited because it relies on analyzing the Moiré fringes formed by two overlapped gratings, and its optical setup is more complex than that of FTP or PMP. Recently, with the development of computer technology, computer-generated Moiré profilometry (CGMP)26 was proposed. It captures only one deformed pattern instead of directly generating and capturing Moiré fringes as MP does; the Moiré fringes are generated by the computer. It offers real-time measurement like FTP and relatively high precision like PMP.
However, when the zero-order spectrum overlaps the first-order spectrum in the captured deformed pattern, the measuring accuracy of CGMP may be limited. To achieve higher accuracy, high precision computer-generated Moiré profilometry (HCGMP)27 was proposed. However, its single-shot capability is compromised to some extent because two deformed patterns must be captured for each measurement.

To solve this problem, a color-encoded single-shot computer-generated Moiré profilometry (CSCGMP) is proposed. In this method, a composite color grating is composed of a sinusoidal grating encoded in the red channel and a sinusoidal grating with a π phase difference encoded in the blue channel. When this composite color grating is projected onto the measured object, only a single color deformed pattern needs to be captured. The AC component of the color deformed pattern is then extracted by color separation and normalization rather than by spectrum filtering, which improves the 3D measuring accuracy.

Principle

The setup of CSCGMP is the same as that of CGMP, as shown in Fig. 1. It is composed of a CCD camera, a projector (DLP), and a computer (PC).

Figure 1.

The setup of CSCGMP.

Before measuring, preparation work is carried out. First, four gray-scale sinusoidal gratings, each shifted by π/2 relative to the previous one, are projected onto the reference plane. The corresponding four gray-scale sinusoidal patterns modulated by the reference plane are captured and can be expressed as:

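$$I_0(x,y) = R(x,y)\left\{A + B\cos\left[\phi_0(x,y)\right]\right\} \tag{1}$$

$$I_1(x,y) = R(x,y)\left\{A + B\cos\left[\phi_0(x,y) + \frac{\pi}{2}\right]\right\} \tag{2}$$

$$I_2(x,y) = R(x,y)\left\{A + B\cos\left[\phi_0(x,y) + \pi\right]\right\} \tag{3}$$

$$I_3(x,y) = R(x,y)\left\{A + B\cos\left[\phi_0(x,y) + \frac{3\pi}{2}\right]\right\} \tag{4}$$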

where R(x, y) stands for the reflectance of the reference plane, A stands for the background light intensity, B reflects the fringe contrast, and ϕ0(x, y) represents the phase distribution modulated by the reference plane.

To remove the background light intensity, I2(x, y) is subtracted from I0(x, y), and I3(x, y) is subtracted from I1(x, y):

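$$I_{\cos}(x,y) = I_0(x,y) - I_2(x,y) = 2BR(x,y)\cos\left[\phi_0(x,y)\right] \tag{5}$$

$$I_{\sin}(x,y) = I_1(x,y) - I_3(x,y) = -2BR(x,y)\sin\left[\phi_0(x,y)\right] \tag{6}$$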

where Icos(x, y) and Isin(x, y) are the AC components of I0(x, y) and I1(x, y), respectively, up to a factor of 2. They are stored on the computer in advance.
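The preparation step reduces to two pixel-wise subtractions. The following NumPy sketch assumes the four reference patterns follow I_n(x, y) = R(x, y)[A + B cos(ϕ0(x, y) + nπ/2)] for n = 0, 1, 2, 3; the function name `reference_ac_components` and the synthetic values of R, A, B and the 16-pixel carrier period are hypothetical, chosen only for illustration.

```python
import numpy as np

def reference_ac_components(I0, I1, I2, I3):
    """Return (I_cos, I_sin) from four reference-plane patterns shifted by pi/2.

    Assumes I_n = R * (A + B * cos(phi0 + n * pi / 2)), so that
    I0 - I2 = 2*B*R*cos(phi0) and I1 - I3 = -2*B*R*sin(phi0).
    """
    I_cos = np.asarray(I0, dtype=float) - np.asarray(I2, dtype=float)
    I_sin = np.asarray(I1, dtype=float) - np.asarray(I3, dtype=float)
    return I_cos, I_sin

# Synthetic reference plane (hypothetical R, A, B and a 16-pixel carrier period).
x = np.arange(512)
phi0 = 2 * np.pi * x / 16.0
R, A, B = 0.8, 120.0, 100.0
patterns = [R * (A + B * np.cos(phi0 + n * np.pi / 2)) for n in range(4)]
I_cos, I_sin = reference_ac_components(*patterns)
```

Under this convention I_cos carries cos ϕ0 and I_sin carries −sin ϕ0; shifting the patterns in the opposite direction only flips the sign of I_sin, which flips the sign of the recovered phase.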

Next, a composite color grating is designed and also stored on the computer in advance: two sinusoidal gratings with a π phase difference between them are encoded in the red channel and the blue channel, respectively. The red and blue channels are chosen because of the spectral coupling curve of the CCD camera shown in Fig. 2, which shows that the color coupling between the blue channel and the red channel is the smallest.
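A minimal sketch of how such a composite grating could be generated as an 8-bit RGB image, assuming vertical fringes whose intensity varies along x. The function name `composite_color_grating`, the 16-pixel period, the 768 × 1024 resolution and the full-range bias and amplitude are illustrative assumptions, not parameters reported here; the green channel is left at zero.

```python
import numpy as np

def composite_color_grating(height, width, period, bias=127.5, amp=127.5):
    """8-bit RGB grating: red = cos(phase), blue = cos(phase + pi), green = 0.

    period (pixels), bias and amp are illustrative assumptions.
    """
    phase = 2 * np.pi * np.arange(width) / period
    red = np.clip(np.round(bias + amp * np.cos(phase)), 0, 255).astype(np.uint8)
    blue = np.clip(np.round(bias + amp * np.cos(phase + np.pi)), 0, 255).astype(np.uint8)
    img = np.zeros((height, width, 3), dtype=np.uint8)
    img[:, :, 0] = red      # broadcast the 1D profile down every row
    img[:, :, 2] = blue     # green channel (index 1) stays 0
    return img

grating = composite_color_grating(768, 1024, period=16)
```

Because the two channels are in antiphase, their sum is constant up to rounding, which is what later lets the background cancel when the normalized channels are subtracted.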

Figure 2.

Spectral coupling curve of CCD.

During measurement, when the designed composite color grating is projected onto the measured object, the corresponding color deformed pattern, modulated by both the measured object and the reference plane, is captured. The two deformed patterns separated from the red and blue channels of the captured color deformed pattern can be expressed as:

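$$I_r(x,y) = R_r(x,y)\left\{A + B\cos\left[\phi(x,y)\right]\right\} \tag{7}$$

$$I_b(x,y) = R_b(x,y)\left\{A + B\cos\left[\phi(x,y) + \pi\right]\right\} \tag{8}$$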

where Rr(x, y) represents the red-light reflectance of the measured object surface, Rb(x, y) represents the blue-light reflectance of the object surface, A stands for the background light intensity, B reflects the fringe contrast, and ϕ(x, y) stands for the phase distribution modulated by both the measured object and the reference plane.

As Fig. 2 shows, the CCD responds differently to red and blue light, so ARr(x, y) differs from ARb(x, y), and BRr(x, y) differs from BRb(x, y). The terms BRr(x, y) and BRb(x, y), called the fringe modulations, are obtained by filtering as follows:

$$M_r(x,y) = BR_r(x,y) \tag{9}$$

$$M_b(x,y) = BR_b(x,y) \tag{10}$$
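The text does not spell out the filter used to obtain the fringe modulation. One common approach, shown here as an assumption rather than as the authors' exact procedure, is to keep only the +1 spectral order around the carrier along each row: the inverse transform is then (BR/2)·exp(iϕ), so doubling its magnitude gives BR(x, y). The function name `fringe_modulation`, the rectangular window and its half-width are hypothetical.

```python
import numpy as np

def fringe_modulation(I, carrier_bin, half_width):
    """Estimate B*R(x, y) row by row by isolating the +1 spectral order along x.

    carrier_bin: FFT bin of the carrier along x (assumed known).
    half_width: half-width in bins of a rectangular band-pass window (assumed).
    The kept +1 order equals (B*R/2)*exp(i*phi), so the modulation is 2*|.|.
    """
    I = np.asarray(I, dtype=float)
    k = np.arange(I.shape[-1])
    window = np.abs(k - carrier_bin) <= half_width   # positive-frequency band only
    analytic = np.fft.ifft(np.fft.fft(I, axis=-1) * window, axis=-1)
    return 2.0 * np.abs(analytic)

# Synthetic red channel: smoothly varying reflectance, carrier at bin 16 of 256.
x = np.arange(256)
R_r = 0.6 + 0.2 * np.cos(2 * np.pi * x / 256)
phi = 2 * np.pi * 16 * x / 256 + 0.3
I_r = np.tile(R_r * (100.0 + 80.0 * np.cos(phi)), (4, 1))
M_r = fringe_modulation(I_r, carrier_bin=16, half_width=4)
```

The window must be wide enough to pass the reflectance variation riding on the carrier but narrow enough to exclude the background near bin 0; the same function applies unchanged to the blue channel.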

Because the modulation in shadow areas is close to zero, a binary mask is generated by thresholding the modulation with the Otsu method28, which avoids errors caused by shadows.
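The Otsu threshold is the value that maximizes the between-class variance of the modulation histogram, which separates low-modulation shadow pixels from fringe-covered pixels. Below is a plain NumPy sketch of the standard Otsu method; the function names `otsu_threshold` and `shadow_mask` and the 256-bin histogram are assumptions, and the implementation in ref. 28 may differ in detail.

```python
import numpy as np

def otsu_threshold(values, bins=256):
    """Threshold maximizing the between-class variance of the histogram (Otsu)."""
    hist, edges = np.histogram(np.ravel(values), bins=bins)
    centers = 0.5 * (edges[:-1] + edges[1:])
    p = hist / hist.sum()
    w0 = np.cumsum(p)                 # class-0 weight for a split after each bin
    w1 = 1.0 - w0
    m0 = np.cumsum(p * centers)       # unnormalized class-0 mean
    m_total = m0[-1]
    with np.errstate(divide="ignore", invalid="ignore"):
        between = w0 * w1 * (m0 / w0 - (m_total - m0) / w1) ** 2
    between = np.nan_to_num(between, nan=0.0, posinf=0.0, neginf=0.0)
    return edges[np.argmax(between) + 1]   # upper edge of the last class-0 bin

def shadow_mask(modulation):
    """True where the fringe modulation is above the Otsu threshold (non-shadow)."""
    return np.asarray(modulation) > otsu_threshold(modulation)

# Synthetic modulation map: a dim shadow cluster and a bright fringe cluster.
modulation = np.concatenate([2.0 + np.linspace(0.0, 1.0, 100),
                             80.0 + np.linspace(0.0, 5.0, 100)]).reshape(10, 20)
mask = shadow_mask(modulation)
```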

After applying the mask, the two separated deformed patterns are normalized as follows:

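$$I_r'(x,y) = \frac{I_r(x,y)}{BR_r(x,y)} = \frac{A}{B} + \cos\left[\phi(x,y)\right] \tag{11}$$

$$I_b'(x,y) = \frac{I_b(x,y)}{BR_b(x,y)} = \frac{A}{B} - \cos\left[\phi(x,y)\right] \tag{12}$$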

It can be seen from Eqs. (11) and (12) that the normalized red and blue patterns share the same background term and differ in phase by π, so the AC component of the two separated deformed patterns can be extracted by subtracting them:

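$$I_{AC}(x,y) = \frac{1}{2}\left[\frac{I_r(x,y)}{BR_r(x,y)} - \frac{I_b(x,y)}{BR_b(x,y)}\right] = \cos\left[\phi(x,y)\right] \tag{13}$$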
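Normalization and subtraction take only a few lines. This sketch assumes the model of Eqs. (7) and (8) (the same A/B ratio in both channels) and that the modulations BRr and BRb have already been estimated; the function name `extract_ac` and the small `eps` guard against division by zero are hypothetical additions. Masked (shadow) pixels are simply set to zero.

```python
import numpy as np

def extract_ac(I_r, I_b, M_r, M_b, mask, eps=1e-6):
    """AC component via normalization and subtraction of the red/blue patterns.

    With I_r = R_r(A + B cos phi) and I_b = R_b(A - B cos phi), dividing by
    M_r = B R_r and M_b = B R_b gives A/B + cos phi and A/B - cos phi;
    half their difference is cos phi. Shadow pixels (mask False) are set to 0.
    """
    Ir_n = I_r / np.maximum(M_r, eps)   # eps avoids division by ~0 in shadows
    Ib_n = I_b / np.maximum(M_b, eps)
    return np.where(mask, 0.5 * (Ir_n - Ib_n), 0.0)

# Synthetic channels with different reflectances (values are illustrative).
x = np.arange(256)
phi = 2 * np.pi * 16 * x / 256 + 0.5 * np.sin(2 * np.pi * x / 256)
R_r = 0.9 + 0.05 * np.cos(2 * np.pi * x / 256)
R_b = 0.5 + 0.1 * np.sin(2 * np.pi * x / 256)
A, B = 0.4, 0.35
I_r = R_r * (A + B * np.cos(phi))
I_b = R_b * (A + B * np.cos(phi + np.pi))
mask = np.ones_like(phi, dtype=bool)
mask[:10] = False                    # pretend the first 10 pixels are in shadow
I_ac = extract_ac(I_r, I_b, B * R_r, B * R_b, mask)
```

No spectrum filtering is involved: the background cancels pixel by pixel even though the red and blue reflectances differ.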

Multiplying this AC component by each of the two AC components prepared in advance gives two equivalent transmittances of the computer-generated overlapping gratings:

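$$T_0(x,y) = I_{AC}(x,y)\,I_{\cos}(x,y) = BR(x,y)\left\{\cos\left[\phi(x,y)-\phi_0(x,y)\right] + \cos\left[\phi(x,y)+\phi_0(x,y)\right]\right\} \tag{14}$$

$$T_{90}(x,y) = I_{AC}(x,y)\,I_{\sin}(x,y) = BR(x,y)\left\{\sin\left[\phi(x,y)-\phi_0(x,y)\right] - \sin\left[\phi(x,y)+\phi_0(x,y)\right]\right\} \tag{15}$$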

The two computer-generated Moiré fringes are the low-frequency (near-DC) components of these two equivalent transmittances, which are extracted with a low-pass filter. The computer-generated Moiré fringes are:

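$$\mathrm{Moir\acute{e}}_{0}(x,y) = BR(x,y)\cos\left[\phi(x,y)-\phi_0(x,y)\right] \tag{16}$$

$$\mathrm{Moir\acute{e}}_{90}(x,y) = BR(x,y)\sin\left[\phi(x,y)-\phi_0(x,y)\right] \tag{17}$$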
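The Moiré generation step multiplies the object AC term by the two stored reference AC terms and keeps only the low-frequency part of each product. The sketch below assumes fringes run along x, uses a rectangular low-pass window in the FFT domain, and treats the cutoff as a free parameter (set at the carrier bin here); the function name `computer_generated_moire` and the window shape are assumptions, and other low-pass filters would also work.

```python
import numpy as np

def computer_generated_moire(I_ac, I_cos, I_sin, cutoff_bin):
    """Return (Moire0, Moire90): low-passed products of I_ac with I_cos and I_sin.

    cutoff_bin: keep FFT bins with |k| <= cutoff_bin along x (assumed; chosen at or
    below the carrier bin so only the difference-phase term of each product survives).
    """
    n = np.shape(I_ac)[-1]
    k = np.fft.fftfreq(n) * n                 # signed bin indices 0, 1, ..., -1
    lowpass = np.abs(k) <= cutoff_bin
    T0 = I_ac * I_cos                         # equivalent transmittance, Eq. (14)
    T90 = I_ac * I_sin                        # equivalent transmittance, Eq. (15)
    moire0 = np.real(np.fft.ifft(np.fft.fft(T0, axis=-1) * lowpass, axis=-1))
    moire90 = np.real(np.fft.ifft(np.fft.fft(T90, axis=-1) * lowpass, axis=-1))
    return moire0, moire90

# Synthetic check: carrier at bin 16 of 256 and a smooth object phase dphi.
x = np.arange(256)
phi0 = 2 * np.pi * 16 * x / 256
dphi = 1.2 * np.sin(2 * np.pi * x / 256)
R, B = 0.8, 100.0
I_cos = 2 * B * R * np.cos(phi0)
I_sin = -2 * B * R * np.sin(phi0)
moire0, moire90 = computer_generated_moire(np.cos(phi0 + dphi), I_cos, I_sin, cutoff_bin=16)
```

The sum-phase terms sit near twice the carrier frequency, so any cutoff between the bandwidth of the object phase and the carrier separates them cleanly in this idealized case.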

Finally, the phase corresponding to the height of the measured object relative to the reference plane is obtained:

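$$\Delta\phi_1(x,y) = \arctan\left[\frac{\mathrm{Moir\acute{e}}_{90}(x,y)}{\mathrm{Moir\acute{e}}_{0}(x,y)}\right] \tag{18}$$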

Because of the arctangent operation, the phase Δϕ1(x, y) is wrapped in (−π, π]. A phase unwrapping method29 is then used to calculate the unwrapped phase Δϕ2(x, y) from the wrapped phase. After the phase-to-height mapping30, the height of the measured object can be obtained:

| 19 |

where a(x, y), b1a(x, y), b1(x, y), b2(x, y), and c(x, y) are the system parameters. They can be obtained by calibration.
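Computing the wrapped phase with a four-quadrant arctangent (`numpy.arctan2`) uses the signs of both Moiré fringes, which a plain arctangent of their ratio would lose. The unwrapping shown here is simple row-wise (Itoh) unwrapping via `numpy.unwrap`, swapped in for illustration: it is not the sparse-depth method of ref. 29 and assumes phase jumps between neighboring pixels stay below π. The calibrated phase-to-height mapping is omitted. The function names are hypothetical.

```python
import numpy as np

def wrapped_phase(moire0, moire90):
    """Four-quadrant arctangent of Moire90 / Moire0; result lies in [-pi, pi]."""
    return np.arctan2(moire90, moire0)

def unwrap_rows(phase_wrapped):
    """Row-wise Itoh unwrapping along x (stand-in; assumes |jumps| < pi between neighbors)."""
    return np.unwrap(phase_wrapped, axis=-1)

# Synthetic check: a Gaussian phase bump of 6 rad (exceeds pi, so wrapping occurs).
x = np.arange(256)
dphi_true = 6.0 * np.exp(-((x - 128) / 30.0) ** 2)
moire0 = 80.0 * np.cos(dphi_true)
moire90 = 80.0 * np.sin(dphi_true)
dphi1 = wrapped_phase(moire0, moire90)   # wrapped, like Eq. (18)
dphi2 = unwrap_rows(dphi1)               # unwrapped
```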

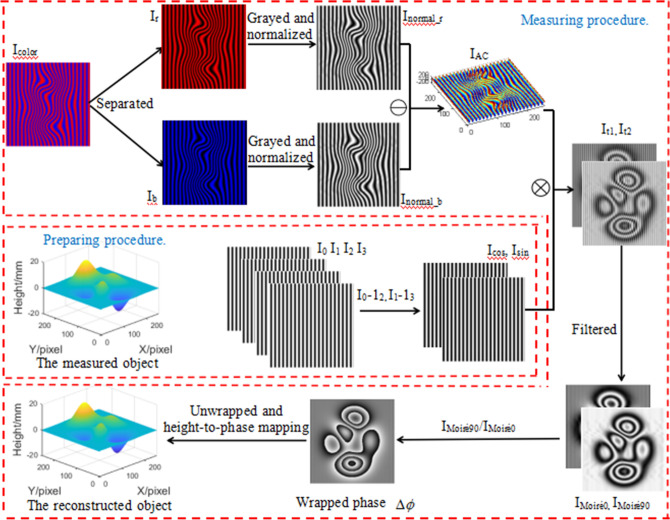

In summary, the flow chart of CSCGMP is shown in Fig. 3.

Figure 3.

The principle flow chart of CSCGMP.

Simulation

To verify the feasibility of CSCGMP, many simulations were performed. Here, a peaks function, shown in Fig. 4a, is used as the measured object. Before measuring, the two AC components of the reference-plane fringe patterns with a π/2 phase difference are prepared in advance. Figure 4b shows the corresponding color deformed pattern, whose phase is modulated by the height of the peaks relative to the reference plane, and Fig. 4c shows the red and blue components of the color deformed pattern after normalization. Figure 4d shows the AC component of the normalized red deformed pattern in Fig. 4c. Figure 4e shows the computer-generated Moiré fringes Moiré0 and Moiré90. Figure 4f shows the reconstructed object, which is rebuilt well by CSCGMP. To evaluate the accuracy of CSCGMP, the error distribution of the reconstructed object is computed, as shown in Fig. 4g. The error of CSCGMP is small: the maximum error is 0.0652 mm, the minimum error is −0.0738 mm, and the root-mean-square (RMS) error is 0.0892 mm.

Figure 4.

Simulation of color-encoded single-shot computer-generated Moiré profilometry: (a) the measured peaks; (b) the color deformed pattern of the peaks; (c) the red and blue components after normalization of the peaks; (d) the AC component IAC of the peaks; (e) the Moiré0 and Moiré90 fringes of the peaks; (f) the reconstructed peaks; (g) the error with CSCGMP.

Experimental results and analysis

To prove the validity of CSCGMP, many 3D measurement experiments were carried out. The setup is shown in Fig. 1; the CCD camera is a DFM 72AUC02, and the DLP projector is a ViewSonic PLED-W200.

In the preparation procedure, the four gray-scale sinusoidal gratings are projected onto the reference plane in sequence, and the corresponding four gray-scale sinusoidal patterns are captured by the CCD camera. The AC components of the reference-plane fringe patterns with a π/2 phase difference are obtained according to Eqs. (5) and (6) and saved on the computer. Then, in the measuring procedure, a star model, shown in Fig. 5a, is placed on the reference plane, and the designed composite color grating is projected onto it. The corresponding color deformed pattern is captured by the CCD camera, as shown in Fig. 5b, and is also saved on the computer. After the red and blue components are separated from the color deformed pattern, the normalized deformed patterns are obtained, as shown in Fig. 5c. The AC component of the normalized red deformed pattern is shown in Fig. 5d, and the two computer-generated Moiré fringes are shown in Fig. 5e. Finally, the 3D shape of the star model is reconstructed, as shown in Fig. 5f, which shows that CSCGMP reconstructs the object well when normalization and the binary mask are applied. Figure 5g shows the star reconstructed without normalization and the binary mask. Comparing Fig. 5f and Fig. 5g shows that introducing normalization and the binary mask largely eliminates the error. Because only one color-encoded composite grating needs to be projected onto the measured object and only one corresponding color deformed pattern needs to be captured, the method retains its single-shot feature and has potential applications in real-time 3D measurement.

Figure 5.

The reconstructed results of the star: (a) the measured star; (b) the color deformed pattern; (c) the normalized red and blue components; (d) the AC component of the red normalized deformed pattern; (e) Moiré0 and Moiré90 fringes; (f) the reconstructed star with normalization and binary mask; (g) the reconstructed star without normalization and binary mask.

To show that the mask in CSCGMP effectively avoids errors caused by shadows, a face model was measured, as shown in Fig. 6.

Figure 6.

The comparison of reconstructed face models before and after using the mask: (a) the measured face; (b) the modulation; (c) the mask; (d) the reconstructed face before using the mask; (e) the reconstructed face after using the mask.

Figure 6a shows the measured face model. Figure 6b shows the modulation obtained by filtering the red component of the captured color deformed pattern, and Fig. 6c shows the binary mask designed with the Otsu method. Figures 6d and 6e show the reconstructed results before and after applying the mask. The face model reconstructed with the mask is more reliable and more accurate.

In addition, a turtle model with more detailed textures was measured, as shown in Fig. 7. Figure 7a shows the measured turtle model. Figure 7b shows the modulation obtained by filtering the red component of the captured color deformed pattern, and Fig. 7c shows the binary mask. Figures 7d and 7e show the reconstructed results before and after applying the mask. The turtle reconstructed with the mask is more reliable and shows more detailed texture information, which further demonstrates the practicality of the proposed method.

Figure 7.

The comparison of reconstructed turtle models before and after using the mask: (a) the measured turtle; (b) the modulation; (c) the mask; (d) the reconstructed turtle before using the mask; (e) the reconstructed turtle after using the mask.

To verify that CSCGMP can be applied to real-time measurement, many dynamic objects were measured with it.

Figure 8 shows the CSCGMP measurement results for the moving hand of one of the authors. The 3D shapes of the dynamic hand are obtained at 42 fps, corresponding to an execution time of 0.0238 s per frame. Three different dynamic states of the moving hand are shown in Fig. 8.

Figure 8.

The measuring results of the dynamic hand at three random states. (a)–(c) The captured color deformed patterns at state1, state2, and state3, and they are integrated in Supplementary Video 1 (Supplementary Video 1, MP4, 1.16 MB); (d)–(f) the reconstructed hands at state1, state2, and state3, and they are integrated in Supplementary Video 2 (Supplementary Video 2, MP4, 346 KB).

In Fig. 8, it can be seen that the dynamic hand is reconstructed well by CSCGMP. Figure 8a–c show the captured color deformed patterns at three random times in real-time measurement, and Fig. 8d–f show the reconstructed hands at corresponding times.

To verify the accuracy of CSCGMP, many comparative experiments were performed. PMP with a large number of phase steps is generally regarded as one of the most accurate optical 3D measurement methods based on structured light projection, so the object reconstructed by 8-step PMP31 is taken as the quasi-true value. Here a plate is measured by FTP, CGMP, CSCGMP, and 8-step PMP, and the reconstruction results are compared in Fig. 9.
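For reference, the wrapped phase of the N-step PMP used as the quasi-true value can be computed with the standard least-squares formula. This sketch assumes patterns of the form I_n = A + B cos(ϕ + 2πn/N) with N ≥ 3; `n_step_phase` is a hypothetical name, and the implementation behind ref. 31 may differ in detail.

```python
import numpy as np

def n_step_phase(patterns):
    """Wrapped phase from N patterns I_n = A + B*cos(phi + 2*pi*n/N), N >= 3."""
    I = np.asarray(patterns, dtype=float)
    N = I.shape[0]
    delta = 2 * np.pi * np.arange(N) / N
    shape = (N,) + (1,) * (I.ndim - 1)            # broadcast shifts over pixel axes
    s = np.sum(I * np.sin(delta).reshape(shape), axis=0)
    c = np.sum(I * np.cos(delta).reshape(shape), axis=0)
    # sum I_n sin(d_n) = -(N/2) B sin(phi), sum I_n cos(d_n) = (N/2) B cos(phi)
    return np.arctan2(-s, c)

# 8-step example on a synthetic phase ramp (A, B and the ramp are illustrative).
phi_true = np.linspace(-3.0, 3.0, 101)
patterns = [100.0 + 50.0 * np.cos(phi_true + 2 * np.pi * n / 8) for n in range(8)]
phi_8 = n_step_phase(patterns)
```

The background A drops out because the shift terms sum to zero over a full cycle, which is why more steps mainly buy robustness to noise and harmonics rather than a different formula.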

Figure 9.

The comparisons of the reconstructed results by the four methods: (a) the measured plate; (b) the corresponding color deformed pattern of the plate; (c) the cutaways at column 500; (d) the dashed rectangular box area in (c).

Figure 9a shows the measured plate. The corresponding color deformed pattern by CSCGMP is shown in Fig. 9b. The cutaways at column 500 of the reconstructed object by the above four methods are shown in Fig. 9c. For the convenience of detail comparisons, the enlarged figure of the dashed rectangular box area in Fig. 9c is shown in Fig. 9d.

It can be seen from Fig. 9d that the plate reconstructed by CSCGMP is much closer to that reconstructed by 8-step PMP. This means that the result reconstructed by CSCGMP is better than that by either FTP or CGMP.

In CGMP, the background light intensity is eliminated by filtering the captured deformed pattern, so the reconstruction may be affected to some extent by spectrum overlapping. FTP also eliminates the background light intensity of the captured deformed pattern by filtering. In CSCGMP, by contrast, the background light intensity of the captured color deformed pattern is eliminated by normalizing the red and blue components and subtracting them, so no filtering is needed for this step. Thus, the object reconstructed by CSCGMP is more accurate.
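The argument above can be checked on an idealized 1D signal. The sketch assumes the model of Eqs. (7) and (8) with a background whose spectrum deliberately reaches into the carrier band (energy at FFT bin 14, carrier at bin 16); all numeric values and the rectangular band-pass window are illustrative assumptions, and the modulations are taken as known. A band-pass filter cannot separate such a background from the fringes, whereas normalizing and subtracting the red and blue components cancels it.

```python
import numpy as np

n = 256
x = np.arange(n)
phi = 2 * np.pi * 16 * x / n                       # carrier at FFT bin 16
A = 100.0 + 30.0 * np.cos(2 * np.pi * 14 * x / n)  # background overlapping the carrier band
B = 50.0
R_r, R_b = 0.9, 0.6                                # assumed channel reflectances
I_r = R_r * (A + B * np.cos(phi))
I_b = R_b * (A + B * np.cos(phi + np.pi))

# (1) Filtering approach: band-pass the red pattern around the carrier, normalize by B*R_r.
k = np.fft.fftfreq(n) * n
band = (np.abs(k) >= 12) & (np.abs(k) <= 20)
ac_filtered = np.real(np.fft.ifft(np.fft.fft(I_r) * band)) / (B * R_r)

# (2) CSCGMP-style: normalize each channel by its modulation, then subtract.
ac_subtracted = 0.5 * (I_r / (B * R_r) - I_b / (B * R_b))

err_filtered = np.max(np.abs(ac_filtered - np.cos(phi)))
err_subtracted = np.max(np.abs(ac_subtracted - np.cos(phi)))
```

In this setup `err_filtered` is about 0.6 while `err_subtracted` stays at floating-point level; real data add noise and color crosstalk, which this toy model ignores.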

Therefore, CSCGMP offers both single-shot acquisition and better accuracy, and it has great potential for real-time 3D measurement.

Conclusion

In this paper, a CSCGMP method is proposed. Only one composite color grating, in which two sinusoidal gratings with a π phase difference are encoded in the red and blue channels, respectively, is projected onto the measured object, and only the corresponding single color deformed pattern needs to be captured. The 3D shape of the measured object can then be reconstructed successfully. Because the AC component of the deformed pattern is purified directly in the spatial domain, spectrum filtering is avoided in this step. Even when the spectra of the DC and AC components overlap, the purification of the AC component is unaffected, so the purification accuracy is higher. Numerical simulation and experimental results verify the effectiveness and practicability of the proposed method. Further comparative experiments show that CSCGMP achieves higher measurement accuracy than FTP and CGMP while retaining its single-shot feature, so it has great potential for real-time 3D measurement. However, because CSCGMP uses a color grating to capture the 3D information of the measured object, measuring colored objects remains a challenge.


Author contributions

H.Z. and Y.C. put forward the idea and designed the schemes of the simulations and experiments. H.Z. and C.L. set up the experimental system, carried out the experiments, processed the resulting data, and analyzed the experimental results. All authors discussed the results in this manuscript and contributed to its writing.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-021-90522-x.

References

- 1. Wang Z, Yang Y. Single-shot three-dimensional reconstruction based on structured light line pattern. Opt. Laser Eng. 2018;106:10–16. doi: 10.1016/j.optlaseng.2018.02.002.

- 2. Zuo C, et al. Phase shifting algorithms for fringe projection profilometry: A review. Opt. Laser Eng. 2018;109:23–59. doi: 10.1016/j.optlaseng.2018.04.019.

- 3. Zhang G, et al. High-speed FPGA-based phase measuring profilometry architecture. Opt. Express. 2017;25(9):10553–10564. doi: 10.1364/OE.25.010553.

- 4. Zhu L, Cao Y, He D, Chen C. Real-time tricolor phase measuring profilometry based on CCD sensitivity calibration. J. Mod. Opt. 2017;64(4):379–387. doi: 10.1080/09500340.2016.1240250.

- 5. Song J, Lau D, Ho Y, Liu K. Automatic look-up table based real-time phase unwrapping for phase measuring profilometry and optimal reference frequency selection. Opt. Express. 2019;27(9):13357–13371. doi: 10.1364/OE.27.013357.

- 6. Wang Z. Robust three-dimensional face reconstruction by one-shot structured light line pattern. Opt. Laser Eng. 2020;124:66.

- 7. Burke J, Bothe T, Osten W, Hess C. Reverse engineering by fringe projection. Proc. SPIE-Int. Soc. Opt. Eng. 2002;4778:312–324.

- 8. Lin C, et al. Fringe projection measurement system in reverse engineering. J. Shanghai Univ. 2005;9(2):66. doi: 10.1007/s11741-005-0069-z.

- 9. Zhang Z, et al. 3D palmprint and hand imaging system based on full-field composite color sinusoidal fringe projection technique. Appl. Opt. 2013;52(25):6138–6145. doi: 10.1364/AO.52.006138.

- 10. Khan D, Shirazi M, Kim M. Single shot laser speckle based on 3D acquisition system for medical applications. Opt. Laser Eng. 2018;105:43–53. doi: 10.1016/j.optlaseng.2018.01.001.

- 11. Xu J, et al. Rapid 3D surface profile measurement of industrial parts using two-level structured light patterns. Opt. Laser Eng. 2011;49(7):907–914. doi: 10.1016/j.optlaseng.2011.02.010.

- 12. Lu Y, Lu R. Using composite sinusoidal patterns in structured-illumination reflectance imaging (SIRI) for enhanced detection of apple bruise. J. Food Eng. 2017;199:54–64. doi: 10.1016/j.jfoodeng.2016.12.008.

- 13. Liu Y, Zhang Q, Zhang H, Wu Z, Chen W. Improve temporal Fourier transform profilometry for complex dynamic three-dimensional shape measurement. Sensors. 2020;20(7):66. doi: 10.3390/s20071808.

- 14. Feng S, et al. Motion-oriented high speed 3-D measurements by binocular fringe projection using binary aperiodic patterns. Opt. Express. 2017;25(2):540–559. doi: 10.1364/OE.25.000540.

- 15. Yin W, et al. High-speed 3D shape measurement using the optimized composite fringe patterns and stereo-assisted structured light system. Opt. Express. 2019;27(3):55. doi: 10.1364/OE.27.002411.

- 16. Takeda M, Ina H, Kobayashi S. Fourier-transform method of fringe-pattern analysis for computer-based topography and interferometry. J. Opt. Soc. Am. 1982;72(1):156–160. doi: 10.1364/JOSA.72.000156.

- 17. Li B, Liu Z, Zhang S. Motion-induced error reduction by combining Fourier transform profilometry with phase-shifting profilometry. Opt. Express. 2016;24(20):23289–23303. doi: 10.1364/OE.24.023289.

- 18. Liu X, Kofman J. Real-time 3D surface-shape measurement using background-modulated modified Fourier transform profilometry with geometry-constraint. Opt. Laser Eng. 2019;115:217–224. doi: 10.1016/j.optlaseng.2018.11.014.

- 19. Zhang H, Zhang Q, Li Y, Liu Y. High speed 3D shape measurement with temporal Fourier transform profilometry. Appl. Sci. 2019;9(19):4123. doi: 10.3390/app9194123.

- 20. Zuo C, et al. Micro Fourier transform profilometry (μFTP): 3D shape measurement at 10,000 frames per second. Opt. Laser Eng. 2018;102:70–91. doi: 10.1016/j.optlaseng.2017.10.013.

- 21. Srinivasan V, Liu H, Halioua M. Automated phase-measuring profilometry of 3-D diffuse objects. Appl. Opt. 1984;23(18):66. doi: 10.1364/AO.23.003105.

- 22. Huang P, Hu Q, Jin F, Chiang F. Color-encoded digital fringe projection technique for high-speed three-dimensional surface contouring. Opt. Eng. 1999;38(6):1065–1071. doi: 10.1117/1.602151.

- 23. Fu G, Cao Y, Wang Y, Wan Y, Wang L. Dynamic phase measuring profilometry based on tricolor binary fringe encoding combined time-division multiplexing. Appl. Sci. 2019;9(4):66. doi: 10.3390/app9040813.

- 24. Guan C, Hassebrook L, Lau D. Composite structured light pattern for three-dimensional video. Opt. Express. 2003;11(5):406–417. doi: 10.1364/OE.11.000406.

- 25. Takasaki H. Moiré topography. Appl. Opt. 1970;9(6):1467–1472. doi: 10.1364/AO.9.001467.

- 26. Li C, et al. Computer-generated Moiré profilometry. Opt. Express. 2017;25(22):26815–26824. doi: 10.1364/OE.25.026815.

- 27. Li C, et al. High precision computer-generated moiré profilometry. Sci. Rep. 2019;9(1):7804. doi: 10.1038/s41598-019-44186-3.

- 28. Peng K, Cao Y, Wu Y, Xiao Y. A new pixel matching method using the modulation of shadow areas in online 3D measurement. Opt. Laser Eng. 2013;51(9):1078–1084. doi: 10.1016/j.optlaseng.2013.03.008.

- 29. Wang H, Wu C, Jia T, Li D. A phase unwrapping method based on sparse depth for depth perception. Opt. Laser Eng. 2018;109:60–67. doi: 10.1016/j.optlaseng.2018.05.001.

- 30. Ma Q, et al. Intrinsic feature revelation of phase-to-height mapping in phase measuring profilometry. Opt. Laser Eng. 2018;108:46–52. doi: 10.1016/j.optlastec.2018.06.049.

- 31. Zhang S. Recent progresses on real-time 3D shape measurement using digital fringe projection techniques. Opt. Laser Eng. 2010;48(2):149–158. doi: 10.1016/j.optlaseng.2009.03.008.
