Abstract

Many Americans fail to get life-saving vaccines each year, and the availability of a vaccine for COVID-19 makes the challenge of encouraging vaccination more urgent than ever. We present a large field experiment (N = 47,306) testing 19 nudges delivered to patients via text message and designed to boost adoption of the influenza vaccine. Our findings suggest that text messages sent prior to a primary care visit can boost vaccination rates by an average of 5% (2.1 percentage points). Overall, interventions performed better when they were 1) framed as reminders to get flu shots that were already reserved for the patient and 2) congruent with the sort of communications patients expected to receive from their healthcare provider (i.e., not surprising, casual, or interactive). The best-performing intervention in our study reminded patients twice to get their flu shot at their upcoming doctor’s appointment and indicated it was reserved for them. This successful script could be used as a template for campaigns to encourage the adoption of life-saving vaccines, including against COVID-19.

Keywords: vaccination, COVID-19, nudge, influenza, field experiment

According to a recent poll by the Pew Research Center, only 60% of Americans plan to get a COVID-19 vaccine (1). To make matters worse, past research suggests that many who say they intend to get vaccinated will not follow through (2). Experts have estimated that to reach herd immunity, 60–90% of Americans must be inoculated against the novel coronavirus (3–5). Evidence-based strategies that can be rapidly deployed at scale to encourage vaccination are urgently needed.

Although COVID-19 differs from the flu in many ways, both are deadly respiratory diseases with an available vaccine that many Americans choose not to get. The Centers for Disease Control and Prevention recommends that every American over 6 mo of age receive a flu shot (6) because inoculation typically reduces the chances of contracting the flu by at least 50% (7). However, less than half of Americans were vaccinated during the 2019–2020 influenza season (8), and an estimated 35,000 died from the flu (9).

It may be possible to move the needle on vaccination against the flu (and, hopefully, COVID-19 as well) with simple, low-cost nudges (10). For instance, we know that prompting people to consider and jot down the exact date and time when they will get a flu shot at a workplace clinic makes vaccination more likely (11); that defaulting people into vaccination appointments is effective (12); that mailings designed to leverage behavioral science insights can increase immunization (13); and that simply reminding high-risk individuals to get vaccinated increases inoculation rates (14).

In this paper, we test 19 different nudges delivered to patients via text message, all designed to boost adoption of the flu vaccine.

Materials and Methods

To identify whether and how text messaging interventions could be used to boost vaccination rates at routine primary care visits, we ran a megastudy—a field experiment in which many interventions developed by different teams of scientists were tested in the same population on the same outcome.

We conducted our study in fall 2020 in partnership with two large health systems in the Northeastern United States: Penn Medicine and Geisinger Health. We included all patients with new or routine (nonsick) primary care appointments at Penn Medicine between September 24, 2020, and December 31, 2020, and at Geisinger Health between September 28, 2020, and December 31, 2020, who met the following eligibility criteria: 1) they had a cell phone number recorded in their electronic health record; 2) they had not opted out of receiving SMS appointment reminders from their healthcare provider or asked not to be contacted for research purposes; 3) they did not have a documented allergy or adverse reaction to the flu vaccine; and 4) they had not yet received a flu shot in 2020 according to their electronic health record.*

Twenty-six behavioral scientists worked in small teams to generate 19 different text messaging protocols. Protocols varied the contents and/or timing of up to two sets of text reminders to get a flu shot sent from the patient’s healthcare provider in the 3 d preceding the patient’s appointment. All intervention message content is included in SI Appendix.

This research was approved by the Institutional Review Board (IRB) at the University of Pennsylvania; the IRB granted a waiver of consent for this research. No identifying information about study participants was shared with the researchers.

We preregistered our megastudy’s design and analysis plan (1: https://aspredicted.org/blind.php?x=sq23yd, 2: https://aspredicted.org/blind.php?x=9zr9nu)† and then randomized a total of 47,306 patients to one of the 19 experimental conditions designed by team scientists (Nmin = 2,295, Nmean = 2,365, Nmax = 2,397) or a usual care control condition in which we did not send patients any text-based reminders to get a flu vaccine (N = 2,389). All patients received standard appointment reminders (the usual care).
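The assignment step can be illustrated with a minimal sketch. It assumes simple randomization with equal probability across the 20 arms (19 interventions plus usual care); the text does not describe the actual allocation procedure, and the function name, arm labels, and seed are hypothetical.

```python
import random
from collections import Counter

N_PATIENTS = 47_306      # total randomized, from the text
N_INTERVENTIONS = 19     # text-message protocols, from the text


def assign_arms(n_patients, n_interventions, seed=0):
    """Assign each patient to one arm uniformly at random (assumed scheme)."""
    rng = random.Random(seed)  # fixed seed so the illustration is reproducible
    arms = ["control"] + [f"intervention_{i}" for i in range(1, n_interventions + 1)]
    return [rng.choice(arms) for _ in range(n_patients)]


assignments = assign_arms(N_PATIENTS, N_INTERVENTIONS)
arm_sizes = Counter(assignments)

# Report the number of arms and the smallest and largest arm sizes.
print(len(arm_sizes), min(arm_sizes.values()), max(arm_sizes.values()))
```

Under equal-probability assignment, each arm is expected to hold about 47,306 / 20 ≈ 2,365 patients, which is in line with the arm sizes reported above.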

As described in SI Appendix, we also hired a separate sample of 2,214 Prolific workers to rate subjective attributes (e.g., casualness) of each of the 19 text messaging protocols, and we classified each protocol on 12 objective attributes (e.g., word count).

Results and Discussion

Patients in our study were an average of 51.9 y of age (SD = 16.3), 43% were male, 70% were white, 47% had been vaccinated in the previous flu season, and 55% were patients at Penn Medicine. As shown in Table S8 in the web appendix (https://osf.io/tucjs/?view_only=c491df37a33840abbdedda4e60176f34), study arms were well-balanced on age, gender, race, health system, and vaccination history (P values from all F tests > 0.05).

Following our preregistration, we evaluated whether participants received a flu shot on the date of their scheduled appointment or in the 3 d leading up to it (i.e., when treatments had begun) using an ordinary least-squares (OLS) regression and pooling data from Penn Medicine and Geisinger. The primary predictors in our regression were 19 indicator variables—one for assignment to each of our study’s 19 experimental conditions (with an indicator omitted for assignment to our study’s usual care control condition). Our preregistered OLS regression included the following control variables: 1) an indicator for being a Penn Medicine patient; 2) patient age; 3) indicators for patient race/ethnicity; 4) indicators for patient gender; 5) an indicator for whether the patient received a flu shot last year; 6) indicators for the type of clinician who saw the patient; and 7) the linear and squared days separating the patient’s target primary care appointment from the start of our study (September 20, 2020, when the first participants were enrolled).
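To make the regression specification concrete, here is a minimal sketch of a linear probability model fit by OLS. Everything in it is illustrative: the data are synthetic, only two hypothetical intervention arms and one control variable (prior-season vaccination) are included, and the helper names are not taken from the study's analysis scripts.

```python
def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def ols(X, y):
    """Return OLS coefficients by solving the normal equations X'X b = X'y."""
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Synthetic cells: (arm, prior_vaccination) -> vaccinated count out of 100 patients.
# These counts are invented for illustration only.
cells = {
    ("control", 0): 30, ("control", 1): 50,
    ("arm_a", 0): 35, ("arm_a", 1): 55,
    ("arm_b", 0): 32, ("arm_b", 1): 52,
}

X, y = [], []
for (arm, prior), vaccinated in cells.items():
    for i in range(100):
        # Columns: intercept, arm_a indicator, arm_b indicator, prior vaccination.
        # The control arm has no indicator, so it is the omitted reference group.
        X.append([1.0, float(arm == "arm_a"), float(arm == "arm_b"), float(prior)])
        y.append(1.0 if i < vaccinated else 0.0)

intercept, effect_a, effect_b, prior_coef = ols(X, y)
print(round(effect_a, 3), round(effect_b, 3))
```

Each treatment coefficient is the estimated change in vaccination probability relative to the omitted control arm, in proportion units; multiplying by 100 gives percentage points.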

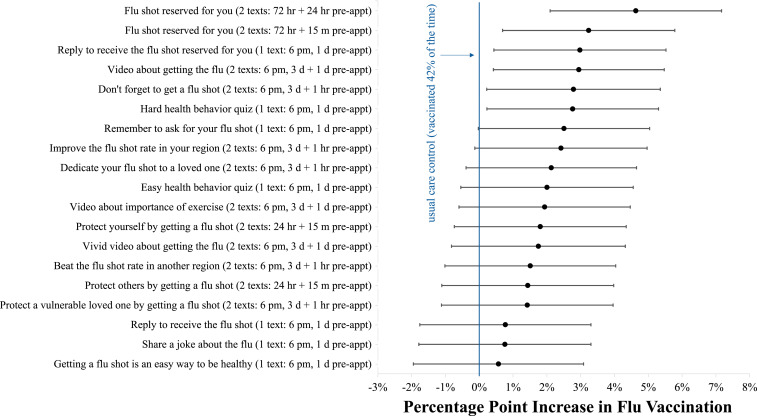

In our usual care control group, 42% of patients received a flu vaccine on the day of their scheduled appointment or in the 3 d before it. As Fig. 1 shows, 6 out of our 19 interventions (32%) produced a statistically significant boost in vaccinations (two-sided unadjusted P values < 0.05), and all of our interventions directionally increased vaccination rates. The 19 treatments boosted vaccination levels by an average of 2.1 percentage points or 5% (P = 0.024), and we cannot reject the null hypothesis that all 19 effects have the same true value (Chi-sq = 21.277, df = 18, P = 0.266). To account for multiple comparisons, we report not only SEs, two-sided P values, and 95% CIs, but also q values (see web appendix at https://osf.io/tucjs/?view_only=c491df37a33840abbdedda4e60176f34). Since each of the six effects significant at α = 0.05 has a q value lower than 0.02, the expected proportion of false positives among estimates at least as extreme as the sixth largest is less than 2% (15). Using the harmonic mean method to compute the meta-analytic P value from our study, we find that the probability of observing the 19 results depicted in Fig. 1 given that they are all true nulls is <0.0055.
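The P value reported for the homogeneity test can be checked from the test statistic alone. The sketch below uses the closed-form chi-square upper-tail probability that holds for even degrees of freedom; the function name is hypothetical, and this is a consistency check on the reported numbers rather than a reproduction of the authors' computation.

```python
import math


def chi2_sf_even_df(x, df):
    """Upper-tail probability P(X > x) for a chi-square variable with even df.

    For df = 2k, the tail equals exp(-x/2) * sum_{i=0}^{k-1} (x/2)^i / i!.
    """
    if df <= 0 or df % 2 != 0:
        raise ValueError("this closed form requires a positive even df")
    half = x / 2.0
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i   # builds (x/2)^i / i! incrementally
        total += term
    return math.exp(-half) * total


# Statistic and degrees of freedom as reported in the text.
p_value = chi2_sf_even_df(21.277, 18)
print(round(p_value, 3))
```

The result should land close to the reported P = 0.266, consistent with the conclusion that equal true effects across the 19 interventions cannot be rejected.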

Fig. 1. Regression-estimated increase in flu vaccinations induced by each of our 19 interventions compared to a usual care control at Penn Medicine and Geisinger. Whiskers depict 95% CIs. As indicated in parentheses, some messages were sent at 6 PM, 1 and/or 3 d before an appointment, while others were sent a predetermined number of hours before an appointment (e.g., 72 h).

The top-performing intervention in our study showed a 4.6 percentage point boost in vaccination (an 11% increase; P < 0.01) at the cost of sending two text messages (less than a dime). Correcting for likely inflation in the largest out of 19 estimates, we calculate a more conservative estimate of the true effect to be a 2.8 percentage point boost in vaccination or a 6.7% increase from baseline (see SI Appendix and web appendix at https://osf.io/tucjs/?view_only=c491df37a33840abbdedda4e60176f34). As shown in Fig. S1 in the web appendix (https://osf.io/tucjs/?view_only=c491df37a33840abbdedda4e60176f34), the first text message in this condition, sent 72 h before the patient’s appointment, noted that “it’s flu season,” “a flu vaccine is available for you,” and “a vaccine reminder” would be sent before the appointment. The second text in this condition, sent 24 h before the appointment, stated simply that “this is a reminder that a flu vaccine has been reserved for your appointment.” This intervention was the top performer among both Penn Medicine and Geisinger patients.
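The relative increases quoted in this section follow from dividing each percentage-point gain by the control-group vaccination rate. Here is a small sketch of that arithmetic, assuming the rounded 42% control rate given in the text (so results match the reported figures only to rounding); the function name is hypothetical.

```python
CONTROL_RATE = 0.42  # usual care vaccination rate reported in the text (rounded)


def relative_increase(pp_gain, baseline=CONTROL_RATE):
    """Convert a percentage-point gain into a percent increase over baseline."""
    return (pp_gain / 100.0) / baseline * 100.0


for label, pp in [
    ("average of 19 interventions", 2.1),
    ("top performer", 4.6),
    ("top performer, inflation-corrected", 2.8),
]:
    print(f"{label}: +{pp} pp = {relative_increase(pp):.1f}% relative increase")
```

Keeping percentage points and percent increases separate matters here: a 4.6 percentage point gain on a 42% baseline is roughly an 11% relative increase, not a 4.6% one.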

Which attributes correlate best with intervention effectiveness? In post-hoc analyses, we found that interventions performed better when they were 1) framed as reminders to get flu shots that were already reserved for the patient (β = 0.41, P = 0.05), and 2) congruent with the sort of communications patients expected to receive from their healthcare provider (i.e., not surprising, casual, or interactive) (β = 0.48, P < 0.03). See SI Appendix and web appendix (https://osf.io/tucjs/?view_only=c491df37a33840abbdedda4e60176f34) for details on how messages were rated and, next, classified using principal-components analysis. Notably, some of the most artful interventions (e.g., one including a joke about spreading the flu told by a dog to a cat and conveyed in picture form) were among the least effective.

In secondary analyses, we examined how treatment effects differed across different subpopulations studied (see web appendix at https://osf.io/tucjs/?view_only=c491df37a33840abbdedda4e60176f34). In general, we found that estimated treatment effects across conditions did not differ significantly whether we looked at patients from Penn Medicine or Geisinger, patients who identified as male or female, patients who were 65+ versus under 65 y old, patients who did or did not receive a flu shot in the 2019–2020 flu season, or patients who had appointments with physicians versus other types of clinicians (all values of P > 0.375). There were some significant differences in treatment effect estimates by patient race, suggesting tailoring communications on this dimension could be valuable, but our attribute analyses yielded nearly identical results for White and non-White patients.

Overall, our findings show nudges sent via text messages to patients prior to a primary care visit and developed by behavioral scientists to encourage vaccine adoption can substantially boost vaccination rates at close to zero marginal cost. Our best-performing message, which increased adoption by an estimated 11%, reminded patients twice to get their flu shot at their upcoming doctor’s appointment and mentioned that a shot was reserved for them. Although the factors influencing the adoption of vaccines for other diseases, including COVID-19, differ in important ways, this successful script could potentially be repurposed.

Acknowledgments

Research reported in this publication was supported by the National Institute on Aging of the National Institutes of Health under Award P30AG034532, the Bill and Melinda Gates Foundation, the Flu Lab, and the Penn Center for Precision Medicine Accelerator Fund. Support for this research was also provided in part by the AKO Foundation, John Alexander, Mark J. Leder, and Warren G. Lichtenstein. We thank Chayce Baldwin for outstanding research assistance, Joseph Simmons for valuable feedback on the manuscript, and Julian Parris for consulting with us on attribute analyses. The content is solely the responsibility of the authors and does not necessarily represent the official views of any of the listed entities.

Footnotes

Competing interest statement: K.G.V. is a part-owner of VAL Health, a behavioral economics consulting firm.

*As preregistered, this analysis consists of data collected through December 31, 2020 (our first study endpoint). However, as noted in our preregistration, we also plan to analyze additional data collected in 2021.

†Note that preregistration 1 makes small updates to preregistration 2, both of which were posted before any data were analyzed.

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.2101165118/-/DCSupplemental.

Data Availability

A web appendix, aggregated data, and analysis scripts have been deposited in the Open Science Framework (https://osf.io/tucjs/?view_only=c491df37a33840abbdedda4e60176f34) (16). Researchers interested in using individual-level data to replicate our results should contact the Behavior Change for Good Initiative at the University of Pennsylvania (bcfg@wharton.upenn.edu) and must sign a standard medical data nondisclosure agreement to access the data on a protected medical server.

References

- 1. Funk C., Tyson A., Intent to get a COVID-19 vaccine rises to 60% as confidence in research and development process increases. Pew Research Center (3 December 2020). https://www.pewresearch.org/science/2020/12/03/intent-to-get-a-covid-19-vaccine-rises-to-60-as-confidence-in-research-and-development-process-increases/. Accessed 6 January 2021.

- 2. Sheeran P., Intention–behavior relations: A conceptual and empirical review. Eur. Rev. Soc. Psychol. 12, 1–36 (2002).

- 3. D’Souza G., Dowdy D., What is herd immunity and how can we achieve it with COVID-19? Johns Hopkins Bloomberg School of Public Health (10 April 2020). https://www.jhsph.edu/covid-19/articles/achieving-herd-immunity-with-covid19.html. Accessed 6 January 2021.

- 4. Nayer Z., On the road to herd immunity, vaccination speeds the journey. Stat, 17 December 2020. https://www.statnews.com/2020/12/17/calculating-our-way-to-herd-immunity/. Accessed 6 January 2021.

- 5. Allen J., Fauci says herd immunity could require nearly 90% to get coronavirus vaccine. Reuters, 24 December 2020. https://www.reuters.com/article/health-coronavirus-usa/fauci-says-herd-immunity-could-require-nearly-90-to-get-coronavirus-vaccine-idUSL1N2J411V. Accessed 6 January 2021.

- 6. US Centers for Disease Control and Prevention, Who needs a flu vaccine and when (22 October 2020). https://www.cdc.gov/flu/prevent/vaccinations.htm. Accessed 6 January 2021.

- 7. US Centers for Disease Control and Prevention, Vaccine effectiveness: How well do the Flu vaccines work? (16 December 2020). https://www.cdc.gov/flu/vaccines-work/vaccineeffect.htm. Accessed 6 January 2021.

- 8. US Centers for Disease Control and Prevention, Flu vaccination coverage, United States, 2019–20 influenza season (1 October 2020). https://www.cdc.gov/flu/fluvaxview/coverage-1920estimates.htm. Accessed 6 January 2021.

- 9. US Centers for Disease Control and Prevention, 2019–2020 U.S. flu season: Preliminary in-season burden estimates (3 December 2020). https://www.cdc.gov/flu/about/burden/preliminary-in-season-estimates.htm. Accessed 6 January 2021.

- 10. Benartzi S., et al., Should governments invest more in nudging? Psychol. Sci. 28, 1041–1055 (2017).

- 11. Milkman K. L., Beshears J., Choi J. J., Laibson D., Madrian B. C., Using implementation intentions prompts to enhance influenza vaccination rates. Proc. Natl. Acad. Sci. U.S.A. 108, 10415–10420 (2011).

- 12. Chapman G. B., Li M., Colby H., Yoon H., Opting in vs opting out of influenza vaccination. JAMA 304, 43–44 (2010).

- 13. Yokum D., Lauffenburger J. C., Ghazinouri R., Choudhry N. K., Letters designed with behavioural science increase influenza vaccination in Medicare beneficiaries. Nat. Hum. Behav. 2, 743–749 (2018).

- 14. Regan A. K., Bloomfield L., Peters I., Effler P. V., Randomized controlled trial of text message reminders for increasing influenza vaccination. Ann. Fam. Med. 15, 507–514 (2017).

- 15. Storey J. D., Tibshirani R., Statistical significance for genomewide studies. Proc. Natl. Acad. Sci. U.S.A. 100, 9440–9445 (2003).

- 16. Milkman K. L., et al., Data from “A mega-study of text-based nudges encouraging patients to get vaccinated at an upcoming doctor’s appointment.” Open Science Framework. https://osf.io/tucjs/?view_only=c491df37a33840abbdedda4e60176f34. Deposited 19 April 2021.
