Abstract

Upper gastrointestinal (GI) cancers are the leading cause of cancer-related deaths worldwide. Early identification of precancerous lesions has been shown to minimize the incidence of GI cancers and substantiate the vital role of screening endoscopy. However, unlike GI cancers, precancerous lesions in the upper GI tract can be subtle and difficult to detect. Artificial intelligence techniques, especially deep learning algorithms with convolutional neural networks, might help endoscopists identify the precancerous lesions and reduce interobserver variability. In this review, a systematic literature search was undertaken of the Web of Science, PubMed, Cochrane Library and Embase, with an emphasis on the deep learning-based diagnosis of precancerous lesions in the upper GI tract. The status of deep learning algorithms in upper GI precancerous lesions has been systematically summarized. The challenges and recommendations targeting this field are comprehensively analyzed for future research.

Keywords: Artificial intelligence, Deep learning, Convolutional neural network, Precancerous lesions, Endoscopy

Core Tip: Artificial intelligence techniques, especially deep learning algorithms with convolutional neural networks, have revolutionized upper gastrointestinal endoscopy. In recent years, several deep learning-based artificial intelligence systems have emerged in the gastrointestinal community for endoscopic detection of precancerous lesions. The current review provides an analysis and status of the deep learning-based diagnosis of precancerous lesions in the upper gastrointestinal tract and identifies future challenges and recommendations.

INTRODUCTION

Upper gastrointestinal (GI) cancers, mainly including gastric cancer (8.2% of total cancer deaths) and esophageal cancer (5.3% of total cancer deaths), are the leading cause of cancer-related deaths worldwide[1]. Previous studies have shown that upper GI cancers always go through the stages of precancerous lesions, which can be defined as common conditions associated with a higher risk of developing cancers over time[2-4]. The detection of the precancerous lesions before cancer occurs could significantly reduce morbidity and mortality rates[5,6]. Currently, the main approach for the diagnosis of disorders or issues in the upper GI tract is endoscopy[7,8]. Compared with GI cancers, which usually show typical morphological characteristics, the precancerous lesions often appear in flat mucosa and exhibit few morphological changes. Manual screening through endoscopy is labor-intensive, time-consuming and relies heavily on clinical experience. Computer-assisted diagnosis based on artificial intelligence (AI) can overcome these dilemmas.

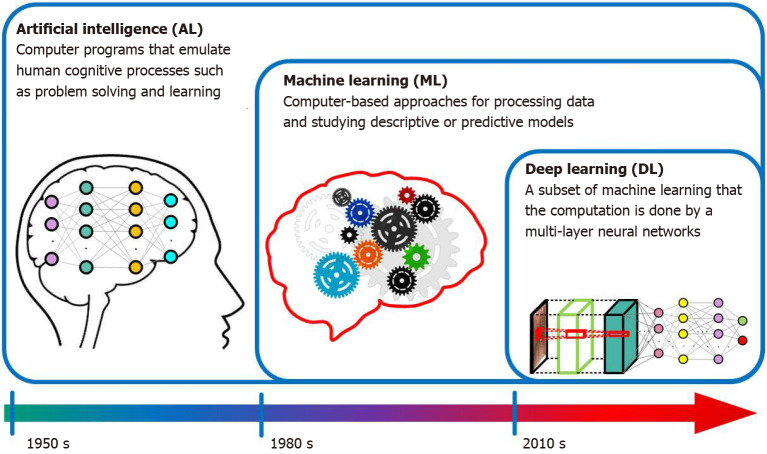

Over the past few decades, AI techniques such as machine learning (ML) and deep learning (DL) have been widely used in endoscopic imaging to improve the diagnostic accuracy and efficiency of various GI lesions[9-13]. The exact definitions of AI, ML and DL are often confused by physicians. AI, ML and DL are overlapping disciplines (Figure 1). AI sits at the top of a hierarchy that encompasses ML and DL; it describes computerized solutions to problems of human cognition, as defined by McCarthy in 1956[14]. ML is a subset of AI in which algorithms can execute complex tasks, but it requires handcrafted feature extraction. ML originated around the 1980s and focuses on patterns and inference[15]. DL is a subset of ML that became feasible in the 2010s; it focuses specifically on deep neural networks. A convolutional neural network (CNN) is the primary DL algorithm for image processing[16,17].

Figure 1.

Infographic with icons and timeline for artificial intelligence, machine learning and deep learning.

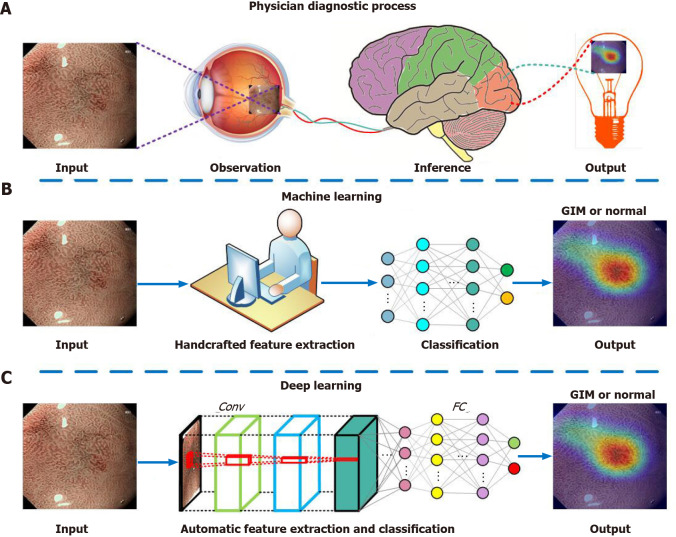

The diagnostic process of an AI model is similar to that of the human brain. We take our previous research as an example to illustrate the diagnostic process of ML, DL and human experts. When an input image with gastric intestinal metaplasia (GIM), a precancerous lesion of gastric cancer, is fed into the ML system, it usually requires a manual feature extraction step, and the handcrafted features are unable to discern slight variations in the endoscopic image (Figure 2)[15]. Unlike conventional ML algorithms, CNNs can automatically learn representative features from the endoscopic images[17]. When we apply a CNN model to detect GIM, it performs better than conventional ML models and is comparable to experienced endoscopists[18]. For a broad variety of image processing activities in endoscopy, CNNs also show excellent results, and some CNN-based algorithms have been used in clinical practice[19-21]. However, DL, especially CNN, has some limitations. First, DL requires large amounts of data and is prone to overfitting. Second, the diagnostic accuracy of DL relies on the training data, but the clinical data of different types of diseases are always imbalanced, which easily causes diagnostic bias. In addition, a DL model is complex and computationally expensive to train, so most researchers can only use ready-made models.
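One common way to soften the class-imbalance problem mentioned above is to weight each class inversely to its frequency when computing the training loss. The following is only a minimal sketch of that idea and is not taken from any of the reviewed studies; the function name `inverse_frequency_weights` and the label counts are hypothetical.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Return one weight per class, inversely proportional to class frequency.

    Weights are scaled so that a perfectly balanced dataset yields 1.0
    for every class.
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical, illustrative label set: 90 normal images and 10 GIM images.
labels = ["normal"] * 90 + ["GIM"] * 10
weights = inverse_frequency_weights(labels)
print(weights)  # the rare class receives the larger weight
```

With such weights, a misclassified image from the rare class contributes more to the loss than one from the common class, which may reduce the bias toward the majority class.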

Figure 2.

Illustration of the diagnostic process of physician, machine learning and deep learning. A: Physician diagnostic process; B: Machine learning; C: Deep learning. Conv: Convolutional layer; FC: Fully connected layer; GIM: gastric intestinal metaplasia.

Despite the above limitations, DL-based AI systems are revolutionizing GI endoscopy. While there are several surveys on DL for GI cancers[9-13], no specific review on the application of DL in the endoscopic diagnosis of precancerous lesions is available in the literature. Therefore, the performance of DL on gastroenterology is summarized in this review, with an emphasis on the automatic diagnosis of precancerous lesions in the upper GI tract. GI cancers are out of the scope of this review. Specifically, we review the status of intelligent diagnoses of esophageal and gastric precancerous lesions. The challenges and recommendations based on the findings of the review are comprehensively analyzed to advance the field.

DL IN ENDOSCOPIC DETECTION OF PRECANCEROUS LESIONS IN ESOPHAGEAL MUCOSA

Esophageal cancer is the eighth most prevalent form of cancer and the sixth most lethal cancer globally[1]. There are two major subtypes of esophageal cancer: Esophageal squamous cell carcinoma (ESCC) and esophageal adenocarcinoma (EAC)[22]. Esophageal squamous dysplasia (ESD) is believed to be the precancerous lesion of ESCC[23-25], and Barrett’s esophagus (BE) is the identifiable precancerous lesion associated with EAC[4,26]. Endoscopic surveillance is recommended by GI societies to enable early detection of the two precancerous lesions of esophageal cancer[25,27].

However, the current endoscopic diagnosis methods for patients with BE, such as random 4-quadrant biopsy, laser-induced endomicroscopy and image enhanced endoscopy, have drawbacks related to the learning curve, cost, interobserver variability and procedure time[28]. The current standard for identifying ESD is Lugol’s chromoendoscopy, but it shows poor specificity[29]. In addition, iodine staining carries a risk of allergic reactions. To overcome these challenges, DL-based AI systems have been established to help endoscopists identify ESD and BE.

DL in ESD

Low-grade and high-grade intraepithelial neoplasms, collectively referred to as ESD, are regarded as precancerous lesions of ESCC. Early and accurate detection of ESD is essential but challenging[23-25]. DL has been shown to depict ESD reliably in real-time upper endoscopy. Cai et al[30] designed a novel computer-assisted diagnosis system to localize and identify early ESCC, including low-grade and high-grade intraepithelial neoplasia, through real-time white light endoscopy (WLE). The system achieved a sensitivity, specificity and accuracy of 97.8%, 85.4% and 91.4%, respectively. They also demonstrated that the overall diagnostic capability of endoscopists improved when they referred to the results of the system. This research paved the way for the real-time diagnosis of ESD and ESCC. Following this work, Guo et al[31] applied 6473 narrow band (NB) images to train a real-time automated computer-assisted diagnosis system to support non-experts in the detection of ESD and ESCC. The system serves as a “second observer” in an endoscopic examination and achieved a sensitivity of 98.04% and specificity of 95.30% on NB images. The per-frame sensitivity was 96.10% for magnifying narrow band imaging (M-NBI) videos and 60.80% for non-M-NBI videos. The per-lesion sensitivity was 100% in M-NBI videos.
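The performance figures quoted throughout this review (sensitivity, specificity, accuracy, and per-frame vs per-lesion sensitivity) can be made concrete with a short sketch. The code below is illustrative only: the counts and frame labels are hypothetical, and the rule that a lesion counts as detected when at least one of its frames is flagged is an assumption that individual studies may define differently.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Sensitivity, specificity and accuracy from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)   # fraction of diseased cases detected
    specificity = tn / (tn + fp)   # fraction of healthy cases correctly cleared
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    return sensitivity, specificity, accuracy


def per_frame_sensitivity(frame_hits_by_lesion):
    """Fraction of all lesion-containing frames that were flagged."""
    frames = [hit for hits in frame_hits_by_lesion.values() for hit in hits]
    return sum(frames) / len(frames)


def per_lesion_sensitivity(frame_hits_by_lesion):
    """Fraction of lesions flagged in at least one frame (assumed rule)."""
    detected = sum(1 for hits in frame_hits_by_lesion.values() if any(hits))
    return detected / len(frame_hits_by_lesion)


# Hypothetical counts and video frames, for illustration only.
sens, spec, acc = diagnostic_metrics(tp=45, fp=10, tn=40, fn=5)
video = {"lesion_A": [True, True, False, True],
         "lesion_B": [False, True, False, False]}
print(sens, spec, acc)
print(per_frame_sensitivity(video), per_lesion_sensitivity(video))
```

Under this assumed rule, per-lesion sensitivity can be much higher than per-frame sensitivity, because a lesion that is missed in most frames still counts as detected if it is flagged once.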

DL in BE

BE is a disorder in which the lining of the esophagus is damaged by gastric acid. The critical purpose of endoscopic Barrett’s surveillance is early detection of BE-related dysplasia[4,26-28]. Recently, there have been many studies on the DL-based diagnosis of BE, and we review some representative studies. de Groof et al[32] performed one of the first pilot studies to assess the performance of a DL-based system during live endoscopic procedures of patients with or without BE dysplasia. The system demonstrated 90% accuracy, 91% sensitivity and 89% specificity in a per-level analysis. Following up this work, they improved the system using stepwise transfer learning and five independent endoscopy data sets. The enhanced system achieved higher accuracy than non-expert endoscopists and comparable delineation performance[33]. Furthermore, their team also demonstrated the feasibility of a DL-based system for tissue characterization of NBI endoscopy in BE, and the system achieved a promising diagnostic accuracy[34].

Hashimoto et al[35] borrowed from the Inception-ResNet-v2 algorithm to develop a model for real-time classification of early esophageal neoplasia in BE, and they also applied YOLO-v2 to draw localization boxes around regions classified as dysplasia. For detection of neoplasia, the system achieved a sensitivity of 96.4%, specificity of 94.2% and accuracy of 95.4%. Hussein et al[36] built a CNN model to diagnose dysplastic BE mucosa with a sensitivity of 88.3% and specificity of 80.0%. The results preliminarily indicated that the diagnostic performance of the CNN model was close to that of experienced endoscopists. Ebigbo et al[37] exploited the use of a CNN-based system to classify and segment cancer in BE. The system achieved an accuracy of 89.9% in 14 patients with neoplastic BE.

DL has also achieved excellent results in distinguishing multiple types of esophageal lesions, including BE. Liu et al[38] explored the use of a CNN model to distinguish esophageal cancers from BE. The model was trained and evaluated on 1272 images captured by WLE. After pre-processing and data augmentation, the average sensitivity, specificity and accuracy of the CNN model were 94.23%, 94.67% and 85.83%, respectively. Wu et al[39] developed a CNN-based framework named ELNet for automatic esophageal lesion (i.e. EAC, BE and inflammation) classification and segmentation. ELNet achieved a classification sensitivity of 90.34%, specificity of 97.18% and accuracy of 96.28%. The segmentation sensitivity, specificity and accuracy were 80.18%, 96.55% and 94.62%, respectively. A similar study was reported by Ghatwary et al[40], who applied a CNN algorithm to detect BE, EAC and ESCC from endoscopic videos and obtained a high sensitivity of 93.7% and a high F-measure of 93.2%.

The studies exploring the creation of DL algorithms for the diagnosis of precancerous lesions in esophageal mucosa are summarized in Table 1.

Table 1.

Summary of studies using deep learning for detection of esophageal precancerous lesions

| Ref. | Year | Imaging | Study design | Study aim | DL model | Dataset | Outcomes |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Cai et al[30] | 2019 | WLE | Retrospective | Detection of precancerous lesions and early ESCC | -- | 2615 images | Sensitivity: 97.8%. Specificity: 85.4%. Accuracy: 91.4% |
| Guo et al[31] | 2020 | NBI, M-NBI | Retrospective | Detection of precancerous lesions and early ESCC | SegNet | 13144 images and 168865 video frames | Sensitivity: 96.10% for M-NBI videos, 60.80% for non-M-NBI videos, 98.04% for images. Specificity: 99.90% for non-M-NBI/M-NBI videos, 95.30% for images |
| de Groof et al[32] | 2020 | WLE | Retrospective | Detection of Barrett’s neoplasia | ResNet/U-Net | 1544 images | Sensitivity: 91%. Specificity: 89%. Accuracy: 90% |
| de Groof et al[33] | 2020 | WLE | Retrospective | Detection of Barrett’s neoplasia | ResNet/U-Net | 494364 unlabeled images and 1704 labeled images | Sensitivity: 90%. Specificity: 88%. Accuracy: 89% |
| Struyvenberg et al[34] | 2021 | NBI | Retrospective | Detection of Barrett’s neoplasia | ResNet/U-Net | 2677 images | Sensitivity: 88%. Specificity: 78%. Accuracy: 84% |
| Hashimoto et al[35] | 2020 | WLE, NBI | Retrospective | Recognition of early neoplasia in BE | Inception-ResNet-v2, YOLO-v2 | 2290 images | Sensitivity: 96.4%. Specificity: 94.2%. Accuracy: 95.4% |
| Hussein et al[36] | 2020 | WLE | Retrospective | Diagnosis of early neoplasia in BE | Resnet101 | 266930 video frames | Sensitivity: 88.26%. Specificity: 80.13% |
| Ebigbo et al[37] | 2020 | WLE | Retrospective | Diagnosis of early EAC in BE | DeepLab V.3+, Resnet101 | 191 images | Sensitivity: 83.7%. Specificity: 100%. Accuracy: 89.9% |
| Liu et al[38] | 2020 | WLE | Retrospective | Detection of esophageal cancer from precancerous lesions | Inception-ResNet | 1272 images | Sensitivity: 94.23%. Specificity: 94.67%. Accuracy: 85.83% |
| Wu et al[39] | 2021 | WLE | Retrospective | Automatic classification and segmentation for esophageal lesions | ELNet | 1051 images | Classification sensitivity: 90.34%. Classification specificity: 97.18%. Classification accuracy: 96.28%. Segmentation sensitivity: 80.18%. Segmentation specificity: 96.55%. Segmentation accuracy: 94.62% |
| Ghatwary et al[40] | 2021 | WLE | Retrospective | Detection of esophageal abnormalities from endoscopic videos | DenseConvLstm, Faster R-CNN | 42425 video frames | Sensitivity: 93.7%. F-measure: 93.2% |

BE: Barrett’s esophagus; DL: Deep learning; EAC: Esophageal adenocarcinoma; ESCC: Esophageal squamous cell carcinoma; M-NBI: Magnifying narrow band imaging; NBI: Narrow band imaging; WLE: White light endoscopy.

DL IN ENDOSCOPIC DETECTION OF PRECANCEROUS LESIONS IN GASTRIC MUCOSA

Gastric cancer is the fifth most prevalent form of cancer and the third most lethal cancer globally[1]. Even though the prevalence of gastric cancer has declined during the last few decades, gastric cancer remains a significant clinical problem, especially in developing countries. This is because most patients are diagnosed in late stages with poor prognosis and restricted therapeutic choices[41]. The pathogenesis of gastric cancer involves a series of events starting with Helicobacter pylori-induced (H. pylori-induced) chronic inflammation, progressing towards atrophic gastritis, GIM, dysplasia and eventually gastric cancer[42]. Patients with the precancerous lesions (e.g., H. pylori-induced chronic inflammation, atrophic gastritis, GIM and dysplasia) are at considerable risk of gastric cancer[3,6,43]. It has been argued that the detection of such precancerous lesions may significantly reduce the incidence of gastric cancer. However, these precancerous lesions are difficult to identify on endoscopic examination, and the diagnostic results show high interobserver variability because the morphological changes in the mucosa are subtle and experienced endoscopists are scarce[44,45]. Currently, many researchers are trying to use DL-based methods to detect gastric precancerous lesions; here, we review these studies in detail.

DL in H. pylori infection

Most of the gastric precancerous lesions are correlated with long-term infections with H. pylori[46]. Shichijo et al[47] performed one of the pioneering studies to apply CNNs in the diagnosis of H. pylori infection. The CNNs were built on GoogLeNet and trained on 32208 WLE images. One of their CNN models had higher accuracy than endoscopists. The study showed the feasibility of using CNNs to diagnose H. pylori infection from endoscopic images. After this study, Itoh et al[48] developed a CNN model to detect H. pylori infection in WLE images and showed a sensitivity of 86.7% and specificity of 86.7% in the test dataset. A similar model was developed by Zheng et al[49] to evaluate H. pylori infection status, and the per-patient sensitivity, specificity and accuracy of the model were 91.6%, 98.6% and 93.8%, respectively. Besides WLE, blue laser imaging-bright and linked color imaging systems were prospectively applied by Nakashima et al[50] to collect endoscopic images. With these images, they fine-tuned a pre-trained GoogLeNet to predict H. pylori infection status. Compared with linked color imaging, the model achieved its highest sensitivity (96.7%) and specificity (86.7%) when using blue laser imaging-bright. Nakashima et al[51] also conducted a single-center prospective study to build a CNN model to identify the status of H. pylori in uninfected, currently infected and post-eradication patients. The area under the receiver operating characteristic curve for the uninfected, currently infected and post-eradication categories was 0.90, 0.82 and 0.77, respectively.

DL in atrophic gastritis

Atrophic gastritis is a form of chronic inflammation of the gastric mucosa, and its accurate endoscopic diagnosis is difficult[52]. Guimarães et al[53] reported the application of a CNN to detect atrophic gastritis; the system achieved an accuracy of 93% and performed better than expert endoscopists. Recently, another CNN-based system for detecting atrophic gastritis was reported by Zhang et al[54]. The CNN model was trained and tested on a dataset containing 3042 images with atrophic gastritis and 2428 without atrophic gastritis. The diagnostic accuracy, sensitivity and specificity of the model were 94.2%, 94.5% and 94.0%, respectively, which were better than those of the experts. More recently, Horiuchi et al[55] explored the diagnostic ability of a CNN model to distinguish early gastric cancer from gastritis through M-NBI; the 22-layer CNN was built on GoogLeNet and pretrained using 2570 endoscopic images, and the sensitivity, specificity and accuracy on 258 images were 95.4%, 71.0% and 85.3%, respectively. In addition to its high sensitivity, the CNN model showed an overall test speed of 0.02 s per image, which was faster than human experts.

DL in GIM

GIM is the replacement of gastric-type mucinous epithelial cells with intestinal-type cells, which is a precancerous lesion with a worldwide prevalence of 25%[56]. The morphological characteristics of GIM are subtle and difficult to observe, so the manual diagnosis of GIM is full of challenges. Wang et al[57] reported the first instance of an AI system for localizing and identifying GIM from WLE images. The system achieved a high classification accuracy and a satisfactory segmentation result. A recent study developed a CNN-based diagnosis system that can detect atrophic gastritis and GIM from WLE images[58], and the detection sensitivity and specificity for atrophic gastritis were 87.2% and 91.1%, respectively. For detection of GIM, the system also achieved a sensitivity of 90.3% and a specificity of 93.7%. Recently, our team also developed a novel DL-based diagnostic system for detection of GIM in endoscopic images[18]. The difference from the previous research is that our system is composed of three independent CNNs, which can identify GIM from either NBI or M-NBI. The per-patient sensitivity, specificity and accuracy of the system were 91.9%, 86.0% and 88.8%, respectively. The diagnostic performance showed no significant differences as compared with human experts. Our research showed that the integration of NBI and M-NBI into the DL system could achieve satisfactory diagnostic performance for GIM.

DL in gastric dysplasia

Gastric dysplasia is the penultimate step of gastric carcinogenesis, and accurate diagnosis of this lesion remains controversial[59]. To accurately classify advanced low-grade dysplasia, high-grade dysplasia, early gastric cancer and gastric cancer, Cho et al[60] established three CNN models based on 5017 endoscopic images. They found that the Inception-Resnet-v2 model performed the best, although it showed lower five-class accuracy than the endoscopists (76.4% vs 87.6%). Inoue et al[61] constructed a detection system using the Single-Shot Multibox Detector, which can automatically detect duodenal adenomas and high-grade dysplasia from WLE or NBI. The system detected 94.7% of adenomas and 100% of high-grade dysplasia on a dataset containing 1080 endoscopic images within only 31 s. Although most AI-assisted systems can achieve high accuracy in endoscopic diagnosis, few studies have investigated the role of AI in the training of junior endoscopists. To evaluate this role in predicting the histology of endoscopic gastric lesions, including dysplasia, Lui et al[62] designed and validated a CNN classifier based on 3000 NB images. The classifier achieved an overall accuracy of 91.0%, sensitivity of 97.1% and specificity of 85.9%, which was superior to all junior endoscopists. They also demonstrated that with the feedback from the CNN classifier, the learning curve of junior endoscopists was improved in predicting histology of gastric lesions.

The studies exploring the creation of DL algorithms for the diagnosis of precancerous lesions in gastric mucosa are summarized in Table 2.

Table 2.

Summary of studies using deep learning for detection of gastric precancerous lesions

| Ref. | Year | Imaging | Study design | Study aim | DL model | Dataset | Outcomes |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Shichijo et al[47] | 2017 | WLE | Retrospective | Diagnosis of H. pylori infection | GoogLeNet | 43689 images | Sensitivity: 88.9%; Specificity: 87.4%; Accuracy: 87.7% |
| Itoh et al[48] | 2018 | WLE | Retrospective | Analysis of H. pylori infection | GoogLeNet | 179 images | Sensitivity: 86.7%; Specificity: 86.7% |
| Zheng et al[49] | 2019 | WLE | Retrospective | Evaluation of H. pylori infection status | ResNet-50 | 15484 images | Sensitivity: 91.6%; Specificity: 98.6%; Accuracy: 93.8% |
| Nakashima et al[50] | 2018 | BLI-bright, LCI | Prospective | Prediction of H. pylori infection status | GoogLeNet | 666 images | Sensitivity: 96.7%; Specificity: 86.7% |
| Nakashima et al[51] | 2020 | WLE, LCI | Prospective | Diagnosis of H. pylori infection | -- | 13127 images | For currently infected patients, the sensitivity and specificity are 62.5% and 92.5%, respectively |
| Guimarães et al[53] | 2020 | WLE | Retrospective | Diagnosis of atrophic gastritis | VGG16 | 270 images | Accuracy: 93% |
| Zhang et al[54] | 2020 | WLE | Retrospective | Diagnosis of atrophic gastritis | DenseNet121 | 5470 images | Sensitivity: 94.5%; Specificity: 94.0%; Accuracy: 94.2% |
| Horiuchi et al[55] | 2020 | M-NBI | Retrospective | Differentiation between early gastric cancer and gastritis | GoogLeNet | 2826 images | Sensitivity: 95.4%; Specificity: 71.0%; Accuracy: 85.3% |
| Wang et al[57] | 2019 | WLE | Retrospective | Localization and identification of GIM | DeepLab V.3+ | 200 images | Accuracy: 89.51% |
| Zheng et al[58] | 2020 | WLE | Retrospective | Detection of atrophic gastritis and GIM | ResNet-50 | 3759 images | Sensitivity for atrophic gastritis: 87.2%; Specificity for atrophic gastritis: 91.1%; Sensitivity for GIM: 90.3%; Specificity for GIM: 93.7% |
| Yan et al[18] | 2020 | NBI, M-NBI | Retrospective | Diagnosis of GIM | EfficientNetB4 | 2357 images | Sensitivity: 91.9%; Specificity: 86.0%; Accuracy: 88.8% |
| Cho et al[60] | 2019 | WLE | Prospective | Classification of multiclass gastric neoplasms | Inception-Resnet-v2 | 5217 images | Accuracy: 84.6% |
| Inoue et al[61] | 2020 | WLE, NBI | Retrospective | Detection of duodenal adenomas and high-grade dysplasias | Single-Shot Multibox Detector | 1511 images | For high-grade dysplasia, the sensitivity and specificity are all 100% |
| Lui et al[62] | 2020 | NBI | Retrospective | Classification of gastric lesions | ResNet | 3000 images | Sensitivity: 97.1%; Specificity: 85.9%; Accuracy: 91.0% |

BLI-bright: Blue laser imaging-bright; DL: Deep learning; GIM: Gastric intestinal metaplasia; H. pylori: Helicobacter pylori; LCI: Linked color imaging; M-NBI: Magnifying narrow band imaging; NBI: Narrow band imaging; WLE: White light endoscopy.

CHALLENGES AND RECOMMENDATIONS

AI has gained much attention in recent years. In the field of GI endoscopy, DL is also a promising innovation in the identification and characterization of lesions[9-13]. Many successful studies have focused on GI cancers. Accurate detection of precancerous lesions such as ESD, BE, H. pylori-induced chronic inflammation, atrophic gastritis, GIM and gastric dysplasia can greatly reduce the incidence of cancers and the need for cancer treatment. DL-assisted detection of these precancerous lesions has increasingly emerged in the last 5 years. To perform a systematic review of the status of DL for diagnosis of precancerous lesions of the upper GI tract, we conducted a comprehensive search for all original publications on this topic between January 1, 2017 and December 30, 2020. Although a variety of published papers have verified the outstanding performance of DL-assisted systems, several challenges remain from the viewpoint of physicians and algorithm engineers. The challenges and our recommendations on future research directions are outlined below.

Prospective studies and clinical verification

The current literature reveals that most studies were designed in a retrospective manner with a strong probability of bias. In these retrospective studies, researchers tended to collect high-quality endoscopic images that showed typical characteristics of the detected lesions from a single medical center, while they excluded common low-quality images. This kind of selection bias may jeopardize the precision and lead to lower generalization of the DL models. Thus, data collected from multicenter studies with uninformative frames are necessary to build robust DL models, and prospective studies are needed to properly verify the accuracy of AI in clinical practice.

Handling of overfitting

Overfitting means an AI model performs well on the training set but has high error on unseen data. The deep CNN architectures usually contain several convolutional layers and fully connected layers, which produce millions of parameters that easily lead to strong overfitting[16,17]. Training these parameters needs large-scale well-annotated data. However, well-annotated data are costly and hard to obtain in the clinical community. Possible solutions for overcoming the lack of well-annotated data to avoid overfitting mainly include data augmentation[63], transfer learning[17,64], semi-supervised learning[65] and data synthesis using generative adversarial networks[66].
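The definition of overfitting given above can be illustrated by comparing training and validation errors over the course of training. The sketch below is a simplified illustration with made-up error values; the helper names `generalization_gap` and `best_epoch` are hypothetical and do not come from any reviewed study.

```python
def generalization_gap(train_errors, val_errors):
    """Per-epoch difference between validation and training error."""
    return [v - t for t, v in zip(train_errors, val_errors)]


def best_epoch(val_errors):
    """Index of the epoch with the lowest validation error."""
    return min(range(len(val_errors)), key=val_errors.__getitem__)


# Made-up error curves: training error keeps falling while validation
# error starts rising after the third epoch, the usual overfitting pattern.
train = [0.40, 0.30, 0.20, 0.10, 0.05]
val = [0.42, 0.35, 0.30, 0.33, 0.38]

gaps = generalization_gap(train, val)
print(best_epoch(val))  # epoch index with the lowest validation error
print(gaps)             # the gap widens as the model overfits
```

A widening gap between the two curves suggests that the model is memorizing the training images rather than learning features that transfer to unseen data.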

Data augmentation is a common method to train CNNs to reduce overfitting[63]. According to current literature, almost all studies use data augmentation. Data augmentation is performed by applying image transformations such as random rotation, flipping, shifting, scaling and their combinations, as shown in Figure 3. Transfer learning involves transferring knowledge learned from a large source domain to a target domain[17,64]. This technique is usually performed by initializing the CNN using the weights pretrained on the ImageNet dataset. As there are many imaging modalities such as WLE, NBI and M-NBI and the images share the common features of the detected lesions, Struyvenberg et al[34] applied a stepwise transfer learning approach to tackle the shortage of NB images. Their CNN model was first trained on many WLE images, which are easier to acquire than NB images. Then, the weights were further trained and optimized using NB images. In upper endoscopy, although well-annotated data are limited, unlabeled data are abundant and easily available in most situations. Semi-supervised learning, which utilizes limited labeled data and large-scale unlabeled data to train and improve the CNN model, is useful in the GI tract[65,67,68]. Generative adversarial networks are widely used in the field of medical image synthesis; the synthetic images can be used to provide auxiliary training to improve the performance of the CNN models[66]. de Souza et al[69] introduced generative adversarial networks to generate high-quality endoscopic images for BE, and these synthetic images were used to provide auxiliary training. The detection results suggested that the CNN model trained with the help of these synthetic images outperformed the models trained on the original datasets alone.

Figure 3.

Data augmentation for a typical magnifying narrow band image for training a convolutional neural network model. This is performed by using a variety of image transformations and their combinations. A: Original image; B: Flip horizontal and random rotation; C: Flip vertical and magnification; D: Random rotation and shift; E: Flip horizontal, minification and shift; F: Flip vertical, rotation and shift.
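The transformations listed above can be sketched in a few lines. This is a simplified illustration only: images are represented as nested lists of pixel values, rotation is restricted to multiples of 90 degrees, scaling is omitted, and all function names are hypothetical. In practice, studies typically rely on an image-processing library and arbitrary rotation angles.

```python
import random


def flip_horizontal(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def flip_vertical(img):
    """Mirror the image top to bottom."""
    return [row[:] for row in img[::-1]]


def rotate_90(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def shift_right(img, pixels, fill=0):
    """Shift columns right by `pixels`, padding vacated pixels with `fill`."""
    width = len(img[0])
    pixels = max(0, min(pixels, width))
    return [[fill] * pixels + row[: width - pixels] for row in img]


def augment(img, rng):
    """Apply a random combination of the transforms above."""
    out = img
    if rng.random() < 0.5:
        out = flip_horizontal(out)
    if rng.random() < 0.5:
        out = flip_vertical(out)
    for _ in range(rng.randrange(4)):  # 0 to 3 quarter turns
        out = rotate_90(out)
    return shift_right(out, rng.randrange(2))  # shift by 0 or 1 pixel


# Tiny hypothetical 2x2 "image"; each call yields a different variant.
image = [[1, 2], [3, 4]]
rng = random.Random(0)
variants = [augment(image, rng) for _ in range(4)]
print(variants)
```

Because each variant is generated on the fly from the same labeled image, the effective size of the training set grows without any additional annotation effort.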

Although each of the above techniques reduces overfitting to a certain extent, no single technique guarantees that the overfitting problem is resolved. Integration of these techniques is a promising strategy, and it was verified in our research[18,70].

Improvement on interpretability

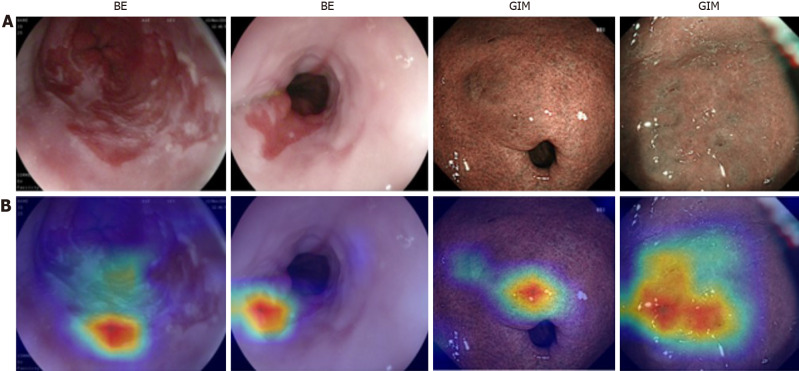

Lack of interpretability (i.e. the “black box” nature), which is inherent to DL technology, is another gap between studies and clinical applications in the field of precancerous lesion detection from endoscopic images. The black box nature means that the decision-making process of the DL model is not clearly demonstrated, which may reduce the willingness of doctors to use it. Although attention maps can help explain the dominant areas by highlighting them, they are constrained in that they do not thoroughly explain how the algorithm comes to its final decision[71]. The attention maps are displayed as heat maps overlaid upon the original images, where warmer colors mean higher contributions to the decision making, which usually correspond to lesions. However, the attention maps also have some defects such as inaccurate display of lesions, as shown in Figure 4, where the attention maps only cover partial areas associated with BE and GIM. This is an inherent shortcoming of attention maps. Therefore, understanding the mechanism used by the DL model for prediction is a hot research topic. Network dissection[72], an empirical method to identify the semantics of individual hidden nodes in the DL model, may be a feasible solution to improve interpretability.

Figure 4.

Informative features (partially related to lesions areas) acquired by the convolutional neural networks, where warmer colors mean higher contributions to decision making. A: Original endoscopic images; B: Corresponding attention. BE: Barrett’s esophagus; GIM: Gastric intestinal metaplasia.
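The heat-map overlay described above can be sketched as a simple alpha blend. The code is a minimal illustration under several assumptions: the attention map is already resized to the image size, the image is grayscale with values from 0 to 255, the blue-to-red colour ramp is a simplification of the colour maps used in practice, and all function names are hypothetical.

```python
def normalize(attention):
    """Min-max scale a 2D attention map to the range [0, 1]."""
    flat = [v for row in attention for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0 for _ in row] for row in attention]
    return [[(v - lo) / (hi - lo) for v in row] for row in attention]


def heat_color(score):
    """Map a score in [0, 1] to RGB, from blue (cold) to red (warm)."""
    return (int(round(255 * score)), 0, int(round(255 * (1.0 - score))))


def overlay(gray_image, attention, alpha=0.4):
    """Alpha-blend the coloured attention map over a grayscale image."""
    scores = normalize(attention)
    blended = []
    for img_row, score_row in zip(gray_image, scores):
        out_row = []
        for pixel, score in zip(img_row, score_row):
            heat = heat_color(score)
            out_row.append(
                tuple(int(round((1 - alpha) * pixel + alpha * c)) for c in heat)
            )
        blended.append(out_row)
    return blended


# Hypothetical 2x2 image and attention scores, for illustration only.
gray = [[100, 100], [100, 100]]
attention = [[0.0, 1.0], [2.0, 4.0]]
result = overlay(gray, attention)
print(result)  # the pixel with the highest score is the reddest
```

Such an overlay shows where the network placed its attention, but, as noted above, it does not explain how those regions were combined into the final decision.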

Network design

In this review, we analyzed the DL models used in the detection of precancerous lesions in the upper GI tract. The literature shows that almost all the DL-based AI systems are developed based on state-of-the-art CNN architectures. Most of these CNNs can only handle a single task, such as GoogLeNet for disease classification[47,48,50,55], YOLO for lesion identification[35] and SegNet for lesion segmentation[31]. Few networks can handle multiple tasks simultaneously. Thus, networks should be designed for multitask learning, which is valuable in clinical applications. Networks designed for handling high-resolution images can help detect micropatterns, which is beneficial for small precancerous lesions. Moreover, efforts should be directed toward exploiting videos rather than still images to minimize the processing time and keep DL algorithms working at an almost real-time level. In this way, as suggested by Mori et al[73] and Thakkar et al[74], the AI systems may be treated as an extra pair of eyes to prevent subtle lesions from being missed.

CONCLUSION

Upper GI cancers are a major cause of cancer-related deaths worldwide. Early detection of precancerous lesions could significantly reduce cancer incidence. Upper GI endoscopy is the gold standard procedure for identifying precancerous lesions in the upper GI tract. DL-based endoscopic systems can provide an easier, faster and more reliable endoscopic method. We have thoroughly reviewed DL approaches, reported since 2017, for the detection of precancerous lesions of the upper GI tract. This is the first review on the DL-based diagnosis of precancerous lesions of the upper GI tract. The status, challenges and recommendations summarized in this review can provide guidance for the intelligent diagnosis of other GI tract diseases and can help engineers develop better AI products to assist clinical decision making. Despite the success of DL algorithms in upper GI endoscopy, prospective studies and clinical validation are still needed. The creation of large public databases, the adoption of comprehensive overfitting prevention strategies and the application of more advanced interpretable methods and networks are also necessary to encourage the clinical application of AI for medical diagnosis.

ACKNOWLEDGEMENTS

The authors would like to thank Dr. I Cheong Choi, Dr. Hon Ho Yu and Dr. Mo Fong Li from the Department of Gastroenterology, Kiang Wu Hospital, Macau for their advice on this manuscript.

Footnotes

Conflict-of-interest statement: All authors declare no conflict of interest.

Manuscript source: Invited manuscript

Peer-review started: January 24, 2021

First decision: March 14, 2021

Article in press: April 9, 2021

Specialty type: Gastroenterology and hepatology

Country/Territory of origin: China

Peer-review report’s scientific quality classification

Grade A (Excellent): 0

Grade B (Very good): B

Grade C (Good): C

Grade D (Fair): 0

Grade E (Poor): 0

P-Reviewer: Amornyotin S, Haruma K; S-Editor: Fan JR; L-Editor: Filipodia; P-Editor: Liu JH

Contributor Information

Tao Yan, School of Mechanical Engineering, Hubei University of Arts and Science, Xiangyang 441053, Hubei Province, China; Department of Electromechanical Engineering, University of Macau, Taipa 999078, Macau, China.

Pak Kin Wong, Department of Electromechanical Engineering, University of Macau, Taipa 999078, Macau, China. fstpkw@um.edu.mo.

Ye-Ying Qin, Department of Electromechanical Engineering, University of Macau, Taipa 999078, Macau, China.

References

- 1. Bray F, Ferlay J, Soerjomataram I, Siegel RL, Torre LA, Jemal A. Global cancer statistics 2018: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J Clin. 2018;68:394–424. doi: 10.3322/caac.21492.

- 2. Morson BC. Precancerous lesions of upper gastrointestinal tract. JAMA. 1962;179:311–315. doi: 10.1001/jama.1962.03050050001001.

- 3. Rugge M, Capelle LG, Cappellesso R, Nitti D, Kuipers EJ. Precancerous lesions in the stomach: from biology to clinical patient management. Best Pract Res Clin Gastroenterol. 2013;27:205–223. doi: 10.1016/j.bpg.2012.12.007.

- 4. Coron E, Robaszkiewicz M, Chatelain D, Svrcek M, Fléjou JF. Advanced precancerous lesions in the lower oesophageal mucosa: high-grade dysplasia and intramucosal carcinoma in Barrett's oesophagus. Best Pract Res Clin Gastroenterol. 2013;27:187–204. doi: 10.1016/j.bpg.2013.03.011.

- 5. Fassan M, Croce CM, Rugge M. miRNAs in precancerous lesions of the gastrointestinal tract. World J Gastroenterol. 2011;17:5231–5239. doi: 10.3748/wjg.v17.i48.5231.

- 6. Pimentel-Nunes P, Libânio D, Marcos-Pinto R, Areia M, Leja M, Esposito G, Garrido M, Kikuste I, Megraud F, Matysiak-Budnik T, Annibale B, Dumonceau JM, Barros R, Fléjou JF, Carneiro F, van Hooft JE, Kuipers EJ, Dinis-Ribeiro M. Management of epithelial precancerous conditions and lesions in the stomach (MAPS II): European Society of Gastrointestinal Endoscopy (ESGE), European Helicobacter and Microbiota Study Group (EHMSG), European Society of Pathology (ESP), and Sociedade Portuguesa de Endoscopia Digestiva (SPED) guideline update 2019. Endoscopy. 2019;51:365–388. doi: 10.1055/a-0859-1883.

- 7. Berci G, Forde KA. History of endoscopy: what lessons have we learned from the past? Surg Endosc. 2000;14:5–15. doi: 10.1007/s004649900002.

- 8. Kavic SM, Basson MD. Complications of endoscopy. Am J Surg. 2001;181:319–332. doi: 10.1016/s0002-9610(01)00589-x.

- 9. Niu PH, Zhao LL, Wu HL, Zhao DB, Chen YT. Artificial intelligence in gastric cancer: Application and future perspectives. World J Gastroenterol. 2020;26:5408–5419. doi: 10.3748/wjg.v26.i36.5408.

- 10. Parasher G, Wong M, Rawat M. Evolving role of artificial intelligence in gastrointestinal endoscopy. World J Gastroenterol. 2020;26:7287–7298. doi: 10.3748/wjg.v26.i46.7287.

- 11. Hussein M, González-Bueno Puyal J, Mountney P, Lovat LB, Haidry R. Role of artificial intelligence in the diagnosis of oesophageal neoplasia: 2020 an endoscopic odyssey. World J Gastroenterol. 2020;26:5784–5796. doi: 10.3748/wjg.v26.i38.5784.

- 12. Huang LM, Yang WJ, Huang ZY, Tang CW, Li J. Artificial intelligence technique in detection of early esophageal cancer. World J Gastroenterol. 2020;26:5959–5969. doi: 10.3748/wjg.v26.i39.5959.

- 13. Yang YJ, Bang CS. Application of artificial intelligence in gastroenterology. World J Gastroenterol. 2019;25:1666–1683. doi: 10.3748/wjg.v25.i14.1666.

- 14. McCarthy J, Minsky ML, Rochester N, Shannon CE. A proposal for the dartmouth summer research project on artificial intelligence, August 31, 1955. AI Mag. 2006;27:12–14.

- 15. Jordan MI, Mitchell TM. Machine learning: Trends, perspectives, and prospects. Science. 2015;349:255–260. doi: 10.1126/science.aaa8415.

- 16. LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539.

- 17. Shin HC, Roth HR, Gao M, Lu L, Xu Z, Nogues I, Yao J, Mollura D, Summers RM. Deep Convolutional Neural Networks for Computer-Aided Detection: CNN Architectures, Dataset Characteristics and Transfer Learning. IEEE Trans Med Imaging. 2016;35:1285–1298. doi: 10.1109/TMI.2016.2528162.

- 18. Yan T, Wong PK, Choi IC, Vong CM, Yu HH. Intelligent diagnosis of gastric intestinal metaplasia based on convolutional neural network and limited number of endoscopic images. Comput Biol Med. 2020;126:104026. doi: 10.1016/j.compbiomed.2020.104026.

- 19. Wang P, Berzin TM, Glissen Brown JR, Bharadwaj S, Becq A, Xiao X, Liu P, Li L, Song Y, Zhang D, Li Y, Xu G, Tu M, Liu X. Real-time automatic detection system increases colonoscopic polyp and adenoma detection rates: a prospective randomised controlled study. Gut. 2019;68:1813–1819. doi: 10.1136/gutjnl-2018-317500.

- 20. Gong D, Wu L, Zhang J, Mu G, Shen L, Liu J, Wang Z, Zhou W, An P, Huang X, Jiang X, Li Y, Wan X, Hu S, Chen Y, Hu X, Xu Y, Zhu X, Li S, Yao L, He X, Chen D, Huang L, Wei X, Wang X, Yu H. Detection of colorectal adenomas with a real-time computer-aided system (ENDOANGEL): a randomised controlled study. Lancet Gastroenterol Hepatol. 2020;5:352–361. doi: 10.1016/S2468-1253(19)30413-3.

- 21. An P, Yang D, Wang J, Wu L, Zhou J, Zeng Z, Huang X, Xiao Y, Hu S, Chen Y, Yao F, Guo M, Wu Q, Yang Y, Yu H. A deep learning method for delineating early gastric cancer resection margin under chromoendoscopy and white light endoscopy. Gastric Cancer. 2020;23:884–892. doi: 10.1007/s10120-020-01071-7.

- 22. Rustgi AK, El-Serag HB. Esophageal carcinoma. N Engl J Med. 2014;371:2499–2509. doi: 10.1056/NEJMra1314530.

- 23. Taylor PR, Abnet CC, Dawsey SM. Squamous dysplasia--the precursor lesion for esophageal squamous cell carcinoma. Cancer Epidemiol Biomarkers Prev. 2013;22:540–552. doi: 10.1158/1055-9965.EPI-12-1347.

- 24. Raghu Subramanian C, Triadafilopoulos G. Diagnosis and therapy of esophageal squamous cell dysplasia and early esophageal squamous cell cancer. Gastroenterol Rep. 2017;5:247–257.

- 25. Auld M, Srinath H, Jeyarajan E. Oesophageal Squamous Dysplasia. J Gastrointest Cancer. 2018;49:385–388. doi: 10.1007/s12029-018-0122-3.

- 26. Hvid-Jensen F, Pedersen L, Drewes AM, Sørensen HT, Funch-Jensen P. Incidence of adenocarcinoma among patients with Barrett's esophagus. N Engl J Med. 2011;365:1375–1383. doi: 10.1056/NEJMoa1103042.

- 27. Eluri S, Shaheen NJ. Barrett's esophagus: diagnosis and management. Gastrointest Endosc. 2017;85:889–903. doi: 10.1016/j.gie.2017.01.007.

- 28. Tavakkoli A, Appelman HD, Beer DG, Madiyal C, Khodadost M, Nofz K, Metko V, Elta G, Wang T, Rubenstein JH. Use of Appropriate Surveillance for Patients With Nondysplastic Barrett's Esophagus. Clin Gastroenterol Hepatol. 2018;16:862–869.e3. doi: 10.1016/j.cgh.2018.01.052.

- 29. Dawsey SM, Fleischer DE, Wang GQ, Zhou B, Kidwell JA, Lu N, Lewin KJ, Roth MJ, Tio TL, Taylor PR. Mucosal iodine staining improves endoscopic visualization of squamous dysplasia and squamous cell carcinoma of the esophagus in Linxian, China. Cancer. 1998;83:220–231.

- 30. Cai SL, Li B, Tan WM, Niu XJ, Yu HH, Yao LQ, Zhou PH, Yan B, Zhong YS. Using a deep learning system in endoscopy for screening of early esophageal squamous cell carcinoma (with video). Gastrointest Endosc. 2019;90:745–753.e2. doi: 10.1016/j.gie.2019.06.044.

- 31. Guo L, Xiao X, Wu C, Zeng X, Zhang Y, Du J, Bai S, Xie J, Zhang Z, Li Y, Wang X, Cheung O, Sharma M, Liu J, Hu B. Real-time automated diagnosis of precancerous lesions and early esophageal squamous cell carcinoma using a deep learning model (with videos). Gastrointest Endosc. 2020;91:41–51. doi: 10.1016/j.gie.2019.08.018.

- 32. de Groof AJ, Struyvenberg MR, Fockens KN, van der Putten J, van der Sommen F, Boers TG, Zinger S, Bisschops R, de With PH, Pouw RE, Curvers WL, Schoon EJ, Bergman JJGHM. Deep learning algorithm detection of Barrett's neoplasia with high accuracy during live endoscopic procedures: a pilot study (with video). Gastrointest Endosc. 2020;91:1242–1250. doi: 10.1016/j.gie.2019.12.048.

- 33. de Groof AJ, Struyvenberg MR, van der Putten J, van der Sommen F, Fockens KN, Curvers WL, Zinger S, Pouw RE, Coron E, Baldaque-Silva F, Pech O, Weusten B, Meining A, Neuhaus H, Bisschops R, Dent J, Schoon EJ, de With PH, Bergman JJ. Deep-Learning System Detects Neoplasia in Patients With Barrett's Esophagus With Higher Accuracy Than Endoscopists in a Multistep Training and Validation Study With Benchmarking. Gastroenterology. 2020;158:915–929.e4. doi: 10.1053/j.gastro.2019.11.030.

- 34. Struyvenberg MR, de Groof AJ, van der Putten J, van der Sommen F, Baldaque-Silva F, Omae M, Pouw R, Bisschops R, Vieth M, Schoon EJ, Curvers WL, de With PH, Bergman JJ. A computer-assisted algorithm for narrow-band imaging-based tissue characterization in Barrett's esophagus. Gastrointest Endosc. 2021;93:89–98. doi: 10.1016/j.gie.2020.05.050.

- 35. Hashimoto R, Requa J, Dao T, Ninh A, Tran E, Mai D, Lugo M, El-Hage Chehade N, Chang KJ, Karnes WE, Samarasena JB. Artificial intelligence using convolutional neural networks for real-time detection of early esophageal neoplasia in Barrett's esophagus (with video). Gastrointest Endosc. 2020;91:1264–1271.e1. doi: 10.1016/j.gie.2019.12.049.

- 36. Hussein M, Juana Gonzales-Bueno P, Brandao P, Toth D, Seghal V, Everson MA, Lipman G, Ahmad OF, Kader R, Esteban JM, Bisschops R, Banks M, Mountney P, Stoyanov D, Lovat L, Haidry R. Deep Neural Network for the detection of early neoplasia in Barrett’s oesophagus. Gastrointest Endosc. 2020;91:AB250.

- 37. Ebigbo A, Mendel R, Probst A, Manzeneder J, Prinz F, de Souza LA Jr, Papa J, Palm C, Messmann H. Real-time use of artificial intelligence in the evaluation of cancer in Barrett's oesophagus. Gut. 2020;69:615–616. doi: 10.1136/gutjnl-2019-319460.

- 38. Liu G, Hua J, Wu Z, Meng T, Sun M, Huang P, He X, Sun W, Li X, Chen Y. Automatic classification of esophageal lesions in endoscopic images using a convolutional neural network. Ann Transl Med. 2020;8:486. doi: 10.21037/atm.2020.03.24.

- 39. Wu Z, Ge R, Wen M, Liu G, Chen Y, Zhang P, He X, Hua J, Luo L, Li S. ELNet: Automatic classification and segmentation for esophageal lesions using convolutional neural network. Med Image Anal. 2021;67:101838. doi: 10.1016/j.media.2020.101838.

- 40. Ghatwary N, Zolgharni M, Janan F, Ye X. Learning Spatiotemporal Features for Esophageal Abnormality Detection From Endoscopic Videos. IEEE J Biomed Health Inform. 2021;25:131–142. doi: 10.1109/JBHI.2020.2995193.

- 41. Van Cutsem E, Sagaert X, Topal B, Haustermans K, Prenen H. Gastric cancer. Lancet. 2016;388:2654–2664. doi: 10.1016/S0140-6736(16)30354-3.

- 42. Correa P, Piazuelo MB. The gastric precancerous cascade. J Dig Dis. 2012;13:2–9. doi: 10.1111/j.1751-2980.2011.00550.x.

- 43. de Vries AC, van Grieken NC, Looman CW, Casparie MK, de Vries E, Meijer GA, Kuipers EJ. Gastric cancer risk in patients with premalignant gastric lesions: a nationwide cohort study in the Netherlands. Gastroenterology. 2008;134:945–952. doi: 10.1053/j.gastro.2008.01.071.

- 44. Chung CS, Wang HP. Screening for precancerous lesions of upper gastrointestinal tract: from the endoscopists' viewpoint. Gastroenterol Res Pract. 2013;2013:681439. doi: 10.1155/2013/681439.

- 45. Menon S, Trudgill N. How commonly is upper gastrointestinal cancer missed at endoscopy? Endosc Int Open. 2014;2:E46–E50. doi: 10.1055/s-0034-1365524.

- 46. Matysiak-Budnik T, Mégraud F. Helicobacter pylori infection and gastric cancer. Eur J Cancer. 2006;42:708–716. doi: 10.1016/j.ejca.2006.01.020.

- 47. Shichijo S, Nomura S, Aoyama K, Nishikawa Y, Miura M, Shinagawa T, Takiyama H, Tanimoto T, Ishihara S, Matsuo K, Tada T. Application of Convolutional Neural Networks in the Diagnosis of Helicobacter pylori Infection Based on Endoscopic Images. EBioMedicine. 2017;25:106–111. doi: 10.1016/j.ebiom.2017.10.014.

- 48. Itoh T, Kawahira H, Nakashima H, Yata N. Deep learning analyzes Helicobacter pylori infection by upper gastrointestinal endoscopy images. Endosc Int Open. 2018;6:E139–E144. doi: 10.1055/s-0043-120830.

- 49. Zheng W, Zhang X, Kim JJ, Zhu X, Ye G, Ye B, Wang J, Luo S, Li J, Yu T, Liu J, Hu W, Si J. High Accuracy of Convolutional Neural Network for Evaluation of Helicobacter pylori Infection Based on Endoscopic Images: Preliminary Experience. Clin Transl Gastroenterol. 2019;10:e00109. doi: 10.14309/ctg.0000000000000109.

- 50. Nakashima H, Kawahira H, Kawachi H, Sakaki N. Artificial intelligence diagnosis of Helicobacter pylori infection using blue laser imaging-bright and linked color imaging: a single-center prospective study. Ann Gastroenterol. 2018;31:462–468. doi: 10.20524/aog.2018.0269.

- 51. Nakashima H, Kawahira H, Kawachi H, Sakaki N. Endoscopic three-categorical diagnosis of Helicobacter pylori infection using linked color imaging and deep learning: a single-center prospective study (with video). Gastric Cancer. 2020;23:1033–1040. doi: 10.1007/s10120-020-01077-1.

- 52. Okamura T, Iwaya Y, Kitahara K, Suga T, Tanaka E. Accuracy of Endoscopic Diagnosis for Mild Atrophic Gastritis Infected with Helicobacter pylori. Clin Endosc. 2018;51:362–367. doi: 10.5946/ce.2017.177.

- 53. Guimarães P, Keller A, Fehlmann T, Lammert F, Casper M. Deep-learning based detection of gastric precancerous conditions. Gut. 2020;69:4–6. doi: 10.1136/gutjnl-2019-319347.

- 54. Zhang Y, Li F, Yuan F, Zhang K, Huo L, Dong Z, Lang Y, Zhang Y, Wang M, Gao Z, Qin Z, Shen L. Diagnosing chronic atrophic gastritis by gastroscopy using artificial intelligence. Dig Liver Dis. 2020;52:566–572. doi: 10.1016/j.dld.2019.12.146.

- 55. Horiuchi Y, Aoyama K, Tokai Y, Hirasawa T, Yoshimizu S, Ishiyama A, Yoshio T, Tsuchida T, Fujisaki J, Tada T. Convolutional Neural Network for Differentiating Gastric Cancer from Gastritis Using Magnified Endoscopy with Narrow Band Imaging. Dig Dis Sci. 2020;65:1355–1363. doi: 10.1007/s10620-019-05862-6.

- 56. Lam SK, Lau G. Novel treatment for gastric intestinal metaplasia, a precursor to cancer. JGH Open. 2020;4:569–573. doi: 10.1002/jgh3.12318.

- 57. Wang C, Li Y, Yao J, Chen B, Song J, Yang X. Localizing and Identifying Intestinal Metaplasia Based on Deep Learning in Oesophagoscope. 2019 8th International Symposium on Next Generation 2019; 1-4.

- 58. Zheng W, Yu T, Lin N, Ye G, Zhu X, Shen Y, Zhang X, Liu J, Hu W, Cao Q, Si J. Tu1075 deep convolutional neural networks for recognition of atrophic gastritis and intestinal metaplasia based on endoscopy images. Gastrointest Endosc. 2020;91:AB533–AB534.

- 59. Sung JK. Diagnosis and management of gastric dysplasia. Korean J Intern Med. 2016;31:201–209. doi: 10.3904/kjim.2016.021.

- 60. Cho BJ, Bang CS, Park SW, Yang YJ, Seo SI, Lim H, Shin WG, Hong JT, Yoo YT, Hong SH, Choi JH, Lee JJ, Baik GH. Automated classification of gastric neoplasms in endoscopic images using a convolutional neural network. Endoscopy. 2019;51:1121–1129. doi: 10.1055/a-0981-6133.

- 61. Inoue S, Shichijo S, Aoyama K, Kono M, Fukuda H, Shimamoto Y, Nakagawa K, Ohmori M, Iwagami H, Matsuno K, Iwatsubo T, Nakahira H, Matsuura N, Maekawa A, Kanesaka T, Yamamoto S, Takeuchi Y, Higashino K, Uedo N, Ishihara R, Tada T. Application of Convolutional Neural Networks for Detection of Superficial Nonampullary Duodenal Epithelial Tumors in Esophagogastroduodenoscopic Images. Clin Transl Gastroenterol. 2020;11:e00154. doi: 10.14309/ctg.0000000000000154.

- 62. Lui TKL, Wong KKY, Mak LLY, To EWP, Tsui VWM, Deng Z, Guo J, Ni L, Cheung MKS, Leung WK. Feedback from artificial intelligence improved the learning of junior endoscopists on histology prediction of gastric lesions. Endosc Int Open. 2020;8:E139–E146. doi: 10.1055/a-1036-6114.

- 63. Shorten C, Khoshgoftaar TM. A survey on image data augmentation for deep learning. J Big Data. 2019;6:60. doi: 10.1186/s40537-019-0197-0.

- 64. Pan SJ, Yang Q. A survey on transfer learning. IEEE Trans Knowl Data Eng. 2010;22:1345–1359.

- 65. Zhu X, Goldberg AB. Introduction to Semi-Supervised Learning. Synthesis Lectures on Artificial Intelligence and Machine Learning. 2009;3:1–130.

- 66. Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y. Generative adversarial nets. Advances in Neural Information Processing Systems. 2014;27:2672–2680.

- 67. Guo X, Yuan Y. Semi-supervised WCE image classification with adaptive aggregated attention. Med Image Anal. 2020;64:101733. doi: 10.1016/j.media.2020.101733.

- 68. Borgli H, Thambawita V, Smedsrud PH, Hicks S, Jha D, Eskeland SL, Randel KR, Pogorelov K, Lux M, Nguyen DTD, Johansen D, Griwodz C, Stensland HK, Garcia-Ceja E, Schmidt PT, Hammer HL, Riegler MA, Halvorsen P, de Lange T. HyperKvasir, a comprehensive multi-class image and video dataset for gastrointestinal endoscopy. Sci Data. 2020;7:283. doi: 10.1038/s41597-020-00622-y.

- 69. de Souza LA Jr, Passos LA, Mendel R, Ebigbo A, Probst A, Messmann H, Palm C, Papa JP. Assisting Barrett's esophagus identification using endoscopic data augmentation based on Generative Adversarial Networks. Comput Biol Med. 2020;126:104029. doi: 10.1016/j.compbiomed.2020.104029.

- 70. Yan T, Wong PK, Ren H, Wang H, Wang J, Li Y. Automatic distinction between COVID-19 and common pneumonia using multi-scale convolutional neural network on chest CT scans. Chaos Solitons Fractals. 2020;140:110153. doi: 10.1016/j.chaos.2020.110153.

- 71. Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In: Proceedings of the IEEE International Conference on Computer Vision. 2017:618–626.

- 72. Bau D, Zhu JY, Strobelt H, Lapedriza A, Zhou B, Torralba A. Understanding the role of individual units in a deep neural network. Proc Natl Acad Sci USA. 2020;117:30071–30078. doi: 10.1073/pnas.1907375117.

- 73. Mori Y, Kudo SE, Mohmed HEN, Misawa M, Ogata N, Itoh H, Oda M, Mori K. Artificial intelligence and upper gastrointestinal endoscopy: Current status and future perspective. Dig Endosc. 2019;31:378–388. doi: 10.1111/den.13317.

- 74. Thakkar SJ, Kochhar GS. Artificial intelligence for real-time detection of early esophageal cancer: another set of eyes to better visualize. Gastrointest Endosc. 2020;91:52–54. doi: 10.1016/j.gie.2019.09.036.