Abstract

Photoacoustic tomography (PAT) is a non-invasive, high-resolution imaging modality, capable of providing functional and molecular information of various pathologies, such as cancer. One limitation of PAT is the depth and wavelength dependent optical fluence, which results in reduced PA signal amplitude from deeper tissue regions. These factors can therefore introduce errors into quantitative measurements such as oxygen saturation (sO2) or the localization and concentration of various chromophores. The variation in the speed-of-sound between different tissues can also lead to distortions in object location and shape. Compensating for these effects allows PAT to be used more quantitatively. We have developed a proof-of-concept algorithm capable of compensating for the heterogeneity in speed-of-sound and depth dependent optical fluence. Speed-of-sound correction was done by using a straight ray-based algorithm for calculating the family of iso-time-of-flight contours between the transducers and every pixel in the imaging grid, while fluence compensation was done by utilizing the graphics processing unit (GPU) accelerated software MCXCL for Monte Carlo modeling of optical fluence variation. This algorithm was tested on a polyvinyl chloride plastisol (PVCP) phantom, which contained cyst mimics and blood inclusions to test the algorithm under relatively heterogeneous conditions. Our results indicate that our PAT algorithm can compensate for the speed-of-sound variation and depth dependent fluence effects within a heterogeneous phantom. The results of this study will pave the way for further development and evaluation of the proposed method in more complex in-vitro and ex-vivo phantoms, as well as compensating for the wavelength-dependent optical fluence in spectroscopic PAT.

Keywords: Photoacoustic tomography, Ultrasound tomography, Optical fluence compensation, Image reconstruction, Speed of sound compensation, Numerical modeling, Quantitative photoacoustic tomography

1. Introduction

Photoacoustic (PA) imaging (PAI) is a promising imaging modality that can provide functional and molecular information. PAI takes advantage of the photoacoustic effect, which uses light to excite various chromophores within a tissue, such as hemoglobin, that in turn undergo thermoelastic expansion and generate acoustic waves. These acoustic waves then travel throughout the medium and can be picked up with a piezoelectric transducer [1]. The signals received by the transducer can be reconstructed to, ideally, form an optical absorption map of the tissue that was illuminated. The acoustic waves generated during this process are like those found within ultrasound (US) imaging, such that the same transducer can be used. This leads to a natural synergy between the two modalities and the development of combined PA and US imaging systems. This complementary relationship allows PA to provide functional and molecular information of various chromophores, while US provides structural information. Hence, a PA-US tomography (PA-UST) probe can potentially provide a powerful diagnostic tool for physicians.

The generation of acoustic waves due to light absorption can be described by Eq. (1),

$$p_0 = \Gamma \Phi \mu_a = \Gamma A \tag{1}$$

where $p_0$ is the initial PA pressure field after excitation, $\Gamma$ is the Grueneisen parameter, $\Phi$ is the light fluence, $\mu_a$ is the optical absorption, and $A$ is the optical energy density [2]. Currently, the inability to compensate for light penetration, and for the resulting variations in fluence as a function of depth and wavelength, limits the potential of quantitative photoacoustic (qPA) imaging. The depth- and wavelength-dependent nature of PAI implies that identical absorbers located at different depths within the tissue will generate different PA signals, solely because of the light fluence variation. Therefore, comparing PA signals that arise at different depths, and reaching a more quantitative analysis of PA images, has always been a challenge. The depth and wavelength dependency of optical fluence in PAI becomes more of an issue when imaging at multiple wavelengths is required. Multi-wavelength imaging is used for spectroscopic imaging, where chromophores possess different absorption and scattering characteristics at different wavelengths, and their spectral behavior is used to reveal their presence and concentration. The fluence variation can induce a bias in spectral unmixing algorithms [[3], [4], [5], [6]], which limits the clinical translation and utility of PAI. For example, the ability of spectroscopic PAI to accurately measure blood oxygenation, an important indicator in cancer progression, has been shown to have a bias at different depths [[7], [8], [9]]. In addition, these depth and wavelength dependencies can hinder the ability to properly localize and quantify exogenous contrast agents, such as molecular imaging contrast agents or nano-sized drug carriers. Therefore, to remove these dependencies we modeled the optical fluence in a heterogeneous medium to generate a map of the fluence distribution, which is defined as energy per area (J/m2). This distribution is then transformed into a map of the inverse fluence ($\Phi^{-1}$), which is multiplied with a photoacoustic tomography (PAT) image to provide a fluence compensated PAT image.

To estimate the initial pressure distribution or optical absorption maps, various reconstruction algorithms have been developed for PAT imaging, such as universal back projection and time reversal [10,11]. The universal back projection algorithm projects the PA signal received from multiple transducers into the imaging domain, and the overlapping signals form the image. Time reversal solves the inverse wave equation, which simulates the physics behind the acoustic wave as it travels throughout the imaging domain. In this paper we use the back projection algorithm for PAT image reconstruction, as it is computationally cheap and straightforward to implement.

Previous efforts to compensate for the effects of optical fluence on PAI have been reported [[12], [13], [14]]. Typically, these methods look to reduce the problem to an optically homogeneous model. This assumption enables computationally cheap fluence calculations, using the diffusion model [12,15]. While other groups have used the Monte Carlo method [[16], [17], [18]] for fluence compensation, they still utilized homogeneous phantoms [14]. However, in the case of deep and heterogeneous tissue imaging, such as breast imaging [[19], [20], [21]], where the distance from the sternal notch to the nipple can be 21 cm, with a resulting volume of 500 cm3 [22], it is important to consider the effects of heterogeneous optical and acoustic properties. Therefore, there is a need to incorporate heterogeneous modeling when developing optical compensation methodologies. To optically compensate PA images, there must be a way to form heterogeneous models from experimental data and use these models to accurately compute fluence maps.

In this study, the synergistic relationship between UST and PAT is utilized to aid in compensating the PAT image for optical fluence and speed-of-sound (SOS). UST has been shown to produce quantitative acoustic images such as acoustic SOS, attenuation, and stiffness [[23], [24], [25], [26]]. These images can therefore be used to improve the complementary PAT images. The primary focus of this study is to use the information present in the SOS maps to enhance the quality of PAT images. This is done by first compensating PAT images for the heterogeneous SOS, and secondly using the UST SOS maps to build a numerical model of the phantom that can be used - with literature values for optical properties - for fluence calculations through the Monte Carlo method.

2. Materials and methods

2.1. UST and PAT image acquisition system

A full-ring ultrasound and photoacoustic tomography (USPAT) system was employed for data acquisition [[27], [28], [29], [30]]. The USPAT system is composed of a 200 mm diameter, 256-element ring US transducer (Sound Technology (STI), State College, PA) with a center frequency of 1.5 MHz and bandwidth of 60 %. The presented imaging system has a measured resolution of 1 mm as determined by measuring a 200 μm light-absorbing string. This transducer has an element pitch of 2.45 mm and a height of 9 mm. The generated acoustic waves, from a PA absorber, are recorded by all 256 elements using a sampling frequency of 8.33 MHz. The US transducer is housed in a polyethylene terephthalate glycol-modified (Stellar Plastics, Detroit, MI) holder which also contains the phantom and the coupling medium (degassed and distilled water). A 20 dB linear time gain compensation (TGC) was also used for all UST/PAT data.

Our illumination source was a tunable 10 nsec pulsed laser (Phocus Core, Optotek, Carlsbad, CA, USA), which was used for all PAT imaging experiments. This laser generates around 160 mJ per pulse at 680 nm. To provide full-ring illumination, two custom ring mirrors from Syntec Optics (Rochester, NY, US) were used with a 10 mm diameter axicon mirror (68–791, Edmund Optics, Barrington, NJ, USA) to create the 4 mm thick ring-shaped beam on the phantom surface [29,30]. The total fluence on the surface of the phantom - with a 65 mm diameter - would be ∼0.0215 mJ/mm2, which places it ∼10× below the American National Standards Institute (ANSI) safety limit for laser exposure (0.2 mJ/mm2 at λ = 680 nm) [31].

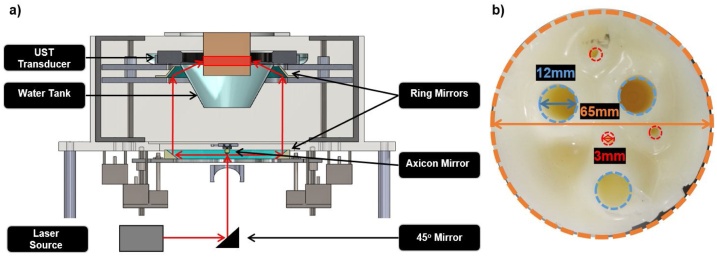

The diagram of the PAT system is presented in Fig. 1a. As shown, the laser beam is directed towards a 45° mirror which directs it to an axicon mirror that distributes the energy to the first ring mirror. From there the second ring mirror directs the beam onto the plastic mold where the full ring beam illuminates the object of interest.

Fig. 1.

(a) The USPAT acquisition system, which consists of a laser source, an axicon mirror, a dual ring mirror system, a water tank, and the UST transducer. (b) The PVC phantom that was imaged. It is composed of a PVC background (outlined in orange), with three cyst mimics that were filled with water and are 12 mm in diameter (outlined in blue) and three blood inclusions that are 3 mm in diameter (outlined in red).

2.2. Heterogeneous phantom study

To validate the proposed acoustic and optical compensation algorithm, phantoms with known acoustic and optical properties were constructed. Polyvinyl chloride plastisol (PVCP) is a phantom medium favored by the UST/PAT imaging community, since its acoustic and optical properties can be tuned [[32], [33], [34]]. A version of this material used for this paper is called Super Soft Plastic (M-F Manufacturing, Fort Worth, TX, USA), which is easy to handle and optically modify. Titanium dioxide (TiO2) was used to control the optical scattering properties of the phantom, with a concentration of 0.5 mg mL−1. The optical properties for Super Soft Plastic were approximated from literature, using a formulation of benzyl butyl phthalate/di(2-ethylhexyl) adipate (BBP/DEHA) [33]. Three- and twelve-millimeter inclusions of blood and water, respectively, were used in the phantom, representing the heterogeneous medium.

The inclusions in the phantom, shown in Fig. 1b, were composed of fresh, whole, human blood which is assumed to be completely oxygenated. Water filled cyst mimics were also included in this phantom, resulting in a heterogeneous phantom that would mimic some of the conditions seen in soft tissue, such as the breast. The optical properties for all the materials in this section are shown in Table 1.

Table 1.

Optical properties for the PVCP phantom as well as background at 680 nm. Values for Super Soft + TiO2 were taken from measurements of another PVCP formulation using BBP/DEHA + TiO2. Blood and water values were taken from mcxyz, developed by the Oregon Medical Laser Center (OMLC) [16]. The anisotropy (g) value for all mediums was assumed to be 0.9.

| Material | μa [mm−1] | μs [mm−1] | g |
|---|---|---|---|
| Super Soft + TiO2 | 0.00173 | 5.00 | 0.90 |
| Blood | 0.43427 | 7.35 | 0.90 |
| Water | 0.00045 | 1.00 | 0.90 |

2.3. UST-assisted, speed-of-sound and fluence compensation for PAT

As previously described, the fluence compensation of our PAT images is built upon the UST SOS maps. The combination of reflection, attenuation, and SOS information can be used together to increase the accuracy of the model by better defining the inclusion boundaries. In this preliminary work, only the SOS map was used. The UST SOS maps allowed for correction of object geometry via SOS correction and image segmentation. SOS correction was especially important for the PVC phantoms, where the phantom background has ∼100 m sec−1 lower speed of sound compared to water (Table 2). Therefore, we can synergistically combine information from UST to improve the PAT image quality.

Table 2.

Mean (μ) speed-of-sound values calculated from the UST SOS map, as seen in Fig. 3, along with the standard deviation (σ) within the region-of-interest.

| Material | Speed of Sound (μ ± σ) [m sec−1] |
|---|---|
| PVC | 1410 ± 10 |
| Water | 1498 ± 6 |
| Blood | 1466 ± 15 |

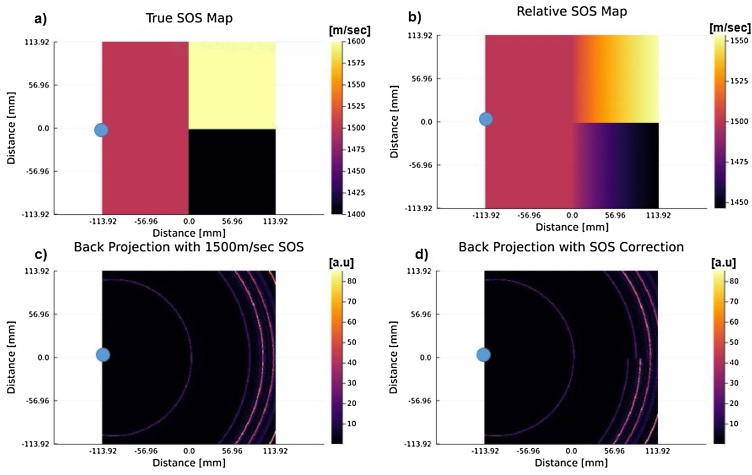

The UST SOS images were computed using an SOS inversion algorithm [[35], [36], [37], [38]]. For SOS corrected PAT, one needs to back project onto the family of iso-time-of-flight (TOF) contours instead of the typical concentric circles. The family of iso-TOF contours is found via a straight ray approximation, in which the TOF at each pixel is computed by integrating the slowness function (1/SOS) over the ray that connects the transducer and pixel locations [39,40]. An example is shown in Fig. 2, where Fig. 2a shows the true SOS map and the blue circle marks the transducer element. Fig. 2b shows the relative SOS map obtained when straight rays are traced from the transducer to every pixel in the imaging grid and the slowness is integrated along each ray to find the mean SOS for every pixel. These relative SOS values are then used to calculate the correct time delay for a pressure source arriving from that particular location. The back projection algorithm then uses these corrected time-of-flight contours in place of the concentric circles that result from reconstructing the PAT image with a homogeneous SOS. The difference between a back projection with a homogeneous SOS assumption and the straight ray tracing SOS correction can be seen in Fig. 2c and d. Fig. 2c shows a back projection for the single blue element using a homogeneous SOS assumption of 1500 m/sec, which forms concentric circles. In Fig. 2d, by contrast, there is a discontinuity between the signal that was back projected into the 1600 m/sec region and the 1400 m/sec region. This is expected, since the same signal will be projected farther into the higher SOS region than into the lower SOS region.

Fig. 2.

An example of speed-of-sound (SOS) correction using a straight-ray method for back projection reconstruction. Note that the blue dot represents the location of a transducer element within the grid. (a) The ground truth speed of sound map (b) The relative SOS map calculated using a straight-ray time of flight approximation method between the transducer element and every pixel in the grid (c) A back projected image using a homogeneous SOS assumption of 1500 m/sec. (d) A back projected signal using the relative SOS map shown in (b). It can be observed that in (d) there is a discontinuity between the back projected signals, which can be attributed to the heterogeneous distribution seen in (a). This is due to the fact that PA-generated acoustic waves traveling from the 1600 m/sec region would be able to travel much faster than those within the 1400 m/sec region and would subsequently appear further away in the back projected image.
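The straight-ray time-of-flight calculation described above can be sketched as follows. This is a minimal illustration rather than the reconstruction code used in this study: the function names, the midpoint-rule sampling, the nearest-pixel lookup, and the two-region test map are all assumptions made for the example. It integrates the slowness (1/SOS) along the ray between a transducer and a pixel, then converts the result to a mean SOS and a sample delay at the 8.33 MHz sampling frequency given in Section 2.1.

```python
import math

def straight_ray_tof(sos, dx, src, dst, n_samples=200):
    """Time of flight [s] along the straight ray from src to dst.

    sos      : 2D list of speed-of-sound values [m/s], indexed sos[row][col]
    dx       : pixel size [m]
    src, dst : (row, col) positions in pixel units; pixel j spans [j, j + 1)
    """
    n_rows, n_cols = len(sos), len(sos[0])
    (r0, c0), (r1, c1) = src, dst
    length = math.hypot(r1 - r0, c1 - c0) * dx   # ray length in metres
    ds = length / n_samples                      # step length along the ray
    tof = 0.0
    for k in range(n_samples):
        t = (k + 0.5) / n_samples                # midpoint of the k-th step
        i = min(max(int(math.floor(r0 + t * (r1 - r0))), 0), n_rows - 1)
        j = min(max(int(math.floor(c0 + t * (c1 - c0))), 0), n_cols - 1)
        tof += ds / sos[i][j]                    # accumulate slowness * ds
    return tof

def delay_in_samples(tof, fs):
    """Convert a time of flight [s] to the nearest sample index at rate fs [Hz]."""
    return int(round(tof * fs))

# Hypothetical two-region map: 5 mm at 1400 m/s followed by 5 mm at 1600 m/s.
sos_map = [[1400.0] * 5 + [1600.0] * 5]
tof = straight_ray_tof(sos_map, 1e-3, (0.5, 0.0), (0.5, 10.0))
mean_sos = 10 * 1e-3 / tof                       # path length / time of flight
print(mean_sos, delay_in_samples(tof, 8.33e6))
```

Because the integral is taken over slowness, the mean SOS along a ray that crosses equal lengths of two regions is their harmonic mean, not their arithmetic mean.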

To properly model the light diffusion within the phantom, a UST SOS map was used to construct a numerical phantom. Using a priori knowledge of the phantom's structure, a seeded region growing method (from Julia’s Images.jl library) was used to segment the UST SOS images [41]. Once segmented, the phantom was labeled with optical properties gathered from literature (Table 1) and then used as a numerical phantom for the Monte Carlo light simulation software MCXCL. The Monte Carlo simulation models the full-ring illumination described in Section 2.1, where 128 point sources are used to approximate the full ring. The simulation used a spatial discretization of 0.25 mm/voxel, which matches the spatial discretization of the UST SOS images, and a temporal discretization of 50 nsec, chosen to be on the same order of magnitude as the pulse of the Optotek laser described in Section 2.1. The optical fluence results from this simulation were then used for fluence correction.
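The segmentation and labeling step can be illustrated with a simplified sketch. The study used the seeded region growing implementation from Julia's Images.jl; the Python below is only a stand-in that grows a 4-connected region around each seed using a fixed SOS tolerance, which is a simpler criterion than full seeded region growing. The function names, the tolerance, and the small test grid are hypothetical. The optical properties are those listed in Table 1.

```python
from collections import deque

# Optical properties at 680 nm from Table 1: (mu_a [1/mm], mu_s [1/mm], g)
OPTICAL_PROPERTIES = {
    "pvcp": (0.00173, 5.00, 0.90),
    "blood": (0.43427, 7.35, 0.90),
    "water": (0.00045, 1.00, 0.90),
}

def region_grow(sos, seed, tol):
    """Return the 4-connected pixels whose SOS is within tol of the seed value."""
    rows, cols = len(sos), len(sos[0])
    ref = sos[seed[0]][seed[1]]
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and (nr, nc) not in region and abs(sos[nr][nc] - ref) <= tol:
                region.add((nr, nc))
                queue.append((nr, nc))
    return region

def label_phantom(sos, seeds, tol, default="water"):
    """Build a material-label map from (material, seed) pairs placed a priori."""
    labels = [[default] * len(sos[0]) for _ in sos]
    for material, seed in seeds:
        for r, c in region_grow(sos, seed, tol):
            labels[r][c] = material
    return labels

# Hypothetical 5 x 5 SOS map using the mean values from Table 2.
W, P, B = 1498.0, 1410.0, 1466.0
sos_map = [
    [W, W, W, W, W],
    [W, P, P, P, W],
    [W, P, B, P, W],
    [W, P, P, P, W],
    [W, W, W, W, W],
]
labels = label_phantom(sos_map, [("pvcp", (1, 1)), ("blood", (2, 2))], tol=20.0)
mu_a_map = [[OPTICAL_PROPERTIES[m][0] for m in row] for row in labels]
```

The resulting label map plays the role of the numerical phantom: each label indexes one row of the optical property table, which is the kind of input a Monte Carlo light simulation needs.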

As described in Section 1, in order to compensate for optical fluence we take the product of the PAT image () and the inverse fluence map (), such that Eq. (1) becomes Eq. (2).

| (2) |

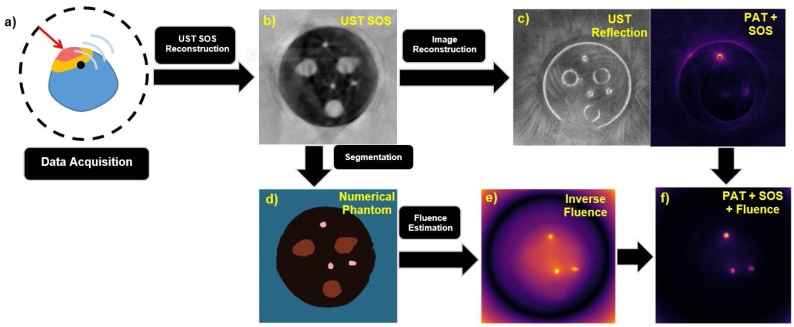

We are now left with an image that is proportional to the optical absorbance (), thereby removing the fluence variation in the image. The overall process of this algorithm can be seen in Fig. 3.

Fig. 3.

Diagram of the overall algorithm from (a) data acquisition to (f) fluence and SOS compensated PAT image. (a) Shows UST and PAT data acquisition using a full-ring transducer which is then taken and used to form (b) an UST SOS map. That UST SOS map is then used in SOS compensation for (c) UST reflection and PAT images. The UST SOS map is also used in (d) to form the segmented numerical phantom (blue = water background, black = PVC phantom, pink = blood inclusions, brown = water cyst mimics) that is used in the calculation of (e) the inverse fluence map. Finally, the SOS corrected PAT image from (c) and inverse fluence map from (e) are used to form (f) the fluence and SOS compensated PAT image.
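The final compensation step, in which the PAT image is multiplied by the inverse fluence map, can be sketched in a few lines. This is an illustrative example only: the function name is hypothetical, the Grueneisen parameter is set to 1 for simplicity, and the fluence values are made up to show the effect rather than taken from the MCXCL simulation.

```python
def compensate_fluence(pat_image, fluence_map):
    """Pixel-wise product of the PAT image and the inverse fluence map."""
    if len(pat_image) != len(fluence_map):
        raise ValueError("image and fluence map must have the same shape")
    out = []
    for p_row, f_row in zip(pat_image, fluence_map):
        if len(p_row) != len(f_row):
            raise ValueError("image and fluence map must have the same shape")
        if any(f <= 0.0 for f in f_row):
            raise ValueError("fluence must be strictly positive")
        out.append([p / f for p, f in zip(p_row, f_row)])
    return out

# Two identical blood absorbers (mu_a from Table 1) under different fluence.
mu_a = 0.43427
fluence = [[1.0, 0.1]]                 # hypothetical: shallow vs. deep location
pat = [[mu_a * 1.0, mu_a * 0.1]]       # initial pressure with Gamma assumed to be 1
compensated = compensate_fluence(pat, fluence)
print(compensated)                     # both pixels recover approximately mu_a
```

Before compensation the deeper absorber appears ten times weaker. After compensation both pixels carry the same value, which is what allows signals from different depths to be compared.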

3. Results

The results from the PVC phantom measurements described in Section 2 are presented next. We highlight the improvements made through each step of the algorithm shown in Fig. 3. The measures considered are the profiles of the inclusions, resolution measurements, the signal-to-background ratio (SBR) (Eq. (3)), and the contrast-to-background ratio (CBR) (Eq. (4)).

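$$\mathrm{SBR} = 20 \log_{10}\left(\frac{S_{\mathrm{Object}}}{S_{\mathrm{Background}}}\right) \tag{3}$$

$$\mathrm{CBR} = 20 \log_{10}\left(\frac{S_{\mathrm{Object}} - S_{\mathrm{Background}}}{\sigma_{\mathrm{Background}}}\right) \tag{4}$$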

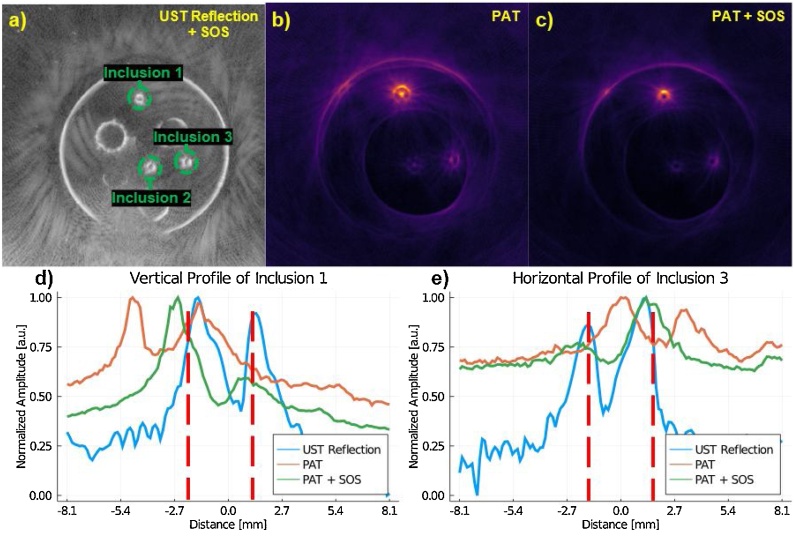

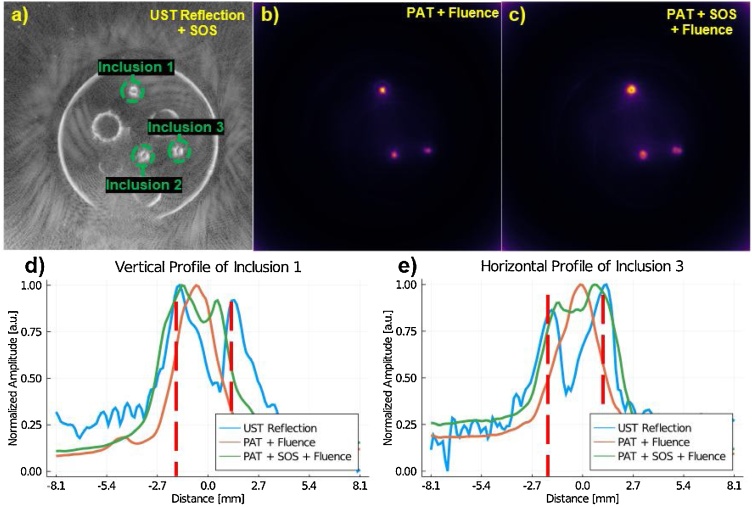

Here, $S_{\mathrm{Object}}$ is the mean signal of the object of interest (OOI), $S_{\mathrm{Background}}$ is the mean signal of the background, and $\sigma_{\mathrm{Background}}$ is the standard deviation of the background. When measuring $S_{\mathrm{Background}}$ and $\sigma_{\mathrm{Background}}$, the whole background of the image was used, excluding the inclusions. Inclusion selection was based on the UST reflection images. The SOS correction (Fig. 4) demonstrates the improvement to both inclusion shape and location relative to the homogeneous SOS reconstruction. Without SOS compensation, the PAT blood inclusions are distorted, which results in a high intensity point within the inclusion (Fig. 4b), particularly for inclusion 1. With the SOS correction (Fig. 4c), the circular inclusion is seen as a ring, which is closer to reality. We can also observe from the profile plots (Fig. 4d and e) that the SOS corrected PAT images better match the UST reflection profile of the inclusions. Additionally, Fig. 4d and e show a variation in the intensity of the PAT profiles, which is to be expected, since the fluence has not yet been compensated for.
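The two image quality measures can be computed from pixel samples as in the sketch below. It assumes the common decibel forms SBR = 20 log10(S_Object / S_Background) and CBR = 20 log10(|S_Object - S_Background| / σ_Background); these forms, the function names, and the sample values are assumptions made for illustration and may differ from the exact definitions used in this study.

```python
import math
from statistics import mean, pstdev

def sbr_db(object_pixels, background_pixels):
    """Signal-to-background ratio in dB (assumed 20*log10 amplitude form)."""
    return 20.0 * math.log10(mean(object_pixels) / mean(background_pixels))

def cbr_db(object_pixels, background_pixels):
    """Contrast-to-background ratio in dB (assumed 20*log10 amplitude form)."""
    contrast = abs(mean(object_pixels) - mean(background_pixels))
    return 20.0 * math.log10(contrast / pstdev(background_pixels))

# Hypothetical pixel samples: an inclusion and the surrounding background.
inclusion = [10.0, 10.0]
background = [0.5, 1.5]
print(sbr_db(inclusion, background))   # object mean is 10x the background mean
print(cbr_db(inclusion, background))
```

Here `pstdev` treats the background pixels as the full population, which matches the description of using the whole background with the inclusions excluded.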

Fig. 4.

(a) The SOS-compensated UST reflection image that is used for reference in determining the location of the inclusions. (b) PAT where a homogeneous SOS of 1450 m sec−1 is assumed. (c) The speed-of-sound corrected PAT image. (d) The profile of the first inclusion, labeled in (a). (e) The profile of the third inclusion, labeled in (a). It can be seen in (d) and (e) that the SOS correction shifts the inclusion profile to better align with the location of the object as it is seen in the UST reflection map. Red dashed lines in (d) and (e) serve to aid in visualizing the object's true size. Note that the x-axes for (d) and (e) have been rounded to the nearest tenth of a millimeter. We expect edges to have varying intensity in the SOS compensated PAT, since fluence compensation has not been added yet.

The effect of incorporating optical compensation with the SOS compensation for the PAT images is discussed next. In Fig. 5b and c one can see that the full shape of the inclusions has been recovered. Here, the entirety of the blood inclusion cross-section is visible, not just the surface of the enclosure. This can be attributed to the removal of uneven optical fluence. This matched our expectations, since the fluence compensated PAT images should be proportional to μa, see Eq. (2), and therefore the entirety of the tube should be bright relative to the background. We can also observe in Fig. 5b that the inclusions without SOS compensation appear smaller. We believe this is due to the errors in the non-SOS corrected PAT image being amplified during fluence compensation. In Fig. 4b we see a bright point in the middle of the inclusion, which is due to the homogeneous SOS assumption mis-assigning pressure amplitudes within the image during back projection reconstruction.

Fig. 5.

(a) The SOS-compensated UST reflection image that is used for reference in determining the location of the inclusions. (b) The fluence compensated PAT, where a homogeneous SOS of 1450 m sec−1 is assumed. (c) The speed-of-sound and fluence corrected PAT image. (d) The profile of the first inclusion, labeled in (a). (e) The profile of the third inclusion, labeled in (a). It can be seen in (d) and (e) that the SOS and fluence corrected PAT better aligns with the peaks seen in the UST reflection image. Red dashed lines in (d) and (e) serve to aid in visualizing the object's true size. Note that the x-axes for (d) and (e) have been rounded to the nearest tenth of a millimeter.

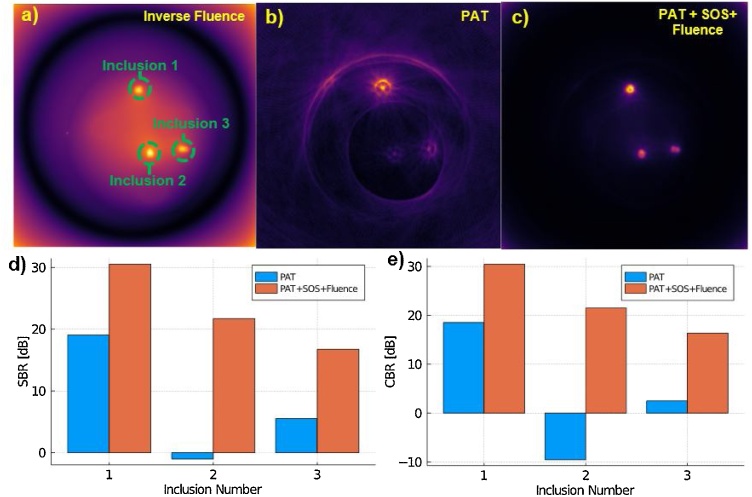

Next, we consider quantitative image improvements in the form of SBR and CBR. As shown in Fig. 6d and e there is a dramatic improvement in both SBR and CBR for all inclusions. The SBR is increased by ∼20 dB for the deepest object (inclusion 2), and ∼10 dB for the object with the strongest signal (inclusion 1). CBR also saw an increase of ∼30 dB for the deepest object (inclusion 2) and ∼10 dB for the object with the strongest signal (inclusion 1). This suggests that objects that are further away from the source of illumination benefit the most from optical compensation. These results hold promise for future studies that will look at quantifying the changes in sO2 and other quantitative measurements due to the benefit of fluence compensation.

Fig. 6.

(a) The inverse fluence map used for light fluence compensation. (b) PAT reconstructed image assuming a homogeneous SOS of 1450 m sec−1 (c) Fluence and SOS compensated PAT image. (d) SBR comparison between uncompensated PAT and SOS + fluence compensated PAT. (e) CBR comparison between uncompensated PAT and SOS + fluence compensated PAT.

Lastly, resolution measurements were made for inclusions 1, 2, and 3 (see Table 3). The resolution was measured using one of two methods, either peak-to-peak or full-width-half-maximum (FWHM), depending on the shape of the inclusion profile. If the profile of the inclusion contained two Gaussian-like peaks, the peak-to-peak method was used (see the UST reflection profile in Fig. 5d). If the profile was a single Gaussian-like peak, the FWHM method was used (see the PAT + Fluence profile in Fig. 5d). The method that was used is also denoted in Table 3. Additionally, the error was taken as defined in Eq. (5).

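$$\mathrm{Error} = \left| d_{\mathrm{true}} - d_{\mathrm{measured}} \right| \tag{5}$$

where the error is the absolute value (|…|) of the difference between the true size $d_{\mathrm{true}}$ and the measured size $d_{\mathrm{measured}}$.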
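The two size measurements and the error of Eq. (5) can be sketched as follows. This is an illustrative implementation under stated assumptions: the function names are hypothetical, the half-maximum crossings are found by linear interpolation, and the peak-to-peak method takes the two largest local maxima. The true inclusion diameter of 3 mm is the value given for the blood inclusions in Fig. 1b.

```python
def _crossing(x, y, i0, i1, level):
    """Linearly interpolate the position where y crosses level between i0 and i1."""
    return x[i0] + (level - y[i0]) * (x[i1] - x[i0]) / (y[i1] - y[i0])

def fwhm(x, y):
    """Full width at half maximum of a single-peaked profile."""
    half = max(y) / 2.0
    peak = y.index(max(y))
    left = peak
    while left > 0 and y[left] >= half:
        left -= 1                       # walk left until below half maximum
    right = peak
    while right < len(y) - 1 and y[right] >= half:
        right += 1                      # walk right until below half maximum
    x_left = _crossing(x, y, left, left + 1, half) if y[left] < half else x[left]
    x_right = _crossing(x, y, right - 1, right, half) if y[right] < half else x[right]
    return x_right - x_left

def peak_to_peak(x, y):
    """Distance between the two largest local maxima of a double-peaked profile."""
    peaks = [i for i in range(1, len(y) - 1) if y[i - 1] < y[i] >= y[i + 1]]
    if len(peaks) < 2:
        raise ValueError("profile does not contain two peaks")
    first, second = sorted(sorted(peaks, key=lambda i: y[i], reverse=True)[:2])
    return x[second] - x[first]

def size_error(true_size, measured_size):
    """Eq. (5): absolute difference between true and measured size."""
    return abs(true_size - measured_size)

# Inclusion 1, UST reflection column of Table 3: 3 mm true size, 2.89 mm measured.
print(round(size_error(3.0, 2.89), 2))
```

Applied to the first UST reflection entry in Table 3, the error evaluates to 0.11 mm, which agrees with the tabulated value.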

Table 3.

The sizes of visualized inclusions in SOS compensated UST reflection, fluence compensated PAT, and fluence and SOS compensated PAT. The inclusion sizes were measured either by calculating the distance between two apparent peaks or by using full width half maximum (FWHM) in cases where the intensity profile has a single peak.

| Inclusion | UST Reflection: Calculation Method | UST Reflection: Measured [mm] | UST Reflection: Error [mm] | PAT + Fluence: Calculation Method | PAT + Fluence: Measured [mm] | PAT + Fluence: Error [mm] | PAT + SOS + Fluence: Calculation Method | PAT + SOS + Fluence: Measured [mm] | PAT + SOS + Fluence: Error [mm] |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Peak-to-Peak | 2.89 | 0.11 | FWHM | 2.29 | 0.71 | Peak-to-Peak | 2.2 | 0.80 |
| 2 | Peak-to-Peak | 2.93 | 0.07 | FWHM | 2.04 | 0.96 | FWHM | 3.27 | 0.27 |
| 3 | Peak-to-Peak | 2.84 | 0.16 | FWHM | 2.39 | 0.61 | Peak-to-Peak | 2.1 | 0.9 |

A closer look at the results presented in Table 3 shows that the inclusion size measurements for PAT + Fluence and PAT + SOS + Fluence are generally comparable. This could lead one to think that SOS compensation did little to improve the image. However, we must consider what has been presented in Fig. 4 and Fig. 5. In Fig. 4b, all the inclusions initially appear distorted, with a large and bright central artifact. This is attributed to the homogeneous SOS assumption, which makes the inclusion appear artificially small when considering the central artifact. This is later exacerbated by the information encoded in the numerical phantom’s structure (see Fig. 3d). Anything within the region defined as the blood inclusion will be amplified more than signals outside of that region. Therefore, only the central artifact gets amplified, making the inclusions in the PAT + Fluence image look artificially comparable to those in the PAT + SOS + Fluence images.

4. Discussion

The SOS and fluence compensation algorithms described in the previous sections are promising and demonstrate potential for future applications in deep and heterogeneous tissue imaging. In such imaging applications, the depth dependence of the fluence is exacerbated and therefore requires correction. Our algorithm has shown the ability to compensate for more realistic heterogeneous conditions by using the information from UST SOS images to take into account the phantom’s structure. As previously discussed, the SBR and CBR are increased by ∼20 and ∼30 dB, respectively, for the deepest object, thereby allowing us to compare signals more equitably regardless of their depth.

The results presented show promise for future applications in quantitative PAT (qPAT) and, by extension, spectroscopic PAT (sPAT) imaging. For qPAT and sPAT it is critical to compensate for light fluence in order to improve the accuracy of various measurements, such as oxygen saturation or the concentration of exogenous and endogenous contrast agents [7]. Contrast enhanced molecular imaging with PAI requires quantitative measurements of the accumulated quantities within the tissue [42,43]. In other words, localizing the presence of accumulated contrast agents is an important step towards achieving molecular specific imaging for potential differential diagnosis and staging of pathologies such as cancer. Quantitative images representing the amount of contrast agent detected at various depths are critical, yet very challenging to obtain, mainly due to tissue heterogeneity for light diffusion. This can be seen in the results section above, where our heterogeneous phantom contains three blood inclusions, all of which had the same concentration. The resulting uncompensated image shows different amplitudes, SBR, and CBR for each inclusion. Once corrected, the inclusions become closer in amplitude, SBR, and CBR. This holds promise for testing the algorithm with contrast enhanced molecular PA imaging, where we would anticipate improved quantitative measurements.

As discussed above, fluence compensation holds promise for future applications in qPAT and sPAT due to its ability to correct for the wavelength and depth dependence of fluence. This would aid in quantitative measurements like sO2 and the localization of exogenous contrast agents [[3], [4], [5]]. However, other methods have been developed to improve the accuracy of these quantitative estimates and spectroscopic imaging in general. For a more complete review, we would point the reader to [5,44]. Recently, machine learning approaches have been studied to help overcome some of the limitations of qPAT and sPAT. One such method is learned spectral decoloring (LSD) [45], which looks to bridge the gap between in-silico models and experimental in-vitro and in-vivo data. This is done by training the machine learning model on in-silico data using only the spectral photoacoustic data and the known oxygenation values. Once the model is trained, it can be applied to in-vitro and in-vivo data. Currently, this method holds promise for increasing the accuracy of qPAT measurements, since once the model is trained it can be faster than typical linear unmixing techniques. However, it still must be shown how well the method generalizes. Further, the LSD method does not use an acoustic forward model to generate images and may have difficulty separating signal amplitude information. For example, if two blood vessels are the same distance away from the transducer but have different oxygenation levels, their amplitudes will coincide in the signal time trace, thereby introducing errors in the resulting sO2 measurements. Another method is to utilize Beer’s Law to calculate the local optical fluence [12,46]. However, as touched upon in Section 1, while Beer’s Law allows for faster fluence calculations and a simpler implementation for calculating the local optical fluence, it ignores the effects of heterogeneous tissue properties.
As discussed at the end of this section, a topic for future work would be to measure the error between Beer’s Law and Monte Carlo light simulations on sO2 measurements. This would look at the computational and accuracy trade-offs between the two to better determine in what situations either would be used. Lastly, statistical approaches have been researched that assume unaided or blind spectral unmixing [[47], [48], [49]]. This eliminates a strong assumption in qPAT for in-vivo measurements, since one would not truly know the optical properties for a given medium. Currently, if the method described in this paper were applied, informed judgments would have to be made on the a priori optical property distribution. However, such statistical unmixing methods have been shown to glean the number of primary optical absorbers rather than the specific absorber concentration levels.

The current algorithm has been developed with breast and other soft tissues in mind. Therefore, one primary limitation is the presence of bone or other dense objects embedded in the medium. Such scenarios have not been investigated and therefore could introduce errors with SOS reconstruction. Thereby, the segmentation algorithm could introduce further errors in the light model and resulting fluence compensated PAT image.

Care must be taken when generating the numerical model used to calculate the fluence map. We are limited by the resolution of the image used for segmentation, i.e. acoustic reflection, sound speed, or attenuation. For the current USPAT system, these values are 1 mm for acoustic reflection and 4 mm for acoustic SOS and attenuation [50]. In this work, the SOS image was used for segmentation because the structures in it are continuously filled. However, segmentation of the reflection mode image to extract features below the resolution of the current SOS image would result in a more complex and accurate medium geometry. It is also an ongoing effort to expand and improve the segmentation algorithm in general, in the hopes of allowing automatic soft tissue differentiation, which would dramatically reduce the effort required on the part of the researcher to run the fluence compensation algorithm. For future PAT breast imaging applications, it is feasible to differentiate the breast tissue into three primary tissue types (fat, glandular, and cancerous) using the SOS values alone [50,51]. Once the tissues are characterized and segmented using SOS, a look-up table of optical properties from literature for a given tissue type enables generation of a robust light diffusion model. While the literature values for absorption and scattering may not match up exactly with those found in the body, the general premise is to improve the PAT images relative to their uncompensated or homogeneous fluence compensated counterparts.

There are numerical issues when compensating for light fluence in deeper tissues. In large objects, the fluence will be very low at greater depths within the medium. Therefore, the inverted fluence map contains very large values at those depths, which amplify the noise present in the PAT image. Current methods to minimize this involve adding a positive regularization parameter to the fluence map before inversion [52]. However, this is an ongoing effort as we look to apply the algorithm to large media.
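A minimal sketch of this regularized inversion is given below, assuming the PA image and fluence map are arrays on the same grid. Scaling the regularization parameter relative to the maximum fluence (`eps_rel`) is an assumption made here for illustration, as are the toy exponential fluence profile and the constant noise floor.

```python
import numpy as np


def compensate_fluence(pa_image, fluence, eps_rel=1e-2):
    """Divide a PA image by a fluence map with a positive offset added first.

    eps_rel is a hypothetical tuning parameter: the offset equals eps_rel times
    the maximum fluence, so the gain never exceeds 1 / (eps_rel * max fluence).
    """
    fluence = np.asarray(fluence, dtype=float)
    eps = eps_rel * fluence.max()
    return np.asarray(pa_image, dtype=float) / (fluence + eps)


depth = np.linspace(0.0, 50.0, 6)      # depth in mm
fluence = np.exp(-0.2 * depth)         # toy exponential decay, illustrative only
noise = np.full_like(depth, 1e-3)      # constant noise floor in the PA image

naive = noise / fluence                # unregularized inversion amplifies noise
regularized = compensate_fluence(noise, fluence)
```

In this toy case the unregularized noise grows without bound as the fluence decays, whereas the regularized result stays below `noise / eps`. The cost is that true signal at depth is under-corrected, so the choice of the parameter trades noise suppression against quantitative accuracy.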

The proposed algorithm takes advantage of the natural synergy between UST and PAT to enhance PA images and compensate for the effects of SOS and optical fluence without additional hardware overhead when compared to typical USPAT systems. This allows any USPAT system that can acquire transmission US images to enhance its PA images, maintaining the relatively low cost and increasing the accessibility of USPAT. The primary limitation remains the computational cost of Monte Carlo based methods for fluence calculations. While GPU-accelerated methods have improved the computational speed of fluence simulations, sPAT would still need an additional simulation for every wavelength used. Otherwise, certain approximations would have to be made, such as wavelength independent fluence, where the fluence is assumed to be constant across a given set of wavelengths. A subject for further investigation would be to consider the accuracy versus computational cost of Beer's Law and Monte Carlo methods when compensating for fluence in sPAT. For example, such a comparison can be made by looking at sO2 maps from uncompensated sPAT, sPAT using fluence compensation with Beer's Law, and sPAT using fluence compensation with the Monte Carlo method.
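To illustrate why the wavelength dependence of the fluence matters for sO2, the following sketch performs two-wavelength linear unmixing at a single synthetic pixel. It is not the method evaluated in this work: the extinction matrix `E`, the fluence values, and the function name are hypothetical placeholders, and the Grüneisen parameter is assumed constant and omitted.

```python
import numpy as np

# Hypothetical extinction matrix: rows = wavelengths, columns = (HbO2, Hb).
# Placeholder numbers for illustration; use tabulated spectra in practice.
E = np.array([[1.0, 3.0],
              [2.0, 1.5]])


def estimate_so2(pa, fluence=None):
    """Linear unmixing of a two-wavelength PA measurement at one pixel.

    pa      : PA amplitudes, one per wavelength
    fluence : fluence per wavelength (None means no compensation)
    """
    pa = np.asarray(pa, dtype=float)
    if fluence is not None:
        pa = pa / np.asarray(fluence, dtype=float)
    c_hbo2, c_hb = np.linalg.solve(E, pa)
    return c_hbo2 / (c_hbo2 + c_hb)


true_conc = np.array([0.8, 0.2])    # synthetic pixel with sO2 = 0.8
fluence = np.array([0.30, 0.25])    # toy wavelength dependent fluence at depth
pa = fluence * (E @ true_conc)      # simulated PA measurement

so2_uncompensated = estimate_so2(pa)
so2_wavelength_independent = estimate_so2(pa, fluence=np.full(2, fluence.mean()))
so2_compensated = estimate_so2(pa, fluence=fluence)
```

In this single-pixel toy model, a wavelength independent fluence only rescales both measurements by the same factor, so it yields the same sO2 as no compensation at all. Only the per-wavelength fluence recovers the true value, which is why sPAT needs one fluence estimate per wavelength, whether from Beer's Law or from Monte Carlo simulation.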

5. Conclusions

PAT is a powerful molecular and functional imaging modality whose imaging depth and quantitative accuracy are currently limited by the depth dependence of optical fluence in diffusive media. While acoustic compensation of PAT images improves image quality in terms of SBR and CBR, fluence compensation produced the most dramatic improvement. In our phantom studies, deeper objects saw the greatest improvements in SBR and CBR, which suggests potentially large gains for deep tissue imaging, where signals from deeper, noisier regions may be better recovered. These encouraging results also pave the way for sPAT experiments, which will be the subject of future work.

Declaration of Competing Interest

The authors declare that there are no conflicts of interest.

Acknowledgement

This work is partially supported by the Department of Defense, Breast Cancer Program, through grant number W81XWH-18-1-003.

We would also like to thank Dr. Karl Kratkiewicz, who provided numerous insights into our work as well as invaluable feedback for the manuscript.

Biography

Alexander Pattyn is a PhD student in the Department of Biomedical Engineering at Wayne State University. Alex received his B.S. degree in Biomedical Physics from Wayne State University in 2017. During this time he was introduced to photoacoustic and ultrasound imaging through his research with Dr. Mehrmohammadi, which he has continued in his PhD program. Throughout his graduate studies Alexander has been the recipient of numerous academic awards and scholarships, including the Thomas Rumble Fellowship and Graduate Professional Scholarship. His research interests include quantitative ultrasound and photoacoustic tomography imaging for biomedical applications such as breast cancer imaging.

References

- 1. Tam A.C. Applications of photoacoustic sensing techniques. Rev. Mod. Phys. 1986;58:381–431. doi: 10.1103/RevModPhys.58.381.

- 2. Wang L.V. Tutorial on photoacoustic microscopy and computed tomography. IEEE J. Sel. Top. Quantum Electron. 2008;14:171–179. doi: 10.1109/JSTQE.2007.913398.

- 3. Naser M.A., Sampaio D.R.T., Munoz N.M., Wood C.A., Mitcham T.M., Stefan W., Sokolov K.V., Pavan T.Z., Avritscher R., Bouchard R.R. Improved photoacoustic-based oxygen saturation estimation with SNR-regularized local fluence correction. IEEE Trans. Med. Imaging. 2019;38:561–571. doi: 10.1109/TMI.2018.2867602.

- 4. Mitcham T., Taghavi H., Long J., Wood C., Fuentes D., Stefan W., Ward J., Bouchard R. Photoacoustic-based sO2 estimation through excised bovine prostate tissue with interstitial light delivery. Photoacoustics. 2017;7:47–56. doi: 10.1016/j.pacs.2017.06.004.

- 5. Li M., Tang Y., Yao J. Photoacoustic tomography of blood oxygenation: a mini review. Photoacoustics. 2018;10:65–73. doi: 10.1016/j.pacs.2018.05.001.

- 6. Maslov K., Sivaramakrishnan M., Zhang H.F., Stoica G., Wang L.V. Technical considerations in quantitative blood oxygenation measurement using photoacoustic microscopy in vivo. In: Oraevsky A.A., Wang L.V., editors. San Jose, CA. 2006. p. 60860R.

- 7. Mehrmohammadi M., Joon Yoon S., Yeager D., Emelianov S.Y. Photoacoustic imaging for cancer detection and staging. CMI. 2013;2:89–105. doi: 10.2174/2211555211302010010.

- 8. Weidner N., Semple J.P., Welch W.R., Folkman J. Tumor angiogenesis and metastasis — correlation in invasive breast carcinoma. N. Engl. J. Med. 1991;324:1–8. doi: 10.1056/NEJM199101033240101.

- 9. Hockel M., Vaupel P. Tumor hypoxia: definitions and current clinical, biologic, and molecular aspects. JNCI J. Natl. Cancer Inst. 2001;93:266–276. doi: 10.1093/jnci/93.4.266.

- 10. Treeby B.E., Zhang E.Z., Cox B.T. Photoacoustic tomography in absorbing acoustic media using time reversal. Inverse Probl. 2010;26 doi: 10.1088/0266-5611/26/11/115003.

- 11. Xu M., Wang L.V. Universal back-projection algorithm for photoacoustic computed tomography. Phys. Rev. E. 2005;71 doi: 10.1103/PhysRevE.71.016706.

- 12. Park S., Oraevsky A.A., Su R., Anastasio M.A. Compensation for non-uniform illumination and optical fluence attenuation in three-dimensional optoacoustic tomography of the breast. In: Oraevsky A.A., Wang L.V., editors. Photons Plus Ultrasound: Imaging and Sensing 2019. SPIE; San Francisco, United States: 2019. p. 180.

- 13. Kirillin M., Perekatova V., Turchin I., Subochev P. Fluence compensation in raster-scan optoacoustic angiography. Photoacoustics. 2017;8:59–67. doi: 10.1016/j.pacs.2017.09.004.

- 14. Bu Shuhui, Liu Zhenbao, Shiina T., Kondo K., Yamakawa M., Fukutani K., Someda Y., Asao Y. Model-based reconstruction integrated with fluence compensation for photoacoustic tomography. IEEE Trans. Biomed. Eng. 2012;59:1354–1363. doi: 10.1109/TBME.2012.2187649.

- 15. Jacques S.L., Pogue B.W. Tutorial on diffuse light transport. J. Biomed. Opt. 2008;13 doi: 10.1117/1.2967535.

- 16. Jacques S., Li T. 2013. Monte Carlo Simulations of Light Transport in 3D Heterogenous Tissues (mcxyz.c). See http://omlc.org/software/mc/mcxyz/index.html (Accessed 30 January 2017).

- 17. Zhu C., Liu Q. Review of Monte Carlo modeling of light transport in tissues. J. Biomed. Opt. 2013;18 doi: 10.1117/1.JBO.18.5.050902.

- 18. Fang Q., Kaeli D.R. Accelerating mesh-based Monte Carlo method on modern CPU architectures. Biomed. Opt. Express. 2012;3:3223. doi: 10.1364/BOE.3.003223.

- 19. Boyd N.F., Rommens J.M., Vogt K., Lee V., Hopper J.L., Yaffe M.J., Paterson A.D. Mammographic breast density as an intermediate phenotype for breast cancer. Lancet Oncol. 2005;6:798–808. doi: 10.1016/S1470-2045(05)70390-9.

- 20. Harvey J.A., Bovbjerg V.E. Quantitative assessment of mammographic breast density: relationship with breast cancer risk. Radiology. 2004;230:29–41. doi: 10.1148/radiol.2301020870.

- 21. Muštra M., Grgić M., Delač K. Breast density classification using multiple feature selection. Automatika. 2012;53:362–372. doi: 10.7305/automatika.53-4.281.

- 22. Kovacs L., Eder M., Hollweck R., Zimmermann A., Settles M., Schneider A., Endlich M., Mueller A., Schwenzer-Zimmerer K., Papadopulos N.A., Biemer E. Comparison between breast volume measurement using 3D surface imaging and classical techniques. Breast. 2007;16:137–145. doi: 10.1016/j.breast.2006.08.001.

- 23. Duric N., Littrup P., Li C., Roy O., Schmidt S., Seamans J., Wallen A., Bey-Knight L. Whole breast tissue characterization with ultrasound tomography. In: Bosch J.G., Duric N., editors. Orlando, Florida, United States. 2015. p. 94190G.

- 24. Li C., Duric N., Littrup P., Huang L. In vivo breast sound-speed imaging with ultrasound tomography. Ultrasound Med. Biol. 2009;35:1615–1628. doi: 10.1016/j.ultrasmedbio.2009.05.011.

- 25. Nebeker J., Nelson T.R. Imaging of sound speed using reflection ultrasound tomography. J. Ultrasound Med. 2012;31:1389–1404. doi: 10.7863/jum.2012.31.9.1389.

- 26. Roy O., Jovanović I., Hormati A., Parhizkar R., Vetterli M. Sound speed estimation using wave-based ultrasound tomography: theory and GPU implementation. In: D’hooge J., McAleavey S.A., editors. San Diego, California, USA. 2010. p. 76290J.

- 27. Alijabbari N., Alshahrani S.S., Pattyn A., Mehrmohammadi M. Photoacoustic tomography with a ring ultrasound transducer: a comparison of different illumination strategies. Appl. Sci. 2019;9:3094. doi: 10.3390/app9153094.

- 28. Alshahrani S., Pattyn A., Alijabbari N., Yan Y., Anastasio M., Mehrmohammadi M. IEEE. 2018. The effectiveness of the omnidirectional illumination in full-ring photoacoustic tomography; pp. 1–4.

- 29. Alshahrani S.S., Yan Y., Alijabbari N., Pattyn A., Avrutsky I., Malyarenko E., Poudel J., Anastasio M., Mehrmohammadi M. All-reflective ring illumination system for photoacoustic tomography. J. Biomed. Opt. 2019;24:1. doi: 10.1117/1.JBO.24.4.046004.

- 30. Alshahrani S.S., Yan Y., Malyarenko E., Avrutsky I., Anastasio M.A., Mehrmohammadi M. An advanced photoacoustic tomography system based on a ring geometry design. In: Duric N., Byram B.C., editors. Medical Imaging 2018: Ultrasonic Imaging and Tomography. SPIE; Houston, United States: 2018. p. 31.

- 31. Laser Institute of America. 2014. American National Standard for Safe Use of Lasers ANSI Z136.1-2014.

- 32. Bohndiek S.E., Bodapati S., Van De Sompel D., Kothapalli S.-R., Gambhir S.S. Development and application of stable phantoms for the evaluation of photoacoustic imaging instruments. PLoS One. 2013;8 doi: 10.1371/journal.pone.0075533.

- 33. Vogt W.C., Jia C., Wear K.A., Garra B.S., Joshua Pfefer T. Biologically relevant photoacoustic imaging phantoms with tunable optical and acoustic properties. J. Biomed. Opt. 2016;21 doi: 10.1117/1.JBO.21.10.101405.

- 34. Spirou G.M., Oraevsky A.A., Vitkin I.A., Whelan W.M. Optical and acoustic properties at 1064 nm of polyvinyl chloride-plastisol for use as a tissue phantom in biomedical optoacoustics. Phys. Med. Biol. 2005;50:N141–N153. doi: 10.1088/0031-9155/50/14/N01.

- 35. Pratt R.G. Seismic waveform inversion in the frequency domain, Part 1: theory and verification in a physical scale model. Geophysics. 1999;64:888–901. doi: 10.1190/1.1444597.

- 36. Pratt R.G., Shipp R.M. Seismic waveform inversion in the frequency domain, Part 2: fault delineation in sediments using crosshole data. Geophysics. 1999;64:902–914. doi: 10.1190/1.1444598.

- 37. Pratt R.G., Huang L., Duric N., Littrup P. In: Sound-Speed and Attenuation Imaging of Breast Tissue Using Waveform Tomography of Transmission Ultrasound Data. Hsieh J., Flynn M.J., editors. 2007. p. 65104S.

- 38. Brenders A.J., Pratt R.G. Full waveform tomography for lithospheric imaging: results from a blind test in a realistic crustal model. Geophys. J. Int. 2007;168:133–151. doi: 10.1111/j.1365-246X.2006.03156.x.

- 39. Jose J., Willemink R.G.H., Steenbergen W., Slump C.H., van Leeuwen T.G., Manohar S. Speed-of-sound compensated photoacoustic tomography for accurate imaging. Med. Phys. 2012;39:7262–7271. doi: 10.1118/1.4764911.

- 40. Modgil D., Anastasio M.A. Image reconstruction in photoacoustic tomography with variable speed of sound using a higher-order geometrical acoustics approximation. J. Biomed. Opt. 2010;15:9. doi: 10.1117/1.3333550.

- 41. Bezanson J., Edelman A., Karpinski S., Shah V.B. Julia: a fresh approach to numerical computing. SIAM Rev. 2017;59:65–98. doi: 10.1137/141000671.

- 42. Luke G.P., Yeager D., Emelianov S.Y. Biomedical applications of photoacoustic imaging with exogenous contrast agents. Ann. Biomed. Eng. 2012;40:422–437. doi: 10.1007/s10439-011-0449-4.

- 43. Kruger R.A., Lam R.B., Reinecke D.R., Del Rio S.P., Doyle R.P. Photoacoustic angiography of the breast. Med. Phys. 2010;37:6096–6100. doi: 10.1118/1.3497677.

- 44. Cao F., Qiu Z., Li H., Lai P. Photoacoustic imaging in oxygen detection. Appl. Sci. 2017;7:1262. doi: 10.3390/app7121262.

- 45. Gröhl J., Kirchner T., Adler T.J., Hacker L., Holzwarth N., Hernández-Aguilera A., Herrera M.A., Santos E., Bohndiek S.E., Maier-Hein L. Learned spectral decoloring enables photoacoustic oximetry. Sci. Rep. 2021;11:6565. doi: 10.1038/s41598-021-83405-8.

- 46. Kim S., Chen Y.-S., Luke G.P., Emelianov S.Y. In vivo three-dimensional spectroscopic photoacoustic imaging for monitoring nanoparticle delivery. Biomed. Opt. Express. 2011;2:2540. doi: 10.1364/BOE.2.002540.

- 47. Glatz J., Deliolanis N.C., Buehler A., Razansky D., Ntziachristos V. Blind source unmixing in multi-spectral optoacoustic tomography. Opt. Express. 2011;19:3175. doi: 10.1364/OE.19.003175.

- 48. Ntziachristos V., Razansky D. Molecular imaging by means of multispectral optoacoustic tomography (MSOT). Chem. Rev. 2010;110:2783–2794. doi: 10.1021/cr9002566.

- 49. Patwardhan S.V., Culver J.P. Quantitative diffuse optical tomography for small animals using an ultrafast gated image intensifier. J. Biomed. Opt. 2008;13 doi: 10.1117/1.2830656.

- 50. Duric N., Littrup P., Poulo L., Babkin A., Pevzner R., Holsapple E., Rama O., Glide C. Detection of breast cancer with ultrasound tomography: first results with the Computed Ultrasound Risk Evaluation (CURE) prototype. Med. Phys. 2007;34:773–785. doi: 10.1118/1.2432161.

- 51. Sak M., Duric N., Littrup P., Bey-Knight L., Ali H., Vallieres P., Sherman M.E., Gierach G.L. Using speed of sound imaging to characterize breast density. Ultrasound Med. Biol. 2017;43:91–103. doi: 10.1016/j.ultrasmedbio.2016.08.021.

- 52. Cox B.T., Arridge S.R., Köstli K.P., Beard P.C. Two-dimensional quantitative photoacoustic image reconstruction of absorption distributions in scattering media by use of a simple iterative method. Appl. Opt. 2006;45:1866. doi: 10.1364/AO.45.001866.