Abstract

The overall impact of public health prevention interventions relies not only on the average efficacy of an intervention, but also on the successful adoption, implementation, and maintenance (AIM) of that intervention. In this study, we aim to understand the dynamics that regulate AIM of organizational level intervention programs. We focus on two well-documented obesity prevention interventions, implemented in food carry-outs and stores in low-income urban areas of Baltimore, Maryland, which aimed to improve dietary behaviour for adults by providing access to healthier foods and point-of-purchase promotions. Building on data from field observations, in-depth interviews, and data discussed in previous publications, as well as the strategy and organizational behaviour literature, we developed a system dynamics model of the key processes of AIM. With simulation analysis, we show that several reinforcing mechanisms spanning stakeholder motivation, communications, and implementation quality and costs can turn small changes in the process of AIM into big differences in the overall impact of the intervention. Specifically, small changes in the allocation of resources to communication with the intervention’s stakeholders could have a nonlinear long-term impact if those additional resources can turn stakeholders into allies of the intervention, reducing erosion rates and enhancing sustainability. We show how the dynamics surrounding communication, motivation, and erosion can create significant heterogeneity in the overall impact of otherwise similar interventions. Therefore, careful monitoring of how those dynamics unfold, and timely adjustments to keep the intervention on track, are critical for successful implementation and maintenance.

Keywords: Obesity prevention, intervention, sustainability of implementation, motivation, communication, system dynamics, simulation, process evaluation

1. Introduction

Variation in the successful implementation and maintenance of public health prevention interventions depends, in part, on the organizational dynamics unfolding during and after implementation [1]. The overall goal of this paper is to enhance our understanding of organizational dynamics that impact the effectiveness of obesity prevention interventions, with implications for multi-stakeholder organizational programs in general. Through case-based simulation modelling of complex systems [2], this study contributes to our understanding of the dynamics of program adoption, implementation, and maintenance (AIM) and thus helps explain why some instances of obesity prevention and treatment interventions prove more effective than others. Specifically, using a simulation model grounded in case data we show how small changes in an intervention can make the difference between failure and success and make the interventions sustainable.

1.1. Dynamics of adoption, implementation, and maintenance

The efficacy of many lifestyle interventions aimed at obesity and related chronic diseases such as diabetes is well established [3]. Overall effectiveness of these interventions, however, relies not only on the average efficacy of the intervention, but also on the successful AIM of each instance of that intervention (i.e., each program or organization level instance of intervention) within the responsible organizational and community context [4]. In practice, much variability in overall effectiveness of interventions arises from variations in AIM. Evidence from a few controlled trials of multiple programs of the same intervention suggests significant variations across programs are common [5, 6].

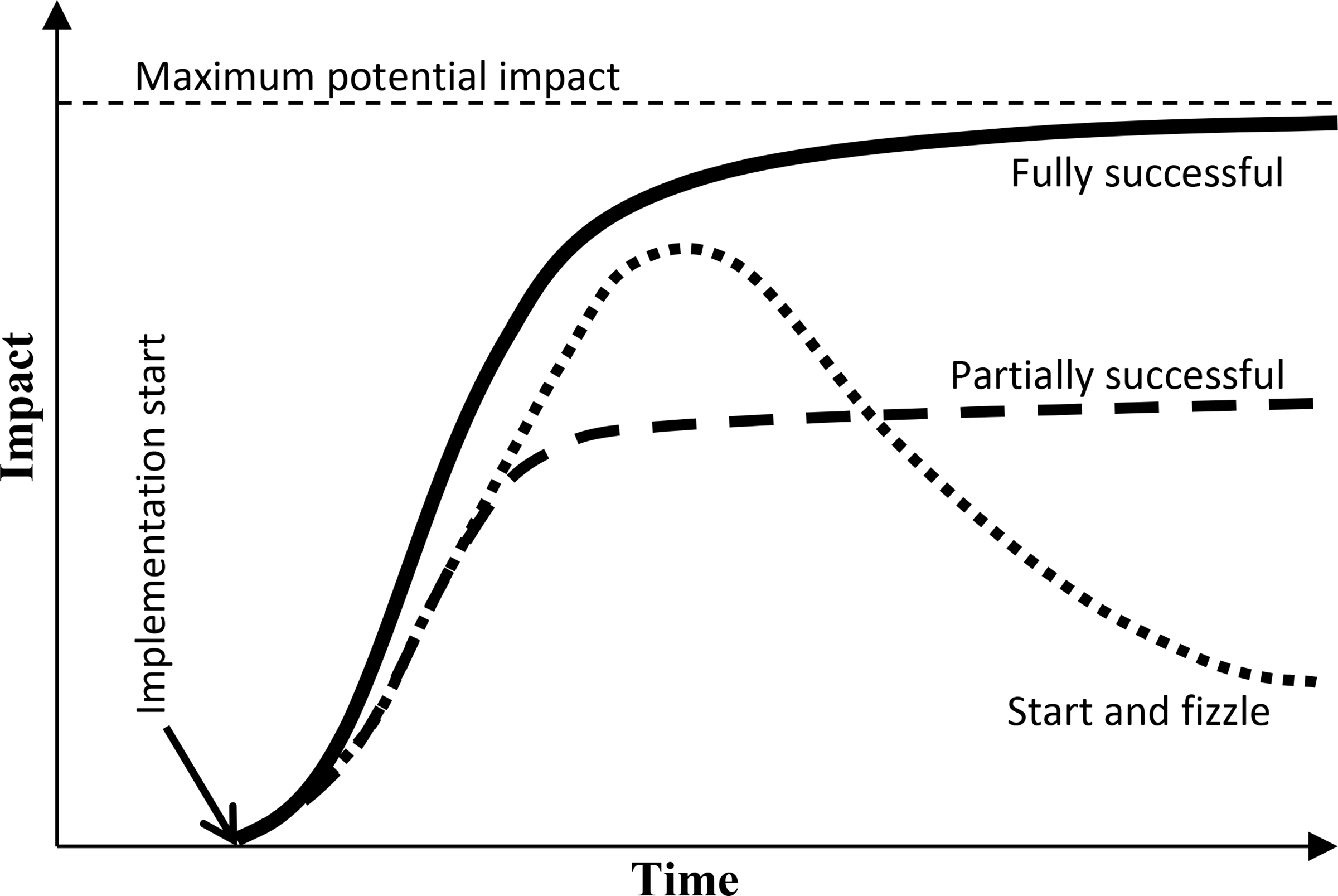

Figure 1 reflects how multiple instances of the same intervention (i.e., same inherent efficacy) applied to similar population groups (i.e., similar reach) can show different trajectories of overall effectiveness due to their varying levels of AIM.

Figure 1. Potential program impact trajectories.

For example, one can imagine differences in the implementation process that lead one organization to receive early technical assistance while the other receives none. In the absence of that support, the second organization fails to develop the required knowledge and capabilities, does not receive any positive feedback from its effort, and thus loses momentum. Such mechanisms could lead to significantly diverse results across different instances of AIM for the same intervention. Understanding the sources of variation in AIM is therefore central to enhancing the effectiveness of existing interventions and designing more effective new interventions.

Common effectiveness research methods, such as randomized controlled trials (RCTs), usually focus on measuring efficacy and therefore try to minimize the program variations across AIM by selecting motivated, resource rich, and well-trained organizational participants. Therefore, by design, RCTs may indeed exclude from analysis the very factors that explain important variations in actual effectiveness of different programs [7–9]. These shortcomings have motivated recent interest in more holistic frameworks to evaluate interventions on dimensions other than efficacy alone (e.g., [10–14]). Even though there have been several models and frameworks for implementation of obesity prevention interventions, existing research does not explicitly capture the feedback loops between key variables in the system and does not attempt to quantify the key mechanisms of AIM (see Supplementary Section S1 for more discussion on AIM).

1.2. Building on the strategy and organizational behaviour literature

At their core, AIM processes are organizational, so theories in strategy and organizational behaviour can inform the effectiveness of chronic disease interventions. Specifically, the strategy literature shows that differences in configurations of organizational resources and capabilities explain much of the heterogeneity in organizational performance [15–17]. Getting to successful configurations of resources is a complex process fraught with many pitfalls, in which some organizations succeed while others fail [18–20]. Building on the strategy literature, we look for organizational resources and capabilities that are instrumental in the AIM processes of obesity prevention interventions.

A second insight from the organizational literature involves the dynamic trade-offs in building alternative resources. These trade-offs increase the risk of failure in many settings [21]. Organizations are prone to doing what they know best and ignoring newly emerging opportunities [22, 23]. They also routinely under-value investments with long-term payoffs [24, 25]. For instance, empirical studies provide strong support for many quality and process improvement programs [26, 27], yet organizations often fail to fully realize these benefits because resources are withdrawn from the program before full results are observed, initial enthusiasm overwhelms the training capacity needed to keep the program effective, or, in seeking short-term gains, the system is overloaded with demand and pushed into a “firefighting” mode of operation [28–31].

Similar to quality and process improvement initiatives, many health care interventions may be beneficial over the long run, but require initial investments and delays before benefits materialize. Therefore, we use insights from the organizational literature to design our study of obesity prevention interventions. Specifically, we ask: What are the mechanisms for building and sustaining the resources central to an intervention’s effectiveness? What are the common failure modes that derail successful development and maintenance of those resources? What are the main leverage points for improving the chances of success over a program’s life cycle?

Finally, by explicitly considering the AIM of programs, this study builds on the current public health intervention assessment methods such as RE-AIM (reach, effectiveness, adoption, implementation, and maintenance) [32–35] by using system dynamics modelling to study dynamics of program success and failure. This perspective, combined with model-based experimentation, allows us to develop more holistic theory, evaluate existing and new programs, and provide more operational recommendations.

1.3. Dynamic modelling and endogenous perspective

System dynamics modelling and simulation are potential tools to understand the complexity of a system and are increasingly used in public health [36–45] (see Supplementary Section S2 for more discussion about our use of the term “model”). Dynamic simulation models often take an endogenous perspective: they focus on the interactions among concepts within the boundary of the system that lead to the behaviours we are interested in understanding. This focus does not negate the importance of exogenous drivers of behaviour, but is motivated by three considerations [2]. First, the endogenous perspective often provides a richer understanding of the phenomenon, because it does not pass the explanation off to external drivers such as uncontrollable environmental factors or external decision makers. Second, the endogenous perspective brings to the forefront the interactions among various stakeholders that relate to success and failure of AIM, providing more operational guidelines for systemic alterations. Finally, endogenous dynamics allow us to tease out how otherwise similar organizations can move to different outcomes, a major focus in our study of AIM.

We not only take an endogenous perspective, but also quantify the emerging feedback mechanisms through a simulation model. The value of simulation is not to produce specific predictions, but to provide a range of likely scenarios and insights into what dynamics drive those scenarios [1]. Simulation enforces internal consistency in the resulting explanations, enables quantitative analysis of various tradeoffs, and allows for building training micro-worlds [46] to enhance stakeholders’ mutual understanding, commitment, and skills.

For discussing our modelling work, we use two concepts central to system dynamics models: 1) stock and flow variables, and 2) feedback loops. See Supplementary Section S3 for definitions and examples.
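As a rough illustration of these two concepts (not the model documented in the supplement), the following Python sketch integrates a single stock with one constant inflow and one outflow that depends on the stock itself. The function name, the monthly Euler time step, and all numbers are hypothetical and chosen only for illustration.

```python
def simulate_stock(inflow_per_month, outflow_fraction, months, initial=0.0):
    """Euler-integrate one stock with a monthly time step.

    inflow_per_month: constant inflow (e.g., components implemented per month)
    outflow_fraction: share of the stock lost each month (e.g., erosion)
    """
    stock = initial
    trajectory = [stock]
    for _ in range(months):
        # Balancing feedback: the larger the stock, the larger the outflow.
        outflow = outflow_fraction * stock
        # The stock accumulates the net flow over one time step.
        stock += inflow_per_month - outflow
        trajectory.append(stock)
    return trajectory


# Hypothetical values: 2 components implemented per month, 10% eroding per month.
trajectory = simulate_stock(inflow_per_month=2.0, outflow_fraction=0.1, months=60)
print(round(trajectory[1], 2), round(trajectory[-1], 2))
```

Because the outflow grows with the stock, this balancing loop slows accumulation until the stock levels off near `inflow_per_month / outflow_fraction` (20 in this example). A reinforcing loop would instead make a flow grow in the same direction as the stock it feeds.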

1.4. Case study, food environment interventions

The Baltimore Healthy Carry-out (BHC; February-September 2011) and Baltimore Healthy Store (BHS; January-October 2006) interventions were designed and implemented in low-income neighbourhoods of Baltimore (median household income about $20,000 [47]), where carry-out restaurants (non-franchise fast-food outlets) and corner stores are the main food sources [48, 49]. The carry-outs in the BHC intervention are similar to fast-food restaurants but have different physical layouts (limited parking and little or no seating) and lower availability of healthy food choices [47]. They often store, cook, and sell foods behind floor-to-ceiling glass partitions. Many owners may speak English as a second language. Consequently, customers do not interact much with the storeowner or the seller. Storeowners usually know what foods are popular and adjust their menu accordingly. Customers often do not have many healthy options or the choice to request a customized healthier meal [47]. Similar physical layouts and language barriers were also observed in the corner stores in the BHS intervention [50, 51].

The scope of the BHC intervention was to design the following strategies: improving menu boards and labelling to promote existing healthier items, introducing and promoting healthy sides and beverages, and introducing healthier combo meals and healthier cooking methods [52]. The aim of the BHS intervention was also to increase the availability and sales of healthier food options in local stores [50] through healthy food incentives (to minimize the financial risk of stocking healthy items), improving nutrition-related knowledge of storeowners, cultural guidelines to help storeowners build better relationships with their customers across cultural and language differences, and guidelines on the types of foods to be stocked and the places where customers could easily access those healthy items [50]. The data for the current study come from two case studies of BHC (see Supplementary Section S4 for an overview of these two cases) and from published data on the BHS intervention.

2. Methods

Figure 2 summarizes the main processes of our research method. These processes are discussed in detail below.

Figure 2. Process flow of our research method.

The five processes took place in 2, 2, 1, 3, and 6 months, respectively.

2.1. Data collection through interviews

We collected data through semi-structured interviews with the key stakeholders in two of the intervention carry-outs in the BHC, after the original intervention implementation had ended (see the first process in Figure 2). In consultation with interventionists, we selected two carry-outs with different levels of success in implementing the BHC intervention and different post-implementation status (i.e., to what extent the intervention components were still in place a year after the end of the implementation). Variations across the cases offer useful insights into how AIM processes can diverge.

Given the small number of stakeholders, we interviewed the key stakeholders, including the owners of the two carry-outs involved in the intervention, the lead interventionist, and two experts from the Baltimore City Planning Department (the two experts were familiar with the food environment in Baltimore and the BHC intervention). This resulted in seven interviews (some stakeholders were interviewed more than once) for a total duration of 335 minutes. All interviewees were informed of the purpose and procedures of the research, and assured that the information would be confidential. They signed a consent form and received compensation of $35 per hour of interview (if they accepted the payment) for their time. The study protocol was approved by the Institutional Review Board.

The interviewers used a list of 35 predefined questions and they were free to depart from the list. The list of questions covered various related categories including program history, initial steps in implementation, communication among stakeholders, trust, motivation, post-implementation processes, outputs, financial matters, success and failure instances, and customer feedback (see the questions in Supplementary Section S9).

2.2. Data analysis

The goal of data analysis was to weave together the case study and published data into a system dynamics model that provides a picture of dynamics relevant to AIM of interventions. Following standards for inductive and generative coding [53], the interview data were coded (the second process in Figure 2) by two researchers in MAXQDA 11 to extract: 1) key concepts related to perceived intervention effectiveness; 2) mechanisms of AIM; 3) time-line of events within a program; and 4) quantitative metrics where available.

In addition to the data collected from BHC, we learned about the intervention from archival data in the original report on the implementation of the intervention (extracted from [54]). We also used published interview data from seven corner stores in the BHS intervention (extracted from [51]). Note that the data from the BHS, an older intervention similar to BHC (see Section 1.4), were used as additional supporting evidence to corroborate and contextualize the main case (BHC).

2.3. Qualitative model development

The development of a qualitative model required mapping the interactions among key variables emerging from our data. Coding the interview data helped in identifying the main variables and their relationships as well as learning the mechanisms of AIM. For example, ‘perceived benefits’ (of implemented intervention components) and ‘motivation of stakeholders’ are two variables extracted from the interviews—which will be discussed in detail—and the relationship between these two variables was that ‘perceived benefits’ had a positive effect on ‘motivation of stakeholders.’ More variables and mechanisms are discussed in the following sections.

The synthesis from emerging causal relationships generated a set of dynamic hypotheses, also called a causal loop diagram [2], that provides a qualitative overview of the potentially relevant mechanisms (the third process in Figure 2). These dynamic hypotheses were refined through additional data collection (hence, the feedback arrow from the third to the first process in Figure 2). Sample model variables, interview codes, and organizational examples that illustrate the relevant mechanisms are provided in Supplementary Section S10.

2.4. Quantitative model development

The next step of analysis entailed quantifying these mechanisms into a detailed simulation model (the fourth process in Figure 2). The feedback loops from the previous step summarize the key endogenous mechanisms relevant to understanding the dynamics of AIM in our case study. However, a fully operational simulation model of the AIM dynamics required us to include additional detail in specifying each mechanism quantitatively and include various exogenous drivers, such as the amount of interventionist time available for the intervention—details including model parameters and formulations are fully documented in Supplementary Section S11. Operationalizing a conceptual model is the first test; it helps in recognizing vague concepts or areas that were not discussed during the initial data collection and development of the conceptual model. Hence, we reached out to the interventionists to both resolve such ambiguities and parameterize the model (the feedback arrow from the fourth to the first process in Figure 2).

Quantification of the causal loop diagrams into a simulation model provides a few concrete benefits. First, it generates insights drawn from a complex web of causal pathways, a task the human brain is not well-equipped to do without the assistance of computational tools [55–58]. Second, a quantitative model allows us to assess the plausibility of various dynamic hypotheses and to quantify the aggregate relationship between model variables (e.g., communication among stakeholders) and the outputs of interest (e.g., the sustainability of the intervention), which may flow through complex causal pathways.

2.5. Model testing, validation, and analysis

Finally, our quantitative model was tested and validated, and then used for analysis. We conducted various tests to build confidence in our model in understanding the endogenous dynamics of AIM. See Supplementary Section S5 for discussions about these tests.

3. Results

Here, we discuss the causal feedback loops relevant to understanding AIM that were explicated in the analysis process. Next, we show simulation results that demonstrate the key outcomes of the hypothesized mechanisms and offer insights into the sources of variation in AIM. Additional details including the simulation model (consistent with model reporting standards for replication [59]) and detailed instructions for replicating the results are provided in Supplementary Sections S11–15.

3.1. Causal feedback mechanisms

In presenting the mechanisms, we draw on the BHC and BHS examples, but provide a more generic terminology and discussion to highlight the transferability of the insights to other interventions that include multiple stakeholders. While we present some of the interview data to support our discussions, Supplementary Section S10 provides more examples of the data, and the process of translating qualitative data into codes, model variables, and mechanisms.

Intervention components, implementation, and motivation

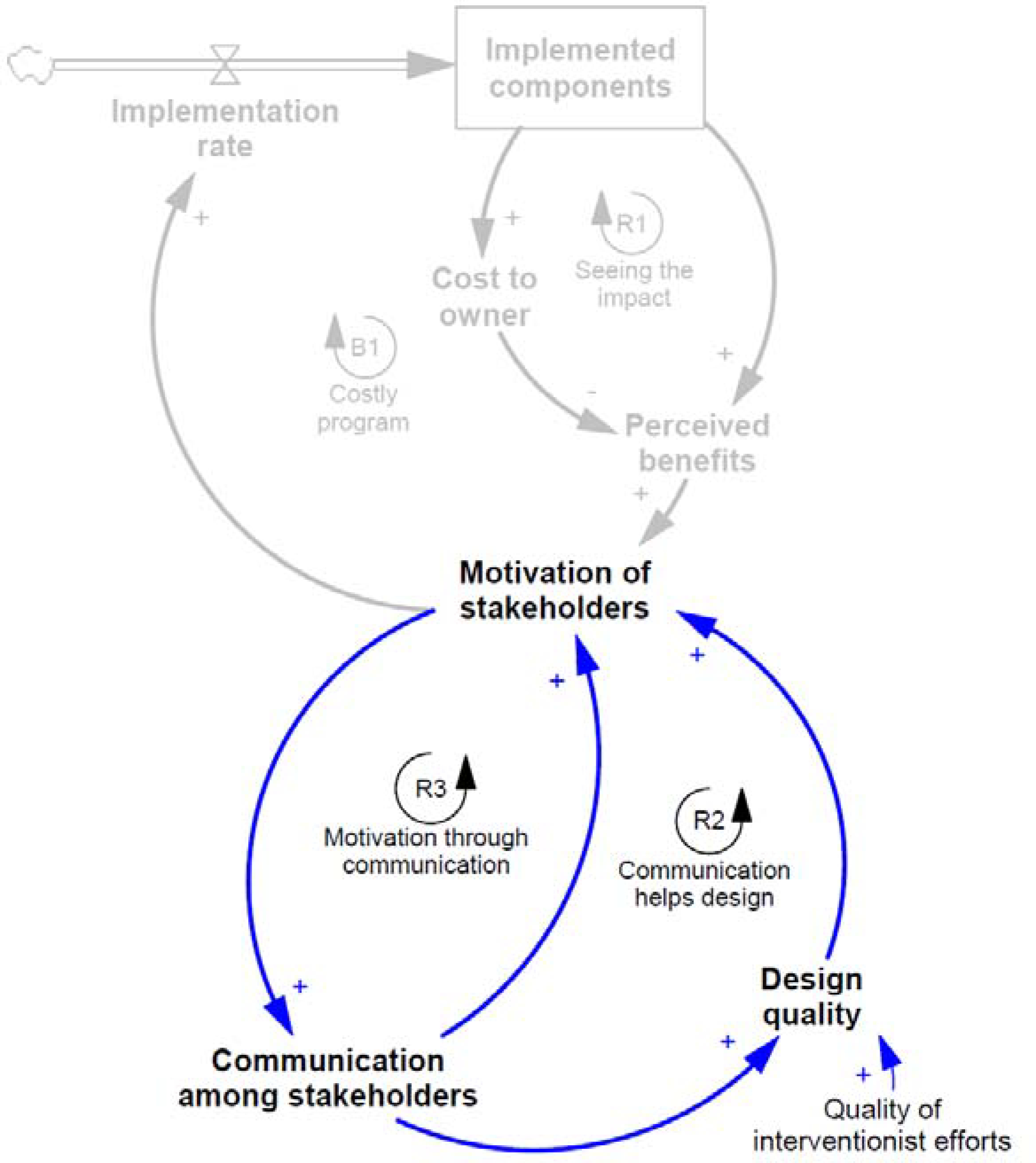

An intervention can be seen as a project with a deadline, comprised of various components. Execution of these components, such as designing and installing a new menu board, determines the progress of the implementation phase, and depends on the time allocated to implementation by the interventionists, the quality of their effort, and the motivation of carry-out and corner store owners (hereafter, storeowners) to actively contribute to the intervention. In fact, in the absence of any cooperation by storeowners, no implementation is feasible. In both BHC and BHS interventions, interventionists emphasized building rapport with the storeowners and making changes that place minimal burden on the store staff, in order to maintain the motivation of stakeholders when implementing the intervention. Figure 3 summarizes these mechanisms, showing in a box the stock of “Implemented components” that grows with the valve-like flow variable “Implementation rate”.

Figure 3. Effect of costs and benefits on motivation.

Successful implementation depends on the competition between a reinforcing loop (R1: Seeing the impact) and a balancing loop (B1: Costly program). If R1 dominates, successful implementation is possible.

The first feedback loops in our setting emerge when we consider the impact of implemented components on the carry-out and store operations. Some components may lead to new costs, e.g., for acquiring healthier ingredients and finding new suppliers. Benefits may also ensue, including financial benefits due to increased sales or incentives for participation in the study, reputational benefits, and the personal satisfaction of making a contribution to community health. One carry-out owner in BHC elaborates:

“We could tell from their [customers’] eyes they liked that. My wife is the cashier and some people said that they liked it, and that was encouraging.”

A corner store owner in BHS mentions:

“One day, a new customer just came into the store asking whether we have fruits. I think she just saw the poster (“We Have Fruits” poster) on the door. I also like it. Often my customers gave some positive comments on those.”

An increase in motivation due to observation of such benefits can lead to further implementation of components, and thus even more benefits, in a reinforcing process (Figure 3, reinforcing loop 1 (R1), Seeing the impact).

Strategies such as offering monetary incentives to reduce the financial costs of the program help further increase storeowners’ perceived benefits and eventually increase their motivation in participating in the implementation.

On the other hand, if the storeowner perceives the costs (both financial and time costs) to exceed those benefits, then a balancing loop may dominate, which reduces motivation in response to progress and slows down further implementation (Figure 3, balancing loop 1 (B1), Costly program). An intervention that, on net, does not benefit the storeowner has little chance of successful implementation, let alone maintenance.

Note that in practice costs are often observed first (because they are mainly associated with implementation rate) followed by benefits (that are associated with the stock of implemented components). While this lag may be relevant, it did not emerge as a central consideration in our setting and we do not discuss it. Also, here we focused on the perceived benefits and costs by carry-out owners, not interventionists or customers.
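To make the competition between R1 and B1 more concrete, the following Python sketch ties perceived benefits to the stock of implemented components and perceived costs to the implementation rate, as described above. It is a toy illustration under stated assumptions, not the formulation documented in the supplement: the function name, the baseline benefit and cost terms of 1.0, the mapping from the benefit-to-cost ratio to motivation, and every parameter value are hypothetical.

```python
def simulate_implementation(benefit_per_component, cost_per_unit_rate,
                            months=36, total_components=20.0,
                            max_rate=2.0, adjustment_months=3.0):
    """Toy model of R1 (Seeing the impact) versus B1 (Costly program)."""
    implemented = 0.0   # stock: implemented components
    motivation = 0.5    # stock: storeowner motivation, stays within [0, 1]
    for _ in range(months):
        remaining_share = 1.0 - implemented / total_components
        # Flow: implementation needs motivated storeowners and remaining work.
        rate = max_rate * motivation * remaining_share
        # R1: benefits grow with the stock of implemented components.
        benefits = 1.0 + benefit_per_component * implemented
        # B1: costs grow with the implementation rate.
        costs = 1.0 + cost_per_unit_rate * rate
        ratio = benefits / costs
        # Hypothetical mapping: ratio of 1 gives a motivation target of 0.5.
        target = ratio / (1.0 + ratio)
        implemented += rate
        # Motivation adjusts gradually toward its target.
        motivation += (target - motivation) / adjustment_months
    return implemented, motivation


# Hypothetical scenarios: visible benefits and low costs versus the reverse.
r1_components, r1_motivation = simulate_implementation(1.0, 1.0)
b1_components, b1_motivation = simulate_implementation(0.1, 3.0)
print(round(r1_components, 1), round(b1_components, 1))
```

In this sketch the scenario with larger per-component benefits and lower costs ends with higher motivation and more implemented components than the costly scenario, which progresses more slowly. The specific numbers carry no empirical meaning.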

These mechanisms of perceived benefits and costs are consistent with the discussions presented in a multi-site interview-based study [60] including 52 small urban food stores in eight different cities across the U.S. The diffusion of innovation and implementation science literature also supports these mechanisms. Research shows that cost-effective programs are easier to implement [61, 62]. Studies also emphasize the perception of an intervention as beneficial as a main facilitator of implementation [63, 64].

Design quality and communication among stakeholders

The benefits of the intervention, however, are not pre-determined. Design quality is an important aspect of any intervention. A well-designed intervention is less costly to storeowners, may include more benefits, and would be easier to implement and maintain. The quality of design partially depends on the skills and knowledge of the interventionists, which were high in both the BHC and BHS cases. Moreover, the intervention should be customized based on the characteristics of each program, and that requires ample communication between the interventionists and the storeowner. Storeowners’ motivation was a major determinant of their availability for communication, and in the BHC case we observed that the owners started with high levels of motivation. On the other hand, design problems that are not fixed can lead to various issues in implementation and hurt the motivation of stakeholders. Prior research supports these mechanisms [63, 65, 66]. Consider two examples from our cases:

Adding watermelons to the menu during the summer was one of the BHC intervention components and sales data suggested it was profitable [67]; however, it resulted in trash removal problems for the carry-out owner which led to the scrapping of this component: “During the summer time, even if we wanted to sell those [watermelons], a lot of garbage would come out of it. Here in [this area of] Baltimore city, there isn’t a place to throw garbage... You can’t put a garbage can outside.”

In the BHS intervention, regardless of their level of motivation and support, storeowners perceived some of the interventionists’ activities as potential barriers to program participation because they were concerned about shoplifting during such activities. One of the corner store owners elaborates:

“When interventionists come to the store and talk with customers, it distracts the store business.”

Identifying and addressing such issues requires early communication between interventionists and storeowners, and would enhance design quality. This idea is consistent with prior work highlighting the impact of communication on implementation success [68, 69]. Hence, taken together, communication and design quality create another reinforcing loop (Figure 4, Communication helps design (R2)): increased motivation facilitates better communication, which improves design and keeps the stakeholders motivated, in turn keeping storeowners engaged in future communication. Moreover, communication can directly affect motivation through trust-building and sense of engagement (Figure 4, Motivation through communication (R3)). Reinforcing loops can amplify small differences between two programs: if one, by chance, faces an early design problem, that can reduce motivation and communication, and sow the seeds of future problems. See Supplementary Section S6 for more discussion about motivation of stakeholders.

Figure 4. Effect of motivation of stakeholders and communication among stakeholders on design quality.

Small problems in design can lead to loss of motivation, reduced communication, and more design problems as implementation progresses.

Intervention maintenance

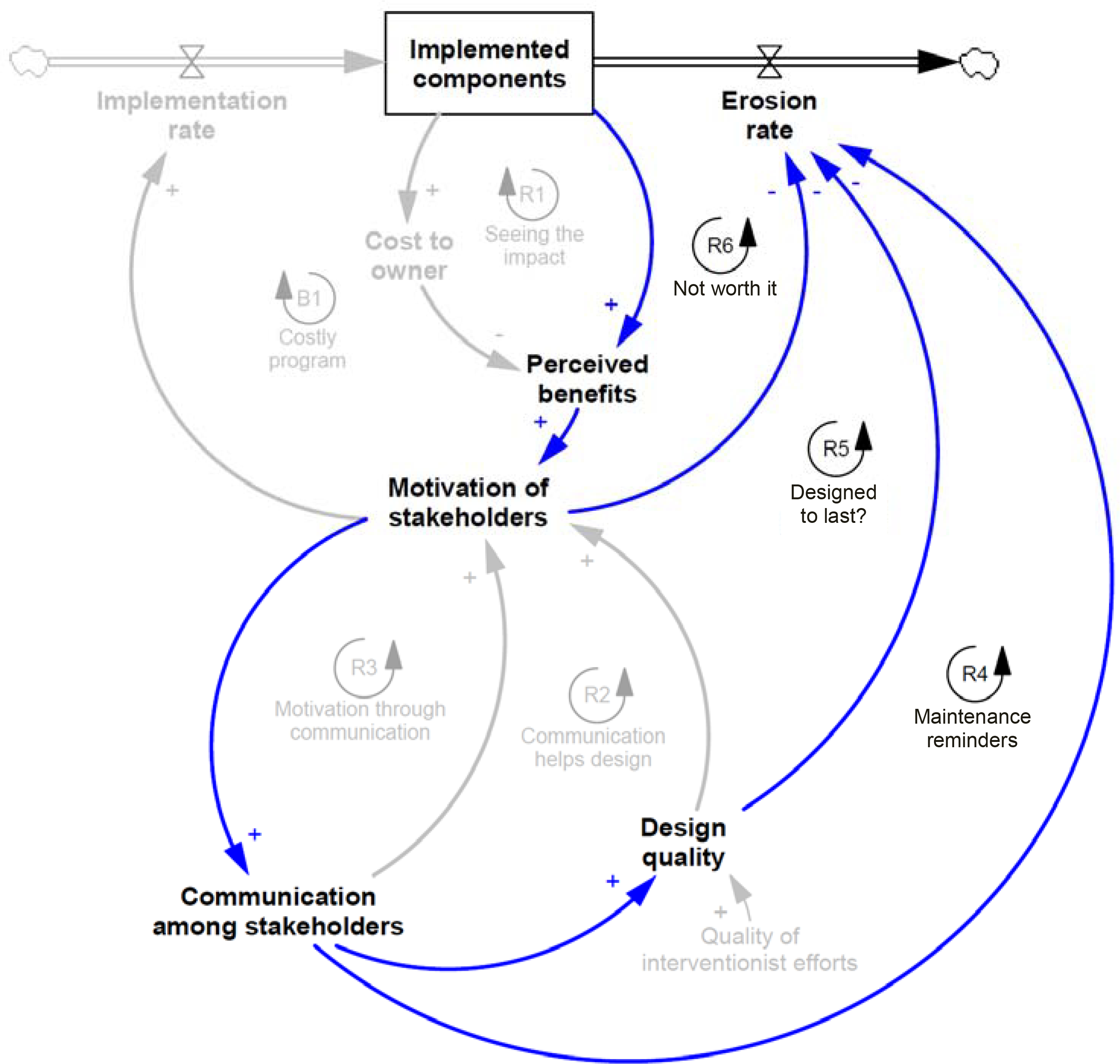

Interventions are not maintained when their components deteriorate, depreciate, or are otherwise scrapped, and are not renewed. For instance, in the BHC, erosion processes ranging from wear and tear of signs and menus to changes in prices that may reduce the attractiveness of fresh items continually reduce the number of ‘Implemented components’. Yet, the erosion rate is also endogenous, as it depends on motivation, communication, and design problems (Figure 5).

Figure 5. Mechanisms affecting the maintenance of interventions.

The erosion rate is influenced by motivation, design quality, and communication, creating three additional reinforcing loops that can drive a wedge between successful and unsuccessful maintenance of programs.

Communication can help remind the stakeholders about the need for sustaining changes and fixing emerging problems. High-quality design steps can also foresee, and correct for, the most common modes of failure and thus result in lower baseline erosion rates. Finally, we find that motivated stakeholders are more likely to sustain the changes without external prompts. In the BHS intervention, for example, the storeowners who showed strong or moderate motivation were more likely to help maintain the program (i.e., sustain the stocking of promoted foods) after the program was completed as compared to less motivated storeowners.

These mechanisms create three additional reinforcing loops (Figure 5, R4-R6), as successful implementation raises motivation, improves communication and design, and thus allows for sustaining the gains more effectively and keeping the intervention on track. These mechanisms of the sustainability of the interventions are also supported by the implementation science literature on maintenance [68, 70–73].

3.2. Simulation analysis

Given limited space, we only discuss the details of the quantitative model in the Online Supplementary Materials, and here we focus on the simulation results from that model. Our model is generic in the sense that: 1) Its core mechanisms apply to interventions beyond BHC and BHS; and 2) It is parameterized to be qualitatively consistent with the time horizons and magnitudes of variables in the BHC cases (see Table S2 in Supplementary Section S11.a for model parameters). As a result, we focus on understanding the qualitative changes in the model’s behaviour rather than specific numerical values from simulations. This focus thus maintains a balance between the theory-building nature of our paper and the limits of the quantitative data that informs our model.

Our simulation results point to a nonlinear dynamic with potentially important implications for understanding variations in AIM. Specifically, we find that small differences in allocation of interventionist resources to design, implementation, or communication can lead to significant differences in AIM outcomes.

Tipping dynamics

To demonstrate, consider two scenarios of identical organizations with identical interventions, composed of various components (e.g., improving menu boards in the BHC program or introducing healthier foods in the BHS program). The only difference between the two simulated scenarios is the amount of interventionist time allocated to the maintenance of each (e.g., 8% higher in one case). The project’s AIM unfold over multiple months: during the first year the focus is on the design of the intervention, the next seven months are mostly focused on implementation, and then the sustainability of the intervention is measured once the interventionists largely leave the scene, offering only some follow up time afterwards.

Figure 6 (A) shows our main outcome variable, the number of intervention components effectively at work in the simulated scenarios. This number is zero for the first months as much of the effort goes into designing the intervention. Implementation starts after about 10 months and speeds up to completely roll out the intervention (i.e., implement its 20 components) by month 19. By this time, both simulated scenarios show a solid implementation, and if they were actual organizations, they would likely be considered success stories for this intervention. However, what happens afterwards, i.e., maintenance, is key to the long-term effectiveness of each program, and here a small difference in the resources allocated by the interventionist (i.e., their time) makes a huge difference—see Section S11.b for more information about how we model time allocated to different activities. One organization keeps most of the components in place, while the other gradually loses most of the components. Why would such a small difference (less than 10% in interventionists’ time during the maintenance phase) have such a major impact?

Figure 6. Implemented components (A), communication sufficiency (B), motivation of stakeholders to implement (C), and perceived net benefit ratio (D).

Baseline (blue line) is based on 24 hours of interventionist effort per month during the maintenance phase. More effort (red line) is based on 26 hours of interventionist effort per month. The big difference between the outputs of these two scenarios relates to the tipping threshold, i.e., a level of interventionist effort that, once exceeded, leads to a sustained intervention. The Y-axis in (A) shows the number of implemented components (capped at 20 components). The Y-axis in (B) shows the level of communication sufficiency (>=0), where values below one represent shortfalls in communication. The Y-axis in (C) shows motivation of stakeholders (0<= Motivation <=1), where one represents high levels of motivation. The Y-axis in (D) shows the ratio of perceived benefits to perceived implementation costs (>=0), where benefits exceed costs for values above one.

Early on, the design and implementation processes unfold almost identically for both scenarios, and both have enough resources and support to complete the tasks on schedule. The differences become visible only in the maintenance phase. Once implemented, the components are subject to erosion: for example, menu boards may fall and not be replaced, and healthy items may be dropped from the offering. The rate at which such deterioration happens and the speed with which the required fixes are applied (or ignored) distinguish scenarios that maintain the intervention in the long run from those that revert to the old ways of doing things. Reinforcing loops around communication and motivation of stakeholders drive the relevant dynamics.

Communication:

First, reinforcing loop “R4-Maintenance reminders” (Figure 5) captures continued communication between interventionists and organizational stakeholders (i.e., storeowners). Such communication entails modest effort but provides reminders and support for keeping the erosion rates low, e.g., by fixing any emerging problems before they lead to complete loss of a component. Low erosion, in turn, keeps the intervention at its most efficacious state. The intervention thus shows more benefits to the organization, motivates the storeowners to keep up communication with the interventionists, and thus maintains the program in a desired state. On the other hand, a shortfall in communication soon after the end of the implementation phase can increase the erosion rate, reduce the number of components still standing, cause disillusionment with the program, and further cut down on communication (Figure 6 (B)). A similar reinforcing loop, “R5-Designed to last?”, operates in parallel through the impact of communication on the quality of intervention design and thus its sustainability.

Motivation:

A similar mechanism unfolds in reinforcing loop “R6-Not worth it” (Figure 5), as lost motivation increases erosion, reduces the success of the program, and thus further erodes the motivation of organizational stakeholders (Figure 6 (C)).

Communication and motivation:

The third reinforcing loop “R3-Motivation through communication” (Figure 5) connects motivation and communication: a shortfall in communication gradually erodes motivation, which then requires even more communication to fix task-related issues and to rebuild interpersonal trust and a collaborative atmosphere. As a result, the current communication levels fall even further behind what is required, completing a vicious cycle (Figure 6 (B)).

Interestingly, early in the maintenance period the perceived net benefit ratio (the ratio of perceived benefits to perceived implementation costs) may actually be higher in the ultimately unsuccessful scenario (Figure 6 (D)). The reason is that reduced communication early in that period (Figure 6 (B)) reduces the overhead of the intervention for the storeowners, making them potentially more satisfied (Figure 6 (D)). Yet insufficient communication increases the erosion rate, which later reduces the success of the program and ultimately reduces the perceived net benefit ratio, e.g., due to decreased sales.

As these reinforcing loops take over the dynamics, the unlucky scenario falls behind and requires even more of the interventionist’s time for fixing problems, which leaves even less time for communication and further strengthens the feedback loops that depend on the sufficiency of communication. After a few months, the gap between the two otherwise similar scenarios becomes very wide, and the chances of reviving the intervention in the unsuccessful program become remote.

In our simulation experiment, the initial shortfall in communication is triggered by slightly less interventionist time available after implementation is complete (26 vs. 28 hours effort of interventionists per month). However, this small shortfall is amplified through the feedback loops above, leading to widely different outcomes at the end. On the other hand, for a little while after the completion of the implementation phase, the scenario with lower interventionist time seems to do even better (Figure 6 (C-D)), because lower communication translates into less cost for the organization, making the intervention seem more appealing as long as little erosion has happened. The real costs are only revealed once the erosion requires more interventionist time for fixes and thus reduces the sufficiency of communication below acceptable levels.

Note that the exact numbers generated in our simulations are not consequential for the main qualitative finding. While specific numbers vary with the selection of model parameters, the dynamics of AIM under a wide range of parameters include a tipping point that leads to very different outcomes in response to small changes. Lack of attention to the underlying dynamics can lead to erosion of an intervention after it was implemented, in a vicious cycle of lower motivation and communication, faster erosion, and thus a less beneficial intervention.
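The time-crowding mechanism described above (erosion raises the time needed for fixes, which squeezes communication, which raises erosion further) can be illustrated with a deliberately minimal sketch. It is not the system dynamics model used in this study: it omits motivation and perceived net benefit, and every name and parameter value (`simulate_maintenance`, `hours_per_fix`, `required_comm_hours`, `min_erosion`, `max_erosion`) is a hypothetical choice made only so that the toy threshold falls between 24 and 26 hours per month.

```python
def simulate_maintenance(hours_per_month, months=60, total=20.0,
                         hours_per_fix=4.0, required_comm_hours=21.0,
                         min_erosion=0.05, max_erosion=0.8):
    """Toy maintenance-phase model; all parameter values are illustrative.

    Returns the number of components in place at month 0, 1, ..., months.
    """
    components = total  # start fully implemented
    history = [components]
    for _ in range(months):
        lost = total - components
        # Assumption: fixing lost components takes priority over communication.
        fix_hours = min(hours_per_month, hours_per_fix * lost)
        comm_hours = hours_per_month - fix_hours
        # Communication sufficiency below one counts as a shortfall.
        sufficiency = comm_hours / required_comm_hours
        shortfall = max(0.0, 1.0 - sufficiency)
        # Assumption: the monthly erosion fraction rises linearly with the shortfall.
        erosion_rate = min_erosion + (max_erosion - min_erosion) * shortfall
        repaired = fix_hours / hours_per_fix
        components = components - erosion_rate * components + repaired
        history.append(components)
    return history


if __name__ == "__main__":
    for hours in (24.0, 26.0):
        final = simulate_maintenance(hours)[-1]
        print(f"{hours:.0f} h/month -> {final:.2f} components after 60 months")
```

Under these assumed parameters, the 26-hour run settles at about 19 of 20 components, while the 24-hour run slides to about 7.5, because each lost component diverts time away from communication. The specific values carry no empirical meaning; only the qualitative threshold behaviour is the point.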

Boundary Conditions and Sensitivity of Results

The scenario above highlights the core dynamics of the model using the base case parameter values roughly consistent with the qualitative evidence in the two BHC cases. To gain a more nuanced understanding of the trade-offs involved, we systematically change some of the key model assumptions and assess their impact on the tipping dynamics and the long-term success of simulated programs. Specifically, we explore two questions: What is the impact of interventionists’ quality and capabilities? How do different resource allocation policies influence the sustainability of programs?

See Supplementary Sections S8–a and S8–b for the detailed analysis method used to answer these two questions. In summary, the quality of interventionists influences both their productivity in designing and supporting the implementation of intervention components, and the quality of design. In the base case, we simulated very high-quality interventionists, but in practice there is often heterogeneity in the capabilities of interventionists, and some are limited in their knowledge, skills, and clarity of communication. As a result, the components designed and seen through by these interventionists may prove problematic, leading to poor implementation or faster erosion.

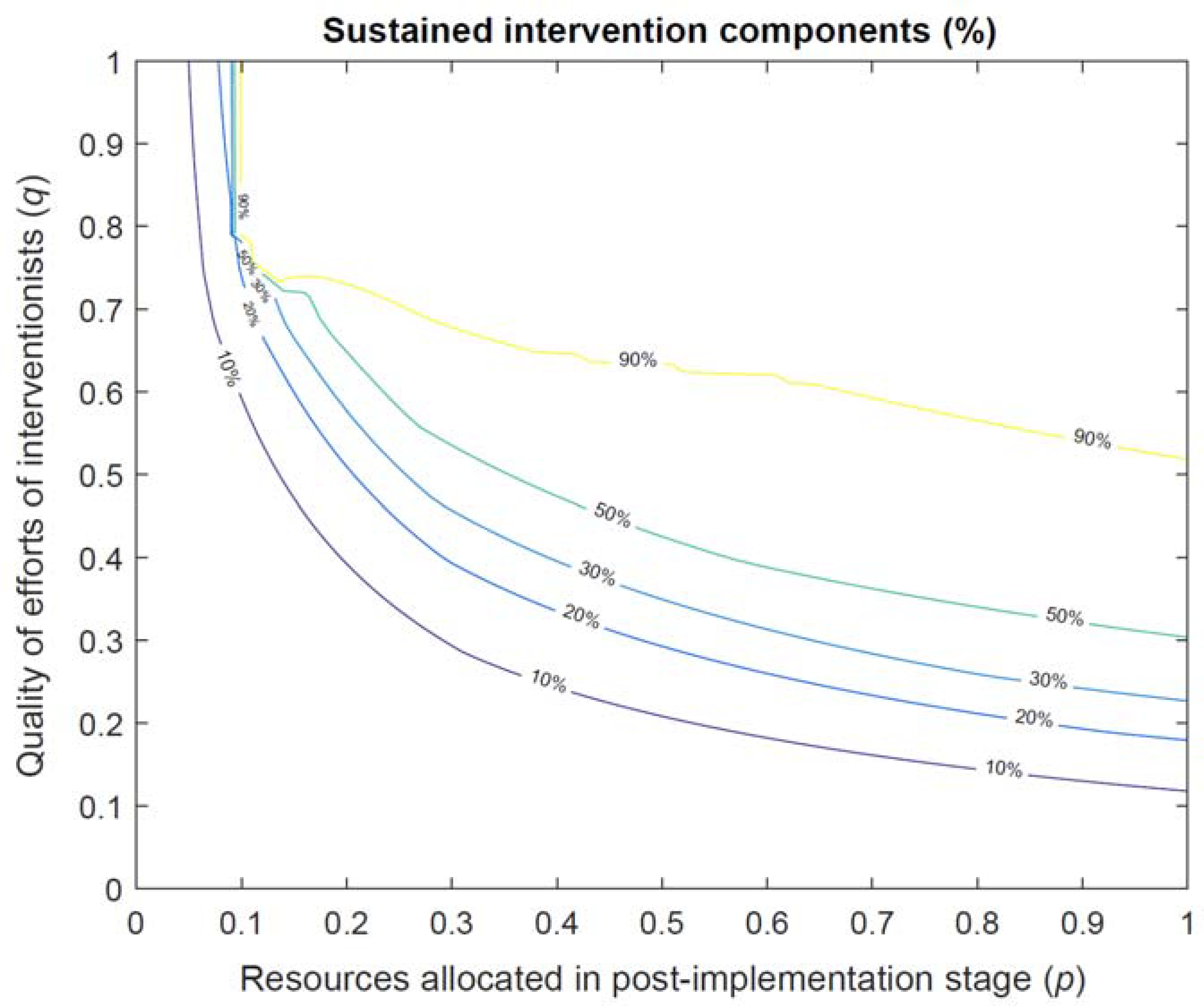

Figure 7 presents, in a contour plot, the impacts of the quality of interventionists’ efforts and of resource allocation on the sustainability of the intervention. This analysis (based on 10,000 simulations; see Supplementary Section S8 for details) shows that low-quality interventionists are costly not only because of their lower productivity, but also because the components they design and implement have many flaws, which in practice reduce the sustainability of implementation. On the other hand, increasing the resources in the maintenance phase would allow the organization to fix the components that erode over time and keep a higher level of performance in the steady state, but at increasing costs both to the interventionists and to the storeowners. Moreover, if the interventionist quality is low enough, excess resources cannot solve the problem: the designed intervention will include so many flaws that implementing and maintaining its components will require prohibitively large amounts of interventionist time (Figure 7).

Figure 7. Implementation accomplishment in post-implementation.

The rate of resource allocation in the post-implementation stage is expressed as a proportion of that in the implementation stage, such that a value of 50% means that half of the resources used during implementation are allocated to the post-implementation stage.
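A two-parameter sweep of this kind can be sketched as follows. This is a toy stand-in, not the model or the 10,000-run analysis behind Figure 7: the function names, the 5×5 grid, and the assumptions that lower quality both lengthens each fix and raises the floor on erosion are all hypothetical and chosen only for illustration.

```python
def sustained_fraction(quality, resource_fraction, implementation_hours=50.0,
                       months=120, total=20.0):
    """Toy share of components still in place at the end of the run.

    quality: 0 < quality <= 1 (hypothetical scale; 1 is the best interventionist).
    resource_fraction: post-implementation hours as a share of implementation hours.
    """
    hours = resource_fraction * implementation_hours
    hours_per_fix = 4.0 / quality                   # assumption: lower productivity
    max_erosion = 0.8
    min_erosion = min(max_erosion, 0.05 / quality)  # assumption: flawed designs erode faster
    required_comm_hours = 21.0
    components = total
    for _ in range(months):
        lost = total - components
        fix_hours = min(hours, hours_per_fix * lost)  # fixes take priority
        comm_hours = hours - fix_hours
        shortfall = max(0.0, 1.0 - comm_hours / required_comm_hours)
        erosion = min_erosion + (max_erosion - min_erosion) * shortfall
        components += fix_hours / hours_per_fix - erosion * components
    return components / total


def sweep(qualities, fractions):
    """Return a grid (rows: quality, columns: resource fraction) of sustained shares."""
    return [[sustained_fraction(q, f) for f in fractions] for q in qualities]


qualities = [0.2, 0.4, 0.6, 0.8, 1.0]
fractions = [0.2, 0.4, 0.6, 0.8, 1.0]
grid = sweep(qualities, fractions)
for q, row in zip(qualities, grid):
    print(f"quality={q:.1f}: " + " ".join(f"{v:.2f}" for v in row))
```

In this toy grid, the lowest-quality row stays low even when post-implementation resources equal 100% of the implementation-stage level, which mirrors the qualitative point that extra resources cannot compensate for sufficiently poor design. A grid like this is the raw material for a contour plot.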

So far we have assumed that interventionists’ time (the key resource) is allocated with equal priority between communication and design and implementation (i.e., α = 0.5). In a second analysis, we assess the effect of changing the communication priority (α). Figure 8 summarizes the results in a contour plot of parameter settings with equal success (in % of sustained intervention components).

Figure 8. Trade-offs in resource allocations.

The coloured lines present the rates of sustained intervention components at the end of the simulations.

For a given quality (and quantity; not varied in this analysis) of interventionist time, we find that the best results are achieved when communication is given slightly higher priority than implementation and design (α between 0.5 and 0.8). Note that the impact of the allocation function is modest compared to the quality of interventionists and their availability. While a better allocation of existing resources, potentially to keep a positive relationship with stakeholders, can help save some marginal initiatives, resource allocation offers limited leverage if a project is plagued with too few resources or low-quality intervention design.
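To make the role of the communication priority α concrete, the sketch below shows one simple way a priority weight could split scarce time between communication and design/implementation work. The allocation function actually used in the model is described in the supplement and may differ; `allocate_time` and its demand-weighted proportional rule are assumptions introduced here for illustration.

```python
def allocate_time(available, comm_demand, work_demand, alpha=0.5):
    """Split `available` hours between communication and design/implementation.

    alpha is the communication priority (0 to 1); alpha = 0.5 treats both equally.
    Hypothetical rule: when time is short, shares are proportional to
    priority-weighted demand; neither activity receives more than it demands.
    """
    if comm_demand + work_demand <= available:
        return comm_demand, work_demand  # enough time for everything

    weighted_comm = alpha * comm_demand
    weighted_work = (1.0 - alpha) * work_demand
    weight_sum = weighted_comm + weighted_work

    comm = available * weighted_comm / weight_sum if weight_sum > 0 else 0.0
    comm = min(comm, comm_demand)
    work = min(work_demand, available - comm)
    # Hand any time left over by the work cap back to communication.
    comm = min(comm_demand, available - work)
    return comm, work


if __name__ == "__main__":
    for alpha in (0.5, 0.8):
        comm, work = allocate_time(24.0, 20.0, 20.0, alpha)
        print(f"alpha={alpha}: communication={comm:.1f} h, design/implementation={work:.1f} h")
```

With 24 hours available and 20 hours demanded by each activity, α = 0.5 gives each activity 12 hours, while α = 0.8 shifts roughly 19 hours to communication. When demands fit within the available time, α has no effect, which is consistent with allocation mattering mainly for marginal cases.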

4. Discussion

To our knowledge, this is the first study that: 1) builds on current public health intervention assessment methods (i.e., by considering the adoption, implementation, and maintenance processes) by using system dynamics modelling to study the dynamic mechanisms of intervention success and failure; and 2) contributes to the public health intervention literature by drawing on theories in strategy and organizational behaviour to study the effectiveness of interventions.

In this study, based on our in-depth interview data from the Baltimore Healthy Carry-outs program and published data from the Baltimore Healthy Stores program [51], we developed a system dynamics model showing how the dynamics surrounding communication, motivation, quality of efforts of interventionists, and erosion of interventions can create tipping dynamics that lead to greatly different outcomes over the long haul. Specifically, small changes in the allocation of resources to an intervention could have a disproportionate long-term impact if those additional resources can turn stakeholders into allies of the intervention, reducing the erosion rates and enhancing sustainability.

We also found that the quality of the intervention design plays a key role throughout the process. Reviews of research show that health interventions designed on a strong theoretical foundation are more effective than those lacking such a foundation [74]. We showed that a well-designed intervention sustains stakeholder motivation and limits later deterioration; therefore, changes that increase the quality of the original design, and of the maintenance of components, are critical for the long-term success of AIM. Those changes could include the use of more skilled and situationally informed interventionists. They could also include more communication with key stakeholders early in the design process to iterate on the elements of the intervention, foresee and fix potential problems, and gain stakeholder buy-in.

While it is easy to call for more resources, in practice most interventions are plagued with budgetary pressures, which call for methods to identify when an intervention is at risk and how to mitigate those risks. One useful area for improvement is the monitoring of stakeholder motivation. This variable plays a key role in the tipping dynamics we identify: if it falls below a threshold, the intervention becomes exceedingly costly to maintain. Interventionists should be as sensitive to this motivation level as to the design and implementation of tasks. Intervention design steps may also need to include components that explicitly boost motivation. Again, communication plays an important role in enhancing stakeholder motivation, and thus needs to be prioritized. A minimum level of communication should be maintained throughout, so that motivation stays above a threshold that allows for active support of the implemented components by the storeowner.

Interventionists need to be sensitive to financial or other incentives that stakeholders value, and incorporate them in the design of the intervention to increase the chances that, once implemented, the intervention can cross the self-sustaining threshold. For example, in the BHC intervention, a certificate from the mayor and the City of Baltimore was a successful practice that increased motivation at little cost.

In addition, finding different mechanisms to motivate stakeholders, especially in the middle of the process (after the early honeymoon period and before they see the actual benefits), can help. These rapport-building mechanisms can include frequent site visits by interventionists, asking about ongoing problems and coming up with solutions before tasks are abandoned, and providing data on the benefits (and setting up measurement procedures to track benefits from early on).

Another leverage point is how the design influences intervention erosion rates. Intervention components that can easily become part of the daily routines in an organization (such as the menu board in the BHC intervention, which requires limited attention for maintenance) are much easier to sustain than those that require conscious and constant attention (such as restocking of baked chips). If organizational routines are to be changed as part of the intervention, structures such as physical layout, supply chains, and decision-making processes should be thought through and explicitly designed so that they are consistent with the changes in the core organizational routines. Inconsistencies in those arrangements are likely to increase the speed of erosion of implemented components and diminish motivation over time. For example, if the new process requires new ingredients, the storeowners will find it inconvenient to seek and maintain yet another supplier. The implementation process should also focus on training and empowering organizational stakeholders so that they will appreciate and maintain the components in the absence of the interventionists. Only when the new routines are fully integrated in the organizational culture and processes can one expect long-term sustainment of new interventions.

A common trade-off that our model highlights is that between designing and implementing intervention components and communicating with stakeholders to help build confidence and improve the quality of the intervention. Given that many interventionists are more familiar with the former, there may be a built-in bias in the AIM processes against adequate investment in the communication processes central to AIM dynamics. Overcoming that bias and tuning communication levels to address both the motivation and the quality considerations is an important leverage point for training successful interventionists.

5. Conclusion and Limitations

Overall, transporting interventions from laboratory settings to community settings is challenging. When the implementation of interventions fails, it is important to know whether the failure occurred because the intervention was not successfully implemented or because it was ineffective [75]. In many cases, in fact, the intervention is theoretically effective but not properly implemented and maintained. The current study, as a first step towards better understanding the endogenous dynamics of organizational interventions, provides evidence on tipping dynamics in health intervention design, implementation, and maintenance. The model we develop is stylized and simple; is limited to a handful of cases in two interventions; and builds on limited quantitative data. Studies that address each of these limitations can provide stronger evidence (see Supplementary Section S7 for an extended discussion of limitations). In fact, real-world interventions include many subtle variations, and in practice building a fully calibrated model may not be feasible due to data limitations or costs, or may only be viable after the intervention has fully unfolded and the opportunity to improve the situation is lost. Yet our simple model provides a few potential ideas to help monitor and improve the design and implementation of interventions in order to avoid the dynamics that lead to poor long-term maintenance of interventions.

Supplementary Material

References

- 1. Maglio PP, Sepulveda MJ, and Mabry PL, Mainstreaming modeling and simulation to accelerate public health innovation. Am J Public Health, 2014. 104(7): p. 1181–6.

- 2. Sterman J, Business dynamics: systems thinking and modeling for a complex world. 2000, Boston: Irwin/McGraw-Hill. xxvi, 982 p.

- 3. Brown T, et al., Systematic review of long term lifestyle interventions to prevent weight gain and morbidity in adults. Obesity Reviews, 2009. 10(6): p. 627–638.

- 4. Glasgow RE and Emmons KM, How can we increase translation of research into practice? Types of evidence needed. Annual Review of Public Health, 2007. 28: p. 413–433.

- 5. Gortmaker SL, et al., Reducing obesity via a school-based interdisciplinary intervention among youth - Planet health. Archives of Pediatrics & Adolescent Medicine, 1999. 153(4): p. 409–418.

- 6. Gittelsohn J, et al., A Food Store Intervention Trial Improves Caregiver Psychosocial Factors and Children’s Dietary Intake in Hawaii. Obesity, 2010. 18: p. S84–S90.

- 7. Starfield B, Quality-of-care research: internal elegance and external relevance. JAMA, 1998. 280(11): p. 1006–8.

- 8. Thompson SE, Smith BA, and Bybee RE, Factors influencing participation in Worksite Wellness Programs among minority and underserved populations. Family & Community Health, 2005. 28(3): p. 267–273.

- 9. Green LW and Glasgow RE, Evaluating the relevance, generalization, and applicability of research - Issues in external validation and translation methodology. Evaluation & the Health Professions, 2006. 29(1): p. 126–153.

- 10. Spence JC and Lee RE, Toward a comprehensive model of physical activity. Psychology of Sport and Exercise, 2003. 4(1): p. 7–24.

- 11. Swinburn B, Gill T, and Kumanyika S, Obesity prevention: a proposed framework for translating evidence into action. Obesity Reviews, 2005. 6(1): p. 23–33.

- 12. Hendriks A-M, et al., Proposing a conceptual framework for integrated local public health policy, applied to childhood obesity - the behavior change ball. Implementation Science, 2013. 8.

- 13. Damschroder LJ and Lowery JC, Evaluation of a large-scale weight management program using the consolidated framework for implementation research (CFIR). Implementation Science, 2013. 8.

- 14. Sweet SN, et al., Operationalizing the RE-AIM framework to evaluate the impact of multi-sector partnerships. Implement Sci, 2014. 9: p. 74.

- 15. Grant RM, Contemporary strategy analysis: concepts, techniques, applications. 4th ed. 2002, Malden, Mass.: Blackwell Business. xii, 551.

- 16. Barney J, Firm Resources and Sustained Competitive Advantage. Journal of Management, 1991. 17(1): p. 99–120.

- 17. Peteraf MA, The Cornerstones of Competitive Advantage - a Resource-Based View. Strategic Management Journal, 1993. 14(3): p. 179–191.

- 18. Dierickx I and Cool K, Asset Stock Accumulation and Sustainability of Competitive Advantage. Management Science, 1989. 35(12): p. 1504–1511.

- 19. Eggers JP and Kaplan S, Cognition and Renewal: Comparing CEO and Organizational Effects on Incumbent Adaptation to Technical Change. Organization Science, 2009. 20(2): p. 461–477.

- 20. Zollo M and Winter SG, Deliberate learning and the evolution of dynamic capabilities. Organization Science, 2002. 13(3): p. 339–351.

- 21. Warren K, Competitive strategy dynamics. 2002, Chichester: Wiley. xiii, 330 p.

- 22. Levitt B and March JG, Organizational Learning. Annual Review of Sociology, 1988. 14: p. 319–340.

- 23. Leonard-Barton D, Core Capabilities and Core Rigidities - a Paradox in Managing New Product Development. Strategic Management Journal, 1992. 13: p. 111–125.

- 24. Rahmandad H, Effect of delays on complexity of organizational learning. Management Science, 2008. 54(7): p. 1297–1312.

- 25. Sterman JD, Learning in and about complex systems. System Dynamics Review, 1994. 10(2–3): p. 291–330.

- 26. Easton GS and Jarrell SL, The effects of total quality management on corporate performance: An empirical investigation. The Journal of Business, 1998. 71(2): p. 253.

- 27. Hendricks KB and Singhal VR, The Long-Run Stock Price Performance of Firms with Effective TQM Programs. Management Science, 2001. 47(3): p. 359–368.

- 28. Repenning NP and Sterman JD, Nobody ever gets credit for fixing problems that never happened: Creating and sustaining process improvement. California Management Review, 2001. 43(4): p. 64–+.

- 29. Sterman JD, Repenning NP, and Kofman F, Unanticipated side effects of successful quality programs: Exploring a paradox of organizational improvement. Management Science, 1997. 43: p. 503–521.

- 30. Repenning NP, Understanding fire fighting in new product development. The Journal of Product Innovation Management, 2001. 18: p. 285–300.

- 31. Repenning NP and Sterman JD, Capability traps and self-confirming attribution errors in the dynamics of process improvement. Administrative Science Quarterly, 2002. 47(2): p. 265–295.

- 32. Green LW and Kreuter MW, Health program planning: an educational and ecological approach. 4th ed. 2005, New York: McGraw-Hill.

- 33. Brownson RC, Gurney JG, and Land GH, Evidence-based decision making in public health. J Public Health Manag Pract, 1999. 5(5): p. 86–97.

- 34. Heller RF and Page J, A population perspective to evidence based medicine: “evidence for population health”. J Epidemiol Community Health, 2002. 56(1): p. 45–7.

- 35. Glasgow RE, Vogt TM, and Boles SM, Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health, 1999. 89(9): p. 1322–7.

- 36. Chang AY, et al., Dynamic modeling approaches to characterize the functioning of health systems: A systematic review of the literature. Social Science & Medicine, 2017. 194: p. 160–167.

- 37. Fallah-Fini S, et al., Modeling US Adult Obesity Trends: A System Dynamics Model for Estimating Energy Imbalance Gap. American Journal of Public Health, 2014. 104(7): p. 1230–1239.

- 38. Struben J, Chan D, and Dube L, Policy insights from the nutritional food market transformation model: the case of obesity prevention. Annals of the New York Academy of Sciences, 2014: p. n/a–n/a.

- 39. Rahmandad H, Human Growth and Body Weight Dynamics: An Integrative Systems Model. PLoS ONE, 2014. 9(12): p. e114609.

- 40. Ghaffarzadegan N, Ebrahimvandi A, and Jalali MS, A Dynamic Model of Post-Traumatic Stress Disorder for Military Personnel and Veterans. PLoS ONE, 2016. 11(10): p. e0161405.

- 41. Jalali MS, et al., Dynamics of Obesity Interventions inside Organizations. in The 32nd International Conference of the System Dynamics Society. 2014. System Dynamics Society.

- 42. Jalali M, et al., Dynamics of Implementation and Maintenance of Organizational Health Interventions. International Journal of Environmental Research and Public Health, 2017. 14(8): p. 917.

- 43. Jalali SMJ, Three Essays on Systems Thinking and Dynamic Modeling in Obesity Prevention Interventions. 2015, Virginia Tech.

- 44. Ghaffarzadegan N, et al., Model-Based Policy Analysis to Mitigate Post-Traumatic Stress Disorder, in Policy Analytics, Modelling, and Informatics. 2018, Springer. p. 387–406.

- 45. Hosseinichimeh N, et al., Modeling and Estimating the Feedback Mechanisms among Depression, Rumination, and Stressors in Adolescents. PLoS ONE, 2018.

- 46. Sterman JD, Learning in and about complex systems. System Dynamics Review, 1994. 10(2–3): p. 291–330.

- 47. Lee SH, et al., Characteristics of prepared food sources in low-income neighborhoods of Baltimore City. Ecol Food Nutr, 2010. 49(6): p. 409–30.

- 48. Gittelsohn J, et al., Understanding the Food Environment in a Low-Income Urban Setting: Implications for Food Store Interventions. Journal of Hunger & Environmental Nutrition, 2008. 2(2–3): p. 33–50.

- 49. Gittelsohn J, et al., Process evaluation of Baltimore Healthy Stores: a pilot health intervention program with supermarkets and corner stores in Baltimore City. Health Promot Pract, 2010. 11(5): p. 723–32.

- 50. Song HJ, et al., A corner store intervention in a low-income urban community is associated with increased availability and sales of some healthy foods. Public Health Nutr, 2009. 12(11): p. 2060–7.

- 51. Song HJ, et al., Korean American storeowners’ perceived barriers and motivators for implementing a corner store-based program. Health Promot Pract, 2011. 12(3): p. 472–82.

- 52. Lee-Kwan SH, et al., Development and implementation of the Baltimore healthy carry-outs feasibility trial: process evaluation results. BMC Public Health, 2013. 13: p. 638.

- 53. Patton MQ, Qualitative research and evaluation methods. 3 ed. 2002, Thousand Oaks, Calif.: Sage Publications. xxiv, 598, 65 p.

- 54. Lee SH, Changing the Food Environment in Baltimore City: Impact of an Intervention to Improve Carry-Outs in Low-Income Neighborhoods. 2012, Johns Hopkins University: Baltimore, MD.

- 55. Jalali MS, Siegel M, and Madnick S, Decision-making and biases in cybersecurity capability development: Evidence from a simulation game experiment. The Journal of Strategic Information Systems, 2018.

- 56. Abdel-Hamid T, et al., Public and health professionals’ misconceptions about the dynamics of body weight gain/loss. System Dynamics Review, 2014. 30(1–2): p. 58–74.

- 57. Baghaei Lakeh A and Ghaffarzadegan N, Does analytical thinking improve understanding of accumulation? System Dynamics Review, 2015. 31(1–2): p. 46–65.

- 58. Gonzalez C, et al., Graphical features of flow behavior and the stock and flow failure. System Dynamics Review, 2017. 33(1): p. 59–70.

- 59. Rahmandad H and Sterman JD, Reporting guidelines for simulation-based research in social sciences. System Dynamics Review, 2012. 28(4): p. 396–411.

- 60. Gittelsohn J, et al., Small retailer perspectives of the 2009 Women, Infants and Children Program food package changes. Am J Health Behav, 2012. 36(5): p. 655–65.

- 61. Rogers EM, Diffusion of innovations. 5th ed. 2003, New York: Free Press. xxi, 551 p.

- 62. Dirksen CD, Ament AJ, and Go PM, Diffusion of six surgical endoscopic procedures in the Netherlands. Stimulating and restraining factors. Health Policy, 1996. 37(2): p. 91–104.

- 63. Legare F, et al., Barriers and facilitators to implementing shared decision-making in clinical practice: update of a systematic review of health professionals’ perceptions. Patient Educ Couns, 2008. 73(3): p. 526–35.

- 64. Harvey G and Kitson A, Achieving improvement through quality: An evaluation of key factors in the implementation process. Journal of Advanced Nursing, 1996. 24(1): p. 185–195.

- 65. Repenning NP, A simulation-based approach to understanding the dynamics of innovation implementation. Organization Science, 2002. 13(2): p. 109–127.

- 66. Gravel K, Légaré F, and Graham ID, Barriers and facilitators to implementing shared decision-making in clinical practice: a systematic review of health professionals’ perceptions. Implementation Science, 2006. 1: p. 16–16.

- 67. Lee-Kwan SH, et al., Environmental Intervention in Carryout Restaurants Increases Sales of Healthy Menu Items in a Low-Income Urban Setting. Am J Health Promot, 2014.

- 68. Øvretveit J, et al., Quality collaboratives: lessons from research. Qual Saf Health Care, 2002. 11(4): p. 345–51.

- 69. Hofstede SN, et al., Barriers and facilitators to implement shared decision making in multidisciplinary sciatica care: a qualitative study. Implement Sci, 2013. 8: p. 95.

- 70. Ayala GX, et al., Stocking characteristics and perceived increases in sales among small food store managers/owners associated with the introduction of new food products approved by the Special Supplemental Nutrition Program for Women, Infants, and Children. Public Health Nutr, 2012: p. 1–9.

- 71. Alexander J and Hearld L, Methods and metrics challenges of delivery-system research. Implementation Science, 2012. 7(1): p. 15.

- 72. Schell SF, et al., Public health program capacity for sustainability: a new framework. Implement Sci, 2013. 8: p. 15.

- 73. Chambers DA, Glasgow RE, and Stange KC, The dynamic sustainability framework: addressing the paradox of sustainment amid ongoing change. Implement Sci, 2013. 8: p. 117.

- 74. Glanz K and Bishop DB, The role of behavioral science theory in development and implementation of public health interventions. Annu Rev Public Health, 2010. 31: p. 399–418.

- 75. Proctor E, et al., Outcomes for Implementation Research: Conceptual Distinctions, Measurement Challenges, and Research Agenda. Administration and Policy in Mental Health and Mental Health Services Research, 2011. 38(2): p. 65–76.
