Abstract

Background

Advances in genome sequencing over the last years have lead to a fundamental paradigm shift in the field. With steadily decreasing sequencing costs, genome projects are no longer limited by the cost of raw sequencing data, but rather by computational problems associated with genome assembly. There is an urgent demand for more efficient and and more accurate methods is particular with regard to the highly complex and often very large genomes of animals and plants. Most recently, “hybrid” methods that integrate short and long read data have been devised to address this need.

Results

LazyB is such a hybrid genome assembler. It has been designed specificially with an emphasis on utilizing low-coverage short and long reads. LazyB starts from a bipartite overlap graph between long reads and restrictively filtered short-read unitigs. This graph is translated into a long-read overlap graph G. Instead of the more conventional approach of removing tips, bubbles, and other local features, LazyB stepwisely extracts subgraphs whose global properties approach a disjoint union of paths. First, a consistently oriented subgraph is extracted, which in a second step is reduced to a directed acyclic graph. In the next step, properties of proper interval graphs are used to extract contigs as maximum weight paths. These path are translated into genomic sequences only in the final step. A prototype implementation of LazyB, entirely written in python, not only yields significantly more accurate assemblies of the yeast and fruit fly genomes compared to state-of-the-art pipelines but also requires much less computational effort.

Conclusions

LazyB is new low-cost genome assembler that copes well with large genomes and low coverage. It is based on a novel approach for reducing the overlap graph to a collection of paths, thus opening new avenues for future improvements.

Availability

The LazyB prototype is available at https://github.com/TGatter/LazyB.

Keywords: Nanopore sequencing, Illumina sequencing, Genome assembly, Spanning tree, Unitigs, Anchors

Background

The assembly of genomic sequences from high throughput sequencing data has turned out to be a difficult computational problem in practice. Recent approaches combine cheap short-read data (typically using Illumina technology [1]) with long reads produced by PacBio or Nanopore technologies [2]. Although the short-read data are highly accurate and comparably cheap to produce, they are insufficient even at (very) high coverage due to repetitive elements. Long-read data, on the other hand, are comparably expensive and have much higher error rates. HiFi PacBio reads [3] derived from repeat sequencing of circularized elements rival short read accuracy but at vastly increased costs.

Several assembly techniques have been developed recently for de novo assembly of large genomes from high-coverage (50 or greater) PacBio or Nanopore reads. Recent state-of-the-art methods employ a hybrid assembly strategy using Illumina reads to correct errors in the longer PacBio reads prior to assembly. For instance, the 32 Gb axolotl genome was produced in this manner [4].

Traditional assembly strategies can be classified into two general categories [5, 6]. The Overlap-layout-consensus (OLC) assembly model attempts to find all pairwise matches between reads, using sequence similarity as a metric for overlaps. A general layout is constructed and post-processed in various ways. Most notably, overlaps can be transformed into assembly graphs such as string graphs [7]. This method is flexible to read length and can be adapted to the diverse error models of different sequencing technologies. However, finding all overlaps is very expensive, in particular as read-sizes become large.

In de Bruijn graph strategies [8, 9], reads are deconstructed to fixed length k-mers, representing nodes. Edges are inserted between nodes that overlap on -mers. Ideally, a de Bruijn graph thus represents exactly one Eulerian path per chromosome, although this property is generally violated in practice due to sequencing errors even in the absence of repetitive elements. With the help of specialized hashing strategies, k-mers can be efficiently stored and constructed. Thus, de Bruijn graphs require much less memory than OLC strategies. An overall speed up can be attributed to the absence of an all-vs-all comparison step. However, as k has to be chosen smaller than read size, contiguity information is lost. With increasing error rates in reads, de Bruijn graphs tend to become less useful, as k-mers become also less accurate.

Long read only and hybrid assembly strategies also largely align to these two categories, although some more unique methods have emerged over the years. Canu [10] and Falcon [11] implement the classic OLC approach, albeit both error-correct long reads before creating a string graph. MinHash filters [12] can significantly reduce the costs of comparisons, but overall complexity remains high. Wtdbg2 [13] also follows OLC, but utilizes de Bruijn like graphs based on sparse k-mer mapping for comparison. It avoids all-vs-all mapping by matching reads that share k-mers under the assumption that even under high error rates correct pairs share more k-mer than those with spurious matches. Shasta [14] implements a full de Bruijn graph strategy by transforming k-mers into a run-length encoding that is more robust to sequencing errors in long reads. Newer versions of Canu also implement a similar encoding [15].

Classic de Bruijn methods have been adapted to combine both long and short reads into a hybrid assembly. Long reads can serve as “bridging elements” in the same way as mate pairs to resolve paths in (short read) assembly graphs [16, 17].

Under the assumption that short-read assemblies are cheap and reliable, various workflows have been proposed to integrate both kinds of data also for OLC-like approaches. As a general goal, these programs avoid the costly all-vs-all comparison to create the assembly graph with the help of various heuristics. MaSuRCA [18] attempts to join both long and short reads into longer super-reads by chaining unique k-mers, thereby reducing the number of reads that need to be tested for overlap. WENGAN [19] first creates full short-read contigs that are then scaffolded by synthetic mate pairs generated out of the long reads. Flye [20], even more uniquely, assembles intentionally erroneous contigs that are concatenated to a common sequence. Self-mapping then reveals repeats that can be resolved much like in a traditional assembly graph.

HASLR [21] defines an assembly-graph-like structure, that includes both short and long reads. Short reads are assembled into contigs that, after k-mer filtering to remove repeats, are aligned to long reads. In the resulting backbone graph, short-read contigs serve as nodes that are connected by an edge if they map onto the same long read. While different to e.g. string graphs, standard tip and bubble removal algorithms are applied to remove noise. Contigs are extracted as paths. TULIP [22] implements a very similar strategy, however, does not assemble short reads into full contigs. Instead, the gaps between mate-pairs are closed if possible with sufficiently rare k-mers, resulting in relative short but unique seeds that serve in the same capacity. In both cases, consensus construction of the resulting sequence is trivial. Edges define fixed regions on groups of long reads that can be locally aligned for each edge along a path.

DBG2OLC [23] is methodologically most closely related to LazyB. The two approaches, however, differ in several key features. DBG2OLC assembles short reads to full contigs, thereby avoiding repeat resolving techniques such as gap closing or scaffolding because these introduce too many errors. The short-read Contigs are then aligned against the long reads. Each long read implies a neighborhood of contigs. Mappings are corrected prior to graph construction via consistency checks over all neighborhoods for each contig, i.e., contigs are required to map in the same order on all long reads. This technique can help to remove both spuriously matched contigs and chimeric long reads, but requires sufficient coverage to allow for effective voting. Similar to LazyB, long reads serve as nodes in the DBG2OLC graph, with edges representing contigs mapping to two long reads. Nodes that map a subset of contigs of another node are removed as they are redundant. The resulting graph is error corrected by classic tip and bubble removal, after which paths are extracted as contigs, following the edge with the best overlap at each step. LazyB instead uses a step-wise procedure to extract paths that employs a series of heuristics to edit the initial overlap graph to a collection of paths.

LazyB implements an alternative approach to assembling genomes from a combination of long-read and short-read data. It not only avoids the expensive, direct all-vs-all comparison of the error-prone long-read data, but also the difficult mapping of individual short reads against the long reads, and the conventional techniques to error-correct de Bruijn or string graphs. As we shall see, its step-wise approach of processing the long-read overlap graph adds the benefit of producing quite good assemblies with surprisingly low requirements on the coverage of both short and long reads. Even at just 2× coverage of long reads the contiguity of assemblies of complex genomes can be significantly improved using our method. It lends itself in particular to the exploratory assembly of a large numbers of species, where cheap identification of functional islands is required rather then expensive finishing.

This contribution, which is a revised, updated, and extended version of a paper presented at WABI 2020 [24], is organized as follows. We first outline the overall strategy of LazyB and then describe the pre-processing of the short-read data and the mapping short-reads-derived unitigs to long reads. Similar to DBG2OLC and HASLR, LazyB operates on a long-read overlap graph whose edges are derived from partially assembled short-read sequences that map to multiple long-reads. The main innovation in is the Processing of the overlap graph, which proceeds by a series of heuristics inspired by properties of overlap graphs derived from ideal data, and avoids the commonly used techniques to correct assembly graphs. We then briefly describe the construction of the sequence assembly from the path decomposition of the overlap graph. Benchmarking results are reported for the assembly of yeast, fruitfly, and human genomes. We close with a discussion and an outlook to open problems and future improvements.

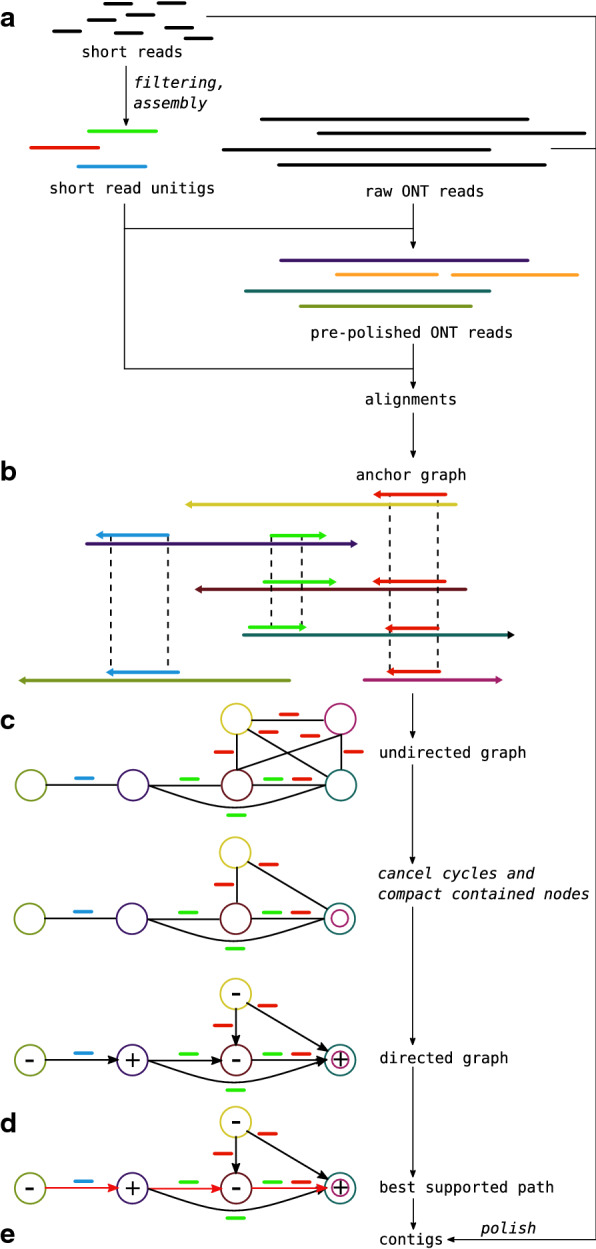

Strategy

LazyB does not pursue a “total data” approach. Instead, it identifies “anchors” that are nearly guaranteed to be correct and implements an, overall, greedy-like workflow to obtain very large long-read contigs. To this end, the initial overlap graph is first oriented and then edited in several consecutive steps to graph classes that more closely approach the desired final results, i.e., a union of paths. Conceptually, therefore, LazyB does not attempt solve a single global optimization problem but instead approximates as sequence of graph editing problems. This strategy of LazyB is outlined in Fig. 1.

Fig. 1.

Overview of the LazyB assembly pipeline. (a) Short Illumina reads are filtered to represent only near unique k-mers and subsequently assembled into unambiguous unitigs. Long Nanopore reads (ONT) can be optionally scrubbed to include only regions consistent to at least one other read. For larger data sets scrubbing can be handled on subsets efficiently. Mapping unitigs against Nanopore reads yields unique “anchors” between them (b). An undirected graph (c) is created by adding Nanopore reads as nodes and edges between all pairs of reads sharing an “anchor”. Each edge is assigned a relative orientation, depending on whether the “anchor” maps in the same direction on both Nanopore reads. Cycles with a contradiction in orientation have to be removed before choosing a node at random and directing the graph based on its orientation. As Nanopore reads that are fully contained within another do not yield additional data, they can be collapsed. Contigs are extracted as maximally supported paths for each connected component (d). Support in this context is defined by the number of consistent overlaps transitive to each edge. Final contigs (e) can be optionally polished using established tools

The key idea to obtain the overlap graph is to start from a collection of pre-assembled, high-quality sequences that are unique in the genome. These are obtained from accurate short-read sequencing data and serve as “anchors” to determine overlaps among the long reads . In practice, can be obtained by assembling Illumina data with low or moderate coverage to the level of unitigs only. The total genomic coverage of only needs to be large enough to provide anchors between overlapping long reads. This data is therefore rigorously filtered to be devoid of repetitive and highly similar sequences. Mapping a sequence against the set of long reads implies (candidate) overlaps between two long reads (as well as their relative orientation) whenever maps to both and . Thus we obtain a directed overlap graph G of the long reads without an all-vs-all comparison of the long reads.

A series of linear-time filtering and reduction algorithms then prunes first the underlying undirected overlap graph and then the directed version of the reduced graph. Its connected components are reduced to near-optimal directed acyclic graphs (DAGs) from which contigs are extracted as best-supported paths. In the following sections the individual steps will be described in detail. In comparison to DBG2OLC we avoid global corrections of short-read mappings, but instead rely on the accuracy of assembled unitigs and a series of local corrections. For this, we utilize previously unreported properties of the class of alignment graphs used by both tools. This allows LazyB to operate reliably even on very low coverage. Variations of the dataset dependent assembly options have little impact on the outcome. In contrast to the complicated setup of options required for tools such as DBG2OLC, LazyB comes with robust defaults.

Data preprocessing

A known complication of both PacBio and Nanopore technologies are chimeric reads formed by the artificial joining of disconnected parts of the genome [25] that may cause mis-assemblies [26]. Current methods dealing with this issue heavily rely on raw coverage [27] and hence are of little use for our goal of a low-coverage assembler. In addition, start- and end-regions of reads are known to be particularly error-prone [28]. We pre-filter low quality regions, but only consider otherwise problematic reads later at the level of the overlap graph.

Short-read unitig-level assembly

Short-read (Illumina) data is preprocessed by adapter clipping and trimming. The set of high quality fragments is obtained from a restricted assembly of the short-read data. The conventional use case of assembly pipelines aims to find a minimal set of contigs in trade-off to both correctness and completeness. For our purposes, however, completeness is of less importance and fragmented contigs are not detrimental to our workflow, as long as their lengths stay above a statistical threshold. Instead, correctness and uniqueness are crucial. We therefore employ two initial filtering steps:

(1) Using a k-mer profile, we remove all k-mers that are much more abundant than the expected coverage since these are likely part of repetitive sequences. This process can be fully automated.

(2) In order to avoid ambiguities, only branch-free paths are extracted from the short-read assembly graph. This feature is implemented e.g. in the de Bruijn graph assembler ABySS [29], which allows to assemble up to unitig stage. Moreover, a minimal path length is required for a unitig to serve as a secure anchor.

Since repeats in general lead to branch-points in the de Bruijn graph, repetitive sequences are strongly depleted in unitigs. While in theory, every such assembly requires a fine-tuned k-mer size, a well known factor to be influential on assembly quality, we found overall results to be mostly invariant of this parameter. To test this, we systematically varied the k-mer-size for ABySS. Nevertheless, we found little to no effect on the results of LazyB (Fig. 2). As assembly stops at unitigs, error rates and genome coverage stay within a narrow range as long as the unitigs are long enough.

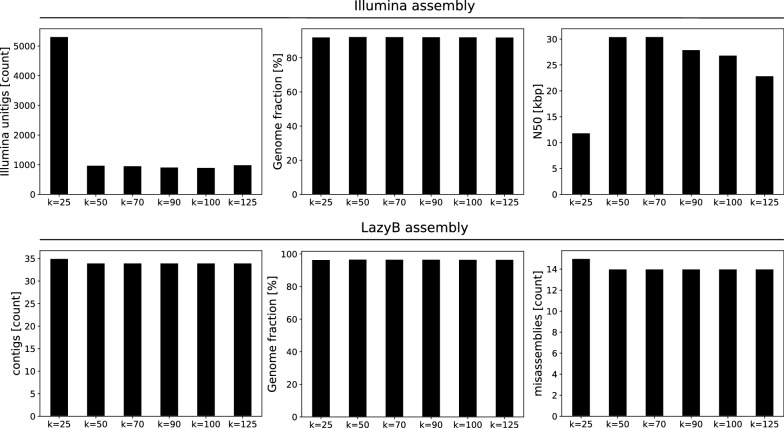

Fig. 2.

Assembly statistics as a function of the k-mer size used to construct unitigs from the short-read data for yeast. Top: Illumina unitigs (left: number of unitigs; middle: fraction of the reference genome covered; right: N50 values); bottom: final LazyB assembly at long reads (left: number of unitigs; middle: fraction of the reference genome covered; right: number of mis-assemblies)

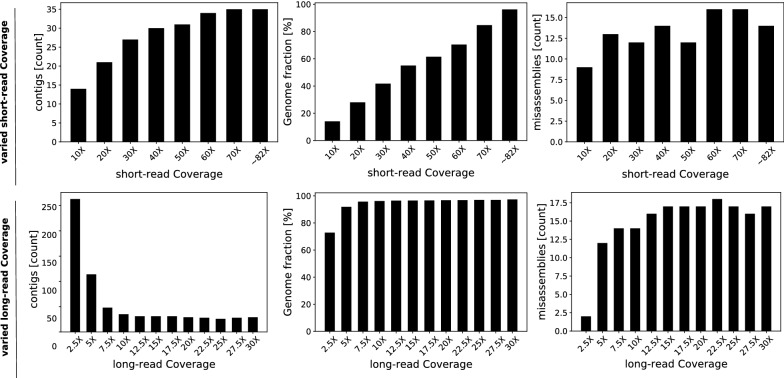

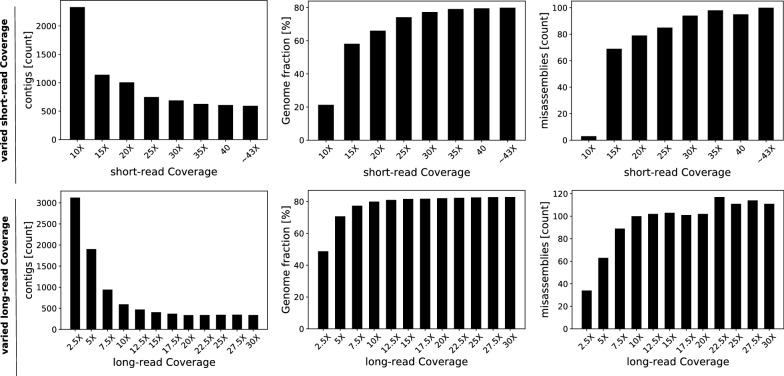

The strategies for filtering short-read data have a larger impact than the choice of the k-mer size for unitig assembly (Fig. 3). This is not surprising given that both chimeric unitigs and unitigs that harbor repetitive DNA elements introduce spurious edges into the long-read overlap graph G and thus negatively influence the assembly. In order to exclude short reads that contain highly frequent k-mers, the maximal tolerated occurrence has to be set manually and is dependent on the k-mer size. Setting the cut-off right next to the main peak in the profiles has turned out to be a good estimate. After assembling short reads, unitigs are mapped to long reads and a coverage profile over the length of every unitig is calculated. Unitigs with maximal coverage above interquartile range are considered outliers. However, regions below coverage threshold (Q3) spanning more than 500 bp can be “rescued”. This filter step effectively reduces ambiguous regions, in particular when no previous filtering has been applied (Fig. 4). Combining both short-read filters improves the assembly quality; see Table 1.

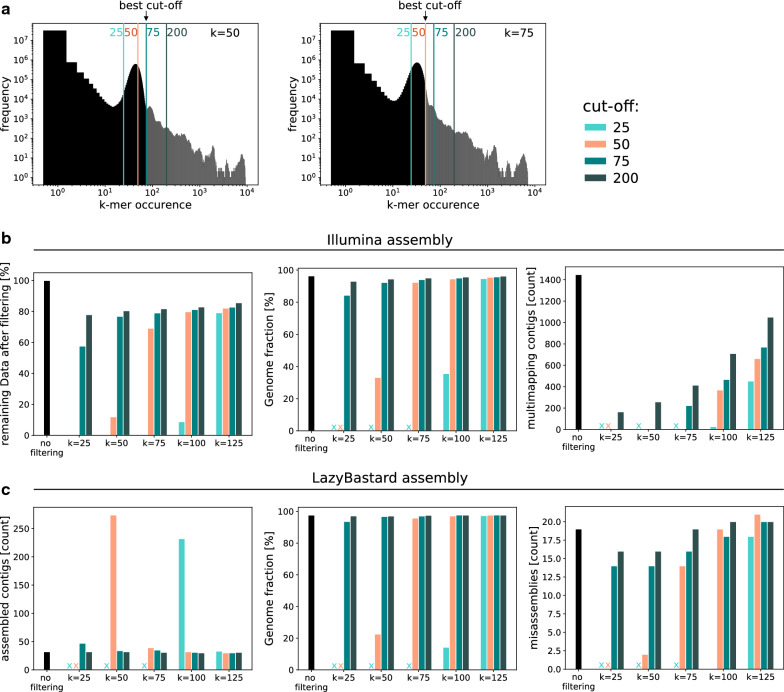

Fig. 3.

Assembly statistics of yeast as a function of the k-mer size and maximal occurrence cut-off used to remove very frequent k-mers from short reads prior to unitig assembly. (a) k-mer profiles for k = 50 bp and k = 75 bp. Cut-offs restrict short reads to different degrees. Note logarithmic axes. (b) Illumina unitigs (left: percentage of remaining short-read data; middle: fraction of the reference genome covered; right: number of unitigs mapping multiple times to reference). (c) Final LazyB assembly left: number of unitigs; middle: fraction of the reference genome covered; right: number of mis-assemblies). x: not enough data to assemble

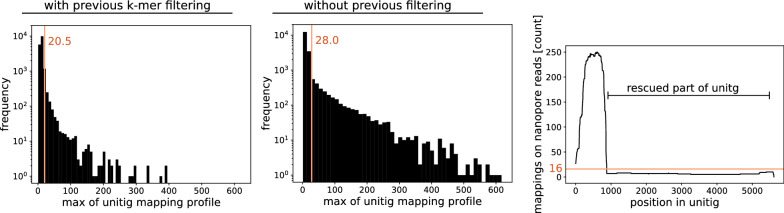

Fig. 4.

Exclusion of unitigs based on very high mapping coverage. Thresholds are IQR × 1.5+Q3. Shown are maximal values of coverage profiles for unitigs assembled with (left) and without (middle) previous k-mer filtering. Note the logarithmic axes. right: exemplary profile; only the high-coverage peak is excluded. Threshold is Q3

Table 1.

Impact of short-read filtering strategies on LazyB assembly quality in fruit fly

| Filter strategy | Compl. [%] | #ctg | #MA |

|---|---|---|---|

| no filter | 82.81 | 457 | 302 |

| k-mer filter | 80.66 | 567 | 104 |

| unitig filter | 80.71 | 563 | 108 |

| k-mer and unitig filter | 80.11 | 596 | 99 |

Column descriptions: completeness of the assembly, #ctg: number of contigs, #MA: number of mis-assemblies (breakpoints relative to the reference assembly)

Anchor alignments

The set of long reads is mapped against the unitig set. At present we use minimap2 [30] for this purpose. Regions or whole unitigs significantly exceeding the expected coverage are removed from , as described in the last section, because they most likely are repetitive or at least belong to families of very similar sequences such as coding sequences of multi-gene families. Note that all repetitive elements connected to a unique region within a single long read may still be correctly assembled.

Classic alignment tools perform poorly in the presence of high rates of insertions and deletions (InDels) [31]. Even methods specifically advertised for this purpose rely on scoring schemes that cannot accurately represent the extreme abundance of InDels in long-read data. Instead, they rely on seeds of high quality matches that are then chained with high error tolerance. Currently, minimap2 [30, 32] is one of the most commonly used tools for this purpose. Since we do not have a gold standard set of perfect data, we can only roughly estimate the influence of this heuristic on the LazyB alignment quality in a related experiment. Specifically, we tested consistency of anchor alignments on pairs of long reads to direct alignments of both reads for fruit fly. Consistency is validated at the level of relative orientation, the offset indicated by both alignment methods, the portion of overlap that can be directly aligned and whether direct alignment of the long reads is possible at all. Different relative orientations were observed only in very small numbers. Changes in the offset by more then 5% of the longer read length are equally rare (Fig. 5).

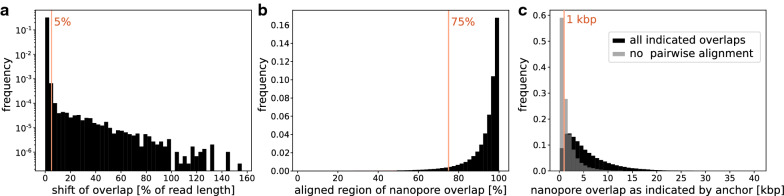

Fig. 5.

Consistency test of anchor-linked long-read overlaps to direct alignments of both reads on fruit fly. (a) Frequencies of shifted offsets (% of the longer read); changes up to 5% are tolerated; note logarithmic axis. (b) Frequencies of the percentage at which the direct alignment covers the overlap. A minimum of 75% is set for consistency. (c) Long read pairs where no direct alignment is possible tend to have shorter anchor-indicated overlaps. Connections that cannot be confirmed via direct alignments despite an expected overlap of at least 1 kbp are excluded

However, requiring a direct alignment of at least 75% of the overlap region marks 4.6% of the anchor links as incorrect. Removing these “incorrect” anchors, surprisingly, has a negative effect on the final LazyB assembly and in particular tends to break correct contigs apart; see Table 2. In our test set 7.7% of direct alignments of two anchor-linked long reads gave no result. In these cases, expected overlaps are rather short (Fig. 5). We therefore tested whether the assembly could be improved by excluding those connections between long reads for which no alignment could be calculated despite the presence of an overlap of at least 1 kbp (3.7%). We found, however, that this procedure also causes the loss of correct edges in G.

Table 2.

Assessment of different parameters to verify long-read overlaps and their impact on LazyB assembly quality on fruit fly

| Varification parameters | Compl.[%] | #ctg | #MA |

|---|---|---|---|

| Direction | 80.13 | 608 | 111 |

| Direction + offset | 80.08 | 622 | 103 |

| Direction + offset + incomplete mapping | 80.04 | 1263 | 121 |

| No mapping | 80.15 | 801 | 113 |

Overlaps are indicated by anchors and evaluated by pairwise long-read alignments. They are considered valid if: the relative direction suggested by the anchor matches that of the pairwise alignment (direction); the offset is sufficiently similar for both methods (offset); at least 75% of the overlap is found as direct alignment (incomplete mapping); the overlap indicated by the anchor is less than or equal to 1 kbp or a pairwise alignment is possible (no mapping). Column descriptions: completeness of the assembly, #ctg: number of contigs, #MA: number of mis-assemblies (breakpoints relative to the reference assembly)

Summarizing, we observe three facts: (1) The overwhelming number of pairs is consistent and therefore true. (2) Removing inconsistent edges from the assembly not only does not improve the results but results are worse on average. (3) While we can manually identify some incorrect unitig matches, the mappings produced by minimap2 are too inconsistent for proper testing. Since we have no proper methods to identify such false positives we also cannot properly estimate the number of false negatives, i.e., missing matches in the graph

, e.g. by computing a transitive completion.

, e.g. by computing a transitive completion.

Overall, out tests indicate that a high level trust in the anchors mapping in warranted. We also conclude that minimap2 is sufficient for our purposes. However, the data also suggest that the assembly would profit substantially from a more accurate handling of the overlap alignments. This remains a problem for future research at this point.

Long read overlap graph

As a result of mapping the short-read unitig to the long reads, we obtain a set of significant matches with matching scores that exceed a user-defined threshold . The long-read overlap graph G has the vertex set . Conceptually, two long reads overlap, i.e., there should be an undirected edge if and only if there is an such that and . The choice of therefore has an immediate effect on the resulting graph. Setting low will allow more false-postive edges to be introduced into the graph, as spurious matches become more likely. Higher values of improve the confidence of matches but may remove true edges. In the current prototype, we set the matching score as the number of exactly aligned basepairs in the match and require at least 500 such exact basepairs. With increasing accuracy of long read basecallers, long read mappers, unitig assembly, and possibly also dependent on the organism, this value is subject to change. In practice, we employ an overall more restrictive but robust procedure to introduce edges in order to reduce the danger of introducing false-positive edges into G, mitigating also effects of slightly sub-optimal choices of .

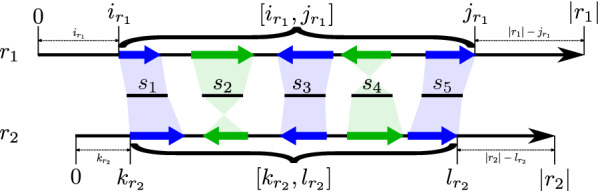

For two distinct long reads with a common , i.e., , we denote by [i, j] and [k, l], respectively, the sequence intervals on s that match intervals on and . The intersection is the interval if and the empty interval otherwise. A non-empty intersection corresponds to a direct match of and . The expected bit score for the overlap is estimated as

| 1 |

if . For we set . For a given edge there may be multiple significant matches, mediated by a set of unitigs . In ideal data all these matches are co-linear and consistent with respect their orientation. In real data, however, this may not be the case. It is necessary, therefore, to handle inconsistencies.

For each significant match we define the relative orientation of the reading directions of the short-read scaffold s relative to the long read r. The relative reading direction of the two long reads (as suggested by s) is thus .

The position of a significant match (s, r) defined on the unitig s on interval [i, j] corresponds to an interval on the long read r that is determined by the alignment of s to r. Due to the large number of randomly distributed InDels in the Nanopore data, the usual dynamic programming alignment strategies fail to produce accurate alignments. This is also the case for minimap2 [30], our preliminary choice, as it only chains short, high quality matches into larger intervals. Although more accurate alignments would of course improve the local error rate of the final assembled sequence, we expect very little impact on the overall assembly structure of the assembly from local details of the sequence alignments at (s, r) matches. We therefore record only the matching intervals and use a coordinate transformation that estimates the position for some by linear interpolation:

| 2 |

The values of are rounded to integers and used to determine intersections of matches. We write for the interval on r corresponding to an interval [i, j] of s.

Definition 1

Two unitigs in are consistent if (i) , (ii) the relative order of , on and , on is the same.

For distinct long reads , Definition 1 enables us to determine subsets of such that each is maximal with respect to inclusion and contains only unitigs that are pairwise consistent with respect to and . In addition, we may require that the difference between the distances of consecutive corresponding intervals on and , respectively, is sufficiently similar. Computing the set that maximizes the total bit score amounts to a classical chaining problem. It can can be solved by dynamic programming [33] in quadratic time w.r.t. the number of unitig-mediated matches. An edge is inserted into G if the optimal total bit score exceeds a user-defined threshold. The signature of the edge is the common value for all .

For each edge we furthermore determine such that is the minimal and is the maximal coordinate of the matching intervals on . Hence, the interval spans all matching intervals on . The corresponding pair of coordinates, and , spans the matching intervals on . In particular, the interval (resp. ) spans both matching intervals on if (resp. ). For the sake of a clear notation, let and be the “spanning” interval on , i.e., either or . Intervals and specify the known overlapping regions between and , see also Fig. 6 for an illustration. If then extends to the left if and to the right if . For the corresponding conditions are and , respectively. If does not extend to either side then is completely contained in and does not contribute to the assembly. Similarly, if extends on both sides, is fully contained in . In both cases we contract the edge between and in G. Otherwise, if extends to the left and extends to the right we record and analogously, we set if extends to the left and extends to the right.

Fig. 6.

Construction of the overlap of two long reads and (long black arrows) from all unitigs (short black bars) that match to both and . A significant match (s, r) of on is illustrated by blue and green thick arrows on r. The relative orientation of (s, r) is indicated by the direction of its arrow, that is, (resp. ) if its arrow points to the right (resp. left). The subsets (unitigs with blue significant matches) and (unitigs with green significant matches) of are both inclusion-maximal and consists of pairwise consistent unitigs. The set maximizes and thus determines the overlap. It implies . Moreover, (resp. ) is the minimal (resp. maximal) coordinate of significant matches of unitigs from on . The corresponding coordinates on are and , respectively. The spanning intervals and define the overlap of and . In this example we have and , implying that extends neither to the left or right and thus, edge is contracted in G

The result of this construction is a long-read-overlap graph G whose vertices are the non-redundant long reads and whose edges record (1) the relative orientation , (2) the bit score , (3) the local direction of extension, and (4) the overlapping interval.

Processing of the overlap graph

Consistent orientation of long reads

For perfect data it is possible to consistently determine the reading direction of each read relative to the genome from which it derives. This is not necessarily the case in real-life data. The relative orientation of two reads is implicitly determined by the relative orientation of overlapping reads, i.e., by the signature of the edge . To formalize this idea we consider a subset and define the orientation of D as . For a disjoint union of two edge sets D and we therefore have

and, more generally, their symmetric different satisfies since the edges in appear twice in and thus each of these edges contributes a factor .

and, more generally, their symmetric different satisfies since the edges in appear twice in and thus each of these edges contributes a factor .

Definition 2

Two vertices are orientable if holds for any two paths P and connecting and in G. We say that G is orientable if all pairs of vertices in G are orientable.

Lemma 3

G is orientable if and only if every cycle C in G satisfies .

Proof

Let be two vertices of G and write for the set of all cycles that contain r and . If or , then r and are orientable by definition. Now assume , , and consider a cycle . Clearly, C can be split into two edge-disjoint path and both of which connect r and . If r and are orientable, then and thus . If r and are not orientable, then there is a pair of paths and connecting r and such that . Since

is an edge-disjoint union of cycles we have and thus there is least one cycle with in G.

is an edge-disjoint union of cycles we have and thus there is least one cycle with in G.

The practical importance of Lemma 3 is the implication that only a small set of cycles needs to be considered, because every cycle in G can be obtained as an -sum of cycles in a cycle basis [34, 35]. Every graph G with c connected components has a cycle basis comprising cycles. Particular cycles bases, known as Kirchhoff bases, are obtained from a spanning tree T of G as the set of cycles consisting of the edge and the unique path in T connecting the endpoints of e [36]. Every cycle C of G can then be written as , see e.g. [35].

Theorem 4

Let G be a graph with signature on its edges, and let be a cycle basis of G. Then G is orientable if and only if for all .

Proof

The theorem follows from Lemma 3 and the fact that every cycle C in G can be written as an -sum of basis cycles, i.e., for every cycle in C if and only if for every basis cycle .

Thm. 4 suggests the following, conservative heuristic to extract an orientable subgraph from G:

- (1)

Construct a maximum weight spanning tree of G by using the -scores as edge weights. Tree can easily be obtained using, e.g., Kruskal’s algorithm [37].

- (2)

Construct a Kirchhoff cycle basis from .

- (3)

For every cycle , check whether . If so, find the -minimum weighted edge and remove it from E(G) and (possibly) from if .

We delete the offending edge because it is very unlikely that the preprocessing correctly identified that two long reads overlap but failed to determine the correct relative orientation. The edge deletion is simplified by the following observation:

Lemma 5

If T is maximal -weight spanning tree of T and end e is a non-tree edge, then .

Proof

Let by a tree edge in the cycle . Then is again a spanning tree of G since the vertex set is still connected and contains not cycle. Its weight it weight is , since T is a maximum weight spanning tree by assumption. Thus , i.e., e has minimum -weight.

As a consequence, the minimum weight edge of an offending cycle is always the non-tree edge. Step (3) above therefore reduces to finding the basis edges with negative signature cycles and to remove these edges. The removal of leaves unchanged and thus does not affect the contiguity of the assembly. The end result of the procedure outlined above is therefore a connected subgraph and a spanning forest for .

Lemma 6

Let G be an undirected connected graph with signature and let be the residual graph produced by the heuristic steps (1)-(3). Then (i) is connected, (ii) is orientable, and (iii) is an -maximal spanning tree of .

Proof

(i) By Lemma 5, , hence is a spanning tree of and thus is connected. (ii) Since the heuristic removes all non-tree edges e with , Thm. 4 implies that is orientable. (iii) Since , Kruskal’s maximum weight spanning tree algorithm will pick the same spanning tree edges again from , and is an -maximal spanning tree.

In order to expedite the identification of edges that violate orientability in G, we define an orientation for the vertices of G, i.e., the long reads. To this end, we pick an arbitrary as reference and set . Denote by the unique path from to r and define .

Lemma 7

If G is a connected orientable graph with signature , then the vertex orientation with reference is independent of the choice of the spanning tree T.

Proof

Let P be an arbitrary path connecting r and . By connectedness, such a path exists and since G is orientable w.r.t. we have . Furthermore r and are connected by the backbone of any spanning tree of T, is independent of the choice of T.

As an immediate consequence we observe:

Corollary 8

If G is an orientable graph with signature and vertex orientation , then every pair of adjacent vertices satisfies .

It follows that the heuristic to extract an orientable subgraph can be implemented in linear time:

- (1)

An -maximal spanning tree is obtained in time using Kruskal’s algorithm.

- (2)

The vertex orientation is computed by traversal of the spanning tree in time.

- (3)

For each , one checks in constant time whether and if so deletes the edge . The total effort is therefore .

We remark that one could now define a graph , obtained from G by inverting all long-reads r with a negative orientation . This amounts to replacing each long read r by its reverse complement. Since processing of the overlap graph does not explicitly consider the sequence information, it would be sufficient to replace the coordinates [p, q] of a match interval by and to invert the directionality of extension by another long read. The bit scores of matches, of course, remain unchanged. In all edge signatures are . It is not necessary, however, to construct explicitly. Instead, we simply keep track of the vertex orientations .

From here on, we again write G for the orientable graph .

Reduction to a directed acyclic graph

We next make use of the direction of extension of long read and defined by the mutual overhangs in the case that is an edge in G. We write

for the directed version of a connected component G of the residual graph constructed above. For each edge we create the corresponding edge (

for the directed version of a connected component G of the residual graph constructed above. For each edge we create the corresponding edge (

) as

) as

| 3 |

Suppose the data used to construct

are free of repetitive sequences and contain no false-positive overlaps. In such perfect data,

are free of repetitive sequences and contain no false-positive overlaps. In such perfect data,

is a directed interval graph. Since we have contracted edges corresponding to nested reads (i.e., intervals),

is a directed interval graph. Since we have contracted edges corresponding to nested reads (i.e., intervals),

is in fact a proper interval graph or indifference graph [38]. In addition

is in fact a proper interval graph or indifference graph [38]. In addition

is directed in a manner consistent with the ordering of the intervals. More precisely, there is an ordering of the vertices (long reads) that satisfies the umbrella property [39]: and (

is directed in a manner consistent with the ordering of the intervals. More precisely, there is an ordering of the vertices (long reads) that satisfies the umbrella property [39]: and (

) implies (

) implies (

). We can interpret to mean that extends to the left, i.e., towards smaller coordinate values in the final assembly. A “normal interval representation” and a linear order of the reads can be computed in time [40, 41] for proper interval graphs.

). We can interpret to mean that extends to the left, i.e., towards smaller coordinate values in the final assembly. A “normal interval representation” and a linear order of the reads can be computed in time [40, 41] for proper interval graphs.

Due to the noise in the data, however, we have to expect that the original overlap graph only approximates a proper interval graph. On the other hand, we have already obtained an orientation of the edges that – in ideal data – would be consistent with interval order. We therefore consider necessary conditions for directed indifference graphs and set out to enforce them.

First, we observe that

should be acyclic. Orientability w.r.t. a signature , does not guarantee acyclicity since

should be acyclic. Orientability w.r.t. a signature , does not guarantee acyclicity since

still may contain some spurious “back-linking” edges due to unrecognized repetitive elements. The obvious remedy is to remove a -minimal set of directed edges. This amounts to solving an feedback arc set problem, which is known to be NP-complete in both weighted and unweighted versions, see [42] for a recent overview. We therefore resort to a heuristic that makes use of our expectations on the structure of

still may contain some spurious “back-linking” edges due to unrecognized repetitive elements. The obvious remedy is to remove a -minimal set of directed edges. This amounts to solving an feedback arc set problem, which is known to be NP-complete in both weighted and unweighted versions, see [42] for a recent overview. We therefore resort to a heuristic that makes use of our expectations on the structure of

: In general we expect multiple overlaps of correctly placed reads, i.e., r is expected to have several incoming edges from its predecessors and several outgoing edges exclusively to a small set of succeeding reads. In contrast, we expect incorrect edges to appear largely in isolation. This suggests to adapt Khan’s topological sorting algorithm [43]. In its original version, it identifies a source u, i.e., a vertex with in-degree 0, appends it to the list W of ordered vertices, and then deletes all its out-edges. It stops with “fail” when no source can be found before the sorting is complete, i.e., W does not contain all vertices of the given graph, indicating that a cycle has been encountered. We modify this procedure in two ways:

: In general we expect multiple overlaps of correctly placed reads, i.e., r is expected to have several incoming edges from its predecessors and several outgoing edges exclusively to a small set of succeeding reads. In contrast, we expect incorrect edges to appear largely in isolation. This suggests to adapt Khan’s topological sorting algorithm [43]. In its original version, it identifies a source u, i.e., a vertex with in-degree 0, appends it to the list W of ordered vertices, and then deletes all its out-edges. It stops with “fail” when no source can be found before the sorting is complete, i.e., W does not contain all vertices of the given graph, indicating that a cycle has been encountered. We modify this procedure in two ways:

First, if multiple sources are available in a given step, we always pick the one with largest total -weight of edges incoming from the sorted set W. As a consequence, incomparable paths in

are sorted contiguously, i.e., a new path is initiated only after the previous one cannot be continued any further. Note that keeping track of the total input weight from W does not alter the running time of the Kahn’s algorithm.

are sorted contiguously, i.e., a new path is initiated only after the previous one cannot be continued any further. Note that keeping track of the total input weight from W does not alter the running time of the Kahn’s algorithm.

Second, we replace the “fail” state by a heuristic to find an “almost source” to continue the sorting. Denote by the out-neighborhood of the set W that has been sorted so far and consider the set the not-yet-sorted out-neighbors of W. These are the natural candidates for the next source. For each we distinguish incoming edges xu from , , and and consider two cases:

- (1)

There is a without an in-edge xu from some other . Then we choose among all vertices of this type the vertex with the largest total -weight incoming from W because then overlaps with most of the previously sorted reads.

- (2)

If for each there is an in-edge xu from some other , then the candidate set K forms a strongly connected digraph. In this case we choose the candidate with the largest difference of -weights incoming from W and K, i.e., .

In either case, we add the edges incoming from into to the set F of edges that violate the topological order. It is clear from the construction that (i) F remains empty if

is a DAG since in this case a source is available in each step, and (ii) the graph

is a DAG since in this case a source is available in each step, and (ii) the graph

obtained by from

obtained by from

by deleting the edges in F is acylic since all in-edges to u in

by deleting the edges in F is acylic since all in-edges to u in

emanate from W, the set of vertices sorted before u, and all out-edges from u point to the as yet unsorted set. Thus F is a feedback arc set for

emanate from W, the set of vertices sorted before u, and all out-edges from u point to the as yet unsorted set. Thus F is a feedback arc set for

.

.

Lemma 9

The modified Kahn algorithm can be implemented to run in time.

Proof

Our modified Kahn algorithm keeps the not-yet-sorted vertices in a priority queue instead of a simple queue. The priority of a vertex depends on the number of total -scores of the in-edges wu with , , and respectively. Every time a vertex v is added to W, these values have to be updated for the out-neighbors . Each update only comprises of the addition or subtraction of and the decision whether the second and/or third values are zero, and thus require total time . Highest priority is given to vertices u with , i.e., true sources, next vertices with , and the last tier is formed by the remaining vertices. Assuming an efficient implementation of the priority queue as a heap, the total effort for its maintenance is O(E) plus for the dequeuing operations, see e.g. [44, 45].

It is possible that

is not connected. In this case, each connected component can be processed independently in subsequent processing steps. If the feedback set F is disjoint from , then is still a -maximal spanning tree of

is not connected. In this case, each connected component can be processed independently in subsequent processing steps. If the feedback set F is disjoint from , then is still a -maximal spanning tree of

. Otherwise, edges in need to be replaced. Lemma 5 that the replacement edges have to be drawn from non-tree edges between the vertex sets spanned by the connected components of . In principle, this can be done efficiently with specialized data structures for dynamic connectivity queries, in particular if is small [46]. However, the effort to run Kruskal’s algorithm again on

. Otherwise, edges in need to be replaced. Lemma 5 that the replacement edges have to be drawn from non-tree edges between the vertex sets spanned by the connected components of . In principle, this can be done efficiently with specialized data structures for dynamic connectivity queries, in particular if is small [46]. However, the effort to run Kruskal’s algorithm again on

is by no means prohibitive, since the update has to be done only once.

is by no means prohibitive, since the update has to be done only once.

Golden paths

For perfect data, the directed proper interval graph

has a single source and a single sink vertex, corresponding to the left-most and right-most long reads and , respectively. Furthermore, every directed path connecting and is a golden path, that is, a sequence of overlapping intervals that covers the entire chromosome. Even more stringently, every read has at least one predecessor and at least one successor in

has a single source and a single sink vertex, corresponding to the left-most and right-most long reads and , respectively. Furthermore, every directed path connecting and is a golden path, that is, a sequence of overlapping intervals that covers the entire chromosome. Even more stringently, every read has at least one predecessor and at least one successor in

. An undirected graph is a proper interval graph if there is a set of intervals, corresponding to the vertices, such that (i) no interval is properly contained within another, and (ii) two vertices are adjacent iff their intervals intersect. For perfect data, therefore, the overlap graph is a proper interval graph.

. An undirected graph is a proper interval graph if there is a set of intervals, corresponding to the vertices, such that (i) no interval is properly contained within another, and (ii) two vertices are adjacent iff their intervals intersect. For perfect data, therefore, the overlap graph is a proper interval graph.

Lemma 10

[47] A connected proper interval graph has a unique vertex order (other than the reversal of the order).

The vertex order of a connected proper interval graph is therefore completely determined by fixing the orientation of single edge. In our case, the orientation is fixed by . We choose the ascending vertex order, i.e., for every directed edge . A proper interval graph with such an orientation of edges is a directed proper interval graph.

For perfect data, therefore,

is directed proper interval graph and thus it would suffice to compute the unique topological sorting of

is directed proper interval graph and thus it would suffice to compute the unique topological sorting of

. For real-life data, however, we cannot expect that even the acyclic graph

. For real-life data, however, we cannot expect that even the acyclic graph

is a directed proper interval graph. Ploidy in eukaryotes may constitute a valid exception to this assumption, as differences in chromosomes ideally also cause diverging structures. However, given the high error rate of long reads, low sequence variation can only be differentiated in very high coverage scenarios or with the help of known ancestral relationships [48]; both are explicitly not targeted by LazyB. In practice, ploidy is commonly reduced even when sufficient coverage is available but can be recovered via variant calling [49]. High accuracy short read assemblies originating from different alleles can be expected to match equally well to the same long reads given their low quality. Therefore, also ploidy variation will normally be merged to a single consensus. Accordingly, we did not detect any mayor duplication issues in the human, fly, or yeast.

is a directed proper interval graph. Ploidy in eukaryotes may constitute a valid exception to this assumption, as differences in chromosomes ideally also cause diverging structures. However, given the high error rate of long reads, low sequence variation can only be differentiated in very high coverage scenarios or with the help of known ancestral relationships [48]; both are explicitly not targeted by LazyB. In practice, ploidy is commonly reduced even when sufficient coverage is available but can be recovered via variant calling [49]. High accuracy short read assemblies originating from different alleles can be expected to match equally well to the same long reads given their low quality. Therefore, also ploidy variation will normally be merged to a single consensus. Accordingly, we did not detect any mayor duplication issues in the human, fly, or yeast.

Our aim now is to approximate the DAG

by a disjoint union of connected directed proper intervals graphs. To gain some intuition for this task, we first consider reductions of directed graphs that expose longest paths.

by a disjoint union of connected directed proper intervals graphs. To gain some intuition for this task, we first consider reductions of directed graphs that expose longest paths.

A transitive reduction

of some directed graph

of some directed graph

is a subgraph of

is a subgraph of

with as few edges as possible such that two vertices x and y are connected by a directed path in

with as few edges as possible such that two vertices x and y are connected by a directed path in

if and only if they are connected by a directed path in H [50, 51]. It is well-known that every directed acyclic graph has a unique transitive reduction [51, Thm. 1]. This property allows us to call an edge e of an acyclic digraph

if and only if they are connected by a directed path in H [50, 51]. It is well-known that every directed acyclic graph has a unique transitive reduction [51, Thm. 1]. This property allows us to call an edge e of an acyclic digraph

redundant if (

redundant if (

). Unfortunately, computation of the transitive reduction requires time in sparse graphs and , where is the matrix multiplication constant. This is impractical for our purposes.

). Unfortunately, computation of the transitive reduction requires time in sparse graphs and , where is the matrix multiplication constant. This is impractical for our purposes.

As a simpler analog of transitive reduction, we define the triangle reduction

of H as the digraph obtained from

of H as the digraph obtained from

by removing all edges (

by removing all edges (

) for which there is a vertex v with (

) for which there is a vertex v with (

).

).

Lemma 11

If

is a connected directed proper interval graph then (i)

is a connected directed proper interval graph then (i)

is a path, and (ii)

is a path, and (ii)

=

=

.

.

Proof

By Lemma 10,

has a unique topological sorting, i.e., is a unique total order. Property (ii) now is an immediate consequence of the umbrella property, and (iii) follows from the fact the transitive reduction is a subgraph of the triangle reduction and preserves connectedness.

has a unique topological sorting, i.e., is a unique total order. Property (ii) now is an immediate consequence of the umbrella property, and (iii) follows from the fact the transitive reduction is a subgraph of the triangle reduction and preserves connectedness.

As an immediate consequence of Lemma 11 we observe that if

is a connected induced subgraph of a directed proper interval graph

is a connected induced subgraph of a directed proper interval graph

, then

, then

is an induced path in the triangle reduction

is an induced path in the triangle reduction

of

of

. Of course, Lemma 11 does not imply that the triangle reduction

. Of course, Lemma 11 does not imply that the triangle reduction

is a path. It serves as motivation, however, to identify long-read contigs as maximal paths in the triangle reduction

is a path. It serves as motivation, however, to identify long-read contigs as maximal paths in the triangle reduction

of the directed acycling graph

of the directed acycling graph

. Since the topological sorting along any such path is unique, it automatically identifies all redundant non-triangle edges along a path.

. Since the topological sorting along any such path is unique, it automatically identifies all redundant non-triangle edges along a path.

We note that it is not necessary to first compute the transitive or triangle reduction if one is only interested in the maximal paths.

Lemma 12

Let

be a directed acyclic graph with triangle reduction

be a directed acyclic graph with triangle reduction

and transitive reduction

and transitive reduction

. Then

P

is a maximal path in

. Then

P

is a maximal path in

if and only if it is a also maximal path in

if and only if it is a also maximal path in

or

or

.

.

Proof

Every maximal path in

connects a source with a sink, since otherwise it could be extended at one the the ends. Now suppose that a longest path P contains an edge that this not contained in the transitive reduction. By definition, then there is a path of length at least 2 from and , and since H is acyclic, no vertex in lies along the path P. Thus obtained from P by replacing e with is again a path, which is strictly longer then P, contradicting the assumption that P was maximal. Thus P is contained

connects a source with a sink, since otherwise it could be extended at one the the ends. Now suppose that a longest path P contains an edge that this not contained in the transitive reduction. By definition, then there is a path of length at least 2 from and , and since H is acyclic, no vertex in lies along the path P. Thus obtained from P by replacing e with is again a path, which is strictly longer then P, contradicting the assumption that P was maximal. Thus P is contained

and

and

. Since

. Since

and

and

is a subgraph of

is a subgraph of

and P is maximal in

and P is maximal in

, it is also maximal in

, it is also maximal in

and

and

.

.

We note, furthermore, that the modified Kahn algorithm described above has the useful side effect of producing long runs of consecutive vertices . These can be used to effectively reduce the graph

by removing all arcs connecting non-consecutive vertices with any such run.

by removing all arcs connecting non-consecutive vertices with any such run.

The longest path terminating in a given vertex x can be computed with effort [52], suggesting that the explicit computation of reductions will not be helpful in practice. It also does not address the issue that the triangle-reduction

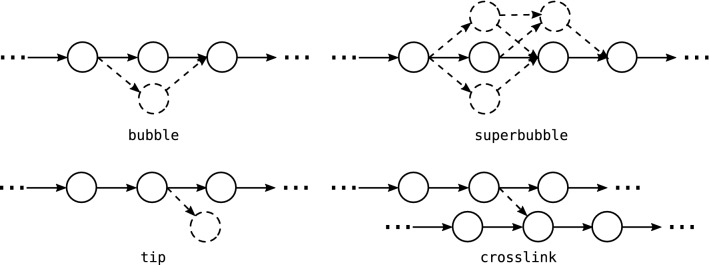

differs from a unique golden path by bubbles, tips, and crosslinks, see Fig. 7. Tips and bubbles predominantly are caused by edges that are missing e.g. due to mapping noise between reads that belong to a shared contig region. Remember that ploidy is collapsed to one haplotype due to the high error rates of long reads. Hence, any path through a bubble or superbubble yields essentially the same assembly of the affected region and thus can be chosen arbitrarily whereas tips may prematurely end a contig. Node-disjoint alternative paths within a (super-)bubble [53] start and end in the neighborhood of the original path. Tips either originate or end in neighborhood of the chosen path. As tips themselves may also be subject to mild noise, and crosslinks may occur near the start- or end-sites of the true paths, both are not always easily distinguished. Crosslinks represent connections between two proper contigs by spurious overlaps, caused, e.g., by repetitive elements that have escaped filtering. As crosslinks can occur at any position, a maximal path may not necessarily follow the correct connection and thus may introduce chimeras into the assembly.

differs from a unique golden path by bubbles, tips, and crosslinks, see Fig. 7. Tips and bubbles predominantly are caused by edges that are missing e.g. due to mapping noise between reads that belong to a shared contig region. Remember that ploidy is collapsed to one haplotype due to the high error rates of long reads. Hence, any path through a bubble or superbubble yields essentially the same assembly of the affected region and thus can be chosen arbitrarily whereas tips may prematurely end a contig. Node-disjoint alternative paths within a (super-)bubble [53] start and end in the neighborhood of the original path. Tips either originate or end in neighborhood of the chosen path. As tips themselves may also be subject to mild noise, and crosslinks may occur near the start- or end-sites of the true paths, both are not always easily distinguished. Crosslinks represent connections between two proper contigs by spurious overlaps, caused, e.g., by repetitive elements that have escaped filtering. As crosslinks can occur at any position, a maximal path may not necessarily follow the correct connection and thus may introduce chimeras into the assembly.

Fig. 7.

Examples of assembly graph defects in

. Given two nodes

. Given two nodes

, an path is a path starting in s and ending in t. A simple bubble consists of two vertex disjoint paths. This construct can be extended to super-bubbles, defined as a set of paths, exactly including all nodes reachable from s without passing t and vice versa. Bubbles and superbubbles are primarily the result of unrecognized overlaps. Tips are “side branches” that do not reconnect with the dominating paths and thus have distinct end-points. Crosslinks, finally, are connecting edges between two golden paths

, an path is a path starting in s and ending in t. A simple bubble consists of two vertex disjoint paths. This construct can be extended to super-bubbles, defined as a set of paths, exactly including all nodes reachable from s without passing t and vice versa. Bubbles and superbubbles are primarily the result of unrecognized overlaps. Tips are “side branches” that do not reconnect with the dominating paths and thus have distinct end-points. Crosslinks, finally, are connecting edges between two golden paths

We therefore have to expect that solving the longest path problem on

will sometimes follow spurious edges rather than locally more plausible choices since these may lead to overall shorter paths. As a remedy, we therefore aim to resolve the path choices based on local information. More precisely, we measure how well an edge e fits into a local region that forms an induced proper interval graph. Recall that a tournament is an orientation of a complete graph, and is called transitive if and only if it is acyclic [54].

will sometimes follow spurious edges rather than locally more plausible choices since these may lead to overall shorter paths. As a remedy, we therefore aim to resolve the path choices based on local information. More precisely, we measure how well an edge e fits into a local region that forms an induced proper interval graph. Recall that a tournament is an orientation of a complete graph, and is called transitive if and only if it is acyclic [54].

Lemma 13

If

is a directed proper interval graph, then the subgraph

is a directed proper interval graph, then the subgraph

induced by the closed outneighborhood

is a transitive tournament.

induced by the closed outneighborhood

is a transitive tournament.

Proof

By definition there is an arc from

r

to every

. Furthermore, we already know that

has a unique topological ordering. The umbrella property therefore implies that there is an arc from

u

to

v

whenever

u

preceeds

v

in the unique topological ordering. Thus

has a unique topological ordering. The umbrella property therefore implies that there is an arc from

u

to

v

whenever

u

preceeds

v

in the unique topological ordering. Thus

is a transitive tournament.

is a transitive tournament.

For ideal data, the out-neighborhoods

form transitive tournaments, and their triangle reductions form induced subpath of

form transitive tournaments, and their triangle reductions form induced subpath of

. In fact, collecting results from the literature, it can be shown that is also necessary:

. In fact, collecting results from the literature, it can be shown that is also necessary:

Theorem 14

A connected directed graph H is a directed proper interval graph if and only if the out-neighorhood is complete (and hence a transitive tournament) for every and forms an interval in the (unique) vertex order.

Proof

The equivalence of proper interval graphs and so-called closed graphs is shown in [55]. By definition, H is closed if it has so-called closed vertex ordering equivalent to the umbrella property [55]. Prop.2.2 in [56] states that a vertex ordering is closed if and only if the out-neighborhood is complete and forms an interval w.r.t. . Together with the forward-orientation of the edges of H w.r.t. , this in particular implies that is transitive tournament.

An analogous result holds for the in-neighbors. Equivalently, proper interval graphs are also characterized by the fact that they admit a straight vertex order in which the in-neighbors of r, r itself, and then the out-neighbors of r appear consecutively [47].

For real data the subgraph

induced by the out-neighbors of r will in general violate the transitive tournament property. The problem of finding the maximum transitive tournament in an acyclic graph is NP-hard [57]. An approximation can be obtained, however, using the fact that a transitive tournament has a unique directed Hamiltonian path. Thus candidates for transitive tournaments in

induced by the out-neighbors of r will in general violate the transitive tournament property. The problem of finding the maximum transitive tournament in an acyclic graph is NP-hard [57]. An approximation can be obtained, however, using the fact that a transitive tournament has a unique directed Hamiltonian path. Thus candidates for transitive tournaments in

can be retrieved efficiently as the maximal path in

can be retrieved efficiently as the maximal path in

that connects r with an endpoint q, i.e., a vertex without an outgoing edge within

that connects r with an endpoint q, i.e., a vertex without an outgoing edge within

. Using the topological order of

. Using the topological order of

, the maximal paths can be traced back in time for each endpoint .

, the maximal paths can be traced back in time for each endpoint .

For we compute the number

. The subgraph

. The subgraph

with the largest value of serves as approximation for the maximal transitive tournament with r as its top element. Its edge set

with the largest value of serves as approximation for the maximal transitive tournament with r as its top element. Its edge set

is used to define the interval support of an edge

is used to define the interval support of an edge

as

as

| 4 |

Here, d(r, e) is the minimal number of edges in the unique path from r to e in the path formed by the edges in . The interval support can be interpreted as the number of triangles that support e as lying within an induced proper interval graph. Importantly, it suffices to compute for

. The idea is now to choose, at every vertex r with more than one successor or precedssor in

. The idea is now to choose, at every vertex r with more than one successor or precedssor in

the edges in and that have the maximal interval support. We observed empirically that determining the best path by greedily optimizing at branch points results in contigs with a better solution quality compared to optimizing the weight of the spanning tree edges of . Taken together, we arrive at the following heuristic to iteratively extract meaningful paths:

the edges in and that have the maximal interval support. We observed empirically that determining the best path by greedily optimizing at branch points results in contigs with a better solution quality compared to optimizing the weight of the spanning tree edges of . Taken together, we arrive at the following heuristic to iteratively extract meaningful paths:

- (i)

Find a maximal path in

such that at every junction, we choose the incoming and outgoing edges e with maximal interval support .

such that at every junction, we choose the incoming and outgoing edges e with maximal interval support .- (ii)

Add the path to the contig set if it is at least two nodes long and neither the in-neighborhood nor the out-neighborhood is already marked as “visited” in

. Otherwise, we have found a tip if one of or was visited before and a bubble if both were visited. Such paths are assumed to have arisen from more complex crosslinks and can be added to the contig set if they exceed a user-defined minimum length.

. Otherwise, we have found a tip if one of or was visited before and a bubble if both were visited. Such paths are assumed to have arisen from more complex crosslinks and can be added to the contig set if they exceed a user-defined minimum length.- (iii)

The path is marked “visited” in

and all corresponding nodes and edges are deleted from

and all corresponding nodes and edges are deleted from

.

.- (iv)

The procedure terminates when

is empty.

is empty.

As the result, we obtain a set of paths, each defining a contig.

Post processing of the path decomposition

Consensus sequence

The final step is the retrieval of a consensus sequence for each path . This step is more complicated than usual due to the nature of our initial mappings. While we enforce compatible sets of unitigs for each pair of long reads, a shared unitig between edges does not necessarily imply the same genomic coordinate. We have to consider four distinct situations: (i) Unitigs can be long enough that we gain triples such that an exists but and share no interval on s. Such triples can occur chained. (ii) Unitigs of genomic repeats may remain in the data. Such unitigs may introduce pairwise distinct edges that appear in this order, denoted by , along the path such that and but , therefore creating disconnected occurrences of s. (iii) Similarly, proximal repeats may cause inversions in the order of two unitigs , w.l.o.g . This scenario cannot appear on neighboring edges, as the shared node has a unique order of s and . Hence, either s or must be missing in an intermediary edge due to the consistency constraints in the original graph, resulting in a situation as described in (ii). (iv) Finally, true matches of unitigs may be missing for some long reads due to alignment noise, which may also yield a situation as in (ii).

To address (i), we collect all instances of a unitig in the path independent of its context. We create an undirected auxiliary graph with a vertex set . We add edges for all edge-pairs that share an overlap in s. Any clique in this graph then represents a set of edges that share a common interval in s. We assign each edge a unique cluster index , according to a minimal size clique decomposition. As finding a set of maximal cliques is NP-hard, we instead resort to a heuristic [58]. We address (ii-iv) with the help of a second index , where for two edges if and only if an edge exists such that and .

Finally, we can now create a multigraph M consisting of vertex triples . We add edges if and only if on an edge e and no element exists such that . The resulting graph M is cycle free and thus uniquely defines the positions of all unitigs. Nodes represent the sequence of the common interval on the unitig s as attributed to the clique . Edges represent the respective sequence of long reads between s and , or a negative offset value if unitigs overlap. We take an arbitrary node in M and set its interval as the reference point. Positions of all other nodes are progressively built up following a topological order in this graph. If multiple edges exist between two nodes in this process an arbitrary but fixed edge is chosen to estimate the distance between nodes.

At this point, all sequence features are embedded in the same coordinate system. The reference contig is obtained as an in principle arbitrary projection of the read sequences. In practice, the short-read unitigs are used wherever available because of their much higher sequence quality. At the same time, we can map the features of each long read to their respective position in this newly constructed reference. This information can be directly fed into consensus based error correction systems such as racon [59].

Benchmarking

To demonstrate the feasibility of our assembly strategy we applied LazyB to data sets from previously published benchmarks of Nanopore assemblies. For yeast (S. cerevisiae) we used Nanopore sets ERR1883389 for lower coverage, ERR1883399 for higher coverage, and short-reads set ERR1938683, all from bioproject PRJEB19900 [60]. For comparison we used the reference genome R64.2.1 of strain S288C from the SGD. For fruit fly (D. melanogaster) we used the Oxford Nanopore and Illumina raw data of bioproject PRJNA433573 [61], and the FlyBase reference genome 6.30 (http://www.flybase.org). For Human we uses accession SRX6356866-8 of bioproject PRJNA549351 [62] for long reads and SRA292482 [63] for short reads. Assemblies are compared against the NCBI reference genome GRCh38.p13.

Sequencing data were downsampled to approximately 5 and 10 nanopore coverage for long reads, respectively, and Illumina coverage sufficient for short-read anchors. We compare results to the most widespread competing assembler Canu [10] and the faster Wtdbg2 [13], both demonstrating the disadvantages of long-read-only strategies especially in low coverage scenarios, although Wtdbg2 requires considerately less coverage in comparison. Additionally, we benchmarked two tools implementing the most closely related concept: DBG2OLC [23] and most recent competitor HASLR [21]. Finally, we added Wengan [19] as a leading but conceptually very unique alternative. Wengan uses long reads to scaffold a short read assembly and therefore exhibits very high levels of completeness even when presented with very few reads. It defaults to the pre-existing contigs or statistically insignificant scaffolds when nothing else can be done. This behavior can be mimicked partially with LazyB by merging our assembly to the same short-read-only assembly as used by Wengan. However, we strictly limit merging to regions with strong support from long read contigs to avoid spurious scaffolds. While not ideal, we used the pre-existing tool Quickmerge [64] to investigate such effects. At very low long read coverage, integration with a short read assembly is generally advisable to close gaps in long reads unavoidably arising on complex genomes. For reference, we also provide the statistics for short-read only assemblies created with ABySS [29] on the same sets of reads used to create the “anchors” to show the advantage of hybrid assembly even at a low coverage of long reads. The same short read assembly was used also for Wengan and Quickmerge on LazyB. Quality was assessed via alignment to a reference genome by the QUAST tool [65].

Table 3 summarizes the benchmarking results. Unsurprisingly, LazyB produced consistently better results than Canu and Wtdbg2, increasing genomic coverage at a lower contig count. Due to our inclusion of accurate short-read unitigs, overall error counts are also significantly lower than on Canu. Most notably, Canu was unable to properly operate at the 5 mark for both data sets. Only insignificant portions of the yeast genome could be assembled, accounting for less than 15% of the genome. Canu completely failed for fruit fly, even after adapting settings to low coverage. Wtdbg2 performed only marginally better, although it managed to assemble 6% of fruit fly at low coverage. Even at 5, LazyB already significantly reduces the number of contigs compared to the respective short-read assemblies, while retaining a reasonably close percentage of genome coverage. At only 10 coverage for fruit fly, we were able to reduce the contig count 10-fold at better error rates. For human, LazyB manages at 39-fold decrease of the number contigs, albeit at a loss of greater 10% coverage. This difference appears to be a consequence of the high fragmentation of unitigs in the abundant repeat regions of the genome, rendering them too unreliable as anchors. Results are indeed in line with unitig coverage. While HASLR produced the fewest mis-assemblies, it creates significantly more and shorter contigs that cover a much smaller fraction of the genome. As a consequence it has the least favorable NA50 values of all tools. For fruit fly at 10, it results in four times as many contigs and covers 10% less of the genome, with a 12 times lower NA50. While an improvement to Canu, it also struggles on datasets with low Nanopore coverage. DBG2OLC shows great promise compared to our own method, but similarly fails to operate well on very low coverage datasets. For yeast at 5, less then 50% the genome can be reconstructed. In fruit fly even less then 25% can be assembled at about 2 times the error rate of LazyB. At 10, DBG2OLC reconstruct a similar proportion of the genome, albeit at high error rates. While it produces about 100 fewer contigs for fruit fly, this achievement is offset by over 350 (4.7 times more) mis-assemblies.

Table 3.

Assessment of assembly qualities for LazyB, Canu Wtdbg2, HASLR, Wengan and short-read only assemblies for two model organisms

| Org. | X | Tool | Compl. [%] | #ctg | #MA | MM | InDels | NA50 |

|---|---|---|---|---|---|---|---|---|

| Yeast | LazyB | 90.466 | 127 | 9 | 192.56 | 274.62 | 118843 | |

| LazyB+QM | 94.378 | 64 | 12 | 174.77 | 245.05 | 311094 | ||

| Canu | 14.245 | 115 | 5 | 361.47 | 2039.15 | – | ||

| Wtdbg2 | 22.237 | 177 | 0 | 849.07 | 805.31 | – | ||

| HASLR | 64.158 | 111 | 1 | 14.87 | 34.86 | 60316 | ||

| DBG2OLC | 45.645 | 53 | 20 | 2066.64 | 1655.92 | – | ||

| Wengan | 95.718 | 41 | 11 | 49.14 | 68.47 | 438928 | ||

| LazyB | 97.632 | 33 | 15 | 193.73 | 300.20 | 505126 | ||

| LazyB+QM | 94.211 | 34 | 14 | 234.59 | 329.4 | 453273 | ||

| Canu | 92.615 | 66 | 15 | 107.00 | 1343.37 | 247477 | ||

| Wtdbg2 | 94.444 | 42 | 8 | 420.96 | 1895.28 | 389196 | ||

| HASLR | 92.480 | 57 | 1 | 7.89 | 33.91 | 251119 | ||

| DBG2OLC | 97.689 | 38 | 25 | 55.06 | 1020.48 | 506907 | ||

| Wengan | 96.036 | 37 | 4 | 32.35 | 53.04 | 496058 | ||

| Abyss | 95.247 | 283 | 0 | 9.13 | 1.90 | 90927 | ||

| Fruit fly | LazyB | 71.624 | 1879 | 68 | 446.19 | 492.43 | 64415 | |

| LazyB+QM | 75.768 | 1164 | 79 | 322.49 | 349.29 | 167975 | ||

| Canu | – | – | – | – | – | – | ||

| Wtdbg2 | 6.351 | 2293 | 2 | 916.77 | 588.19 | – | ||

| HASLR | 24.484 | 1407 | 10 | 31.07 | 58.96 | – | ||

| DBG2OLC | 25.262 | 974 | 141 | 1862.85 | 969.26 | – | ||

| Wengan | 81.02 | 2129 | 192 | 105.35 | 123.33 | 77215 | ||

| LazyB | 80.111 | 596 | 99 | 433.37 | 486.28 | 454664 | ||

| LazyB+QM | 80.036 | 547 | 100 | 416.34 | 467.14 | 485509 | ||

| Canu | 49.262 | 1411 | 275 | 494.66 | 1691.11 | – | ||

| Wtdbg2 | 41.82 | 1277 | 155 | 2225.12 | 1874.01 | – | ||

| HASLR | 67.059 | 2463 | 45 | 43.83 | 84.89 | 36979 | ||

| DBG2OLC | 82.52 | 487 | 468 | 739.47 | 1536.32 | 498732 | ||

| Wengan | 84.129 | 926 | 237 | 114.96 | 154.03 | 221730 | ||

| Abyss | 83.628 | 5811 | 123 | 6.20 | 8.31 | 67970 | ||

| Human | LazyB | 67.108 | 13210 | 2915 | 1177.59 | 1112.84 | 168170 | |

| Unitig | 69.422 | 4146090 | 252 | 93.07 | 13.65 | 338 | ||

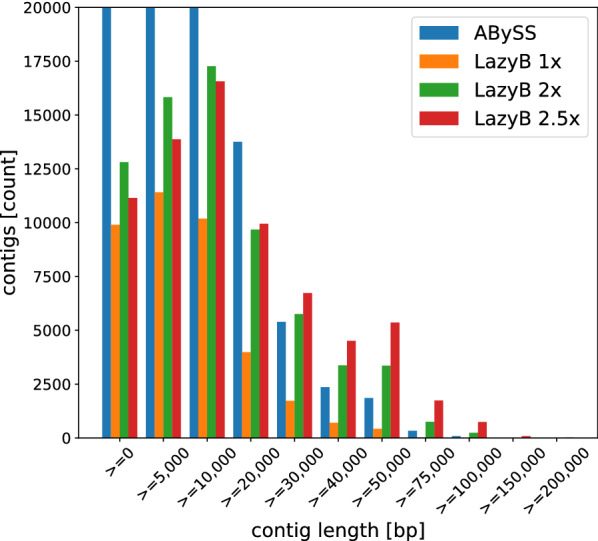

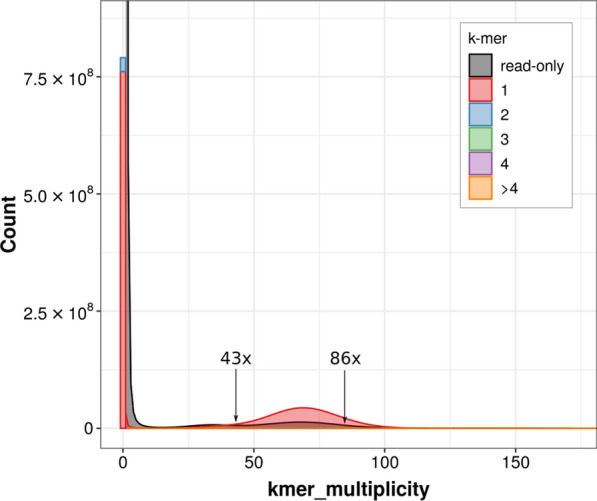

| Abyss | 84.180 | 510315 | 2669 | 98.53 | 25.03 | 7963 |