Abstract

The sudden increase in coronavirus disease 2019 (COVID-19) cases puts high pressure on healthcare services worldwide. At this stage, fast, accurate, and early clinical assessment of disease severity is vital. In general, there are two issues to overcome: (1) current deep learning-based works suffer from insufficient multimodal data; (2) in this scenario, multimodal (e.g., text, image) information should be considered jointly to make accurate inferences. To address these challenges, we propose a multi-modal knowledge graph attention embedding for COVID-19 diagnosis. Our method not only learns relational embeddings from the nodes of a constructed knowledge graph but also has access to medical knowledge, aiming to improve the performance of the classifier through a medical knowledge attention mechanism. The experimental results show that our approach significantly improves classification performance compared to other state-of-the-art techniques and is robust for each modality of the multi-modal data. Moreover, we construct a new COVID-19 multi-modal dataset based on text mining, consisting of 1393 doctor–patient dialogues about COVID-19 patients and their 3706 images (347 X-ray, 2598 CT, 761 ultrasound), 607 non-COVID-19 patient dialogues and their 10754 images (9658 X-ray, 494 CT, 599 ultrasound), and fine-grained labels for all of them. We hope this work can encourage researchers in this area to shift attention from medical images alone to doctor–patient dialogues and their corresponding medical images.

Keywords: COVID-19 diagnosis, Knowledge attention mechanism, Knowledge-based representation learning, Knowledge embedding

1. Introduction

The coronavirus disease 2019 (COVID-19) pandemic has brought unprecedented disaster to human lives. Facing the ongoing outbreak, viral nucleic acid testing using real-time polymerase chain reaction (RT-PCR) is the accepted standard diagnostic method for identifying infected individuals [1], [2], [3], [4]. However, for political and economic reasons, many hyper-endemic regions and countries cannot use RT-PCR to test tens of thousands of suspected patients. Moreover, because of its high false-negative rate, repeated RT-PCR testing may be needed to reach an accurate diagnosis of COVID-19. Imaging tools such as chest X-ray, ultrasound, and computed tomography (CT) have frequently been used to diagnose other diseases; they are fast and easy to operate and have become widely used diagnostic tools [5], [6]. To compensate for the shortage of reagents, researchers are studying how to identify COVID-19 from chest X-ray images, ultrasound images, or CT scans [7], [8], [9], [10]. Medical COVID-19 data [11], [12], [13], [14] are also mostly multi-modal, consisting of chest X-ray images, ultrasound images, and CT images (i.e., slices).

The great success of deep learning methods in pneumonia diagnosis has inspired many researchers [15], [16], [17], [18], and deep-learning-based COVID-19 diagnosis methods are emerging one after another. Nevertheless, training such high-performance deep classification models typically requires large amounts of medical COVID-19 data. Besides, medical data on patients with confirmed or suspected COVID-19 rarely appear in public datasets. It is therefore difficult to train reliable diagnostic models from limited and restricted medical data.

On the other hand, in real-world practice, physicians are advised to communicate with patients (i.e., through doctor–patient dialogue) before performing radiological examinations, in order to obtain the patient's past and current medical history. Doctor–patient dialogue is one of the most common forms of consultation [19], [20], [21], [22]. However, this information is not included in existing medical image datasets. Moreover, during the COVID-19 epidemic, most patients have past and chronic medical histories [23], [24]. For example, the physician needs to learn about the patient's history of exposure and previous symptoms through dialogue. The physician and the government also need to identify the patient's close contacts, based on dialogue with the patient and the patient's recollections, to implement effective prevention and control measures.

During the COVID-19 pandemic, while traditional face-to-face consultations carry a risk of close contact, doctor–patient conversations through Internet video calls and real-time Internet chats are on the rise, and online doctor–patient dialogue is gradually becoming one of the primary instruments of consultation. It is therefore urgent for deep-learning-based models to shift attention from medical images alone to doctor–patient dialogues and their corresponding medical images.

In short, there are two main challenges in the task of COVID-19 diagnosis:

(1) Multi-modal information about COVID-19 patients, including doctor–patient dialogues and medical images of different modalities, must be considered jointly to make accurate inferences about COVID-19;

(2) Limited multi-modal data makes it challenging to design effective diagnostic models.

Inspired by the success of graph-based attention models (e.g., the graph attention network [25]), which capture entities (i.e., nodes) and their relationships (i.e., edges), we adopt graph-based attention models to solve the above two issues [26], [27], [28]. Existing work on graph attention mechanisms considers knowledge graphs, a type of heterogeneous graph, built from multi-modal data with the aim of making full and joint use of that data; however, it does not differentiate between the different kinds of links and nodes. This distinction matters, because approaches based on heterogeneous graphs have been shown to outperform approaches that assume graphs have only a single type of link (edge). This raises the question of how attention mechanisms can be designed to jointly exploit multi-modal data.

Moreover, knowledge representation learning projects data, and the relationships between data, into a low-dimensional continuous space [29], and most such approaches aim to learn representations that encode a priori knowledge about these relationships [30]. In this way, limited multimodal data may suffice to train robust deep networks [31], [32]. This motivates using knowledge-based representation learning so that the attention mechanism learns a joint representation of a priori medical knowledge and the deep network.

Motivated by these observations, we propose a novel multi-modal knowledge embedding-based graph attention model for COVID-19 diagnosis that makes use of medical knowledge. First, we propose a multimodal attention mechanism that learns medical knowledge-based representations of the prior multimodal information. Second, we obtain the multimodal medical information embeddings. Third, we create a temporal convolutional self-attention network to obtain the pivotal features of the prior multimodal information. Finally, our framework relates the feature maps to the multimodal medical embeddings and explains the COVID-19 classification. Experimental results demonstrate that the proposed approach clearly outperforms state-of-the-art methods on the COVID-19 diagnosis task.

Moreover, we propose a new multi-modal COVID-19 dataset collected during the ongoing outbreak, which contains doctor–patient dialogues and their corresponding multi-modal medical images (X-ray images, axial CT images, and ultrasound images), with fine-grained disease labels text-mined from radiological reports. In summary, the main contributions of this paper are as follows:

We propose a robust, end-to-end multimodal knowledge embedding-based graph attention model to classify COVID-19 multimodal data. To the best of our knowledge, this is the first attempt to investigate a multimodal attention-based diagnostic approach for COVID-19.

We construct an effective multi-modal attention mechanism that dramatically improves the performance of the proposed approach. Moreover, we design a novel cross-level modality attention to combine single-modality and multiple-modality information.

We present a novel temporal convolutional self-attention network to extract and learn discriminative features from the datasets. The qualitative discussion demonstrates that this strategy achieves competitive performance compared with other temporal convolutional network-based methods.

We construct a new multi-modal dataset for COVID-19 diagnosis. It contains doctor–patient dialogues about COVID-19 patients with their images (X-ray, CT, and ultrasound), non-COVID-19 patient dialogues with their images (X-ray, CT, and ultrasound), and fine-grained labels for all of them.

2. Previous COVID-19 diagnosis

Radiological diagnosis is a convenient technique for suspected COVID-19 patients in risk areas who urgently need a diagnosis [33], [34]. X-ray, CT scans, and ultrasonography are widely used to provide compelling evidence for radiologists' analysis. To achieve higher accuracy in radiological diagnosis, many works on COVID-19 diagnosis have been proposed using either X-ray or CT as the acquisition method. Benefiting from the convenience of ultrasonography, some works have also been proposed for COVID-19 diagnosis from ultrasound images.

Based on chest X-ray images, many studies discuss classification between COVID-19 and non-COVID-19 subjects, including subjects with other pneumonia and healthy subjects. Zhang et al. [35] present a ResNet-based model that classifies COVID-19 and non-COVID-19 X-ray images for COVID-19 diagnosis. Using X-ray images from 70 COVID-19 patients and 1008 non-COVID-19 pneumonia patients, they achieve 96.0% sensitivity and 70.7% specificity, with an AUC of 0.952. A deep CNN-based architecture called COVID-Net [36] is presented for COVID-19 diagnosis from X-ray images; on the self-built COVIDx dataset, COVID-Net achieves a test accuracy of 83.5%. Considering the difficulty of systematically collecting chest X-ray images for deep neural network training, Oh et al. [37] propose a patch-based convolutional neural network approach for COVID-19 diagnosis that requires a relatively small number of trainable parameters. Efforts have also been made to classify COVID-19 versus non-COVID-19 from CT scans. Jin et al. [38] build a chest CT dataset of 496 COVID-19 positive cases and 1385 negative cases and propose a two-dimensional CNN-based lung segmentation model and a COVID-19 diagnosis model. Experimental results show that the proposed model achieves a sensitivity of 94.1%, a specificity of 95.5%, and an AUC of 0.979. To comprehensively describe multiple features of CT images from different perspectives, Shen et al. [39] propose a method that learns a unified latent representation which fully encodes information from different facets of the features and has a promising class structure that enables separability. Ouyang et al. [40] propose a convolutional neural network (CNN) with a new online attention module that focuses on regions of lung infection when making diagnostic decisions. On multi-centre CT data for COVID-19, their algorithm identifies COVID-19 images with an F1-score of 0.82, a specificity of 0.901, a sensitivity of 0.869, an accuracy of 0.875, and an AUC of 0.944.

Furthermore, ultrasonography is another useful technique for diagnosing COVID-19: it is non-invasive, cheap, portable, and available in almost all medical facilities. Roy et al. [41] present a spatial transformer style network with weakly-supervised learning that localizes pathological artefacts and predicts the disease severity score. In addition to several deep models, they release a novel ultrasound image dataset with full annotations at the frame, video, and pixel levels representing the degree of disease severity.

In summary, many studies have been proposed for X-ray-based, CT-based, and ultrasound-based COVID-19 diagnosis. However, most recent works focus on diagnosis from a single medical imaging modality and pay little attention to the doctor–patient conversations that precede radiological examinations, which are also a crucial part of diagnosis throughout the whole medical care procedure.

3. Multi-modal COVID-19 pneumonia dataset

In this section, we first describe how we build the proposed Multi-Modal COVID-19 Pneumonia Dataset and introduce its structure. Then we compare it with existing publicly available COVID-19 datasets.

3.1. Dataset creation and structure

Medical dialogues are part of the traditional medical procedure when potential patients come to the hospital for professional consultation. To avoid misdiagnosis, doctors commonly ask patients to undergo more detailed examinations after the necessary doctor–patient dialogue. However, most recent works on COVID-19 diagnosis attend only to medical images of COVID-19 without any dialogue, which may lead to biased diagnostic results when information is incomplete. To address this issue, we propose a multi-modal dataset for COVID-19 pneumonia that consists of both images and doctor–patient dialogues.

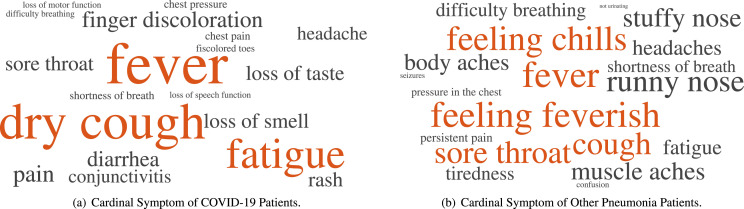

The image subset contains three medical imaging modalities, X-ray, CT, and ultrasound, collected from public radiology reports and patient follow-up records. In detail, the X-ray, CT, and ultrasound images of COVID-19 are collected from radiological reports published by online hospitals and radiology centres in China [15]. All X-ray images are posteroanterior (PA) or anteroposterior (AP) views, and for CT, salient axial slices of different CT volumes are collected. Most of the ultrasound images are in convex view, and the rest are in linear view. Following the work of Chest-X-ray-8 [42], we use text mining and natural language processing (NLP) to extract disease findings and determine the labels of all images. The dialogue subset is assembled from the same websites [11], and the labels of all dialogues are extracted from the disease description part using an NLP toolkit [43]. We also provide text-mined fine-grained labels for each image in our dataset, such as patient sex and age, which can be found in our Supplemental Materials. The image subset consists of 2D images (X-ray images, axial CT images, and ultrasound images), including COVID-19 images of all three modalities; basic statistics for each class are shown in Table 1. The dialogue subset contains more than a thousand sentences between doctors and patients. Both subsets share two main categories: COVID-19 and Non-COVID-19. The Non-COVID-19 label covers other types of community-acquired pneumonia (CAP) and excludes normal cases [40]. Using this strategy, we collect a total of 2000 doctor–patient dialogues, of which 1393 concern COVID-19 patients and 607 concern non-COVID-19 patients.
We use the NLP toolkit [43] to count the frequency of keywords in the two kinds of dialogues and form a word cloud of the top words for each kind, as shown in Fig. 1. Fig. 1 shows clearly that the symptoms of COVID-19 patients and non-COVID-19 patients differ significantly. Unlike existing public datasets or challenges, we focus on analysing the different patterns between types of pneumonia with diverse causes, which is why our dataset contains no normal cases.
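The keyword-frequency step behind Fig. 1 can be sketched as follows. This is a minimal illustration under stated assumptions: the NLP toolkit [43] and its tokenizer are not specified here, so a regular-expression tokenizer and a small hypothetical stop-word list stand in for it, and the two toy dialogue lists are invented for illustration, not drawn from the dataset.

```python
import re
from collections import Counter

# Hypothetical stop-word list; the toolkit [43] and its settings are not specified.
STOP_WORDS = {"the", "a", "i", "my", "have", "and", "is", "for", "you", "do", "it", "of", "to"}


def keyword_counts(dialogues):
    """Count non-stop-word tokens over a list of dialogue strings."""
    counts = Counter()
    for text in dialogues:
        tokens = re.findall(r"[a-z]+", text.lower())
        counts.update(t for t in tokens if t not in STOP_WORDS)
    return counts


def top_keywords(dialogues, k=5):
    """Return the k most frequent keywords (ties keep first-seen order)."""
    return [word for word, _ in keyword_counts(dialogues).most_common(k)]


# Toy dialogues invented for illustration only.
covid = ["I have a fever and a dry cough", "Fever for three days, dry cough, fatigue"]
non_covid = ["Productive cough with yellow sputum", "Chills and sputum, cough at night"]

print(top_keywords(covid, 3))
print(top_keywords(non_covid, 3))
```

The resulting frequency tables are what a word-cloud renderer would consume; only the counting step is shown here.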

Table 1.

Basic statistics of the image subset in our proposed multi-modal COVID-19 pneumonia dataset.

| Class | X-ray | CT | Ultrasound | Total |
|---|---|---|---|---|
| COVID-19 | 340 | 2598 | 761 | 3706 |
| Non-COVID-19 | 9658 | 494 | 599 | 10754 |
| Total | 9998 | 3092 | 1360 | 14450 |
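The class balance of each modality follows directly from the cells of Table 1. The short computation below (plain Python; the dictionary simply restates the table's per-modality cells) recomputes the Total row and the COVID-19 share of each modality.

```python
# Per-modality image counts copied from Table 1.
TABLE_1 = {
    "X-ray": {"COVID-19": 340, "Non-COVID-19": 9658},
    "CT": {"COVID-19": 2598, "Non-COVID-19": 494},
    "Ultrasound": {"COVID-19": 761, "Non-COVID-19": 599},
}


def modality_total(modality: str) -> int:
    """Number of images of one modality across both classes (the Total row)."""
    return sum(TABLE_1[modality].values())


def covid_fraction(modality: str) -> float:
    """Share of COVID-19 images within one modality."""
    return TABLE_1[modality]["COVID-19"] / modality_total(modality)


if __name__ == "__main__":
    for modality in TABLE_1:
        print(f"{modality}: {modality_total(modality)} images, "
              f"{covid_fraction(modality):.1%} COVID-19")
```

The X-ray part is dominated by Non-COVID-19 images (about 3.4% COVID-19), whereas the CT part is dominated by COVID-19 images (about 84%) and the ultrasound part is closer to balanced (about 56%).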

Fig. 1.

Comparison of COVID-19 and non-COVID-19 dialogues.

Both medical images and doctor–patient dialogues are tools that physicians use to understand their patients. Patients with a given disease share statistical characteristics [44], [45], [46]; for example, as shown in Fig. 1, the characteristics of the two diseases differ. Such statistical characteristics are also the basis on which physicians judge the disease [47], [48], and machine learning models essentially classify effectively by capturing them. However, a model is trained with the information of individual patients rather than groups of patients, which makes it challenging for a model trained this way to capture these statistical characteristics for classification. Moreover, given the urgency of diagnosis and the varied situations of potential patients, examinations cannot be comprehensive in a short time when medical resources are stretched to capacity [49], [50]. In other words, during the COVID-19 pandemic, medical resources are precious and limited, and most patients do not have medical images of all three modalities (i.e., X-ray, CT, ultrasound). Thus, to simulate this urgent situation, our image subset and dialogue subset are unpaired. To verify the validity of the dataset, experienced radiologists on our team checked all images, including comparing the labels of each patient's medical images with the patient's RT-PCR results, and eliminated images with errors.

3.2. Dataset comparison

COVID-19 is a new coronavirus disease that has spread across the world, and collecting data for machine learning applications is essential. In recent months, a number of public COVID-19 datasets have been presented [15], [51].

Cohen et al. [52], [53] create an image collection containing 329 images from 183 patients, most of which are chest X-ray images of COVID-19. Building on an early version of this collection, COVID v2.0 and its enriched version [36] add more bacterial pneumonia and normal chest X-ray images. Zhao et al. [54] present a publicly available COVID-CT dataset consisting of COVID-19 axial CT images collected from preprints on medRxiv and bioRxiv; they extract figures together with their captions and judge whether a patient is COVID-19 positive from the associated captions. Besides X-ray-based and CT-based image datasets, ultrasound-based image datasets have also been reported recently. Born et al. [55] propose a lung ultrasound dataset called POCUS, consisting of 654 COVID-19 images, 277 bacterial pneumonia images, and 172 healthy control images, sampled from 64 videos assembled from various online sources. The COVIDGR-1.0 dataset [56] is organized into two classes, positive and negative, and includes 852 images (426 positive and 426 negative cases). Existing public image datasets focus on diagnosis using a single medical imaging modality and hardly explore using different medical imaging modalities together. Furthermore, they contain no doctor–patient dialogues for precise diagnosis, and their image counts are limited. To demonstrate the advantages of the image subset of our Multi-Modal COVID-19 Pneumonia Dataset, we compare its X-ray part with the COVID v2.0 dataset [36], its CT part with the COVID-CT dataset [54], and its ultrasound part with POCUS [55]. The three comparisons are shown in Table 2, Table 3, and Table 4, respectively.

Table 2.

Comparison of COVID v2.0 dataset [36] and X-ray part of the image subset in our proposed multi-modal COVID-19 pneumonia dataset.

| Dataset | Normal | Pneumonia (COVIDx) / Non-COVID-19 (ours) | COVID-19 |
|---|---|---|---|
| COVIDx | 8066 | 8614 | 190 |
| Proposed image subset | – | 9658 | 340 |

Table 3.

Comparison of COVID-CT dataset [54] and CT part of the image subset in our proposed multi-modal COVID-19 pneumonia dataset.

| Dataset | COVID-19 | Non-COVID-19 |
|---|---|---|
| COVID-CT | 349 | 397 |
| Proposed image subset | 2598 | 494 |

Table 4.

Comparison of POCUS dataset [55] and ultrasound part of the image subset in our proposed multi-modal COVID-19 pneumonia dataset.

| Dataset | Normal | Bacterial pneumonia (POCUS) / Non-COVID-19 (ours) | COVID-19 |
|---|---|---|---|
| POCUS | 172 | 277 | 654 |
| Proposed image subset | – | 599 | 761 |

Beyond image data, Ref. [57] proposes a medical dialogue dataset on COVID-19 and other related pneumonia, containing more than 1000 consultations. However, existing public dialogue datasets only crawl relevant conversations from websites and provide no precise fine-grained label for each dialogue.

From the comparisons and analysis above, our proposed dataset offers the following advantages over the others:

- Our proposed Multi-Modal COVID-19 Pneumonia Dataset exploits different medical imaging modalities together.
- With precise labels, our proposed Multi-Modal COVID-19 Pneumonia Dataset supports the fusion of information from both images and doctor–patient dialogues.
- Compared with existing publicly available COVID-19 datasets, our proposed Multi-Modal COVID-19 Pneumonia Dataset can be regarded as the largest dataset with fine-grained labels.

4. Methodology

In this section, we first describe the notation and the structure of our COVID-19 data. Then, we present the details of the proposed multi-modal knowledge embedding graph attention model.

4.1. Basic notations

Multimodal Information We suppose there are four kinds of multimodal data: X-ray images, CT images, ultrasound images, and text descriptions of diagnoses. The training data consist of four sets, one per modality, whose elements are individual X-ray images, CT images, ultrasound images, and text items; when the modality does not matter, the four are written in one simplified form. Each item's label is drawn from a common label set, and the numbers of training items of the four modalities are denoted separately and, in simplified form, by a single count. The testing data are denoted in the same way, with the numbers of testing items of each modality likewise simplified to a single count.

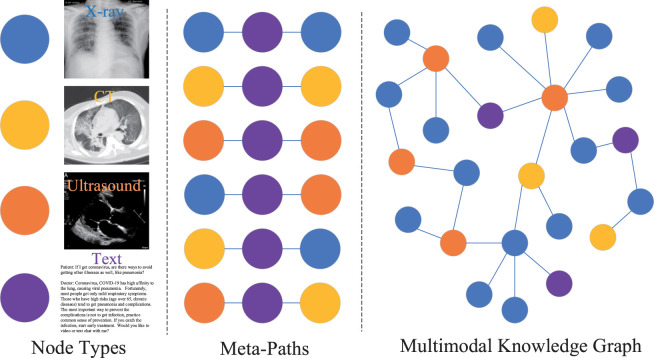

Knowledge Graph We suppose a heterogeneous knowledge graph, consisting of an object (node) set and a link set, is built from the training data. As shown in Fig. 2, the object set contains multiple node types (i.e., X-ray image, CT image, ultrasound image, and text) and the link set contains multiple link types (e.g., X-ray image–text–X-ray image, X-ray image–text–CT image). The graph is associated with two functions: a node type mapping function, which maps each object to one of the predefined object types, and a link type mapping function, which maps each link to one of the predefined link types. A meta-path is a sequence of object types connected by relations (for example, X-ray image → text → CT image); it describes the composite relation between the objects at its two ends, obtained by composing the relations along the path. For a given node and meta-path, the meta-path-based neighbours of the node are the nodes connected to it via that meta-path, including the node itself.

Fig. 2.

An illustrative example of a multi-modal knowledge graph. From left to right: the four types of nodes (i.e., X-ray, CT, ultrasound, and text description of the diagnosis), the six meta-paths involved in the knowledge graph, and the resulting multi-modal knowledge graph.
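One straightforward way to obtain meta-path-based neighbours is to multiply the bipartite adjacency matrices along the meta-path, as sketched below. The function names, the toy graph (three X-ray nodes, two text nodes, two CT nodes), and the use of dense NumPy matrices are illustrative assumptions; a graph of realistic size would use sparse matrices.

```python
import numpy as np


def metapath_reach(*adjacencies, include_self=False):
    """Compose 0/1 adjacency matrices along a meta-path.

    For X-ray-text-X-ray pass (A_xt, A_xt.T), where A_xt[i, j] = 1 if X-ray
    node i is linked to text node j. Entry [i, j] of the result is True when
    node j is reachable from node i along the meta-path.
    """
    product = adjacencies[0].astype(int)
    for adjacency in adjacencies[1:]:
        product = product @ adjacency.astype(int)
    reach = product > 0
    if include_self:
        if reach.shape[0] != reach.shape[1]:
            raise ValueError("include_self requires a meta-path that starts and ends at the same node type")
        np.fill_diagonal(reach, True)  # a node counts as its own neighbour
    return reach


def neighbours(reach, i):
    """Meta-path-based neighbours of node i as a Python set."""
    return set(np.flatnonzero(reach[i]).tolist())


# Toy links (hypothetical): X-ray 0 and 1 share text 0; X-ray 2 links to text 1.
A_xt = np.array([[1, 0], [1, 0], [0, 1]])
# CT 0 links to text 1; CT 1 links to text 0.
A_ct = np.array([[0, 1], [1, 0]])

xtx = metapath_reach(A_xt, A_xt.T, include_self=True)  # X-ray-text-X-ray
xtc = metapath_reach(A_xt, A_ct.T)                     # X-ray-text-CT

print(neighbours(xtx, 0), neighbours(xtc, 0))
```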

Multimodal Graph Attention Each node has an initial feature and a projected feature. Because different node types lie in different feature spaces, a type-specific transformation matrix projects the features of each node type into the same feature space [58]. Our attention mechanism therefore handles all kinds of nodes through type-specific projection operations.

For each meta-path, we learn the weight of every meta-path-based node pair, which measures the importance of one node to the other under that meta-path; each meta-path has its own single-level modality attention vector for this purpose. These node-pair weights are then normalized over the neighbours of each node to obtain the normalized importance of each node pair, a step we call the normalization over nodes. The single-level modality attention weights describe the similarity [59] of the transformed embeddings, and the corresponding attention vector at the meta-path level is denoted the multiple-level modality attention vector.

Similarly, a weight is defined for each meta-path, and normalizing these weights across all meta-paths gives the importance of each meta-path. This step is the normalization over meta-paths.

In summary, the inputs are the multimodal knowledge graph, the multimodal data, the number of attention heads, the given set of meta-paths (together with their number), and the node features; the output is the knowledge-based attention feature vector.

4.2. Building multi-modal knowledge graphs

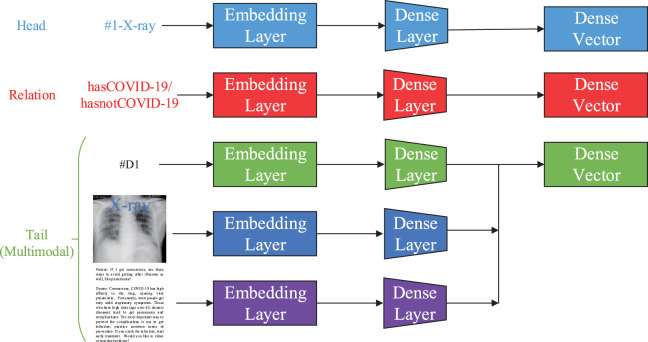

Building on Pezeshkpour et al. [60], [61], [62], we represent our knowledge graph as a set of (head, relation, tail) triples. As in multi-modal knowledge bases [63], we exploit recent advances in deep learning to build embedding layers that represent the nodes, providing embeddings for each node type. As shown in Fig. 3, a different embedding layer represents each data type.

Fig. 3.

Architecture of the multi-modal knowledge graph model.

Structured Knowledge Treating the head, relation, and tail of each triple as independent embedding vectors, we generate dense vectors through embedding layers.

Images A wide variety of models have been devised to represent the semantic information in images compactly and have been successfully applied to tasks such as classification [64] and visual reasoning [65]. To represent the images, we use the last hidden layer of VGG-16 [66], pre-trained on ImageNet [67], as an embedding layer.

Texts The text of doctor–patient dialogues can capture the disease status of patients. For these texts, we apply a BERT model [68] to obtain weighted word vectors of the sentences as an embedding layer that represents the text features. This is simpler and more efficient than a conventional LSTM [69].

Finally, as illustrated in Fig. 3, we use dense layers to unify all embedding layers into the same dimension to construct the multi-modal knowledge graph.
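The embedding step can be sketched as follows, assuming precomputed backbone features. This is not the authors' implementation: the shared dimension, the ReLU projection, one projection per node type, the number of relation types, and the DistMult scoring function are all assumptions (DistMult is one common choice for scoring triples; the section does not state which score is used). Random vectors stand in for real VGG-16 and BERT outputs.

```python
import numpy as np

# Sizes: VGG-16's last hidden layer (fc7) has 4096 units and BERT-base has
# 768-dimensional hidden states; the shared dimension D is a hypothetical choice.
VGG_DIM, BERT_DIM, D = 4096, 768, 256
rng = np.random.default_rng(0)


class Dense:
    """A single dense layer with ReLU, standing in for a learned projection."""

    def __init__(self, d_in, d_out, rng):
        self.W = rng.normal(scale=np.sqrt(2.0 / d_in), size=(d_in, d_out))
        self.b = np.zeros(d_out)

    def __call__(self, x):
        return np.maximum(x @ self.W + self.b, 0.0)


# One projection per node type, mapping each backbone's features to D dims.
PROJECT = {
    "xray": Dense(VGG_DIM, D, rng),
    "ct": Dense(VGG_DIM, D, rng),
    "ultrasound": Dense(VGG_DIM, D, rng),
    "text": Dense(BERT_DIM, D, rng),
}

# Relation embeddings for structured triples (number of relation types is hypothetical).
N_RELATIONS = 6
RELATIONS = rng.normal(scale=0.1, size=(N_RELATIONS, D))


def node_embedding(node_type, features):
    """Project precomputed VGG-16 or BERT features into the shared D-dim space."""
    return PROJECT[node_type](features)


def distmult_score(head, relation_id, tail):
    """DistMult-style plausibility of (head, relation, tail): sum_k h_k * r_k * t_k."""
    return float(np.sum(head * RELATIONS[relation_id] * tail))


# Random placeholders for backbone outputs (not real image or text features).
xray_feat = rng.normal(size=VGG_DIM)
text_feat = rng.normal(size=BERT_DIM)
h = node_embedding("xray", xray_feat)
t = node_embedding("text", text_feat)
print(h.shape, t.shape, distmult_score(h, 0, t))
```

Because every node type is projected to the same D dimensions, heads and tails of any type can share one relation table, which is what lets a single triple score mix image and text nodes.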

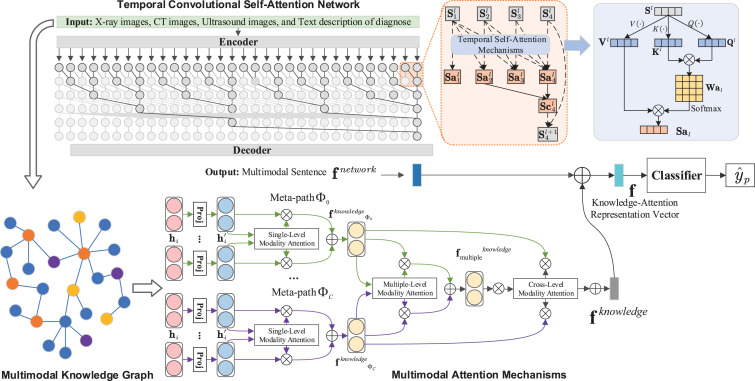

4.3. The proposed approach

The overall framework of our approach is illustrated in Fig. 4. First, given the knowledge graph, we define the embedding matrix of single-level modality attention and, similarly, the matrix of multiple-level modality attention embeddings, following the graph-based multimodal attention mechanism of [58]. Second, we create a cross-level modality attention mechanism that fuses the information of the single-level and multiple-level modality attentions into a fused embedding matrix. Third, we propose the Temporal Convolutional Self-Attention Network (TCSAN) to process the input multimodal data and obtain multimodal sentence vectors. Finally, combining the attention embedding vectors and the multimodal sentence vectors, we obtain the knowledge-based attention feature vector and use it to classify the labels. Note that our approach focuses only on the interaction between the dialogue text and the images of the three medical imaging modalities, so one dialogue can be combined with images of two different modalities.

Fig. 4.

The proposed multi-modal knowledge graph attention embedding model. Given the multimodal knowledge graph, we propose multimodal attention mechanisms with three parts: ➀ the single-level modality attention and its embedding; ➁ the multiple-level modality attention and its embedding; ➂ the cross-level modality attention mechanism, which fuses the information of the single-level and multiple-level modality attentions into an embedding matrix. Meanwhile, we propose the Temporal Convolutional Self-Attention Network (TCSAN) to process the input multimodal data and obtain multimodal sentence vectors. We then obtain the knowledge-based attention feature vector. Finally, a classifier (ResNet-34 [70] in this paper) predicts the labels.

4.3.1. The multimodal attention mechanism

In this sub-subsection, a novel multi-modal attention mechanism with domain knowledge is proposed to deal with multi-modal medical data. It consists of three parts: single-level modality attention, multiple-level modality attention, and cross-level modality attention. Single-level modality attention [58] is designed to obtain the importance of meta-path-based neighbours, which are aggregated to obtain the single-level modality attention embedding. Similarly, multiple-level modality attention [58] is used to capture the differences between single-level modality attentions, yielding the multiple-level modality attention embedding. For each modality, these two complementary representations describe the single-modality and multiple-modality information, respectively. We design the cross-level modality attention mechanism to fuse them hierarchically and obtain the final attention embedding.

Single-Level Modality Attention Because nodes are heterogeneous, different types of nodes lie in different feature spaces. Before aggregating the information from the meta-path neighbours of each node (e.g., a node of a given type), we note that different meta-path-based neighbours play different roles and are of different importance for learning the node embedding. We therefore design single-level modality attention to learn, for each node in the graph, the importance of its meta-path-based neighbours, and to aggregate these informative neighbour representations into the node embedding:

| (1) |

where the attention scores are computed by a deep neural network and the features of each node pair are combined by concatenation.

Repeating the above process once per attention head yields one learned embedding per head; concatenating these embeddings gives our single-level modality attention embedding:

| (2) |

Given the meta-path set, we thereby obtain a single-level modality attention embedding for each meta-path.
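The single-level modality attention is adopted from [58]. Below is a sketch of node-level attention as it is commonly formulated for heterogeneous graphs: project the node features, score each meta-path-based neighbour pair with an attention vector applied to the concatenated pair, normalize the scores with a softmax over the neighbours, aggregate, and concatenate the outputs of several heads. The LeakyReLU and ELU activations, the shapes, and the function names are assumptions rather than the paper's exact formulation.

```python
import numpy as np


def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)


def elu(x):
    return np.where(x > 0, x, np.expm1(x))


def single_level_attention(H, M, a, mask):
    """One attention head for one meta-path (node-level attention sketch).

    H: (n, d_in) features of one node type; M: (d_in, d) type-specific projection;
    a: (2 * d,) attention vector of the meta-path; mask: (n, n) bool,
    mask[i, j] True iff j is a meta-path-based neighbour of i (self included).
    """
    Hp = H @ M                                        # project into the shared space
    d = Hp.shape[1]
    # a^T [h_i || h_j] = a[:d]^T h_i + a[d:]^T h_j, computed for all pairs at once
    scores = leaky_relu((Hp @ a[:d])[:, None] + (Hp @ a[d:])[None, :])
    scores = np.where(mask, scores, -np.inf)          # only neighbours take part
    scores = scores - scores.max(axis=1, keepdims=True)
    alpha = np.exp(scores)
    alpha = alpha / alpha.sum(axis=1, keepdims=True)  # softmax over each node's neighbours
    return elu(alpha @ Hp), alpha


def multi_head(H, Ms, As, mask):
    """Run K heads with separate parameters and concatenate their embeddings."""
    heads = [single_level_attention(H, M, a, mask)[0] for M, a in zip(Ms, As)]
    return np.concatenate(heads, axis=1)


rng = np.random.default_rng(0)
n, d_in, d, K = 4, 5, 3, 2
H = rng.normal(size=(n, d_in))
pairs = np.array([[0, 1, 0, 0], [1, 0, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0]], dtype=bool)
mask = np.eye(n, dtype=bool) | pairs
Ms = [rng.normal(size=(d_in, d)) for _ in range(K)]
As = [rng.normal(size=2 * d) for _ in range(K)]
Z = multi_head(H, Ms, As, mask)
print(Z.shape)
```

Including each node in its own neighbourhood guarantees every softmax row has at least one finite score, so no row can become all-zero.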

Multiple-Level Modality Attention Each node in a heterogeneous graph carries multiple kinds of semantic information, whereas a single-level modality embedding, being specific to one meta-path, reflects only one of them. We therefore need to integrate the multiple semantics revealed by the meta-paths. To obtain a more comprehensive embedding, we use multiple-level modality attention to learn the importance of the different meta-paths automatically and fuse them, as follows:

| (3) |

where the learnable parameters are a weight matrix and a bias vector.
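Eq. (3) combines the per-meta-path embeddings with a learned importance for each meta-path. The sketch below assumes the semantic-level attention form of [58]: each embedding is transformed with a shared weight matrix and bias vector, scored by an attention vector `q` (a hypothetical name), averaged over nodes, and softmax-normalized over meta-paths. It is an illustration under these assumptions, not the authors' code.

```python
import numpy as np

def multiple_level_attention(Z, W, b, q):
    """Fuse per-meta-path embeddings with learned meta-path importances.

    Z : (P, N, D) single-level embeddings, one slice per meta-path
    W : (D, D) weight matrix and b : (D,) bias vector, shared across meta-paths
    q : (D,) attention vector scoring the transformed embeddings (hypothetical)
    """
    scores = np.tanh(Z @ W + b) @ q        # (P, N): score of every node under every meta-path
    w = scores.mean(axis=1)                # (P,): importance of each meta-path, averaged over nodes
    beta = np.exp(w - w.max())
    beta /= beta.sum()                     # softmax over meta-paths
    fused = np.tensordot(beta, Z, axes=1)  # (N, D): weighted sum of the meta-path embeddings
    return fused, beta

rng = np.random.default_rng(0)
P, N, D = 6, 5, 4   # six meta-paths, as in Section 5.1.1
Z = rng.normal(size=(P, N, D))
W, b, q = rng.normal(size=(D, D)), rng.normal(size=D), rng.normal(size=D)
fused, beta = multiple_level_attention(Z, W, b, q)
```

If all meta-paths produce identical embeddings, the weights become uniform and the fused embedding equals any one of them, which is the behaviour one expects from a convex combination.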

Cross-Level Modality Attention For the multi-modal information in the heterogeneous graph, it is essential to balance the importance of each node against the importance of each meta-path. Because each modality plays a different role, the relations between modalities must be modelled explicitly. To address this problem, we propose cross-level modality attention. First, for each node type, we combine the single-level and multiple-level modality embeddings into a well-rounded representation through a nonlinear transformation (for example, a one-layer MLP (multi-layer perceptron)). The weights of all nodes are then averaged and interpreted as the weight of each modality. The weight of each node is calculated as follows:

| (4) |

where the transformation uses two learnable weight matrices. This formulation follows Ref. [71]; however, our method is based on node types rather than entity types.

We then normalize the weights of all nodes with the softmax function:

| (5) |

The normalized weight can be interpreted as the contribution of a node to a particular task; a node may have different weights for different tasks, and the higher the weight, the more important the node.

Using the learned weights as coefficients, we fuse the embeddings to obtain the final embedding:

| (6) |
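Eqs. (4) to (6) admit more than one reading. The sketch below follows one plausible reading and should not be taken as the authors' implementation: a one-layer MLP with weight matrix `W1` combines each node's single-level and multiple-level embeddings, a second weight matrix `W2` turns the result into a raw node weight, the weights are softmax-normalized over all nodes, and each node's combined representation is scaled by its normalized weight. The shapes and the tanh nonlinearity are assumptions.

```python
import numpy as np

def cross_level_attention(z_single, z_multi, W1, W2):
    """Weight nodes by their combined single- and multiple-level embeddings (assumed form).

    z_single, z_multi : (N, D) embeddings of the same N nodes
    W1 : (2 * D, H) and W2 : (H,) are the two weight matrices (hypothetical shapes)
    """
    # one-layer MLP over the concatenated embeddings gives a well-rounded representation
    h = np.tanh(np.concatenate([z_single, z_multi], axis=1) @ W1)   # (N, H)
    s = h @ W2                                                       # (N,): raw node weights
    alpha = np.exp(s - s.max())
    alpha /= alpha.sum()                                             # softmax over all nodes
    return alpha[:, None] * h, alpha                                 # weighted node embeddings

rng = np.random.default_rng(1)
N, D, H = 5, 4, 3
z_final, alpha = cross_level_attention(rng.normal(size=(N, D)), rng.normal(size=(N, D)),
                                       rng.normal(size=(2 * D, H)), rng.normal(size=H))
```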

4.3.2. The Temporal Convolutional Self-Attention Network

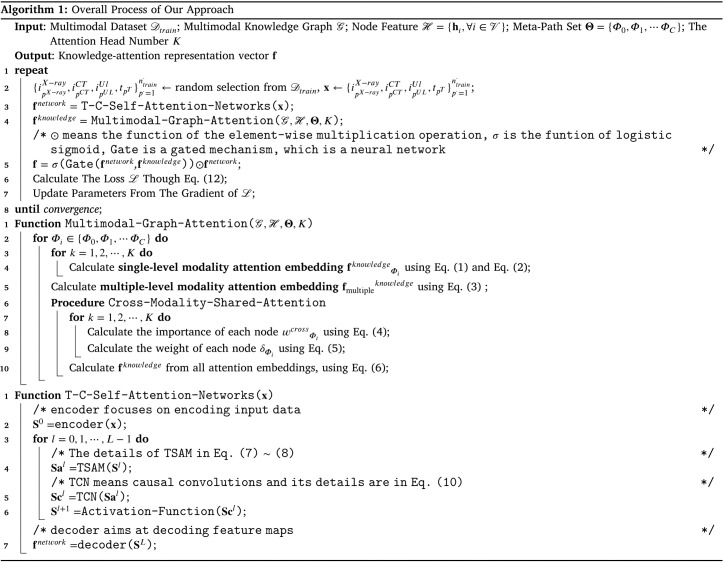

Inspired by the success of the temporal convolutional network (TCN) [72], [73], [74] and the self-attention mechanism [75], we design a novel convolutional network, the Temporal Convolutional Self-Attention Network (TCSAN). Like most competitive neural sequence transduction models [27], [28], [75], it uses an encoder-decoder structure. The encoder maps the input data to hidden representations. We then use causal convolutions as the hidden layers, and the computation of the intermediate variable at each time step and level is divided into four steps, illustrated in Fig. 4:

- Step 1: The encoder encodes the input sequence.
- Step 2: The encoded sequence is passed through the Temporal Self-Attention Mechanism (TSAM), producing an intermediate variable that carries the attended information (Fig. 4).
- Step 3: Causal convolution is applied to this intermediate variable. To keep every layer the same length, we add zero padding on the left (white blocks in Fig. 4), so that relevant information from earlier inputs gradually accumulates towards the right.
- Step 4: The output of the causal convolution is passed through an activation function to obtain the output of the layer.

A full TCSAN is built by stacking TCSAN blocks across depth and time; the decoder then decodes the output of the last block into the output sequence.
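The four steps and the stacking can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' architecture: the linear encoder and decoder, the ReLU activation, and the doubling dilation per level (borrowed from [72]) are our choices. The attention here is unmasked because the text does not mention a mask, so this sketch is not strictly causal end to end.

```python
import numpy as np

def self_attention(x, Wq, Wk, Wv):
    q, k, v = x @ Wq, x @ Wk, x @ Wv
    s = q @ k.T / np.sqrt(k.shape[1])
    w = np.exp(s - s.max(axis=1, keepdims=True))
    return (w / w.sum(axis=1, keepdims=True)) @ v

def causal_conv(x, kernel, dilation):
    """x: (T, C_in); kernel: (K, C_in, C_out). Left zero padding keeps length T."""
    K = kernel.shape[0]
    pad = (K - 1) * dilation
    xp = np.vstack([np.zeros((pad, x.shape[1])), x])
    out = np.zeros((x.shape[0], kernel.shape[2]))
    for t in range(x.shape[0]):
        for j in range(K):
            out[t] += xp[t + pad - j * dilation] @ kernel[j]   # tap j looks j*dilation steps back
    return out

def tcsan_block(x, p, dilation):
    a = self_attention(x, p["Wq"], p["Wk"], p["Wv"])   # Step 2: TSAM
    c = causal_conv(a, p["kernel"], dilation)           # Step 3: causal convolution
    return np.maximum(c, 0.0)                           # Step 4: activation (ReLU assumed)

def tcsan(x, enc, blocks, dec):
    h = x @ enc                                         # Step 1: encoder (linear, assumed)
    for level, p in enumerate(blocks):
        h = tcsan_block(h, p, dilation=2 ** level)      # stacked blocks, doubling dilation (assumed)
    return h @ dec                                      # decoder (linear, assumed)

rng = np.random.default_rng(0)
T, F, C, K, OUT = 10, 6, 8, 3, 2
blocks = []
for _ in range(2):
    p = {name: rng.normal(size=(C, C)) for name in ("Wq", "Wk", "Wv")}
    p["kernel"] = rng.normal(size=(K, C, C))
    blocks.append(p)
y = tcsan(rng.normal(size=(T, F)), rng.normal(size=(F, C)), blocks, rng.normal(size=(C, OUT)))
```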

Temporal Self-Attention Mechanism The Temporal Self-Attention Mechanism (TSAM) is illustrated in Fig. 4. Inspired by the self-attention structure [75], we apply three linear transformations to the input to obtain three different vectors: key, query, and value. To compute the weight matrix, we take the dot products of the queries and the keys and divide each by the square root of the key dimension, as in [75]:

| (7) |

where the superscript T denotes the transpose of the key matrix.

Given these weights, the weighted output is obtained by:

| (8) |
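Eqs. (7) and (8) describe scaled dot-product self-attention. The NumPy sketch below assumes the square-root scaling of [75] and a row-wise softmax; the `causal` flag is our own optional addition (the text does not mention a mask) that blocks attention to later time steps.

```python
import numpy as np

def temporal_self_attention(x, Wq, Wk, Wv, causal=False):
    """Scaled dot-product self-attention over a sequence x of shape (T, F)."""
    Q, K, V = x @ Wq, x @ Wk, x @ Wv           # three linear transformations
    scores = Q @ K.T / np.sqrt(K.shape[1])     # query-key dot products, scaled by sqrt(d_k)
    if causal:
        # optional mask (our addition): step t may not attend to later steps
        future = np.triu(np.ones(scores.shape, dtype=bool), k=1)
        scores = np.where(future, -np.inf, scores)
    scores = scores - scores.max(axis=1, keepdims=True)
    A = np.exp(scores)
    A /= A.sum(axis=1, keepdims=True)          # row-wise softmax: the weight matrix
    return A @ V, A                            # weighted output and weights

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 4))
Wq, Wk, Wv = (rng.normal(size=(4, 3)) for _ in range(3))
out, A = temporal_self_attention(x, Wq, Wk, Wv, causal=True)
```

With the mask enabled, the first time step can only attend to itself, so its output is exactly its own value vector.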

Causal Convolutions TCNs are a particular kind of 1D convolutional neural network (CNN), which is a natural way to encode information from a sequence [72]. A 1D convolutional layer can be written as

| (9) |

where a convolution filter of fixed size slides over the input sequence. However, when applied to sequence modelling [76], one-dimensional CNNs suffer from a reduced output size and a limited receptive field; the TCNs in this paper address these problems with causal convolution.

The causal convolutional layer pads the beginning of the input sequence with zeros [76], so that the output keeps the length of the input. It also ensures that no information leaks from the future into the past, which is essential for predicting future steps:

| (10) |
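A causal convolution with left zero padding can be sketched in a few lines of NumPy. The sketch assumes a single channel and the convention that filter tap `i` is applied to the input `i * dilation` steps in the past; the `dilation` argument anticipates the dilated variant discussed next and defaults to an ordinary causal convolution.

```python
import numpy as np

def causal_conv1d(x, f, dilation=1):
    """y[t] = sum_i f[i] * x[t - i * dilation]; inputs before t = 0 are zeros."""
    k = len(f)
    pad = (k - 1) * dilation
    xp = np.concatenate([np.zeros(pad), x])   # zero padding on the left only
    return np.array([sum(f[i] * xp[t + pad - i * dilation] for i in range(k))
                     for t in range(len(x))])

x = np.arange(1.0, 7.0)            # inputs 1, 2, ..., 6
y = causal_conv1d(x, np.ones(3))   # running sum of the current and two previous inputs
# y == [1, 3, 6, 9, 12, 15]: same length as x, and y[t] never uses x[t+1:]
```

Changing future inputs leaves earlier outputs untouched, which is exactly the "no leakage" property stated above.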

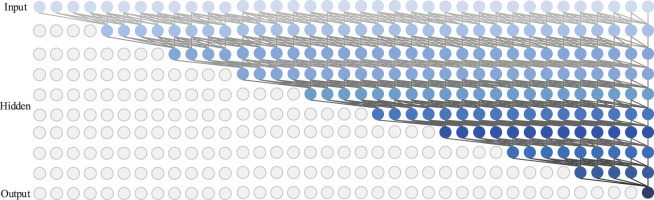

Furthermore, the receptive field of a standard causal convolution grows only linearly with network depth, which makes long sequences difficult to handle. We therefore build the proposed model with an exponentially large receptive field using dilated causal convolution [72]. Fig. 5 illustrates dilated causal convolution (with the filter size set to 5). A dilated causal convolution applies the filter over an area larger than its length by skipping input values with a fixed step. It is equivalent to a convolution with a larger filter obtained by dilating the original filter with zeros, but it is considerably more efficient because it uses fewer parameters. As a result, a dilated causal convolution can process sequential data better without adding more layers.
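The linear-versus-exponential growth can be made concrete with a short calculation. For a stack of causal convolutions with kernel size k and per-layer dilations d_l, the last output sees 1 + (k - 1) * sum(d_l) input steps. The sketch uses the filter size of 5 from Fig. 5; the depth and the doubling dilation schedule (as in [72]) are illustrative assumptions.

```python
def receptive_field(kernel_size, dilations):
    """Number of input steps visible to the last output of stacked causal convolutions."""
    return 1 + (kernel_size - 1) * sum(dilations)

kernel_size, depth = 5, 4   # filter size 5 as in Fig. 5; depth chosen for illustration
plain = receptive_field(kernel_size, [1] * depth)                       # 1 + 4 * 4 = 17
dilated = receptive_field(kernel_size, [2 ** i for i in range(depth)])  # 1 + 4 * 15 = 61
```

With ten layers, the plain stack sees 41 steps while the dilated one sees 4093, even though both have the same number of parameters.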

Fig. 5.

The illustration of TCN with dilated causal convolutions.

4.3.3. Knowledge-based representation learning

To strengthen representation learning, we present a gating mechanism for embedding knowledge representations that suppresses non-informative features and lets informative features pass under the guidance of the multi-modal knowledge graph, similar to Refs. [77], [78], [79]:

| (11) |

where ⊙ denotes element-wise multiplication, σ is the logistic sigmoid, and the fusion term is a neural network that combines the features of the final knowledge embedding with the features extracted by TCSAN.
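A sigmoid gate of this kind can be sketched as follows. This is a minimal illustration under stated assumptions: the fusion network is taken to be a single linear layer over the concatenated TCSAN features and knowledge embeddings, and the gate is applied to the TCSAN features. Eq. (11) may combine the terms differently, and the parameter names `W` and `b` are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def knowledge_gate(h, k, W, b):
    """Gate TCSAN features h (N, D) using knowledge embeddings k (N, E).

    W : (D + E, D) and b : (D,) parameterise a hypothetical one-layer fusion network.
    """
    g = sigmoid(np.concatenate([h, k], axis=1) @ W + b)   # gate values in (0, 1)
    return g * h   # element-wise product: g near 0 suppresses a feature, g near 1 passes it

rng = np.random.default_rng(0)
h, k = rng.normal(size=(3, 4)), rng.normal(size=(3, 5))
W = rng.normal(size=(9, 4))
out = knowledge_gate(h, k, W, np.zeros(4))
```

Because every gate value lies between 0 and 1, the gate can only attenuate features, never amplify them; a strongly negative bias closes it and a strongly positive bias opens it fully.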

4.3.4. Objective function

Different loss functions can be designed on top of the final embedding, depending on the task. In this paper, we minimize the cross-entropy between the ground truth and the prediction over all labelled nodes:

| (12) |

In our implementation, we average the per-node cross-entropy errors over all labelled nodes. Algorithm 1 summarizes the overall training process of our approach.
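The averaged cross-entropy over labelled nodes can be sketched with a numerically stable log-softmax. Integer class labels and a boolean mask marking the labelled nodes are assumptions about how the labels are stored.

```python
import numpy as np

def mean_cross_entropy(logits, labels, labelled):
    """Average cross-entropy between ground truth and prediction over labelled nodes.

    logits   : (N, C) unnormalised class scores
    labels   : (N,) integer class indices (ignored for unlabelled nodes)
    labelled : (N,) boolean mask of labelled nodes
    """
    z = logits - logits.max(axis=1, keepdims=True)
    log_probs = z - np.log(np.exp(z).sum(axis=1, keepdims=True))   # stable log-softmax
    idx = np.flatnonzero(labelled)
    return -log_probs[idx, labels[idx]].mean()

labels = np.array([0, 1, 1, 0])
labelled = np.array([True, True, False, False])
loss_uniform = mean_cross_entropy(np.zeros((4, 2)), labels, labelled)   # equals log(2)
```

A uniform prediction over two classes costs log 2 per node, and unlabelled nodes do not affect the loss at all.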

5. Experimental results

In this section, we evaluate the proposed method on our multimodal COVID-19 dataset and compare it with state-of-the-art models under several evaluation measures.

5.1. Experimental setup

In this subsection, we begin with an overview of the training data setup and the measures used for performance evaluation. Then we describe our experiment’s implementation details.

5.1.1. Evaluation protocol

Training Data Setup We randomly select diagnoses, together with their X-ray, CT, and ultrasound images, from our dataset to construct the training set, and we randomly select further diagnoses and their images to construct the validation set and the test set. We repeat this random split ten times and compare methods by the average of each evaluation criterion.
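The repeated random-split protocol can be sketched with the standard library. The split fractions (70% / 10% / 20%) are placeholders, because the actual proportions are not given here; `evaluate` stands for any function that trains on the first split and returns a score on the test split.

```python
import random
from statistics import mean

def random_split(ids, train_frac, val_frac, rng):
    ids = list(ids)
    rng.shuffle(ids)
    n_train = round(len(ids) * train_frac)
    n_val = round(len(ids) * val_frac)
    return ids[:n_train], ids[n_train:n_train + n_val], ids[n_train + n_val:]

def repeated_evaluation(ids, evaluate, runs=10, train_frac=0.7, val_frac=0.1, seed=0):
    """Average a score over `runs` independent random splits (fractions are placeholders)."""
    rng = random.Random(seed)
    return mean(evaluate(*random_split(ids, train_frac, val_frac, rng)) for _ in range(runs))

# 2000 dialogues (1393 COVID-19 + 607 non-COVID-19); a dummy evaluator returns the test share
avg = repeated_evaluation(range(2000), lambda tr, va, te: len(te) / 2000)
```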

We use the training data to construct the knowledge graph following [61], [62]. There are four data modalities: X-ray images, CT images, ultrasound images, and the text description of each diagnosis. Most diagnoses include some images. If a diagnosis contains no image, we represent its image with a zero vector; otherwise, we extract image features with a pre-trained VGGNet [66], similar to [80]. In this way, we obtain the features of the X-ray images (XR), CT images (CT), and ultrasound images (UL). The sentences of a diagnosis represent its text (T). We define the meta-path set as {XRTXR, XRTCT, CTTCT, CTTUL, ULTUL, ULTXR} for the experiments. The X-ray, CT, and ultrasound features serve as the image-type node features, and we process the text samples with BERT [68] to obtain the text-type node features. Together, these form the node features of the graph.
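Each meta-path in the set above passes through a text (diagnosis) node, so, for example, an X-ray image and a CT image are XRTCT neighbours when they belong to the same diagnosis. The standard-library sketch below enumerates these meta-path-based neighbours from a hypothetical mapping of diagnoses to image identifiers; the data layout and the choice to skip an image pairing with itself are assumptions.

```python
from collections import defaultdict
from itertools import product

# The six meta-paths of Section 5.1.1 as (source modality, target modality);
# every path goes through a text (T) node, i.e. a diagnosis.
METAPATHS = [("XR", "XR"), ("XR", "CT"), ("CT", "CT"), ("CT", "UL"), ("UL", "UL"), ("UL", "XR")]

def metapath_neighbours(diagnoses, src, dst):
    """Map each `src` image to the `dst` images sharing a diagnosis with it (src-T-dst)."""
    nbrs = defaultdict(set)
    for images in diagnoses.values():
        for a, b in product(images.get(src, []), images.get(dst, [])):
            if a != b:                      # skip trivial self-pairs on XR-T-XR etc.
                nbrs[a].add(b)
    return dict(nbrs)

# Hypothetical toy data: diagnosis id -> image ids per modality
diagnoses = {
    "d1": {"XR": ["x1"], "CT": ["c1", "c2"]},
    "d2": {"CT": ["c3"], "UL": ["u1"]},
    "d3": {},                               # no images: its image feature would be a zero vector
}
graphs = {f"{s}T{d}": metapath_neighbours(diagnoses, s, d) for s, d in METAPATHS}
```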

Evaluation Measures We use accuracy, precision, F1-score, sensitivity, specificity, and the area under the receiver operating characteristic curve (AUC) to assess the performance of all models. Sensitivity and specificity are the proportions of positive and negative samples that are correctly identified, respectively. AUC measures the overall classification performance and, unlike accuracy, does not depend on the class distribution, which makes it informative under class imbalance.
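The measures above can be computed from a confusion matrix, and AUC from the ranking of scores. The plain-Python sketch assumes a binary COVID-19 versus non-COVID-19 setting with labels in {0, 1}; the AUC is computed as the probability that a randomly chosen positive is scored above a randomly chosen negative, with ties counted as one half.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, sensitivity, specificity and F1 for labels in {0, 1}."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    precision = tp / (tp + fp)
    sensitivity = tp / (tp + fn)        # true-positive rate (recall)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "sensitivity": sensitivity,
        "specificity": tn / (tn + fp),  # true-negative rate
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
    }

def roc_auc(y_true, scores):
    """AUC = probability that a random positive is scored above a random negative (ties count 1/2)."""
    pos = [s for s, t in zip(scores, y_true) if t == 1]
    neg = [s for s, t in zip(scores, y_true) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 0, 1]
scores = [0.9, 0.8, 0.4, 0.3, 0.2, 0.6, 0.1, 0.7]
m = binary_metrics(y_true, y_pred)   # every measure is 0.75 here (TP=3, TN=3, FP=1, FN=1)
auc = roc_auc(y_true, scores)        # 15 of 16 positive-negative pairs ranked correctly: 0.9375
```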

5.1.2. Implementation details

All experiments are run on a 4-core PC with four 12 GB NVIDIA TITAN Xp GPUs, 16 GB of RAM, and Ubuntu 16. In all models, we set the number of epochs to . We feed the knowledge-based attention feature vector into the COVID-19 classifier, a ResNet-34 [70], to obtain the final classification results. The implementation details of the knowledge attention mechanism and TCSAN are as follows:

Temporal Self-Attention Network We use layers of temporal convolutional networks [72] as our network architecture. For each layer, the number of hidden nodes is set to , and the kernel size is set to . We apply dropout with probability 0.5 to all nonlinear layers. The model is optimized with Adam, with β1 = 0.9, β2 = 0.999, and a weight decay of . For classification, the initial learning rate is set to . The batch size is set to . For multimodal sentences, the length of the final representation vector is set to .

The Multimodal Attention Mechanism We initialize the parameters randomly and optimize the model with Adam [81]. The attention dropout is set to 0.6, the number of attention heads to , the regularization parameter to 0.001, and the learning rate to 0.005. The dimension of the multiple-level modality attention vector is set to , and the final embedding dimension to . We use , , and for training and testing the attention mechanisms on our dataset.

5.2. Comparison with state-of-the-art methods

We compare our method with state-of-the-art methods on our dataset. In this subsection, “Ours w/o Knowledge” denotes a variant of Ours that uses only network representation learning, without the multimodal attention mechanisms. “Ours w/o TSAM” denotes a variant of Ours that uses a plain TCN instead of TCSAN. “XR”, “CT”, “UL”, and “T” denote the X-ray, CT, ultrasound, and text modalities, respectively; “XRCTUL” denotes the combination of the X-ray, CT, and ultrasound modalities, and “XRCTULT” the combination of all four modalities.

Baselines on Our Dataset We compare against various state-of-the-art baselines on our dataset, including CAAD [35], COVID-CAPS [82], COVID-DA [83], COVID-ResNet [84], DCSL [85], COVNet [86], AnoDet [87], DLS [88], DeCovNet [89], DLQCTM [90], DenseNet-161 [91], ResNet-34 [70], VGG-19 [66], ResNet-18 [70], VGG-16 [66], DGLM [92], LM3FT [93], MMCL [94], MEC [95], and GMMF [96].

Effect of Proposed Multimodal Attention Mechanisms. To evaluate our multimodal attention mechanisms, we compare the “Ours w/o Knowledge” rows with the “Ours” rows in Table 5. For a fair comparison, “Ours w/o Knowledge” uses the same loss function and features as Ours. Table 5 shows that adding the attention mechanisms consistently improves performance. From “Ours w/o Knowledge” to Ours, the number of parameters grows from 71.2M to 75.6M with three modalities (XRCTUL) and from 73M to 76.4M with four modalities (XRCTULT). Meanwhile, the test-time batch time decreases, from 1.74 s to 1.12 s (XRCTUL) and from 1.77 s to 1.14 s (XRCTULT). Ours improves on “Ours w/o Knowledge” in every evaluation measure (accuracy, precision, sensitivity, specificity, F1-score, and AUC), which shows that the multimodal attention mechanisms enhance the effectiveness of our model.

Table 5.

Classification results of each model on our dataset. “M” means million (10^6) parameters. Batch time is the runtime of each batch during model testing.

| Method | Modality | Accuracy | Precision | Sensitivity | Specificity | F1-score | AUC | Parameters (model size) | Batch time |
|---|---|---|---|---|---|---|---|---|---|
| CAAD [35] | XR | 0.7394 | 0.7430 | 0.7659 | 0.7791 | 0.7543 | 0.7488 | 138.3M | 2.06 s |
| COVID-CAPS [82] | XR | 0.7413 | 0.7450 | 0.7689 | 0.7844 | 0.7568 | 0.7540 | 23M | 2.09 s |
| COVID-DA [83] | XR | 0.7446 | 0.7471 | 0.7692 | 0.7844 | 0.7580 | 0.7593 | 33M | 2.32 s |
| COVID-ResNet [84] | XR | 0.7466 | 0.7497 | 0.7747 | 0.7856 | 0.7620 | 0.7702 | 60.2M | 2.31 s |
| DCSL [85] | XR | 0.7486 | 0.7510 | 0.7777 | 0.7870 | 0.7641 | 0.7709 | 125M | 2.54 s |
| COVNet [86] | CT | 0.7518 | 0.7512 | 0.7805 | 0.7880 | 0.7656 | 0.7812 | 130.8M | 2.18 s |
| AnoDet [87] | CT | 0.7552 | 0.7518 | 0.7853 | 0.7907 | 0.7682 | 0.7843 | 125.8M | 2.19 s |
| DLS [88] | CT | 0.7607 | 0.7535 | 0.7878 | 0.7958 | 0.7703 | 0.7878 | 179.1M | 2.18 s |
| DeCovNet [89] | CT | 0.7613 | 0.7573 | 0.7947 | 0.8022 | 0.7755 | 0.7885 | 93M | 2.12 s |
| DLQCTM [90] | CT | 0.7663 | 0.7648 | 0.7989 | 0.8081 | 0.7815 | 0.7896 | 103M | 2.26 s |
| DenseNet-161 [91] | UL | 0.7029 | 0.7229 | 0.7394 | 0.7517 | 0.7311 | 0.7285 | 18.8M | 2.12 s |
| ResNet-34 [70] | UL | 0.7154 | 0.7288 | 0.7514 | 0.7553 | 0.7400 | 0.7287 | 63.6M | 0.80 s |
| VGG-19 [66] | UL | 0.7214 | 0.7367 | 0.7525 | 0.7605 | 0.7445 | 0.7307 | 144M | 2.82 s |
| ResNet-18 [70] | UL | 0.7278 | 0.7395 | 0.7575 | 0.7708 | 0.7484 | 0.7434 | 33.3M | 0.56 s |
| VGG-16 [66] | UL | 0.7365 | 0.7420 | 0.7633 | 0.7777 | 0.7525 | 0.7474 | 140M | 2.48 s |
| DGLM [92] | XRCTUL | 0.8070 | 0.7842 | 0.8322 | 0.8591 | 0.8075 | 0.8067 | 130.2M | 2.08 s |
| LM3FT [93] | XRCTUL | 0.8110 | 0.7984 | 0.8323 | 0.8676 | 0.8150 | 0.8120 | 126M | 2.16 s |
| MMCL [94] | XRCTUL | 0.8120 | 0.8010 | 0.8351 | 0.8682 | 0.8177 | 0.8154 | 129.3M | 2.34 s |
| MEC [95] | XRCTUL | 0.8122 | 0.8079 | 0.8370 | 0.8716 | 0.8222 | 0.8186 | 131.7M | 2.22 s |
| GMMF [96] | XRCTUL | 0.8127 | 0.8082 | 0.8378 | 0.8741 | 0.8227 | 0.8202 | 130.3M | 2.53 s |
| Ours w/o knowledge | XRCTUL | 0.8160 | 0.8108 | 0.8378 | 0.8741 | 0.8413 | 0.8234 | 71.2M | 1.74 s |
| Ours w/o TSAM | XRCTUL | 0.8308 | 0.8121 | 0.8494 | 0.8898 | 0.8491 | 0.8293 | 70M | 1.12 s |
| Ours w/o knowledge | XRCTULT | 0.8389 | 0.8293 | 0.8297 | 0.8292 | 0.8292 | 0.8319 | 73M | 1.77 s |
| Ours w/o TSAM | XRCTULT | 0.8679 | 0.8688 | 0.8641 | 0.8909 | 0.8664 | 0.8675 | 72M | 1.14 s |
| Ours | XRCTUL | 0.9371 | 0.9209 | 0.8884 | 0.9805 | 0.9498 | 0.9171 | 75.6M | 1.12 s |
| Ours | XRCTULT | 0.9810 | 0.9889 | 0.9861 | 0.9859 | 0.9875 | 0.9908 | 76.4M | 1.14 s |

Effect of Proposed Temporal Self-Attention Mechanism. With XRCTULT, “Ours” is 0.1131, 0.1201, 0.1220, 0.0950, 0.1211, and 0.1233 higher than “Ours w/o TSAM” in terms of accuracy, precision, sensitivity, specificity, F1-score, and AUC, respectively. Similarly, with XRCTUL, “Ours” is 0.1063, 0.1088, 0.0390, 0.0907, 0.1007, and 0.0878 higher than “Ours w/o TSAM” on the same measures. These improvements show that the temporal self-attention mechanism substantially improves COVID-19 diagnosis. Moreover, this improvement leaves the test-time batch time unchanged and costs less than 6M additional parameters, which again shows that our model is effective.

Effect of Doctor–Patient Dialogues. Table 5 shows that adding the text of the doctor–patient dialogues (XRCTULT versus XRCTUL) improves accuracy, precision, and AUC for our model and both of its variants, and for “Ours” and “Ours w/o TSAM” every evaluation measure improves. This emphasizes the significance of the doctor–patient dialogues for COVID-19 diagnosis.

Effect of Our Approach. Table 5 shows that our method outperforms all the other methods. In particular, its accuracy is 0.2416, 0.2397, 0.2364, 0.2344, 0.2324, 0.2292, 0.2258, 0.2203, 0.2197, 0.2147, 0.2781, 0.2656, 0.2596, 0.2532, 0.2445, 0.1740, 0.1700, 0.1690, 0.1688, and 0.1683 higher than that of CAAD [35], COVID-CAPS [82], COVID-DA [83], COVID-ResNet [84], DCSL [85], COVNet [86], AnoDet [87], DLS [88], DeCovNet [89], DLQCTM [90], DenseNet-161 [91], ResNet-34 [70], VGG-19 [66], ResNet-18 [70], VGG-16 [66], DGLM [92], LM3FT [93], MMCL [94], MEC [95], and GMMF [96], respectively. The same holds for precision, sensitivity, specificity, F1-score, and AUC. Hence, our approach performs more robustly than the state-of-the-art approaches on our dataset. While achieving the best performance, our largest model has 76.4M parameters, fewer than most of the baselines, and its batch time is about 1.14 seconds (s); in other words, our model processes a lung image in about 70 ms on average. This suggests that our model has good prospects for practical application, although it is somewhat heavy. Overall, these results indicate that our approach diagnoses COVID-19 effectively.

5.3. Ablation study

To verify the effectiveness of each component of our attention mechanism, we conduct an ablation study.

In Table 6, “Ours without Single” means a variant of Ours, which assigns the same weight to each neighbour and removes single-level modality attention; “Ours without Multiple” means a variant of Ours, which removes multiple-level modality attention and assigns the same weight to each meta-path; “Ours without Cross” means a variant of Ours, which assigns the same importance to each node and removes cross-level modality attention. We analyse the following two aspects:

Table 6.

The results of ablation study.

| Method | Accuracy | Precision | Sensitivity | Specificity | F1-score | AUC |
|---|---|---|---|---|---|---|
| Ours w/o cross | 0.9444 | 0.9462 | 0.9338 | 0.9442 | 0.9399 | 0.9577 |
| Ours w/o multiple | 0.9608 | 0.9523 | 0.9691 | 0.9659 | 0.9606 | 0.9818 |
| Ours w/o single | 0.9520 | 0.9513 | 0.9634 | 0.9622 | 0.9573 | 0.9768 |
| Ours | 0.9810 | 0.9889 | 0.9861 | 0.9859 | 0.9875 | 0.9908 |

Compared with “Ours”. Table 6 shows that our full model outperforms all its variants. In particular, its accuracy is 0.0366, 0.0202, and 0.0290 higher than that of “Ours w/o Cross”, “Ours w/o Multiple”, and “Ours w/o Single”, respectively, with similar gains in precision, sensitivity, specificity, F1-score, and AUC. This suggests that making full use of multimodal information improves COVID-19 diagnosis.

Compared with “Ours without Cross”. The accuracy of “Ours w/o Cross” is 0.0164 and 0.0076 lower than that of “Ours w/o Multiple” and “Ours w/o Single”, respectively, and it is also lower in precision, sensitivity, specificity, F1-score, and AUC. This underlines the importance of jointly using the single-level and multiple-level modality information.

From the above, we get the conclusion in the following two aspects:

(1) It is apparent that the design of our attention mechanisms improves COVID-19 diagnosis.

(2) Removing cross-level modality attention causes the largest performance drop, so it is the most important of our attention components, which suggests that the cross-level modality mechanism is effective.

5.4. Discussion about knowledge attention mechanisms

In this subsection, we compare our attention mechanisms with state-of-the-art graph attention mechanisms, including network embedding approaches and graph neural network-based methods, to validate their effectiveness. We first introduce these mechanisms and then analyse the experimental results.

5.4.1. State-of-the-art graph attention mechanisms

We review the theory and implementation of state-of-the-art graph attention mechanisms, like the following:

DeepWalk [97] is a random walk-based network embedding method designed for homogeneous graphs. Here, we apply DeepWalk to the entire graph and ignore the heterogeneity of the nodes.

ESim [98] is a graph embedding method that captures multi-modal information from multiple meta-paths. Because it is hard to search for the weights of the meta-path set, we assign ESim the weights learned by our attention mechanisms.

metapath2vec [99] is a graph embedding method that performs meta-path-based random walks and uses skip-gram to embed knowledge graphs. We test all meta-paths and report the best performance.

HERec [100] is a graph embedding method that devises a constraint strategy to filter node sequences and uses skip-gram to embed the knowledge graph. We test all meta-paths and report the best performance.

GCN [101] is a semi-supervised graph convolutional network designed for homogeneous graphs. We test all meta-paths and report the best performance.

GAT [25] is a semi-supervised neural network that applies the attention mechanism to homogeneous graphs. We test all meta-paths and report the best performance.

MAGNN [102] maps the heterogeneous node attribute information to the vector space of the same hidden layer, uses the attention mechanism to consider the semantic information inside the meta path, and applies the attention mechanism to aggregate information from multiple meta paths. Here we report the best performance in our discussion and test all the meta-paths for this method.

RGCN [103] applies the GCN framework to relational data modelling, using parameter sharing and sparse matrix multiplications to handle multiple graphs with a large number of relations. It is designed for multiple homogeneous graphs, whereas our graph attention mechanism builds a relational model on a given heterogeneous graph. Here we report the best performance and test all the meta-paths for RGCN.

GATNE [104] classifies all node embeddings of heterogeneous graphs into three categories: base embeddings, edge embeddings, and attribute embeddings. Base embeddings and attribute embeddings are shared among different types of edges, while edge embeddings are computed through the aggregation of neighbourhood information and self-attention mechanisms. Different from the proposed graph attention mechanism that focuses on the relationship between data modalities, GATNE focuses on the relationship between different node embeddings and different attributes between nodes. Here we report the best performance and test all the meta-paths for GATNE.

HGAN [105] is a heterogeneous GNN. It learns metapath-specific node embeddings from different metapath-based homogeneous graphs and leverages the attention mechanism to combine them into one vector representation for each node. Different from the proposed graph attention mechanism that focuses on the relationship of cross-modalities, HGAN focuses only on the relationship of multiple modalities as a whole. Here we report the best performance and test all the meta-paths for HGAN.

HetGNN [106] first samples a fixed number of neighbours in the vicinity of an object via random walk with a restart. Then it performs within-type aggregation of these neighbours and designs a type-level attention mechanism for type-level aggregation. If we regard a type as a modality, different from the proposed graph attention mechanism that focuses on the relationship of cross-modalities, HetGNN focuses only on intra-modalities. Here we report the best performance and test all the meta-paths for HetGNN.

HGT [107] is a heterogeneous method that considers all possible meta-paths by computing all possible meta-path-based graphs and then performs graph convolution on the resulting graphs. Unlike the proposed graph attention mechanism, which is driven by selected meta-paths, HGT models all relationships from all meta-paths, so it may not work well if some meta-paths contain noise or bias. Here we report the best performance and test all the meta-paths for HGT.

MMGCN [108] is a graph-based algorithm. It builds a modality-specific bipartite graph of user–item interactions for each modality to learn representations of user preferences on that modality, and then aggregates all modality-specific representations to obtain user and item representations for prediction. Different from the proposed graph attention mechanism, which focuses on cross-modality relationships, MMGCN focuses on modality-specific relationships. Here we report the best performance and test all the meta-paths for MMGCN.

5.4.2. Analysis of attention mechanisms

In this sub-subsection, Ours (X) denotes a variant of Ours that uses method X instead of our graph attention mechanisms, where X is one of DeepWalk, ESim, metapath2vec, HERec, GCN, GAT, MAGNN, RGCN, GATNE, HGAN, HetGNN, HGT, and MMGCN.

Table 7 shows that our attention mechanisms achieve the best performance. Specifically, the accuracy of Ours is 0.1347, 0.1322, 0.1311, 0.1287, 0.1144, 0.1140, 0.1120, 0.1094, 0.1074, 0.1045, 0.0474, 0.0206, and 0.0177 higher than that of Ours (DeepWalk), Ours (ESim), Ours (metapath2vec), Ours (HERec), Ours (GCN), Ours (GAT), Ours (MAGNN), Ours (RGCN), Ours (GATNE), Ours (HGAN), Ours (HetGNN), Ours (HGT), and Ours (MMGCN), respectively. The same holds for precision, sensitivity, specificity, F1-score, and AUC. Table 7 also compares model sizes: at the best performance, our knowledge attention mechanism has 39.4M parameters. Compared with the heaviest variant, Ours (HGAN) with 47.3M parameters, ours is about 8M smaller and improves every evaluation measure by roughly 0.1. Although the batch time of our model is not the shortest, it processes each image in about 70 ms, which satisfies the requirement of real-time applications (less than 100 ms per image [112]).

Table 7.

Results of the discussion about knowledge attention mechanisms. “M” means million (10^6) parameters. Batch time is the runtime of each batch during model testing.

| Method | Accuracy | Precision | Sensitivity | Specificity | F1-score | AUC | Model size of knowledge attention mechanism | Batch time |
|---|---|---|---|---|---|---|---|---|
| Ours (DeepWalk [97]) | 0.8463 | 0.8549 | 0.8506 | 0.8867 | 0.8528 | 0.8507 | 23M | 0.40 s |
| Ours (ESim [98]) | 0.8488 | 0.8567 | 0.8545 | 0.8869 | 0.8556 | 0.8552 | 24.3M | 0.35 s |
| Ours (metapath2vec [99]) | 0.8499 | 0.8628 | 0.8554 | 0.8912 | 0.8591 | 0.8568 | 24M | 0.34 s |
| Ours (HERec [100]) | 0.8523 | 0.8637 | 0.8638 | 0.8938 | 0.8638 | 0.8658 | 23.75M | 0.32 s |
| Ours (GCN [101]) | 0.8666 | 0.8701 | 0.8703 | 0.8991 | 0.8702 | 0.8678 | 25.4M | 0.46 s |
| Ours (GAT [25]) | 0.8670 | 0.8715 | 0.8724 | 0.8992 | 0.8719 | 0.8766 | 25.4M | 0.97 s |
| Ours (MAGNN [102]) | 0.8690 | 0.8764 | 0.8736 | 0.8992 | 0.8750 | 0.8772 | 37.2M | 1.48 s |
| Ours (RGCN [103]) | 0.8716 | 0.8716 | 0.8714 | 0.8913 | 0.8814 | 0.8744 | 44M | 1.24 s |
| Ours (GATNE [104]) | 0.8736 | 0.8726 | 0.8734 | 0.8955 | 0.8839 | 0.8764 | 44.6M | 1.54 s |
| Ours (HGAN [105]) | 0.8765 | 0.8746 | 0.8745 | 0.8960 | 0.8852 | 0.8772 | 47.3M | 2.27 s |
| Ours (HetGNN [106]) | 0.9336 | 0.8764 | 0.9090 | 0.9344 | 0.9045 | 0.9459 | 42.1M | 1.35 s |
| Ours (HGT [107]) | 0.9604 | 0.8764 | 0.9444 | 0.9331 | 0.9039 | 0.9536 | 41M | 1.50 s |
| Ours (MMGCN [108]) | 0.9633 | 0.8764 | 0.9466 | 0.9371 | 0.9057 | 0.9539 | 46.7M | 2.13 s |
| Ours | 0.9810 | 0.9889 | 0.9861 | 0.9859 | 0.9875 | 0.9908 | 39.4M | 1.14 s |

These observations show that, among the traditional graph embedding methods, metapath2vec performs slightly better than ESim on our dataset, even though ESim can exploit multiple meta-paths. Graph neural network-based methods such as GCN, GAT, and MAGNN, which combine feature and structure information, generally perform better still. Among them, MAGNN weighs neighbour information with attention instead of simply averaging over neighbours, which improves the learned embeddings. Compared with MAGNN, our attention mechanism captures more valuable multi-modal information; compared with more advanced models, including RGCN, GATNE, HGAN, HetGNN, HGT, and MMGCN, it successfully captures cross-modality information. Moreover, Table 6 shows that removing single-level modality attention (“Ours without Single”), multiple-level modality attention (“Ours without Multiple”), or cross-level modality attention (“Ours without Cross”) degrades performance, which indicates the importance of modelling attention on both the single-level and multiple-level modality information and of jointly modelling multi-modal information.

Overall, the proposed knowledge attention mechanisms achieve the best performance among the state-of-the-art graph attention mechanisms. The experimental results also show that it is essential to capture and jointly model the importance of single-level and multiple-level modality information in a multi-modal knowledge graph.

5.5. Compared to different temporal convolution networks

In this subsection, we compare with some state-of-the-art temporal convolution networks to verify the effectiveness of the proposed temporal convolution networks. Firstly, we introduce state-of-the-art temporal convolution networks. Then, we analyse the comparison experiment results.

State-of-The-Art Temporal Convolution Networks We review the theory and implementation of state-of-the-art temporal convolution networks, like the following:

TCN [72] uses dilated convolutions to capture global information from the entire input sequence and uses residual blocks for further feature extraction.

TrellisNet [109] is similar to TCN but differs in its weight-sharing mechanism and in how hidden states are computed. Each layer of TrellisNet can be regarded as applying a one-dimensional convolution to the hidden-state sequence and passing the result through an activation function.

SA-TCN [110] is a TCN-based model with an embedded temporal self-attention block. Each block extracts a global temporal attention mask from the hidden representation lying between the encoder and the decoder. In contrast, our model uses information from other blocks to boost the representation of one block, which is a clear difference between the two models.

TCAN [111] also combines a temporal convolutional network with an attention mechanism. Its temporal attention integrates internally correlated features while respecting the sequential order of the data. Our idea is similar to TCAN's, but the implementation differs slightly: TCAN uses a conventional convolution to extract features and then a residual network to augment them, whereas we adopt causal convolutions to extract and boost the features of one block.
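One common way to make temporal attention respect sequential order, which is the property the TCAN description above refers to, is to mask out future time steps before the softmax. The sketch below is a generic single-head, causally masked self-attention under that assumption; it is not TCAN's exact formulation, and the projection matrices `wq`, `wk`, `wv` are hypothetical inputs.

```python
import numpy as np

def causal_self_attention(x, wq, wk, wv):
    """Single-head self-attention where step t may only attend to steps <= t.

    x: (T, d) sequence of hidden states; wq, wk, wv: (d, d_k) projections.
    Returns the attended outputs (T, d_k) and the (T, T) attention weights.
    """
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(k.shape[1])
    T = x.shape[0]
    future = np.triu(np.ones((T, T), dtype=bool), k=1)  # True strictly above the diagonal
    scores = np.where(future, -np.inf, scores)           # block attention to the future
    scores -= scores.max(axis=1, keepdims=True)          # each row keeps a finite diagonal
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ v, weights
```

Because the first step can only attend to itself, its output is simply its own value projection; later steps mix information from all earlier steps.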

Analysis of Temporal Convolution Networks In this subsection, Ours (X), where X is TCN, TrellisNet, SA-TCN, or TCAN, denotes a variant of our model that uses X in place of our temporal convolution network.

From Table 8, our approach clearly outperforms the others. Specifically, its accuracy is 0.1131, 0.1022, 0.0956, and 0.0940 higher than that of Ours (TCN), Ours (TrellisNet), Ours (SA-TCN), and Ours (TCAN), respectively. Similar trends hold for precision, sensitivity, specificity, F1-score, and AUC. We also compare model sizes: in its best-performing configuration, our temporal convolution network requires 32M, the second smallest, and our model has the shortest batch time. Thus, our method outperforms other state-of-the-art temporal convolution networks for COVID-19 diagnosis.
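The accuracy margins and the model-size ranking quoted above follow directly from Table 8. The short check below uses only values copied from that table (the "M" unit is kept as in the table); the variable names are illustrative.

```python
# Accuracy values copied from Table 8.
accuracy = {
    "Ours (TCN)": 0.8679,
    "Ours (TrellisNet)": 0.8788,
    "Ours (SA-TCN)": 0.8854,
    "Ours (TCAN)": 0.8870,
}
ours = 0.9810

# Accuracy margin of the full model over each variant, rounded as in the text.
gaps = {name: round(ours - acc, 4) for name, acc in accuracy.items()}
for name, gap in gaps.items():
    print(f"{name}: +{gap:.4f}")  # prints +0.1131, +0.1022, +0.0956, +0.0940

# Model size of the temporal convolution network (in "M", as in Table 8).
model_size = {"TCN": 28.6, "TrellisNet": 87, "SA-TCN": 54, "TCAN": 33, "Ours": 32}
rank = sorted(model_size, key=model_size.get).index("Ours") + 1
print(f"Our model size ranks #{rank} from smallest")
```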

Table 8.

The results of discussion about temporal convolution networks. “M” means . Batch time means the runtime of each batch in the model testing.

| Method | Accuracy | Precision | Sensitivity | Specificity | F1-score | AUC | Model size (temporal convolution network) | Batch time |
|---|---|---|---|---|---|---|---|---|
| Ours (TCN [72]) | 0.8679 | 0.8688 | 0.8641 | 0.8909 | 0.8664 | 0.8675 | 28.6M | 1.77 s |
| Ours (TrellisNet [109]) | 0.8788 | 0.8824 | 0.8761 | 0.9036 | 0.8792 | 0.8842 | 87M | 1.69 s |
| Ours (SA-TCN [110]) | 0.8854 | 0.8995 | 0.8837 | 0.9087 | 0.9040 | 0.8869 | 54M | 1.31 s |
| Ours (TCAN [111]) | 0.8870 | 0.9302 | 0.8981 | 0.9248 | 0.9275 | 0.8895 | 33M | 1.26 s |
| Ours | 0.9810 | 0.9889 | 0.9861 | 0.9859 | 0.9875 | 0.9908 | 32M | 1.14 s |

5.6. Compared to different spatial–temporal networks

In this subsection, we compare our model with several state-of-the-art spatial–temporal networks to verify its effectiveness. We first introduce these networks and then analyse the results of the comparison experiments.

State-of-The-Art Spatial–Temporal Networks We review the theory and implementation of the following state-of-the-art spatial–temporal networks:

DyHAN [113] is a dynamic heterogeneous graph embedding method with hierarchical attention that learns node embeddings by leveraging both structural heterogeneity and temporal evolution.

CE-LSTM [114] is an event-flow serialization method that learns representations of heterogeneous spatial–temporal graphs by encoding the evolution of a dynamic heterogeneous graph into a special language pattern, analogous to word sequences in a corpus.
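The general idea of serializing a dynamic graph into "sentences" can be illustrated with a hypothetical sketch: timestamped, typed edges are sorted by time and each event is emitted as a short `<source> <relation> <target>` token triple that a sequence model such as an LSTM could consume. The `EdgeEvent` type, the node naming, and the triple format are all assumptions for illustration; CE-LSTM's actual encoding scheme differs in detail.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class EdgeEvent:
    time: int      # timestamp of the event
    src: str       # e.g. "patient:17" (hypothetical node naming)
    relation: str  # e.g. "has_image" (hypothetical relation type)
    dst: str

def serialize_events(events):
    """Turn timestamped heterogeneous edges into one token sequence.

    Events are ordered by time (ties keep their input order), and each
    becomes the three tokens <src> <relation> <dst>, so the evolution of
    the graph reads like a sentence in a corpus.
    """
    tokens = []
    for e in sorted(events, key=lambda e: e.time):
        tokens.extend([e.src, e.relation, e.dst])
    return tokens
```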

Analysis of Spatial–Temporal Networks From Table 9, our approach clearly outperforms the others. Specifically, its accuracy is 0.0802 and 0.0649 higher than that of DyHAN and CE-LSTM, respectively. Similar trends hold for precision, sensitivity, specificity, F1-score, and AUC. We also compare model sizes: in its best-performing configuration, our model has both the smallest model size and the shortest batch time. Therefore, our method is more effective than other state-of-the-art spatial–temporal networks for COVID-19 diagnosis.

Table 9.

The results of discussion about spatial–temporal networks. “M” means . Batch time means the runtime of each batch in the model testing.

5.7. Parameters experiments

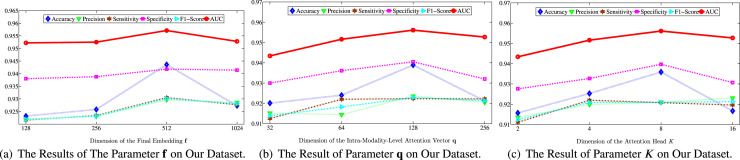

In this subsection, we investigate the sensitivity of our method to its parameters and report the results on our dataset under various settings in Fig. 6.

Fig. 6.

The result of parameters experiments.

Different Dimension of The Final Embedding We vary the dimension of the final embedding; the results are shown in Fig. 6(a). As the embedding dimension grows, the performance first rises and then slowly declines. This is because our method requires a suitable dimension to encode multi-modal information, and larger dimensions may introduce additional redundancy.

Different Dimension of Multiple-Level Modality Attention Vector Because the capability of multi-modal attention is influenced by the dimension of the multiple-level modality attention vector, we report results for different dimensions in Fig. 6(b). As this dimension grows, the performance of our method improves until it reaches its best value; beyond that point, the performance begins to degrade, possibly because of overfitting.
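To make the role of the attention-vector dimension concrete, here is a sketch of modality-level attention assuming a HAN-style formulation: each modality's embeddings are projected into a `d_q`-dimensional space, scored against an attention vector `q`, averaged over nodes, and softmax-normalized into modality weights. This formulation and the names `W`, `b`, `q`, `d_q` are assumptions; the paper's multiple-level modality attention may be defined differently. The sketch shows why the dimension matters: the projection `W` alone has `d * d_q` parameters, so a larger `d_q` adds capacity and overfitting risk.

```python
import numpy as np

def modality_attention(Z, W, b, q):
    """Fuse per-modality embeddings with a learned attention vector.

    Z: (M, N, d)   embeddings of N nodes under M modalities
    W: (d, d_q), b: (d_q,)   projection into the attention space
    q: (d_q,)      attention vector; d_q is the dimension being varied
    Returns the fused (N, d) embedding and the (M,) modality weights.
    """
    scores = np.tanh(Z @ W + b) @ q   # (M, N): importance of modality m for node n
    w = scores.mean(axis=1)            # average over nodes -> (M,)
    beta = np.exp(w - w.max())         # stable softmax over modalities
    beta /= beta.sum()
    fused = np.tensordot(beta, Z, axes=1)  # sum over m of beta[m] * Z[m]
    return fused, beta
```

With `q` set to zeros all modalities receive equal weight, so the fused embedding is just the mean over modalities; training `q` lets the model favour the more informative modality.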

Number of Attention Heads To examine the effect of multi-head attention, we evaluate our method with different numbers of attention heads. The results are shown in Fig. 6(c). Increasing the number of attention heads generally improves performance up to a best-performing setting, after which the performance gradually decreases.
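The following sketch shows what varying the number of heads changes, assuming standard scaled dot-product self-attention in which each head has its own query, key, and value projections and the head outputs are concatenated. It is a generic illustration, not the paper's exact attention layer; the stacked weight tensors `Wq`, `Wk`, `Wv` are hypothetical inputs.

```python
import numpy as np

def multi_head_attention(x, Wq, Wk, Wv):
    """Scaled dot-product self-attention with H heads, outputs concatenated.

    x: (T, d); Wq, Wk, Wv: (H, d, d_h) per-head projections.
    Returns an array of shape (T, H * d_h).
    """
    heads = []
    for wq, wk, wv in zip(Wq, Wk, Wv):      # one iteration per head
        q, k, v = x @ wq, x @ wk, x @ wv
        s = q @ k.T / np.sqrt(k.shape[1])
        s -= s.max(axis=1, keepdims=True)     # stable softmax
        a = np.exp(s)
        a /= a.sum(axis=1, keepdims=True)
        heads.append(a @ v)
    return np.concatenate(heads, axis=1)
```

Each additional head adds `3 * d * d_h` projection parameters and an independent attention pattern, which is consistent with performance improving at first and later declining as the model grows.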

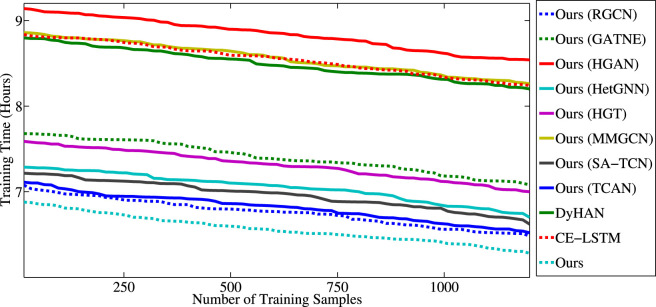

5.8. Scalability analysis

In this subsection, we investigate the scalability of our model and the compared methods when trained on different numbers of training samples. Fig. 7 shows the speedup with respect to the number of training samples on the proposed dataset.

Fig. 7.

Scalability. The training time decreases as the number of training samples increases. Ours takes less training time to converge than the other methods.

From Fig. 7, our model scales well: the training time decreases significantly as the number of training samples increases, and the proposed model converges in less than six hours with 1200 training samples. We also find that the training speed of our method increases almost linearly with the number of training samples, while the other methods converge more slowly. In addition to its state-of-the-art performance, our model is therefore scalable enough to be adopted in practice.

5.9. Robustness analysis

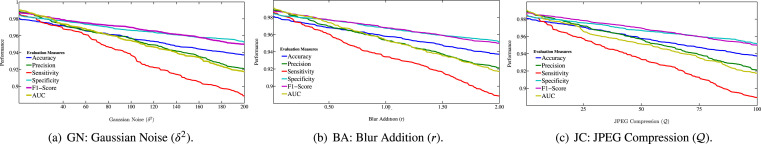

In this subsection, to investigate the robustness of our model, we apply alterations to all chest images in our proposed dataset. Specifically, we consider the most common alterations that can occur when working with digital images in the medical sector:

Gaussian Noise (GN) simulates the possible effect of incorrect manipulation of the microscopic slide (e.g., using too much dye for contrast) [115]. We consider different values for the variance of the noise.

Blur Addition (BA) may occur when a small movement of the instrument causes a loss of focus. We vary the blurring radius.

JPEG Compression (JC) may occur when images are transferred in a lossy manner. We vary the compression value.
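The first two alterations can be sketched on grayscale images stored as arrays in [0, 1]. The sketch assumes zero-mean additive Gaussian noise clipped to the valid range and a separable Gaussian blur whose standard deviation is tied to the radius (`sigma = radius / 2`, an arbitrary choice); the exact noise variances, radii, and blur kernel used in our experiments are not implied. JPEG compression is omitted because it needs an image codec; with Pillow it would typically be done by saving with `Image.save(buffer, format="JPEG", quality=q)` and reloading.

```python
import numpy as np

def add_gaussian_noise(img, variance, rng=None):
    """Add zero-mean Gaussian noise with the given variance to an image in [0, 1]."""
    rng = np.random.default_rng(rng)
    noisy = img + rng.normal(0.0, np.sqrt(variance), size=img.shape)
    return np.clip(noisy, 0.0, 1.0)

def gaussian_kernel_1d(radius):
    """Normalized 1-D Gaussian kernel of length 2*radius + 1 (sigma = radius / 2, assumed)."""
    sigma = max(radius / 2.0, 1e-6)
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return k / k.sum()

def add_blur(img, radius):
    """Separable Gaussian blur of a 2-D image; radius 0 returns a copy."""
    if radius <= 0:
        return img.copy()
    k = gaussian_kernel_1d(radius)
    padded = np.pad(img, radius, mode="edge")  # replicate borders
    # Blur along rows, then along columns (the Gaussian kernel is separable).
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)
```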

As Fig. 8 shows, the performance profiles of our model are similar under the different noisy data conditions, and the performance degradation does not exceed 0.12. This suggests that our model resists these alterations well, which demonstrates its robustness.

Fig. 8.

The result of robustness analysis: change in the performance of our model when using altered input data.

5.10. Generalization about asymptomatic infection cases

A rising number of asymptomatic patients with a confirmed diagnosis of COVID-19 has been reported. Asymptomatic infections are patients who have no clinical symptoms associated with COVID-19 (e.g., fever, cough, sore throat) but who test positive by RT-PCR on specimens such as respiratory tract samples, or positive for antibodies [116]. Asymptomatic patients can be a source of infection and carry some risk of transmission. Therefore, it is urgent to distinguish asymptomatic infected patients from non-COVID-19 patients. Asymptomatic patients with COVID-19 pneumonia can show unilateral ground-glass opacities on lung imaging [117]. The chest images of asymptomatic COVID-19 patients therefore have several characteristic imaging features, and these images are of significant diagnostic value for people in close contact with an infected person.

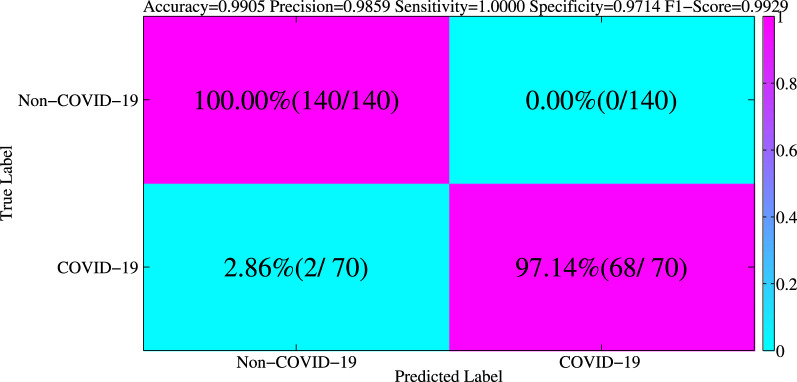

In this subsection, to further verify the performance of our algorithm, we extend our model to a specific classification task: we use the trained model to directly classify asymptomatic infection (COVID-19) cases and non-COVID-19 cases. To this end, we collect asymptomatic infection (COVID-19) cases using methods similar to those described in Section 3 and randomly choose non-COVID-19 cases from our test dataset, which together form the new test set for this generalization experiment. We analyse the results shown in Fig. 9 in terms of accuracy, precision, F1-score, sensitivity, and specificity. From Fig. 9, our model achieves a specificity of 97.14% in distinguishing asymptomatic infection cases from non-COVID-19 cases. This demonstrates the good performance of our model on this particular task and suggests that it generalizes well.
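The metrics reported for this task all derive from the 2×2 confusion matrix in Fig. 9. The sketch below computes them with COVID-19 (here, asymptomatic infection) as the positive class; the counts in the usage line are made up for illustration and do not reproduce Fig. 9.

```python
def binary_metrics(tp, fn, fp, tn):
    """Metrics from a 2x2 confusion matrix, COVID-19 as the positive class."""
    accuracy = (tp + tn) / (tp + fn + fp + tn)
    precision = tp / (tp + fp)
    sensitivity = tp / (tp + fn)  # recall on COVID-19 cases
    specificity = tn / (tn + fp)  # recall on non-COVID-19 cases
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "f1": f1,
    }

# Hypothetical counts, for illustration only.
print(binary_metrics(tp=8, fn=2, fp=1, tn=9))
```

Specificity only involves the non-COVID-19 row of the matrix, so it measures how rarely non-COVID-19 patients are flagged as infected; sensitivity is needed to judge how many asymptomatic cases are missed.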

Fig. 9.

The confusion matrix of classifying the asymptomatic infection (COVID-19) cases and non-COVID-19 cases.

5.11. Error analysis

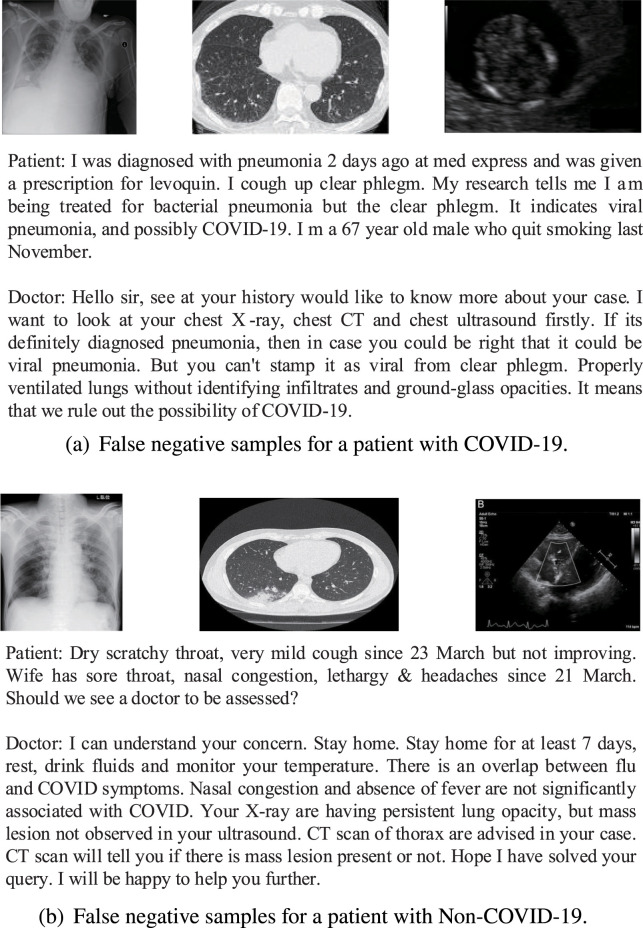

We conducted an error analysis of our model's predictions on the test data and identified two dominant types of errors, as shown in Fig. 10.

Fig. 10.

Error analysis.

In Fig. 10(a), our model predicts that the patient has COVID-19 because the doctor–patient dialogue contains terms such as ground-glass opacities, infiltrates, and viral pneumonia. These terms are closely related to COVID-19, but some of them appear in negated form, which confuses our model and leads to errors.

As shown in Fig. 10(b), our model falsely identifies this patient as non-COVID-19. In this kind of case, the doctor–patient dialogue contains phrases such as not observed and not significantly, while the doctor also uses terms such as lung opacity; this combination may prevent our model from identifying and classifying the case correctly. In summary, these errors suggest directions for future work on our model.
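Both error types involve negation, where a finding is mentioned but explicitly ruled out. A much-simplified, hypothetical NegEx-style rule illustrates how negated mentions could be separated from affirmed ones: a term counts as negated if a negation cue appears within a few tokens before it in the same sentence. The cue list, window size, and function name are assumptions, and this is not part of our model. Note that the rule only looks backward, so post-posed phrases such as "… not observed" would need an additional rule.

```python
import re

NEGATION_CUES = {"no", "not", "without", "denies", "absent"}  # illustrative, far from complete

def find_mentions(text, terms, window=5):
    """Return (term, negated) for each occurrence of each term in text.

    A mention is negated if a cue appears within `window` tokens before it
    in the same sentence (a much-simplified NegEx-style rule).
    """
    results = []
    for sentence in re.split(r"[.;!?]", text.lower()):
        tokens = re.findall(r"[a-z\-]+", sentence)
        for term in terms:
            t_toks = term.lower().split()
            n = len(t_toks)
            for i in range(len(tokens) - n + 1):
                if tokens[i:i + n] == t_toks:
                    context = tokens[max(0, i - window):i]
                    results.append((term, any(tok in NEGATION_CUES for tok in context)))
    return results
```

For instance, in "There are no ground-glass opacities. Findings suggest viral pneumonia." the first term is flagged as negated and the second as affirmed, whereas a keyword-only view would count both as evidence for COVID-19.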

6. Conclusion

In this paper, we propose a novel COVID-19 diagnosis approach that takes full advantage of multi-modal medical information to improve diagnostic performance. Our approach learns precise embeddings of multi-modal medical information and obtains medical knowledge directly through a deep learning-based network that learns from a given knowledge graph. With the learned network and medical embeddings, our approach yields a knowledge-based attention feature vector that contributes substantially to the improved performance of diagnostic models. Experimental results demonstrate the effectiveness and robustness of our approach for COVID-19 diagnosis. We hope this work can provide insights to researchers in this area and shift attention from medical images alone to doctor–patient dialogues and their corresponding medical images.

CRediT authorship contribution statement

Wenbo Zheng: Conceptualization, Methodology, Software, Validation, Investigation, Writing - original draft, Writing - review & editing. Lan Yan: Methodology, Investigation, Writing - original draft. Chao Gou: Conceptualization, Resources, Writing - review & editing, Project administration, Funding acquisition. Zhi-Cheng Zhang: Data curation, Validation, Software, Resources. Jun Jason Zhang: Supervision, Software, Validation, Investigation. Ming Hu: Data curation, Validation, Software, Resources. Fei-Yue Wang: Supervision, Funding acquisition.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

We would like to thank the General Hospital of the People’s Liberation Army and Wuhan Pulmonary Hospital for medical data and helpful advice on this research. This work is supported in part by the National Key R&D Program of China (2020YFB1600400), in part by the National Natural Science Foundation of China (61806198, 61533019, U1811463), in part by the Key Technologies Research and Development Program of Guangzhou, China (202007050002), and in part by the National Key Research and Development Program of China (No. 2018AAA0101502).

Biographies

Wenbo Zheng received his bachelor's degree in software engineering from Wuhan University of Technology, Wuhan, China, in 2017. He is currently a PhD candidate in the School of Software Engineering, Xi’an Jiaotong University, and at the State Key Laboratory for Management and Control of Complex Systems, Institute of Automation, Chinese Academy of Sciences. His research interests include computer vision and machine learning.