Conservatives are less able to distinguish truths and falsehoods than liberals, and the information environment helps explain why.

Abstract

The idea that U.S. conservatives are uniquely likely to hold misperceptions is widespread but has not been systematically assessed. Research has focused on beliefs about narrow sets of claims never intended to capture the richness of the political information environment. Furthermore, factors contributing to this performance gap remain unclear. We generated a unique longitudinal dataset combining social media engagement data and a 12-wave panel study of Americans’ political knowledge about high-profile news over 6 months. Results confirm that conservatives have lower sensitivity than liberals, performing worse at distinguishing truths and falsehoods. This is partially explained by the fact that the most widely shared falsehoods tend to promote conservative positions, while corresponding truths typically favor liberals. The problem is exacerbated by liberals’ tendency to experience bigger improvements in sensitivity than conservatives as the proportion of partisan news increases. These results underscore the importance of reducing the supply of right-leaning misinformation.

INTRODUCTION

Public opinion polls suggest that American conservatives are prone to political misperceptions, typically claiming belief in more falsehoods than liberals (1–6). Some scholars further argue that this pattern is evidence that conservatives are more biased than liberals (7). If true, these observations would have important consequences. Holding accurate political knowledge is fundamental to democracy (8), and ideological differences in citizens’ understanding of empirical evidence about politically important topics are potentially destabilizing to democracy itself (9, 10). Effective decision-making depends on having a common understanding of the reality to which citizens and lawmakers must collectively respond (11).

Despite the importance of claims about ideological differences in belief accuracy, however, empirical evidence of conservatives’ susceptibility to misperceptions is limited. The claims are based on Americans’ beliefs about a relatively narrow set of topics, which were, in many cases, intentionally selected to reflect falsehoods promoted by conservative political elites. For example, conservatives have been shown to hold less accurate beliefs about climate change, weapons of mass destruction in Iraq, and where President Obama was born, all of which have been the subject of misleading statements by high-profile members of the Republican Party (1–4). Thus, it is possible that conservatives’ relatively low accuracy about political information is a by-product of the fact that issues used in forming this assessment were selected with an eye toward detecting misperceptions among that political group. If so, conservatives’ performance might look better if we assessed beliefs about topics reflecting a wider range of issues broadly discussed by the public.

If, across a diverse set of political issues that have captured the public’s attention, conservatives still hold more misperceptions than liberals, then it would be important to understand why. One common, albeit contested, explanation is that conservatives are more biased (7). Political bias occurs when individuals respond to the same information in different ways depending on their political predispositions (12). The extent to which ideology and misperceptions are correlated could be evidence that conservatives’ beliefs are more strongly influenced by their political attitudes than liberals’ beliefs are, but other explanations are possible. It might, for instance, be that the extent to which processing is biased is the same regardless of ideology, but that the effects of bias on misperceptions are more visible among conservatives because of the composition of the political information environment (13). Perhaps, for example, the political falsehoods that receive the most public attention disproportionately advance conservative interests. In this case, conservatives would be biased toward accepting false claims, resulting in misperceptions, while liberals would be biased toward rejecting them. In such a situation, comparable levels of bias across the ideological spectrum would be associated with very different beliefs. Some researchers have further sought to assess differences in bias by balancing messages on important characteristics, such as political slant and veracity (14). This approach provides a better opportunity to assess partisan or ideological differences in bias, but it limits researchers’ ability to speak to the influence of the political information environment.

This study aims to remedy these limitations. We provide robust evidence that American conservatives discriminate between political truths and falsehoods less well than liberals when assessing a broad cross section of real-world political claims. We use a novel methodological approach to ensure that the claims assessed for veracity reflect a broad range of political issues. Rather than selecting claims on the basis of researcher intuitions, we measure participants’ beliefs about a diverse set of widely shared political claims over a 6-month period. We then demonstrate that conservatives’ relatively poor ability to separate truths from falsehoods is explained, in large part, by the political orientation of the claims in circulation. We find that high-profile true political claims tend to promote issues and candidates favored by liberals, while falsehoods tend to be better for conservatives. Even if liberals and conservatives were comparably biased, we would expect liberals to perform better in such an environment. Last, we examine ideological differences in how bias works, focusing on conservatives’ sensitivity to threat.

Theory

Misperceptions are beliefs that are inconsistent with the best available evidence (15), including both the acceptance of false claims and the rejection of true claims. Much of the research in this domain has focused on the former, but the latter is no less important. There is considerable evidence that citizens often hold misperceptions about factually accurate claims (16). This project accounts for both. Following work in signal detection theory (SDT) (17), we consider two aspects of belief accuracy. The first is sensitivity, which characterizes an individual’s ability to distinguish between truths and falsehoods. The second is response bias, which refers to individuals’ propensity to label all statements true (known as a truth bias) or all statements false.

Our first objective is to test whether conservatives’ greater susceptibility to misperceptions holds up to more careful scrutiny. As noted, this relationship has not been systematically tested, having instead been observed across a relatively small number of high-profile issues. There is, however, other evidence that is consistent with this idea. In addition to their tendency to hold misperceptions about politics, conservatives are more likely to question other scientific findings, such as whether smoking causes cancer (18). It is important to test whether this pattern holds up when assessing beliefs about the types of political claims circulating in the wider public. Our first question, then, is whether conservatives will exhibit lower sensitivity and a stronger truth bias than liberals when assessing a broad selection of high-profile real-world political claims.

Assuming this pattern holds, the more important question is why conservatives tend to perform more poorly. One possibility is that conservatives are simply more biased than liberals (7). According to the ideological asymmetry hypothesis, conservatives are uniquely likely to evaluate identical information more favorably if it supports their preferred perspective than if it challenges that perspective. This is consistent with evidence that liberals and conservatives exhibit many other important psychological differences. For example, conservatives score higher than liberals on need for order, need for cognitive closure, and dogmatism (19). Americans on the political right have more faith in their ability to intuitively recognize what is true, and they are more likely to believe that “truth” itself is a political construct (20). There is even some evidence suggesting that American conservatives are, on average, more credulous (21–23). However, evidence of ideologically asymmetric bias is mixed. A meta-analysis suggests that psychological differences associated with ideology do not translate into consistently higher levels of bias among conservatives than among liberals (24), although there is considerable debate over how to interpret these results (7, 12).

Given the ambiguity surrounding the (a)symmetry of ideological bias, we focus on another potential explanation for the pattern observed. Perhaps conservatives’ propensity to hold misperceptions is a by-product of the political information environment. We propose a pair of explanations for observed differences in liberals’ and conservatives’ misperceptions that are related to this idea. First, we consider the supply of political (mis)information. It could be that truths and falsehoods circulating most widely in the political information environment differ systematically in their implications for conservatives and liberals. If high-profile political falsehoods disproportionately promote conservatives’ interests, truths promote liberals’ interests, or both, belief accuracy on the left and right would diverge regardless of whether conservatives are more biased than liberals.

There is some evidence suggesting that political misinformation circulating online in the United States disproportionately promotes issues and candidates favored by conservatives. During the 2016 presidential election, for example, falsehoods shared via social media benefited the Republican candidate more often than the Democratic candidate (25), and this political misinformation was shared most frequently among relatively small, tightly knit groups of conservatives (26–29). This pattern may be amplified by bots, automated social media accounts that are often used to share political misinformation (30). Conservatives are more often fooled than liberals by bots purporting to share their ideology (21). Some scholars also contend that established news organizations, which are an influential source of factually accurate information, favor news coverage promoting liberal values, although this characterization is disputed (31–33). In such an environment, symmetrical motivations to believe claims reinforcing a preferred worldview would promote both liberal accuracy and conservative inaccuracy. The effect would be stronger if, as suggested by the asymmetry hypothesis, conservatives are more biased. More formally, we pose several additional research questions. Are high-profile factually accurate political claims more likely to favor the political left? Are high-profile inaccurate political claims more likely to favor the political right? And are individuals’ sensitivity and bias influenced by the political orientation of the claims they are assessing?

The second potential explanation for ideological differences in Americans’ misperceptions builds on the first. Perhaps liberals and conservatives are comparably biased, but their biases play out in different ways depending on whether a message benefits or harms their political ingroup, i.e., members of their preferred party. Liberals and conservatives exhibit a wide range of differences in their responses to negative stimuli, both physiological and psychological (34). Conservatives tend to exhibit higher threat sensitivity, suggesting that they might be more likely than liberals to reject claims that threaten their political interests, regardless of the claims’ accuracy (23). Conversely, liberals might be uniquely prone to accept claims that benefit their ingroup. These ideologically distinct expressions of bias could exacerbate belief divergence caused by the political information environment. For example, if truths tend to harm conservative interests while benefiting liberal interests, then this could make ideological differences in misperceptions more pronounced. Thus, we ask whether the influence of ideology on sensitivity and response bias is conditioned on claims’ implications for the ingroup.

To summarize, this study addresses four primary research questions. First, do conservatives exhibit lower sensitivity in distinguishing truths and falsehoods and a stronger truth bias than liberals when assessing a broad selection of widely shared claims? Second, are there differences in the distribution of truths and falsehoods favoring the interests of one political group over the other? Third, does this distribution of information help to explain differences between liberals’ and conservatives’ sensitivity and response bias? Last, are effects of the distribution of information on sensitivity and response bias moderated by political ideology?

Empirical approach

Before turning to our results, we present a brief overview of our empirical approach. To assess American conservatives’ propensity to hold inaccurate political beliefs and to test the complementary explanations that we have proposed, we generated a multifaceted longitudinal dataset. Over a 6-month period, spanning January through June 2019, we used a social media monitoring service to identify 20 of the most viral political news stories, 10 true and 10 false, every 2 weeks. This provided a systematic method for selecting high-profile claims, balanced by veracity, and ensuring that our belief accuracy measures are not unduly swayed by idiosyncratic judgments about topic importance. At the same time, we fielded a 12-wave political beliefs panel with a large representative sample of Americans (see table S1 for sample demographics). Each survey was conducted in the week following the observation of the viral news stories, allowing us to assess participants’ beliefs in a series of short statements about the selected high-profile topics (hereafter, belief statements).

We construct a pair of measures for assessing susceptibility to misinformation on the basis of SDT (17). Sensitivity is a quantitative expression of an individual’s ability to discriminate between true (signal) and false (noise) statements. The theoretical range for sensitivity is between 0 (miscategorizing every statement) and 1 (perfect categorization), with 0.5 being the equivalent of a coin flip. Response bias, which is conceptually independent of sensitivity, describes an individual’s propensity to label all claims in the same way. Negative scores correspond to a truth bias, the tendency to believe that all claims are true; positive scores correspond to a tendency to distrust all claims; and a score of zero indicates unbiased assessment.
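
The text does not name the SDT estimators used. The ranges it describes (sensitivity from 0 to 1 with 0.5 at chance; negative bias scores for a truth bias) match the nonparametric pair A′ and Grier’s B″, so the sketch below assumes those, along with binary true/false ratings. A hit is a true statement rated true, and a false alarm is a false statement rated true. The function names and the example respondent are hypothetical.

```python
def rates(responses):
    """Hit and false-alarm rates from (statement_is_true, rated_true) pairs."""
    true_ratings = [rated for is_true, rated in responses if is_true]
    false_ratings = [rated for is_true, rated in responses if not is_true]
    hit = sum(true_ratings) / len(true_ratings)        # truths rated true
    false_alarm = sum(false_ratings) / len(false_ratings)  # falsehoods rated true
    return hit, false_alarm


def a_prime(h, f):
    """Nonparametric sensitivity A': 0 = all wrong, 0.5 = chance, 1 = perfect."""
    if h == f:
        return 0.5
    if h > f:
        return 0.5 + ((h - f) * (1 + h - f)) / (4 * h * (1 - f))
    return 0.5 - ((f - h) * (1 + f - h)) / (4 * f * (1 - h))


def b_double_prime(h, f):
    """Grier's B'': negative = leans toward 'true' (truth bias), positive = leans 'false'."""
    num = h * (1 - h) - f * (1 - f)
    den = h * (1 - h) + f * (1 - f)
    if den == 0:
        # Assumption: placeholder for the degenerate 0/0 case (rates of exactly
        # 0 or 1); real analyses usually adjust extreme rates before this step.
        return 0.0
    return num / den if h >= f else -num / den


# Hypothetical respondent: 8 of 10 truths and 4 of 10 falsehoods rated true.
example = ([(True, True)] * 8 + [(True, False)] * 2
           + [(False, True)] * 4 + [(False, False)] * 6)
h, f = rates(example)
print(round(a_prime(h, f), 3), round(b_double_prime(h, f), 3))
```

For this hypothetical respondent, A′ is about 0.79 and B″ is −0.2: better than chance, with a mild truth bias.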

Last, we used Amazon’s Mechanical Turk (AMT) to determine the political slant of the belief statements. Statements were classified as favoring the left or right or as reflecting comparably on both sides (supplementary text S2 and tables S2 to S4).

RESULTS

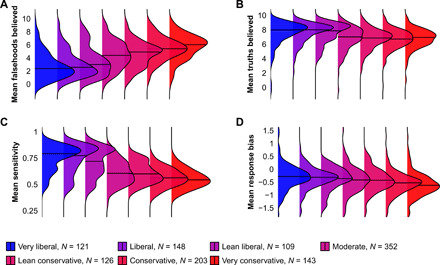

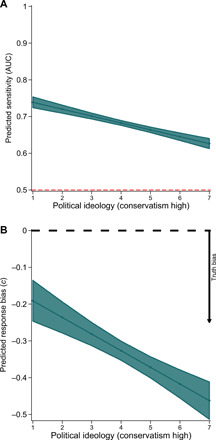

Consistent with other studies, we find that American conservatives are more likely than liberals to hold misperceptions. Visual inspection suggests stark ideological differences. Conservatives tend to claim that more falsehoods are true (Fig. 1A), while the number of truths they believe is modestly lower (Fig. 1B). Similarly, conservatives’ sensitivity tends to be lower and their truth bias stronger (Fig. 1, C and D). We estimate the relationship between ideology and the latter two outcomes, sensitivity and response bias, using random effects regression models. These models also control for potential confounders, including age, gender, education, psychological characteristics associated with misperceptions (20), and an indicator for wave (see table S5 for descriptives). Regarding our first research question, we find that ideology is a significant predictor in both models, and the signs of the coefficients indicate that increasing conservatism is associated with reduced sensitivity and a stronger truth bias (tables S6 and S7). Notably, strong conservatives’ estimated sensitivity is closer to chance than it is to strong liberals’ sensitivity (Fig. 2A), and conservatism is associated with a stronger truth bias (Fig. 2B).

Fig. 1. Probability density estimates by ideology.

(A) The number of falsehoods believed, (B) the number of truths believed, (C) sensitivity, and (D) response bias.

Fig. 2. Estimated marginal means based on random effects regression.

Estimated association between ideology and (A) sensitivity (the red dashed line denotes the performance of a random classifier) and (B) response bias.

Our first proposed explanation for conservatives’ apparent susceptibility to misperceptions concerns the characteristics of the political claims in circulation. We find that widely shared truths and falsehoods have, on average, systematically different implications for liberals than they do for conservatives (Fig. 3A). Nearly two-thirds (65.0%) of the high-engagement true statements were characterized as benefiting the political left, compared to only 10.0% that were described as benefiting the right. The pattern among falsehoods was reversed, although the relationship was attenuated: 45.8% benefited the political right versus about a quarter (23.3%) that benefited the left. This trend was consistent across all waves, although the proportion of claims favoring each side varied over time (Fig. 3B).

Fig. 3. Ideological implications of claims by veracity.

(A) Most true statements that were widely shared online over the 6-month study favored the political left, while falsehoods favored the right. (B) Boxplots show variability in the proportion of statements favoring each side over the 12 waves. True statements (green bars) typically benefited the left/harmed the right (thin outline) more than they benefited the right/harmed the left (thick outline). False statements (red bars), in contrast, favored the left less than they favored the right. The proportion of statements of each type varied over time. D, Democrats; R, Republicans.

Next, we consider the extent to which individuals’ belief accuracy is shaped by the political implications of the claims being assessed. Does the political information environment explain conservatives’ relatively low sensitivity and/or their high truth bias? We first reestimated the models of sensitivity and bias, adding four predictors (tables S8 and S9). The new measures correspond to the proportions of truths that benefit the respondent’s ingroup (including those that harm the outgroup) and that harm the ingroup (including those that benefit the outgroup), as well as a corresponding pair of proportions associated with falsehoods. For example, if 60% of true statements benefited Democrats, then that would translate to 60% benefiting the ingroup for a Democratic respondent and 60% harming the ingroup for a Republican. Note that these four proportions are only calculated for participants who self-identify as Republicans or Democrats; they are treated as missing for all others. Consistent with decades of research on biased assimilation (35), we find that participants’ responses to information are systematically biased in favor of the ingroup. Sensitivity improves as more truths benefit the respondent’s ingroup (B = 0.174, SE = 0.011, and P < 0.001), and it is reduced when more falsehoods benefit it (B = −0.143, SE = 0.009, and P < 0.001). For example, if only 10% of true claims benefited the ingroup, then an average participant has a predicted sensitivity score of 0.63; if 90% of the claims benefited it, then predicted sensitivity is 0.77. For false claims, the corresponding predicted sensitivity scores are 0.71 and 0.60. Unexpectedly, we also observe that sensitivity improves with the proportion of truths harming the ingroup, although the magnitude of the effect is substantively small (B = 0.054, SE = 0.012, and P < 0.001). This corresponds to a change in predicted sensitivity (as specified above) from 0.68 to 0.67.
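
The mapping from a statement’s slant to a respondent’s ingroup can be sketched as follows. This assumes each statement in a wave carries one of three hypothetical labels ("left" for benefits Democrats or harms Republicans, "right" for the reverse, "neutral"), and that only Democrats and Republicans receive values, as the text specifies. The labels, function names, and example wave are illustrative, not the study’s coding scheme.

```python
def slant_shares(statements):
    """Share of true and of false statements labeled 'left' or 'right'.

    statements: list of (is_true, slant) with slant in {'left', 'right', 'neutral'}.
    """
    shares = {}
    for veracity in (True, False):
        subset = [slant for is_true, slant in statements if is_true == veracity]
        n = len(subset)
        shares[veracity] = {side: subset.count(side) / n for side in ("left", "right")}
    return shares


def ingroup_proportions(statements, party):
    """The four ingroup proportions for one wave; None for non-partisans (missing)."""
    if party not in ("Democrat", "Republican"):
        return None
    shares = slant_shares(statements)
    own, other = ("left", "right") if party == "Democrat" else ("right", "left")
    return {
        "truths_benefit_ingroup": shares[True][own],
        "truths_harm_ingroup": shares[True][other],
        "falsehoods_benefit_ingroup": shares[False][own],
        "falsehoods_harm_ingroup": shares[False][other],
    }


# Hypothetical wave: 10 true and 10 false statements.
wave = ([(True, "left")] * 6 + [(True, "right")] * 1 + [(True, "neutral")] * 3
        + [(False, "left")] * 2 + [(False, "right")] * 5 + [(False, "neutral")] * 3)
dem = ingroup_proportions(wave, "Democrat")
rep = ingroup_proportions(wave, "Republican")
```

In this example wave, 6 of 10 truths favor the left, so the Democrat’s truths-benefiting-ingroup value and the Republican’s truths-harming-ingroup value are both 0.6, mirroring the 60% example in the text.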

Ingroup favoritism is also evident in response bias. Participants exhibit more truth bias (that is, they are more likely to say every claim is true) the larger the proportion of claims benefiting the ingroup, whether those claims are true (B = −0.546, SE = 0.049, and P < 0.001) or false (B = −0.147, SE = 0.052, and P < 0.01). Replicating the comparison of predicted values (10% versus 90% of claims benefiting the ingroup), the estimated bias is −0.17 versus −0.61 for true claims and −0.30 versus −0.42 for false claims. The higher the proportion of false claims harming the ingroup, the more likely participants are to describe all claims as false (B = 0.365, SE = 0.041, and P < 0.001), corresponding to an estimated bias of −0.43 versus −0.14. A higher proportion of truths harming the ingroup is associated with a higher propensity to label all statements true (B = −0.171, SE = 0.052, and P < 0.01), corresponding to an estimated bias of −0.29 versus −0.43.
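
The reported coefficients and predicted values can be cross-checked with simple arithmetic. Assuming the models are linear in each proportion, moving one proportion from 10% to 90% shifts the prediction by 0.8 × B. The p-values below additionally assume a large-sample normal approximation to B/SE, since degrees of freedom are not reported here. Predicted values in the text are rounded to two decimals, so agreement is approximate.

```python
import math

# Coefficients (B) and standard errors (SE) as reported in the text.
REPORTED = {
    "sensitivity ~ truths benefiting ingroup": (0.174, 0.011),
    "sensitivity ~ falsehoods benefiting ingroup": (-0.143, 0.009),
    "sensitivity ~ truths harming ingroup": (0.054, 0.012),
    "bias ~ truths benefiting ingroup": (-0.546, 0.049),
    "bias ~ falsehoods benefiting ingroup": (-0.147, 0.052),
    "bias ~ falsehoods harming ingroup": (0.365, 0.041),
    "bias ~ truths harming ingroup": (-0.171, 0.052),
}


def implied_gap(b, low=0.10, high=0.90):
    """Change in a linear prediction when one proportion moves from low to high."""
    return b * (high - low)


def two_sided_p(b, se):
    """Large-sample (normal) two-sided p-value for H0: B = 0, i.e. 2 * (1 - Phi(|z|))."""
    z = abs(b / se)
    return math.erfc(z / math.sqrt(2))


for name, (b, se) in REPORTED.items():
    print(f"{name}: gap {implied_gap(b):+.3f}, p {two_sided_p(b, se):.2g}")
```

For instance, 0.8 × 0.174 ≈ 0.14 matches the move from 0.63 to 0.77, and 0.8 × (−0.546) ≈ −0.44 matches the move from −0.17 to −0.61.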

However, ideology remains a highly significant predictor after controlling for these environmental characteristics. In other words, the political information environment matters, but even after accounting for its attributes, conservatives tend to be more prone to misperceptions than liberals.

The second potential explanation for conservatives’ misperceptions concerns the possibility that conservatives and liberals respond differently to claims harming or benefiting the ingroup. To test this idea, we estimated a pair of regression models that include fixed effects for the participant. One model predicts sensitivity while the other predicts response bias. Both models include interactions between ideology and the four proportions characterizing claims’ implications for the ingroup. In contrast to prior analyses, we can use fixed effects regression here because ideology interacts with the time-varying proportions: ideology does not change across waves, so its main effect is absorbed by the participant fixed effects, but its interactions with proportions that vary from wave to wave remain estimable. Fixed effects regression provides better protection against omitted variable bias, effectively controlling for all stable individual characteristics (e.g., education) without needing to include separate variables for each (36).
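
Why a time-invariant variable drops out of a fixed effects model while its interaction with a time-varying one does not can be seen in a small simulation. The sketch below uses the within (demeaning) estimator, one standard way to fit participant fixed effects, on invented data; the coefficients 0.2 and −0.1 are hypothetical, not estimates from this study.

```python
import numpy as np

rng = np.random.default_rng(0)
n_people, n_waves = 200, 12
person = np.repeat(np.arange(n_people), n_waves)     # participant id for each row

ideology = rng.uniform(-1, 1, n_people)[person]      # fixed within a person
proportion = rng.uniform(0, 1, person.size)          # varies from wave to wave
stable_traits = rng.normal(0, 1, n_people)[person]   # unobserved, e.g. education

# Hypothetical data-generating process (not the study's estimates).
y = (stable_traits + 0.2 * proportion - 0.1 * ideology * proportion
     + rng.normal(0, 0.02, person.size))


def demean(x, groups):
    """Subtract each group's mean from its rows: the 'within' transformation."""
    means = np.bincount(groups, weights=x) / np.bincount(groups)
    return x - means[groups]


X = np.column_stack([proportion, ideology * proportion, ideology])
X_within = np.column_stack([demean(col, person) for col in X.T])
y_within = demean(y, person)

# The ideology column becomes all zeros: its main effect is absorbed,
# along with the unobserved stable traits.
print(np.allclose(X_within[:, 2], 0))

# The proportion and the ideology-by-proportion interaction remain identified.
beta, *_ = np.linalg.lstsq(X_within[:, :2], y_within, rcond=None)
print(beta)  # close to the hypothetical [0.2, -0.1]
```

Demeaning removes anything constant within a participant, which is why the stable traits never need to be measured, and also why the ideology main effect cannot be estimated on its own in this design.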

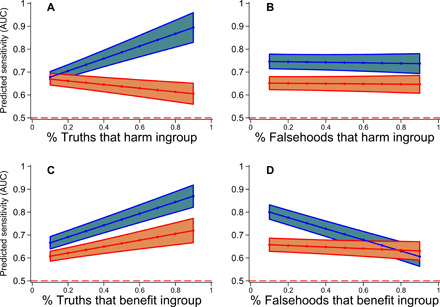

Results are consistent with the idea that liberals and conservatives respond differently to different message environments (table S10). The influence of conservatism on individuals’ ability to distinguish between truths and falsehoods is contingent on what proportion of information benefits or harms the ingroup. For example, the proportion of truths that harm the ingroup (or benefit the outgroup) is associated with a bigger increase in sensitivity among strong liberals than strong conservatives (Fig. 4A). We observe a similar pattern between sensitivity and the proportion of truths that benefit the ingroup, although the difference between the ideologies is less pronounced (Fig. 4C). Turning to falsehoods, strong liberals experience a bigger drop in sensitivity than strong conservatives as the proportion of falsehoods benefiting the ingroup increases (Fig. 4D).
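
An interaction term is what allows the slope of sensitivity on a proportion to differ by ideology, as in Fig. 4. The sketch below shows how group-specific slopes follow from a main effect plus an interaction. The coefficients are invented for illustration (they are not the estimates in table S10), and the centered 7-point ideology scale is an assumption.

```python
# Hypothetical coefficients for illustration only; NOT the table S10 estimates.
B_PROPORTION = 0.30     # slope of a proportion for a moderate (ideology = 0)
B_INTERACTION = -0.03   # change in that slope per point of conservatism

# Assumed centered 7-point scale: -3 = strong liberal, +3 = strong conservative.
STRONG_LIBERAL, STRONG_CONSERVATIVE = -3, 3


def slope_for(ideology):
    """Within-person slope of sensitivity on the proportion at a given ideology."""
    return B_PROPORTION + B_INTERACTION * ideology


def change_over_range(ideology, low=0.10, high=0.90):
    """Predicted change in sensitivity as the proportion moves from low to high."""
    return slope_for(ideology) * (high - low)


for label, score in [("strong liberal", STRONG_LIBERAL),
                     ("strong conservative", STRONG_CONSERVATIVE)]:
    print(f"{label}: slope {slope_for(score):.2f}, "
          f"change {change_over_range(score):+.3f}")
```

With a negative interaction, the slope shrinks as conservatism increases, producing the steeper line for strong liberals seen in Fig. 4A and C.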

Fig. 4. Sensitivity by ideological implications and veracity.

Estimated marginal means based on fixed effects regression comparing strong liberals (blue) to strong conservatives (red). The red dashed line denotes the performance of a random classifier. (A) Liberals’ sensitivity increases more rapidly than conservatives’ as the proportion of truths harming the ingroup increases. (B) Conservatives’ and liberals’ sensitivity is unrelated to the proportion of ingroup-harming falsehoods. (C) Liberals’ sensitivity increases more rapidly than conservatives’ as the proportion of truths benefiting the ingroup increases. (D) Liberals’ sensitivity declines more rapidly than conservatives’ as the proportion of falsehoods benefiting the ingroup increases.

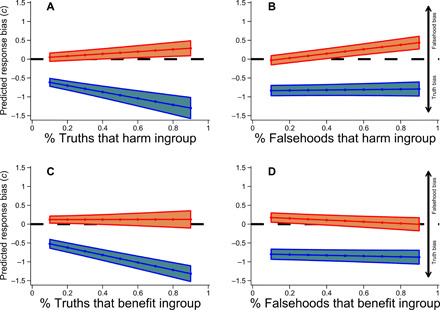

We observe a similar pattern when examining the contingent nature of ideology’s relationship with response bias. The models’ specifications are identical to those used to assess sensitivity except for the outcome measure (table S11). We find that, compared to conservatives, liberals exhibit a stronger truth bias as the proportion of truths that benefit or harm the ingroup increases (Fig. 5, A and C). There is also a very small difference in how liberals’ and conservatives’ response bias changes with the proportion of falsehoods that harm the ingroup, with conservatives being more likely to label all claims false (Fig. 5B). The relationship between the proportion of falsehoods that benefit the ingroup and response bias is not contingent on ideology (Fig. 5D).

Fig. 5. Response bias by ideological implications and veracity.

Estimated marginal means based on fixed effects regression models comparing strong liberals (blue) to strong conservatives (red). (A) Liberals’ tendency to label all claims true increases faster than conservatives’ as the proportion of truths harming the ingroup increases. (B) Conservatives’ tendency to label all claims false increases faster than liberals’ as the proportion of falsehoods harming the ingroup increases. (C) Liberals’ tendency to label all claims true increases faster than conservatives’ as the proportion of truths benefiting the ingroup increases. (D) Conservatives’ and liberals’ response bias is unrelated to the proportion of ingroup-promoting falsehoods.

DISCUSSION

This study provides the most rigorous evidence to date that U.S. conservatives are uniquely susceptible to political misperceptions in the current sociopolitical environment. Data were collected over 6 months in 2019 and reflect Americans’ beliefs about hundreds of political topics. The topics were selected on the basis of social media engagement, suggesting that these are the very issues that Americans were most likely to encounter online. Analyses suggest that conservatism is associated with a lesser ability to distinguish between true and false claims across a wide range of political issues and with a tendency to believe that all claims are true.

The study also shows that conservatives’ propensity to hold misperceptions is partly explained by the political implications of this widely shared news. Socially engaging truthful claims tended to favor the left, while engaging falsehoods disproportionately favored the right. In such an environment, the belief accuracy of liberals and conservatives would be expected to diverge even if ideological bias is symmetrical. The same propensity to believe ingroup-favorable claims and to reject ingroup-harming claims would promote accuracy among liberals while simultaneously promoting inaccuracy among conservatives. Conservative misperceptions are not, however, fully explained by the political orientation of widely shared news stories. Conservatism is associated with misperceptions even after controlling for how the stories in circulation reflect on individuals’ ingroup.

We also find evidence that ideology influences how bias is expressed. The relationship between the composition of the information environment and both sensitivity and bias is contingent on ideology. For example, liberals exhibited more rapid increases in sensitivity and truth bias than conservatives as the proportion of factually accurate news stories that benefited or harmed the ingroup increased. In other words, the more politically neutral true stories there were in a wave, the more liberals resembled conservatives; the presence of politically charged true stories exacerbated the ideological divide in sensitivity and response bias. Individuals who are more liberal also experienced a more rapid drop in sensitivity as the proportion of falsehoods benefiting the ingroup increased (though their performance never dropped below that of conservatives). Conservatives, in contrast, displayed a slightly faster increase in their propensity to label all claims false as the proportion of falsehoods harming the ingroup grew. These differential responses to who benefits from the claims being evaluated underscore the importance of understanding how the media environment may influence people’s judgments of contested claims.

These results are not as simple as prior theory might suggest. It is not the case that conservatives are uniformly more responsive to ingroup threats. Liberals responded more strongly than conservatives to harmful truths, but their response was to become better at discriminating between truths and falsehoods. We do see evidence that liberals are more prone to accept claims that benefit the ingroup, although the strength of the relationship is modest. Liberals’ sensitivity decreased in the face of politically beneficial falsehoods, resulting in performance comparable to that of conservatives in the most extreme cases. What is unambiguous here is that Americans’ response to the composition of the political information environment is dependent on their ideology.

Collectively, these results underscore the importance of policies designed to ensure that news shared in the political information environment is reliable and factually accurate. Conservatives’ consistently poor performance in distinguishing truths from falsehoods appears to be partly explained by the fact that widely shared falsehoods were systematically more supportive of conservatives’ political positions. This suggests that reducing the flow of conservative-favorable misinformation and/or promoting the flow of conservative-favorable accurate information could substantially reduce the gap in belief accuracy across the ideological spectrum. This is a difficult task. It creates competing risks of censorship, if conservative claims are unfairly suppressed, and of false equivalence, if trivial but factually accurate claims are elevated in order to ensure that both sides are represented in news coverage (37). Political leaders, policymakers, and technology companies each have roles to play. Furthermore, conservative political elites have a powerful role in shaping the political information environment, and a good faith effort to promote honest discourse could be an effective strategy for improving Americans’ belief accuracy. Policymakers must provide more guidance about how to guard against misinformation in ways that are sensitive to the risks identified here, and technology companies must develop and enact policies and technologies that allow them to identify and slow the spread of misinformation.

Although this study provides valuable evidence that conservative misperceptions are significantly shaped by the political information environment, it has some important limitations. This research was conducted in the United States, but we do not mean to suggest that the problems addressed here are unique to that country. To the contrary, there is ample evidence that political misperceptions are a global phenomenon and that they pose challenges to effective governance around the world (38, 39). The United States does, however, provide an important opportunity to study the relationship between the political information environment and misperceptions. The country is a mature democracy that has historically scored well on media freedom. Yet, over the past two decades, it has grappled with a growing number of high-profile, and often politically consequential, misperceptions. Understanding whether lessons learned in the United States apply to other parts of the world is an important open question. Are perceptions of who benefits from the political claims circulating in a society, both true and false, a robust factor for explaining the prevalence of misperceptions globally?

In the United States, political ideology and partisanship are highly correlated. Although this work focuses on ideology as the primary individual trait variable that is related to an ability to distinguish truth from falsehood, in the American context, party identification is likely to be similarly predictive. An examination of the belief statements clearly shows that the partisanship of actors in widely circulated stories is often central to the underlying claim. Furthermore, replicating these analyses using a measure of party affiliation in place of our measure of ideology yields nearly identical results. Because of the high correlation between partisanship and ideology in our sample—as well as in the U.S. population, more broadly—disentangling the effects of party identification and ideology would be challenging. However, in many other countries, the relationship between political ideology and party identification is substantially weaker, and these contexts may provide opportunities to better understand the separate, or combined, influences of ideology and party on political misperceptions.

Another important limitation concerns the asymmetry hypothesis. As with prior scholarship, this study does not settle the debate over whether bias is ideologically asymmetrical. Some aspects of the results are consistent with this idea. For example, attributes of the political information environment only partially explain conservatives’ relative lack of accuracy about political news. Conservatism is associated with worse performance even after accounting for the fact that high-profile truths tend to favor the left and falsehoods the right. Supporters of the ideological asymmetry hypothesis have explicitly called for tests such as this one, which use belief statements that are more “representative of the topics of political debates at the time of the study” (7). Data reported here leave little doubt that conservatism is associated with less accurate judgments about a wide range of contemporary political topics. It seems increasingly likely that this is due, at least in part, to individual-level psychological differences associated with conservatism. It is even conceivable that we are underestimating the effects of bias. For example, we cannot rule out the possibility that conservative media generate or amplify misleading content in response to consumer demand. That is, these outlets may be producing content to sate the appetite of a credulous conservative audience. Our design does not, however, rule out other possible explanations (24).

A strength of our design is that we selected claims on the basis of engagement data and on whether the claims could be verified as either true or false. This allows us to ask participants about a wide variety of politically important topics that are garnering substantial attention on social media. However, the approach comes with some trade-offs. By selecting belief statements using social media data, the claims studied reflect processes that produce high engagement on social media platforms. These include actions that users take directly, such as sharing and liking stories, as well as actions of the platforms and the algorithms that underlie them, such as ranking stories in users’ feeds. Furthermore, the characteristics of those who share and consume political news on social media may not be fully representative of the processes of consuming and sharing information through other media. Because of this, our decision to test belief in claims from social media among survey respondents who may or may not use social media is of critical importance to the generalizability of the relationships that we observe.

Another important unanswered question raised by these data is why accurate political news tended to advantage the left, while falsehoods helped the right. Some scholars have suggested that this, too, reflects a deeper truth about ideological differences, arguing that liberalism is more “compatible with the epistemic standards, values, and practices” of science (7). Others would likely contend that this is evidence that the news media and/or social media exhibit a liberal bias (33). The latter explanation, however, fails to explain why falsehoods disproportionately advantage the right. We suspect that this is due, at least in part, to foreign powers’ efforts to sow political discord (40) and to the propensity of the then-sitting president to promote politically advantageous falsehoods (41), although the extent to which the party in power affects these relationships remains an open question.

In sum, American conservatives in the early 21st century are uniquely likely to hold political misperceptions. This is due, in large part, to characteristics of the messages circulating in the political information environment. Widely shared accurate political news disproportionately advances liberal interests, while viral falsehoods most often promote conservative interests. Together, these characteristics contribute to stark ideological differences in citizens’ ability to distinguish between truths and falsehoods about high-profile topics. This pattern may be exacerbated by the fact that liberals tend to experience bigger improvements in sensitivity than conservatives as the proportion of partisan news increases.

Widespread political misperceptions pose a notable threat to democracy, which is dependent on citizens’ ability to make informed decisions. The evidence presented here suggests that it may be possible to enhance conservatives’ ability to distinguish between political truths and falsehoods by altering the political information environment. If widely shared political news contained fewer falsehoods promoting conservative causes or more conservative-favorable accurate information, then misperceptions among conservatives would likely decline.

MATERIALS AND METHODS

Data collection for the panel was administered by YouGov between 20 February and 31 July 2019. Informed consent was obtained after the nature and possible consequences of the study were explained. The company used a sample matching methodology to construct a representative sample (N = 1204 at baseline). The frame against which the sample was matched was constructed using stratified sampling from the full 2016 American Community Survey 1-year sample, with selection within strata by weighted sampling with replacement. Retention between waves of the panel ranged from 66.5 to 75.4% (n varies from 801 to 908), and we allowed participants to return after missing intervening waves. Approximately three-quarters (76.1%) of the sample completed at least half the waves, and almost a third (30.5%) completed all 12.

To generate the belief statements assessed in these surveys, every 2 weeks, we used the NewsWhip API to retrieve social media engagement data for the 5000 news stories with the most engagement in the past 7 days. Aggregate user engagement was measured as the total number of reactions, shares, and comments associated with each URL posted on Facebook and/or Twitter (42). Given Facebook’s market share, however, engagement on this platform tended to dominate. Using these data, we identified 20 widely circulating viral news stories (URLs with very high engagement on social media) whose key claims were unambiguously true or false. We established story veracity using several indicators, including source domain, other news coverage, published assessments by fact checkers, and assessments by scholars with relevant expertise. The team then drafted short statements briefly summarizing key claims, either true or false, promoted by each article’s headline and/or content (tables S2 and S3).
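The ranking step that precedes manual veracity screening can be sketched in a few lines of Python. The record type below is hypothetical (it is not the NewsWhip API schema), the 7-day window is assumed to have been applied when the data were retrieved, and only the summing and ranking of engagement is shown; choosing the 20 stories whose claims were unambiguously true or false relied on human judgment and is not modeled.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class PostEngagement:
    """Hypothetical per-platform engagement record for one URL (not the NewsWhip schema)."""
    url: str
    platform: str  # e.g., "facebook" or "twitter"
    reactions: int
    shares: int
    comments: int


def top_urls(records, n):
    """Rank URLs by aggregate engagement: reactions + shares + comments, summed across platforms."""
    totals = Counter()
    for rec in records:
        totals[rec.url] += rec.reactions + rec.shares + rec.comments
    # most_common returns (url, total) pairs in descending order of total engagement.
    return [url for url, _ in totals.most_common(n)]
```

With n = 5000, the returned list would correspond to the pool of stories screened in each 2-week cycle.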

In alternate weeks, we asked survey respondents to indicate the veracity of the 20 belief statements on a four-point scale, anchored by “definitely true” and “definitely false.” To guard against the risk that demand characteristics might lead participants to search the news before responding, we explicitly asked them to answer based only on existing knowledge, without conducting additional research. We note that these measures capture how people portray their beliefs on a public opinion survey, which is not necessarily the same as their “true” beliefs. For example, some participants may have responded strategically, expressing belief in claims they know to be false or denying claims they know to be true (43). However, recent evidence suggests that the influence of partisan cheerleading in studies such as our own is small (44). Regardless of whether participants’ stated beliefs were strategic or not, these belief statements are consequential, making the political information environment more challenging to navigate for others.

We construct a pair of measures to assess participants’ susceptibility to misinformation: sensitivity and response bias (17, 45). To measure sensitivity, we construct a receiver operating characteristic (ROC) curve for each participant in each wave on the basis of their ratings of the 20 statements in that wave, and we calculate the area under the curve (AUC) using trapezoidal approximation. To compute response bias, c, we treat each rating as either correct or incorrect (for true statements, “definitely true” and “mostly true” were coded as correct, with corresponding codes for false statements). Then, for each participant in each wave, we average the z scores of the hit rate (the proportion of truths labeled true) and the false alarm rate (the proportion of falsehoods labeled true). Following signal detection theory (SDT) conventions, we multiply the result by −1, so that negative values of c indicate a tendency to label statements true and positive values a tendency to label them false.
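A minimal Python sketch of the two per-wave measures follows. It assumes the four response options are coded 1 (“definitely false”) through 4 (“definitely true”), with “mostly false” as the remaining intermediate option, and it applies a log-linear correction to the hit and false alarm rates so that z scores stay finite when a rate is 0 or 1; the text above does not say how, or whether, such rates were corrected, so that step is an assumption. Function names are illustrative.

```python
from statistics import NormalDist

# Assumed coding: 1 = "definitely false", 2 = "mostly false",
# 3 = "mostly true", 4 = "definitely true"; truth[i] is True for a true statement.


def roc_auc(ratings, truth):
    """Sensitivity: area under the empirical ROC curve via the trapezoidal rule."""
    true_r = [r for r, t in zip(ratings, truth) if t]
    false_r = [r for r, t in zip(ratings, truth) if not t]
    points = [(0.0, 0.0)]
    # Each cutoff k treats ratings >= k as a "true" judgment, from strictest to most lenient.
    for k in (4, 3, 2):
        hit = sum(r >= k for r in true_r) / len(true_r)
        fa = sum(r >= k for r in false_r) / len(false_r)
        points.append((fa, hit))
    points.append((1.0, 1.0))
    # Sum trapezoid areas between consecutive (false alarm, hit) points.
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


def response_bias(ratings, truth):
    """Criterion c = -(z(H) + z(F)) / 2, counting ratings of 3 or 4 as "true"."""
    n_true = sum(1 for t in truth if t)
    n_false = len(truth) - n_true
    hits = sum(r >= 3 for r, t in zip(ratings, truth) if t)
    false_alarms = sum(r >= 3 for r, t in zip(ratings, truth) if not t)
    # Log-linear correction (an assumption here) keeps rates of 0 or 1 finite under z.
    h = (hits + 0.5) / (n_true + 1)
    f = (false_alarms + 0.5) / (n_false + 1)
    z = NormalDist().inv_cdf
    return -(z(h) + z(f)) / 2
```

In the panel, each call would receive one participant's 20 ratings for one wave. Because the scale has four points, the empirical ROC curve has at most three interior points, so the trapezoidal rule over those few points is straightforward.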

The baseline survey also included three seven-point measures of ideology, one focusing on politics generally, another on economic ideology, and a third on social ideology. Higher scores corresponded to greater conservatism. We created an aggregate ideology score by averaging the three items (α = 0.948, M = 4.14, and SD = 1.75).
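For reference, the reliability coefficient reported above is Cronbach's α, computed for k items as α = k/(k − 1) · (1 − Σ item variances / variance of the summed score). The Python sketch below implements that formula and the averaged ideology score; the input layout (one list of scores per item, aligned by respondent) is an assumption made for illustration. It uses population variances, which give the same α as sample variances because the common scaling factor cancels in the ratio.

```python
from statistics import mean, pvariance


def cronbach_alpha(items):
    """items: k lists, each holding one item's scores for the same respondents, in the same order."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's summed score
    item_variance = sum(pvariance(scores) for scores in items)
    return k / (k - 1) * (1 - item_variance / pvariance(totals))


def ideology_score(general, economic, social):
    """Aggregate ideology: mean of three seven-point items (higher = more conservative)."""
    return mean([general, economic, social])
```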

To determine the political slant of the belief statements, we relied on crowdworkers recruited using AMT. Prior work has shown that, although crowdworkers may differ from the general population in some important ways (46), they are capable of producing high-quality labels, including labels related to messages’ political orientation (32, 47). We posted a Human Intelligence Task (HIT) briefly describing the study to workers living in the United States who had previously completed at least 1000 HITs with a minimum approval rating of 99%. The incentive for participating was $0.15 for each statement coded. A total of 45 AMT workers evaluated the 240 statements, with five Democrats and five Republicans assigned to each statement. We categorized statements by party rather than by ideology because party made the labeling task more concrete; in the United States, the two are highly correlated. Workers were presented with one statement at a time and were asked how the statement, if true, would make them feel about the ingroup (e.g., for a crowdworker who identified as a Democrat, “Democratic candidates or causes”) and the outgroup (for the same crowdworker, “Republican candidates or causes”). Responses were given using 11-point semantic differentials (worse to better). We labeled each statement as favoring the party that benefited more or was hurt less according to the two groups of partisan workers. When both groups said that neither party benefited more, or when they gave contradictory assessments, we labeled the statement as favoring neither party (supplementary text S2 and tables S2 to S4). Using these labels, we then computed, separately for true and false statements, the proportion favoring each party in every wave of the panel. For example, if a wave contained 10 true statements, of which five disproportionately benefited Democrats, three benefited Republicans, and two favored neither party, a Democratic respondent would have the following scores for true statements: 50% benefit the ingroup, 30% harm the ingroup, and 20% neither benefit nor harm the ingroup.
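The proportions in the worked example can be computed with a few lines of Python. This sketch assumes each statement in a wave already carries a label of "D", "R", or "neither" from the crowdworker procedure and that the respondent's party is known; how independents or leaners were assigned is not described here, so the function simply takes a party code. The label codes and function name are hypothetical. It would be called once on a wave's true statements and once on its false statements.

```python
def ingroup_shares(labels, party):
    """Percent of statements that benefit, harm, or neither benefit nor harm the respondent's party.

    labels: one label per statement, each "D", "R", or "neither" (hypothetical coding).
    party: the respondent's party, "D" or "R".
    """
    n = len(labels)
    benefit = sum(label == party for label in labels)
    neither = sum(label == "neither" for label in labels)
    harm = n - benefit - neither  # statements favoring the other party
    return {
        "benefit": 100 * benefit / n,
        "harm": 100 * harm / n,
        "neither": 100 * neither / n,
    }
```

For the example above, a Republican respondent seeing the same 10 true statements would receive 30% benefit, 50% harm, and 20% neither.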

We use two primary estimation strategies. First, our analyses of the relationship between ideology and our outcome variables use random effects regression. For those analyses, we estimate the following equation:

$$Y_{it} = \mu + \beta x_i + \theta_t + u_{it}$$

where the dependent variable $Y_{it}$ is either the sensitivity or the response bias of participant $i$ in wave $t$. The variable $x_i$ is the participant’s ideology as measured in the baseline wave. The variable $\mu$ captures the overall average of the dependent variable across individuals and waves. The model uses random effects for the wave, denoted $\theta_t$, and individual-specific random effects, denoted $u_{it}$, in addition to control variables for age, sex, education, and three psychological factors (Faith in Intuition, Need for Evidence, and Truth is Political), all of which were measured at the baseline wave.

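Our second estimation strategy is similar, but because our key variable of interest is time-varying, we use fixed effects regression, which obviates the need for individual-level control variables and provides more robust protection against omitted variable bias from stable individual characteristics. For these analyses, we estimate the following equation:

$$Y_{it} = \alpha_i + \tau_t + \beta_1 a_{it} + \beta_2 b_{it} + \beta_3 c_{it} + \beta_4 d_{it} + \beta_5 a_{it} x_i + \beta_6 b_{it} x_i + \beta_7 c_{it} x_i + \beta_8 d_{it} x_i + \varepsilon_{it}$$
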
where the dependent variable $Y_{it}$ is either the sensitivity or the response bias of participant $i$ in wave $t$. The variable $\alpha_i$ is a fixed effect for individual $i$, and $\tau_t$ is a fixed effect for wave $t$. For individual $i$ in wave $t$, $a_{it}$ is the percentage of true statements that harm the ingroup, $b_{it}$ is the percentage of false statements that harm the ingroup, $c_{it}$ is the percentage of true statements that benefit the ingroup, and $d_{it}$ is the percentage of false statements that benefit the ingroup. The variable $x_i$ is the participant’s ideology as measured in the baseline wave. We note that these models do not include a first-order term for ideology, as it is time-invariant and is therefore captured by the individual-level fixed effect $\alpha_i$.
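Why a time-invariant variable such as ideology cannot enter a fixed effects model on its own can be seen in the within transformation, which subtracts each participant's own mean from every variable. The Python sketch below is a deliberately simplified illustration, not the estimation code used in this study: it handles a single time-varying regressor, omits the wave fixed effects, the other share variables, and the ideology interactions, and uses hypothetical column names (`pid`, `a`, `y`, `ideology`). Because ideology is constant within a participant, its demeaned value is zero in every row, so no coefficient on it can be estimated, whereas an interaction of a share variable with ideology still varies within participant and survives.

```python
from collections import defaultdict


def within_transform(rows, columns, unit="pid"):
    """Subtract each participant's own mean from the listed columns (one-way within transformation)."""
    sums = defaultdict(lambda: defaultdict(float))
    counts = defaultdict(int)
    for row in rows:
        counts[row[unit]] += 1
        for col in columns:
            sums[row[unit]][col] += row[col]
    return [
        {col: row[col] - sums[row[unit]][col] / counts[row[unit]] for col in columns}
        for row in rows
    ]


def fe_slope(rows, y="y", x="a"):
    """Single-regressor fixed effects (within) estimate of the slope of y on x."""
    demeaned = within_transform(rows, [y, x])
    sxy = sum(r[x] * r[y] for r in demeaned)
    sxx = sum(r[x] ** 2 for r in demeaned)
    return sxy / sxx
```

In practice, a model with all eight terms and both sets of fixed effects would be fit with a regression package. On an unbalanced panel like this one, where participants could skip waves, demeaning once by participant and once by wave is not exactly equivalent to including both sets of fixed effects.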

Last, our research design relies on a long-term panel, and hence, it is important to understand the possible effects that panel attrition may have on our results. To examine whether the relationships that we observe are robust to the exclusion of differing subsamples, we replicated the regression results for those who completed more than six waves of the panel and for those who completed all 12 waves of the panel (see tables S12 to S23). In all cases, the coefficients of interest are very similar to those observed in the full panel, suggesting that panel attrition does not substantively affect our results.
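Constructing the robustness subsamples amounts to counting completed waves per participant. A minimal sketch, assuming responses are stored as hypothetical (participant_id, wave) pairs; passing `min_waves=7` yields the subsample that completed more than six waves, and `min_waves=12` the subsample that completed all waves.

```python
from collections import Counter


def completers(responses, min_waves):
    """Participants who completed at least `min_waves` distinct waves.

    responses: iterable of (participant_id, wave) pairs, one per completed survey.
    """
    # set() drops accidental duplicate submissions for the same wave.
    waves_done = Counter(pid for pid, _ in set(responses))
    return {pid for pid, n in waves_done.items() if n >= min_waves}
```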

Acknowledgments

We thank S. Soroka for valuable input when planning the study; S. Poulsen for extensive assistance during data collection; and J. Coronel, R. Fazio, Q. Li, H. Padamsee, M. Prior, P. Resnick, participants at the Facebook Integrity Research Academic Workshop, and participants at the Michigan Symposium on Media and Politics for thoughtful feedback on earlier versions of the work. Funding: Facebook Integrity Foundational Research Award, submission ID no. 2152762478373490, and internal funding from the Ohio State University School of Communication. Author contributions: R.K.G. designed the study. All authors contributed to the data collection, analysis, and preparation of the paper. Competing interests: The authors declare that they have no competing interests. Data and materials availability: All data needed to evaluate the conclusions in the paper are present in the paper and/or the Supplementary Materials. Data needed to replicate the analysis presented in the paper are available in the Harvard Dataverse at https://doi.org/10.7910/DVN/CGV6NP.

SUPPLEMENTARY MATERIALS

Supplementary material for this article is available at http://advances.sciencemag.org/cgi/content/full/7/23/eabf1234/DC1

REFERENCES AND NOTES

- 1. World Public Opinion, Percentage of Americans Believing Iraq had WMD Rises (2006); https://drum.lib.umd.edu/handle/1903/10562.

- 2. Brulle R. J., Carmichael J., Jenkins J. C., Shifting public opinion on climate change: An empirical assessment of factors influencing concern over climate change in the U.S., 2002–2010. Clim. Change 114, 169–188 (2012).

- 3. Pasek J., Stark T. H., Krosnick J. A., Tompson T., What motivates a conspiracy theory? Birther beliefs, partisanship, liberal-conservative ideology, and anti-Black attitudes. Elect. Stud. 40, 482–489 (2015).

- 4. A. J. Berinsky, The Birthers Are Back (2012); http://today.yougov.com/news/2012/02/03/birthers-are-back/.

- 5. Miller J. M., Saunders K. L., Farhart C. E., Conspiracy endorsement as motivated reasoning: The moderating roles of political knowledge and trust. Am. J. Polit. Sci. 60, 824–844 (2016).

- 6. G. Pennycook, D. G. Rand, Research note: Examining false beliefs about voter fraud in the wake of the 2020 Presidential Election, Harvard Kennedy School (HKS) Misinformation Review (2021).

- 7. Baron J., Jost J. T., False equivalence: Are liberals and conservatives in the United States equally biased? Perspect. Psychol. Sci. 14, 292–303 (2019).

- 8. Kuklinski J. H., Quirk P. J., Jerit J., Schwieder D., Rich R. F., Misinformation and the currency of democratic citizenship. J. Polit. 62, 790–816 (2000).

- 9. World Economic Forum, Global Risks: Digital Wildfires in a Hyperconnected World (World Economic Forum, 2013).

- 10. Persily N., Can democracy survive the Internet? J. Democr. 28, 63–76 (2017).

- 11. M. X. Delli Carpini, S. Keeter, What Americans Know About Politics and Why it Matters (Yale Univ. Press, 1996).

- 12. Ditto P. H., Clark C. J., Liu B. S., Wojcik S. P., Chen E. E., Grady R. H., Celniker J. B., Zinger J. F., Partisan bias and its discontents. Perspect. Psychol. Sci. 14, 304–316 (2019).

- 13. Y. Benkler, R. Faris, H. Roberts, Network Propaganda: Manipulation, Disinformation, and Radicalization in American Politics (Oxford Univ. Press, 2018).

- 14. Pennycook G., Rand D. G., Lazy, not biased: Susceptibility to partisan fake news is better explained by lack of reasoning than by motivated reasoning. Cognition 188, 39–50 (2019).

- 15. Kuklinski J. H., Quirk P. J., Schwieder D., Rich R. F., “Just the facts, ma’am”: Political facts and political opinion. Ann. Am. Acad. Pol. Soc. Sci. 560, 143–154 (1998).

- 16. Jerit J., Barabas J., Partisan perceptual bias and the information environment. J. Polit. 74, 672–684 (2012).

- 17. N. A. Macmillan, C. D. Creelman, Detection Theory: A User’s Guide (Lawrence Erlbaum Associates, 2005).

- 18. Lewandowsky S., Oberauer K., Gignac G. E., NASA faked the moon landing—therefore, (climate) science is a hoax: An anatomy of the motivated rejection of science. Psychol. Sci. 24, 622–633 (2013).

- 19. Jost J. T., Ideological asymmetries and the essence of political psychology. Polit. Psychol. 38, 167–208 (2017).

- 20. Garrett R. K., Weeks B. E., Epistemic beliefs’ role in promoting misperceptions and conspiracist ideation. PLOS ONE 12, e0184733 (2017).

- 21. Yan H. Y., Yang K.-C., Menczer F., Shanahan J., Asymmetrical perceptions of partisan political bots. New Media Soc. 1461444820942744 (2020).

- 22. Pfattheicher S., Schindler S., Misperceiving bullshit as profound is associated with favorable views of Cruz, Rubio, Trump and conservatism. PLOS ONE 11, e0153419 (2016).

- 23. Fessler D. M. T., Pisor A. C., Holbrook C., Political orientation predicts credulity regarding putative hazards. Psychol. Sci. 28, 651–660 (2017).

- 24. Ditto P. H., Liu B. S., Clark C. J., Wojcik S. P., Chen E. E., Grady R. H., Celniker J. B., Zinger J. F., At least bias is bipartisan: A meta-analytic comparison of partisan bias in liberals and conservatives. Perspect. Psychol. Sci. 14, 273–291 (2019).

- 25. Allcott H., Gentzkow M., Social media and fake news in the 2016 election. J. Econ. Perspect. 31, 211–236 (2017).

- 26. Grinberg N., Joseph K., Friedland L., Swire-Thompson B., Lazer D., Fake news on Twitter during the 2016 U.S. presidential election. Science 363, 374–378 (2019).

- 27. Guess A. M., Nyhan B., Reifler J., Exposure to untrustworthy websites in the 2016 US election. Nat. Hum. Behav. 4, 472–480 (2020).

- 28. Guess A., Nagler J., Tucker J., Less than you think: Prevalence and predictors of fake news dissemination on Facebook. Sci. Adv. 5, eaau4586 (2019).

- 29. D. Nikolov, A. Flammini, F. Menczer, Right and left, partisanship predicts (asymmetric) vulnerability to misinformation, Harvard Kennedy School Misinformation Review (2021).

- 30. Shao C., Ciampaglia G. L., Varol O., Yang K.-C., Flammini A., Menczer F., The spread of low-credibility content by social bots. Nat. Commun. 9, 4787 (2018).

- 31. Hassell H. J. G., Holbein J. B., Miles M. R., There is no liberal media bias in which news stories political journalists choose to cover. Sci. Adv. 6, eaay9344 (2020).

- 32. Budak C., Goel S., Rao J. M., Fair and balanced? Quantifying media bias through crowdsourced content analysis. Public Opin. Q. 80, 250–271 (2016).

- 33. Groseclose T., Milyo J., A measure of media bias. Q. J. Econ. 120, 1191–1237 (2005).

- 34. Hibbing J. R., Smith K. B., Alford J. R., Differences in negativity bias underlie variations in political ideology. Behav. Brain Sci. 37, 297–307 (2014).

- 35. Lord C. G., Ross L., Lepper M. R., Biased assimilation and attitude polarization: The effects of prior theories on subsequently considered evidence. J. Pers. Soc. Psychol. 37, 2098–2109 (1979).

- 36. P. D. Allison, Fixed effects regression models, Quantitative Applications in the Social Sciences, T. F. Liao, Ed. (Sage Publications, 2009), vol. 160, pp. 123.

- 37. M. T. Boykoff, J. T. Roberts, Media coverage of climate change: Current trends, strengths, weaknesses, Human Development Report (United Nations Development Program, 2007).

- 38. Lazer D. M. J., Baum M. A., Benkler Y., Berinsky A. J., Greenhill K. M., Menczer F., Metzger M. J., Nyhan B., Pennycook G., Rothschild D., Schudson M., Sloman S. A., Sunstein C. R., Thorson E. A., Watts D. J., Zittrain J. L., The science of fake news. Science 359, 1094–1096 (2018).

- 39. C. Wardle, H. Derakhshan, Information disorder: Toward an interdisciplinary framework for research and policy making, Council of Europe Report (Council of Europe, 2017).

- 40. Kim Y. M., Hsu J., Neiman D., Kou C., Bankston L., Kim S. Y., Heinrich R., Baragwanath R., Raskutti G., The stealth media? Groups and targets behind divisive issue campaigns on Facebook. Polit. Commun. 35, 515–541 (2018).

- 41. G. Kessler, S. Rizzo, M. Kelly, President Trump has made more than 20,000 false or misleading claims, Washington Post (2020); www.washingtonpost.com/politics/2020/07/13/president-trump-has-made-more-than-20000-false-or-misleading-claims/.

- 42. NewsWhip, Data dictionary (2020); https://developer.newswhip.com/docs/data-dictionary.

- 43. Prior M., Sood G., Khanna K., You cannot be serious: The impact of accuracy incentives on partisan bias in reports of economic perceptions. Q. J. Political Sci. 10, 489–518 (2015).

- 44. Peterson E., Iyengar S., Partisan gaps in political information and information-seeking behavior: Motivated reasoning or cheerleading? Am. J. Polit. Sci. 65, 133–147 (2021).

- 45. Stanislaw H., Todorov N., Calculation of signal detection theory measures. Behav. Res. Methods Instrum. Comput. 31, 137–149 (1999).

- 46. Huff C., Tingley D., “Who are these people?” Evaluating the demographic characteristics and political preferences of MTurk survey respondents. Research & Politics 2, 2053168015604648 (2015).

- 47. A. Sorokin, D. Forsyth, in 2008 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (2008), pp. 1–8.
