Abstract

Developmental psychology plays a central role in shaping evidence-based best practices for prelingually deaf children. The Auditory Scaffolding Hypothesis (Conway et al., 2009) asserts that a lack of auditory stimulation in deaf children leads to impoverished implicit sequence learning abilities, measured via an artificial grammar learning (AGL) task. However, prior research is confounded by a lack of both auditory and language input. The current study examines implicit learning in deaf children who were (Deaf native signers) or were not (oral cochlear implant users) exposed to language from birth, and in hearing children, using both AGL and Serial Reaction Time (SRT) tasks. Neither deaf nor hearing children across the three groups show evidence of implicit learning on the AGL task, but all three groups show robust implicit learning on the SRT task. These findings argue against the Auditory Scaffolding Hypothesis, and suggest that implicit sequence learning may be resilient to both auditory and language deprivation, within the tested limits.

Graphical Abstract

Hearing children, Deaf native signers, and deaf CI users all show significant implicit learning on the Serial Reaction Time Task. This pattern suggests that implicit learning abilities are resilient to a period of up to 12 years without auditory access and up to 3 years without language access.

1 ∣. INTRODUCTION

Maximizing deaf children’s developmental potential is a goal that engages researchers and clinicians from a variety of disciplines. In particular, the role of developmental psychology is becoming increasingly apparent. A growing literature reports deficits in cognitive development in deaf children, even in domains that – superficially – have little to do with hearing, such as problem solving (Luckner & McNeill, 1994), concept formation (Castellanos et al., 2015), and the many skills that comprise executive function (Beer et al., 2014; Figueras, Edwards, & Langdon, 2008; Kronenberger, Pisoni, Henning, & Colson, 2013; Kronenberger, Beer, Castellanos, Pisoni, & Miyamoto, 2014; Remine, Care, & Brown, 2008). This paper focuses primarily on the domain of implicit learning, especially of sequences, in deaf children.

Implicit learning happens incidentally, without intention, and in a manner that is opaque to explanation (Reber, 1989). It plays a crucial and pervasive role in human development and everyday life. Typically developing infants show implicit sensitivity to many different statistical regularities in their input, and mounting evidence suggests that they may use these skills to bootstrap language acquisition, although definitive proof remains elusive (e.g., Romberg & Saffran, 2010). Implicit learning underlies motor skill acquisition, such as learning to ride a bike, type, or play an instrument (Abrahamse, 2012), and allows us to make short-range predictions about events in our environment (Janacsek & Nemeth, 2012).

1.1 ∣. The auditory scaffolding hypothesis

Unfortunately, some evidence suggests that deaf children may be at risk for deficits in implicit learning, at least for sequences. Conway, Pisoni, Anaya, Karpicke, and Henning (2011) presented deaf and hearing children with an implicit sequence learning task in a non-linguistic visual domain. The task, which they call ‘artificial grammar learning’ (AGL), was presented to participants as a serial recall task, but each stimulus was covertly generated by an underlying grammar. In the learning phase, all sequences came from the same grammar, whereas in a seamlessly integrated test phase, novel sequences came from either the trained grammar or an untrained grammar. Although the groups did not differ in explicit memory for learning-phase sequences, the hearing but not the deaf group performed better on test-phase sequences from the trained than untrained grammar, suggesting that only they had implicitly acquired knowledge about the trained grammar. The lack of a difference between trained and untrained grammars for the deaf group was taken as evidence of deficient implicit sequence learning abilities. This, in turn, contributed to the development of the auditory scaffolding hypothesis (Conway, Pisoni, & Kronenberger, 2009), which states:

… losing the sense of audition early in development may set up a cascade of complex effects that alter a child’s entire suite of perceptual and cognitive abilities, not just those directly related to hearing and the processing of acoustic signals. According to the auditory scaffolding hypothesis, deafness may especially affect cognitive abilities related to learning, recalling, and producing sequential information. (p. 276)

This view is embedded within a larger emerging discipline known as cognitive hearing science (Arlinger, Lunner, Lyxell, & Pichora-Fuller, 2009). Regarding deaf children with cochlear implants, these authors write,

Cognitive development is further related to factors such as age at implant and early preimplant auditory experience (Geers et al., 2008; Pisoni & Cleary, 2003; Pisoni, Kronenberger, Conway, Horn, Karpicke & Henning, 2008), where early implantation and early auditory experience are more beneficial for cognitive development. (p. 377)

If this account is correct, then developmental theories will need to identify the mechanism(s) by which auditory experience (or deprivation) influences these higher-order skills, and clinicians will need to inform parents of deaf children about the importance of providing early auditory access via hearing technologies such as cochlear implants (CIs). At present, however, such conclusions may be premature; there are both theoretical and empirical reasons to be cautious before interpreting the results of Conway et al. (2011) as evidence that auditory deprivation compromises implicit sequence learning.

1.2 ∣. Theoretical concerns about the auditory scaffolding hypothesis

From a theoretical standpoint, one concern is the a priori plausibility of the auditory scaffolding account. Implicit learning has been conspicuous largely for its invariance across diverse populations, consistent with Reber’s original characterization of implicit learning as an evolutionary precursor to explicit learning and hence deeply conserved across individuals (Reber, 1989, 1993; Reber, Walkenfeld, & Hernstadt, 1991). Implicit learning abilities are found to be robust to differences in IQ (Maybery, Taylor, & O’Brien-Malone, 1995; Unsworth & Engle, 2005), amnesia (Seger, 1994; Thomas & Nelson, 2001), Korsakoff syndrome (Nissen, Willingham, & Hartman, 1989), closed head injury (McDowall & Martin, 1996), and Alzheimer’s disease (Knopman & Nissen, 1987). The only factor that is known to reliably disrupt implicit learning is biological damage to the basal ganglia and other subcortical structures, as in Parkinson’s and Huntington’s disease (Ferraro, Balota, & Connor, 1993; Knopman & Nissen, 1991). But even in these patients, decrements to implicit learning may not be detectable until the disease has progressed to a fairly advanced stage (Smith, Siegert, McDowall, & Abernethy, 2001). If implicit learning is robust to all of these factors, including early stages of structural damage to the neural circuits directly involved, it seems relatively implausible that it would be profoundly disrupted by the indirect effects of hearing loss.

On the other hand, it could be argued that most of the above conditions are acquired rather than congenital, and that deviations from typical development could lead to greater perturbations of the system (e.g., Kral, Kronenberger, Pisoni, & O’Donoghue, 2016). Here, the literature is more divided. Some results suggest that implicit learning skills are developmentally invariant; that is, that they are detectable in early infancy (Fiser & Aslin, 2002; Saffran, 2003; Saffran, Aslin, & Newport, 1996), and that they do not differ or differ only slightly between children and adults (e.g., Cherry & Stadler, 1995; Howard & Howard, 1989; Meulemans, Van der Linden, & Perruchet, 1998; Seger, 1994; Thomas & Nelson, 2001). Other results find evidence that implicit learning skills do change over childhood (Janacsek, Fiser, & Nemeth, 2012; Thomas et al., 2004), particularly for higher-order patterns (Howard & Howard, 1997).

For present purposes, we are not directly concerned with comparing adults and children; the question of developmental invariance is relevant primarily because the auditory scaffolding hypothesis is more viable under a developmental variance account, in which age and/or experience are believed to influence implicit learning. It is therefore useful to survey the studies that have supported a variance account, to see what factors are known to be related to variation in implicit learning. The key factor that emerges from this literature is language (Kaufman et al., 2010; Kidd, 2012; Misyak, Christiansen, & Tomblin, 2010a, 2010b; Tomblin, Mainela-Arnold, & Zhang, 2007). While these studies find that variation in implicit learning is related to variation in language, they are all correlational in nature, making it impossible to determine whether (1) variation in implicit learning ability impacts language ability, (2) variation in language ability impacts implicit learning ability, (3) there is reciprocal influence between the two domains, or (4) both domains are impacted by a shared third factor.

The present study is not designed to adjudicate among these four possibilities. Rather, the critical observation is that there is already evidence of a possible link between implicit learning and language ability, but no prior evidence linking implicit learning to auditory perception. Therefore, the auditory scaffolding account must be evaluated by testing implicit learning in participants who have atypical auditory experience without having atypical language experience. To date, all of the evidence cited in support of the auditory scaffolding hypothesis has come from participants who experienced a period without exposure to both acoustic and linguistic input, and whose language skills are likely to be delayed relative to peers with typical hearing (Conway et al., 2011). Given the known links between implicit learning and language ability, it is plausible that a lack of exposure to the patterns of natural language could disrupt the development of pattern detection skills more broadly. That is, the development of implicit learning skills may depend less on the temporal and linear structure of sound and more on the temporal, hierarchical, and inherently social structure of language. We refer to this as the Language Scaffolding Hypothesis. Extant findings on implicit sequence learning cannot distinguish between these competing theoretical accounts, which have very different clinical implications.

1.3 ∣. Empirical concerns about the auditory scaffolding hypothesis

Our second caution about interpreting the results of Conway et al. (2011) as evidence that auditory deprivation perturbs the development of implicit learning is empirical in nature. We question the replicability of the group difference in Conway et al. (2011), for which the statistical evidence was relatively weak. In their study, the crucial question was whether the implicit learning effect was larger among the hearing participants than among the deaf participants. Because the implicit learning effect is itself a difference score (performance on ‘grammatical’ versus ‘ungrammatical’ trials), this question involves a difference of differences and is therefore best addressed by testing for a group by trial type interaction. Instead, the authors report that the effect of trial type was significant in the hearing group but not in the deaf group (Conway et al., 2011, p. 74). This is not a particularly compelling argument, because this pattern can obtain even when the interaction is not significant. The authors then present a t-test of difference scores (p. 75), which is conceptually closer to the interaction, but with an improper error term. Even here, the critical effect is reported as t(47) = −2.01, p < .05, when the two-tailed p-value associated with t(47) = −2.01 is 0.0502. (We note that the authors use two-tailed statistics throughout.) This minor discrepancy is likely due to truncating significant figures, and we do not fault the authors for so doing. Our point is simply that the statistical evidence for a group difference in the strength of the implicit learning effect in this paradigm is weak, both in terms of its statistical significance and its overall size. Indeed, the authors acknowledge in a footnote that the effect is small:

It could be objected that an increase of 5.8% for grammatical vs. ungrammatical sequences is a trivial gain. However, the magnitude of learning in much of the artificial grammar learning literature is often within the 5–10% range. Especially considering the age range of the participants and the extremely short period of exposure to the grammatical patterns, a 5.8% learning gain score is not insubstantial. (p. 75)

In evaluating this response, it is important to bear in mind that raw percentages are not a reliable means of indicating effect size, especially since there is substantial variability across studies in both the unit of measure (e.g., accuracy, habituation, response time, etc.) and the number of trials involved. In Conway et al.’s (2011) study, the test phase included 24 trials: 12 from the trained grammar and 12 from the untrained grammar. This means that the minimal unit by which a child’s performance could differ between the trained and untrained grammars is 1/12, or 8.3%. The observed group difference of 5.8% therefore equates to an average difference of less than 1 trial.
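The two numerical points made in this subsection can be checked with a short script. The following is a minimal sketch using only the Python standard library; the function names `t_pdf` and `two_tailed_p` are illustrative and not part of the original analysis, and the p-value is obtained by numerically integrating the Student's t density rather than with a statistics package.

```python
import math


def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with `df` degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def two_tailed_p(t: float, df: int, steps: int = 2000) -> float:
    """Two-tailed p-value via Simpson's rule on [0, |t|] (steps must be even)."""
    upper = abs(t)
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_half = total * h / 3  # P(0 < T < |t|)
    return 1.0 - 2.0 * central_half


# Reported test statistic: t(47) = -2.01; the result should land just above .05.
p_value = two_tailed_p(-2.01, 47)

# Granularity of the learning score: 12 test trials per grammar.
trials_per_grammar = 12
smallest_step = 1 / trials_per_grammar      # one trial, as a proportion
gain_in_trials = 0.058 * trials_per_grammar  # the 5.8% gain, in trials

print(f"two-tailed p = {p_value:.4f}")
print(f"one trial = {smallest_step:.1%}; 5.8% gain = {gain_in_trials:.2f} trials")
```

Expressing the 5.8% gain in units of trials makes it directly comparable to the smallest difference that any single child could show.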

Setting aside questions of effect size, there remain several other concerns about using this paradigm to measure implicit sequence learning. One concern is that this paradigm is only able to detect evidence for implicit learning when explicit memory fails: children who are able to recall all sequences correctly will earn a ‘learning score’ of 0%, implying a lack of implicit learning ability when instead this simply reflects strong explicit memory. In addition, the two grammars were not counterbalanced between subjects: that is, rather than assigning ‘Grammar A’ to the trained condition for half of the participants and to the untrained condition for the other half, the sequences in the trained condition were always from Grammar A, and the untrained sequences were always from Grammar B. Although both groups saw the same two grammars, they did not necessarily use the same kinds of encoding strategies; for example, hearing children may have relied on more speech-based rehearsal whereas deaf children may have relied on a more distributed set of memory representations (e.g., Hall & Bavelier, 2010). Thus it remains possible that the difference between the two grammars was different for the two groups. Replication would assuage some of these concerns, but to our knowledge, the Conway et al. AGL task has not been independently replicated (though a study by this same group (Conway, Karpicke, & Pisoni, 2007) did find evidence of implicit learning in hearing adults). We are not aware of any replications of this task with children.

There are, however, other ways of measuring implicit sequence learning in the psychological literature. Of these, the most salient is the Serial Reaction Time (SRT) task (Nissen & Bullemer, 1987), which can also be used with children (Meulemans et al., 1998). It has been found to be robustly sensitive to individual and group differences in language processing (Kidd, 2012; Tomblin et al., 2007) as well as higher-order cognitive skills (Kaufman et al., 2010).

1.4 ∣. The present study

Given these considerations, the present study takes a replicate-and-extend approach. Experiment 1A replicates and extends Conway et al.’s (2011) AGL task by testing three groups of child participants: hearing controls, deaf cochlear implant users who lacked access to language for a period of up to 36 months (similar to Conway et al.), and deaf children who have had access to language input from birth by virtue of having been born to Deaf parents who used American Sign Language (ASL). Experiment 1B further extends this work by including a more widely used measure of implicit sequence learning: the Serial Reaction Time (SRT) task (Nissen & Bullemer, 1987).

2 ∣. EXPERIMENT 1A

2.1 ∣. Method

2.1.1 ∣. Participants

A total of 77 children participated in the study: 30 Deaf native signers, 12 deaf CI users, and 35 hearing English speakers. All were between 7 and 12 years of age. We excluded data from one hearing participant for whom no video backup was available. Following Conway et al. (2011), we also excluded participants (n = 1 Deaf native signer, 1 deaf CI user, and 3 hearing controls) whose overall accuracy (i.e., explicit sequence recall) was more than 2SD below their group mean (the upper cut-off exceeded the maximum possible score), in order to exclude children who failed to understand, pay attention to, or comply with the task. Demographic information for the final sample of 29 Deaf native signers, 11 deaf CI users, and 31 hearing participants is given in Table 1. Because the native signers in the current sample generally make less use of hearing technology than the cochlear implant users studied in previous research, the deficits predicted by the auditory scaffolding hypothesis should be at least as large as, if not larger than, those reported previously. The present sample overlaps in age with that of Conway et al. (2011), who included 23 deaf and 26 hearing participants ages 5–10, implanted between 10 and 39 months.
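The accuracy-based exclusion rule described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `low_accuracy_outliers` and the accuracy values are hypothetical, and the use of the sample standard deviation is an assumption, since the text does not say which estimator was used.

```python
from statistics import mean, stdev


def low_accuracy_outliers(scores, n_sd=2.0):
    """Return indices of participants scoring more than n_sd SDs below the group mean.

    Assumption: sample SD (n - 1 denominator). Only the lower cut-off is
    applied, because the upper one exceeded the maximum possible score.
    """
    cutoff = mean(scores) - n_sd * stdev(scores)
    return [i for i, score in enumerate(scores) if score < cutoff]


# Hypothetical overall accuracies (proportion correct) for one group.
hypothetical_group = [0.90, 0.95, 0.925, 0.90, 0.95, 0.925, 0.90, 0.95, 0.925, 0.40]
excluded = low_accuracy_outliers(hypothetical_group)
print(excluded)
```

Note that the cut-off is computed separately within each group, so the same raw accuracy could be retained in one group and excluded in another.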

TABLE 1.

Demographic characteristics of participants in Experiment 1A

| | Deaf native signers (n = 29) | Deaf CI users (n = 11) | Hearing controls (n = 31) | F/χ2 | p |
|---|---|---|---|---|---|
| Age | | | | .94 | .39 |
| Mean yr;mo | 9;06 | 9;09 | 9;03 | | |
| (SD) | (1;09) | (1;11) | (1;04) | | |
| Range | 7;01–12;10 | 7;04–12;10 | 7;0–12;11 | | |
| Sex (f: m) | 19: 10 | 4: 7 | 16: 15 | 3.01 | .22 |
| Hearing status | Severe or profound congenital deafness | Severe or profound congenital deafness | No known hearing impairment | n/a | n/a |
| Language experience | Exposure to sign language at home from birth and at school; variable speech emphasis at home and school. | Little accessible language input prior to cochlear implant; listening and spoken language emphasis at home and school. | Exposure to spoken language from birth. | n/a | n/a |
| Age of CIᵃ: | (4 of 29) | (11 of 11) | n/a | n/a | n/a |
| Mean yr;mo | 1;10 | 1;04 | | | |
| (SD) | (0;04) | (0;09) | | | |
| Range | 1;07–2;03 | 0;08–2;10 | | | |
| Primary caregiver education levelᵇ | I: 1 | I: 0 | I: 0 | – | .96 |
| | II: 2 | II: 1 | II: 1 | | |
| | III: 6 | III: 2 | III: 6 | | |
| | IV: 8 | IV: 3 | IV: 9 | | |
| | V: 14 | V: 5 | V: 14 | | |
| | VI: 0 | VI: 0 | VI: 1 | | |

ᵃ Only 4/29 (14%) of the Deaf native signers received a cochlear implant; they are included with the other Deaf native signers rather than with the CI users because they were exposed to ASL from birth.

ᵇ Education level: I = less than high school; II = high school or GED; III = some college or associate’s degree; IV = bachelor’s degree; V = some graduate school or advanced degree; VI = not reported.

The Deaf native signers in our sample had severe or profound congenital deafness. The majority (n = 15) did not regularly use any hearing technology; 10 used hearing aids at least ‘sometimes’, and four had received cochlear implants (although only three of these participants routinely used their implants). They were recruited from schools and organizations for the deaf in Connecticut, Texas, Maryland, Massachusetts, and Washington, DC. Deaf native signers were bilingual in ASL and (written) English; two also knew more than one sign language.

The deaf CI users in our sample also had severe or profound congenital deafness, and received at least one cochlear implant before turning 3. The average age of implantation was 16 months. All were raised in families who had chosen an oral/aural approach, focusing on listening and spoken language, and therefore did not use ASL or other forms of manual communication with their children. Participants were recruited by contacting a broad range of sources, including every school for the deaf in New England, programs and organizations specifically for deaf children who do not use sign language, audiology clinics in CT, MA, and RI, a summer camp for deaf children in Colorado, early intervention programs for deaf children, social media groups for parents with deaf children, community events/organizations/conferences/festivals for cochlear implant users in CT, RI, MA, and NY, and parent-to-parent recruitment. We also contacted every public school in the state of Connecticut where state records indicated that there was at least one student for whom hearing loss was the primary reason for an IEP. Participants were tested in Connecticut, Massachusetts, Rhode Island, New York, and Colorado. (Colorado was the site of the summer camp; the children tested there hailed from several states.)

The hearing participants in our sample were recruited from local schools, advertisements in and around the University of Connecticut community, and local contacts in Connecticut and California. Multilingual children were included, and constituted 13% of the sample (4/31).

2.1.2 ∣. Materials

We recreated the experiment described in Conway et al. (2011) using PHP and JavaScript, and deployed it via web browser (running off a local server) on a Microsoft touchscreen laptop running Windows 8. Participants saw a 2 × 2 grid of colored squares near the center of the screen, with a large ‘Continue’ button underneath. Touching the ‘Continue’ button triggered a stimulus sequence, which ranged in length from two to five elements. At trial onset, all colors initially disappeared, followed by one square appearing for 700 ms, an inter-stimulus interval of 500 ms, and then the next element in the sequence (700 ms). At the end of the sequence, all four squares reappeared together with the ‘Continue’ button; this served as the participants’ cue to recall the sequence by touching the squares in the same order in which they had flashed during the sequence.

2.1.3 ∣. Procedure

The design was identical to that described in Conway et al. (2011). The experiment had a training phase and a test phase, but participants were unaware of this distinction. During training, all stimulus sequences were generated by a grammar; these abstract sequences were identical for all participants (see Conway et al., 2011 for specific sequences and transitional probabilities). The mapping from a given grammatical element to a specific color and spatial position was randomized for each new participant, but then remained consistent for that participant. During the test phase, participants viewed novel sequences from either the familiar grammar or a new grammar, in alternating blocks of four trials. Again, participants were unaware of any underlying structure, and were instructed only to repeat the sequence in the correct order. There were 16 learning trials and 24 test trials (12 trained, 12 untrained). All study procedures were approved by the University of Connecticut Institutional Review Board and those of participating schools.

2.1.4 ∣. Scoring

Each trial was scored as correct or incorrect. Although the program was designed to collect and score responses automatically, pilot testing indicated that children often pressed repeatedly (as if to confirm that their response had been registered, since the display provided no feedback) or made self-corrections. It was clear that relying solely on the automated scoring procedure would yield inaccurate results. We therefore filmed all participants, and based scoring on the video footage, counting confirmatory touches as a single touch and accepting self-corrections. In a small number of cases where the child’s response was not visible (e.g., their body obstructed the camera’s view of the screen), we relied on the automated record. A small number of trials were missing responses (25/2840, 0.9%), usually because a child pressed the continue button before entering the response for the preceding trial. These were not scored as either correct or incorrect, and were not included in the final analyses.

We computed a ‘learning score’ (LRN), as in Conway et al. (2011), which measures the difference in accuracy for trained versus untrained grammars. It is calculated from the test trials as: LRN = [accuracy for trained grammar] – [accuracy for untrained grammar]. Higher scores are taken as evidence of implicit learning of the (trained) grammar.

This measure is vulnerable to slight bias if there are unequal numbers of observations for trained versus untrained grammars. For example, a child who makes one mistake in each condition would ordinarily score 11/12 vs. 11/12, yielding LRN = 0%. However, if there was also a missing trial, the scores would be 11/12 vs. 10/11, yielding LRN = ±0.76%. We dealt with missing trials in the testing phase by removing a response from the corresponding serial position in the other condition, regardless of whether it was correct or incorrect. (For example, if we were missing a response to the 3rd untrained sequence at length = 4, we removed the response to the 3rd trained sequence at length = 4.) This ensures an equal number of observations in each condition for each participant.
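The LRN computation and the handling of missing trials can be sketched as follows. This is a minimal illustration under stated assumptions rather than the scoring code used in the study: responses are represented as `True` (correct), `False` (incorrect), or `None` (missing), the two lists are assumed to be aligned by serial position, and the function names and data are hypothetical.

```python
def accuracy(responses):
    """Proportion correct among scored (non-missing) responses."""
    scored = [r for r in responses if r is not None]
    return sum(scored) / len(scored)


def lrn_naive(trained, untrained):
    """LRN = trained accuracy - untrained accuracy, ignoring missing trials."""
    return accuracy(trained) - accuracy(untrained)


def lrn_balanced(trained, untrained):
    """LRN after dropping any serial position that is missing in either condition."""
    pairs = [(t, u) for t, u in zip(trained, untrained)
             if t is not None and u is not None]
    kept_trained = [t for t, _ in pairs]
    kept_untrained = [u for _, u in pairs]
    return accuracy(kept_trained) - accuracy(kept_untrained)


# Hypothetical child: one error per condition, plus one missing untrained trial.
trained = [False] + [True] * 11                # 11/12 correct
untrained = [False] + [True] * 10 + [None]     # 10/11 correct, 1 missing

naive = lrn_naive(trained, untrained)          # 11/12 - 10/11, slightly above 0
balanced = lrn_balanced(trained, untrained)    # 10/11 - 10/11

print(f"naive LRN = {naive:.4%}, balanced LRN = {balanced:.4%}")
```

In this example the paired trained response that gets removed happens to be correct, so the balanced score returns to zero. Because the paired response is removed regardless of its correctness, the procedure equalizes the number of observations but does not guarantee any particular score.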

2.2 ∣. Results

We examined the data distributions to choose appropriate analyses. Learning phase data were distributed non-normally (skewed toward ceiling). We therefore compare means with the Wilcoxon rank sum test, which is approximated by the chi-square distribution when the grouping factor has more than two levels. LRN scores during the test phase were normally distributed, and were analyzed with one-way ANOVA.

2.2.1 ∣. Explicit accuracy (learning phase)

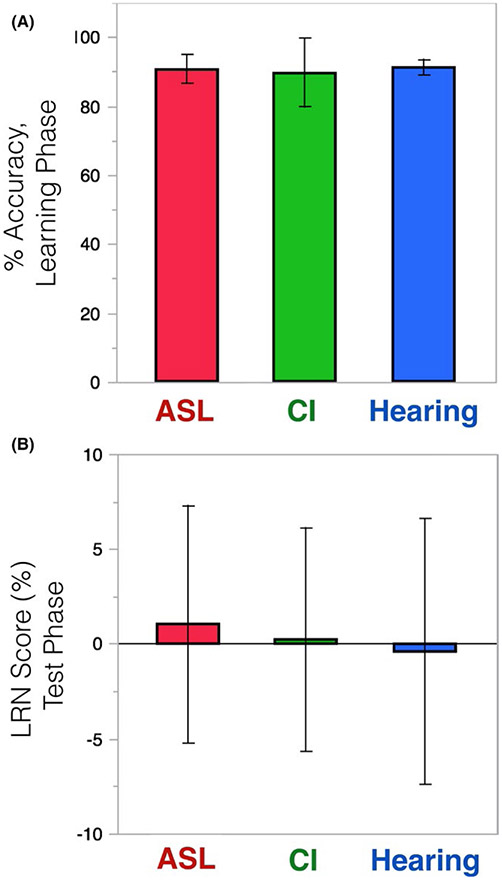

As shown in Figure 1A, the groups did not differ in accuracy during the learning phase: 90.7%, 89.7%, and 91.3% for the Deaf native signers, deaf CI users, and hearing controls, respectively (χ2 = .45, p = .80).

FIGURE 1.

No group differences on either (A) Accuracy or (B) LRN score (which compares performance on the trained minus untrained grammar). Error bars represent 95% confidence intervals

2.2.2 ∣. Implicit learning (test phase)

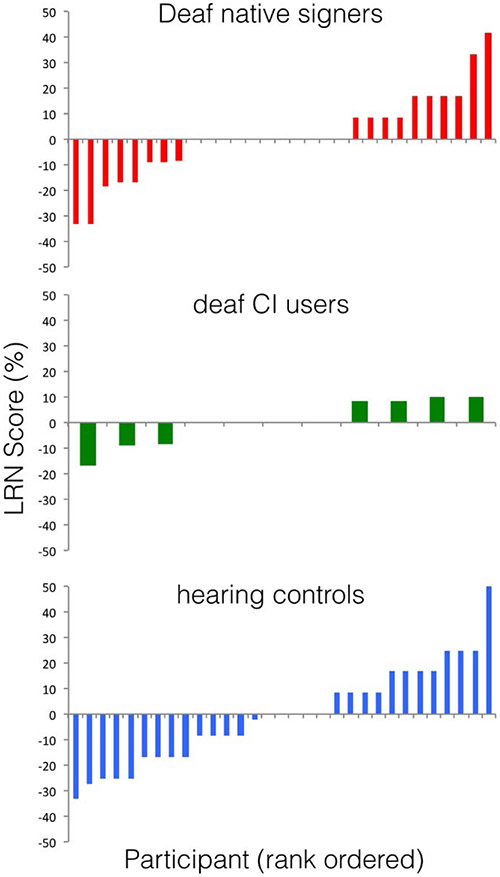

The groups did not differ in LRN score: 0.01%, 0.002%, and −0.42% for the Deaf native signers, deaf CI users, and hearing controls, respectively [F(2, 68) = 0.57, p = .95]. As indicated by the confidence intervals in Figure 1B, no group scored significantly above zero. The distribution of individual child responses (Figure 2) provides clear evidence of a lack of implicit learning on this task.

FIGURE 2.

Individual differences in LRN score

2.2.3 ∣. Cross-study comparison

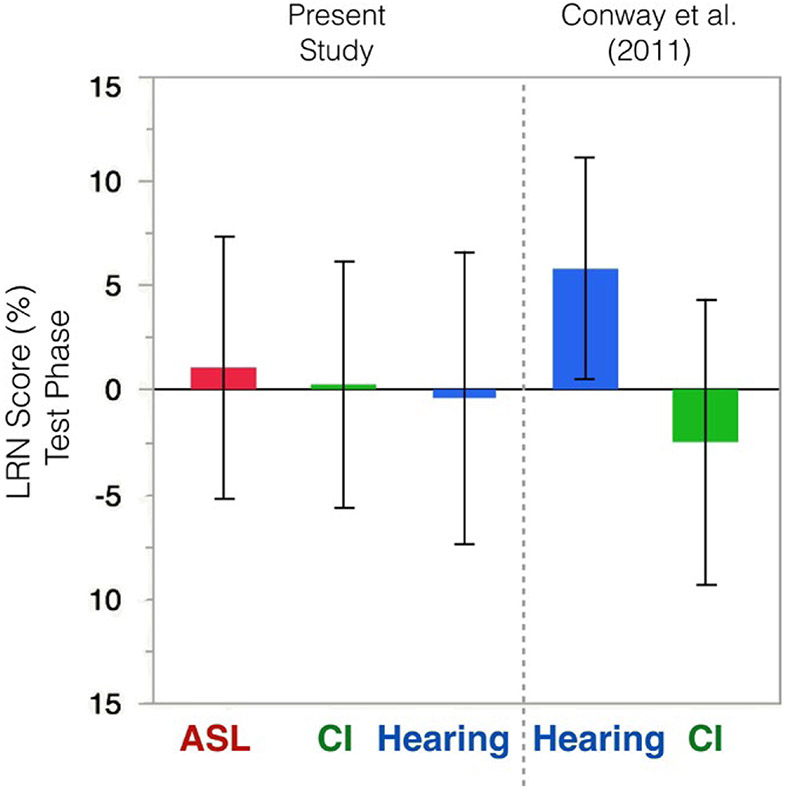

Because Conway et al. (2011) reported individual differences, it is possible to reconstruct confidence intervals around their means. These are displayed next to data from the current study in Figure 3. The hearing participants from Conway et al. (2011) are the only ones whose confidence interval does not include 0; however, there is substantial overlap with all other child participants, regardless of whether they are deaf or hearing, native signers or CI users.

FIGURE 3.

LRN scores in the present study compared to Conway et al. (2011), reconstructed from published data. Error bars represent 95% confidence intervals

2.3 ∣. Interim discussion

Our replication of Conway et al. (2011) found that of the five groups of children tested between the two studies, four behaved similarly in showing no evidence of implicit learning. The one group in which implicit learning was reported showed a difference of 5.8%, with the lower bound of the 95% confidence interval just barely excluding 0. Given the more general concerns about this paradigm as reviewed in the introduction, we suggest that this task may not be a reliable indicator of implicit learning in either deaf or hearing children.

However, it is possible that some aspect of our implementation of the Conway et al. paradigm is responsible for our failure to find evidence of implicit sequence learning in Experiment 1A, or that even the hearing children in the current study had implicit learning deficits. Therefore, Experiment 1B tested the same children with a more standard measure of implicit sequence learning: the serial reaction time (SRT) task (Nissen & Bullemer, 1987).

3 ∣. EXPERIMENT 1B

3.1 ∣. Method

3.1.1 ∣. Participants

The same 77 children took part in Experiment 1B, during the same testing session. Task order was determined randomly for each participant. Some children whose data were excluded from analysis in Experiment 1A yielded usable data here, and vice versa. Data from a given child were excluded from analysis if no usable responses were produced on at least half of the trials, due to children choosing to abort the task (n = 11; 5 of 30 Deaf native signers, 2 of 12 deaf CI users, 4 of 35 hearing controls), or to computer error (n = 1 Deaf native signer). The final sample contained data from 24 Deaf native signers, 10 deaf CI users, and 31 hearing children, whose demographics are given in Table 2.

TABLE 2.

Demographic characteristics of participants in Experiment 1B

| | Deaf native signers (n = 24) | Deaf CI users (n = 10) | Hearing controls (n = 31) | F/χ2 | p |
|---|---|---|---|---|---|
| Age | | | | 1.61 | .21 |
| Mean yr;mo | 9;10 | 10;01 | 9;03 | | |
| (SD) | (1;08) | (1;10) | (1;04) | | |
| Range | 7;01–12;10 | 7;07–12;10 | 7;0–12;11 | | |
| Sex (f: m) | 16: 8 | 5: 5 | 17: 14 | 1.13 | .57 |
| Hearing status | Severe or profound congenital deafness | Severe or profound congenital deafness | No known hearing impairment | n/a | n/a |
| Language experience | Exposure to sign language at home from birth and at school; variable speech emphasis at home and school. | Little accessible language input prior to cochlear implant; listening and spoken language emphasis at home and school. | Exposure to spoken language from birth. | n/a | n/a |
| Age of CIᵃ: | (3 of 24) | (10 of 10) | n/a | n/a | n/a |
| Mean yr;mo | 1;10 | 1;10 | | | |
| (SD) | (0;02) | (0;07) | | | |
| Range | 1;07–1;10 | 0;08–2;10 | | | |
| Primary caregiver education levelᵇ | I: 1 | I: 0 | I: 0 | – | .97 |
| | II: 1 | II: 1 | II: 1 | | |
| | III: 5 | III: 2 | III: 6 | | |
| | IV: 6 | IV: 2 | IV: 9 | | |
| | V: 11 | V: 5 | V: 14 | | |
| | VI: 0 | VI: 0 | VI: 1 | | |

ᵃ Only 3/24 (12%) of the Deaf native signers received a cochlear implant; they are included with the other Deaf native signers rather than with the CI users because they were exposed to ASL from birth.

ᵇ Education level: I = less than high school; II = high school or GED; III = some college or associate’s degree; IV = bachelor’s degree; V = some graduate school or advanced degree; VI = not reported.

3.1.2 ∣. Materials

The task used a Finding Nemo (a Pixar movie) theme, to increase engagement and motivation. Participants saw a still image of Nemo (a fish), presented in one of four locations on a laptop screen. The three empty locations appeared as white squares on a solid-colored background. The laptop keyboard was labeled with Arabic numerals 1, 2, 3, and 4, using stickers, on the z, x, >, and ? keys, respectively. The participants’ task was to press the button corresponding to Nemo’s current location as quickly as possible, using the index and middle fingers of each hand. The stimulus remained on the screen until the participant pressed the correct button, at which point it moved to a new location after a 250 ms inter-stimulus interval. The experiment was presented using E-Prime on the same laptop as Experiment 1A.

3.1.3 ∣. Design

As with most SRT tasks, the trials were covertly divided into predictable (‘sequence’) trials and unpredictable (‘random’) trials. Throughout the task, a fixed sequence of 10 positions repeated 12 times, but before and after each repetition of the sequence there were six instances where the stimulus position was unpredictable. The transition from the last random to the first sequence trial was also unpredictable, by definition. Similarly, the transition from the first sequence position to the second sequence position occurred equally often in the sequenced and random trials. In contrast, the transitions into positions three through 10 of the sequence were twice as frequent during sequence trials as during random trials. Accordingly, the first two positions of the sequence were analyzed as part of the random condition, and only the latter eight positions were analyzed as part of the sequence condition. This yields an equal number of observations in both the sequence and random conditions.

The experiment was divided into five blocks of 198 individual responses each, with an opportunity to take a break between blocks, which lasted roughly 2–4 minutes each (depending on the speed of the child’s responses). At the completion of each block, the participant encountered a screen with a different character from the Finding Nemo movie, encouraging them to keep looking. At the end of the fifth and final block, they were informed that they had successfully found Nemo.
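The block structure described above can be sketched in code. This is a minimal illustration under stated assumptions, not the authors’ E-Prime script: it assumes that each block consists of 12 repetitions of the 10-position sequence with six random trials before the first repetition, between repetitions, and after the last one (13 × 6 + 12 × 10 = 198, which matches the reported block length). The function name `make_block`, the particular `example_sequence`, and the use of uniform random draws for the unpredictable trials are all hypothetical, since the text does not specify them.

```python
import random

N_POSITIONS = 4   # four on-screen locations
SEQ_LEN = 10      # length of the fixed sequence (from the text)
N_REPS = 12       # sequence repetitions (from the text)
N_RANDOM = 6      # unpredictable trials before and after each repetition (from the text)


def make_block(sequence, rng):
    """Return one block as a list of (position, analysis_condition) pairs."""
    assert len(sequence) == SEQ_LEN
    trials = []
    for rep in range(N_REPS + 1):
        # Six unpredictable trials precede every repetition and follow the last one.
        # Assumption: positions are drawn uniformly; the real constraints are not stated.
        for _ in range(N_RANDOM):
            trials.append((rng.randrange(N_POSITIONS), "random"))
        if rep < N_REPS:
            for i, pos in enumerate(sequence):
                # The first two sequence positions are analyzed as random trials;
                # positions 3 through 10 are analyzed as sequence trials.
                condition = "random" if i < 2 else "sequence"
                trials.append((pos, condition))
    return trials


rng = random.Random(0)
example_sequence = [0, 2, 1, 3, 0, 1, 2, 0, 3, 1]  # hypothetical; the actual sequence is not reported
block = make_block(example_sequence, rng)
print(len(block))
```

The condition label attached to each trial is the one used for analysis, not the one used to generate the stimulus, which is why the first two positions of each repetition carry the "random" label.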

3.1.4 ∣. Procedure

An experimenter explained the task (in ASL or English, as appropriate), framing it as a speed-based game with five levels (blocks), with the stated goal being for the participants to beat their own best time on each level. The nature of the task means that this is usually possible, which provides helpful intrinsic motivation for what could otherwise be a rather boring task. In addition, the experimenter closely monitored the participants for evidence of ‘button mashing’ (i.e., pressing all buttons simultaneously and indiscriminately), which was a strategy discovered by some children to get through the task more quickly. All study procedures were approved by the University of Connecticut Institutional Review Board and those of participating schools.

3.1.5 ∣. Analysis

Outlier responses longer than 2000 ms (2.5%) were removed. To minimize the contribution of ‘button mashing’ trials, we also removed all responses shorter than 150 ms (1.3%). The resulting response times had a typical skewed distribution; therefore, our analyses use mean log(RT) as the dependent measure. For ease of interpreting the y-axis, Figure 4 shows median(RT). All main effects remain if median(RT) is used as the dependent measure, but some interactions do not survive; these are noted below.
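The trimming and transformation steps above can be illustrated with a short sketch. It assumes a natural-log transform (the text does not say which base was used) and operates on a made-up list of response times; the names `trim`, `summarize`, and `example_rts` are hypothetical.

```python
import math
from statistics import mean, median

SLOW_CUTOFF_MS = 2000  # responses longer than this are removed (from the text)
FAST_CUTOFF_MS = 150   # responses shorter than this are removed (from the text)


def trim(rts_ms):
    """Keep only response times within the two cutoffs."""
    return [rt for rt in rts_ms if FAST_CUTOFF_MS <= rt <= SLOW_CUTOFF_MS]


def summarize(rts_ms):
    """Return mean log(RT) (the dependent measure) and median RT (used for plotting)."""
    kept = trim(rts_ms)
    return {
        "n_kept": len(kept),
        # Assumption: natural logarithm; the base is not stated in the text.
        "mean_log_rt": mean(math.log(rt) for rt in kept),
        "median_rt": median(kept),
    }


example_rts = [90, 420, 510, 380, 2600, 455]  # made-up values in ms
summary = summarize(example_rts)
print(summary)
```

In practice the summary would be computed separately for each participant, block, and trial type before entering the ANOVA.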

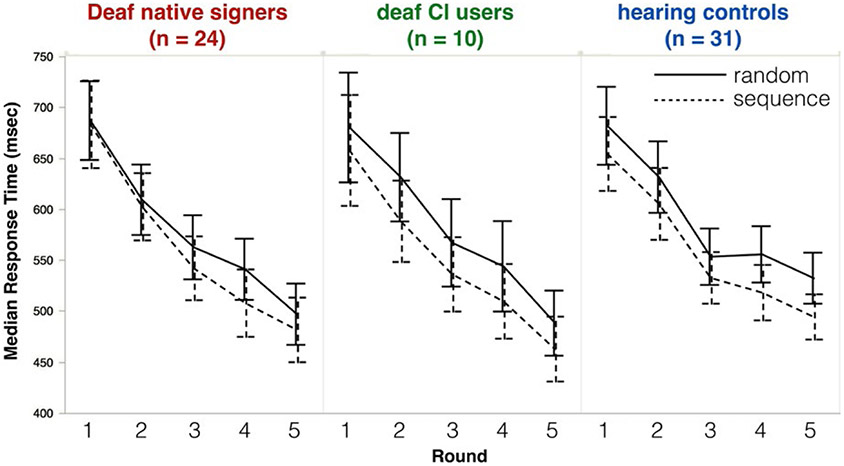

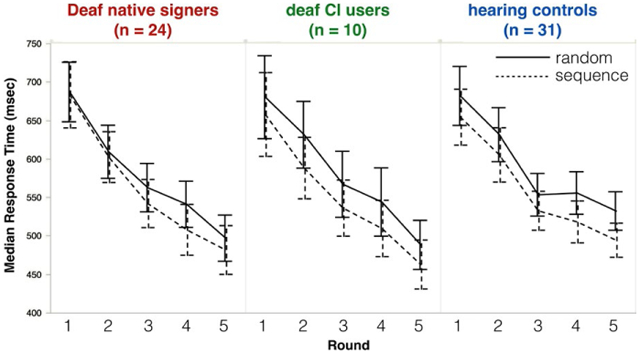

FIGURE 4.

Deaf native signers (left), deaf CI users (middle), and hearing controls (right) all respond more quickly to stimuli from sequence trials (dotted line) than random trials (solid line). Error bars represent 95% confidence intervals.

3.2 ∣. RESULTS

Figure 4 shows participants’ median response time (in ms) by Group (hearing, Deaf native signers, oral CI users), Block (1–5) and Trial Type (Random, Sequence). Response times were non-normally distributed (as is typical); to correct for this, we apply a log transformation prior to analysis. We analyze mean log(RT) here, followed by a parallel analysis of median(RT), untransformed.

A Group × Block × Trial Type ANOVA with mean log(RT) as the dependent measure found a significant main effect of Block [F(4, 62) = 113.45, p < .001], indicating that responses generally became faster as the experiment continued. Crucially, a main effect of Trial Type [F(1, 62) = 43.71, p < .001] indicated that responses were significantly faster for sequence trials than for random trials. This effect is the signature of implicit learning.

There was no main effect of Group [F(2, 62) = .14, p = .87]. Group interacted only with Block [F(8, 62) = 2.36, p = .02]: all three groups showed similar RTs in the earlier blocks, but in the later blocks mean log(RT) continued to decrease less among hearing participants than among either group of deaf participants. (This interaction is not significant when analyzing median RT rather than log(RT): F(4, 62) = .98, p = .45.)

There was a Block × Trial Type interaction [F(4, 62) = 3.01, p = .02], which seems to be driven by an unexplained slowing in response times to random trials in Block 4, for all groups. (This interaction is not significant when analyzing median RT rather than log(RT): F(4, 62) = 1.02, p = .40.)

There was no three-way interaction between Group, Block, and Trial Type: F(8, 62) = .24, p = .98.

3.2.1 ∣. Analysis of median RT

The analyses reported above correct for the skewed distribution of raw response times by applying a log transformation. Another approach is to analyze untransformed median(RT) instead. When so doing, the main effects of Trial Type [F(1, 62) = 33.77, p < .001] and Block [F(4, 62) = 102.39, p < .001] remain, but no other effects reach significance (all F < 1.5, all p > .2).

3.2.2 ∣. Group-level analyses

The omnibus analyses above found that Group did not interact with Trial Type; however, that alone does not demonstrate that participants in all three groups showed robust evidence of implicit sequence learning. In particular, it would be reassuring to document that the effects of Block and especially Trial Type are significant when each group is analyzed on its own. This is in fact the case, as reported in Table 3.

TABLE 3.

Results of separate Block × Trial Type analyses for each group of participants in Experiment 1B

| Group | Effect (df) | log(RT): F | log(RT): p | Median RT: F | Median RT: p |
|---|---|---|---|---|---|
| Deaf native signers (n = 24) | Block | 48.99 | < .001 | 49.70 | < .001 |
| | Trial Type | 19.77 | < .001 | 6.51 | < .02 |
| | Block × Trial Type | 2.48 | = .049 | 1.52 | = .20 |
| Deaf CI users (n = 10) | Block | 49.12 | < .001 | 29.43 | < .001 |
| | Trial Type | 17.27 | < .01 | 13.07 | < .01 |
| | Block × Trial Type | .56 | = .69 | .32 | = .86 |
| Hearing controls (n = 31) | Block | 55.13 | < .001 | 49.44 | < .001 |
| | Trial Type | 20.73 | < .001 | 24.14 | < .001 |
| | Block × Trial Type | 1.06 | = .38 | 1.03 | = .39 |

3.2.3 ∣. Correlation analyses

If the auditory scaffolding hypothesis is correct, then the strength of implicit sequence learning should be inversely proportional to the duration of a child’s deafness. To test this prediction, we computed the difference (in log(RT)) between each child’s responses to random trials and sequence trials, and analyzed the magnitude of that difference in relation to the duration of the child’s deafness (in months), using age of cochlear implantation as a proxy for the offset of deafness. Despite duration of deafness ranging from 0 to 154 months (with 34 data points where duration of deafness > 0), there was no significant relationship between a child’s duration of deafness and the size of their implicit learning effect (r² = .001, p = .73).

If the language scaffolding hypothesis is correct, then we should expect an inverse relationship between the size of the implicit learning effect (in log(RT)) and the number of months that the child lacked access to language, using age of cochlear implantation as a proxy for onset of language access among non-signers. We found no evidence to support this prediction (r² = .001, p = .78), although we note that in this analysis there are only 10 data points where duration of lack of access to language is greater than 0, ranging from 8 to 34 months.
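The two correlation analyses share the same logic: compute a per-child learning effect, then relate it to a duration in months. The sketch below illustrates that logic under stated assumptions. It uses a natural-log transform and a plain Pearson correlation, computes r² only (no p-value), and runs on made-up numbers; the function names and example values are hypothetical and are not the study’s data.

```python
import math
from statistics import mean


def learning_effect(random_rts_ms, sequence_rts_ms):
    """Mean log(RT) on random trials minus mean log(RT) on sequence trials.

    Positive values mean faster responses on sequence trials.
    """
    random_mean = mean(math.log(rt) for rt in random_rts_ms)
    sequence_mean = mean(math.log(rt) for rt in sequence_rts_ms)
    return random_mean - sequence_mean


def r_squared(xs, ys):
    """Squared Pearson correlation between two equal-length lists.

    Assumes both lists have non-zero variance.
    """
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return (sxy * sxy) / (sxx * syy)


# Made-up example: one learning effect and one duration (in months) per child.
effects = [
    learning_effect([520, 540, 500], [470, 480, 460]),
    learning_effect([610, 590, 600], [560, 570, 550]),
    learning_effect([480, 470, 490], [450, 440, 455]),
    learning_effect([700, 690, 710], [640, 650, 630]),
]
months_without_input = [0, 14, 30, 96]
print(r_squared(months_without_input, effects))
```

The same two functions would serve for either hypothesis; only the duration variable changes (months of deafness versus months without language access).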

3.2.4 ∣. Between-experiment results

To measure the extent to which the tasks in Experiments 1A and 1B measured the same underlying ability, we computed each individual’s mean difference (in log(RT)) between random trials and sequence trials across all blocks. We then tested for a correlation between this score and the LRN score from the same individual in Experiment 1A (where available), but did not find a significant correlation (r² = .02, p > .25).

3.3 ∣. DISCUSSION

The serial reaction time task in Experiment 1B provided robust evidence of implicit sequence learning, which did not differ between groups. There was no correlation between individual differences in implicit learning abilities as assessed by AGL and SRT tasks.

To date, we are aware of only one other study that has administered the serial reaction time task to people with hearing loss (Lévesque, Théoret, & Champoux, 2014). That study tested adults with either congenital or adult-onset hearing loss. Like the present study, Lévesque et al. found evidence of robust implicit sequence learning in both groups. However, they used a different experimental design, in which participants encountered several blocks of sequence trials in a row, followed by a random block (whereas the present study interleaved sequence and random trials within each block). Thus, another way to measure the strength of implicit sequence learning is to measure the amount of slowing that participants display upon encountering this random block at the very end of the study (the inference being that greater slowing reflects more surprise at the deviation from the sequence, thus indicating more robust learning). Lévesque et al. observed that although participants both with and without hearing loss did slow down upon encountering this final random block, the amount of slowing was proportionally smaller in the deaf than in the hearing, and did not differ as a function of congenital or acquired hearing loss. They interpret their findings as revealing attenuated implicit sequence learning abilities among individuals with hearing loss, and present their findings as corroboration of the auditory scaffolding hypothesis. Given the substantial differences in both participant population and experimental design, we are unable to resolve this discrepancy here, and restrict our conclusions to implicit learning abilities during childhood.

4 ∣. GENERAL DISCUSSION

We investigated implicit sequence learning in deaf and hearing children. Our goal was to provide a more rigorous test of the auditory scaffolding hypothesis, which proposes that auditory deprivation leads to deficits in implicit sequence learning. Unlike Conway et al. (2011), we found no evidence of implicit learning in either deaf or hearing children when tested with the AGL task. This failure to find evidence of learning could be due to methodological differences, such as the fact that we verified children’s responses with video footage, rather than relying on automated coding. The absence of implicit learning could also reflect a task confound; a child with excellent explicit memory skills could have good performance on both the trained and untrained grammars, leading to an LRN score of zero despite learning of the grammar. Furthermore, while Conway et al.’s evidence for a group difference relied largely on the observation that the hearing group reached significance while the deaf group did not, our replication provided no compelling evidence for reliable group differences.

We then used a more standard measure of implicit sequence learning: the serial reaction time task, for which the auditory scaffolding hypothesis predicts weak implicit learning in deaf children, due to impoverished auditory experience. The present results do not support this prediction, with all three groups demonstrating robust implicit learning and no evidence to suggest weaker implicit learning among the deaf participants. We also found no correlation between duration of auditory deprivation and the size of the implicit learning effect. The present study therefore calls into question the notion that a lack of auditory experience compromises deaf children’s implicit sequence learning abilities. The limited evidence to support that notion comes mainly from Conway et al.’s (2011) Artificial Grammar Learning task, which we have argued to be an unreliable measure of implicit learning.

The present data are also at odds with the language scaffolding hypothesis, which predicted weaker implicit sequence learning abilities among children who experienced a period of development without access to language. Instead, Experiment 1B demonstrated that children who lacked language access for an average of 16 months still demonstrated sensitivity to the implicit regularity in the serial reaction time task, and we found no correlation between age of implantation (a proxy for onset of language access among deaf non-signers) and the size of the implicit learning effect. However, all children in the present study gained access to language by no later than age 3; it remains possible that children who endure a longer period without language access might demonstrate impaired implicit sequence learning. Conway et al. (2011) considered the possibility that language skills could affect implicit sequence learning, although they expressed doubt as to whether sign language skills would support healthy implicit sequence learning:

Arguably, ASL also contains a rich source of temporal and sequential information and therefore its use may alleviate some of the sequence learning disturbances seen in the present sample of children. On the other hand, signed languages, compared to spoken languages, have relatively limited sequential contrasts and instead rely heavily on nonlinear and simultaneous spatial expressions to convey information (Wilson & Emmorey, 1997). As such, it could be expected that deaf users of sign language would also show difficulties with sequential processing. (pp. 77–78)

The present findings establish that healthy implicit sequence learning (as measured by the serial reaction time task) can and does arise under early exposure to a natural sign language, even in the absence of auditory input.

These findings are squarely in line with a longstanding theoretical view of implicit learning as a highly resilient aspect of human cognition (Meulemans et al., 1998; Nissen & Bullemer, 1987; Reber, 1989, 1993; Reber et al., 1991; Seger, 1994; Smith et al., 2001; Thomas & Nelson, 2001; Unsworth & Engle, 2005). Strong evidence would be necessary to reject this view in favor of alternatives in which implicit learning abilities are disrupted by alterations in early experience, whether sensory (as in auditory scaffolding) or cognitive (as in language scaffolding). The small size of the effect reported in Conway et al. (2011) and inherent characteristics of their version of the AGL task do not provide such evidence. On the contrary, Experiment 1B provides clear evidence that deaf children do show robust implicit sequence learning in a serial reaction time task, which is a well-replicated and reliable indicator of implicit learning (Salthouse, McGuthry, & Hambrick, 1999). This significant positive result argues strongly against the auditory scaffolding hypothesis. The language scaffolding hypothesis is neither supported nor ruled out by the present findings. The previous studies that have noted covariation between implicit learning and language processing typically did so by analyzing individual differences in language proficiency, which we did not attempt in this study. Meanwhile, the data are fully consistent with the more established view that implicit sequence learning is a resilient and relatively invariant aspect of human cognition.

4.1 ∣. CONCLUSIONS

The present findings suggest that, contra both the auditory scaffolding hypothesis and the language scaffolding hypothesis, implicit sequence learning abilities do not atrophy in the absence of either auditory or linguistic input, at least for a time: we observed healthy implicit learning among children who had gone without language access for up to 3 years (average: 16 months), and among children who had gone without auditory experience for up to 12 years (average: 9 years). It remains possible that more subtle effects could be detected with larger samples, using more sensitive measures, or following longer periods of auditory and/or linguistic deprivation. It is also possible that implicit sequence learning abilities are a function of language proficiency, rather than language exposure. Under this account, the robust implicit learning effects observed here might not generalize to children who are not developing proficient language skills in either sign language or spoken language. We encourage future researchers to address this possibility more directly.

For now, we take these findings as potential good news for parents of deaf children, regardless of how they have chosen to communicate. Implicit learning skills are a powerful way that deaf and hearing children extract meaningful regularities from input in their environment, including the patterns of human language (both signed and spoken). Finding that these skills are largely intact means that deaf children are not missing critical tools that can be deployed in the service of language acquisition on the basis of ‘noisy’ input, whether that noise is metaphorical (as in the case of children acquiring ASL from less-than-fluent parents) or literal (as in the case of children acquiring spoken language through a cochlear implant). Much work remains to more fully characterize just how noisy this input can be before it overwhelms the child’s learning mechanisms, and whether that limit is different for signed versus spoken input.

RESEARCH HIGHLIGHTS.

Previous research has confounded auditory deprivation with language deprivation.

We distinguish these hypotheses by studying deaf children with and without exposure to sign language from birth.

A replication of Conway et al. (2011) finds no evidence of implicit learning in either deaf or hearing children.

A serial reaction time task finds robust implicit learning in both deaf and hearing children, with or without exposure to language from birth.

REFERENCES

- Abrahamse EL (2012). Editorial to the special issue implicit serial learning. Advances in Cognitive Psychology / University of Finance and Management in Warsaw, 8, 70–72.

- Arlinger S, Lunner T, Lyxell B, & Pichora-Fuller MK (2009). The emergence of cognitive hearing science. Scandinavian Journal of Psychology, 50, 371–384.

- Beer J, Kronenberger WG, Castellanos I, Colson BG, Henning SC, & Pisoni DB (2014). Executive functioning skills in preschool-age children with cochlear implants. Journal of Speech, Language, and Hearing Research, 57, 1521–1534.

- Castellanos I, Kronenberger WG, Beer J, Colson BG, Henning SC, Ditmars A, & Pisoni DB (2015). Concept formation skills in long-term cochlear implant users. Journal of Deaf Studies and Deaf Education, 20, 27–40.

- Cherry KE, & Stadler ME (1995). Implicit learning of a nonverbal sequence in younger and older adults. Psychology and Aging, 10, 379–394.

- Conway CM, Karpicke J, & Pisoni DB (2007). Contribution of implicit sequence learning to spoken language processing: Some preliminary findings with hearing adults. Journal of Deaf Studies and Deaf Education, 12, 317–334.

- Conway CM, Pisoni DB, Anaya EM, Karpicke J, & Henning SC (2011). Implicit sequence learning in deaf children with cochlear implants. Developmental Science, 14, 69–82.

- Conway CM, Pisoni DB, & Kronenberger WG (2009). The importance of sound for cognitive sequencing abilities: The auditory scaffolding hypothesis. Current Directions in Psychological Science, 18, 275–279.

- Ferraro FR, Balota DA, & Connor LT (1993). Implicit memory and the formation of new associations in nondemented Parkinson’s disease individuals and individuals with senile dementia of the Alzheimer type: A serial reaction time (SRT) investigation. Brain and Cognition, 21, 163–180.

- Figueras B, Edwards L, & Langdon D (2008). Executive function and language in deaf children. Journal of Deaf Studies and Deaf Education, 13, 362–377.

- Fiser J, & Aslin RN (2002). Statistical learning of new visual feature combinations by infants. Proceedings of the National Academy of Sciences, USA, 99, 15822–15826.

- Hall ML, & Bavelier D (2010). Working memory, deafness, and sign language. In Marschark M & Spencer P (Eds.), Oxford handbook of deaf studies, language, and education, Volume 2 (pp. 458–472). New York: Oxford University Press.

- Howard DV, & Howard JH (1989). Age differences in learning serial patterns: Direct versus indirect measures. Psychology and Aging, 4, 357–364.

- Howard JH Jr., & Howard DV (1997). Age differences in implicit learning of higher order dependencies in serial patterns. Psychology and Aging, 12, 634–656.

- Janacsek K, Fiser J, & Nemeth D (2012). The best time to acquire new skills: Age-related differences in implicit sequence learning across the human lifespan. Developmental Science, 15, 496–505.

- Janacsek K, & Nemeth D (2012). Predicting the future: From implicit learning to consolidation. International Journal of Psychophysiology, 83, 213–221.

- Kaufman SB, DeYoung CG, Gray JR, Jiménez L, Brown J, & Mackintosh N (2010). Implicit learning as an ability. Cognition, 116, 321–340.

- Kidd E (2012). Implicit statistical learning is directly associated with the acquisition of syntax. Developmental Psychology, 48, 171–184.

- Knopman DS, & Nissen MJ (1987). Implicit learning in patients with probable Alzheimer’s disease. Neurology, 37, 784–788.

- Knopman D, & Nissen MJ (1991). Procedural learning is impaired in Huntington’s disease: Evidence from the serial reaction time task. Neuropsychologia, 29, 245–254.

- Kral A, Kronenberger WG, Pisoni DB, & O’Donoghue GM (2016). Neurocognitive factors in sensory restoration of early deafness: A connectome model. The Lancet Neurology, 15, 610–621.

- Kronenberger WG, Beer J, Castellanos I, Pisoni DB, & Miyamoto RT (2014). Neurocognitive risk in children with cochlear implants. JAMA Otolaryngology-Head & Neck Surgery, 140, 608–615.

- Kronenberger WG, Pisoni DB, Henning SC, & Colson BG (2013). Executive functioning skills in long-term users of cochlear implants: A case control study. Journal of Pediatric Psychology, 38, 902–914.

- Lévesque J, Théoret H, & Champoux F (2014). Reduced procedural motor learning in deaf individuals. Frontiers in Human Neuroscience, 8, 343.

- Luckner JL, & McNeill JH (1994). Performance of a group of deaf and hard-of-hearing students and a comparison group of hearing students on a series of problem-solving tasks. American Annals of the Deaf, 139, 371–377.

- Maybery M, Taylor M, & O’Brien-Malone A (1995). Implicit learning: Sensitive to age but not IQ. Australian Journal of Psychology, 47, 8–17.

- McDowall J, & Martin S (1996). Implicit learning in closed head injured subjects: Evidence from an event sequence learning task. New Zealand Journal of Psychology, 25, 2–6.

- Meulemans T, Van der Linden M, & Perruchet P (1998). Implicit sequence learning in children. Journal of Experimental Child Psychology, 69, 199–221.

- Misyak JB, Christiansen MH, & Tomblin JB (2010a). Sequential expectations: The role of prediction-based learning in language. Topics in Cognitive Science, 2, 138–153.

- Misyak JB, Christiansen MH, & Tomblin JB (2010b). On-line individual differences in statistical learning predict language processing. Frontiers in Psychology, 1, 31.

- Nissen MJ, & Bullemer P (1987). Attentional requirements of learning: Evidence from performance measures. Cognitive Psychology, 19, 1–32.

- Nissen MJ, Willingham D, & Hartman M (1989). Explicit and implicit remembering: When is learning preserved in amnesia? Neuropsychologia, 27, 341–352.

- Reber AS (1989). Implicit learning and tacit knowledge. Journal of Experimental Psychology: General, 118, 219–235.

- Reber AS (1993). Implicit learning and tacit knowledge: An essay on the cognitive unconscious. New York: Oxford University Press.

- Reber AS, Walkenfeld FF, & Hernstadt R (1991). Implicit and explicit learning: Individual differences and IQ. Journal of Experimental Psychology: Learning, Memory, and Cognition, 17, 888–896.

- Remine MD, Care E, & Brown PM (2008). Language ability and verbal and nonverbal executive functioning in deaf students communicating in spoken English. Journal of Deaf Studies and Deaf Education, 13, 531–545.

- Romberg AR, & Saffran JR (2010). Statistical learning and language acquisition. Wiley Interdisciplinary Reviews: Cognitive Science, 1, 906–914.

- Saffran JR (2003). Statistical language learning mechanisms and constraints. Current Directions in Psychological Science, 12, 110–114.

- Saffran JR, Aslin RN, & Newport EL (1996). Statistical learning by 8-month-old infants. Science, 274, 1926–1928.

- Salthouse TA, McGuthry KE, & Hambrick DZ (1999). A framework for analyzing and interpreting differential aging patterns: Application to three measures of implicit learning. Aging, Neuropsychology, and Cognition, 6, 1–18.

- Seger CA (1994). Implicit learning. Psychological Bulletin, 115, 163–196.

- Smith J, Siegert RJ, McDowall J, & Abernethy D (2001). Preserved implicit learning on both the serial reaction time task and artificial grammar in patients with Parkinson’s disease. Brain and Cognition, 45, 378–391.

- Thomas KM, Hunt RH, Vizueta N, Sommer T, Durston S, Yang Y, & Worden MS (2004). Evidence of developmental differences in implicit sequence learning: An fMRI study of children and adults. Journal of Cognitive Neuroscience, 16, 1339–1351.

- Thomas KM, & Nelson CA (2001). Serial reaction time learning in preschool- and school-age children. Journal of Experimental Child Psychology, 79, 364–387.

- Tomblin JB, Mainela-Arnold E, & Zhang X (2007). Procedural learning in adolescents with and without specific language impairment. Language Learning and Development, 3, 269–293.

- Unsworth N, & Engle RW (2005). Individual differences in working memory capacity and learning: Evidence from the serial reaction time task. Memory & Cognition, 33, 213–220.