Abstract

This short survey reviews the recent literature on the relationship between the brain structure and its functional dynamics. Imaging techniques such as diffusion tensor imaging (DTI) make it possible to reconstruct axonal fiber tracks and describe the structural connectivity (SC) between brain regions. By measuring fluctuations in neuronal activity, functional magnetic resonance imaging (fMRI) provides insights into the dynamics within this structural network. One key for a better understanding of brain mechanisms is to investigate how these fast dynamics emerge on a relatively stable structural backbone. So far, computational simulations and methods from graph theory have been mainly used for modeling this relationship. Machine learning techniques have already been established in neuroimaging for identifying functionally independent brain networks and classifying pathological brain states. This survey focuses on methods from machine learning, which contribute to our understanding of functional interactions between brain regions and their relation to the underlying anatomical substrate.

1. Motivation

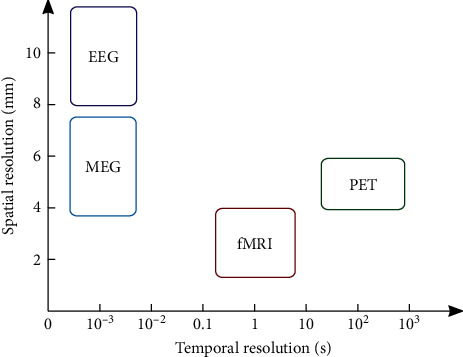

Similar to molecular biology, neuroscience faces the problem of bridging the gap between experimental techniques that study the anatomical substrate of neural information processing and techniques used to determine functional interactions between specified brain regions. In molecular biology, it is known that structure and function are only loosely linked: on the one hand, different 3D protein structures may have similar functions, while, on the other hand, similar 3D structures may exhibit rather different functions in the metabolism [1]. Still, as even single amino acid replacements may change the function of a protein completely, structure and function must be related to a certain extent. Similarly, in neuroscience, evidence has been collected over the last two decades suggesting that the anatomical structure of the neuronal network imposes important constraints on the functional organization of neuronal activities and concomitant information processing [2–4]. Hence, structure and function must be somehow interrelated. Moreover, because through-space interactions (such as, for example, electromagnetic fields or magnetic dipole-dipole interactions in physics) are not (yet) of relevance in neuroscience, information processing is confined to the anatomical substrate and thus depends on its structural connectome. Large-scale activity distribution is mediated via propagating action potentials; therefore, the spatial organization of neuron assemblies and their dendritic and axonal connections forms the underlying physical substrate for information processing. Experimental evidence for neural network topologies mainly comes from noninvasive neuroimaging techniques and neuroanatomical methods, while their functional variants consider the related inherent dynamics. Formerly, only the static aspect of this organization was studied, while recent evidence has demonstrated the importance of also considering the highly dynamic nature of functional activity patterns.
Functional neuroimaging techniques such as functional magnetic resonance imaging (fMRI), electroencephalography (EEG), magnetoencephalography (MEG), or positron emission tomography (PET) operate on several distinct spatiotemporal scales, and an overview of the respective spatial and temporal resolutions is provided in Table 1. The relation of the scales which can be covered by these different neuroimaging techniques is further illustrated in Figure 1. Moreover, understanding processing of information relies on the applied physical modeling and simulation, statistical analysis, signal processing, and, more recently, also machine learning techniques. This noncomprehensive survey explores the recent literature on these issues and advocates for the idea of pursuing both modeling and data-driven analysis in combination.

Table 1.

Temporal and spatial scales of several neuroimaging techniques.

| Method | Temporal resolution | Spatial resolution |
|---|---|---|
| MEG | 1 ms | 5 mm |
| EEG | 1 ms | 10–15 mm |
| fMRI | 1 s | 1–3 mm |
| PET | 45 s | 4 mm |

Figure 1.

The temporal and spatial resolutions on which different functional neuroimaging techniques can operate. While fMRI allows neural processes to be studied at higher spatial resolution, EEG and MEG can better resolve neural activity dynamics in the temporal domain.

A better understanding of the relationship between brain structure and function can provide insights into the integrated nature of the brain. Firstly, such an approach can contribute to our understanding of how information is segregated and then integrated across different brain regions, and it could explain how complex neural activity patterns emerge, even in a resting brain [5, 6]. Moreover, brain connectivity can be revealed by various statistical measures and different imaging modalities, each of which follows its own concepts and operates on different spatial and temporal scales. Understanding the interplay between structure and function can help to interpret what can actually be seen in data obtained by different brain imaging modalities. Such an analysis can tell us how to relate these different modalities to each other [3, 4] and, further, how to integrate them in a meaningful manner [7, 8]. Finally, such integrated models can be of clinical relevance, for example, by explaining how structural lesions affect the brain not only locally but also via functional connections across the whole brain [9, 10].

2. Complex Brain Networks

The brain is organized into spatially distributed but functionally connected regions of dynamically correlated neuronal activity. These dynamically changing network structures can be characterized by three different but related forms of connectivity [11]:

Structural connectivity (SC) via excitatory and inhibitory synaptic contacts gives rise to the so-called connectome [12, 13]. Modern neuroimaging technologies, especially diffusion tensor imaging (DTI), provide the basis for the construction of structural graphs representing the spatial layout of white matter fiber tracks that serve to link cortical and subcortical structures. These graphs are characterized by densely connected nodes forming network hubs and fiber tracks (white matter) which connect spatially distant neuronal pools. The anatomy of this neuronal network exhibits substantial plasticity on long time scales, usually due to its natural development, aging, or disease [14, 15], though it is quite stable for short time scales. Therefore, it is considered as static in most of the experiments [16].

Functional connectivity (FC) expresses temporal correlations between neuronal activity patterns occurring simultaneously in spatially segregated areas of the brain. Such temporal activity patterns can be encoded in functional graphs and quantified through statistical concepts. They fluctuate on multiple time scales ranging from milliseconds to seconds. FC patterns are robustly expressed by resting-state networks (RSNs), where they emerge from spontaneous neuronal activity. They exhibit complex spatiotemporal dynamics, which have been described within the realm of state-space models by frequent transitions between discrete FC configurations. Much effort has been spent to characterize the latter. However, understanding the mechanisms that drive these fluctuations is more elusive and has recently been the subject of intense modeling efforts [17]. Although these fluctuations have long been considered stationary, recently, it became obvious that the consideration of their nonstationary nature is essential for a thorough understanding of information processing in the brain [2]. Furthermore, it remains elusive to what extent such functional graphs map onto structural graphs, i.e., how the network dynamics are constrained by the underlying anatomy.

Effective connectivity (EC) or directed connectivity describes causal interactions between activated brain areas [18]. As correlation does not imply causation, these concepts were established to deal with the directional influences of segregated neuron assemblies. If inferred from time series analysis, Granger causality [19] does not need any information about the structural organization of the neuronal network. Additionally, dynamic causal modeling (DCM) provides a deterministic model of neural dynamics, describing causal mechanisms within brain networks [20]. Recent investigations showed that FC patterns can be modeled successfully if global dynamic brain models are constrained by EC rather than by SC [21].

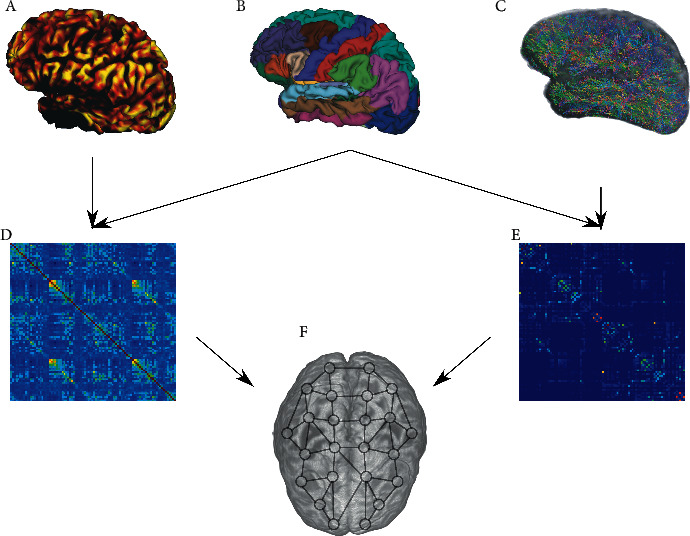

Modern imaging techniques map out structural and functional connectivities with remarkable spatial and temporal resolutions. A summary of how structural and functional connectivity can be conceptually derived from MRI is provided in Figure 2. While static structural connectivity is straightforward, its functional counterpart is more subtle. Current views consider two distant brain regions as functionally connected if their activity fluctuates synchronously and coherently, implying an instantaneous and persistent constant phase relation between their temporal activities. Given a pair of distinct brain areas (regions of interest, neuron pools, or network nodes), their SC is often derived from diffusion tensor imaging (DTI), high angular resolution diffusion imaging (HARDI), or diffusion spectrum imaging (DSI). Structural connection strength is typically quantified experimentally by the number of fibers, fractional anisotropy, etc., and these values are converted into edge weights in graphical models [22]. In contrast, FC is derived from fMRI, EEG, or MEG and quantified by static measures such as instantaneous cross-correlations or partial correlations, while dynamic measures consider coherence and Granger causality [23].

Figure 2.

In fMRI, spatiotemporal activity maps of the human brain can be observed (A). In contrast, DTI can be used to model white matter connections between different spatial brain regions (C). Next, a set of brain regions can be defined to act as nodes in a brain network (B). By quantifying the temporal coherence of activity fluctuations in a group of brain regions (B), the strength of functional connectivity (FC) is derived and can be arranged in an FC matrix (D). Analogously, structural connectivity (SC) can be described by measuring the anatomical connection strength between those regions (B) and can be combined into an SC matrix (E). These two connection profiles can complement each other to give us a more comprehensive picture of brain connectivity (F).
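
As a minimal sketch of how an FC matrix such as the one in panel (D) might be computed, the following Python snippet derives Pearson correlations and partial correlations from regional time series. The synthetic data, the function names, and the use of NumPy are assumptions made for illustration only; they are not taken from the studies cited here.

```python
import numpy as np

def functional_connectivity(ts):
    """Pearson correlation matrix of a (time points x regions) array."""
    return np.corrcoef(ts, rowvar=False)

def partial_correlation(ts):
    """Partial correlations obtained from the inverse covariance matrix."""
    precision = np.linalg.inv(np.cov(ts, rowvar=False))
    scale = np.sqrt(np.diag(precision))
    pcorr = -precision / np.outer(scale, scale)
    np.fill_diagonal(pcorr, 1.0)
    return pcorr

# Synthetic example (assumption): region 1 follows region 0,
# region 2 is independent noise.
rng = np.random.default_rng(0)
n_time = 500
x0 = rng.standard_normal(n_time)
x1 = 0.8 * x0 + 0.2 * rng.standard_normal(n_time)
x2 = rng.standard_normal(n_time)
ts = np.column_stack([x0, x1, x2])

fc = functional_connectivity(ts)
pc = partial_correlation(ts)
print(np.round(fc, 2))
print(np.round(pc, 2))
```

In this toy case, the entry linking regions 0 and 1 is large, while the entries involving region 2 stay close to zero. Real pipelines would add preprocessing steps (for example, filtering and confound regression) that are omitted here.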

The analysis of these types of network connectivity leads to the notion of complex brain networks [24]. Intense efforts have been exerted over the last decade to unravel the mechanisms and principles of neuronal information processing and to bridge the gap between the different types of connectivity analysis. The timely review by Friston [25] detailed biophysical concepts used to model such connectivities. Again, the push came from neuroimaging techniques such as DTI [26], which allow us to track fibers, though only at relatively low spatial resolution. In the postmortem human brain, 3D polarized light imaging (3D-PLI) [27] allows us to trace the three-dimensional course of fibers with a spatial resolution down to the micrometer range.

While these connectivity concepts were being developed, doubts began to be raised concerning the usefulness of the concept of functional or effective connectivity as long as its relation to structural connectivity is not sufficiently understood [28]. The concerns mainly focused on different spatial and temporal scales, with which functional/effective versus structural connectivity was determined using different neuroimaging modalities.

3. Graphical Models of Brain Networks

Graphical models represent physical variables as a set of possibly connected nodes, also called vertices, and related edges, which signal marginal or conditional interactions between the connected nodes. Such models are commonly used to describe complex interrelationships between the set of variables. The central idea is that each variable, for example, the neuronal activity of a localized neuron pool, is represented by a node in a graph. Such nodes may be joined by edges of variable strength. Hence, the topology of complex brain networks can be characterized by static (GM) or dynamic graphical models (DGM), either on a structural or on a functional basis [29–34]. They represent a versatile mathematical framework for a generic study of pairwise relations between interacting brain regions. Small-world networks (SWNs) [35] provide an adequate description of the static topology of brain networks. Such SWNs exhibit the two principles of segregation and integration of information processing in the brain.

Given that the functional organization of the brain changes along various intrinsic time scales, graphical models also need to be dynamic descriptors of these spatiotemporally fluctuating neuronal populations. As DGMs are applicable across various spatiotemporal scales, they most adequately represent the temporal complexity of the activity of interacting neuronal populations. Recent studies of DGMs elaborate on inferring FC from SC and vice versa. They describe metrics suitable for quantifying the SC-FC interrelationship by elucidating the eigenspectrum of the structural Laplacian (given a graph, its Laplacian is the difference between the diagonal matrix of its vertex degrees and its adjacency matrix; see Appendix A). A few common eigenmodes are indeed sufficient to reconstruct FC matrices. Closely related techniques employ independent component analysis (ICA) of FC time courses [36]. Many of these studies also emphasize the decisive role of indirect structural connections, for example, based on spectral mapping methods. Most of these studies are based on linear DGMs because of their computational stability and the ease of inferring reverse correlations. Studies aiming to predict SC from FC are considered as well because DTI data sometimes fail to model certain anatomical connections [37]. Other interesting issues that have been addressed concern the subject specificity of SC-FC relationships and their dependence on the employed imaging modalities.
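
The definition of the graph Laplacian given above can be made concrete with a short sketch. The four-region SC matrix is a hypothetical toy example, and the function name `graph_laplacian` is an assumption for illustration; NumPy's `eigh` is used because the Laplacian of an undirected graph is symmetric.

```python
import numpy as np

def graph_laplacian(adjacency):
    """Laplacian L = D - A, with D the diagonal matrix of (weighted) degrees."""
    degree = np.diag(adjacency.sum(axis=1))
    return degree - adjacency

# Hypothetical symmetric SC matrix: a weighted chain 0 - 1 - 2 - 3.
sc = np.array([
    [0.0, 1.0, 0.0, 0.0],
    [1.0, 0.0, 0.5, 0.0],
    [0.0, 0.5, 0.0, 1.0],
    [0.0, 0.0, 1.0, 0.0],
])

lap = graph_laplacian(sc)
# eigh returns eigenvalues in ascending order and orthonormal eigenvectors
# (the eigenmodes) as columns.
eigenvalues, eigenmodes = np.linalg.eigh(lap)
print(np.round(eigenvalues, 3))
```

For a connected graph, the smallest eigenvalue is zero and belongs to a constant eigenmode; the remaining eigenmodes describe progressively finer spatial patterns on the network.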

DGMs can promote our understanding of emotional and cognitive states, task switching, adaptation and development, or aging and disease progression. Graph theory thus provides a comprehensive description of topological and dynamical properties of complex brain networks. Most studies seem to corroborate that network topology, to a large extent, determines time-averaged functional connectivity in large-scale brain networks. However, not many tools yet exist to describe dynamic graphs, and only very few consider the relationship between static and dynamic graphical models.

Tools for such analysis are made available for researchers, such as GraphVar (https://www.nitrc.org/projects/graphvar/) [38, 39], a GUI-based toolbox for comprehensive graph theoretical analyses of brain connectivity, as well as the construction, validation, and exploration of machine learning models. Dynamic graphical models have also been developed and are available in R (https://github.com/schw4b/DGM) [34]. Such DGMs can deal with spatiotemporal activity patterns, allow for loops in networks, and can describe directions of instantaneous couplings between nodes. Furthermore, temporal lags of the hemodynamic response between coupled nodes have been shown to influence quantitative directionality estimates but are known for avoiding false positive estimates [34].

Very recently, Meunier et al. [40] presented NeuroPycon (https://github.com/neuropycon), an open-source toolbox for advanced connectivity and graph theoretical analysis of MEG, EEG, and MRI data. Often, one problem is the reproducibility of processing pipelines in neuroimaging studies. To tackle this problem, the NeuroPycon toolbox wraps commonly used neuroimaging software for processing and graph analysis into a common Python environment and provides shareable parameter files. Currently, NeuroPycon offers two packages named ephypype and graphpype. While the former focuses on EEG and MEG data analysis, the latter is designed to study functional connectivity employing graph theoretic metrics. Accordingly, this open-source software package can help to facilitate sharing and reproducing scientific results for the neuroscience community.

3.1. Graph Topology of Brain Networks

With respect to complex network architectures, an especially attractive network topology is characterized by the so-called small-world organization of complex systems [35, 41]. Such small-world networks (SWNs) are characterized by densely connected nodes of information processing, which are distant in anatomical space and only sparsely connected via long-range connections between different functionally interacting brain regions. Their main characteristic is reflected in high clustering (similar to that found for a regular lattice) and low path length (similar to a random network). Such a topology allows efficient information processing at different spatial and temporal scales with a very low energy cost [42]. Note that networks are sometimes liberally classified as SWNs, implying their unique properties, although they lack essential characteristics of SWNs [43]. Specifically, clustering in a network needs to be compared with clustering in a lattice and not in a random network. Telesford et al. [43] proposed a proper metric for such a comparison. If brain networks show a small-world network topology, it is mirrored by two principles of information processing: functional segregation on small, quasi-mesoscopic spatiotemporal scales and functional integration on larger, macroscopic spatial and temporal scales.
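
The two ingredients of small-worldness, clustering and path length, can be illustrated with a small pure-Python sketch. The ring lattice, the two hand-picked shortcuts, and all function names are assumptions chosen for illustration. The sketch only computes clustering and characteristic path length; a full small-world metric, such as the one proposed in [43], would additionally compare these values against lattice and random reference networks.

```python
from collections import deque

def ring_lattice(n, k):
    """Ring of n nodes, each linked to its k nearest neighbours on either side."""
    adj = {i: set() for i in range(n)}
    for i in range(n):
        for step in range(1, k + 1):
            j = (i + step) % n
            adj[i].add(j)
            adj[j].add(i)
    return adj

def average_clustering(adj):
    """Mean fraction of neighbour pairs that are themselves connected."""
    total = 0.0
    for nbrs in adj.values():
        nbrs = list(nbrs)
        deg = len(nbrs)
        if deg < 2:
            continue
        links = sum(
            1
            for a in range(deg)
            for b in range(a + 1, deg)
            if nbrs[b] in adj[nbrs[a]]
        )
        total += 2.0 * links / (deg * (deg - 1))
    return total / len(adj)

def characteristic_path_length(adj):
    """Mean shortest-path length over node pairs (graph assumed connected)."""
    total, pairs = 0, 0
    for source in adj:
        dist = {source: 0}
        queue = deque([source])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total += sum(dist.values())
        pairs += len(dist) - 1
    return total / pairs

lattice = ring_lattice(20, 2)

# Add two long-range shortcuts (hypothetical choice) to the lattice.
shortcut = {node: set(nbrs) for node, nbrs in lattice.items()}
for a, b in [(0, 10), (5, 15)]:
    shortcut[a].add(b)
    shortcut[b].add(a)

c_lattice = average_clustering(lattice)
l_lattice = characteristic_path_length(lattice)
c_short = average_clustering(shortcut)
l_short = characteristic_path_length(shortcut)
print(c_lattice, l_lattice)
print(c_short, l_short)
```

Adding only two long-range links shortens the characteristic path length while clustering stays close to its lattice value, which is the qualitative signature described in the text.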

Recently, Sizemore and Bassett [31] reviewed existing methods and employed a publicly available MATLAB toolbox (https://github.com/asizemore/Dynamic-Graph-Metrics) to visualize and characterize such dynamic graphs with proper metrics. In [44], Sizemore et al. already showed that algebraic topology is well suited to characterize mesoscale structures of brain connectivity formed by cliques (sets of mutually adjacent vertices) in an otherwise sparsely connected network. Aside from cliques, topological network cavities of varying sizes were observed to link regions of early and late evolutionary origin in long loops, presumably playing an important role in controlling brain function. Differences in the topological organization of functional and structural graphs were the focus of the study by Lim et al. [45], who adopted a multilayer framework with SC and FC. Their analysis showed that SC tends to be organized such that brain regions are mainly connected to other brain regions with similar node strengths, while FC shows smaller values of assortativity [46], which can be associated with robustness of brain functions in the context of network theory [45].

3.2. Graph Theoretical Aspects of SC-FC Relations

Independence tests have shown strong correlations between structural and functional connectivities [47]. As shown by Hermundstad et al. [3], the length, number, and spatial location of anatomical connections (SCs) can be inferred from the strength of resting-state and task-based functional correlations (FCs) between brain regions. In resting-state networks (RSNs), FC is constrained by the large-scale SC of the brain in terms of strength, persistency, and spatial statistics. Often, functionally connected brain areas do not show any direct anatomical connections, pointing to the importance of indirect connections as well, for example, via the thalamus. This discrepancy between structural and functional connectivity also motivates combining these measures in order to overcome the shortcomings of individual measures and to get a more comprehensive picture of brain connectivity [7, 8]. In an early study, Honey et al. [4] concluded from computational modeling that the inference of structural connectivity from functional connectivity is impractical. Still, several recent graph theoretical studies elaborate on inferring FC from SC and vice versa. They provide different metrics for quantifying the SC-FC interrelationship and discuss the decisive role of indirect connections.

Abdelnour et al. [48] added a new twist to inferring FC from SC by considering linear computational GMs rather than the commonly employed nonlinear simulations. They captured the long-range second-order correlation structure of RSNs, which governs the relationship between anatomical and functional connectivities. The model applied random walks on a graph (see Appendix A) to structural networks, as deduced by DTI, and predicted the FC structure obtained from the fMRI data of the same subjects. Because of its linearity, the model also allows inverse predictions of SC from FC. The study thus corroborates the linearity of ensemble-averaged brain signals and suggests a percolation model (percolation theory describes the behavior of connected clusters in a random graph) [49], where purely mechanistic processes of the structural backbone confine the emergence of large-scale FCs. Yet another linear model of correlations across long-duration BOLD fMRI time series has been devised by Saggio et al. [50] to measure FC for the comparison of real and simulated data. The analytically solvable model considers the diffusion of physiological noise along anatomical connections and provides FC patterns from related SC patterns. The model allows for the investigation of nonstationary temporal dynamics in RSNs and, because of its linearity, can be inverted easily to deduce SC from known FC. Later, Abdelnour et al. [51] further investigated the interplay of SC versus FC on graphs by elucidating the eigenspectrum of the structural Laplacian (see Appendix A). The authors showed that SC and FC share common eigenvectors, that their eigenvalues are exponentially related, and that a small number of eigenmodes are sufficient to reconstruct FC matrices. The method is intimately related to a data-driven independent component analysis (ICA) of FC time courses and outperforms time-consuming generative simulations of dynamic brain network models.
The importance of FC time courses was also emphasized in the study of Sarkar et al. [52]. As anatomical connections are often missing from the DTI data, the authors considered the inverse problem of inferring SC from FC and formulated it as a convex optimization problem regularized with sparsity constraints based on physiological observations. The study could not only reproduce quantitative measures of SC on a fine-grained cortical dataset, consisting of 998 nodes, but also robustly predict long-range transhemispheric couplings, which are not resolved by DTI.
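
Returning to the eigenmode result attributed to Abdelnour et al. [51] above, the idea that SC and FC share eigenvectors with exponentially related eigenvalues can be illustrated with a small sketch. It assumes the simplest such relation, in which each Laplacian eigenmode is weighted by exp(-beta * lambda). The decay parameter `beta`, the toy SC matrix, and the function name `predict_fc` are hypothetical, and the fitted model in [51] may differ in its details.

```python
import numpy as np

def predict_fc(sc, beta=1.0, n_modes=None):
    """FC estimate from the Laplacian eigenmodes of a symmetric SC matrix.

    Each eigenmode u_i with eigenvalue lam_i contributes
    exp(-beta * lam_i) * u_i u_i^T (an assumed relation, see lead-in).
    Only the n_modes eigenmodes with the smallest eigenvalues are kept.
    """
    lap = np.diag(sc.sum(axis=1)) - sc
    lam, u = np.linalg.eigh(lap)          # ascending eigenvalues
    if n_modes is None:
        n_modes = len(lam)
    weights = np.exp(-beta * lam[:n_modes])
    return (u[:, :n_modes] * weights) @ u[:, :n_modes].T

# Hypothetical symmetric SC matrix for five regions.
sc = np.array([
    [0.0, 1.0, 0.5, 0.0, 0.0],
    [1.0, 0.0, 1.0, 0.0, 0.0],
    [0.5, 1.0, 0.0, 0.2, 0.0],
    [0.0, 0.0, 0.2, 0.0, 1.0],
    [0.0, 0.0, 0.0, 1.0, 0.0],
])

full = predict_fc(sc)
# Reconstruction error when only the first k eigenmodes are used.
errors = [np.linalg.norm(full - predict_fc(sc, n_modes=k)) for k in range(1, 6)]
print(np.round(errors, 4))
```

Because the weights decay exponentially with the eigenvalue, the reconstruction error drops quickly as eigenmodes are added, which is one way to read the statement that a few eigenmodes suffice.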

Concerning computational GMs, Huang and Ding [56] also considered the question of proper quantification of SC-FC interrelationships. They showed that conditional Granger causality (cGC) was significantly correlated across subjects with edge weights [22] in RSNs, but not with mean fractional anisotropy. The authors concluded that edge weight represents the proper SC measure, while cGC adequately measures FC. Following the question of proper quantification of FC, Meier et al. [57] compared the structure-function relationship when using different imaging modalities to assess FC, measured not only with fMRI but also with MEG. The study mainly considered local connectivities between immediate neighbors, with some connections in homologous regions of the opposite hemisphere. The results of their SC-FC mapping indicated that, although sharing many properties, the SC-FC relationship also seems to depend on the imaging modality. Following this hypothesis, employing imaging modalities with higher temporal resolution for observing brain function, together with state-of-the-art tractography methods for reconstructing brain structure, is an interesting direction for future research in this field [16].

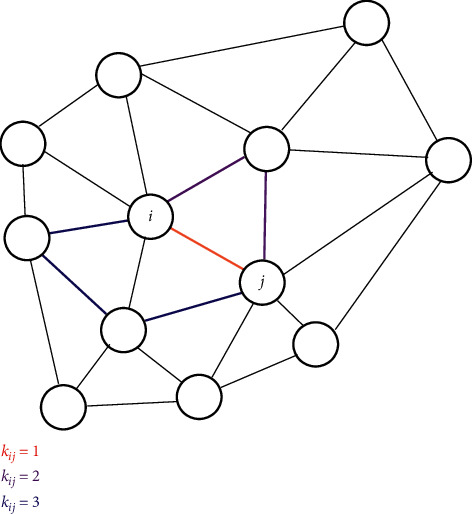

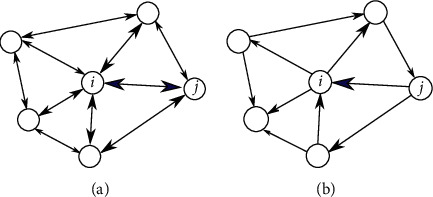

Topological aspects of the SC-FC relationship were further discussed by Liang and Wang [55] at the level of connectivity matrices. They developed a similarity measure to assess the quality of various network models based on persistent homology and regularized the solution to cope with large matrices. The measure could distinguish between direct and indirect SCs for predicting FC. The results corroborated a nonlinear structure-function relationship and suggested that the FC in RSNs is characterized not only by direct structural connections but also by sparse indirect connections. Figure 3 illustrates such higher-order connections between two nodes in a network. Also, Becker et al. [53] investigated how brain activity propagates along indirect structural walks by using a spectral mapping method (Spectral graph theory is the study of properties of the Laplacian matrix or adjacency matrix associated with a graph.) [58], which systematically reveals how the length of indirect structural walks in a network influences the FC between two nodes. Results of their mapping suggested that walks on the structural graph up to a length of three contribute most to the functional correlation structure. Such indirect connections were also the focus of the work of Røge et al. [59]. Simulations on high-resolution whole-brain networks show that functional connectivity is not well predicted by direct structural connections alone. However, predictions improved considerably if indirect structural connections were integrated. The authors also showed that shortest structural pathways connecting distant brain regions represent good predictors of FC in sparse networks. This focus on the shortest structural paths between connected brain regions was also taken up by Chen and Wang [60], who designed an efficient propagation network built with only the shortest paths between brain regions. Concerning subject specificity of SC-FC relations, Zimmermann et al. [61] furthermore showed that because of the marginal variability between subjects in SCs compared to a rather pronounced variability in FCs, little subject specificity results, indicating that FC is only weakly linked to SC across subjects.

Figure 3.

In the simplest case, structural connections between two brain areas $i$ and $j$ are direct (order $k_{ij} = 1$), but higher-order connections ($k_{ij} = 2, 3, \ldots$) between two regions also play a significant role in the propagation of neural signals [53–55].
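
The order of a connection between two regions can be read off the powers of the adjacency matrix, since entry (i, j) of the k-th power counts the walks of length k from i to j. The sketch below uses a hypothetical unweighted four-node chain; the function names are assumptions for illustration and do not reproduce the spectral mapping method of [53].

```python
import numpy as np

def walk_counts(adjacency, k):
    """Entry (i, j) is the number of walks of length k from node i to node j."""
    return np.linalg.matrix_power(adjacency, k)

def connection_order(adjacency, i, j, max_order=10):
    """Smallest k with at least one walk of length k from i to j, else None."""
    for k in range(1, max_order + 1):
        if walk_counts(adjacency, k)[i, j] > 0:
            return k
    return None

# Hypothetical structural graph: a chain 0 - 1 - 2 - 3.
chain = np.array([
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
])

for target in (1, 2, 3):
    print(target, connection_order(chain, 0, target))
```

In this chain, node 0 reaches node 1 directly, node 2 through one intermediate node, and node 3 only through a walk of length three.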

Bettinardi et al. [54] considered the postulate that coactivated brain areas should have similar input patterns and elaborated on this idea to explain how anatomical connectivity can determine the spontaneous correlation structure of brain activity. They explored the idea that information, once generated, spreads rather isotropically along all possible pathways while decaying in strength with increasing distance from its origin. The authors analytically quantified the similarity of whole-network stimulus patterns based solely on the underlying network topology, thus generalizing the well-known matching index [62]. Finally, the authors could corroborate that the network topology, to a large extent, determines time-averaged functional connectivity in large-scale brain networks.

In an effort to explain brain dynamics, Gilson et al. [63] considered a multivariate Ornstein–Uhlenbeck model (An Ornstein–Uhlenbeck process is a stochastic stationary Gauss–Markov process, which solves the Langevin equation.) [64] on a DGM to estimate statistics characterizing effective connectivities (ECs) in RSNs. Linear response theory (LRT) (Let $x(t)$ be a stimulus and $y(t)$ a related system response; then, both are related by $y(t) \approx \int_{-\infty}^{t} \chi(t - t')\, x(t')\, dt'$, where the susceptibility $\chi(t - t')$ represents the linear response function. The latter is related to Green's function in case of a Dirac delta impulse stimulus.) was then employed to estimate network-related Green's function, which specifies the dynamic coupling between network nodes. The model provided graph-like descriptors (community and flow) to describe the role of either nodes or edges to propagate activity within the network. The graphical model thus stresses temporal aspects, which merge segregated functional communities to integrate into a global network activity. The approach is not limited to resting-state dynamics but can also deal with task-evoked activity.
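
To make the notion of a multivariate Ornstein–Uhlenbeck process on a network more tangible, the following sketch simulates one with a simple Euler–Maruyama scheme. It is an illustration under stated assumptions, not the estimation procedure of [63]: the three-node coupling matrix, the time constant `tau`, the noise level `sigma`, the step size, and the function name `simulate_mou` are all hypothetical.

```python
import numpy as np

def simulate_mou(coupling, tau=1.0, sigma=1.0, dt=0.05, n_steps=40000, seed=0):
    """Euler-Maruyama simulation of dx = (-x / tau + C x) dt + sigma dW.

    coupling[i, j] is the (assumed) directed influence of node j on node i.
    Returns an array of shape (n_steps, n_nodes).
    """
    rng = np.random.default_rng(seed)
    n = coupling.shape[0]
    jacobian = -np.eye(n) / tau + coupling
    x = np.zeros(n)
    out = np.empty((n_steps, n))
    for t in range(n_steps):
        noise = sigma * np.sqrt(dt) * rng.standard_normal(n)
        x = x + dt * (jacobian @ x) + noise
        out[t] = x
    return out

# Hypothetical effective connectivity: node 0 drives node 1, node 2 is isolated.
coupling = np.array([
    [0.0, 0.0, 0.0],
    [0.8, 0.0, 0.0],
    [0.0, 0.0, 0.0],
])

activity = simulate_mou(coupling)
fc = np.corrcoef(activity, rowvar=False)
print(np.round(fc, 2))
```

In this toy setting, the directed coupling from node 0 to node 1 shows up as a positive correlation between the two simulated signals, while the isolated node stays essentially uncorrelated with both. For such a linear model, the response to an impulse is given by the matrix exponential of the Jacobian multiplied by time, which is the sense in which a Green's function describes the coupling between nodes.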

The interrelation between connectivity, as derived from different imaging modalities, was studied in a systematic approach by Garcés et al. [65]. They investigated similarities between SC derived from DTI, FC observed in fMRI, and FC measured in MEG on different spatial scales: global network, node, and hub level. They verified the strong relation between SC and FC observed in MRI, but also found strong similarities between SC and FC in MEG at theta, alpha, beta, and gamma bands. In their analysis, they could find the highest node similarity across modalities in regions of the default mode network and the primary motor cortex. The relation between structural and functional graphs was further exploited by Glomb et al. [66] to overcome problems in FC-EEG analysis. In whole-cortex EEG studies, volume conduction can induce spurious FC patterns, which are hard to disentangle from genuine FC. Glomb et al. [66] proposed a technique to smooth EEG signals in the space defined by white matter connections, in order to strengthen the FC between structurally connected regions, which could improve the resemblance of FC observed with EEG and FC measured with fMRI.

4. Computational Connectomics

Functional neuroimaging techniques initiated connectome-based computational modeling of brain networks, called computational connectomics (CC). The latter reproduces experimental findings related to such large-scale activity distributions. Furthermore, such modeling also encompasses spatiotemporal multiscale concepts of information processing in such complex networks. To ease such modeling endeavors, simulation platforms such as the Brain Dynamics Toolbox (https://bdtoolbox.org/) [24, 67] or DynamicBC (http://www.restfmri.net/forum/DynamicBC) [68] have been developed which support the major classes of differential equations of interest in computational neuroscience and/or implement both dynamic functional and effective connectivities for tracking brain dynamics from functional MRI. On a more phenomenological level, The Virtual Brain (https://www.thevirtualbrain.org/tvb/zwei) neuroinformatics platform [69, 70] provides a brain simulator as an integrated framework which encompasses several neuronal models and their dynamics. It offers multiscale model-based simulations and allows inference of neurophysiological processes underlying functional neuroimaging datasets. Hence, such modeling frameworks generate features, which allow for an understanding of underlying mechanisms beyond computational reproduction.

One typical interpretation derived from computational modeling is that the brain at rest resembles a dynamically metastable (A metastable state of a dynamical system is stable against small perturbations but not against large perturbations. It corresponds to a minimum of the free-energy landscape other than the global minimum. In neuroscience, it is sometimes more loosely used to denote a transient state of the system that persists for a finite lifetime only.) system with frequent switches between several metastable states, potentially driven by multiplicative noise [2]. By constraining computational models by the anatomical connections derived from DTI, they can serve as a link between the brain structure and correlation patterns, empirically observed in functional MRI. Usually, in such a framework, the strength of white matter connections characterizes the coupling strength of nodes in such large-scale computational models. Furthermore, with such simulations, it can be studied how different topological properties of the anatomical substrate contribute to the systems' dynamics, explaining how functional connectivity patterns depend on the underlying structural backbone [71, 72].

Recently, machine learning methods were also applied to extract characteristic features of the underlying networks from functional neuroimaging. These features opened the field for deducing functional connectivity from structural connectivity and vice versa in a purely data-driven approach [47, 73–76].

4.1. The Resting State as a Dynamically Metastable System

Though neuronal activity fluctuates continuously even without being driven by external stimuli, a thorough understanding of the spatiotemporal dynamics of complex brain networks is yet to be achieved. With the seminal paper of Raichle et al. [77], the notion of a default mode network (DMN) of a resting brain was born. This concept triggered a wealth of studies related to the resting state of the brain [2, 78, 79], whereby the resting brain is in general understood as the state in which the brain does not receive any explicit input. Such computational connectomics studies revealed that the resting human brain represents a quasi-metastable dynamical system [2] with frequent fluctuations around a dynamic equilibrium network state, which occasionally resembles the default mode network (DMN) of the resting state [77, 80]. Note that this equilibrium network state is not defined as a global minimum of an energy landscape like that used to model protein folding. Rather, it is understood as a steady-state balancing deployment of fast and slow systems which process neuronal activations. Such brain network modeling has the potential to reveal nontrivial network mechanisms and goes beyond canonical correlation analysis of functional neuroimaging. Indeed, the main driving force behind these computational brain network modeling efforts results from the observation that the spiking of single neurons is understood in biophysical detail, but how large-scale, whole-brain spatiotemporal activity dynamics emerge from spontaneously spiking neuron assemblies is still a matter of much debate, and their underlying mechanisms are only partly understood [78]. This is especially intriguing as even a resting brain without external stimuli shows highly structured spatiotemporal activity patterns far from being random.

4.2. The Statistical Mechanics Perspective of Brain Dynamics

Models adapted from statistical physics provide one possibility to describe brain dynamics empirically observed in different neuroimaging modalities such as fMRI. Concerning the resting state, in an early work, Fraiman et al. [81] asked whether such a state is comparable to any known dynamical state. For that purpose, correlation networks deduced from human brain fMRI investigations were contrasted with correlation networks extracted from numerical simulations of an Ising model (An Ising model describes an interacting lattice spin system in which every spin variable can take one of two states. Each spin interacts with its immediate neighbors and an external field. In 2D, an Ising model exhibits a phase transition from a disordered to an ordered phase. It represents one of the few exactly solvable models in statistical physics.) [82] in 2D, at different temperatures. Near the critical temperature Tc, strikingly similar statistical properties rendered the two networks indistinguishable from each other. These results were considered to support the conjecture that the dynamics of the functioning brain is near a critical point.
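
To make the simulation side of this comparison concrete, the following is a minimal sketch of a Metropolis simulation of the 2D Ising model. It is not the procedure of Fraiman et al. [81]. The lattice size, the number of sweeps, the periodic boundaries, the zero external field, and the function name `metropolis_ising` are assumptions chosen for illustration. Temperature is given in units of the coupling constant divided by the Boltzmann constant, and `T_CRITICAL` is the exact critical temperature of the infinite square lattice.

```python
import math
import random

# Exact critical temperature of the infinite 2D square-lattice Ising model (units of J/k_B).
T_CRITICAL = 2.0 / math.log(1.0 + math.sqrt(2.0))


def metropolis_ising(size, temperature, sweeps, seed=0):
    """Single-spin-flip Metropolis dynamics on a size x size lattice (zero field, J = 1)."""
    rng = random.Random(seed)
    spins = [[rng.choice((-1, 1)) for _ in range(size)] for _ in range(size)]
    magnetization = []
    for _ in range(sweeps):
        # One sweep = size * size attempted flips at randomly chosen sites.
        for _ in range(size * size):
            i, j = rng.randrange(size), rng.randrange(size)
            neighbors = (spins[(i + 1) % size][j] + spins[(i - 1) % size][j]
                         + spins[i][(j + 1) % size] + spins[i][(j - 1) % size])
            delta_e = 2.0 * spins[i][j] * neighbors  # energy cost of flipping spin (i, j)
            if delta_e <= 0 or rng.random() < math.exp(-delta_e / temperature):
                spins[i][j] = -spins[i][j]
        magnetization.append(sum(map(sum, spins)) / size ** 2)
    return spins, magnetization


if __name__ == "__main__":
    for t in (1.5, T_CRITICAL, 4.0):
        _, m = metropolis_ising(size=16, temperature=t, sweeps=200)
        print(f"T = {t:.3f}, mean |m| over last 100 sweeps = {sum(abs(v) for v in m[100:]) / 100:.3f}")
```

Recording the spin time series of individual sites over many sweeps and correlating them would yield correlation networks of the kind that were contrasted with fMRI-derived networks.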

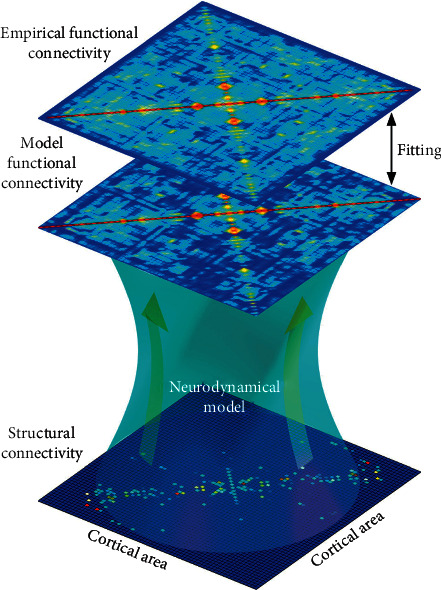

Fortunately, all modeling efforts profit from having available constraints from functional, effective, and structural connectivity measures as provided by empirical neuroimaging data [83]. An early attempt to include such constraints was undertaken by Deco et al. [84], who derived a mean-field model (In the limit of a large number of interacting entities in a network, a Markov chain model of network dynamics is often replaced by a mean-field model, which assumes that each unit homogeneously interacts with the network in an average way only. An especially illustrative example is the mean-field approximation of the Ising model [85].) [86] (MFM) of the stationary dynamics of a conductance-based synaptic large-scale network of a spiking neuron population. The connectivity in this network was constrained by DTI data from human subjects, such as that illustrated in Figure 4. The temporal evolution of the neuronal ensemble was approximated by the longest time scale of the dynamic mean-field model. The latter was further simplified into a set of equations of motion (An equation of motion describes the dynamic behavior of a physical system in terms of generalized coordinates as a function of time.) for statistical moments. These differential equations provided an analytical link between anatomical structure, stationary neural network dynamics, and FC. In a subsequent seminal paper of Deco et al. [2], nonstationary network dynamics were considered as well. There, the resting brain was modeled by a network of noisy Stuart–Landau oscillators (Stuart–Landau oscillators represent coupled limit-cycle oscillators, which exhibit collective behavior such as synchronization.), each operating near a critical Hopf bifurcation (An Andronov-Hopf bifurcation consists in the birth of a limit cycle out of an equilibrium point of a dynamic system such as coupled oscillators.) point in the phase space [87]. 
Each oscillator is running at its intrinsic frequency as given by the mean peak frequency of the narrowband BOLD signals of each brain region which the oscillator represents. Note that this ensemble of locally coupled oscillators is different from ensembles of nonlocally coupled, identical oscillators which show chimera states (Chimera states represent a unique collective behavior of a dynamic system, where coherent and incoherent states coexist.) which have attracted much attention recently [88–90]. Rather, in a Kuramoto-type (A Kuramoto model consists of a set of phase oscillators, which rotate at disordered intrinsic frequencies and with nonlinear couplings. Their time-dependent amplitudes are neglected.) [91, 92] approach, each oscillator is characterized through its phase variable only, while the time-dependent amplitude of the analytical signal is neglected. The resulting global network dynamics reveals spatial correlation patterns constrained by the underlying anatomical structure. By investigating cortical heterogeneity across the entire brain, the authors explained how fluctuations around a dynamic equilibrium state of a core brain, represented by eight identified brain areas, could drive functional network state transitions [2, 93, 94]. Hence, in the work of Naskar et al. [95], the resting brain is considered to represent a dynamically metastable system with frequent switches between several metastable states driven by multiplicative noise. Metastability can be quantified in such coupled oscillator systems by the standard deviation of one of the two time-dependent Kuramoto order parameters, which measures the phase coherence of a set of oscillators in the weak coupling limit [2]. In this respect, the brain can be considered as operating at maximal metastability suggesting some kind of spinodal-like (The spinodal curve in a phase diagram connects states where the second derivative of the Gibbs free energy is zero. 
At these points, even the faintest perturbation induces a phase transition to the related equilibrium state.) instability [96] in the phase space, where a transition towards a related stable, possibly task-related network state occurs as a consequence of a small external disturbance. Interestingly, recent research into coupled Stuart–Landau oscillator networks has also focused on the amplitude dynamics of the analytic signal [89, 90, 97]. These studies showed that an explosive death of oscillations can occur, which might be related to the suppression of neuronal activity in RSNs evoked by stimulus-driven information processing [98].
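
The metastability measure mentioned above can be illustrated with a minimal sketch of a Kuramoto phase-oscillator network. The sketch computes the time-dependent order parameter R(t) = |(1/N) Σ_j exp(iθ_j(t))| and reports its standard deviation over time. It is not the model of [2]: the Euler integration scheme, the additive (rather than multiplicative) phase noise, the parameter values, and the function names `simulate_kuramoto` and `metastability` are assumptions made for illustration. In a connectome-based setting, `adjacency` would hold the SC weights.

```python
import cmath
import math
import random
import statistics


def simulate_kuramoto(adjacency, omegas, coupling, dt=0.01, steps=5000, noise=0.0, seed=0):
    """Euler integration of d(theta_i)/dt = omega_i + k * sum_j A_ij * sin(theta_j - theta_i).

    Returns the Kuramoto order parameter R(t) for every time step.
    """
    rng = random.Random(seed)
    n = len(omegas)
    theta = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n)]
    order = []
    for _ in range(steps):
        drift = [
            omegas[i] + coupling * sum(
                adjacency[i][j] * math.sin(theta[j] - theta[i]) for j in range(n)
            )
            for i in range(n)
        ]
        # Additive Gaussian phase noise is a simplifying assumption of this sketch.
        theta = [
            theta[i] + dt * drift[i] + noise * math.sqrt(dt) * rng.gauss(0.0, 1.0)
            for i in range(n)
        ]
        order.append(abs(sum(cmath.exp(1j * t) for t in theta)) / n)
    return order


def metastability(order, burn_in=0):
    """Standard deviation of R(t) over time, after discarding an initial transient."""
    return statistics.pstdev(order[burn_in:])


if __name__ == "__main__":
    n = 8
    all_to_all = [[0 if i == j else 1 for j in range(n)] for i in range(n)]
    freqs = [1.0 + 0.1 * i for i in range(n)]
    for k in (0.0, 0.05, 1.0):
        r = simulate_kuramoto(all_to_all, freqs, coupling=k, noise=0.1)
        print(f"k = {k}: mean R = {sum(r) / len(r):.3f}, metastability = {metastability(r, 1000):.3f}")
```

A fully synchronized network yields R close to 1 with almost no variance, whereas intermediate coupling typically produces a fluctuating R(t) and therefore a larger metastability value.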

Figure 4.

Structural connectivity (SC), like that derived from DTI, can be included to describe the couplings of nodes (anatomical areas) in a neurodynamical model, constraining the neural dynamics. The emerging functional connectivity (FC) patterns of the model can then be compared to the empirical FC obtained by fMRI (adapted from Deco et al. [84]).

The idea of viewing human brain dynamics from a statistical mechanics perspective was also proposed by Ashourvan et al. [99]. However, rather than studying the evolution of regional activity between local attractors (In a dynamical system, an attractor represents a set of numerical values towards which the system evolves from a wide range of initial conditions. An attractor can be a point, a finite set of points, a curve, a manifold, or even a complicated set with a fractal structure known as a strange attractor.) representing mental states, time-varying states composed of locally coherent activity (functional modules) were put forward. A maximum entropy model was adapted to pairwise functional relationships between ROIs, based on an information-theoretic energy landscape model, whose local minima represent attractor states with specific patterns of the modular structure. Clustering such attractors revealed three types of functional communities. Transitions between community states were simulated using random walk processes. Thus, the brain is understood as a dynamical system with transitions between basins of attraction characterized through coherent activity in localized brain regions.
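
The notion of attractor states as local minima of an energy landscape can be sketched for a pairwise maximum entropy model with binarized regional activity σ_i ∈ {−1, +1} and energy E(σ) = −Σ_i h_i σ_i − Σ_{i<j} J_ij σ_i σ_j. The sketch below is not the pipeline of Ashourvan et al. [99]. The parameters `h` and `j` are hypothetical placeholders for values that would be fitted to data, and the exhaustive enumeration only works for a small number of ROIs. A state counts as a local minimum if every single-ROI flip increases the energy.

```python
from itertools import product


def energy(state, h, j):
    """Energy of a binary activity pattern under a pairwise maximum entropy model."""
    n = len(state)
    field = sum(h[i] * state[i] for i in range(n))
    pair = sum(j[a][b] * state[a] * state[b] for a in range(n) for b in range(a + 1, n))
    return -field - pair


def local_minima(h, j):
    """Enumerate all 2^n patterns and keep those whose single-flip neighbors all have higher energy."""
    n = len(h)
    minima = []
    for state in product((-1, 1), repeat=n):
        e = energy(state, h, j)
        flips = (state[:i] + (-state[i],) + state[i + 1:] for i in range(n))
        if all(energy(f, h, j) > e for f in flips):
            minima.append(state)
    return minima


if __name__ == "__main__":
    # Hypothetical couplings: two internally coherent modules that are weakly anticorrelated.
    j = [[0, 1, -0.2, -0.2],
         [1, 0, -0.2, -0.2],
         [-0.2, -0.2, 0, 1],
         [-0.2, -0.2, 1, 0]]
    print(local_minima([0, 0, 0, 0], j))
```

Each returned pattern is the bottom of a basin of attraction; transitions between basins could then be simulated, for example, with a random walk on the state graph.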

4.3. Spatiotemporal Brain Dynamics and Statistical State-Space Models

Although large-scale activity distributions in RSNs have long been considered stationary, recent investigations provided ample evidence of their nonstationary nature. Consequently, it is insufficient to only consider the grand average functional connectivity (FC); rather, its nonstationary dynamical nature has to be considered as well [2]. Several recent studies showed how functional connectivity states can emerge from a single stationary structural connectivity net through fluctuations around this metastable state. The latter can be described with mean-field models, while the fluctuations can be generated with an ensemble of coupled Stuart–Landau oscillators with simple attractor dynamics and reproduce the spatiotemporal connectivity dynamics if constrained by EC rather than SC as deduced from DTI measurements.

While the functional connectivity can be estimated via a linear Pearson correlation (Pearson's correlation coefficient is a measure of the linear bivariate correlation between two variables x and y. It is computed as the covariance of the two variables divided by the product of their standard deviations.) between corresponding elements of the empirical (and the simulated) covariance matrix, the statistics of the dynamic functional connectivity (DFC) can instead be evaluated through the Kolmogorov–Smirnov distance (Nonparametric Kolmogorov–Smirnov statistics provide a distance either between an empirical and a reference cumulative distribution function or between two empirical cumulative distribution functions.) of the related empirical (and simulated) distributions of covariance matrix elements on a sliding time window basis. Note that, lately, this sliding window technique has been challenged by a hidden Markov model (A hidden Markov model represents the simplest dynamic Bayesian network. It is a statistical model of a system with Markovian state transitions between unobservable (hidden) states.) approach which overcomes some of the drawbacks of the former method [100–102]. Concerning the interrelation of the structural and functional connectivity, the work of the Deco group, as discussed above, demonstrated that, by carefully constraining global mean-field brain models with structural connectivity data and fitting the model parameters employing corresponding dynamic functional connectivity data, phenomenological models of causal brain dynamics (Dynamic causal models adapt nonlinear state-space models to data and test their evidence employing Bayesian inference.) [20] can be constructed. Such models yield insight into mechanisms by which the brain generates structured, large-scale activity patterns from spontaneous activities in RSNs while respecting empirical knowledge about structural and functional connectivities between distant brain areas as provided by functional neuroimaging.
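
The two evaluation steps described above can be sketched as follows: Pearson correlation between regional time courses, computed per sliding window, and a two-sample Kolmogorov–Smirnov distance between the pooled windowed connectivity values of two datasets (for instance, empirical and simulated). This is a minimal illustration and not the implementation used in the cited studies. The window width, the step size, and all function names are assumptions, and time courses that are constant within a window are not handled.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation of two equally long sequences (undefined for constant input)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def fc_upper(series):
    """FC values for all region pairs (upper triangle); series[r] is the time course of region r."""
    n = len(series)
    return [pearson(series[i], series[j]) for i in range(n) for j in range(i + 1, n)]


def sliding_window_fc(series, width, step):
    """One list of pairwise FC values per window."""
    t = len(series[0])
    return [fc_upper([s[start:start + width] for s in series])
            for start in range(0, t - width + 1, step)]


def ks_distance(a, b):
    """Two-sample KS statistic: largest absolute difference between the two empirical CDFs."""
    a, b = sorted(a), sorted(b)
    i = j = 0
    d = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        while i < len(a) and a[i] <= x:
            i += 1
        while j < len(b) and b[j] <= x:
            j += 1
        d = max(d, abs(i / len(a) - j / len(b)))
    return d


if __name__ == "__main__":
    rng = random.Random(0)
    empirical = [[rng.gauss(0, 1) for _ in range(200)] for _ in range(4)]
    simulated = [[rng.gauss(0, 1) for _ in range(200)] for _ in range(4)]
    emp = [v for w in sliding_window_fc(empirical, 40, 10) for v in w]
    sim = [v for w in sliding_window_fc(simulated, 40, 10) for v in w]
    print("static FC:", [round(v, 2) for v in fc_upper(empirical)])
    print("KS distance of windowed FC distributions:", round(ks_distance(emp, sim), 3))
```

The window width sets the slowest fluctuation that remains visible, so a model fit based on the KS distance depends on this choice.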

Using a whole-brain computational network model, Glomb et al. [103] applied a sliding window approach, though employing a rather long time window, to relate temporal dynamics of RSNs to global modulations in BOLD variance. The authors demonstrated that spatiotemporal fluctuations in FC and BOLD can be described as fluctuations around an average stationary FC structure. In a related study, Glomb et al. [17] further elaborated this idea by combining dimensionality reduction via tensor decomposition with a mean-field model (MFM) [104] generating stationary network dynamics. The model has been shown to explain grand average resting-state FCs. However, such average FCs summarize correlated spatial activity distributions but do not reveal their temporal dynamics. In a two-step approach, first, spatiotemporal data arrays have been decomposed, employing tensor decomposition methods, into sets of brain regions, called communities, with similar temporal dynamics. Their related time courses are assessed by an overlapping sliding window technique and could be grouped into four distinct communities resembling well-known RSNs. Second, data were simulated with this stationary MFM constrained by results from diffusion tensor imaging (DTI) and fiber tracking. The spatiotemporal structure of the network then results solely from fluctuations around a mean FC pattern. More importantly, the four distinct RSNs emerged from this stationary MFM if the network nodes were coupled according to the model-based EC. The method is rather generative as it only needs weak assumptions about the underlying data, thus is generally applicable to resting-state data and task-based data from arbitrary subject populations. In a related study, Glomb et al. [21] compared the DTI-based SC with the model-based effective connectivity (EC) using whole-brain computational modeling of the spatiotemporal dynamics of FC evaluated on a sliding window basis. 
The authors discussed the way node connectivity affects the fitting of simulated to empirical patterns. The resulting tensors are decomposed into a weighted set of communities, whose nodes share similar time courses. Some of these communities resemble known RSNs such as the DMN, whose fluctuations have been linked to cognitive function. Similarity between simulated and empirical spatiotemporal dynamics of ROIs was especially pronounced whenever model nodes were connected by EC rather than SC. Thus, networks of Stuart–Landau oscillators with simple attractor dynamics can reproduce empirical spatiotemporal connectivity dynamics if constrained by EC rather than SC. A recent review of Cabral et al. [16] discussed different computational resting-state models, which almost all try to explain how a rich repertoire of functional connectivity states can emerge from a single static structural connectome. In the future, it will be of interest to extend these computational models to task-based settings and to also consider faster neural processes like those measured by EEG or MEG.

The potential of computational connectomics for general inference and integration of neurophysiological knowledge, complementing empirical functional neuroimaging studies, has been further demonstrated by the work of Schirner et al. [105]. The authors integrated individual SC and FC data with neuronal population dynamics to infer neurophysiological properties on multiple scales. In their study, EEG was used to record electrical potentials at the scalp surface of individuals. These source activities were integrated into an individual-specific model, which simulated brain activity distributions and predicted person-specific fMRI time series and spatial topologies of RSN activities. In addition, neurophysiological mechanisms underlying several experimental observations from various functional imaging modalities could be successfully predicted. Whole-brain computational modeling can also answer questions concerning the reproducibility and consistency of resting-state fMRI. Donnelly-Kehoe et al. [106] demonstrated that the estimation of parameters, which describe the dynamical regime of the Hopf model (It denotes a model of a dynamic system which exhibits a critical point in the phase space where its stability switches and a periodic solution arises.) [2], becomes consistent after a scanning time of around 20 minutes. This suggests that such a scanning duration is sufficient to capture subject-specific brain dynamics. Also, such nonlinear computational models are capable of quantifying EC on a whole-brain level [107]. By gradually modifying the strength of structural connections, the authors could improve the correspondence between FC predicted by their model and empirical FC observed in fMRI. This measure should therefore better characterize the activation flow between brain regions and can extend the structure-based connectivity measure, derived from DTI.

4.4. Impact of the Structure on Function in Computational Connectomics Models

An as yet unresolved issue concerns the dependence of the SC-FC relationship on either specific topological features of the network or the computational models used to describe the network dynamics. Over the last decade, a couple of studies, designing stationary and nonstationary dynamical network models, focused on these important issues.

By employing a simple epidemiological model, Chen and Wang [60] built a dynamic susceptible-infected-susceptible (SIS) network [108, 109], focusing on the shortest path to predict resting-state FC from SC. The model could predict FC between directly and indirectly connected structural network nodes and outperformed DMF models in predicting FC from SC. Considering a more complex approach, Robinson [110] introduced propagator theory (A propagator represents a special Green's function, which characterizes the probability of propagation of a particle or wave from location x to location y. The exact form of the propagator depends on the equation of motion with its related initial or boundary conditions.) to relate anatomical connections to functional interactions, where neural interactions allegorically resembled properties of photon scattering on atoms, as observed in standard quantum mechanics. This Green's function-based model also accounts for excitatory and inhibitory connections, multiple structures and populations, asymmetries, time delays, and measurement effects.

In another early study to elucidate the structure-function relationship in more detail, Deco et al. [111] devised a brain model of Ising spin dynamics constrained by a neuroanatomical connectivity as obtained from DTI/DSI data. The model, which describes stationary dynamics, exhibited multiple attractors, whose underlying attractor landscape could be explored analytically. They showed that the entropy of the attractors directly measures the computational capabilities of the modeled brain network, thus pointing to a scale-free (A scale-free network exhibits a degree distribution that, at least asymptotically, follows a power law.) architecture. Note that entropy measures the number of possible network configurations; hence, a scale-free network maximizes the system's entropy. However, strictly scale-free networks have recently been shown to be rare, which calls statistical interpretations of such networks into question [112]. Log-normal distributions often fit degree distributions better than power laws, thus demanding alternative theoretical explanations. In a similar spirit, predicting SC from FC was also considered in the study of Deco et al. [104]. The authors used diffusion spectrum imaging (DSI) to map structural measures of connectivity, but note that interhemispheric connections are usually inhibitory and are hard to map. The study proposed a dynamic MFM operating near a critical point in the phase space where state transitions occur spontaneously. They iteratively optimized the matrix of SCs based on a matrix of FCs and observed that the addition of a small number of anatomical couplings, primarily transhemispheric connections, improved the predicted SCs dramatically, even though DTI has its limitations in modeling long-range connections [37].

While these modeling studies rely on stationary dynamics, Messé et al. [113] considered the relative contributions of stationary and nonstationary dynamics (A time series is stationary if all its statistical moments are time independent. Wide-sense stationarity only asks for time independence of the first two statistical moments, and nonstationarity means just the contrary [114].) to the structure-function relationship. The authors compared FCs of RSNs with computational models of increasing complexity while manipulating the models' anatomical connectivity. Their results suggest three contributions to FC in RSNs: a scaffold of anatomical connections, a stationary dynamical regime constrained by the underlying SC, and additional stationary and nonstationary dynamics not directly related to anatomy. Most importantly, the last component was estimated to contribute 65% to the observed variance of FC, pointing to the need for nonstationary dynamic computational brain models. The study corroborated the preference for simple models of stationary dynamics and emphasized the decisive role of transhemispheric couplings, which are often difficult to reconstruct in white matter tractography [37]. Messé et al. [72] further considered how topological features influence dynamic models. They designed a dynamic susceptible-excited-refractory (SER) model with excitable units and analyzed the influence of network modularity on FC. Their results were compared to a FitzHugh–Nagumo model (The FitzHugh–Nagumo model, sometimes also called Bonhoeffer–van der Pol oscillator, represents a relaxation oscillator and describes a prototype of an excitable system, which shows spike generation above a certain threshold.) [115] as an alternative model of excitable systems. 
Differences between the models arose from different time limits for integrating coactivations to deduce “instantaneous” FCs, thus providing a clear distinction between coactivation and sequential activation and thereby corroborating the importance of the modular structure of the network. In a subsequent paper, Messé et al. [71] elaborated further on the topological network features which shape FC in their dynamic SER model. The authors presented an analytical framework, based on discrete excitable units, to estimate the contribution of topological elements to the coactivation of the nodes in their model network. They compared their analytic predictions with numerical simulations of several artificial networks and concluded that their framework provides a first step towards a mechanistic understanding of the contributions of the network topology to brain dynamics.
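
A discrete SER model of the kind discussed above can be sketched in a few lines. In this minimal version, a susceptible node (S) becomes excited (E) if at least one neighbor is excited or, with a small probability, spontaneously; an excited node becomes refractory (R) in the next step; and a refractory node recovers to S with a given probability. The parameter values, the synchronous update, the random initial condition, and the function names `simulate_ser` and `coactivation` are assumptions for illustration and are not taken from Messé et al. [71, 72].

```python
import random


def simulate_ser(adjacency, steps, recovery_prob=1.0, spontaneous_prob=0.01, seed=0):
    """Synchronous SER dynamics; returns one binary excitation pattern per time step."""
    rng = random.Random(seed)
    n = len(adjacency)
    state = [rng.choice("SER") for _ in range(n)]
    patterns = []
    for _ in range(steps):
        new_state = []
        for i in range(n):
            if state[i] == "S":
                excited_neighbor = any(adjacency[i][j] and state[j] == "E" for j in range(n))
                fire = excited_neighbor or rng.random() < spontaneous_prob
                new_state.append("E" if fire else "S")
            elif state[i] == "E":
                new_state.append("R")  # excitation always lasts exactly one step
            else:
                new_state.append("S" if rng.random() < recovery_prob else "R")
        state = new_state
        patterns.append([1 if s == "E" else 0 for s in state])
    return patterns


def coactivation(patterns):
    """Count how often each pair of nodes is excited in the same time step."""
    n = len(patterns[0])
    counts = [[0] * n for _ in range(n)]
    for p in patterns:
        active = [i for i in range(n) if p[i]]
        for i in active:
            for j in active:
                counts[i][j] += 1
    return counts


if __name__ == "__main__":
    # Two triangles joined by a single edge: a toy modular network.
    edges = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (2, 3)]
    adj = [[0] * 6 for _ in range(6)]
    for a, b in edges:
        adj[a][b] = adj[b][a] = 1
    for row in coactivation(simulate_ser(adj, steps=2000)):
        print(row)
```

Counting coactivations within a single step corresponds to the shortest possible integration window; widening that window would blur the distinction between coactivation and sequential activation noted above.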

5. Machine Learning Approaches

Machine learning approaches were first applied to fMRI datasets to deduce signal components in a purely data-driven fashion. Such explorative techniques allow to detect spatially segregated regions in the brain, associated with individual functions [116, 117]. These blindly identified source components can then help to define nodes in graphical models of brain networks and set the spatial layout for brain connectivity. Also, such blind source separation techniques were applied to EEG recordings in order to identify relevant source signals and to separate them from artifacts in the data [118–120]. Like in fMRI, such intrinsic components can be used to define nodes in functional networks and provide a data-driven perspective on brain connectivity in EEG studies [121].

Exploratory matrix factorization (EMF) techniques were mostly employed, whereby a data matrix X ∈ ℝ^{N×M} contains spatial fMRI maps, spanning its M columns, at N subsequent time points t_n. This data matrix is decomposed into two factor matrices according to X ≈ WH, whereby W ∈ ℝ^{N×K} and H ∈ ℝ^{K×M}. Here, K denotes a generally unknown inner dimension, which can be estimated with model order selection techniques [122]. Such a decomposition needs additional constraints to yield unique answers. Depending on the form of the constraints, various decomposition techniques (see Appendix B) result as follows:

Factors should form orthogonal matrices, yielding principal component analysis (PCA)

Factor matrix H should contain statistically independent spatial or temporal components (sICA and tICA)

Factor matrix W should yield a sparse encoding (SCA)

Both factor matrices should have only nonnegative entries, given the entries of the data matrix are nonnegative exclusively, yielding nonnegative matrix factorization (NMF)

Matrix H should contain intrinsic modes, which represent pure oscillations yet with time-varying amplitude and local frequency, yielding empirical mode decomposition (EMD)
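
As one concrete instance of the factorization X ≈ WH under the constraints listed above, the following minimal sketch implements nonnegative matrix factorization with the classical multiplicative update rules for the squared Frobenius error. It is meant as an illustration only. The random initialization, the fixed iteration count, the small constant `eps`, and the helper names are assumptions, and the inner dimension `k` is taken as given rather than estimated by model order selection.

```python
import random


def matmul(a, b):
    """Plain matrix product of two lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def transpose(a):
    return [list(r) for r in zip(*a)]


def frobenius_error(x, w, h):
    """Frobenius norm of the residual X - WH."""
    wh = matmul(w, h)
    return sum((x[i][j] - wh[i][j]) ** 2
               for i in range(len(x)) for j in range(len(x[0]))) ** 0.5


def nmf(x, k, iterations=200, seed=0, eps=1e-9):
    """Factorize a nonnegative N x M matrix into W (N x k) and H (k x M), both nonnegative."""
    rng = random.Random(seed)
    n, m = len(x), len(x[0])
    w = [[rng.random() + eps for _ in range(k)] for _ in range(n)]
    h = [[rng.random() + eps for _ in range(m)] for _ in range(k)]
    for _ in range(iterations):
        # H <- H * (W^T X) / (W^T W H), element-wise
        wt = transpose(w)
        num, den = matmul(wt, x), matmul(matmul(wt, w), h)
        h = [[h[a][j] * num[a][j] / max(den[a][j], eps) for j in range(m)] for a in range(k)]
        # W <- W * (X H^T) / (W H H^T), element-wise
        ht = transpose(h)
        num, den = matmul(x, ht), matmul(w, matmul(h, ht))
        w = [[w[i][a] * num[i][a] / max(den[i][a], eps) for a in range(k)] for i in range(n)]
    return w, h


if __name__ == "__main__":
    # Toy data: 4 "time points" x 4 "voxels", built from two nonnegative components.
    x = matmul([[1, 0], [0, 1], [1, 1], [2, 1]], [[1, 2, 0, 1], [0, 1, 3, 1]])
    w, h = nmf(x, k=2)
    print("reconstruction error:", round(frobenius_error(x, w, h), 4))
```

Replacing the nonnegativity constraint by orthogonality, statistical independence, or sparsity leads to the other decompositions in the list, each of which requires its own optimization scheme.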

Such studies were employed to deduce functionally connected brain networks in a purely data-driven way, most commonly by a combination of PCA and ICA [123]. Although the number of independent spatial or temporal components is generally unknown, investigations showed a high consistency of the extracted functional networks across subjects and conditions. Because structural constraints have not yet been included, structure-function relationships have not been considered with such techniques so far. For regularized ICA, the choice of hyperparameters and its impact on the results have not yet been studied comprehensively. Some attempts have been undertaken to combine constrained ICA (cICA) with optimization techniques.

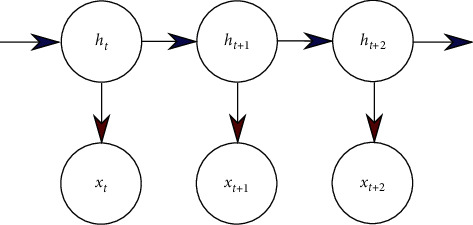

Over the last decade, exploratory analysis techniques have placed a new focus on trying to better understand brain dynamics. Dynamic functional connectivity patterns (also denoted as the chronnectome [124]) have been studied with EMF techniques by employing a sliding window approach, whereby a considerable overlap of the individual time segments was allowed. The length of the chosen time window determines the time scale of the slowest fluctuations that can be studied. Most commonly, time courses were then linearly correlated to generate temporal sequences of related connectivity matrices. Such sequences demonstrate the temporal variability of functional connections between identified brain networks, notably those investigated under resting-state conditions. The main purpose of such studies was to quantify the impact of spontaneous BOLD fluctuations on the temporal dynamics of FCs. The investigations demonstrated that transiently synchronized subnetworks with coherent spatial patterns drive the dynamics of large-scale functional networks in the resting brain. These results gave rise to the application of state-space models, whereby network states were represented by their covariance matrices [125, 126]. Predominantly, hidden Markov models (HMMs) have been employed to describe the underlying network dynamics. As an alternative to HMMs, Bayesian probabilistic models can also learn latent states and represent dFC networks and their temporal evolution as well as transition probabilities between these states.

State-space models assume stationary dynamics, an assumption that has been placed in question by several studies. These studies assigned part of the temporal variability of dFCs not to noise contributions but rather to their nonstationary nature [127, 128]. The latter can be characterized through EMF approaches combined with sliding window techniques and Pearson correlation of voxel time series. Clustering such dFC states results in a small number of prototypical FC patterns, which, in turn, lead to discrete brain states [127, 129]. Alterations of such brain states with various diseases were naturally of interest as well.

5.1. Static Functional Connectivity

Over the last two decades, aside from large-scale computational modeling of functional brain connectivity and dynamics, data-driven machine learning approaches have also been employed to analyze functional neuroimaging data and to track the dynamics of FCs. In various cases, methods from machine learning, such as exploratory matrix factorization (EMF) techniques, can be applied where voxel-wise univariate evaluations are not appropriate [116, 130]. Blind source separation (BSS) techniques [131–133] refer to data-driven, unsupervised machine learning techniques for feature extraction based on EMF, which are applied in biomedicine and neuroinformatics. The underlying idea of these techniques is to search for a linear mixture of base components, which characterize the observed data. Especially in the absence of stimulus-driven tasks, like in resting-state fMRI [116] or in resting-state EEG [121], such exploratory techniques have proven to be a promising alternative to atlas-based definitions of brain networks. Most notably, principal component analysis (PCA) and independent component analysis (ICA) (While PCA extracts components with maximal variance from the data, ICA applies a stronger condition and searches for statistically independent components [133].) [134] are frequently employed to analyze biomedical and neuroimaging datasets, especially EEG and fMRI data [117, 118]. While most studies on brain connectivity still rely on atlas-based definitions of graph nodes in brain networks, simulations have shown that data-driven derivations of such nodes with ICA can be beneficial for graphical analysis [36].

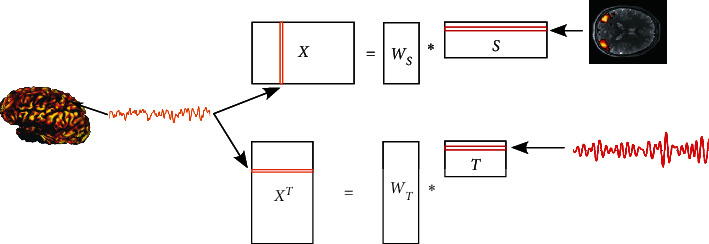

ICA is intrinsically a multivariate approach, and hence, each independent component (IC) groups brain activity into similar response patterns, thereby providing a natural measure of functional connectivity (FC). ICA comes in two flavors, extracting either spatially (sICA) or temporally (tICA) independent component maps. The basic principle of spatial and temporal ICA is illustrated in Figure 5. Studies predominantly rely on sICA due to the abundance of requisite data samples. Spatial ICA was first applied to fMRI datasets by McKeown et al. [117], while tICA followed shortly afterwards [135]. Also, a few studies dealing with spatiotemporal ICA have been performed [136–141]. These various modes of ICA all share the limitation that the user has to identify the underlying sources. To resolve this issue, constrained ICA [142, 143] or ICA with reference [144, 145] has been proposed to extract one or more ICs, which are as similar as possible to given reference signals. Thus, a priori information about the desired sources is used to form constraints in either the spatial or temporal domain. This approach has also been extended to the spatiotemporal domain. The constrained stICA algorithm searches for maximally independent sources that correspond to constraints in both spatial and temporal domains [137]. This approach also exhibits improved performance for the analysis of fMRI datasets.

Figure 5.

The principle of ICA is illustrated. As the input, a voxel time series is considered and indicated as an orange stripe in the related data matrices. The decomposition is either done to obtain independent spatial maps in component matrix S or to obtain independent component time series contained in the rows of component matrix T.

Early work studied cortical functional connectivity (FC) in a seed-based approach, where the time course of any chosen seed voxel was correlated with the time courses of all other voxels to reveal two-point correlations of cortical activity in response to external stimuli and task requirements. Such seed-based correlation analysis (sCA) studies are generally biased by the choice of the seed region. Several studies elaborated on the difference between sCA- and ICA-derived measures of FC. Already a decade ago, Joel et al. [146] concluded that seed-based FC measures are the sum of ICA-based measures of both within- and between-network connectivities. Very recently, Wu et al. [147] proved a mathematical equivalence between sCA and a connectivity-matrix enhanced ICA (cmICA). However, they also noted conceptual differences, which lead to different information being captured by the two techniques and which they exemplified by examining whole-brain rsFC at the voxel resolution in schizophrenic patients and healthy controls. FC was reduced over the entire brain, whereby the connectivity not only between networks but also within network hubs was affected. In the resting state, decreasing FC was observed in both groups and was also strongly related to aging in both groups.

An overview of exploratory ICA, applied to deduce the functional connectivity from the fMRI data, was given by Calhoun et al. [148]. The authors discussed ICA in the spatial or temporal domain related to task and transiently task-related paradigms as well as physiology-related signals, the analysis of multisubject fMRI data, the incorporation of a priori information, and the analysis of complex-valued fMRI data. While most studies only take the magnitude of the fMRI signal into account, it was shown that the phase information of complex-valued fMRI has the potential to increase the sensitivity of ICA [149]. More recently, it was demonstrated that spatial resting-state networks observed in fMRI could also be found in high-temporal-resolution EEG data using ICA [150]. In their study, Sockeel et al. [150] could observe several overlapping EEG and fMRI networks in motor, premotor, sensory, frontal, and parietal areas.

Studies of FC in the resting state started only later, when the work of McKeown et al. [151] suggested that this could be possible. As Calhoun and Adali noted in a focused survey [153], ICA offered, and still offers, essential methodological tools to study the functional connectivity of brain networks not only in single subjects but also across whole groups. The focus then shifted from task-related paradigms to the study of resting-state networks (RSNs) and, most importantly, their differences in the diseased brain. It has been shown that even in the absence of a stimulus-driven task, a number of brain networks, such as the default mode network (DMN), could be observed at rest [5, 123] and successfully reconstructed with ICA [130]. The DMN is used mainly in fMRI studies of brain disorders. In an early investigation, Esposito et al. [154] considered the cognitive load modulation of group-level ICA-based fMRI responses. They suggested that the high variability of the default mode pattern may link the DMN as a whole to cognition and may more directly support the use of the ICA model for evaluating cognitive decline in brain disorders.

An early study on rsFC with sICA was published in 2004 [155]. The authors identified many of the known RSNs, and their ICs showed an extremely high degree of consistency in spatial, temporal, and frequency parameters within and between subjects. These results were discussed in relation to the functional relevance of fluctuations of neural activity in the resting state. Such high spatial consistency of cortical functional networks across subjects was also found by Beckmann et al. [130], who applied probabilistic ICA and discussed the role this exploratory technique can take in scientific investigations into the spatiotemporal structure of RSNs. The exploratory nature of ICA was also stressed by Rajapakse et al. [156] in contrast to covariance-based methods such as principal component analysis (PCA) and structural equation modeling (SEM), where SEM is employed to automatically find the connectivity structure among elements in independent components. However, their hybrid ICA/SEM approach was restricted to task-related fMRI paradigms. Meanwhile, numerous studies have been published based on exploratory, data-driven fMRI analyses, which established ICA in its many flavors as a standard technique to analyze fMRI datasets with respect to FC, most notably of RSNs and the DMN. Also, a recent claim by Daubechies et al. [157] that the ICA algorithms Infomax and FastICA, which are widely used for fMRI analysis and which are based on different principles like those of entropy or cumulant expansion, select for sparsity rather than independence has been refuted by Calhoun et al. [158], who claimed that the ICA algorithms are indeed doing what they are designed to do, which is to identify maximally statistically independent sources. (The Infomax algorithm is based on the minimization of mutual information between estimated components, while FastICA follows the idea of maximization of non-Gaussianity of components [133]. Both algorithms have been shown to be reliable for the estimation of independent brain networks [152].)

Because of the scaling and permutation indeterminacies of ICA, group inferences from multisubject studies turned out to be challenging. Several approaches have been proposed to resolve this issue [159–163]. The most widely used approach is based on the gICA algorithm provided in the GIFT toolbox (http://mialab.mrn.org/software/gift/). A large-scale study [164], encompassing 603 healthy adolescents and adults, employed gICA to establish a multivariate analytic approach and applied it to the study of RSNs. The latter were identified and evaluated in terms of three primary outcome measures: time-course spectral power, spatial map intensity, and functional network connectivity. The study considered the impact of age and gender on resting-state connectivity patterns. The results revealed robust effects and suggested that the established analysis pipeline could form a useful baseline for investigations of human brain networks. Recently, we proposed a hybrid cICA-EMD approach, where a bidimensional ensemble empirical mode decomposition technique based on Green's functions in tension (GiT-BEEMD) was used to create reference signals for a constrained ICA [165, 166]. The idea of this technique is to decompose a signal into its underlying intrinsic frequency compartments [56], reflecting frequency-specific aspects of the latter. The natural ordering of the intrinsic modes (IMs) extracted with GiT-BEEMD provides an immediate assignment of ICs, extracted with cICA, across a group of subjects. Results of both methods are in good agreement. However, the consistency of identified functional networks across a group of subjects is higher for the hybrid cICA-EMD approach. Still, one of the problems of cICA algorithms is the choice of hyperparameters such as the threshold for similarity measures or the accuracy of a priori information. Shi et al. [167] recently tackled such problems by combining cICA with multiobjective optimization, where the inequality constraint of traditional cICA is transformed into the objective optimization function of constrained stICA, and both temporal and spatial prior information are included simultaneously. The algorithm apparently avoids the threshold parameter selection problem, shows an improved source recovery ability, and reduces the requirements on the accuracy of prior information.
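
The scaling and permutation indeterminacies mentioned at the beginning of this paragraph follow directly from the linear mixing model. As a brief sketch, assuming a noise-free model $X = AS$ with mixing matrix $A$ and source matrix $S$ (symbols introduced here for illustration only), any permutation matrix $P$ and any invertible diagonal matrix $D$ yield an equally valid decomposition:

```latex
X = A S = \left( A D^{-1} P^{\top} \right) \left( P D S \right),
\qquad P^{\top} P = I
```

The rows of $PDS$ are merely reordered and rescaled rows of $S$ and are therefore just as independent. Component order and amplitude are thus not fixed by the decomposition itself, which is why ICs obtained from separate single-subject analyses have to be matched before group inferences can be drawn.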

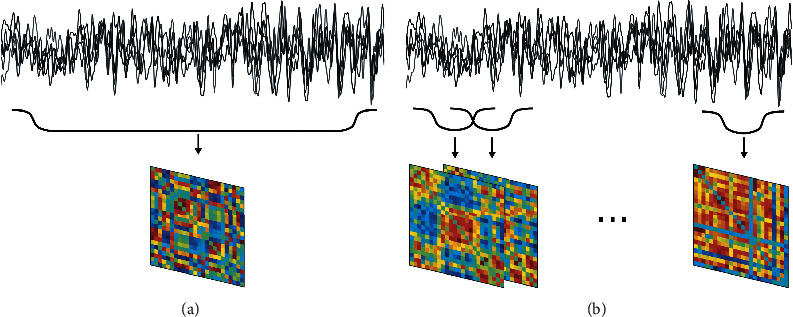

5.2. Temporal Dynamics of Functional Connectivity

The exploratory techniques discussed so far all concern investigations of static functional connectivity. However, as we have seen from investigations into computational brain dynamics, the brain is operating in a metastable state close to a critical point, where spontaneous fluctuations play a decisive role in determining the inherent dynamics of brain networks. Such fluctuations emerge on time scales ranging from milliseconds to minutes but have largely been ignored in most recent investigations involving data-driven techniques. Still, over the last decade, a paradigm shift has occurred in functional connectivity studies towards a focus on the temporal variations in FC patterns [168]. Most studies on dynamic FC (dFC) employ a sliding window technique, as illustrated in Figure 6. Instead of computing FC across the whole time span of a session, dFC accounts for the variability of connectivity within a session by assessing FC on (possibly overlapping) segments in the time domain. However, it has also been shown that this technique is not without problems [169–171]. Moreover, a sound statistical analysis is mostly lacking in such studies, thus casting doubt on the interpretations given to the results [172].

Figure 6.

Illustration of the concepts used to derive the static and dynamic functional connectivity (dFC). (a) An example of various activity time courses and their related static connectivity matrix, which is deduced from the complete session. (b) The same set of local activity time courses and their related connectivity matrices of the respective segments of the activity time courses.
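
The difference between static FC and sliding-window dFC can be made concrete with a minimal sketch. It assumes regional time courses arranged as a matrix with one row per time point and one column per region; the function name `sliding_window_fc`, the window length, the step size, and the toy data are illustrative assumptions and are not taken from any of the cited studies.

```python
import numpy as np

def sliding_window_fc(data, window, step=1):
    """Return one correlation matrix per window.

    data: array of shape (n_timepoints, n_regions)
    window: window length in samples
    step: shift between consecutive windows (step < window gives overlap)
    """
    starts = range(0, data.shape[0] - window + 1, step)
    return np.stack([np.corrcoef(data[s:s + window].T) for s in starts])

# Toy data (assumption): regions 0 and 1 are coupled only in the second half.
rng = np.random.default_rng(1)
n_t = 300
data = rng.standard_normal((n_t, 3))
common = rng.standard_normal(n_t // 2)
data[n_t // 2:, 0] += 3.0 * common
data[n_t // 2:, 1] += 3.0 * common

static_fc = np.corrcoef(data.T)                            # whole session
dynamic_fc = sliding_window_fc(data, window=50, step=25)   # one matrix per segment
```

In this toy example, the static matrix averages over both regimes, whereas the sequence of windowed matrices shows the coupling between regions 0 and 1 appearing only in the later segments. The result depends on the chosen window length and step, which is one reason the technique requires care.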

A number of studies considered the dFC of BOLD signals and their related spatial patterns based on sliding window correlations (SWCs). With this new focus, Chang and Glover [173] investigated the dynamics of resting-state connectivity patterns during a single fMRI scan. They performed a time-frequency coherence analysis based on the wavelet transform and employed a sliding window correlation procedure to demonstrate time-varying connectivity patterns between several brain regions. The authors noted that such coherence and phase variability might be the result of residual noise rather than resulting from modulations of the cognitive state. In a similar study, Kang et al. [174] thoroughly investigated the temporal FC of spontaneous BOLD signals derived from RSNs with fMRI. RSNs were identified using a seed-based voxel-wise correlation analysis by calculating correlations between representative time courses of certain predefined regions and all other voxels of interest. A subsequent variable parameter regression model, combined with a Kalman filter for optimal model parameter estimation, was applied to identify dynamic interactions between the identified RSNs. The results revealed that functional interactions within and between RSNs indeed showed time-varying properties. Furthermore, the spatial pattern of dynamic connectivity maps obtained from adjacent time points exhibited a remarkable similarity. Employing ultrahigh field fMRI, Allan et al. [175] further investigated the contribution of spontaneous BOLD events to the temporal dynamics of FC and suggested that spontaneous fluctuations of BOLD signals drive the dynamics of large-scale functional networks commonly detected by seed-based correlation and ICA. These suggestions were based on observations that spontaneous BOLD signal fluctuations contribute significantly to network connectivity estimates but do not always encompass whole networks or nodes. Rather, clusters of coherently active voxels forming transiently synchronized subnetworks resulted. Furthermore, tasks can significantly alter the number of localized spontaneous BOLD signals. From these observations, the picture emerged that large-scale networks are manifestations of smaller, transiently synchronizing subnetworks of voxels whose coherent activity dynamics give rise to spontaneous BOLD signals. Recent fMRI studies demonstrated that the dynamics of spontaneous brain activities and the dynamics of their functional interconnections show similar spatial patterns, suggesting that they are associated with each other. Thus, Fu et al. [176] characterized local BOLD dynamics and dFC in the resting state and studied their interregional associations. Again, dFCs were estimated employing the aforementioned sliding window correlation technique, and BOLD dynamics were quantified via the temporal variability of the BOLD signal. BOLD dynamics and dFC indeed exhibited similar spatial patterns, and they were significantly associated across brain regions. Interestingly, intra- and internetwork connectivities were either positively or negatively correlated with the BOLD signal and exhibited spatially heterogeneous patterns. These associations either conveyed related or distinct information pointing towards underlying mechanisms involved in the coordination and coevolution of brain activity.

Although an increasing number of dynamic FC studies appeared in the first decade of the new millennium, Thompson et al. [177] noted that only a few investigations used small enough time scales to infer single subjects' behaviors. While studying the interaction between the DMN and task-positive networks within a psychomotor vigilance task, they evaluated correlations between the two networks' signals within a time window of 12.3 s, centered at each peristimulus time interval. In addition, correlations were also computed within entire resting-state fMRI runs from the same subjects. These correlation measures were compared to time lags of response signals, both intra- and interindividually. Generally, significant anticorrelation was related to shorter response time lags interindividually, while single subjects showed this behavior only 4–8 s before the detected target. Hence, studies of the relation between functional networks and behavior are valid only on short time scales and need to take into consideration the inter- as well as intraindividual variability. These early findings of studies devoted to dynamic FC were summarized and evaluated by Hutchison et al. [178].

Considering that variability of neural activity is a hallmark of intrinsic connectivity networks identified by rs-fMRI, Jones et al. [128] hypothesized that the variability, rather than representing noise, is also related to the nonstationary nature of those networks, switching between various connectivity states over time. The authors noted that this variability has hampered efforts to define a robust metric of connectivity that could be used as a biomarker for neurologic illness. Employing gICA and a large cohort of 892 older subjects, 68 functional ROIs were defined, and, for each subject, a dynamic graphical representation of brain connectivity was constructed within a sliding window approach to demonstrate the nonstationary nature of the brain's modular organization. When comparing dwell time in strong subnetworks of the DMN of a group of subjects suffering from Alzheimer's dementia with a healthy control group, it was concluded that connectivity differences between these groups are due to dwell time differences in DMN subnetwork configurations rather than steady-state connectivity.
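
To make the notion of dwell time concrete, the following minimal sketch computes the mean dwell time per connectivity state from a sequence of state labels, one label per sliding window. It assumes that each window has already been assigned to a discrete state (for instance, by clustering the windowed connectivity matrices, which is an assumption here and not necessarily the procedure of the cited study) and that the sequence is non-empty; the function name `mean_dwell_times` and the example labels are hypothetical.

```python
import numpy as np

def mean_dwell_times(states, n_states):
    """Mean number of consecutive windows spent in each state per visit."""
    states = np.asarray(states)
    # Indices at which a new run of identical labels begins.
    run_starts = np.flatnonzero(np.r_[True, states[1:] != states[:-1]])
    # Length of each run, counted in windows.
    run_lengths = np.diff(np.r_[run_starts, states.size])
    run_labels = states[run_starts]
    dwell = np.full(n_states, np.nan)   # NaN marks states that are never visited
    for k in range(n_states):
        visited = run_labels == k
        if np.any(visited):
            dwell[k] = run_lengths[visited].mean()
    return dwell

# Hypothetical label sequence: runs of state 0 (3 windows), 1 (2), 0 (1), 2 (4).
labels = [0, 0, 0, 1, 1, 0, 2, 2, 2, 2]
dwell = mean_dwell_times(labels, n_states=3)
```

Two groups can show similar session-averaged connectivity yet differ in such dwell times, which is the kind of distinction drawn in the text.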