Abstract

Photoacoustic tomography (PAT) has demonstrated versatile biomedical applications. Currently, PAT has two major implementations: photoacoustic computed tomography (PACT) and photoacoustic microscopy (PAM). PACT employs a multi-element ultrasonic array for parallel detection, which is complex and expensive. PAM requires point-by-point scanning with a single-element detector, which has a limited imaging throughput. The trade-off between the system cost and throughput demands a new imaging method. To this end, we have developed photoacoustic topography through an ergodic relay (PATER). PATER can capture a widefield image with only a single-element ultrasonic detector upon a single laser shot. This protocol describes the detailed procedures for PATER system construction, including component selection, equipment setup, and system alignment. A step-by-step guide for in vivo imaging of a mouse brain is provided. Data acquisition, image reconstruction, and troubleshooting procedures are also elaborated. It currently takes ~130 min to carry out this protocol, including ~60 min for both calibration and snapshot widefield data acquisition using a laser with a 2 kHz pulse repetition rate. PATER offers low-cost snapshot widefield imaging of fast dynamics, such as visualizing blood pulse wave propagation and tracking melanoma tumor cell circulation in mice in vivo. PATER is envisaged to have wide biomedical applications, such as being used as a wearable device to monitor human vital signs.

Introduction

Photoacoustic tomography (PAT), also known as optoacoustic tomography, is a noninvasive hybrid imaging technology that combines the high spatial resolution inside deep tissue of ultrasound imaging with the molecular contrast of optical imaging.1–3 Capitalizing on the photoacoustic (PA) effect, in PAT, the optical energy is absorbed by chromophores in the biological tissue and re-emitted as ultrasonic waves (referred to as PA waves).4,5 By detecting the PA waves, tomographic images with optical contrasts can be generated.6,7 Taking advantage of the weak scattering of ultrasound in soft tissue, PAT achieves superb resolution at depths.8 Currently, PAT has two major implementations based on the image-formation method: inverse reconstruction based photoacoustic computed tomography (PACT) and focus-scanning based photoacoustic microscopy (PAM).9,10 By employing a multi-element ultrasonic array, PACT can detect PA signals from a large field of view (FOV) simultaneously and thus can visualize biological dynamics in deep tissue.11–14 However, the multi-channel detection and digitization system in PACT is complex and expensive.15–20 In addition, PACT systems are often bulky and not suitable for portable or wearable applications.21–25 PAM employs point-by-point scanning of a single-element ultrasonic transducer to cover a large FOV, which limits the imaging throughput.26–28 Thus, we encounter a trade-off between the system cost and imaging throughput, which demands a new imaging method.

To address this problem, photoacoustic topography through an ergodic relay (PATER) was recently developed; it requires only a single-element transducer to capture snapshot widefield images.10 Topography refers to projection imaging of features along the depth direction. The acoustic ergodic relay (ER) is a waveguide that permits an acoustic wave originating from any point to reach any other point with distinct reverberant characteristics.10,29,30 The ER can therefore act as an encoder that converts a one-dimensional (1D) depth image (A-line) into a unique temporal sequence. Because each temporal signal is unique, PA waves from the entire imaging volume can be detected in parallel through the ER and then decoded mathematically to reconstruct 2D projection images.

Overview of the procedure

This protocol provides a comprehensive guideline for constructing the PATER system and applying it for in vivo experiments. We first introduce the PATER system, including the overview of the system, key components, image acquisition, and image reconstruction. We also describe the step-by-step procedures to build and align the PATER system (see Equipment setup). PATER has two image acquisition modes: the calibration mode and the widefield mode. During the in vivo experiment, we implement the calibration imaging of the animal (steps 1–17) first to obtain the system impulse responses. Then the widefield measurement (steps 18–27) captures biological dynamics of the animal. Troubleshooting procedures for in vivo measurements are also provided.

Advantages, limitations, and applications of PATER

PATER can detect PA signals from a large imaging volume in parallel with a single-element detector, enabling snapshot widefield imaging. We have demonstrated that PATER can provide widefield imaging at a frame rate of 2 kHz. We have applied PATER to image cerebral hemodynamic responses, visualize blood pulse wave propagation, monitor circulating tumor cells, and perform super-resolution imaging in vivo.10 Combined with multifocal illumination, PATER improves the spatial resolution to 13 μm and provides 400× faster imaging than conventional PAM.31 Moreover, because PATER employs only a single-element ultrasonic transducer for parallel detection of widefield signals, the system is greatly simplified, smaller, and less expensive. The demonstration of high-speed matching of vascular patterns shows its potential for biometric authentication on portable and wearable devices.32

Currently, PATER can image biological dynamics in areas that show high optical absorption during calibration; however, it may not accurately detect features that appear in new areas after calibration. PATER employs focused light for calibration, which restricts the imaging depth to within the ~1 mm optical diffusion limit inside biological tissue. In addition, PATER’s calibration is object specific: the boundary conditions between the object and the ER should remain unchanged throughout the calibration and widefield measurements.

PATER system

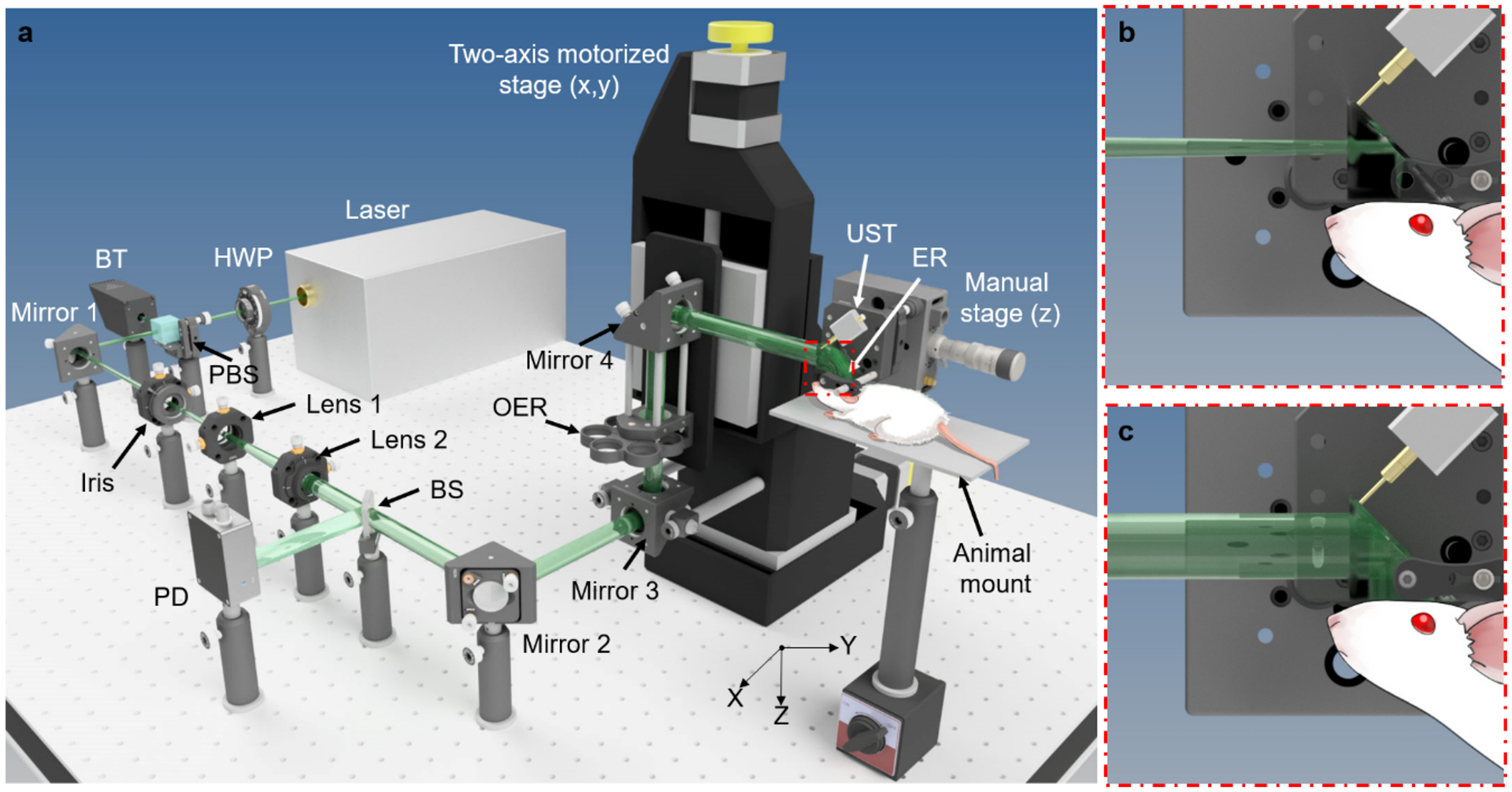

The PATER system (Fig. 1a) consists of four major subsystems: an optical system, a mechanical scanning system, an ultrasound detection system, and a data acquisition and control system. The motorized scanner, the data acquisition system, and the laser are synchronized. The control system sends signals to trigger laser firing, motor scanning, and data acquisition.

Figure 1.

Schematic of the PATER system. Drawn not to scale for clarity. (a) Layout of the PATER system. BT, beam trap; BS, beam sampler; ER, ergodic relay; HWP, half-wave plate; OER, optical element rotator; PBS, polarized beam splitter; PD, photodiode; UST, ultrasonic transducer. (b) Close-up of the calibration illumination. (c) Close-up of the widefield illumination.

The optical system comprises a laser system and optics for beam shaping. The laser system produces high-repetition-rate short laser pulses for PA excitation. The current PATER system uses a 532 nm Q-switched laser and a tunable dye laser. The Q-switched laser has a pulse duration of ~5 ns and a pulse repetition rate of 2 kHz. The tuning range of the dye laser is 560–690 nm, which enables imaging of various chromophores (e.g., melanin, oxy-hemoglobin, and deoxy-hemoglobin) in vivo. In this protocol, we mainly focus on an application employing the 532 nm illumination, and thus the dye laser is omitted in Fig. 1a. The laser system should produce sufficient energy for PA excitation. Currently, we deliver ~2 mJ per pulse to the tissue surface for widefield imaging (illumination area of ~1 cm², giving a fluence of ~2 mJ/cm²). The laser light first passes through a half-wave plate (HWP) and a polarized beam splitter (PBS). By rotating the HWP, users can adjust the laser energy delivered to the object. Then the laser beam goes through an iris, which controls the incident beam diameter. A lens pair (Lens 1 and Lens 2) after the iris expands the beam. The expanded beam passes through a beam sampler (BS), which reflects a small fraction of the energy to a photodiode (PD) for energy fluctuation correction. Then the beam reaches an optical element rotator (OER), which holds a focusing lens and an engineered diffuser. When the focusing lens is aligned in the optical path, it focuses the laser beam onto the bottom surface of the prism for calibration illumination (Fig. 1b). After the engineered diffuser is switched into the optical path, a broad laser beam uniformly illuminates the bottom surface of the prism for widefield illumination (Fig. 1c).

The mechanical scanning system primarily consists of a two-axis (x- and y-axis) motorized translational stage and a manual (z-axis) stage (Fig. 1a). The motorized stage can be program-controlled to translate Mirror 3, OER, and Mirror 4 to achieve two-dimensional (x- and y-axis) scanning of the optical focus over the imaging surface of the ER.

For ultrasound detection, PATER currently employs an unfocused pin transducer (XMS-310, Olympus Corporation; or VP-0.5-20MHz, CTS Electronics, Inc.). In this protocol, we use the XMS-310 (10 MHz central frequency, 100% one-way bandwidth, and 2 mm element size) for our experimental procedures. Two low-noise amplifiers are cascaded to amplify the detected PA amplitude by 48 dB.

A dual-channel high-sampling-rate digitizer is employed for data acquisition. The digitizer has a maximum sampling rate of 500 MS/s and a dynamic range of 12 bits. One input channel of the digitizer is connected to the second-stage amplifier to digitize the detected PA signals. The other input channel is connected to a photodiode to measure the laser pulse energy fluctuation for compensation.
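The protocol does not spell out how the photodiode channel is used for compensation. One plausible approach, sketched below under the assumption that the PA amplitude scales linearly with pulse energy, is to rescale each PA record by the ratio of the mean photodiode reading to that pulse's reading. The function name and the idea of reducing each photodiode trace to a single reading (for example, its peak) are illustrative assumptions, not part of the original acquisition software.

```python
def compensate_pulse_energy(pa_records, pd_readings):
    """Rescale PA records to a common pulse energy (hypothetical helper).

    pa_records:  list of PA time traces, one per laser pulse.
    pd_readings: one photodiode reading per pulse (e.g., trace peak).
    Assumes PA amplitude is proportional to pulse energy.
    """
    mean_pd = sum(pd_readings) / len(pd_readings)
    return [
        [value * mean_pd / pd for value in record]
        for record, pd in zip(pa_records, pd_readings)
    ]


# Two pulses of the same absorber; the second pulse is 10% stronger.
records = [[1.0, -1.0, 0.5], [1.1, -1.1, 0.55]]
readings = [1.0, 1.1]
corrected = compensate_pulse_energy(records, readings)
print(corrected)
```

After the correction, both records refer to the same (mean) pulse energy, so the remaining differences between them reflect the object rather than the laser.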

Acoustic ER

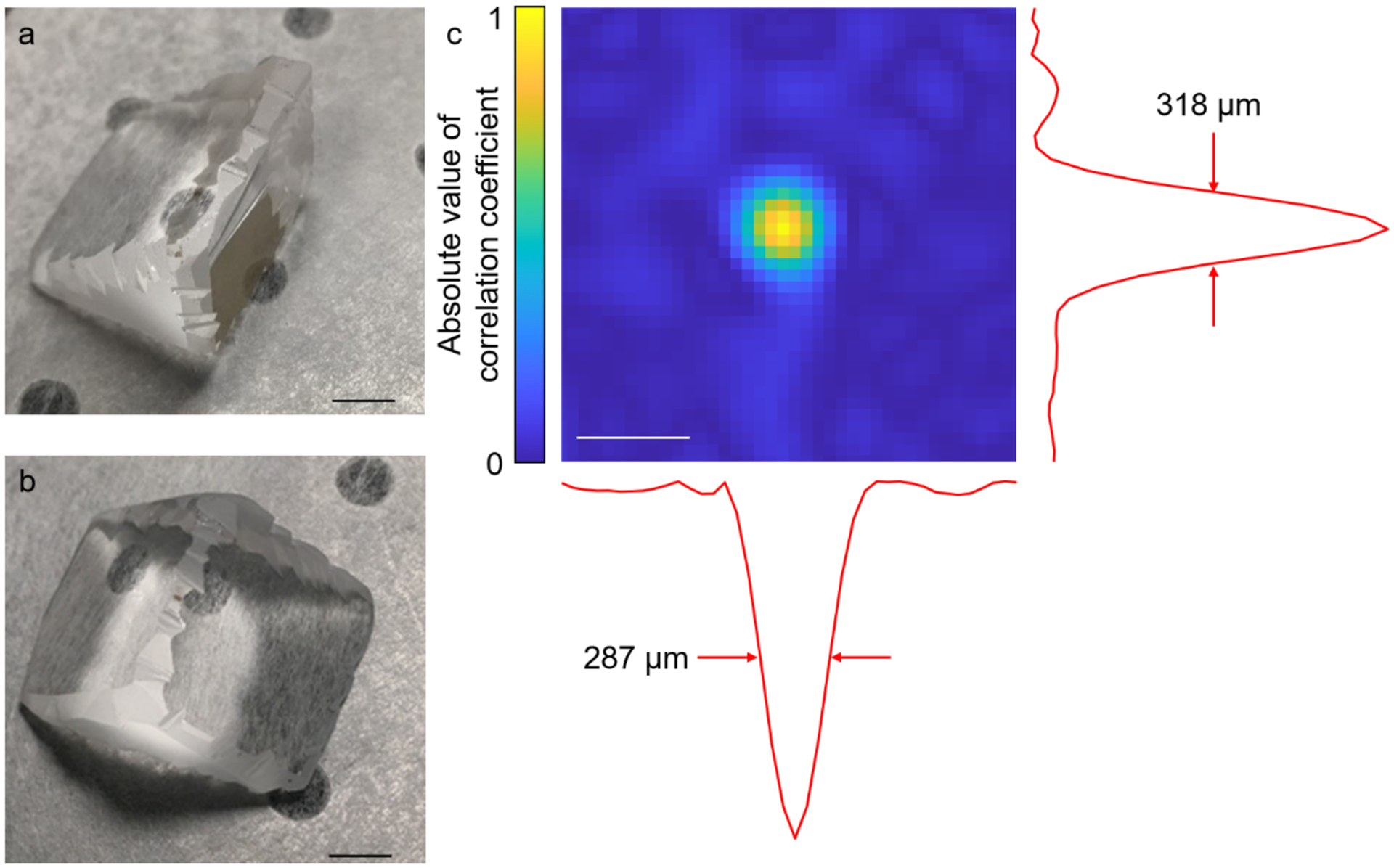

The acoustic ER is the crucial enabling element for PATER. A perfect ER necessitates high acoustic reflectivity at the interfaces, low acoustic attenuation inside, and strict geometric asymmetry about the contact point of the detector.10 In addition, chaotic boundaries are required to guarantee ergodicity.33 Optical transparency is also needed for optical illumination. A UV fused silica based right-angle prism with chaotic boundaries, satisfying both the optical and acoustic requirements, can serve as the acoustic ER. It has an acoustic reflectivity of 99.99% by amplitude at the prism–air interface, and the acoustic attenuation inside is negligible over the detectable pathlength range.34 We use a low-speed diamond saw to grind all nine edges of the prism following a sawtooth pattern to obtain chaotic boundaries (Fig. 2a and b), which guarantees the ergodicity and permits the diffraction-limited spatial resolution of PATER (Fig. 2c). Three clear apertures of the prism are used for optical transmission and acoustic detection (Fig. 1b and c). One of the two leg apertures is the optical incident aperture; the other is the imaging surface and the acoustic incident aperture, which is in contact with the objects. The hypotenuse aperture is the acoustic detection surface, where the ultrasonic transducer is placed. It also reflects the incident light to the imaging surface for illumination.
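The quoted 99.99% amplitude reflectivity can be checked with the normal-incidence reflection formula for two media with acoustic impedances Z1 and Z2, |R| = |Z2 − Z1| / (Z2 + Z1). The sketch below uses approximate textbook material values for fused silica and air; these numbers are assumptions for illustration and are not taken from this protocol.

```python
def amplitude_reflectivity(z_relay, z_outside):
    """Magnitude of the normal-incidence pressure reflection coefficient."""
    return abs(z_outside - z_relay) / (z_outside + z_relay)


# Assumed textbook values (not from the protocol):
RHO_SILICA = 2200.0  # density of fused silica, kg/m^3
C_SILICA = 5900.0    # longitudinal sound speed in fused silica, m/s
Z_SILICA = RHO_SILICA * C_SILICA  # acoustic impedance, ~1.3e7 Rayl
Z_AIR = 413.0        # acoustic impedance of air, Rayl

r_air = amplitude_reflectivity(Z_SILICA, Z_AIR)
print(f"fused silica / air amplitude reflectivity: {r_air:.4%}")
```

With these values the result rounds to 99.99%, in line with the figure stated above.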

Figure 2.

The ER and the spatial resolution of PATER. (a, b) Photos of the ER, which is based on a UV fused silica right-angle prism. The edges are ground to ensure the ergodicity of the prism. Scale bar, 5 mm. (c) Cross-correlation map generated from the correlation coefficients between the calibration signal of the center pixel and the signals of all the pixels in the FOV, showing the spatial resolution of PATER. Scale bar, 500 μm.
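A cross-correlation map of the kind shown in Fig. 2c can be sketched as follows, assuming that the "correlation coefficient" is the zero-lag Pearson coefficient between the reference pixel's calibration signal and every other pixel's signal. The function names are illustrative, and the pixels are stored as a flat list rather than a 2D grid.

```python
import math


def correlation_coefficient(a, b):
    """Zero-lag Pearson correlation coefficient of two equal-length signals."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    numerator = sum(x * y for x, y in zip(da, db))
    denominator = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return numerator / denominator


def correlation_map(signals, ref_index):
    """Correlate the reference pixel's signal with every pixel's signal."""
    reference = signals[ref_index]
    return [correlation_coefficient(reference, sig) for sig in signals]


# Toy calibration signals for four pixels (four time samples each).
signals = [
    [1.0, 2.0, 3.0, 4.0],
    [2.0, 4.0, 6.0, 8.0],
    [4.0, 3.0, 2.0, 1.0],
    [1.0, -1.0, 1.0, -1.0],
]
cc_map = correlation_map(signals, ref_index=0)
print(cc_map)
```

In a real data set, the width of the peak around the reference pixel in this map indicates how far apart two points must be before their encoded signals become distinguishable.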

Ultrasonic transducer

Given that the ER is chaotic, PATER can provide acoustic diffraction-limited resolution for widefield imaging.33 The spatial resolution of the PATER image is determined by the frequency bandwidth of the transducer: the wider the bandwidth, the higher the resolution. Users should choose the transducer according to the features to be imaged. An unfocused pin ultrasonic transducer is selected for acoustic detection. The smaller the sensing element, the wider the acoustic receiving angle, and thus the more effectively the transducer picks up the PA signals from the ER. The transducer should be placed at a corner of the detection surface of the ER to avoid geometric symmetry. To facilitate acoustic transmission, we apply ultrasound gel between the ER and the object and add a layer of high acoustic-impedance ultrasonic couplant between the ER and the transducer.

Image acquisition

In the calibration mode, focused laser illumination is employed for raster scanning (Fig. 1b). Because the laser pulse width (~5 ns) is much shorter than the central period of the ultrasonic detector (~100 ns), and the focused beam diameter (~10 μm) is much smaller than the central acoustic wavelength (~600 μm), we can approximate the PA wave excited by each laser pulse as a spatiotemporal delta function. Therefore, we can quantify the system impulse response at each pixel by raster scanning the laser focus over the FOV. In the widefield mode, the beam passing through an engineered diffuser uniformly illuminates the object (Fig. 1c). The PA signals from all pixels of the entire imaging volume are recorded in parallel, enabling snapshot widefield imaging. We can solve an inverse problem to reconstruct widefield images, which map the optical absorption changes of the object.
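As a quick numerical check of the two scale separations behind the delta-function approximation, take the 10 MHz central frequency of the XMS-310 and assume a longitudinal sound speed of roughly 5.9 mm/μs in fused silica (a textbook value, not stated in this protocol):

$$ T_c = \frac{1}{f_c} = \frac{1}{10\ \mathrm{MHz}} = 100\ \mathrm{ns}, \qquad \lambda_c = \frac{c}{f_c} \approx \frac{5.9\ \mathrm{mm/\mu s}}{10\ \mathrm{MHz}} \approx 590\ \mathrm{\mu m} $$

$$ \frac{\tau_{\mathrm{pulse}}}{T_c} \approx \frac{5\ \mathrm{ns}}{100\ \mathrm{ns}} = 0.05, \qquad \frac{d_{\mathrm{focus}}}{\lambda_c} \approx \frac{10\ \mathrm{\mu m}}{590\ \mathrm{\mu m}} \approx 0.017 $$

Both ratios are well below one, which is what the approximation requires.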

Motor-controlling and data acquisition parameters

In the calibration mode, the motor-controlling parameters include the starting point, scanning step sizes, and scanning ranges. The scanning ranges should cover the desired FOV. In general, the scanning step size should be less than or equal to half of the resolution of widefield imaging. Using a finer step size improves the calibration image quality but prolongs the scanning time. The data acquisition parameters include the trigger delay, sampling rate, data length, number of averages, and data acquisition mode. The trigger delay is ~2 μs, so that acquisition starts slightly before the first PA wave arrives at the ultrasonic transducer. The sampling rate is typically ~3–4 times the upper cutoff frequency of the transducer, which satisfies the Nyquist sampling requirement. For example, we used sampling rates of 50 MS/s and 100 MS/s for transducers with upper cutoff frequencies of 15 MHz and 25 MHz, respectively. We set the data length to 164 μs (acoustic transition time). The higher the SNR of the calibration signal, the better the reconstructed widefield images. To improve the SNR of the calibration signal, we typically repeat the acquisition 100 times at each scanning position and use the averaged signal as the calibrated impulse response. To minimize the motor backlash, we choose unidirectional data acquisition; i.e., we acquire data only while the motor moves forward and pause the acquisition while the motor returns. In the widefield mode, motor scanning is disabled. The data acquisition parameters are the same as those in the calibration mode. Data averaging is optional, and the imaging frame rate can reach 2 kHz.
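The numbers in this paragraph can be cross-checked with a few lines of arithmetic. The sketch below computes the oversampling ratio for the two quoted transducers, the number of samples in a 164 μs record at 50 MS/s, and the SNR gain expected from 100-time averaging. The averaging gain assumes uncorrelated, zero-mean noise, which is a standard assumption rather than a statement from this protocol; the function names are illustrative.

```python
import math


def oversampling_ratio(sampling_rate_hz, upper_cutoff_hz):
    """Sampling rate relative to the transducer's upper cutoff frequency."""
    return sampling_rate_hz / upper_cutoff_hz


def samples_per_record(sampling_rate_hz, record_length_s):
    """Number of digitized samples in one acquisition record."""
    return round(sampling_rate_hz * record_length_s)


def averaging_snr_gain_db(n_averages):
    """Amplitude SNR gain from averaging, assuming uncorrelated noise."""
    return 20.0 * math.log10(math.sqrt(n_averages))


ratio_15mhz = oversampling_ratio(50e6, 15e6)   # 50 MS/s for a 15 MHz cutoff
ratio_25mhz = oversampling_ratio(100e6, 25e6)  # 100 MS/s for a 25 MHz cutoff
n_samples = samples_per_record(50e6, 164e-6)   # 164 us record at 50 MS/s
gain_db = averaging_snr_gain_db(100)           # 100-time averaging

print(f"oversampling: {ratio_15mhz:.2f}x and {ratio_25mhz:.2f}x")
print(f"samples per record: {n_samples}")
print(f"averaging gain: {gain_db:.1f} dB")
```

Both ratios exceed the factor of 2 required by the Nyquist criterion, with margin for the transducer's roll-off above the nominal cutoff.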

Image reconstruction

A widefield PA signal is a linear combination of the calibrated responses from all pixels:

$$ s(t) = \sum_{i=1}^{N_p} k_i(t)\, P_i \tag{1} $$

where s is the widefield PA signal, t time, i the pixel index, Np the total number of pixels, Pi the root mean square (RMS) PA amplitude, and ki the normalized impulse response from the calibration. ki is computed for each time point tj via ki(tj) = Ki(tj)/RMSi, where Ki(t) is the raw calibration signal. The RMS value of Ki(t) for the ith pixel can be calculated as

$$ \mathrm{RMS}_i = \sqrt{\frac{1}{N_t} \sum_{j=1}^{N_t} K_i(t_j)^2} \tag{2} $$

where Nt is the number of sampled time points and t time. A calibration image is a 2D density plot of RMSi over all pixels.
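The normalization described above can be sketched as follows: each raw calibration record Ki(tj) is divided by its own RMS value, and the RMS values themselves form the calibration image. The function names and the list-of-lists data layout are illustrative assumptions; the sketch also assumes every pixel has a nonzero RMS value.

```python
import math


def rms(signal):
    """Root mean square of one sampled record."""
    return math.sqrt(sum(v * v for v in signal) / len(signal))


def normalize_calibration(raw_signals):
    """Return (rms_values, normalized_signals) for per-pixel raw records.

    raw_signals[i][j] holds K_i(t_j); normalized[i][j] holds k_i(t_j).
    Assumes every pixel has a nonzero RMS value.
    """
    rms_values = [rms(sig) for sig in raw_signals]
    normalized = [
        [v / r for v in sig] for sig, r in zip(raw_signals, rms_values)
    ]
    return rms_values, normalized


# Two toy pixels with four time samples each.
raw = [[3.0, -3.0, 3.0, -3.0], [0.0, 2.0, 0.0, -2.0]]
rms_values, normalized = normalize_calibration(raw)
print(rms_values)   # pixel values of the calibration image
print(normalized)   # unit-RMS impulse responses
```

After normalization every impulse response has unit RMS, so the coefficients Pi in Eq. (1) carry the amplitude information.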

After data acquisition, all the signals are digitized, and we can recast Eq. (1) to matrix form:

$$ \mathbf{s} = \mathbf{K}\mathbf{P} \tag{3} $$

where K = [k1, …, kNp] is the system matrix, whose ith column is the normalized impulse response of the ith pixel. We can reconstruct the widefield image P from the signal s by solving Eq. (3) with a two-step iterative shrinkage/thresholding (TwIST) algorithm.35 We solve Eq. (3) for P as a minimizer of the objective function:

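$$ \hat{\mathbf{P}} = \arg\min_{\mathbf{P}} \left\| \mathbf{s} - \mathbf{K}\mathbf{P} \right\|^2 + 2\lambda\, \Phi_{\mathrm{TV}}(\mathbf{P}) \tag{4} $$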

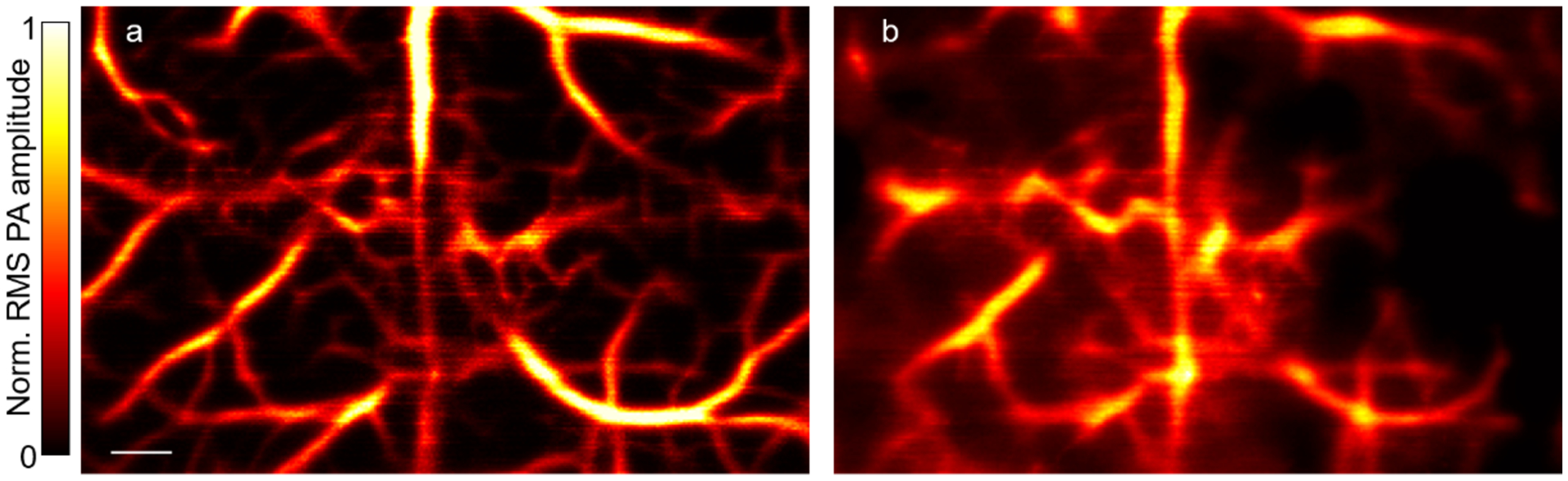

where ΦTV(P) is the total variation of P, and λ the regularization parameter. To minimize computational instability and ensure reconstruction image quality, pixels with RMS values lower than twice (6 dB) the noise amplitude are excluded from the system matrix K. Examples of the calibration and widefield images of the mouse cortical vasculature are shown in Fig. 3; the raw data can be found in Supplementary Data. The reconstruction program is provided as an example (Supplementary Software); however, the regularization parameter may need to be readjusted depending on the application.
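To make the structure of the inverse problem concrete, the sketch below simulates a widefield signal from a small system matrix and recovers the pixel amplitudes. It is not the TwIST solver used in the protocol: it substitutes plain projected gradient descent with a non-negativity constraint and omits the total-variation term, so it illustrates only the data-fidelity part of the problem. The pixel-selection helper mirrors the 2× noise threshold described above. All function names and the random test data are assumptions for illustration.

```python
import random


def forward(K, P):
    """Widefield signal s(t_j) = sum_i k_i(t_j) * P_i (K[i] is pixel i)."""
    n_t = len(K[0])
    return [sum(K[i][j] * P[i] for i in range(len(K))) for j in range(n_t)]


def select_pixels(rms_values, noise_amplitude, factor=2.0):
    """Indices of pixels whose calibration RMS is at least factor x noise."""
    return [i for i, r in enumerate(rms_values) if r >= factor * noise_amplitude]


def reconstruct(K, s, n_iter=500):
    """Minimize ||s - K P||^2 subject to P >= 0 by projected gradient descent.

    Stand-in for TwIST; no total-variation regularization is applied.
    """
    n_p, n_t = len(K), len(s)
    # Safe step size: the largest eigenvalue of K^T K is bounded by the
    # sum of all squared entries of K.
    step = 1.0 / sum(v * v for row in K for v in row)
    P = [0.0] * n_p
    for _ in range(n_iter):
        residual = [m - sj for m, sj in zip(forward(K, P), s)]
        grad = [sum(K[i][j] * residual[j] for j in range(n_t)) for i in range(n_p)]
        P = [max(0.0, p - step * g) for p, g in zip(P, grad)]
    return P


# Synthetic, noise-free example: 4 pixels, 200 time samples.
random.seed(0)
n_pixels, n_samples = 4, 200
K = [[random.gauss(0.0, 1.0) for _ in range(n_samples)] for _ in range(n_pixels)]
P_true = [1.0, 0.0, 0.5, 2.0]
s = forward(K, P_true)
P_hat = reconstruct(K, s)
print([round(p, 4) for p in P_hat])

# Pixel selection with a noise amplitude of 1.0 (arbitrary units).
kept = select_pixels([0.5, 3.0, 1.9, 2.0], noise_amplitude=1.0)
print(kept)
```

In practice the measured signals are noisy and the system matrix is far larger, which is where the TV regularization and the choice of λ in Eq. (4) become important.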

Figure 3.

PATER of the mouse cortical vasculature in vivo. (a) Calibration image of mouse brain vasculature. Norm., normalized. Scale bar, 500 μm. (b) Reconstructed widefield image of the calibrated area. All animal procedures were approved by the Institutional Animal Care and Use Committee of California Institute of Technology.

Materials

Biological materials

Mice.

For brain imaging experiments, we used 6–12-week-old Swiss Webster mice (Hsd: ND4, 20–30 g, Envigo, cat. no. 032). !CRITICAL Wild-type C57BL/6J mice (The Jackson Laboratory, cat. no. 000664) can also be used for brain imaging. Mice aged 6–12 weeks (no specific sex requirement) are preferred. For imaging ears or other body sites, we used 8–12-week-old nude mice (Hsd: Athymic Nude-Foxn1nu, 20–30 g, Envigo, cat. no. 069).

!CAUTION All experiments using animals must be carried out in conformity with the applicable national guidelines and regulations. The animal procedures described within this protocol were approved by the Institutional Animal Care and Use Committee of California Institute of Technology (IA20–1737).

Reagents

Deionized water

Ultrasound gel (MFIMedical, cat. no. PKR-03–02) to facilitate acoustic coupling between objects and the ER.

High acoustic-impedance ultrasonic couplant (Berg Engineering & Sales Company, Inc., cat. no. ECHO-Z-PLUS-F-04) to facilitate acoustic coupling between the ER and the ultrasonic transducer.

Isoflurane (Zoetis, cat. no. 10015516)

Hair-removing lotion (Nair, CVS, cat. no. 339826)

Ophthalmic ointment (Pharmaderm, cat. no. NDC 17033-211-38)

Equipment

Pulsed pump laser (INNOSLAB IS8II-E, Edgewave GmbH, 532 nm, 5-ns pulse width, 2-kHz pulse repetition rate) !CAUTION Class IV laser. Wear laser protection goggles during operation.

Laser line mirror (NB1-K12, Thorlabs, Inc.)

Half-wave plate (WPH10M-532, Thorlabs, Inc.)

Polarized beam splitter (10FC16PB.3, Newport Corporation) in combination with the half-wave plate to adjust laser energy.

Beam trap (BT610, Thorlabs, Inc.)

Beam expanding lens pair (LC1715-A, f = −50.0 mm, and LA1433-A, f = 150.0 mm, Thorlabs, Inc.)

Beam sampler (BSF10-A, Thorlabs, Inc.)

Photodiode (APD430A2, Thorlabs, Inc.)

Focusing lens (LA1509, f = 100.0 mm, Thorlabs, Inc.)

Engineered diffuser (EDC-5-A-1r, RPC Photonics, Inc.) to homogenize the laser light for widefield illumination.

Right-angle prism (PS615, Thorlabs, Inc.)

Digital low-speed diamond saw (SYJ-150, MTI Co.)

Beam profiler (SP620U, OPHIR)

Motorized linear translation stage (PLS-85, PI GmbH)

Motor driver (CW215, Circuit Specialists, Inc.)

Manual linear translation stage (NFL5D, Thorlabs, Inc.)

Ultrasonic transducer (XMS-310, Olympus Corporation; or VP-0.5-20MHz, CTS Electronics, Inc.)

Wideband signal amplifier (ZFL-500LN+, Mini-Circuits Corporation)

Computer

Dual-channel digitizer (ATS9350, Alazar Technologies Inc.)

Multifunction I/O device (PCIe-6321, National Instruments) to control the laser, the scanner, and the data acquisition system.

Hair trimmer (Model 9854-600, Wahl Clipper Corporation)

Thermocouple (SA1-E, OMEGA Engineering, Inc.)

Heater (SRFG-303/10, OMEGA Engineering, Inc.)

Temperature controller (Dwyer 32B-33, Cole-Parmer Instrument Company, LLC.) in combination with the thermocouple and the heater to regulate the animal temperature.

Breathing anesthesia system (E-Z Anesthesia; E-Z Systems Corporation)

Polyethylene membrane (Cling Wrap, Glad)

Double-sided tape (Scotch tape, 3M)

Paper tape (3M)

Silicone tubing (HelixMark, VWR International, LLC.)

Scientific software (LabVIEW, National Instruments; MATLAB, MathWorks) for developing the data acquisition program and image reconstruction algorithm

Equipment setup

!CRITICAL The experimental setup shown in Fig. 1 is required for performing PATER imaging. In addition to the setup, good alignment is essential for high-quality PATER imaging. The procedures below should be followed to build and align the PATER system.

Have the vendor set up the optical table, as shown in Fig. 1a.

Set up a computer with both the dual-channel digitizer and the multifunction I/O device installed.

Have the vendor install the laser, as shown in Fig. 1a.

Set up the HWP and the PBS to adjust the laser power. !CAUTION The beam trap should be installed to absorb the laser beam reflected by the PBS.

Install the iris to control the laser beam diameter. Mirror 1 in Fig. 1a is used to turn the beam direction; it is not an essential part of the system.

After the iris, set up the lens pair (Lens 1 and Lens 2 in Fig. 1a) to expand the laser beam. !CRITICAL The laser beam should coincide with the optical axes of Lens 1 and Lens 2.

Install the BS and the PD to sample the laser beam for energy fluctuation correction. Connect the output of the PD to one of the input channels of the dual-channel digitizer.

Following Fig. 1a, install Mirrors 2 to 4, the two-axis (x- and y-axis) motorized translational stage, and the manual (z-axis) stage. !CRITICAL Mirrors 2 to 4 should be adjusted such that the incident and reflected laser beams are perpendicular to each other.

Set up the OER between Mirror 3 and Mirror 4. Install the focusing lens and the engineered diffuser on the OER. !CRITICAL When switched into the optical path, the optical axes of the focusing lens and the engineered diffuser should coincide with the laser beam.

Use a low-speed diamond saw to grind all nine edges of a UV fused silica based right-angle prism to obtain the acoustic ER (Fig. 2a and b). Whereas chaotic boundaries and ergodicity can be obtained in a variety of patterns, we choose to grind the prism edges following a sawtooth pattern, which has been experimentally proven effective (Fig. 2c). There is no requirement on the flatness of the ground surfaces.

Install the ER on the z-axis stage (Fig. 1a).

Place the ultrasonic transducer at a corner of the detection surface of the ER (the hypotenuse surface of the prism in Fig. 1b). !CRITICAL Make sure that the transducer is in good contact with the ER and the axis of the transducer is perpendicular to the ER detection surface.

Connect the output of the transducer to the input of the cascaded amplifiers; and connect the output of the cascaded amplifiers to the other input channel of the dual-channel digitizer.

Set up the animal holder for animal imaging in vivo. The 3D models of the animal holder components can be found in Supplementary Information.

Start the calibration mode by rotating the OER to switch the focusing lens into the optical path (Fig. 1b). !CAUTION In the calibration mode, a tight optical focus is scanned over the object to measure the impulse response of each pixel.

Employ a beam profiler to check the optical alignment on the imaging surface of the ER (the bottom surface of the prism in Fig. 1b). Adjust the distance between the beam profiler and the ER to overlap the imaging surface of the ER and the focal plane of the beam profiler.

Manually adjust the z-axis stage (Fig. 1a) to locate a position where the laser beam has the smallest diameter, indicating the laser beam is focused on the imaging surface of the ER.

Move the x-axis stage across the whole scanning range and check if the laser beam is in focus for the whole range. If not, adjust Mirrors 2 and 3 (Fig. 1a) to ensure normal incidence of the laser beam into the focusing lens.

Move the y-axis stage across the whole scanning range and check if the laser beam is in focus for the whole range. If not, adjust Mirrors 3 and 4 (Fig. 1a) to ensure normal incidence of the laser beam into the ER.

Repeat the two preceding steps (the x-axis and y-axis focus checks) until the laser beam is in focus over the entire imaging surface. Figure 2c shows the experimentally measured resolution of the PATER system after it is well aligned.

When imaging animals in vivo, move the z-axis stage to transfer the laser focus into the tissue (~300–400 μm in depth) to make sure the focal zone of the calibration light covers the region of interest (ROI) along the depth direction.

Start the widefield mode by rotating the OER to switch the engineered diffuser into the optical path (Fig. 1c). Check if the broad laser beam covers the FOV. If not, adjust the linear translation stages to ensure the light uniformly illuminates the entire FOV. !CAUTION Users should also make sure that the widefield illumination area lies within, or coincides with, the calibrated area.

Procedure

!CAUTION For illustration, in vivo imaging of the mouse cortical vasculature (ND4, Swiss Webster, bodyweight: 20–30 g) is described. The procedure should be modified accordingly for other imaging applications. All animal procedures described within this protocol were approved by the Institutional Animal Care and Use Committee of California Institute of Technology (IA20-1737).

Warm up the laser 30 min before the experiment.

Set the temperature controller to 38 °C and turn on the heater. Throughout the experiment, the animal body temperature is regulated toward 38 °C.

!CAUTION The operator should wear clean examination gloves, a face mask, and a clean lab coat. Weigh the animal and then place it in an induction chamber connected to an isoflurane vaporizer. Induce anesthesia with a mixture of medical-grade air and 5% vaporized isoflurane (volume concentration) at a flow rate of 1–1.5 L/min for ~5 min. Monitor the anesthetic depth by testing the animal’s response to tail or toe pinches. A lack of response indicates that the animal is adequately anesthetized. Once the mouse is fully anesthetized, transfer it to the animal holder and change the isoflurane concentration to 2% at a flow rate of 1–1.5 L/min through a nosecone.

Trim hair in the ROI (e.g., head) as well as the surrounding area.

Apply hair-removing lotion to the trimmed area, wait for 1–3 min, and then clean with warm water.

Disinfect the depilated skin, then incise the scalp along the midline to expose the skull.

Apply a layer of ultrasound gel (~1–2 mm thick) to the exposed skull.

Transfer the animal with the holder to the imaging table. Loosely fix the body and the four legs onto the holder with paper tape to restrain motion during data acquisition.

Place the ROI under the ER and lift the animal holder until the skull touches the imaging surface of the ER. Gently push the mouse skull against the ER to ensure tight contact between them. !CAUTION Avoid trapping air bubbles in the middle.

Continuously supply a mixture of medical-grade air and 1% isoflurane at a flow rate of 1–1.5 L/min to the animal through the nosecone. !CAUTION Flow rate varies with the bodyweight of the animal. Please refer to the instructions of each specific anesthesia system.

Apply ophthalmic ointment to the corneal surfaces to prevent dryness and cover the eyes to prevent accidental laser damage.

Switch the focusing lens into the optical path, as shown in Fig. 1b, and begin with the calibration mode.

Start the control and data acquisition program in LabVIEW. Turn on the laser, select the low power continuous-wave (CW) mode, and locate the position of the laser spot on the imaging surface. !CAUTION Class IV laser. Wear laser protection goggles during operation. Move the laser spot to the desired location and set the scanning ranges and step sizes of both the x- and y-axis to the designated values to cover the ROI.

Switch the laser to the external triggering mode and start laser firing. !CAUTION The laser pulse energy and the pulse repetition rate should be checked carefully against the applicable laser safety limits.

Execute a quick raster scan with data acquisition.


Check the calibration image of the quick scan to ensure that the SNR is good and the ROI fits in the FOV. If the SNR is low, adjust the laser illumination fluence and repeat. If the ROI is not entirely within the FOV, adjust the scanning ranges, and repeat.

? TROUBLESHOOTING

Set the scanning and averaging parameters (typically 100–200-time averaging at every scanning position) and start the calibration scanning and data acquisition.

Move the laser beam to the center of the ROI after calibration scanning.

Rotate the OER to switch the engineered diffuser into the optical path (Fig. 1c). Set the laser to the CW mode and ensure that the broad laser beam uniformly illuminates the ROI. Please note that the area illuminated by the widefield beam should be within the calibrated area.

Switch the laser to the external triggering mode and start laser firing for pre-acquisition.


Check the SNR of the pre-acquisition data. If the SNR is low, adjust the laser illumination fluence and repeat.

? TROUBLESHOOTING

Set the data acquisition parameters and start the widefield image acquisition.

Stop laser firing after completion of the data acquisition.


Run the MATLAB reconstruction program to reconstruct widefield images using both the calibration and widefield data.

? TROUBLESHOOTING

Lower the animal holder and detach the animal from the ER.

Increase the isoflurane concentration to 5% to euthanize the animal, followed by cervical dislocation to ensure death. Dispose of the carcass by following proper procedures.

Turn off the laser and isoflurane supply and clean the imaging table.

Timing

System and animal preparation (Steps 1–14): ~30 min

Adjust the scanning range to fit the ROI (Steps 15, 16): ~10 min

Calibration imaging (Step 17): varies with the scanning range, step size, number of averages, and laser repetition rate. For example, scanning a 6 × 6 mm² area with a step size of 30 μm and 100-time averaging at a 2 kHz laser repetition rate takes ~50 min.

Widefield imaging (Steps 18–23): depends on the number of frames needed for recording the targeted dynamics, typically ~1–10 min.

Remaining procedures (Steps 24–27): the image reconstruction time depends on the number of reconstructed frames, typically ~30 min for 120 frames on a workstation (Intel Xeon E5-2620v2, 2.10 GHz, 8 cores, and 16 GB RAM). Steps 25–27 typically take ~20–30 min. Users can perform Step 24 and Steps 25–27 in parallel.

Total time: ~130 min
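The calibration time can be estimated from the scan parameters. The sketch below computes the pulse-limited lower bound for the example above and sums the stage durations. The lower bound comes out below the quoted ~50 min; the difference is presumably stage motion overhead, such as the return strokes of unidirectional scanning (an inference, not stated in the protocol). The per-stage breakdown uses the upper end of the widefield range and treats reconstruction and Steps 25–27 as running in parallel; the function names are illustrative.

```python
def scan_positions(range_x_mm, range_y_mm, step_um):
    """Number of raster positions for a rectangular scan."""
    nx = round(range_x_mm * 1000.0 / step_um)
    ny = round(range_y_mm * 1000.0 / step_um)
    return nx * ny


def pulse_limited_minutes(n_positions, n_averages, pulse_rate_hz):
    """Minimum scan time if every laser pulse is used for acquisition."""
    return n_positions * n_averages / pulse_rate_hz / 60.0


n_pos = scan_positions(6.0, 6.0, 30.0)                  # 200 x 200 grid
calib_min = pulse_limited_minutes(n_pos, 100, 2000.0)   # lower bound

# Stage durations in minutes, as quoted in the Timing section.
stage_minutes = {
    "preparation (Steps 1-14)": 30,
    "quick scan (Steps 15-16)": 10,
    "calibration (Step 17)": 50,
    "widefield (Steps 18-23)": 10,
    "remaining (Steps 24-27, in parallel)": 30,
}
total_min = sum(stage_minutes.values())

print(f"scan positions: {n_pos}")
print(f"pulse-limited calibration time: {calib_min:.1f} min")
print(f"total protocol time: {total_min} min")
```

Halving the step size quadruples the number of positions, so the calibration time grows quickly with finer sampling.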

? TROUBLESHOOTING

No PA signal or weak PA signal (Step 16)

First, make sure that the ultrasonic transducer is in good contact with the ER and the axis of the transducer is perpendicular to the ER detection surface. Second, make sure that high acoustic-impedance ultrasonic couplant (not ultrasound gel) is correctly applied between the transducer and the ER detection surface. Third, apply gentle force to press the transducer against the ER, and make sure that no air gap is trapped in between. Fourth, make sure that the signal amplifiers are powered and that all cables are connected correctly and securely and are functioning properly. Fifth, make sure that the object is illuminated with enough laser energy and that the laser is operated in the external triggering mode and synchronized with the data acquisition system.

To locate PA signals initially, users can set the delay between laser firing and data acquisition to zero and set the data recording length to ~500 μs. After the PA signals are observed, change the trigger delay and data recording length to designated values.

Low SNR of the calibration or widefield signals (Steps 16 and 21)

Please refer to “No PA signal or weak PA signal (Step 16)” section first to make sure the system is detecting PA signals. If PA signals are detected but noisy, users can check the steps below. First, make sure the optical path is well aligned. Especially for the calibration mode, make sure the object is within the optical focal zone. Second, make sure the system is well shielded and grounded. The motor drivers and control circuits should be shielded, and power supply cables should be grounded. Third, gradually increase the laser fluence and average more times to improve the SNR.

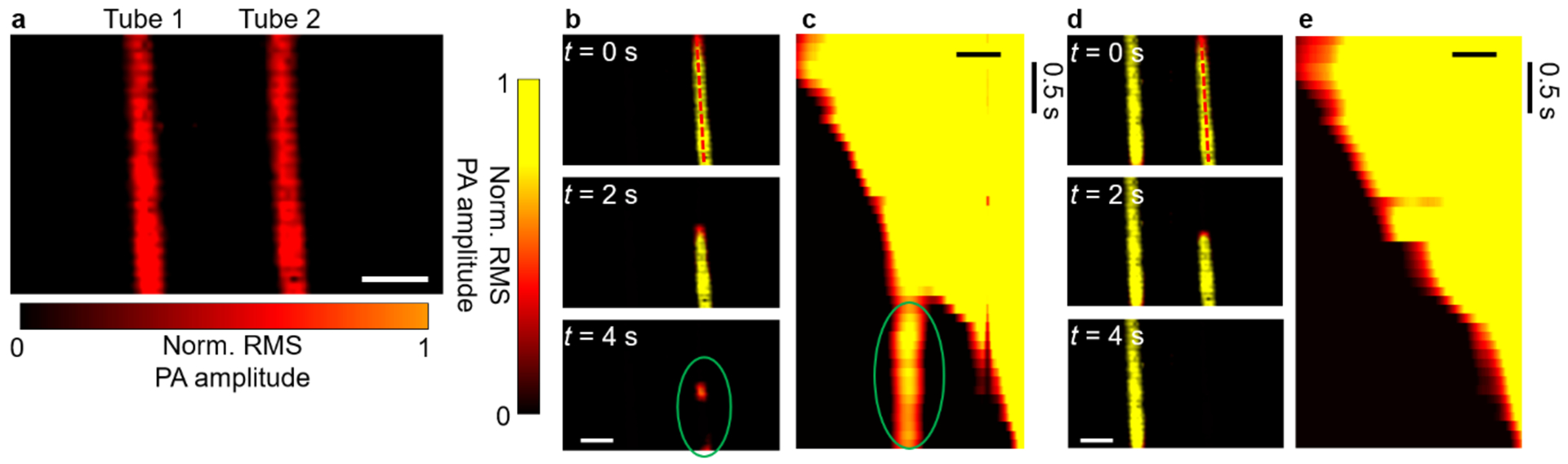

Low quality of reconstructed widefield images (Step 24)

First, users should check whether the area illuminated by the widefield beam extends beyond the calibrated area. Users can adjust the distance between the engineered diffuser and the object to change the size of the widefield illumination beam. If the widefield signal mixes with signals from uncalibrated areas, the reconstructed images will be incorrect, as demonstrated in Fig. 4. As shown in Fig. 4a, two tubes filled with blood were imaged. During the calibration step, we scanned Tube 2 only, leaving Tube 1 uncalibrated. In the widefield mode, we illuminated both tubes to capture blood flushing out of Tube 2. The reconstructed images at different time points are shown in Fig. 4b, where reconstruction artifacts are marked by a green ellipse. A space-time domain plot of pixels along the dashed line in Fig. 4b (top panel) also reveals the artifacts, marked by a green ellipse (Fig. 4c). When both tubes were calibrated, correct images were reconstructed (Fig. 4d and e).

Second, the SNR of the calibration images or widefield images may be too low. Please refer to the “Low SNR of the calibration or widefield signals (Steps 16 and 21)” section.

Third, the boundary conditions may have changed between calibration and widefield imaging. Animal motion, primarily respiratory motion, should be minimized, and the contact area between the animal and the ER should remain unchanged throughout the experiment. Make sure that the animal is properly and securely fixed and that the anesthesia system functions well. Check the animal holder and the inhalation gas supply, and make sure that the animal is anesthetized and can breathe freely. Users should gently apply force to hold the animal against the ER to ensure tight contact.

Figure 4.

Reconstruction artifacts caused by signals from uncalibrated areas. Two tubes filled with blood were placed on the imaging surface. While Tube 1 served as the control without flow, blood flowed through Tube 2 during widefield measurements. (a) Calibration image of the two tubes. Norm., normalized. Scale bar, 2 mm. (b) Reconstructed widefield images with Tube 1 uncalibrated. Signals from both tubes were captured in widefield imaging. The reconstruction artifacts are marked by a green ellipse. (c) Space-time domain plot of pixels along the axis of Tube 2 in (b), revealing the reconstruction artifacts, marked by a green ellipse. (d) Reconstructed widefield images with both tubes calibrated, showing no artifacts. (e) Space-time domain plot of pixels along the axis of Tube 2 in (d), illustrating a correct reconstruction. Scale bars in b–e, 1 mm. Images in this figure are adapted from ref. 10.

Anticipated results

This protocol, based on ref. 10, provides step-by-step guidelines for applying PATER to snapshot in vivo imaging of a mouse brain. When operated correctly, PATER can provide high-throughput topographic images of optical absorption.

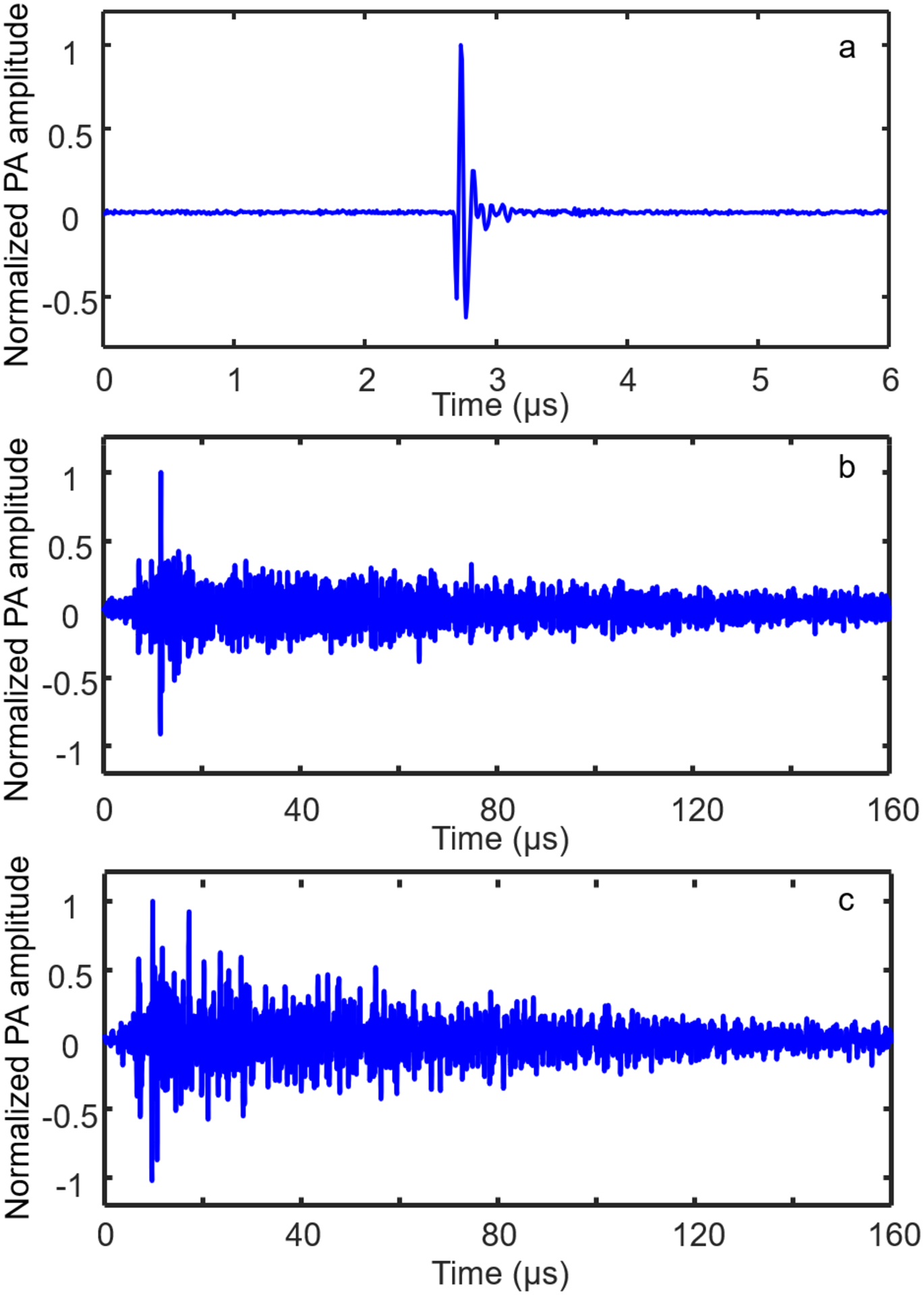

In the calibration mode, a focused optical beam is used for scanning. The PA waves detected through the ER differ from the A-line signals of a conventional PAM system, each of which represents a 1D depth-resolved image (Fig. 5a). In contrast, the PA signal detected through the ER carries a randomized temporal signature encoded by the ER and lasts much longer (Fig. 5b). After raster scanning the optical focus over the object, PATER yields a 2D projection image of the 3D object. Sample images can be found in Fig. 3 of this protocol and in Figs. 3–5 of ref. 10.
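As an illustration of how the raster-scanned calibration signals can be turned into a 2D projection image, the sketch below takes the root-mean-square (RMS) amplitude of each recorded signal as the pixel value. It is a minimal sketch that assumes the calibration data are stored as an array of shape (rows, columns, time samples). The function name and array layout are hypothetical, and the Supplementary Software may organize the data differently.

```python
import numpy as np

def rms_projection(calib_signals):
    """Collapse calibration signals of shape (ny, nx, nt) into an (ny, nx) image.

    Each pixel is the RMS amplitude of the signal recorded at that scan position,
    normalized so that the brightest pixel equals 1.
    """
    calib_signals = np.asarray(calib_signals, dtype=float)
    # Remove any DC offset per scan position before computing the RMS.
    centered = calib_signals - calib_signals.mean(axis=-1, keepdims=True)
    image = np.sqrt(np.mean(centered ** 2, axis=-1))
    peak = image.max()
    return image / peak if peak > 0 else image

# Synthetic example: a 3 x 4 scan in which only one position contains an absorber.
rng = np.random.default_rng(1)
signals = 0.01 * rng.standard_normal((3, 4, 2000))   # background noise only
signals[1, 2] += rng.standard_normal(2000)           # strong randomized signature
image = rms_projection(signals)
```

Because the RMS discards the temporal structure of each signal, the result is a projection along depth rather than a depth-resolved image.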

Figure 5.

Temporal signatures of the PA signals. (a, b) PA signals from a point source detected by a conventional PAM system (a) and PATER (b). The useful signal in (a) lasts approximately 500 ns. PATER records the signal in (b) for 160 μs. (c) Widefield signal recorded using PATER for 160 μs.

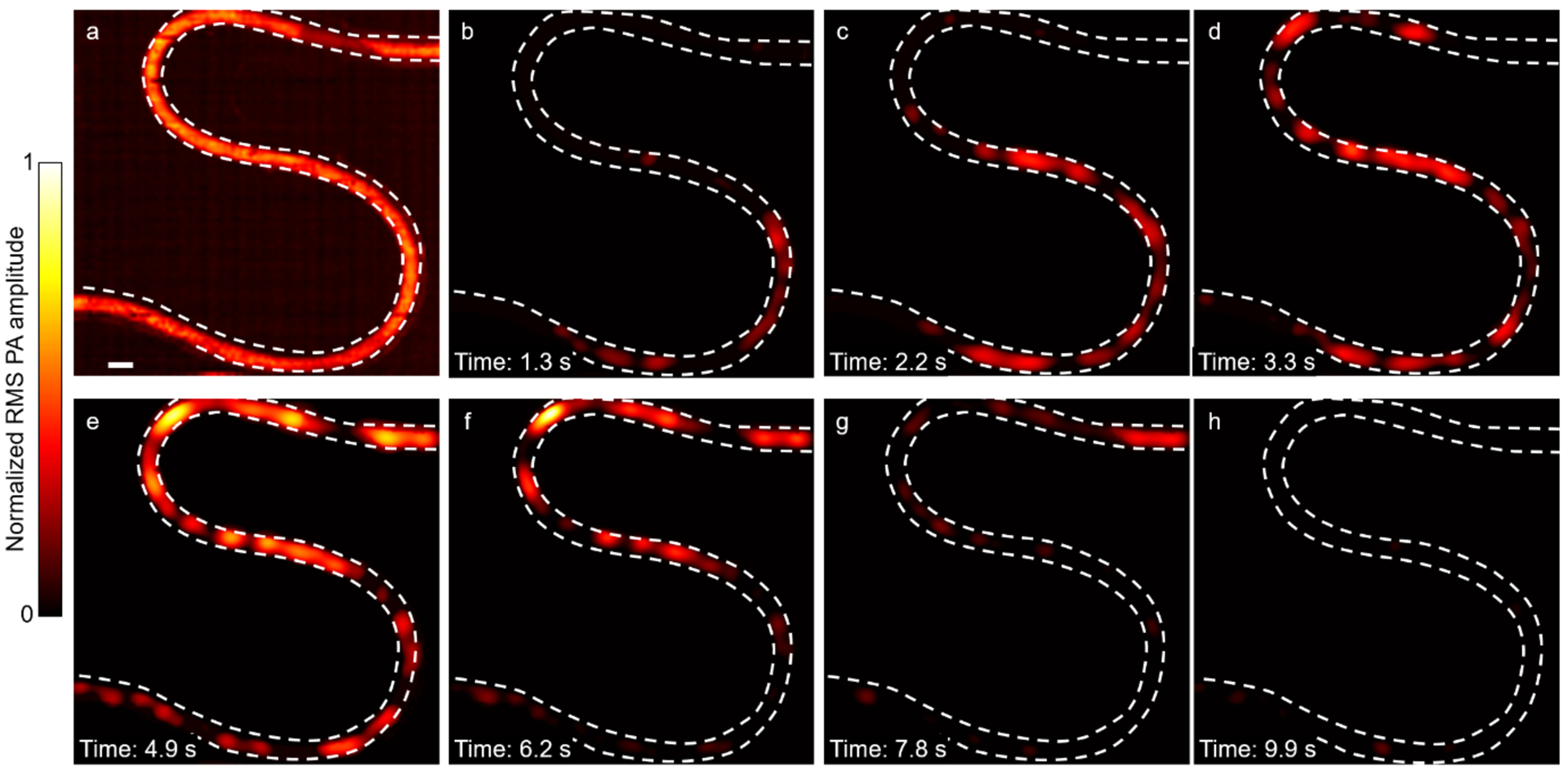

In the widefield mode, a broad beam illuminates the object uniformly. The detected PA signals are a weighted summation of the calibration signals (Fig. 5c). In this mode, PA signals from the entire FOV can be received in parallel. We can mathematically compute the 2D projection images, which represent the optical absorption changes of the object. As shown in Fig. 6, PATER captures the processes of blood flushing in and out of an S-shaped tube.
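The weighted-summation model described above can be sketched numerically. The example below builds a matrix `K` whose columns stand in for the calibration signals, synthesizes a widefield signal as a weighted sum of those columns plus noise, and recovers the weights with a plain iterative shrinkage/thresholding (ISTA) loop under a non-negativity constraint. ISTA is used here only as a simple relative of the two-step method in ref. 35; this is not the Supplementary Software, and the names `ista_reconstruct`, `lam`, and `n_iter`, as well as the random test data, are hypothetical.

```python
import numpy as np

def ista_reconstruct(K, s, lam=0.01, n_iter=500):
    """Estimate pixel weights x >= 0 such that K @ x approximates s.

    K   : (nt, n_pixels) matrix whose columns are calibration signals.
    s   : (nt,) widefield signal.
    lam : weight of the L1 penalty (hypothetical default).
    """
    step = 1.0 / np.linalg.norm(K, 2) ** 2       # 1 / Lipschitz constant of the gradient
    x = np.zeros(K.shape[1])
    for _ in range(n_iter):
        grad = K.T @ (K @ x - s)                 # gradient of 0.5 * ||K x - s||^2
        x = x - step * grad
        x = np.maximum(x - step * lam, 0.0)      # soft threshold with non-negativity
    return x

rng = np.random.default_rng(2)
nt, n_pixels = 2000, 50
K = rng.standard_normal((nt, n_pixels))          # stand-in for randomized ER signatures
x_true = np.zeros(n_pixels)
x_true[[5, 20, 33]] = [1.0, 0.5, 0.8]            # three absorbing pixels
s = K @ x_true + 0.05 * rng.standard_normal(nt)  # widefield signal: weighted sum + noise

x_hat = ista_reconstruct(K, s)
error = np.max(np.abs(x_hat - x_true))
print(f"Largest pixel error: {error:.4f}")
```

Reshaping `x_hat` to the calibration scan grid would give one widefield frame. The recovery works in this toy case because the random columns of `K` are nearly uncorrelated, which mirrors the role of the distinct temporal signatures encoded by the ER.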

Figure 6.

Blood flushing in and out of a tube. (a) RMS image of an S-shaped tube filled with blood. Scale bar, 1 mm. (b–h) Widefield images showing blood flushing in and out of the tube.

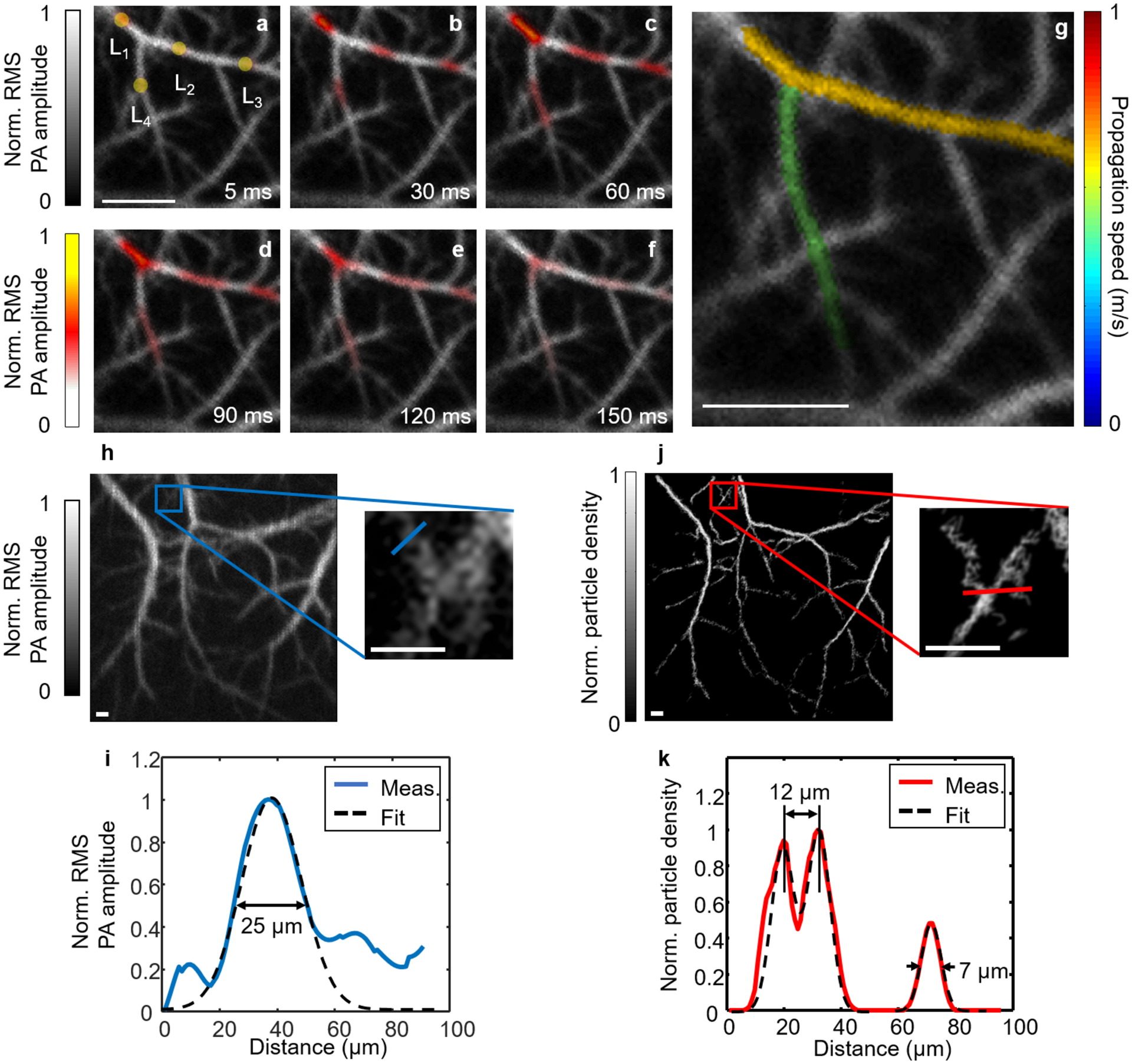

More widefield imaging results are shown in Fig. 7 and can be found in ref. 10. Taking advantage of the high-speed snapshot widefield imaging, PATER visualizes the blood pulse wave propagation at multiple sites simultaneously in mice in vivo (Fig. 7a–g, and Fig. 4 of ref. 10) and tracks circulating melanoma tumor cells in the brain and localizes them at super-resolution in vivo (Fig. 7h–k, and Fig. 5 of ref. 10).

Figure 7.

PATER of biological dynamics in vivo. (a–f) Widefield images showing thermal wave propagation in the mouse middle cerebral arteries. The yellow circles in (a), labeled L1–L4, indicate locations of the laser heating spots during recording. The overlay images show the thermal wave signals in color and the background blood signals in gray. Norm., normalized. (g) Map of pulse wave velocity at two branches of the middle cerebral arteries. (h) Calibration image of the mouse brain and a zoomed-in view of the blue boxed region showing a group of small vessels. Scale bars in (a) and (g), 500 μm. (i) Plot of the profile along the blue line in the zoomed-in view in (h) and a Gaussian fit. Meas., measurement. (j) Localization map of circulating tumor cells and a zoomed-in view of the red boxed region showing the same region as the blue boxed region in (h). Scale bars in (h) and (j), 100 μm. (k) Plot of the profile along the red line in the zoomed-in view in (j) and a three-term Gaussian fit. The two neighboring vessels branching out from each other can be separated at a distance of 12 μm, and the FWHM for the small vessel is 7 μm. Images in this figure are adapted from ref. 10. All animal procedures were approved by the Institutional Animal Care and Use Committee of California Institute of Technology.

PATER requires that the boundary conditions between the object and the ER remain unchanged throughout the experiment. A successful widefield reconstruction relies on the assumption that the widefield measurement is a consistent linear combination of the calibrated system impulse responses. Once the boundary conditions change because of object movement or system instability, the calibrated impulse responses differ from the system responses during the widefield measurements, resulting in reconstruction artifacts and incorrect measurements. These issues can be addressed by a universal calibration method, which is beyond the scope of this protocol.
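The linear-combination assumption can be written compactly. In the notation below, which is introduced here for illustration and is not taken from ref. 10, $k_i(t)$ is the calibrated impulse response for pixel $i$, $p_i$ is the relative optical absorption at that pixel, $N$ is the number of calibrated pixels, and $n(t)$ is the measurement noise:

```latex
s(t) = \sum_{i=1}^{N} k_i(t)\, p_i + n(t)
\quad\Longleftrightarrow\quad
\mathbf{s} = \mathbf{K}\,\mathbf{p} + \mathbf{n}
```

If the boundary conditions change after calibration, the responses during widefield imaging become $k_i'(t) \neq k_i(t)$, so solving for $\mathbf{p}$ with the calibrated $\mathbf{K}$ no longer matches the measurement, which is the origin of the artifacts described above.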

Supplementary Material

Acknowledgments

This work was supported in part by National Institutes of Health grants R01 CA186567 (NIH Director’s Transformative Research Award), R01 NS102213, U01 NS099717 (BRAIN Initiative), R35 CA220436 (Outstanding Investigator Award), and R01 EB028277.

Footnotes

Data availability

All data generated or analyzed within this study are included in the paper, its Supplementary Information, and ref. 10. All raw data are available from the corresponding author upon request.

Code availability

The reconstruction algorithm and data processing methods are described in detail in this protocol. We have deposited the reconstruction algorithm in Supplementary Software.

Competing interests

L.V.W. has financial interests in Microphotoacoustics, Inc., CalPACT, LLC, and Union Photoacoustic Technologies, Ltd., which did not support this work.

References

- 1. Weber J, Beard PC & Bohndiek SE Contrast agents for molecular photoacoustic imaging. Nature Methods 13, 639–650 (2016).

- 2. Ntziachristos V Going deeper than microscopy: the optical imaging frontier in biology. Nature Methods 7, 603–614, doi: 10.1038/nmeth.1483 (2010).

- 3. Wang LHV & Hu S Photoacoustic tomography: in vivo imaging from organelles to organs. Science 335, 1458–1462, doi: 10.1126/science.1216210 (2012).

- 4. Zhang HF, Maslov K & Wang LV In vivo imaging of subcutaneous structures using functional photoacoustic microscopy. Nature Protocols 2, 797 (2007).

- 5. Beard PC Biomedical photoacoustic imaging. Interface Focus 1, 602–631 (2011).

- 6. Xu M & Wang LV Universal back-projection algorithm for photoacoustic computed tomography. Physical Review E 71, 016706 (2005).

- 7. Matthews TP, Poudel J, Li L, Wang LV & Anastasio MA Parameterized Joint Reconstruction of the Initial Pressure and Sound Speed Distributions for Photoacoustic Computed Tomography. SIAM Journal on Imaging Sciences 11, 1560–1588 (2018).

- 8. Li L et al. Single-impulse panoramic photoacoustic computed tomography of small-animal whole-body dynamics at high spatiotemporal resolution. Nature Biomedical Engineering 1, 0071 (2017).

- 9. Wang LV & Yao J A practical guide to photoacoustic tomography in the life sciences. Nature Methods 13, 627–638 (2016).

- 10. Li Y et al. Snapshot photoacoustic topography through an ergodic relay for high-throughput imaging of optical absorption. Nature Photonics 14, 164–170 (2020).

- 11. Li L et al. Small near-infrared photochromic protein for photoacoustic multi-contrast imaging and detection of protein interactions in vivo. Nat Commun 9, 2734, doi: 10.1038/s41467-018-05231-3 (2018).

- 12. Zhang P et al. High‐resolution deep functional imaging of the whole mouse brain by photoacoustic computed tomography in vivo. J Biophotonics 11, e201700024 (2018).

- 13. Zhang P, Li L, Lin L, Shi J & Wang LV In vivo superresolution photoacoustic computed tomography by localization of single dyed droplets. Light: Science & Applications 8, 36 (2019).

- 14. Jathoul AP et al. Deep in vivo photoacoustic imaging of mammalian tissues using a tyrosinase-based genetic reporter. Nature Photonics 9, 239–246, doi: 10.1038/nphoton.2015.22 (2015).

- 15. Yao J et al. Multiscale photoacoustic tomography using reversibly switchable bacterial phytochrome as a near-infrared photochromic probe. Nature Methods 13, 67 (2016).

- 16. Wu Z et al. A microrobotic system guided by photoacoustic computed tomography for targeted navigation in intestines in vivo. Science Robotics 4, eaax0613 (2019).

- 17. Li L et al. Label-free photoacoustic tomography of whole mouse brain structures ex vivo. Neurophotonics 3, 035001 (2016).

- 18. Yeh C et al. Dry coupling for whole-body small-animal photoacoustic computed tomography. J Biomed Opt 22, 041017 (2017).

- 19. Razansky D et al. Multispectral opto-acoustic tomography of deep-seated fluorescent proteins in vivo. Nature Photonics 3, 412–417, doi: 10.1038/nphoton.2009.98 (2009).

- 20. Imai T et al. High-throughput ultraviolet photoacoustic microscopy with multifocal excitation. J Biomed Opt 23, 036007 (2018).

- 21. Qu Y et al. Dichroism-sensitive photoacoustic computed tomography. Optica 5, 495–501 (2018).

- 22. Razansky D, Buehler A & Ntziachristos V Volumetric real-time multispectral optoacoustic tomography of biomarkers. Nature Protocols 6, 1121–1129, doi: 10.1038/nprot.2011.351 (2011).

- 23. Li L, Zhu L, Shen Y & Wang LV Multiview Hilbert transformation in full-ring transducer array-based photoacoustic computed tomography. J Biomed Opt 22, 076017 (2017).

- 24. Laufer J et al. In vivo preclinical photoacoustic imaging of tumor vasculature development and therapy. J Biomed Opt 17, 056016 (2012).

- 25. Ellwood R, Ogunlade O, Zhang E, Beard P & Cox B Photoacoustic tomography using orthogonal Fabry–Pérot sensors. J Biomed Opt 22, 041009 (2016).

- 26. Li L et al. Fully motorized optical-resolution photoacoustic microscopy. Optics Letters 39, 2117–2120 (2014).

- 27. Yao J et al. High-speed label-free functional photoacoustic microscopy of mouse brain in action. Nature Methods 12, 407 (2015).

- 28. Hsu H-C et al. Dual-axis illumination for virtually augmenting the detection view of optical-resolution photoacoustic microscopy. J Biomed Opt 23, 076001 (2018).

- 29. Draeger C & Fink M One-channel time reversal of elastic waves in a chaotic 2D-silicon cavity. Physical Review Letters 79, 407 (1997).

- 30. Ing RK, Quieffin N, Catheline S & Fink M In solid localization of finger impacts using acoustic time-reversal process. Applied Physics Letters 87, 204104 (2005).

- 31. Li Y, Wong TTW, Shi J, Hsu H-C & Wang LV Multifocal photoacoustic microscopy using a single-element ultrasonic transducer through an ergodic relay. Light: Science & Applications 9, 135 (2020).

- 32. Li Y et al. Photoacoustic topography through an ergodic relay for functional imaging and biometric application in vivo. J Biomed Opt 25, 070501 (2020).

- 33. Fink M & de Rosny J Time-reversed acoustics in random media and in chaotic cavities. Nonlinearity 15, R1 (2001).

- 34. Eder FX Moderne Messmethoden der Physik. Bd 1. 2, erweiterte Aufl. edn, (Deutscher Verlag der Wissenschaften, 1960).

- 35. Bioucas-Dias JM & Figueiredo MAT A new TwIST: two-step iterative shrinkage/thresholding algorithms for image restoration. IEEE Transactions on Image Processing 16, 2992–3004 (2007).

Key references

- Li Y et al. Snapshot photoacoustic topography through an ergodic relay for high-throughput imaging of optical absorption. Nature Photonics 14, 164–170 (2020).

- Li Y et al. Photoacoustic topography through an ergodic relay for functional imaging and biometric application in vivo. Journal of Biomedical Optics 25, 070501 (2020).

- Li Y, Wong TTW, Shi J, Hsu H-C & Wang LV Multifocal photoacoustic microscopy using a single-element ultrasonic transducer through an ergodic relay. Light: Science & Applications 9, 135 (2020).
