Abstract

Lysine succinylation is a typical protein post-translational modification and plays a crucial role of regulation in the cellular process. Identifying succinylation sites is fundamental to explore its functions. Although many computational methods were developed to deal with this challenge, few considered semantic relationship between residues. We combined long short-term memory (LSTM) and convolutional neural network (CNN) into a deep learning method for predicting succinylation site. The proposed method obtained a Matthews correlation coefficient of 0.2508 on the independent test, outperforming state of the art methods. We also performed the enrichment analysis of succinylation proteins. The results showed that functions of succinylation were conserved across species but differed to a certain extent with species. On basis of the proposed method, we developed a user-friendly web server for predicting succinylation sites.

1. Introduction

Protein post-translational modification (PTM) refers to the chemical interaction occurring prior to protein biosynthesis and after mRNAs are translated into polypeptide chains. PTM has different categories and is very prevalent in the cells. More than 450 categories of PTMs were discovered to date, such as phosphorylation, methylation, and acetylation [1–3]. PTM increases diversity of protein structures and functions, viewed as one of most regulating mechanisms in the cellular process. Lysine succinylation is a type of protein TPMs, in which a succinyl group (-CO-CH2-CH2-CO2H) is attached to lysine residue of proteins [4]. Succinylation is reversible, dynamic, and evolutionarily conserved, widely existing in the prokaryote and the eukaryotes cells [5, 6]. The succinylation of proteins induces shift in the charge and the structural alteration and thus would yield effects on functions of proteins [6]. Growing evidences also showed aberrant succinylations were involved in the pathogenesis of some diseases including cancers [7], metabolism disease [8, 9], and nervous system diseases [10]. Thus, identifying succinylation sites and understanding its mechanism are crucial to develop drugs for related diseases.

Identifying succinylation sites has two main routes: experimental and computational methods. The experimental methods were represented by mass spectrometry, which contributed to the validation of succinylation and collection of first-hand data. On the other hand, the experimental methods are labor-intensive and time-consuming without assist of the computational methods. The computational methods are based on data yielded by the experimental methods and build machine learning-based models to predict new succinylations. Therefore, identifying succinylation is a cyclic iterative process from experiment to computation and again from computation to experiment. We focused on the computational methods to predict succinylation. In the past decades, more than ten computational methods have been developed for identifying succinylation [11–29]. Most of these computational methods extracted features directly from protein sequences, which were subsequently used for training model. For example, Zhao et al. [11] used the auto-correlation functions, the group weight-based encoding, the normalized van der Waals volume, and the position weight amino acid composition. Kao et al. [25] exploited the amino acid composition and informative k-spaced amino acid pairs. Xu et al. [12] and Jia et al. [13, 19] employed pseudo amino acid composition. Dehzangi et al. [23] exploited the structure information. Hasan et al. [28] compared 12 types of feature as well as two learning methods: random forest and support vector machine for succinylation prediction. Different features have different performance with species. So does the learning methods. The best performance was no more than 0.83 AUC (area under receiver operating characteristic curve) for independent test. Like sentences of language, the protein sequences should have semantic. However, all the methods above failed to seize semantic relationship hidden among residues. Thapa et al. [29] presented a convolutional neural network- (CNN-) based deep learning method DeepSuccinylSite for predicting succinylation. Different from traditional methods, the DeepSuccinylSite exploited word embedding which translated word into vector, which was an extensively used method in the field of natural language process. The CNN is a widely used method to extract local features especially in the field of image processing. Inspired by the DeepSuccinylSite and loss of semantic relationship between residues, we fused long short-term memory (LSTM) and CNN into a deep learning method for succinylation prediction.

2. Data

All the succinylated proteins were downloaded from the PLMD (Protein Lysine Modifications Database) database which is dedicated to specifically collect protein lysine modification [30–32]. The PLMD has evolved to version 3.0, housing 284780 modification events in 53501 proteins for 20 types of lysine modification. We extracted 6377 proteins containing 18593 succinylation sites. To remove dependency of the proposed method on the homology, we used the software CD-Hit [33, 34] to cluster 6377 protein sequences. The sequence identify cut-off was set to 0.4, and we obtained 3560 protein sequences, of which any two kept sequence similarity less than 0.4. We randomly divided these 3560 proteins into the training and the testing samples at the ratio of training to testing 4 : 1, resulting in 712 testing and 2848 training sequences. For each protein sequence, we extracted all the peptides which centered the lysine residue with 15 amino acid residues in the downstream/upstream of it. For peptides less than 15 amino acid residues, we prefixed or suffixed “X” to supply it. The length of the amino acids is influential in prediction of succinylation sites. The short amino acid peptides would miss key information, while the long peptides would include noise or redundancy. Whether the short or the long peptides would cause low accuracy of prediction. Among methods to predict succinylation sites, iSuc-PseAAC [12] adopted the shorter peptides of 15 amino acid residues; SuccinSite2.0 [20] and GPSuc [22] adopted the longer 41 amino acid residues, while the most methods including SSEvol-Suc [23], Success [24], iSuc-PseOpt [13], pSuc-Lys [19], SucStruct [18], and PSSM-Suc [17] adopted peptides of 31 amino acid residues, which is of moderate length. Thus, we chose 31 amino acid residues as basic peptides. The peptides with succinylation sites were viewed positive samples and the others as negative ones. For the training set, the negative samples extremely outnumbered the positive ones. Unbalanced training set would cause preference to negative samples in the process of prediction. Therefore, we randomly sampled the same size of negative examples as the positive ones. Finally, the training set comprised 6512 positive and 6512 negative samples, while the testing set 1479 positive and 16457 negative samples. All the experimental data are freely available to scientific communities.

3. Method

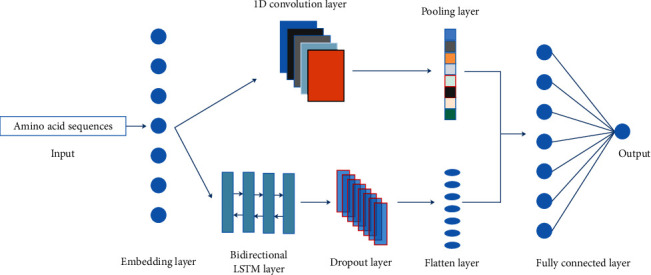

As shown in Figure 1, the proposed deep learning network consisted mainly of embedding, 1D convolution, pooling, bidirectional LTSM, dropout, flatten, and fully connected layers. Peptides with 31 amino acid residues were entered to the embedding layer and were translated into vectors with shape of (31, 64). Then, two different network structures, respectively, took the embedding as input, and their outputs were concatenated as input to the fully connected layer. One structure was the convolution neural network, and another was the bidirectional LSTM neural network. The final output was a neuron representing probability of belonging to the positive sample. The parameters and the shape of output of each layers in the deep neural network are listed in Table 1. The total number of trainable parameters is 336,897.

Figure 1.

Flowchart of the proposed method.

Table 1.

Number of parameters and shape of output in the LSTMCNNsucc.

| Layers | Parameters | Output |

|---|---|---|

| Embedding | 1472 | (None, 31, 64) |

| Bidirectional LSTM | 197632 | (None, 31, 256) |

| Dropout | 0 | (None, 31, 256) |

| Flatten | 0 | (None, 7936) |

| 1D convolution | 10272 | (None, 27, 32) |

| Pooling | 0 | (None, 32) |

| Dense (16) | 127504 | (None, 16) |

| Dense (1) | 17 | (None, 1) |

3.1. Embedding Layer

Most machine learning-based methods for predicting protein post-translational modification generally required an encoding step which translated sequences into vector representation. For example, the frequently used encoding schemes included position specific scoring matrix [35], amino acid composition, composition of k-space amino acid pair [14], and pseudo amino acid composition [36]. For sequences of text, these methods might lose hidden semantic. The word2vec [37, 38] is different from the above methods, embedding word into vector. The word2vec is capable of extracting semantic of word. An interesting example is that King–Man + Woman = Queen. Similar to the word2vec [37, 38], the embedding layer translated words into vector representations. In this method, the character of amino acid corresponds to word.

3.2. 1D Convolution Layer

The convolution neural network (CNN) proposed by LeCun et al. [39, 40] is a feed forward network. Compared with the conventional neural network, the CNN has two notable properties: local connectivity and parameter sharing. The local connectivity lies that two neighboring layers are not fully connected but locally connected. That is to say, the neuron in a layer is not connected to all neurons in the neighboring layers. The CNN implemented the parameter sharing via the filter (also called convolution kernel). The filter slides on the image and convoluted with all sections in image. The filter is shared by the image. In the last ten years, many deep convolution neural networks such as AlexNet [41], VGG [42], GoogleNet [43], and ResNet [44] have been proposed and applied to computer vision. The CNN achieved significant advance in terms of classification error in comparison with the previous deep neural network. The convolution is of 1-dimension, 2-dimension, or more than 2 dimensions. Here, we used 1D convolution. Suppose a discrete sequence was α = [a1, a2, ⋯, an], and the convolution kernel was β = [b1, b2, ⋯bm]. The 1D convolution product of α and β was expressed by

| (1) |

where d was the stride of convolution and k was the length of the output sequence. Generally, k was the most integer less than or equal to (n − m)/d + 1.

3.3. Pooling Layer

The pooling operation firstly appeared in the AlexNet [41] and is increasingly becoming one of components of the deep CNN architecture. The pooling operation has such categories as max pooling, min pooling, and mean pooling. The role of pooling operation included removal of redundancy information and reduction of overfitting. Here, we used the max pooling operation. Given an n-channel input A = (ai,j,k), the max pooling operation was defined by

| (2) |

3.4. Bidirectional LSTM Layer

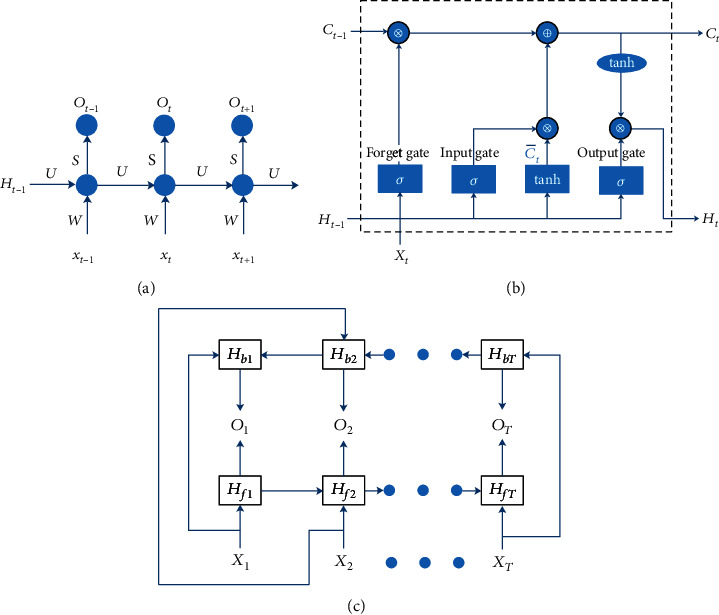

Recurrent neural network (RNN) [45, 46] is a different framework of neural network from multiple layer perception. The RNN shares weights and is especially suitable to the field of sequence analysis such as language translation and semantic understanding. An unfolded RNN model was shown in Figure 2(a). The hidden state Ht at the time step t was not only dependent on the current input but also on the previous hidden state, which was computed by

| (3) |

where f was an activation function and α was a bias. The output Ot at the time step t was computed by

| (4) |

where g was also an activation function and β was a bias. For long sequences, there was a fatal question with the RNN, i.e., vanishing gradient. Among all the solutions to the vanishing gradient, the LSTM [47] is one of the better. The LSTM contains a candidate memory cell and three gates: forget gate, input gate, and output gate, as shown in Figure 2(b). The forget gate Ft, the input gate It, and the output gate Pt at the time step t were computed, respectively, by

| (5) |

where Wx,f and Wh,f were weights of the LSTM from input to forget gate and from the hidden state to the forget gate, respectively. Wx,i and Wh,i were link weights from input to input gate and from the hidden state to the input gate, respectively. Wx,o and Wh,o were link weights from input to output gate and from the hidden state to the output gate, respectively. bf, bi, and bo were the bias of the forget and the input and the output gate, respectively. σ was the activation function. The candidate memory cell was calculated by

| (6) |

where Wx,c and Wh,c were weights of the LSTM from input to the candidate memory and from the hidden state to the candidate memory, respectively, and bc was the bias. The memory cell at the time step t was computed by

| (7) |

where ⨂ was defined as element-wise multiplication. The hidden state was updated by

| (8) |

Figure 2.

The structure of neural networks: (a) for RNN, (b) for LSTM, and (c) for directional LSTM.

The previous RNN was forward. The output at the time step t was only dependent on the preceding inputs and the hidden state. In fact, the output might be relevant to the latter input and the hidden state. Schuster et al. [48] proposed a bidirectional RNN to model this relationship, showed in Figure 2(c). The forward hidden state at the time step t was computed by

| (9) |

while the backward hidden state was computed by

| (10) |

The output at the time step t was computed by

| (11) |

3.5. Dropout Layer

The deep neural network is prone to lead to overfitting when the number of training samples was too less. To deal with this issue, Hinton et al. [49] proposed the dropout concept. Due to its effect and efficiency, the dropout is increasingly becoming the frequently used trick in the deep learning area [41, 50–53]. The neurons were dropped out at a certain rate of dropout, and parameters of only preserved neurons were updated in the training stage, while all the neurons were used in the predicting stage.

3.6. Flatten Layer and Fully Connected Layer

The role of flatten layer was only to convert the data into one-dimension and then facilitated connection of the fully connected layer. No parameters were trainable in the flatten layer. The fully connected layer was similar to hidden layer in the MLP, each neuron connected to the neurons in the preceding layer.

4. Metrics

We adopted to evaluate the predicted result these frequently used metrics in the binary classification questions such as sensitivity (SN), specificity (SP), accuracy (ACC), and Matthews correlation coefficient (MCC), which were defined by

| (12) |

where TP and TN were defined as numbers of the true positive and the true negative samples, respectively, FP and FN, respectively, as numbers of the false positive and the false negative samples in the prediction. SN reflected the accuracy of the correctly predicted positive samples, SP accuracy of the correctly predicted negative samples, and ACC the average accuracy of the correctly predicted samples. SN, SP, and ACC ranged from 0 to 1, larger meaning better performance. MCC was Matthews correlation coefficient, representing correlation between the true class and the predicted class. MCC ranged from -1 to 1. 1 meant perfect prediction, 0 random prediction, and -1 meant that the prediction was completely opposite to the true.

5. Results

Table 2 showed the predicting performance of the trained model on the 712 testing sequences. Although more than ten approaches or tools for predicting succinylation have been proposed in the past ten years, either they did not provide online predicting server or the web server could not work. We compared the proposed method to three methods whose web predicting server still can work [28]: SuccinSite [15], iSuc-PseAAC [12], and DeepSuccinylSite [29]. 712 testing sequences were used to examine three approaches. Among 712 testing sequences, at least 225 sequences repeated in the training set of the SuccinSite, and at least 223 repeated in the training set of DeepSuccinylSite. These minus 225 sequences were used to examine the SuccinSite and these minus 223 sequences to test the DeepSuccinylSite. iSuc-PseAAC [12] obtained best SP and best ACC but worst SN and worst MCC. The SuccinSite [15] reached better SP and better ACC but worse MCC and worse SN. The iSuc-PseAAC [12] and the SuccinSite [15] were in favor of predicting the negative samples. The DeepSuccinylSite [29] was better than the LSTMCNNsucc in terms of SN, worse than the LSTMCNNsucc in terms of sp. The overall performance of the LSTMCNNsucc was slightly better than that of the DeepSuccinylSite.

Table 2.

Comparison with state of the art methods.

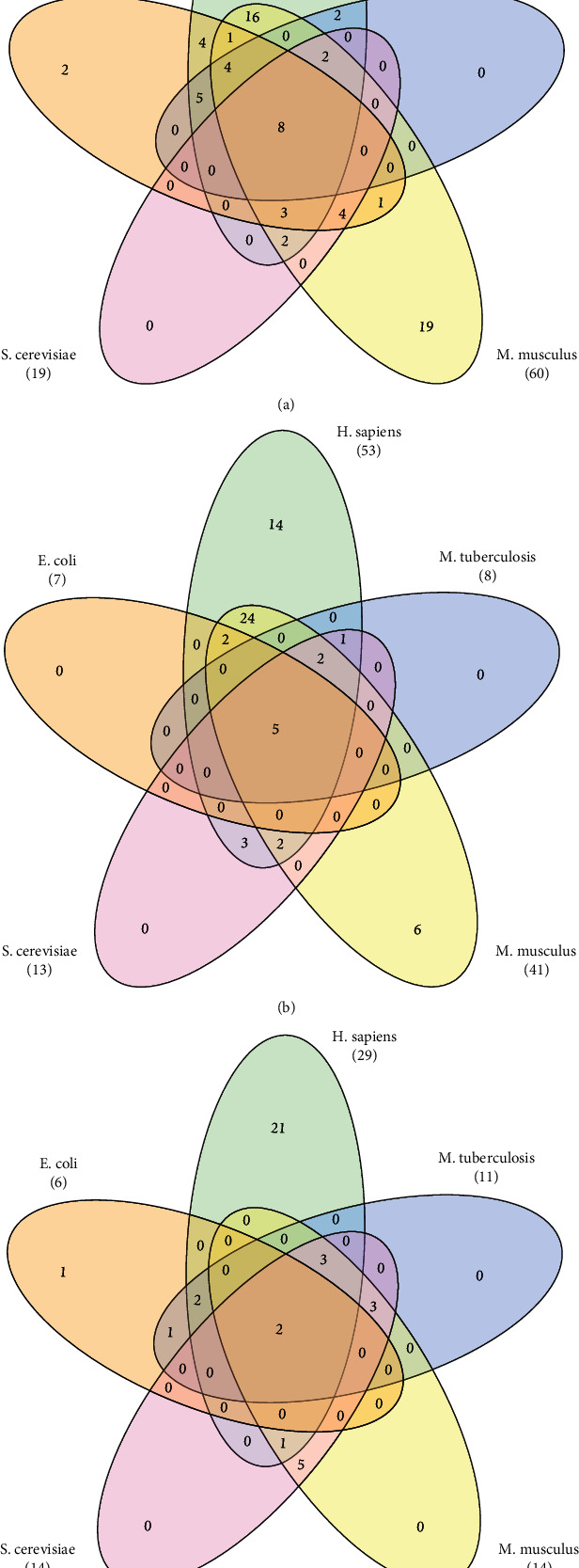

5.1. Functional Analysis

We used the statistical over-representation test of gene list analysis in the PANTHER classification system [54, 55] to perform function enrichment analysis of the succinylated proteins. The significant biological process, the molecular function, and the cellular component terms (p value≤0.01) were listed in the supplementary materials 1 and 2. For five species, Escherichia coli (E. coli), Homo sapiens (H. sapiens), Mus musculus (M. musculus), Mycobacterium tuberculosis (M. tuberculosis), and Saccharomyces cerevisiae (S. cerevisiae), they shared some common functions, but they had also own specific functions. The numbers of shared terms among five species are shown in Figure 3. H. sapiens and M. musculus shared 36 significant biological process terms and 35 cellular component terms, much more than the numbers of shared terms between any other two species (Figures 3(a) and 3(b)). Five species shared eight biological process GO terms: “biosynthetic process (GO:0009058)”, “carboxylic acid metabolic process (GO:0019752)”, “organic acid metabolic process (GO:0006082)”, “organic substance biosynthetic process (GO:1901576)”, “organonitrogen compound biosynthetic process (GO:1901566)”, “organonitrogen compound metabolic process (GO:1901564)”, “oxoacid metabolic process (GO:0043436)”, and “small molecule metabolic process (GO:0044281)”; 5 cellular component GO terms: “cytoplasm (GO:0005737)”, “cytoplasmic part (GO:0044444)”, “cytosol (GO:0005829)”, “intracellular (GO:0005622)”, and “intracellular part (GO:0044424)”; and two molecular function GO terms: “catalytic activity (GO:0003824)”, and “molecular_function (GO:0003674)”. H. sapiens had much more own specific functions than other species, with 75 specific biological process GO terms, 14 GO cellular component terms, and 21 molecular function GO terms. No specific functions existed in both M. tuberculosis and S. cerevisiae whether for biological process, cellular component, or molecular functions.

Figure 3.

The numbers of shared terms (a) for biological process, (b) cellular component, and (c) molecular function.

We also performed enrichment analysis of Kyoto Encyclopedia of Genes and Genomes (KEGG) pathway by functional annotation in the DAVID tool [56, 57] to investigate in which pathway the succinylated proteins were involved. The statistically significant KEGG terms (Benjamini ≤ 0.01) are listed in Table 3. Different species were involved in some identical pathways. For example, both metabolic pathways and biosynthesis of antibiotics were enriched in the succinylated proteins for five species, implying the universal role of succinylation. On the other hand, different pathways were involved in different species. H. sapiens and M. musculus shared more pathway and had more pathways than other three species, implying species-specific role of the succinylation.

Table 3.

Significant KEGG pathway terms.

| Species | KEGG terms | Benjamini |

|---|---|---|

| E. coli | Metabolic pathways | 3.30E-08 |

| Biosynthesis of amino acids | 1.00E-06 | |

| Biosynthesis of secondary metabolites | 2.40E-04 | |

| Biosynthesis of antibiotics | 7.40E-04 | |

| Lysine biosynthesis | 3.30E-03 | |

|

| ||

| H. sapiens | Biosynthesis of antibiotics | 3.70E-10 |

| Metabolic pathways | 2.80E-09 | |

| Ribosome | 3.40E-08 | |

| Valine, leucine, and isoleucine degradation | 1.30E-06 | |

| Carbon metabolism | 6.20E-06 | |

| Oxidative phosphorylation | 1.10E-05 | |

| Parkinson's disease | 2.60E-05 | |

| Citrate cycle (TCA cycle) | 1.00E-04 | |

| Huntington's disease | 4.10E-04 | |

| Alzheimer's disease | 7.80E-04 | |

| Aminoacyl-tRNA biosynthesis | 1.00E-03 | |

| Butanoate metabolism | 3.40E-03 | |

| Proteasome | 8.20E-03 | |

|

| ||

| M. musculus | Metabolic pathways | 6.20E-26 |

| Parkinson's disease | 8.50E-11 | |

| Oxidative phosphorylation | 3.40E-10 | |

| Nonalcoholic fatty liver disease (NAFLD) | 1.00E-09 | |

| Huntington's disease | 2.80E-09 | |

| Alzheimer's disease | 1.40E-08 | |

| Ribosome | 3.30E-07 | |

| Peroxisome | 1.80E-06 | |

| Glycine, serine, and threonine metabolism | 1.50E-05 | |

| Pyruvate metabolism | 9.00E-05 | |

| Propanoate metabolism | 2.40E-04 | |

| Valine, leucine, and isoleucine degradation | 1.90E-03 | |

| Glyoxylate and dicarboxylate metabolism | 3.10E-03 | |

| Biosynthesis of antibiotics | 5.60E-03 | |

|

| ||

| M. tuberculosis | Metabolic pathways | 1.00E-04 |

| Microbial metabolism in diverse environments | 2.50E-04 | |

| Biosynthesis of antibiotics | 4.40E-04 | |

| Biosynthesis of secondary metabolites | 1.00E-02 | |

| Propanoate metabolism | 1.00E-02 | |

|

| ||

| S. cerevisiae | Metabolic pathways | 5.20E-05 |

| Biosynthesis of amino acids | 3.30E-04 | |

| 2-Oxocarboxylic acid metabolism | 7.90E-04 | |

| Biosynthesis of antibiotics | 3.50E-03 | |

| Oxidative phosphorylation | 3.50E-03 | |

5.2. LSTMCNNsucc Web Server

We built a web server of the proposed LSTMCNNsucc at http://8.129.111.5/. Users either directly input protein sequences in a fasta format or upload a file of fasta format to perform prediction. When both protein sequences and files were submitted, the file was given to priority of prediction.

6. Conclusion

We presented a bidirectional LSTM and CNN-based deep learning method for predicting succinylation sites. The method absorbed semantic relationship hidden in the succinylation sequences, outperforming state-of-the-art method. The functions of succinylation proteins were conserved to a certain extent across species but also were species-specific. We also implemented the proposed method into a user-friendly web server which is available at http://8.129.111.5/.

Acknowledgments

This work is supported by the National Natural Science Foundation of China (11871061, 61672356), by the Scientific Research Fund of Hunan Provincial Education Department (18A253), by the open project of Hunan Key Laboratory for Computation and Simulation in Science and Engineering (2019LCESE03), and by Shaoyang University Graduate Research Innovation Project (CX2020SY060).

Data Availability

The experimental succinylation and nonsuccinylation sites used to support the findings of this study have been deposited in the website http://8.129.111.5/ and are freely available to all scientific communities.

Conflicts of Interest

The authors declare that no competing interest exists.

Supplementary Materials

KEGG enrichmeent analysis of KEGG pathway for 5 species.

GO enrichment analysis of GO terms for 5 species.

References

- 1.Witze E. S., Old W. M., Resing K. A., Ahn N. G. Mapping protein post-translational modifications with mass spectrometry. Nature Methods. 2007;4(10):798–806. doi: 10.1038/nmeth1100. [DOI] [PubMed] [Google Scholar]

- 2.Sharma B. S. Post-translational modifications (PTMs), from a cancer perspective: an overview. Oncogen Journal. 2019;2(3):p. 12. [Google Scholar]

- 3.Huang G., Li X. A review of computational identification of protein post-translational modifications. Mini-Reviews in Organic Chemistry. 2015;12(6):468–480. doi: 10.2174/1570193X13666151218191044. [DOI] [Google Scholar]

- 4.Zhang Z., Tan M., Xie Z., Dai L., Chen Y., Zhao Y. Identification of lysine succinylation as a new post-translational modification. Nature Chemical Biology. 2011;7(1):58–63. doi: 10.1038/nchembio.495. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Du J., Zhou Y., Su X., et al. Sirt5 is a NAD-dependent protein lysine demalonylase and desuccinylase. Science. 2011;334(6057):806–809. doi: 10.1126/science.1207861. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Sreedhar A., Wiese E. K., Hitosugi T. Enzymatic and metabolic regulation of lysine succinylation. Genes & Diseases. 2020;7(2):166–171. doi: 10.1016/j.gendis.2019.09.011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Xiangyun Y., Xiaomin N. Desuccinylation of pyruvate kinase M2 by SIRT5 contributes to antioxidant response and tumor growth. Oncotarget. 2017;8(4):6984–6993. doi: 10.18632/oncotarget.14346. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Yang M., Wang Y., Chen Y., et al. Succinylome analysis reveals the involvement of lysine succinylation in metabolism in pathogenic Mycobacterium tuberculosis. Molecular & Cellular Proteomics. 2015;14(4):796–811. doi: 10.1074/mcp.M114.045922. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Yang Y., Gibson G. E. Succinylation links metabolism to protein functions. Neurochemical Research. 2019;44(10):2346–2359. doi: 10.1007/s11064-019-02780-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Gibson G. E., Xu H., Chen H. L., Chen W., Denton T. T., Zhang S. Alpha-ketoglutarate dehydrogenase complex-dependent succinylation of proteins in neurons and neuronal cell lines. Journal of Neurochemistry. 2015;134(1):86–96. doi: 10.1111/jnc.13096. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Zhao X., Ning Q., Chai H., Ma Z. Accurate in silico identification of protein succinylation sites using an iterative semi-supervised learning technique. Journal of Theoretical Biology. 2015;374:60–65. doi: 10.1016/j.jtbi.2015.03.029. [DOI] [PubMed] [Google Scholar]

- 12.Xu Y., Ding Y. X., Ding J., Lei Y. H., Wu L. Y., Deng N. Y. iSuc-PseAAC: predicting lysine succinylation in proteins by incorporating peptide position-specific propensity. Scientific Reports. 2015;5(1, article 10184) doi: 10.1038/srep10184. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Jia J., Liu Z., Xiao X., Liu B., Chou K. C. iSuc-PseOpt: identifying lysine succinylation sites in proteins by incorporating sequence-coupling effects into pseudo components and optimizing imbalanced training dataset. Analytical Biochemistry. 2016;497:48–56. doi: 10.1016/j.ab.2015.12.009. [DOI] [PubMed] [Google Scholar]

- 14.Xu H. D., Shi S. P., Wen P. P., Qiu J. D. SuccFind: a novel succinylation sites online prediction tool via enhanced characteristic strategy. Bioinformatics. 2015;31(23):3748–3750. doi: 10.1093/bioinformatics/btv439. [DOI] [PubMed] [Google Scholar]

- 15.Hasan M. M., Yang S., Zhou Y., Mollah M. N. SuccinSite: a computational tool for the prediction of protein succinylation sites by exploiting the amino acid patterns and properties. Molecular BioSystems. 2016;12(3):786–795. doi: 10.1039/C5MB00853K. [DOI] [PubMed] [Google Scholar]

- 16.Ai H., Wu R., Zhang L., et al. pSuc-PseRat: predicting lysine succinylation in proteins by exploiting the ratios of sequence coupling and properties. Journal of Computational Biology. 2017;24(10):1050–1059. doi: 10.1089/cmb.2016.0206. [DOI] [PubMed] [Google Scholar]

- 17.Dehzangi A., López Y., Lal S. P., et al. PSSM-Suc: accurately predicting succinylation using position specific scoring matrix into bigram for feature extraction. Journal of Theoretical Biology. 2017;425:97–102. doi: 10.1016/j.jtbi.2017.05.005. [DOI] [PubMed] [Google Scholar]

- 18.López Y., Dehzangi A., Lal S. P., et al. SucStruct: prediction of succinylated lysine residues by using structural properties of amino acids. Analytical Biochemistry. 2017;527:24–32. doi: 10.1016/j.ab.2017.03.021. [DOI] [PubMed] [Google Scholar]

- 19.Jia J., Liu Z., Xiao X., Liu B., Chou K. C. pSuc-Lys: predict lysine succinylation sites in proteins with PseAAC and ensemble random forest approach. Journal of Theoretical Biology. 2016;394:223–230. doi: 10.1016/j.jtbi.2016.01.020. [DOI] [PubMed] [Google Scholar]

- 20.Hasan M. M., Khatun M. S., Mollah M. N. H., Yong C., Guo D. A systematic identification of species-specific protein succinylation sites using joint element features information. International Journal of Nanomedicine. 2017;Volume 12:6303–6315. doi: 10.2147/IJN.S140875. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Ning Q., Zhao X., Bao L., Ma Z., Zhao X. Detecting succinylation sites from protein sequences using ensemble support vector machine. BMC Bioinformatics. 2018;19(1):p. 237. doi: 10.1186/s12859-018-2249-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.de Brevern A. G., Hasan M. M., Kurata H. GPSuc: Global Prediction of Generic and Species-specific Succinylation Sites by aggregating multiple sequence features. PLoS One. 2018;13(10) doi: 10.1371/journal.pone.0200283. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Dehzangi A., López Y., Lal S. P., et al. Improving succinylation prediction accuracy by incorporating the secondary structure via helix, strand and coil, and evolutionary information from profile bigrams. PLoS One. 2018;13(2, article e0191900) doi: 10.1371/journal.pone.0191900. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.López Y., Sharma A., Dehzangi A., et al. Success: evolutionary and structural properties of amino acids prove effective for succinylation site prediction. BMC Genomics. 2018;19(S1):p. 923. doi: 10.1186/s12864-017-4336-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Kao H.-J., Nguyen V.-N., Huang K.-Y., Chang W.-C., Lee T.-Y. SuccSite: incorporating amino acid composition and informative k-spaced amino acid pairs to identify protein succinylation sites. Genomics, Proteomics & Bioinformatics. 2020;18(2):208–219. doi: 10.1016/j.gpb.2018.10.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Huang K.-Y., Hsu J. B.-K., Lee T.-Y. Characterization and identification of lysine succinylation sites based on deep learning method. Scientific Reports. 2019;9(1, article 16175) doi: 10.1038/s41598-019-52552-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Zhang L., Liu M., Qin X., Liu G., Ding H. Succinylation Site Prediction Based on Protein Sequences Using the IFS- LightGBM (BO) Model. Computational and Mathematical Methods in Medicine. 2020;2020:15. doi: 10.1155/2020/8858489.8858489 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Hasan M. M., Khatun M. S., Kurata H. Large-scale assessment of bioinformatics tools for lysine succinylation sites. Cell. 2019;8(2):p. 95. doi: 10.3390/cells8020095. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Thapa N., Chaudhari M., McManus S., et al. DeepSuccinylSite: a deep learning based approach for protein succinylation site prediction. BMC Bioinformatics. 2020;21(S3):p. 63. doi: 10.1186/s12859-020-3342-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Liu Z., Cao J., Gao X., et al. CPLA 1.0: an integrated database of protein lysine acetylation. Nucleic Acids Research. 2011;39(suppl_1):D1029–D1034. doi: 10.1093/nar/gkq939. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Liu Z., Wang Y., Gao T., et al. CPLM: a database of protein lysine modifications. Nucleic Acids Research. 2014;42(D1):D531–D536. doi: 10.1093/nar/gkt1093. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Xu H., Zhou J., Lin S., Deng W., Zhang Y., Xue Y. PLMD: an updated data resource of protein lysine modifications. Journal of Genetics and Genomics. 2017;44(5):243–250. doi: 10.1016/j.jgg.2017.03.007. [DOI] [PubMed] [Google Scholar]

- 33.Huang Y., Niu B., Gao Y., Fu L., Li W. CD-HIT suite: a web server for clustering and comparing biological sequences. Bioinformatics. 2010;26(5):680–682. doi: 10.1093/bioinformatics/btq003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Li W., Godzik A. Cd-hit: a fast program for clustering and comparing large sets of protein or nucleotide sequences. Bioinformatics. 2006;22(13):1658–1659. doi: 10.1093/bioinformatics/btl158. [DOI] [PubMed] [Google Scholar]

- 35.Huang G., Lu L., Feng K., et al. Prediction of S-nitrosylation modification sites based on kernel sparse representation classification and mRMR algorithm. BioMed Research International. 2014;2014:10. doi: 10.1155/2014/438341.438341 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Xiang Q., Feng K., Liao B., Liu Y., Huang G. Prediction of lysine malonylation sites based on pseudo amino acid. Combinatorial Chemistry & High Throughput Screening. 2017;20(7):622–628. doi: 10.2174/1386207320666170314102647. [DOI] [PubMed] [Google Scholar]

- 37.Mikolov T., Sutskever I., Chen K., Corrado G. S., Dean J. Advances in neural information processing systems. Curran Associates Inc.; 2013. Distributed representations of words and phrases and their compositionality; pp. 3111–3119. [Google Scholar]

- 38.Mikolov T., Chen K., Corrado G., JJapa D. Efficient estimation of word representations in vector space. 2013. https://arxiv.org/abs/1301.3781.

- 39.LeCun Y., Boser B., Denker J. S., et al. Backpropagation applied to handwritten zip code recognition. Neural Computation. 1989;1(4):541–551. doi: 10.1162/neco.1989.1.4.541. [DOI] [Google Scholar]

- 40.Lecun Y., Bottou L., Bengio Y., Haffner P. Gradient-based learning applied to document recognition. Proceedings of the IEEE. 1998;86(11):2278–2324. doi: 10.1109/5.726791. [DOI] [Google Scholar]

- 41.Krizhevsky A., Sutskever I., Hinton G. E. Imagenet classification with deep convolutional neural networks. Communications of the ACM. 2017;60(6):84–90. doi: 10.1145/3065386. [DOI] [Google Scholar]

- 42.Simonyan K., Zisserman A. Very deep convolutional networks for large-scale image recognition. 2014. https://arxiv.org/abs/1409.1556.

- 43.Szegedy C., Liu W., Jia Y., et al. Going deeper with convolutions. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2015; pp. 1–9. [Google Scholar]

- 44.He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition; IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2016. pp. 778–779. [Google Scholar]

- 45.Pearlmutter B. A. Learning state space trajectories in recurrent neural networks. Neural Computation. 1989;1(2):263–269. doi: 10.1162/neco.1989.1.2.263. [DOI] [Google Scholar]

- 46.Giles C. L., Kuhn G. M., Williams R. J. Dynamic recurrent neural networks: theory and applications. IEEE Transactions on Neural Networks. 1994;5(2):153–156. doi: 10.1109/TNN.1994.8753425. [DOI] [Google Scholar]

- 47.Hochreiter S., Schmidhuber J. Long short-term memory. Neural Computation. 1997;9(8):1735–1780. doi: 10.1162/neco.1997.9.8.1735. [DOI] [PubMed] [Google Scholar]

- 48.Schuster M., Paliwal K. K. Bidirectional recurrent neural networks. IEEE Transactions on Signal Processing. 1997;45(11):2673–2681. doi: 10.1109/78.650093. [DOI] [Google Scholar]

- 49.Hinton G. E., Srivastava N., Krizhevsky A., Sutskever I., Salakhutdinov R. R. Improving neural networks by preventing co-adaptation of feature detectors. 2012. https://arxiv.org/abs/1207.0580.

- 50.Srivastava N. Improving neural networks with dropout. University of Toronto. 2013;182(566):p. 7. [Google Scholar]

- 51.Bouthillier X., Konda K., Vincent P., Memisevic R. Dropout as data augmentation. 2015. https://arxiv.org/abs/1506.08700.

- 52.Baldi P., Sadowski P. The dropout learning algorithm. Artificial Intelligence. 2014;210:78–122. doi: 10.1016/j.artint.2014.02.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Srivastava N., Hinton G., Krizhevsky A., Sutskever I., Salakhutdinov R. Dropout: a simple way to prevent neural networks from overfitting. Journal of Machine Learning Research. 2014;15(1):1929–1958. [Google Scholar]

- 54.Mi H., Muruganujan A., Ebert D., Huang X., Thomas P. D. PANTHER version 14: more genomes, a new PANTHER GO-slim and improvements in enrichment analysis tools. Nucleic Acids Research. 2018;47(D1):D419–D426. doi: 10.1093/nar/gky1038. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Mi H., Muruganujan A., Huang X., et al. Protocol update for large-scale genome and gene function analysis with the PANTHER classification system (v.14.0) Nature Protocols. 2019;14(3):703–721. doi: 10.1038/s41596-019-0128-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Huang D. W., Sherman B. T., Lempicki R. A. Bioinformatics enrichment tools: paths toward the comprehensive functional analysis of large gene lists. Nucleic Acids Research. 2009;37(1):1–13. doi: 10.1093/nar/gkn923. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Huang D. W., Sherman B. T., Lempicki R. A. Systematic and integrative analysis of large gene lists using DAVID bioinformatics resources. Nature Protocols. 2009;4(1):44–57. doi: 10.1038/nprot.2008.211. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

KEGG enrichmeent analysis of KEGG pathway for 5 species.

GO enrichment analysis of GO terms for 5 species.

Data Availability Statement

The experimental succinylation and nonsuccinylation sites used to support the findings of this study have been deposited in the website http://8.129.111.5/ and are freely available to all scientific communities.