Abstract

Rationale and Objectives:

Our primary aim was to use radiology preprocessors (RPs) to improve radiology reports by increasing the concordance of target lesion measurements with oncology records. We also assessed whether RP review of exams, including quantification of tumor burden, led to faster notification of incidental actionable findings to referring clinicians and saved clinical radiologists exam interpretation time.

Materials and Methods:

In this prospective quality improvement initiative, RPs annotated lesions on CT exams before radiologist interpretation. Clinical radiologists then hyperlinked approved measurements into interactive reports during interpretation. Measurement concordance was evaluated against our tumor measurement radiologist, the determinant of tumor burden. Actionable finding detection rates and notification times were also estimated. Clinical radiologist interpretation time savings were calculated relative to an established average CT chest, abdomen, and pelvis interpretation time.

Results:

RPs assessed 1287 body CT exams, including 812 follow-up CT chest, abdomen, and pelvis studies, of which 95 (11.7%) had 241 verified target lesions. Target lesion measurement concordance improved (66.4% vs. 22.4%). RPs detected 93.1% (27/29) of incidental actionable findings, and referring clinicians were notified earlier by a median of 1 hour (range: 15 minutes–16 hours). Radiologist exam interpretation times decreased by 37%.

Conclusions:

This workflow resulted in three-fold improved target lesion measurement concordance with oncology records, earlier detection and faster notification of incidental actionable findings to referring clinicians, and decreased exam interpretation times for clinical radiologists. These findings demonstrate potential roles for automation (such as AI) to improve report value, worklist prioritization, and patient care.

Keywords: Radiology preprocessors, Tumor quantification, Cancer clinical trials, Actionable findings, Artificial intelligence

INTRODUCTION

Metastatic tumor measurements are frequently used to guide therapeutic response assessment in cancer patients and are required for those enrolled in clinical trials, providing oncologists with objective quantification to calculate treatment response. In this ongoing prospective quality improvement initiative, we applied a workflow in which radiology preprocessors (RPs) premeasured lesions, simulating an automated process that is currently under development at our clinical center with in-house deep learning tools.

With the emerging importance of tumor metrics and advancements in computed tomography (CT) and magnetic resonance imaging, various tumor imaging criteria have been developed to assess disease response to treatment (1). Of these, Response Evaluation Criteria in Solid Tumors (RECIST) 1.1 is the most widely used criterion to standardize selection and measurement of target and nontarget lesions. For RECIST 1.1, CT is the most commonly used imaging modality to perform lesion measurements (2). Additionally, CT chest, abdomen, and pelvis (CAP) examinations are frequently used for oncologic follow-up evaluations (3).

However, many measurements in radiology reports lack concordance with those in oncology records used to determine disease burden (4–7). Advances in Picture Archiving and Communication System (PACS) tools (5) and artificial intelligence (AI) (8) have therefore been introduced as potential solutions to help radiologists improve the consistency of tumor quantification. Analogous to these automated systems and AI algorithms, RPs trained to accomplish specific tasks can provide useful insight into defining roles for integrating these technologies within radiology workflows to enhance precision and efficiency (9). We hypothesized that RPs (trainees of various educational levels, including student interns and postdoctoral fellows), given specific training for defined tasks in the augmented workflow, would improve the concordance of radiology report lesion measurements with oncology records by selecting and measuring lesions before radiologist review. We evaluated this by comparing RP measurements with those made by our tumor measurement radiologist (TMR), who meets regularly with oncologists to verify target lesion selection and measurements. Because their combined efforts are used to determine therapeutic response, the TMR measurements served as the “ground truth” for concordance in this study.

Additionally, we evaluated the potential for worklist prioritization by recording incidental actionable findings detected by RPs and determining turn-around times for reporting these findings to referring clinicians. Incidental actionable findings are defined as unexpected imaging abnormalities that require nonstandard expeditious communication to referring clinicians within minutes, hours, or days (10), depending on criticality. Lastly, we hypothesized that radiologists would experience decreased CT exam interpretation times after implementation of our RP augmented workflow.

MATERIALS AND METHODS

Study Design and Overview

Our center’s Institutional Review Board reviewed this project and determined that it qualified as a quality improvement initiative. This project involved RPs prospectively assessing body CT exams in advance of clinical radiologists.

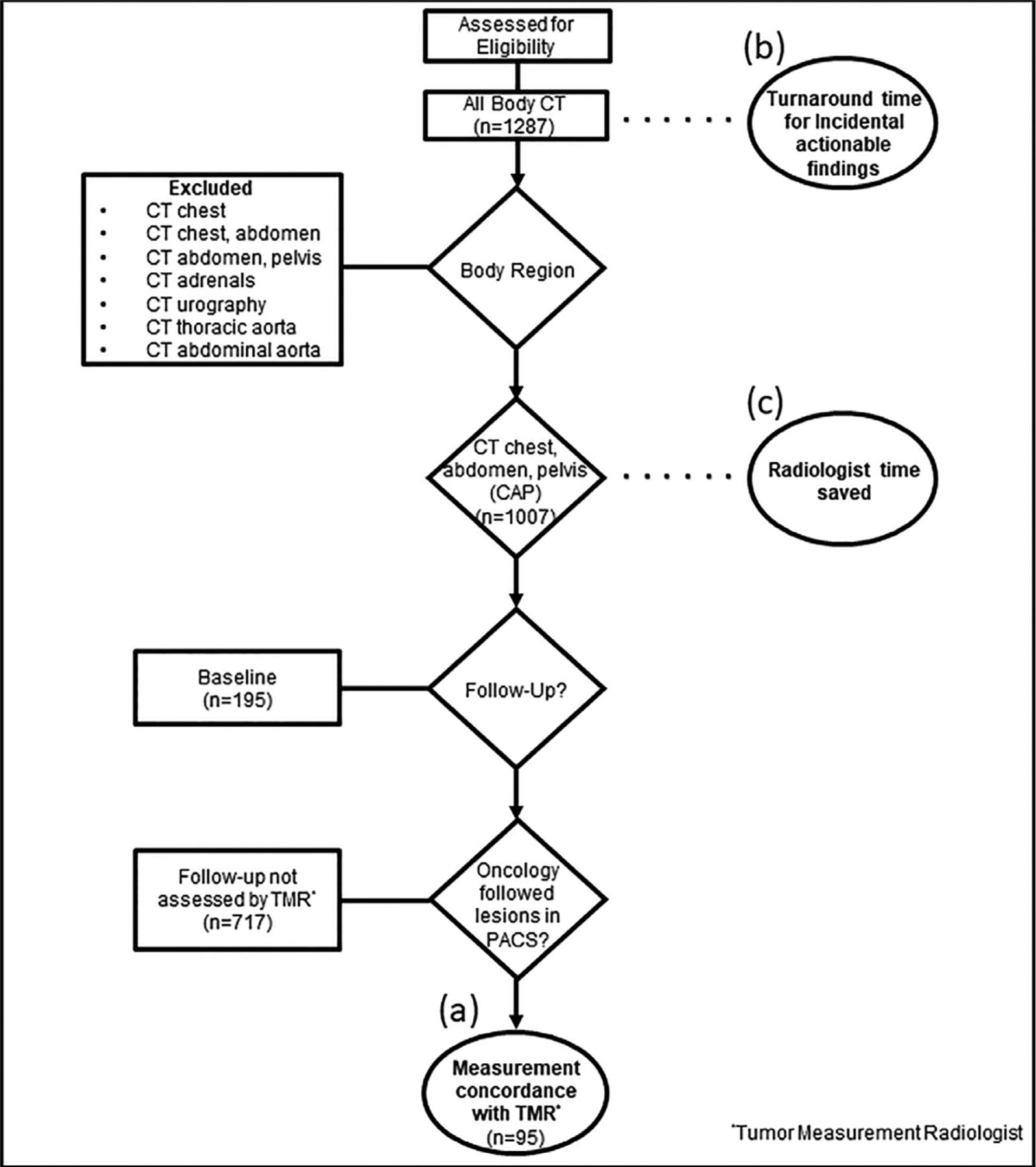

In the following sections, we first describe the roles and training of participating RPs, followed by our typical (standard) clinical radiologist workflow, our routine TMR workflow for patients on clinical trials, and our RP augmented workflow (a slight modification of the clinical radiologist workflow). We also outline CT exam demographics for this study; inclusion criteria and study design specifics are illustrated in Figure 1. We then describe evaluation approaches for our three outcomes: (1) target lesion measurement concordance, (2) detection rates and turn-around times for incidental actionable findings, and (3) clinical radiologist exam interpretation times. The section ends with the statistical analysis.

Figure 1.

Study inclusion flowchart with exclusion criteria, depicting the three variables assessed in this study: (a) Lesion measurement concordance with the tumor measurement radiologist (TMR); (b) turn-around notification times of incidental actionable findings to referring oncology teams; and (c) clinical radiologist time savings.

RP Roles and Training

Six RPs of various educational levels (student interns and postdoctoral fellows) participated in this study. Their roles included identifying and measuring previously quantified lesions (target lesions for clinical trials, if applicable), as well as new lesions and actionable findings (e.g., pulmonary embolus, bowel obstruction, and abscess), on baseline and follow-up CT exams before radiologist review.

RP training required completion of six internally developed video training modules explaining the tumor quantification process and our RP augmented workflow. RPs first received formal presentations on our PACS functionalities and CT image acquisition, followed by introductory materials on tumor imaging criteria (primarily RECIST 1.1) (1,11) and online CT anatomy resources. These included the “w-radiology” and “e-anatomy” websites for basic and advanced CT anatomy references, respectively (12,13). W-radiology is a free online imaging anatomy atlas that labels important anatomical structures and vasculature in the human body (12). E-anatomy, also available online, is a more detailed, subscription-based imaging anatomy atlas that provides labels for additional imaging modalities (e.g., PET/CT) and vasculature (13).

RPs also learned about different types of actionable findings based on the American College of Radiology (ACR) 2014 guidelines (10). Upon completion of training, RPs took a standardized 50-question quiz on fundamental concepts of tumor quantification, CT anatomy, and incidental findings, which was intended to reinforce learning.

RPs received direct feedback from any of four participating board-certified clinical radiologists for their first 10 CT exams via one-on-one review, similar to the training provided to radiology residents. After this initial period, RPs reviewed cases with the clinical radiologists on an as-needed basis. To date, participating RPs have assessed at least 50 CT exams each, a majority of which were CAP studies.

RPs were specifically trained to review body CT exams and to perform tumor quantification and detection of imaging abnormalities. For the purposes of this study, they were confined to these roles and were not expected to provide clinical interpretation or dictate reports for these CT exams (9). All RP measurements required approval from board-certified radiologists before appearing in finalized reports (9).

Standard Clinical Radiologist Workflow

Once CT scans were completed and available in PACS, clinical radiologists at our research center opened exams for interpretation and dictated reports. Because most of our CT scans were routine or follow-up research exams, including cancer staging studies, they were generally reviewed in the order in which they appeared on the worklist unless marked as “stat” or “inpatient” in PACS. During exam interpretation, clinical radiologists annotated previously measured and newly identified lesions. During report dictation, radiologists linked these measurements to their associated text descriptions via hyperlinks in interactive radiology reports (14). After completing exam review, they repeated this process for subsequent CT studies in the worklist.

Our radiologists used interactive reporting exclusively, connecting their textual descriptions to annotations (e.g., measurements) as hyperlinks in our PACS (Vue PACS, version 12.2, Carestream Health, Rochester, NY). These annotations were also visible in the PACS database bookmark tables, allowing quick reference to lesions and associated metadata such as target lesion designation, lesion name, measurement dimensions, and measurement creator. These software advances were developed over the last 10 years to improve tumor quantification and automated detection (5,14); however, no software was developed specifically for this phase of our research.

Tumor Measurement Radiologist Workflow

For CT exams of patients enrolled in cancer clinical trials at our institution, the TMR selected and measured lesions in accordance with tumor imaging criteria as specified by referring oncology teams (6,7). The most commonly requested criteria were those of RECIST 1.1. Out of 812 follow-up CT CAP exams included in this study, 11.7% (95/812) were evaluated by the TMR.

After exam interpretation and report dictation by clinical radiologists, the TMR reviewed lesion measurements in radiology reports along with other annotations seen in the corresponding bookmark tables. The TMR then selected target lesions (some of which were already measured by clinical radiologists) using clinical trial protocol-specific tumor imaging criteria, either with or without clinical context provided by referring oncology teams. For these exams, the TMR saved key images of approved target lesions on our PACS and recorded these measurements on separate documentation after achieving consensus with consulting oncologists.

The TMR workflow was independent and not study-specific as this occurred as part of standard cancer clinical trial protocols at our institution. The TMR was also not directly involved in the clinical radiologist or RP augmented workflows.

RP Augmented Workflow

This workflow consisted of RPs opening body CT exams following scan completion and before clinical radiologist review (as opposed to radiologists opening them first as described in the “standard clinical radiologist workflow” section). RPs evaluated CT exams in the worklist that were not yet reviewed by participating clinical radiologists in order to avoid interference with existing turn-around times. These included unopened exams performed earlier in the day, resulting from our typical backlogged radiologist workflow.

Participating RPs identified and measured lesions prospectively for all exams evaluated in this workflow. After RPs completed their assessments, clinical radiologists opened these exams and applied RP measurements into their reports, if approved. Collected data were recorded by RPs and stored in a research workflow database on Microsoft Excel. Data included information pertaining to exam type, number of measured lesions, RP exam assessment time, and actionable findings (if applicable).

Participating radiologists interpreted RP annotated exams in the usual manner, with annotations hidden on initial review. Once a qualitative assessment was completed, radiologists toggled RP annotations on with a keyboard shortcut and either approved or modified them before inclusion in the final report. After completing exam review, radiologists recorded their interpretation times and the number and type of RP discrepancies. RPs then transcribed this information into our research workflow database.

CT Exam Demographics

In the workflows of four participating clinical radiologists, RPs prospectively evaluated 1287 consecutive baseline and follow-up body CT exams of clinical trial patients from May 2018 to May 2019. Among these, 95 of 812 follow-up CT CAP studies (11.7%) were evaluated by the TMR, yielding 241 verified target lesions that were assessed for lesion measurement concordance between radiology and oncology reports. Notably, the TMR was able to assess only a small proportion of the follow-up CT exams included in this study because staffing and resources were inadequate to handle all tumor quantification requests from referring clinicians at our institution.

Evaluation of Target Lesion Selection and Measurement Concordance

To meet our primary objective of improved concordance, RPs quantitatively assessed lesions previously measured and reported by clinical radiologists, with attention to target lesions labeled by the TMR (14). Only follow-up CT CAP exams evaluated by our TMR were included in this analysis (11.7%, 95/812) because RPs were trained to compare and remeasure previously annotated target lesions from prior exams. In contrast, baseline CT CAP exams were excluded from evaluation of this outcome because RPs were not specifically trained to select target lesions on initial (baseline) exams, which have no comparison exams with prior measurements, and there was no way to confirm whether these exams would be reviewed by the TMR.

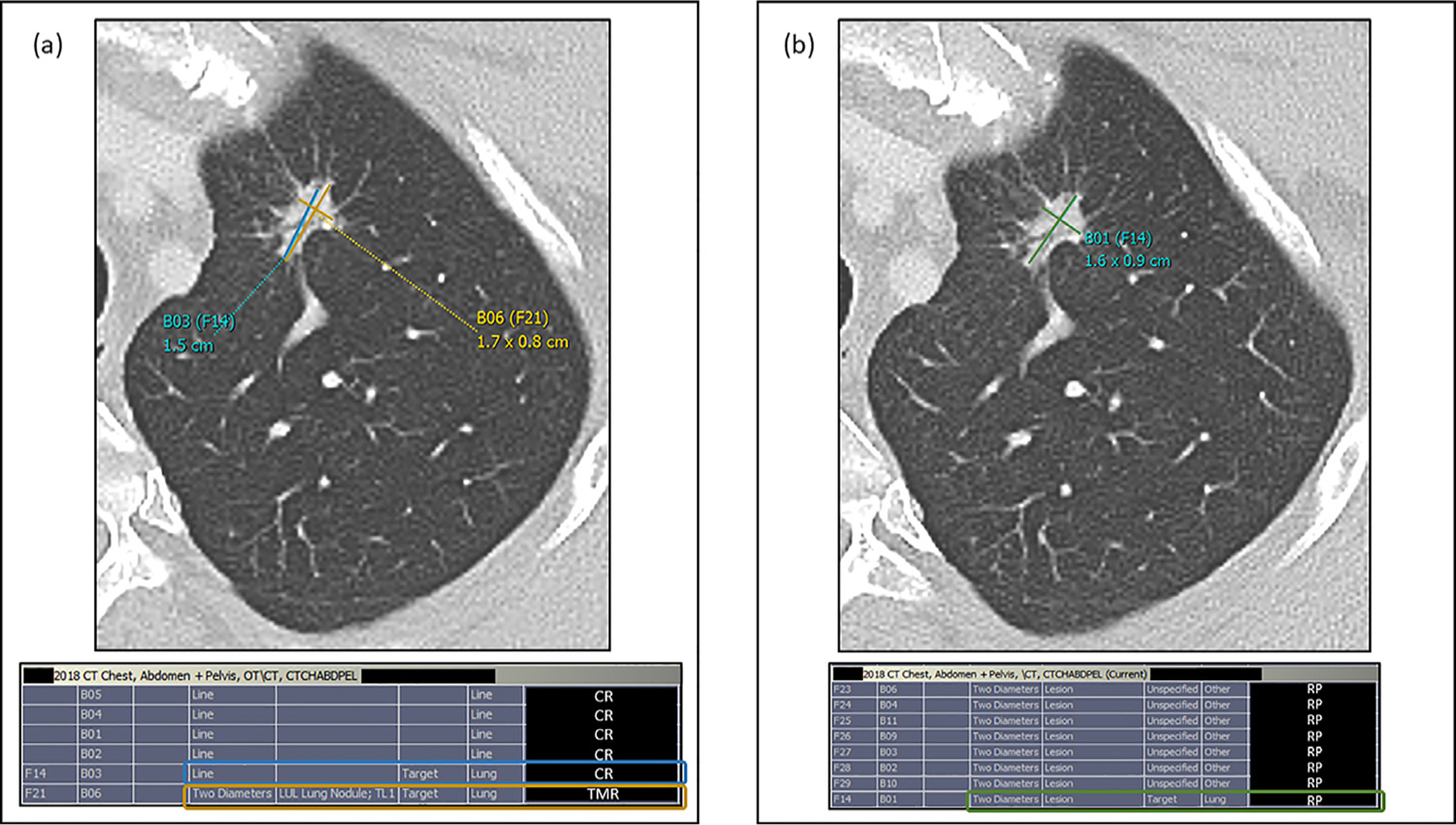

RP concordance with the TMR was determined by comparing target lesion selection and measurements from CT CAP exams in the RP augmented workflow with CT CAP exams in the standard clinical radiologist workflow. This was accomplished by evaluating the PACS bookmark tables for these exams where the TMR either accepted (no modifications) or remeasured target lesion annotations. Measurements were marked as concordant when accepted as-is by the TMR and saved as key images in PACS. Conversely, annotations were reported as discrepant when they were modified or remeasured by the TMR. This is further illustrated in Figure 2a and b.
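The concordance rule above can be expressed as a small classification over bookmark-table entries. The sketch below is a minimal illustration, assuming each TMR-verified target lesion has been exported as a record with two flags: whether the TMR remeasured or modified it, and whether the original annotation was saved as a key image. The `TargetLesion` record and its field names are hypothetical and do not correspond to actual Vue PACS fields or exports.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TargetLesion:
    """Hypothetical summary of one TMR-verified target lesion, as it might be
    read from a PACS bookmark table (field names are illustrative only)."""
    lesion_id: str
    workflow: str              # "RP" (augmented) or "standard"
    remeasured_by_tmr: bool    # TMR modified or redrew the annotation
    saved_as_key_image: bool   # TMR saved the original annotation as a key image


def is_concordant(lesion: TargetLesion) -> bool:
    """Concordant only if the TMR accepted the annotation as-is."""
    return lesion.saved_as_key_image and not lesion.remeasured_by_tmr


def concordance_rate(lesions: list[TargetLesion], workflow: str) -> float:
    """Fraction of verified target lesions in one workflow that were concordant."""
    subset = [lesion for lesion in lesions if lesion.workflow == workflow]
    if not subset:
        raise ValueError(f"no lesions for workflow {workflow!r}")
    return sum(is_concordant(lesion) for lesion in subset) / len(subset)


# Toy records, not study data: the same three lesions scored in each workflow.
toy = [
    TargetLesion("L1", "RP", remeasured_by_tmr=False, saved_as_key_image=True),
    TargetLesion("L2", "RP", remeasured_by_tmr=False, saved_as_key_image=True),
    TargetLesion("L3", "RP", remeasured_by_tmr=True, saved_as_key_image=False),
    TargetLesion("L1", "standard", remeasured_by_tmr=True, saved_as_key_image=False),
    TargetLesion("L2", "standard", remeasured_by_tmr=True, saved_as_key_image=False),
    TargetLesion("L3", "standard", remeasured_by_tmr=False, saved_as_key_image=True),
]
print(concordance_rate(toy, "RP"), concordance_rate(toy, "standard"))
```

Requiring both conditions mirrors the definition in the text: an annotation counts as concordant only when it was accepted without modification and saved as a key image.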

Figure 2.

(a) Example target lesion measurement discrepancy on a prior CT CAP exam, with corresponding measured lesions in the PACS bookmark table, for a patient with lung cancer. In this standard workflow (status quo, no radiology preprocessor [RP] involvement), the clinical radiologist measured a spiculated left apical lung nodule (designated target lesion according to clinical trial protocol) using the single-line tool in our PACS (B03(F14), blue); the nodule was remeasured by our tumor measurement radiologist (TMR) using the PACS two-diameter tool (B06(F21), yellow) and recorded as a measurement discrepancy for the purposes of this study. The bookmark table confirms this discrepancy, showing two different measurements of the same target lesion: the initial measurement by the clinical radiologist (CR, blue rectangle) and the remeasurement by the TMR (yellow rectangle). (b) Example target lesion measurement concordance on a follow-up CT CAP exam for the same patient. In the RP augmented workflow, the RP measurement of the same left apical lung nodule target lesion using the two-diameter tool (B01(F14), green) was accepted by the TMR, as it was not modified and was saved as a key image in our PACS. The bookmark table confirms RP measurement concordance with the TMR, as the RP measurement of the target lesion (RP, green rectangle) was not remeasured or modified by the TMR. CAP, chest, abdomen, pelvis; PACS, Picture Archiving and Communication System.

Evaluation of Incidental Actionable Findings

For the outcomes of detection rates and turn-around times of incidental actionable findings, 29 body CT exams (2.3%, 29/1287) with incidental actionable findings in the RP augmented workflow were included.

RPs reported these findings to clinical radiologists immediately upon identification. Both correctly identified and missed actionable findings were recorded in the research workflow database to calculate RP detection rates. Turn-around notification times to referring clinicians were estimated by counting the number of CT exams remaining ahead on the worklist (the backlog). For example, using an established average of 12 minutes for a radiologist to review one CT CAP exam (15), 10 CT CAP exams ahead on the worklist would equate to an estimated 120 minutes before the radiologist opened the exam in question.
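The backlog-based estimate is simple arithmetic: the number of exams ahead on the worklist multiplied by the average review time per exam. The sketch below reproduces the worked example, assuming every exam ahead takes exactly the 12-minute average; the function names are hypothetical, and the median-and-range helper only mirrors how turn-around times are reported in this study.

```python
from statistics import median

AVG_CT_CAP_READ_MIN = 12  # established average review time per CT CAP exam (ref. 15)


def estimated_wait_minutes(exams_ahead: int, avg_read_min: float = AVG_CT_CAP_READ_MIN) -> float:
    """Estimated delay before a radiologist would open the flagged exam in the
    standard workflow, assuming each exam ahead of it takes the average read time."""
    if exams_ahead < 0:
        raise ValueError("exams_ahead must be non-negative")
    return exams_ahead * avg_read_min


def median_and_range(values: list[float]) -> tuple[float, float, float]:
    """Summarize as (median, minimum, maximum)."""
    if not values:
        raise ValueError("no values")
    return median(values), min(values), max(values)


print(estimated_wait_minutes(10))  # 120, the worked example in the text

# Hypothetical backlog counts for three flagged exams, not study data.
waits = [estimated_wait_minutes(n) for n in (2, 5, 8)]
print(median_and_range(waits))  # (60, 24, 96)
```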

Evaluation of Clinical Radiologist Interpretation Time Saved

For the outcome of clinical radiologist time saved, CT CAP exams included early in the study were assessed (82.1%, 1056/1287) and compared to previously established CT CAP interpretation times (15).

To determine time saved by clinical radiologists, their CT CAP exam interpretation times in the RP augmented workflow were subtracted from an established average CT CAP exam interpretation time of 12 minutes using multimedia interactive reporting without RPs (15). These differences were recorded in our research workflow database. Once time savings were evident, recording of radiologist interpretation times was discontinued after 1056 RP evaluated CT exams because of a PACS upgrade that further improved workflow efficiency.
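The subtraction described above, and the resulting relative decrease, can be sketched as follows. This is a minimal illustration assuming per-exam interpretation times are available as a list of minutes; the helper names and the sample values are hypothetical and are not study data.

```python
from statistics import mean

BASELINE_READ_MIN = 12.0  # established average CT CAP interpretation time without RPs (ref. 15)


def minutes_saved(read_minutes: list[float], baseline: float = BASELINE_READ_MIN) -> list[float]:
    """Per-exam time saved: baseline minus each RP-augmented interpretation time."""
    return [baseline - t for t in read_minutes]


def percent_reduction(read_minutes: list[float], baseline: float = BASELINE_READ_MIN) -> float:
    """Relative decrease of the mean interpretation time versus the baseline, in percent."""
    if not read_minutes:
        raise ValueError("no interpretation times recorded")
    return 100.0 * (baseline - mean(read_minutes)) / baseline


# Hypothetical interpretation times (minutes), not study data.
times = [6.0, 8.0, 10.0]
print(minutes_saved(times))  # [6.0, 4.0, 2.0]
print(percent_reduction(times))
```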

Statistical Analysis

Statistical analyses for the three study outcomes are reported with the corresponding results. Target lesion measurement concordance with the TMR was compared between RPs and clinical radiologists using a paired t test to assess statistical significance. Turn-around times for incidentally detected actionable findings were reported as a median with range. Clinical radiologist exam interpretation time savings were evaluated using a one-sample t test against a previously established, constant average time value (15).
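For readers less familiar with these tests, the statistics can be sketched in a few lines. Comparing a sample mean with a fixed reference value, as done here for interpretation times, corresponds to a one-sample t test, and a paired t test is a one-sample t test of the within-pair differences against zero. The sketch below computes only the t statistic, using Python's standard library, and is not the authors' analysis code; in practice, `scipy.stats.ttest_1samp` and `scipy.stats.ttest_rel` compute the same statistics and also return p-values. The sample values are hypothetical.

```python
import math
from statistics import mean, stdev


def one_sample_t(sample: list[float], mu0: float) -> float:
    """t statistic for H0: the population mean equals the fixed value mu0.

    Degrees of freedom are len(sample) - 1; a p-value additionally needs the
    Student t distribution, which is omitted here.
    """
    n = len(sample)
    if n < 2:
        raise ValueError("need at least two observations")
    sd = stdev(sample)
    if sd == 0:
        raise ValueError("t is undefined when all observations are identical")
    return (mean(sample) - mu0) / (sd / math.sqrt(n))


def paired_t(x: list[float], y: list[float]) -> float:
    """Paired t statistic: a one-sample t test of the differences x_i - y_i against 0."""
    if len(x) != len(y):
        raise ValueError("paired samples must have equal length")
    return one_sample_t([a - b for a, b in zip(x, y)], 0.0)


# Hypothetical interpretation times (minutes) compared with the 12-minute reference.
print(one_sample_t([6.0, 8.0, 10.0], 12.0))
```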

RESULTS

Cancer subtypes with metastatic disease evaluated by RPs on CT imaging included lymphoma (9.9%), lung cancer (9.1%), prostate cancer (7.2%), mesothelioma (4.4%), and bladder cancer (3.3%).

Lesion Measurement Concordance with Oncology Records

Based on retrospective review of bookmark tables within our PACS, there was improved concordance with our TMR utilizing an RP-assisted workflow in comparison to the standard radiologist workflow without assistance from RPs. Lesion measurement concordance was 54 out of 241 verified target lesions (22.4%) in non-RP standard clinical radiologist workflows, and 160 out of 241 verified target lesions (66.4%) in RP-assisted workflows. This represents a three-fold improvement in workflows with RPs proactively measuring lesions prior to radiologist review of these CT exams. An example of target lesion measurement concordance and discrepancy is shown in Figure 2a and b. Lesion measurement concordance of RPs and clinical radiologists with the TMR was found to be statistically significant (p < 0.001) using a paired t test.
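The three-fold figure follows directly from the counts above. Because both workflows are scored on the same 241 verified target lesions, the ratio of the two proportions reduces to the ratio of concordant counts:

```latex
\[
p_{\mathrm{RP}} = \frac{160}{241} \approx 0.664, \qquad
p_{\mathrm{std}} = \frac{54}{241} \approx 0.224, \qquad
\frac{p_{\mathrm{RP}}}{p_{\mathrm{std}}} = \frac{160}{54} \approx 2.96
\]
```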

Turn-around Times for Incidental Actionable Findings

In our RP assisted workflow, 27 incidental actionable findings were identified by RPs and immediately relayed to radiologists. To date, incidental actionable results were successfully identified by RPs on CT exams at a 93.1% (27/29) detection rate.

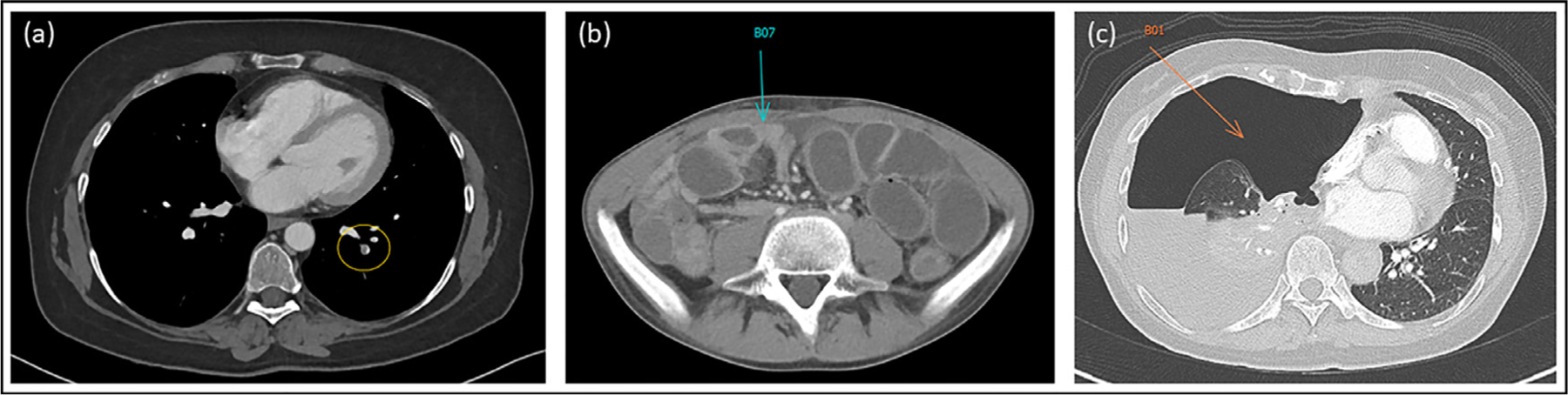

These findings included 16 cases of incidental pulmonary embolism (PE; the most common), three cases of small bowel obstruction, two cases of severe hydronephrosis with caliectasis secondary to significant ureteric stent misplacement, and one case each of acute appendicitis, intra-abdominal abscess, tension pneumothorax, inferior vena cava (IVC) thrombus, large bowel perforation, and gastric outlet obstruction. Referring clinicians were notified of actionable findings earlier in this workflow than in the standard clinical radiologist workflow, by a median of 1 hour (range: 15 minutes–16 hours). Of note, these times were recorded for only 11 CT exams with incidental actionable findings, to minimize interference with communication between radiologists and referring clinicians. Examples of incidental actionable findings discovered by RPs are shown in Figure 3a–c.

Figure 3.

Examples of three incidental actionable findings (from CT exams of three different patients) successfully detected and annotated by RPs in the RP augmented workflow. (a) Left basal segmental pulmonary arterial filling defect consistent with pulmonary embolism (PE, yellow oval). (b) Significant distention of small bowel loops with corresponding air-fluid levels on abdominal X-ray (not shown) and transition point of obstruction (blue arrow) consistent with small bowel obstruction (SBO). (c) Large amount of free air in right thoracic cavity (orange arrow) with associated collapse of the right lung and leftward mediastinal shift consistent with tension pneumothorax.

Clinical Radiologist Interpretation Times

There was a notable difference in interpretation time between status quo non-RP workflows and RP assisted workflows, with radiologist interpretation times decreasing from 12 minutes (established average) to 7.5 minutes. This represents an overall 37% decrease in time spent by radiologists interpreting CT examinations after RPs were implemented into radiologist workflows. Clinical radiologist CT exam interpretation time savings were statistically significant (p < 0.001) compared with the previously reported CT CAP interpretation time of 12 minutes using a one-sample t test.

DISCUSSION

With RPs performing tumor quantification in the RP augmented workflow, we demonstrate a three-fold improvement in target lesion selection and measurement concordance with the TMR and oncology records on follow-up CT exams in comparison to the standard workflow with clinical radiologists measuring lesions themselves. Similar to existing workflows that use computer-aided detection (CAD) systems, such as lung cancer screening (16), RPs focus primarily on tumor measurements, enhancing concordance with the TMR by avoiding duplicate measurements while also improving report value for referring clinicians (4,6,7). Furthermore, our PACS lesion management application tools have been shown to help achieve more consistent and concordant measurements by reducing interobserver variability between RPs, particularly on follow-up CT exams, through automatic linking of lesion measurements to prior scans using the PACS bookmark table and refined anatomical registration functionalities (5,17). Based on the significant improvement in lesion measurement concordance on follow-up CT exams in the RP augmented workflow, we anticipate increased use of RPs (and eventually automated systems) for quantitative assessment of both baseline and follow-up CT exams in the near future.

The number of CT exams reviewed by the TMR at our research center is relatively small for several reasons. Like many centers, our radiology core lab is severely understaffed and cannot support the majority of trials requiring quantification (7,18), which is part of the stimulus for this project. In contrast, our 253 oncologists and approximately 1600 ongoing research protocols have adequate third party staff outside of radiology to measure and manage lesions as most of our patients are in phase 0 (bench to bedside), 1 or 2 clinical trials.

Our RP augmented workflow also achieved a 93.1% (27/29) detection rate for incidental actionable (including some critical) findings on routine clinical trial CT exams. RPs reported actionable findings to radiologists immediately upon detection, expediting exam review and shortening notification turn-around times to referring clinicians by a median of 1 hour. Incidental actionable findings on routine CT exams would otherwise take longer to detect at our institution because of frequent case backlogs, remaining in the worklist for several hours if not ordered as stat, inpatient, or with acute clinical concerns. For this reason, we included all body CT exams (including chest, abdomen, or pelvis alone) to broaden earlier detection of incidental findings while also supporting our primary aim of improved oncologic quantification. Additionally, it is not unusual for some follow-up CT CAP exams to include only one or two of these anatomic regions (e.g., abdomen only).

This result further demonstrates the importance of expeditious identification and communication of actionable findings, supporting the use of automated systems in clinical applications to improve worklist prioritization and patient outcomes (10,19). As previously described by Prevedello et al., worklist prioritization is an important advantage of AI by alerting radiologists to actionable findings on imaging studies that have not yet been reviewed (20). The two incidental actionable results that went undetected in the RP augmented workflow were PEs. Both instances occurred within the first 50 CT exams (after initial training) evaluated by two of our RPs. For both situations, there was no adverse effect on the clinical workflow. In fact, clinical radiologists were able to review these exams sooner in comparison with the standard workflow because RP involvement minimized worklist backlogs. We believe incidental PEs encountered in subsequently assessed CT exams for this study were successfully discovered by RPs, possibly due to directed one-on-one feedback with the clinical radiologists for previously missed PEs and the addition of a new training module for PE detection.

Relative to the standard clinical radiologist workflow, average radiologist CT exam review times decreased by 37% in the RP augmented workflow as they did not have to spend additional time completing the mundane tasks of identifying and measuring target or nontarget lesions. With our body radiologist section being short staffed during this study, time savings were essential and this workflow subjectively improved the work environment, allowing for more manageable work hours. This increasingly efficient workflow process is supported by previous studies demonstrating time savings with the use of automated follow-up and segmentation tools in PACS (4,5,17).

In addition to these practical benefits, the annotation process also helps accelerate machine learning efforts. The hyperlink capability in our PACS has exponentially increased the number of lesion measurements since 2015, an experience also shared by the University of Virginia radiology department, which demonstrated widespread adoption of hyperlinked reports (21). As an example of success, interactive reporting with hyperlinks allowed for the 32K Lesion dataset to be made publicly available in 2018 (8). These hyperlinks directly connect image annotations to text descriptions from radiologists, acting as expert labeling for machine/deep learning models. Recent studies have shown the potential applicability of deep learning algorithms to detect characteristic imaging features for genetically defined diseases where bounding boxes are guided by radiologist annotations (22) as well as the possibility of distributing deep learning models for increased training efficiency (23). Furthermore, annotated image data (such as RP and radiologist lesion measurements from this study) can be made more readily available for machine learning development using the concept of local training and interinstitutional distribution of algorithms without transferring sensitive patient data, known as federated learning (24). Additionally, more consistent measurements by RPs (or automation) should help improve annotation quality for machine learning training data through better approximation of imaging abnormalities. Advancing technologies may also allow RPs and other automated processes to have more important roles in enhancing radiology workflows, especially in evolving interactive and synoptic reporting where image interaction and quantification directs more specific language (14,25).
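The aggregation step at the heart of federated learning can be sketched briefly to make the "no patient data transferred" point concrete. The example below assumes the federated averaging (FedAvg) scheme, one common approach that is not necessarily the one described in reference 24, and represents each institution's locally trained model as a flat list of floats; the function name and data layout are hypothetical.

```python
def federated_average(site_params: list[list[float]], site_sizes: list[int]) -> list[float]:
    """FedAvg-style aggregation: average each parameter across sites, weighting
    each site by its number of local training examples. Only these parameter
    vectors are shared; the images and annotations never leave each site."""
    if not site_params or len(site_params) != len(site_sizes):
        raise ValueError("need one parameter vector per site")
    dim = len(site_params[0])
    if any(len(p) != dim for p in site_params):
        raise ValueError("all sites must share the same model shape")
    total = sum(site_sizes)
    if total <= 0:
        raise ValueError("total training size must be positive")
    return [
        sum(p[i] * n for p, n in zip(site_params, site_sizes)) / total
        for i in range(dim)
    ]


# Two hypothetical institutions with locally trained 2-parameter models.
print(federated_average([[1.0, 0.0], [3.0, 2.0]], [1, 3]))  # [2.5, 1.5]
```

In a full system this step would alternate with further local training rounds at each site; the sketch shows only the aggregation.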

Because of its mutual benefits for radiologists, RPs, oncologists, and patients, this workflow continues at our institution. The increased adoption of this workflow supports efficiency and continued improvement of radiologist reports and resultant patient care. However, the authors recognize several limitations of our RP augmented workflow and its integration into standard radiology workflows. These include potential distraction from excessive measurements made by RPs before radiologist interpretation and subjectivity of lesion selection and measurement. As part of initial RP training, similar to that of junior radiology residents, radiologists spent relatively more one-on-one time with RPs reviewing their measurements and findings to help them hone their skills early. During these sessions, radiologists discouraged RPs from overmeasuring, since excess measurements can be distracting and do not contribute to patient care. RP training proved so successful that presentations on advanced PACS capabilities (e.g., bookmark tables) were also applicable to radiologists as part of our PACS upgrade training.

The most common cause of discrepancies between RPs and clinical radiologists was subjectivity of target lesion selection (on baseline exams) and measurements. However, achieving zero discrepancy is unlikely due to well-established inter-reader radiologist subjectivity (26,27).

Similar to how radiologists have been using CAD for clinical applications such as mammography and lung nodule detection (16,28), radiologists have the ability to “turn on” RP measurements (“prompting mode”), and could become complacent and overtrust the RPs. This potential bias could propagate errors, but the authors believe that continuing this workflow prepares the medical community for recommendations that automation will undoubtedly bring in the near future.

While our study demonstrates a good detection rate for incidental actionable findings of 93.1%, the authors realize that this is dependent on individual RP experience and capabilities. PE is our most commonly encountered incidental actionable finding, and additional training for RPs has been created to improve identification of PEs. We only provide RPs with a broad overview of other possible actionable findings as they are uncommon, indicating potential areas to expand our training modules and, hence, improve our RP augmented workflow.

Readers should keep in mind our results may not be generalizable as our patients are referred for their diseases or malignancies with few concurrent pathologies. Taking note of these limitations will be essential in successfully integrating an automated lesion measurement process with physician supervision and facilitating more efficient workflows for radiologists.

Although we did not apply AI tools in this phase of our research, this type of automation within radiology workflows should improve patient care by enabling worklist triage and earlier notification of actionable findings to referring clinicians (10,20,29). We are currently applying deep learning tools to transition this workflow to a true AI workflow (22).

In conclusion, augmented workflows using RPs were three-fold more concordant with oncologists’ quantitative records, which objectively determine therapeutic response. We believe this improves the value of clinical radiologist reports for oncologists and other investigators whose patients are on clinical trials requiring consistent quantification. RP detection of incidental actionable findings on routine CT exams resulted in faster turnaround times for referring clinician notification. The RP-augmented workflow also decreased radiologist interpretation times by 37%, which is important in short-staffed departments like ours. Our results highlight the potential for enhancing radiology workflows through automated applications that preview imaging exams before radiologist review, with notable benefits in tumor quantification, worklist prioritization, and workflow efficiency. Furthermore, our RP workflow could serve as a model for the future inclusion of automated systems in radiology practices. Future studies include increasing use of in-house deep learning algorithms, a cost-benefit analysis of augmented workflows, and development of an algorithmic approach to further classify incidental PEs by degree of criticality.
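The “three-fold” figure is a relative comparison of the two target lesion concordance rates reported in the Results (67.8% vs. 22.5%). As a quick arithmetic check, the ratio and the corresponding absolute difference are:

$$
\frac{67.8\%}{22.5\%} \approx 3.01 \approx 3, \qquad 67.8\% - 22.5\% = 45.3 \text{ percentage points}
$$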

ACKNOWLEDGMENTS

This is a HIPAA-compliant prospective IRB-exempt quality improvement initiative.

We would like to thank Dr. Bernadette Redd for her participation and contributions to this work. We would also like to thank Cindy Clark (NIH Library Editing Service) and the NIH Fellows Editorial Board for providing editorial assistance for the manuscript, and the Biostatistics and Clinical Epidemiology Service for data analysis. This quality improvement initiative was supported by the Center for Cancer Research, National Cancer Institute, and the NIH Clinical Center.

FUNDING

This research was supported, in part, by the Intramural Research Program of the NIH Clinical Center.

Abbreviations

- RP: Radiology Preprocessor
- CAP: Chest, Abdomen & Pelvis
- PACS: Picture Archiving and Communication System
- MR: Magnetic Resonance
- TMR: Tumor Measurement Radiologist
- CT: Computed Tomography
- PET/CT: Positron Emission Tomography/Computed Tomography
- RECIST: Response Evaluation Criteria In Solid Tumors
- AI: Artificial Intelligence
- CAD: Computer-Aided Detection
- PE: Pulmonary Embolus
- SBO: Small Bowel Obstruction

APPENDIX A

CT Specifications and Radiology Reports

CT exams were obtained on a Siemens Force scanner (Munich, Germany) with the following parameters: 120 kVp and an effective mAs of 150 (dose modulated), with ADMIRE model-based iterative reconstruction (MBIR) at strength 2–4. Images sent to PACS included 2 × 1 overlapping reconstructions with soft and sharp algorithms, for an average of 1500 images per study. Most CT CAP exams were performed with IV contrast in the venous phase only and with water or oral Omnipaque as oral contrast.

For follow-up CT exams, the name and corresponding follow-up set number of each annotated lesion propagated automatically to the bookmark tables. When automatic propagation did not occur, the creator of the measurement (RP, clinical radiologist, or TMR) manually linked it to the corresponding measurement on prior exams to allow easier comparison.
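The linking step above (automatic propagation when possible, manual linking otherwise) can be illustrated with a minimal sketch. This is not the Carestream PACS mechanism, which the text does not describe; the record type `LesionAnnotation`, the function `link_to_prior`, and the rule of matching lesions by identical name are all hypothetical assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class LesionAnnotation:
    # Hypothetical record: lesion name and longest diameter in millimeters.
    name: str
    diameter_mm: float


def link_to_prior(current, prior):
    """Pair each current-exam lesion with the prior-exam lesion of the same name.

    Assumption: lesions are matched by identical name. Returns a tuple
    (linked, needs_manual_link), where `linked` maps lesion name to
    (prior_mm, current_mm) and `needs_manual_link` lists current lesions with
    no name match, which a reader (RP, clinical radiologist, or TMR) would
    link by hand.
    """
    prior_by_name = {lesion.name: lesion for lesion in prior}
    linked = {}
    needs_manual_link = []
    for lesion in current:
        match = prior_by_name.get(lesion.name)
        if match is None:
            # No automatic match: flag for manual linking.
            needs_manual_link.append(lesion)
        else:
            # Automatic match: keep prior and current sizes side by side.
            linked[lesion.name] = (match.diameter_mm, lesion.diameter_mm)
    return linked, needs_manual_link


if __name__ == "__main__":
    prior = [LesionAnnotation("T1 liver", 24.0), LesionAnnotation("T2 lung", 15.0)]
    current = [LesionAnnotation("T1 liver", 18.5), LesionAnnotation("new nodule", 14.0)]
    linked, manual = link_to_prior(current, prior)
    print(linked)                     # {'T1 liver': (24.0, 18.5)}
    print([l.name for l in manual])   # ['new nodule']
```

Matching on an exact name is the simplest possible rule; a real system would need to tolerate renamed or relabeled lesions, which is exactly the case the manual fallback covers.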


DECLARATION OF INTEREST

None declared.

CONFLICT OF INTEREST

Dr. Huy Do and Dr. Les Folio were associate investigators in a corporate research agreement with Carestream Health (Rochester, NY), the PACS used in this initiative.

REFERENCES

- 1. Eisenhauer EA, Therasse P, Bogaerts J, Schwartz LH, Sargent D, Ford R, et al. New response evaluation criteria in solid tumours: revised RECIST guideline (version 1.1). Eur J Cancer 2009; 45(2):228–247. doi: 10.1016/j.ejca.2008.10.026.

- 2. Padhani AR, Ollivier L. The RECIST (Response Evaluation Criteria in Solid Tumors) criteria: implications for diagnostic radiologists. Br J Radiol 2001; 74(887):983–986. doi: 10.1259/bjr.74.887.740983.

- 3. Levy MA, Rubin DL. Tool support to enable evaluation of the clinical response to treatment. AMIA Annu Symp Proc 2008: 399–403.

- 4. Machado LB, Apolo AB, Steinberg SM, Folio LR. Radiology Reports With Hyperlinks Improve Target Lesion Selection and Measurement Concordance in Cancer Trials. AJR Am J Roentgenol 2017; 208(2):W31–W37. doi: 10.2214/ajr.16.16845.

- 5. Folio LR, Choi MM, Solomon JM, Schaub NP. Automated registration, segmentation, and measurement of metastatic melanoma tumors in serial CT scans. Acad Radiol 2013; 20(5):604–613. doi: 10.1016/j.acra.2012.12.013.

- 6. Jaffe TA, Wickersham NW, Sullivan DC. Quantitative imaging in oncology patients: Part 1, radiology practice patterns at major U.S. cancer centers. AJR Am J Roentgenol 2010; 195(1):101–106. doi: 10.2214/ajr.09.2850.

- 7. Jaffe TA, Wickersham NW, Sullivan DC. Quantitative imaging in oncology patients: Part 2, oncologists’ opinions and expectations at major U.S. cancer centers. AJR Am J Roentgenol 2010; 195(1):W19–W30. doi: 10.2214/ajr.09.3541.

- 8. Yan K, Wang X, Lu L, Summers RM. DeepLesion: automated mining of large-scale lesion annotations and universal lesion detection with deep learning. J Med Imaging (Bellingham) 2018; 5(3):036501. doi: 10.1117/1.Jmi.5.3.036501.

- 9. Borthakur A, Kneeland JB, Schnall MD. Improving Performance by Using a Radiology Extender. J Am Coll Radiol 2018; 15(9):1300–1303. doi: 10.1016/j.jacr.2018.03.051.

- 10. Larson PA, Berland LL, Griffith B, Kahn CE Jr, Liebscher LA. Actionable findings and the role of IT support: report of the ACR Actionable Reporting Work Group. J Am Coll Radiol 2014; 11(6):552–558. doi: 10.1016/j.jacr.2013.12.016.

- 11. Chalian H, Tore HG, Horowitz JM, Salem R, Miller FH, Yaghmai V. Radiologic assessment of response to therapy: comparison of RECIST Versions 1.1 and 1.0. Radiographics 2011; 31(7):2093–2105. doi: 10.1148/rg.317115050.

- 12. W-radiology [October 7, 2019]. Available from: http://w-radiology.com.

- 13. E-anatomy [October 10, 2018]. Available from: https://www.imaios.com/en/e-Anatomy.

- 14. Folio LR, Machado LB, Dwyer AJ. Multimedia-enhanced Radiology Reports: Concept, Components, and Challenges. Radiographics 2018; 38(2):462–482. doi: 10.1148/rg.2017170047.

- 15. Folio LR, Yazdi AA, Merchant M, Jones EC. Initial experience with multimedia and quantitative tumor reporting appears to improve oncologist efficiency in assessing tumor burden. Radiological Society of North America (RSNA), 2015.

- 16. Ritchie AJ, Sanghera C, Jacobs C, Zhang W, Mayo J, Schmidt H, et al. Computer Vision Tool and Technician as First Reader of Lung Cancer Screening CT Scans. J Thorac Oncol 2016; 11(5):709–717. doi: 10.1016/j.jtho.2016.01.021.

- 17. Folio LR, Sandouk A, Huang J, Solomon JM, Apolo AB. Consistency and efficiency of CT analysis of metastatic disease: semiautomated lesion management application within a PACS. AJR Am J Roentgenol 2013; 201(3):618–625. doi: 10.2214/ajr.12.10136.

- 18. Do HM, Sala E, Harris GJ, Bagheri MH, Folio LR. Establishing benchmarks in quantitative imaging core labs supporting clinical trials: how are we “measuring up” among centers with various capabilities? Society for Imaging Informatics in Medicine (SIIM), 2019.

- 19. Hussain S. Communicating critical results in radiology. J Am Coll Radiol 2010; 7(2):148–151. doi: 10.1016/j.jacr.2009.10.012.

- 20. Prevedello LM, Erdal BS, Ryu JL, Little KJ, Demirer M, Qian S, et al. Automated Critical Test Findings Identification and Online Notification System Using Artificial Intelligence in Imaging. Radiology 2017; 285(3):923–931. doi: 10.1148/radiol.2017162664.

- 21. Beesley SD, Patrie JT, Gaskin CM. Radiologist Adoption of Interactive Multimedia Reporting Technology. J Am Coll Radiol 2019; 16(4 Pt A):465–471. doi: 10.1016/j.jacr.2018.10.009.

- 22. Do HM, Farhadi F, Xu Z, Jin D, Machado LB, Ferre EMN, Cohen GA, Lionakis MS, Folio LR. AI radiomics in a monogenic autoimmune disease: deep learning of routine radiologist annotations correlated with pathologically verified lung findings. Radiological Society of North America (RSNA), 2019.

- 23. Chang K, Balachandar N, Lam C, Yi D, Brown J, Beers A, et al. Distributed deep learning networks among institutions for medical imaging. J Am Med Inform Assoc 2018; 25(8):945–954. doi: 10.1093/jamia/ocy017.

- 24. Langlotz CP, Allen B, Erickson BJ, Kalpathy-Cramer J, Bigelow K, Cook TS, et al. A Roadmap for Foundational Research on Artificial Intelligence in Medical Imaging: From the 2018 NIH/RSNA/ACR/The Academy Workshop. Radiology 2019; 291(3):781–791. doi: 10.1148/radiol.2019190613.

- 25. Goel AK, DiLella D, Dotsikas G, Hilts M, Kwan D, Paxton L. Unlocking Radiology Reporting Data: an Implementation of Synoptic Radiology Reporting in Low-Dose CT Cancer Screening. J Digit Imaging 2019. doi: 10.1007/s10278-019-00214-2.

- 26. McErlean A, Panicek DM, Zabor EC, Moskowitz CS, Bitar R, Motzer RJ, et al. Intra- and interobserver variability in CT measurements in oncology. Radiology 2013; 269(2):451–459. doi: 10.1148/radiol.13122665.

- 27. Belton AL, Saini S, Liebermann K, Boland GW, Halpern EF. Tumour size measurement in an oncology clinical trial: comparison between off-site and onsite measurements. Clin Radiol 2003; 58(4):311–314. doi: 10.1016/s0009-9260(02)00577-9.

- 28. Samulski M, Hupse R, Boetes C, Mus RD, den Heeten GJ, Karssemeijer N. Using computer-aided detection in mammography as a decision support. Eur Radiol 2010; 20(10):2323–2330. doi: 10.1007/s00330-010-1821-8.

- 29. Wright DL, Killion JB, Johnston J, Sanders V, Wallace T, Jackson R, et al. RAs increase productivity. Radiol Technol 2008; 79(4):365–370.