Abstract

Introduction

This study aimed to describe the rates and causes of unplanned readmissions within 30 days after carotid artery stenting (CAS) and to use artificial intelligence machine learning analysis to create a prediction model for short-term readmissions. Predicting unplanned readmissions after index CAS remains challenging, and there is a need to leverage deep machine learning algorithms to develop robust prediction tools for early readmissions.

Methods

Patients undergoing inpatient CAS during the year 2017 in the US Nationwide Readmission Database (NRD) were evaluated for the rates, predictors, and costs of unplanned 30-day readmission. Logistic regression, support vector machine (SVM), deep neural network (DNN), random forest, and decision tree models were evaluated to generate a robust prediction model.

Results

We identified 16,745 patients who underwent CAS, of whom 7.4% were readmitted within 30 days. Depression [p < 0.001, OR 1.461 (95% CI 1.231–1.735)], heart failure [p < 0.001, OR 1.619 (95% CI 1.363–1.922)], cancer [p < 0.001, OR 1.631 (95% CI 1.286–2.068)], in-hospital bleeding [p = 0.039, OR 1.641 (95% CI 1.026–2.626)], and coagulation disorders [p = 0.007, OR 1.412 (95% CI 1.100–1.813)] were the strongest predictors of readmission. The artificial intelligence machine learning DNN prediction model has a C-statistic value of 0.79 (validation 0.73) in predicting the patients who might have all-cause unplanned readmission within 30 days of the index CAS discharge.

Conclusions

Machine learning-derived models may effectively identify high-risk patients for intervention strategies that may reduce unplanned readmissions after carotid artery stenting.

Central Illustration

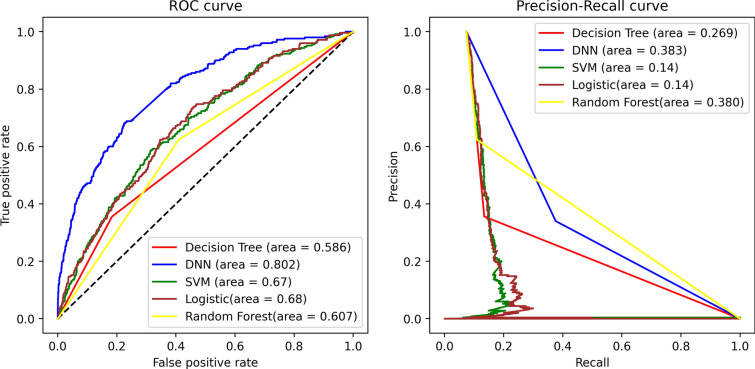

Figure 2: ROC and AUPRC analysis of DNN prediction model with other classification models on 30-day readmission data for CAS subjects

Supplementary Information

The online version contains supplementary material available at 10.1007/s12325-021-01709-7.

Keywords: Readmission, Carotid artery stenting, Artificial intelligence, Machine learning

Plain Language Summary

We present a novel deep neural network-based artificial intelligence prediction model to help identify a subgroup of patients undergoing carotid artery stenting who are at risk of short-term unplanned readmission. Prior studies have attempted to develop prediction models but have mainly used logistic regression and have had low predictive ability. The novel model presented in this study achieved a C-statistic of 0.79 in identifying patients who will have an unplanned readmission within 30 days of discharge after carotid artery stenting.

Key Summary Points

| We present a novel deep neural network-based artificial intelligence prediction model to help identify a subgroup of patients undergoing carotid artery stenting who are at risk for short-term unplanned readmissions. |

| Prior studies have attempted to develop prediction models but have used mainly logistic regression models and have low prediction ability. |

| The novel model presented in this study achieved a C-statistic of 0.79 in identifying patients who will have an unplanned readmission within 30 days of discharge after carotid artery stenting. |

Digital Features

This article is published with digital features, including a summary slide and plain language summary, to facilitate understanding of the article. To view digital features for this article go to 10.6084/m9.figshare.14198939.

Introduction

Strokes secondary to thromboembolism from an atherosclerotic plaque at the carotid bifurcation or the internal carotid artery (ICA) account for 10–15% of all strokes worldwide, and timely carotid artery stenting (CAS) may help prevent such strokes [1, 2]. All-cause unplanned readmissions matter from a patient-care perspective and are a quality metric under the Patient Protection and Affordable Care Act. Predicting early readmission is crucial for planning the delivery of healthcare services, identifying high-risk patients for intervention strategies that reduce readmissions, and providing cost-conscious care to the community [3, 4].

Most prior studies evaluating carotid revascularization have focused on comparing CAS with carotid endarterectomy (CEA) in terms of outcomes, including stroke, major adverse cardiovascular and cerebrovascular events (MACCE), death, or readmission. Furthermore, these studies and others evaluating readmissions used data from before 2014, when the International Classification of Diseases, 9th Revision (ICD-9) was in use. To the best of our knowledge, no study has specifically evaluated early readmissions after CAS using the more specific International Classification of Diseases, 10th Revision (ICD-10) diagnosis codes and more recent patient-level data [5, 6].

The specificity of diagnostic and procedure codes has improved considerably with ICD-10, which can give better insight into the causes and associations of unplanned readmissions [7]. Machine learning methods have gradually been adopted in healthcare and have been found to outperform standard prediction rules for hospital readmissions [8]. We present a nationwide evaluation of early unplanned readmissions and an artificial intelligence-assisted readmission prediction model for patients undergoing CAS.

The purpose of this study was to describe the rates and causes of unplanned readmissions within 30 days after CAS and to use artificial intelligence machine learning analysis to develop a robust prediction model for short-term readmissions.

Methods

The US Nationwide Readmission Database (NRD) is a nationally representative sample of all-age, all-payer discharges from US nonfederal hospitals produced by the Healthcare Cost and Utilization Project (HCUP) of the Agency for Healthcare Research and Quality (AHRQ) [9]. This database is composed of discharge-level hospitalization data from 28 geographically dispersed states across the USA. It contains approximately 18 million discharges for 2017, weighted to an estimated 36 million discharges nationally. The dataset used in the present study represents 60% of the US population and 58.2% of all US hospitalizations.

Individual patients in the NRD are assigned up to 40 diagnosis codes and 25 procedure codes for each hospitalization. We identified CAS using the ICD-10 procedure codes listed in Table 1 in the supplementary material. The primary outcome was the first unplanned readmission within 30 days of discharge from the index (first) CAS admission. If a patient had multiple CAS procedures in a year, only the first was used for the analysis. Patients whose index admission fell in December were also excluded because they may not have 30 days of follow-up, which would introduce immortal time bias. All ICD-10 and Clinical Classification Software Refined (CCSR) codes used in this study are presented in Table 2 in the supplementary material. Statistical analysis was performed using IBM SPSS Statistics V26 with two-sided tests and a significance level of p < 0.05. We used the Pearson chi-square test for categorical variables, the Mann–Whitney U test for continuous variables (with no readmission as the reference group), and logistic regression for predictions.
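The cohort rules above (first CAS per patient, exclusion of in-hospital deaths and December discharges, unplanned readmission within 30 days of index discharge) can be sketched as follows. This is a minimal illustration, not the authors' code. It assumes NRD-style fields named after the HCUP data elements `NRD_VisitLink` (patient linkage), `NRD_DaysToEvent` (admission day on a patient-specific timeline), `LOS`, `DMONTH`, `ELECTIVE`, and `DIED`, and it uses a hypothetical `is_cas` flag that would in practice be derived from the ICD-10 procedure codes in supplementary Table 1. Treating every non-elective admission as "unplanned" is also an assumption.

```python
from dataclasses import dataclass


@dataclass
class Stay:
    """One hospitalization; field names loosely follow HCUP NRD data elements."""
    visit_link: str      # NRD_VisitLink: links stays of the same patient
    days_to_event: int   # NRD_DaysToEvent: admission day on a patient-specific timeline
    los: int             # LOS: length of stay in days
    dmonth: int          # DMONTH: discharge month, 1-12
    is_cas: bool         # hypothetical flag derived from CAS ICD-10 procedure codes
    elective: bool       # ELECTIVE: planned admission (assumed not "unplanned")
    died: bool           # DIED: in-hospital death


def flag_30day_readmissions(stays, window=30):
    """Map each eligible patient to True if an unplanned readmission occurs
    within `window` days of discharge from the patient's first CAS stay."""
    by_patient = {}
    for stay in stays:
        by_patient.setdefault(stay.visit_link, []).append(stay)

    outcomes = {}
    for link, patient_stays in by_patient.items():
        patient_stays.sort(key=lambda s: s.days_to_event)
        cas_stays = [s for s in patient_stays if s.is_cas]
        if not cas_stays:
            continue
        index = cas_stays[0]  # only the first CAS of the year is analyzed
        if index.died or index.dmonth == 12:
            continue  # in-hospital death, or December discharge without a full window
        discharge_day = index.days_to_event + index.los
        outcomes[link] = any(
            s is not index
            and not s.elective
            and 0 <= s.days_to_event - discharge_day <= window
            for s in patient_stays
        )
    return outcomes
```

In the usage check, patient "A" is readmitted non-electively 17 days after discharge, "B" returns after 48 days, "C" is excluded as a December discharge, "D" never had CAS, and "E" returns only for an elective stay.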

Compliance with Ethics Guidelines

This study was performed utilizing publicly available datasets and hence does not require IRB review under 45 CFR 46. In addition, the Institutional Review Board (IRB) at the University of South Alabama approved the study for exempt status.

Model Development

A prediction model was developed in accordance with the Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis (TRIPOD) initiative guidelines [10].

Data Preprocessing

CAS readmission records were preprocessed so that the data would represent nationwide readmission rates. The data comprised 9019 individual patients (weighted to 16,827 patients for national representation).
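National estimates from the NRD are obtained by weighting each discharge. A minimal sketch of a weighted readmission rate follows, assuming each record carries a discharge weight (the NRD supplies one as `DISCWT`); the weights in the check are made up for illustration. Proper standard errors would additionally require the survey design variables (strata and hospital clusters), which this sketch ignores.

```python
def weighted_rate(outcomes, weights):
    """Weighted proportion sum(w_i * y_i) / sum(w_i), with outcomes y_i in {0, 1}."""
    if len(outcomes) != len(weights):
        raise ValueError("outcomes and weights must have the same length")
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(w * y for y, w in zip(outcomes, weights)) / total_weight
```

The weighted number of patients is simply `sum(weights)`, which is how an unweighted sample is projected to a national count.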

Description of Outcome Variable

The outcome of interest was readmission (yes/no) within 30 days of discharge after CAS. Among all patients undergoing CAS, 7.4% were readmitted and 92.6% were not.

Description of Predictor Variables

A total of 42 clinically pertinent variables were included for model development. These included patient demographics, insurance status, hospital bed size, hospital teaching status, length of stay, and relevant comorbidities. We performed exploratory analysis (Table 1) of all categorical and continuous variables. We also checked for missing data and zero-variance predictors and found no zero-variance categorical predictors and no missing values. Continuous variables were standardized to zero mean and unit variance. Categorical variables were dummy coded using one-hot encoding, and the resulting variables were used as inputs to the prediction model.
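The two transformations described above can be sketched in plain Python as follows; this is an illustration rather than the authors' pipeline (scikit-learn's `StandardScaler` and `OneHotEncoder` are the usual library equivalents). The zero-variance guard mirrors the check described in the text. In a train/validation/test workflow, the mean, standard deviation, and category levels would typically be computed on the training split only and then applied to the other splits.

```python
import statistics


def standardize(values):
    """Rescale a continuous variable to zero mean and unit (population) variance."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    if sd == 0:
        raise ValueError("zero-variance variable cannot be standardized")
    return [(v - mean) / sd for v in values]


def one_hot(values):
    """Dummy-code a categorical variable: one 0/1 column per observed level."""
    levels = sorted(set(values))
    rows = [[1 if v == level else 0 for level in levels] for v in values]
    return levels, rows
```

Expanding each categorical variable into one column per level is what turns the 42 clinical variables into a larger number of model inputs.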

Table 1.

Baseline characteristics and procedure-related factors during index admission for CAS

| Characteristic | No early readmissions (n = 15,512; 92.6%) | 30-day readmission (n = 1233; 7.4%) | Overall (n = 16,745) | p value | Odds ratio (95% CI) |

|---|---|---|---|---|---|

| Age (years) (median [IQR]) | 70 [62–77] | 73 [65–79] | 70 [62–77] | < 0.001 | |

| Female | 37.7% | 40.9% | 38.0% | 0.028 | 1.141 (1.014–1.284) |

| Elective | 59.8% | 48.2% | 59.0% | < 0.001 | 0.623 (0.555–0.701) |

| Weekend admission | 10.1% | 13.6% | 10.4% | < 0.001 | 1.402 (1.182–1.663) |

| Primary expected payer | | | | < 0.001 | |

| Medicare | 70.2% | 77.9% | 70.8% | ||

| Medicaid | 6.5% | 6.0% | 6.4% | ||

| Private | 18.8% | 13.5% | 18.4% | ||

| Self-pay | 1.4% | 0.7% | 1.4% | ||

| No charge | 0.1% | 0.0% | 0.1% | ||

| Other | 2.9% | 1.9% | 2.9% | ||

| Quartile of median household income | | | | 0.009 | |

| 0–25th | 30.1% | 28.1% | 29.9% | ||

| 26th–50th | 29.7% | 29.1% | 29.7% | ||

| 51st–75th | 25.6% | 24.7% | 25.5% | ||

| 76th–100th | 14.6% | 18.1% | 14.8% | ||

| Comorbidities | |||||

| Tobacco use disorders/smoker | 23.1% | 22.3% | 23.0% | 0.523 | 0.956 (0.831–1.098) |

| Alcohol use disorders | 3.1% | 3.2% | 3.1% | 0.833 | 1.036 (0.744–1.444) |

| Lipid disorders | 66.0% | 65.9% | 66.0% | 0.943 | 0.996 (0.881–1.125) |

| Hypertension | 59.4% | 50.4% | 58.7% | < 0.001 | 0.695 (0.619–0.781) |

| Diabetes | 34.8% | 42.0% | 35.3% | < 0.001 | 1.359 (1.208–1.528) |

| Obesity | 13.2% | 15.2% | 13.3% | 0.046 | 1.179 (1.002–1.387) |

| Heart failure | 12.8% | 25.1% | 13.7% | < 0.001 | 2.270 (1.979–2.603) |

| Coronary artery disease | 45.3% | 52.0% | 45.8% | < 0.001 | 1.307 (1.164–1.468) |

| Previous PCI | 13.7% | 16.2% | 13.9% | 0.014 | 1.219 (1.041–1.428) |

| Previous CABG | 0.6% | 0.9% | 0.6% | 0.221 | 1.476 (0.788–2.765) |

| Valvular heart disease | 6.8% | 9.1% | 7.0% | 0.003 | 1.365 (1.113–1.674) |

| Dysrhythmias | 16.7% | 25.6% | 17.3% | < 0.001 | 1.721 (1.504–1.969) |

| Atrial fibrillation/flutter | 14.4% | 22.5% | 15.0% | < 0.001 | 1.733 (1.505–1.995) |

| Symptomatic carotid stenosis | 92.0% | 91.7% | 92.0% | 0.716 | 0.962 (0.779–1.187) |

| Periprocedural cerebral infarction | 29.0% | 36.3% | 29.5% | < 0.001 | 1.397 (1.238–1.577) |

| Prior transient ischemic attack or stroke without residual deficit | 23.8% | 23.3% | 23.7% | 0.703 | 0.974 (0.849–1.117) |

| Depression | 9.9% | 15.0% | 10.3% | < 0.001 | 1.610 (1.365–1.898) |

| Dementia/neurocognitive disorders | 4.3% | 7.1% | 4.5% | < 0.001 | 1.716 (1.363–2.160) |

| Peripheral vascular disease | 21.4% | 25.2% | 21.7% | 0.002 | 1.241 (1.085–1.419) |

| Pulmonary circulatory disorders | 2.4% | 5.1% | 2.6% | < 0.001 | 2.191 (1.667–2.881) |

| GI bleed | 1.0% | 2.5% | 1.1% | < 0.001 | 2.459 (1.667–3.628) |

| COPD | 20.8% | 27.0% | 21.3% | < 0.001 | 1.407 (1.234–1.605) |

| Hepatic failure | 0.1% | 0.2% | 0.1% | 0.321 | 2.098 (0.469–9.386) |

| Thyroid disorders | 14.1% | 14.5% | 14.1% | 0.694 | 1.034 (0.877–1.219) |

| CKD | 15.3% | 25.0% | 16.0% | < 0.001 | 1.845 (1.610–2.114) |

| AKI | 6.4% | 14.2% | 7.0% | < 0.001 | 2.429 (2.044–2.886) |

| Fluid and electrolyte disorder | 12.8% | 21.7% | 13.5% | < 0.001 | 1.886 (1.634–2.176) |

| Acute hemorrhagic anemia | 4.8% | 7.2% | 5.0% | < 0.001 | 1.532 (1.220–1.925) |

| Coagulation disorders | 3.6% | 6.9% | 3.8% | < 0.001 | 1.999 (1.579–2.531) |

| Cancer | 4.1% | 7.4% | 4.3% | < 0.001 | 1.870 (1.489–2.348) |

| APR DRG mortality risk | | | | < 0.001 | |

| Minor likelihood of dying | 38.7% | 24.3% | 37.6% | ||

| Moderate likelihood of dying | 39.7% | 38.7% | 39.6% | ||

| Major likelihood of dying | 14.4% | 23.9% | 15.1% | ||

| Extreme likelihood of dying | 7.2% | 13.1% | 7.6% | ||

| APR DRG severity of illness | | | | < 0.001 | |

| Minor loss of function (includes cases with no comorbidity or complications) | 27.6% | 18.5% | 27.0% | ||

| Moderate loss of function | 39.1% | 33.3% | 38.7% | ||

| Major loss of function | 26.2% | 35.5% | 26.9% | ||

| Extreme loss of function | 7.0% | 12.7% | 7.4% | ||

| Hospital bed size | | | | 0.284 | |

| Small | 5.5% | 4.5% | 5.4% | ||

| Medium | 23.3% | 24.2% | 23.3% | ||

| Large | 71.3% | 71.4% | 71.3% | ||

| Control/ownership of hospital | | | | 0.010 | |

| Government, nonfederal | 11.9% | 10.2% | 11.8% | ||

| Private, not-for-profit | 76.9% | 76.1% | 76.8% | ||

| Private, investor-owned | 11.2% | 13.7% | 11.4% | ||

| Hospital urban–rural designation | | | | < 0.001 | |

| Large metropolitan areas with at least 1 million residents | 47.6% | 55.2% | 48.2% | ||

| Small metropolitan areas with fewer than 1 million residents | 49.4% | 42.9% | 48.9% | ||

| Micropolitan areas | 3.0% | 1.9% | 2.9% | ||

| Not metropolitan or micropolitan (non-urban residual) | 0.0% | 0.0% | 0.0% | ||

| Hospital teaching status | | | | 0.010 | |

| Metropolitan non-teaching hospital | 14.4% | 16.6% | 14.6% | ||

| Metropolitan teaching hospital | 82.6% | 81.5% | 82.5% | ||

| Non-metropolitan hospital | 3.0% | 1.9% | 2.9% | ||

| Procedural characteristics | |||||

| Vasopressor use | 1.5% | 2.8% | 1.6% | 0.001 | 1.828 (1.270–2.630) |

| Cardiac arrest | 0.3% | 0.9% | 0.4% | 0.003 | 2.625 (1.368–5.039) |

| In-hospital bleeding | 1.0% | 1.9% | 1.0% | 0.002 | 1.973 (1.267–3.074) |

| In-hospital vascular complications | 0.1% | 0.1% | 0.1% | 0.726 | 0.699 (0.093–5.238) |

| Discharge destination | | | | < 0.001 | |

| Home/self-care | 75.5% | 61.1% | 74.4% | ||

| Home healthcare | 0.4% | 1.0% | 0.5% | ||

| Discharge against medical advice | 0.3% | 0.5% | 0.3% | ||

| Length of stay and cost analysis | |||||

| Index admission length of stay (days) (median [IQR]) | 2 [1–6] | 3 [1–10] | 2 [1–6] | < 0.001 | |

| Index admission cost (US $) (median [IQR]) | 16,523 [11095–28771] | 21,274 [12684–37720] | 16,788 [11188–29586] | < 0.001 | |

| Readmission length of stay (days) (median [IQR]) | | 2 [3–6] | | | |

| Readmission cost (US $) (median [IQR]) | | 9768 [5009–14242] | | | |

IQR interquartile range, PCI percutaneous coronary intervention, CABG coronary artery bypass grafting, GI gastrointestinal, AKI acute kidney injury, CKD chronic kidney disease, COPD chronic obstructive pulmonary disease, APR DRG All Patient Refined Diagnosis Related Group
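The unadjusted odds ratios and p values in Table 1 compare each characteristic between readmitted and non-readmitted patients. For a binary characteristic, both can be sketched from a 2x2 table of counts. The Woolf (log) confidence interval and the uncorrected Pearson statistic are assumptions about the method; Table 1 itself was produced in SPSS from weighted data, so this sketch will not reproduce its values exactly. The counts in the check are hypothetical.

```python
import math


def odds_ratio_ci(a, b, c, d, z=1.96):
    """Odds ratio with a Woolf (log-scale) 95% CI for a 2x2 table.

    a: exposed & readmitted      b: exposed & not readmitted
    c: unexposed & readmitted    d: unexposed & not readmitted
    """
    if min(a, b, c, d) == 0:
        raise ValueError("zero cell; a continuity correction would be needed")
    odds_ratio = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log)
    upper = math.exp(math.log(odds_ratio) + z * se_log)
    return odds_ratio, lower, upper


def pearson_chi2_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) and its 1-df p value."""
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p_value = math.erfc(math.sqrt(chi2 / 2))  # survival function of chi-square, 1 df
    return chi2, p_value
```

Because the interval is symmetric on the log scale, the product of its limits equals the squared odds ratio, which is a quick sanity check on any reported CI of this type.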

Model Specification

Deep neural network (DNN) is a machine learning algorithm that processes data through layered architectures to perform classification and build prediction models. Briefly, a DNN is an artificial neural network with one input layer, at least four hidden layers, and one output layer [11]. A fully connected DNN conventionally uses a feed-forward mechanism in which information flows from the input layer, through the hidden layers, to the output layer to learn complex data patterns, extract important features, and make predictions. DNNs use nonlinear transformations to construct such prediction models [12, 13]. Setting up the DNN properly is key to revealing patterns in large datasets and relating them to the questions of interest. To this end, we selected hyper-parameters, such as the number of hidden layers and the optimizer, by grid search with the GridSearchCV function [14] over a range of values and chose the combination that gave the best accuracy. In our model setup, (1) we searched over 1 to 100 hidden layers in steps of 1 and over two optimizers, “adam” and “rmsprop”; “adam” with four hidden layers performed best. (2) Because we had 91 input variables after dummy coding, we used 128 nodes per hidden layer, following the rule of thumb that the number of nodes should be less than twice the size of the input. (3) We used the rectified linear unit (ReLU) as the nonlinear activation function for the hidden layers and the sigmoid function as the activation function of the output layer.
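The architecture described above (91 inputs, four fully connected hidden layers of 128 ReLU units, one sigmoid output) can be sketched as a forward pass in plain Python. This illustrates the layer structure only: the weights are random (a He-style uniform initialization is assumed), and training with the Adam optimizer and a binary cross-entropy loss (the loss is not stated in the text) would in practice be done in a framework such as Keras or PyTorch.

```python
import math
import random


def init_layer(n_in, n_out, rng):
    """Random weights (He-style uniform, an assumption) and zero biases."""
    limit = math.sqrt(6.0 / n_in)
    weights = [[rng.uniform(-limit, limit) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x, weights, bias):
    """Fully connected layer: one weighted sum plus bias per output node."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(v):
    return [max(0.0, a) for a in v]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def build_dnn(n_inputs=91, n_hidden_layers=4, n_nodes=128, seed=0):
    rng = random.Random(seed)
    hidden, n_in = [], n_inputs
    for _ in range(n_hidden_layers):
        hidden.append(init_layer(n_in, n_nodes, rng))
        n_in = n_nodes
    return hidden, init_layer(n_in, 1, rng)


def predict_proba(model, x):
    """Feed-forward pass: input -> ReLU hidden layers -> sigmoid output."""
    hidden, (w_out, b_out) = model
    h = x
    for weights, bias in hidden:
        h = relu(dense(h, weights, bias))
    return sigmoid(dense(h, w_out, b_out)[0])
```

The sigmoid output is read as the predicted probability of 30-day readmission; a decision threshold then converts it to a yes/no prediction.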

Evaluation Strategy

Addressing Class Imbalance

Only 7.4% of CAS patients were readmitted within 30 days in our study, a low event rate of the kind often seen in medical data. The proportion of non-readmitted patients (92.6%) was roughly 13 times that of readmitted patients (7.4%), making our data highly class imbalanced. To address this imbalance, we set the weight of the minority class (readmitted patients) to 13 times the weight of the majority class (non-readmitted patients), so that the model does not simply favor the majority class. These class weights are used during DNN training to increase the misclassification cost of minority-class samples. Training therefore pays more attention to the minority-class samples, which increases the sensitivity of the prediction model.
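The weighting scheme can be illustrated with a weighted binary cross-entropy, in which each readmitted patient's loss is multiplied by the minority-class weight. This sketch assumes the network was trained with binary cross-entropy, which the text does not state; in Keras, a comparable effect is usually obtained by passing `class_weight={0: 1.0, 1: 13.0}` to `model.fit`. With the observed class proportions, the balancing ratio is 92.6/7.4 ≈ 12.5, close to the weight of 13 used here.

```python
import math


def balanced_positive_weight(labels):
    """Ratio of negatives to positives, a common choice of minority-class weight."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    return n_neg / n_pos


def weighted_bce(y_true, y_prob, class_weight, eps=1e-12):
    """Mean binary cross-entropy, with each sample's loss scaled by its class weight."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        loss = -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
        total += class_weight[y] * loss
    return total / len(y_true)
```

With weights `{0: 1.0, 1: 13.0}`, missing a readmitted patient (predicted probability 0.1) costs 13 times as much as the mirror-image error on a non-readmitted patient (predicted probability 0.9).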

Incorporating AUPRC as a Metric of Performance Evaluation

Inferring model performance from the area under the receiver operating characteristic curve (AUROC) can be misleading in imbalanced datasets like ours, where only 7.4% of patients were readmitted and 92.6% were not. Because negative samples (non-readmitted patients) are so numerous, the number of true negatives is large, and the false positive rate, FP/(FP + TN), rises only slowly even when many negatives are misclassified. A receiver operating characteristic (ROC) curve can therefore look favorable even when most or all of the minority class is misclassified. Precision, TP/(TP + FP), is not inflated by a large number of negative samples, because it measures the proportion of true positives among the samples predicted as positive. Precision thus focuses on the positive class: it measures the probability that a positive prediction is correct, whereas the false positive rate (FPR) and true positive rate (TPR), the ROC metrics, measure the ability to distinguish between the classes. The area under the precision-recall curve (AUPRC) is therefore better suited to rare events, because it shows low performance for a classifier that misclassifies most or all of the minority class [15, 16].
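The contrast between the two metrics can be shown with a small hypothetical example: 2 readmitted and 98 non-readmitted patients, where 10 non-readmitted patients receive higher risk scores than both readmitted ones. AUROC is computed here as the probability that a randomly chosen positive outranks a randomly chosen negative. AUPRC is estimated by average precision (mean precision at the rank of each positive), one common estimator that assumes no tied scores between classes; other estimators, such as trapezoidal integration, give somewhat different values.

```python
def roc_auc(y_true, scores):
    """AUROC as P(score of a random positive > score of a random negative); ties count 0.5."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


def average_precision(y_true, scores):
    """Mean of precision@k over the ranks k at which each positive is retrieved."""
    ranked = sorted(zip(scores, y_true), key=lambda t: -t[0])
    n_pos = sum(y_true)
    true_positives, ap = 0, 0.0
    for k, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            true_positives += 1
            ap += true_positives / k
    return ap / n_pos
```

In this example AUROC is 88/98 ≈ 0.90, whereas average precision is (1/11 + 2/12)/2 ≈ 0.13: the 10 highly ranked false positives barely move the false positive rate but sharply reduce precision.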

Splitting Data for Training Models

We divided the dataset into three parts: 70% for training, 10% for validation, and 20% for testing. First, we built the model on the training data (70%) by obtaining the optimized weight of each node. Second, we applied the model to the validation set to check its fit, obtaining a prediction accuracy of 90.86%. Finally, the remaining 20% of the data were used to test the performance of the model. Model performance was evaluated using accuracy, precision, sensitivity, specificity, AUROC, and AUPRC. We also used ELI5 to estimate the importance weight of each feature. ELI5 determines variable importance by permutation importance: it randomly shuffles the values of a single feature and makes predictions on the shuffled dataset. The prediction accuracy on the shuffled and original datasets is then compared to quantify the performance degradation, and the process is repeated until the importance of every feature has been computed.
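The permutation-importance procedure described above can be sketched without ELI5 as follows. This is not ELI5's implementation (ELI5 provides the technique through its `PermutationImportance` wrapper for scikit-learn estimators); the `predict` function, the accuracy metric, and the number of repeats are assumptions for illustration. Each feature column is shuffled several times, and its importance is the mean drop in the metric relative to the unshuffled data.

```python
import random
import statistics


def accuracy(y_true, y_pred):
    return sum(int(t == p) for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict, X, y, metric=accuracy, n_repeats=5, seed=0):
    """Return (mean drop, standard deviation of drop) in `metric` for each feature.

    predict: function mapping a list of feature rows to a list of predictions.
    X: list of feature rows (lists); y: list of true labels.
    """
    rng = random.Random(seed)
    baseline = metric(y, predict(X))
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the outcome
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            drops.append(baseline - metric(y, predict(X_perm)))
        importances.append((statistics.fmean(drops), statistics.pstdev(drops)))
    return importances
```

A feature the model ignores always scores exactly zero, while shuffling an informative feature lowers accuracy and yields a positive weight.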

Results

Population Characteristics and Descriptive Results

The NRD included 18,882 individual patients aged 18 years or older who underwent CAS during a hospitalization from January through December 2017. We excluded patients who died during the index hospitalization (n = 251), were discharged after November 30, or had missing data on length of stay. The final study sample included 16,745 patients who were discharged alive after index CAS from January through November 2017.

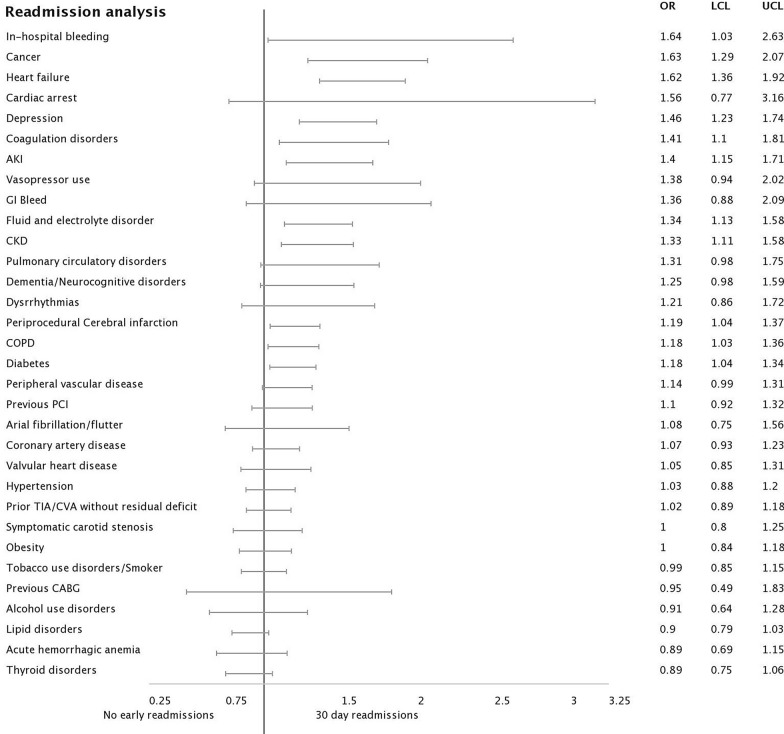

After index CAS, 7.4% of patients (n = 1233) had a 30-day readmission. Notably, among readmitted patients, 8.6% returned with acute cerebral infarction, 4.2% with acute hemorrhagic cerebrovascular disease, and 4.7% died during the unplanned 30-day readmission encounter. Table 1 gives a detailed synopsis of the baseline and procedure-related factors associated with early readmission. A forest plot of comorbidities and procedure-related factors affecting 30-day readmission is shown in Fig. 1.

Fig. 1.

Forest plot analysis of comorbidities and procedure-related factors affecting 30-day readmission after carotid artery stenting

Cardiac causes accounted for 39.8% of all readmissions. The ten leading primary diagnosis categories for readmission encounters, based on Clinical Classification Software Refined (CCSR) categories, and their frequencies are presented in Table 2. Total charges for the index admissions and the 30-day unplanned readmissions amounted to over $1.9 billion and $66 million, respectively.

Table 2.

Causes and frequencies of primary diagnosis category for readmission encounters [based on the primary Clinical Classification Software Refined (CCSR) category]

| Diagnosis category | Frequency n = 1233 (%) |

|---|---|

| Septicemia | 8.6% |

| Cerebral infarction | 8.6% |

| Heart failure | 5.9% |

| Acute hemorrhagic cerebrovascular disease | 4.2% |

| Acute and unspecified renal failure | 4.2% |

| Gastrointestinal hemorrhage | 3.6% |

| Cardiac dysrhythmias | 3.4% |

| Occlusion or stenosis of precerebral or cerebral arteries without infarction | 3.2% |

| Acute myocardial infarction | 3.0% |

| Pneumonia (except that caused by tuberculosis) | 2.8% |

This table represents only ten leading diagnosis categories for readmission; hence the total will not amount to 100%

Performance Comparison of Deep Neural Network with Other Machine Learning Models

We compared the proposed DNN model's performance with four other machine learning algorithms frequently used with medical data: logistic regression, random forest, decision tree, and support vector machine. Logistic regression had an accuracy of 92.57%, with an AUROC of 0.68 and an AUPRC of 0.14. Random forest had an accuracy of 55.26%, with an AUROC and AUPRC of 0.611 and 0.367, respectively. The decision tree had an accuracy of 78.19%, with an AUROC of 0.588 and an AUPRC of 0.269. The support vector machine had an accuracy of 70.35%, with an AUROC of 0.67 and an AUPRC of 0.14. The DNN, in contrast, had an accuracy of 87.43% but a higher AUROC (0.79; validation 0.73) and AUPRC (0.383) than all other models (Table 3). Fig. 2 shows the AUROC and AUPRC of the proposed DNN prediction model and the other classification models on the 30-day readmission data for CAS patients.

Table 3.

Machine learning algorithms and accuracy in predicting early readmission post CAS

| Model | AUC | AUPRC | Accuracy (%) | Sensitivity (%) | Specificity (%) | Precision (%) |

|---|---|---|---|---|---|---|

| Logistic regression | 0.68 | 0.14 | 92.57 | 50 | 100 | 46.29 |

| DNN | 0.79 | 0.383 | 87.43 | 70.22 | 90.43 | 62.65 |

| SVM | 0.67 | 0.14 | 70.35 | 62.46 | 71.72 | 54.07 |

| Random forest | 0.611 | 0.376 | 55.26 | 61.12 | 61.55 | 53.07 |

| Decision tree | 0.588 | 0.269 | 78.19 | 58.61 | 81.61 | 53.74 |

AUC area under the curve, AUPRC area under the precision recall curve, DNN deep neural network, SVM support vector machine
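Table 3 illustrates why accuracy alone can mislead on imbalanced data. The reported metrics can be written in terms of confusion-matrix counts; the sketch below applies these definitions to a hypothetical trivial classifier that predicts "not readmitted" for every patient in a sample of 1000 with the study's 7.4% readmission rate. It reaches 92.6% accuracy while detecting no readmissions at all.

```python
import math


def classification_metrics(tp, fp, tn, fn):
    """Accuracy, sensitivity (recall), specificity, and precision from counts.

    A metric whose denominator is zero is returned as NaN.
    """
    def ratio(num, den):
        return num / den if den else math.nan

    return {
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "sensitivity": ratio(tp, tp + fn),   # TP / (TP + FN)
        "specificity": ratio(tn, tn + fp),   # TN / (TN + FP)
        "precision": ratio(tp, tp + fp),     # TP / (TP + FP)
    }
```

For the trivial classifier, sensitivity is 0 and precision is undefined because no patient is predicted positive, even though accuracy looks high.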

Fig. 2.

ROC and AUPRC analysis of DNN prediction model with other classification models on 30-day readmission data for CAS subjects. Plot of prediction capability of machine learning models

Predictors of 30-Day Readmission and Costs

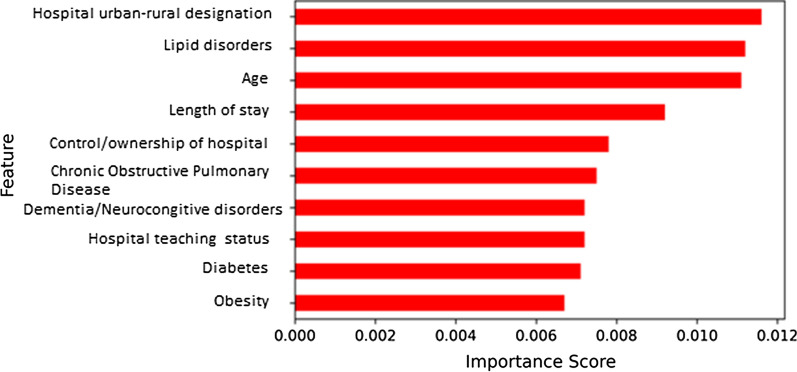

To identify the variables that contributed most to our DNN model's predictive power, we applied ELI5. Specifically, using ELI5, we obtained importance weights for each of the 42 model variables and ranked them by percentile score. Variables whose importance weights ranked in the top 20 percentiles (weights ranging from 0.0116 to 0.0067) were selected as the most influential variables in our model (Table 4). This procedure yielded the ten most important variables for predicting 30-day readmission (Fig. 3).
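The percentile ranks in Table 4 are consistent with ranking the 42 importance weights in ascending order, averaging the ranks of tied weights, and dividing by the number of variables; for example, the tied Teaching status and Dementia rows both receive 35.5/42 ≈ 0.845. A minimal sketch of that computation, similar in intent to pandas `Series.rank(pct=True)` with its default averaging of ties:

```python
def percentile_ranks(weights):
    """Percentile rank of each weight: average ascending rank of its tie group / n."""
    order = sorted(range(len(weights)), key=lambda i: weights[i])
    ranks = [0.0] * len(weights)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of weights tied with weights[order[i]].
        while j + 1 < len(order) and weights[order[j + 1]] == weights[order[i]]:
            j += 1
        average_rank = ((i + 1) + (j + 1)) / 2  # 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank / len(weights)
        i = j + 1
    return ranks
```

The largest weight always receives a percentile rank of 1, matching the top row of Table 4.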

Table 4.

Importance score of each variable in the early readmission post-CAS dataset

| Weight | Variance | Name | Percentile rank |

|---|---|---|---|

| 0.0116 | 0.0035 | Hospital urban–rural designation | 1 |

| 0.0112 | 0.0028 | Lipid disorders | 0.976190476 |

| 0.0111 | 0.0043 | Age | 0.952380952 |

| 0.0092 | 0.0032 | Length of stay | 0.928571429 |

| 0.0078 | 0.0021 | Control/ownership of hospital | 0.904761905 |

| 0.0075 | 0.0036 | Chronic obstructive pulmonary disease | 0.880952381 |

| 0.0072 | 0.0016 | Teaching status of hospital | 0.845238095 |

| 0.0072 | 0.0013 | Dementia/neurocognitive disorders | 0.845238095 |

| 0.0071 | 0.0003 | Diabetes | 0.80952381 |

| 0.0067 | 0.0024 | Obesity | 0.785714286 |

| 0.0065 | 0.0032 | Tobacco abuse | 0.761904762 |

| 0.0064 | 0.0016 | Coagulation disorders | 0.738095238 |

| 0.0063 | 0.0011 | Cardiac dysrhythmias | 0.714285714 |

| 0.0062 | 0.0008 | Thyroid disorders | 0.69047619 |

| 0.0061 | 0.0023 | Fluid electrolyte disorders | 0.666666667 |

| 0.0059 | 0.0033 | History of cerebrovascular accident/transient ischemic attack–no residual deficit | 0.642857143 |

| 0.0055 | 0.003 | Depression | 0.619047619 |

| 0.0054 | 0.0036 | Gender | 0.571428571 |

| 0.0054 | 0.0013 | Acute hemorrhagic anemia | 0.571428571 |

| 0.0054 | 0.0019 | Heart failure | 0.571428571 |

| 0.0053 | 0.004 | Bed size of hospital | 0.523809524 |

| 0.0049 | 0.0016 | Valvular heart disease | 0.5 |

| 0.0048 | 0.0024 | Peripheral artery disease | 0.476190476 |

| 0.0045 | 0.0026 | Atrial fibrillation/flutter | 0.452380952 |

| 0.0041 | 0.0022 | Expected primary payer | 0.428571429 |

| 0.0038 | 0.003 | Hypertension | 0.404761905 |

| 0.0035 | 0.004 | Cerebrovascular accident | 0.380952381 |

| 0.0034 | 0.0014 | Cancer | 0.357142857 |

| 0.0033 | 0.0019 | History of percutaneous coronary intervention | 0.333333333 |

| 0.0031 | 0.003 | Coronary artery disease | 0.30952381 |

| 0.0026 | 0.0011 | Pulmonary circulatory disorders | 0.285714286 |

| 0.0023 | 0.0006 | In-hospital bleeding | 0.25 |

| 0.0023 | 0.0025 | Chronic kidney disease | 0.25 |

| 0.002 | 0.0008 | Alcohol abuse | 0.202380952 |

| 0.002 | 0.001 | Symptomatic carotid artery stenosis | 0.202380952 |

| 0.0018 | 0.0002 | Gastrointestinal bleed | 0.166666667 |

| 0.0016 | 0.0005 | History of coronary artery bypass grafting surgery | 0.142857143 |

| 0.0014 | 0.0006 | Vasopressors | 0.119047619 |

| 0.0001 | 0.0003 | Cardiac arrest | 0.083333333 |

| 0.0001 | 0.0003 | Hepatic failure | 0.083333333 |

| 0 | 0.0002 | In-hospital vascular complications | 0.047619048 |

| − 0.0003 | 0.0017 | Acute kidney injury | 0.023809524 |

Fig. 3.

Bar graph diagram showing relative importance of predictors for unplanned readmission

Discussion

This study, which examines the rate and costs of 30-day readmissions after index CAS, finds that 7.4% of patients are readmitted within 30 days of discharge after CAS. The major causes of 30-day unplanned readmission were septicemia and cerebral infarction or hemorrhagic cerebrovascular bleeding. Using machine learning, we developed a DNN-based risk prediction model that identifies patients at high risk of unplanned readmission with a C-statistic of 0.79. To the best of our knowledge, this study is the first of its kind in several respects: it is the most contemporary analysis of 30-day unplanned readmissions after CAS, and the first to use nationally representative data to develop risk prediction models with advanced machine learning, such as DNNs, for CAS. On the basis of AUROC and AUPRC, the DNN outperformed commonly used statistical and machine learning methods in modeling CAS readmissions.

Prior studies looking at readmissions have shown a variety of readmission rates, including vascular interventions in Medicare patients (24%) [17], endovascular aortic aneurysm repair (10.2%) [18], lower extremity bypass (14.8%) [19], endovascular or surgical revascularization for chronic mesenteric ischemia (19.5%) [20], and revascularization for critical limb ischemia (20.4%) [21]. Looking specifically at the readmission rates in the CAS population, most prior studies have compared CEA readmission rates versus CAS. These studies have demonstrated rates of 12.0% and 8.3% for Medicare-only and nationally representative data, respectively, for the CAS cohorts [5, 22]. Other studies have also shown similar readmission rates for CAS patients in the range of 9.6% in the Pennsylvania Health Care Cost Containment Council study by Hintze et al. [23], 10.75% by Greenleaf et al. [24], and 11.11% by Galinanes et al. [25]. All these prior studies have used patient-level data from before 2015 when ICD-9 was in use. Our study gives a glimpse into the most contemporary nationally representative data using ICD-10 codes. In addition, our national evaluation of unplanned 30-day readmissions after CAS has several key findings. Our observation showed that 7.4% of patients undergoing CAS had unplanned readmissions within 30 days of hospital discharge. The decline in readmission rates observed in our study, as compared to aforementioned prior studies, may be related to increased operator and/or hospital experience or may be due to strict inclusion/exclusion criteria employed in our study [26, 27].

In our study, sepsis was one of the leading causes of readmission after CAS. Interestingly, the literature shows that postoperative surgical-site infection, sepsis/septic shock, pneumonia, and urinary tract infection are known to be associated with readmissions after CEA [28]. Quiroz et al. examined hidden readmissions after CEA and CAS and found that infectious etiologies accounted for 9.9% of readmissions (wound complication 3.7%, sepsis 3.1%, urinary tract infection/pyelonephritis 0.5%, and other infections 2.6%). This suggests that the infection/sepsis rates found in our study were not in excess of those in the existing literature [29].

There are over 40 models for predicting short- and long-term outcomes after carotid revascularization [30]. However, the available prediction models have the following potential limitations. First, most of the models were built on patient databases that are not representative of the national population. Second, the models used logistic regression, and none used artificial intelligence to improve the quality of predictions. Third, the existing models used data from the ICD-9 era, when diagnostic codes were substantially less specific than ICD-10 codes. Finally, none of the short-term models examined all-cause readmissions; they can only predict stroke or death. The C-statistic (area under the curve) is considered an important measure of a prediction model's discrimination, with values of < 0.50, > 0.50–0.70, > 0.70–0.80, > 0.80–0.90, and > 0.90 representing no, poor, low, excellent, and outstanding discrimination, respectively [31, 32]. Volkers et al. presented an excellent external validation study of 30 prediction models for CEA, CAS, or both and found that no model had a C-statistic above 0.67 (poor discrimination) during external validation, suggesting that although there are many models to choose from, none is substantially useful to clinicians [30].
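The discrimination bands quoted above can be made explicit with a small lookup. Which band an exact cut-off such as 0.70 falls into is not specified by the quoted ranges, so the boundary handling here is an assumption. Applied to values reported in this study, the bands place the DNN (0.79 in development, 0.73 in validation) in the "low" band and a C-statistic of 0.67, the ceiling reported by Volkers et al., in the "poor" band.

```python
def discrimination_category(c_statistic):
    """Label a C-statistic using the cut-offs quoted in the text.

    Boundary handling (upper cut-off inclusive) is an assumption.
    """
    if not 0.0 <= c_statistic <= 1.0:
        raise ValueError("C-statistic must lie in [0, 1]")
    if c_statistic <= 0.50:
        return "no"
    if c_statistic <= 0.70:
        return "poor"
    if c_statistic <= 0.80:
        return "low"
    if c_statistic <= 0.90:
        return "excellent"
    return "outstanding"
```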

Despite the plethora of existing state-of-the-art statistical and machine learning methods for modeling readmission rates, our novel implementation of a DNN produced a model with greater predictive power and, at the same time, facilitated the identification of features that are clinically relevant and important in their association with readmission after CAS. Notably, although the DNN had similar or lower accuracy than traditional methods, it scored considerably higher on performance metrics such as AUROC and AUPRC. A plausible explanation is that the accuracy of existing models such as logistic regression is dominated by the majority class: when only a small minority of patients are readmitted, a model can achieve high accuracy while misclassifying many of the minority-class (readmitted) cases. Although this inflates the accuracy score, the bias leads to high misclassification rates and, consequently, low predictive power [33]. In contrast, the DNN appears less susceptible to this bias: it uses the entire dataset to learn complex patterns among the variables and then uses these patterns to classify outcomes even when the data are highly imbalanced. On the basis of our findings, DNNs are a strong choice for building robust, adaptive predictive models in complex data settings such as 30-day readmission after CAS.
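The accuracy paradox described above can be reproduced in a few lines. The sketch below is illustrative only: it builds a hypothetical cohort of 1,000 patients with the study's 7.4% readmission rate (the labels and scores are invented, not NRD data) and shows that a "model" predicting no readmission for everyone reaches 92.6% accuracy while being clinically useless, whereas the area under the precision-recall curve, computed here as average precision, stays near the prevalence for an uninformative ranking [15]. The helper names `accuracy` and `average_precision` are hypothetical; this is not the authors' evaluation code.

```python
import random


def accuracy(predictions, labels):
    """Fraction of patients whose predicted class matches the observed class."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def average_precision(scores, labels):
    """Step-wise area under the precision-recall curve.

    Ranks patients by descending score and averages the precision observed at
    each rank where a readmitted patient (label 1) is retrieved. Tied scores
    keep their input order, so this assumes scores are (mostly) distinct.
    """
    n_pos = sum(labels)
    if n_pos == 0:
        raise ValueError("need at least one positive case")
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    true_pos = 0
    total = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            true_pos += 1
            total += true_pos / rank
    return total / n_pos


# Hypothetical cohort with the study's 7.4% readmission rate (74 of 1,000).
labels = [1] * 74 + [0] * 926

# A "model" that predicts no readmission for every patient.
always_no = [0] * len(labels)
print(accuracy(always_no, labels))  # 0.926

# An uninformative model: random risk scores unrelated to the outcome.
rng = random.Random(42)
random_scores = [rng.random() for _ in labels]
print(round(average_precision(random_scores, labels), 3))  # expected near the 0.074 prevalence

# A perfect ranking places every readmitted patient first.
perfect_scores = [float(y) for y in labels]
print(average_precision(perfect_scores, labels))  # 1.0
```

Average precision is one common step-wise estimate of AUPRC; scikit-learn exposes the same quantity as `average_precision_score`. Because its chance-level baseline equals the outcome prevalence rather than 0.5, it is more revealing than accuracy for a rare outcome such as 30-day readmission.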

Depression, heart failure, cancer, in-hospital bleeding, and coagulation disorders were the strongest predictors of readmission on logistic regression, as shown in Fig. 1. The logistic regression analysis did not include hospital-level factors such as teaching status, control/ownership, or hospital location. In addition, most of these predictors had similar odds ratios with overlapping confidence intervals, which would make it difficult to build a robust prediction model from this analysis alone and suggests that a logistic regression-based model would not perform well in a clinical setting. Our study therefore extended this analysis by using a DNN with hospital-level data to identify novel predictors of early readmission, as shown in Fig. 3. The DNN model provided a more granular view and showed that hospital rural–urban designation, control/ownership, and teaching status were among the strongest predictors, in addition to newly identified comorbidities, for identifying patients at risk of early readmission after CAS. Patients with these comorbidities were more likely to be readmitted, possibly because of disease progression. Further prospective research is needed to determine the true impact of these factors and whether the associations are causal.
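The overlap among the logistic regression estimates can be checked from the odds ratios and 95% CIs reported in the Results. The sketch below assumes Wald intervals, which are symmetric on the log-odds scale, so the coefficient is ln(OR) and its standard error is (ln upper - ln lower)/(2 × 1.96); it then tests whether each pair of intervals overlaps. Overlapping CIs are an informal indication only, not a formal test of whether two odds ratios differ. The function names `log_odds_and_se` and `intervals_overlap` are hypothetical.

```python
import math
from itertools import combinations

# Odds ratio, lower 95% CI, upper 95% CI, as reported in the Results section.
REPORTED = {
    "depression": (1.461, 1.231, 1.735),
    "heart failure": (1.619, 1.363, 1.922),
    "cancer": (1.631, 1.286, 2.068),
    "in-hospital bleeding": (1.641, 1.026, 2.626),
    "coagulation disorders": (1.412, 1.100, 1.813),
}

Z_95 = 1.959964  # two-sided 95% standard normal quantile


def log_odds_and_se(odds_ratio, lower, upper, z=Z_95):
    """Back-calculate beta = ln(OR) and its standard error, assuming a Wald
    confidence interval of the form exp(beta +/- z * SE)."""
    beta = math.log(odds_ratio)
    se = (math.log(upper) - math.log(lower)) / (2 * z)
    return beta, se


def intervals_overlap(a, b):
    """True if two (lower, upper) intervals share at least one value."""
    return a[0] <= b[1] and b[0] <= a[1]


for name, (odds_ratio, lower, upper) in REPORTED.items():
    beta, se = log_odds_and_se(odds_ratio, lower, upper)
    print(f"{name}: beta = {beta:.3f}, SE = {se:.3f}")

pairs = list(combinations(REPORTED, 2))
overlapping_pairs = [
    (a, b) for a, b in pairs
    if intervals_overlap(REPORTED[a][1:], REPORTED[b][1:])
]
print(len(overlapping_pairs), "of", len(pairs), "pairs overlap")  # 10 of 10 pairs overlap
```

All five intervals share the region from 1.363 to 1.735, which is why every pair overlaps.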

Based on the primary diagnosis code, the leading reasons for readmission were septicemia (8.6%), cerebral infarction (8.6%), heart failure (5.9%), acute hemorrhagic cerebrovascular disease (4.2%), acute renal failure (4.2%), and gastrointestinal hemorrhage (3.6%). These findings further affirm the need for robust prediction models to help reduce unplanned readmissions and associated morbidity. Interestingly, in our study, insurance status (Medicare, Medicaid, or private) and hospital bed size had no significant impact on unplanned readmissions. In contrast, patients in higher All Patient Refined Diagnosis Related Group (APR-DRG) risk-of-mortality and severity-of-illness subclasses had worse outcomes in terms of all-cause readmission. Patients treated at private hospitals (compared with government, nonfederal hospitals) and those treated at metropolitan hospitals were at increased risk of unplanned readmission. This may be attributable to differences in practice patterns across hospital locations or types, or to differences in procedural volume and physician experience [26, 27].

Multiple prediction models have been developed in the past, mostly for outcomes such as recurrent stroke, myocardial infarction, or death. Unfortunately, none of them predicts the short-term risk of all-cause readmission. These models also lack predictive power, especially on external validation: Volkers et al. evaluated 30 prediction models for CEA, CAS, or both and found that no model had an AUC above 0.67 on external validation [30]. This further supports the view that, although there are many models to choose from, none truly qualifies as substantially useful in current practice. Our prediction model is novel in several ways: to our knowledge, it is the first model to use nationally representative data from the contemporary ICD-10 era and the first to apply machine learning to predict all-cause unplanned short-term readmission. The DNN's AUC of 0.79 (0.73 in validation) indicates robust discrimination for our primary outcome.

Study Limitations

The NRD consists of annualized data, with a maximum follow-up of 1 year. As with any observational data, the results do not establish a causal relationship, as there may be unmeasured confounders. The NRD does not provide pharmacological or lesion-level data that may affect readmissions. Lastly, the presented risk scores have not been externally validated and are currently applicable only to the US population.

Conclusion

Our analysis suggests that 7.4% of patients are readmitted within 30 days of discharge after CAS, with septicemia and cerebral infarction/hemorrhagic cerebrovascular bleeding as the major causes of unplanned readmission. Using machine learning approaches, we developed a risk prediction model that identifies patients at high risk of unplanned readmission with a C-statistic of 0.802 using a DNN. Our work is an exemplar of how machine learning techniques can be used to identify patients at high risk of unplanned readmission for targeted interventions, which, if efficacious, could yield significant savings for the wider healthcare economy. We plan to seek funding to develop an easy-to-use online tool and a software plug-in for existing electronic medical record systems to allow quick assessment of readmission risk in patients undergoing CAS.

Acknowledgements

The authors thank the Healthcare Cost and Utilization Project (HCUP) for providing these publicly available data. The authors also thank all HCUP participants.

Funding

No funding or sponsorship was received for this study or publication of this article.

Authorship

All named authors meet the International Committee of Medical Journal Editors (ICMJE) criteria for authorship for this article, take responsibility for the integrity of the work as a whole, and have given their approval for this version to be published.

Authorship Contributions

Dr. AA, Dr. NA, and Dr. GCF conceptualized and designed the study, carried out the initial analysis and interpretation, coordinated data collection, and prepared the manuscript. Dr. AA, Dr. SC, Dr. AR, and Mr. RC contributed to the study design, data analysis, artificial intelligence model development, and interpretation, and critically reviewed the manuscript for important intellectual content. All authors approved the final manuscript as submitted and agree to be accountable for all aspects of the work.

Disclosures

Gregg C. Fonarow reports consulting for Abbott, Amgen, AstraZeneca, Bayer, Edwards, Janssen, Medtronic, Merck, and Novartis. Amod Amritphale, Ranojoy Chatterjee, Suvo Chatterjee, Nupur Amritphale, Ali Rahnavard, G. Mustafa Awan, and Bassam Omar have nothing to disclose.

Compliance with Ethics Guidelines

This study was performed utilizing publicly available datasets and hence does not require IRB review under 45 CFR 46. In addition, the study was approved for exempt status by the Institutional Review Board at the University of South Alabama.

Data Availability

The data used in this study are publicly available from the Healthcare Cost and Utilization Project at https://www.hcup-us.ahrq.gov/. All code used for data extraction and analysis is provided in the supplementary material.

References

1. Flaherty ML, Kissela B, Khoury JC, et al. Carotid artery stenosis as a cause of stroke. Neuroepidemiology. 2013;40:36–41. doi: 10.1159/000341410.

2. Bonati LH, Lyrer P, Ederle J, Featherstone R, Brown MM. Percutaneous transluminal balloon angioplasty and stenting for carotid artery stenosis. Cochrane Database Syst Rev. 2012;2012:CD000515. doi: 10.1002/14651858.CD000515.pub4.

3. Goldfield NI, McCullough EC, Hughes JS, et al. Identifying potentially preventable readmissions. Health Care Financ Rev. 2008;30(1):75–91.

4. Rosenbaum S. The Patient Protection and Affordable Care Act: implications for public health policy and practice. Public Health Rep. 2011;126(1):130–135. doi: 10.1177/003335491112600118.

5. Al-Damluji MS, Dharmarajan K, Zhang W, et al. Readmissions after carotid artery revascularization in the Medicare population. J Am Coll Cardiol. 2015;65(14):1398–1408. doi: 10.1016/j.jacc.2015.01.048.

6. Galiñanes EL, Dombroviskiy VY, Hupp CS, Kruse RL, Vogel TR. Evaluation of readmission rates for carotid endarterectomy versus carotid artery stenting in the U.S. Medicare population. Vasc Endovascular Surg. 2014;48:217–223. doi: 10.1177/1538574413518120.

7. CDC. International Classification of Diseases, (ICD-10-CM/PCS) Transition. https://www.cdc.gov/nchs/icd/icd10cm_pcs_background.htm. Accessed 20 July 2020.

8. Morgan DJ, Bame B, Zimand P, et al. Assessment of machine learning vs standard prediction rules for predicting hospital readmissions. JAMA Netw Open. 2019;2(3):e190348. doi: 10.1001/jamanetworkopen.2019.0348.

9. Agency for Healthcare Research and Quality. NRD database documentation. https://www.hcup-us.ahrq.gov/db/nation/nrd/nrddbdocumentation.jsp. Accessed 06 Feb 2020.

10. Moons KG, Altman DG, Reitsma JB, Collins GS. Transparent reporting of a multivariate prediction model for individual prognosis or development initiative. New guideline for the reporting of studies developing, validating, or updating a multivariable clinical prediction model: The TRIPOD Statement. Adv Anat Pathol. 2015;22(5):303–305. doi: 10.1097/PAP.0000000000000072.

11. Heba M, El-Dahshan EA, El-Horbaty EM, et al. Classification using deep learning neural networks for brain tumors. Future Comput Inform J. 2018;3(1):68–71. doi: 10.1016/j.fcij.2017.12.001.

12. Litjens G, Kooi T, Bejnordi BE, et al. A survey on deep learning in medical image analysis. Med Image Anal. 2017;42:60–88. doi: 10.1016/j.media.2017.07.005.

13. Ravì D, Wong C, Deligianni F, et al. Deep learning for health informatics. IEEE J Biomed Health Inform. 2017;21(1):4–21. doi: 10.1109/JBHI.2016.2636665.

14. Syarif I, Prugel-Bennett A, Wills G. SVM parameter optimization using grid search and genetic algorithm to improve classification performance. TELKOMNIKA. 2016;14(4):1502–1509. doi: 10.12928/TELKOMNIKA.v14i4.3956.

15. Saito T, Rehmsmeier M. The precision-recall plot is more informative than the ROC plot when evaluating binary classifiers on imbalanced datasets. PLoS One. 2015;10(3):e0118432. doi: 10.1371/journal.pone.0118432.

16. Fu G-H, Xu F, Zhang B-Y, Yi L-Z. Stable variable selection of class-imbalanced data with precision-recall criterion. Chemom Intell Lab Syst. 2017;171:241–250. doi: 10.1016/j.chemolab.2017.10.015.

17. Jencks SF, Williams MV, Coleman EA. Rehospitalizations among patients in the Medicare fee-for-service program. N Engl J Med. 2009;360:1418–1428. doi: 10.1056/NEJMsa0803563.

18. Atti V, Nalluri N, Kumar V, et al. Frequency of 30-day readmission and its causes after endovascular aneurysm intervention of abdominal aortic aneurysm (from the Nationwide Readmission Database). Am J Cardiol. 2019;123:986–994. doi: 10.1016/j.amjcard.2018.12.006.

19. Jones CE, Richman JS, Chu DI, Gullick AA, Pearce BJ, Morris MS. Readmission rates after lower extremity bypass vary significantly by surgical indication. J Vasc Surg. 2016;64:458–464. doi: 10.1016/j.jvs.2016.03.422.

20. Lima FV, Kolte D, Louis DW, et al. Thirty-day readmission after endovascular or surgical revascularization for chronic mesenteric ischemia: insights from the nationwide readmissions database. Vasc Med. 2019;24:216–223. doi: 10.1177/1358863X18816816.

21. Kolte D, Kennedy KF, Shishehbor MH, et al. Thirty-day readmissions after endovascular or surgical therapy for critical limb ischemia: analysis of the 2013 to 2014 nationwide readmissions databases. Circulation. 2017;136:167–176. doi: 10.1161/CIRCULATIONAHA.117.027625.

22. Lima FV, Kolte D, Kennedy KF, et al. Thirty-day readmissions after carotid artery stenting versus endarterectomy: analysis of the 2013–2014 nationwide readmissions database. Circ Cardiovasc Interv. 2020;13(4):e008508. doi: 10.1161/CIRCINTERVENTIONS.119.008508.

23. Hintze AJ, Greenleaf EK, Schilling AL, Hollenbeak CS. Thirty-day readmission rates for carotid endarterectomy versus carotid artery stenting. J Surg Res. 2019;235:270–279. doi: 10.1016/j.jss.2018.10.011.

24. Greenleaf EK, Han DC, Hollenbeak CS. Carotid endarterectomy versus carotid artery stenting: no difference in 30-day postprocedure readmission rates. Ann Vasc Surg. 2015;29(7):1408–1415. doi: 10.1016/j.avsg.2015.05.013.

25. Galinanes EL, Dombroviskiy VY, Hupp CS, Kruse RL, Vogel TR. Evaluation of readmission rates for carotid endarterectomy versus carotid artery stenting in the US Medicare population. Vasc Endovascular Surg. 2014;48:217–223. doi: 10.1177/1538574413518120.

26. Poorthuis MHF, Brand EC, Halliday A, Bulbulia R, Bots ML, de Borst GJ. High operator and hospital volume are associated with a decreased risk of death and stroke following carotid revascularization: a systematic review and meta-analysis: authors’ reply. Ann Surg. 2018;269:631–641. doi: 10.1097/SLA.0000000000002880.

27. Kim LK, Yang DC, Swaminathan RV, et al. Comparison of trends and outcomes of carotid artery stenting and endarterectomy in the United States, 2001 to 2010. Circ Cardiovasc Interv. 2014;7:692–700. doi: 10.1161/CIRCINTERVENTIONS.113.001338.

28. Rambachan A, Smith TR, Saha S, Eskandari MK, Bendok BR, Kim JY. Reasons for readmission after carotid endarterectomy. World Neurosurg. 2014;82(6):e771–e776. doi: 10.1016/j.wneu.2013.08.020.

29. Quiroz HJ, Martinez R, Parikh PP, et al. Hidden readmissions after carotid endarterectomy and stenting. Ann Vasc Surg. 2020;68:132–140. doi: 10.1016/j.avsg.2020.04.025.

30. Volkers EJ, Algra A, Kappelle LJ, et al. Prediction models for clinical outcome after a carotid revascularization procedure. Stroke. 2018;49(8):1880–1885. doi: 10.1161/STROKEAHA.117.020486.

31. Hosmer DW, Lemeshow S, Sturdivant RX. Applied logistic regression. 3rd ed. Hoboken: Wiley; 2013.

32. Lloyd-Jones D. Cardiovascular risk prediction: basic concepts, current status, and future directions. Circulation. 2010;121:1768–1777. doi: 10.1161/CIRCULATIONAHA.109.849166.

33. Ottenbacher KJ, Smith PM, Illig SB, Linn RT, Fiedler RC, Granger CV. Comparison of logistic regression and neural networks to predict rehospitalization in patients with stroke. J Clin Epidemiol. 2001;54(11):1159–1165. doi: 10.1016/s0895-4356(01)00395-x.
