Abstract

Background

Identifying and approaching eligible participants for recruitment to research studies usually relies on healthcare professionals. This process is sometimes hampered by deliberate or inadvertent gatekeeping that can introduce bias into patient selection.

Objectives

Our primary objective was to identify and assess the effect of strategies designed to help healthcare professionals to recruit participants to research studies.

Search methods

We performed searches on 5 January 2015 in the following electronic databases: Cochrane Methodology Register, CENTRAL, MEDLINE, EMBASE, CINAHL, British Nursing Index, PsycINFO, ASSIA and Web of Science (SSCI, SCI‐EXPANDED) from 1985 onwards. We checked the reference lists of all included studies and relevant review articles and did citation tracking through Web of Science for all included studies.

Selection criteria

We selected all studies that evaluated a strategy to identify and recruit participants for research via healthcare professionals and provided pre‐post comparison data on recruitment rates.

Data collection and analysis

Two review authors independently screened search results for potential eligibility, read full papers, applied the selection criteria and extracted data. We calculated risk ratios for each study to indicate the effect of each strategy.

Main results

Eleven studies met our eligibility criteria and all were at medium or high risk of bias. Only five studies gave the total number of participants (totalling 7372 participants). Three studies used a randomised design, with the others using pre‐post comparisons. Several different strategies were investigated. Four studies examined the impact of additional visits or information for the study site, with no increases in recruitment demonstrated. Increased recruitment rates were reported in two studies that used a dedicated clinical recruiter, and five studies that introduced an automated alert system for identifying eligible participants. The studies were embedded into trials evaluating care in oncology mainly but also in emergency departments, diabetes and lower back pain.

Authors' conclusions

There is no strong evidence for any single strategy to help healthcare professionals to recruit participants in research studies. Additional visits or information did not appear to increase recruitment by healthcare professionals. The most promising strategies appear to be those with a dedicated resource (e.g. a clinical recruiter or automated alert system) for identifying suitable participants that reduced the demand on healthcare professionals, but these were assessed in studies at high risk of bias.

Plain language summary

Strategies designed to help healthcare professionals to recruit participants to research studies

Introduction

Most trials fail to recruit the number of participants they need within the time they had planned to conduct the study. Recruiting potential participants to research studies involves three stages: identifying, approaching and obtaining the consent of potential participants to join a study. Researchers often rely on healthcare staff, such as doctors and nurses, to identify and approach potential participants. This review examines what strategies could be used by researchers to improve recruitment to studies.

Findings

In a search of the literature in January 2015, we found 11 studies that assessed recruitment strategies used with healthcare staff. Five reported the total number of participants (7372). There were three main strategies:

1. Using an alert system, either a computer system or member of staff to check patient records, to alert staff recruiting participants that someone might be suitable for the study (five studies).

2. Giving additional information about the study to the staff at hospitals or clinics who are recruiting people through visits from the researchers, educational seminars or leaflets (four studies).

3. Using a designated member of staff whose primary role was to recruit participants (two studies).

All the studies identified were of quite low quality, so it is difficult to draw firm conclusions from them. Five studies examined the alert system to identify participants who might be suitable for a study. Alert systems showed some promising results but were not unanimous in their findings. The four studies that evaluated the provision of additional information, visits or education to the sites recruiting participants found that none of the tested strategies led to improved recruitment. The most promising strategy appears to be the employment of someone such as a clinical trials officer or research nurse with the specific task of recruiting participants to research studies. The two studies using this strategy showed improvement in recruitment rates but both were at high risk of bias.

Conclusion

More research is still needed to evaluate the role of a designated person to recruit to research studies.

Background

Many research studies fail to recruit sufficient participants to answer the questions posed (Pocock 2008). When a study fails to generate robust results because recruitment targets are not achieved, and the intended benefits of the research are not realised, there are economic, temporal, ethical and clinical consequences (Barnes 2005; Ewing 2004; McDonald 2006; White 2008). Waste in research has been highlighted as a serious issue across a number of domains, including failure to adopt efficient recruitment processes (Salman 2014).

Recruitment is usually a three‐step process that involves (1) initially identifying potential participants against inclusion and exclusion criteria, (2) approaching or contacting them about the study prior to (3) seeking their agreement to join the study (including obtaining their consent). This may be guided by members of the central research team but might be done by the local healthcare team who have access to participants and their medical notes. However, healthcare professionals can intentionally or unintentionally act as 'gatekeepers'. Gatekeepers are those healthcare professionals with access to potential participants to research studies who decide which potential participants to approach with information about a study. Gatekeepers can potentially introduce bias to patient selection, or influence patient identification and therefore affect the rate of recruitment. This review evaluates strategies designed to help healthcare professionals to increase participant recruitment to research studies.

Description of the problem or issue

The reasons why healthcare providers do not identify and approach participants for studies are complex. They include overprotection of vulnerable participants, the impact on their relationship with participants, perceived lack of skill in introducing a request for research participation, concerns about treatment equipoise, doubts about the necessity of research and the prioritisation of workload (Department of Health 2009; Ives 2009; Mason 2007; White 2008).

The EU data protection directive was adopted across Europe in 1995 and has resulted in much stricter controls of private data (Stratford 1998). In the USA, privacy and data protection policies are less stringent but these have been tightened up. There has been considerable debate about the interpretation of the EU directive and its effect on research access and implementation (Lawlor 2001; Redsell 1998; Strobl 2000). In the UK in particular, the Data Protection Act 1998 places intervening stages between researchers and the target population with Research Ethics Committees (RECs) having responsibility for ensuring an ethical approach to patient recruitment is taken in adherence with the Act and research governance directives. While ethical safeguards are needed, they may have a detrimental effect on patient recruitment and ultimately on the rigour and completion of studies. For example, the data protection regulation has been interpreted by some ethics committees in certain countries as meaning that patients can only be initially approached by the care team who can then refer them to the research team. This adds an additional level of approval in the recruitment process.

Many research studies are multi‐centre or run across hospital departments or community settings. This might mean that several members of a healthcare team are involved in identifying and approaching potential participants on the researchers' behalf. Researchers or the healthcare team might then recruit these people to the study following the giving of informed consent. This has resulted in healthcare professionals acting as gatekeepers for recruitment to research studies and it is important to find ways to facilitate the identification of participants for research studies by healthcare professionals, so that the potential participants can then be given the necessary information and can make their own decision about joining the study.

Current systematic reviews of studies to improve recruitment to research studies do not specifically focus on ways of supporting healthcare professionals in the identification of research participants. For example, the Cochrane Methodology Review by Treweek 2010 focuses on a broader recruitment question (the effects of all strategies on participant recruitment, not just those focusing on interventions aimed at healthcare professionals) and on a single type of research design: recruitment to randomised controlled trials (RCTs). Another Cochrane Methodology Review examines a narrower question on incentives, but again just with randomised trials, by examining the evidence for the effect of disincentives and incentives on the extent to which clinicians invite eligible participants to participate in RCTs of healthcare interventions (Rendell 2007). Bryant 2005 also examined the impact of paying healthcare professionals to recruit participants, but this is also limited to trials. We believe that this Cochrane Methodology Review is the first systematic review to investigate strategies specifically designed to help healthcare professionals to identify, approach and recruit participants that is not limited to recruitment to studies that are RCTs.

Description of the methods being investigated

Non‐clinical members of a research team or clinical members working in a different department or institution may have no direct contact with potential participants. Typically, when working with healthcare professionals to support recruitment of eligible participants, researchers inform healthcare professionals of the study criteria and give them responsibility for identifying and approaching those who might be eligible.

If the study design requires it, healthcare professionals may have to give potential participants a verbal explanation of the study. This may be more difficult if it also includes the need to explain randomisation, rather than research that uses an observational or interview‐based design.

We investigated any proposed strategy that had the potential to help healthcare professionals to systematically identify, approach and recruit people to a research study. This may include inducements or incentives, methods to streamline the identification of suitable people, or methods to reduce the time or administrative burden on healthcare professionals. The final step of study entry (obtaining informed consent) may be conducted either by the healthcare professional or by the research team, and is usually the primary way to measure recruitment.

How these methods might work

It is unclear whether the methods used in research studies are underpinned by clear practical or theoretical rationales for their effectiveness. Our primary interest is behaviour change: change in the actions of healthcare professionals towards, rather than against, identifying eligible participants. It may be that theories of behaviour change will help explain successful methods. One purpose of our review is to examine included research studies for the theorised mechanism of any methods that are found to be successful.

Why it is important to do this review

This review provides an evidence base to enhance the recruitment by healthcare professionals of participants for research studies. This has potential to reduce bias in patient selection, and increase the rate at which participants are identified, approached and recruited, so enabling timely and efficient completion of studies that have greater validity. Given the backdrop of limited access to participants for research studies, it is important that effective strategies to facilitate this are identified.

Objectives

Our primary objective was to identify and assess the effect of strategies designed to help healthcare professionals to recruit participants to research studies.

Methods

Criteria for considering studies for this review

Types of studies

We included randomised trials and controlled before and after studies of different strategies and interventions designed to help healthcare practitioners to recruit participants to any type of research study. These research studies include participants receiving primary, secondary and tertiary care; as either inpatients or outpatients. Healthcare professionals include any registered practitioners and wider members of the clinical team with responsibility for recruiting participants to a study or having access to their medical notes (e.g. nurses, allied healthcare professionals, doctors and clinical trials managers).

Types of data

We included data from any eligible study that assessed the effects of different identification and recruitment strategies designed to improve recruitment of participants by healthcare professionals. These included empirical studies where the primary aim is to evaluate the recruitment strategy or those nested in a study of a clinical question.

We only included studies from 1985 onwards because we believe that the most useful research for today's studies will be from after this date, due to the increase in research governance across the European Union, in particular, and also in the United States since the mid 1980s. The changes in research governance meant that researchers were unable to directly approach participants unless they were part of the clinical team.

Types of methods

Strategies and interventions designed to help healthcare professionals to increase the recruitment of patient participants to research studies. Identification alone was not included as it does not necessarily lead to recruitment, which needed to be the aim of the strategies we wished to investigate.

Types of outcome measures

Primary outcomes

The proportion of the target population recruited to the study.

Secondary outcomes

We assessed the following secondary outcome measures, where available:

Recruitment rate (over time).

Acceptability of recruitment strategy to healthcare professionals identified by collection of qualitative or quantitative data from them. Acceptability includes issues such as the attitudes of healthcare professionals towards the recruitment interventions, including their views on accuracy and utility.

Cost‐effectiveness of the strategy.

Search methods for identification of studies

We had a three‐stage approach to searching for suitable studies:

Electronic search.

Comprehensive search of reference lists of all review articles and included studies, which has been shown to be an effective strategy for systematic reviews (Horsley 2011).

Citation tracking of all relevant reviews and included papers.

There were no language restrictions.

Electronic searches

On 5 January 2015, we searched the following databases from 1985 onwards (or from the inception of the database, if this was after 1985):

Cochrane Methodology Register

Cochrane Central Register of Controlled Trials (CENTRAL)

MEDLINE via Ovid

EMBASE via Ovid

CINAHL via Ovid

British Nursing Index

PsycINFO

Applied Social Sciences Index and Abstracts (ASSIA)

Web of Science

Science Citation Index Expanded (SCI‐EXPANDED)

Social Sciences Citation Index (SSCI)

Search strategies are listed in the appendices (Appendix 1; Appendix 2; Appendix 3). We tested them against 10 seminal papers that we would have expected the search strategy to identify. We used MeSH terms and adapted these key words for the different databases. We recognised that there was no search strategy that would result in high specificity or sensitivity and knew citation tracking and reference list checking would be crucial to identify additional studies. Recruitment is a broad term and is likely to result in a large number of retrieved, but irrelevant, records, so pragmatic decisions were needed to make the search manageable.

Searching other resources

We searched Web of Science conference proceedings. We checked through all reference lists of review articles and included studies. We also citation tracked any included studies. We sought ongoing studies or recently completed studies from the following research registers:

International Register of Controlled Trials (ISRCTN Register)

National Institutes of Health clinical trials database (ClinicalTrials.gov)

World Health Organization (WHO) International Clinical Trials Registry Platform (ICTRP)

United Kingdom Clinical Research Network (UKCRN)

Data collection and analysis

Selection of studies

Teams of two review authors independently screened the titles and abstracts of citations retrieved from the electronic searches (LC and CW, CT and CBW, GE and MF, CS and SB and NP and JH). Where disagreements could not be resolved through discussion, a third person acted as an arbitrator. We sought full‐text articles for potentially eligible studies. Two review authors assessed all potentially eligible studies independently to determine whether they met the eligibility criteria using the same author teams. Any disagreements between review authors were settled through discussion or involvement of a third review author and regular team meetings where studies were presented.

Data extraction and management

We developed and piloted data extraction forms and revised them as appropriate. Two review authors (working in three teams) extracted data independently (CW and CT, MF and GE, CS and LC). Any disagreements that could not be resolved through discussion were discussed with a third review author. We sought additional information from the original researchers where necessary to try to establish total populations where this information was missing. We extracted data regarding details of the underlying trials for which the intervention was attempting to increase recruitment (study method, country, setting, type of participant) and data on the recruitment aspect of the study (research design, the intervention (strategy), the participants, healthcare professionals targeted, comparison, recruitment rate and reported outcomes). We assessed:

the risk of bias in included studies (where appropriate);

the adequacy of allocation concealment (adequate, unclear and inadequate); and

the completeness of reporting on the flow of participants through the trial, e.g. from a CONSORT diagram (where appropriate).

Assessment of risk of bias in included studies

We assessed the risk of bias of each study using the six domains of the Cochrane 'Risk of bias' assessment tool (Higgins 2011). We discuss the characteristics of the studies, as related to risk of bias, with a particular focus on studies with a high risk of bias.

Measures of the effect of the methods

We analysed data according to the type of intervention (e.g. designated member of staff, additional information, additional visits etc.). We calculated the risk ratio with 95% confidence intervals from dichotomous data, which we displayed on forest plots (Lewis 2001), but the small number of eligible studies meant that each plot included a single study only. We grouped interventions where appropriate but were not able to combine data for analysis. Where risk ratios could not be calculated due to insufficient data we described the studies in a narrative manner.
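As an illustration of the calculation described above, the following is a minimal sketch of a risk ratio with a 95% confidence interval computed from dichotomous data, using the standard log-scale approximation for the standard error. The function name `risk_ratio` and the counts in the example are hypothetical and are not taken from any included study; the review's own analyses may have used different software or settings.

```python
import math


def risk_ratio(events_a, total_a, events_b, total_b, z=1.96):
    """Return (RR, lower, upper) for group A relative to group B.

    The interval uses the usual large-sample approximation on the log scale:
    SE(ln RR) = sqrt(1/a - 1/n_a + 1/b - 1/n_b).
    """
    if events_a <= 0 or events_b <= 0:
        raise ValueError("each group needs at least one event for this approximation")
    rr = (events_a / total_a) / (events_b / total_b)
    se_log_rr = math.sqrt(1 / events_a - 1 / total_a + 1 / events_b - 1 / total_b)
    lower = math.exp(math.log(rr) - z * se_log_rr)
    upper = math.exp(math.log(rr) + z * se_log_rr)
    return rr, lower, upper


# Hypothetical counts for illustration only: 30 of 100 eligible people
# recruited with a strategy versus 15 of 100 without it.
rr, lower, upper = risk_ratio(events_a=30, total_a=100, events_b=15, total_b=100)
print(f"RR {rr:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

A confidence interval that excludes 1 suggests a difference in recruitment between the two groups; an interval that includes 1 is compatible with no difference.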

Unit of analysis issues

We analysed all studies using the individual patient as the unit of analysis. If we had identified any cluster‐randomised trials, the unit of analysis would have been the cluster.

Dealing with missing data

We analysed participants' data on an intention‐to‐treat basis. We requested missing data from authors of included studies where necessary (Young 2011), and we were successful in gaining some extra data.

Assessment of heterogeneity

We would have examined any statistical heterogeneity of the results of the included studies using the Chi² test for heterogeneity and quantified the degree of heterogeneity in the results using the I² statistic (Higgins 2011), if we had identified a sufficient number of similar studies. If substantial heterogeneity had been detected, we would have investigated possible explanations and assessed the data using random‐effects analysis, if appropriate. However, there were too few similar trials to do this.
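For readers unfamiliar with these statistics, the sketch below shows one common way to obtain Cochran's Q (the statistic underlying the Chi² test) and I² from study-level effect estimates with inverse-variance weights. This analysis was not performed in the review; the function name `heterogeneity` and the input values are hypothetical and serve only to show the arithmetic.

```python
def heterogeneity(log_effects, standard_errors):
    """Return (Q, df, I2 as a percentage) for a set of study estimates.

    log_effects: effect estimates on the log scale (e.g. log risk ratios).
    standard_errors: the corresponding standard errors.
    """
    # Inverse-variance weights, as in a fixed-effect analysis
    weights = [1 / se ** 2 for se in standard_errors]
    pooled = sum(w * y for w, y in zip(weights, log_effects)) / sum(weights)
    # Cochran's Q: weighted squared deviations from the pooled estimate
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, log_effects))
    df = len(log_effects) - 1
    # I2: share of variability beyond that expected by chance, floored at 0
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, df, i2


# Hypothetical log risk ratios and standard errors for three studies
q, df, i2 = heterogeneity([0.0, 0.5, 1.0], [0.2, 0.2, 0.2])
print(f"Q = {q:.1f} on {df} degrees of freedom, I2 = {i2:.0f}%")
```

Q would then be compared with a Chi² distribution on the stated degrees of freedom to obtain a P value.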

Assessment of reporting biases

We would have made an assessment of publication bias if more than 10 studies of the same intervention had been included, but we found fewer studies than this.

Data synthesis

We would have performed a meta‐analysis to describe the overall results had similar studies been identified but they were not. Instead, we synthesised studies which were not suitable for meta‐analysis by means of a narrative synthesis. Hence, we were unable to view convergence between the meta‐analysis results and the narrative review as an indication of strong evidence of the effect.

Subgroup analysis and investigation of heterogeneity

We grouped studies according to the type of strategy or intervention examined, such as the use of a dedicated member of staff, additional training or information, or use of technology. There were insufficient studies to perform a subgroup analysis but, had there been, we would have looked at the following plausible explanations for heterogeneity:

study quality;

study site (e.g. primary versus secondary care);

studies of recruitment to RCTs rather than to observational studies, which include a theorised mechanism of success.

Sensitivity analysis

There were insufficient studies to perform a sensitivity analysis according to the methodological quality and robustness of the results of the included studies.

Results

Description of studies

There were 11 studies that met the inclusion criteria for this review (see Characteristics of included studies table).

Results of the search

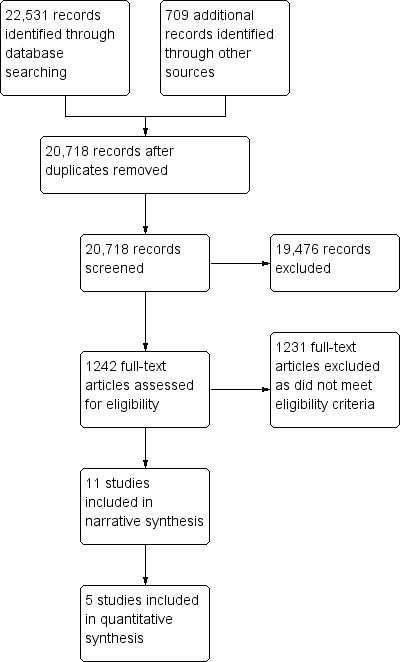

The results of the search are detailed in Figure 1.

Figure 1. Study flow diagram.

The search strategy identified 22,531 potential papers and further searching of reference lists and citation tracking identified an additional 709 titles. Following the removal of duplicates this resulted in 20,718 titles, which we screened. We then excluded 19,476 papers as they did not meet the entry criteria. We accessed 1242 full text papers and, of these, we included 11 studies.
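The flow of records described above can be checked with simple arithmetic. The sketch below uses only the counts reported in the text; the number of duplicates removed and the number of full-text papers excluded are not stated and are derived here, so they should be read as implied values rather than reported ones. The variable names are illustrative.

```python
# Counts reported in the text
records_from_databases = 22_531
records_from_other_sources = 709   # reference lists and citation tracking
titles_screened = 20_718
excluded_at_screening = 19_476
full_text_assessed = 1_242
studies_included = 11

# Derived values (implied by the reported counts, not stated in the text)
total_identified = records_from_databases + records_from_other_sources
duplicates_removed = total_identified - titles_screened
full_text_excluded = full_text_assessed - studies_included

# Consistency check: screened titles minus exclusions equals full texts accessed
assert titles_screened - excluded_at_screening == full_text_assessed

print(f"Total identified: {total_identified}")
print(f"Duplicates removed (implied): {duplicates_removed}")
print(f"Full-text papers excluded (implied): {full_text_excluded}")
```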

Included studies

The 11 included studies were published between 2000 and 2013. We sought additional information from the authors; only two responded (Cox 2005; Monaghan 2007), and missing data were provided by only one of these (Cox 2005).

Of the 11 included studies, five had dichotomous data on recruitment rates: Bell‐Syer 2000, Bradley 2006, Cardozo 2010, Hollander 2004 and Paskett 2002. These studies included a total of 7372 participants and were all comparator studies. Bradley 2006, Hollander 2004, Cardozo 2010, Chen 2013 and Paskett 2002 had a pre and post design and Bell‐Syer 2000 was a non‐randomised controlled trial. Three of the other five studies were randomised trials, which investigated differing recruitment strategies but did not report the total study sample, only the proportion of responses (Kimmick 2005; Lienard 2006; Monaghan 2007). The other two trials used a pre‐post design to identify the proportion of participants recruited to studies and did not report the total sample size for the population from which these participants were recruited (Cox 2005; Embi 2005).

Excluded studies

There are no excluded studies that were close to being eligible for this review.

Risk of bias in included studies

We included three randomised trials and eight cohort studies, most of which had a pre and post design. All the studies identified had a moderate to high risk of bias.

Allocation

Three included studies were randomised trials (Kimmick 2005; Lienard 2006; Monaghan 2007). The studies by Kimmick 2005 and Lienard 2006 had a high risk of allocation bias. Monaghan 2007 outlined the randomisation procedure and stated that this was undertaken using computer‐generated algorithms with stratification undertaken by country, but there was no mention of allocation concealment. The remaining eight studies were not randomised, so had a high risk of allocation bias.

Blinding

One randomised trial had a single‐blind design, which we assessed as having a moderate risk of bias (Monaghan 2007). The other two randomised trials had an open design and we assessed them as having a high risk of bias (Kimmick 2005; Lienard 2006). The other studies used before and after designs, which had a high risk of bias.

Incomplete outcome data

Assessment was made of recruitment rates only and further follow‐up was not relevant for this review.

Selective reporting

All included studies reported recruitment rates as their main outcome. In some studies, it was impossible to identify the total population of participants that the sample was drawn from, making inclusion in the quantitative analysis impossible.

Other potential sources of bias

We identified no other sources of bias.

Effect of methods

There were three main recruitment strategies: an alert system, giving additional input to study sites and using additional personnel.

Alert system

Five studies evaluated the use of an alert system. An alert system was where potential participants were 'flagged up' to the recruiting physician. Three studies used computerised alert systems (Bell‐Syer 2000; Cardozo 2010; Embi 2005). The other two used either a nurse or a clinical trials screening co‐ordinator to alert the doctor of a potential participant (Chen 2013; Paskett 2002).

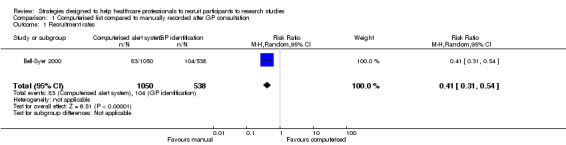

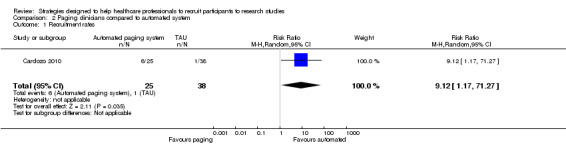

In the non‐randomised controlled trial by Bell‐Syer 2000, a computer system identified a list of potential participants, which was sent to the recruiting physicians in a general practice group. In the control group, a set of general practitioners (GPs) identified participants themselves, as they saw them in clinic. The risk ratio (RR) for enrolment was 0.41 (95% confidence interval (CI) 0.31 to 0.54) favouring personal approach by GPs (Analysis 1.1). However, in the pre‐post test study by Embi 2005, the computerised alert system increased the number of physician‐generated referrals (five before and 42 after, P value = 0.001). The intervention also increased the number of enrolments (five before and 11 after, P value = 0.03). There was also a doubling of their enrolment rates: 2.9 per month before and 6.0 per month after (RR 2.06, 95% CI 1.22 to 3.46; P value = 0.007). This was supported by Cardozo 2010, who used an automated paging system linked to the electronic record to identify inclusion criteria. The alert went straight to the investigators and significantly improved recruitment (RR 9.12, 95% CI 1.17 to 71.27), favouring the automated system (Analysis 2.1).

Analysis 1.1. Comparison 1 Computerised list compared to manually recorded after GP consultation, Outcome 1 Recruitment rates.

Analysis 2.1. Comparison 2 Paging clinicians compared to automated system, Outcome 1 Recruitment rates.

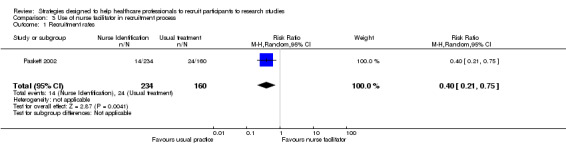

In the before and after study by Paskett 2002, a nurse facilitator identified potential participants for the recruiting physicians. Once again, this did not increase recruitment: the RR was 0.40 (95% CI 0.21 to 0.75), favouring the recruitment period when the nurse facilitator was not present (Analysis 3.1). However, in the study by Chen 2013, a clinical trial screening co‐ordinator identified potentially eligible patients using an electronic medical record and flagged them to the treating physician, which resulted in increased recruitment, from 61 to 73 participants during four‐month trial periods. Following removal of the clinical trial screening co‐ordinator this dropped to 51. However, the total number of patients screened is not reported; only the number of clinic appointments is available. Using clinic visits as an estimate of the total population gives a risk ratio of 0.85 (95% CI 0.6 to 1.2), showing no clear benefit from this form of alert system. Physicians were asked about their attitudes to the alert system and 33 completed surveys were returned (total population unclear), indicating that 67% of alerts were helpful and 70% accurate.

Analysis 3.1. Comparison 3 Use of nurse facilitator in recruitment process, Outcome 1 Recruitment rates.

Additional input to study sites

Three studies attempted to increase recruitment by using a variety of ways of keeping study sites up to date about the trials and providing them with additional information, which was designed to be both informative and educational.

Kimmick 2005 (a randomised trial) compared standard information to an educational intervention including seminars, educational materials, lists of available protocols, monthly mail shots and emails reminders, and a case discussion seminar for different research studies that were running during the trial period. The percentage of participants recruited in year one was 36% in the intervention group compared to 32% in the control group. In year two, recruitment rates were 31% in both the intervention and control groups. The overall number of participants who could have been recruited is not given.

Lienard 2006 conducted a randomised trial to assess whether on‐site monitoring initiatives, including on‐site visits, improved recruitment in the intervention group compared to the control group, which did not receive on‐site initiatives. In the intervention group, 302 participants were recruited from 35 visited centres compared to 271 participants from 34 centres in the control group. This difference was not statistically significant. However, most site visits consisted of an initiation visit only. Sites were not informed as to which group they were in; rather, they were told that the lack of visits was due to budgetary constraints.

The third randomised trial examined targeted communication strategies in the intervention group, which received a communication package based on additional feedback about recruitment rates (Monaghan 2007). This was compared to usual communication from the central trial co‐ordinators (control group). The outcome was time to reach 50% of the recruitment targets. In the intervention group, the time to reach half the recruitment targets was 4.4 months compared to 5.8 months in the control group (P value = 0.68).

Additional personnel

Three studies examined the role of additional personnel on recruitment rates, but each of these investigated a different approach.

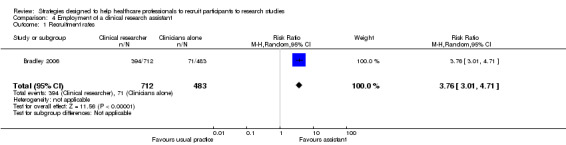

Bradley 2006 evaluated recruitment rates in radiology clinics with full‐time clinical research assistants and protocol modifications to simplify the outcomes and procedures, compared to recruitment by clinicians who were radiographers or nurses utilising a more complex protocol. They found a RR of 3.76 (95% CI 3.01 to 4.71), favouring the full‐time clinical research assistants and simpler protocol (Analysis 4.1). However, it is unclear which part of the intervention was the most effective.

Analysis 4.1. Comparison 4 Employment of a clinical research assistant, Outcome 1 Recruitment rates.

Cox 2005 is a pre and post cohort study to evaluate the introduction of a trials officer to recruit to multiple studies. It found that recruitment rates improved from below 10% to 15% with the use of trial officers to aid recruitment to clinical trials in a cancer network. However, no overall sample size is provided for the pool of people from which trial participants were recruited.

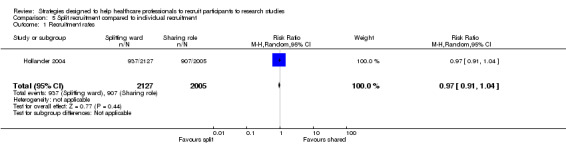

The third study evaluated two ways in which additional personnel might recruit participants (Hollander 2004). It compared two procedures used by medical students to recruit participants into clinical trials in an emergency department: sharing or splitting up recruitment responsibilities. The number of participants recruited was similar in each of the two groups: RR 0.97 (95% CI 0.91 to 1.04) (Analysis 5.1). Seventeen of 24 medical students (71%) found the split strategy to be "more helpful in enrolling subjects" and 20 of 24 (83%) found the split strategy helped them "keep better track" of patients.

Analysis 5.1. Comparison 5 Split recruitment compared to individual recruitment, Outcome 1 Recruitment rates.

Cost‐effectiveness

None of the studies reported the cost‐effectiveness of the intervention.

Discussion

The most promising strategy was making a specific member of staff responsible for recruiting participants to studies. The largest effect size was from the study by Bradley 2006, which showed that using a member of the research staff to recruit, rather than the doctors and nurses delivering clinical care, increased recruitment. However, this study is at high risk of bias and the comparison was confounded because the designated recruiter used a less complicated protocol, which may have contributed to the benefit. It could also be argued that the simplified protocol demonstrates that recruitment can be improved by administering it through less highly trained professionals. Using a designated member of staff has cost implications for researchers, but it has been adopted by trials and introduced nationally by the National Institute for Health Research in the UK to improve recruitment to research studies (Darbyshire 2011). Recruitment rates nationally in the UK have increased in recent years, but it is unclear how much of this is attributable to the provision of designated recruiter research nurses, and how much is attributable to increased infrastructure to support research and other factors.

Simply alerting physicians to potentially eligible participants does not seem to improve recruitment overall (Bell‐Syer 2000; Paskett 2002), although this did seem to work in one study (Embi 2005). It is unclear why this strategy does not improve recruitment overall but one possibility is that the physicians did not trust the systems and preferred to use their own selection criteria. An additional effect on recruitment by the physicians may have been their knowledge that the study was ongoing. More surprising was that additional information, visits and educational strategies did not seem to improve recruitment. Possible explanations for this may be that the healthcare practitioners did not have sufficient time to read or act on the information and, therefore, it had no lasting impact on the recruiting physicians.

There are many limitations in interpreting the findings from these papers. Before and after designs are vulnerable to other variables influencing outcomes (Bradley 2006; Cardozo 2010; Chen 2013). In the study by Bell‐Syer 2000, only one GP practice used the intervention (computer flagging of patients) whereas 18 control practices did not use it. The impact of the intervention needs to be evaluated in more than one site; otherwise, differences may be due to other factors specific to that practice. Hollander 2004 recognised that their findings may not be generalisable because, once again, the study was only in one setting, which they regarded as a 'mature' research environment. It is also difficult to know how long an intervention needs to be in place before a difference would be noted. Chen 2013 and Embi 2005 had intervention periods that lasted only four months and, although Kimmick 2005 had an intervention period of more than one year, they too felt this may not be sufficient. In the study by Cox 2005, where a trial officer was introduced, they noted that a settling‐in period was required to embed the role.

Evaluating strategies to improve recruitment is difficult because these are complex interventions and other factors can impact upon outcomes. The study by Lienard 2006 showed that there might be a 'dose' response. The intervention was to visit recruitment sites, but only 91% were visited, and most of these received only the site initiation visit and were not visited again. Also, 6% of the control sites had at least one visit, which further confounded the findings. Monaghan 2007 highlighted that other incentives at play in the control arm, such as inclusion in additional research in the future, may have reduced any potential impact of the intervention.

It is problematic to try to analyse the proportion of the target population recruited for the study when there are challenges in agreeing and defining the target population for a particular study. There are debates about how this should be defined and applied, for example whether it is all people with the target condition, or only those with the target condition referred to a particular service or the proportion of eligible patients who are referred to the study once eligibility has been clarified. The definition of a target population was poorly applied in these studies.

This review focused primarily on identifying and approaching potential participants rather than seeking their agreement through informed consent procedures. Failure to approach potential participants prevents them from making an informed decision as to whether they wish to know more about a study and potentially join or decline to participate. This can lead to people becoming disenfranchised from potential research.

Overall it may be most effective if a combination of strategies is used, but this review suggests that the evidence for any benefit of any single component is minimal. More studies incorporating nested recruitment evaluations into research studies would help to evaluate this further, and better reporting of recruitment strategies in all research studies might also provide observational evidence that could help in the design of effective strategies.

Summary of main results

Strategies to improve recruitment that appear to be beneficial include the employment of a staff member dedicated to the recruitment tasks but the two studies of this were not randomised comparisons and are at high risk of bias (Bradley 2006; Cox 2005). However, when a full‐time employee was used to prompt oncologists to recruit for trials, this did not have a positive effect (Paskett 2002). Mixed results have been demonstrated for the use of electronic alert systems in different settings. In a large US academic healthcare system, alerts had a positive effect on recruitment (Embi 2005), but they did not help in recruitment with GPs in the UK (Bell‐Syer 2000).

Overall completeness and applicability of evidence

Only 11 studies were included in this review, but these came from a thorough search strategy, including citation tracking. The evidence is relevant to the question, but is not of a sufficient quality to draw firm conclusions.

Quality of the evidence

Overall, the evidence was of low quality.

Potential biases in the review process

None of the authors of this review have any affiliation with the included studies and independent data extraction was maintained throughout.

Agreements and disagreements with other studies or reviews

This review is similar in scope to the Cochrane Methodology Review by Treweek 2010, which identified randomised trials evaluating recruitment strategies to randomised trials. We identified three studies in common (Kimmick 2005; Lienard 2006; Monaghan 2007). Neither review was able to gain sufficient data to report effect sizes for these studies and both rated them similarly.

Authors' conclusions

Implication for methodological research.

Research to evaluate the use of staff to improve recruitment is recommended. These staff would need to be paid specifically for this role as shown in these studies, and this might be evaluated in a cluster‐randomised trial. There might also be opportunities to conduct SWAT (Studies Within A Trial) (Smith 2013; Smith 2015), and outlines for some of these are available at http://go.qub.ac.uk/SWAT‐SWAR.

What's new

| Date | Event | Description |
|---|---|---|
| 29 February 2016 | Amended | Contact details updated. |

Acknowledgements

We would like to acknowledge the support of the Methodology theme of the Cancer Experiences Collaborative (CECo), who have supported this review.

Appendices

Appendix 1. MEDLINE search strategy

1. patient selection.mp. or exp Patient Selection/

2. patient participation.mp. or Patient Participation/

3. incentives.mp. or Motivation/

4. "Health Services Needs and Demand"/ or "Salaries and Fringe Benefits"/ or Gift Giving/ or inducement.mp. or "Fees and Charges"/

5. Financing, Personal/ or Reimbursement, Incentive/ or pay$.mp. or Cost‐Benefit Analysis/

6. compensation.mp. or "Compensation and Redress"/

7. gatekeeping.mp. or Gatekeeping/

8. 1 or 2

9. 3 or 4 or 5 or 6 or 7

10. 8 and 9
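The way the numbered lines of this strategy combine can be sketched with set operations. This is only an illustration: the record identifiers and the `line_hits` dictionary below are hypothetical and do not come from the actual search results.

```python
# Hypothetical record IDs retrieved by search lines 1 to 7 (illustrative only).
line_hits = {
    1: {101, 102, 103},   # patient selection
    2: {103, 104},        # patient participation
    3: {102, 201},        # incentives / motivation
    4: {202},             # inducement and related headings
    5: {104, 203},        # payment-related headings
    6: set(),             # compensation
    7: {101, 105},        # gatekeeping
}

# Line 8: "1 or 2" is the union of the participation/selection concepts.
line8 = line_hits[1] | line_hits[2]

# Line 9: "3 or 4 or 5 or 6 or 7" is the union of the incentive/gatekeeping concepts.
line9 = set().union(*(line_hits[n] for n in range(3, 8)))

# Line 10: "8 and 9" keeps only records found under both concept groups.
line10 = line8 & line9

print(sorted(line10))
```

In this sketch, a record reaches the final set only if it matches at least one term from each of the two concept groups.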

Appendix 2. PsycINFO search strategy

1. (recruit* OR patient AND selection OR patient AND participation OR particip*).ti,ab

2. (incentiv* OR induc* OR gatekeep* OR reward* OR altruist* OR coerci*).ti,ab

3. 1 AND 2

4. (recruit* OR patient AND selection OR patient AND participat* OR subjects).ti,ab

5. 2 AND 4

6. (recruit* OR patient AND selection OR patient AND participat* OR subjects).ti,ab

7. (incentiv* OR induc* OR gatekeep* OR reward* OR altruist* OR coerci*).ti,ab

8. 6 AND 7

(Limited to: Publication Year 1980‐Current and Human and English Language and (Population Groups Human))

Appendix 3. ASSIA search strategy

((recruit* or (patient selection) or (patient participat*)) or subjects) and ((incentiv* or induc* or gatekeep*) or (reward* or altruist* or coerci*))

Data and analyses

Comparison 1. Computerised list compared to manually recorded after GP consultation.

| Outcome or subgroup title | No. of studies | No. of participants | Statistical method | Effect size |
|---|---|---|---|---|
| 1 Recruitment rates | 1 | 1588 | Risk Ratio (M‐H, Random, 95% CI) | 0.41 [0.31, 0.54] |

Comparison 2. Paging clinicians compared to automated system.

| Outcome or subgroup title | No. of studies | No. of participants | Statistical method | Effect size |
|---|---|---|---|---|
| 1 Recruitment rates | 1 | 63 | Risk Ratio (M‐H, Random, 95% CI) | 9.12 [1.17, 71.27] |

Comparison 3. Use of nurse facilitator in recruitment process.

| Outcome or subgroup title | No. of studies | No. of participants | Statistical method | Effect size |
|---|---|---|---|---|
| 1 Recruitment rates | 1 | 394 | Risk Ratio (M‐H, Random, 95% CI) | 0.40 [0.21, 0.75] |

Comparison 4. Employment of a clinical research assistant.

| Outcome or subgroup title | No. of studies | No. of participants | Statistical method | Effect size |
|---|---|---|---|---|
| 1 Recruitment rates | 1 | 1195 | Risk Ratio (M‐H, Random, 95% CI) | 3.76 [3.01, 4.71] |

Comparison 5. Split recruitment compared to individual recruitment.

| Outcome or subgroup title | No. of studies | No. of participants | Statistical method | Effect size |
|---|---|---|---|---|
| 1 Recruitment rates | 1 | 4132 | Risk Ratio (M‐H, Random, 95% CI) | 0.97 [0.91, 1.04] |
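Each comparison above contains a single study, so the reported effect is in essence that study's own risk ratio. As a minimal sketch of how a risk ratio and an approximate 95% confidence interval can be obtained from a 2×2 table, the following uses the standard log‐scale (Wald) interval. The function name `risk_ratio` and the counts in the usage example are hypothetical and are not taken from any included study; the sketch also assumes no zero cells and does not reproduce the review software's exact calculations.

```python
import math


def risk_ratio(events_a, total_a, events_b, total_b, z=1.96):
    """Return (RR, lower, upper) for group A versus group B.

    Uses the usual large-sample standard error of log(RR);
    assumes every event count is greater than zero.
    """
    risk_a = events_a / total_a
    risk_b = events_b / total_b
    rr = risk_a / risk_b
    # Standard error of log(RR) for a single 2x2 table.
    se_log_rr = math.sqrt(
        1 / events_a - 1 / total_a + 1 / events_b - 1 / total_b
    )
    lower = math.exp(math.log(rr) - z * se_log_rr)
    upper = math.exp(math.log(rr) + z * se_log_rr)
    return rr, lower, upper


# Hypothetical example: 30 of 100 recruited with a strategy, 15 of 100 without.
rr, lower, upper = risk_ratio(30, 100, 15, 100)
print(f"RR {rr:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

A risk ratio above 1 favours the first group, and an interval that includes 1 indicates that the data are compatible with no difference in recruitment.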

Characteristics of studies

Characteristics of included studies [ordered by study ID]

Bell‐Syer 2000.

| Characteristic | Details |
|---|---|
| Methods | Non‐randomised controlled trial of recruitment strategy to trials for interventions for treating lower back pain (acupuncture and exercise) |
| Data | Primary care in the UK, including both men and women with lower back pain, aged 18 to 60 years; n = 1050 |
| Comparisons | Computer referral list (1 practice) compared to general practitioner personal referrals (18 practices: 74 GPs) |
| Outcomes | Proportion of participants recruited to trial |
| Notes | See Analysis 1.1 |

Risk of bias

| Item | Authors' judgement | Description |
|---|---|---|
| Random sequence generation (selection bias)? | No | Not randomised |
| Allocation concealment (selection bias)? | No | Not randomised |
| Blinding (performance bias and detection bias)? | No | Not randomised |
| Incomplete outcome data (attrition bias)? | No | |
| Selective reporting (reporting bias)? | No | |

Bradley 2006.

| Characteristic | Details |
|---|---|
| Methods | Pre and post design to evaluate a recruitment strategy in palliative care radiotherapy research studies |
| Data | Secondary care (outpatient radiotherapy clinics) in Canada. Men and women aged 23 to 96 years; 1195 participants |
| Comparisons | Full‐time clinical research assistants employed to assist in recruiting participants for palliative care studies and a simplified protocol with exclusion criteria that were less strict compared to clinicians who were research nurses and research radiographers with more complex questionnaires and follow‐up and more restrictive eligibility criteria |
| Outcomes | Percentage accrual before and after introduction of the intervention |
| Notes | See Analysis 4.1 |

Risk of bias

| Item | Authors' judgement | Description |
|---|---|---|
| Random sequence generation (selection bias)? | No | Not randomised |
| Allocation concealment (selection bias)? | No | Not randomised |
| Blinding (performance bias and detection bias)? | No | Not randomised |
| Incomplete outcome data (attrition bias)? | No | |
| Selective reporting (reporting bias)? | No | |

Cardozo 2010.

| Characteristic | Details |
|---|---|
| Methods | Pre and post design to evaluate a recruitment strategy for research studies based in an emergency department |
| Data | US Emergency Department. No trial description but the trial included young women (15 to 20 years) with a chief complaint including the word 'ankle' in the triage notes |
| Comparisons | Clinicians were asked to page the investigator about a potentially eligible participant and were informed about the study by "an in service", posters and emails. Intervention was an automated paging system based upon the electronic records to page the investigator. ED staff were unaware of the implementation of the paging alert system |
| Outcomes | Before the intervention: 1/17 potentially eligible patients were identified. During the intervention period: 7/7 potentially eligible patients were identified by the automated system, but only 1 of these was identified by the staff. This has a risk ratio of 9.12 (95% CI 1.17 to 71.27) |
| Notes | See Analysis 2.1 |

Risk of bias

| Item | Authors' judgement | Description |
|---|---|---|
| Random sequence generation (selection bias)? | No | Not randomised |
| Allocation concealment (selection bias)? | No | Not randomised |
| Blinding (performance bias and detection bias)? | Yes | Not randomised |
| Incomplete outcome data (attrition bias)? | No | None stated |
| Selective reporting (reporting bias)? | No | Data appear complete |
| Other bias? | No | |

Chen 2013.

| Characteristic | Details |
|---|---|
| Methods | Pre and post intervention study to evaluate recruitment to 21 phase II to IV oncology clinical trials |
| Data | Canada. Cancer patients. All adults but no details regarding gender or age |
| Comparisons | Clinical trial screening co‐ordinator to identify eligible patients. They had no prior clinical experience and minimal knowledge about clinical trials. They reviewed eligibility using electronic medical records, then completed a clinical trial notification report for potentially eligible participants 1 day before the clinic visit. This was attached to the medical notes to flag the patient to oncologists. This was compared to screening by an oncologist only, before and after the intervention of the clinical screening co‐ordinator |
| Outcomes | Before the intervention: 61 participants were recruited, during the intervention 73 were recruited and after the intervention 51 recruited; no overall sample size was reported. 33 surveys on the acceptability of the intervention by oncologists demonstrated that 67% of the 'flags' were helpful and 70% were accurate |
| Notes | — |

Risk of bias

| Item | Authors' judgement | Description |
|---|---|---|
| Random sequence generation (selection bias)? | No | |
| Allocation concealment (selection bias)? | No | |
| Blinding (performance bias and detection bias)? | No | |
| Incomplete outcome data (attrition bias)? | No | |
| Selective reporting (reporting bias)? | No | |
| Other bias? | No | |

Cox 2005.

| Characteristic | Details |
|---|---|
| Methods | Prospective case study including a pre and post intervention comparison in cancer clinical trials |
| Data | UK cancer patients (no patient details reported). Quantitative and qualitative methods |
| Comparisons | Introduction of a clinical trials officer versus no clinical trials officer |
| Outcomes | Proportion recruited to multiple studies. No overall sample size was reported |
| Notes | — |

Risk of bias

| Item | Authors' judgement | Description |
|---|---|---|
| Random sequence generation (selection bias)? | No | Not randomised |
| Allocation concealment (selection bias)? | No | Not randomised |
| Blinding (performance bias and detection bias)? | Unclear | Not randomised |
| Incomplete outcome data (attrition bias)? | Unclear | Difficult to apply because this is a case study with a mixed methods design |
| Selective reporting (reporting bias)? | Unclear | Difficult to apply because this is a case study with a mixed methods design |
| Other bias? | Unclear | Objective outcome reported |

Embi 2005.

| Characteristic | Details |
|---|---|
| Methods | Pre and post intervention comparison study for a diabetic trial |
| Data | Outpatients in academic health systems in the USA. 114 physicians based at selected health clinics with electronic records. No patient data reported |
| Comparisons | Before: traditional recruitment (12 months) including posting flyers, memos and discussions at meetings. After intervention: clinical trial alerts that triggered potentially eligible participants through electronic records during consultations (4 months) |
| Outcomes | Enrolment rate. Enrolment rate over time. No overall sample size recorded |
| Notes | — |

Risk of bias

| Item | Authors' judgement | Description |
|---|---|---|
| Random sequence generation (selection bias)? | No | Not randomised |
| Allocation concealment (selection bias)? | No | Not randomised |
| Blinding (performance bias and detection bias)? | No | Not randomised |
| Incomplete outcome data (attrition bias)? | Yes | Reported on data from all physicians |
| Selective reporting (reporting bias)? | Yes | Reported on all outcomes |
| Other bias? | Unclear | Objective outcome in the form of enrolment rates |

Hollander 2004.

| Characteristic | Details |
|---|---|
| Methods | Pre and post intervention of a recruitment strategy for 6 clinical studies in an emergency department |
| Data | Emergency Department in Pennsylvania, USA. 4132 eligible participants |
| Comparisons | Recruitment over 2 15‐day periods using 2 different approaches to recruitment: 1. 2 students sharing responsibility across 24‐hour emergency department rooms; 2. 2 students splitting responsibility across 12 emergency rooms |
| Outcomes | Overall participants recruited into 6 studies. Recruitment for each individual study. Recruitment rate for each individual study (participants per day). Students' preference for strategy |
| Notes | Authors noted that context‐specific strategies may have influenced the outcomes (e.g. electronic records and a status board). See Analysis 5.1 |

Risk of bias

| Item | Authors' judgement | Description |
|---|---|---|
| Random sequence generation (selection bias)? | No | Not randomised |
| Allocation concealment (selection bias)? | No | Not randomised |
| Blinding (performance bias and detection bias)? | No | Not randomised |
| Incomplete outcome data (attrition bias)? | Yes | Reported on incidence of recruitment to studies |
| Selective reporting (reporting bias)? | Yes | Reported on all outcomes mentioned |
| Other bias? | Unclear | Objective outcome reported |

Kimmick 2005.

| Characteristic | Details |
|---|---|
| Methods | Randomised trial of a recruitment strategy for older people in cancer treatment trials |
| Data | Centres of a cancer and leukaemia patient group |
| Comparisons | Standard information or generic educational intervention including: 1. Educational seminar; 2. Educational materials; 3. List of available protocols for use on charts; 4. Monthly email reminder for a year; 5. Case discussion seminar |
| Outcomes | Percentage accrual of older participants during first and second years after educational seminar; no sample size was reported |
| Notes | — |

Risk of bias

| Item | Authors' judgement | Description |
|---|---|---|
| Random sequence generation (selection bias)? | Unclear | No information given |
| Allocation concealment (selection bias)? | Unclear | No information given |
| Blinding (performance bias and detection bias)? | No | Not blinded although would have been difficult to do |
| Incomplete outcome data (attrition bias)? | Yes | Intention‐to‐treat analysis |
| Selective reporting (reporting bias)? | Yes | Reported on all outcomes |

Lienard 2006.

| Characteristic | Details |
|---|---|
| Methods | Randomised trial of a recruitment strategy for a randomised trial of adjuvant chemotherapy regimens for women with breast cancer |
| Data | 135 hospitals (centres) in France. Total number of participants eligible not reported |
| Comparisons | 68 centres allocated to receive on‐site monitoring initiatives versus 67 centres that were not visited for monitoring. 6 centres in the control group requested visits but were analysed based on intention‐to‐treat |
| Outcomes | Number of randomised participants and centres |
| Notes | Authors state no significant difference between groups but insufficient data are reported to calculate effect size. Those participating in the study were not informed of the randomisation to receive or not to receive a visit. They were told budgetary constraints were the reason for some centres not being visited. Study closed prematurely |

Risk of bias

| Item | Authors' judgement | Description |
|---|---|---|
| Random sequence generation (selection bias)? | Unclear | No information given |
| Allocation concealment (selection bias)? | Yes | Participating studies were not informed of the random allocation; no mention of blinding reported |
| Blinding (performance bias and detection bias)? | No | No blinding |
| Incomplete outcome data (attrition bias)? | No | No report of numbers of eligible participants |
| Selective reporting (reporting bias)? | No | Not possible to determine rates of recruitment because the total number of people from whom the study participants were recruited is not given |

Monaghan 2007.

| Characteristic | Details |
|---|---|
| Methods | Randomised trial of a recruitment strategy in multi‐centre study about diabetes and vascular disease. The regional co‐ordinating centres were blinded as to the randomisation group |
| Data | Clinical sites in an international study. Age and sex/gender are not reported |
| Comparisons | Additional communication strategies (communication package based on additional individually tailored feedback about recruitment) (n = 85 centres) versus usual communication strategies between the central trial co‐ordinators and the clinical sites in a large multi‐centre randomised trial |
| Outcomes | Time to reaching 50% of the recruitment targets. No sample size is reported |
| Notes | — |

Risk of bias

| Item | Authors' judgement | Description |
|---|---|---|
| Random sequence generation (selection bias)? | Yes | Computer‐generated algorithm with stratification by country |
| Allocation concealment (selection bias)? | Unclear | Not reported |
| Blinding (performance bias and detection bias)? | Unclear | Single‐blinded |
| Incomplete outcome data (attrition bias)? | Yes | |
| Selective reporting (reporting bias)? | Yes | |

Paskett 2002.

| Characteristic | Details |
|---|---|
| Methods | Pre and post intervention study and a site comparison study for a recruitment strategy for rural patients with cancer to enrol in clinical trials |
| Data | Primary care setting in the USA. Women 29 to 100 years, mean 66 years. Colorectal cancer. Men and women |
| Comparisons | Investigated the role of a nurse who was responsible for alerting physicians about clinical trials that might be appropriate for their patients, plus a quarterly newsletter about cancer and the clinical trial sent to the GP |
| Outcomes | Rate of recruitment into clinical trials in 1996 post intervention |
| Notes | See Analysis 3.1 |

Risk of bias

| Item | Authors' judgement | Description |
|---|---|---|
| Random sequence generation (selection bias)? | No | Not randomised |
| Allocation concealment (selection bias)? | No | Not randomised |
| Blinding (performance bias and detection bias)? | No | Not randomised |
| Incomplete outcome data (attrition bias)? | Unclear | Not all numbers in the trials reported for comparisons |
| Other bias? | Unclear | Objective outcome recorded |

CI: confidence interval; ED: Emergency Department; GP: general practitioner

Differences between protocol and review

There are no differences between the protocol and the review, and we have used the relevant parts of the Methods section to note where we were unable to implement our plans because of a lack of included studies or data.

Contributions of authors

The protocol was predominantly written by NP and CW, but with significant input from others in the team (MF, GE, CS, CT, JH, LC, SB, CBW). Screening of papers was conducted by all members of the team and data extraction was done by MF, GE, CS, CT, LC and CW. SB entered the data for the tables and NP wrote the first draft of the review.

Sources of support

Internal sources

- Lancaster University, UK. Salary support for researcher NP

- University of Manchester, UK. Salary support for CW, CT, SB, CBW, LC

- Loughborough University, UK. Salary support for CS

- University of Southampton and Cardiff University, UK. Salary support for JH

External sources

- Cancer Experiences Collaborative (CECo), UK. Funding for conducting the review, as well as meeting costs.

- Macmillan Cancer Support, UK. Funded the salary for two researchers (SB and MF) who worked part‐time on the review, through a Macmillan Cancer Support Post Doctoral Fellowship

Declarations of interest

NP, CW, MF, GE, CS, CT, JH, LC, SB and CBW state there are no declarations of interest.

Edited (no change to conclusions)

References

References to studies included in this review

Bell‐Syer 2000 {published data only}

- Bell‐Syer SE, Moffett JA. Recruiting patients to randomized trials in primary care: principles and case study. Family Practice 2000;17(2):187‐91.

Bradley 2006 {published data only}

- Bradley N, Chow E, Tsao M, Danjoux C, Barnes EA, Hayter C, et al. Reasons for poor accrual in palliative radiation therapy research studies. Supportive Cancer Therapy 2006;3(2):110‐9.

Cardozo 2010 {published data only}

- Cardozo E, Meurer WJ, Smith BL, Holschen JC. Utility of an automated notification system for recruitment of research subjects. Emergency Medicine Journal 2010;27:786‐7.

Chen 2013 {published data only}

- Chen L, Grant J, Cheung WY, Kennecke HF. Screening intervention to identify eligible patients and improve accrual to phase II‐IV oncology clinical trials. Journal of Oncology Practice 2013;9(4):174‐81.

Cox 2005 {published data only}

- Cox K, Avis M, Wilson E, Elkan R. An evaluation of the introduction of Clinical Trial Officer roles into the cancer clinical trial setting in the UK. European Journal of Cancer Care 2005;14:448‐56.

Embi 2005 {published data only}

- Embi PJ, Jain A, Clark J, Bizjack S, Hornung R, Harris CM. Effect of a clinical trial alert system on physician participation in trial recruitment. Archives of Internal Medicine 2005;165(19):2272‐7.

Hollander 2004 {published data only}

- Hollander JE, Sparano DM, Karounos M, Sites FD, Shofer FS. Studies in emergency department data collection: shared versus split responsibility for patient enrollment. Academic Emergency Medicine 2004;11(2):200‐3.

Kimmick 2005 {published data only}

- Kimmick GG, Peterson BL, Kornblith AB, Mandelblatt J, Johnson JL, Wheeler J, et al. Improving accrual of older persons to cancer treatment trials: a randomized trial comparing an educational intervention with standard information. Journal of Clinical Oncology 2005;23(10):2201‐7.

Lienard 2006 {published data only}

- Lienard JL, Quinaux E, Fabre‐Guillevin E, Piedbois P, Jouhsuf A, Decoster G, et al on behalf of the European Association. Impact of on‐site initiation visits on patient recruitment and data quality in a randomized trial of adjuvant chemotherapy for breast cancer. Clinical Trials 2006;3:486‐92.

Monaghan 2007 {published data only}

- Monaghan H, Richens A, Colman S, Currie R, Girgis S, Jayne K, et al. A randomised trial of the effects of an additional communication strategy on recruitment into a large‐scale, multi‐centre trial. Contemporary Clinical Trials 2007;28:1‐5.

Paskett 2002 {published data only}

- Paskett ED, Cooper MR, Stark N, Ricketts TC, Tropman S, Hatzell T, et al. Clinical trial enrollment of rural patients with cancer. Cancer Practice 2002;10(1):28‐35.

Additional references

Barnes 2005

- Barnes S, Gott M, Payne S, Parker C, Seamark D, Gariballa S, et al. Recruiting older people into a large, community‐based study of heart failure. Chronic Illness 2005;1:321‐9.

Bryant 2005

- Bryant J, Powell J. Payment to healthcare professionals for patient recruitment to trials: a systematic review. BMJ 2005;331:1377‐8.

Darbyshire 2011

- Darbyshire J, Sitzia J, Cameron D, Ford G, Littlewood S, Kaplan R, et al. Extending the clinical research network approach to all of healthcare. Annals of Oncology 2011;22(Suppl 7):vii36‐43.

Data Protection Act 1998

- UK Parliament. Data Protection Act. HMSO, London 1998.

Department of Health 2009

- Department of Health. Requirements to support research in the NHS. Department of Health, London 2009.

Ewing 2004

- Ewing G, Rogers M, Barclay S, McCabe J, Martin A, Todd C. Recruiting patients into a primary care based study of palliative care: why is it so difficult?. Palliative Medicine 2004;18:452‐9.

Higgins 2011

- Higgins J, Altman D. Chapter 8: Assessing risk of bias in included studies. In: Higgins JPT, Green S (editors). Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 [updated March 2011]. The Cochrane Collaboration, 2011. Available from www.cochrane‐handbook.org.

Horsley 2011

- Horsley T, Dingwall O, Sampson M. Checking reference lists to find additional studies for systematic reviews. Cochrane Database of Systematic Reviews 2011, Issue 8. [DOI: 10.1002/14651858.MR000026.pub2]

Ives 2009

- Ives J, Draper H, Damery S, Wilson S. Do family doctors have an obligation to facilitate research?. Family Practice 2009;26:543‐8.

Lawlor 2001

- Lawlor DA, Stone T. Public health and data protection: an inevitable collision or potential for a meeting of minds?. International Journal of Epidemiology 2001;30:1221‐5.

Lewis 2001

- Lewis S, Clarke M. Forest plots: trying to see the wood and the trees. BMJ 2001;322:1479‐80. [PUBMED: 11408310]

Mason 2007

- Mason VL, Shaw A, Wiles NJ, Mulligan J, Peters TJ, Sharp D, et al. GPs' experiences of primary care mental health research: a qualitative study of the barriers to recruitment. Family Practice 2007;24:518‐25.

McDonald 2006

- McDonald AM, Knight RC, Campbell MK, Entwistle VA, Grant AM, Cook JA, et al. What influences recruitment to randomised controlled trials? A review of trials funded by two UK funding agencies. Trials 2006;7:9.

Pocock 2008

- Pocock SJ. The size of a clinical trial. Clinical Trials: A Practical Approach. Chichester: John Wiley & Sons, 2008.

Redsell 1998

- Redsell SA, Cheater FM. The Data Protection Act (1998): implications for health researchers. Journal of Advanced Nursing 2001;35:508‐13.

Rendell 2007

- Rendell JM, Merritt RK, Geddes J. Incentives and disincentives to participation by clinicians in randomised controlled trials. Cochrane Database of Systematic Reviews 2007, Issue 2. [DOI: 10.1002/14651858.MR000021.pub3]

Salman 2014

- Salman RA, Beller E, Kagan J, Hemminki E, Phillips RS, Savulescu J, et al. Increasing value and reducing waste in biomedical research regulation and management. Lancet 2014;383:176‐85.

Smith 2013

- Smith V, Clarke M, Devane D, Begley C, Shorter G, Maguire L. SWAT 1: What effects do site visits by the principal investigator have on recruitment in a multicentre randomized trial? Journal of Evidence‐based Medicine 2013;6(3):136‐7. [PUBMED: 24325369]

Smith 2015

- Smith V, Clarke M, Begley C, Devane D. SWAT‐1: The effectiveness of a 'site visit' intervention on recruitment rates in a multi‐centre randomised trial. Trials 2015;16:211. [DOI: 10.1186/s13063-015-0732-z]

Stratford 1998

- Stratford JS, Stratford J. Data protection and privacy in the United States and Europe. International Association for Social Science Information Services and Technology 1998;Fall:17‐20.

Strobl 2000

- Strobl J, Cave E, Walley T. Data protection legislation: interpretation and barriers to research. BMJ 2000;321:890‐2.

Treweek 2010

- Treweek S, Pitkethly M, Cook J, Kjeldstrøm M, Taskila T, Johansen M, et al. Strategies to improve recruitment to randomised controlled trials. Cochrane Database of Systematic Reviews 2010, Issue 4. [DOI: 10.1002/14651858.MR000013.pub5]

White 2008

- White C, Hardy J. Gatekeeping from palliative care research trials. Progress in Palliative Care 2008;16:167‐71.

Young 2011

- Young T, Hopewell S. Methods for obtaining unpublished data. Cochrane Database of Systematic Reviews 2011, Issue 11. [DOI: 10.1002/14651858.MR000027.pub2]

References to other published versions of this review

Preston 2012

- Preston NJ, Farquhar MC, Walshe CE, Stevinson C, Ewing G, Calman LA, Burden S, Brown Wilson C, Hopkinson JB, Todd C. Strategies to increase participant recruitment to research studies by healthcare professionals. Cochrane Database of Systematic Reviews 2012, Issue 9. [DOI: 10.1002/14651858.MR000036]