Abstract

The research progress in multimodal learning has grown rapidly over the last decade in several areas, especially in computer vision. The growing potential of multimodal data streams and deep learning algorithms has contributed to the increasing universality of deep multimodal learning. This involves the development of models capable of processing and analyzing the multimodal information uniformly. Unstructured real-world data can inherently take many forms, also known as modalities, often including visual and textual content. Extracting relevant patterns from this kind of data is still a motivating goal for researchers in deep learning. In this paper, we seek to improve the understanding of key concepts and algorithms of deep multimodal learning for the computer vision community by exploring how to generate deep models that consider the integration and combination of heterogeneous visual cues across sensory modalities. In particular, we summarize six perspectives from the current literature on deep multimodal learning, namely: multimodal data representation, multimodal fusion (i.e., both traditional and deep learning-based schemes), multitask learning, multimodal alignment, multimodal transfer learning, and zero-shot learning. We also survey current multimodal applications and present a collection of benchmark datasets for solving problems in various vision domains. Finally, we highlight the limitations and challenges of deep multimodal learning and provide insights and directions for future research.

Keywords: Applications, Computer vision, Datasets, Deep learning, Sensory modalities, Multimodal learning

Introduction

In recent years, much progress has been made in the field of artificial intelligence thanks to the implementation of machine learning methods. In general, these methods involve a variety of intelligent algorithms for pattern recognition and data processing. Usually, several sensors with specific characteristics are employed to obtain and analyze global and local patterns in a uniform way. These sensors are generally very versatile in terms of coverage, size, manufacturing cost, and accuracy. Besides, the availability of vast amounts of data (big data), coupled with significant technological advances and substantial improvements in hardware implementation techniques, has led the machine learning community to turn to deep learning to find sustainable solutions to a given problem. Deep learning, also known as representation-based learning [2], is a particular approach to machine learning that is gaining popularity due to its predictive power and portability. The work presented in [3] showed a technical transition from machine learning to deep learning by systematically highlighting the main concepts, algorithms, and trends in deep learning. In practice, the extraction and synthesis of rich information from a multidimensional data space require the use of an intermediate mechanism to facilitate decision making in intelligent systems. Deep learning has been used in many practices, and it has been shown that its performance can be greatly improved in several disciplines, including computer vision. This line of research is part of the rich field of deep learning, which typically deals with visual information of different types and scales to perform complex tasks. Currently, the deep learning algorithms have demonstrated their potential and applicability in other active areas such as natural language processing, machine translation, and speech recognition, performing comparably or even better than humans.

A large number of computer vision researchers focus each year on developing vision systems that enable machines to mimic human behavior. For example, some intelligent machines can use computer vision technology to simultaneously map their behavior, detect potential obstacles, and track their location. By applying computer vision to multimodal applications, complex operational processes can be automated and made more efficient. Here, the key challenge is to extract visual attributes from one or more data streams (also called modalities) with different shapes and dimensions by learning how to fuse the extracted heterogeneous features and project them into a common representation space, which is referred to as deep multimodal learning in this work.

In many cases, a set of heterogeneous cues from multiple modalities and sensors can provide additional knowledge that reflects the contextual nature of a given task. In the arena of multimodality, a given modality depends on how specific media and related features are structured within a conceptual architecture. Such modalities may include textual, visual, and auditory modalities, involving specific ways or mechanisms to encode heterogeneous information harmoniously.

In this study, we mainly focused on visual modalities, such as images as a set of discrete signals from a variety of image sensors. The environment in which we live generally includes many modalities in which we can see objects, hear tones, feel textures, smell aromas, and so on. For example, the audiovisual modalities are complementary to each other, where the acoustic and visual attributes come from two different physical entities. However, combining different modalities or data sources to improve performance is still often an attractive task from one standpoint, but in practice, it makes little sense to distinguish between noise, concepts, and conflicts between data sources. Moreover, the lack of labeled multimodal data in the current literature can lead to reduced flexibility and accuracy, often requiring cooperation between different modalities. In this paper, we reviewed recent deep multimodal learning techniques to put forward typical frameworks and models to advance the field. These networks show the utility of learning hierarchical representations directly from raw data to achieve maximum performance on many heterogeneous datasets. Thus, it will be possible to design intelligent systems that can quickly answer questions, reason, and discuss what is seen in different views in different scenarios. Classically, there are three general approaches to multimodal data fusion: early fusion, late fusion, and hybrid fusion.

In addition to surveys of recent advances in deep multimodal learning itself, we also discussed the main methods of multimodal fusion and reviewed the latest advanced applications and multimodal datasets popular in the computer vision community.

The remainder of this paper is organized as follows. In Sect. 2, we discuss the differences between similar previous studies and our work. Section 3 reviews recent advances in deep multimodal algorithms, the motivation behind them, and commonly used fusion techniques, with a focus on deep learning-based algorithms. In Sects. 4 and 5, we present more advanced multimodal applications and benchmark datasets that are very popular in the computer vision community. In Sect. 6, we discuss the limitations and challenges of vision-based deep multimodal learning. The final section then summarizes the whole paper and points out a roadmap for future research.

Comparison with previous surveys

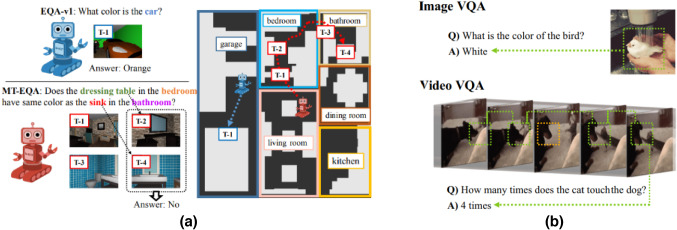

In recent years, the computer vision community has paid more attention to deep learning algorithms due to their exceptional capabilities compared to traditional handcrafted methods. A considerable amount of work has been conducted under the general topic of deep learning in a variety of application domains. In particular, these include several excellent surveys of global deep learning models, techniques, trends, and applications [4, 180, 182], a survey of deep learning algorithms in the computer vision community [179], a survey that focuses directly on the problem of deep object detection and its recent advances [181], and a survey of deep learning models including the generative adversarial network and its related challenges and applications [19]. Nonetheless, the applications discussed in these surveys include only a single modality as a data source for data-driven learning. However, most modern machine learning applications involve more than one modality (e.g., visual and textual modalities), such as embodied question answering, vision-and-language navigation, etc. Therefore, it is of vital importance to learn more complex and cross-modal information from different sources, types, and data distributions. This is where deep multimodal learning comes into play.

From the early works of speech recognition to recent advances in language- and vision-based tasks, deep multimodal learning technologies have demonstrated significant progress in improving cognitive performance and interoperability of prediction models in a variety of ways. To date, deep multimodal learning has been the most important evolution in the field of multimodal machine learning using deep learning paradigm and multimodal big data computing environments. In recent years, many pieces of research based on multimodal machine learning have been proposed [37], but to the best of our knowledge, there is no recent work that directly addresses the latest advances in deep multimodal learning particularly for the computer vision community. A thorough review and synthesis of existing work in this domain, especially for researchers pursuing this topic, is essential for further progress in the field of deep learning. However, there is still relatively little recent work directly addressing this research area [32–37]. Since multimodal learning is not a new topic, there is considerable overlap between this work and the surveys of [32–37], which needs to be highlighted and discussed.

Recently, the valuable works of [32, 33] considered several multimodal practices that apply only to specific multimodal use cases and applications, such as emotion recognition [32], human activity and context recognition [33]. More specifically, they highlighted the impact of multimodal feature representation and multilevel fusion on system performance and the state-of-the-art in each of these application areas.

Furthermore, some cutting-edge works [34, 36] have been proposed in recent years that address the mechanism of integrating and fusing multimodal representations inside deep learning architectures by showing the reader the possibilities this opens up for the artificial intelligence community. Likewise, Guo et al. [35] provided a comprehensive overview of deep multimodal learning frameworks and models, focusing on one of the main challenges of multimodal learning, namely multimodal representation. They summarized the main issues, advantages, and disadvantages for each framework and typical model. Another excellent survey paper was recently published by Baltrušaitis et al. [37], which reviews recent developments in multimodal machine learning and expresses them in a general taxonomic way. Here, the authors identified five levels of multimodal data combination: representation, translation, alignment, fusion, and co-learning. It is important to note here that, unlike our survey, which focused primarily on computer vision tasks, the study published by Baltrušaitis et al. [37] was aimed mainly at both the natural language processing and computer vision communities. In this article, we reviewed recent advances in deep multimodal learning and organized them into six topics: multimodal data representation, multimodal fusion (i.e., both traditional and deep learning-based schemes), multitask learning, multimodal alignment, multimodal transfer learning, and zero-shot learning. Beyond the above work, we focused primarily on cutting-edge applications of deep multimodal learning in the field of computer vision and related popular datasets. Moreover, most of the papers we reviewed are recent and have been published in high-quality conferences and journals such as the visual computer, ICCV, and CVPR. A comprehensive overview of multimodal technologies—their limitations, perspectives, trends, and challenges—is also provided in this article to deepen and improve the understanding of the main directions for future progress in the field. In summary, our survey is similar to the closest works [35, 37], which discuss recent advances in deep multimodal learning with a special focus on computer vision applications. The surveys we discussed are summarized in Table 1.

Table 1.

Summary of reviewed deep multimodal learning surveys

| Refs. | Year | Publication | Scope | Multimodality? |

|---|---|---|---|---|

| [4] | 2015 | Nature | A comprehensive overview of deep learning and related applications | ✗ |

| [19] | 2018 | IEEE Signal Processing Magazine | An overview of generative adversarial networks and related challenges in their theory and application | ✗ |

| [179] | 2016 | Neurocomputing | A review of deep learning algorithms in computer vision for image classification, object detection, image retrieval, semantic segmentation and human pose estimation | ✗ |

| [180] | 2018 | IEEE Access | A survey of deep learning: platforms, applications and trends | ✗ |

| [181] | 2019 | arXiv | A survey of deep learning and its recent advances for object detection | ✗ |

| [182] | 2018 | ACM Comput. Surv. | A survey of deep learning: algorithms, techniques, and applications | ✗ |

| [32] | 2019 | Book | A survey on multimodal emotion detection and recognition | |

| [33] | 2018 | Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies | A survey on multimodal deep learning for activity and context detection | |

| [34] | 2017 | IEEE Signal Processing Magazine | A survey of recent progress and trends in deep multimodal learning | |

| [35] | 2019 | IEEE Access | A comprehensive survey of deep multimodal learning and its frameworks | |

| [36] | 2015 | Proceedings of the IEEE | A comprehensive survey of methods, challenges, and prospects for multimodal data fusion | |

| [37] | 2017 | IEEE Transactions on Pattern Analysis and Machine Intelligence | A survey and taxonomy on multimodal machine learning algorithms |

Deep multimodal learning architectures

In this section, we discuss deep multimodal learning and its main algorithms. To do so, we first briefly review the history of deep learning and then focus on the main motivations behind this research to answer the question of how to reduce heterogeneity biases across different modalities. We then outline the perspective of multimodal representation and what distinguishes it from the unimodal space. We next introduce recent approaches for combining modalities. Next, we highlight the difference between multimodal learning and multitask learning. Finally, we discuss multimodal alignment, multimodal transfer learning, and zero-shot learning in detail in Sects. 3.6, 3.7, and 3.8, respectively.

Brief history of deep learning

Historically, artificial neural networks date back to the 1950s and the efforts of psychologists to gain a better understanding of how the human brain works, including the work of F. Rosenblat [8]. In 1960, F. Rosenblat [8] proposed a perceptron as part of supervised learning algorithms that is used to compute a set of activations, meaning that for a given neuron and input vector, it performs the sum weighted by a set of weights, adds a bias, and applies an activation function. An activation function (e.g., sigmoid, tanH, etc.), also called nonlinearity, uses the derived patterns to perform its nonlinear transformation. As a deep variant of the perceptron, a multilayer perceptron, originally designed by [9] in 1986, is a special class of feed-forward neural networks. Structurally, it is a stack of single-layer perceptrons. In other words, this structure gives the meaning of “deep” that a network can be defined by its depth (i.e., the number of hidden layers). Typically, a multilayer perceptron with one or two hidden layers does not require much data to learn informative features due to the reduced number of parameters to be trained. A multilayer perceptron can be considered as a deep neural network if the number of hidden layers is greater than one, as confirmed by [10, 11]. In this regard, many more advances in the field are likely to follow, such as the convolutional neural networks of LeCun et al. [21] in 1998 and the spectacular deep network results of Krizhevsky et al. [7] in 2012, opening the door to many real-world domains including computer vision.

Motivation

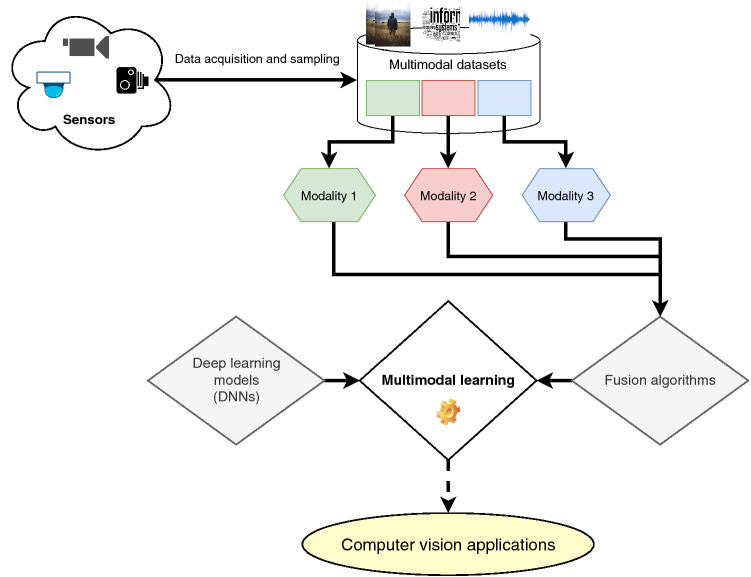

Recently, the amount of visual data has exploded due to the widespread use of available low-cost sensors, leading to superior performance in many computer vision tasks (see Fig. 1). Such visual data can include still images, video sequences, etc., which can be used as the basis for constructing multimodal models. Unlike the static image, the video stream provides a large amount of meaningful information that takes into account the spatiotemporal appearance of successive frames, so it can be easily used and analyzed for various real-world use cases, such as video synthesis and description [68], and facial expression recognition [123]. The spatiotemporal concept refers to the temporal and spatial processing of a series of video sequences with variable duration. In multimodal learning analytics, the audio-visual-textual features are extracted from a video sequence to learn joint features covering the three modalities. Efficient learning of large datasets at multiple levels of representation leads to faster content analysis and recognition of the millions of videos produced daily. The main reason for using multimodal data sources is that it is possible to extract complementary and richer information coming from multiple sensors, which can provide much more optimistic results than a single input. Some monomodal learning systems have significantly increased their robustness and accuracy, but in many use cases, there are shortcomings in terms of the universality of different feature levels and inaccuracies due to noise and missing concepts. The success of deep multimodal learning techniques has been driven by many factors that have led many researchers to adopt these methods to improve model performance. These factors include large volumes of widely usable multimodal datasets, more powerful computers with fast GPUs, and high-quality feature representation at multiple scales. Here, a practical challenge for the deep learning community is to strengthen correlation and redundancy between modalities through typical models and powerful mechanisms.

Fig. 1.

An example of a multimodal pipeline that includes three different modalities

Multimodal representation

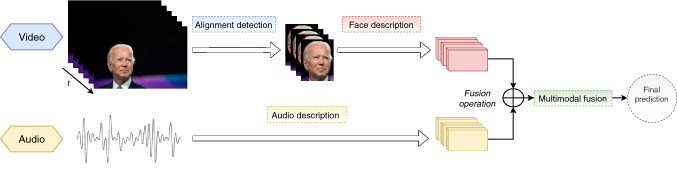

Multi-sensory perception primarily encompasses a wide range of interacting modalities, including audio and video. For simplicity, we consider the following temporal multimodal problem, where both audio and video modalities are exploited in a video recognition task (emotion recognition). First, let us consider two input streams of different modalities: and , where and refer to the n- and m-dimensional feature vectors of the and modalities occurring at time t, respectively. Next, we combine the two modalities at time t and consider the two unimodal output distributions at different levels of representations. Given ground truth labels , we aim here to train a multimodal learning model M that maps both and into the same categorical set of Z. Each parameter of the input audio stream and video stream is synchronized differently in time and space, where and , respectively. Here, we can construct two separate unimodal networks from and , denoted, respectively, by and , where , , and . Y denotes the predicted class label of the training samples generated by the output of the constructed networks and indicates the fusion operation. The generated multimodal network M can then recognize the most discriminating patterns in the streaming data by learning a common representation that integrates relevant concepts from both modalities. Figure 2 shows a schematic diagram of the application of the described multimodal problem to the video emotion recognition task.

Fig. 2.

A schematic illustration of the method used: The visual modality (video) involves the extraction of facial regions of interest followed by a visual mapping representation scheme. The obtained representations are then temporally fused into a common space. Additionally, the audio descriptions are also generated. The two modalities are then combined using a multimodal fusion operation to predict the target class label (emotion) of the test sample

Therefore, it is necessary to consider the extent to which any such dynamic entity will be able to take advantage of this type of information from several redundant sources. Learning multimodal representation from heterogeneous signals poses a real challenge for the deep learning community. Typically, inter- and intra-modal learning involves the ability to represent an object of interest from different perspectives, in a complementary and semantic context where multimodal information is fed into the network. Another crucial advantage of inter- and intra-modal interaction is the discriminating power of the perceptual model for multisensory stimuli by exploiting the potential synergies between modalities and their intrinsic representations [112]. Furthermore, multimodal learning involves a significant improvement in perceptual cognition, as many of our senses are involved in the process of treatment information from several modalities. Nevertheless, it is essential to learn how to interpret the input signals and summarize their multimodal nature to construct aggregate feature maps across multiple dimensions. In the multimodality theory, obtaining contextual representation from more than one modality has become a vital challenge, which has been termed in this study as the multimodal representation.

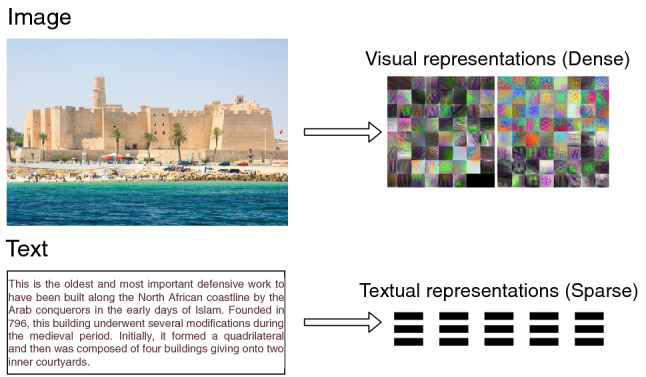

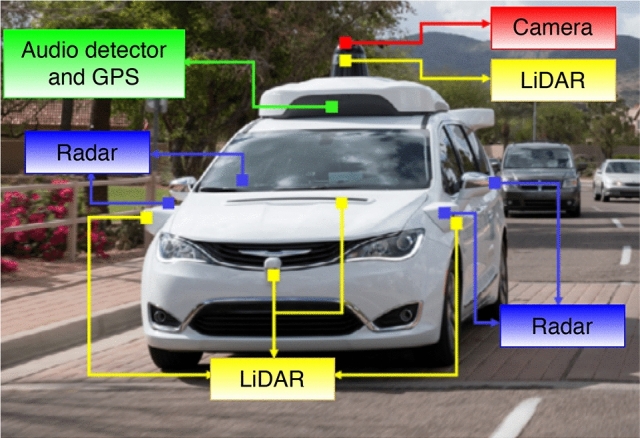

Typically, monomodal representation involves a linear or nonlinear mapping of an individual input stream (e.g., image, video, or sound, etc.) into a high-level semantic representation. The multimodal representation leverages the correlation power of each monomodal sensation by aggregating their spatial outputs. Thus, the deep learning model must be adapted to accurately represent the structure and representation space of the source and target modality. For example, a 2D image may be represented by its visual patterns, making it difficult to characterize this data structure using natural modality or other non-visual concepts. As shown in Fig. 3, the textual representation (i.e., a word embedding) is very sparse when compared to the image one, which makes it very challenging to combine these two different representations into a unified model. As another example, when the driver of a car is driving autonomously, he probably has a LiDAR camera and other embedded sensors (e.g., depth sensors, etc) [81] to perceive his surroundings. Here, poor weather conditions can affect the visual perception of the environment. Moreover, the high dimensionality of the state space poses a major challenge, since the vehicle can mobilize in both structured and unstructured locations. However, an RGB image is encoded as a discrete space in the form of grid pixels, making it difficult to combine visual and non-visual cues. Therefore, learning a joint embedding is crucial for exploiting the synergies of multimodal data to construct shared representation spaces. This implies the emphasis on multimodal fusion approaches, which will be discussed in the next subsection.

Fig. 3.

Difference between visual and textual representation

Fusion algorithms

The most critical aspect of the combinatorial approach is the flexibility to represent data at different levels of abstraction. By using an intermediate formalism, the learned information can be combined into two or more modalities for a particular hypothesis. In this subsection, we describe common methods for combining multiple modalities, ranging from the conventional to the modern methods.

Conventional methods

3.4.1.1 Typical techniques based

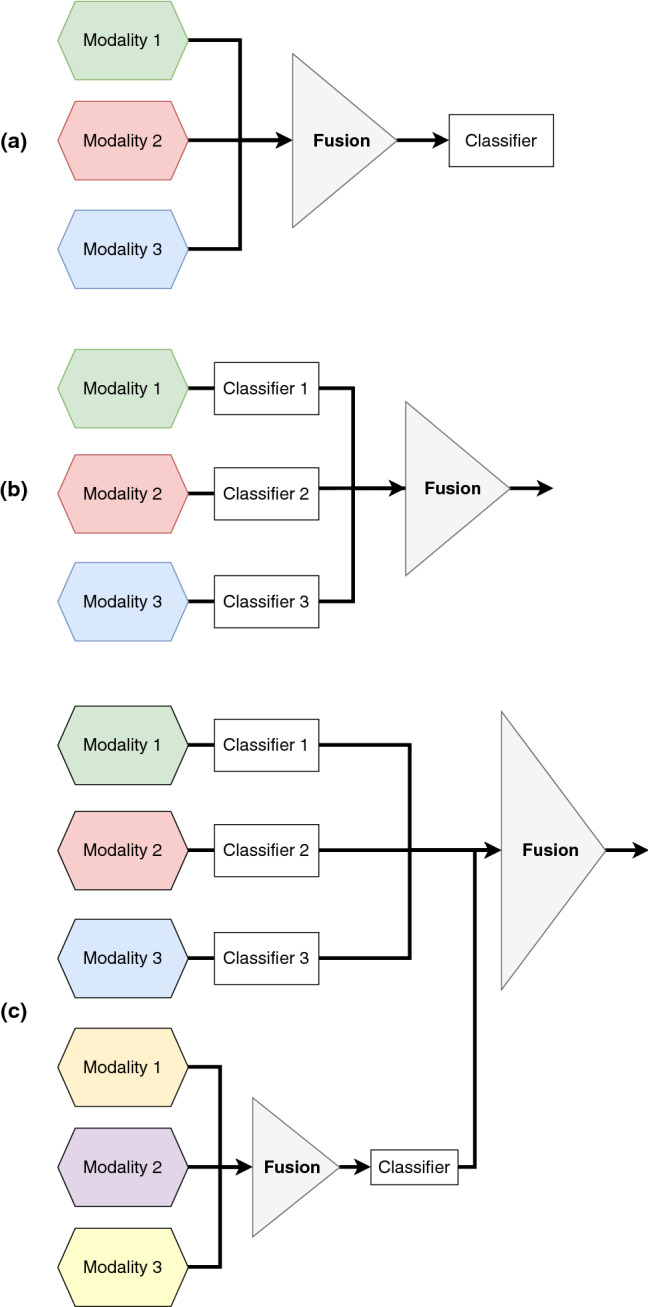

To improve the generalization performance of complex cognitive systems, it is necessary to capture and fuse an appropriate set of informative features from multiple modalities using typical techniques. Traditionally, they range from early to hybrid fusion schemes (see Fig. 4):

Early fusion: low-level features that are directly extracted from each modality will be fused before being classified.

Late fusion: also called “decision fusion”, which consists of classifying features extracted from separate modalities before fusing them.

Hybrid fusion: also known as “intermediate fusion”, which consists of combining multimodal features of early and late fusion before making a decision.

Feature-level fusion (i.e., early fusion) provides a richness of information from heterogeneous data. The extracted features often lack homogeneity due to the diversity of modalities and disparities in their appearance. Also, this fusion process can generate a single large representation that can lead to prediction errors. In the case of a late fusion, such techniques as majority vote [38] and low-rank multimodal fusion [39] may be used to aggregate the final prediction scores of several classifiers. Thus, each modality independently takes the decision, which can reduce the overall performance of the integration process. In the case of intermediate fusion, the spatial combination of intermediate representations of the different data streams usually produced with varying scales and dimensions, making them more challenging to merge. To overcome this challenge, the authors of [124] designed a simple fusion scheme, called multimodal transfer module (MMTM), to transfer and hierarchically aggregate shared knowledge from multiple modalities in CNN networks.

Fig. 4.

Conventional methods for multimodal data fusion: a Early fusion, b Late fusion, c Hybrid fusion

3.4.1.2 Kernel based

Since a long time ago, the support vector machine [40] classifier has been introduced as a learning algorithm for a wide range of classification tasks. Indeed, SVM is one of the most popular linear classifiers that are based on learning a single kernel function through the handling of linear tasks, such as discrimination and regression problems. The main idea of an SVM is to separate the feature space into two classes of data with a hard margin. Kernel-based methods are among the most commonly used techniques for performing fusion due to their proven robustness and reliability. For more details, we invite the reader to consult the work of Gönen et al. [41] that focused on the taxonomy of multi-kernel learning algorithms. These kernels are intended to make use of the similarities and discrepancies across training samples as well as a wide variety of data sources. In other words, these modular learning methods are used for multimodal data analysis. Recently, a growing number of studies have focused, in particular, on the potential of these kernels for multi-source-based learning for improving performance. In this sense, a wide range of kernel-based methods have been proposed to summarize information from multiple sources using a variety of input data. In this regard, Gönen et al. [41] pioneered multiple kernel learning (MKL) algorithms that seek to combine multimodal data that have distinct representations of similarity. MKL is the process of learning a classifier through multiple kernels and data sources. Also, it aims to extract the joint correlation of several kernels in a linear or nonlinear manner. Similarly, Aiolli et al. [42] proposed the MKL-based algorithm, called EasyMKL, which combines a series of kernels to maximize the segregation of representations and extract the strong correlation between feature spaces to improve the performance of the classification task. An alternative model, called convolutional recurrent multiple kernel learning (CRMKL), based on the MKL framework for emotion recognition and sentiment analysis is reported by Wen et al. [43]. In [43], the MKL algorithm is used to combine multiple features that are extracted from deep networks.

3.4.1.3 Graphical models based

One of the most common probabilistic graphical models (PGMs) includes the hidden Markov model (HMM) [44]. It is an unsupervised and generative model. It has a series of potential states and transition probabilities. In the Markov chain, the transition from one state to another leads to the generation of observed sequences in which the observations are part of a state set. A transition formalizes how it is possible to move from one state to another and for each one there is a probability distribution of being borrowed. The states are hidden, but the first state generates a visible state from a given one. The main property of Markov chains is that the probabilities depend only on the previous state of the model. In HMM, a kind of generalization of mixing densities defined by each state is involved, as confirmed by Ghahramani et al. [45]. Specifically, Ghahramani et al. [45] introduced the factorial HMM (FHMM) which consists of combining the state transition matrix of HMMs with the distributed representations of vector quantizer (VQ) [46]. According to [46], VQ is a conventional technique for quantifying and generalizing dynamic mixing models. FHMM addresses the limited representational power of the latent variables of HMM by presenting the hidden state under a certain weighted appearance. Likewise, Gael et al. [47] proposed the non-parametric FHMM, called iFHMM, by introducing a new stochastic process for latent feature representation of time series.

In summary, the PGM model can be considered a robust tool for generating missing channels by learning the most representative inter-modal features in an unsupervised manner. One of the drawbacks of the graphical model is the high cost of the training and inference process.

3.4.1.4 Canonical correlation analysis based

In general, a fusion scheme can construct a single multimodal feature representation for each processing stage. However, it is also straightforward to place constraints on the extracted unimodal features [37]. Canonical correlation analysis (CCA) [201] is a very popular statistical method that attempts to maximize the semantic relationship between two unimodal representations so that complex nonlinear transformations of the two data perspectives can be effectively learned. Formally, it can be formulated as follows:

| 1 |

where X1 and X2 stand for unimodal representations, v1 and v2 for two vectors of a given length, and corr for the correlation function. A deep variant of CCA can also be used to maximize the correlation between unimodal representations, as suggested by the authors of [202]. Similarly, Chandar et al. [203] proposed a correlation neural network, called CorrNet, which is based on a constrained encoder/decoder structure to maximize the correlation of internal representations when projected onto a common subspace. Engilberge et al. [204] introduced a weaker constraint on the joint embedding space using a cosine similarity measure. Besides, Shahroudy et al. [205] constructed a unimodal representation using a hierarchical factorization scheme that is limited to representing redundant feature parts and other completely orthogonal parts.

Deep learning methods

3.4.2.1 Deep belief networks based

Deep belief network (DBN) is part of the graphical generative deep model [15]. They form a deeper variant of the restricted Boltzmann machine (RBM) by combining it together. In other words, a DBN consists of stacking a series of RBM where the hidden layer of the first RBM is the visible layer of the higher hierarchies. Structurally, a DBN model has a dense structure similar to that of a shallow multilayer perceptron. The first RBM is designed to systematically reconstruct its input signal in which its hidden layer will be handled as the visible layer for the second one. However, all hidden representations are learned globally at each level of DBN. Note that DBN is one of the strongest alternatives to overcome the vanishing gradient problem through a stack of RBM units. Like a single RBM, DBN involves discovering latent features in the raw data. It can be further trained in a supervised fashion to perform the classification of the detected hidden representations.

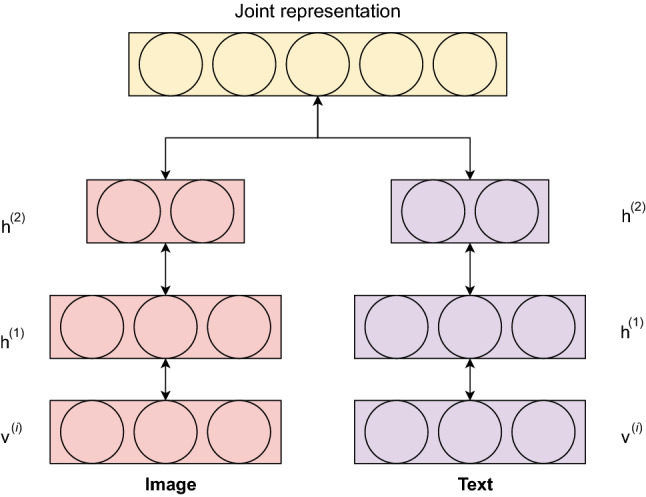

Compared to other supervised deep models, DBN requires only a very small set of labeled data to perform weight training, which leads to a high level of usefulness in many multimodal tasks. For instance, Srivastava et al. [206] proposed a multimodal generative model based on the concept of deep Boltzmann machine (DBM) which learns a set of multimodal features by filling in the conditional distribution of data on a space of multimodal inputs such as image, text, and audio. Specifically, the purpose of training a multimodal DBN model is to improve the prediction accuracy of both unimodal and multimodal systems by generating a set of multimodal features that are semantically similar to the original input data so that they can be easily derived even if some modalities are missing. Figure 5 illustrates a multimodal DBN architecture that takes as input two different modalities (image and text) with different statistical distributions to map the original data from a high-dimensional space to a high-level abstract representation space. After extracting the high-level representation from each modality, an RBM network is then used to learn the joint distribution. The image and text modalities are modeled using two DBMs, each consisting of two hidden layers. Formally, the joint representation can be expressed as follows:

| 2 |

where refers to the input visual and textual modalities, to the network parameters, and to the hidden layer of each modality.

Fig. 5.

Structure of a bimodal DBN

In a multimodal context, the advantage of using multimodal DBN models lies in their sensitivity and stability in both supervised, semi-supervised and unsupervised learning protocols. These models allow for better modeling of very complex and discriminating patterns from multiple input modalities. Despite these advantages, these models have a few limitations. For instance, they largely ignore the spatiotemporal cues of multimodal data streams, making the inference process computationally intensive.

3.4.2.2 Deep autoencoders based

Deep autoencoders (DAEs) [207] are a class of unsupervised neural networks that are designed to learn a compressed representation of input signals. Conceptually, they consist of two coupled modules: the encoding module (encoder) and the decoding module (decoder). On the one hand, the encoding module consists of several processing layers to map high-dimensional input data into a low-dimensional space (i.e., latent space vectors). On the other hand, the decoding module takes these latent representations as input and decodes them in order to reconstruct the input data. These models have recently drawn attention from the multimodal learning community due to their great potential for reducing data dimensionality and, thus, increasing the performance of training algorithms. For instance, Bhatt et al. [208] proposed a DAE-based multimodal data reconstruction scheme that uses knowledge from different modalities to obtain robust unimodal representations and projects them onto a common subspace. Similar to the work of Bhatt, Liu et al. [209] proposed the integration of multimodal stacked contractive AEs (SCAEs) to learn cross-modality features across multiple modalities even when one of them is missing, intending to minimize the reconstruction loss function and avoid the overfitting problem. The loss function can be formulated as follows:

| 3 |

Here, (, ) denotes a pair of two inputs, and (, ) represent their reconstructed outputs.

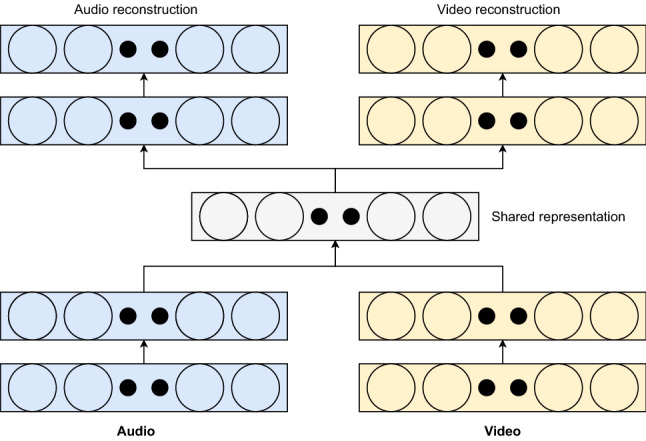

Several other typical models based on stacked AEs (SAEs) have been proposed to learn coherent joint representations across modalities. For example, the authors of [210–212] designed multimodal systems based on SAEs, where the encoder side of the architecture represents and compresses each unimodal feature separately, and the decoder side constructs the latent (shared) representation of the inputs in a unsupervised manner. Figure 6 shows the coupling mechanism of two separate AEs (bimodal AE) for both modalities (audio and video) into a jointly shared representation hierarchy where the encoder and decoder components are independent of each other. As a powerful tool for feature extraction and dimensionality reduction, the DAE aims to learn how to efficiently represent manifolds where the training data is unbalanced or lacking. One of the main drawbacks of DAEs is that many hidden parameters have to be trained, and the inference process is time-consuming. Moreover, they also miss some spatiotemporal details in multimodal data.

Fig. 6.

Structure of a bimodal AE

3.4.2.3 Convolutional neural networks based

Convolutional neural networks (CNNs or ConvNets) are a class of deep feed-forward neural networks whose main purpose is to extract spatial patterns from visual input signals [20, 22]. More specifically, such models tend to model a series of nonlinear transformations by generating very abstract and informative features from highly complex datasets. The main properties that distinguish CNNs from other models include their ability to capture local connectivity between units, to share weights across layers, and to block a sequence of hidden layers [4]. The architecture is based on hierarchical filtering operations, i.e., using convolution layers followed by activation functions, etc. Once the convolution layers are linearly stacked, the growth of the receptive field size (i.e., kernel size) of the neural layers can be simulated by a max-pooling operation, which implies a reduction in the spatial size of the feature map. After applying a series of convolution and pooling operations, the hidden representation learned from the model must be predicted. For this purpose, at least one fully connected layer (also called dense layer) is used that concatenates all previous activation maps.

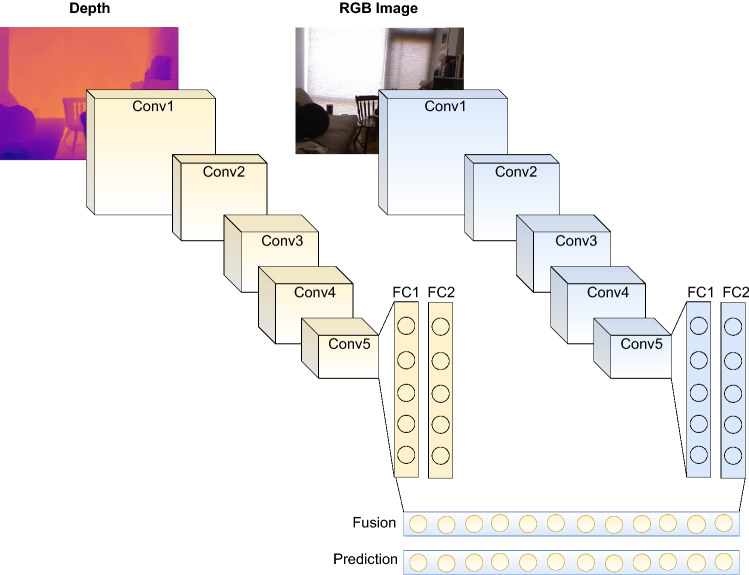

Since its introduction by Krizhevsky et al. [7] in 2012, the CNN model has been successfully applied to a wide range of multimodal applications, such as image dehazing [239, 240] and human activity recognition [241]. An adaptive multimodal mapping between two visual modalities (e.g., images and sentences) typically requires strong representations of the individual modalities [213]. In particular, CNNs have demonstrated powerful generalization capabilities to learn how to represent visual appearance features from static data. Recently, with the advent of robust and low-cost RGB-D sensors such as the Kinect, the computer vision community has turned its attention to integrating RGB images and corresponding depth maps (2.5D) into multimodal architectures as shown in Fig. 7. For instance, Couprie et al. [214] proposed a bimodal CNN architecture for multiscale feature extraction from RGB-D datasets, which are taken as four-channel frames (blue, green, red, and depth). Similarly, Madhuranga et al. [215] used CNN models for video recognition purposes by extracting silhouettes from depth sequences and then fusing the depth information with audio descriptions for activity of daily living (ADL) recognition. Zhang et al. [217] proposed to use multicolumn CNNs to extract visual features from the face and eye images for the gaze point estimation problem. Here, the regression depth of the facial landmarks is estimated from the facial images and the relative depth of facial keypoints is predicted by global optimization. To perform image classification directly, the authors of [217, 218] suggested the possibility of using multi-stream CNNs (i.e., two or more stream CNNs) to extract robust features from a final hidden layer and then project them onto a common representation space. However, the most commonly adopted approaches involve concatenating a set of pre-trained features derived from the huge ImageNet dataset to generate a multimodal representation [216].

Fig. 7.

Structure of a bimodal CNN

Formally, let be the feature map of j modalities and i be the current spatial location, where . As shown in Fig. 7, in our case , since the feature maps FC2 (RGB) and FC2 (D) were taken separately from the RGB and depth paths. The fused feature map , which is a weighted sum of the unimodal representations, can be calculated as follows:

| 4 |

Here, denotes the weight vectors that can be computed as follows:

| 5 |

In summary, a multimodal CNN serves as a powerful feature extractor that learns local cross-modal features from visual modalities. It is also capable of modeling spatial cues from multimodal data streams with an increased number of parameters. However, it requires a large-scale multimodal dataset to converge optimally during training, and the inference process is time-consuming.

3.4.2.4 Recurrent neural networks based

Recurrent neural networks (RNNs) [12] are a popular type of deep neural network architectures for processing sequential data of varying lengths. They learn to map input activations to the next hierarchy level and then transfer hidden states to the outputs using the recurrent feedback, which gives them the capacity to learn useful features from the previous states, unlike other deep feedforward networks such as CNNs, DBNs, etc. It also can handle time series and dynamic media such as text and video sequences. By using the backpropagation algorithm, the RNN function takes an input vector and a previous hidden state as input to capture the temporal dependence between objects. After training, the RNN function is fixed at a certain level of stability and can then be used over time.

However, the vanilla RNN model is typically incapable of capturing long-term dependencies in sequential data since they have no internal memory. To this end, several popular variants have been developed to efficiently handle this constraint and the gradient vanishing problem with impressive results, including long short-term memory (LSTM) [13] and gated recurrent linear units (GRU) [14]. In terms of computational efficiency, GRU is a lightweight variant of LSTM since it can modulate the information flow without using its internal memory units.

In addition to their use for unimodal tasks, RNNs have proved useful in many multimodal problems that require modeling long- and short-range dependencies across the input sequence, such as semantic segmentation [219] and image captioning [220]. For instance, Abdulnabi et al. [219] proposed a multimodal RNN architecture designed for semantic scene segmentation using RGB and depth channels. They integrated two parallel RNNs to efficiently extract robust cross-modal features from each modality. Zhao et al. [220] proposed an RNN-based multimodal fusion scheme to generate captions by analyzing distributional correlations between images and sentences. Recently, several new multimodal approaches based on RNN variants have been proposed and have achieved outstanding results in many vision applications. For example, Li et al. [221] designed a GRU-based embedding framework to describe the content of an image. They used GRU to generate a description of variable length from a given image. Similarly, Sano et al. [222] proposed a multimodal BiLSTM for ambulatory sleep detection. In this case, BiLSTM was used to extract features from the wearable device and synthesize temporal information.

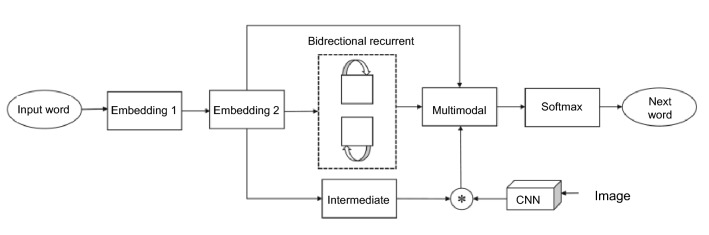

Figure 8 illustrates a multimodal m-RNN architecture that incorporates both word embeddings and visual features using a bidirectional recurrent mechanism and a pre-trained CNN. As can be seen, m-RNN consists of three components: a language network component, a vision network component, and a multimodal layer component. The multimodal layer here maps semantic information across sub-networks by temporally learning word embeddings and visual features. Formally, it can be expressed as follows:

| 6 |

where f(.) denotes the activation function, w and r consist of the word embedding feature and the hidden states in both directions of the recurrent layer and I represent the visual features.

Fig. 8.

A schematic illustration of bidirectional multimodal RNN (m-RNN) [223]

In summary, the multimodal RNN model is a robust tool for analyzing both short- and long-term dependencies of multimodal data sequences using the backpropagation algorithm. However, the model has a slow convergence rate due to the high computational cost in the hidden state transfer function.

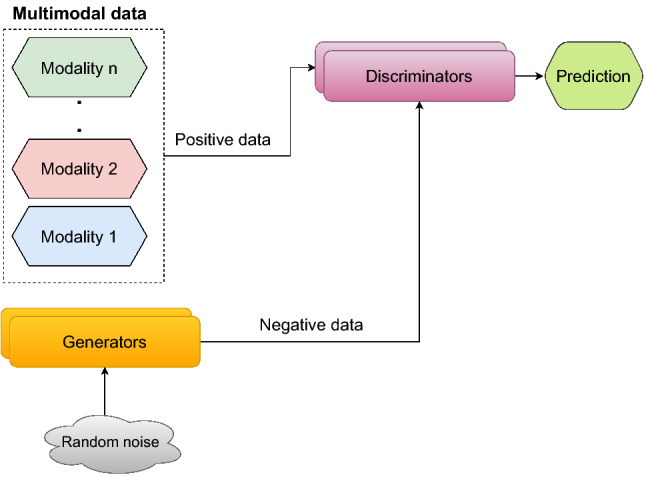

3.4.2.5 Generative adversarial networks based

Generative adversarial networks (GANs) are part of deep generative architectures, designed to learn the data distribution through the adversarial learning. Historically, they were first developed by Goodfellow et al. [16], which demonstrated the ability to generate realistic and reasonably impractical representations from noisy data domains. Structurally, GAN is a unified network consisting of two sub-networks, a generator network (G) and a discriminator network (D), which interact continuously during the learning process. The principle of its operation is as follows: The generator network takes as input the latent distribution space (i.e., a random noise (z)) and generates an artificial sample. The discriminator takes the true sample and those generated by the generator and tries to predict whether the input sample is true (false) or not. Hence, it is a binary classification problem, where the output must be between 0 (generated) and 1 (true). In other words, the generator’s main task is to generate a realistic image, while the discriminator’s task is to determine whether the generated image is true or false. Subsequently, they should use an objective function to represent the distance between the distribution of generated samples () and the distribution of real ones (). The adversarial training strategy consists of using a minimax objective function V(G, D), which can be expressed as follows:

| 7 |

Since their development in 2014, generative adversarial training algorithms have been widely used in various unimodal applications such as scene generation [17], image-to-image translation [18], and image super-resolution [224, 225]. To obtain the latest advances in super-resolution algorithms for a variety of remote sensing applications, we invite the reader to refer to the excellent survey article by Rohith et al. [226].

In addition to its use in unimodal applications, the generative adversarial learning paradigm has recently been widely adopted in multimodal arenas, where two or more modalities are involved, such as image captioning [227] and image retrieval [228]. In recent years, GAN-based schemes have been receiving a lot of attention and interest in the field of multimodal vision. For example, Xu et al. [229] proposed a fine-grained text-image generation framework using an attentional GAN model to create high-quality images from text. Similarly, Huang et al. [230] proposed an unsupervised image-to-image translation architecture that is based on the idea that the image style of one domain can be mapped into the styles of many domains. In [231], Toriya et al. addressed the task of image alignment between a pair of multimodal images by mapping the appearance features of the first modality to the other using GAN models. Here, GANs were used as a means to apply keypoint-mapping techniques to multimodal images. Figure 9 shows a simplified diagram of a multimodal GAN.

Fig. 9.

A schematic illustration of multimodal GAN

In summary, unsupervised GAN is one of the most powerful generative models that can address scenarios where training data is lacking or some hidden concepts are missing. However, it is extremely tricky to train the network when generating discrete distributions, and the process itself is unstable compared to other generative networks. Moreover, the function that this network seeks to optimize is an adversarial loss function without any normalization.

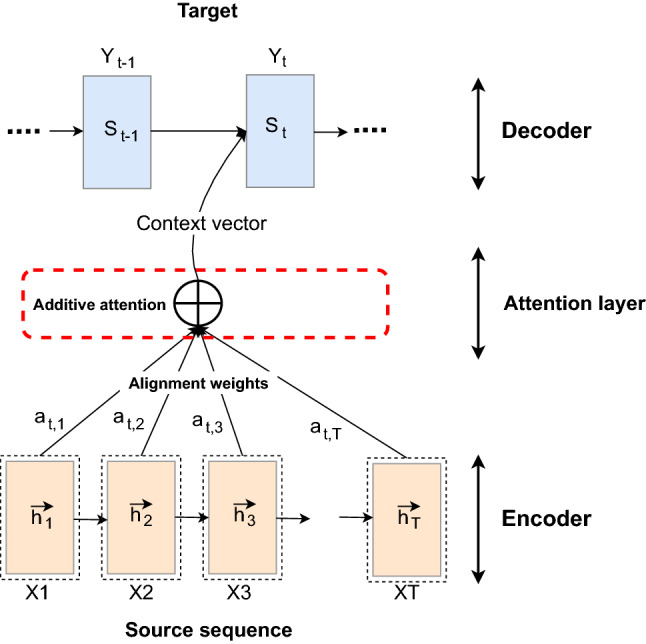

3.4.2.6 Attention mechanism based

In recent years, the attention mechanism (AM) has become one of the most challenging tasks in computer vision and machine translation [232]. The idea of AM is to focus on a particular position in the input image by computing the weighted sum of feature vectors and mapping them into a final contextual representation. In other words, it learns how to reduce some irrelevant attributes from a set of feature vectors. In the multimodal analysis, an attentional model can be designed to combine multiple modalities, each with its internal representation (e.g., spatial features, motion features, etc.). That is, when a set of features is derived from spatiotemporal cues, these variable-length vectors are semantically combined into a single fixed-length vector. Furthermore, an AM can be integrated into RNN models to improve the generalization capability of the former by capturing the most representative and discriminating patterns from heterogeneous datasets. A formalism for integrating an AM into the basic RNN model was developed by Bahdanau et al. [1]. Since the encoding side of an RNN generates a fixed-length feature vector from its input sequence, this can lead to very tedious and time-consuming parameter tuning. Therefore, the AM acts as a contextual bridge between the encoding and decoding sides of an RNN to pay attention only to a particular position in the input representation.

Consider as an example of neural machine translation [1] (see Fig. 10), where an encoder is trained to map a sequence of input vectors ) into a fixed-length vector c and a decoder to predict the next word () from previous predicted ones . Here, c refers to an encoded vector produced by a sequence of hidden states that can be expressed as follows:

| 8 |

where q denotes some activation functions. The hidden state () at time step t can be formulated as:

| 9 |

The context vector can then be computed as a weighted sum of a sequence of annotations as follows:

| 10 |

where the alignment weight of each annotation can be calculated as:

| 11 |

and . is the hidden state at the -th position of the input sequence.

Fig. 10.

A schematic illustration of the attention-based machine translation model

Since its introduction, the AM has gained wide adoption in the computer vision community due to its spectral capabilities for many multimodal applications such as video description [233, 234], salient object detection [235], etc. For example, Hori et al. [233] proposed a multimodal attention framework for video captioning and sentence generation based on the encoder–decoder structure using RNNs. In particular, the multimodal attention model was used as a way to integrate audio, image, and motion features by selecting the most relevant context vector from each modality. In [236], Yang et al. suggested the use of stacked attention networks to search for image regions that correlate with a query answer and identify representative features of a given question more precisely. More recently, Guo et al. [237] introduced a normalized variant of the self-attention mechanism, called normalised self-attention (NSA), which aims to encode and decode the image and caption features and normalize the distribution of internal activations during training.

In summary, the multimodal AM provides a robust solution for cross-modal data fusion by selecting the local fine-grained salient features in a multidimensional space and filtering out any hidden noise. However, the only weakness of AM is that the training algorithm is unstable, which may affect the predictive power of the decision-making system. Furthermore, the number of parameters to be trained is huge compared to other deep networks such as RNNs, CNNs, etc.

Multitask learning

More recently, multitask learning (MTL) [108, 109] has become an increasingly popular topic in the deep learning community. Specifically, the MTL paradigm frequently arises in a context close to multimodal concepts. In contrast to single-task learning, the idea behind this paradigm is to learn a shared representation that can be used to respond to several tasks in order to ensure better generalizability. Although, there are some similarities between the fusion methods discussed in Sect. 3.4 and the methods used to perform multi-tasks simultaneously. What they have in common is that the sharing of the structure between all tasks can be learned jointly to improve performance. The conventional typology of the MTL approach consists of two subtasks:

Hard parameter sharing [110]: It consists of extracting a generic representation for different tasks using the same parameters. It is usually applied to avoid overfitting problems.

Soft parameter sharing [111]: It consists of extracting a set of feature vectors and simultaneously drawing similarity relationships between them.

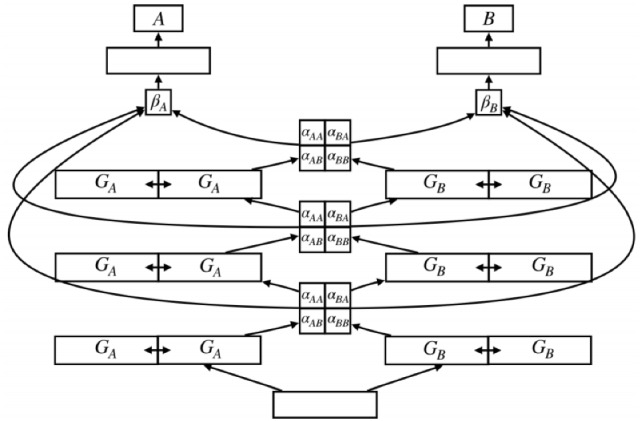

Figure 11 shows a meta-architecture for the two-task case. As can be seen, there are six intermediate layers in total, one shared input layer (bottom), two task-specific output layers (top), and three hidden layers per task, each divided into two G-subspaces. Typically, MTL contributes to the performance of the target task based on knowledge gained from auxiliary tasks.

Fig. 11.

A meta-architecture in the case of two tasks A and B [109]

Multimodal alignment

Multimodal alignment consists of linearly linking the features of two or more different modalities. Its areas of application include medical image registration [169], machine translation [1], etc. Specifically, multimodal image alignment provides a spatial mapping capability between images taken by sensors of different modalities, which may be categorized into feature-based [167, 168] and patch-based [165, 166] methods. Feature-based methods detect and extract a set of matching features that should be structurally consistent to describe their spatial patterns. Patch-based methods first split each image into local patches and then consider the similarity between them by computing their cross-correlation and combination. Generally, the alignment task can be divided into two subtasks: the attentional alignment task [170, 171] and the semantic alignment task [172, 173]. The attentional alignment task is based on the attentional mapping between the features of the input modality and the target one, while the semantic alignment task takes the form of an alignment method that directly provides alignment capabilities to a predictive model. The most popular use of semantic alignment is to create a dataset with associated labels and then generate a semantically aligned dataset. Both of these tasks have proven effective in multimodal alignment, where attentional alignment features are better able to take into account the long-term dependencies between different concepts.

Multimodal transfer learning

Typically, training a deep model from scratch requires a large amount of labeled data to achieve an acceptable level of performance. A more common solution is to find an efficient method that transfers knowledge already derived from another trained model onto a huge dataset (e.g., 1000k-ImageNet) [198]. Transfer learning (TL) [70] is one of the model regularization techniques that have proven their effectiveness for training deep models with a limited amount of available data and avoiding overfitting problems. Transferring knowledge from a pre-trained model associated with a sensory modality to a new task or similar domain facilitates the learning and fine-tuning of a target model using a target dataset.

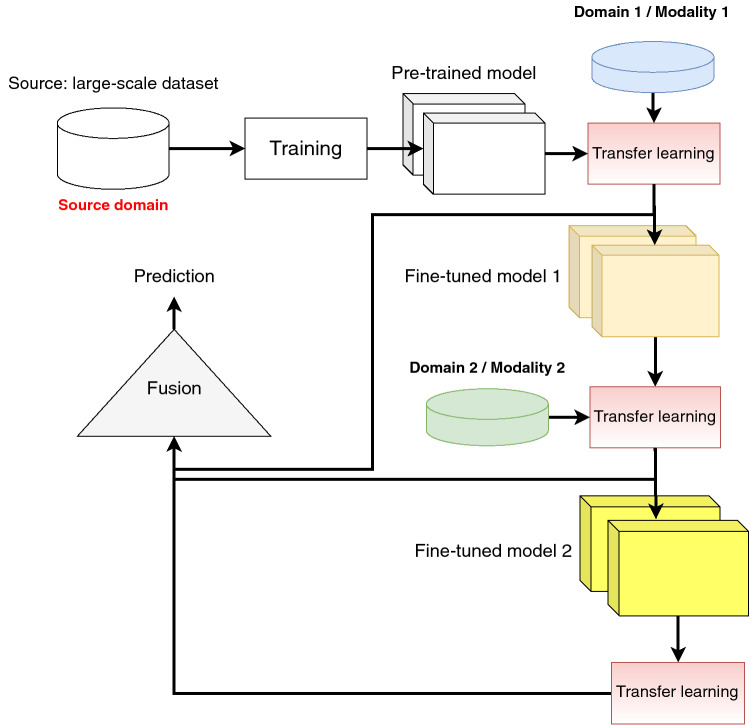

The technique can accelerate the entire learning process by reducing inference time and computational complexity. Moreover, the learning process can learn the data distribution in a non-parallel manner and ensure its synchronization over time. It can also learn rich and informative representations by using cooperative interactions among modalities. Moreover, it can improve the quality of the information transferred by eliminating any latent noise and conflict [113, 115–117]. For example, Palaskar et al. [113] proposed a multimodal integration pipeline that loads the parameters of a pre-trained model on the source dataset (transcript and video) to initialize the training of the target dataset (summary, question, and video). They used hierarchical attention [114] as a merging mechanism that can be used to generate a synthesis vector from multimodal sources. An example of a multimodal transfer learning pipeline based on the fine-tuning mechanism is shown in Fig. 12. It can be seen that a deep model is first pre-trained on a source domain, the learned parameters are then shifted to different modalities (i.e., fine-tuned models) and finally blended into the target domain using fusion techniques.

Fig. 12.

An illustration of an example of a multimodal transfer learning process

Zero-shot learning

In practice, the amount of labeled data samples for effective model training is often insufficient to recognize all possible object categories in an image (i.e., seen and unseen classes). This is why zero-shot learning [130] takes place. This supervised learning approach opens up many valuable applications such as object detection [131], object classification and retrieval of videos [141], especially when appropriate datasets are missing. In other words, it addresses multi-class learning problems when some classes do not have sufficient training data. However, during the learning process, additional visual and semantic features such as word embeddings [132], visual attributes [133], or descriptions [134] can be assigned to both seen and unseen classes. In the context of multimodality, a multimodal mapping scheme typically combines visual and semantic attributes using only data related to the seen classes. The objective is to project a set of synthesized features in order to make the model more generalizable toward the recognition of the unseen class in test samples [135]. Such methods tend to use GAN models to synthesize and reconstruct the visual features of the unseen classes, resulting in high accuracy classification and ensuring a balance between seen and unseen class labels [136, 137].

Tasks and applications

When modeling multimodal data, several compromises have to be made between system performance, computational burden, and processing speed. Also, many other factors must be regarded when selecting a deep model due to its sensitivity and complexity. In general, multimodality has been employed in many vision tasks and applications, such as face recognition and image retrieval. Table 2 summarizes the reviewed multimodal applications, their technical details, and the best results obtained according to evaluation metrics such as accuracy (ACC) and precision (PREC). In the following, we first describe the core tasks of computer vision, followed by a comprehensive discussion of each application and its intent.

Table 2.

Summary of the multimodal applications reviewed, their related technical details, and best results achieved

| References | Year | Application | Sensing modality/data sources | Fusion scheme | Dataset/best results |

|---|---|---|---|---|---|

| [73] | 2018 | Person recognition | Face and body information | Late fusion (Score-level fusion) | DFB-DB1 (EER = 1.52%) |

| ChokePoint (EER = 0.58%) | |||||

| [51] | 2015 | Face recognition | Holistic face + Rendered frontal pose data | Late fusion | LFW (ACC = 98.43%) |

| CASIA-WebFace (ACC = 99.02%) | |||||

| [93] | 2020 | Face recognition | Biometric traits (face and iris) | Feature concatenation | CASIA-ORL (ACC = 99.16%) |

| CASIA-FERET (ACC = 99.33%) | |||||

| [100] | 2016 | Image retrieval | Visual + Textual | Joint embeddings | Flickr30K (mAP = 47.72%; R@10 = 79.9%) |

| MSCOCO (R@10 = 86.9%) | |||||

| [101] | 2016 | Image retrieval | Photos + Sketches | Joint embeddings | Fine-grained SBIR Database (R@5 = 19.8%) |

| [102] | 2015 | Image retrieval | Cross-view image pairs | Alignment | A dataset of 78k pairs of Google street-view images (AP = 41.9%) |

| [103] | 2019 | Image retrieval | Visual + Textual | Feature concatenation | Fashion-200k (R@50 = 63.8%) |

| MIT-State (R@10 = 43.1%) | |||||

| CS (R@1 = 73.7%) | |||||

| [97] | 2015 | Gesture recognition | RGB + D | Recurrent fusion, Late fusion, and Early fusion | SKIG (ACC = 97.8%) |

| [98] | 2017 | Gesture recognition | RGB + D | A canonical correlation scheme | Chalearn LAP IsoGD (ACC = 67.71%) |

| [200] | 2019 | Gesture recognition | RGB + D + Opt. flow | A spatio-temporal semantic alignment loss (SSA) | VIVA hand gestures (ACC = 86.08%) |

| EgoGesture (ACC = 93.87%) | |||||

| NVGestures (ACC = 86.93%) | |||||

| [52] | 2019 | Image captioning | Visual + Textual | RNN + Attention mechanism | GoodNews (Bleu-1 = 8.92%) |

| [53] | 2019 | Image captioning | Visual + Textual + Acoustic | Alignment | MSCOCO (R@10 = 91.6%) |

| Flickr30K (R@10 = 79.0%) | |||||

| [128] | 2019 | Image captioning | Visual + Textual | Alignment | MSCOCO (BLUE-1 = 61.7%) |

| [174] | 2019 | Image captioning | Visual + Textual | Gated fusion network | MSR-VTT (BLUE-1 = 81.2%) |

| MSVD (BLUE-4 = 53.9%) | |||||

| [175] | 2019 | Image captioning | Visual + Acoustic | GRU Encoder-Decoder | Proposed dataset (BLUE-1 = 36.9%) |

| [176] | 2020 | Image captioning | Visual + Textual (Spatio-temporal data) | Object-aware knowledge distillation mechanism | MSR-VTT (BLUE-4 = 40.5%) |

| MSVD (BLUE-4 = 52.2%) | |||||

| [87] | 2018 | Vision-and-language navigation | Visual + Textual (instructions) | Attention mechanism + LSTM | R2R (SPL = 18%) |

| [88] | 2019 | Vision-and-language navigation | Visual + Textual | Attention mechanism + Language Encoder | R2R (SPL = 38%) |

| [118] | 2020 | Vision-and-language navigation | Visual + Textual (instructions) | Domain adaptation | R2R (Performance gap = 8.6) |

| R4R (Performance gap = 23.9) | |||||

| CVDN (Performance gap = 3.55) | |||||

| [119] | 2020 | Vision-and-language navigation | Visual + Textual (instructions) | Early fusion + Late fusion | R2R (SPL = 59%) |

| [120] | 2020 | Vision-and-language navigation | Visual + Textual (instructions) | Attention mechanism + Feature concatenation | VLN-CE (SPL = 35%) |

| [121] | 2019 | Vision-and-language navigation | Visual + Textual (instructions) | Encoder-decoder + Multiplicative attention mechanism | ASKNAV (Success rate = 52.26%) |

| [89] | 2018 | Embodied question answering | Visual + Textual (questions) | Attention mechanism + Alignment | EQA-v1 (MR = 3.22) |

| [90] | 2019 | Embodied question answering | Visual + Textual (questions) | Feature concatenation | EQA-v1 (ACC = 61.45%) |

| [122] | 2019 | Embodied question answering | Visual + Textual (questions) | Alignment | VideoNavQA (ACC = 64.08%) |

| [125] | 2019 | Video question answering | Visual + Textual (questions) | Bilinear fusion | TDIUC (ACC = 88.20%) |

| VQA-CP (ACC = 39.54%) | |||||

| VQA-v2 (ACC = 65.14%) | |||||

| [126] | 2019 | Video question answering | Visual + Textual (questions) | Alignment | TGIF-QA (ACC = 53.8%) |

| MSVD-QA (ACC = 33.7%) | |||||

| MSRVTT-QA (ACC = 33.00%) | |||||

| Youtube2Text-QA (ACC = 82.5%) | |||||

| [127] | 2020 | Video question answering | Visual + Textual (questions) | Hierarchical Conditional Relation Networks (HCRN) | MSRVTT-QA (ACC = 35.6%) |

| MSVD-QA (ACC = 36.1%) | |||||

| [129] | 2019 | Video question answering | Visual + Textual (questions) | Dual-LSTM + Spatial and temporal attention | TGIF-QA (l2 distance = 4.22) |

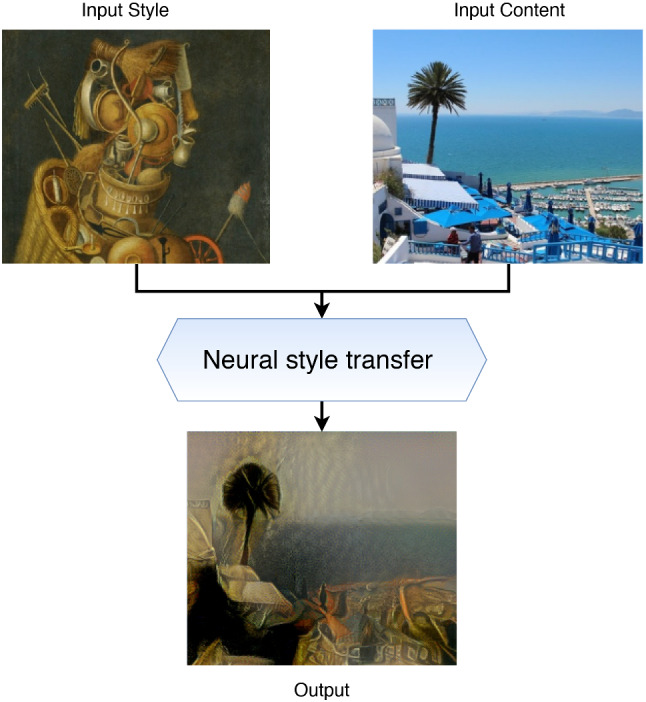

| [159] | 2019 | Style transfer | Content + Style | Graph based matching | A dataset of images from MSCOCO and WikiArt (PV = 33.45%) |

| [160] | 2017 | Style transfer | Content + Style | Hierarchical feature concatenation | A dataset of images from MSCOCO (PS = 0.54s) |

Generic computer vision tasks

Object detection

Object detection tasks generally consist of identifying rectangular windows (i.e., bounding boxes) in the image (i.e., object localization) and assigning class labels to them (i.e., object classification), through a process of patch extraction and representation (i.e., region of interest (RoI)). The localization process aims at defining the coordinates and position of the patch. In order to classify each object instance, a patch proposal strategy may be applied before the final prediction step. In practice, there are several possible detection methods. The most typical of these is to apply the classifier to an arbitrary region of the image or to a range of different shapes and scales. In the case of detecting patches, the same techniques used in traditional computer vision, such as the sliding window (SW) fashion, can be easily applied when patches are generated in SW mode, neural networks can be used to predict the target information. However, due to their complexity, this type of solution is not cost-effective, both in terms of training duration and memory consumption. In order to significantly reduce this complexity, the deep learning community has pioneered a new generation of CNN-based frameworks. Recent literature has focused on this challenging task: In [67], Jiao et al. studied a variety of deep object detectors, ranging from one-stage detectors to two-stage detectors.

4.1.1.1 One-stage detectors

Monomodal based The overfeat architecture [24] consists of several processing steps, each of which is dedicated to the extraction of multi-scale feature maps by applying the dense SW method to efficiently perform the object detection task. To significantly increase the processing speed of object detection pipelines, Redmon et al. [25] implemented a one-stage lightweight detection strategy called YOLO (You Only Look Once). This approach treats the object detection task as a regression problem, analyzing the entire input image and simultaneously predicting the bounding box coordinates and associated class labels. However, in some vision applications, such as autonomous driving, security, video surveillance, etc., real-time conditions become necessary. In this respect, two-stage detectors are generally slow in terms of real-time processing. In contrast, SSD (single-shot multibox detector) [78] has reduced the needs of the patches’ proposal network and, thus, accelerated the object detection pipeline. It can learn multi-scale feature representation from multi-resolution images. Its capability to detect objects at different scales enables it to enhance the robustness of the entire chain. Like most object detectors, the SSD detector consists of two processing stages: extracting the feature map through the VGG16 model and detecting the object by applying a convolutional filter through the layer. As similar to the principle of YOLO and SSD detectors, RetinaNet [79] takes only one stage to detect dense objects by producing multi-scale semantic feature maps using a feature pyramid network (FPN) backbone and the ResNet model. To deal with the class imbalance in the training phase, a novel loss function called “focal loss” is considered by [79]. This function allows training a one-stage detector with high accuracy by reducing the level of artifacts.

Multimodal based High-precision object recognition systems with multiple sensors are aware of external noise and environmental sensitivity (e.g., lighting variations, occlusion, etc.). More recently, the availability of low-cost and robust sensors (e.g., RGB-D sensors, stereo, etc.) has encouraged the computer vision community to focus on combining the RGB modality with other sensing modalities. According to experimental results, it has been shown that the use of depth information [183, 184], optical flow information [185], and LiDAR point clouds [186] in addition to conventional RGB data can improve the performance of one-stage based detection systems.

4.1.1.2 Two-stage detectors

Monomodal based The R-CNN detector [74] employs the patch proposal procedure using the selective search [80] strategy and applies the SVM classifier to classify any potential proposals. Fast R-CNN was introduced in [75] to improve the detection efficiency of R-CNN. The principle of Fast R-CNN is as follows: it first feeds the input image into the CNN network, extracts a set of feature vectors, applies a patch proposal mechanism, generates potential candidate regions using the RoI pooling layer, reshapes them to a fixed size, and then performs the final object detection prediction. As an efficient extension of fast R-CNN, Faster R-CNN [76] serves to use a deep CNN as a proposal generator. It has an internal strategy for proposing patches called region proposal network (RPN). Simultaneously, RPN carries out classification and localization regression to generate a set of RoIs. The primary objective is to improve the localization task and the overall performance of the decision system. In other words, the first network uses prior information about being an object, and the second one (at the end of the classifier) that deals with this information for each class. The feature pyramid network (FPN) detector [77] consists of a pyramidal structure that allows the learning of hierarchical feature maps extracted at each level of representation. According to [77], learning multi-scale representations is very slow and requires a lot of memory. However, FPN can generate pyramidal representations with a higher semantic resolution than traditional pyramidal designs.

Multimodal based As mentioned before, two-stage detectors are generally based on a combination of a CNN model to perform classification and a patch proposal module to generate candidate regions like RPNs. These techniques have proven effective for the accurate detection of multiple objects under normal and extreme environmental conditions. However, multi-object detection in both indoor and outdoor environments under varying environmental and lighting conditions remains one of the major challenges facing the computer vision community. Furthermore, a better trade-off between accuracy and computational efficiency in two-stage object detection remains an open question [84]. The question may be addressed more effectively by combining two or more sensory modalities simultaneously. However, the most common approach is to concatenate heterogeneous features from different modalities to generate an artificial multimodal representation. The recent literature has shown that it is attractive to learn shared representations from the complementarity and synergies between several modalities for increasing the discriminatory power of models [190]. Such modalities may include visual RGB-D [187], audio-visual data [188], visible and thermal data [189], etc.

4.1.1.3 Multi-stage detectors

Monomodal based Cascade R-CNN [26] is one of the most effective multi-stage detectors that have proven their robustness over one and two-stage methods. It is a cascaded version of R-CNN aimed at achieving a better compromise between object localization and classification. This framework has proven its capability in overcoming some of the main challenges of object detection, including overtraining problems [5, 6] and false alarm distribution caused by the patches’ proposal stage. In other words, the trained model may be over-specialized on the training data and can no longer generalize on the test data. The problem can be solved by stopping the learning process before reaching a poor convergence rate, increasing the data distribution in various ways, etc.

Multimodal based More recently, only a few multimodal-based multi-stage detection frameworks [191–193] have been developed and have achieved outstanding detection performance on benchmark datasets.

Visual tracking

For decades, visual tracking has been one of the major challenges for the computer vision community. The objective is to observe the motion of a given object in real time. A tracker can predict the trajectory of a given rigid object from a chronologically ordered sequence of frames. The task has attracted a lot of interest because of its enormous relevance in many real-world applications, including video surveillance [82], autonomous driving [83], etc. Over the last few decades, most deep learning-based object tracking systems have been based on CNN architectures [84, 139]. For example, in 1995, Nowlan et al. [85] implemented the first tracking system that tracks hand gestures in a sequence of frames using a CNN model. Multi-object tracking (MOT) has been extensively explored in recent literature for a wide range of applications [86, 138]. Indeed, MOT (tracking-by-detection) is another aspect of the generic object tracking task. However, MOT methods are mainly designed to optimize the dynamic matching of the objects of interest detected in each frame. To date, the majority of the existing tracking algorithms have yet to be adapted to various factors, such as illumination and scale variation, occlusions, etc [178]. Multimodal MOT is a universal aspect of MOT aimed at ensuring the accuracy of autonomous systems by mapping the motion sequence of dynamic objects [194]. To date, several multimodal variants of MOT have been proposed to improve the speed and accuracy of visual tracking by using multiple data sources, e.g., thermal, shortwave infrared, and hyperspectral data [195], RGB and thermal data [196], RGB and infrared data [197], etc.

Semantic segmentation

In image processing, image segmentation is a process of grouping pixels of the image together according to particular criteria. Semantic segmentation consists of assigning a class label to each pixel of a segmented region. Several studies have provided an overview of the different techniques used for semantic segmentation of visual data, including the works of [27, 28]. Scene segmentation is a subtask of semantic segmentation that enables intelligent systems to perceive and interact in their surrounding environment [27, 66]. The image can be split into non-overlapping regions according to particular criteria, such as pixel and edge detection and points of interest. Some algorithms are then used to define inter-class correlations for these regions.

Monomodal based Over the last few years, the fully convolutional network (FCN) [29] has become one of the robust models for a wide range of image types (multimedia, aerial, medical, etc.). The network consists of replacing the final dense layers with convolution layers, hence the reason for its name “FCN”. However, the convolutional side (i.e., the feature extraction side) of the FCN generates low-resolution representations which lead to fairly fuzzy object boundaries and noisy segmentations. Consequently, this requires the use of a posteriori regularizations to smooth out the segmentation results, such as conditional random field (CRF) networks [69]. As a light variant of semantic segmentation, instance segmentation yields a semantic mask for each object instance in the image. For this purpose, some methods have been developed, including Mask-RCNN [30], Hybrid Task Cascade (HTC) [31], etc. For instance, the Mask R-CNN model offers the possibility of locating instances of objects with class labels and segmenting them with semantic masks. Scene parsing is a visual recognition process that is based on semantic segmentation and deep architectures. A scene can be parsed into a series of regions labeled for each pixel that is mapped to semantic classes. The task is highly useful in several real-time applications, such as self-driving cars, traffic scene analysis, etc. However, fine-grained visual labeling and multi-scale feature distortions pose the main challenges in scene parsing.

Multimodal based More recently, it has been shown in the literature that the accuracy of scene parsing can be improved by combining several detection modalities instead of a single one [91]. Many different methods are available, such as soft correspondences [94], 3D scene analysis from RGB-D data [95], to ensure dense and accurate scene parsing of indoor and outdoor environments.

Multimodal applications

Human recognition

In recent years, a wide range of deep learning techniques has been developed that focus on human recognition in videos. Human recognition seeks to identify the same target at different points in space-time derived from complex scenes. Some studies have attempted to enhance the quality of person recognition from two data sources (audio-visual data) using DBN and DBM [72] models, which have allowed several types of representation to be combined and coordinated. Some of these works include [48, 73]. According to Salakhutdinov et al. [72], a DBM is a generative model that includes several layers of hidden variables. In [48], the structure of deep multimodal Boltzmann machines (DMBM) [71] is similar to that of DBM, but it can admit more than one modality. Therefore, each modality will be covered individually using adaptive approaches. After joining the multi-domain features, the high-level classification will be performed by an individual classifier. In [73], Koo et al. developed a multimodal human recognition framework based on face and body information extracted from deep CNNs. They employed the late fusion policy to merge the high-level features across the different modalities.

Face recognition

Face recognition has long been extremely important, ranging from conventional approaches that involve the extraction and selection of handcrafted features, such as Viola and Jones detectors [49] to the automatic extraction and training of end-to-end hierarchical features from raw data. This process has been widely used in biometric systems for control and monitoring purposes. The most biometric systems rely on three modes of operation: enrolment, authentication (verification), and identification [92]. However, most facial recognition systems, including biometric systems, suffer from a restriction in terms of universality and variations in the appearance of visual patterns. End-to-end training of multimodal facial representations can effectively help to overcome this limitation. Multimodal facial recognition systems can integrate complex representations derived from multiple modalities at different scales and levels (e.g., feature level, decision level, score level, rank level, etc.). Note that face detection, face identification, and face reconstruction are subtasks of face recognition [50]. Numerous works in the literature have demonstrated the benefits of multimodal recognition systems. In [51], Ding et al. proposed a new late fusion policy using CNNs for multimodal facial feature extraction and SAEs for dimensional reduction. The authors of [93] introduced a biometric system that combines biometric traits from different modalities (face and iris) to establish an individual’s identity.

Image retrieval