Abstract

Differences in visual attention have long been recognized as a central characteristic of autism spectrum disorder (ASD). Regardless of social content, children with ASD show a strong preference for perceptual salience—how interesting (i.e., striking) certain stimuli are, based on their visual properties (e.g., color, geometric patterning). However, we do not know the extent to which attentional allocation preferences for perceptual salience persist when they compete with top-down, linguistic information. This study examined the impact of competing perceptual salience on visual word recognition in 17 children with ASD (mean age 31 months) and 17 children with typical development (mean age 20 months) matched on receptive language skills. A word recognition task presented two images on a screen, one of which was named (e.g., Find the bowl!). On Neutral trials, both images had high salience (i.e., were colorful and had geometric patterning). On Competing trials, the distracter image had high salience but the target image had low salience, creating competition between bottom-up (i.e., salience-driven) and top-down (i.e., language-driven) processes. Though both groups of children showed word recognition in an absolute sense, competing perceptual salience significantly decreased attention to the target only in the children with ASD. These findings indicate that perceptual properties of objects can disrupt attention to relevant information in children with ASD, which has implications for supporting their language development. Findings also demonstrate that perceptual salience affects attentional allocation preferences in children with ASD, even in the absence of social stimuli.

Keywords: child, language, language development, attention, autism spectrum disorder, cues, information seeking behavior

Lay Summary:

This study found that visually striking objects distract young children with autism spectrum disorder (ASD) from looking at relevant (but less striking) objects named by an adult. Language-matched, younger children with typical development were not significantly affected by this visual distraction. Though visual distraction could have cascading negative effects on language development in children with ASD, learning opportunities that build on children’s focus of attention are likely to support positive outcomes.

Introduction

Differences in visual attention have long been recognized as a central characteristic of autism spectrum disorder [ASD; Ames & Fletcher-Watson, 2010; Bedford et al., 2014; Burack et al., 2016; Keehn, Muller, & Townsend, 2013; Mundy, Sigman, & Kasari, 1990; Mutreja, Craig, & O’Boyle, 2015]. One consistent finding is that individuals with ASD allocate their visual attention differently than individuals without ASD. For example, children with ASD often spend more time looking at nonsocial stimuli (e.g., objects) than social stimuli (e.g., faces), whereas the opposite is true for children with typical development or developmental delays not associated with ASD [Klin, Lin, Gorrindo, Ramsay, & Jones, 2009; Moore et al., 2018; Pierce et al., 2016; Pierce, Conant, Hazin, Stoner, & Desmond, 2011; Swettenham et al., 1998; Unruh et al., 2016]. Individuals with ASD also show a strong preference for perceptual salience—how interesting (i.e., striking) certain stimuli are, based on their visual properties (e.g., color, geometric patterning; Wang et al., 2015). In fact, perceptual salience appears to drive attention orienting in children with ASD, regardless of social content—a “bottom-up” processing style not seen in children without ASD [Amso, Haas, Tenenbaum, Markant, & Sheinkopf, 2014].

Understanding attentional allocation preferences in children with ASD is important because they are an early diagnostic indicator of ASD, may inform prognosis, and have implications for developing effective interventions [Patten & Watson, 2011; Pierce et al., 2011, 2016; Unruh et al., 2016]. However, there is much we do not yet know about the breadth and depth of attentional allocation preferences in children with ASD. First, we do not know the extent to which a preference for high perceptual salience persists in children with ASD when it competes with top-down linguistic information (e.g., spoken language input). Second, it is unclear to what extent perceptual salience affects attentional allocation preferences in the absence of social stimuli—namely, when children with ASD view objects with high versus low perceptual salience. The current study investigated these issues by examining the impact of competing perceptual salience on visual word recognition in children with and without ASD.

Attentional allocation preferences in typical development and ASD

At any given moment, the visual world is filled with many objects and events that may capture a child’s attention, including social and nonsocial stimuli with varying levels of perceptual salience (i.e., visual “striking-ness”). Generally speaking, “bottom up” processing occurs when attention is focused strongly on perceptually salient features of the environment. On the other hand, “top down” processing refers to situations in which attention is more influenced by higher-level social or linguistic features of the environment than by perceptual salience [Amso et al., 2014; Connor, Egeth, & Yantis, 2004; Maekawa et al., 2011]. Because attentional allocation preferences filter incoming visual information, they play a primary role in how children perceive their world [Smith, Suanda, & Yu, 2014]. From birth, infants and young children with typical development show a strong preference for social stimuli, including faces, eye contact, and biological motion [Bardi, Regolin, & Simion, 2011; Farroni, Csibra, Simion, & Johnson, 2002; Goren, Sarty, & Wu, 1975; Valenza, Simion, Cassia, & Umiltà, 1996]. These children can flexibly allocate their attention within the first few months of life [Hood & Atkinson, 1993; Johnson, Posner, & Rothbart, 1991] and, soon after, begin to initiate and respond to cues for joint, object-focused attention [Mundy & Gomes, 1998]. These attentional patterns appear to support many aspects of development, including language and communication [Brooks & Meltzoff, 2005; Papageorgiou, Farroni, Johnson, Smith, & Ronald, 2015; Scerif, 2010].

In contrast, infants and children with ASD commonly prefer to look at nonsocial rather than social stimuli and spend more time looking at objects than at people [Shi et al., 2015; Swettenham et al., 1998; Wang et al., 2015]. Many children with ASD show an intense interest in striking visual features such as lights, movement, and geometric repetition (e.g., spinning car wheels; American Psychiatric Association, 2013; Chawarska, Macari, & Shic, 2013; Klin et al., 2009; Pierce et al., 2011; Unruh et al., 2016). Children with ASD may show reduced eye contact, have difficulty flexibly shifting their attention, and engage in joint attention at much lower rates than their peers [Landry & Bryson, 2004; Mundy et al., 1990; Sacrey, Armstrong, Bryson, & Zwaigenbaum, 2014]. In combination, these attentional patterns are thought to contribute to atypical development in children with ASD, including delays in language development and word learning [Keehn et al., 2013; Venker, Bean, & Kover, 2018].

Experimental investigations of visual preferences in ASD

Much of what we know about attentional allocation preferences in ASD comes from observing children’s behavior as they engage in play-based social interactions [Adamson, Deckner, & Bakeman, 2010; Bottema-Beutel, 2016; Mundy et al., 1990; Sacrey, Bryson, & Zwaigenbaum, 2013; Swettenham et al., 1998]. However, researchers have also developed a variety of screen-based tasks to measure attentional allocation preferences within controlled, experimental contexts [Fischer et al., 2015; Pierce et al., 2011; Unruh et al., 2016]. Experimental attention tasks (several of which are explained in detail below) typically present a sequence of trials that display multiple images or a complex scene. Eye movements are monitored through automatic eye-tracking or manual coding of gaze location. Screen-based tasks offer numerous advantages for understanding attentional allocation preferences, as they (1) can easily depict a variety of different stimulus types, including static, dynamic, social, and nonsocial stimuli; (2) standardize stimuli and experimental procedures, which allows for more experimental control than observational methods; (3) have limited behavioral task demands; and (4) are feasible to use across varying ages and developmental levels [Falck-Ytter, Bölte, & Gredebäck, 2013; Sasson & Elison, 2012; Venker & Kover, 2015].

Social Versus Nonsocial Stimuli

Many screen-based tasks have been used to examine attention allocation preferences to social versus nonsocial stimuli. Using a screen-based looking paradigm, a line of work by Pierce and colleagues [Moore et al., 2018; Pierce et al., 2011, 2016] examined how toddlers with ASD allocate their attention when simultaneously presented with two dynamic scenes—one social (e.g., children dancing, doing yoga) and one nonsocial (moving geometric patterns). These studies found that toddlers with ASD looked significantly more at nonsocial, geometric stimuli than children with typical development or children with global developmental delay, who preferred social stimuli (also see Shi et al., 2015). In fact, Pierce et al. [2011] found that if a toddler looked at the geometric scenes 69% of the time or more, the positive predictive validity for accurately classifying the child as having an ASD diagnosis was 100%. Subsequent work revealed a potential ASD subtype characterized by a particularly strong preference for geometric repetition [Moore et al., 2018; Pierce et al., 2016]. Interestingly, toddlers with ASD who showed the strongest preference for geometric patterns had the weakest language and cognitive skills and the most severe autism symptoms [Moore et al., 2018; Pierce et al., 2016].

Influential Properties of Nonsocial Stimuli

Screen-based experimental studies have shown that attentional allocation preferences in individuals with ASD are influenced not only by social content, but also by the characteristics of nonsocial stimuli. For example, some objects (e.g., trains, which are likely to be the focus of circumscribed interests in males) attract attention in individuals with ASD more strongly than others (e.g., clothing, plants; Sasson, Elison, Turner-Brown, Dichter, & Bodfish, 2011; Sasson, Turner-Brown, Holtzclaw, Lam, & Bodfish, 2008; Unruh et al., 2016; also see Thorup, Kleberg, & Falck-Ytter, 2017). Though individuals without ASD may prefer to look at the same types of objects as individuals with ASD [Thorup et al., 2017], their looking behaviors tend to be less affected by baseline visual preferences than those of individuals with ASD [Unruh et al., 2016]. Notably, there has been some discussion regarding whether the high salience of preferred objects lies in their content (i.e., their relationship to circumscribed interests) and/or their visual properties [Sasson et al., 2008]. This is a particularly interesting point to consider given that girls with ASD may demonstrate circumscribed interests that are more developmentally normative (e.g., horses) than those shown by boys [Antezana et al., 2019].

Attentional allocation preferences in individuals with ASD are also influenced by another visual property: perceptual salience (Amso et al., 2014; Wang et al., 2015; but see Freeth, Foulsham, & Chapman, 2011). High perceptual salience attracts attention in individuals without ASD [Freeth et al., 2011], but looking behaviors appear to be more strongly affected in children with ASD than in their peers [Amso et al., 2014]. Amso et al. [2014] conducted a screen-based task with 2- to 5-year-old children with and without ASD. Children viewed naturalistic scenes containing social and nonsocial stimuli with varying levels of perceptual salience. Regardless of social content, perceptual salience influenced attentional allocation preferences more strongly in children with ASD than in children with typical development. Specifically, the proportion of selecting visually-salient image regions (regardless of whether those regions contained a face) was higher in the children with ASD than in the children with typical development. Both groups were drawn to look at faces to some extent, but the children with ASD were more drawn to look at visually-salient information than the children with typical development. This finding suggested that the children with ASD relied more strongly on bottom-up (i.e., salience-driven) attentional processing strategies, whereas children with typical development were more influenced by top-down (i.e., social) information. In line with Pierce et al. [2016], the extent to which looking behaviors in the children with ASD were impacted by perceptual salience was negatively associated with their receptive language skills, highlighting the link between attentional allocation preferences and language.

Linguistic input and attention allocation

The emerging links between attentional allocation preferences and language skills in children with ASD underscore the importance of determining how top-down, linguistic information (e.g., spoken language input) interacts with visual preferences. Little is known about this issue, however, because most visual preference tasks in individuals with ASD have been conducted without explicit auditory stimuli [Amso et al., 2014; A. Moore et al., 2018; Unruh et al., 2016]. We propose that this gap in knowledge can be addressed by integrating the design features of a visual preference task with those of a visual word recognition task, a screen-based task explicitly designed to determine how spoken language input affects attention allocation in young children [Fernald, Zangl, Portillo, & Marchman, 2008; Golinkoff, Ma, Song, & Hirsh-Pasek, 2013; Marchman & Fernald, 2008; Swensen, Kelley, Fein, & Naigles, 2007]. Though the visual preference and visual word recognition literatures have historically developed separately, merging these approaches (i.e., by manipulating perceptual salience during a word recognition task) offers a unique opportunity to examine the interaction between linguistic input and attentional allocation preferences in children with ASD.

In a standard visual word recognition task, children view two images or dynamic scenes on a screen and hear language describing one of the images (e.g., See the bowl?). A significant increase in relative looking to the target image after it is named indicates recognition (i.e., comprehension) of the target noun. Ordinarily, word recognition tasks carefully balance the relative salience of target and distracter images to ensure that attention is driven by the linguistic signal, not by lower-level perceptual properties of objects [Fernald et al., 2008; Yurovsky & Frank, 2015]. However, introducing competing perceptual salience to a word recognition task—purposefully creating an imbalance in salience between target and distracter images—provides a means of exploring how bottom-up (i.e., salience driven) attentional preferences in children with ASD may be influenced by spoken language input.

Visual word recognition tasks have emerged as a robust method for measuring language comprehension in infants and young children—including those with ASD [Aslin, 2007; Bavin, Kidd, Prendergast, & Baker, 2016; Ellis Weismer, Haebig, Edwards, Saffran, & Venker, 2016; Fernald, Perfors, & Marchman, 2006; Goodwin, Fein, & Naigles, 2012; Swensen et al., 2007; Venker, Eernisse, Saffran, & Weismer, 2013]. Word recognition is closely linked with language outcomes in children with typical development and children with ASD, pointing to its importance in language development [Fernald et al., 2006; Fernald & Marchman, 2012; Venker et al., 2013]. Importantly, visual word recognition is thought not only to reflect existing lexical knowledge, but also to play a role in subsequent vocabulary development by supporting the development and refinement of a strong network of links between words and their meanings [Kucker, McMurray, & Samuelson, 2015; Venker et al., 2018]. In addition, there is evidence that word recognition mediates the relationship between adult language input and child language outcomes in typical development [Weisleder & Fernald, 2013].

Perceptual salience and word learning

To our knowledge, no published studies have examined the impact of the perceptual salience of objects on visual word recognition. However, the influence of perceptual salience on attentional allocation preferences has received some consideration in the literature on word learning in both typical development and ASD [Akechi et al., 2011; C. Moore, Angelopoulos, & Bennett, 1999; Parish-Morris, Hennon, Hirsh-Pasek, Golinkoff, & Tager-Flusberg, 2007; Pomper & Saffran, 2018; Yurovsky & Frank, 2015]. Many word-learning studies have placed perceptual salience in conflict with social cues to word meaning, such as pointing to or looking at an intended referent, with the goal of determining which type of cue children rely on more strongly. Early in typical development, infants’ attention allocation within a word-learning context is strongly driven by the perceptual salience of novel objects [Hollich et al., 2000; Hollich, Golinkoff, & Hirsh-Pasek, 2007; Pruden, Hirsh-Pasek, Golinkoff, & Hennon, 2006]. The effect of perceptual salience in typical development decreases between 12 and 24 months of age, such that 24-month-olds are more likely to rely on eye gaze cues than on perceptual salience when learning new words. Even in 3-year-olds with typical development, however, the presence of engaging, visually salient familiar objects as competitors (e.g., animals, food) decreases the speed and accuracy of novel word recognition, with negative effects on the retention of new label-object mappings [Pomper & Saffran, 2018].

Perceptual salience (e.g., visual strikingness, object movement) also affects word learning in individuals with ASD—either boosting or disrupting performance, depending on whether object salience overlaps or competes with other cues to word meaning [Akechi et al., 2011; Aldaqre, Paulus, & Sodian, 2015; Parish-Morris et al., 2007]. Interestingly, visual salience may continue to impact attentional allocation preferences during word learning in individuals with ASD beyond early childhood. For example, work by Parish-Morris et al. [2007] found that perceptual salience affected word learning in children with ASD even at a mean age of 5 years, though it did not have this same effect on language-matched or mental age-matched children without ASD [also see Aldaqre et al., 2015]. Aldaqre et al. [2015] found that visual salience—in this case, movement of the novel object—disrupted word learning even in adults with ASD.

Social attention and language development

Though the current study focuses on nonsocial aspects of attention within a linguistic context, it is also important to consider relevant findings from the vast literature on joint attention. Generally speaking, this line of work underscores the point that attention to relevant aspects of the visual environment supports language development. Young children with typical development frequently engage in joint attention with adults, and the ability to follow adult gaze cues is linked with language abilities in children with typical development even before age two [Brooks & Meltzoff, 2005; Mundy & Gomes, 1998]. However, children with ASD are less likely to initiate and respond to bids for shared, object-, or event-focused joint attention than children with typical development, with negative consequences for language development [Bottema-Beutel, 2016; Kasari, Sigman, Mundy, & Yirmiya, 1990; Mundy et al., 1990; Mundy & Jarrold, 2010]. In the current study, we consider how decreased attention to relevant aspects of the visual environment could also result from a nonsocial source—the perceptual salience of unnamed objects.

The current study

We developed an experimental eye-gaze task to determine how competing perceptual salience affects visual word recognition in children with and without ASD closely matched on receptive language skills. Children viewed two images on a screen, one of which was named (e.g., Find the shirt!). On Neutral trials, both the target and distracter images had high perceptual salience (i.e., colorful and geometrically patterned). On Competing trials, the distracter image had high salience but the target had low salience, creating competition between bottom-up (i.e., salience-driven) processes and top-down (i.e., language-driven) processes. Our primary aim was to determine the extent to which competing perceptual salience affects word recognition in young children with ASD and young children with typical development. We predicted that children would significantly increase their relative looking to the target after it was named—a pattern of results that would provide evidence of word recognition (in an absolute sense) across conditions. However, we also predicted that the children with ASD would be more disrupted by competing perceptual salience than the children with typical development as indicated by their relative looking to target after it was named on Competing versus Neutral trials. In addition to addressing our primary aim, we conducted exploratory analyses to examine looking patterns during baseline, before either image had been named.

Method

Participants

Participants were part of a broader study investigating lexical processing [Ellis Weismer et al., 2016; Mahr, McMillan, Saffran, Ellis Weismer, & Edwards, 2015; Pomper, Ellis Weismer, Saffran, & Edwards, 2019; Venker et al., 2020; Venker, Edwards, Saffran, & Ellis Weismer, 2019]. The study was prospectively approved by the university Institutional Review Board, and parents or legal guardians provided written informed consent for their child’s participation. Participants were recruited through doctors’ offices and clinics, early intervention providers, the community, and a research registry maintained by the Waisman Center at the University of Wisconsin-Madison. In total, 44 children with ASD and 28 children with typical development completed the task of interest. However, in this sample the children with ASD had significantly lower receptive language abilities than the children with typical development. Given the language-based nature of the task, we were interested in comparing groups with similar receptive language abilities. To generate these subgroups, each child with typical development was individually matched with a child with ASD on receptive language age equivalent (see Group Matching), yielding groups of 17 children with ASD (11 males, 6 females) and 17 children with typical development (11 males, 6 females). Exclusionary criteria were known genetic syndromes (e.g., fragile X syndrome), birth prior to 37 weeks gestation, and exposure to a language other than English. Additional exclusionary criteria for the children with typical development were elevated scores on the Modified Checklist for Autism in Toddlers [Robins et al., 2014] and clinical observation or parent report of developmental delay or behaviors associated with ASD.

Procedure

Children took part in a 2-day visit conducted by a licensed clinical psychologist and a licensed and certified speech-language pathologist with extensive experience assessing young children with ASD. Some children had an existing ASD diagnosis, whereas others received their initial diagnosis within the study. Parents of all children with ASD participated in the Autism Diagnostic Interview, Revised [Rutter, LeCouteur, & Lord, 2003]. All children with ASD completed the Autism Diagnostic Observation Schedule, 2nd Edition (ADOS-2; Lord, Luyster, et al., 2012; Lord, Rutter, et al., 2012), which informed initial ASD diagnosis provided within the context of the research study or verified existing ASD diagnosis. The ADOS-2 also measured autism severity. The ADOS-2 modules used were: Toddler Module, no words (n = 1); Toddler Module, words (n = 4); Module 1, no words (n = 3); Module 1, words (n = 6); Module 2, under 5 (n = 3).

Standardized Assessments

The Mullen Scales of Early Learning [Mullen, 1995] measured visual reception and fine motor skills. Age equivalent scores from the Visual Reception and Fine Motor subscales were averaged, divided by chronological age, and multiplied by 100, yielding a nonverbal Ratio IQ score (see Bishop, Guthrie, Coffing, & Lord, 2011). Receptive and expressive language skills were measured by the Auditory Comprehension and Expressive Communication Scales of the Preschool Language Scales, 5th Edition (PLS-5; Zimmerman, Steiner, & Pond, 2011). Each scale produced a raw score, age equivalent, growth scale value, and standard score.
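The Ratio IQ arithmetic described above can be illustrated with a short sketch. The function name and the example child are hypothetical and are not taken from the study data; only the formula (mean of the two age equivalents, divided by chronological age, multiplied by 100) comes from the text.

```python
def nonverbal_ratio_iq(visual_reception_ae, fine_motor_ae, chronological_age):
    """Nonverbal Ratio IQ from two age equivalents and chronological age (all in months).

    Averages the Visual Reception and Fine Motor age equivalents,
    divides by chronological age, and multiplies by 100.
    """
    if chronological_age <= 0:
        raise ValueError("chronological_age must be positive")
    mean_age_equivalent = (visual_reception_ae + fine_motor_ae) / 2
    return mean_age_equivalent / chronological_age * 100


# Hypothetical child: age equivalents of 22 and 26 months at 30 months of age.
# The mean age equivalent is 24 months, so the ratio is 24 / 30 * 100.
example_iq = nonverbal_ratio_iq(22, 26, 30)
print(round(example_iq, 1))
```

A score of 100 therefore means that a child's mean nonverbal age equivalent equals his or her chronological age.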

Group Matching

Each child with ASD was matched to a child with typical development on PLS-5 receptive language age equivalent score within 4 months, yielding groups of 17 children with ASD and 17 children with typical development. Groups did not significantly differ in receptive or expressive language age equivalents or growth scale values (see Table 1), indicating similar levels of absolute language skills. Groups significantly differed on all other variables.
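As an illustration only, one simple way to form one-to-one matches within a tolerance is a greedy nearest-score search. The text does not describe the matching algorithm that was actually used, so the procedure, the function name, the child IDs, and the scores below are all assumptions; "within 4 months" is interpreted here as an absolute difference of 4 months or less.

```python
def match_groups(asd, td, max_diff=4):
    """Greedy one-to-one matching on receptive language age equivalent (months).

    asd, td: dicts mapping a child ID to an age equivalent score.
    Returns (asd_id, td_id) pairs whose scores differ by at most max_diff.
    This is a hypothetical procedure, not the one reported in the study.
    """
    available = dict(td)  # TD children not yet matched
    pairs = []
    # Process children with ASD from lowest to highest score.
    for asd_id, asd_score in sorted(asd.items(), key=lambda item: item[1]):
        if not available:
            break
        # Closest remaining TD child; ties are broken by ID for determinism.
        td_id = min(available, key=lambda k: (abs(available[k] - asd_score), k))
        if abs(available[td_id] - asd_score) <= max_diff:
            pairs.append((asd_id, td_id))
            del available[td_id]
    return pairs


# Hypothetical scores: A3 has no TD peer within 4 months and stays unmatched.
asd_scores = {"A1": 14, "A2": 20, "A3": 35}
td_scores = {"T1": 16, "T2": 21, "T3": 24}
print(match_groups(asd_scores, td_scores))
```

Unmatched children are simply dropped from the matched subgroups, which is consistent with the reduction from the full sample to 17 children per group.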

Table 1.

Participant Characteristics

| Measure | Children with typical development (n = 17): Mean (SD) range | Children with ASD (n = 17): Mean (SD) range | Group difference: P value, Cohen’s d, variance ratio |
|---|---|---|---|
| Chronological age (months) | 20.41 (1.66) 18–23 | 31.06 (3.67) 24–36 | P < 0.001*, d = 3.74, var ratio = 2.21 |
| Auditory comprehension | | | |
| Growth scale value | 359.65 (25.97) 313–397 | 354.35 (29.17) 313–397 | P = 0.580, d = 0.19, var ratio = 1.12 |
| Age equivalent (months) | 20.65 (4.49) 14–28 | 19.94 (4.99) 14–28 | P = 0.667, d = 0.15, var ratio = 1.11 |
| Standard score | 99.47 (14.75) 77–124 | 70.71 (13.82) 53–98 | P < 0.001*, d = 2.01, var ratio = 1.07 |
| Expressive communication | | | |
| Growth scale value | 356.47 (21.98) 325–407 | 349.12 (39.52) 285–407 | P = 0.507, d = 0.23, var ratio = 1.80 |
| Age equivalent (months) | 23.00 (4.17) 18–33 | 22.06 (7.15) 11–33 | P = 0.642, d = 0.16, var ratio = 1.71 |
| Standard score | 106.00 (10.59) 91–130 | 80.59 (12.79) 61–100 | P < 0.001*, d = 2.17, var ratio = 1.21 |
| Total Language Standard score | 102.77 (12.52) 86–129 | 74.12 (12.39) 56–95 | P < 0.001*, d = 2.30, var ratio = 1.01 |
| Nonverbal Ratio IQ (n = 16 in the ASD group) | 105.18 (13.31) 86–145 | 75.03 (10.12) 58–90 | P < 0.001*, d = 2.55, var ratio = 1.31 |
| ASD symptom severity | — | 7.47 (1.59) 4–10 | — |

Note. Auditory Comprehension and Expressive Communication were measured by the Preschool Language Scales, 5th Edition. Nonverbal Ratio IQ was measured by the Mullen Scales of Early Learning. ASD Symptom Severity was measured by the ADOS-2 comparison score.

* indicates P values that are significant at the level of P < 0.001.
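For readers who wish to check the effect sizes in Table 1, the sketch below computes Cohen's d from group means and standard deviations using a pooled standard deviation for equal group sizes. That this is the exact formula behind the reported values is an assumption; it does reproduce the reported d for chronological age and for the Auditory Comprehension standard score, but rounding of the tabled means and SDs can shift other rows by 0.01.

```python
import math


def cohens_d(mean_a, sd_a, mean_b, sd_b):
    """Cohen's d for two groups of equal size, using the pooled standard deviation."""
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return abs(mean_a - mean_b) / pooled_sd


# Chronological age (months): typical development vs. ASD, values from Table 1.
d_age = cohens_d(20.41, 1.66, 31.06, 3.67)
print(round(d_age, 2))  # 3.74, matching Table 1

# Auditory Comprehension standard score, values from Table 1.
d_ac_standard = cohens_d(99.47, 14.75, 70.71, 13.82)
print(round(d_ac_standard, 2))  # 2.01, matching Table 1
```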

Visual Word Recognition Task

The visual word recognition task lasted approximately 5 min. Children sat on a parent’s lap in front of a 55-in. screen. Parents wore opaque glasses to prevent them from unintentionally affecting their child’s performance. Children’s faces were video recorded by a camera below the screen, which allowed for offline manual coding of gaze location.1 In each trial, children viewed two images (e.g., a bowl and a shirt) on either side of the screen and heard speech describing one of the images (e.g., Find the bowl!). Visual stimuli (color photos of familiar objects) were obtained through online image searches. Auditory stimuli were recorded using child-directed speech by a female English speaker native to the geographic area in which the study was conducted. Trials lasted 6.5 s, and the onset of the target noun (e.g., … bowl!) occurred 2,900 ms into the trial.

The task contained 10 Neutral trials and 10 Competing trials. Neutral and Competing trials contained 10 words yoked into pairs: cup-sock, shirt-bowl, hat-chair, door-pants, and shoe-ball. Each word in a pair served as the target and the distracter in both conditions. This feature of the task design accounted for the possibility that children may find some objects inherently more interesting than others. On Neutral trials, both the target and distracter were colorful and had geometric patterning (see Fig. 1). On Competing trials, the distracter was colorful and geometric (like the images in the Neutral Condition), but the target image was less colorful and did not have geometric patterning. We refer to images that were colorful and had geometric patterning as having high salience. We refer to images that were less colorful and did not have patterning as having low salience. The vibrancy (i.e., intensity and saturation) of the images was adjusted in Photoshop to maximize the distinction between high-salience and low-salience images (see Appendix A).
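The yoked design can be made concrete by enumerating the trials it implies. The sketch below is illustrative: the word pairs and salience levels come from the description above, whereas the data layout and function name are assumptions, and trial order and target side are not modeled.

```python
# Word pairs from the task description.
PAIRS = [("cup", "sock"), ("shirt", "bowl"), ("hat", "chair"),
         ("door", "pants"), ("shoe", "ball")]
CONDITIONS = ("Neutral", "Competing")


def build_trials(pairs=PAIRS, conditions=CONDITIONS):
    """List every condition x target combination implied by the yoked design."""
    trials = []
    for condition in conditions:
        for first, second in pairs:
            # Each word in a pair serves once as target and once as distracter.
            for target, distracter in ((first, second), (second, first)):
                trials.append({
                    "condition": condition,
                    "target": target,
                    "distracter": distracter,
                    # Targets have low salience only on Competing trials.
                    "target_salience": "high" if condition == "Neutral" else "low",
                    "distracter_salience": "high",
                })
    return trials


trials = build_trials()
print(len(trials))  # 20 trials: 10 Neutral and 10 Competing
```

Because every word appears as target and as distracter in both conditions, any inherent preference for a particular object is balanced across conditions.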

Figure 1.

Sample images from the Visual Attention task for the auditory stimulus, Find the bowl! Neutral trials (left) presented two high-salience images. Competing trials (right) presented a distracter (unnamed) image with high salience and a target (named) image with low salience.

Though there are many ways to experimentally represent perceptual salience, we manipulated color and geometric patterning because these are features that vary naturally in the real world. For example, it would be reasonable to see bowls and shirts that are colorful and patterned or bowls and shirts that are less colorful and have no patterning, which increases the ecological validity of this visual manipulation. The task only included objects that could reasonably be solid or patterned: clothing, dishes, toys, and furniture. The effectiveness of the salience manipulation was supported by a pilot study [Mathée, Venker, & Saffran, 2016].2

To prevent children from predicting that a low-salience image would always be named, the task included six filler trials in which the target had high salience, but the distracter had low salience. Filler trials included six distinct words that were not included in the Neutral or Competing Conditions: cake, blanket, balloon, cookie, plate, and bed. To ensure that specific aspects of the task design did not impact performance, two versions of the task were created with different trial orders and target sides. Neither version presented more than two consecutive trials of the same type (i.e., Neutral, Competing, and Filler). Task version (i.e., whether a child received Version 1 or 2) was semi-randomly assigned to account for children who participated in the study but did not meet inclusionary criteria.

Eye-gaze Coding

Gaze location was determined offline from video by trained research assistants using custom eye-gaze coding software. Coders were trained to mastery in differentiating head movements from eye movements by focusing on pupil movement and were unaware of target side. The sampling rate of the video was 30 Hz, yielding time frames lasting approximately 33 ms each. The visual angle of children’s eye gaze was 33.6° from center to the upper corners of the screen and 24° from center to the lower corners of the screen. Coders determined gaze location for each time frame based on the visual angle of children’s eyes and the known location of the left and right image areas of interest on the screen. Gaze location for each 33 ms time frame was classified as directed to the left image (i.e., the left area of interest), to the right image (i.e., the right area of interest), or neither (see Fernald et al., 2008 for additional detail).
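The relationship between the 30 Hz sampling rate and the trial timing can be sketched as follows. The helper names are hypothetical, and the convention that frame 0 begins at 0 ms is an assumption; the 6.5 s trial length and the 2,900 ms noun onset come from the task description.

```python
FRAME_RATE_HZ = 30  # video sampling rate reported in the text


def frame_duration_ms(frame_rate_hz=FRAME_RATE_HZ):
    """Duration of a single video frame in milliseconds."""
    return 1000 / frame_rate_hz


def ms_to_frame(time_ms, frame_rate_hz=FRAME_RATE_HZ):
    """Index of the frame containing time_ms, assuming frame 0 starts at 0 ms."""
    return int(time_ms * frame_rate_hz // 1000)


print(round(frame_duration_ms(), 1))  # 33.3 ms per frame
print(ms_to_frame(6500))              # 195 frames in a 6.5 s trial
print(ms_to_frame(2900))              # target noun onset falls at frame 87
```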

Post-processing converted left and right looks to target and distracter looks (based on the known target location in each trial). Time frames in which gaze was not directed to either image were categorized as missing data. Intercoder percent agreement for 12 randomly selected, independently coded videos was 98.1%. Consistent with previous studies of word recognition [e.g., Fernald, Thorpe, & Marchman, 2010], the window of analysis lasted from 300 to 2,000 ms after target noun onset. Following standard procedures for measuring word recognition accuracy [Fernald et al., 2008], the dependent variable of interest was the amount of time looking at the target divided by the total time looking at both images (i.e., target + distracter looks) during a given time window. This calculation represents the proportion of total image looking time that was focused on the target image. For simplicity, we refer to this variable as “relative looking to target.” There were no significant differences in relative looking to target between the two versions during the analysis window, F(1,42) = 0.11, P = 0.743, so the versions were collapsed in subsequent analyses.
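A minimal sketch of the dependent variable follows, assuming each video frame in the analysis window has already been coded as a target look, a distracter look, or missing. The code labels ("target", "distracter", "away") and the example frame counts are hypothetical.

```python
def relative_looking_to_target(frames):
    """Target looking time divided by total image looking time (target + distracter).

    frames: sequence of per-frame codes, each "target", "distracter", or "away".
    Frames coded "away" are treated as missing and excluded from the denominator.
    Returns None when the child never looked at either image.
    """
    target_frames = sum(1 for code in frames if code == "target")
    distracter_frames = sum(1 for code in frames if code == "distracter")
    image_frames = target_frames + distracter_frames
    if image_frames == 0:
        return None
    return target_frames / image_frames


# Hypothetical window of 51 frames (roughly 300 to 2,000 ms at 30 Hz).
window = ["target"] * 30 + ["distracter"] * 10 + ["away"] * 11
print(relative_looking_to_target(window))  # 0.75
```

Because all frames have the same duration, counting frames is equivalent to summing looking time. A value of 0.50 indicates equal looking to both images.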

Data Cleaning

Trials were excluded if children looked away from the images more than half of the time during the analysis window. On average, children with typical development contributed 9.24 trials in the Neutral Condition (SD = 0.83, range = 8–10) and 8.65 trials in the Competing Condition (SD = 1.37, range = 5–10). Children with ASD contributed 8.24 trials in the Neutral Condition (SD = 1.48, range = 6–10) and 8.12 trials in the Competing Condition (SD = 1.73, range = 5–10). We conducted a repeated measures ANOVA with number of trials per child as the dependent variable nested within children, and Condition (Neutral vs. Competing), Group (typical development vs. ASD), and their interaction as independent variables. This analysis revealed no significant difference in the number of trials contributed per child between groups, F(1,32) = 2.98, P = 0.094, a marginal difference between conditions, F(1,32) = 4.00, P = 0.054, and no significant Group × Condition interaction, F(1,32) = 1.78, P = 0.192.
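The exclusion rule can be sketched as follows. This is an illustration under stated assumptions, not the study's processing script: the function names and the `"missing"` label are hypothetical, and the input is assumed to contain only the frames that fall within the analysis window.

```python
from typing import Sequence


def keep_trial(window_codes: Sequence[str], max_missing: float = 0.5) -> bool:
    """Return True if the trial is retained.

    window_codes: frame labels within the analysis window only.
    A trial is dropped when more than `max_missing` of its frames are
    coded as looking at neither image.
    """
    if not window_codes:
        return False  # assumption: a trial with no coded frames is unusable
    missing = sum(code == "missing" for code in window_codes)
    return missing / len(window_codes) <= max_missing


def trials_contributed(trials: Sequence[Sequence[str]]) -> int:
    """Count the trials a child contributes after exclusion."""
    return sum(keep_trial(trial) for trial in trials)
```

Under this reading, a trial with exactly half of its frames missing is kept, because only trials with more than half of the time spent looking away are excluded.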

We also examined the proportion of nonmissing (i.e., image-focused) looking time in the analyzed trials (i.e., those that were not excluded due to excessive missing data). The proportion of image-focused looking time for the children with ASD was .79 in both the Neutral Condition and the Competing Condition. The proportion of image-focused looking time in the analyzed trials for the children with typical development was .86 in the Neutral Condition and .80 in the Competing Condition. A repeated measures ANOVA with missing values as the dependent variable nested within children, and Condition (Neutral vs. Competing), Group (typical development vs. ASD), and their interaction as independent variables showed no significant differences in missing data in the analysis window between Groups, F(1,32) < 0.001, P = 0.990, Conditions, F(1,32) = 0.75, P = 0.393, or their interaction, F(1,32) = 0.12, P = 0.735.

Initial Baseline Analyses

Following standard practice in visual word recognition studies [Fernald et al., 2008], we first examined looking patterns during baseline—the initial 2,900 ms of each trial during which both images were visible but neither had yet been named. We compared baseline looking patterns in each group and each condition to chance (0.50, which would indicate similar proportions of time looking at both images). In Neutral trials, where both images had high salience, we expected children to spend similar proportions of time looking at each image. Consistent with this expectation, relative looking to target during baseline in Neutral trials did not differ significantly from 0.50 for the children with typical development (M = 0.47, SD = 0.07, range = 0.36–0.65), t(16) = −1.36, P = 0.192, or the children with ASD (M = 0.51, SD = 0.08, range = 0.35–0.67), t(16) = 0.47, P = 0.645. This pattern of results suggests that we successfully matched the Neutral items on visual salience.

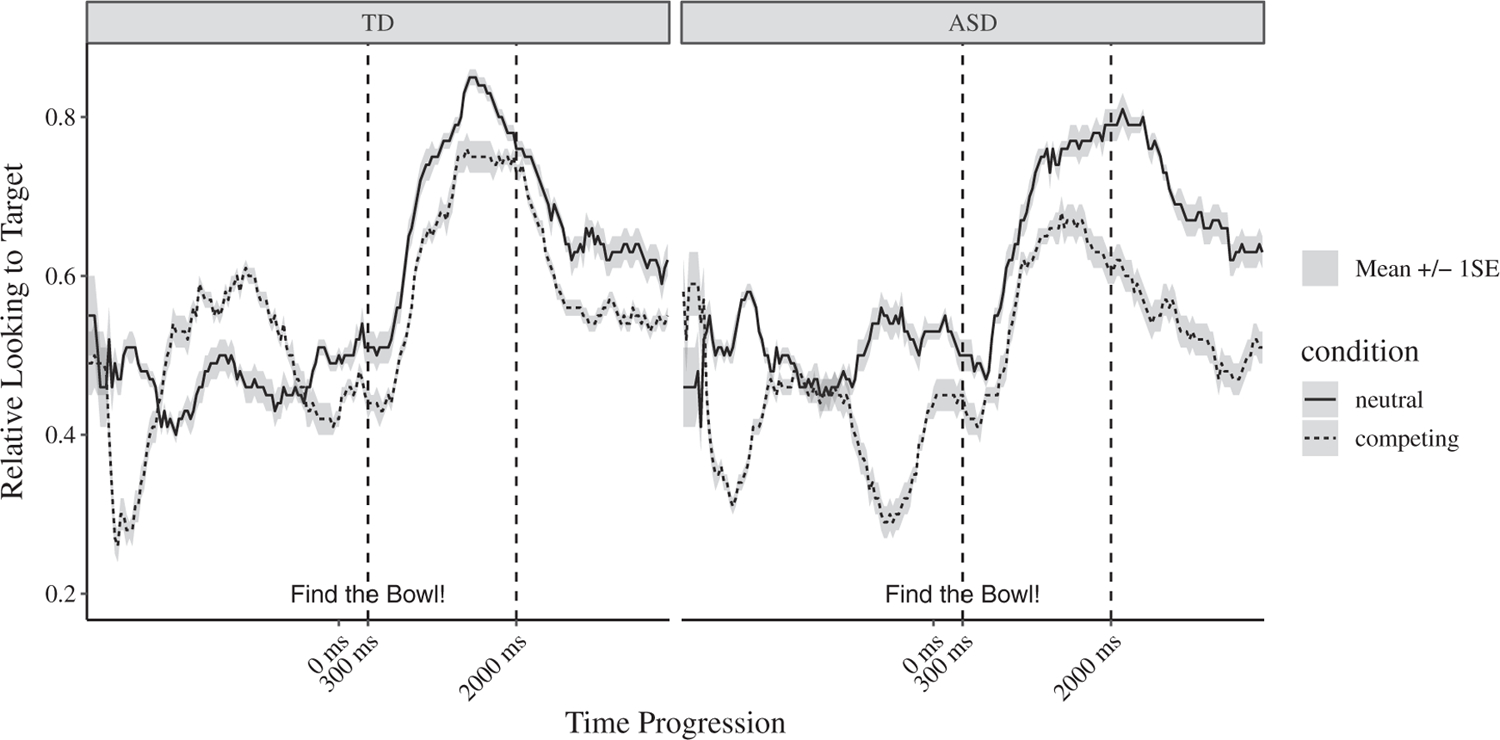

In the Competing condition, we expected children to spend a higher proportion of time looking at the high-salience distracter image than the low-salience target image. Consistent with this expectation, in the ASD group, relative looking to target during baseline (M = 0.41, SD = 0.07, range = 0.27–0.52) was significantly lower than 0.50, t(16) = −5.24, P < 0.001. However, in the children with typical development, relative looking to target during baseline in Competing trials did not differ significantly from 0.50 (M = 0.48, SD = 0.10, range = 0.26–0.62), t(16) = −0.88, P = 0.392. This finding indicated that, despite a strong initial preference for the high-salience distracter during the first second of viewing (see Fig. 2), the children with typical development spent a similar amount of time looking at both images over the baseline period as a whole. We further characterize baseline looking patterns below (see “Exploratory Baseline Analyses”).
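The comparisons to chance reported above are one-sample t-tests against 0.50 with n − 1 = 16 degrees of freedom per group. A minimal sketch of the test statistic, using only the Python standard library, is shown below. The function name and the example values are hypothetical and are not data from the study.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def one_sample_t(values: Sequence[float], chance: float = 0.5) -> Tuple[float, int]:
    """Return (t, df) for a one-sample t-test of the mean against `chance`."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two participants")
    standard_error = stdev(values) / math.sqrt(n)  # stdev uses n - 1
    return (mean(values) - chance) / standard_error, n - 1


# Hypothetical per-child baseline proportions, not data from the study.
example = [0.40, 0.45, 0.38, 0.52, 0.41, 0.36]
t_stat, df = one_sample_t(example)
```

A negative t indicates more looking to the distracter than to the target; the corresponding P value would come from the t distribution with `df` degrees of freedom.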

Figure 2.

Time course of relative looking to target throughout the trial. Relative looking to target = looking to the target image divided by total looking to both target and distracter across participants. TD = children with typical development. ASD = children with autism spectrum disorder. Time 0 ms indicates the onset of the target noun. The dashed lines indicate the analysis window (300–2,000 ms after noun onset).

Results

Figure 2 and Table 2 present mean performance across groups and conditions. Our primary aim was to determine the extent to which competing perceptual salience affected word recognition in young children with ASD and young children with typical development. To address this aim, we first tested whether relative looking to target significantly increased between baseline and the analysis window, which would provide evidence of word recognition in an absolute sense (i.e., that children significantly increased their relative looking to the target image after it was named). We conducted repeated measures ANOVAs with relative looking to target as the dependent variable and Condition (Neutral vs. Competing), Time Window (Baseline vs. Analysis Window), and their interaction as the independent variables. We expected both groups to significantly increase their relative looking to target in both conditions, providing evidence that word recognition had occurred.

Table 2.

Relative Looking to Target Across Groups and Conditions

| | Children with typical development: Mean (SD) | Children with typical development: Range | Children with ASD: Mean (SD) | Children with ASD: Range |
|---|---|---|---|---|
| Neutral condition | | | | |
| Baseline | 0.47 (0.08) | 0.36–0.65 | 0.51 (0.08) | 0.35–0.67 |
| Analysis window | 0.71 (0.13) | 0.38–0.90 | 0.67 (0.12) | 0.43–0.85 |
| Late analysis window | 0.66 (0.15) | 0.33–0.90 | 0.69 (0.17) | 0.41–0.94 |
| Competing condition | | | | |
| Baseline | 0.48 (0.10) | 0.26–0.62 | 0.41 (0.07) | 0.27–0.52 |
| Analysis window | 0.64 (0.12) | 0.34–0.77 | 0.56 (0.15) | 0.18–0.79 |
| Late analysis window | 0.58 (0.11) | 0.44–0.89 | 0.52 (0.19) | 0.29–0.91 |

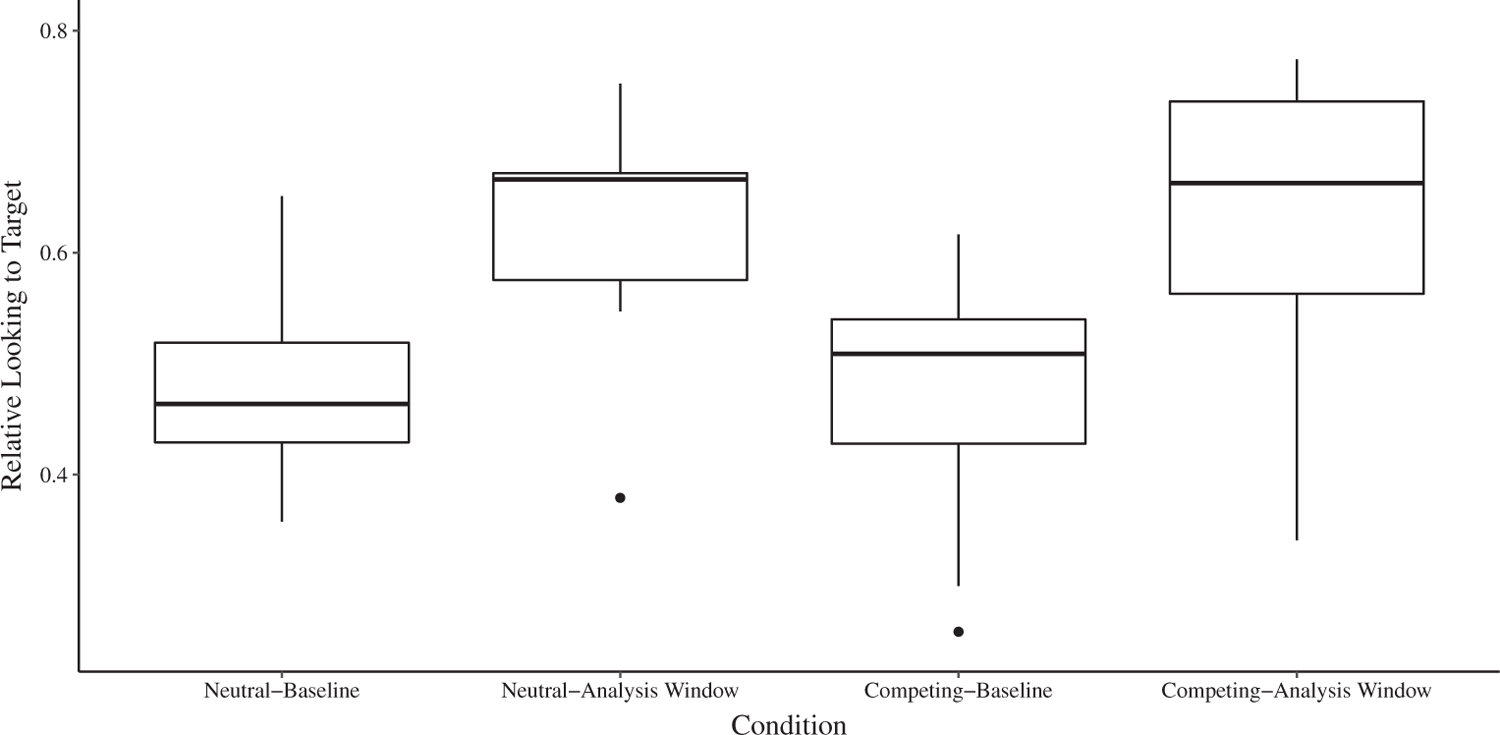

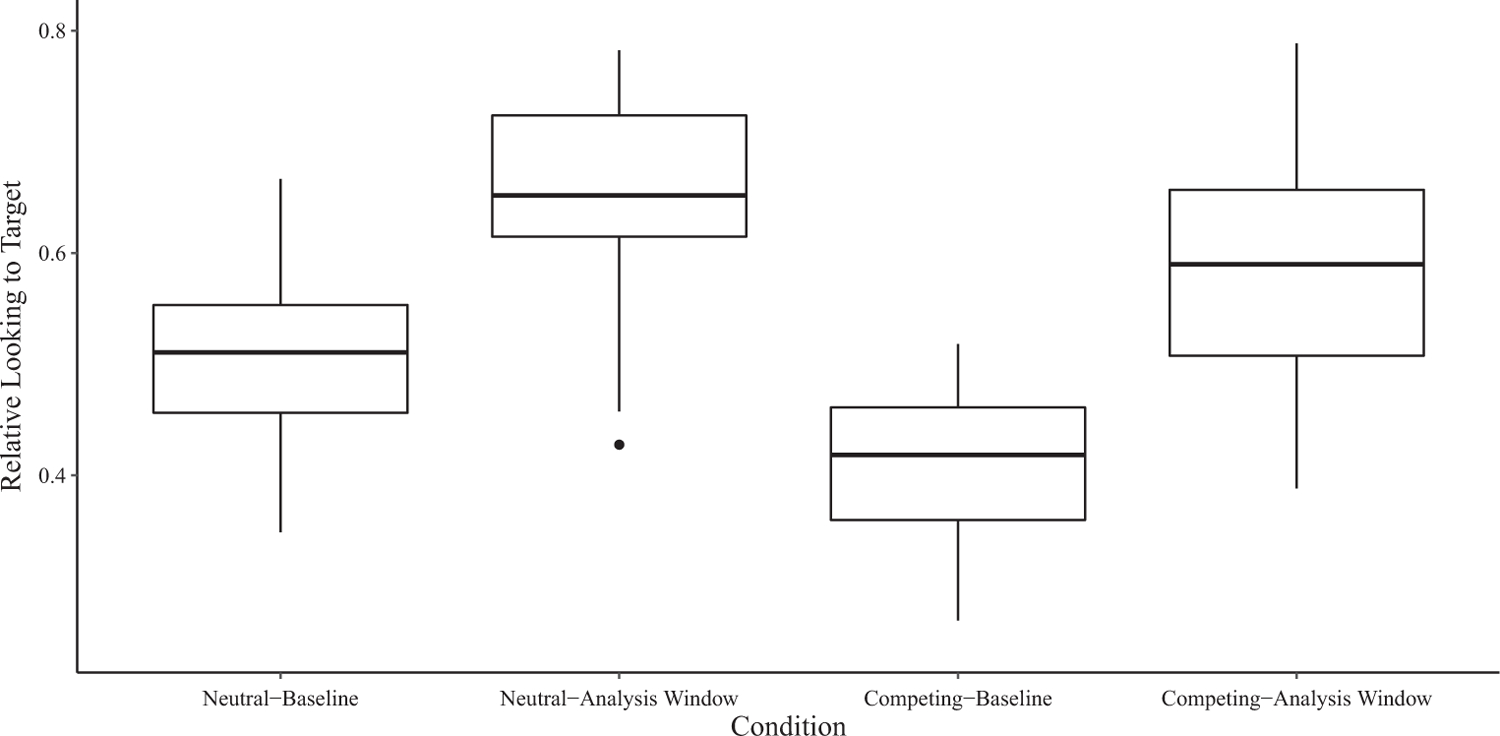

There was a statistically significant main effect of Time Window for the children with typical development, F(1,48) = 62.80, P < 0.001, ηp² = 0.57, and the children with ASD, F(1,48) = 36.88, P < 0.001, ηp² = 0.43, indicating that children with typical development and children with ASD increased their relative looking to target after it was named (compared to baseline), regardless of Condition (see Figs. 3 and 4 and Table 2). In the children with typical development, the main effect of Condition was nonsignificant, F(1,48) = 1.85, P = 0.180, ηp² = 0.04, as was the Condition × Time Window interaction, F(1,48) = 2.48, P = 0.122, ηp² = 0.05. In the children with ASD, the interaction between Condition and Time Window was nonsignificant, F(1,48) = 0.01, P = 0.941, ηp² < 0.001, but there was a statistically significant main effect of Condition, F(1,48) = 16.05, P < 0.001, ηp² = 0.25. These results indicated that the children with ASD spent a significantly lower proportion of time looking at the target object in the Competing Condition than in the Neutral Condition, regardless of time window. Overall, these analyses provided evidence that word recognition had occurred in both groups and both conditions but suggested that the children with ASD were more affected by competing perceptual salience than the children with typical development.
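For readers who want to relate the F statistics to the effect sizes, partial eta squared can be recovered from F and its degrees of freedom through the standard identity SS_effect / (SS_effect + SS_error) = (F × df_effect) / (F × df_effect + df_error). The sketch below assumes the reported effect sizes follow this usual definition; the function name is illustrative.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Main effects of Time Window reported above, both with F(1,48).
print(round(partial_eta_squared(62.80, 1, 48), 2))  # 0.57 (typical development)
print(round(partial_eta_squared(36.88, 1, 48), 2))  # 0.43 (ASD)
```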

Figure 3.

Relative looking to target across the Neutral and Competing Conditions for children with typical development. Baseline was the period before noun onset, and the analysis window was 300–2,000 ms after noun onset. The dark lines represent the median. The upper and lower hinges represent the first and third quartiles (i.e., 25th and 75th percentiles). The whiskers extend to the observed value no more than 1.5 times the distance between the first and third quartiles. Observed values beyond the whiskers are plotted individually.

Figure 4.

Relative looking to target across the Neutral and Competing Conditions for children with ASD. Baseline was the period before noun onset, and the analysis window was 300–2,000 ms after noun onset. The dark lines represent the median. The upper and lower hinges represent the first and third quartiles (i.e., 25th and 75th percentiles). The whiskers extend to the observed value no more than 1.5 times the distance between the first and third quartiles. Observed values beyond the whiskers are plotted individually.

To further quantify the effect of competing perceptual salience on word recognition, we examined relative looking to the target image after it was named in the Competing and Neutral conditions. We conducted a mixed effect repeated measures ANOVA with relative looking to target during the analysis window as the dependent variable, and Group (typical development vs. ASD), Condition (Neutral vs. Competing), and their interaction as the independent variables. Because of the nested data structure, we allowed error terms to correlate across participants and controlled for these. Planned pairwise comparisons were conducted using the Holm-Bonferroni method to correct for multiple comparisons [Holm, 1979].
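The Holm-Bonferroni correction named above is a step-down procedure: the smallest of m P values is multiplied by m, the next smallest by m − 1, and so on, with the adjusted values forced to be nondecreasing and capped at 1. The following sketch illustrates the procedure in general terms; it is not the authors' analysis code, and the function name is hypothetical.

```python
from typing import List, Sequence


def holm_adjust(p_values: Sequence[float]) -> List[float]:
    """Return Holm-Bonferroni adjusted p-values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # smallest p first
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The k-th smallest p-value is multiplied by (m - k + 1), capped at 1.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity so adjusted values never decrease with rank.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted
```

A comparison is declared significant when its adjusted value falls below the chosen alpha level, which controls the familywise error rate while being less conservative than the plain Bonferroni correction.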

There was a significant main effect of Condition, F(1,32) = 14.32, P = 0.001, ηp² = 0.31. The main effect of Group was nonsignificant, F(1,32) = 2.68, P = 0.112, ηp² = 0.18, as was the Group × Condition interaction, F(1,32) = 0.40, P = 0.530, ηp² = 0.01. Planned (i.e., a priori) pairwise comparisons revealed no significant difference in relative looking to target between the Competing and Neutral Condition for the children with typical development during the analysis window (Δ = −0.07, P = 0.134, d = 0.76; see Table 2). In contrast, relative looking to target in the children with ASD was significantly lower during the analysis window in the Competing Condition than in the Neutral Condition (Δ = −0.10, P = 0.019, d = 1.07; see Table 2). Overall, these results demonstrated that competing perceptual salience significantly decreased relative looking to target in the children with ASD, but not in the children with typical development.³

Though we were primarily interested in the initial phase of word recognition immediately after noun onset, we also examined looking patterns during the late analysis window, which began 2 s after noun onset and lasted until the end of the trial (4,900–6,500 ms). The results for the late analysis window mirrored those in the main analysis window. There was a significant main effect of Condition, F(1,32) = 16.11, P < 0.001, ηp² = 0.34. The main effect of Group again was nonsignificant, F(1,32) = 0.10, P = 0.754, ηp² = 0.007, as was the Group × Condition interaction, F(1,32) = 2.35, P = 0.135, ηp² = 0.07. As in the initial analysis window, planned pairwise comparisons revealed no significant difference in relative looking to target between the Competing and Neutral Condition for the children with typical development (Δ = −0.07, P = 0.267, d = 0.60). Relative looking to target in the children with ASD was again significantly lower in the Competing Condition than in the Neutral Condition (Δ = −0.17, P = 0.003, d = 1.35).

Exploratory Baseline Analyses

The time course data (see Fig. 2) and initial baseline analyses (see “Method”) pointed to potential group differences in looking patterns in the Competing Condition during baseline, before either image had been named. To better understand these unexpected differences, we conducted a set of exploratory analyses examining baseline looking patterns. Based on visual inspection of the mean time course data (see Fig. 2), we separated the baseline period into three equal-sized time windows. The first phase lasted from the start of the trial to 1,056 ms; the second phase lasted from 1,056 to 2,112 ms; and the third phase lasted from 2,112 to 3,168 ms. Thus, these three phases approximately represented the first 3 s of the trial, during which no information had yet been provided about the target object.

During the first baseline phase of the Competing Condition, mean relative looking to target was 0.41 in the children with ASD and 0.40 in the children with typical development. Pairwise comparisons revealed no significant difference between groups during the first baseline phase, t(32) = 0.24, P = 0.813. During the second baseline phase, relative looking to target was significantly lower in the children with ASD (M = 0.45) than in the children with typical development (M = 0.55), t(32) = −2.74, P = 0.010. During the third baseline phase, there was a marginal group difference in relative looking to target between the children with ASD (M = 0.36) and the children with typical development (M = 0.46), t(32) = −1.92, P = 0.064.
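The group comparisons above report 32 degrees of freedom, which is consistent with an independent-samples t-test that pools variance across two groups of 17 children (17 + 17 − 2 = 32). The sketch below shows that statistic under the assumption that a pooled-variance (Student's) test was used; the function name is hypothetical.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def pooled_t(group_a: Sequence[float], group_b: Sequence[float]) -> Tuple[float, int]:
    """Return (t, df) for Student's independent-samples t-test (equal variances)."""
    n_a, n_b = len(group_a), len(group_b)
    df = n_a + n_b - 2
    # Weighted average of the two sample variances.
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    standard_error = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(group_a) - mean(group_b)) / standard_error, df
```

Passing the ASD group first yields a negative t when the ASD group looks less at the target than the comparison group, matching the sign convention of the values reported above.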

Discussion

Though research on visual preferences and research on visual word recognition have historically been conducted separately, the current study merged these approaches to examine the interaction between linguistic input and attentional allocation preferences in children with ASD. Our aim was to determine the extent to which competing perceptual salience affects word recognition in young children with ASD and young children with typical development. Consistent with our predictions, children demonstrated evidence of word recognition in an absolute sense across both the Neutral and Competing conditions. However, competing perceptual salience significantly reduced relative looking to target after noun onset only in the ASD group, indicating that the children with ASD were more disrupted by competing perceptual salience than the children with typical development (who were younger but matched on receptive language skills). Consistent with prior work [Amso et al., 2014], these results indicate that attentional allocation preferences in children with ASD were driven more strongly by bottom-up (i.e., salience-driven) than top-down (i.e., language-driven) processes, whereas this was not the case in the children with typical development.

These findings advance our conceptual understanding of the breadth and depth of visual preferences in children with ASD in two key ways. First, these results demonstrate that attentional allocation preferences for perceptual salience persist in children with ASD when they compete with top-down, linguistic information (in addition to social visual information, as shown in previous work; Amso et al., 2014). This finding advances our understanding of the links between attentional allocation preferences and language development in children with ASD. Second, these results demonstrate the existence of attentional allocation preferences for high perceptual salience in young children with ASD, even in the absence of social stimuli. Though the current study was unable to differentiate whether children with ASD were drawn to look at the high-salience objects and/or drawn to look away from the low-salience objects, this finding paves the way for future visual perception-focused studies that examine preferences for specific visual features as a potential biomarker for ASD.

In addition to addressing our primary aim, we conducted exploratory analyses examining the unexpected group differences that emerged in looking patterns during baseline, before either image had been named. We had expected both groups to show increased attention to the high-salience objects during baseline. After all, high-salience objects are likely to attract attention, regardless of ASD diagnosis [Freeth et al., 2011; Sasson et al., 2008; Thorup et al., 2017; Unruh et al., 2016]. As expected, the children with ASD were drawn to high perceptual salience throughout baseline. However, the children with typical development were drawn to the high-salience image only during the first 1,000 ms of the trial (see Fig. 2). In fact, following their initial preference for the high-salience image, the children with typical development immediately increased their looks to the low-salience image, thereby appearing to ensure (either knowingly or unknowingly) that they did not visually explore one image to the exclusion of the other. These differences in baseline looking patterns may indicate general differences in visual exploration and information seeking between the two groups [Amso et al., 2014; Vivanti et al., 2017; Young, Hudry, Trembath, & Vivanti, 2016].

Why did competing perceptual salience affect looking behaviors in the children with ASD more strongly than in the children with typical development? Though we cannot definitively answer this question, previous research points to several possible explanations. High perceptual salience may offer increased reward value in children with ASD [Dawson, Meltzoff, Osterling, Rinaldi, & Brown, 1998; Sasson et al., 2011], which could result in high-salience stimuli strongly attracting attention. Relatedly, differences in attentional control (e.g., inhibition) may have contributed to the increased disruption in the ASD group. For example, the children with ASD may have been less able (or less willing) to inhibit their looks to the high-salience distracter, even though it was not relevant to the spoken language they heard [Sasson et al., 2008; Yurovsky & Frank, 2015]. Another possibility is that the children with ASD had difficulty disengaging their attention from the high-salience object to shift their attention to the named, low-salience object [Sacrey et al., 2014; Venker, 2017]. It is also possible that the children with ASD were less motivated to align their attention with spoken language when they had the opportunity to look at something more visually salient than the target object [Burack et al., 2016].

Regardless of the underlying cause, competing perceptual salience significantly decreased attention to relevant visual stimuli in children with ASD—a scenario that is likely to have cascading negative effects on development. If children do not consistently attend to the intended target at the intended time, they may lose out on opportunities to strengthen existing word-meaning connections and help establish new ones [Kucker et al., 2015; Venker et al., 2018]. In addition, they are less well situated to receive additional semantic information about named objects, including color, function, and shape [Abdelaziz, Kover, Wagner, & Naigles, 2018]. Though we focused on attention to named familiar objects, competing perceptual salience may be even more problematic in word-learning contexts, given evidence that decreased attention to named novel objects can lead to poorer learning in individuals with ASD [Aldaqre et al., 2015; Tenenbaum, Amso, Righi, & Sheinkopf, 2017]. In particular, competing perceptual salience that decreases attention to relevant visual stimuli could prevent children from learning new words, cause word learning to progress more slowly, or—in extreme cases—lead to incorrect learning [Venker et al., 2018].

One limitation of this study was its relatively small sample size, which limits the extent to which the current findings may generalize to the broader population of children with ASD. Though it would have been desirable to include a larger group of children in our analyses, this was not possible because some of the children with ASD in our sample had receptive language skills that were so low that they could not be matched to a child with typical development. In fact, the children with ASD who were excluded had a mean receptive language age equivalent of 12 months (SD = 3). Even though this matching strategy constrained our sample size and, thus, the generalizability of our findings, we considered it to be a necessary first step in understanding attentional allocation preferences in this language-based task. Interpreting group differences would have been challenging (at best) if groups had differed in both diagnostic status and receptive language skills. Despite the relatively small sample sizes, we had adequate power to detect significant group differences. Furthermore, participant samples of this size are not uncommon in ASD research, particularly with group matching [Amso et al., 2014; Kaldy, Kraper, Carter, & Blaser, 2011]. A group matching design may not be ideal for fully characterizing individual differences across the full range of ability levels in children with ASD (as suggested by the nonsignificant results of the correlational analyses). Studies that include a larger, more representative sample are needed to determine whether children with weaker language skills are more disrupted by competing perceptual salience than children with stronger language skills. We predict that this may be the case, particularly since the children with ASD in the current study were those with stronger language skills, and children with weaker language skills may be even more affected by competing perceptual salience.

Another limitation was our inability to isolate the impact of distinct visual features associated with perceptual salience, including color, vibrancy, complexity (i.e., of patterning vs. solid objects), and contrast. We purposefully designed the stimuli to maximize differences in relative salience, using an ecologically valid salience manipulation that represented differences in object color and patterning that occur in everyday life. In doing so, however, multiple visual features that contribute to perceptual salience were confounded. Future studies of visual perception are needed to determine how specific features (e.g., contrast, movement) affect attentional allocation preferences among individuals with and without ASD. Notably, perceptual salience strongly affected looking behaviors in the children with ASD even though the visual stimuli were static. Competing dynamic stimuli may affect looking behaviors even more drastically [Sasson et al., 2008; Unruh et al., 2016].

In terms of clinical implications, the findings of this study emphasize the importance of providing language input that is relevant to the child’s current focus of attention [McDuffie & Yoder, 2010]. Using language to guide children’s attention to relevant aspects of the environment may not be entirely effective [Vivanti, Hocking, Fanning, & Dissanayake, 2016]—particularly when perceptual salience competes with the intended target. Although perceptual salience has a disruptive effect when it competes with relevant information, future studies should also examine ways in which attentional allocation preferences could serve as a springboard for learning [Burack et al., 2016]. Previous work has shown that perceptual salience can also boost attention to aspects of the environment that are relevant for language development [Akechi et al., 2011; Parish-Morris et al., 2007]. High-salience objects may help to establish object interest, play skills, and shared enjoyment in children with ASD. Findings from this and future studies may have implications for designing effective educational environments for children with ASD, including screen-based learning apps, electronic storybooks, and physical classroom and intervention contexts [Fisher, Godwin, & Seltman, 2014; Skibbe, Thompson, & Plavnick, 2018; Takacs & Bus, 2016; Thompson, Plavnick, & Skibbe, 2019]. In this way, attentional allocation preferences may help to support positive developmental outcomes in children with ASD.

Acknowledgments

This work was supported by funding from R01 DC012513 (Ellis Weismer, Saffran, & Edwards, MPIs), R21 DC016102 (Venker, PI), and a core grant to the Waisman Center at the University of Wisconsin-Madison (U54 HD090256). This work would not have been possible without the commitment of the children and families who participated. We offer our sincere thanks to Liz Premo, Tristan Mahr, Jessica Umhoefer, Heidi Sindberg, and Rob Olson for their support in completing this study. The authors also thank Dima Amso for her thoughts on the experimental design.

Footnotes

An automatic eye tracker also recorded information about children’s gaze location during the task. However, we used manually-coded data instead of automatic eye-tracking data based on recent evidence that automatic eye tracking produces significantly higher rates of data loss in children with ASD than manual gaze coding [Venker et al., 2019].

During the initial stages of stimulus preparation, we generated saliency maps using Saliency Toolbox [similar to the approach used by Amso et al., 2014] to contrast the relative visual salience of colorful, geometric objects and less colorful, non-patterned objects. The saliency maps consistently identified colorful, geometric patterned objects as having higher salience than less colorful, non-patterned images. Following this initial validation, we conducted a pilot study [Mathée et al., 2016] of the experimental task with an independent sample of 17 children with typical development (24–26 months old). As predicted, children spent significantly more time looking at colorful, patterned objects than less colorful, non-patterned images, which supported the effectiveness of the salience manipulation. The current study used the same experimental task validated in this pilot work.

Based on a suggestion from reviewers, we examined correlations in both groups between relative looking to target during the analysis window and receptive language skills (PLS-5 Auditory Comprehension Growth Scale Value, a measure of raw scores transformed to an equal-interval scale). In the children with typical development, the correlation between receptive language and relative looking to target was significant in the Competing Condition (r = 0.59, P = 0.013) but not in the Neutral Condition (r = 0.37, P = 0.147). In the children with ASD, the correlation between receptive language and relative looking to target was not significant in either the Neutral Condition (r = 0.41, P = 0.103) or the Competing Condition (r = 0.41, P = 0.102), despite an overall positive relationship between the two variables. These results should be interpreted with caution because the receptive language-matched subgroups in this study (n = 17 per group) were selected to examine potential differences in group performance, not to characterize individual differences in the ASD group as a whole.

Supporting Information

Additional supporting information may be found online in the Supporting Information section at the end of the article.

Appendix S1: Supporting Information

Contributor Information

Courtney E. Venker, Department of Communicative Sciences and Disorders, Michigan State University, East Lansing, Michigan, USA.

Janine Mathée, Waisman Center and Department of Communication Sciences and Disorders, University of Wisconsin-Madison, Madison, Wisconsin, USA.

Dominik Neumann, College of Communication Arts and Sciences, Michigan State University, East Lansing, Michigan, USA.

Jan Edwards, Waisman Center and Department of Communication Sciences and Disorders, University of Wisconsin-Madison, Madison, Wisconsin, USA; Department of Hearing and Speech Sciences, Maryland Language Science Center, University of Maryland, College Park, Maryland, USA.

Jenny Saffran, Waisman Center and Department of Psychology, University of Wisconsin-Madison, Madison, Wisconsin, USA.

Susan Ellis Weismer, Waisman Center and Department of Communication Sciences and Disorders, University of Wisconsin-Madison, Madison, Wisconsin, USA.

References

- Abdelaziz A, Kover ST, Wagner M, & Naigles LR (2018). The shape bias in children with autism spectrum disorder: Potential sources of individual differences. Journal of Speech, Language, and Hearing Research, 61(11), 2685–2702. 10.1044/2018_JSLHR-L-RSAUT-18-0027 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Adamson LB, Deckner DF, & Bakeman R (2010). Early interests and joint engagement in typical development, autism, and Down syndrome. Journal of Autism and Developmental Disorders, 40(6), 665–676. 10.1007/s10803-009-0914-1 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Akechi H, Senju A, Kikuchi Y, Tojo Y, Osanai H, & Hasegawa T (2011). Do children with ASD use referential gaze to learn the name of an object? An eye-tracking study. Research in Autism Spectrum Disorders, 5(3), 1230–1242. 10.1016/j.rasd.2011.01.013 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Aldaqre I, Paulus M, & Sodian B (2015). Referential gaze and word learning in adults with autism. Autism, 19(8), 944–955. 10.1177/1362361314556784 [DOI] [PubMed] [Google Scholar]

- American Psychiatric Association. (2013). Diagnostic and statistical manual of mental disorders (5th ed.). Washington, DC: American Psychiatric Association. [Google Scholar]

- Ames C, & Fletcher-Watson S (2010). A review of methods in the study of attention in autism. Developmental Review, 30 (1), 52–73. 10.1016/j.dr.2009.12.003 [DOI] [Google Scholar]

- Amso D, Haas S, Tenenbaum E, Markant J, & Sheinkopf SJ (2014). Bottom-up attention orienting in young children with autism. Journal of Autism and Developmental Disorders, 44, 664–673. 10.1007/s10803-013-1925-5 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Antezana L, Factor RS, Condy EE, Strege MV, Scarpa A, & Richey JA (2019). Gender differences in restricted and repetitive behaviors and interests in youth with autism. Autism Research, 12(2), 274–283. 10.1002/aur.2049 [DOI] [PubMed] [Google Scholar]

- Aslin RN (2007). What’s in a look? Developmental Science, 10, 48–53. 10.1016/j.biotechadv.2011.08.021. Secreted [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bardi L, Regolin L, & Simion F (2011). Biological motion preference in humans at birth: role of dynamic and configural properties. Developmental Science, 14(2), 353–359. 10.1111/j.1467-7687.2010.00985.x [DOI] [PubMed] [Google Scholar]

- Bavin EL, Kidd E, Prendergast LA, & Baker EK (2016). Young children with ASD use lexical and referential information during on-line sentence processing. Frontiers in Psychology, 7, 1–12. 10.3389/fpsyg.2016.00171 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bedford R, Pickles A, Gliga T, Elsabbagh M, Charman T, & Johnson MH (2014). Additive effects of social and non-social attention during infancy relate to later autism spectrum disorder. Developmental Science, 17(4), 612–620. 10.1111/desc.12139 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bishop SL, Guthrie W, Coffing M, & Lord C (2011). Convergent validity of the Mullen scales of early learning and the differential ability scales in children with autism spectrum disorders. American Journal on Intellectual and Developmental Disabilities, 116, 331–343. 10.1352/1944-7558-116.5.331 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bottema-Beutel K (2016). Associations between joint attention and language in autism spectrum disorder and typical development: A systematic review and meta-regression analysis. Autism Research, 9, 1021–1035. 10.1002/aur.1624 [DOI] [PubMed] [Google Scholar]

- Brooks R, & Meltzoff AN (2005). The development of gaze following and its relation to language. Developmental Science, 8, 535–543. 10.1111/j.1467-7687.2005.00445.x [DOI] [PMC free article] [PubMed] [Google Scholar]

- Burack JA, Russo N, Kovshoff H, Palma Fernandes T, Ringo J, Landry O, & Iarocci G (2016). How I attend—Not how well do I attend: Rethinking developmental frameworks of attention and cognition in autism spectrum disorder and typical development. Journal of Cognition and Development, 17 (4), 553–567. 10.1080/15248372.2016.1197226 [DOI] [Google Scholar]

- Chawarska K, Macari S, & Shic F (2013). Decreased spontaneous attention to social scenes in 6-month-old infants later diagnosed with autism spectrum disorders. Biological Psychiatry, 74(3), 195–203. 10.1016/j.biopsych.2012.11.022 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Connor CE, Egeth HE, & Yantis S (2004). Visual attention: Bottom-up versus top-down. Current Biology, 14(19), R850–R852). Cell Press. 10.1016/j.cub.2004.09.041 [DOI] [PubMed] [Google Scholar]

- Dawson G, Meltzoff AN, Osterling J, Rinaldi J, & Brown E (1998). Children with autism fail to orient to naturally occurring social stimuli. Journal of Autism and Developmental Disorders, 28(6), 479–485. http://www.ncbi.nlm.nih.gov/pubmed/9932234 [DOI] [PubMed] [Google Scholar]

- Ellis Weismer S, Haebig E, Edwards J, Saffran J, & Venker CE (2016). Lexical processing in toddlers with ASD: Does weak central coherence play a role? Journal of Autism and Developmental Disorders, 46, 1–15. 10.1007/s10803-016-2926-y

- Falck-Ytter T, Bölte S, & Gredebäck G (2013). Eye tracking in early autism research. Journal of Neurodevelopmental Disorders, 5, 1–11. 10.1186/1866-1955-5-28

- Farroni T, Csibra G, Simion F, & Johnson MH (2002). Eye contact detection in humans from birth. Proceedings of the National Academy of Sciences of the United States of America, 99(14), 9602–9605. 10.1073/pnas.152159999

- Fernald A, & Marchman VA (2012). Individual differences in lexical processing at 18 months predict vocabulary growth in typically developing and late-talking toddlers. Child Development, 83, 203–222. 10.1111/j.1467-8624.2011.01692.x

- Fernald A, Perfors A, & Marchman VA (2006). Picking up speed in understanding: Speech processing efficiency and vocabulary growth across the 2nd year. Developmental Psychology, 42(1), 98–116. 10.1037/0012-1649.42.1.98

- Fernald A, Thorpe K, & Marchman VA (2010). Blue car, red car: Developing efficiency in online interpretation of adjective–noun phrases. Cognitive Psychology, 60, 190–217. 10.1016/j.cogpsych.2009.12.002

- Fernald A, Zangl R, Portillo AL, & Marchman VA (2008). Looking while listening: Using eye movements to monitor spoken language comprehension by infants and young children. In Sekerina IA, Fernandez E, & Clahsen H (Eds.), Developmental Psycholinguistics: On-line methods in children’s language processing (pp. 97–135). Amsterdam: John Benjamins.

- Fischer J, Smith H, Martinez-Pedraza F, Carter AS, Kanwisher N, & Kaldy Z (2015). Unimpaired attentional disengagement in toddlers with autism spectrum disorder. Developmental Science, 19, 1095–1103. 10.1111/desc.12386

- Fisher AV, Godwin KE, & Seltman H (2014). Visual environment, attention allocation, and learning in young children: When too much of a good thing may be bad. Psychological Science, 25, 1362–1370. 10.1177/0956797614533801

- Freeth M, Foulsham T, & Chapman P (2011). The influence of visual saliency on fixation patterns in individuals with autism spectrum disorders. Neuropsychologia, 49(1), 156–160. 10.1016/j.neuropsychologia.2010.11.012

- Golinkoff RM, Ma W, Song L, & Hirsh-Pasek K (2013). Twenty-five years using the intermodal preferential looking paradigm to study language acquisition: What have we learned? Perspectives on Psychological Science, 8(3), 316–339. 10.1177/1745691613484936

- Goodwin A, Fein D, & Naigles LR (2012). Comprehension of wh-questions precedes their production in typical development and autism spectrum disorders. Autism Research, 5(2), 109–123. 10.1002/aur.1220

- Goren CC, Sarty M, & Wu PYK (1975). Visual following and pattern discrimination of face-like stimuli by newborn infants. Pediatrics, 56(4), 544–549.

- Hollich G, Golinkoff RM, & Hirsh-Pasek K (2007). Young children associate novel words with complex objects rather than salient parts. Developmental Psychology, 43(5), 1051–1061. 10.1037/0012-1649.43.5.1051

- Hollich G, Hirsh-Pasek K, Golinkoff RM, Brand RJ, Brown E, Chung HL, … Bloom L (2000). Breaking the language barrier: An emergentist coalition model for the origins of word learning. Monographs of the Society for Research in Child Development, 65, 1–135.

- Holm S (1979). A simple sequentially rejective multiple test procedure. Scandinavian Journal of Statistics, 6(2), 65–70.

- Hood BM, & Atkinson J (1993). Disengaging visual attention in the infant and adult. Infant Behavior and Development, 16(4), 405–422.

- Johnson MH, Posner MI, & Rothbart MK (1991). Components of visual orienting in early infancy: Contingency learning, anticipatory looking, and disengaging. Journal of Cognitive Neuroscience, 3, 335–344. 10.1162/jocn.1991.3.4.335

- Kaldy Z, Kraper C, Carter AS, & Blaser E (2011). Toddlers with autism spectrum disorder are more successful at visual search than typically developing toddlers. Developmental Science, 14, 980–988. 10.1111/j.1467-7687.2011.01053.x

- Kasari C, Sigman M, Mundy P, & Yirmiya N (1990). Affective sharing in the context of joint attention interactions of normal, autistic, and mentally retarded children. Journal of Autism and Developmental Disorders, 20, 87–100. 10.1007/BF02206859

- Keehn B, Muller R-A, & Townsend J (2013). Atypical attentional networks and the emergence of autism. Neuroscience & Biobehavioral Reviews, 37, 164–183. 10.1016/j.neubiorev.2012.11.014

- Klin A, Lin DJ, Gorrindo P, Ramsay G, & Jones W (2009). Two-year-olds with autism orient to non-social contingencies rather than biological motion. Nature, 459(7244), 257–261. 10.1038/nature07868

- Kucker SC, McMurray B, & Samuelson LK (2015). Slowing down fast mapping: Redefining the dynamics of word learning. Child Development Perspectives, 9, 74–78. 10.1111/cdep.12110

- Landry R, & Bryson SE (2004). Impaired disengagement of attention in young children with autism. Journal of Child Psychology and Psychiatry, 45(6), 1115–1122. 10.1111/j.1469-7610.2004.00304.x

- Lord C, Luyster R, Gotham K, & Guthrie W (2012). Autism diagnostic observation schedule, second edition (ADOS-2) manual (part 2): Toddler module. Torrance, CA: Western Psychological Services.

- Lord C, Rutter M, DiLavore PC, Risi S, Gotham K, & Bishop S (2012). Autism diagnostic observation schedule, second edition (ADOS-2) manual (part 1): Modules 1–4. Western Psychological Services.

- Maekawa T, Tobimatsu S, Inada N, Oribe N, Onitsuka T, Kanba S, & Kamio Y (2011). Top-down and bottom-up visual information processing of non-social stimuli in high-functioning autism spectrum disorder. Research in Autism Spectrum Disorders, 5(1), 201–209. 10.1016/j.rasd.2010.03.012

- Mahr T, McMillan BTM, Saffran JR, Ellis Weismer S, & Edwards J (2015). Anticipatory coarticulation facilitates word recognition in toddlers. Cognition, 142, 345–350. 10.1016/j.cognition.2015.05.009

- Marchman VA, & Fernald A (2008). Speed of word recognition and vocabulary knowledge predict cognitive and language outcomes in later childhood. Developmental Science, 11, F9–F16. 10.1111/j.1467-7687.2008.00671.x

- Mathée J, Venker CE, & Saffran J (2016). Visual Attention and Lexical Processing in Infants. University of Wisconsin-Madison Undergraduate Symposium.

- McDuffie A, & Yoder P (2010). Types of parent verbal responsiveness that predict language in young children with autism spectrum disorder. Journal of Speech, Language, and Hearing Research, 53, 1026–1039. 10.1044/1092-4388(2009/09-0023)

- Moore A, Wozniak M, Yousef A, Barnes CC, Cha D, Courchesne E, & Pierce K (2018). The geometric preference subtype in ASD: Identifying a consistent, early-emerging phenomenon through eye tracking. Molecular Autism, 9(1), 1–13. 10.1186/s13229-018-0202-z

- Moore C, Angelopoulos M, & Bennett P (1999). Word learning in the context of referential and salience cues. Developmental Psychology, 35, 60–68.

- Mullen EM (1995). Mullen scales of early learning. Minneapolis, MN: AGS.

- Mundy P, & Gomes A (1998). Individual differences in joint attention skill development in the second year. Infant Behavior and Development, 21, 469–482. 10.1016/S0163-6383(98)90020-0

- Mundy P, & Jarrold W (2010). Infant joint attention, neural networks and social cognition. Neural Networks, 23, 985–997. 10.1016/j.neunet.2010.08.009

- Mundy P, Sigman M, & Kasari C (1990). A longitudinal study of joint attention and language development in autistic children. Journal of Autism and Developmental Disorders, 20, 115–128. 10.1007/BF02206861

- Mutreja R, Craig C, & O’Boyle MW (2015). Attentional network deficits in children with autism spectrum disorder. Developmental Neurorehabilitation, 19, 1–9. 10.3109/17518423.2015.1017663

- Papageorgiou KA, Farroni T, Johnson MH, Smith TJ, & Ronald A (2015). Individual differences in newborn visual attention associate with temperament and behavioral difficulties in later childhood. Scientific Reports, 5, 11264. 10.1038/srep11264

- Parish-Morris J, Hennon EA, Hirsh-Pasek K, Golinkoff RM, & Tager-Flusberg H (2007). Children with autism illuminate the role of social intention in word learning. Child Development, 78, 1265–1287. 10.1111/j.1467-8624.2007.01065.x

- Patten E, & Watson LR (2011). Interventions targeting attention in young children with autism. American Journal of Speech-Language Pathology, 20(1), 60–69. 10.1044/1058-0360(2010/09-0081)

- Pierce K, Conant D, Hazin R, Stoner R, & Desmond J (2011). Preference for geometric patterns early in life as a risk factor for autism. Archives of General Psychiatry, 68, 101–109. 10.1001/archgenpsychiatry.2010.113

- Pierce K, Marinero S, Hazin R, McKenna B, Barnes CC, & Malige A (2016). Eye-tracking reveals abnormal visual preference for geometric images as an early biomarker of an ASD subtype associated with increased symptom severity. Biological Psychiatry, 79, 657–666. 10.1016/j.biopsych.2015.03.032

- Pomper R, Ellis Weismer S, Saffran J, & Edwards J (2019). Specificity of phonological representations for children with autism spectrum disorder. Journal of Autism and Developmental Disorders, 49(8), 3351–3363. 10.1007/s10803-019-04054-5

- Pomper R, & Saffran JR (2018). Familiar object salience affects novel word learning. Child Development, 00(0), 1–17. 10.1111/cdev.13053

- Pruden SM, Hirsh-Pasek K, Golinkoff RM, & Hennon EA (2006). The birth of words: Ten-month-olds learn words through perceptual salience. Child Development, 77, 266–280. 10.1111/j.1467-8624.2006.00869.x

- Robins DL, Casagrande K, Barton M, Chen C-MA, Dumont-Mathieu T, & Fein D (2014). Validation of the modified checklist for autism in toddlers, revised with follow-up (M-CHAT-R/F). Pediatrics, 133(1), 37–45. 10.1542/peds.2013-1813

- Rutter M, LeCouteur A, & Lord C (2003). Autism diagnostic interview-revised. Los Angeles: Western Psychological Services.

- Sacrey L-AR, Armstrong VL, Bryson SE, & Zwaigenbaum L (2014). Impairments to visual disengagement in autism spectrum disorder: A review of experimental studies from infancy to adulthood. Neuroscience & Biobehavioral Reviews, 47, 559–577. 10.1016/j.neubiorev.2014.10.011

- Sacrey L-AR, Bryson SE, & Zwaigenbaum L (2013). Prospective examination of visual attention during play in infants at high-risk for autism spectrum disorder: A longitudinal study from 6 to 36 months of age. Behavioural Brain Research, 256, 441–450.

- Sasson NJ, & Elison JT (2012). Eye tracking young children with autism. Journal of Visualized Experiments: JoVE, 61, 1–5. 10.3791/3675

- Sasson NJ, Elison JT, Turner-Brown LM, Dichter GS, & Bodfish JW (2011). Brief report: Circumscribed attention in young children with autism. Journal of Autism and Developmental Disorders, 41, 242–247. 10.1007/s10803-010-1038-3

- Sasson NJ, Turner-Brown LM, Holtzclaw TN, Lam KSL, & Bodfish JW (2008). Children with autism demonstrate circumscribed attention during passive viewing of complex social and nonsocial picture arrays. Autism Research, 1, 31–42. 10.1002/aur.4

- Scerif G (2010). Attention trajectories, mechanisms and outcomes: At the interface between developing cognition and environment. Developmental Science, 13(6), 805–812. 10.1111/j.1467-7687.2010.01013.x

- Shi L, Zhou Y, Ou J, Gong J, Wang S, Cui X, … Luo X (2015). Different visual preference patterns in response to simple and complex dynamic social stimuli in preschool-aged children with autism spectrum disorders. PLoS One, 10(3), e0122280. 10.1371/journal.pone.0122280