Abstract

The neuropsychological test is an essential tool for assessing cognitive and functional changes associated with late‐life neurocognitive disorders. Despite its utility, the brain‐wide neural basis of test performance remains unclear. Using a predictive modeling approach, we aimed to identify the optimal combination of functional connectivities that predicts neuropsychological test scores of novel individuals. Resting‐state functional connectivity and neuropsychological tests included in the OASIS‐3 dataset (n = 428) were used to train the predictive models, and the identified models were iteratively applied to the holdout internal test set (n = 216) and an external test set (KSHAP, n = 151). We found that the connectivity‐based predicted scores tracked the actual behavioral test scores (r = 0.08–0.44). The predictive models utilizing most of the connectivity features showed better accuracy than those composed of focal connectivity features, suggesting that the neural basis of test performance is largely distributed across multiple brain systems. The discriminant and clinical validity of the predictive models were further assessed. Our results suggest that late‐life neuropsychological test performance can be formally characterized with distributed connectome‐based predictive models, and that further translational evidence is needed when developing theoretically valid and clinically incremental predictive models.

Keywords: brain connectomics, cognitive aging, dementia, machine learning, neuropsychological test, predictive modeling

1. INTRODUCTION

The neuropsychological test has a distinct role in detecting and monitoring cognitive and functional changes associated with dementia‐associated diseases (Fields, Ferman, Boeve, & Smith, 2011). Neuropsychological tests systematically describe the abilities to perform given cognitive tasks and evaluate how an individual will cope with daily‐life functional activities (Donders, 2019; Fields et al., 2010). Thus, neuropsychological tests not only detect the presence of clinical impairment but also provide prognostically useful information in late‐life neurodegenerative disease (Belleville, Fouquet, Duchesne, Collins, & Hudon, 2014).

Despite strong evidence of the clinical utility of neuropsychological tests, however, it is unclear what neurobiological information the test scores provide. While the progression of the pathophysiological process of dementia strongly affects neuropsychological function (Jack et al., 2019; Mortamais et al., 2017), a large amount of variance is left unexplained even with multimodal biomarker information (Arenaza‐Urquijo & Vemuri, 2018; Habeck et al., 2016; Vemuri et al., 2011). Moreover, it is hard to predict whether the presence of pathophysiological markers directly leads to neural dysfunction within the behaviorally relevant regions (Hohman et al., 2016). These discordances suggest the need for narrowing down the explanatory gap between brain function and behavioral impairment that are relevant to neuropsychological tests.

To elucidate the underlying neural basis of neuropsychological tests, previous studies have examined the regional brain structures and functions most strongly associated with behavioral performance (Bayram, Caldwell, & Banks, 2018; Genon, Reid, Langner, Amunts, & Eickhoff, 2018; Mortamais et al., 2017). However, these studies typically restricted the region of interest to a focal brain structure (e.g., hippocampus) or averaged brain‐wide measures into fewer predictors (Charroud et al., 2016; Duchek et al., 2013; Shaw, Schultz, Sperling, & Hedden, 2015; Van Petten, 2004). While pinpointing or averaging the candidate neural correlates makes the theoretical account succinct, it is hard to discern whether the focally identified brain correlates are an optimal and exclusively relevant unit for explaining individual differences in cognitive function. Moreover, exploring multiple neural correlates with repeated univariate tests leads to excessively conservative thresholding, and selective reports of focal correlates tend not to replicate in other datasets (Masouleh, Eickhoff, Hoffstaedter, & Genon, 2019). Such a limited scope of neural features may hinder the valid identification of replicable neural correlates.

Advances in individualized neuroimaging and machine learning techniques have enabled characterization of the multivariate nature of the brain–behavior relationship (Bzdok & Ioannidis, 2019; Dubois & Adolphs, 2016; Jollans & Whelan, 2018). By combining both approaches, it has become possible to find the optimal balance between an overly simplified theory‐driven model and a complex data‐driven model of the brain–behavior relationship. More specifically, individuals' detailed profiles of functional connectivity (FC) patterns can not only classify clinical diagnosis but also predict continuously measured behavioral traits. Accumulating evidence suggests that behavioral individual differences relevant to characterizing risks of neuropsychiatric disorders, including attention task performance, personality, intelligence, and neuropsychological performance, can be robustly predicted from functional connectivity patterns (Dubois, Galdi, Paul, & Adolphs, 2018; Lin et al., 2018; Nostro et al., 2018; Rosenberg, Hsu, Scheinost, Todd Constable, & Chun, 2018; Sui et al., 2018). Moreover, because it is feasible to acquire, resting‐state FC has been highlighted as a promising approach for investigating the neural basis of brain aging and preclinical dementia mechanisms (Ferreira & Busatto, 2013; Sala‐Llonch, Bartrés‐Faz, & Junqué, 2015; Sheline & Raichle, 2013). Functional features such as FC may better explain whether an individual will undergo significant behavioral impairment when the location and presence of neuropathology cannot fully account for such individual differences (Fox, 2018; Siegel et al., 2016).

In the current study, we aimed to identify the underlying brain connectivity basis of neuropsychological test performance in the older adult population. Connectome‐based predictive modeling combined with a regularized regression technique was applied to examine the optimal combinations of neural features required for accurate prediction. We hypothesized that a widespread set of FC features are predictive of neuropsychological performance in novel individuals. In addition, we further tested whether the identified predictive models generalize to an external dataset composed of heterogeneous demographic characteristics (Dwyer, Falkai, & Koutsouleris, 2018; Woo, Chang, Lindquist, & Wager, 2017). While several studies have identified the predictive pattern of neuropsychological test performance, previous attempts mostly lacked evidence of external validity. Most of the reported prediction accuracies are confined to internal cross‐validation, which tests the generalizability of a model only within a homogeneous dataset. If the predictive model captures a large amount of variance unique to the given dataset (e.g., populational characteristics, MRI protocols), the theoretical reliability of the predictive model will be limited.

Finally, we aimed to examine the discriminant and clinical validity of the connectome‐based predictive models. Although neuropsychological test performances are highly correlated with each other, each test represents a theoretically distinct domain of cognitive function (Park et al., 2012). Discriminant validity can be tested based on how selectively the predictive models correlate with the specific construct of cognitive function (Habeck et al., 2015; Woo et al., 2017). The predictive model of cognitive function can either be confined to the targeted score or generalize indistinctly to other test scores (Avery et al., 2019; Jangraw et al., 2018; Rosenberg et al., 2015). Clinical validity, on the other hand, can be tested by examining whether neurally predicted scores from the connectome provide incremental clinical information beyond the actual behavioral score (Jollans & Whelan, 2016; Moons et al., 2012). If the connectome‐based prediction model only captures redundant information from the original behavioral score, the neurally expected score will not additively explain the severity of functional impairment relevant to dementia.

2. METHODS AND MATERIALS

2.1. Participants

Neuropsychological tests and neuroimaging datasets shared in the Open Access Series of Imaging Studies (OASIS‐3) were used to test the validity of the connectome‐based predictive models. The OASIS‐3 dataset shares clinical, neuropsychological, neuroimaging, and biomarker data of 1,098 participants (age range: 42–95; www.oasis-brain.org; LaMontagne et al., 2018). We analyzed the initial baseline data of 644 participants who completed both neuropsychological tests and MRI scans. Participants with incomplete MRI scans (without a T1 structural image and two sessions of resting fMRI) (n = 366), excessive head movement (n = 37), or incomplete neuropsychological tests at baseline (n = 51) were excluded from the analysis. Descriptive statistics are provided based on the CDR score (Clinical Dementia Rating; Table 1). The CDR is a semi‐structured interview developed to provide a global rating of dementia severity, and it is useful for staging and tracking decline in AD (Fillenbaum, Peterson, & Morris, 1996; J. C. Morris et al., 1997; J. C. Morris, 1997). Each CDR score represents a level of functional impairment (0 = no impairment, 0.5 = questionable impairment, 1 = mild impairment, 2 = moderate impairment) and provides a summarized estimate of dementia severity (Marcus et al., 2007; J. Morris, 1993).

TABLE 1.

Descriptive statistics of OASIS‐3 (internal validation set, n = 644) and KSHAP (external validation set, n = 151)

| Mean ± SD (range) | OASIS‐3: Not impaired (n = 436) | OASIS‐3: Very mild impairment (n = 169) | OASIS‐3: Mild–moderate impairment (n = 39) | KSHAP (n = 151) |
|---|---|---|---|---|
| Age | 70.9 ± 6.52 (46–92) | 72.2 ± 6.87 (50–88) | 76.1 ± 8.79 (60–96) | 71.7 ± 6.56 (59–93) |
| Sex (female:male) | 239:197 | 72:97 | 16:23 | 96:55 |
| Years of education | 15.8 ± 2.67 (8–29) | 15.2 ± 2.85 (7–23) | 14.8 ± 3.34 (8–20) | 7.2 ± 4.12 (0–23) |
| MMSE (a) / MMSE‐DS | 28.9 ± 1.29 (21–30) | 27.0 ± 2.72 (18–30) | 24.0 ± 3.29 (18–30) | 27.0 ± 2.14 (21–30) |
| CDR | 0 | 0.5 | 1.04 ± 0.21 | 0.02 ± 0.11 |
| CDR‐SOB (b) | 0.02 ± 0.12 | 1.73 ± 1.02 | 5.35 ± 1.54 | |

Note: Descriptive statistics of the OASIS‐3 dataset are presented by CDR global score (0 = no impairment, 0.5 = questionable impairment, 1 = mild impairment, 2 = moderate impairment), which provides a summarized estimate of dementia severity.

Abbreviations: CDR, Clinical Dementia Rating global score; CDR‐SOB, Clinical Dementia Rating Sum of Boxes; MMSE, Mini‐Mental Status Examination; MMSE‐DS, Mini‐Mental Status Examination Dementia Screen.

Missing value omitted (Not impaired: 1).

Missing value omitted (Not impaired: 11, Very Mild Dementia: 7). MMSE‐DS for external validation dataset.

To examine whether the predictive model generalizes robustly to a demographically heterogeneous dataset, participants subsampled from the Korean Social Life, Health, and Aging Project (KSHAP) were used as an external validation dataset (J. Lee et al., 2014; Youm et al., 2014). KSHAP participants who completed a neuropsychological assessment, psychosocial surveys, and neuroimaging scans were included in the study. The following exclusion criteria were applied: the presence of psychiatric or neurological disorders, vision or hearing problems, metal in the body that cannot be removed, a history of losing consciousness due to head trauma, or excessive head movement during scans. Furthermore, older adults who were highly suspected of dementia were screened out based on the age‐ and education‐stratified norm (< −1.5 SD) of the Mini‐Mental State Examination for Dementia Screening (MMSE‐DS) (Han et al., 2010). The CDR score ranged from 0 to 0.5, including seven participants with very mild functional impairment (CDR = 0.5, n = 7). Among participants who completed both neuropsychological tests and neuroimaging scans, the final data were composed of 151 subjects who did not meet any of the exclusion criteria (Table 1). The study was approved by the Institutional Review Boards of Seoul National University and Yonsei University. All participants provided written informed consent to the research procedures.

2.2. Neuropsychological test

2.2.1. OASIS‐3 neuropsychological test

OASIS‐3 includes the Neuropsychological Battery of the Uniform Data Set (UDSNB), developed by the Alzheimer's Disease Centers (ADC) to establish unified and standardized data collection (Weintraub et al., 2009). Data from 10 neuropsychological tests measuring attention/working memory, executive function, processing speed, language, and episodic memory are currently shared (https://central.xnat.org).

Digit Span Forward (DIGI‐F) and Digit Span Backward (DIGI‐B) were used to assess attention and working memory function with the subtests included in the Wechsler Memory Scale (WMS‐R) (Wechsler, 1987b). Total correct trials from 2 to 7 (backward) or 3 to 8 (forward) length of digits were counted after a verbal presentation of digit numbers. Category fluency for animals (FLU‐ANI) and vegetables (FLU‐VEG) was measured as the total number of words generated in 1 min (Morris et al., 1989). Trail Making Test Part A (TRAIL‐A) consisted of consecutively numbered circles arranged randomly on a sheet of paper. Participants were asked to draw a line between the circles in ascending order as quickly as possible. In Trail Making Test Part B (TRAIL‐B), participants were asked to connect numbers (in ascending order) and letters in an alternating way (1‐A‐2‐B‐…; Reitan & Wolfson, 1993). If the subject could not complete the sample item for each part or exceeded the time limit, a maximum time score was assigned (TRAIL‐A: 150 s, TRAIL‐B: 300 s). Total time (s) to complete the lines was log‐transformed and inverted to adjust for high skewness and to indicate the same performance direction as the other tests. Digit Symbol Coding from WAIS‐R (Wechsler, 1987a) was administered in the standard way, with the total number of items completed correctly in 90 s as the total score. Episodic memory function was assessed with Logical Memory (LOGI MEM) from WMS‐R (Wechsler, 1987b). Total items of Story A in the Immediate and Delayed Recall trials were used. The Boston Naming Test score consisted of the total number of items named correctly within the 20‐s limit plus the number of items named correctly with a semantic cue (Kaplan et al., 1983). The tests represented the cognitive domains of attention (Digit Span), memory (Logical Memory), language (Boston Naming, Fluency), and executive function and speed (Trail Making, Digit Symbol) (Hayden et al., 2011; Park et al., 2012).
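The Trail Making transformation described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `trail_score` is hypothetical, and "inversed" is read here as a sign flip of the log time, which is an assumption (a reciprocal would also reverse the direction).

```python
import math

# Maximum time scores (seconds) assigned when a part is not completed in time.
MAX_TIME_S = {"TRAIL-A": 150.0, "TRAIL-B": 300.0}


def trail_score(seconds: float, part: str) -> float:
    """Cap the completion time, log-transform it, and flip its sign.

    Assumption: the inversion is implemented as negation, so that a
    larger value means faster (better) performance.
    """
    capped = min(seconds, MAX_TIME_S[part])
    return -math.log(capped)
```

With this convention, a 30 s completion scores higher than a 60 s completion, matching the direction of the accuracy‐based tests.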

Among 732 participants who participated in both neuropsychological tests and MRI scans, we excluded participants who were missing any of the test scores [Digit Span Forward, Category fluency, Logical Memory score missing (n = 2); Digit Span Backward, Trail Making Test Part A score missing (n = 3); Trail Making Test Part B missing (n = 45)], leaving a final analysis set of 644 participants.

2.2.2. KSHAP neuropsychological test

To test the generalizability of the predictive model, we identified eight homologous neuropsychological tests included in the KSHAP dataset. Similarity and correspondence of administration procedures and contents were scrutinized. For attention and working memory, the Digit Span Forward and Digit Span Backward tests included in the Elderly Memory disorder Scale (Chey, 2007) were available. The score was the sum of all correct trials across digit lengths of 2 to 8. The category fluency test asked participants to generate words from two semantic categories (i.e., animal and supermarket), each within a minute (Kang, Chin, Na, Lee, & Park, 2000; Kang, Jang, & Na, 2012). While the interference condition of the original Trail Making Test in UDSNB asks participants to alternate between numbers and letters (Reitan & Wolfson, 1993), the modified Trail Making Test (mTMT) asked them to alternate between numbers and symbols (triangle and rectangle) to minimize floor effects in illiterate older adults (M. Park & Chey, 2003; Seo et al., 2006). The mTMT Part A (Trail A) consisted of 15 consecutively numbered circles arranged randomly on a sheet of paper. Participants were asked to draw a line between the circles in ascending order as quickly as possible. In mTMT Part B, they were asked to draw lines between triangles and squares alternately. In mTMT Part C (Trail C), participants consecutively connected 8 numbers (in ascending order) and 7 shapes (triangle and square) in an alternating way (1 – △ – 2 – □ – 3 – △ …). Total times to complete mTMT‐A and mTMT‐C were used in the analysis; mTMT‐B was not used due to the variability in the possible trail options. A few participants exceeded the typical time limit of mTMT‐A (150 s, n = 1) and mTMT‐C (300 s, n = 7), but maximums of 300 and 600 s, respectively, were allowed to finish the task because some participants were unskilled in using a pencil. Total time (s) to complete the lines was log‐transformed and inverted. The Story Recall Test (SRT) included in the Elderly Memory disorder Scale was used to assess episodic memory function. The SRT consisted of one story modified from the Logical Memory subtest in WMS‐III into more culturally familiar content (An & Chey, 2004). The SRT required subjects to recall a paragraph containing 24 semantic units, and the delayed recall subtest was administered 15–30 min after the immediate recall session.

2.3. MRI acquisition and preprocessing

2.3.1. OASIS‐3 neuroimaging

The neuroimaging dataset in OASIS‐3 was collected with a 16‐channel head coil on several scanners (Siemens TIM Trio 3T, Siemens BioGraph mMR PET‐MR 3T, Siemens Sonata 1.5T, Siemens Vision 1.5T). A high‐resolution T1‐weighted structural image (TR = 2.4 s, TE = 3.08 ms, FOV = 256 × 256 mm, FA = 8°, voxel size 1 × 1 × 1 mm³) and a resting‐state functional image (EPI; TR = 2.2 s, TE = 27 ms, FOV = 240 × 240 mm, FA = 90°, voxel size 4 × 4 × 4 mm, 36 slices) were used. Participants who completed both the structural scan and two consecutive runs of functional scans (6 min and 164 volumes per run) were analyzed.

Image preprocessing and denoising were performed using the SPM12 software (Wellcome Department of Imaging Neuroscience, Institute of Neurology, London, UK) with the default preprocessing pipeline of the Conn toolbox 18.a (http://www.nitrc.org/projects/conn). Functional images were corrected for motion and warped into MNI standard space. Images were smoothed with a Gaussian kernel of 8 mm full‐width half‐maximum. In addition, the Artifact Detection Tools (https://www.nitrc.org/projects/artifact_detect/) were used to identify motion and signal intensity outlier images. Images with global mean intensity Z‐value >5 and movement >0.9 mm were identified as outlier images. Estimated motion parameters and outlier images were used as nuisance covariates in the time‐series linear regression. T1‐weighted images were segmented into gray matter, white matter, and cerebrospinal fluid (CSF) and warped into MNI standard space. Signals within the white matter and CSF masks were regressed out to exclude non‐gray‐matter BOLD signal. Band‐pass temporal filtering (0.008–0.09 Hz) was applied to exclude physiological noise signals. Participants with excessive head motion were excluded (max motion >4.5, mean motion >0.6, n = 51).

For each subject, the mean time series of each region was extracted by averaging all voxels within the region at each time point, for 227 regions of the Shen et al. (2013) brain atlas (37 cerebellar regions were excluded; Figure S1). Pearson correlations were calculated between the time series of each pair of regions and transformed to Fisher's Z scores. Thus, whole‐brain connectivity matrices containing (227 × [227 − 1])/2 = 25,651 pairwise functional connectivity (FC) values were constructed for 644 individuals and vectorized for the following analyses.
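The construction of one participant's FC vector can be sketched as below. This is a small standard‐library illustration under stated assumptions, not the Conn toolbox implementation; the names `pearson`, `fc_vector`, and `n_edges` are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def fc_vector(region_ts):
    """Fisher z-transformed correlations for every unique pair of regions.

    region_ts holds one mean time series per region. Pairs are visited in
    upper-triangle order (i < j). Note that math.atanh raises an error for
    a correlation of exactly +1 or -1.
    """
    n = len(region_ts)
    return [
        math.atanh(pearson(region_ts[i], region_ts[j]))
        for i in range(n)
        for j in range(i + 1, n)
    ]


def n_edges(n_regions):
    """Number of unique region pairs, n(n - 1)/2."""
    return n_regions * (n_regions - 1) // 2
```

For 227 regions, `n_edges(227)` gives the 25,651 features stated above.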

2.3.2. KSHAP neuroimaging

A T1‐weighted magnetization‐prepared rapid gradient echo (MP‐RAGE) image and resting‐state fMRI data were acquired on a 3T Siemens Trio scanner with a 32‐channel head coil (T1: sagittal slices, slice thickness 1 mm, TR = 2,300 ms, TE = 2.36 ms, FOV = 256 × 256 mm, FA = 9°, voxel size 1 × 1 × 1 mm³; resting‐state EPI: TR = 2,000 ms, TE = 30 ms, FOV = 240 × 240 mm, FA = 79°, voxel size 3 × 3 × 3 mm, gap = 1 mm, acquisition time = 5 min). During the scan, participants were instructed to rest quietly with their eyes open and not to fall asleep. We acquired two runs of 150 contiguous functional images. Thus, the total length and the number of images were similar to those of the OASIS‐3 dataset (OASIS‐3: 328 volumes, 12 min; KSHAP: 300 volumes, 10 min). To acquire high spatial resolution, cerebellar regions were excluded from the acquisition. All preprocessing and functional network construction followed the same procedure as for OASIS‐3, except for the addition of slice timing correction. Participants with excessive head motion were excluded (max motion >4.5, mean motion >0.6, n = 26). Each participant's FC matrix was constructed with the same procedure used for the OASIS‐3 data. Thus, 25,651 vectorized functional connectivities from each of 151 individuals were used in the following analyses.

2.4. Connectome‐based predictive modeling

2.4.1. Internal validation: Prediction from discovery to holdout sample

Due to the high dimensionality of whole‐brain FC features, regularization techniques are widely used to select an optimal set of predictive features. Ridge regression develops a model that minimizes the sum of the mean prediction error and an L2‐norm regularization term (the sum of the squared regression coefficients). Unlike the ordinary least squares (OLS) method, this technique not only minimizes the errors but also penalizes the total amount of explanatory weight in the model. A regularization parameter λ controls the trade‐off between the prediction error in the training data and the total size of the regression coefficients, that is, model complexity. A large λ penalizes a large sum of squared coefficients more strongly and leads to a model with more strongly shrunk regression coefficients, while a small λ leaves more room for the regression coefficients to explain the target variable. The optimal value of λ that minimizes prediction error is tuned by a cross‐validation procedure. Previous studies have successfully predicted individual differences in various behavioral phenotypes using L2‐norm regularization (ridge regression) (Cui & Gong, 2018; Dadi et al., 2019; Gao, Greene, Constable, & Scheinost, 2019; Siegel et al., 2016). The L2‐norm penalty down‐weights irrelevant features by shrinking the size of their weights.
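As a worked form of the description above, the ridge objective can be written as follows. The symbols are introduced here for illustration: y_i is the test score of participant i, x_i the FC vector, β_0 the intercept, β the vector of p = 25,651 weights, and N the number of training participants. The scaling constants (1/2N and λ/2) follow the glmnet convention and are an assumption about the exact form used.

$$
(\hat{\beta}_0, \hat{\beta}) = \arg\min_{\beta_0,\,\beta}\; \frac{1}{2N}\sum_{i=1}^{N}\left(y_i - \beta_0 - x_i^{\top}\beta\right)^2 + \frac{\lambda}{2}\sum_{j=1}^{p}\beta_j^{2}
$$

Setting λ = 0 recovers the OLS objective, while increasing λ shrinks all β_j toward zero without setting them exactly to zero.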

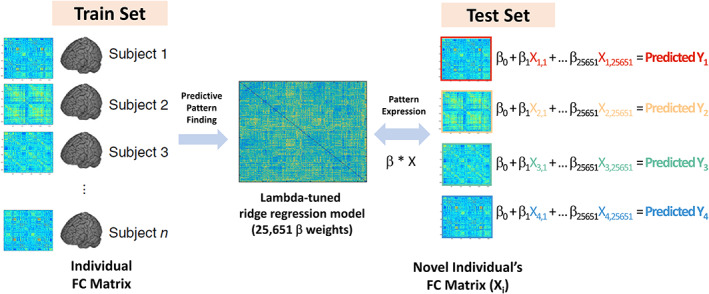

Connectome‐based predictive modeling was initially conducted by randomly splitting the train set and the test set (Figure 1). The training dataset was used to identify optimal predictive weights of the connectivity that contributes to accurate prediction in the behavioral scores, while the test dataset was used to evaluate how much the identified FC pattern is expressed in the novel individuals. The pattern expression score (i.e., FC‐predicted score) was calculated with the dot product (β × X) between predictive weights and the FC vector. The dot product of all 25,651 features estimated the predicted behavioral score based on the patterns of FC. The Pearson's correlation (r) between the actual (observed) neuropsychological test score and the FC‐predicted score in the test dataset indicated the predictive accuracy.
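The pattern expression score and the accuracy measure can be sketched as below. This is a minimal illustration with hypothetical function names; the optional `intercept` argument is an assumption, since the text describes only the dot product.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def predict(weights, fc_vector, intercept=0.0):
    """FC-predicted score: dot product of predictive weights and one FC vector."""
    return intercept + sum(w * x for w, x in zip(weights, fc_vector))


def predictive_accuracy(weights, fc_vectors, observed, intercept=0.0):
    """Pearson r between observed scores and FC-predicted scores in a test set."""
    predicted = [predict(weights, fc, intercept) for fc in fc_vectors]
    return pearson(observed, predicted)
```

Because Pearson's r is invariant to shifting and positive rescaling of the predictions, an omitted intercept does not change the accuracy value.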

FIGURE 1.

Schematic overview of the connectome‐based predictive modeling. Train set of individuals’ FC matrix (left) is used to identify predictive weights across all connectivity features. The dot product between beta weights and the FC matrix of novel individuals estimates the FC‐predicted test scores

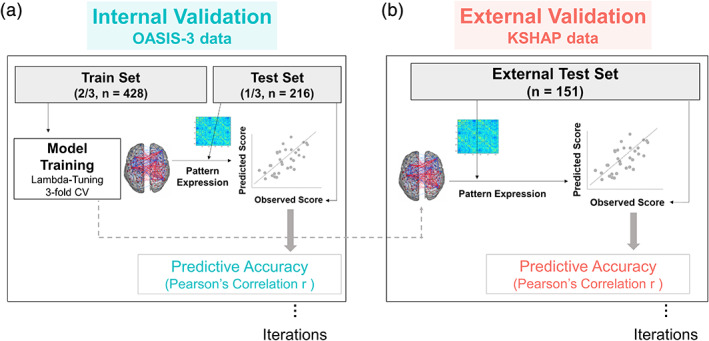

Connectome‐based predictive models of neuropsychological test scores were identified using a nested cross‐validation procedure (Varoquaux, 2018). The internal cross‐validation was first conducted with the OASIS‐3 dataset (Figure 2a). The dataset was randomly split into a dataset for model training (discovery sample; 2/3, n = 428) and a dataset for testing predictive accuracy (holdout sample; 1/3, n = 216). The target variable was scaled and mean‐centered within the training dataset.
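One iteration of the discovery/holdout split can be sketched as follows. The function names and the `seed` argument are hypothetical, and applying the training mean and SD to the holdout scores is an assumption; the text states only that scaling was done within the training dataset.

```python
import random
import statistics


def split_discovery_holdout(n_subjects, n_discovery, seed):
    """Randomly assign subject indices to discovery and holdout samples."""
    idx = list(range(n_subjects))
    random.Random(seed).shuffle(idx)
    return sorted(idx[:n_discovery]), sorted(idx[n_discovery:])


def standardize_with_training_stats(train_y, other_y):
    """Center and scale scores using the mean and SD of the training set only."""
    mu = statistics.fmean(train_y)
    sd = statistics.stdev(train_y)
    return [(v - mu) / sd for v in train_y], [(v - mu) / sd for v in other_y]
```

Repeating the split with a different seed on each of the 50 iterations gives the distribution of accuracies reported across iterations.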

FIGURE 2.

Schematic overview of nested cross‐validation. Internal validation (left): Threefold cross‐validation identifies lambda‐tuned predictive weights in the train set. The trained predictive model is applied to test set FC. The FC‐predicted score (pattern expression) was calculated by the dot product between predictive weights and an individual's FC. Correlation between FC‐predicted score and the actual neuropsychological test score indicated predictive accuracy. External validation (right): Same iterative prediction was applied to KSHAP dataset

In the discovery sample, the hyperparameter λ, which regulates the complexity of the predictive model, was tuned using three‐fold cross‐validation. The grid of λ values was generated by the automatic algorithm implemented in the glmnet package, which produces 100 values of λ equally spaced on the log scale, starting from the maximum λ value that shrinks all of the coefficients to zero (Hastie et al., 2016). After finding the λ value that minimized the mean absolute error in the nested cross‐validation, the tuned λ was used to fit the prediction model, which was then applied to the holdout sample. To account for the variability of group splitting, the whole procedure was iterated 50 times. The iterations showed the range of predictive accuracy and its deviation across splits. When assessing the statistical significance of the prediction accuracy, 1,000 nested iterations were used to estimate the 95% confidence interval of the precision.
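The λ grid and the selection rule can be sketched as below. This is an illustration rather than glmnet's own code: `lambda_grid` and `pick_lambda` are hypothetical names, `lambda_max` must be supplied by the caller, and the ratio of 0.01 between the smallest and largest λ is assumed here (it is, to our understanding, the glmnet default when predictors outnumber observations).

```python
import math


def lambda_grid(lambda_max, n_lambda=100, min_ratio=0.01):
    """n_lambda values equally spaced on the log scale, from lambda_max downward."""
    log_hi = math.log(lambda_max)
    log_lo = math.log(lambda_max * min_ratio)
    step = (log_hi - log_lo) / (n_lambda - 1)
    return [math.exp(log_hi - k * step) for k in range(n_lambda)]


def pick_lambda(lambdas, cv_mae):
    """Return the lambda whose cross-validated mean absolute error is smallest."""
    best = min(range(len(lambdas)), key=lambda k: cv_mae[k])
    return lambdas[best]
```

Here `cv_mae[k]` stands for the mean absolute error of `lambdas[k]` averaged over the three inner folds.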

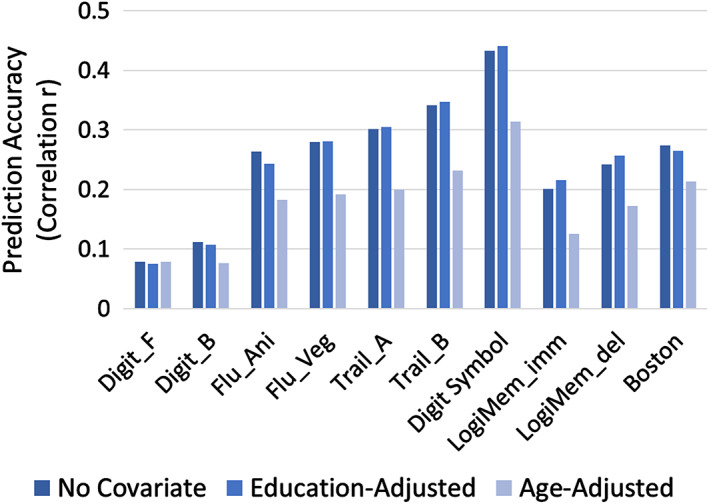

We conducted the same analysis while controlling for the effects of age and education. Before conducting predictive modeling, we regressed out the effect of age or education (years), respectively, on the cognitive test score in the test set. We compared how much the predictive accuracy (correlation coefficient) decreased after residualizing the effect of age or education. An identical analysis was conducted adjusting for the effects of sex and head motion as nuisance variables.
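Residualizing a test score on a single covariate can be sketched as below. The function name is hypothetical, and the sketch assumes a simple linear regression with one covariate at a time, as the text describes age and education being handled separately.

```python
def residualize(scores, covariate):
    """Residuals of scores after a simple linear regression on one covariate."""
    n = len(scores)
    mx = sum(covariate) / n
    my = sum(scores) / n
    sxx = sum((x - mx) ** 2 for x in covariate)
    sxy = sum((x - mx) * (y - my) for x, y in zip(covariate, scores))
    slope = sxy / sxx
    intercept = my - slope * mx
    return [y - (intercept + slope * x) for x, y in zip(covariate, scores)]
```

The residuals are uncorrelated with the covariate by construction, so any remaining correlation with the FC‐predicted score cannot be attributed to a linear effect of that covariate.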

While the main predictive accuracy was evaluated by iterating the nested cross‐validation procedure, the relationship between the FC‐predicted score and the observed behavioral score is also presented in scatter plots for visualization purposes. The plotted predicted scores were calculated with nested three‐fold cross‐validation: the data were randomly split into three folds, and the predicted scores for each fold (1/3 of the data) were obtained from the predictive model trained on the remaining two folds (2/3 of the data). For the whole dataset within the internal cross‐validation procedure, the p values of the empirical correlation values, based on their corresponding null distributions, were computed by the following formula: (1 + the number of permuted r values greater than or equal to the empirical r)/1,001. The permutation tests revealed significant p values for the empirical r values.
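The permutation p value formula can be sketched as below. The function names are hypothetical, and building the null distribution by shuffling the observed scores against fixed predictions is an assumption, since the permutation scheme is not detailed in the text.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def permutation_p_value(observed, predicted, n_perm=1000, seed=0):
    """(1 + #{permuted r >= empirical r}) / (1 + n_perm)."""
    rng = random.Random(seed)
    r_emp = pearson(observed, predicted)
    shuffled = list(observed)
    n_ge = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # break the pairing between scores and predictions
        if pearson(shuffled, predicted) >= r_emp:
            n_ge += 1
    return (1 + n_ge) / (1 + n_perm)
```

With 1,000 permutations the denominator is 1,001, and the smallest attainable p value is 1/1,001.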

2.4.2. External validation: Prediction in external sample

After identifying eight homologous neuropsychological tests, the same prediction procedure was applied to test whether each test model generalizes to an external study with a different population and protocol (KSHAP dataset, n = 151; Figure 2b). Using the predictive model trained in the OASIS‐3 discovery sample (n = 428), the dot product between predictive weights and the FC vectors of the KSHAP dataset estimated the FC‐predicted score (pattern expression score). The training procedure was identical to that of the internal validation and was iterated 50 times using ridge regression. When assessing the statistical significance of the prediction accuracy, 1,000 nested iterations were used to estimate the 95% confidence interval of the precision.

Since clinical status and educational attainment widely differed between internal validation (OASIS‐3) and external validation dataset (KSHAP), we additionally examined whether the predictive model trained with a more homogeneous population (clinically normal, lower‐educated) shows better predictive accuracy.

2.5. Spatial pattern of predictive model

The predictive accuracy can differ according to the range and extent to which features are considered. In this study, we examined how feature thresholding, feature sparsity, or pre‐defined network shows systematically different levels of predictability and exhibit optimally distributed predictive models.

2.5.1. Feature filtering

To pinpoint the necessary range of brain predictors and infer the optimal sparsity of the model, we examined the systematic effect of the feature selection threshold on the predictive accuracy. Since using every FC feature in training can introduce noisy information that does not contribute to prediction, a filtering method may improve the precision of the predictive model. The filtering method conducts a univariate test on each feature and excludes predictors whose association with the target variable (i.e., test score) falls below a given threshold. This method can reduce dimensionality to a smaller set of predictors that are relevant to the outcome variable. Previous studies have shown that the feature selection threshold may systematically influence predictive performance (Gao et al., 2019; Greene, Gao, Scheinost, & Constable, 2018; Jangraw et al., 2018).

The spatial distributedness was examined in the OASIS‐3 dataset that was used in the internal cross‐validation. In the training set, FC features whose correlation with the target test score fell below the specified threshold (Pearson's correlation |r| < .01, .02, …, .18) were excluded from the predictive modeling. The features selected in the training set were also applied to the testing dataset. As the feature selection threshold increases, the predictive model is built from a smaller set of focally correlated features. If individual differences in neuropsychological test scores are based on the connectivities of broad brain regions, thresholding of features will lead to a significant decrease in predictive performance. We identified the threshold with the maximum prediction accuracy, and this inflection point was considered the optimal sparsity of the prediction model.
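The filtering step can be sketched as below. The function names are hypothetical; the key point illustrated is that the selection is computed from the training set only and the resulting column indices are reused unchanged for the test set.

```python
import math


def pearson(x, y):
    """Pearson correlation; returns 0.0 when either input has no variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def select_features(train_fc, train_y, threshold):
    """Indices of FC features with |r| >= threshold against the training scores."""
    keep = []
    for j in range(len(train_fc[0])):
        column = [row[j] for row in train_fc]
        if abs(pearson(column, train_y)) >= threshold:
            keep.append(j)
    return keep


def apply_selection(fc_rows, keep):
    """Restrict each FC vector (training or test) to the selected features."""
    return [[row[j] for j in keep] for row in fc_rows]
```

Sweeping `threshold` over .01, .02, …, .18 and refitting the model on each reduced feature set traces how accuracy changes as the model becomes more focal.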

2.5.2. Feature regularization type

To evaluate whether neuropsychological test performance is driven by selective features of functional connections, we additionally examined the prediction results across values of the hyperparameter α, which affects the sparsity of the predictive model. The mixing hyperparameter α (the proportion of the L1‐regularization term) defines the tendency of predictive weights to shrink to zero, as shown in the following formula.
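Assuming the standard glmnet parameterization of the elastic net, with the same symbols as in the ridge objective (y_i the test score, x_i the FC vector, β_0 the intercept, β the p weights, N the number of training participants), the objective can be written as:

$$
(\hat{\beta}_0, \hat{\beta}) = \arg\min_{\beta_0,\,\beta}\; \frac{1}{2N}\sum_{i=1}^{N}\left(y_i - \beta_0 - x_i^{\top}\beta\right)^2 + \lambda\left[\frac{1-\alpha}{2}\sum_{j=1}^{p}\beta_j^{2} + \alpha\sum_{j=1}^{p}\lvert\beta_j\rvert\right]
$$

Under this form, α = 0 reduces to ridge regression (pure L2 penalty, dense weights) and α = 1 to LASSO (pure L1 penalty, many weights exactly zero), which matches the low‐α and high‐α cases contrasted in this section.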

We examined the sparsity of the features across α values to infer the effect of feature sparsity (Gao et al., 2019). Specifically, we tested whether predictive models with weak and distributed features (low‐α elastic net or ridge regression) predict neuropsychological performance more accurately than models with a selective number of features (high‐α elastic net or LASSO). The same predictive modeling procedure was iterated across the 21 grid values of α ([0.00, 0.05, …, 1.00]²). The systematic effect of increased model sparsity on the predictive accuracy was examined.
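Reading the grid as the element‐wise square of the sequence 0.00, 0.05, …, 1.00 (an interpretation of the notation, not a confirmed detail), the 21 α values can be generated as follows. The function name is hypothetical.

```python
def alpha_grid(n_points=21):
    """Squared, evenly spaced values in [0, 1]; denser near 0 (the ridge end)."""
    step = 1.0 / (n_points - 1)
    return [(k * step) ** 2 for k in range(n_points)]
```

Squaring concentrates grid points at low α, so the transition from ridge‐like to sparser models is sampled more finely than the LASSO end.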

2.5.3. Brain functional network composition of predictive model

In a previous study, brain regions were parcellated and clustered into eight functional networks (frontoparietal, medial frontal, default mode, subcortical/salience, motor/auditory, Visual I, Visual II, and visual association; Shen et al., 2013; Figure S1). Functional networks are coherent sets of regions that activate together and form modular communities (Laird et al., 2013; Power et al., 2011). To interpret the relative importance of specific functional networks in the predictive models, we assessed how much predictive accuracy decreased when each functional network was excluded from the same predictive analysis (Dubois et al., 2018; Whelan et al., 2014). If a particular test score requires unique neural information from a specific functional network, excluding that network's connectivity information will degrade the original predictive performance. Conversely, a minimal change will indicate that the neural basis of test performance is not confined to the excluded functional network.
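
The bookkeeping behind the leave‐network‐out analysis can be sketched as follows. The function names and the six‐node parcellation are hypothetical. The sketch assumes that excluding a network removes every edge with at least one endpoint in that network (its within‐network edges plus its edges to all other networks).

```python
from itertools import combinations

def edges_excluding_network(node_networks, left_out):
    """Node pairs (i, j), i < j, with neither endpoint in the left-out network."""
    nodes = range(len(node_networks))
    return [
        (i, j)
        for i, j in combinations(nodes, 2)
        if node_networks[i] != left_out and node_networks[j] != left_out
    ]

def n_excluded_edges(n_nodes, n_in_network):
    """Within-network plus between-network edges removed along with one network."""
    within = n_in_network * (n_in_network - 1) // 2
    between = n_in_network * (n_nodes - n_in_network)
    return within + between

# Hypothetical 6-node parcellation with three networks.
node_networks = ["DMN", "DMN", "FP", "FP", "Vis", "Vis"]
kept = edges_excluding_network(node_networks, left_out="DMN")
```

As a consistency check, the reported total of 25,651 features equals the number of unique pairs among 227 nodes (227 × 226 / 2). Under that assumption, an 18‐node network would remove 3,915 edges and a 28‐node network 5,950 edges, which matches the counts listed for the default mode and frontoparietal networks in Figure 7. The node counts are inferred from the edge counts and are not stated in the text.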

2.6. Discriminant validity of predictive model

We examined whether the connectome‐based predictive model can represent a specific cognitive construct with discriminant validity. If the predictive model distinctively predicts the cognitive test on which it was trained, the model may have captured the specific cognitive construct that the test was intended to measure (Woo et al., 2017). On the contrary, if a specific FC‐predicted score is correlated not only with the test score used to train the model but also with other test scores representing distinct cognitive constructs, it is more likely that the predictive model captures a general and common component of cognitive function (Habeck et al., 2015; Rosenberg et al., 2015). The predictive models were trained with the OASIS‐3 discovery sample (n = 428), and the FC‐predicted score was estimated using the FC expression of the internal holdout sample (OASIS‐3, n = 216) and the KSHAP sample (n = 151). In this way, we generated eight FC‐predicted scores for each dataset. The 8‐by‐8 correlation matrix between the actual behavioral scores and FC‐predicted scores of the eight cognitive tests was averaged across 1,000 prediction iterations. A higher correlation in the diagonal elements than in the off‐diagonal elements indicated convergent and discriminant characteristics of the predictive model.
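
The diagonal versus off‐diagonal comparison can be illustrated with a small sketch. It uses two hypothetical tests instead of eight, and the names `cross_correlation_matrix` and `diagonal_vs_off_diagonal` and all numbers are made up for illustration. The averaging across iterations described above is omitted.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def cross_correlation_matrix(predicted, observed):
    """matrix[obs_test][model_test] = r(observed score, FC-predicted score)."""
    tests = list(predicted)
    return {
        obs: {mod: pearson_r(predicted[mod], observed[obs]) for mod in tests}
        for obs in tests
    }

def diagonal_vs_off_diagonal(matrix):
    """Mean correlation for matching tests and for mismatched test pairs."""
    tests = list(matrix)
    diag = [matrix[t][t] for t in tests]
    off = [matrix[a][b] for a in tests for b in tests if a != b]
    return sum(diag) / len(diag), sum(off) / len(off)

# Hypothetical scores for five participants on two tests.
observed = {"fluency": [1, 2, 3, 4, 5], "trail_a": [2, 1, 4, 3, 5]}
predicted = {
    "fluency": [1.1, 2.0, 2.9, 4.2, 5.1],
    "trail_a": [2.2, 0.9, 4.1, 3.0, 4.8],
}

matrix = cross_correlation_matrix(predicted, observed)
mean_diag, mean_off = diagonal_vs_off_diagonal(matrix)
```

A diagonal mean that clearly exceeds the off‐diagonal mean would point to test‐specific prediction. Similar values would instead suggest that the models capture a shared, general component.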

2.7. Clinical validity of predictive model

To examine the clinical validity of the predictive model, we tested Spearman's rank correlation between neuropsychological test score (observed or predicted) and clinician‐rated functional impairment score across each pair of the eight homologous tests. The Clinical Dementia Rating scale–sum of boxes (CDR–SOB) was used to evaluate the ecological relevance of the predictive models. The CDR‐SOB provides levels of functional impairment across six domains of function (memory, orientation, judgment, community affairs, home and hobbies, personal care) (Lynch et al., 2005; J. C. Morris, 1997).

The FC‐predicted score in the OASIS‐3 dataset was estimated using the predictive models constructed with the homologous tests included in the KSHAP external dataset. Using three‐fold cross‐validation, the optimal hyperparameter (λ) that minimizes mean absolute error (MAE) was identified in ridge regression (α = 0). The fitted regression model was then applied to the FCs of OASIS‐3 to estimate each of the eight FC‐predicted scores. The partial rank correlation between the neuropsychological score (observed or predicted) and CDR‐SOB, controlling for the counterpart score (either the predicted or observed score), was tested to examine the incremental value of the FC‐predicted score. Any correlation remaining after adjusting for the actual behavioral score indicated incremental information provided by the FC‐predicted score. Partial rank correlation analysis was conducted using the ppcor package (Kim, 2015).
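
For readers who want to see the computation, a partial rank correlation with a single control variable can be sketched as below. This is a standard‐library illustration rather than the ppcor implementation. For one covariate, the first‐order partial correlation formula applied to rank correlations should give the same coefficient. The function names and the toy scores are hypothetical.

```python
import math

def average_ranks(values):
    """Ranks starting at 1, with tied values given their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def spearman_rho(x, y):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson_r(average_ranks(x), average_ranks(y))

def partial_spearman(x, y, z):
    """Rank correlation between x and y, controlling for one covariate z."""
    r_xy = spearman_rho(x, y)
    r_xz = spearman_rho(x, z)
    r_yz = spearman_rho(y, z)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))

# Hypothetical scores for five participants.
cdr_sob = [0, 0, 0.5, 1, 3]
observed = [28, 25, 22, 20, 12]
predicted = [24, 26, 21, 22, 15]

# Association of the FC-predicted score with CDR-SOB beyond the observed score.
incremental = partial_spearman(predicted, cdr_sob, observed)
```

Swapping the roles of `predicted` and `observed` in the call gives the counterpart analysis, in which the FC‐predicted score is the controlled variable.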

3. RESULTS

3.1. Internal cross‐validation

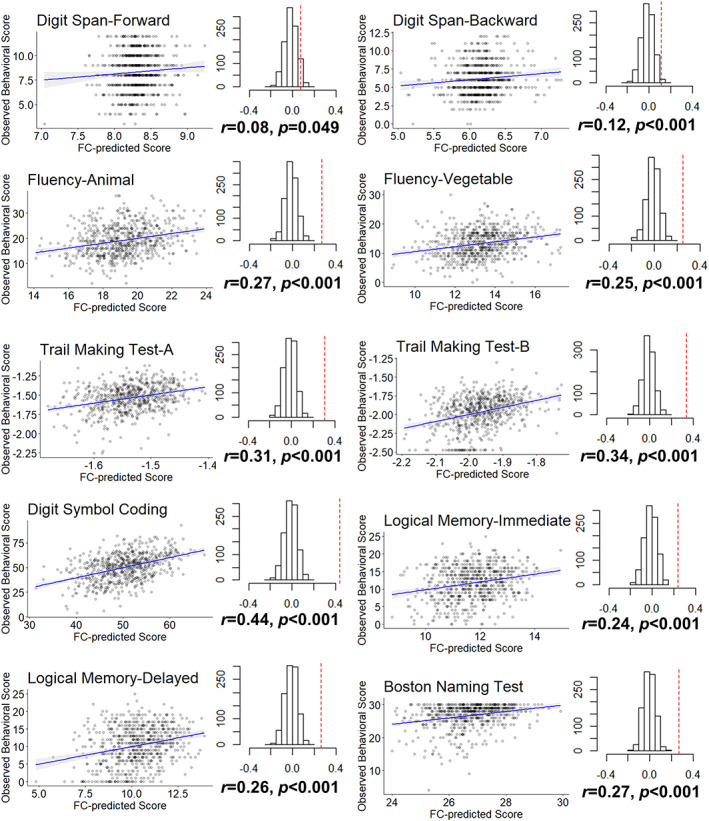

We first examined the correlation between observed behavioral test scores and FC‐predicted scores. Three‐fold cross‐validation yielded prediction results in the total OASIS‐3 dataset (Figure 3). Ridge regression was used to regularize prediction coefficients in each iteration. Permutation testing confirmed a significant correlation (p < 0.001) between the predicted and observed scores for every test except Digit Span Forward, which reached only marginal significance (p = .049).
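
A permutation test of this kind can be sketched as follows. The sketch makes simplifying assumptions that are not taken from the paper: it shuffles the observed scores against a fixed set of predictions instead of re‐running the full cross‐validation for each permutation, it is one‐sided, and the function name, seed, and data are hypothetical.

```python
import math
import random

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def permutation_p_value(predicted, observed, n_permutations=1000, seed=0):
    """One-sided p value for r(predicted, observed) against a shuffled null."""
    rng = random.Random(seed)
    r_true = pearson_r(predicted, observed)
    shuffled = list(observed)
    n_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(shuffled)  # break the pairing between brain and behavior
        if pearson_r(predicted, shuffled) >= r_true:
            n_extreme += 1
    # Add-one correction keeps the estimate away from an impossible p = 0.
    return r_true, (n_extreme + 1) / (n_permutations + 1)

# Hypothetical predicted and observed scores for ten participants.
predicted = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
observed = [1.2, 1.9, 3.4, 3.8, 5.1, 6.3, 6.8, 8.2, 9.1, 9.7]

r_true, p_value = permutation_p_value(predicted, observed)
```

The shuffled correlations form the null distribution, and the true coefficient is compared against it, as in the layout of Figure 3.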

FIGURE 3.

Internal cross‐validated prediction result. Correlation between FC‐predicted score and observed behavioral score in OASIS‐3 (n = 644). The predicted scores were calculated based on three‐fold cross‐validation. Each of the folded datapoints (1/3) was calculated with the FC prediction model tuned and constructed within the other two folds of data (2/3). The permutation results are shown as the null distribution and the true predicted coefficient (dashed red line)

For the main predictive analysis using nested cross‐validation, prediction accuracy ranged from r = 0.08 to 0.44 (Figure 4). We additionally examined prediction results after adjusting for the effects of age and education in both the training set and test set. Age adjustment resulted in a large decrease in prediction accuracy, whereas education adjustment left the accuracy essentially unchanged, indicating the age‐related attributes of the predictive model (no covariate mean correlation = 0.253, education‐adjusted mean correlation = 0.254, age‐adjusted mean correlation = 0.179). The results also remained largely unchanged when adjusting for the effect of sex (mean correlation = 0.252) and head motion (mean correlation = 0.247). The confidence interval of the iterated prediction showed statistically significant predictability except for the Digit Span tests (Figure S2).
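
Covariate adjustment by residualization can be illustrated with a single covariate. The sketch below fits the age effect on the training scores and removes it from the test scores. Whether the authors estimated the adjustment in the training set and carried it over, or estimated it within each set, is not specified here, so this choice is an assumption. The function names and the numbers are hypothetical.

```python
def fit_line(x, y):
    """Ordinary least squares slope and intercept for one covariate."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sxx
    return slope, my - slope * mx

def residualize(scores, covariate, slope, intercept):
    """Subtract the covariate-predicted part from each score."""
    return [s - (intercept + slope * c) for s, c in zip(scores, covariate)]

# Hypothetical training data: the score drops 0.5 points per year of age.
train_age = [60, 70, 80, 90]
train_score = [30, 25, 20, 15]
slope, intercept = fit_line(train_age, train_score)

# Apply the training-set adjustment to held-out participants.
test_age = [65, 85]
test_score = [29, 16]
test_residual = residualize(test_score, test_age, slope, intercept)
```

The residuals express how far each held‐out participant scores above or below what their age alone would predict. This is the quantity that an age‐adjusted model is asked to predict.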

FIGURE 4.

Prediction accuracy (correlation between FC‐predicted score and observed NP score) in the test set (n = 216) when age and education effects are adjusted. The adjustments are conducted by residualizing age and education effect on the scores of the testing dataset. Mean correlation coefficients of 50 iterated prediction procedures are plotted. Digit_F, digit span forward; Digit_B, digit span backward; Flu_Ani/Veg, category fluency (animal/vegetable); Trail_A, Trail Making Test Part A; Trail_B, Trail Making Test Part B; LogiMem_imm, WMS‐R Logical Memory I immediate recall; LogiMem_del, WMS‐R Logical Memory II delayed recall; Boston, Boston Naming Test

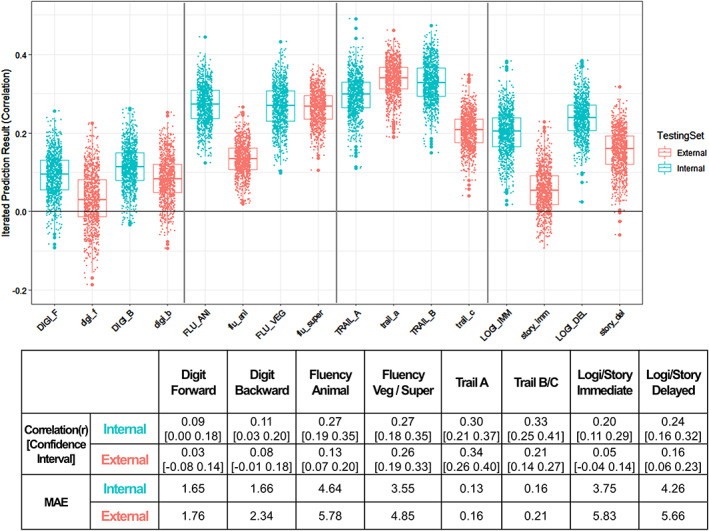

3.2. External cross‐validation

We identified eight homologous neuropsychological tests that could be tested in the external dataset (Table 2). Nested cross‐validation (Figure 2) across both the internal (OASIS‐3) and external (KSHAP) datasets showed a range of iterated prediction results (Figure 5). Internal cross‐validation produced higher accuracy (mean r = 0.226) than external cross‐validation (mean r = 0.159). Most correlations decreased in the external dataset, whereas fluency (vegetable/supermarket) and Trail Making Test (A) preserved the accuracy observed in internal cross‐validation.

TABLE 2.

Descriptive statistics of neuropsychological test performance in internal (OASIS‐3) and external (KSHAP) validation dataset

| Internal validation set: OASIS‐3 (n = 644) | Mean | SD | Range | External validation set: KSHAP (n = 151) | Mean | SD | Range |
|---|---|---|---|---|---|---|---|
| Digit span forward | 8.31 | 2.01 | 3–12 | Digit span forward | 7.64 | 2.16 | 4–14 |
| Digit span backward | 6.16 | 2.15 | 0–12 | Digit span backward | 4.39 | 2.12 | 1–14 |
| Fluency animal | 19.14 | 6.03 | 2–37 | Fluency animal | 13.72 | 4.21 | 3–30 |
| Fluency vegetable | 13.25 | 4.55 | 0–30 | Fluency supermarket | 15.76 | 6.41 | 3–34 |
| Trail making test A | 37.51 | 19.05 | 13–180 | Modified Trail making test A | 34.21 | 19.13 | 7–157 |
| Trail making test B | 106.43 | 63.95 | 20–300 | Modified Trail making test C | 132.64 | 79.37 | 25–522 |
| Digit symbol coding | 50.22 | 13.48 | 4–93 | | | | |
| Logical memory immediate | 11.72 | 4.68 | 0–25 | Story recall test immediate | 13.44 | 6.76 | 0–28 |
| Logical memory delayed | 10.29 | 5.34 | 0–25 | Story recall test delayed | 11.48 | 6.89 | 0–28 |
| Boston naming test | 26.70 | 3.61 | 4–30 | | | | |

FIGURE 5.

Prediction accuracy (correlation r between FC‐predicted score and observed NP score) of corresponding neuropsychological test across internal (OASIS‐3) and external (KSHAP) testing set. Model training: OASIS‐3 (n = 428); Internal cross‐validation: Holdout test dataset in OASIS‐3 (n = 216). External cross‐validation: KSHAP dataset (n = 151). Each dot indicates 50 times iterated results. DIGI_F/digi_f, digit span forward; DIGI_B/digi_b, digit span backward; FLU_ANI/flu_ani, category fluency (animal); FLU_VEG/flu_sto, category fluency (vegetable/supermarket); TRAIL_A/trail_a, Trail Making Test A; TRAIL_B/trail_c, Trail Making Test B/C; LOGIMEM_IMM/story_imm, Logical Memory/Story Recall Test immediate recall; LOGIMEM_DEL/story_del, Logical Memory/Story Recall Test delayed recall

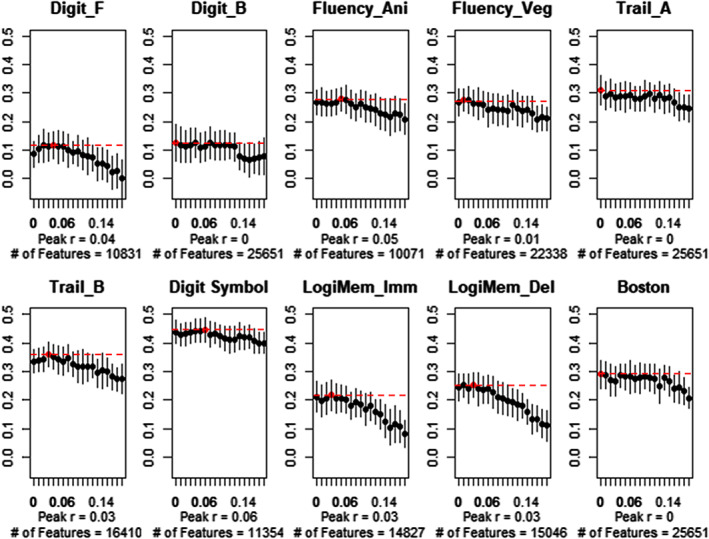

3.3. Feature filtering

To examine the extent to which focal brain regions are attributable to the prediction result, we tested the effect of the feature filtering threshold on the prediction accuracy. By applying univariate tests to each connectivity feature, we retained only the features whose correlation with the test scores, positive or negative, exceeded the threshold. We confirmed that increasing the filtering threshold resulted in a decrease in the features utilized in the training set (Figure S3). When examining the optimal filter threshold, we found that excluding features with a light threshold (|r| < 0.06) or applying no threshold (|r| = 0.00) showed the highest predictive accuracy in the test set (Figure 6). Therefore, maintaining a large proportion of the features (10,831–25,651 edges, 42–100%) in the ridge regression models produced the most accurate prediction. However, several tests preserved their original accuracy even when a relatively strong threshold was applied. For these tests, excluding features with a high threshold (|r| < 0.14) changed the predictive accuracy only minimally despite the smaller number of features. We also confirmed a consistent result with the other accuracy metric (mean absolute error; Figure S4).

FIGURE 6.

Predictive accuracy across feature filtering threshold (|r| > .00, .01,…, .17, 0.18). Error bar indicates SD of 50 times iteration results across thresholds. The highest peak accuracy points (red dots, dashed lines) and the number of connectivities that survived after filtering (averaged across iterations) are noted. Increasing the filter threshold left fewer features and produced lower predictive accuracy

3.4. Feature regularization

Similar to the feature filtering, we additionally examined the effect of the mixing parameter (α, proportion of L1‐norm) that affects the model sparsity. As the α parameter increases, the penalized model becomes highly sparse with fewer predictors, whereas the lowest α parameter (ridge regression) leaves highly distributed feature weights. We initially confirmed that setting a higher α left fewer predictors (Figure S5). Consistent with the feature filtering results, penalizing regression coefficients with increased α resulted in lower predictive accuracy.

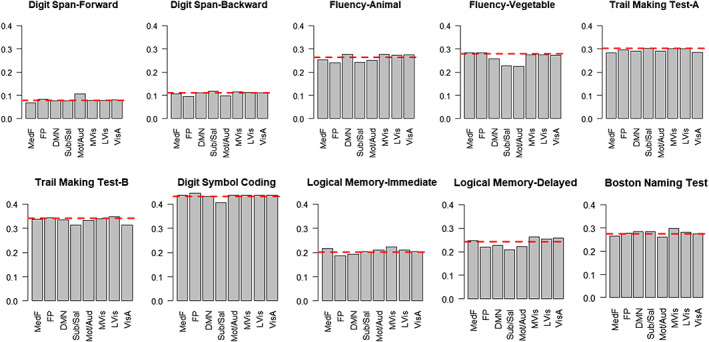

3.5. Leave‐network‐out prediction

To identify the brain functional networks that are critical to the prediction, we conducted a leave‐network‐out analysis. Similar to virtual lesion analysis, the predictive analysis was conducted after the connectivities of the designated functional network (both within‐network and between‐network features) were excluded. Excluding specific functional networks (frontoparietal, default mode, subcortical/salience) produced a larger decrease in accuracy than excluding other networks, but in general excluding a single network minimally affected the prediction accuracy (Figure 7).

FIGURE 7.

Prediction performance (averaged 50 times iteration) after excluding each functional network. Within (network‐to‐network) and between (network‐to‐all others) connectivities were excluded one at a time, and the same predictive modeling procedure was conducted. Red dashed line indicates full model performance using all of the functional networks (25,651 features). DMN, default mode network (3,915 edges); FP, frontoparietal (5,950 edges); LVis, lateral visual 2 (1,341 edges); MedF, medial frontal (6,148 edges); Mot/Aud, sensorimotor/auditory (9,898 edges); MVis, medial visual 1 (3,915 edges); Sub/Sal, subcortical/salience (12,121 edges); VisA, dorsal attention; visual association (3,706 edges)

3.6. Single network prediction

When a single functional network was separately used as predictors, several functional networks achieved prediction accuracy similar to that of the full model (Figure S6). Digit Span Forward and Logical Memory‐immediate tests were highly predictable when using frontal modules (medial frontal, default mode, or frontoparietal networks). On the other hand, Fluency‐Vegetable, Digit Symbol Coding, Logical Memory‐delayed, and Boston Naming tests were especially predictable using a relatively central part of the brain (Subcortical/Salience and Sensorimotor). The posterior part (Visual, Visual Association) played a role especially in tests requiring motor and visuospatial speed (Trail Making Test A/B, Digit Symbol Coding), whereas Digit Span Forward, Logical Memory tests were minimally predictable with the posterior networks.

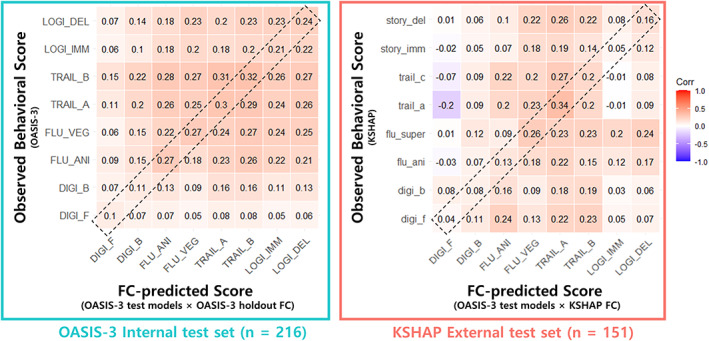

3.7. Discriminant validity of predictive model

We further examined whether the connectome‐based predictive models specifically represent predictive information of the trained test. Based on the predictive models constructed with the OASIS‐3 training dataset (n = 428), FC‐predicted scores were calculated in the OASIS‐3 testing set and KSHAP. The correlations between the eight predicted scores and the eight corresponding behavioral scores are presented for the OASIS‐3 dataset (n = 216; Figure 8, left, identical test) and the KSHAP dataset (n = 151; Figure 8, right, homologous test). Some FC models (trained with Fluency – vegetable/supermarket and Trail Making Test A) predicted their corresponding test most strongly, whereas the other models did not specifically predict their corresponding tests. Rather, predictive models with generally high accuracy also tended to predict scores of other cognitive domains.

FIGURE 8.

Discriminant validity of connectome‐based prediction (averaged 50 times iteration). Correlation between FC‐predicted and observed scores across eight subtests. X‐axis: Specific test used to train predictive model and its predicted score in the test set. Y‐axis: Observed neuropsychological test score in the test set. Diagonal elements within the dashed‐box indicate prediction with the same (internal) or homologous (external) test models (e.g., First column: FC model trained with Digit Span Forward [DIGI_F] is used to test the correlation between FC‐model predicted score and 8 behavioral scores)

3.8. Clinical validity of predictive model

Finally, we examined whether the brain predictive modeling approach captures clinically relevant information. We trained the predictive models in the KSHAP dataset (n = 151), and the models were applied to the OASIS‐3 total dataset (n = 644). The prediction from the KSHAP model to the OASIS‐3 dataset achieved a predictability pattern similar to the aforementioned analysis. Using these FC‐predicted scores, we conducted a rank correlation analysis between the dementia functional impairment score (CDR‐SOB) and neuropsychological performance (actual behavioral score or FC‐predicted score). Since the original measures of the cognitive tests are highly predictive of clinical impairment, the unique association of the predicted or observed scores was assessed with partial correlation. The result showed that the FC‐predicted score of Fluency (Supermarket) and Story/Logical Memory test (delayed) provided additive information when explaining the severity of clinical impairment (Table 3). Although FC‐predicted scores based on Fluency (Animal) and Trail Making Test (A) models were associated with the levels of clinical impairment, the other prediction scores did not remain statistically significant after adjusting for the effect of the actual behavioral performance score.

TABLE 3.

Incremental value as a clinical correlate

| Test | Partial correlation between [observed score] and [CDR‐SOB] (FC‐predicted score controlled): Rho | p value | Partial correlation between [FC‐predicted score] and [CDR‐SOB] (observed score controlled): Rho | p value |
|---|---|---|---|---|
| Digit span forward | −0.156 | 7 × 10⁻⁵ | −0.012 | .76 |
| Digit span backward | −0.217 | 3 × 10⁻⁸ | −0.025 | .53 |
| Fluency (animal) | −0.368 | 5 × 10⁻²² | −0.077 | .05 |
| Fluency (vegetable/supermarket) | −0.418 | 1 × 10⁻²⁸ | −0.128 | 1 × 10⁻³ |
| Trail making test A | −0.237 | 1 × 10⁻⁹ | −0.044 | .26 |
| Trail making test B/C | −0.379 | 2 × 10⁻²³ | 0.032 | .42 |
| Logical memory immediate | −0.438 | 2 × 10⁻³¹ | −0.048 | .22 |
| Logical memory delayed | −0.501 | 4 × 10⁻⁴² | −0.027 | .50 |

Note: Spearman's rank partial correlation (rho) between the Observed score (real neuropsychological test performance), FC‐Predicted score (scores estimated based on the connectivity‐trained model), and clinical severity of dementia (CDR‐SOB) in OASIS‐3 total dataset (n = 643, missing = 1). Either the FC‐predicted or observed score was controlled when examining the counterpart score's association. The FC‐predicted score was estimated with the models trained with KSHAP homologous tests. A higher observed score indicates better behavioral performance. Higher CDR‐SOB indicates a poorer clinical function. Bold value has significance threshold (p < 5 × 10⁻²)

3.9. Clinical and demographic stratification

We additionally examined whether inconsistent clinical status across training and testing samples was associated with prediction accuracy. When the same prediction analysis was confined to the clinically unimpaired group (CDR score = 0; training data: OASIS‐3, n = 288), the prediction accuracy decreased both in the internal holdout sample (OASIS‐3, n = 148) and in the external KSHAP sample (n = 151; Figure S7). This result shows that training a predictive model only with a clinically normal and homogeneous population did not enhance the predictive accuracy. Rather, the predictability decreased overall, indicating that clinically impaired participants contribute relevant information to the predictive model.

We also examined how heterogeneity of educational attainment across internal (OASIS‐3, mean education = 15.56) and external dataset (KSHAP, mean education = 7.17) systematically affects the prediction accuracy. We conducted an identical analysis procedure after stratifying the education group in the OASIS‐3 sample. By training the predictive models with different education groups, we tested whether the model trained with similar educational attainment more accurately predicts neuropsychological performance in the external KSHAP dataset. Our result showed, however, that while the model trained with lower‐educated older adults (7–12 years) showed relatively higher accuracy in Trail Making Test (A) and Logical Memory tests, the other tests did not show such tendencies (Figure S8).

4. DISCUSSION

In this study, we identified patterns of brain connectivities that are predictive of neuropsychological test performance in older adults. The identified predictive models were distributed across functional networks, and the best prediction models required most of the connectivity features (39–100%). In addition, the predictive models trained with cognitive tests generalized to the external dataset with heterogeneous demographic features. The evidence of discriminant validity was unclear, suggesting that the predictive models were not distinctively predictive of the corresponding tests. Also, scores estimated from connectome‐based models provided marginally additive information in explaining clinically relevant functional impairment. Our results demonstrate that late‐life neuropsychological test performance can be formally characterized by multivariate connectome‐based predictive models, and further translational evidence is needed when developing theoretically valid and clinically incremental predictive models.

In the current connectome‐based predictive modeling, tests mainly representing executive function and processing speed (Fluency, Trail Making Test, and Digit Symbol Coding) showed relatively high predictive accuracy. Notably, these neuropsychological tests have consistently shown prognostic value in predicting the progression of dementia alongside episodic memory function (Amieva et al., 2014; Ewers et al., 2012; Mortamais et al., 2017; Younes et al., 2019). Tests that assess executive and processing speed function respond sensitively to subcortical and vascular lesions, and subtle cognitive decline in these domains may reflect increased dementia risk through a source somewhat independent of typical Alzheimer's pathophysiology (Chouiter et al., 2016; Hedden et al., 2012; Jiang et al., 2018; Parks et al., 2011; Rabin et al., 2019). Accordingly, the decrease in the predictive accuracy was most prominent when excluding the subcortical network, especially in predicting vegetable fluency, Trail Making Test (B), and Digit Symbol Coding tests. These results indicate that some portion of test performance requires neural information from the subcortical network connectivities.

The tests representing attention and working memory function were less predictable with the connectome‐based models. Several reasons for this low predictability are possible. First, in contrast to previous studies that measured attentional performance with extensive trials, the Digit Span test included in the battery comprises only 12 trials and may therefore have provided less reliable information. Second, Digit Span tests are relatively less sensitive to the pathophysiological processes of vascular or Alzheimer's disease (Belleville et al., 2014; Petersen et al., 2010; Ramirez‐Gomez et al., 2017). It is possible that the reliability of predictive neural correlates is largely driven by the test's relevance to the pathological process of late‐life neurocognitive disorders.

Another possible explanation for the low predictive accuracy, especially in the Digit Span tests, is a nonordinal brain–behavior relationship. Unlike other neuropsychological tests that require homogeneous and repetitive trials, Digit Span test trials are incrementally ordered in terms of task load. Although working memory tasks induce a parametric increase in neural response as a function of task load (i.e., the length of digits to maintain or manipulate), older adults undergoing age‐related brain changes fail to show a typical parametric increase, indicating early arrival at their maximum neural capacity (Nagel et al., 2009; Schneider‐Garces et al., 2010; Seghier & Price, 2018). As older adults utilize idiographic brain regions to compensate for decreased neural efficiency, the cognitive process during Digit Span tests may require neural features that are not uniform and ordinal (Carp, Gmeindl, & Reuter‐Lorenz, 2010). In other words, the corresponding neural correlates that each trial reflects may qualitatively differ across span lengths (e.g., 4–5 vs 7–8). These complications have also been referred to as Simpson's paradox, suggesting that the brain–behavior correlation can even be reversed across the subgroups or the individuals composing the population (Cabeza et al., 2018; Kievit, Frankenhuis, Waldorp, & Borsboom, 2013). For example, increased activity of the frontal cortex is one of the distinct features when comparing young adults and old adults, and it appears to be a beneficial sign for those who already underwent age‐related changes (Cabeza, Anderson, Locantore, & McIntosh, 2002; Davis, Kragel, Madden, & Cabeza, 2012). Interestingly, our study showed that the predictive model of the Digit Span Forward test predicted worse performance on Trail Making Test A in the external dataset (r = −0.21). This result suggests that the behavioral relevance of neural correlates can be easily reversed, and more fine‐grained decomposition of individual differences is required in the future (Borsboom et al., 2016; Michell, 2012).

In the practice of connectome‐based predictive modeling, identifying which brain regions constitute the models has been a challenging issue. By conducting a leave‐network‐out analysis, logically similar to hierarchical regression, we examined whether the necessary predictive information is embedded in specific units of brain predictors (Yarkoni & Westfall, 2017). Consistent with previous attempts to establish the regional specificity of predictive models, excluding a large portion of functional networks (5–47% of edges) minimally affected the predictive performance, indicating that individual differences in neuropsychological functions are not solely based on a specific functional network (Nielsen, Barch, Petersen, Schlaggar, & Greene, 2019; Rosenberg, Finn, Scheinost, Constable, & Chun, 2017). One notable previous study also showed that cognitive impairment in associative domains (attention and memory function) could be better predicted with diverse contributions of functional networks. In contrast, impairment in less‐associative domains (visual, somatomotor) can be better predicted with the locational information of stroke lesions (Siegel et al., 2016). In other words, if a neuropsychological test requires a collaboration of multiple cognitive modules, it becomes less likely that a unitary neural predictor will be found. In general, it appears that neural disruption in multiple information processing modules (i.e., functional networks) combinatorially leads to behavioral impairment in the neuropsychological tests.

Moreover, when examining the optimal sparsity of the model, our results showed that utilizing a large proportion of the connectivity predictors yielded optimal predictive accuracy. Most of the test models required a large share of the total connectivities (|r| > .01–.06; 10,071–25,651 features) in order to achieve the best accuracy. While some tests (Digit Span Backward, Digit Symbol Coding) largely maintained the original predictability even when a stronger filter threshold was applied, the decreasing tendency was consistent across all tests. This result indicates that previous studies capturing focal neural correlates with conservative multiple comparison corrections (i.e., familywise error rate) may have omitted behaviorally relevant neural features (Masouleh et al., 2019). If a researcher identifies neural correlates by applying a relatively strong univariate test threshold, such focal models may not fully represent the highly distributed nature of the neuropsychological functions. Also, the predictive model that selects sparse features (higher α regularization) showed poorer predictive accuracy. Consistent with previous studies, accurate individualized prediction of test performance requires a fine‐grained combination of neural features rather than highly selective neural features (Cui & Gong, 2018; Gao et al., 2019; Hatoum et al., 2019).

This viewpoint may seem to run counter to the extensive discussions that have been made on the highly specified functional roles of the brain networks (Buckner, Krienen, & Yeo, 2013; Fox & Raichle, 2007; Vaidya, Pujara, Petrides, Murray, & Fellows, 2019). If functional neuroimaging reflects available regional neural resources, it is plausible that inter‐individual differences mainly stem from the relevant brain areas (Lebreton, Bavard, Daunizeau, & Palminteri, 2019; Navon & Gopher, 1979; Poldrack, 2015). However, our brain‐wide predictive models suggest that inter‐individual differences in cognitive function reflect not only focal brain characteristics but also distributed network systems (Fox, 2018; Sutterer & Tranel, 2017). This may be due to the high interdependency of functional connectivities. Even small and focal brain lesions may produce neural changes that extend to adjacent connectivities (Fornito, Zalesky, & Breakspear, 2015; Gratton, Nomura, Pérez, & D'Esposito, 2012). This emergent property of the network may result in highly dispersed neural correlates of cognitive functions (Alstott, Breakspear, Hagmann, Cammoun, & Sporns, 2009; Stam, 2014). A growing body of research using other neuroimaging modalities also suggests that previously identified regional specificity of neurocognitive function (i.e., frontal lobe task), in fact, reflects distributed effects of inter‐dependent neural systems (Burgess & Stuss, 2017; Cole, Yarkoni, Repovš, Anticevic, & Braver, 2012). Future work is needed to bridge the explanatory gap between functional connectivity and dynamic reorganization of neural states in performing cognitive tasks (Barbey, 2018; Gu et al., 2015; Shine et al., 2015). Our results suggest that a single sensory modality alone, especially the visual modality, is less predictive of the cognitive tests than the higher‐order association systems.

It should be noted, however, that focally selected connectivities also showed comparable predictive accuracies. For example, thresholding connectivities into a set of highly selective features preserved prediction accuracy, to some degree, in several tests (i.e., Digit Span Backward, Fluency, Trail Making A). This tendency was also observed when a single functional network was used in the prediction. These results indicate that most of the individual differences can be represented with a relatively small proportion of important connectivities, and taking every other neural feature into account adds minimally to the total predictability. It is possible that neurodegenerative pathology is typically characterized by changes in brain regions with hub properties, and individual differences in topologically important regions may play a more significant role in predicting neuropsychological performance, especially in the early stage (Fischer, Wolf, & Fellgiebel, 2019).

In this study, we cross‐validated the predictive model in both internal (holdout) and external (KSHAP) test datasets. We found that some neuropsychological tests (Trail Making Test A, Fluency – vegetable/supermarket) maintained similar predictive accuracy even when applied to the external study population. In contrast, most of the tests showed decreased predictive accuracy in the external dataset. This study provides novel evidence that the extent to which a brain–behavior predictive model is replicable differs across specific tests. Although the matched sets of tests are highly homologous, even minor differences in test elements (e.g., digit number orders, semantic category, trail interference component, and story contents) may have significantly affected the qualitative attributes of the tests. This point warrants caution in developing replicable and generalizable brain predictive models. Although previous studies have verified predictive models with internal cross‐validation, this may not guarantee predictability in a novel dataset, and recent studies including both internal and external validation of connectome‐based models have noted weak generalizability (Boeke, Holmes, & Phelps, 2019; Dinga et al., 2019; Fountain‐Zaragoza, Samimy, Rosenberg, & Prakash, 2019; Yoo et al., 2018). Another possible factor that affects generalizability is demographic heterogeneity. It has been well‐documented that the psychometric quality and construct representativeness of neuropsychological tests may differ across the demographics and clinical status of the study population (Bertola et al., 2019; Mungas, Widaman, Reed, & Tomaszewski Farias, 2011; Siedlecki et al., 2010; Siedlecki, Honig, & Stern, 2008). Also, lifespan intellectual experience, including educational attainment, may alter brain‐behavior relations (Franzmeier et al., 2016; O'Shea et al., 2018; Resende et al., 2018; Steffener et al., 2014). Further examination is needed to account for the modifiers of connectome‐based models across stratified demographic characteristics (e.g., age range, years of education, and socioeconomic status).
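The contrast between internal and external validation can be sketched as follows. This is a toy illustration rather than the study's pipeline: it assumes a single summary connectivity feature, a one-predictor least-squares model, and simulated cohorts whose sizes match the reported samples (n = 428, 216, 151). The slopes, noise levels, and function names are hypothetical, with the external cohort given a weaker feature-score relation purely for demonstration.

```python
import math
import random


def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def mean_absolute_error(predicted, observed):
    """Average absolute difference between predicted and observed scores."""
    return sum(abs(p - o) for p, o in zip(predicted, observed)) / len(observed)


def simulate_cohort(rng, n, slope, noise_sd):
    """Toy cohort: one summary connectivity feature and one test score."""
    feature = [rng.gauss(0, 1) for _ in range(n)]
    score = [slope * f + rng.gauss(0, noise_sd) for f in feature]
    return feature, score


rng = random.Random(1)
train = simulate_cohort(rng, 428, slope=1.0, noise_sd=2.0)
internal = simulate_cohort(rng, 216, slope=1.0, noise_sd=2.0)
external = simulate_cohort(rng, 151, slope=0.5, noise_sd=2.0)  # weaker relation

# The model is fitted once, on the training cohort only.
intercept, slope = fit_line(*train)


def evaluate(cohort):
    """Apply the frozen model to a cohort; return (r, MAE)."""
    feature, observed = cohort
    predicted = [intercept + slope * f for f in feature]
    return pearson_r(predicted, observed), mean_absolute_error(predicted, observed)


for name, cohort in (("internal", internal), ("external", external)):
    r, mae = evaluate(cohort)
    print(f"{name}: r = {r:.2f}, MAE = {mae:.2f}")
```

The key design point is that the model parameters are frozen after training, so any drop in accuracy on the external cohort reflects a difference between populations or test versions rather than refitting.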

In the previous studies, meaningful attempts were made using a similar predictive modeling approach (Lin et al., 2018; Meskaldji et al., 2016; Moradi, Hallikainen, Hänninen, & Tohka, 2017; Shim et al., 2017). While predictive patterns of neuropsychological test performance were often identified, studies typically summed various domains of functions into a total score or only examined a single domain (i.e., episodic memory function). Thus, the differential predictabilities across theoretically distinct cognitive domains were mostly unexamined. Identifying the generalizability or specificity of the predictive models will be crucial in developing formal theories of inter‐subject neuromarker of cognitive functions (Jangraw et al., 2018; Rosenberg et al., 2015, 2018; Woo et al., 2017).

In examining the correlation between the predicted scores and the behavioral scores, the evidence of convergent and discriminant validity (i.e., the distinctiveness of model predictability) was unclear. While we hypothesized that each of the predictive models represents individual differences in the corresponding cognitive test that trained the model, we observed several scores that showed generally stronger predictability across other tests. This result may arise because a large proportion of the neural correlates is based on individual differences shared across cognitive domains. The latent factor structure of neuropsychological tests typically shows high correlations between distinct cognitive domains (Greenaway, Smith, Tangalos, Geda, & Ivnik, 2009; Park et al., 2012), and a relatively small portion of variance may be uniquely attributable to each cognitive test. It has been suggested that age‐related decline in diverse domains of cognitive function is primarily driven by preceding changes in processing speed function (Salthouse, 1996a, 1996b), due to major late‐life neuropathological changes that are typically observed across diffuse brain areas (Fjell et al., 2014; Habes, Erus, et al., 2016). Accordingly, most of the identified brain‐behavior relationships seem to be composed of common cognitive factors (S. Lee, Habeck, Razlighi, Salthouse, & Stern, 2016; Salthouse et al., 2015). Previous studies have shown that multivariate functional connectivity patterns tend to capture a strong covarying latent structure that commonly explains the variance of multiple cognitive functions (Perry et al., 2017; Smith et al., 2015). Although predictive modeling captures neural correlates of general cognitive functions, unique correlates of each domain can be masked if the given study population does not include subgroups that show heterogeneous cognitive impairment profiles (Delis, Jacobson, Bondi, Hamilton, & Salmon, 2003). The inclusion of various clinical subgroups may sharpen the unique specificity of each neuropsychological test (Corbetta et al., 2015; Sachdev et al., 2014).

This study also adds to a growing literature proposing that neuroimaging measures should be adaptable to translational goals (Arbabshirani, Plis, Sui, & Calhoun, 2017; Kapur, Phillips, & Insel, 2012; Woo et al., 2017). Regression models are suitable when neuropsychiatric disorders are essentially characterized by continuous dimensions of behavioral symptoms. In this way, the model can capture subtle individual differences that would have been masked by binarized classification (Altman & Royston, 2006). Neurobehaviorally translated scores of cognitive impairment may validly characterize the behavioral significance and prognostic meaning of the preceding pathophysiological process of interest and play an important role in formalizing the dimensional construct of neurocognitive disorders (Bilder & Reise, 2019; Cuthbert & Insel, 2013). In the future, predictive models may be able to gauge relative behavioral risk scores of dementia in generalized contexts, similar to previously developed brain pathology risk scores (Habes, Erus, et al., 2016; Habes, Janowitz, et al., 2016). Nevertheless, our results call for caution in applying predictive models to clinical use. In the current study, while predictive models of category fluency and long‐term episodic memory tests captured incremental clinical information that the original score did not provide, the explanatory size was minimal and far smaller than that of the behavioral scores. Despite the promise of neuroimaging‐based biomarkers in classifying the diagnosis and prognosis of neuropsychiatric disorders (Orrù, Pettersson‐Yeo, Marquand, Sartori, & Mechelli, 2012; Pellegrini et al., 2018; Rathore, Habes, Iftikhar, Shacklett, & Davatzikos, 2017), more evidence is needed to conclude that the identified neurobehavioral marker provides incrementally useful information (Jollans & Whelan, 2016; Moons et al., 2012).
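One common way to quantify incremental information of this kind is the gain in explained variance when a connectivity‐predicted score is added to a model that already contains the observed behavioral score. The sketch below is an assumption for illustration only: this section does not specify how the study tested incremental validity, and the actual analysis may differ (for example, it may use a categorical clinical outcome). The sketch relies on the standard identity that the R² gain equals the squared semipartial correlation. All names and the simulated data are hypothetical.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation; returns 0.0 if either input is constant."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def residualize(y, x):
    """Residuals of y after a simple linear regression of y on x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    return [(b - mean_y) - slope * (a - mean_x) for a, b in zip(x, y)]


def incremental_r2(outcome, baseline, added):
    """R^2 gain from adding `added` to a linear model containing `baseline`.

    Computed as the squared semipartial correlation: the squared correlation
    between the outcome and the part of `added` not explained by `baseline`.
    """
    unique_part = residualize(added, baseline)
    return pearson_r(outcome, unique_part) ** 2


# Hypothetical simulation: the predicted score overlaps with the observed
# score and contributes only a small unique effect to the outcome.
rng = random.Random(2)
n = 300
observed = [rng.gauss(0, 1) for _ in range(n)]
predicted = [0.4 * o + rng.gauss(0, 1) for o in observed]
outcome = [o + 0.2 * p + rng.gauss(0, 1) for o, p in zip(observed, predicted)]

baseline_r2 = pearson_r(outcome, observed) ** 2
gain = incremental_r2(outcome, observed, predicted)
print(f"R^2 of observed score alone: {baseline_r2:.3f}")
print(f"R^2 gained by adding the predicted score: {gain:.3f}")
```

Comparing the gain against the baseline R² makes the point in the paragraph concrete: a predicted score can add statistically detectable information while still explaining far less than the behavioral score itself.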

There are several limitations to the current study that should be noted. First, it is mostly unknown whether the FC modality can comprehensively represent various neural processes. Although FC is advantageous in bridging the theoretical gap between dynamic cognitive process and pathophysiology (Brier, Thomas, & Ances, 2014; Fox, 2018; Mill, Ito, & Cole, 2017; Tavor et al., 2016), its macroscale approach should be further specified with more direct pathophysiological processes relevant to current and future neuropsychological function. Combining other modalities and imaging biomarkers in the predictive model will clarify how many shared and unique behavioral features are ingrained in each modality (Hedden, Schultz, Rieckmann, Mormino, & Buckner, 2016; Pellegrini et al., 2018; Sui et al., 2018). Second, resting‐state FC based on low‐frequency fluctuation may not be an optimal modality for purely capturing focal neural phenomena. Initial regional change may induce a cascade of FC responses (Fornito et al., 2015), and both increased and decreased functional connectivities may be a result of secondary compensatory reorganization processes (Chiesa et al., 2019; Reijmer et al., 2015; Schultz et al., 2017). Combining the primary neuropathological signs in the predictive models will significantly enhance the interpretation of predictive models (Siegel et al., 2016). Finally, the current method defines brain regions only with a group‐norm atlas, rather than a personalized topology. However, recent studies underscore individual differences in functional parcellation and their relevance to behavioral traits (Cui et al., 2020). Future studies are necessary to delineate whether the prediction results are due to the strength of connectivities rather than individual differences in functional topography.

We also note the cautious implication of our approach with regard to the validity of behavioral test measures. While we critically evaluated the possible reasons why some test performances are not accurately represented in the patterns of functional connectivities, the predictability of a brain model itself does not ensure the validity of behavioral measures. Reliable and robust correspondence to neural correlates may indicate what biological properties the test measures, but specific and focal correspondence of neural correlates may instead compromise the comprehensive utility of the cognitive test to describe real‐world activities (i.e., ecological validity; Bilder & Reise, 2019; Kessels, 2019). Both the neuroscientific correspondence and ecological validity of the recently developed neurobehavioral markers need to be assessed alongside existing behavioral instruments.

CONFLICT OF INTEREST

We declare no conflicts of interest.

Supporting information

FIGURE S1 Functional network parcellation map from Shen, Tokoglu, Papademetris, and Constable (2013)

FIGURE S2 Prediction accuracy (correlation between FC‐predicted score and observed NP score) in the test set (n = 216) when all covariates (age, education, sex, and head motion) are adjusted. The adjustment is made by residualizing the effect in the testing dataset. The correlation coefficients of 1,000 iterated prediction procedures are plotted. Digit_F, digit span forward; Digit_B, digit span backward; Flu_Ani/Veg, category fluency (animal/vegetable); Trail_A, Trail Making Test Part A; Trail_B, Trail Making Test Part B; LogiMem_imm, WMS‐R Logical Memory I immediate recall; LogiMem_del, WMS‐R Logical Memory II delayed recall; Boston, Boston Naming Test; MAE, mean absolute error

FIGURE S3 Feature filtering result. Averaged number of connectivities selected in the predictive model across feature filtering threshold (correlation r = 0.00, 0.01,…,0.18). A higher filter threshold produces a predictive model composed of sparse and fewer predictors

FIGURE S4 Iterated predictive accuracy (mean absolute error) across a feature filtering threshold. A higher threshold produces generally lower predictive accuracy in the holdout test set. Error bar indicates SD of 50 iteration results. The red dot indicates the highest accuracy point

FIGURE S5 Feature sparsity result. The total number of connectivities that survived in the predictive model across α hyperparameters (α = 0: ridge regression, α = 1: LASSO regression). (a) The number of surviving features across α hyperparameter. Higher α produces a predictive model composed of sparse and fewer predictors. Y‐axis was log‐scaled. (b) Iterated predictive accuracy across α hyperparameter. Higher α produces lower predictive accuracy in the holdout test set. Error bar indicates SD of 50 iteration results. The red dot indicates the highest accuracy point

FIGURE S6 Prediction performance (r) using only a single functional network. Within (network‐to‐network) and between edges (network‐to‐all others) were excluded from the same predictive modeling. The red dashed line indicates full model performance when predicting with all of the functional networks (25,651 features). MedF, medial frontal; FP, frontoparietal; DMN, default mode network; Sub/Sal, subcortical/salience; Mot/Aud, sensorimotor and auditory; MVis, medial visual (1); LVis, lateral visual (2); VisA, visual association

FIGURE S7 External prediction accuracy (r) of models trained with either the total sample (OASIS‐3, n = 644) or the cognitively unimpaired OASIS‐3 sample (CDR = 0)

FIGURE S8 External prediction accuracy (r) of models trained with either the total sample (OASIS‐3, n = 644) or stratified education groups (7–12 years, n = 132; 13–15 years, n = 124; 16–17 years, n = 187; 18–29 years, n = 198)

TABLE S1 Prediction result from the KSHAP model to the OASIS‐3 dataset. The model was trained with the KSHAP dataset (n = 151) and tested in OASIS‐3 total dataset (n = 644). Correlation (r) between FC‐predicted score and observed behavior score in the OASIS‐3 dataset is presented

ACKNOWLEDGMENTS

Data were provided in part by OASIS‐3 Principal Investigators: T. Benzinger, D. Marcus, J. Morris; National Institutes of Health (NIH), P50AG00561, P30NS09857781, P01AG026276, P01AG003991, R01AG043434, UL1TR000448, and R01EB009352. This research is supported by the National Research Foundation of Korea (NRF‐2017S1A3A2067165), funded by the Ministry of Education, Science and Technology. We thank W. Ahn, S. Hahn, J. Lee, and C. Woo for the constructive and critical feedback.

Kwak S, Kim H, Kim H, Youm Y, Chey J. Distributed functional connectivity predicts neuropsychological test performance among older adults. Hum Brain Mapp. 2021;42:3305–3325. 10.1002/hbm.25436

Funding information

National Research Foundation of Korea, Grant/Award Number: NRF‐2017S1A3A2067165; Ministry of Education, Science and Technology; National Institutes of Health (NIH), Grant/Award Numbers: R01EB009352, UL1TR000448, R01AG043434, P01AG003991, P01AG026276, P30NS09857781, P50AG00561

DATA AVAILABILITY STATEMENT

Data available on request due to privacy/ethical restrictions.

REFERENCES

- Alstott, J., Breakspear, M., Hagmann, P., Cammoun, L., & Sporns, O. (2009). Modeling the impact of lesions in the human brain. PLoS Computational Biology, 5(6), e1000408. 10.1371/journal.pcbi.1000408

- Altman, D. G., & Royston, P. (2006). The cost of dichotomising continuous variables. BMJ, 332(7549), 1080. 10.1136/bmj.332.7549.1080

- Amieva, H., Mokri, H., Le Goff, M., Meillon, C., Jacqmin‐Gadda, H., Foubert‐Samier, A., … Dartigues, J. F. (2014). Compensatory mechanisms in higher‐educated subjects with Alzheimer's disease: A study of 20 years of cognitive decline. Brain, 137(4), 1167–1175. 10.1093/brain/awu035

- An, H., & Chey, J. (2004). A standardization study of the story recall test in the elderly Korean population. The Korean Journal of Clinical Psychology, 23(2), 435–454.

- Arbabshirani, M. R., Plis, S., Sui, J., & Calhoun, V. D. (2017). Single subject prediction of brain disorders in neuroimaging: Promises and pitfalls. NeuroImage, 145, 137–165. 10.1016/j.neuroimage.2016.02.079

- Arenaza‐Urquijo, E. M., & Vemuri, P. (2018). Resistance vs resilience to Alzheimer disease. Neurology, 90(15), 695–703. 10.1212/WNL.0000000000005303

- Avery, E. W., Yoo, K., Rosenberg, M. D., Greene, A. S., Gao, S., Na, D. L., … Chun, M. M. (2019). Distributed patterns of functional connectivity predict working memory performance in novel healthy and memory‐impaired individuals. Journal of Cognitive Neuroscience, 32, 1–15. 10.1162/jocn_a_01487

- Barbey, A. K. (2018). Network neuroscience theory of human intelligence. Trends in Cognitive Sciences, 22(1), 8–20. 10.1016/j.tics.2017.10.001

- Bayram, E., Caldwell, J. Z. K., & Banks, S. J. (2018). Current understanding of magnetic resonance imaging biomarkers and memory in Alzheimer's disease. Alzheimer's & Dementia, 4, 395–413. 10.1016/j.trci.2018.04.007