Key Points

Question

Can artificial intelligence guide novice operators to obtain echocardiographic scans suitable for limited diagnostic assessment?

Findings

In this diagnostic study, 8 nurses without prior ultrasonography experience used artificial intelligence guidance to scan 30 patients each with a 10-view echocardiographic protocol (240 total patients). Five expert echocardiographers blindly reviewed these scans and felt they were of diagnostic quality for left ventricular size and function in 98.8% of patients, right ventricular size in 92.5%, and presence of pericardial effusion in 98.8%.

Meaning

Artificial intelligence can extend the reach of echocardiography to assess the 4 basic parameters of left ventricular size and function, right ventricular size, and presence of a nontrivial pericardial effusion to sites with limited expertise.

This diagnostic study tests whether nurses who were naive to ultrasonographic use could obtain 10-view transthoracic echocardiographic studies of diagnostic quality using deep-learning artificial intelligence–based software.

Abstract

Importance

Artificial intelligence (AI) has been applied to analysis of medical imaging in recent years, but AI to guide the acquisition of ultrasonography images is a novel area of investigation. A novel deep-learning (DL) algorithm, trained on more than 5 million examples of the outcome of ultrasonographic probe movement on image quality, can provide real-time prescriptive guidance for novice operators to obtain limited diagnostic transthoracic echocardiographic images.

Objective

To test whether novice users could obtain 10-view transthoracic echocardiographic studies of diagnostic quality using this DL-based software.

Design, Setting, and Participants

This prospective, multicenter diagnostic study was conducted in 2 academic hospitals. A cohort of 8 nurses who had not previously conducted echocardiograms was recruited and trained with AI. Each nurse scanned 30 patients aged at least 18 years who were scheduled to undergo a clinically indicated echocardiogram at Northwestern Memorial Hospital or Minneapolis Heart Institute between March and May 2019. These scans were compared with those of sonographers using the same echocardiographic hardware but without AI guidance.

Interventions

Each patient underwent paired limited echocardiograms: one from a nurse without prior echocardiography experience using the DL algorithm and the other from a sonographer without the DL algorithm. Five level 3–trained echocardiographers independently and blindly evaluated each acquisition.

Main Outcomes and Measures

Four primary end points were sequentially assessed: qualitative judgement about left ventricular size and function, right ventricular size, and the presence of a pericardial effusion. Secondary end points included 6 other clinical parameters and comparison of scans by nurses vs sonographers.

Results

A total of 240 patients (mean [SD] age, 61 [16] years old; 139 men [57.9%]; 79 [32.9%] with body mass indexes >30) completed the study. Eight nurses each scanned 30 patients using the DL algorithm, producing studies judged to be of diagnostic quality for left ventricular size, function, and pericardial effusion in 237 of 240 cases (98.8%) and right ventricular size in 222 of 240 cases (92.5%). For the secondary end points, nurse and sonographer scans were not significantly different for most parameters.

Conclusions and Relevance

This DL algorithm allows novices without experience in ultrasonography to obtain diagnostic transthoracic echocardiographic studies for evaluation of left ventricular size and function, right ventricular size, and presence of a nontrivial pericardial effusion, expanding the reach of echocardiography to clinical settings in which immediate interrogation of anatomy and cardiac function is needed and settings with limited resources.

Introduction

Echocardiography is the most common cardiac imaging modality, proven effective in diagnosis and management of heart failure, ischemia, valve disease, and congenital abnormalities, among others. In the US, echocardiography is typically performed in dedicated laboratories, with acquisition by expertly trained sonographers and interpretation by board-certified cardiologists.

The application of artificial intelligence (AI) to echocardiography has grown tremendously but has largely focused on analysis of images already acquired by sonographers, from chamber quantification to disease detection.1,2,3,4,5,6,7,8,9,10,11,12 The AI applications that target the acquisition of ultrasonographic images represent a novel area of investigation.

In many clinical settings, echocardiography is unavailable because of a lack of trained personnel. In these settings, nonexpert users may perform limited examinations (point-of-care ultrasound [POCUS]) using handheld or portable machines, but quality is nonuniform, with risks for nondiagnostic and misleading imaging.13 POCUS is frequently used in emergency departments; intensive care units; outpatient and preoperative clinics; and medically underserved areas, from the rural US to low- and middle-income nations to manned space flights. POCUS also enables frontline clinicians to acquire echocardiograms in patients with coronavirus disease 2019 (COVID-19), limiting the exposure of sonographers. Technology based on AI may allow acquisition of diagnostic-quality ultrasonographic studies by users with minimal training in these settings.

Novel software, developed using deep-learning (DL) technology and recently authorized by the US Food and Drug Administration (FDA),14 can provide real-time prescriptive guidance (turn-by-turn instructions) to novice operators to obtain transthoracic echocardiographic (TTE) images that allow for limited diagnostic assessment of cardiac chambers. The objective of this prospective trial was to determine whether novice users without prior experience in ultrasonography could obtain 10 standard echocardiographic views using this DL-based software, allowing assessment of key cardiac parameters.

Methods

Ethical Approval

The study was approved by the institutional review boards at both participating institutions (Northwestern Memorial Hospital and the Minneapolis Heart Institute) and was conducted in accordance with the International Conference on Harmonisation’s Guideline for Good Clinical Practice, document E6. Written consent was obtained from each participant.

Development and Function of AI-Guided Image Acquisition Software

The AI-guided image acquisition software (Caption Guidance [Caption Health, previously known as Bay Labs]), described in greater detail in the eAppendix in the Supplement and shown schematically in eFigure 1 in the Supplement, provides real-time guidance during scanning to assist the user in obtaining anatomically correct images from standard transthoracic echocardiographic (TTE) transducer positions. The AI guidance is software only, developed with DL to emulate sonographer expertise. The software, designed to be compatible with multiple ultrasonography vendors, was installed on a commercially available system (uSmart 3200t Plus [Terason]). The software monitors image quality continuously, simultaneously providing iterative prescriptive cues to improve the image via the DL algorithm.

The guidance software used convolutional neural networks constructed by stacking computational layers, each taking input from the layer below, transforming and passing it along to the layer above. The software has several interconnected DL algorithms making 3 simultaneous estimates: (1) diagnostic quality of the imagery, (2) 6-dimensional geometric distance (by position and orientation) between current probe location and the location anticipated to optimize the image, and (3) corrective probe manipulations to improve diagnostic quality. Importantly, the algorithm makes these estimates from the ultrasonographic imagery alone; no trackers, fiducial markers, or additional sensors are required.

The DL algorithms were trained using more than 5 million observations associating transducer orientation, the diagnostic correctness of the resulting image, and the outcome of subsequent manipulations with diagnostic quality. This training data set came from 15 registered sonographers via scans of individuals with a range of body mass index (BMI) values and pathological conditions, further annotated for quality by expert sonographers and cardiologists (A.N., R.P.M., R.M.L., N.J.W., and J.D.T.). eFigure 1 in the Supplement depicts the DL model training data set, expert labeling for image quality, algorithm optimization, and run-time operation during the study.

The DL algorithm estimates image quality via a component called the quality meter, suggesting probe manipulations using prescriptive guidance (eFigure 1 in the Supplement). When the real-time quality meter exceeds a preset threshold, it automatically records a video clip (termed an auto-capture). Quality is continuously monitored throughout scanning, so the operator can retrospectively save the best clip observed if the auto-capture threshold is not exceeded. If auto-capture is not achieved within 2 minutes, the user may activate the option called save best clip or continue scanning to achieve auto-capture. These algorithms operate together to improve the ultrasonographic image, as in eFigure 1 in the Supplement, in which prescriptive guidance improves a parasternal long-axis view. eFigure 2 in the Supplement and Video 1 show additional detail on the user interface.
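The capture logic described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the vendor's implementation: the `Clip` type, the `acquire_view` function, the 0-to-1 quality scale, and the threshold value of 0.8 are all hypothetical, and the sketch assumes the operator chooses the save best clip option as soon as the 2-minute mark passes.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple


@dataclass
class Clip:
    clip_id: int
    quality: float  # hypothetical quality-meter reading on a 0-to-1 scale


def acquire_view(
    stream: Iterable[Tuple[float, Clip]],
    threshold: float = 0.8,        # assumed value; the real preset is not published here
    timeout_s: float = 120.0,      # the 2-minute mark described in the text
    save_best_after_timeout: bool = True,
) -> Tuple[Optional[Clip], str]:
    """Walk (elapsed_seconds, clip) pairs and decide how the view is captured."""
    best: Optional[Clip] = None
    for elapsed_s, clip in stream:
        # Auto-capture fires as soon as the quality meter exceeds the threshold.
        if clip.quality > threshold:
            return clip, "auto-capture"
        # Otherwise keep track of the best clip observed so far.
        if best is None or clip.quality > best.quality:
            best = clip
        # After the timeout the operator may fall back to the best clip seen.
        if elapsed_s >= timeout_s and save_best_after_timeout:
            return best, "save-best-clip"
    # Scanning ended without auto-capture; the best clip is what remains.
    return best, "save-best-clip"
```

Setting `save_best_after_timeout=False` models the other option mentioned in the text, in which the operator keeps scanning past 2 minutes in the hope of reaching auto-capture.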

Video 1. Machine Learning–Guided Echocardiogram Image Acquisition.

The video demonstrates the interaction of a user with the software to obtain diagnostic echocardiographic images, illustrating the turn-by-turn instructions; the quality meter, which indicates how close the user is to a diagnostic image and dictates when a clip is automatically recorded (termed an auto-capture); and the ability to manually capture the best clip image if the auto-capture is never achieved.

Study Design

Patients at least 18 years old who were scheduled to undergo a clinically indicated echocardiogram were recruited between March and May 2019 at 2 academic medical centers: Northwestern Memorial Hospital, in Chicago, Illinois, and Minneapolis Heart Institute, in Minneapolis, Minnesota. Both inpatients and outpatients were recruited; individuals were excluded if they were unable to lie flat, had a severe chest wall deformity (including recent chest surgery), or were unable or unwilling to provide informed consent. To improve generalizability, patients were stratified evenly across BMI categories (<25, 25-30, and >30) and to ensure at least one-third had structural or functional cardiac abnormalities. After reviewing a list of daily scheduled echocardiograms, patients were approached by study coordinators for informed consent, taking into consideration BMI categories and suspected pathological conditions.

Registered nurses without prior experience performing or interpreting ultrasonography were recruited for the trial from hospital personnel. Four nurses were recruited from the heart failure service at Northwestern Memorial Hospital and 4 others with a medical or surgical background were recruited from the Clinical Trials Unit at Minneapolis Heart Institute. Each nurse underwent a 1-hour didactic session to become familiarized with the ultrasonography machine and AI guidance. Before undertaking the study, each nurse performed 9 practice scans on volunteer models and demonstrated familiarity with the software. A total of 8 nurses were included in the study, with each nurse performing 30 scans.

Nurses were instructed to obtain 10 standard TTE views: a parasternal long-axis view; parasternal short-axis views (at aortic, mitral, and papillary muscle levels); apical 4-chamber, 5-chamber, 2-chamber, and 3-chamber views; and subcostal 4-chamber and inferior vena cava (IVC) views.15 In addition to the scans by nurses, as part of the study, a duplicate control scan was obtained by a registered cardiac sonographer within 2 weeks (generally on the same day) using the Terason hardware but without AI guidance. This control scan was obtained in addition to the clinical echocardiogram, which was performed with hospital equipment and interpreted in the standard fashion but not used in the analysis. These 3 scans could be obtained in any order. The nurse scans were obtained independently, without any assistance other than the AI guidance, and the nurse was not present during any sonographer scan or clinical echocardiogram examination. The Terason machine stored ultrasonography images at 30 frames per second, with 2-second to 4-second clips recorded for each view.

Following all study and control examinations, a panel of 5 expert echocardiographers (R.M.L., N.J.W., S.G., D.R., and S.H.L.) independently (and blinded to whether the study was performed by a nurse or a sonographer) assessed whether each scan was of diagnostic quality. All readers were level 3–trained and certified by the National Board of Echocardiography. This end point was selected in consultation with the FDA as part of the De Novo submission and was designed to capture the basic essentials of what constitutes a diagnostic study, in which all images are viewed and a judgement is made as to whether a given cardiac parameter can be interpreted by the reader. A De Novo submission was required because no predicate device existed for the AI guidance that could support a 510(k) clearance. This appeared to be the first time that a diagnostic ultrasonographic device required a De Novo submission and was granted breakthrough status by the FDA.

Four prospectively designated primary end points were evaluated for the study, which assessed whether the nurse examination, taken as a whole, was of sufficient quality for the expert readers to make qualitative visual assessment of (1) left ventricular (LV) size, (2) LV function, (3) right ventricular (RV) size, and (4) the presence of nontrivial pericardial effusion. The end points were tested sequentially. The FDA agreement required that at least 80% of scans be of sufficient quality for the particular assessment (ie, the 95% CI lower bound was >80%). Six additional echocardiographic parameters were assessed as secondary end points: qualitative assessment of RV function; left atrium size; structural assessment of the aortic, mitral, and tricuspid valves; and qualitative assessment of IVC size. Further secondary end points included comparing the diagnostic content of nurse scans vs sonographer scans obtained without DL software. Clinical interpretability required most of the expert readers’ approval; at least 3 of 5 readers had to agree that end points were assessable.
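The 3-of-5 reader rule can be written out as a short sketch. The function names `is_assessable` and `agreement_level` are hypothetical, and each reader's judgement is assumed to be recorded as a simple yes or no; the second function mirrors the unanimous, 4-of-5, and 3-of-5 breakdown that the panel variability table in the Supplement reports.

```python
from typing import Sequence


def is_assessable(reader_votes: Sequence[bool], min_agree: int = 3) -> bool:
    """True when at least `min_agree` of the 5 readers judged the parameter assessable."""
    if len(reader_votes) != 5:
        raise ValueError("the study panel had exactly 5 readers")
    return sum(bool(v) for v in reader_votes) >= min_agree


def agreement_level(reader_votes: Sequence[bool]) -> str:
    """Describe how many of the 5 readers shared the majority judgement."""
    if len(reader_votes) != 5:
        raise ValueError("the study panel had exactly 5 readers")
    yes = sum(bool(v) for v in reader_votes)
    majority = max(yes, 5 - yes)  # size of the larger camp, always 3, 4, or 5
    return {5: "unanimous", 4: "4 of 5", 3: "3 of 5"}[majority]
```

With 5 readers and a binary judgement there is always a majority, so no tie-breaking rule is needed in this sketch.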

Medical records were reviewed for demographic information (age, sex, and BMI) and known cardiac pathology. Further information is available at ClinicalTrials.gov (identifier: NCT03897140).

Statistical Analysis

Since the study sought to evaluate the performance of nurses in performing AI-assisted echocardiograms, the statistical analysis was designed to account for variance both of the enrolled patients (eg, body habitus, technical difficulty, cardiac abnormalities) and the 8 nurses. This analysis was performed via a multireader, multicase power analysis and sample size determination using iMRMC version 4.0 (FDA).16 The MRMC software calculated the 95% CIs around the point estimate for each of the 4 primary end points, which accounted for clustering within nurses. If the lower confidence limit was more than 80%, the end point passed; the 4 end points were tested sequentially. All analysis was conducted by a statistician unaffiliated with study funder Caption Health (Doug Milikien, MS, Accudata Solutions), and all data were made available to the FDA for their review.
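The pass rule and the sequential testing order can be illustrated with a simplified sketch. It substitutes a Wilson score interval for the iMRMC calculation, so it ignores clustering within nurses and its bounds will not reproduce the study's reported intervals; the function names are hypothetical, and the counts are those given in the Results (237, 237, 222, and 237 of 240 scans).

```python
import math
from typing import List, Sequence, Tuple


def wilson_lower(successes: int, n: int, z: float = 1.96) -> float:
    """Lower limit of the two-sided 95% Wilson score interval for a proportion."""
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half_width


def sequential_test(
    endpoints: Sequence[Tuple[str, int, int]], goal: float = 0.80
) -> List[Tuple[str, bool]]:
    """Test end points in order; stop at the first one whose lower limit is not above the goal."""
    results = []
    for name, successes, n in endpoints:
        passed = wilson_lower(successes, n) > goal
        results.append((name, passed))
        if not passed:
            break  # later end points are not tested once one fails
    return results


# Counts of diagnostic-quality nurse scans reported in the Results.
PRIMARY = [
    ("LV size", 237, 240),
    ("LV function", 237, 240),
    ("RV size", 222, 240),
    ("Pericardial effusion", 237, 240),
]
```

Calling `sequential_test(PRIMARY)` walks the 4 end points in order; under this simplified interval each lower limit sits above the 80% goal, which agrees in direction with the study's finding that all 4 end points passed.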

Using the results from preliminary data, we determined that a study of 8 nurses each scanning 30 patients (a total of 240 scans) would have statistical power of 0.98 for each parameter or greater than 0.92 for 4 sequentially tested parameters (see the eAppendix in the Supplement for more details).
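As a rough plausibility check on these two power figures, and not a reconstruction of the authors' calculation, the short sketch below shows what a per-parameter power of 0.98 implies for 4 parameters under two simple assumptions: independent tests, and a Bonferroni-style worst case. The variable names are illustrative.

```python
per_endpoint_power = 0.98
n_endpoints = 4

# If the 4 tests were independent, all 4 pass with probability 0.98 ** 4.
joint_if_independent = per_endpoint_power ** n_endpoints

# Without assuming independence, the chance that any test fails is at most
# 4 * (1 - 0.98), which gives a worst-case lower bound on the joint power.
worst_case_lower_bound = 1 - n_endpoints * (1 - per_endpoint_power)

print(f"independent tests: {joint_if_independent:.4f}")
print(f"worst-case bound:  {worst_case_lower_bound:.2f}")
```

Both values land at about 0.92, which is in line with the joint power quoted above.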

For normally distributed variables, means and SDs are reported; for nonnormal variables, medians and interquartile ranges are reported. For the 4 primary end points, the proportion of studies judged by the panel to be diagnostic was compared with the predefined acceptance criterion of 80%. For the primary and secondary parameters, the proportions judged clinically evaluable are reported with 95% CIs. Also reported in eTable 5 in the Supplement is the number of cases in which the cardiologist judgment was unanimous, shared by 4 of 5 experts, or shared by 3 of 5 experts.

Results

As shown in Figure 1, a total of 244 patients were enrolled (123 at Minneapolis Heart Institute and 121 at Northwestern Memorial Hospital). Six patients (2.5%) did not complete 1 or both examinations, while 238 patients (97.5%) completed both the nurse and sonographer examinations. One patient withdrew because of discomfort during the scan, determined to be unrelated to the guidance software, and another was withdrawn because of equipment malfunction. The remaining 4 withdrawals (1.6%) were logistical and unrelated to the study. In all cases in which a nurse began a scan, all 10 views were successfully acquired. A total of 240 patients (mean [SD] age, 61 [16] years old; 139 men [57.9%]; 79 [32.9%] with body mass indexes >30) completed the study; 153 (63.8%) had known cardiac pathology, including 51 (21.3%) with implanted cardiac devices (intracardiac leads and prosthetic valves).

Figure 1. Study Design.

MHI indicates the Minneapolis Heart Institute; mITT, modified intention to treat; NM, Northwestern Memorial.

eTable 1 in the Supplement lists the patient demographics, with clinical history and known diagnoses at the time of enrollment. eTable 2 in the Supplement shows findings from the clinical echocardiographic study and observed and known implanted devices at each enrolling site.

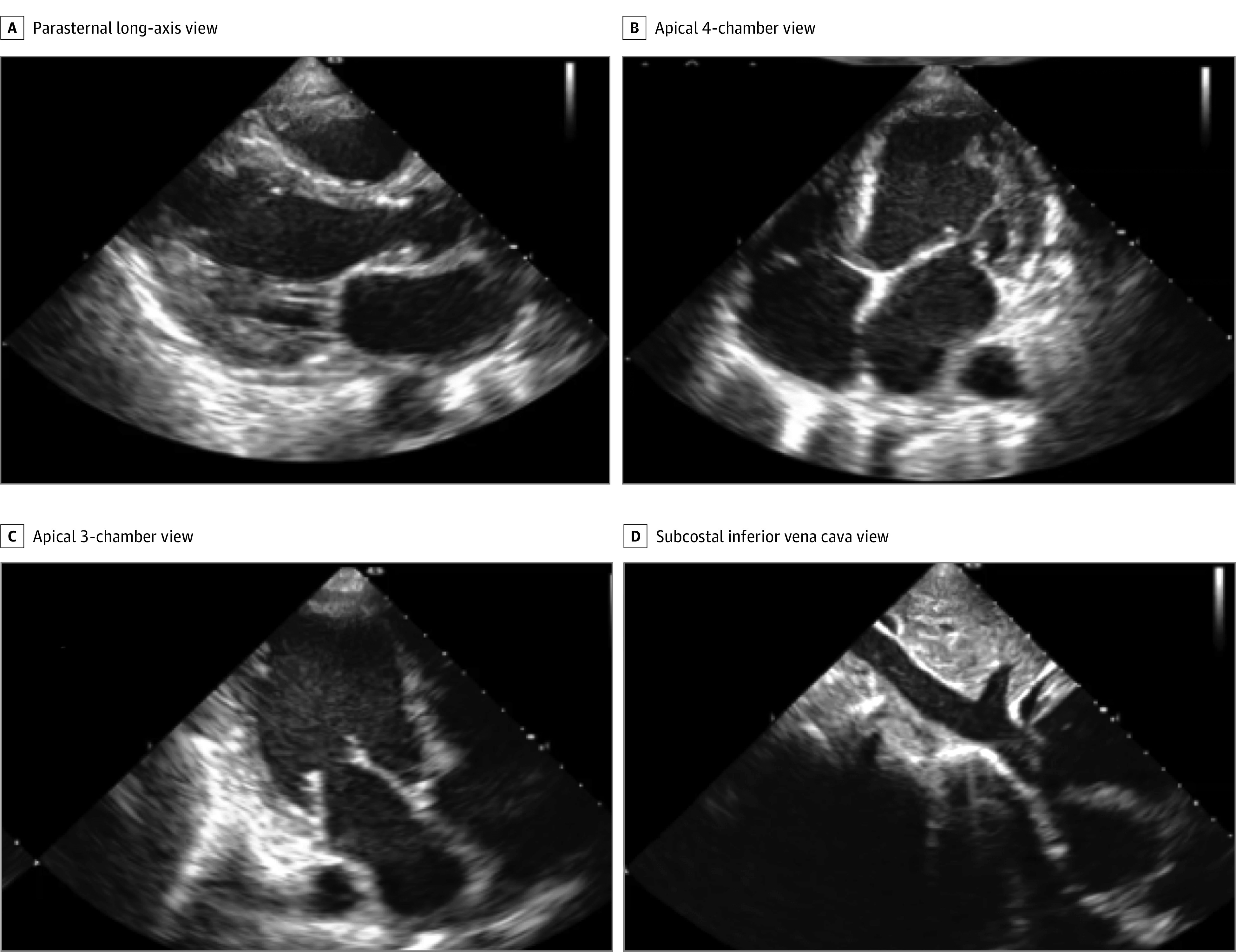

Of the 244 study patients, 240 were scanned by the 8 nurses and thus assessable for the primary and secondary end points. Figure 2, Video 2, and eFigure 3 in the Supplement show representative diagnostic-quality images obtained by a nurse. The nurses could scan and acquire images in all patients, with the median acquisition time for the 10 TTE images being 30 (range, 9-82) minutes. Overall, 1109 clips (46.2% of the nurse acquisitions) were auto-captured, while the rest used the save best clip option. From a technical perspective, the threshold of quality can be adjusted, resulting in higher or lower proportion of scans captured via auto-capture.

Figure 2. Representative Still Images of 4 of the 10 Standard Transthoracic Echocardiographic Views Acquired by a Nurse Using the Deep-Learning Algorithm That Were Judged to Be of Diagnostic Quality.

All 10 images are in eFigure 3 in the Supplement.

Video 2. Machine Learning–Guided Echocardiogram Image Acquisition—Sample Echocardiography Study.

Example of a nurse-obtained 10-view study demonstrating diagnostic-quality views: a parasternal long-axis view; parasternal short-axis aortic valve, mitral valve, and papillary muscle views; apical 4-chamber, 5-chamber, 2-chamber, and 3-chamber views; a subcostal 4-chamber view; and an inferior vena cava view.

For the 4 primary end points, the nurse scans were judged to have adequate quality to assess the clinical parameters in nearly all patients, including LV size, LV function, and pericardial effusion (Table 1) in 237 of 240 scans (98.8%) and RV size in 222 of 240 scans (92.5%). There was no significant difference in nurses’ ability to obtain diagnostic scans across BMI categories or between patients with vs without cardiac pathology (eTable 4 in the Supplement), nor any change as nurses scanned more patients (eTable 6 in the Supplement). For the secondary end points, scan quality was adequate in more than 90% of patients for all parameters, except for qualitative assessment of the IVC size (138 [57.5%]) and tricuspid valve (200 [83.3%]) (eTable 3 in the Supplement).

Table 1. Proportion of Nurse-Acquired Artificial Intelligence–Guided Echocardiography of Sufficient Quality to Assess Core Cardiac Clinical Parameters in Population Scanned by Nurses.

| End point | Clinical parameter examined by qualitative visual assessment | Performance goal, % | Total scans performed, No. | Scans of sufficient quality, No. | Scans of sufficient quality, % (95% CI) |
|---|---|---|---|---|---|
| 1 | Left ventricular size | 80 | 240 | 237 | 98.8 (96.7-100) |
| 2 | Global left ventricular function | 80 | 240 | 237 | 98.8 (96.7-100) |
| 3 | Right ventricular size | 80 | 240 | 222 | 92.5 (88.1-96.9) |
| 4 | Nontrivial pericardial effusion | 80 | 240 | 237 | 98.8 (96.7-100) |

See eTable 3 in the Supplement for corresponding results for the secondary parameters.

When comparing nurse scans and sonographer scans using the same hardware without guidance, there was no significant difference in the assessment of the 4 primary end points (Table 2), nor was there a significant difference among the secondary clinical parameters, except for IVC size (nurse, 135 [57.4%] vs sonographer, 215 [91.5%]), which is likely associated with the proximity of the IVC and descending aorta and is a clear target for further algorithm development. Unsurprisingly, there were more parameters assessed as diagnostic in the sonographer scans than nurse scans. As shown in eTable 5 in the Supplement, most assessments reflected unanimity among the readers (1870 of 2400 [77.9%] for the nurse scans; 2054 of 2400 [85.6%] for the sonographer scans).

Table 2. Comparison of Nurse-Acquired and Sonographer-Acquired Studies for Primary and Secondary Clinical Parameters.

| Image No. | Clinical parameter examined by qualitative visual assessment | Nurse examination, No. (%) [95% CI] | Sonographer examination, No. (%) [95% CI] | Nurse-sonographer difference, percentage points |
|---|---|---|---|---|
| 1 | Left ventricular size | 232 (98.7) [96.3-99.7] | 235 (100) [98.4-100.0] | −1.3 |
| 2 | Global left ventricular function | 232 (98.7) [96.3-99.7] | 235 (100) [98.4-100.0] | −1.3 |
| 3 | Right ventricular size | 217 (92.3) [88.2-95.4] | 226 (96.2) [92.9-98.2] | −3.9 |
| 4 | Nontrivial pericardial effusion | 232 (98.7) [96.3-99.7] | 234 (99.6) [97.7-100.0] | −0.9 |
| 5 | Right ventricular function | 214 (91.1) [86.7-94.4] | 226 (96.2) [92.9-98.2] | −5.1 |
| 6 | Left atrial size | 222 (94.5) [90.7-97.0] | 234 (99.6) [97.7-100.0] | −5.1 |
| 7 | Aortic valve | 215 (91.5) [87.2-94.7] | 228 (97.0) [94.0-98.8] | −5.5 |
| 8 | Mitral valve | 226 (96.2) [92.9-98.2] | 233 (99.1) [97.0-99.9] | −2.9 |
| 9 | Tricuspid valve | 195 (83.0) [77.6-87.6] | 217 (92.3) [88.2-95.4] | −9.3 |
| 10 | Inferior vena cava size | 135 (57.4) [50.9-63.9] | 215 (91.5) [87.2-94.7] | −34.1 |

This Table includes both study populations shown in Figure 1.
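The percentage-point differences in Table 2 can be reproduced from the counts with a short sketch. The denominator of 235 is inferred from the table itself (a sonographer count of 235 corresponds to 100%), and the helper names are illustrative. The differences appear to be taken between the rounded percentages, which this sketch assumes.

```python
N_PAIRED = 235  # inferred: 235 sonographer scans correspond to 100% in Table 2

# (nurse count, sonographer count) for a few rows of Table 2
TABLE2_COUNTS = {
    "Left ventricular size": (232, 235),
    "Right ventricular size": (217, 226),
    "Tricuspid valve": (195, 217),
    "Inferior vena cava size": (135, 215),
}


def pct(count: int, n: int = N_PAIRED) -> float:
    """Percentage of scans judged diagnostic, rounded to 1 decimal place."""
    return round(100 * count / n, 1)


def difference(nurse: int, sonographer: int) -> float:
    """Nurse minus sonographer, in percentage points, from the rounded percentages."""
    return round(pct(nurse) - pct(sonographer), 1)


for name, (nurse, sonographer) in TABLE2_COUNTS.items():
    print(f"{name}: {pct(nurse)} vs {pct(sonographer)} -> {difference(nurse, sonographer)}")
```

A negative value means a smaller share of nurse scans than sonographer scans was judged diagnostic for that parameter.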

Furthermore, the clinical assessment of 10 key cardiac parameters comparing normal conditions vs abnormalities based on the blinded evaluation from the expert cardiologists is presented in eTable 7 in the Supplement. When the studies were reviewed by expert echocardiographers (blinded to whether the acquisition was performed by the nurse or the sonographer), there was an agreement of greater than 90% between the nurse-acquired and sonographer-acquired studies when adjudicating whether LV size (95.7% [95% CI, 92.2%-97.6%]), LV function (96.6% [95% CI, 93.3%-98.2%]), RV size (92.5% [95% CI, 88.1%-95.3%]), RV function (92.9% [95% CI, 88.7%-95.7%]), presence of a nontrivial pericardial effusion (99.6% [95% CI, 97.6%-99.9%]), aortic valve structure (90.6% [95% CI, 85.9%-93.8%]), mitral valve structure (93.3% [95% CI, 89.3%-95.9%]), and tricuspid valve structure (95.2% [95% CI, 91.1%-97.4%]) were deemed either normal/borderline or abnormal.

Discussion

The application of AI to medical imaging is rapidly advancing. Most prior work has focused on interpretation or analysis of already acquired images, whether from radiography, computed tomography, magnetic resonance imaging, or ultrasonography. However, to our knowledge, no AI technology to guide image acquisition has been evaluated prospectively, especially with novice users. This study demonstrates that an AI algorithm can guide novices, without prior ultrasonography experience, to acquire 10 standard TTE images that, when analyzed together, provide limited diagnostic performance for the evaluation of LV size and function, RV size, and presence of a nontrivial pericardial effusion.

Echocardiography is a highly specialized imaging tool that is central to understanding cardiovascular pathology. Per the American College of Cardiology and American Society of Echocardiography, a level 3 echocardiographer (with the highest level of training) requires 9 cumulative months of specialized fellowship training in acquisition and interpretation of echocardiograms.17 Most sonographers spend at least 2 years in formal training before taking a registry examination. The ability to provide echocardiography outside the traditional laboratory setting is largely limited by a lack of trained sonographers and cardiologists to acquire and interpret images. Using this AI-based technology, individuals with no previous training may be able to obtain diagnostic echocardiographic clips of several key cardiac parameters.

Improvements in ultrasonography and computer hardware have led to the downsizing and cost reduction of ultrasonography machines, with handheld devices commercially available including standalone transducers interfacing with smart phones. The DL algorithm developed in this study is relatively compact (approximately 1.5 GB) and trained on images from multiple vendors, and it therefore could be ported to work on multiple platforms.

Our study met all FDA-prespecified primary end points, with consistent results across BMI categories and cardiac pathology, including potential distractors, such as pacemakers and prosthetic valves, with little difference between the nurse and sonographer scans. We designed this study to mimic clinical practice, in which the reader integrates multiple views to make a final diagnosis. For example, RV size may be indeterminate in parasternal and apical images but assessable in subcostal views. Similar to real-world interpretation, the blinded experts in this study had access to all views when judging quality. An alternative approach, where each clip is assessed individually for diagnostic quality, would be an interesting aspect to explore in the future.

In contrast with image acquisition, echocardiographic interpretation using AI has progressed in recent years. Multiple studies have demonstrated automated quantification of LV and RV volumes or ejection fraction, global longitudinal strain, and atrial size or function from both 2-dimensional and 3-dimensional acquisitions.1,5,6,10,11,12,18,19,20 Similarly, AI-driven disease identification has included aortic stenosis severity, LV wall motion abnormalities, differentiating causative mechanisms of LV hypertrophy (hypertrophic cardiomyopathy, cardiac amyloidosis, athlete heart), and grading diastolic dysfunction.2,7,8,9,10,11,12,21,22 Automated image interpretation has less variability than semiautomated or manual analyses. Combining AI-guided acquisition with automated interpretation could extend the reach of echocardiography to better recognize pathology.

This DL algorithm was developed to extend echocardiography access but not to replace sonographers who provide expert imaging. Combining this tool with automated image interpretation may allow cardiac screening in remote settings; an early version of this software was tested in Rwanda to diagnose rheumatic mitral stenosis from the parasternal long-axis view (C. Cadieu, PhD; written communication; November 5, 2018). Image interpretation was not included in the version of the software examined in this study. Future refinements may extend this guidance technology to include other views, spectral and color Doppler usage, and other organ systems.

In the US, cardiac ultrasonography has spread widely outside the echocardiography laboratory, with millions of studies performed in emergency departments, intensive care units, primary care offices, and other sites. Training and credentialing guidelines are inconsistent across medical fields, with cardiology requiring 150 studies performed and 300 interpreted before independent practice23 while the American College of Emergency Physicians allows as few as 25 cardiac studies for competence.24 An unintended consequence of varying training requirements may be incorrect interpretation of ultrasonographic images.13,25 It is possible that the ultrasonography guidance demonstrated in this study, authorized by the FDA through a De Novo classification via the Breakthrough Devices Program, could serve to bring considerable expertise to users with less experience.

One emerging role for this technology is providing echocardiograms to patients with COVID-19. The American College of Cardiology states, “Patients demonstrating heart failure, arrhythmia, ECG changes or cardiomegaly should have echocardiography,”26 but this may also expose sonographers to excessive risk. To avoid this, the American Society of Echocardiography recommends that such patients undergo a POCUS examination by a frontline clinician to determine the need for a full echocardiogram.27 The AI guidance demonstrated here may increase the yield of POCUS echocardiograms and decrease sonographer exposure to COVID-19; this technology has been deployed in several COVID-19 units in the US, with promising early experience.

Limitations

There are several limitations to this study. While the sample size powered the primary end points, the number of patients and nurses is relatively small to fully assess generalizability. To include patients with a wide BMI and pathology spectrum, patient recruitment was not consecutive. Furthermore, patients were not recruited from intensive care units or the emergency department. This may limit the generalizability of the study. The study nurses were ultrasonography naive, but their medical background likely helped to some extent (although we have successfully trained certified medical assistants with even briefer familiarization to the software than used in this study28). Additional investigation using laypersons to capture echocardiography images using the software would aid in assessing generalizability.

Another limitation of this study was that there was no control group for the nurse scanners. The comparison in the study was against sonographer acquisitions, but an additional control group of novices untrained with the algorithm was not used. Furthermore, the study locations were 2 large academic hospitals. Further validation across a variety of settings would help strengthen the results of the study. To date, the algorithm only guides the acquisition of 10 standard TTE views (mostly allowing for the qualitative assessment of anatomic parameters and gross ventricular function). Future revisions of the algorithm may allow for color, pulsed-wave, and continuous-wave Doppler acquisitions, thereby allowing for interrogation of hemodynamics and valve function. These refinements would certainly require further investigation on performance. We anticipate further improvements in the quality of guidance with additional training of the DL algorithm. Furthermore, incorporation of enhanced algorithms to aid in interpretation of the obtained images would be helpful.

Conclusions

This AI guidance algorithm represents a step forward in the interaction of AI with medical imaging and may allow for extension of ultrasonography to settings that ordinarily do not have access. The ability of novice personnel to acquire limited diagnostic echocardiographic studies of 4 common parameters (LV size and function, RV size, and the presence of a nontrivial pericardial effusion) in patients across a spectrum of BMIs and cardiac pathologies should promote further investigation into the feasibility and dissemination of this technology.

eAppendix. Development of the AI-Guidance Algorithm

eFigure 1. Evidence base (left), neural network optimization (center), and user interface (right) of the AI guidance for echo acquisition

eFigure 2. The typical workflow for user interaction with the AI guidance

eFigure 3. Ten representative still images acquired by a study nurse from a single patient

eTable 1. Demographics of Enrolled Patients

eTable 2. Summary of Patients with Cardiac Abnormalities Identified through Scheduled Standard-of-Care Echocardiogram by Study Site

eTable 3. Proportion of Nurse-Acquired EchoGPS Echocardiography of Sufficient Quality to Assess Clinical Parameters (Secondary Endpoints) in Nurse Scan Population

eTable 4. Performance of nurse scans for primary and secondary endpoints stratified by BMI and presence of cardiac pathology

eTable 5. Panel Variability: Extent of Agreement among Cardiologists in Rating Acceptability of Echocardiography for Clinical Parameter Assessment by Primary and Secondary Parameters

eTable 6. Acceptability of Nurse-Acquired Caption Guidance Echocardiography for Primary Clinical Parameter Assessment (Primary Endpoints) in Nurse Scan Population (N=240), by Sequence Number of Scan Within Nurse

eTable 7. Cross-Classification of Cardiologists’ Clinical Assessment Using Nurse-Acquired vs. Sonographer-Acquired Echocardiograms - Primary Endpoints Qualitative Visual Assessment among Patients for Whom a Qualitative Visual Assessment Could Be Made in Both Scan Populations

eReferences.

References

- 1. Knackstedt C, Bekkers SC, Schummers G, et al. Fully automated versus standard tracking of left ventricular ejection fraction and longitudinal strain: the FAST-EFS multicenter study. J Am Coll Cardiol. 2015;66(13):1456-1466. doi: 10.1016/j.jacc.2015.07.052

- 2. Kusunose K, Abe T, Haga A, et al. A deep learning approach for assessment of regional wall motion abnormality from echocardiographic images. JACC Cardiovasc Imaging. 2020;13(2 pt 1):374-381. doi: 10.1016/j.jcmg.2019.02.024

- 3. Kusunose K, Haga A, Abe T, Sata M. Utilization of artificial intelligence in echocardiography. Circ J. 2019;83(8):1623-1629. doi: 10.1253/circj.CJ-19-0420

- 4. Madani A, Arnaout R, Mofrad M, Arnaout R. Fast and accurate view classification of echocardiograms using deep learning. NPJ Digit Med. 2018;1:1. doi: 10.1038/s41746-017-0013-1

- 5. Medvedofsky D, Mor-Avi V, Amzulescu M, et al. Three-dimensional echocardiographic quantification of the left-heart chambers using an automated adaptive analytics algorithm: multicentre validation study. Eur Heart J Cardiovasc Imaging. 2018;19(1):47-58. doi: 10.1093/ehjci/jew328

- 6. Narang A, Mor-Avi V, Prado A, et al. Machine learning based automated dynamic quantification of left heart chamber volumes. Eur Heart J Cardiovasc Imaging. 2019;20(5):541-549. doi: 10.1093/ehjci/jey137

- 7. Narula S, Shameer K, Salem Omar AM, Dudley JT, Sengupta PP. Machine-learning algorithms to automate morphological and functional assessments in 2D echocardiography. J Am Coll Cardiol. 2016;68(21):2287-2295. doi: 10.1016/j.jacc.2016.08.062

- 8. Raghavendra U, Rajendra Acharya U, Gudigar A, et al. Fusion of spatial gray level dependency and fractal texture features for the characterization of thyroid lesions. Ultrasonics. 2017;77:110-120. doi: 10.1016/j.ultras.2017.02.003

- 9. Sengupta PP, Huang YM, Bansal M, et al. Cognitive machine-learning algorithm for cardiac imaging: a pilot study for differentiating constrictive pericarditis from restrictive cardiomyopathy. Circ Cardiovasc Imaging. 2016;9(6):e004330. doi: 10.1161/CIRCIMAGING.115.004330

- 10. Zhang J, Gajjala S, Agrawal P, et al. Fully automated echocardiogram interpretation in clinical practice. Circulation. 2018;138(16):1623-1635. doi: 10.1161/CIRCULATIONAHA.118.034338

- 11. Ghorbani A, Ouyang D, Abid A, et al. Deep learning interpretation of echocardiograms. NPJ Digit Med. 2020;3:10. doi: 10.1038/s41746-019-0216-8

- 12. Ouyang D, He B, Ghorbani A, et al. Video-based AI for beat-to-beat assessment of cardiac function. Nature. 2020;580(7802):252-256. doi: 10.1038/s41586-020-2145-8

- 13. ECRI. 2020 top 10 health technology hazards executive brief. Published 2019. Accessed March 1, 2020. https://www.ecri.org/landing-2020-top-ten-health-technology-hazards

- 14. US Food and Drug Administration. FDA authorizes marketing of first cardiac ultrasound software that uses artificial intelligence to guide user. Published 2020. Accessed February 3, 2021. https://www.fda.gov/news-events/press-announcements/fda-authorizes-marketing-first-cardiac-ultrasound-software-uses-artificial-intelligence-guide-user

- 15. Mitchell C, Rahko PS, Blauwet LA, et al. Guidelines for performing a comprehensive transthoracic echocardiographic examination in adults: recommendations from the American Society of Echocardiography. J Am Soc Echocardiogr. 2019;32(1):1-64. doi: 10.1016/j.echo.2018.06.004

- 16. Gallas BD, Pennello GA, Myers KJ. Multireader multicase variance analysis for binary data. J Opt Soc Am A Opt Image Sci Vis. 2007;24(12):B70-B80. doi: 10.1364/JOSAA.24.000B70

- 17. Wiegers SE, Ryan T, Arrighi JA, et al; Writing Committee Members; ACC Competency Management Committee. 2019 ACC/AHA/ASE Advanced Training Statement on Echocardiography (revision of the 2003 ACC/AHA Clinical Competence Statement on Echocardiography): a report of the ACC Competency Management Committee. J Am Soc Echocardiogr. 2019;32(8):919-943. doi: 10.1016/j.echo.2019.04.002

- 18. Genovese D, Rashedi N, Weinert L, et al. Machine learning-based three-dimensional echocardiographic quantification of right ventricular size and function: validation against cardiac magnetic resonance. J Am Soc Echocardiogr. 2019;32(8):969-977. doi: 10.1016/j.echo.2019.04.001

- 19. Volpato V, Mor-Avi V, Narang A, et al. Automated, machine learning-based, 3D echocardiographic quantification of left ventricular mass. Echocardiography. 2019;36(2):312-319. doi: 10.1111/echo.14234

- 20. Asch FM, Poilvert N, Abraham T, et al. Automated echocardiographic quantification of left ventricular ejection fraction without volume measurements using a machine learning algorithm mimicking a human expert. Circ Cardiovasc Imaging. 2019;12(9):e009303. doi: 10.1161/CIRCIMAGING.119.009303

- 21. Sanchez-Martinez S, Duchateau N, Erdei T, et al. Machine learning analysis of left ventricular function to characterize heart failure with preserved ejection fraction. Circ Cardiovasc Imaging. 2018;11(4):e007138. doi: 10.1161/CIRCIMAGING.117.007138

- 22. Tabassian M, Sunderji I, Erdei T, et al. Diagnosis of heart failure with preserved ejection fraction: machine learning of spatiotemporal variations in left ventricular deformation. J Am Soc Echocardiogr. 2018;31(12):1272-1284.e9. doi: 10.1016/j.echo.2018.07.013

- 23. Ryan T, Berlacher K, Lindner JR, Mankad SV, Rose GA, Wang A. COCATS 4 task force 5: training in echocardiography. J Am Coll Cardiol. 2015;65(17):1786-1799. doi: 10.1016/j.jacc.2015.03.035

- 24. American College of Emergency Physicians. Ultrasound guidelines: emergency, point-of-care, and clinical ultrasound guidelines in medicine. Published 2016. Accessed March 1, 2020. https://www.acep.org/globalassets/new-pdfs/policy-statements/ultrasound-guidelines—emergency-point-of-care-and-clinical-ultrasound-guidelines-in-medicine.pdf

- 25. Lewiss RE. “The ultrasound looked fine”: point-of-care ultrasound and patient safety. Published 2018. Accessed March 1, 2020. https://psnet.ahrq.gov/web-mm/ultrasound-looked-fine-point-care-ultrasound-and-patient-safety

- 26. American College of Cardiology. COVID-19 clinical guidance for the cardiovascular care team. Published 2020. Accessed March 23, 2020. https://www.acc.org/~/media/Non-Clinical/Files-PDFs-Excel-MS-Word-etc/2020/02/S20028-ACC-Clinical-Bulletin-Coronavirus.pdf

- 27. American Society of Echocardiography. ASE statement on the protection on patients and the echocardiography service providers during the 2019 novel coronavirus outbreak. Published 2020. Accessed March 23, 2020. https://www.asecho.org/wp-content/uploads/2020/03/ASE-COVID-Statement-FINAL-1.pdf

- 28. Cheema B, Hsieh C, Adams D, Narang A, Thomas J. Automated guidance and image capture of echocardiographic views using a deep learning-derived technology. American Heart Association; 2019.
