Abstract

Three dissimilar methodologies in the field of artificial intelligence (AI) appear to be following a common path toward biological authenticity. This trend could be expedited by using a common tool, artificial nervous systems (ANS), for recreating the biology underpinning all three. ANS would then represent a new paradigm for AI with application to many related fields.

Main text

In 1955, when John McCarthy organized the historic Dartmouth Summer Research Project, he coined the term “artificial intelligence” (AI) as a methodology-neutral phrase because the hoped-for attendees supported diverse methodologies, and each had ardent adherents.1 Three of today’s AI methods still stand out for their diversity and adherents, yet all three increasingly incorporate biological inspiration for performance improvement. The improvements drive further inclusion of biology in a positive reinforcement loop that is gradually bringing the diversity into a common biological framework. To be clear, the terms “biology” and “biological” refer to the animal kingdom’s nervous systems for which we study neuroanatomy and neurophysiology; here, the terms do not refer to the study of plants, fungi, or sea sponges.

One of the three methodologies, machine learning (ML), is supported by varieties of networks, from neural networks (NN) to artificial neural networks (ANN) to recurrent neural networks (RNN) to convolutional neural networks (CNN) to generative adversarial networks (GAN) to deep neural networks (DNN), and more. All of these have added to the success of ML and its progeny, deep learning (DL). These networks, especially CNN,2 have also incorporated biological features, from the concept of neurons (nodes of the networks) and their synaptic plasticity (node weights) to connections between these “neurons” (both forward and back) and the layers in which they are organized. According to IEEE Access, “However, despite the recent progress in DL methodologies and their success in various fields, such as computer vision, speech technologies, natural language processing, medicine, and the like, it is obvious that current models are still unable to compete with biological intelligence. It is, therefore, natural to believe that the state of the art in this area can be further improved if bio-inspired concepts are integrated into deep learning models.”3

A second methodology comes from Jeff Hawkins and his company, Numenta, who have been researching neuroscience and building computer models and algorithms to represent brain functions since 2004. Jeff says, “The key to AI has always been the representation” and in the last seventeen years has continually expanded his representation, from modeling individual neurons to modeling collections of cortical columns. Jeff’s approach is different from, and considerably more biological than, any NN, which places his technology in a unique AI category. Additionally, Numenta’s Hierarchical Temporal Memory (HTM) technology is one of the few methodologies to represent nervous system temporal connectivity, a key neurophysiological feature often overlooked in other AI technologies. His trend is dedicated to biological realism and improvement therein.

The third methodology, neuromorphic computing, is again a significantly different AI approach with promises of dramatically reducing the cost of intelligence processing; this is important considering the cost of training OpenAI’s GPT-3 Deep Learning Network was over US$12 million. Despite the use of parallel graphic processing units (GPUs) for NNs, NN “neurons” run on traditional computing systems which process in a serial fashion, one line of code at a time; neuromorphic chips provide a hardware substrate that supports massive computing parallelism of its artificial neurons, where every neuron operates under its own set of code independently, all the time. The savings and efficiency of parallelism are significant, and with thousands of companies investing in AI for big data analytics, customer service bots (natural language processing), and a myriad of other applications, the competition to provide the best service for the least cost pushes major companies in the hardware (neuromorphic) research direction. This includes companies like Microsoft and IBM as well as neuromorphic chip variations such as the Tensor Processing Unit (TPU) from Google and Loihi from Intel. Though neuromorphic computing hasn’t gained the celebrity status of state-of-the-art ML, the allure of power savings through neuromorphic chips and their inclusion as a component in server farms keeps research in neuromorphic processing moving forward.

Additionally, the neuromorphic development trend supports increasing levels of biological realism. Over the last 30+ years neuromorphic chips have progressed from modeling several handfuls of “neurons” to modeling hundreds of thousands of artificial spiking neurons and astrocytes.4 It is noteworthy that the inclusion of astrocytes in any AI system is a significant step in the direction of biological representation. Astrocytes subserve critical nervous system functions, outnumber neurons by a factor of 4:1, are presented as the gatekeeper of synaptic information transfer,5 and are an indispensable partner of synaptic plasticity (memory).6

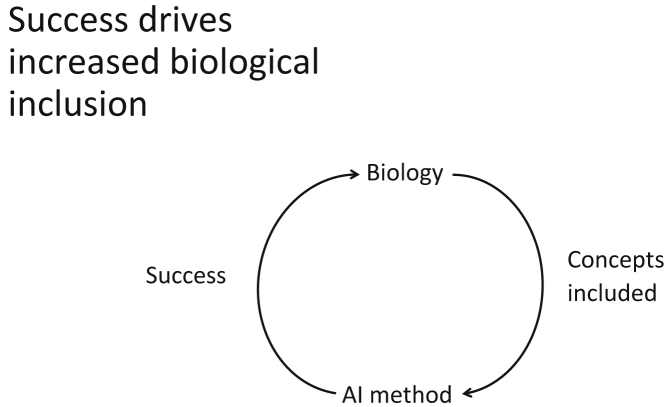

What has been shown thus far is that three different AI approaches all use nervous system concepts to make improvements; further, the improvements result in a positive feedback loop such that the more accomplishments made using nervous system concepts, the more enticement there is to use more nervous system concepts and thus make even more progress (Figure 1).

Figure 1. As AI methods make use of biological concepts and receive successful results, they drive further inclusion of biological concepts.

Unfortunately, there is a natural limit to this process given that the methodologies are electronically and not biologically implemented, but there would be no such limit if there were tools which adequately reproduced nervous systems in an electronic form: artificial nervous systems (ANS). Then those three methodologies, or indeed any methodology, which wanted to reproduce human intelligence by means of mimicking nervous systems, would have no limit or impediments to the progress which could be made. No further technologies would be necessary to achieve AI because the very inspiration to achieve the goal, nervous systems, would be fully accessible.

With such tools, NN in any form could be defined with realistic neurons, plasticity, and inhibition or excitation and connected in any biological or non-biological configuration. Multiple networks could be coupled in a natural fashion to analyze multiple data types simultaneously, and both input and output could be configured within the same tool set. There are many more advantages.

Numenta’s efforts towards utilizing cortical columns could be significantly expanded to include a variety of cortical columns as are biologically represented in the fundamental cortical types of granular, frontal, polar, parietal, and agranular cortices. Additionally, communication between types over long distances using fasciculi could be utilized, and cortical structures outside of the telencephalon could be defined and integrated. Modeling different areas of the nervous system, like the diencephalon and the midbrain, would be a plus, especially for robotics. There are many more advantages.

Neuromorphic computing could also be advanced using ANS tools. As mentioned for the advantages for NN, any variety of neuron model, whether biological and probabilistic or artificial and deterministic (integrate and fire, or “spiking”) could be connected in any network configuration. Networks could be subdivided, specialized, and then naturally integrated to manage multiple types of input, output, and processing requirements. The results could be defined and simulated on regular computing hardware and then downloaded onto a flexible microchip architecture supporting parallelism, like field programmable gate arrays (FPGAs), where if the results are unsatisfactory, modifications can be made and the updates downloaded onto the same microchip. (FPGAs have major cost advantages over application-specific integrated circuit [ASIC] microchips, where if changes are required, the chip must be thrown away and a new one designed and fabricated.) There are many more advantages.
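As a rough illustration of the deterministic integrate-and-fire neuron model mentioned above, the following Python sketch simulates a single leaky integrate-and-fire neuron with simple Euler steps. The class name, parameter values, and arbitrary units are assumptions chosen for illustration; they are not taken from any particular neuromorphic chip or ANS tool.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LIFNeuron:
    """Deterministic leaky integrate-and-fire neuron (illustrative values only)."""
    tau_ms: float = 10.0       # membrane time constant
    v_rest: float = 0.0        # resting potential, arbitrary units
    v_threshold: float = 1.0   # potential at which the neuron spikes
    v_reset: float = 0.0       # potential immediately after a spike
    v: float = 0.0             # current membrane potential

    def step(self, input_current: float, dt_ms: float = 1.0) -> bool:
        """Advance one Euler step and return True if the neuron spikes."""
        # The potential leaks toward rest while integrating the input.
        dv = (-(self.v - self.v_rest) + input_current) * (dt_ms / self.tau_ms)
        self.v += dv
        if self.v >= self.v_threshold:
            self.v = self.v_reset
            return True
        return False


def simulate(neuron: LIFNeuron, currents: List[float], dt_ms: float = 1.0) -> List[int]:
    """Return the time-step indices at which the neuron spiked."""
    return [t for t, current in enumerate(currents) if neuron.step(current, dt_ms)]


# A strong constant input drives regular spiking; a weak one never reaches threshold.
spikes_strong = simulate(LIFNeuron(), [2.0] * 50)
spikes_weak = simulate(LIFNeuron(), [0.5] * 50)
print(len(spikes_strong), len(spikes_weak))
```

Because each neuron carries its own state and update rule, many such objects could in principle be stepped independently, which is the property that parallel hardware such as an FPGA is meant to exploit.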

So, what would it take to create an ANS tool which could broadly assist today’s AI technologies? What would it look like? The answer to those questions is a long laundry list of what is missing in today’s nervous system models and simulators.

- Architecture that is developed both top-down and bottom-up. A human brain is not a continuous homogeneous network. It consists of a heterogeneous architecture with an upper range of more than 200 identifiable areas in just the cerebral cortex, not to mention other parts of the forebrain.

- Astrocyte networks which can alert upstream astrocyte neighbors of local changes in activity and metabolic resource demand.

- Tripartite synapses giving astrocytes a key role in managing resources for their neuron partners by “listening” to the neurons’ activity. Astrocytes control the vasculature to neurons and help optimize the brain’s total resource consumption in a just-in-time manner. Normal blood flow is sufficient to keep neurons alive and conserves (optimizes) limited resources. Further metabolic demands, like for modifying synaptic structure (plasticity), are beyond resting level and require astrocyte intervention to modify the vasculature for greater blood flow volume.

- Hormones and different neurotransmitters can communicate programmatic needs over long distances and persist over time to keep the environment balanced and optimized. This also obviates the need for direct neuron to neuron communications in many functions.

- Dynamic myelination to improve the efficiency of long-range neural connections when activity demands are sufficient, another example of optimization.

- Spike-timing-dependent plasticity (STDP) based upon clustering of dendritic spines; each cluster having the capacity for spiking and contributing to a soma spike. This includes both long-term and short-term plasticity and both synaptic growth and pruning.

- Plasticity based upon the initial tagging7 of what should be modified and then allowed to proceed based on past cytoskeleton modifications to the same synaptic site and on current resource availability.

- Global operating principles of optimization of resources and homeostatic balance, including the peripheral nervous system.8

- Customizable neuron characteristics sufficient for the six most abundant neuron types as well as the hundreds of other known neuron types.

- Cortical columns that can be customized to accommodate the five primary varieties of cortex, as well as the transition areas between them.

- Support for the creation of nervous system structures beyond the cerebral cortex, such as nuclei of the diencephalon and midbrain, and the spinal cord and ganglia.
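To make the STDP item in the list more concrete, here is a minimal Python sketch of the standard pair-based STDP rule. Note that this is a deliberate simplification: it does not model the dendritic spine clustering, synaptic tagging, or resource gating described in the list. The function names, amplitudes, and time constants are illustrative assumptions.

```python
import math
from typing import Sequence


def stdp_delta(dt_ms: float, a_plus: float = 0.01, a_minus: float = 0.012,
               tau_plus_ms: float = 20.0, tau_minus_ms: float = 20.0) -> float:
    """Weight change for one spike pair, where dt_ms = t_post - t_pre."""
    if dt_ms > 0:
        # Presynaptic spike preceded the postsynaptic spike: potentiate.
        return a_plus * math.exp(-dt_ms / tau_plus_ms)
    if dt_ms < 0:
        # Postsynaptic spike preceded the presynaptic spike: depress.
        return -a_minus * math.exp(dt_ms / tau_minus_ms)
    return 0.0


def apply_stdp(weight: float, pre_spikes_ms: Sequence[float],
               post_spikes_ms: Sequence[float],
               w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Sum the pairwise changes over all spike pairs and clip the result."""
    for t_pre in pre_spikes_ms:
        for t_post in post_spikes_ms:
            weight += stdp_delta(t_post - t_pre)
    return min(w_max, max(w_min, weight))


# Pre-before-post strengthens the synapse; post-before-pre weakens it.
strengthened = apply_stdp(0.5, pre_spikes_ms=[10.0], post_spikes_ms=[15.0])
weakened = apply_stdp(0.5, pre_spikes_ms=[15.0], post_spikes_ms=[10.0])
print(strengthened > 0.5, weakened < 0.5)
```

A fuller model along the lines of the list would presumably gate each weight change on a tag at the synaptic site and on currently available metabolic resources; that gating is left out here.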

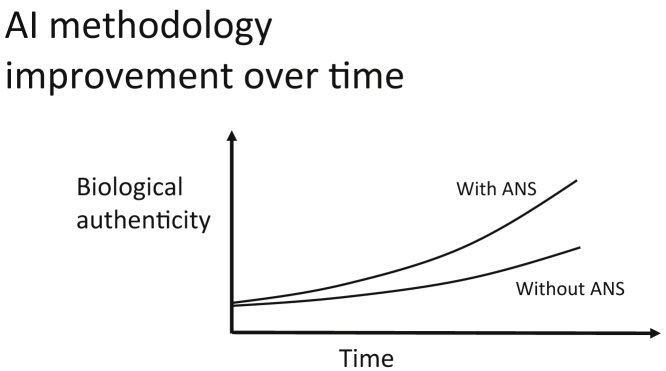

The above list is by no means comprehensive but does point out topics worthy of further investigation and development. If the hypothesis is correct and there is a trend toward biological convergence, then the better the diligence of reconstructing nervous systems, the better the outcome should be for AI (Figure 2).

Figure 2. Depiction of how AI methods change over time toward biological authenticity with and without ANS.

Importantly, ANS need to be user friendly and not require a PhD in neurophysiology to use. The ANS tools need to be as accessible as any computer language, with the caveat that users would need to gain an appreciation for basic neurophysiology in a “Nervous System for Dummies” kind of way. Otherwise, users should be able to outline their goals along with the nature and extent of input and output, and the tools should take care of the rest. All ANS items should be customizable by the user and permit new application programming interfaces (APIs) beyond the basics provided, with the resultant code stored on GitHub or the like so that new APIs and modifications could be widely shared. This implies that the tools should accommodate any desired degree of biological authenticity, from that which is sufficient for NN to that which is sufficient for medical research and remediation. The tool should make use of a standard database technology, such as a Structured Query Language (SQL) database, for offline storage, and integrate seamlessly into other existing tools or schemas like a “data lakehouse”. It ought to have a high-level design interface, like a computer-aided design (CAD) plugin, that could work with a standard keyboard and mouse or with a virtual reality (VR) headset.
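As one possible reading of the SQL storage requirement, the sketch below stores a tiny network definition in SQLite using Python’s standard `sqlite3` module. The table names, columns, and example rows are hypothetical and exist only to illustrate the idea; they do not describe the schema of any existing ANS tool.

```python
import sqlite3

# Hypothetical schema: one table for neurons, one for weighted connections.
SCHEMA = """
CREATE TABLE neuron (
    id          INTEGER PRIMARY KEY,
    region      TEXT NOT NULL,
    neuron_type TEXT NOT NULL
);
CREATE TABLE synapse (
    pre_id  INTEGER NOT NULL REFERENCES neuron(id),
    post_id INTEGER NOT NULL REFERENCES neuron(id),
    weight  REAL NOT NULL,
    PRIMARY KEY (pre_id, post_id)
);
"""


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database holding a three-neuron example network."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany(
        "INSERT INTO neuron (id, region, neuron_type) VALUES (?, ?, ?)",
        [(1, "cortex", "pyramidal"), (2, "cortex", "interneuron"), (3, "thalamus", "relay")],
    )
    # Negative weight marks an inhibitory connection in this toy example.
    conn.executemany(
        "INSERT INTO synapse (pre_id, post_id, weight) VALUES (?, ?, ?)",
        [(3, 1, 0.8), (1, 2, 0.5), (2, 1, -0.6)],
    )
    conn.commit()
    return conn


def inputs_to(conn: sqlite3.Connection, neuron_id: int) -> list:
    """List (region, neuron_type, weight) for every neuron projecting to neuron_id."""
    cursor = conn.execute(
        "SELECT n.region, n.neuron_type, s.weight "
        "FROM synapse AS s JOIN neuron AS n ON n.id = s.pre_id "
        "WHERE s.post_id = ? ORDER BY s.pre_id",
        (neuron_id,),
    )
    return cursor.fetchall()


conn = build_demo_db()
print(inputs_to(conn, 1))
```

Keeping the model in ordinary relational tables is what would let other tools query, share, or extend a nervous system definition without a custom file format.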

In fact, tools exist today that include all the “what would it look like?” features. They can reproduce any user-definable nervous system and produce a resultant nervous system model that is downloadable onto FPGA microchips for parallelism or otherwise operates on conventional computers. The tools support not only the three areas of AI mentioned above, but also the fields of robotics, Internet of Things (IoT), cognitive computing, and biologically rigorous fields of medical diagnostics and remediation, such as for epilepsy and Alzheimer disease. The ANS tools are so new that this is their first public disclosure. The author seeks collaboration to improve the user-friendliness and usefulness of the tools, to set ANS on a path into the public sector, and to fully elaborate the new paradigm.

Acknowledgments

Declaration of interests

F.N. is a patent holder of patents US 10,817,785 and US 10,990,880 and US patent applications 15,660,858 and 15,905,730, all of which address the issue of modeling nervous systems artificially.

Biography

About the author

Fredric Narcross has been researching neuroanatomy and neurophysiology for more than 30 years. He has also been programming computer systems since working at NASA in 1980. Those disciplines have led to 2 patents and an additional 2 patents pending, all of which detail tools for modeling nervous systems on regular computing hardware and neuromorphic microchips. He is currently at the Ben Gurion University of the Negev in the department of Biotechnology Engineering.

References

- 1. McCarthy J. A Proposal for the Dartmouth Summer Research Project on Artificial Intelligence. 1955. http://www-formal.stanford.edu/jmc/history/dartmouth/dartmouth.html

- 2. Le J. Convolutional Neural Networks: The Biologically Inspired Model. Towards Data Science. 2018. https://towardsdatascience.com/convolutional-neural-networks-the-biologically-inspired-model-f2d23a301f71

- 3. IEEE Access. Trends and Advances in Bio-Inspired Image-Based Deep Learning Methodologies and Applications. IEEE Access. https://ieeeaccess.ieee.org/closed-special-sections/trends-and-advances-in-bio-inspired-image-based-deep-learning-methodologies-and-applications/

- 4. Tang G., Polykretis I.E., Ivanov V.A., Shah A., Michmizos K.P. Introducing Astrocytes on a Neuromorphic Processor: Synchronization, Local Plasticity and Edge of Chaos. NICE ’19: Neuro-Inspired Computational Elements Workshop. 2019.

- 5. Volman V., Ben-Jacob E., Levine H. The astrocyte as a gatekeeper of synaptic information transfer. Neural Comput. 2007;19:303–326. doi: 10.1162/neco.2007.19.2.303.

- 6. Wenker I. An active role for astrocytes in synaptic plasticity? J. Neurophysiol. 2010;104:1216–1218. doi: 10.1152/jn.00429.2010.

- 7. Wang S.H., Redondo R.L., Morris R.G. Relevance of synaptic tagging and capture to the persistence of long-term potentiation and everyday spatial memory. Proc. Natl. Acad. Sci. USA. 2010;107:19537–19542. doi: 10.1073/pnas.1008638107.

- 8. O’Leary T., Wyllie D.J.A. Neuronal homeostasis: time for a change? J. Physiol. 2011;589:4811–4826. doi: 10.1113/jphysiol.2011.210179.