Abstract

Purpose

Disruption of education can lead to drastic changes; therefore, we need to maximize the benefits of e-technology. We aimed to explore changes in knowledge, attitudes, and challenges regarding e-learning during the COVID-19 pandemic and determine how e-learning has influenced academic performance.

Methods

We conducted a self-administered electronic survey to collect information on undergraduate medical students’ e-learning. We evaluated the instrument’s validity and reliability and pilot tested it.

Results

Between August 7 and 19, 2020, we received 995 responses. The majority of respondents answered that they knew about the tools used for e-learning, such as mobile learning, links, online classes, and e-assessment: 84% (n=836), 82% (n=815), 82% (n=811), and 80% (n=796), respectively. Nearly two-thirds of the respondents gained fair/very good knowledge from online classes and discussion boards: 65% (n=635) and 63% (n=620), respectively. Regarding attitudes, less than half had “somewhat” adequate knowledge and proper training, 45% (n=449) and 36% (n=361), respectively, and less than a third had “somewhat” positive feelings, 29% (n=289). The reported challenges were poor Internet speed (55%, n=545) and the lack of clinical experience and physical examination skills (51%, n=512). There was a statistically significant difference between the first- and second-semester test scores for year 6, year 5, year 4, and year 2 (P value < 0.05).

Conclusion

Most respondents reported that they knew about e-learning tools and answered that they gained fair/very good amounts of knowledge accompanied by acceptable attitudes. The challenges need to be addressed to improve e-learning infrastructure. The transition to e-learning was accompanied by increased academic performance.

Keywords: e-learning, e-assessment, mobile-learning, COVID-19, medical education

Introduction

In 2017, Saudi Arabia initiated “Vision 2030” as a major investment and transformation of fundamental structural changes in all sectors, including the educational system.1,2 More than 1.3 million students are enrolled in 29 governmental and 55 private higher education institutes in the country.3 As part of this transformation, the undergraduate medical college at Umm Al Qura University (UQU) adopted a student-centered curriculum in collaboration with University College London (UCL). The current curriculum is based on competency-based learning, which combines both self-paced learning and directed self-learning in the form of lecture-based sessions that include standard large group presentations, interactive large group sessions, flipped classes, and peer-assisted learning (PAL). For small group sessions, students work in groups through problem-based learning (PBL) or task-based learning (TBL). Clinical exposure begins in the second and third academic years, where students are instructed to perform direct observation in hospitals. Students are expected to participate in clinical activities and bedside teaching in the fourth and fifth academic years. At the end of the sixth year (pre-internship), students spend most of their time rotating in hospitals. Workplace assessments include mini-clinical evaluation exercises (Mini-CEX), case-based discussions (CBD), evidence-based prescriptions (EBP), directly observed procedures (DOPS), clerking, and data and procedure cards. The clinical skills lab is considered a cornerstone of clinical teaching for all students, as they need to demonstrate the required level of proficiency before going to hospitals. Assessment of performance and competencies includes feedback on performance, end-of-module reports, objective structured clinical examinations (OSCE), short best answer (SBA) questions, portfolios, and formative and summative multiple-choice questions (MCQs).

The United Nations Educational, Scientific, and Cultural Organization (UNESCO) declared that more than 1 billion learners were affected by the COVID-19 pandemic, and there were 130 country-wide closures.4 Medical schools’ educational and clinical activities were disrupted across the world, and on March 3, 2020, Saudi Arabia announced the first case of coronavirus.5 On March 9, like many other countries, the Saudi Ministry of Education (MoE) closed all educational institutions, and students were removed from all hospitals. The MoE recommended that all precautionary measures should be strictly followed, and pre-existing established digital technologies should be used, such as distance learning and virtual classrooms5,6 to reduce the virus’s spread and ensure the continuity of learning.

E-learning educational programs have existed for many years but had not been implemented in many academic institutions before the COVID-19 pandemic. Academic institutions that had already implemented innovative e-learning programs could leverage them during emergencies. Traditional education is based on educational materials or in-person human interaction using objects, maps, or boards.6 Digital education comprises constantly evolving concepts and is often referred to as e-learning. E-learning modalities can include offline or online educational activities.7 Additionally, this form of learning can range from a basic conversion of in-person content to digital format to a more complex deployment of digital technologies (eg, mobile education, virtual patients, and virtual reality).6 E-learning can provide more flexible solutions for busy clinicians, allowing them to choose suitable times to work. It can also allow students to be more independent, as it is self-paced.

E-learning has been demonstrated to be equivalent or superior to traditional learning8,9 but when it is used to replace traditional programs, unique challenges to clinical teaching and learning experiences arise. However, e-learning is very suitable for certain scenarios, such as pandemics or disasters. As the COVID-19 pandemic has forced universities to transition to e-learning and created an educational system transformation, it has provided an opportunity to assess students’ perspectives and how prepared universities were for distance learning and determine future approaches to e-learning.

Three generated hypotheses were tested in this study: (1) the students’ transition to e-learning did not change their knowledge and attitudes; (2) there were no challenges to the transition; and (3) the transition did not influence students’ academic performance. Using a survey, we aimed to explore changes in knowledge, attitudes, and challenges regarding e-learning for medical students during the COVID-19 pandemic. Additionally, we intended to determine how e-learning influenced the academic performance of the medical students. Accordingly, this study may help stakeholders conduct future research to optimize information delivery to students by adopting the most appropriate technology platforms.

Materials and Methods

Design

A cross-sectional, observational, web-based questionnaire survey was conducted. The target population was undergraduate medical students (years two to six) over 18 years of age at the Medical College, UQU, in Makkah, Saudi Arabia, in the past academic year (Sep 2019–June 2020). We excluded first-year students, as year 1 is considered to be preparation for medical school. The population consisted of all 1268 undergraduate medical students. To identify the participants, we contacted the Office of Academic Affairs to access a list of all students and, subsequently, used convenience sampling. We contacted these students via WhatsApp, as it is the most common communication method used by these students.

The Ethics Committee at UQU approved the study (identification number: HAPO-02-K-012-2020-08-436). Study participants provided consent to participate and received an electronic link via SurveyMonkey, accompanied by a cover letter stating the study title, objectives, voluntary rights, length of the survey, confidentiality, and the primary investigator’s name. They were also informed that the collected data would be kept anonymous and would not be shared or linked to any identifying information. Only the participants who consented took part in the survey. All collected data were anonymous and de-identified, exported to a Microsoft Excel file for analysis, secured by double-locked passwords, and kept locked up; only the primary investigator and the statistician had access to the data.

Instrument Development

We generated our survey instrument using rigorous survey development and testing methods.10 We selected the survey items based on a literature review, interviews with medical students, and discussion with experts via email. Five experts in the field of medical education extensively discussed the topic and reviewed the items. Then, the experts nominated and ranked each question to reach a consensus on the selected items. Additionally, methodology and content experts conducted another review to eliminate redundant items using binary responses (exclude/include). We aimed to have a survey that was simple, succinct, and easy for medical students to understand. During the construction of the survey, we grouped the items into domains that we wanted to explore and then refined the questions.11

The final self-administered survey consisted of 11 items that focused on the following three domains: (1) three demographic questions (age, gender, and academic year level); (2) four questions on knowledge, attitudes, and challenges of e-learning (knowledge of e-learning tools, benefits gained from e-learning tools, attitudes toward e-learning, and challenges faced with e-learning); and (3) four questions on academic performance (first-semester test score, second-semester test score, first-semester absences, and second-semester absences). The outcomes were measured as a percentage of the responses of the students on their understanding, attitudes, and challenges towards e-learning. Academic performance was measured using the summative exam (test score) in the form of multiple-choice questions at the end of each semester. Absence rate was defined as the number of classes missed by the students in each semester. The data were collected from students via SurveyMonkey (Appendix).

Instrument Testing

During the pre- and pilot-testing, five medical education experts reviewed the questions that were developed for consistency and appropriateness.12,13 Then, a colleague who is not an expert in medical education reviewed the questions to assess the dynamics, flow, and accessibility. The questionnaire was piloted on randomly selected medical students; we aimed for 10% of the entire population (ie, 120 students) but received 100 responses. Then the reliability, validity, and interpretation of questions were determined. We assessed the comprehensibility of our instrument and invited five colleagues with medical education and methodologic expertise to rate the survey on a scale from 1 to 5. The mean score of 4.7 on the 5-point scale suggested that the instrument was comprehensible, and thus, no further modifications were required. Cronbach’s alpha was used to determine the reliability of the instrument. The estimate was greater than 0.76, indicating that the research instrument was reliable; accordingly, we adopted the survey instrument for use in this study. To determine whether the questionnaire was valid, we calculated Pearson’s r to estimate the correlation between each variable and the total score. The P value for each item was <0.001, indicating that the research instrument was valid.
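The reliability check described above can be illustrated with a minimal sketch of Cronbach’s alpha, computed as k/(k − 1) × (1 − sum of item variances / variance of total scores). The function name `cronbach_alpha` and the `pilot` responses below are hypothetical and are not the study data; the study does not state which software was used for this calculation.

```python
from statistics import variance

def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(rows[0])  # number of items in the instrument
    # Sample variance of each item (column) across respondents.
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)

# Hypothetical pilot responses (4 respondents x 3 ordinal items), NOT study data.
pilot = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 5, 4],
    [1, 2, 1],
]
alpha = cronbach_alpha(pilot)
print(f"alpha = {alpha:.3f}")
```

Values closer to 1 indicate that the items vary together; the threshold for an acceptable alpha is a judgment call made by the investigators.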

Survey Recruitment and Administration

The student leader of each academic year has a WhatsApp group to contact students, and two weeks before the opening of the survey, the leaders sent a message inviting students to participate voluntarily without incentives. On August 7, 2020, we sent the participants an embedded link to the web-based survey on SurveyMonkey via WhatsApp. We sent reminders five and ten days later, and we closed the survey on August 19, 2020. Each question was displayed on a single screen, and there was a total of 11 questions. The structured response formats included the enforcement of at least one response option per question and binary (yes/no), nominal, and ordinal responses. Other options were also allowed, including “undetermined,” “other,” and any other comments in free text to capture unanticipated responses. The respondents could check for completeness with a submit button (mandatory items that had not been completed were highlighted, and the participant had to go back and answer those questions before submitting their results). We did not use randomization or adaptive questioning.

Response Rates

The survey was submitted to all the students (1268), and 995 completed surveys were returned, yielding a 78% response rate with a 100% completeness rate. We did not allow the survey to be taken more than once using the automatic feature available in SurveyMonkey.
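As a quick check of the arithmetic, the response rate can be recomputed from the reported counts. The sketch below also derives per-year rates from the totals and respondent counts in Table 1; those per-year rates are not reported in the text and are shown only as an illustration. The function name `response_rate` is hypothetical.

```python
def response_rate(returned, invited):
    """Return the response rate as a percentage."""
    return 100 * returned / invited

# Counts reported in the text: 995 completed surveys from 1268 students.
overall = response_rate(995, 1268)
print(f"Overall: {overall:.0f}%")

# (respondents, total students) per academic year, taken from Table 1.
counts = {
    "Second year": (186, 257),
    "Third year": (192, 260),
    "Fourth year": (232, 247),
    "Fifth year": (162, 214),
    "Sixth year": (223, 290),
}
by_year = {year: response_rate(r, total) for year, (r, total) in counts.items()}
for year, rate in by_year.items():
    print(f"{year}: {rate:.1f}%")
```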

Statistical Analysis

Descriptive statistics were used to summarize the data and report the variables. We used proportions, frequencies, means, and standard deviations (when appropriate) to describe the data. Because fewer than 2% of respondents had missing variables, we analyzed all the received responses and included them in the analysis when appropriate. For the variables measured with an ordinal scale,14 we assigned numbers to each response option to express the weights. First, we calculated the weighted average by estimating the length of the period. The period is the result of 4 divided by 5, where 4 represents the number of distances between adjacent options (1–2 [first distance], 2–3 [second distance], 3–4 [third distance], and 4–5 [fourth distance]), and 5 represents the number of choices. When 4 is divided by 5, the length of the period is equal to 0.80, and the weighted average distribution is as illustrated in the Appendix. We calculated the mean and standard deviation for all responses to questions on the following topics: knowledge about the tools used for e-learning, benefits gained from using e-learning tools, and attitudes toward e-learning. Similarly, we calculated the weighted average for the three-option ordinal scale and compared each mean to the weighted average. We conducted a paired sample t-test to compare the medical students’ first-semester test scores to their second-semester test scores.
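The interval calculation described above can be sketched as follows. The exact interval boundaries are given in the Appendix, which is not reproduced here, so this sketch assumes equal-width bands of (number of options − 1) / (number of options), starting at 1, with a mean on a boundary assigned to the lower band. The function names and the label lists are illustrative.

```python
def interval_bands(labels, low=1):
    """Split the scale into equal-width bands, one per response label."""
    n = len(labels)
    width = (n - 1) / n  # e.g. 4 / 5 = 0.80 for a five-option scale
    return [(low + i * width, low + (i + 1) * width, label)
            for i, label in enumerate(labels)]

def classify(mean, labels):
    """Return the label of the band that contains the mean score."""
    for _lower, upper, label in interval_bands(labels):
        # Small tolerance so that floating-point rounding at a boundary
        # does not push a value into the next band (assumed convention).
        if mean <= upper + 1e-9:
            return label
    return labels[-1]

# Label order assumed from the column order of the results tables.
ATTITUDE = ["Not at all", "Not really", "Undecided", "Somewhat", "Very much"]
KNOWLEDGE = ["No", "Unsure", "Yes"]

print(classify(3.74, ATTITUDE))   # mean reported for "adequate knowledge"
print(classify(1.99, KNOWLEDGE))  # mean reported for simulations
```

With five options the bands are 0.80 wide; with three options the same rule gives bands of about 0.67.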

Results

Overall, 56% (n=556) of respondents were female, and the mean age was 22 years. The largest group of respondents was in the fourth year (23%, n=232); the main characteristics are presented in Table 1.

Table 1.

Characteristics of Respondents (N=995)

| Characteristics | Total | Respondents |
|---|---|---|
| Age, years (mean ± SD) | – | 22 ± 2.35 |
| Gender, n (%) | | |
| Male | 624 | 439 (44) |
| Female | 644 | 556 (56) |
| Academic year, n (%) | | |
| Second year | 257 | 186 (19) |
| Third year | 260 | 192 (19) |
| Fourth year | 247 | 232 (23) |
| Fifth year | 214 | 162 (16) |
| Sixth year | 290 | 223 (22) |

The students responded that they were aware of the tools used for e-learning (mobile learning, links, online classes, e-assessments, discussion boards, e-portfolios, and e-resources). In contrast, on average, the students reported that they were “unsure” about whether e-learning can be used for simulations. Table 2 provides the results for the means, standard deviations, and weighted averages.

Table 2.

Survey Results for Respondents’ Knowledge of e-Learning Tools

| Questions | Yes, n (%) | Unsure, n (%) | No, n (%) | Mean | SD | Result |
|---|---|---|---|---|---|---|
| Did you know that mobile learning allows students to use mobile electronic devices to access online contents? | 836 (84) | 118 (12) | 40 (4) | 2.80 | 0.490 | Yes |
| Did you know that links allow students to access other resources, case studies, videos, audios, course notes, and presentations? | 815 (82) | 126 (13) | 54 (5) | 2.76 | 0.537 | Yes |
| Did you know that online classes allow students to attend virtual classes or a combination of the two? | 811 (82) | 112 (11) | 71 (7) | 2.74 | 0.577 | Yes |
| Did you know that e-assessments allow students to assess their knowledge (formative and summative) via computer-based testing? | 796 (80) | 123 (12) | 76 (8) | 2.72 | 0.594 | Yes |
| Did you know that discussion boards (blackboards) allow participants to communicate and learn? | 765 (77) | 148 (15) | 82 (8) | 2.69 | 0.617 | Yes |
| Did you know that e-portfolios allow students to build an online platform comprising their work, experiences, and reflections? | 684 (69) | 196 (20) | 115 (12) | 2.57 | 0.690 | Yes |
| Did you know that e-resources allow students to access materials such as syllabi or course outlines? | 671 (68) | 238 (24) | 84 (8) | 2.59 | 0.641 | Yes |
| Did you know that PBL can run in a completely online environment? | 532 (53) | 308 (31) | 155 (16) | 2.38 | 0.740 | Yes |
| Did you know that simulations (game-informed learning or virtual patients) can be delivered online? | 332 (33) | 321 (32) | 341 (34) | 1.99 | 0.823 | Unsure |

Notes: Bold values highlight the calculated weighted average.

Abbreviations: PBL, problem-based learning; SD, standard deviation.

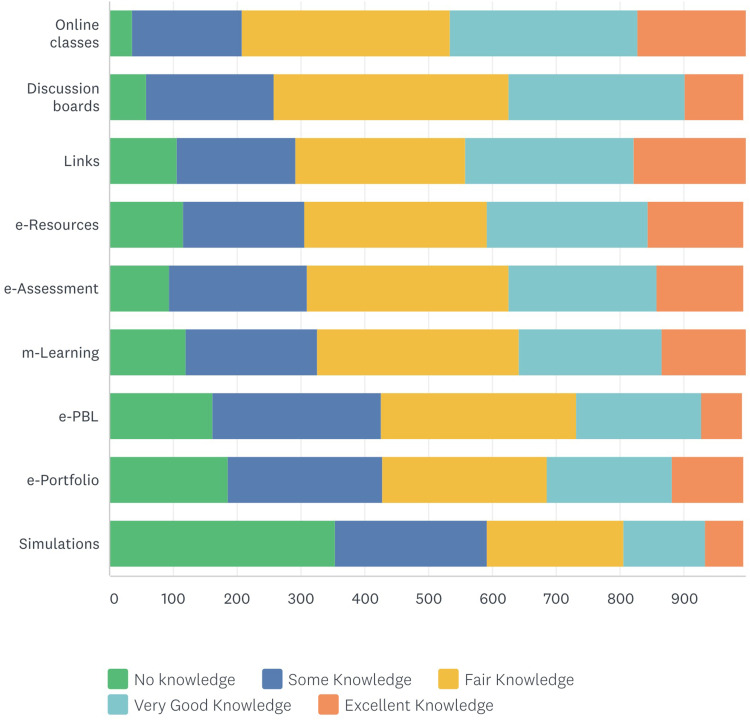

Nearly two-thirds of the respondents indicated that they gained a fair/very good amount of knowledge from online classes and discussion boards (65% [n=635] and 63% [n=620], respectively). Additionally, 55% (n=548), 55% (n=541), 54% (n=529), 54% (n=539), and 51% (n=503) of the respondents indicated that they gained a fair/very good amount of knowledge from e-assessments, mobile learning, links, e-resources, and e-PBL (problem-based learning), respectively. In contrast, 24% (n=238) responded that they gained only “some knowledge” from the simulations. Table 3 and Figure 1 display the benefits that the respondents gained from using e-learning tools.

Table 3.

Survey Results for Benefits Gained from e-Learning Tools

| e-Learning Tools | No Knowledge, n (%) | Some Knowledge, n (%) | Fair Amount of Knowledge, n (%) | Very Good Amount of Knowledge, n (%) | Excellent Amount of Knowledge, n (%) | Mean | SD | Result |
|---|---|---|---|---|---|---|---|---|
| Online classes | 36 (4) | 172 (17) | 326 (33) | 294 (30) | 167 (17) | 3.39 | 1.066 | Fair knowledge |
| Discussion boards | 57 (6) | 201 (20) | 368 (37) | 276 (28) | 92 (9) | 3.15 | 1.029 | Fair knowledge |
| Links | 105 (11) | 187 (19) | 265 (27) | 264 (27) | 174 (17) | 3.22 | 1.237 | Fair knowledge |
| e-resources | 116 (12) | 189 (19) | 287 (29) | 252 (25) | 149 (15) | 3.13 | 1.223 | Fair knowledge |
| m-Learning | 120 (12) | 205 (21) | 316 (32) | 225 (23) | 129 (13) | 3.04 | 1.197 | Fair knowledge |
| e-assessments | 94 (9) | 215 (22) | 316 (32) | 232 (23) | 137 (14) | 3.10 | 1.170 | Fair knowledge |
| e-PBL | 162 (16) | 263 (27) | 307 (31) | 196 (20) | 64 (6) | 2.73 | 1.142 | Fair knowledge |
| e-portfolio | 186 (19) | 242 (24) | 258 (26) | 195 (20) | 112 (11) | 2.80 | 1.266 | Fair knowledge |
| Simulations | 353 (36) | 238 (24) | 215 (22) | 128 (13) | 59 (6) | 2.30 | 1.239 | Some knowledge |

Notes: Bold values highlight the calculated weighted average.

Abbreviations: m-Learning, mobile learning; e-PBL, problem-based learning; SD, standard deviation.

Figure 1.

Respondents’ reported benefits gained from e-learning tools.

Abbreviations: m-Learning, mobile learning; e-PBL, problem-based learning.

Overall, 45% (n=449) had “somewhat” adequate knowledge, 36% (n=361) had “somewhat” proper training, 29% (n=289) had “somewhat” positive feelings, and 31% (n=310) were “somewhat” comfortable engaging in e-learning. In addition, 25% (n=247) were “undecided” when asked whether they had no patience when experiencing minor technical issues. Table 4 displays the survey results concerning the respondents’ attitudes.

Table 4.

Survey Results for Attitudes Toward e-Learning

| Statement | Not at All, n (%) | Not Really, n (%) | Undecided, n (%) | Somewhat, n (%) | Very Much, n (%) | Mean | SD | Result |
|---|---|---|---|---|---|---|---|---|
| I have adequate knowledge about e-learning | 28 (3) | 130 (13) | 152 (15) | 449 (45) | 234 (24) | 3.74 | 1.048 | Somewhat |
| I have proper training with e-learning | 56 (6) | 201 (20) | 166 (17) | 361 (36) | 211 (21) | 3.47 | 1.190 | Somewhat |
| I feel I have positive feelings about e-learning | 100 (10) | 164 (17) | 162 (16) | 289 (29) | 279 (28) | 3.49 | 1.322 | Somewhat |
| I am comfortable with engaging in any e-learning programs | 80 (8) | 157 (16) | 176 (18) | 310 (31) | 272 (27) | 3.54 | 1.263 | Somewhat |
| I feel overwhelmed with the entire process of e-learning | 97 (10) | 217 (22) | 241 (24) | 271 (27) | 168 (17) | 3.20 | 1.233 | Somewhat |
| I have no patience while navigating minor technical issues | 102 (10) | 267 (27) | 247 (25) | 272 (27) | 106 (11) | 3.01 | 1.175 | Undecided |

Notes: The students used an ordinal scale to report how much they agreed/disagreed with a statement (eg, from “not at all” to “very much”). Bold values highlight the calculated weighted average.

Abbreviation: SD, standard deviation.

The majority of respondents, 55% (n=545), experienced poor Internet speed, and 51% (n=512) reported a lack of clinical experience and physical examination skills. Table 5 presents the results for the remaining challenges.

Table 5.

Survey Results for Challenges Faced with e-Learning

| Challenge | Responses, n (%) |
|---|---|
| Poor Internet speed | 545 (55) |
| Lack of clinical experience and physical examination skills | 512 (51) |
| Lack of technical support | 374 (38) |
| Inadequate quality | 320 (32) |
| Lack of training | 300 (30) |
| Lack of social interactions | 275 (28) |
| Lack of communication from the institution | 257 (26) |
| Lack of feedback | 220 (22) |
| Lack of user skills | 207 (21) |
| Concerns about professional development | 188 (19) |
| Lack of guidelines | 180 (18) |
| Lack of engagement | 166 (17) |
| Psychological issues | 164 (16) |
| Lack of user knowledge | 153 (15) |
| Lack of privacy | 134 (13) |
| Lack of multimedia use | 109 (11) |
| Lack of devices and programs | 106 (11) |
| Lack of desktop computers | 74 (7) |
| Alignment with current curriculum | 68 (7) |
| Confidentiality | 66 (7) |
| Lack of security | 52 (5) |
| Cost | 37 (4) |
| Validity and applicability | 36 (4) |
| Lack of access using username and password | 33 (3) |
| Other | 31 (3) |
| Legal and ethical issues | 16 (2) |

Notes: Bold values highlight the most common responses.

Table 6 indicates that the mean second-semester test score was higher (8.81 ± 1.22) than the mean first-semester test score (7.65 ± 1.35). The absence rate was lower in the second semester (2.98 ± 4.66) than in the first semester (3.35 ± 4.53).

Table 6.

Academic Performance of Respondents (N=995)

| Measure | Mean ± SD |
|---|---|
| Test score | |
| First semester | 7.65 ± 1.35 |
| Second semester | 8.81 ± 1.22 |
| Absence rate | |
| First semester | 3.35 ± 4.53 |
| Second semester | 2.98 ± 4.66 |

There was a statistically significant difference between the first- and second-semester test scores for year 6, year 5, year 4, and year 2 students (t = −4.394, −16.129, −13.240, and −4.258, respectively; P value < 0.05). The mean differences indicate that the students performed better on the second-semester tests, after the transition to e-learning. However, the difference was not statistically significant for the third-year students (t = −1.566, P value = 0.057), as illustrated in Table 7.

Table 7.

Effect of e-Learning on Undergraduate Medical Students’ Academic Performance

| Academic Year | n | Mean Differences | SD | Std. Error Mean | t-Test | p-value |
|---|---|---|---|---|---|---|
| Year 6 | 220 | −0.2259 | 0.7626 | 0.0514 | −4.394 | 0.001 |
| Year 5 | 160 | −1.71300 | 1.34341 | 0.10621 | −16.129 | 0.020 |
| Year 4 | 232 | −1.60366 | 1.84488 | 0.12112 | −13.240 | 0.010 |
| Year 3 | 191 | −1.27429 | 4.2315 | 0.81352 | −1.566 | 0.057 |
| Year 2 | 185 | −2.01286 | 6.43024 | 0.47276 | −4.258 | 0.021 |

Notes: Paired sample t-test. Bold values highlight the statistically significant results.

Abbreviations: SD, standard deviation; Std. Error Mean, standard error of the mean.
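The relationship between the columns of Table 7 can be sketched from the summary values alone: for a paired sample t-test, the standard error is the SD of the paired differences divided by the square root of n, and t is the mean difference divided by that standard error. The function name `paired_t_from_summary` is hypothetical, and the study does not state which software produced the reported values; this is only an illustration using the year 6 row.

```python
import math

def paired_t_from_summary(mean_diff, sd_diff, n):
    """Return (standard error, t statistic, degrees of freedom)."""
    se = sd_diff / math.sqrt(n)  # standard error of the mean difference
    t = mean_diff / se           # paired-sample t statistic
    return se, t, n - 1

# Year 6 row of Table 7: mean difference, SD of the differences, and n.
se, t, df = paired_t_from_summary(-0.2259, 0.7626, 220)
print(f"SE = {se:.4f}, t = {t:.3f}, df = {df}")
```

A P value would additionally require the t distribution with n − 1 degrees of freedom, which is outside the Python standard library and is omitted here.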

Discussion

The COVID-19 pandemic has accelerated the digital transformation of the education system as part of Vision 2030 in Saudi Arabia. After the transition to e-learning, the medical students in our study knew about e-learning tools, gained a fair amount of knowledge, and had acceptable attitudes towards e-learning. However, students faced problems such as poor Internet speed and a lack of clinical experience and physical examination skills. Test scores and attendance were both higher in the second semester.

Our study demonstrated that this population of students knew about mobile learning, links, online classes, e-assessments, discussion boards, e-portfolios, and e-resources. This is similar to research by Sud et al, which demonstrated that 85% of students knew about e-learning tools.15 However, this is in contrast to a report from Brazil, where Carvalho et al found that students in public universities did not know about e-learning because of a lack of resources.16 Moreover, in our study, the students reported that they gained a fair amount of knowledge, which is similar to Kaur et al’s results from a survey of 983 students that demonstrated that e-learning increased knowledge.17 Further, a systematic review and meta-analysis demonstrated that e-learning enhanced undergraduates’ knowledge.18

Nonetheless, the students reported that they were “unsure” whether e-learning could be used for simulations or virtual patients. This might be because the students in the early academic years had not yet been exposed to simulation-based learning, unlike those in later academic years. Kaltman et al found that it is feasible to incorporate interactive simulations into the curriculum, which appealed to students.19 While simulated patients and e-learning technology are already a part of medical education, they are not available in many institutions, and substantial faculty time is required to implement this technology.20 When utilized properly, simulation-based learning can provide the knowledge, skills, communications, and proficiencies necessary for successful medical education.

Our results demonstrated that students have acceptable attitudes toward e-learning. Overall, the students remarked that they had “somewhat” adequate knowledge, proper training, and positive feelings in regard to e-learning and that they felt “somewhat” comfortable. This is similar to Sandhu et al’s findings, which demonstrated positive attitudes among students who used online learning.21

Digitalization of course material can help medical students overcome limitations associated with traditional learning. However, e-learning presents unique challenges, such as the poor Internet speed also reported by Amir et al22 and Sud et al.15 Further, students reported a lack of clinical experience and physical examination skills. This is mainly because all hospitals suspended clinical activities (observerships, bedside teaching, clerkships, and electives), which is similar to the experience of students in other countries such as the UK,23 and a systematic review identified similar challenges imposed by removing students from hospitals. This resulted in a loss of direct patient interaction, feedback from clinicians, confidence, competencies, and development as future physicians.24 To mitigate these losses, traditional sessions can be replaced with innovative virtual solutions. For example, the implementation of virtual bedside rounds using videoconferencing by experienced physicians led to improved student knowledge and engagement in 93% of respondents.25 Moreover, a virtual clerkship program was enjoyed by students, the feedback was positive, and it increased their clinical reasoning and communication skills.25 Our results are in line with a systematic review26 in terms of the technical issues. However, our study respondents did not report significant issues with confidentiality (only 7%) or reduced student engagement (17%).

Academic performance is an educational outcome of concern to universities, teachers, and students, and it is usually measured using examination results or continuous assessment. However, there is no general agreement on which aspects are the most important and how it is best tested. We observed an interesting finding: after the transition to e-learning because of the COVID-19 pandemic (during the second semester), the students’ test scores increased compared to the first semester. While there is concern about conducting valid and secure virtual exams,27 frequent testing, spaced learning, scaffolding, and interleaving are potential advantages of e-learning and can enhance academic performance. Additionally, we observed fewer student absences in the second semester, which is similar to Da Silva’s findings that demonstrated improved class attendance due to ease of access.28

This study has several strengths. First, this study is the first in the region to describe medical students’ e-learning knowledge, attitudes, and challenges, and it is the first to explore the influence of e-learning on medical students’ academic performance during the COVID-19 pandemic. Second, we used a rigorous method to design and generate items in a manageable list and conduct, validate, and administer the survey instrument. Moreover, it is the first instrument of its kind to be developed thus far. Third, we collected data in a realistic setting and had a high response rate.

However, this study has limitations that restrict the inferences that can be drawn from the data. First, the participants were from a single academic institution, and participation was restricted to undergraduate medical students. Second, learning experiences and students vary between countries, and this could limit the generalizability of the findings. Ideally, we would collect responses from different institutions globally. Third, we measured academic performance changes using summative test scores in the first and second semesters for all academic years before and after the transition to e-learning. Additionally, there was no control group, and comparing test scores between two different semesters means that different topics may have been assessed.

Our study adds to the existing knowledge of how educational systems responded to the COVID-19 pandemic. Our university and many others across the world already have the infrastructure to switch to e-learning. As this study presents students’ views and challenges, perhaps the next step is to focus on the faculties to understand their views, needs, and skills for implementing digital education. World-class medical education experts’ webinars should be conducted to explore more options and motivate students and faculties.

This study can expose challenges to e-learning that could be improved by increasing Internet speeds and designing tools to enhance the clinical experience and physical examination skills using virtual or simulated patients. In particular, virtual reality can add value to e-learning for clinical teaching, and educators need to integrate innovative designs in the curriculum to achieve desired educational goals. Some researchers have argued that e-learning cannot substitute real patient encounters.15,29 However, as the length of the COVID-19 pandemic is still unknown, further research is necessary to determine this outcome. Moreover, COVID-19 has created a new norm and paved the way for the transition to a digital era in most aspects of our life. Addressing these challenges can improve e-learning and enhance students’ and faculties’ willingness to engage in e-learning programs.

The delivery of e-learning is potentially subject to more variation, as several questions remain unanswered, such as ethical issues and professional development. Additionally, it can also affect interpersonal relationships, communication, social interaction, medical-legal aspects, psychological support, privacy, and logistics. Many institutions have switched to e-learning programs in response to the pandemic, and the outcomes of the switch to e-learning require further evaluation. Moreover, e-learning may only be feasible and applicable at certain institutions because of the high cost of using and maintaining the platforms. Further, fair medical education systems with adaptability and a strong medical school community are warranted.30

Future research could address the availability of funding to invest in infrastructure, e-learning modalities, learning materials, quality, information management systems, support, and resources. Further, partnerships with international experts are needed for continuous improvement, to fill the gaps in education systems, and to create personalized learning programs. The digital revolution needs collaboration among IT experts, educators, and private organizations to build a state-of-the-art platform that connects students, teachers, and devices effectively. As the sample size is small in many studies, future research could assess the reliability of e-learning using larger samples and explore whether artificial intelligence could be incorporated into digital education.

Conclusion

E-learning is a developing tool for teaching and learning. If implemented in the appropriate venues, it can improve educational outcomes and reduce the burden on teachers, clinicians, and students. Challenges associated with e-learning during the COVID-19 pandemic are yet to be addressed and are worth pursuing in further research. Collaboration and partnership with telecommunication companies, policymakers, and medical educators can lower costs in the long run and enrich universities’ teaching and learning experiences.

Acknowledgments

We thank all medical students at UQU who participated in this survey.

Abbreviations

COVID-19, coronavirus disease 2019; e-PBL, electronic problem-based learning; IT, information technology; m-Learning, mobile learning; UQU, Umm Al Qura University.

Author Contributions

The author made a significant contribution to the work reported, whether that is in the conception, study design, execution, acquisition of data, analysis and interpretation, or in all these areas; took part in drafting, revising or critically reviewing the article; gave final approval of the version to be published; agreed on the journal to which the article has been submitted; and agreed to be accountable for all aspects of the work.

Disclosure

The author reports no conflicts of interest in this work.

References

1. Hassounah M, Raheel H, Alhefzi M. Digital response during the COVID-19 pandemic in Saudi Arabia. J Med Internet Res. 2020;22(9):e19338. doi: 10.2196/19338

2. Saudi Vision 2030. Available from: https://vision2030.gov.sa/en. Accessed December 22, 2020.

3. Information and statistics. Available from: https://www.moe.gov.sa/en/knowledgecenter/dataandstats/Pages/infoandstats.aspx#. Accessed December 22, 2020.

4. School closures caused by Coronavirus (Covid-19). Available from: https://en.unesco.org/covid19/educationresponse. Accessed December 16, 2020.

5. Saudi Arabia announces first case of coronavirus. Available from: https://www.arabnews.com/node/1635781/saudi-arabia. Accessed December 21, 2020.

6. Car J, Carlstedt-Duke J, Tudor Car L, et al. Digital education in health professions: the need for overarching evidence synthesis. J Med Internet Res. 2019;21(2):e12913. doi: 10.2196/12913

7. Martinengo L, Yeo NJY, Tang ZQ, Markandran KD, Kyaw BM, Tudor Car L. Digital education for the management of chronic wounds in health care professionals: protocol for a systematic review by the digital health education collaboration. JMIR Res Protoc. 2019;8(3):e12488. doi: 10.2196/12488

8. George PP, Papachristou N, Belisario JM, et al. Online eLearning for undergraduates in health professions: a systematic review of the impact on knowledge, skills, attitudes and satisfaction. J Glob Health. 2014;4(1). doi: 10.7189/jogh.04.010406

9. Sharma AK, Sharma HR, Choudhary U. E-learning versus traditional teaching in medical education: a comparative study. JK Sci. 2020;22(3):137–140. Available from: https://www.jkscience.org/.

10. Burns KEA, Duffett M, Kho ME, et al. A guide for the design and conduct of self-administered surveys of clinicians. CMAJ. 2008;179(3):245–252. doi: 10.1503/cmaj.080372

11. Passmore C, Dobbie AE, Parchman M, Tysinger J. Guidelines for constructing a survey. Fam Med. 2002;34(4):281–286.

12. Collins D. Pretesting survey instruments: an overview of cognitive methods. Qual Life Res. 2003;12(3):229–238. doi: 10.1023/A:1023254226592

13. Woodward CA. Questionnaire construction and question writing for research in medical education. Med Educ. 1988;22(4):345–363. doi: 10.1111/j.1365-2923.1988.tb00764.x

14. Warmbrod JR. Reporting and interpreting scores derived from Likert-type scales. J Agric Educ. 2014;55(5):30–47. doi: 10.5032/jae.2014.05030

15. Sud R, Sharma P, Budhwar V, Khanduja S. Undergraduate ophthalmology teaching in COVID-19 times: students’ perspective and feedback. Indian J Ophthalmol. 2020;68(7):1490. doi: 10.4103/ijo.IJO_1689_20

16. Carvalho VO, Conceição LSR, Gois MB Jr. COVID-19 pandemic: beyond medical education in Brazil. J Card Surg. 2020;35(6):1170–1171. doi: 10.1111/jocs.14646

17. Kaur N, Dwivedi D, Arora J, Gandhi A. Study of the effectiveness of e-learning to conventional teaching in medical undergraduates amid COVID-19 pandemic. National Journal of Physiology, Pharmacy and Pharmacology. 2020;10(7):563–567. doi: 10.5455/njppp.2020.10.04096202028042020

18. Pei L, Wu H. Does online learning work better than offline learning in undergraduate medical education? A systematic review and meta-analysis. Med Educ Online. 2019;24(1):1666538. doi: 10.1080/10872981.2019.1666538

19. Kaltman S, Talisman N, Pennestri S, Syverson E, Arthur P, Vovides Y. Using technology to enhance teaching of patient-centered interviewing for early medical students. Simul Healthc. 2018;13(3):188–194. doi: 10.1097/SIH.0000000000000304

20. Geha R, Dhaliwal G. Pilot virtual clerkship curriculum during the COVID-19 pandemic: podcasts, peers and problem-solving. Med Educ. 2020;54(9):855–856. doi: 10.1111/medu.14246

21. Sandhu P, de Wolf M. The impact of COVID-19 on the undergraduate medical curriculum. Med Educ Online. 2020;25(1):1764740. doi: 10.1080/10872981.2020.1764740

22. Amir LR, Tanti I, Maharani DA, et al. Student perspective of classroom and distance learning during COVID-19 pandemic in the undergraduate dental study program Universitas Indonesia. BMC Med Educ. 2020;20(1):392. doi: 10.1186/s12909-020-02312-0

23. Ahmed H, Allaf M, Elghazaly H. COVID-19 and medical education. Lancet Infect Dis. 2020;20(7):777–778. doi: 10.1016/S1473-3099(20)30226-7

24. Dedeilia A, Sotiropoulos MG, Hanrahan JG, Janga D, Dedeilias P, Sideris M. Medical and surgical education challenges and innovations in the COVID-19 era: a systematic review. In Vivo (Brooklyn). 2020;34(3 Suppl):1603–1611. doi: 10.21873/invivo.11950

25. Hofmann H, Harding C, Youm J, Wiechmann W. Virtual bedside teaching rounds with patients with COVID-19. Med Educ. 2020;54(10):959–960. doi: 10.1111/medu.14223

26. Wilcha R-J. Effectiveness of virtual medical teaching during the COVID-19 crisis: systematic review. JMIR Med Educ. 2020;6(2):e20963. doi: 10.2196/20963

27. Kaup S, Jain R, Shivalli S, Pandey S, Kaup S. Sustaining academics during COVID-19 pandemic: the role of online teaching-learning. Indian J Ophthalmol. 2020;68(6):1220. doi: 10.4103/ijo.IJO_1241_20

28. Da Silva BM. Will virtual teaching continue after the COVID-19 pandemic? Acta Med Port. 2020;33(6):446. doi: 10.20344/amp.13970

29. Woolliscroft JO. Innovation in response to the COVID-19 pandemic crisis. Acad Med. 2020;95(8):1140–1142. doi: 10.1097/ACM.0000000000003402

30. Mann S, Novintan S, Hazemi-Jebelli Y, Faehndrich D. Medical students’ corner: lessons from COVID-19 in equity, adaptability, and community for the future of medical education. JMIR Med Educ. 2020;6(2):e23604. doi: 10.2196/23604