Abstract

Given their inherent complexity, we need a better understanding of what is happening inside the “black box” of population health interventions. The theory-driven intervention/evaluation paradigm is one approach to meeting this need. However, barriers related to semantic or practical issues stand in the way of its full integration into evaluation designs. In this paper, we attempt to clarify how various theories, models and frameworks can contribute to developing a context-dependent theory that helps us to open the black box of population health interventions and to acknowledge their complexity. To this end, we clarify what could be referred to as “theory” in the theory-driven evaluation of the interventional system, distinguishing it from other models, frameworks and classical theories. To evaluate the interventional system within a theory-driven paradigm, we put forward the concept of interventional system theory (ISyT), which combines a causal theory and an action model. We suggest that an ISyT could guide evaluation processes, whatever evaluation design is applied, and we illustrate this approach with examples from several studies. We believe that such a clarification can help to promote the use of theories in complex intervention evaluations and to identify ways of considering the transferability and scalability of interventions.

Keywords: System, Evaluation, Theory, Public health

Introduction

Experimental designs are popular in health research because of their high internal validity [1]. Internal validity reflects the ability to control confounding variables (i.e., high internal validity gives substantial confidence that the results are due to the intervention itself). To limit confounding, experiments rely on the principle of “all things being equal” (e.g., regarding population characteristics and external factors) and on a high degree of intervention standardization, both of which are in fact remote from real-life conditions (e.g., delivery modalities, stakeholder compliance and patient selection). The result is findings presented as universal laws, in which contextual influences are treated as bias [2].

However, population health interventions (PHIs) are generally considered complex, as they include several components that interact with one another to produce a number of outcomes [3]. Moreover, beyond its components, an intervention should not be isolated from the specific context in which it is implemented [3–5]. Indeed, rather than an intervention, it should be considered an “interventional system” [5, 6] that includes pre-existing contextual parameters, which may lie within or outside the control of intervention developers and implementers. Hence, an evaluation should assume that (i) the contribution of each component of this interventional system, as well as the effect of their combination, must be evaluated; (ii) the conclusions of the study or trial are context-based; and (iii) some of these conclusions (i.e., the key functions) could nonetheless be transferable to other settings [12].

The question thus becomes: How should the effect of individual components of this interventional system and their interactions be evaluated to identify the key functions? Such an analysis is necessary to define the conditions of intervention transferability and scalability.

One way to do this would be to theorize interventions using the theory-driven evaluation (TDE) paradigm [6, 7]. A TDE [8–10] is based on contribution analysis [11], which addresses causal questions in real-life programme evaluations [12]. The aim is to reduce uncertainty about the contribution of every input that could contribute to the outcome. A TDE could be an evaluation design in its own right, as an alternative to an experimental trial (e.g., a realist evaluation), or it could be combined with or integrated into a classical experimental design [7, 13, 14]. The core principle is to base the evaluation, including its data collection, on an explicit theory that sets out the features of the intervention to be made explicit and validated by the evaluation process.

What theory are we talking about in TDE, however? While various methodological studies have acknowledged that this theory-based approach is crucial [7, 16], they have also noted a tendency to pick a theory “off the shelf” rather than use task-specific theories of change, even though many dominant theories “have done little to make interventions more effective” [16]. These points remind us of the need to pay careful attention to evidence-based arguments when selecting a theory [16]. Like other authors [7], they stress that integrating TDE more fully into evaluation designs requires clarifying what we actually mean by theory, as “people are talking at cross purposes in relation to the various kinds of theory” [7]. We hypothesize that if TDE is underused in PHI research (PHIR) [15], this is because the so-called theory has not been defined and clear, practical guidelines for designing and validating it are lacking.

This article therefore aims to further the use of TDE through two pragmatic suggestions based on our practical experience, namely, clarification of (i) what the “theory” in TDE could be and (ii) how it might be employed in intervention development and evaluation designs.

Designing and qualifying a theory in TDE

A theory has been defined as “a set of analytical principles or statements designed to structure our observation, understanding, and explanation of the world” [16]. This definition is broad and can generate confusion. Seeking to clarify it in the field of implementation science, Nilsen [16] distinguished three main conceptualizations: a theory, which is explanatory and “made up of definitions of variables (…) and a set of relationships between the variables and specific predictions”; a model, which is descriptive rather than explanatory and provides a “deliberate simplification of a phenomenon or a specific aspect of a phenomenon”; and a framework, which is also descriptive rather than explanatory, “presenting categories (e.g., concepts, constructs, or variables) and the relations between them that are presumed to account for a phenomenon”. Nilsen also describes five theoretical approaches in implementation science: process models, determinant frameworks, classical theories, implementation theories, and evaluation frameworks [16]. The boundaries between these categories are thin, and some overlap exists. For example, some “classical theories” (i.e., essentially psychosocial theories) are called “models” despite offering explanations, such as the health belief model [17]. Evaluation frameworks (e.g., TDE) provide another example: they describe the steps involved in conducting an evaluation and can therefore also be seen as process models dedicated to evaluation.

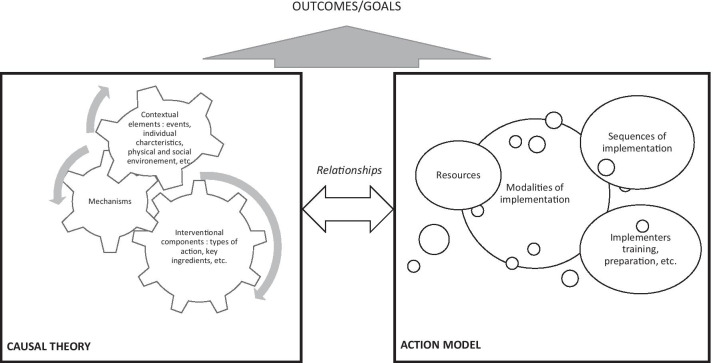

In this context, it is not easy to pin down what the theory in TDE is: is it one of the abovementioned theories, models or frameworks, or a combination of them? In TDE, the theory explains how a programme generates its effects (why and how the intervention works) by defining a set of explicit or implicit assumptions held by stakeholders about which action is required to solve a problem and why the problem will respond to this action [9, 18, 19]. Building on our previous work on interventional systems, which assumes a blurred boundary between context and intervention components [5, 20], we consider that the theory in TDE should integrate elements arising from other theories, models and frameworks, because (i) it is explanatory, considering which causal pathway is expected to meet the goal, similar to classical theories; (ii) it hypothesizes which specific actions and sequences of implementation contribute to this causal pathway, similar to a process model; and (iii) it considers contextual elements and their influence within a specific setting. Here we take on board Moore and Evans’s statement [21]: “[A] single theory will not tell the whole story because it could place weight on some aspects (e.g., certain causal factors) at the expense of others.” From this perspective, favouring one theoretical approach over another could lead to a partial understanding of the interventional system; the theory in TDE should draw on all of these approaches, provided that they are well understood and differentiated. We therefore suggest introducing the concept of interventional system theory (ISyT), which combines what we define as:

Causal theory (the term “theory” was chosen because of its explanatory aspect, although it is independent of any single classical theory): Causal theory involves explanatory and mechanistic components, but it also considers all of the determinants likely to act as barriers or enablers to meeting goals, as well as the actions that trigger the expected mechanisms.

Action model (the term “model” was chosen because of its sequential pattern, as in a process model): Action models are employed by developers and implementers. They provide concrete elements of action and implementation intended to guide the process towards the intended goals. The core aspect of the action model is its focus not only on the activities that contribute to outcomes, but also on the sequences, resources and prerequisites needed to implement them.

Figure 1 describes ISyT.

Fig. 1.

Interventional system theory
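The two components of an ISyT can be sketched as a simple data structure: the causal theory holds mechanisms, determinants and triggering actions, while the action model holds sequenced activities with their resources and prerequisites. This is a minimal, hypothetical sketch, not a tool used in our studies. All class and method names (`CausalTheory`, `ActionModel`, `Step`, `missing_prerequisites`) are our own, and the example content paraphrases the self-efficacy illustration in the next section.

```python
from dataclasses import dataclass, field


@dataclass
class CausalTheory:
    """Explanatory part of an ISyT: what should happen, and why."""
    goal: str
    mechanisms: list[str]          # e.g. motivation, self-efficacy
    enablers: list[str]            # contextual determinants favouring the goal
    barriers: list[str]            # contextual determinants hindering the goal
    triggering_actions: list[str]  # actions expected to trigger the mechanisms


@dataclass
class Step:
    """One step of the action model and the resources it requires."""
    activity: str
    resources: list[str]
    prerequisites: list[str] = field(default_factory=list)  # earlier activities


@dataclass
class ActionModel:
    """Pragmatic part of an ISyT: an ordered sequence of implementation steps."""
    steps: list[Step]

    def missing_prerequisites(self) -> list[tuple[str, str]]:
        """Return (activity, prerequisite) pairs whose prerequisite is not
        scheduled earlier in the sequence."""
        done: set[str] = set()
        missing: list[tuple[str, str]] = []
        for step in self.steps:
            for prereq in step.prerequisites:
                if prereq not in done:
                    missing.append((step.activity, prereq))
            done.add(step.activity)
        return missing


@dataclass
class ISyT:
    """Interventional system theory = causal theory + action model."""
    causal_theory: CausalTheory
    action_model: ActionModel


# Illustrative (hypothetical) content based on the self-efficacy example.
theory = ISyT(
    causal_theory=CausalTheory(
        goal="behaviour change",
        mechanisms=["motivation", "self-efficacy"],
        enablers=["provision of resources supporting the change"],
        barriers=["lack of resources supporting the change"],
        triggering_actions=["positive feedback on the change process"],
    ),
    action_model=ActionModel(steps=[
        Step("sensitize relatives and community", resources=["training"]),
        Step("provide positive feedback",
             resources=["professionals", "relatives"],
             prerequisites=["sensitize relatives and community"]),
    ]),
)
print(theory.action_model.missing_prerequisites())  # []
```

The `missing_prerequisites` check reflects the action model's emphasis on sequences and prerequisites. Reversing the two steps would flag that feedback from relatives is scheduled before they have been sensitized.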

Articulation of various theoretical approaches with ISyT

Once the ISyT has been defined, the question of its articulation with existing theories, models and frameworks arises. In fact, we suggest that all of its components could be informed by these theories a priori. Indeed, a causal theory could be informed by explanatory theories such as classical theories (e.g., mechanisms and causal relationships between variables) or by determinant frameworks (contextual influencing parameters), and the action model by implementation theories or process models.

For example, one of the mechanisms involved in behavioural change is motivation, which is enhanced by self-efficacy (another mechanism). Motivation and self-efficacy and their influence on behaviour (the goal) have been documented in numerous classical theories. One way to increase self-efficacy is to provide positive feedback on the change process (an interventional component), an approach documented by several implementation theories. This positive feedback could be provided by professionals (another interventional component), but also by relatives or communities around the person, who need to be involved and sensitized to support the person in the change process (a further interventional component). Some experiments have explained how to mobilize these communities according to specific or generalizable process models that involve training or supportive processes. The ability to do this may depend on multiple contextual elements that can act as barriers or enablers. For instance, motivation to change could be impeded or favoured by the opportunity for change, depending on whether resources to support the change are lacking or provided. The roles of these contextual factors have been documented by numerous socioecological determinant frameworks [22–25].

Hence, we attempted to synthesize these different approaches (frameworks, models, classical theories, etc.) in the field of PHI to assess how they might help to clarify theoretical understanding of the interventional system. This work is summarized in Table 1, which sets out the contribution of each approach to an understanding of the interventional system and thereby helps to define the components of the ISyT. We did not include the methodological frameworks that may be useful for defining the development and evaluation stages of ISyT (such as TDE frameworks).

Table 1.

Four theoretical approaches for understanding the population health interventional system (ISy)

| Terms | Definition | Constructs | Purpose | Specificities | Examples in the public health field | Value in understanding intervention systems |
|---|---|---|---|---|---|---|
| Determinant framework | An overview of determinants and categories presumed to account for a phenomenon by acting as barriers and enablers | Environmental determinants; sociological determinants; psychological determinants; organizational determinants | Providing clues as to how the micro–meso–macro context could influence a health phenomenon | Multilevel; with multiple influences; provides no explanation, only clues; derived from empirical studies of barriers and enablers | Social determinant frameworks [22] | Identifying all of the elements to be considered in understanding the system from multilevel points of view |
| Classical theory | An explanatory definition of relationships between variables and the specific results of their combinations | Psychosocial constructs; structural constructs; relationships among all constructs and specific predictions, especially those formulated as mechanisms | Explaining how and why specific relationships among a set of constructs lead to specific events | Focused on the mechanisms of effects; provides some explanations; derived from fundamental work in various disciplines (psychology, sociology, political sciences, etc.) | Behavioural: social cognitive theory [26]; organizational/social: social capital theories [27] | Identifying the mechanisms of effects and the factors potentially involved in their triggering |
| Process model | A deliberate simplification of a process describing how different resources could be combined to produce a change within a specific context | Variables relating to implementation (training, communication, decision, revision, etc.); may include some contextual elements influencing the delivery | Describing and/or guiding a process | Recognizing a temporal sequence and conditions of the progression of implementation endeavours; more or less emphasis on the context and its influence on delivery; derived from field expertise and experimentation | The PRECEDE–PROCEED model [28] | Identifying the combination of resources and activities, as well as their sequence, needed to produce a change |
| Implementation theories | A combination of classical theories and activities, with or without a temporal sequence | Implementation constructs; constructs involved in mechanisms triggering effects; mechanisms of effects | Explaining how and why specific relationships between a set of constructs and interventional elements lead to specific events | Derived from field expertise and experimentation; derived from fundamental work in various disciplines (psychology, sociology, political sciences, etc.) | The behaviour change wheel [29] | Linking mechanistic hypotheses and the resources and activities potentially influencing them to design or understand how interventional inputs could work |

The role of mechanisms in ISyT

The aim in designing an ISyT is to understand how mechanisms are produced [5, 6] and under which conditions they trigger specific results. Such mechanisms are consequently considered key functions of the interventional system [5, 6], and their integrity guarantees the transferability of an intervention. These key functions should be distinguished from their form, which reflects adaptation to the context. Note, too, that mechanisms have been defined in different ways [5, 26]. Machamer et al. [27] defined them as “entities and activities organized such that they are productive of regular changes from start or set-up to finish or termination of conditions”. Weiss distinguished them from activities, defining them as the response that activities generate [8, 28]. In a similar vein, in the realistic approach, a mechanism is “an element of reasoning and reaction of an agent with regard to an intervention productive of an outcome in a given context” [26]. In health psychology, mechanisms can be defined as “the processes by which a behavior change technique regulates behavior” [29]. Examples include how practitioners perceive an intervention’s usefulness or how individuals perceive their ability to change their behaviour.

These definitions share a common point: mechanisms are the inescapable prerequisites for change. In this respect, we argue that they are the key factors to investigate in a TDE, and the elements of an interventional system that must be reproduced when it is transferred to other settings [5, 6]. Indeed, many combinations of intervention and contextual elements could produce the same mechanism (e.g., some people respond to emotional support during smoking cessation, while others prefer technical support, but both types of support can trigger the same mechanism, namely the feeling of being reassured, helped and supported). When an intervention is transferred to other settings, implementation variations, population characteristics and organizational factors may change, producing either the expected mechanisms or different ones. The intervention process can therefore be adapted to each new context, provided that these adaptations still allow the expected mechanisms to occur; this is why mechanisms are the key functions that must be reproduced.
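The distinction between key functions and their context-dependent form can be expressed as a simple check, using the smoking-cessation example above. This is a hypothetical sketch rather than a validated method: the mapping from forms of support to mechanisms is assumed for illustration, and the names `FORM_TO_MECHANISMS`, `triggered_mechanisms` and `preserves_key_functions` are ours.

```python
# Hypothetical mapping from the *form* a component takes in a given setting
# to the mechanisms it is assumed to trigger (the key functions).
FORM_TO_MECHANISMS: dict[str, set[str]] = {
    "emotional support": {"feeling reassured, helped and supported"},
    "technical support": {"feeling reassured, helped and supported"},
    "no support": set(),  # illustrative counterexample: triggers nothing
}


def triggered_mechanisms(forms: list[str]) -> set[str]:
    """Union of the mechanisms assumed to be triggered by the given forms."""
    result: set[str] = set()
    for form in forms:
        result |= FORM_TO_MECHANISMS.get(form, set())
    return result


def preserves_key_functions(expected: set[str], adapted_forms: list[str]) -> bool:
    """An adaptation is acceptable if it still triggers every expected
    mechanism, whatever form the components take in the new context."""
    return expected <= triggered_mechanisms(adapted_forms)


expected = {"feeling reassured, helped and supported"}
print(preserves_key_functions(expected, ["emotional support"]))  # True
print(preserves_key_functions(expected, ["technical support"]))  # True
print(preserves_key_functions(expected, ["no support"]))         # False
```

In practice, the mapping itself is what a TDE must establish empirically; the sketch only shows why two different forms can both count as faithful transfers while a third does not.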

Thus, the characteristics described in Table 2 can be attributed to ISyT.

Table 2.

Characteristics of ISyT

| Characteristic | Description |
|---|---|
| An explanatory purpose | Hypothesizing how the intervention works within a context |
| A pragmatic role | Guiding how one should act to achieve a goal |
| A broad understanding of each element likely to influence outcomes | Including a systemic intervention/context approach |
| Context based | Conceived as a grounded theory describing all parameters in play in a specific context |
| Potentially generalizable | Highlighting the mechanisms of effect, which are conceived as the key functions of the intervention |

Using ISyT in the evaluation process

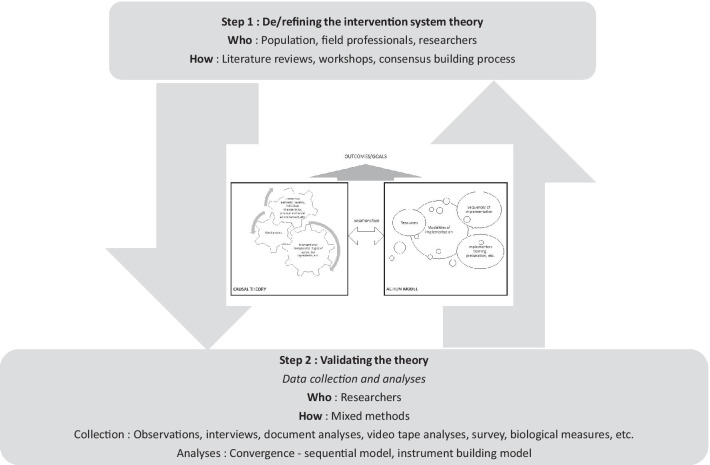

With reference to the theory-driven paradigm, ISyT contributes to the design and evaluation of an interventional system. As in theory-driven frameworks such as realistic evaluation [30] or the theory of change (TOC) framework [10], the process involves key steps in articulating definitions, revising the theory and collecting data to inform and refine it. To be complete and effective, this process should (i) be participatory, combining experiential and scientific knowledge and involving different stakeholders, including the populations targeted by the intervention, field professionals who develop the intervention and are aware of the context, and researchers who provide a global and multidisciplinary analysis of the phenomenon under study; (ii) employ an evidence-based and rigorous consensus-building process that includes, for example, literature reviews, workshops and exploratory studies; (iii) use a hybrid approach that combines hypothetico-deductive and inductive methods; and (iv) mobilize quantitative and qualitative data collected in mixed-methods designs [31, 32]. This process is depicted in Fig. 2.

Fig. 2.

Using ISyT in the evaluation process

The way in which an ISyT is developed depends on the subject, the actors involved (field professionals and population), the extent of their participation in the research process, and the point in the intervention development process at which it takes place (e.g., an intervention yet to be developed, partly developed, already implemented, or an adaptation of an existing intervention). It is thus difficult to prescribe a single method, except that, ideally, development should combine data from several sources: (i) data explaining the mechanisms to be activated (fundamental research, actors’ expertise, pre-existing theoretical frameworks); (ii) data explaining the influence of environments, actors and organizations on these mechanisms (determinants of change, existing and effective means of influencing these determinants); and (iii) data on the feasibility and acceptability of intervention components, enabling the intervention inputs to be adjusted (actors’ perceptions, concrete elements concerning the mobilization of the resources needed to keep intervention components applicable over time). These data must make it possible to understand all the components of the system to be considered and, as far as possible, to anticipate how they interact with each other. This involves combining reviews of the scientific literature, analyses of reports or results on pre-existing interventional levers or programmes, qualitative investigations (interviews or observations) with the actors involved, and consensus-building processes to stabilize the hypotheses put forward by the ISyT.

In the Transfert de Connaissances en REGion (TC-REG) study [40], the theory elaboration process involved two major steps. In step 1, we defined the initial middle-range theory and the knowledge-transfer scheme through (i) a literature review of evidence-based strategies for knowledge transfer and mechanisms to enhance evidence-based decision-making (e.g., the perceived usefulness of scientific evidence); (ii) a qualitative exploratory study in the four regions under study to collect information on existing actions and resources to implement knowledge-transfer strategies; and (iii) a workshop with experts in knowledge transfer, field professionals from the four regions, and TC-REG researchers to select the strategies to be implemented and develop hypotheses regarding the mechanisms potentially activated by these strategies together with any contextual factors potentially influencing them (e.g., the availability of scientific data). In step 2, we validated the initial middle-range theory through two qualitative studies, the first to identify specific actions implemented in the regional knowledge-transfer plan (one series of interviews), and the second to identify the final middle-range theories through two series of interviews and a workshop with the same stakeholders in order to elaborate the theory by combining strategies, contextual factors and the mechanisms to be activated.

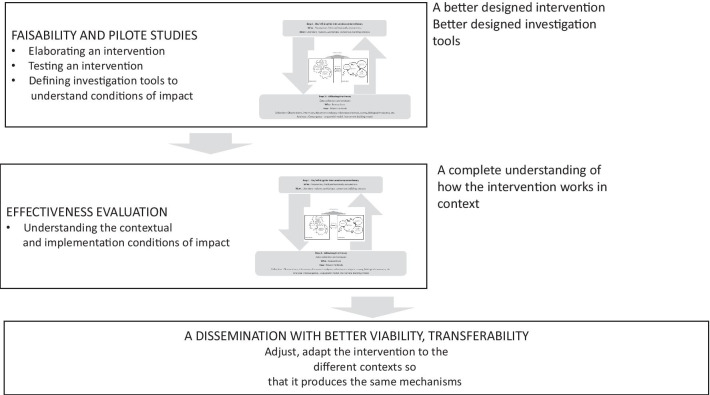

This process is compatible with experimental evaluations, whatever their stage of development, and could be combined with experimental designs [15]. Figure 3 presents the potential articulation between the interventional system and the experimental design, based on the three major steps of trials outlined in the Medical Research Council guidelines [3]: pilot study, evaluation of effectiveness and dissemination. Associating a theory-based approach with pilot studies and subsequent effectiveness studies contributes to better intervention and evaluation design [7], as well as a better understanding of how the intervention works in the designated context. Both contribute to wider dissemination of the intervention, given its improved viability under real-world conditions [33].

Fig. 3.

Articulating experimental design and ISyT

We used this process in the OCAPREV [Objets connectés et applications en prévention] study, for example, to design an evidence- and theory-based intervention, namely a health application, in a pilot study prior to an evaluation [34]. In the ee-TIS [e-intervention Tabac Info Service] study [35], we conducted a randomized controlled trial with a contribution analysis to evaluate a smoking-cessation app (Tabac Info Service). In addition to evaluating outcomes, the study sought to understand how each component of the app encouraged smoking cessation through the mechanisms it triggered (e.g., self-efficacy, perceived utility, confidence in the app) and the contextual parameters potentially influencing smoking cessation (e.g., smoking status of the domestic partner; presence of children; others’ support in smoking cessation; family, social or professional events).

Our process is also compatible with other theory-driven frameworks such as TOC or realistic evaluation. In TOC, for example, interventional components or ingredients are fleshed out and examined separately from the context. The initial hypothesis (the theory of change) is based on empirical or theoretical assumptions. Validation then assesses the extent to which the explanatory theory, including the implementation parameters, corresponds to observations. This explains how inputs, activities and outcomes are linked and how the various interventional components work together in a causal pathway involving causal inferences and implementation aspects to achieve the impact [10]. The difference from our approach is the lack of focus on mechanisms and on the influence of context; in TOC frameworks, the focus is instead on the intervention and its specific components. In the systemic approach of an ISyT, contextual elements and mechanisms of effect are explicitly included in the matrix [5]. This is what we did in the OCAPREV study, where the theory hypothesized 50 causal chains linking behavioural sources (capability, motivation, opportunity to change) with specific behaviour-change techniques and mechanisms of effect. Some technical recommendations (i.e., implementation processes or contextual elements) were added to these chains to improve the app’s accessibility, acceptability and contribution to reducing health inequalities.

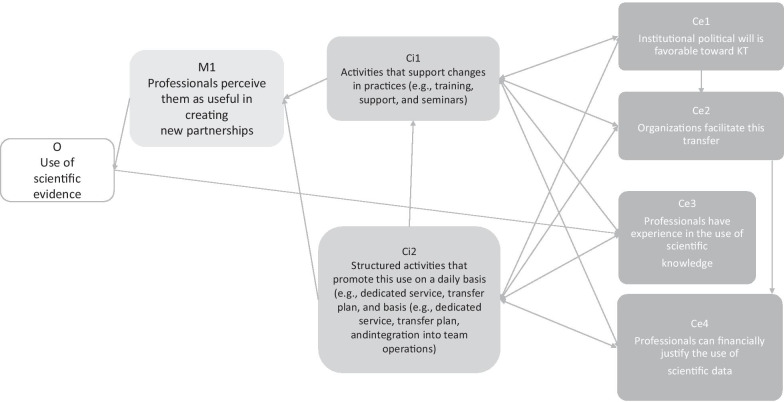

In realistic evaluations [30], contextual elements and mechanisms are considered core elements of middle-range theories, whereas interventional and implementation components, which are included in the ISyT, are not taken into consideration. Some authors have proposed including these interventional components, called “resources”, in the definition of mechanisms [36]. We do not share this view, preferring the definition of mechanism suggested by Lacouture et al. [26], which focuses on the reaction of stakeholders situated in the context (including their interventional input). Thus, according to our definition, what Dalkin [36] termed resources belongs to the contextual components (pre-existing resources) and the interventional components (resources provided by developers and implementers), rather than to the triggering mechanisms. Others have distinguished between intervention and context by referring to intervention, context, actors, mechanisms, outcomes (ICAMO) configurations [40]. Our interventional system approach, which deliberately blurs the distinction between intervention and context, does not share this perspective, which also sets actors apart from the context, whereas we consider them part of it. We prefer to keep the C (context)–M (mechanism)–O (outcome) triad, adapting it as follows: Ce (context external to the intervention)–Ci (interventional context)–M (mechanism)–O (outcome). For example, the goal of the TC-REG project [37] was to evaluate the conditions of effectiveness of a knowledge-transfer scheme aimed at evidence-informed decision-making (EIDM) in different public health organizations. The middle-range theories were made up of external factors (e.g., initial training of implementers, interest in dissemination of the knowledge-transfer scheme, leadership profile, political support in the organizations, time to study evidence-based data, team size) (Ce, external context), interventional components (e.g., access to evidence-based data, training courses, seminars, knowledge brokering) (Ci, interventional context) and mechanisms (M) triggered by the combination of both (perception of EIDM utility, incentive to make evidence-based decisions, self-efficacy to analyse and adapt evidence in practice, etc.) to produce outcomes (O).
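A CeCiMO configuration can be recorded as a small structured record. This makes explicit that the mechanism is attributed to the combination of external and interventional context rather than to either alone. The sketch below is illustrative only: the class name, the `render` method and this particular pairing of factors with a mechanism are our assumptions. They are assembled from the TC-REG examples listed above rather than reproduced from one of the final middle-range theories.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CeCiMO:
    """One middle-range configuration: external context (Ce) and interventional
    context (Ci) combine to trigger a mechanism (M) producing an outcome (O)."""
    ce: tuple[str, ...]  # context external to the intervention
    ci: tuple[str, ...]  # interventional components ("interventional context")
    m: str               # mechanism triggered by the Ce + Ci combination
    o: str               # outcome

    def render(self) -> str:
        """Format the configuration as a readable Ce + Ci -> M -> O chain."""
        return (f"Ce[{'; '.join(self.ce)}] + Ci[{'; '.join(self.ci)}] "
                f"-> M[{self.m}] -> O[{self.o}]")


# Hypothetical configuration assembled from factors named in the text.
config = CeCiMO(
    ce=("political support in the organizations",
        "time to study evidence-based data"),
    ci=("access to evidence-based data", "knowledge brokering"),
    m="perception of EIDM utility",
    o="use of scientific evidence",
)
print(config.render())
```

Keeping Ce and Ci as separate fields, rather than merging them into a single context, mirrors the adaptation of the C–M–O model described above.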

This process yielded eight final middle-range CeCiMO theories, combined in a final ISyT, describing the mechanisms that lead to the use of scientific evidence. Eight mechanisms were identified as contributing to this goal, for example, “professionals perceive them as useful to legitimize or advocate for their professional activity”. Each was triggered by a combination of knowledge-translation activities (interventional components) and contextual components that influenced both the activities and, directly, the mechanisms, for example, “political will in favour of knowledge translation”; “professionals are aware of the dissemination channels”; “political will and professionals’ experience”; and “favourable organizational conditions”. Figure 4 shows an example of a CeCiMO configuration from the TC-REG study.

Fig. 4.

An example of a final middle-range theory in the TC-REG study

ISyT and other programme theories

What chiefly distinguishes ISyT from other programme theories is the place given to contextual elements, which are fully integrated into a circular causal perspective, in line with the systemic approach embodied in realistic evaluation. This circularity arises from interactions between the contextual, interventional and mechanistic components of the system, which simultaneously modify and are modified by one another.

Carol Weiss, for example, introduced the notion of theory of change, defined as “the set of assumptions that explain both the mini-steps that lead to the long term goal of interest and the connections between program activities and outcomes that occur at each step of the way” [38]. For her, the theory of change involved two components: an implementation theory (i.e., descriptively forecasting the steps to be taken in implementing the programme) and a programmatic theory (i.e., a theory based on the mechanisms that make things happen) [8]. ISyT could be considered a theory of change in Weiss’s sense, with the major difference that our causal theory introduces contextual components in addition to, and in interaction with, the activities.

Funnell and Rogers [39] also clarified many of the terms used in TDE. They distinguished (i) the programme theory, defined as an explicit theory of how an intervention is understood to contribute to its intended or observed outcomes, which ideally includes a theory of change and a theory of action (NB: different from Weiss’s definition); (ii) the theory of change, defined as the central processes or drivers by which change comes about for individuals, groups or communities, derived from a research-based theory of change or drawn from other sources; (iii) the theory of action, defined as the ways in which programmes or other interventions are constructed to activate these theories of change; and (iv) the logic model, defined as a representation of a programme theory, usually in the form of a diagram [39]. According to these definitions, ISyT would be a programme theory that includes contextual elements in the theory of change (in their sense), in addition to the theory of action (input from the intervention and sequenced processes to achieve outcomes), which corresponds to the action model in ISyT.

A third example is Chen’s action model/change model schema [29], from which ISyT differs in how it distributes contextual components. For Chen [29], the change model relates to the specific area of intervention from a linear causality perspective, associating the intervention activities, the determinants and the outcomes they are supposed to affect. The action model is a systematic plan for arranging staff, resources, settings and support organizations in order to reach a target population and deliver the intervention services. Described as a “programmatic model” (different from Weiss’s definition), it sets out the major aspects a programme needs to secure: ensuring that the programme’s environment is supportive (or at least nonhostile), recruiting and enrolling appropriate target group members to receive the intervention, hiring and training programme staff, structuring modes of service delivery, designing an organization to coordinate efforts, and so on. Once again, the difference lies in the place and role of the system’s contextual components. In ISyT, unlike in Chen’s schema, they should be considered not only in the implementation of the intervention (Chen’s action model) but also in the production of effects (Chen’s change model), whether or not they can be manipulated by implementers (Chen’s model considers manipulable components, whereas ISyT also includes non-manipulable ones, such as the intrinsic characteristics of organizations or individuals). A further difference lies in the search for feedback loops (as in realistic evaluation), whereas Chen’s schema proposes a linear reading of events.

Finally, ISyT attempts to contribute to Hawe and colleagues’ work on systems thinking in the field of prevention [40]. The authors stressed the need to focus on the dynamic properties of the context into which the intervention is introduced, via three dimensions: (i) its constituent activity settings, (ii) the social networks that connect the people and the settings, and (iii) time. They consider the intervention as a “critical event in the history of a system, leading to the evolution of new structures of interaction and new shared meanings”. ISyT fits neatly into this approach by attempting to add pragmatic methodological elements that help untangle these different dimensions.

Conclusion

It is essential to develop a deeper understanding of what is happening inside the “black box” [41] of PHIs. One way to do this is through a theory-driven intervention/evaluation paradigm. However, several barriers have delayed its full integration into public health evaluation designs, especially when we consider an interventional system within its context rather than simply an intervention. In this case, the evaluation concept should fit the core aspects of the system [42]. These include the interactions between elements (the relationship between two elements is never unilateral) and the holistic nature of the system (the system cannot be reduced to the sum of its parts). They also include its organization (the system is an agent of relationships that produce behaviours different from those produced by each component individually). Finally, the system’s complexity derives both from the system itself and from its inherent uncertainties, vagaries and randomness. These characteristics mean that we need to define the theory that will be adopted to represent this system. We thus suggest developing an ISyT (i.e., a context-dependent theory that differs from classical theories, models or frameworks but is nonetheless informed by them) and conceptualizing how it could be used and articulated in different evaluation designs, such as experimental trials or other TDE frameworks. This type of clarification could help encourage the use of theories in complex intervention evaluations and shed light on ways to consider the transferability and scalability of interventions.

Acknowledgements

No acknowledgement.

Abbreviations

- TDE: Theory-driven evaluation
- ISyT: Intervention system theory
- PHIR: Population health intervention research
- TC-REG: Transfert de Connaissances en REGion (knowledge transfer in the regions)
- TOC: Theory of change
- CeCiMO: Context external–context interventional–mechanism–outcome

Authors’ contributions

LC and FA wrote, revised and checked the present paper, contributing equally to the manuscript. Both authors read and approved the final manuscript.

Funding

No funding.

Availability of data and materials

Not applicable.

Declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no conflict of interest.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Campbell DT, Stanley JC. Experimental and quasi-experimental designs for research. Chicago: Rand McNally; 1973. p. 84.

- 2. Victora CG, Habicht JP, Bryce J. Evidence-based public health: moving beyond randomized trials. Am J Public Health. 2004;94:400–405. doi: 10.2105/AJPH.94.3.400.

- 3. MRC. Developing and evaluating complex interventions: new guidance. Medical Research Council, editor. London: Medical Research Council; 2012. 39 p.

- 4. Shiell A, Hawe P, Gold L. Complex interventions or complex systems? Implications for health economic evaluation. BMJ. 2008;336(7656):1281–1283. doi: 10.1136/bmj.39569.510521.AD.

- 5. Cambon L, Terral P, Alla F. From intervention to interventional system: towards greater theorization in population health intervention research. BMC Public Health. 2019;19(1):339. doi: 10.1186/s12889-019-6663-y.

- 6. Cambon L, Alla F. Current challenges in population health intervention research. J Epidemiol Community Health. 2019;73:990–992. doi: 10.1136/jech-2019-212225.

- 7. Moore G, Cambon L, Michie S, Arwidson P, Ninot G, Ferron C, et al. Population health intervention research: the place of theories. Trials. 2019;20(1):285. doi: 10.1186/s13063-019-3383-7.

- 8. Weiss CH. Theory-based evaluation: past, present, and future. New Dir Eval. 1997;1997(76):41–55. doi: 10.1002/ev.1086.

- 9. Chen HT. Theory-driven evaluation. London: SAGE; 1990. p. 326.

- 10. De Silva MJ, Breuer E, Lee L, Asher L, Chowdhary N, Lund C, et al. Theory of change: a theory-driven approach to enhance the Medical Research Council’s framework for complex interventions. Trials. 2014;15:267. doi: 10.1186/1745-6215-15-267.

- 11. Mayne J. Addressing attribution through contribution analysis: using performance measures sensibly. Can J Program Eval. 2001;16(1):1–24.

- 12. Mayne J. Addressing cause and effect in simple and complex settings through contribution analysis. In: Schwartz R, Forss K, Marra M, editors. Evaluating the complex. Piscataway: Transaction Publishers; 2010.

- 13. Thabane L, Ma J, Chu R, Cheng J, Ismaila A, Rios LP, et al. A tutorial on pilot studies: the what, why and how. BMC Med Res Methodol. 2010;10(1):1. doi: 10.1186/1471-2288-10-1.

- 14. Bonell C, Fletcher A, Morton M, Lorenc T, Moore L. Realist randomised controlled trials: a new approach to evaluating complex public health interventions. Soc Sci Med. 2012;75(12):2299–2306. doi: 10.1016/j.socscimed.2012.08.032.

- 15. Minary L, Trompette J, Kivits J, Cambon L, Tarquinio C, Alla F. Which design to evaluate complex interventions? Toward a methodological framework through a systematic review. BMC Med Res Methodol. 2019;19:92. doi: 10.1186/s12874-019-0736-6.

- 16. Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10(1):53. doi: 10.1186/s13012-015-0242-0.

- 17. Rosenstock IM, Strecher VJ, Becker MH. Social learning theory and the Health Belief Model. Health Educ Q. 1988;15:175–183. doi: 10.1177/109019818801500203.

- 18. Fitz-Gibbon CT, Morris LL. Theory-based evaluation. Eval Pract. 1996;17(2):177–184.

- 19. Chen HT. Practical program evaluation: assessing and improving planning, implementation, and effectiveness. Thousand Oaks: SAGE; 2005.

- 20. Minary L, Kivits J, Cambon L, Alla F, Potvin L. Addressing complexity in population health intervention research: the context/intervention interface. J Epidemiol Community Health. 2017;0:1–5. doi: 10.1136/jech-2017-209921.

- 21. Moore GF, Evans RE. What theory, for whom and in which context? Reflections on the application of theory in the development and evaluation of complex population health interventions. SSM Popul Health. 2017;3:132–135. doi: 10.1016/j.ssmph.2016.12.005.

- 22. Committee on Educating Health Professionals to Address the Social Determinants of Health, Board on Global Health, Institute of Medicine, National Academies of Sciences, Engineering, and Medicine. Frameworks for addressing the social determinants of health. Washington, DC: National Academies Press (US); 2016.

- 23. Marmot M, Friel S, Bell R, Houweling TAJ, Taylor S. Closing the gap in a generation: health equity through action on the social determinants of health. Lancet. 2008;372:1661–1669. doi: 10.1016/S0140-6736(08)61690-6.

- 24. Hosseini Shokouh SM, Arab M, Emamgholipour S, Rashidian A, Montazeri A, Zaboli R. Conceptual models of social determinants of health: a narrative review. Iran J Public Health. 2017;46(4):435–446.

- 25. World Health Organization. A conceptual framework for action on the social determinants of health: debates, policy & practice, case studies. 2010. http://apps.who.int/iris/bitstream/10665/44489/1/9789241500852_eng.pdf. Accessed 8 Jan 2020.

- 26. Lacouture A, Breton E, Guichard A, Ridde V. The concept of mechanism from a realist approach: a scoping review to facilitate its operationalization in public health program evaluation. Implement Sci. 2015;10:153. doi: 10.1186/s13012-015-0345-7.

- 27. Machamer P, Darden L, Craver CF. Thinking about mechanisms. Philos Sci. 2000;67(1):1–25. doi: 10.1086/392759.

- 28. Weiss CH. How can theory-based evaluation make greater headway? Eval Rev. 1997;21(4):501–524. doi: 10.1177/0193841X9702100405.

- 29. Michie S, Richardson M, Johnston M, Abraham C, Francis J, Hardeman W, et al. The behavior change technique taxonomy (v1) of 93 hierarchically clustered techniques: building an international consensus for the reporting of behavior change interventions. Ann Behav Med. 2013;46:81–95. doi: 10.1007/s12160-013-9486-6.

- 30. Pawson R, Tilley N. Realistic evaluation. London: Sage Publications Ltd; 1997.

- 31. Creswell J. Research design: qualitative, quantitative, and mixed method approaches. Thousand Oaks: SAGE Publications Ltd; 2003.

- 32. Creswell J, Graham W. Designing and conducting mixed methods research. London: SAGE Publications; 2011.

- 33. Chen HT. The bottom-up approach to integrative validity: a new perspective for program evaluation. Eval Program Plann. 2010;33:205–214. doi: 10.1016/j.evalprogplan.2009.10.002.

- 34. Aromatario O, Van Hoye A, Vuillemin A, Foucaut A-M, Pommier J, Cambon L. Using theory of change to develop an intervention theory for designing and evaluating behavior change SDApps for healthy eating and physical exercise: the OCAPREV theory. BMC Public Health. 2019;19(1):1435. doi: 10.1186/s12889-019-7828-4.

- 35. Cambon L, Bergman P, Le Faou A, Vincent I, Le Maitre B, Pasquereau A, et al. Study protocol for a pragmatic randomised controlled trial evaluating efficacy of a smoking cessation e-’Tabac Info Service’: ee-TIS trial. BMJ Open. 2017;7(2):e013604. doi: 10.1136/bmjopen-2016-013604.

- 36. Dalkin SM, Greenhalgh J, Jones D, Cunningham B, Lhussier M. What’s in a mechanism? Development of a key concept in realist evaluation. Implement Sci. 2015;10(1):49. doi: 10.1186/s13012-015-0237-x.

- 37. Cambon L, Petit A, Ridde V, Dagenais C, Porcherie M, Pommier J, et al. Evaluation of a knowledge transfer scheme to improve policy making and practices in health promotion and disease prevention setting in French regions: a realist study protocol. Implement Sci. 2017;12(1):83. doi: 10.1186/s13012-017-0612-x.

- 38. Weiss CH. Nothing as practical as good theory: exploring theory-based evaluation for comprehensive community initiatives for children and families. In: Connell J, et al., editors. New approaches to evaluating community initiatives: concepts, methods, and contexts. Washington, DC: Aspen Institute; 1995.

- 39. Funnell SC, Rogers PJ. Purposeful program theory: effective use of theories of change and logic models. Hoboken: Wiley; 2011. p. 471.

- 40. Hawe P, Shiell A, Riley T. Theorising interventions as events in systems. Am J Community Psychol. 2009;43:267–276. doi: 10.1007/s10464-009-9229-9.

- 41. Salter KL, Kothari A. Using realist evaluation to open the black box of knowledge translation: a state-of-the-art review. Implement Sci. 2014;9(1):115. doi: 10.1186/s13012-014-0115-y.

- 42. Le Moigne J-L. La théorie du système général: théorie de la modélisation. Paris: Presses universitaires de France; 1994. p. 338.
