Abstract

Objective:

Recent sepsis studies have defined patients as “infected” using a combination of culture and antibiotic orders rather than billing data. However, the accuracy of these definitions is unclear. We aimed to compare the accuracy of different established criteria for identifying infected patients using detailed chart review.

Design:

Retrospective observational study

Setting:

Six hospitals from three health systems in Illinois

Patients:

Adult admissions with blood culture or antibiotic orders, or Angus ICD infection codes and death were eligible for study inclusion as potentially infected patients. Nine hundred to 1,000 of these admissions were randomly selected from each health system for chart review, and a proportional number of patients who did not meet chart review eligibility criteria were also included and deemed not infected.

Interventions:

None

Measurements and Main Results:

The accuracy of published billing code criteria by Angus et al. and electronic health record (EHR) criteria by Rhee et al. and Seymour et al. (Sepsis-3) was determined using the manual chart review results as the gold standard. A total of 5,215 patients were included: 2,874 encounters analyzed via chart review and a proportional 2,341 who did not meet chart review eligibility criteria. In the study cohort, 27.5% of admissions had at least one infection. This was most similar to the percentage of admissions with blood culture orders (26.8%), meeting Angus infection criteria (28.7%), and meeting Sepsis-3 criteria (30.4%). The Sepsis-3 criteria were the most sensitive (81%), followed by Angus (77%) and Rhee (52%), while Rhee (97%) and Angus (90%) were more specific than the Sepsis-3 criteria (89%). Results were similar for patients with organ dysfunction during their admission.

Conclusions:

Published criteria have a wide range of accuracy for identifying infected patients, with the Sepsis-3 criteria being the most sensitive and the Rhee criteria being the most specific. These findings have important implications for studies investigating the burden of sepsis on a local and national level.

Keywords: sepsis, infections, critical illness, epidemiology, diagnosis

INTRODUCTION

Sepsis, a life-threatening dysregulated host response to infection, causes significant morbidity and mortality (1–6). Efforts to quantify the national burden of sepsis, as well as efforts to identify risk factors and develop detection algorithms, have used various definitions to identify patients (2, 3, 6–18). Although the definition of sepsis has changed over time (1), the presence of infection has remained a cornerstone of the definition. As such, it is critical to accurately identify which patients are infected. Earlier studies used billing codes to identify infection (2, 16), but these codes do not provide timing information and have poor sensitivity (6, 19). More recently, studies by Seymour et al. and Rhee et al. used combinations of culture orders and antibiotics to identify potential infection (6, 7, 13). This approach has the advantage of providing the timing of infection and is less affected by temporal changes in reimbursement than billing codes (6). However, these criteria were created using rule-based heuristics and have not been compared externally against manual chart review in multicenter data; therefore, which criteria best identify infected patients is unknown.

Determining the most accurate method of identifying infected patients from electronic health record (EHR) data would allow for better quantification of the burden of sepsis, as well as improved understanding of short- and long-term outcomes of patients with sepsis. It could additionally provide critical information to those interested in developing diagnostic and prognostic tools. Therefore, we aimed to perform manual chart review to identify true cases of infection at three health systems across six hospitals in order to compare the accuracy of existing definitions of infection.

MATERIALS AND METHODS

Study Design

A retrospective electronic chart review of hospital encounters from three hospital systems, the University of Chicago Medicine (UCM), NorthShore University HealthSystem (NUS), and Loyola University Medical Center (LUMC), was conducted. All adult (age ≥18 years) hospitalized patients with inpatient notes at each site from the time of EHR implementation (Epic Systems Corporation; Verona, Wisconsin) were eligible for inclusion, with the study period spanning 2006–2018. Data were collected and de-identified at each site and then sent to UCM for analysis. This study was approved by the Institutional Review Boards at each site with a waiver of informed consent (IRB16–608).

Hospital admissions were separated into two groups: those eligible and those not eligible for chart review. The criteria for chart review eligibility were: 1) at least one antibiotic order, 2) a blood culture order, or 3) Angus billing codes for infection and death during the encounter (2, 19). These criteria were designed to capture patients with a potential infection based on orders, as well as patients who deteriorated and died before orders could be placed and in whom infection was a possible cause of death. When applying these criteria, antibiotics given within 6 hours before or after an operation were excluded to remove prophylactic peri-operative antibiotics. Oral fluconazole, oral acyclovir, and oral valacyclovir were also not counted as antibiotics because they may represent chronic prophylactic medications. Between 900 and 1,000 admissions meeting eligibility criteria were randomly selected at each site for chart review. Then, a proportional number of admissions that did not meet chart review eligibility criteria were randomly selected at each site and were assumed to be not infected.
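The eligibility screen above can be expressed as a simple rule over each admission's orders. The sketch below is illustrative only and is not the study's extraction code: the `Admission` and `AntibioticOrder` structures, the lower-case generic drug names, the route labels, and the choice to treat an order exactly 6 hours from an operation as peri-operative are all hypothetical assumptions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

# Oral agents not counted as antibiotics (possible chronic prophylaxis).
EXCLUDED_ORAL_AGENTS = {"fluconazole", "acyclovir", "valacyclovir"}
# Orders within this distance of an operation are treated as peri-operative prophylaxis.
PERIOP_WINDOW = timedelta(hours=6)


@dataclass
class AntibioticOrder:
    drug: str        # generic name, lower case (hypothetical coding)
    route: str       # "oral", "iv", ... (hypothetical coding)
    time: datetime


@dataclass
class Admission:
    antibiotic_orders: list = field(default_factory=list)
    operation_times: list = field(default_factory=list)
    has_blood_culture_order: bool = False
    has_angus_infection_code: bool = False
    died: bool = False


def counts_as_antibiotic(order, operation_times):
    """False for excluded oral agents and for orders within 6 h of any operation."""
    if order.route == "oral" and order.drug in EXCLUDED_ORAL_AGENTS:
        return False
    return all(abs(order.time - op_time) > PERIOP_WINDOW for op_time in operation_times)


def eligible_for_chart_review(admission):
    """Any of: a counted antibiotic order, a blood culture order, or Angus code plus death."""
    has_antibiotic = any(counts_as_antibiotic(order, admission.operation_times)
                         for order in admission.antibiotic_orders)
    return (has_antibiotic
            or admission.has_blood_culture_order
            or (admission.has_angus_infection_code and admission.died))


surgery = datetime(2018, 3, 1, 9, 0)
periop_only = Admission(
    antibiotic_orders=[AntibioticOrder("cefazolin", "iv", surgery + timedelta(hours=1))],
    operation_times=[surgery],
)
print(eligible_for_chart_review(periop_only))  # False: only a peri-operative dose
```

Keeping the exclusions inside `counts_as_antibiotic` means the peri-operative and oral-prophylaxis rules can be changed without touching the overall eligibility logic.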

Data Collection

Detailed manual chart review was performed on the randomly selected admissions meeting eligibility criteria. An additional 40 patients not meeting these criteria were chart reviewed to confirm that those not meeting eligibility criteria were truly not infected; this sample provided >80% power to detect a 5% infection rate in this group compared with our hypothesized rate of 0%. The central question of the chart review was “how likely was infection to be the cause of the patient’s signs and symptoms at the time of first suspicion of infection?”, which included infections due to any type of organism. This question was answered using a five-point Likert scale ranging from Not Infected to Proven Infection, with scale definitions developed a priori based on clinical experience. Chart reviewers (JD, ND, and SB at UCM; EA and CY at LUMC; and Elizabeth Ryan RN, BSN at NUS) were instructed to examine all relevant clinical notes, orders, vital signs, laboratory values, and other test results before making their final determination regarding the likelihood of infection. Inter-observer agreement was calculated using a weighted kappa, both between site principal investigators (PIs; MC, CW, and MA) and between those investigators and the chart reviewers at each site, for the first 20 encounters and again for an additional 20 encounters after 500 charts had been reviewed. If the weighted kappa was <0.60, additional training was performed before chart review continued. Disagreements were adjudicated by the chart reviewer and site PI re-reviewing the discrepant chart together. Once the threshold weighted kappa of 0.60 was reached (i.e., substantial agreement), each chart was reviewed independently by a single chart reviewer.
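The paper does not state whether linear or quadratic weights were used for the weighted kappa. The sketch below assumes linear weights on the five Likert categories coded 0 (Not Infected) to 4 (Proven Infection); the function name, the integer coding, and the example ratings are hypothetical. It computes kappa as one minus the ratio of observed to chance-expected weighted disagreement.

```python
def weighted_kappa(rater_a, rater_b, n_categories=5, weighting="linear"):
    """Cohen's weighted kappa for two raters using ordinal codes 0..n_categories-1."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    if weighting not in ("linear", "quadratic"):
        raise ValueError("weighting must be 'linear' or 'quadratic'")
    k, n = n_categories, len(rater_a)
    if any(not 0 <= r < k for r in (*rater_a, *rater_b)):
        raise ValueError("ratings must be integers in 0..n_categories-1")

    # Observed joint distribution of (rater A, rater B) codes.
    observed = [[0.0] * k for _ in range(k)]
    for a, b in zip(rater_a, rater_b):
        observed[a][b] += 1 / n

    # Marginal distributions, used for chance-expected agreement.
    row = [sum(observed[i]) for i in range(k)]
    col = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    def disagreement(i, j):
        # 0 for identical codes, 1 for the two scale extremes.
        d = abs(i - j) / (k - 1)
        return d if weighting == "linear" else d ** 2

    observed_dis = sum(disagreement(i, j) * observed[i][j]
                       for i in range(k) for j in range(k))
    expected_dis = sum(disagreement(i, j) * row[i] * col[j]
                       for i in range(k) for j in range(k))
    return 1 - observed_dis / expected_dis


# Hypothetical ratings of five charts by a site PI and a chart reviewer.
pi_ratings = [4, 3, 0, 1, 4]
reviewer_ratings = [4, 3, 0, 2, 4]
kappa = weighted_kappa(pi_ratings, reviewer_ratings)
print(f"kappa = {kappa:.2f}; additional training needed: {kappa < 0.60}")
```

Linear weights give partial credit for near-misses (for example, "unlikely" vs. "possible"). scikit-learn's `cohen_kappa_score` with `weights="linear"` and `labels=[0, 1, 2, 3, 4]` should give the same value; the function is undefined (division by zero) if both raters use a single identical category throughout.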

Admissions were rated as a “proven infection” when clinicians were concerned about a new or worsening infection and there was a positive culture. Admissions were rated as a “probable infection” when clinicians determined that infection was the most likely cause of a patient’s signs and symptoms, but there were no positive cultures. Admissions were rated as a “possible infection” when clinicians were concerned about infection, but there was a similar probability that the patient’s status was due to another, non-infectious cause. Admissions were rated as an “unlikely infection” when clinicians were initially concerned about infection but later determined that there was an alternative cause more likely than infection. Finally, admissions were rated as “not infected” if there was never a concern for infection. The non-infectious diagnosis causing the patient’s signs and symptoms for those with possible, unlikely, or no infection was also collected.

Chart review data were collected using Research Electronic Data Capture (REDCap) tools at each site (20). After the chart review was completed, ICD billing codes, culture orders, antibiotic orders, vital signs, laboratory results, discharge survival status, and demographic information from the EHR were merged with the chart review data for analysis.

Statistical Analysis

Patient characteristics and outcomes were compared across groups using chi-squared and analysis of variance (ANOVA) tests. Next, the proportion of patients meeting the infection criteria published by Rhee et al. (Rhee) (6) and Seymour et al. (Sepsis-3) (7), and the Angus billing infection criteria (2, 19), was calculated for each Likert scale value. Rhee was defined as a blood culture order and at least four consecutive days of antibiotics, or antibiotics until one day prior to death or discharge (6). For Rhee, the first day of antibiotics had to include an intravenous antibiotic and had to occur within two calendar days of the blood culture. Sepsis-3 was defined as a body fluid culture order within 24 hours after an antibiotic if the antibiotic order came first, or an antibiotic within 72 hours after the body fluid culture order if the culture order came first (7). Angus infection criteria were defined as the full list of ICD-9 and ICD-10 infection billing codes (including those for sepsis) but without the non-specific organ failure codes (2, 19). Accuracy metrics were calculated for the Rhee, Sepsis-3, and Angus criteria, as well as for the individual components of these criteria. A subgroup analysis was performed for admissions with organ dysfunction, defined as an increase in sequential organ failure assessment (SOFA) score of ≥2 or death. Organ dysfunction was assessed over the entire admission for non-infected patients and from one day before to seven days after infection onset for infected patients, and a SOFA score of zero was used as the baseline if previous values were unavailable.
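The two EHR definitions above can be sketched as timing rules. This is a simplified, hypothetical illustration rather than the published implementations: the Sepsis-3 check works on order timestamps, while the Rhee check works on integer calendar-day indices, reads "within two calendar days" as up to two days before or after the blood culture, and may omit refinements present in the original Rhee definition (6). The function names and argument layouts are assumptions.

```python
from datetime import datetime, timedelta

ABX_FIRST_WINDOW = timedelta(hours=24)      # culture must follow antibiotic within 24 h
CULTURE_FIRST_WINDOW = timedelta(hours=72)  # antibiotic must follow culture within 72 h


def meets_sepsis3_suspicion(culture_times, antibiotic_times):
    """Sepsis-3 suspected infection: a body fluid culture order paired with an antibiotic."""
    for abx in antibiotic_times:
        for cx in culture_times:
            if abx <= cx <= abx + ABX_FIRST_WINDOW:
                return True  # antibiotic first, culture within 24 h
            if cx <= abx <= cx + CULTURE_FIRST_WINDOW:
                return True  # culture first, antibiotic within 72 h
    return False


def meets_rhee_infection(blood_culture_days, antibiotic_days, iv_antibiotic_days, last_day):
    """Simplified Rhee infection rule on integer calendar-day indices.

    blood_culture_days: days with a blood culture order
    antibiotic_days: days on which any qualifying antibiotic was given
    iv_antibiotic_days: days on which an intravenous antibiotic was given
    last_day: day of death or discharge
    """
    for culture_day in blood_culture_days:
        # The run must start on an IV antibiotic day within +/-2 days of the culture.
        for start in range(culture_day - 2, culture_day + 3):
            if start not in iv_antibiotic_days:
                continue
            # Count consecutive antibiotic days beginning at `start`.
            run = 1
            while start + run in antibiotic_days:
                run += 1
            # Four consecutive days, or treatment through the day before death/discharge.
            if run >= 4 or start + run >= last_day:
                return True
    return False


# Example: antibiotic at 08:00, body fluid culture ordered 20 hours later.
abx_time = datetime(2018, 1, 1, 8, 0)
print(meets_sepsis3_suspicion([abx_time + timedelta(hours=20)], [abx_time]))  # True
```

Calendar-day indices for the Rhee check could be derived as `(order_time.date() - admit_time.date()).days`. The asymmetry between the two rules is visible here: Sepsis-3 needs only a single culture/antibiotic pair, whereas Rhee also requires sustained treatment, which is consistent with its higher specificity.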

Finally, the measured prevalence of infection based on chart review was compared with the estimated prevalence based on the percentage of admissions meeting the Sepsis-3, Angus, and Rhee criteria. For the primary analyses, patients with “possible” infection were considered infected; a sensitivity analysis reclassified the “possible infection” admissions as not infected. Data were analyzed using Stata version 15.1 (StataCorp, College Station, Texas), and a two-sided p-value <0.05 was considered statistically significant.
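The handling of the "possible infection" category amounts to a choice of cut-point on the Likert scale. The sketch below assumes the five labels can be coded as ordered integers 0 to 4 (a hypothetical coding) and applies both cut-points to the category counts reported in the Results, with the 2,341 sampled admissions that were not chart reviewed added to "not infected".

```python
from enum import IntEnum


class InfectionLikelihood(IntEnum):
    """Five-point chart-review scale, ordered from least to most likely."""
    NOT_INFECTED = 0
    UNLIKELY = 1
    POSSIBLE = 2
    PROBABLE = 3
    PROVEN = 4


def is_infected(rating, possible_counts_as_infected=True):
    """Collapse a Likert rating to a binary label."""
    if rating == InfectionLikelihood.POSSIBLE:
        return possible_counts_as_infected
    return rating >= InfectionLikelihood.PROBABLE


# Counts reported in the Results; the 2,341 sampled admissions that were not
# chart reviewed are added to the "not infected" category.
COUNTS = {
    InfectionLikelihood.PROVEN: 758,
    InfectionLikelihood.PROBABLE: 554,
    InfectionLikelihood.POSSIBLE: 124,
    InfectionLikelihood.UNLIKELY: 381,
    InfectionLikelihood.NOT_INFECTED: 1057 + 2341,
}


def prevalence(counts, possible_counts_as_infected=True):
    """Share of admissions labelled infected under the chosen handling of 'possible'."""
    total = sum(counts.values())
    infected = sum(n for rating, n in counts.items()
                   if is_infected(rating, possible_counts_as_infected))
    return infected / total


print(f"Primary analysis:     {prevalence(COUNTS):.1%}")
print(f"Sensitivity analysis: {prevalence(COUNTS, possible_counts_as_infected=False):.1%}")
```

The primary analysis reproduces the reported 27.5% (1,436 of 5,215 admissions). The sensitivity-analysis figure of about 25.2% is derived here from the reported counts rather than stated in the paper.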

RESULTS

Study Population

A total of 678,311 admissions occurred during the study period, of which 370,807 (55%) were eligible for chart review. Detailed manual chart review was performed on 1,000 admissions each from UCM and NUS, comprising 987 admissions eligible for chart review and 13 not eligible at each site; the latter were reviewed to confirm that admissions not meeting eligibility criteria were not infected. At LUMC, 900 charts meeting eligibility criteria were reviewed, along with an additional 14 selected from those not meeting eligibility criteria. In total, 2,874 randomly selected admissions meeting eligibility criteria underwent chart review. Because none of the 40 reviewed admissions not meeting eligibility criteria were found to be infected, a random sample (n=2,341) of the 307,504 encounters not eligible for chart review was drawn, using the same sampling percentage as the chart review group, to form an additional “not infected” cohort. This matched the cohort proportions in the sample to those of the entire population and yielded a total of 5,215 encounters in the final analysis (Supplementary eFigure 1).
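The Methods do not say how the >80% power figure for the 40-admission check was derived. One reading consistent with it, assumed here, is that finding any infection among the 40 reviewed admissions would have refuted the hypothesized 0% rate, so the power against a true 5% rate is the probability of observing at least one infection, 1 − 0.95^40. The function names below are hypothetical.

```python
import math


def prob_at_least_one(n, true_rate):
    """Probability that a sample of n admissions contains at least one infection."""
    return 1 - (1 - true_rate) ** n


def min_sample_size(true_rate, power):
    """Smallest n whose chance of observing at least one infection reaches `power`."""
    return math.ceil(math.log(1 - power) / math.log(1 - true_rate))


def upper_bound_zero_events(n, alpha=0.05):
    """Exact one-sided (1 - alpha) upper confidence bound when 0 of n are infected."""
    return 1 - alpha ** (1 / n)


print(f"{prob_at_least_one(40, 0.05):.3f}")   # 0.871
print(min_sample_size(0.05, 0.80))            # 32
print(f"{upper_bound_zero_events(40):.3f}")   # 0.072
```

Under this reading, 32 admissions would already give 80% power, and observing zero infections among 40 remains compatible (at the one-sided 95% level) with a true infection rate of up to about 7% in the group not eligible for chart review.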

Characteristics of admissions based on likelihood of infection

Of the 2,874 encounters analyzed via chart review, 758 (26%) were judged as “proven infection,” 554 (19%) as “probable infection,” 124 (4%) as “possible infection,” 381 (13%) as “unlikely infection,” and 1,057 (37%) as “not infected.” The 2,341 randomly sampled admissions from the group not eligible for chart review were also considered not infected. This resulted in an estimated infection prevalence of 27.5% of hospital admissions. Weighted kappa values between chart reviewers for determining the likelihood of infection were 0.91 at UCM, 0.80 at NUS, and 0.91 at LUMC.

Comparisons across these groups are shown in Table 1. Age differed significantly across groups (p<0.01), with the “not infected” (median age 61 years) and not eligible for chart review (60 years) groups being younger than the other groups (range 64–65 years). Admission source also differed significantly across groups: the “not infected” chart review group was more likely to be admitted through interventional or operating room locations, whereas the remaining groups were most commonly admitted through the emergency department. All groups met a median of 2 systemic inflammatory response syndrome (SIRS) criteria during admission except for the “not infected” and not eligible for chart review groups (median of 1 SIRS criterion met). Furthermore, the “proven” and “probable” infection groups had the highest maximum temperature and white blood cell counts during their admission (p<0.01). Co-morbidities also differed significantly across groups; for example, the “possibly infected” group had the highest rate of chronic pulmonary disease at baseline (25.8% vs. less than 13% for all other groups). Length of stay differed significantly across groups, with the “proven infection” group having the longest (median 5.8 days) and the not eligible for chart review group having the shortest (median 1.9 days). In-hospital mortality was highest for the “possibly infected” (7.3%) and “proven infection” (6.6%) groups and lowest for the “not infected” and not eligible for chart review groups (0.8% for both groups).

Table 1:

Characteristics and outcomes of chart-reviewed admissions by likelihood of infection, compared with sampled admissions whose charts were not reviewed.

| Variable | Proven Infection (n=758) | Probable Infection (n=554) | Possible Infection (n=124) | Unlikely Infection (n=381) | Not Infected (n=1,057) | Not Chart Reviewed (n=2,341) | p-value |
|---|---|---|---|---|---|---|---|
| Age, years (median (IQR)) | 65 (51–79) | 64 (49–79) | 65 (53–78) | 64 (49–75) | 61 (47–71) | 60 (42–73) | <0.01 |
| Female (%) | 54.1 | 51.3 | 57.3 | 53.0 | 53.9 | 59.5 | <0.01 |
| Black (%) | 29.6 | 27.4 | 29.0 | 38.3 | 22.0 | 27.1 | <0.01 |
| Admission source (%) | | | | | | | <0.01 |
| ED | 57.3 | 62.1 | 58.1 | 61.2 | 21.2 | 43.6 | |
| Ward | 28.2 | 24.5 | 33.1 | 26.0 | 30.5 | 30.0 | |
| ICU | 9.2 | 6.0 | 6.5 | 6.3 | 6.6 | 2.9 | |
| Other* | 5.3 | 7.4 | 2.4 | 6.6 | 41.7 | 23.5 | |
| Co-morbidities (Elixhauser, %) | | | | | | | <0.01 |
| CPD | 9.4 | 11.7 | 25.8 | 12.9 | 6.7 | 7.1 | |
| Diabetes | 26.0 | 19.7 | 19.4 | 20.7 | 16.6 | 17.8 | |
| Renal failure | 19.8 | 11.7 | 13.7 | 24.1 | 9.6 | 8.2 | |
| Liver disease | 8.2 | 4.7 | 5.6 | 8.1 | 5.9 | 2.9 | |
| Solid tumor | 12.1 | 10.5 | 19.4 | 15.7 | 12.6 | 9.5 | |
| Liquid tumor | 9.1 | 2.9 | 6.5 | 2.1 | 3.5 | 0.9 | |
| Metastatic cancer | 6.6 | 5.4 | 13.7 | 10.0 | 5.8 | 3.9 | |
| Had OR visit during hospitalization (%) | 24.8 | 16.6 | 16.1 | 18.1 | 64.1 | 24.6 | <0.01 |
| Highest temperature, °C (median (IQR)) | 37.7 (37.2–38.6) | 37.6 (37.1–38.3) | 37.4 (37–37.9) | 37.4 (37.1–38) | 37.3 (37.1–37.7) | 37.1 (36.8–37.3) | <0.01 |
| Highest bands, % (median (IQR)) | 0 (0–3) | 0 (0–0) | 0 (0–2) | 0 (0–0) | 0 (0–0) | 0 (0–0) | <0.01 |
| Highest WBC, 10³/μL (median (IQR)) | 13.8 (9.6–21.3) | 12.5 (8.7–17.5) | 11.8 (8.4–17.6) | 12 (8.1–16.7) | 10.9 (8.4–14.3) | 9.1 (6.8–11.9) | <0.01 |
| Number of SIRS criteria met during hospitalization (median (IQR)) | 2 (1–2) | 2 (1–2) | 2 (1–2) | 2 (1–2) | 1 (1–2) | 1 (1–2) | <0.01 |
| Highest SOFA score (median (IQR)) | 3 (1–6) | 2 (1–4) | 2 (1–4) | 3 (1–5) | 1 (1–3) | 1 (0–2) | <0.01 |
| Length of stay, days (median (IQR)) | 5.8 (3.1–11) | 4 (2.4–6.8) | 4 (1.9–8.6) | 4.3 (2.6–7.5) | 3.2 (2–5.7) | 1.9 (1–3.2) | <0.01 |
| In-hospital mortality (%) | 6.6 | 4.7 | 7.3 | 2.4 | 0.8 | 0.8 | <0.01 |

*Other: OR, n/a, Interventional/Other, or Hospice.

Abbreviations: ED = emergency department; ICU = intensive care unit; CPD = chronic pulmonary disease; OR = operating room; IQR = interquartile range; SIRS = systemic inflammatory response syndrome; SOFA = sequential organ failure assessment; WBC = white blood cell

Distributions and accuracy of different criteria for infection

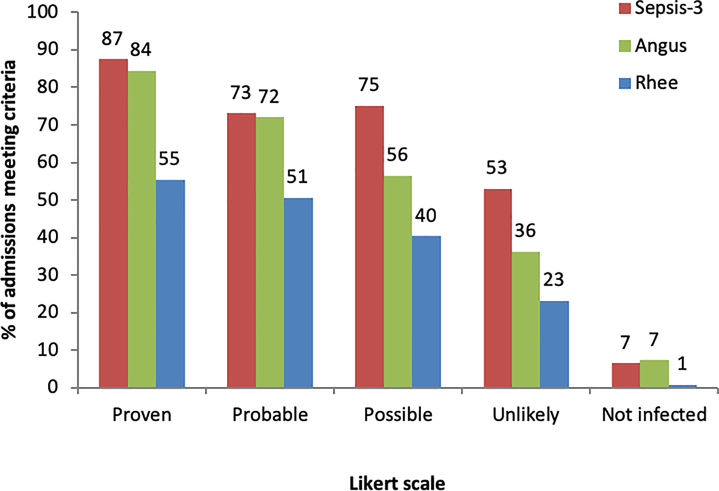

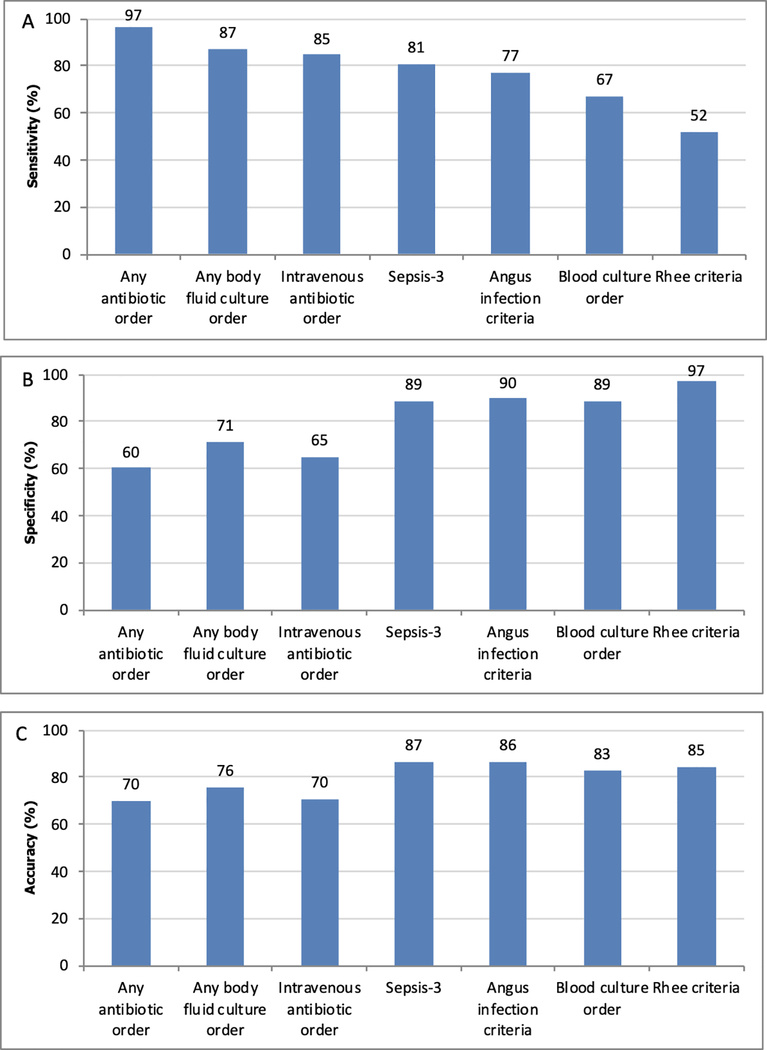

The percentage of admissions meeting the Rhee, Sepsis-3, and Angus infection criteria across the different infection Likert scale values is shown in Figure 1, and the sensitivity, specificity, and accuracy of these criteria and their individual components are shown in Figure 2. Positive and negative predictive values are shown in Supplementary Appendix eTable 1. Of the definitions, Sepsis-3 had the highest sensitivity (81% (95% CI 79–83%); p<0.01 compared to all other definitions), followed by the Angus infection criteria (77% (95% CI 75–79%)) and the Rhee criteria (52% (95% CI 50–55%)). Specificity was highest for Rhee (97% (95% CI 96–97%)), followed by Angus (90% (95% CI 89–91%)) and Sepsis-3 (89% (95% CI 88–90%)). Sepsis-3 had the highest accuracy (87% (95% CI 86–88%); p<0.01 compared to all other definitions), followed by Angus (86% (95% CI 85–87%)) and Rhee (85% (95% CI 84–86%)). Of the individual components, any antibiotic administration had the highest sensitivity (97% (95% CI 96–98%); p<0.01 compared to other components), followed by body fluid culture order (87% (95% CI 85–89%)), intravenous antibiotic administration (85% (95% CI 83–86%)), and blood culture order (67% (95% CI 65–70%)). Blood culture orders were the most specific individual component (89% (95% CI 88–90%); p<0.01 compared to other individual components), while any antibiotic administration was the least specific (60% (95% CI 59–62%)). Blood culture orders were also the most accurate individual component (83% (95% CI 82–84%); p<0.01 compared to other individual components), while antibiotics were the least accurate (70% (95% CI 69–71%) for both any and intravenous antibiotics). Results separated by hospital system showed patterns similar to the overall trend (Supplementary Appendix eFigure 2), as did results over time (Supplementary Appendix eFigures 3–5).
A sensitivity analysis that moved the “possible infection” group to the not infected category demonstrated similar results to the main analysis (Supplementary Appendix eTable 2).
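All of the Figure 2 metrics derive from a 2×2 table of each criterion against the chart-review label. The paper does not name its confidence-interval method, so the sketch below assumes Wilson score intervals; the function names are hypothetical. Only the specificity example uses figures reported in this paper (3,779 admissions judged not infected, i.e., 381 + 1,057 + 2,341, and the 420 Sepsis-3 false positives listed in Table 2); the true-positive counts needed for sensitivity are not reported in this section.

```python
from math import sqrt


def wilson_ci(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (95% by default)."""
    p = successes / n
    denom = 1 + z ** 2 / n
    centre = (p + z ** 2 / (2 * n)) / denom
    half_width = z * sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    return centre - half_width, centre + half_width


def with_ci(k, n):
    """Point estimate k/n plus its Wilson interval."""
    return (k / n, *wilson_ci(k, n))


def diagnostic_accuracy(tp, fp, fn, tn):
    """Accuracy metrics of a binary criterion against the chart-review label."""
    return {
        "sensitivity": with_ci(tp, tp + fn),
        "specificity": with_ci(tn, tn + fp),
        "accuracy": with_ci(tp + tn, tp + fp + fn + tn),
        "ppv": with_ci(tp, tp + fp),
        "npv": with_ci(tn, tn + fn),
    }


# Specificity of Sepsis-3 from counts reported in this paper.
spec, lo, hi = with_ci(3779 - 420, 3779)
print(f"{spec:.1%} ({lo:.1%}-{hi:.1%})")
```

This prints approximately 88.9% (87.8%–89.8%), in line with the reported Sepsis-3 specificity of 89% (95% CI 88–90%). Because every criterion is evaluated on the same admissions, formal comparisons between criteria would call for paired tests rather than comparing these intervals directly.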

Figure 1.

Percentage of admissions with each Likert scale value who meet Sepsis-3, Angus, and Rhee infection criteria.

Figure 2.

Sensitivity (A), specificity (B), and accuracy (C) of previously published infection criteria and individual components for identifying infected patients.*

*Patients with possible infection are considered infected.

Causes of false positives

The most common conditions associated with false positive results are shown in Table 2. Forty-three percent of the false positives for the Sepsis-3 criteria were due to orders for prophylactic antibiotics, followed by congestive heart failure (CHF; 7%), pancreatitis (5%), non-infectious malignancy (5%), and non-infectious surgical complications (4%). Prophylactic antibiotics were also the most common cause of false positives for the Angus and Rhee criteria. The next most frequent conditions were CHF and pancreatitis for the Angus criteria, and anaphylaxis and CHF for the Rhee criteria.

Table 2:

Most common conditions associated with false positives when using the Sepsis-3, Angus, and Rhee infection criteria.

| Sepsis-3 Criteria (n=420) (% of false positives) | Angus Criteria (n=244) (% of false positives) | Rhee Criteria (n=117) (% of false positives) |
|---|---|---|
| 1. Prophylactic antibiotic: 43% | 1. Prophylactic antibiotic: 33% | 1. Prophylactic antibiotic: 16% |
| 2. Congestive heart failure: 7% | 2. Congestive heart failure: 7% | 2. Anaphylaxis: 9% |
| 3. Pancreatitis: 5% | 3. Pancreatitis: 7% | 3. Congestive heart failure: 9% |
| 4. Malignancy: 5% | 4. Lung disease exacerbation: 5% | 3. Non-infectious malignancy related: 9% |
| 5. Non-infectious surgical complication: 4% | 4. Non-infectious malignancy related: 5% | 5. Pancreatitis: 7% |

Subgroup analysis in patients with organ dysfunction

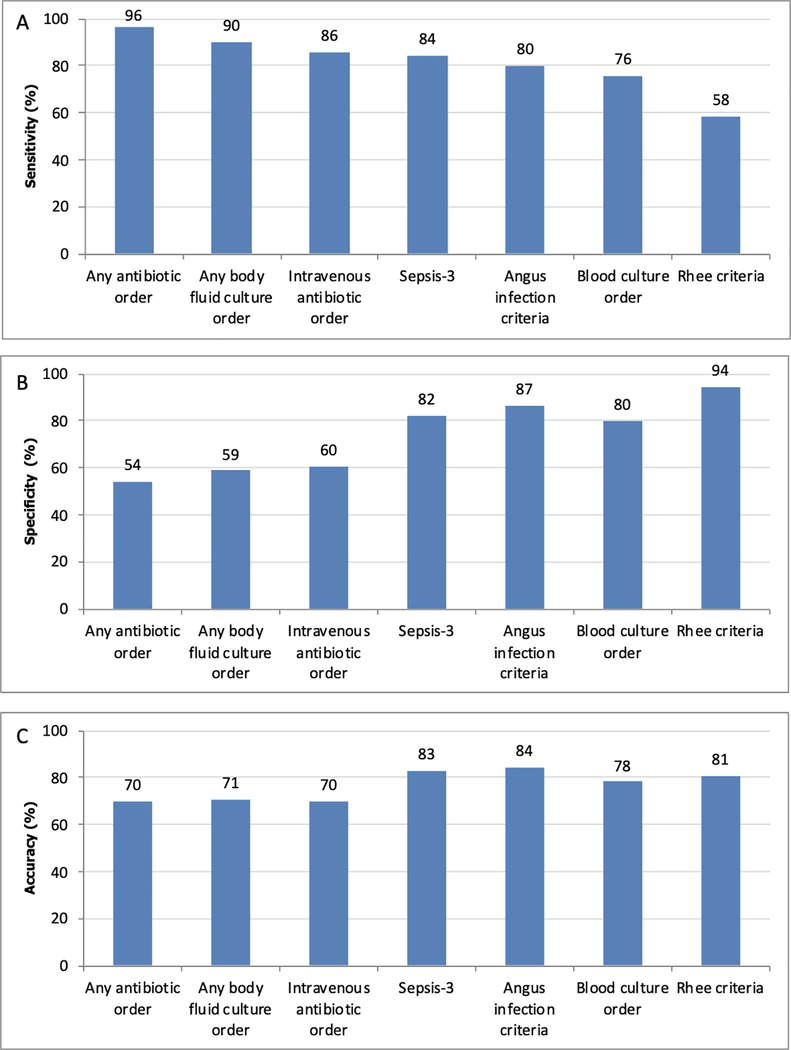

Sensitivity and specificity in patients with an increase in SOFA score of ≥2 during the admission are shown in Figure 3. Overall, results were similar to those in the entire cohort, with Sepsis-3 having the highest sensitivity (84% (95% CI 82–87%); p<0.01 compared to other definitions), followed by Angus infection (80% (95% CI 77–83%)) and Rhee criteria (58% (95% CI 55–62%)). Rhee criteria had the highest specificity (94% (95% CI 93–96%); p<0.01 compared to other definitions), followed by Angus infection (87% (95% CI 85–88%)) and Sepsis-3 (82% (95% CI 80–84%)). Trends for the individual components were also similar to the full cohort.
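The organ dysfunction subgroup uses the definition given in the Methods: a SOFA increase of ≥2 or death, assessed over the whole admission for non-infected patients and from one day before to seven days after infection onset for infected patients. The sketch below encodes this for one admission, with SOFA scores as (hours since admission, score) pairs. Two details are hypothetical assumptions not stated in the paper: the baseline is the lowest SOFA score recorded before the assessment window (zero when none exists, as in the Methods), and a death counts only if it falls inside the window.

```python
HOURS_PER_DAY = 24


def has_organ_dysfunction(sofa_scores, infection_onset=None, death_time=None):
    """Flag organ dysfunction for one admission.

    sofa_scores: list of (hours_since_admission, sofa_score) pairs
    infection_onset: onset time in hours, or None for non-infected admissions
    death_time: time of death in hours, or None if the patient survived
    """
    if infection_onset is None:
        # Non-infected admissions: the whole stay is assessed.
        window_start, window_end = float("-inf"), float("inf")
    else:
        # Infected admissions: one day before to seven days after onset.
        window_start = infection_onset - 1 * HOURS_PER_DAY
        window_end = infection_onset + 7 * HOURS_PER_DAY

    # Assumed baseline: lowest earlier score, or zero if there are none.
    earlier = [score for t, score in sofa_scores if t < window_start]
    baseline = min(earlier) if earlier else 0

    if any(score - baseline >= 2
           for t, score in sofa_scores if window_start <= t <= window_end):
        return True
    return death_time is not None and window_start <= death_time <= window_end


# Infection onset at hour 48; SOFA rises from 3 (hour 0) to 5 (hour 60).
print(has_organ_dysfunction([(0, 3), (40, 4), (60, 5)], infection_onset=48))  # True
```

Because non-infected admissions have no earlier values under this reading, their baseline is always zero, so any SOFA score of 2 or more during the stay qualifies; a different baseline rule would shrink that group.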

Figure 3:

Sensitivity (A), specificity (B), and accuracy (C) of previously published criteria and individual components for identifying infection in hospitalized patients with an increase in SOFA score of ≥2.*

*Patients with possible infection are considered infected.

Estimating frequency of infection

In the study cohort, 27.5% of admissions had at least one infection. This was most similar to the percentage of admissions with blood culture orders (26.8%), Angus infection criteria (28.7%), and the Sepsis-3 criteria (30.4%). The Rhee criteria considerably underestimated the prevalence of infection during admission in this cohort (16.6%). In the study cohort, 14.3% of admissions had sepsis based on chart review, which was best estimated by Angus infection criteria (14.7%), followed by blood culture orders (16.2%), Sepsis-3 (16.9%), and Rhee (10.3%).
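The "most similar" comparison above can be read as a ranking by absolute percentage-point difference from the chart-review prevalence; that reading is an assumption, not a method stated in the paper. The sketch below reproduces the ordering from the percentages reported in this paragraph, and the sign of each gap shows whether a criterion over- or underestimates.

```python
def rank_by_closeness(reference, estimates):
    """Sort estimates by absolute gap (percentage points) from the reference."""
    gaps = [(name, value - reference) for name, value in estimates.items()]
    return sorted(gaps, key=lambda item: abs(item[1]))


infection_estimates = {
    "Blood culture orders": 26.8,
    "Angus infection criteria": 28.7,
    "Sepsis-3": 30.4,
    "Rhee": 16.6,
}
sepsis_estimates = {
    "Angus infection criteria": 14.7,
    "Blood culture orders": 16.2,
    "Sepsis-3": 16.9,
    "Rhee": 10.3,
}

for label, reference, estimates in [("Infection", 27.5, infection_estimates),
                                    ("Sepsis", 14.3, sepsis_estimates)]:
    print(label)
    for name, gap in rank_by_closeness(reference, estimates):
        # A negative gap means the criterion underestimates the chart-review prevalence.
        print(f"  {name}: {gap:+.1f} percentage points")
```

The output ranks blood culture orders (−0.7), Angus (+1.2), Sepsis-3 (+2.9), and Rhee (−10.9) for infection, and Angus (+0.4), blood culture orders (+1.9), Sepsis-3 (+2.6), and Rhee (−4.0) for sepsis, matching the ordering in the text.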

DISCUSSION

In this multicenter study of almost 3,000 manual chart reviews across six hospitals within three health systems, we found that previously published criteria varied in their accuracy for identifying infected patients. The Sepsis-3 infection criteria had the highest sensitivity and overall accuracy, while the Rhee criteria had the highest specificity. This was true both in the entire cohort and in patients with organ dysfunction (i.e., sepsis). Antibiotics prescribed for prophylaxis were the most common contributing factor to false positives. Finally, we found that 27.5% of admissions had an infection and 14.3% had sepsis during their encounter, and this prevalence was best estimated using blood culture orders and Angus infection billing codes. These findings have important implications for studies estimating the burden of sepsis as well as for investigators developing or validating early warning scores and interventions for patients with suspected infection.

Prior investigations have used a variety of definitions to identify potentially infected patients. Although these methods are less time consuming than chart review, their accuracy for identifying infected patients is poorly characterized. Furthermore, different goals may favor different test characteristics, such as high specificity for disease surveillance. Historically, ICD billing codes have been used to determine the burden of sepsis and its trends over time at a national level (2, 16). These include the codes described by Angus et al. (2), which comprise infection, sepsis-specific, and organ failure codes (6). More recently, Rhee and colleagues used a combination of antibiotic orders and blood cultures to define infection, and their results suggested that much of the change over time found in billing data could be explained by changes in coding practices (6). Our work provides important comparisons of the accuracy of the infection components of these criteria, including how well they estimate the burden of infection. We found that the Rhee criteria underestimate the true burden of infection while the Sepsis-3 criteria overestimate it, with blood culture orders and Angus infection billing codes being closer to the true estimate. This suggests that recent studies relying on the Rhee criteria, which were developed to identify serious infections, may have underestimated the true burden of sepsis, and that further modification of EHR infection criteria is needed to better estimate the burden of sepsis. In contrast, a substantial proportion of the Sepsis-3 false positives were due to prophylactic antibiotics, which suggests that these criteria may be more susceptible than the more specific Rhee criteria to changes in case mix (e.g., transplant patients on multiple prophylactic medications).

Several groups have developed and validated early warning scores for patients with suspected infection using a variety of definitions (11, 12, 14, 15). Most highly cited are the quick SOFA (qSOFA) criteria published by Seymour and colleagues, which used the Sepsis-3 infection criteria (7). The Sepsis-3 infection criteria have an advantage over ICD billing codes for identifying infection because they provide a specific time point for the onset of infection. Our results show that the Sepsis-3 criteria are more sensitive and accurate than either the Rhee or the Angus criteria but are less specific. Given that early warning scores are increasingly being built into sepsis pathways, and that ordering cultures and antibiotics typically represents an initial suspicion of infection, even if this is later proven wrong, the Sepsis-3 infection criteria are a reasonable choice when developing tools to risk stratify patients with suspected infection. This is especially true for screening, where false positives are less costly and delays in antibiotic initiation have been associated with increased mortality (21).

Our study has several limitations. Most importantly, there is no gold standard for infection, because cultures can be negative in up to 50% of patients with infection and some positive cultures are false positives due to contaminants. However, our chart reviewers had good agreement, and we used a Likert scale that allowed uncertainty about infection to be documented. Additionally, few patients (<5%) were classified in the “possible infection” category, and the results did not substantively change regardless of how this group was handled. In addition, chart reviews were performed in health systems in Illinois, so the results may not generalize to other settings; however, our cohort includes both teaching and non-teaching hospitals as well as urban, suburban, and rural settings, which increases external validity. Furthermore, our subgroup analysis of patients with a SOFA increase of ≥2 did not assess whether the organ dysfunction was caused by the infection, although we did limit organ dysfunction to a time window near infection onset. Finally, we investigated standard published infection criteria, and other combinations may be more accurate.

CONCLUSIONS

In conclusion, we found that 27.5% of hospital admissions involved an infection and that the accuracy of different billing and EHR criteria for identifying these patients varied widely. The Sepsis-3 criteria had the highest sensitivity and accuracy for identifying hospitalized patients with infection compared with the other published criteria and individual components. In contrast, blood culture orders and Angus infection billing codes best estimated the hospital burden of infection. These results have significant implications for studies aimed at identifying, risk stratifying, and estimating the prevalence of infection in hospitalized patients.

Supplementary Material

ACKNOWLEDGMENTS

We would like to thank Elizabeth Gillian (Gilly) Ryan RN, BSN for her assistance with chart reviews.

Conflicts of Interest and Source of Funding: Dr. Churpek is supported by an R01 from NIGMS (R01 GM123193). Drs. Churpek and Edelson have a patent pending (ARCD. P0535US.P2) for risk stratification algorithms for hospitalized patients, and have received research support from EarlySense (Tel Aviv, Israel). Dr. Edelson has received research support and honoraria from Philips Healthcare (Andover, MA), as well as research support from the American Heart Association (Dallas, TX) and Laerdal Medical (Stavanger, Norway). She has ownership interest in Quant HC (Chicago, IL), which is developing products for risk stratification of hospitalized patients. Dr. Afshar is supported by a K23 from NIAAA (K23 AA024503).

Copyright Form Disclosure:

Drs. Churpek and Edelson received funding from NIGMS R01 GM123193; disclosed a patent pending (ARCD. P0535US.P2) for risk stratification algorithms for hospitalized patients; and have received research support from EarlySense. Drs. Churpek, Winslow, Shah, and Afshar received support for article research from the National Institutions of Health. Dr. Afshar also received support from a K23 from NIAAA (K23 AA024503). Dr. Edelson received support for article research from the American Heart Association and Laerdal Medical, and she received research support from Philips Healthcare. She disclosed ownership interest in Quant HC, disclosed that she is president/co-founder and shareholder of AgileMD with licensing agreements with Philips Healthcare and EarlySense, Inc., and disclosed that she is the chair for the American Heart Association Get With The Guidelines adult research task force. The remaining authors have disclosed that they do not have any potential conflicts of interest.

REFERENCES

1. Singer M, Deutschman CS, Seymour CW, Shankar-Hari M, et al.: The Third International Consensus Definitions for Sepsis and Septic Shock (Sepsis-3). JAMA 2016; 315(8):801–810
2. Angus DC, Linde-Zwirble WT, Lidicker J, Clermont G, et al.: Epidemiology of severe sepsis in the United States: analysis of incidence, outcome, and associated costs of care. Crit Care Med 2001; 29(7):1303–1310
3. Liu V, Escobar GJ, Greene JD, Soule J, et al.: Hospital deaths in patients with sepsis from 2 independent cohorts. JAMA 2014; 312(1):90–92
4. Valles J, Fontanals D, Oliva JC, Martinez M, et al.: Trends in the incidence and mortality of patients with community-acquired septic shock 2003–2016. J Crit Care 2019; 53:46–52
5. Kadri SS, Rhee C, Strich JR, Morales MK, et al.: Estimating Ten-Year Trends in Septic Shock Incidence and Mortality in United States Academic Medical Centers Using Clinical Data. Chest 2017; 151(2):278–285
6. Rhee C, Dantes R, Epstein L, Murphy DJ, et al.: Incidence and Trends of Sepsis in US Hospitals Using Clinical vs Claims Data, 2009–2014. JAMA 2017; 318(13):1241–1249
7. Seymour CW, Liu VX, Iwashyna TJ, Brunkhorst FM, et al.: Assessment of Clinical Criteria for Sepsis: For the Third International Consensus Definitions for Sepsis and Septic Shock (Sepsis-3). JAMA 2016; 315(8):762–774
8. Churpek MM, Snyder A, Han X, Sokol S, et al.: Quick Sepsis-related Organ Failure Assessment, Systemic Inflammatory Response Syndrome, and Early Warning Scores for Detecting Clinical Deterioration in Infected Patients outside the Intensive Care Unit. Am J Respir Crit Care Med 2017; 195(7):906–911
9. Churpek MM, Snyder A, Sokol S, Pettit NN, et al.: Investigating the Impact of Different Suspicion of Infection Criteria on the Accuracy of Quick Sepsis-Related Organ Failure Assessment, Systemic Inflammatory Response Syndrome, and Early Warning Scores. Crit Care Med 2017; 45(11):1805–1812
10. Liu VX, Lu Y, Carey KA, Gilbert ER, et al.: Comparison of Early Warning Scoring Systems for Hospitalized Patients With and Without Infection at Risk for In-Hospital Mortality and Transfer to the Intensive Care Unit. JAMA Netw Open 2020; 3(5):e205191
11. Giannini HM, Ginestra JC, Chivers C, Draugelis M, et al.: A Machine Learning Algorithm to Predict Severe Sepsis and Septic Shock: Development, Implementation, and Impact on Clinical Practice. Crit Care Med 2019; 47(11):1485–1492
12. Henry KE, Hager DN, Pronovost PJ, Saria S: A targeted real-time early warning score (TREWScore) for septic shock. Sci Transl Med 2015; 7(299):299ra122
13. Sathaporn N, Khwannimit B: Validation the performance of New York Sepsis Severity Score compared with Sepsis Severity Score in predicting hospital mortality among sepsis patients. J Crit Care 2019; 53:155–161
14. Osborn TM, Phillips G, Lemeshow S, Townsend S, et al.: Sepsis severity score: an internationally derived scoring system from the surviving sepsis campaign database. Crit Care Med 2014; 42(9):1969–1976
15. Thiel SW, Rosini JM, Shannon W, Doherty JA, et al.: Early prediction of septic shock in hospitalized patients. J Hosp Med 2010; 5(1):19–25
16. Martin GS, Mannino DM, Eaton S, Moss M: The epidemiology of sepsis in the United States from 1979 through 2000. N Engl J Med 2003; 348(16):1546–1554
17. Han X, Edelson DP, Snyder A, Pettit N, et al.: Implications of Centers for Medicare & Medicaid Services Severe Sepsis and Septic Shock Early Management Bundle and Initial Lactate Measurement on the Management of Sepsis. Chest 2018; 154(2):302–308
18. Rudd KE, Johnson SC, Agesa KM, Shackelford KA, et al.: Global, regional, and national sepsis incidence and mortality, 1990–2017: analysis for the Global Burden of Disease Study. Lancet 2020; 395(10219):200–211
19. Iwashyna TJ, Odden A, Rohde J, Bonham C, et al.: Identifying patients with severe sepsis using administrative claims: patient-level validation of the angus implementation of the international consensus conference definition of severe sepsis. Med Care 2014; 52(6):e39–43
20. Harris PA, Taylor R, Thielke R, Payne J, et al.: Research electronic data capture (REDCap)--a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform 2009; 42(2):377–381
21. Seymour CW, Gesten F, Prescott HC, Friedrich ME, et al.: Time to Treatment and Mortality during Mandated Emergency Care for Sepsis. N Engl J Med 2017; 376(23):2235–2244
