Abstract

The advent of large-scale high-performance computing has allowed the development of machine-learning techniques in oncologic applications. Among these, there has been substantial growth in radiomics (machine-learning texture analysis of images) and artificial intelligence (which uses deep-learning techniques for “learning algorithms”); however, clinical implementation has yet to be realized at scale. To improve implementation, the opportunities, mechanics, and challenges of imaging-enabled artificial intelligence models need to be understood by the clinicians who make treatment decisions. This article aims to convey the basic conceptual premises of radiomics and artificial intelligence using head and neck cancer as a use case. This educational overview focuses on approaches for head and neck oncology imaging, detailing current research efforts and challenges to implementation.

INTRODUCTION: BEATING THE HUMAN EYE OR BRAIN

Radiomics and artificial intelligence (AI) have become buzzwords in the oncology research field and are now often the go-to proposed solution for complex clinical prediction problems when imaging is available. This is fueled by the promise of radiomics (machine-learning approaches for image feature analysis) and AI (neural networks that learn from the image directly) to extract information from images that cannot be observed by the human eye.1 Moreover, beyond detecting meaningful features that cannot be observed visually, these machine-learning pipelines can facilitate pattern recognition in images, detection of patterns in biomarker data, and integration with nonimaging variables at a scale that cannot be comprehended by the human brain because of the large number of potential variables.2–4

This article aims to convey the basics of radiomics and AI in relation to head and neck oncologic imaging by addressing the application space for leveraging medical images, radiomics, and basic deep-learning mechanics and the challenges of cancer imaging–enabled AI specifically for patients with head and neck cancer.

OPPORTUNITIES: MODEL-BASED PERSONALIZED TREATMENT DECISION-MAKING

In general, medical machine learning can be categorized as focused on regression or classification tasks, depending on the outcome to be predicted. Regression methods (e.g., linear, polynomial, and support vector regression) aim to predict continuous values, such as tumor volume reduction, weight loss, or biomarker values, whereas classification methods (e.g., logistic regression and random forest) aim to predict a binary or categorical value, such as tumor recurrence or toxicity development. A related variant of classification is Cox proportional hazards regression, which models the time to event together with a binary event indicator (e.g., death vs. censoring). Using these classification and regression approaches, a broad swath of problems that on the surface seem radically distinct in scope can be addressed. For example, similar deep-learning approaches can be used for tasks as different as risk stratification, biomarker detection, image segmentation, and image reconstruction.
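
To make the distinction concrete, the sketch below writes out in plain Python the quantity that each model family typically optimizes. Regression uses squared error, binary classification uses cross-entropy (log loss), and time-to-event data use the Cox partial log-likelihood. The function names are hypothetical. The Cox function assumes no tied event times, whereas real survival software applies Breslow or Efron tie corrections.

```python
import math


def mean_squared_error(y_true, y_pred):
    """Regression loss, e.g., for predicted tumor volume reduction."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_cross_entropy(y_true, p_pred):
    """Classification loss, e.g., for recurrence (1) vs. no recurrence (0)."""
    return -sum(
        y * math.log(p) + (1 - y) * math.log(1 - p) for y, p in zip(y_true, p_pred)
    ) / len(y_true)


def cox_partial_log_likelihood(times, events, risk_scores):
    """Cox partial log-likelihood, assuming no tied event times.

    times: follow-up time per patient
    events: 1 if the event (e.g., death) was observed, 0 if censored
    risk_scores: linear predictor (beta . x) per patient
    """
    log_lik = 0.0
    for t_i, event_i, r_i in zip(times, events, risk_scores):
        if not event_i:
            continue  # censored patients contribute only through the risk sets
        # Risk set: all patients still under follow-up at time t_i.
        risk_set = sum(math.exp(r_j) for t_j, r_j in zip(times, risk_scores) if t_j >= t_i)
        log_lik += r_i - math.log(risk_set)
    return log_lik


print(mean_squared_error([1.0, 2.0], [1.0, 4.0]))  # 2.0
print(binary_cross_entropy([1, 0], [0.5, 0.5]))  # log(2), about 0.693
print(cox_partial_log_likelihood([1, 2, 3], [1, 0, 1], [0.0, 0.0, 0.0]))  # -log(3), about -1.099
```

The three losses share one structure: a model is fitted by making the loss small (or the likelihood large). Only the form of the outcome changes.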

The most evident example is risk prediction of overall survival or tumor control after treatment based on imaging-derived tumor characteristics and clinical characteristics in patients with head and neck cancer. By identifying, before treatment, the patients at particularly high or low risk of treatment failure, therapy can be tailored to their anticipated risk. For instance, HPV-positive tumors have exceptionally good outcomes (5-year overall survival approximately 80%5–7). This has prompted clinical trials of reduced radiation dose8 and of magnetic resonance (MR)–guided adaptive radiation dose de-escalation,9 and it has sparked discussions about the relative, as-yet-unquantified potential benefits of chemotherapy for these patients.10,11 Nevertheless, not all patients with HPV-associated oropharyngeal cancer may do well with a de-escalated treatment regimen. Models based on radiomics combined with clinical variables (e.g., smoking status) and biomarkers (e.g., HPV subtypes) can therefore help direct treatment alterations more effectively. Similarly, patients with head and neck cancer at high risk of treatment failure can be specifically targeted in clinical trials of intensified treatment regimens (e.g., image-guided dose escalation or induction chemotherapy). Intensified regimens may not be effective when applied to the full population of patients with head and neck cancer, and they may do more harm by increasing treatment-induced toxicity rates, but they could be effective in a cohort enriched with high-risk patients. More generally, prediction models can potentially be developed for any treatment approach with variable response, such as immunotherapy for patients with head and neck cancer,12,13 to identify patients who are likely to experience treatment success versus treatment failure and to alter treatment accordingly.
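
As an illustration of how such a model could stratify patients, the sketch below combines a radiomics feature with clinical variables in a logistic model. It then assigns each patient to a low, intermediate or high risk group. All coefficients, variable names and cutoffs are hypothetical, chosen only to show the mechanics. None of them come from a published head and neck model.

```python
import math

# Hypothetical coefficients for illustration only; they are not taken from any
# published head and neck model.
COEFFICIENTS = {
    "intercept": -2.0,
    "tumor_heterogeneity": 0.8,  # standardized radiomics texture feature
    "current_smoker": 0.9,       # 1 = current smoker, 0 = otherwise
    "hpv_positive": -1.2,        # 1 = HPV-positive, 0 = HPV-negative
}


def predicted_risk(patient):
    """Logistic model: probability of treatment failure for one patient."""
    linear_predictor = COEFFICIENTS["intercept"] + sum(
        COEFFICIENTS[name] * patient[name]
        for name in ("tumor_heterogeneity", "current_smoker", "hpv_positive")
    )
    return 1.0 / (1.0 + math.exp(-linear_predictor))


def risk_group(risk, low_cutoff=0.10, high_cutoff=0.30):
    """Assign a risk group using hypothetical cutoffs."""
    if risk < low_cutoff:
        return "low"  # e.g., candidate for a de-escalation trial
    if risk >= high_cutoff:
        return "high"  # e.g., candidate for an intensification trial
    return "intermediate"


patient = {"tumor_heterogeneity": 1.5, "current_smoker": 1, "hpv_positive": 0}
risk = predicted_risk(patient)
print(f"{risk:.2f}", risk_group(risk))  # 0.52 high
```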

Another application for image biomarkers is the prediction of toxicity after chemoradiation, which is highly variable in patients with head and neck cancer (i.e., patients who receive a similar dose to critical organs can have completely different toxicity profiles after treatment). Clinical CT, PET, and MRI scans contain information not only on the tumor but also on the surrounding normal tissues. Normal tissue image features can be extracted and may capture patient-specific radiosensitivity characteristics that are associated with toxicity development. For instance, the prediction of late xerostomia was improved with parotid gland CT radiomics features,14 which indicated that higher heterogeneity of the parotid glands was associated with a higher risk of xerostomia. Subsequently, T1-weighted MRI image biomarkers showed that higher fat concentrations in the parotid gland were associated with a higher risk of developing xerostomia.15 A handful of other studies have investigated the relationship between CT information and the development of radiation-induced toxicity,16–18 whereas the use of MRI information on normal tissues remains a relatively uncharted domain.19,20

Another area within head and neck oncology imaging in which AI has already had a practical impact is autosegmentation of tumors and organs at risk, which is, in essence, a voxel-based classification task.21 Autosegmentation can aid radiation oncologists (or other clinicians) in organ and tumor definition and decrease delineation times.22–25 Furthermore, AI is being deployed for deformable MRI registration,26–28 improved image reconstruction,29–31 and MRI-to-pseudo-CT conversion.32–35 These applications show particular promise for MRI-based adaptive radiotherapy and for combined imaging-treatment devices (such as the MRI-linear accelerator), because better deformable registration can improve the adaptive warping of contours to new scans. Adequate pseudo-CT approximations will allow dose optimization from MRI only, whereas today a CT scan is required to calculate radiation absorption for treatment planning.
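
The voxel-based classification view of autosegmentation can be sketched in a few lines. The example assumes a network has already produced a per-voxel probability map, and it uses hypothetical values on a single 2D slice. Thresholding that map gives a binary mask. The mask is then compared with a manual delineation using the Dice similarity coefficient. Dice is a commonly used overlap measure, but it is our choice here and the text does not specify it.

```python
def threshold_probability_map(probability_map, threshold=0.5):
    """Voxel-wise classification: label each voxel as structure (1) or background (0)."""
    return [[1 if p >= threshold else 0 for p in row] for row in probability_map]


def dice_coefficient(mask_a, mask_b):
    """Dice similarity coefficient: 2 |A and B| / (|A| + |B|)."""
    voxels_a = [v for row in mask_a for v in row]
    voxels_b = [v for row in mask_b for v in row]
    intersection = sum(1 for a, b in zip(voxels_a, voxels_b) if a and b)
    total = sum(voxels_a) + sum(voxels_b)
    return 1.0 if total == 0 else 2.0 * intersection / total


# Hypothetical network output for one small slice of an organ at risk.
probability_map = [[0.9, 0.8, 0.2], [0.1, 0.7, 0.4]]
auto_mask = threshold_probability_map(probability_map)  # [[1, 1, 0], [0, 1, 0]]
manual_mask = [[1, 0, 0], [0, 1, 1]]                    # hypothetical clinician contour
print(round(dice_coefficient(auto_mask, manual_mask), 3))  # 0.667
```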

This capacity to generate usable data is not limited to therapy delivery, however. For example, AI is being applied to develop MR fingerprinting, which is an acquisition strategy allowing multiparametric MRI reconstruction from a single acquisition.36,37 The practical benefit of MR fingerprinting is that it allows for the acquisition of multiple quantitative MR sequences (such as T1 or T2 maps) from a single scan acquisition, significantly reducing the total scan time. Because this process involves deep learning to reconstruct the images, the same deep-learning approach could simultaneously allow front-end integration of radiomics, segmentation, and motion correction in a “near live” time interval. This idea of solving multiple algorithmic processes simultaneously is an especially exciting developmental opportunity for AI-based approaches (but necessarily requires sufficiently large and representative training data sets).

Although radiomics and AI research studies are emerging exponentially, most prediction models have not (yet) reached clinical implementation at a level of clinical usability or with interpretable visualization interfaces. To improve implementation, models must be presented so that clinicians, who are responsible for making the treatment decisions, can understand and adequately interpret the results. Moreover, the models must be reliable (i.e., well trained and validated on representative data) and must transparently communicate the uncertainty of each individual risk prediction. Decision-support tools are therefore imperative. They should automatically integrate data processing, prediction, and quality assurance, and their results should be effectively interpretable by the end user. The next sections deal with the mechanics of radiomics and AI in head and neck cancer to improve the understanding of their potential and challenges.

MECHANICS: FROM PICTURE APPRECIATION TO ARTIFICIAL INTERPRETATION

Current oncology practice is necessarily dependent upon medical images, and this dependence has increased over the last decades. CT scans are generally considered the standard imaging modality for diagnostic and therapy planning purposes, as their intensities consistently reflect tissue X-ray attenuation, which is closely related to electron density. For patients with head and neck cancer in particular, MRI is a valuable additional imaging modality38 because of its exceptional soft tissue contrast. This contrast arises from tissue-specific differences in how protons respond to radiofrequency excitation in a magnetic field. Additionally, by detecting the metabolic activity of tissues, PET can provide physiologic information to aid in distinguishing between normal and tumor tissues. This explains why MRI and PET are generally regarded as indispensable in tumor diagnosis, delineation, and response assessment,39,40 as well as in detection and tumor border definition.41–43 Moreover, functional MRI sequences, such as diffusion-weighted imaging and dynamic contrast-enhanced imaging, provide spatially localized physiologic information aligned with the anatomy. This introduces an additional dimension for differentiating tumor from normal tissue.44–51
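
For reference, CT intensities are expressed in Hounsfield units (HU). This standard linear rescaling expresses the measured linear attenuation coefficient $\mu$ of a voxel relative to those of water and air. The fixed scale makes CT intensities comparable across patients, in contrast to the arbitrary units of most MRI sequences:

```latex
\mathrm{HU} = 1000 \times \frac{\mu - \mu_{\text{water}}}{\mu_{\text{water}} - \mu_{\text{air}}}
```

By construction, water maps to 0 HU and air to -1000 HU.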

Semantic Image Evaluation

Because the trained human eye is excellent at pattern recognition, qualitative image evaluation by the radiologist has historically been the clinical standard. Consequently, initial imaging research focused on two kinds of features. The first was semantic image features, which describe image content according to human perception (e.g., “radiologically positive lymph node,” “hypodense tumor,” or “heterogeneous lesion” in a radiology report). The second was simple image characteristic values (e.g., maximum longitudinal tumor diameter and mean intensity). For instance, simple MRI and 18F-fluorodeoxyglucose PET evaluation measures improved the diagnostic accuracy of malignant lymph node detection.52 The predictive value of semantic imaging features is directly reflected in clinical TNM staging, which is based on radiologic image evaluation.53 Furthermore, Wang et al54 showed that the evaluation of tumor invasion and heterogeneity on MRI was predictive of local control in patients with nasopharyngeal cancer.

The improved quality and quantity of medical imaging over the last decades have opened doors to move from semantic or qualitative observer assessments (primary classification tasks) to quantitative, machine-readable image measures for model-based treatment decision support. Additionally, computational processing power, the availability of large-scale (imaging) data, and machine-learning and AI approaches have now reached a stage at which the ever-growing volume of imaging data can be exploited.

Machine Learning

In the early 2000s, terms such as computer-aided treatment, computer-aided detection, or computer-aided diagnosis were used to describe computational processes that extract clinically useful information from images.55 In the last decades, AI has made its entrance in the head and neck oncology field. AI is defined as the capability of a machine to imitate intelligent human behavior, and the term collectively covers all computer processes that fall under this definition.

Machine learning is a subfield within AI (Fig. 1). In a broader sense, the term refers to computerized algorithms that learn to solve tasks by recognizing patterns in the data.56 In other words, the “machine” (i.e., statistical model) learns from the associations in the presented data by minimizing the model’s cost function. Predefined variables can be continuous (e.g., height and weight), categorical (e.g., smoking status: never, former, or current), ordinal (e.g., T-stage and performance score), or binary (yes or no; 1 or 0). Machine learning for medical images requires features to be extracted from specific areas in the images, such as the mean intensity or volume of the delineated tumor. More advanced image-processing techniques allow the extraction of image characteristics that quantify more comprehensive and complex aspects of an image. These are collectively referred to by the umbrella term radiomics.
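
The idea of “learning by minimizing a cost function” can be shown with a logistic regression trained by plain batch gradient descent. The small data set below is hypothetical. It has one continuous variable (a scaled tumor volume) and one binary variable (current smoking). Gradient descent is only one of several optimizers. It is written out by hand here for transparency and is not meant as a recommended implementation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def cross_entropy_cost(weights, bias, X, y):
    """Average log loss: the cost function that training minimizes."""
    total = 0.0
    for xi, yi in zip(X, y):
        p = sigmoid(bias + sum(w * x for w, x in zip(weights, xi)))
        total -= yi * math.log(p) + (1 - yi) * math.log(1 - p)
    return total / len(X)


def train_logistic_regression(X, y, learning_rate=0.5, n_iterations=500):
    """Batch gradient descent on the cross-entropy cost."""
    n_samples, n_features = len(X), len(X[0])
    weights, bias = [0.0] * n_features, 0.0
    for _ in range(n_iterations):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for xi, yi in zip(X, y):
            # (p - y) is the gradient of the log loss w.r.t. the linear predictor.
            error = sigmoid(bias + sum(w * x for w, x in zip(weights, xi))) - yi
            for j in range(n_features):
                grad_w[j] += error * xi[j] / n_samples
            grad_b += error / n_samples
        weights = [w - learning_rate * g for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b
    return weights, bias


# Hypothetical data: [scaled tumor volume, current smoker (1/0)] -> recurrence (1/0).
X = [[0.2, 0], [0.4, 1], [0.6, 0], [0.8, 1], [1.0, 0], [1.2, 1]]
y = [0, 0, 1, 0, 1, 1]
weights, bias = train_logistic_regression(X, y)
print(round(cross_entropy_cost([0.0, 0.0], 0.0, X, y), 3))  # 0.693 (every prediction is 0.5)
print(round(cross_entropy_cost(weights, bias, X, y), 3))    # lower after training
```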

FIGURE 1. Definitions in Artificial Intelligence.

RADIOMICS: IMAGE FEATURE MINING

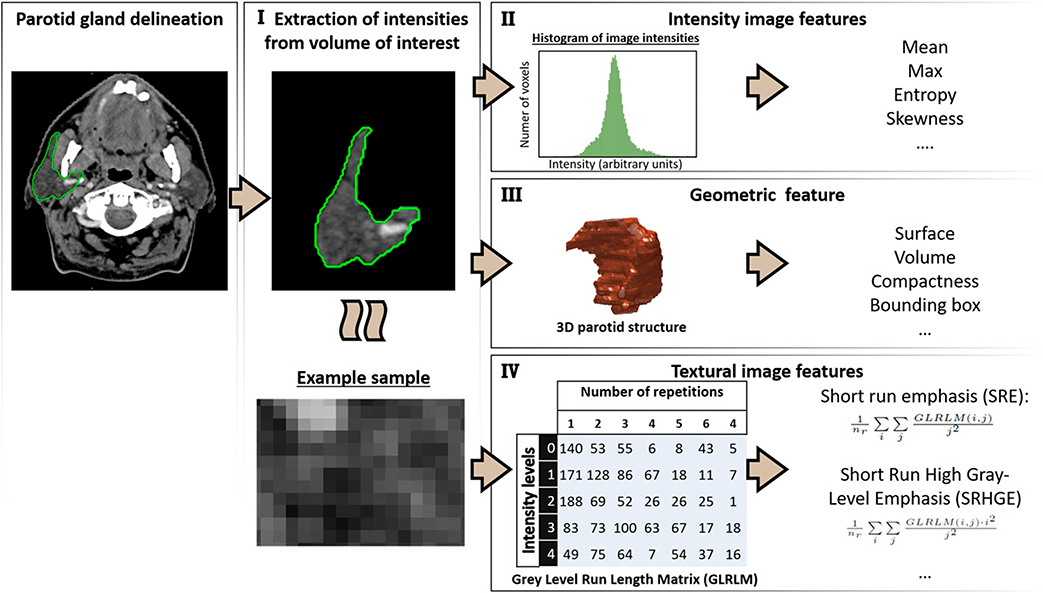

The wealth of raw images acquired for oncology treatment is a great source of information on patient-specific anatomy and physiology and can be used beyond the scope of tumor detection and delineation. Radiomics refers to the process of quantifying patient-specific tissue characteristics from images into features (i.e., image biomarkers). The intensity, texture, and geometric properties of the tissue in a specific volume of interest are translated into single variables that can be fed into a machine-learning modeling process (Fig. 2). These features can describe characteristics similar to those captured by semantic features (think: heterogeneity of the tumor). The hypothesis, however, is that they can reveal more information in a quantitative manner, because the medical images are processed as data rather than as pictures.57,58 Although image texture analyses originated in the 1970s to 1980s,59–61 the radiomics concept was not introduced until the early 2010s. Aerts et al4 proposed comprehensive extraction of geometric, first-order, and texture features, thereby converting medical images into high-dimensional minable data.3,58,62 Aerts et al4 showed that pretreatment CT-based radiomics features describing the density, heterogeneity, and shape of the tumor could predict overall survival of patients with non–small cell lung cancer, and they validated this in independent cohorts of patients with head and neck and lung cancer. Several subsequent studies have confirmed that tumor radiomics features can contribute to the prediction of overall, disease-free, and progression-free survival in patients with head and neck,4,63–66 lung,4,67 cervical,68 prostate, and rectal69 cancer.

The explanation of radiomics features is often intuitive and relatable to semantic features. For example, high values of texture features that capture greater tumor heterogeneity are associated with a higher risk of recurrence.57,66 Geometric features can indicate that larger (e.g., volume) and more irregularly shaped (e.g., surface and bounding box) tumors are related to worse survival rates.66 Radiomics features have been shown to add predictive value beyond clinical variables such as age, TNM stage, and performance score, and they can even replace them. As shown by Zhai et al,66 the feature major axis length of all lymph nodes (i.e., the maximum length between pathologic lymph nodes) performed better in predicting survival than N stage. This indicates the potential of quantifying clinical variables, as well as the importance of being wary of multicollinearity (i.e., high interassociations between the independent variables).
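
A few first-order (histogram-based) radiomics features can be sketched directly from the voxel intensities inside a volume of interest. Heterogeneity then shows up, for example, as higher entropy. The intensity values and the bin width of 25 below are hypothetical settings. Standardized definitions (such as those of the Image Biomarker Standardization Initiative) specify discretization and feature formulas in more detail, and this sketch does not claim to be compliant with them.

```python
import math
from collections import Counter


def first_order_features(voi_intensities, bin_width=25.0):
    """Simple first-order (histogram) radiomics features of a volume of interest."""
    n = len(voi_intensities)
    mean = sum(voi_intensities) / n
    variance = sum((v - mean) ** 2 for v in voi_intensities) / n
    std = math.sqrt(variance)
    skewness = 0.0 if std == 0 else sum((v - mean) ** 3 for v in voi_intensities) / n / std ** 3
    # Discretize intensities into fixed-width bins before computing entropy.
    bin_counts = Counter(math.floor(v / bin_width) for v in voi_intensities)
    entropy = sum((c / n) * math.log2(n / c) for c in bin_counts.values())
    return {"mean": mean, "variance": variance, "skewness": skewness, "entropy": entropy}


homogeneous = first_order_features([100.0] * 8)              # uniform tumor intensities
heterogeneous = first_order_features([0.0, 25.0, 50.0, 75.0])  # spread over four bins
print(homogeneous["entropy"], heterogeneous["entropy"])  # 0.0 2.0
```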

FIGURE 2. Radiomics Extraction Process.

The segmented volume of interest is extracted from an image (I). The following radiomics feature types are extracted from this volume of interest: image intensity features (II), geometric features (III), and texture features (IV). A gray-level run length matrix is given as an example of texture calculation. It counts runs of consecutive voxels with the same gray level in the left-to-right direction, tabulated by gray level and run length.

Abbreviations: 3D, three-dimensional; Max, maximum.
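
The gray-level run length matrix from Fig. 2 can be sketched for a single 2D slice in one direction (left to right). The image is assumed to be already discretized into integer gray levels. Short run emphasis is shown as one example of a feature derived from the matrix. Radiomics software typically aggregates several directions in 3D, which this sketch does not do.

```python
def gray_level_run_length_matrix(image, n_gray_levels):
    """Horizontal (left-to-right) GLRLM of a 2D image of discretized gray levels.

    Entry [g][r - 1] counts the runs of gray level g with length r.
    Assumes gray levels are integers in range(n_gray_levels) and rows are non-empty.
    """
    max_run_length = max(len(row) for row in image)
    glrlm = [[0] * max_run_length for _ in range(n_gray_levels)]
    for row in image:
        run_value, run_length = row[0], 1
        for value in row[1:]:
            if value == run_value:
                run_length += 1
            else:
                glrlm[run_value][run_length - 1] += 1
                run_value, run_length = value, 1
        glrlm[run_value][run_length - 1] += 1  # close the last run in the row
    return glrlm


def short_run_emphasis(glrlm):
    """Weights each run by 1 / length**2, so short runs (fine texture) dominate."""
    n_runs = sum(sum(row) for row in glrlm)
    return sum(
        count / length ** 2
        for row in glrlm
        for length, count in enumerate(row, start=1)
    ) / n_runs


image = [
    [0, 0, 1],
    [1, 1, 1],
    [2, 0, 0],
]
print(gray_level_run_length_matrix(image, n_gray_levels=3))
# [[0, 2, 0], [1, 0, 1], [1, 0, 0]]
```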

DEEP LEARNING: MODELING DIRECTLY FROM THE IMAGE

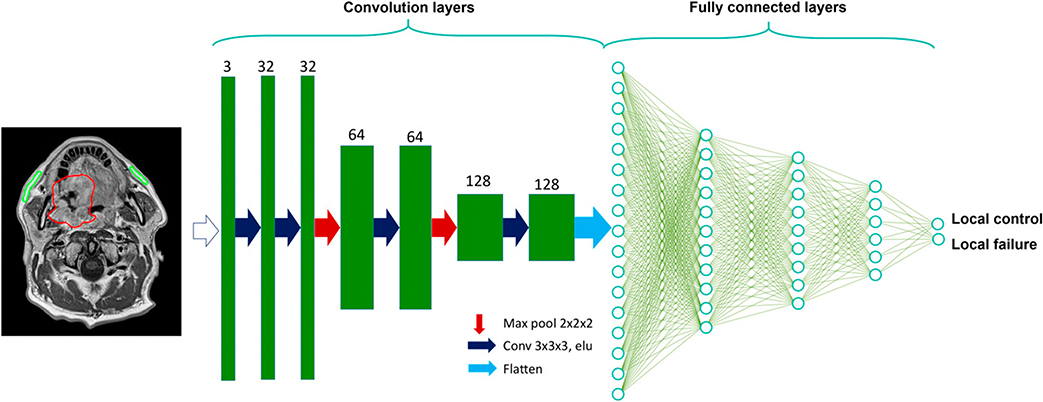

Deep learning is a subtype of machine learning (Fig. 1), but it distinguishes itself from general machine learning by not requiring predefined features or variables. Instead, feature selection and variable weighting are learned simultaneously, directly from the image or data, within the deep-learning network.70,71 In imaging, the first layers of the neural network are typically convolutional layers, which apply sequential combinations of image filters that emphasize or suppress certain textures or patterns (Fig. 3). The convolutional and fully connected network layers can extract image characteristics (e.g., eyes, tumor heterogeneity, and organ-at-risk edges) without human engineering of variables, thus being autodidactic in determining the most important aspects of the images. As with other machine-learning techniques, but even more so for deep learning, one must be wary of overfitting and of a lack of generalizability of the model to new data. Important algorithmic strategies have been implemented to guard a network against overfitting (e.g., dropout, internal cross-validation, and permutations). Nevertheless, it is extremely important to have a completely independent validation test set that has not been used during any part of the model training process.
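
The building blocks named in Fig. 3 can be sketched in plain Python on a toy image. The sketch shows a convolution, a ReLU activation, 2x2 max pooling and flattening. The vertical-edge kernel is hand-set here purely for illustration, whereas a trained network would learn its kernel weights from data. As in most deep-learning libraries, the “convolution” is implemented as cross-correlation, meaning the kernel is not flipped.

```python
def conv2d_valid(image, kernel):
    """'Valid' 2D convolution, implemented as cross-correlation (no kernel flip)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool_2x2(feature_map):
    """2x2 max pooling with stride 2; a trailing odd row or column is dropped."""
    return [
        [
            max(feature_map[i][j], feature_map[i][j + 1],
                feature_map[i + 1][j], feature_map[i + 1][j + 1])
            for j in range(0, len(feature_map[0]) - 1, 2)
        ]
        for i in range(0, len(feature_map) - 1, 2)
    ]


def flatten(feature_map):
    return [v for row in feature_map for v in row]


# Toy 5x5 "image" with a vertical edge between dark (0) and bright (1) tissue.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-set vertical-edge filter; a trained network would learn these weights.
kernel = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]

feature_map = relu(conv2d_valid(image, kernel))
print(feature_map)                         # [[3, 3, 0], [3, 3, 0], [3, 3, 0]]
print(flatten(max_pool_2x2(feature_map)))  # [3]
```

The flattened vector is what the fully connected layers would receive in a network such as the one in Fig. 3.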

FIGURE 3. Deep Convolutional Neural Network Example for Classification Prediction Problem.

An MRI, for example, serves as the input layer. It is followed by convolutional, pooling, and flatten layers and then by fully connected layers that output the endpoint (local tumor control vs. local tumor failure).

Abbreviation: Max, maximum.

CHALLENGES IN MODELING

As for any statistical model, an overarching challenge is generating a generalizable model, meaning a model that performs well on new, unseen data. Key challenges that jeopardize model generalizability and increase model fragility are (1) the risk of false-positive associations, (2) over- and underfitting, (3) data representation bias, (4) unbalanced data, (5) multicollinearity, and (6) model result interpretability.

A false-positive variable is a statistically significant variable (p value less than the set threshold) that has no real underlying association with the outcome.72 This is particularly important for radiomics. Large numbers of candidate features are tested, and these features may be highly correlated representations of the same imaging characteristic of a given region of interest. Radiomics approaches specifically would be well advised to carefully implement correction strategies as a function of the number of candidate biomarkers, thresholds, or features. One proposed approach is correction for multiple testing.73 However, such corrections are often considered too simplistic for machine-learning approaches, and there is limited consensus on p-value adaptation in machine learning, as other methods may be more appropriate. This is a less direct issue for deep learning. Still, a network can detect “significant” areas in an image that have a biased relation with the endpoint or, in the case of reconstruction, can create false image structures.74
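
The two most widely used multiple testing corrections can be sketched as follows. Bonferroni controls the family-wise error rate. The Benjamini-Hochberg step-up procedure controls the false discovery rate and is less conservative. The p values are hypothetical. As noted above, such corrections may be too simplistic for full machine-learning pipelines, so this only illustrates the principle.

```python
def bonferroni(p_values, alpha=0.05):
    """Reject H0 for p <= alpha / m (controls the family-wise error rate)."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]


def benjamini_hochberg(p_values, alpha=0.05):
    """Benjamini-Hochberg step-up procedure (controls the false discovery rate)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Largest rank k such that the k-th smallest p value is <= (k / m) * alpha.
    max_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * alpha:
            max_rank = rank
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        rejected[idx] = rank <= max_rank
    return rejected


# Hypothetical p values from univariable tests of four radiomics features.
p_values = [0.5, 0.03, 0.01, 0.02]
print(bonferroni(p_values))          # [False, False, True, False]
print(benjamini_hochberg(p_values))  # [False, True, True, True]
```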

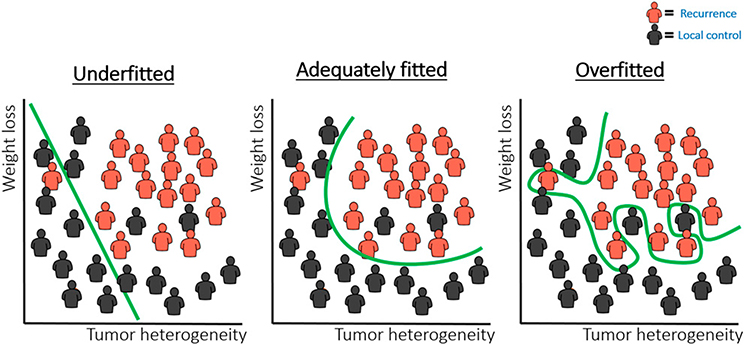

Overfitting and underfitting are major concerns in machine learning.75 Underfitting refers to not giving the model enough degrees of freedom to fit the data (Fig. 4, left), whereas overfitting refers to fitting a model too specifically to the training data (Fig. 4, right). Both scenarios result in a model that does not perform well when presented with new data. In other words, the model is not “generalizable” to external data. Addressing under- and overfitting remains a challenge throughout all steps of any machine-learning analysis and requires serious and continuous consideration.
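
Under- and overfitting can be demonstrated with polynomial regression, used here as a simple stand-in for the classification boundary in Fig. 4. The sketch fits polynomials of degree 1, 3 and 9 to 10 noisy training points. The underlying curve and the deterministic alternating “noise” are hypothetical choices made to keep the example reproducible. Training error can only fall as the degree increases. The degree-9 polynomial passes through every noisy training point, and it can be expected to do worse on the unseen points in between.

```python
import numpy as np
from numpy.polynomial import Polynomial


def true_signal(x):
    return np.sin(2 * np.pi * x)


x_train = np.linspace(0.0, 1.0, 10)
noise = 0.3 * (-1.0) ** np.arange(10)  # deterministic, hypothetical measurement noise
y_train = true_signal(x_train) + noise
x_test = (x_train[:-1] + x_train[1:]) / 2  # unseen points between training samples
y_test = true_signal(x_test)


def mse(model, x, y):
    return float(np.mean((model(x) - y) ** 2))


results = {}
for degree in (1, 3, 9):  # underfitted, adequately fitted, overfitted
    model = Polynomial.fit(x_train, y_train, degree)
    results[degree] = (mse(model, x_train, y_train), mse(model, x_test, y_test))

for degree, (train_mse, test_mse) in results.items():
    print(f"degree {degree}: train MSE {train_mse:.4f}, test MSE {test_mse:.4f}")
```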

FIGURE 4. Example Case for a Classification Task.

To distinguish the patients in red (e.g., recurrences) from those in black (e.g., local tumor control), a model is optimized to define a decision boundary that separates the two groups. Examples of model fits that are underfitted (left), adequately fitted (middle), and overfitted (right) are depicted.

An insufficient patient sample size can cause incorrect model fitting and, hence, make a model ungeneralizable to new data. The main concern with training a model on a small data set is that it may not accurately represent the full population. Moreover, medical data are often noisy, contain outliers, and nearly always hold some incorrect or erroneous information because of clinical complexity and human error. In addition, processes and reactions within the body can rarely be fully predicted with a handful of variables in a simple linear manner. In other words, adequate patient numbers are required to represent medical processes and predict clinical outcome measures.

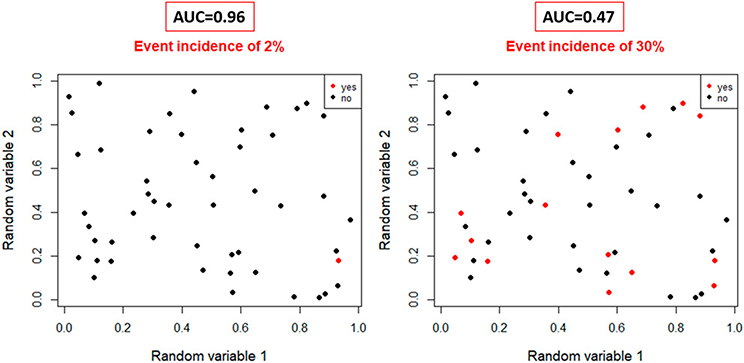

Unbalanced data can be a concern even in a representative cohort, because the dependent variable itself can be imbalanced; that is, the data set can contain a low number of events. For example, predicting local recurrence with tumor image features in a cohort of patients with HPV-positive stage I to II oropharyngeal carcinoma is problematic because of extremely low local recurrence rates (approximately 2 in 100 patients). Fig. 5 demonstrates that the area under the curve performance measure is likely to be unrealistically high in unbalanced data, as isolated events can be separated from nonevents by chance. To mitigate this, the number of patients and events needs to be increased. The pragmatic rule of thumb is that a model can contain one variable per 10 events in the data set. Nevertheless, this is just a guideline and should be carefully evaluated in combination with measures that monitor overfitting.76 For deep learning, upsampling methods have been proposed to overcome the shortcomings of imbalanced data.77 However, as in general machine learning, the training performance measures must be evaluated with caution, and the effectiveness of these methods needs to be better investigated.77 Regardless, the model will always need to be tested on a sufficiently large data set that retains the original (imbalanced) event distribution.
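
The rule of thumb and a basic upsampling step can be sketched as follows, using a hypothetical cohort of 100 patients with a 2% event rate. Random oversampling, which duplicates event records until the classes are balanced, is the simplest upsampling method. It is shown here as a generic example, not as any specific published technique. It adds no new information and should be applied to the training split only.

```python
import random


def max_candidate_variables(outcomes, events_per_variable=10):
    """Rule of thumb: at most one candidate variable per 10 events."""
    return sum(outcomes) // events_per_variable


def random_oversample(records, outcomes, seed=0):
    """Duplicate randomly chosen event records until classes are balanced.

    Apply to the training split only; the test set must keep its original
    (imbalanced) event distribution.
    """
    rng = random.Random(seed)
    events = [r for r, y in zip(records, outcomes) if y == 1]
    n_nonevents = len(outcomes) - len(events)
    if not events:
        raise ValueError("no events to oversample")
    extra = [rng.choice(events) for _ in range(max(0, n_nonevents - len(events)))]
    return records + extra, outcomes + [1] * len(extra)


# Hypothetical cohort: 100 patients, 2 local recurrences (2% event rate).
outcomes = [1] * 2 + [0] * 98
print(max_candidate_variables(outcomes))             # 0: not even one variable is supported
print(max_candidate_variables([1] * 30 + [0] * 70))  # 3

records = list(range(100))  # stand-ins for patient feature vectors
balanced_records, balanced_outcomes = random_oversample(records, outcomes)
print(len(balanced_records), sum(balanced_outcomes))  # 196 98
```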

FIGURE 5. Logistic Regression Area Under the Curve Performance in Random Data for Highly Imbalanced Event Data (2% Event Rate, Left) and More Balanced Data (30% Event Rate, Right).

AUC for imbalanced data is unrealistically high.

Abbreviation: AUC, area under the curve.
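
A simplified version of the Fig. 5 experiment can be run in plain Python. Instead of fitting a logistic regression, the sketch below scores 100 patients with pure random numbers, so the scores carry no information about the outcome. It then computes the AUC many times. With only 2 events, the AUC varies far more from run to run than with 30 events, so chance alone can produce a deceptively high value. The repeat count and seed are arbitrary choices.

```python
import random
import statistics


def auc(scores, outcomes):
    """AUC as the probability that a random event outscores a random nonevent
    (Mann-Whitney formulation; ties count as one half)."""
    positives = [s for s, y in zip(scores, outcomes) if y == 1]
    negatives = [s for s, y in zip(scores, outcomes) if y == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))


def random_score_auc_distribution(n_patients, n_events, n_repeats=500, seed=0):
    """Mean, SD, and maximum AUC of scores that carry no information at all."""
    rng = random.Random(seed)
    outcomes = [1] * n_events + [0] * (n_patients - n_events)
    aucs = [
        auc([rng.random() for _ in range(n_patients)], outcomes)
        for _ in range(n_repeats)
    ]
    return statistics.mean(aucs), statistics.stdev(aucs), max(aucs)


print(auc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1]))  # 0.75
for n_events in (2, 30):  # 2% vs. 30% event rate in 100 patients
    mean_auc, sd_auc, max_auc = random_score_auc_distribution(100, n_events)
    print(f"{n_events} events: mean {mean_auc:.2f}, SD {sd_auc:.2f}, max {max_auc:.2f}")
```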

Multicollinearity refers to high correlation between independent variables. If correlated variables that add no information to each other are introduced into a model, the result can be overcompensation (unstable, mutually offsetting coefficients) and redundancy. This is a particular concern for radiomics approaches, whereas it is better mitigated in deep learning. It is important to be aware of multicollinearity during the modeling process. It can easily occur when similar variables compete during variable selection, causing variables to be wrongfully selected or omitted.
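
One common way to quantify multicollinearity is the variance inflation factor (VIF). The VIF of a variable is computed from how well it can be predicted by all the other variables. Values well above roughly 5 to 10 are often flagged as problematic, although that threshold is itself only a convention. The three features below are hypothetical and randomly generated. Two of them are near-duplicate size measures (in the spirit of tumor volume vs. major axis length), and the third is unrelated.

```python
import numpy as np


def variance_inflation_factors(X):
    """VIF_j = 1 / (1 - R_j^2), where R_j^2 comes from regressing column j
    on all other columns (with an intercept)."""
    X = np.asarray(X, dtype=float)
    n_samples, n_features = X.shape
    vifs = []
    for j in range(n_features):
        target = X[:, j]
        design = np.column_stack([np.ones(n_samples), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(design, target, rcond=None)
        residuals = target - design @ coef
        r_squared = 1.0 - residuals @ residuals / np.sum((target - target.mean()) ** 2)
        vifs.append(float("inf") if r_squared >= 1.0 else float(1.0 / (1.0 - r_squared)))
    return vifs


# Hypothetical features for 200 patients: two near-duplicate size features
# and one unrelated texture feature.
rng = np.random.default_rng(0)
tumor_volume = rng.normal(size=200)
major_axis_length = tumor_volume + 0.1 * rng.normal(size=200)
texture_entropy = rng.normal(size=200)
X = np.column_stack([tumor_volume, major_axis_length, texture_entropy])
print([round(v, 1) for v in variance_inflation_factors(X)])
```

The two size features should receive large VIFs. The unrelated texture feature should stay close to 1.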

Model result interpretability can aid in understanding the modeling process and its generalizability, as well as in the acceptance of a model by the medical community. The benefit of deep learning is that it learns directly from the image itself. The trade-off is that it is much more of a black box than radiomics machine-learning approaches, in which the selected features can generally be related to semantic understandings (e.g., tumor size and high heterogeneity). Efforts are emerging to improve the human interpretability of deep-learning networks by visualizing the determining convolutional filters.78,79 However, there remains an unmet need to move from such representations to the underlying biologic processes or conditions detected by machine learning. For example, algorithmically derived radiomics features have been shown to represent physiologic tissue-level characteristics of the parotid glands that are associated with the development of xerostomia, such as fattiness15 and physiologic activity.80

CHALLENGES IN ONCOLOGIC IMAGING USING RADIOMICS AND ARTIFICIAL INTELLIGENCE

The largest challenge for image-based machine-learning approaches lies in the consistent, large-scale acquisition of curated and standardized image data. Ger et al81 showed that even when the same scanning protocol was used for CT image acquisition, the calculated radiomics features still differed across 100 scanners at 35 institutions. This illustrates that even CT, a modality that is much more easily calibrated than PET or MRI, still suffers from substantial inter- and intrascanner variation. Many other studies have also illustrated the impact of different image reconstruction kernels, tube currents, slice thicknesses, and scanner manufacturers on radiomics features.82–84 This emphasizes the importance of image acquisition standardization and calibration. It also shows the need to test the robustness of image-based prediction models in new patient populations scanned with different scanners or image acquisition protocols. Such variation challenges the interinstitutional implementation of prediction models.

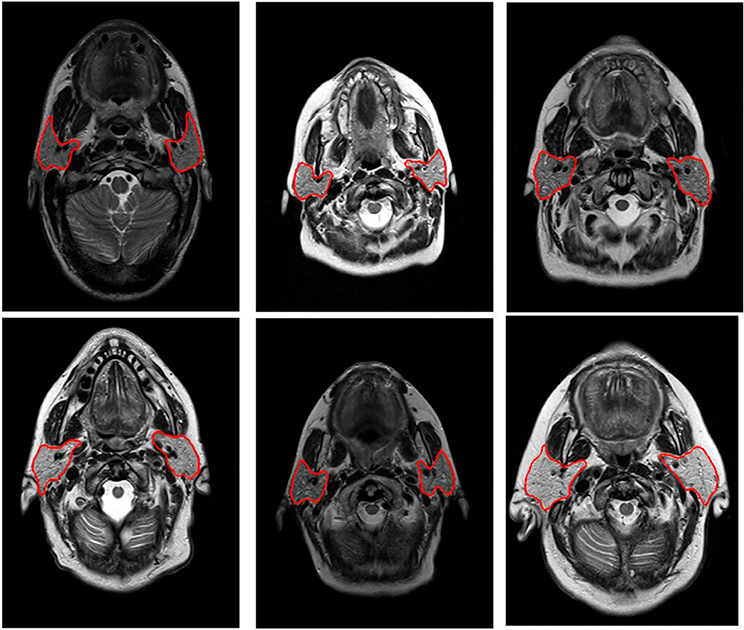

For MRI, an additional hurdle is the lack of consistently available scans, which results in small cohort sizes and a lack of external validation in research studies. Systematic MRI acquisition is hampered by continuous MRI sequence development. This leads to periodic changes in image acquisition parameters and to large differences in acquisition settings between treatment sites. Furthermore, the vast majority of MR radiomics studies do not incorporate any intensity standardization of MR scans, which is questionable given the known biases in MR intensities.85 A major, and often underestimated, challenge for MR-based AI applications is that MR intensities have no intrinsic value for the majority of acquisition methods (e.g., T1-, T2-, and proton density–weighted images). They do not represent a specific fixed physical quantity (i.e., they have arbitrary units) but only have contrast value relative to adjacent MR intensities and structures (Fig. 6). MR normalization is currently still somewhat uncharted terrain outside the brain.86–88 Quantitative imaging techniques designed to represent diagnostically meaningful intensity values have been introduced, such as diffusion-weighted imaging, T1 and T2 maps, and dynamic contrast-enhanced MRI.89–91 However, these MR sequences currently often have a limited signal-to-noise ratio and coarser resolution.
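
One simple intensity standardization approach, among several, is z-score normalization against a reference tissue. This is an illustrative choice, not a method the text prescribes. Intensities are expressed as the number of standard deviations from the mean of a reference region, assumed here to be muscle. The two “scans” below are hypothetical and differ only by a global gain and offset. This kind of normalization removes such global linear differences, but it does not correct spatial bias fields or nonlinear intensity differences.

```python
import statistics


def zscore_normalize(intensities, reference_intensities):
    """Rescale MR intensities using the mean and SD of a reference region."""
    mu = statistics.mean(reference_intensities)
    sigma = statistics.pstdev(reference_intensities)
    return [(v - mu) / sigma for v in intensities]


# Hypothetical T1-weighted intensities (arbitrary units) for the same tissues
# on two scans; scan B applies a different global gain and offset.
muscle_a, fat_a = [100.0, 120.0, 110.0], [300.0, 320.0]
muscle_b = [1.5 * v + 40.0 for v in muscle_a]
fat_b = [1.5 * v + 40.0 for v in fat_a]

print(fat_a, fat_b)                       # raw fat intensities differ between scans
print(zscore_normalize(fat_a, muscle_a))
print(zscore_normalize(fat_b, muscle_b))  # (near-)identical after normalization
```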

FIGURE 6. Pretreatment T1-Weighted MRIs Displayed With the Same Window Level (50–350 Arbitrary MR Units).

Clear differences can be observed in brightness (i.e., signal intensity) between the patients in tissues that are comparable in nature, such as fat and muscle.

REPORTING AND SHARING RADIOMICS AND ARTIFICIAL INTELLIGENCE APPROACHES

One major limitation is that, in many instances, the processes and approaches of AI development and testing do not conform to the traditional frequentist hypothesis-testing framework. This makes reporting of radiomics92 and AI models93,94 difficult and represents a considerable barrier to advanced clinical application and external validation. Nevertheless, the field is evolving toward more consistent and rigorous modeling approaches. Many standardized guidelines are being introduced for model reporting (e.g., Transparent Reporting of a multivariable prediction model for Individual Prognosis or Diagnosis [TRIPOD]),95 image acquisition (e.g., Quantitative Imaging Biomarkers Alliance),96 consistent image biomarker/radiomics extraction (e.g., Image Biomarker Standardization Initiative),97 AI-driven clinical trials98 and general AI reporting,99 and imaging-specific applications.100 Moreover, to propel the field forward, more efforts are being made to publish head and neck cancer imaging data according to FAIR principles (“Findable, Accessible, Interoperable, Reusable”)101 and thus spur new model building and validation. Similarly, platforms such as GitHub for software and script publication have made sharing and validation highly efficient. Finally, ontologies102 have improved data annotation and informatics standards across data sources,103 facilitating model development103 and data transfer. These processes contribute to the implementation of robust, actionable models in head and neck cancer clinical practice. They thereby make it possible to tailor the treatment of head and neck cancer to improve therapy outcome and quality of life, allowing the transition of radiomics and AI from the laboratory to the clinic.

PRACTICAL APPLICATIONS.

Radiomics and imaging artificial intelligence are rapidly being deployed in research-level applications in head and neck cancer.

Personalized medicine using radiomics and artificial intelligence is necessarily dependent on the quality, robustness, and generalizability of generated prediction/classification models.

Though exceptionally promising, rigor in model development, reporting, and implementation will require oncologists to have a general understanding of these approaches, their advantages over traditional methods, and their limitations.

Footnotes

AUTHORS’ DISCLOSURES OF POTENTIAL CONFLICTS OF INTEREST AND DATA AVAILABILITY STATEMENT

Disclosures provided by the authors and data availability statement (if applicable) are available with this article at DOI https://doi.org/10.1200/EDBK_320951.

REFERENCES

- 1.Hatt M, Tixier F, Visvikis D, et al. Radiomics in PET/CT: more than meets the eye? J Nucl Med. 2017;58:365–366. [DOI] [PubMed] [Google Scholar]

- 2.Leite AF, Vasconcelos KDF, Willems H, et al. Radiomics and machine learning in oral healthcare. Proteomics Clin Appl. 2020;14:e1900040. [DOI] [PubMed] [Google Scholar]

- 3.Kumar V, Gu Y, Basu S, et al. Radiomics: the process and the challenges. Magn Reson Imaging. 2012;30:1234–1248. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Aerts HJWL, Velazquez ER, Leijenaar RTH, et al. Decoding tumour phenotype by noninvasive imaging using a quantitative radiomics approach. Nat Commun. 2014;5:4006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Benson E, Li R, Eisele D, et al. The clinical impact of HPV tumor status upon head and neck squamous cell carcinomas. Oral Oncol. 2014;50:565–574. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Marur S, D’Souza G, Westra WH, et al. HPV-associated head and neck cancer: a virus-related cancer epidemic. Lancet Oncol. 2010;11:781–789. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Braakhuis BJM, Visser O, Leemans CR. Oral and oropharyngeal cancer in The Netherlands between 1989 and 2006: Increasing incidence, but not in young adults. Oral Oncol. 2009;45:e85–e89. [DOI] [PubMed] [Google Scholar]

- 8.Mirghani H, Blanchard P. Treatment de-escalation for HPV-driven oropharyngeal cancer: where do we stand? Clin Transl Radiat Oncol. 2017;8:4–11. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Bahig H, Yuan Y, Mohamed ASR, et al. Magnetic resonance-based response assessment and dose adaptation in human papilloma virus positive tumors of the oropharynx treated with radiotherapy (MR-ADAPTOR): an R-IDEAL stage 2a-2b/Bayesian phase II trial. Clin Transl Radiat Oncol. 2018;13:19–23. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Gillison ML, Trotti AM, Harris J, et al. Radiotherapy plus cetuximab or cisplatin in human papillomavirus-positive oropharyngeal cancer (NRG Oncology RTOG 1016): a randomised, multicentre, non-inferiority trial. Lancet. 2019;393:40–50. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Blanchard P, Baujat B, Holostenco V, et al. ; MACH-CH Collaborative group. Meta-analysis of chemotherapy in head and neck cancer (MACH-NC): a comprehensive analysis by tumour site. Radiother Oncol. 2011;100:33–40. [DOI] [PubMed] [Google Scholar]

- 12.Gavrielatou N, Doumas S, Economopoulou P, et al. Biomarkers for immunotherapy response in head and neck cancer. Cancer Treat Rev. 2020;84:101977.

- 13.Dogan V, Rieckmann T, Münscher A, et al. Current studies of immunotherapy in head and neck cancer. Clin Otolaryngol. 2018;43:13–21.

- 14.van Dijk LV, Brouwer CL, van der Schaaf A, et al. CT image biomarkers to improve patient-specific prediction of radiation-induced xerostomia and sticky saliva. Radiother Oncol. 2017;122:185–191.

- 15.van Dijk LV, Thor M, Steenbakkers RJHM, et al. Parotid gland fat related magnetic resonance image biomarkers improve prediction of late radiation-induced xerostomia. Radiother Oncol. 2018;128:459–466.

- 16.Colen RR, Fujii T, Bilen MA, et al. Radiomics to predict immunotherapy-induced pneumonitis: proof of concept. Invest New Drugs. 2018;36:601–607.

- 17.Anthony GJ, Cunliffe A, Castillo R, et al. Incorporation of pre-therapy 18F-FDG uptake data with CT texture features into a radiomics model for radiation pneumonitis diagnosis. Med Phys. 2017;44:3686–3694.

- 18.Pota M, Scalco E, Sanguineti G, et al. Early prediction of radiotherapy-induced parotid shrinkage and toxicity based on CT radiomics and fuzzy classification. Artif Intell Med. 2017;81:41–53.

- 19.Thor M, Tyagi N, Hatzoglou V, et al. A magnetic resonance imaging-based approach to quantify radiation-induced normal tissue injuries applied to trismus in head and neck cancer. Phys Imaging Radiat Oncol. 2017;1:34–40.

- 20.Abdollahi H, Mahdavi SR, Mofid B, et al. Rectal wall MRI radiomics in prostate cancer patients: prediction of and correlation with early rectal toxicity. Int J Radiat Biol. 2018;94:829–837.

- 21.Cardenas CE, Yang J, Anderson BM, et al. Advances in auto-segmentation. Semin Radiat Oncol. 2019;29:185–197.

- 22.Teguh DN, Levendag PC, Voet PWJ, et al. Clinical validation of atlas-based auto-segmentation of multiple target volumes and normal tissue (swallowing/mastication) structures in the head and neck. Int J Radiat Oncol Biol Phys. 2011;81:950–957.

- 23.Han X, Hoogeman MS, Levendag PC, et al. Atlas-based auto-segmentation of head and neck CT images. Med Image Comput Comput Assist Interv. 2008;11(Part 2):434–441.

- 24.van Dijk LV, Van den Bosch L, Aljabar P, et al. Improving automatic delineation for head and neck organs at risk by Deep Learning Contouring. Radiother Oncol. 2020;142:115–123.

- 25.Ibragimov B, Xing L. Segmentation of organs-at-risks in head and neck CT images using convolutional neural networks. Med Phys. 2017;44:547–557.

- 26.Yang X, Kwitt R, Styner M, et al. Quicksilver: fast predictive image registration - a deep learning approach. Neuroimage. 2017;158:378–396.

- 27.de Vos BD, Berendsen FF, Viergever MA, et al. A deep learning framework for unsupervised affine and deformable image registration. Med Image Anal. 2019;52:128–143.

- 28.Hu Y, Modat M, Gibson E, et al. Weakly-supervised convolutional neural networks for multimodal image registration. Med Image Anal. 2018;49:1–13.

- 29.Rodriguez-Ruiz A, Teuwen J, Vreemann S, et al. New reconstruction algorithm for digital breast tomosynthesis: better image quality for humans and computers. Acta Radiol. 2018;59:1051–1059.

- 30.Putzky P, Karkalousos D, Teuwen J, et al. i-RIM applied to the fastMRI challenge. arXiv. Epub 2019 October 20.

- 31.Pinckaers H, Litjens G. Training convolutional neural networks with megapixel images. Medical Imaging Deep Learning. Epub 2018 April 16.

- 32.Dinkla AM, Wolterink JM, Maspero M, et al. MR-only brain radiation therapy: dosimetric evaluation of synthetic CTs generated by a dilated convolutional neural network. Int J Radiat Oncol Biol Phys. 2018;102:801–812.

- 33.Maspero M, Savenije MHF, Dinkla AM, et al. Dose evaluation of fast synthetic-CT generation using a generative adversarial network for general pelvis MR-only radiotherapy. Phys Med Biol. 2018;63:185001.

- 34.Gupta D, Kim M, Vineberg KA, et al. Generation of synthetic CT images from MRI for treatment planning and patient positioning using a 3-channel U-net trained on sagittal images. Front Oncol. 2019;9:964.

- 35.Florkow MC, Zijlstra F, Willemsen K, et al. Deep learning-based MR-to-CT synthesis: The influence of varying gradient echo-based MR images as input channels. Magn Reson Med. 2020;83:1429–1441.

- 36.Cohen O, Zhu B, Rosen MS. MR fingerprinting Deep RecOnstruction NEtwork (DRONE). Magn Reson Med. 2018;80:885–894.

- 37.Virtue P, Yu SX, Lustig M. Better than real: complex-valued neural nets for MRI fingerprinting. Proc IEEE Int Conf Image Process. 2017;2017:3953–3957.

- 38.Dai YL, King AD. State of the art MRI in head and neck cancer. Clin Radiol. 2018;73:45–59.

- 39.Stieb S, Kiser K, van Dijk L, et al. Imaging for response assessment in radiation oncology: current and emerging techniques. Hematol Oncol Clin North Am. 2020;34:293–306.

- 40.Stieb S, McDonald B, Gronberg M, et al. Imaging for target delineation and treatment planning in radiation oncology: current and emerging techniques. Hematol Oncol Clin North Am. 2019;33:963–975.

- 41.Buchbender C, Heusner TA, Lauenstein TC, et al. Oncologic PET/MRI, part 1: tumors of the brain, head and neck, chest, abdomen, and pelvis. J Nucl Med. 2012;53:928–938.

- 42.Patel SG, Shah JP. TNM staging of cancers of the head and neck: striving for uniformity among diversity. CA Cancer J Clin. 2005;55:242–258; quiz 261–262, 264.

- 43.Adams S, Baum RP, Stuckensen T, et al. Prospective comparison of 18F-FDG PET with conventional imaging modalities (CT, MRI, US) in lymph node staging of head and neck cancer. Eur J Nucl Med. 1998;25:1255–1260.

- 44.Tofts PS, Kermode AG. Measurement of the blood-brain barrier permeability and leakage space using dynamic MR imaging. 1. Fundamental concepts. Magn Reson Med. 1991;17:357–367.

- 45.Zheng D, Chen Y, Liu X, et al. Early response to chemoradiotherapy for nasopharyngeal carcinoma treatment: Value of dynamic contrast-enhanced 3.0 T MRI. J Magn Reson Imaging. 2015;41:1528–1540.

- 46.Shukla-Dave A, Lee NY, Jansen JFA, et al. Dynamic contrast-enhanced magnetic resonance imaging as a predictor of outcome in head-and-neck squamous cell carcinoma patients with nodal metastases. Int J Radiat Oncol Biol Phys. 2012;82:1837–1844.

- 47.Huang Z, Yuh KA, Lo SS, et al. Validation of optimal DCE-MRI perfusion threshold to classify at-risk tumor imaging voxels in heterogeneous cervical cancer for outcome prediction. Magn Reson Imaging. 2014;32:1198–1205.

- 48.Lu Y, Jansen JFA, Stambuk HE, et al. Comparing primary tumors and metastatic nodes in head and neck cancer using intravoxel incoherent motion imaging: a preliminary experience. J Comput Assist Tomogr. 2013;37:346–352.

- 49.Maeda M, Maier SE. Usefulness of diffusion-weighted imaging and the apparent diffusion coefficient in the assessment of head and neck tumors. J Neuroradiol. 2008;35:71–78.

- 50.Kono K, Inoue Y, Nakayama K, et al. The role of diffusion-weighted imaging in patients with brain tumors. AJNR Am J Neuroradiol. 2001;22:1081–1088.

- 51.Heijmen L, Verstappen MCHM, Ter Voert EEGW, et al. Tumour response prediction by diffusion-weighted MR imaging: ready for clinical use? Crit Rev Oncol Hematol. 2012;83:194–207.

- 52.Braams JW, Pruim J, Freling NJM, et al. Detection of lymph node metastases of squamous-cell cancer of the head and neck with FDG-PET and MRI. J Nucl Med. 1995;36:211–216.

- 53.Veit-Haibach P, Luczak C, Wanke I, et al. TNM staging with FDG-PET/CT in patients with primary head and neck cancer. Eur J Nucl Med Mol Imaging. 2007;34:1953–1962.

- 54.Wang Y, Zhao H, Zhang ZQ, et al. MR imaging prediction of local control of nasopharyngeal carcinoma treated with radiation therapy and chemotherapy. Br J Radiol. 2014;87:20130657.

- 55.Mahmood H, Shaban M, Indave BI, et al. Use of artificial intelligence in diagnosis of head and neck precancerous and cancerous lesions: a systematic review. Oral Oncol. 2020;110:104885.

- 56.Neri E, de Souza N, Brady A, et al.; European Society of Radiology (ESR). What the radiologist should know about artificial intelligence - an ESR white paper. Insights Imaging. 2019;10:44.

- 57.Yip SSF, Liu Y, Parmar C, et al. Associations between radiologist-defined semantic and automatically computed radiomic features in non-small cell lung cancer. Sci Rep. 2017;7:3519.

- 58.Gillies RJ, Kinahan PE, Hricak H. Radiomics: images are more than pictures, they are data. Radiology. 2016;278:563–577.

- 59.Haralick R, Shanmugam K, Dinstein I. Textural features for image classification. IEEE Trans Syst Man Cybern. 1973;SMC-3:610–621.

- 60.Galloway MM. Texture analysis using gray level run lengths. Comput Graph Image Process. 1975;4:172–179.

- 61.Amadasun M, King R. Textural features corresponding to textural properties. IEEE Trans Syst Man Cybern. 1989;19:1264–1273.

- 62.Lambin P, Rios-Velazquez E, Leijenaar R, et al. Radiomics: extracting more information from medical images using advanced feature analysis. Eur J Cancer. 2012;48:441–446.

- 63.Abgral R, Keromnes N, Robin P, et al. Prognostic value of volumetric parameters measured by 18F-FDG PET/CT in patients with head and neck squamous cell carcinoma. Eur J Nucl Med Mol Imaging. 2014;41:659–667.

- 64.Koyasu S, Nakamoto Y, Kikuchi M, et al. Prognostic value of pretreatment 18F-FDG PET/CT parameters including visual evaluation in patients with head and neck squamous cell carcinoma. AJR Am J Roentgenol. 2014;202:851–858.

- 65.Alluri KC, Tahari AK, Wahl RL, et al. Prognostic value of FDG PET metabolic tumor volume in human papillomavirus-positive stage III and IV oropharyngeal squamous cell carcinoma. AJR Am J Roentgenol. 2014;203:897–903.

- 66.Zhai T-T, van Dijk LV, Huang B-T, et al. Improving the prediction of overall survival for head and neck cancer patients using image biomarkers in combination with clinical parameters. Radiother Oncol. 2017;124:256–262.

- 67.Thawani R, McLane M, Beig N, et al. Radiomics and radiogenomics in lung cancer: a review for the clinician. Lung Cancer. 2018;115:34–41.

- 68.Lucia F, Visvikis D, Desseroit MC, et al. Prediction of outcome using pretreatment 18F-FDG PET/CT and MRI radiomics in locally advanced cervical cancer treated with chemoradiotherapy. Eur J Nucl Med Mol Imaging. 2018;45:768–786.

- 69.Yi X, Pei Q, Zhang Y, et al. MRI-based radiomics predicts tumor response to neoadjuvant chemoradiotherapy in locally advanced rectal cancer. Front Oncol. 2019;9:552.

- 70.Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. Commun ACM. 2017;60:84–90.

- 71.McCulloch WS, Pitts W. A logical calculus of the ideas immanent in nervous activity. Bull Math Biophys. 1943;5:115–133.

- 72.Benjamini Y, Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc B. 1995;57:289–300.

- 73.Aickin M, Gensler H. Adjusting for multiple testing when reporting research results: the Bonferroni vs Holm methods. Am J Public Health. 1996;86:726–728.

- 74.Antun V, Renna F, Poon C, et al. On instabilities of deep learning in image reconstruction and the potential costs of AI. Proc Natl Acad Sci USA. 2020;117:30088–30095.

- 75.van der Schaaf A, Xu CJ, van Luijk P, et al. Multivariate modeling of complications with data driven variable selection: guarding against overfitting and effects of data set size. Radiother Oncol. 2012;105:115–121.

- 76.Vittinghoff E, McCulloch CE. Relaxing the rule of ten events per variable in logistic and Cox regression. Am J Epidemiol. 2007;165:710–718.

- 77.Viloria A, Pineda Lezama OB, Mercado-Caruzo N. Unbalanced data processing using oversampling: Machine Learning. Procedia Comput Sci. 2020;175:108–113.

- 78.Men K, Geng H, Zhong H, et al. A deep learning model for predicting xerostomia due to radiation therapy for head and neck squamous cell carcinoma in the RTOG 0522 clinical trial. Int J Radiat Oncol Biol Phys. 2019;105:440–447.

- 79.Yosinski J, Clune J, Nguyen A, et al. Understanding neural networks through deep visualization. Paper presented at: Deep Learning Workshop, 32nd International Conference on Machine Learning; July 6–11, 2015; Lille, France.

- 80.van Dijk LV, Noordzij W, Brouwer CL, et al. 18F-FDG PET image biomarkers improve prediction of late radiation-induced xerostomia. Radiother Oncol. 2018;126:89–95.

- 81.Ger RB, Zhou S, Chi PM, et al. Comprehensive investigation on controlling for CT imaging variabilities in radiomics studies. Sci Rep. 2018;8:13047.

- 82.He L, Huang Y, Ma Z, et al. Effects of contrast-enhancement, reconstruction slice thickness and convolution kernel on the diagnostic performance of radiomics signature in solitary pulmonary nodule. Sci Rep. 2016;6:34921.

- 83.Larue RTHM, van Timmeren JE, de Jong EEC, et al. Influence of gray level discretization on radiomic feature stability for different CT scanners, tube currents and slice thicknesses: a comprehensive phantom study. Acta Oncol. 2017;56:1544–1553.

- 84.Mackin D, Fave X, Zhang L, et al. Measuring CT scanner variability of radiomics features. Invest Radiol. 2015;50:757–765.

- 85.Shiri I, Abdollahi H, Shaysteh S, et al. Test-retest reproducibility and robustness analysis of recurrent glioblastoma MRI radiomics texture features. Iran J Radiol. 2017;31:e48035.

- 86.Nyúl LG, Udupa JK, Zhang X. New variants of a method of MRI scale standardization. IEEE Trans Med Imaging. 2000;19:143–150.

- 87.Sun X, Shi L, Luo Y, et al. Histogram-based normalization technique on human brain magnetic resonance images from different acquisitions. Biomed Eng Online. 2015;14:73.

- 88.Loizou CP, Pantziaris M, Seimenis I, et al. Brain MR image normalization in texture analysis of multiple sclerosis. Paper presented at: 9th International Conference on Information Technology and Applications in Biomedicine; November 1–5, 2009; Larnaca, Cyprus.

- 89.Koh D-M, Collins DJ. Diffusion-weighted MRI in the body: applications and challenges in oncology. AJR Am J Roentgenol. 2007;188:1622–1635.

- 90.Rosenkrantz AB, Mendiratta-Lala M, Bartholmai BJ, et al. Clinical utility of quantitative imaging. Acad Radiol. 2015;22:33–49.

- 91.Abramson RG, Burton KR, Yu JPJ, et al. Methods and challenges in quantitative imaging biomarker development. Acad Radiol. 2015;22:25–32.

- 92.Jethanandani A, Lin TA, Volpe S, et al. Exploring applications of radiomics in magnetic resonance imaging of head and neck cancer: a systematic review. Front Oncol. 2018;8:131.

- 93.Kim DW, Jang HY, Kim KW, et al. Design characteristics of studies reporting the performance of artificial intelligence algorithms for diagnostic analysis of medical images: results from recently published papers. Korean J Radiol. 2019;20:405–410.

- 94.Nagendran M, Chen Y, Lovejoy CA, et al. Artificial intelligence versus clinicians: systematic review of design, reporting standards, and claims of deep learning studies. BMJ. 2020;368:m689.

- 95.Moons KGM, Altman DG, Reitsma JB, et al. Transparent Reporting of a multivariable prediction model for Individual Prognosis or Diagnosis (TRIPOD): explanation and elaboration. Ann Intern Med. 2015;162:W1–W73.

- 96.Shukla-Dave A, Obuchowski NA, Chenevert TL, et al. Quantitative Imaging Biomarkers Alliance (QIBA) recommendations for improved precision of DWI and DCE-MRI derived biomarkers in multicenter oncology trials. J Magn Reson Imaging. 2019;49:e101–e121.

- 97.Zwanenburg A, Vallières M, Abdalah MA, et al. The Image Biomarker Standardization Initiative: standardized quantitative radiomics for high-throughput image-based phenotyping. Radiology. 2020;295:328–338.

- 98.Liu X, Cruz Rivera S, Moher D, et al.; SPIRIT-AI and CONSORT-AI Working Group. Reporting guidelines for clinical trial reports for interventions involving artificial intelligence: the CONSORT-AI extension. Nat Med. 2020;26:1364–1374.

- 99.Hernandez-Boussard T, Bozkurt S, Ioannidis JPA, et al. MINIMAR (MINimum Information for Medical AI Reporting): developing reporting standards for artificial intelligence in health care. J Am Med Inform Assoc. 2020;27:2011–2015.

- 100.Mongan J, Moy L, Kahn CE. Checklist for artificial intelligence in medical imaging (CLAIM): a guide for authors and reviewers. Radiol Artif Intell. 2020;2:e200029.

- 101.Goble C, Cohen-Boulakia S, Soiland-Reyes S, et al. FAIR computational workflows. Data Intell. 2019;23:108–121.

- 102.Traverso A, van Soest J, Wee L, et al. The radiation oncology ontology (ROO): publishing linked data in radiation oncology using semantic web and ontology techniques. Med Phys. 2018;45:e854–e862.

- 103.Holzinger A, Haibe-Kains B, Jurisica I. Why imaging data alone is not enough: AI-based integration of imaging, omics, and clinical data. Eur J Nucl Med Mol Imaging. 2019;46:2722–2730.