Abstract

It is time for a paradigm shift in the science of applied behavior analysis. Our current approach to applied research perpetuates power imbalances. We posit that the purpose of applied behavior analysis is to enable and expand human rights and to eliminate the legacies of colonial, oppressive social structures. We report the findings from our examination of the content of our flagship applied research journal. We reviewed 50 years of applied experiments from the standpoint of respect, beneficence, justice, and the participation of individuals and communities. Although there is some promise and movement toward inclusion, our findings indicate that we have not prioritized full participation across all segments of society, especially persons and communities that are marginalized. Social justice rests on the belief that human life is to be universally cherished and valued. In this article, we suggest that policies, strategies, and research practices within our field be interwoven with a commitment to social justice, including racial justice, for all. We offer recommendations to neutralize and diffuse power imbalances and to work toward a shift from colonial to participatory practices in the methods and aims of our applied science.

Keywords: applied behavior analysis, coloniality, community-based participatory research, cultural humility, human rights, research ethics

Editor’s Note

This manuscript is being published on an expedited basis, as part of a series of emergency publications designed to help practitioners of applied behavior analysis take immediate action to address police brutality and systemic racism. The journal would like to especially thank Associate Editor Dr. Kaston Anderson-Carpenter. Additionally, the journal extends thanks to Meka McCammon and Worner Leland for their insightful and expeditious reviews of this manuscript. The views and strategies suggested by the articles in this series do not represent the positions of the Association for Behavior Analysis International or Springer Nature.

—Denisha Gingles, Guest Editor

Our world is in transition. Recent events urge a reevaluation of human behavior, at both individual and institutional levels. The COVID-19 pandemic magnifies and reveals racism, disparities, and injustices at all levels. A science of behavior has the potential to address these maladies and universally improve basic human rights. Meaningful scientific change that engages with people will occur by igniting system agitation through amplifying voices, engaging in thoughtful critique, conducting careful functional analyses, and monitoring and revising the scientific process and its products over time (Benjamin, 2013).

The purpose of this article is to examine the practices of our applied science and to suggest ways to move forward in setting up research scenarios and agendas to address and challenge systemic injustices. Furthermore, we consider the construction of paradigms that contribute to the betterment of the world—to social justice—especially for those whose voices have been omitted from the process. We do not offer a checklist for police, a set of topographical responses for antiracist behaviors, or a template for effective training programs. Rather, we offer a fundamental and critical examination of applied behavior-analytic research processes regarding respect, beneficence, justice, and inclusion. In applied practice, crises present contingencies and opportunities for change (Risley, 2001). Our world is in crisis; the science of behavior may be able to help leverage change. To do that, one of the first steps is to examine our own behavior and to consider radical change. The intent is to create a paradigm shift in the science and practice of applied behavior analysis.

Exerting change requires thoughtful, reflective, and purposeful action. To proceed in ignorance or without acknowledgment and understanding of marginalization, trauma, and injustice is unwise and wrong. We begin by understanding that there are issues to be addressed, and we highlight them. In applied behavior analysis, this is happening in special sections and issues of flagship journals. Such initiatives highlight the content and the concerns of our times as related to culture, diversity, and social justice. However, stopping here would constitute tokenism and maintenance of the status quo. By definition, tokenism is perfunctory and symbolic (Merriam-Webster, n.d.-b). Tokens are likely to be short-lived and ineffective. Tokenism serves to signal to marginalized communities that they are seen but are not to be heard and not necessarily included.

Moreover, tokens alone are unlikely to result in systemic institutional changes that sustain over time. Our discipline will remain hegemonic. That is, our practices will continue to be representative of one dominant group that contributes to maintaining the current structures that are so problematic for the rest of the world’s population. Recent surveys and commentaries shed light on the homogeneity and lack of inclusiveness in our field (e.g., Beaulieu et al., 2019; Miller et al., 2019; Wright, 2019). In order to change, applied behavior analysis must take the next step in the process—to build a common commitment to understanding and aligning our aims—the goals, values, and methods of an applied science (and its associated practice). Only then can we begin to examine and revise the process of our science and build in safeguards that ensure outcomes produced contribute to and accelerate social justice. Here we offer a way to increase agreement, from within and outside of the discipline, on aims and outcomes that are of benefit to the entirety of humanity.

It is time. Social justice is not an auxiliary venture or specialty area of applied behavior analysis, such as autism intervention or problem behavior. It is the applied spirit of the science. The point of an applied science is to be socially meaningful (Wolf, 1978). We suggest that to be socially meaningful means to stand in the vantage point of love (Gilbert, 2013), including love in the formulation of justice (Yuille et al., 2020), and ensuring love for all humanity in the formulation and communication of our scientific practices. This stance should be infused in our research methods and in our clinical practices. It should pervade our work across all content areas. In our opinion, the root cause of the lack of diversity in the field is related to a drift away from the applied spirit of the science and is fueled by the larger and more complex structures of power that perpetuate racism, capitalism, and paradigmatic premises as stipulated by White heterosexual males of the global north. There are critical analytical traditions that offer theoretical responses to this epistemological hegemony of the global north. These include examinations of hegemony, such as Western science (e.g., Henrich et al., 2010), the coloniality of power (e.g., Quijano, 2000), and the commodification of the goods of science (e.g., Benjamin, 2013).

Some of the most horrific crimes against humanity the world has ever known have occurred under the guise of biomedical and behavioral research with human subjects. Historically, and in all fields, the burdens of research participation have been disproportionately endured by persons with vulnerabilities who involuntarily suffered inhumane treatments (National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research, 1979). In Europe during World War II, for example, Jewish concentration camp prisoners were tortured through human experimentation by Nazi physicians. In these experiments, prisoners were subjected to “freezing, injection of typhus into the blood, and direct ophthalmic injection of toxic substances, all in the name of ‘research’” (Rice, 2008, p. 1326).

On January 29, 1951, Henrietta Lacks entered Johns Hopkins Hospital in Baltimore, Maryland, in tremendous pain, seeking physician assistance after discovering a hard mass on her cervix. As a young Black mother living in the Jim Crow era, her options for medical treatment were limited to the subpar, segregated, Black ward of Johns Hopkins. There physicians scraped cells from her cervix for pathology testing to determine the malignancy of the mass and make recommendations for treatment. Without her consent or knowledge, Ms. Lacks’s cells were also sent to a research lab in the hospital for experimentation. Typically, when human cells were taken from patients, the cells only lived for a short period of time; this restricted the research process temporally. As a result, there was a need (in the 1950s) for the constant availability of new specimens for all ongoing research. When Ms. Lacks’s cells arrived at the laboratory, they did not die as was common; instead, they multiplied rapidly. Her cells became immortal. Ms. Lacks died of cervical cancer 9 months later on October 4, 1951—and in poverty. Her cells (“HeLa” cells) continue to be harvested and distributed by the trillions to research labs across the world, for use and experimentation. HeLa cells were the first to be shipped by mail, cloned, and sent to outer space. They were also integral in modern medical breakthroughs such as the polio vaccine and in vitro fertilization (Skloot, 2011). The world profited from Ms. Lacks—without her voice, without addressing her lack of health care or the systems that contributed to her precarious existence, and without her permission.

The U.S. Public Health Service Syphilis Study was a clinical study conducted from 1932 to 1972 at the Tuskegee Institute in Tuskegee, Alabama. The purpose of the research was to study syphilis. Participants were poor, illiterate, Black sharecroppers in Macon County. Six hundred individuals were selected for participation and were given “incentives” such as free medical exams, transportation, meals, and burial stipends. The participants, under the assumption they were being treated for “bad blood,” willingly participated. They were unaware that the true purpose of the research was to study the effects of untreated syphilis postmortem. Despite the availability of penicillin as treatment for syphilis in 1947, the men who participated in this research were never treated (Tuskegee University, n.d.). The public outrage that followed the end of the Tuskegee Syphilis Study in 1972 served as a catalyst for the development of the National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research (Centers for Disease Control and Prevention, 2020; National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research, 1979).

One of the main outcomes of the National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research was the Belmont Report: Ethical Principles and Guidelines for the Protection of Human Subjects of Research (1979). The Belmont Report outlines the minimum-standard ethical principles and guidelines for biomedical and behavioral research that involves human subjects. It identifies three core principles: (a) respect for persons, (b) beneficence, and (c) justice (National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research, 1979).

Historically, the burdens of research participation have been disproportionately endured by persons with vulnerabilities who involuntarily suffered inhumane treatments (National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research, 1979). To better understand the contingencies under which these events occurred, and to what extent they are present (or not) in the science of applied behavior analysis, we must look to the systems that perpetuate human rights violations. Again, the science of applied behavior analysis is largely hegemonic, composed of Western, White, and male structures (Miller et al., 2019). As early as 1991, Fawcett pointed out that power imbalances inherent in the researcher–participant relationship establish the researcher as the dominant knowledge-seeking authority and the participant as the subservient subject. These power imbalances create a coercive cycle that involves control, countercontrol, and counter-countercontrol (Delprato, 2002; Sidman, 2001; Skinner, 1953). Such cycles have been described as current aftermaths of “colonial practices,” which are characterized by a dynamic of power led by a hierarchical paternalistic authority and superiority in the social and intellectual milieus and upon subordinated racialized bodies (Miller et al., 2019; Quijano, 2000).

When coercive contingencies are established in a system, one group maintains dominance over the other. Coercive contingencies are reflected in punishment or in the threat of punishment that occasions avoidance and escape-maintained responses. These responses serve to establish and maintain power imbalances and social stratification (Holland, 1978; Sidman, 2001). If the mission of applied behavior analysis is actualized through relief of suffering, it can then be understood that one motivation for participants to volunteer for research is the potential for relief from suffering (i.e., the removal or lessening of aversive stimuli or conditions). This negative reinforcement contingency sets the occasion for an increase in certain participant responses such as asking for professional help and engaging in research activities. The anticipated reinforcers are the termination or avoidance of aversive stimuli or conditions, offering potential participants an approximation of an improved quality of life.

Researchers in the field of anthropology have grappled with the issue of inclusion and reduction of aversive control, particularly in the area of applied anthropology. Researchers and participants presumably operate under different contingencies; participants require relief from conditions of aversive stimulation, and researchers respond to the pursuit of generalized knowledge and the requirements of the scientific community. To reconcile this potential conflict, encourage collaboration, and equalize relationships, Tax (1958, 1960) proposed action anthropology, an approach involving methods to increase choice and inclusion of participants in identifying their own needs and strategies to be developed according to their values. This later developed into collaborative anthropology, which promoted egalitarian power distribution between the researcher and participants (Schensul & Schensul, 1992). Subsequent developments moved toward community-based action research, with an emphasis on social justice, intentional questioning of hegemonic structures, and methods and priorities tailored to reflect participant well-being and agency (e.g., H. A. Baer et al., 2004; Castro & Singer, 2004; Johnston & Downing, 2004; Singer, 2006).

Fawcett (1991) introduced these concepts to applied behavior analysis, offering a broader set of values rooted in community-based participatory research (CBPR) practices with an overall goal to reduce colonial research practices and to address more complex social problems that advance the meaningfulness and impact of applied research in behavior analysis. CBPR is an action research methodology; its hallmark is its emphasis on community engagement through the empowerment of community members as partners in the research endeavor (Fawcett et al., 2016). As such, participatory research practices developed by the applied fields of anthropology and behavior analysis focus on reducing hegemonic practices that perpetuate social inequalities and injustices (Miller et al., 2019). One key practice of CBPR is the empowerment of research participants through establishing practices that shift away from the traditional researcher-dominated relationship. There are groups, such as the Center for Community Health and Development, that have developed and offered models of this approach to research (e.g., Fawcett et al., 2013; Watson-Thompson et al., 2008; Watson-Thompson et al., 2020). The majority of their publications, however, are not in the flagship journals of applied behavior analysis.

This dovetails with the original emphasis in applied behavior analysis on the assessment of social validity. Measures of social importance are critical to ensuring participatory practices are robust because they serve as an ongoing, systematic evaluation of research goals, procedures, and outcomes (Wolf, 1978). In the context of applied research, measures of social validity and invalidity are a key practice in ensuring research outcomes align with the spirit of applied research aims (Schwartz & Baer, 1991). Queries of social validity are temporally extended from the onset of research engagement and terminate sometime after the conclusion of the experiment. In addition, measures of social validity are active, ongoing, and responsive to the established and emergent values of the participant. Throughout this process, the goals, procedures, and outcomes of the research are genuinely probed to allow space for responsive adjustments based on the expressed needs of the participant (Schwartz & Baer, 1991). Through this process, research outcomes are customized and reflective of the value system of the participants, rather than the agenda of the researcher, and allow for power and control of the research endeavor to be distributed more equitably between researcher and participant (Fawcett, 1991; Kazdin, 1977; Schwartz & Baer, 1991; Wolf, 1978). Collaborative practices act as a safeguard to promote inclusion of communities, include the voices of members, and prevent exploitation and further marginalization of persons with vulnerabilities that participate in research.

Fawcett’s (1991) overarching values and actions for applied behavior-analytic research include (a) the establishment of collaborative relationships (vs. colonial) between applied behavior-analytic researchers and participants; (b) research goals and methodology based on socially valid dependent variables, including generality and maintenance of research effects; (c) intervention maintenance after the researchers’ departure that is supported by locally sustainable funding sources; and (d) advocacy and community change, including increased participant empowerment.

This article examines the research practices in applied behavior analysis from the standpoints of Fawcett (1991) and the recommendations of the Belmont Report (1979) regarding the protection of human rights and the elimination of nonparticipatory, oppressive social structures in our research practices. Specifically, we sought to determine the extent to which applied behavior-analytic research has been reflective of (a) the applied spirit of the science as described by D. M. Baer et al. (1968), (b) the ethical principles for behavioral research involving human subjects as outlined in the Belmont Report, and (c) the collaborative versus colonial research practices as described by Fawcett (1991). To determine this, we purposefully sampled and evaluated the first 50 years of the Journal of Applied Behavior Analysis to assess trends in respect, beneficence, and justice as described in the Belmont Report (1979). We also examined trends related to identity, stakeholding, collaboration, social significance, funding, and participant empowerment (Fawcett, 1991). In total, we scored 134 experiments spanning 50 years on 26 different measures, yielding 3,484 scored units (134 × 26) associated with human rights and collaborative research practices.

Method

General indicator categories were created based on core bioethical principles dedicated to the protection of human subjects of behavioral research (National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research, 1979). Each indicator in the main category was classified by its membership in the three core principles as outlined in the Belmont Report: respect for persons, beneficence, and justice. Respect for persons included participant consent, voluntariness, and assent. Beneficence included life improvement and social validity. Justice included participant age, race, ethnicity, religion, sex, gender, household income, education level, diagnosis, language/communication, marital status, and occupation. Subcategories were selected based on recommendations for community research and action (Fawcett, 1991) that paralleled and extended the core bioethical principles outlined in the Belmont Report. Subcategorical indicators included participant and researcher identity, researchers as community stakeholders, origin of research goals, intervention implementation, dependent measures, generalization of research effects, research setting, source of research funding, maintenance of research effects, and improvement of participant empowerment. Operational definitions were created for each indicator (see Table 1) based on the descriptors from the Belmont Report (1979) and Fawcett (1991).

Table 1.

Operational Definitions and Scoring Protocol

| Respect for persons | |

| Consent | Informed consent is the process by which researchers working with human participants describe their research project and obtain the subjects’ consent to participate in the research based on the subjects’ understanding of the project’s methods and goals. Scored as “yes” (consent reported), “no” (consent reported as not obtained), or “no information” (no information regarding consent reported). |

| Voluntary | Voluntary case selection is a form of case selection that is purposive rather than based on the principles of random or probability sampling. It usually involves individuals who agree to participate in research, sometimes for payment. Scored as “yes” (voluntary participation reported), “no” (involuntary participation reported), or “no information” (no information regarding voluntary participation reported). |

| Assent | The assent process is an ongoing, interactive conversation between the research team and the child, young adult, typically developing adult, or adult lacking the capacity to give informed consent. This provides them with the opportunity to leave or terminate the research study. This does not include guardians who give or withdraw consent. This does not include participants who miss, reschedule, or postpone a session due to illness, vacation, schedule conflicts, and so on. This does not include taking a break, pausing, or any other delays allowed during the research study. Scored as “yes” (assent reported), “no” (assent reported as not honored), or “no information” (no information regarding assent reported). |

| Beneficence | |

| Quality of life | Any impact the experiment had that improved the participants’ quality of life. Scored as “yes” (increased quality of life reported), “no” (decreased quality of life reported), or “no information” (no information regarding quality of life reported). |

| Social validity | Social validity or significance includes systems and measures for asking for participant feedback about how the research goals, procedures, or outcomes related to their values and reinforcers. Scored as “yes” (social validity reported), “no” (social validity reported as not obtained), or “no information” (no information regarding social validity reported). |

| Dependent variables | A dependent variable is the behavior(s) being measured in the experiment. Scored as “behaviors to increase” (e.g., learning janitorial skills), “behaviors to decrease” (e.g., reduction in physical aggression), or “proxy behaviors” (e.g., button pressing). |

| Justice | |

| Age | Chronological age—the number of years a person has lived (typically reported in years and/or months). Scored as “yes” (age reported) or “no information” (age not reported). |

| Race | A social construct that divides people into distinct groups based on characteristics such as physical appearance (e.g., bone structure and skin color). Scored as “yes” (race reported) or “no information” (race not reported). |

| Ethnicity | A social construct that divides people into smaller social groups based on characteristics such as a shared sense of group membership, values, behavioral patterns, language, political and economic interest, history, and ancestral geographical base. Scored as “yes” (ethnicity reported) or “no information” (ethnicity not reported). |

| Religion | A personal or institutionalized system of beliefs and practices concerning the cause, nature, and purpose of the universe, often grounded in belief in and reverence for some supernatural power or powers, and often involving devotional and ritual observances and a moral code governing the conduct of human affairs. Scored as “yes” (religion reported) or “no information” (religion not reported). |

| Sex | A medically constructed categorization. Sex is often assigned based on the appearance of the genitalia, either in ultrasound or at birth. Scored as “yes” (sex reported) or “no information” (sex not reported). |

| Gender | A social construct used to classify a person as a man, woman, or some other identity. Fundamentally different from the sex one is assigned at birth. Scored as “yes” (gender reported) or “no information” (gender not reported). |

| Household income | An economic measure used to measure the income of every resident in a household. Scored as “yes” (income reported) or “no information” (income not reported). |

| Education level | Level of schooling or credential. Scored as “yes” (education level reported) or “no information” (education level not reported). |

| Diagnosis | Nature of disability or illness. Scored as “yes” (diagnosis reported) or “no information” (diagnosis not reported). |

| Language/communication | System of communication, including augmentative communication systems, languages spoken, or modes of communication used within a particular community. Scored as “yes” (language/communication reported) or “no information” (language/communication not reported). |

| Marital status | The personal status of each individual in relation to the marriage laws or customs of a country. Scored as “yes” (marital status reported) or “no information” (marital status not reported). |

| Occupation | Type of work a person does (e.g., job title or industry) to earn money. Scored as “yes” (occupation reported) or “no information” (occupation not reported). |

| Collaboration | |

| Identity | A community is a group of people that is interconnected by demographics or other social variables (economic, social, race, ethnicity, level of education, etc.). Scored as “yes” (identity variables shared) or “no information” (no information about shared identity variables reported). |

| Stakeholders | A stakeholder is a representative of the identity population or is explicitly stated as an advocate or ally on behalf of the participant of the research. Scored as “yes” (researchers are community stakeholders), “no” (researchers are not community stakeholders), or “no information” (no information about researchers as community stakeholders). |

| Research goal | A research goal is the purpose of the research study or experiment. Scored as “yes” (research goal developed in collaboration with participant), “no” (research goal developed by researcher alone), or “no information” (no information about who developed research goals reported). |

| Intervention | Interventions or treatments can be behavioral procedures, intervention programs, or independent variables being applied. The person implementing the intervention describes who set up the procedural arrangement in the environment (e.g., materials, setting, observation room) where the research is conducted. Scored as “yes” (intervention goal developed in collaboration with participant), “no” (intervention goal developed by researcher alone), or “no information” (no information about who developed intervention goals reported). |

| Generalization | Generalization is behavior change that has not been explicitly trained and occurs outside of the training conditions. This includes stimulus/setting generalization and response generalization; it is also called “generalized outcome.” Scored as “yes” (generalization reported), “no” (generalization not achieved), or “no information” (no generalization information reported). |

| Setting | The research setting is the place(s) where the research took place. Scored as “yes” (research occurred in the natural setting; e.g., living room of group home) or “no” (research occurred in analogue setting; e.g., observation room). |

| Funding source | Research funding covers any funding of scientific research (e.g., grants, scholarships, donations). Scored as “yes” (funding source sustainable; e.g., funds from local taxpayer), “no” (funding source not sustainable; e.g., National Science Foundation grant), or “no information” (no information reported about source of funding). |

| Maintenance | Maintenance is the extent to which the learner continues to perform the target behavior after a portion or all of the intervention has been terminated (i.e., response maintenance); it is a dependent variable or characteristic of behavior. Scored as “yes” (maintenance reported), “no” (maintenance not achieved), or “no information” (no maintenance information reported). |

| Empowerment | Empowerment refers to acting volitionally, based on one’s own mind or will, without external compulsion. For example, having a variety of available options and being free from coercion when choosing between options. Scored as “yes” (skills to increase empowerment reported), “no” (skills reported did not improve empowerment), or “no information” (no information regarding whether researchers taught empowerment reported). |

Note. All “yes” responses are scored as 1; all “no” and “no information” responses are scored as 0.
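The scoring rule in the note to Table 1 can be illustrated with a brief sketch. The sketch assumes that each indicator is coded as one of the three text responses listed in the table; indicators with other response options (e.g., dependent variables) are not covered. The function names and the example codes are hypothetical and are not taken from the study's data sheets.

```python
# Mapping taken from the note to Table 1: "yes" scores 1; "no" and
# "no information" both score 0.
SCORE_MAP = {"yes": 1, "no": 0, "no information": 0}


def score_response(response: str) -> int:
    """Convert one coded response to its numeric score (hypothetical helper)."""
    key = response.strip().lower()
    if key not in SCORE_MAP:
        raise ValueError(f"Unrecognized response: {response!r}")
    return SCORE_MAP[key]


def proportion_reported(responses: list[str]) -> float:
    """Share of experiments scored 1 on a single indicator."""
    if not responses:
        raise ValueError("No responses to summarize")
    return sum(score_response(r) for r in responses) / len(responses)


# Made-up consent codes for four experiments, for illustration only.
consent_codes = ["yes", "no information", "no", "yes"]
consent_proportion = proportion_reported(consent_codes)
```

Because “no” and “no information” both score 0, a summary built this way reflects how often an indicator was affirmatively reported; it does not distinguish a practice that was absent from one that was not described.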

Article Selection

A purposeful sample of articles from the Journal of Applied Behavior Analysis was selected from 10-year intervals spanning 50 years of publication: 1968, 1978, 1988, 1998, 2008, and 2018. Each publication year included one volume and four issues. The first four experimental articles of each issue were selected for each 10-year interval (see Table 2). Nonexperimental articles (e.g., conceptual articles, reviews, commentaries) were excluded from the analysis, and the next experimental article in that issue was selected instead.
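The selection rule described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' procedure: the `Article` record, its field names, and the toy issue are hypothetical, and the sketch assumes that articles are listed in their published order within an issue.

```python
from dataclasses import dataclass

SAMPLE_YEARS = [1968, 1978, 1988, 1998, 2008, 2018]
ISSUES_PER_YEAR = 4
ARTICLES_PER_ISSUE = 4


@dataclass
class Article:
    first_author: str      # hypothetical field names
    is_experimental: bool


def select_from_issue(articles_in_order, n=ARTICLES_PER_ISSUE):
    """Return the first n experimental articles of an issue.

    Nonexperimental articles (e.g., reviews, commentaries) are skipped,
    so the next experimental article takes their place.
    """
    selected = []
    for article in articles_in_order:
        if article.is_experimental:
            selected.append(article)
        if len(selected) == n:
            break
    return selected


# Toy issue for illustration only; "B" stands in for a commentary.
toy_issue = [
    Article("A", True), Article("B", False), Article("C", True),
    Article("D", True), Article("E", True), Article("F", True),
]
picked = [a.first_author for a in select_from_issue(toy_issue)]

# Six sample years, four issues per year, four articles per issue.
expected_sample_size = len(SAMPLE_YEARS) * ISSUES_PER_YEAR * ARTICLES_PER_ISSUE
```

Under these assumptions the rule yields 96 articles (6 × 4 × 4), which is consistent with the 12 articles scored for interrater agreement being described as 13% of the total.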

Table 2.

Journal of Applied Behavior Analysis Article Sample

| Year | 1968 | 1978 | 1988 | 1998 | 2008 | 2018 |
|---|---|---|---|---|---|---|
| Editor | Montrose M. Wolf | K. Daniel O'Leary | Jon S. Bailey | David P. Wacker | Cathleen C. Piazza | Gregory P. Hanley |
| Spring | Hall et al. | Epstein & Masek | Fitterling et al. | Stromer et al. | Grow et al.* | Carlile et al. |
| | Ayllon & Azrin | Schnelle et al. | Kohr et al. | Lane & Critchfield | Layer et al. | Griffith et al. |
| | Risley | Kantorowitz | Green et al. | Cuvo et al. | Reed & Martens* | Geiger et al. |
| | Thomas et al.* | Carnine & Fink | Guevremont et al. | Ervin et al. | Petursdottir et al. | Frampton & Shillingsburg |
| Summer | Azrin et al. | Sturgis et al. | Mace et al.* | Piazza et al. | Glover et al. | Sump et al. |
| | Hart & Risley* | Favell et al. | Lamm & Greer | Krantz & McClannahan | Penrod et al. | Carroll et al. |
| | Hopkins | Alevizos et al. | Schuster et al.* | Dixon et al. | Francisco et al. | Ghaemmaghami et al. |
| | Leitenberg et al. | Hollandsworth et al. | Wagner & Winett | Hagopian et al. | Trosclair-Lasserre et al. | Dass et al. |
| Fall | Azrin & Powell | Yeaton & Bailey | Seekins et al.* | Carr et al. | Volkert et al.* | Toper-Korkmaz et al. |
| | Birnbrauer | Neef et al. | Van Houten | Wood et al. | Roscoe et al.* | Schnell et al. |
| | Phillips* | Cuvo et al. | Poche et al. | Fisher et al. | Sigurdsson & Austin | Scott et al. |
| | Peterson | Parsonson & Baer | Rogers et al. | Drasgow et al. | Taylor & Hoch | DeQuinzio |
| Winter | Risley & Hart | Rose | Wacker et al. | Fisher et al. | Chivers et al. | Becraft et al. |
| | Azrin et al. | Ortega | Baer et al. | Vollmer et al. | Donlin et al. | Fahmie et al. |
| | Guess et al. | Shreibman | Welch & Holborn | Fisher et al. | Ledgerwood et al. | Russell et al. |
| | Kale et al.* | Goldstein | Wacker et al.* | Schepis et al. | Dunn et al. | Ming et al. |

Note: *Scored for Interrater Agreement

Scoring

Two independent scorers were trained to competency on the observation code and data sheet used for scoring. Each experiment from each article was read in its entirety; then it was read again and scored for content. If an experimental article included three experiments, each experiment was scored independently. Experiments that did not include a direct manipulation of an independent variable and measure of a dependent variable were not scored (e.g., preference assessments). The total number of scored articles per sample year was also tallied and recorded.

Interrater Agreement

To assess interrater agreement, the sample years 1968, 1988, and 2008 were selected. Each article in the year was assigned a number (1–12) to facilitate the random selection of articles for interrater agreement. A random number generator was used to select the 12 articles (13% of total articles) scored for interrater agreement. Interrater agreement was calculated for each dependent measure. Two raters scored each article, and the mean agreement score was obtained for each indicator category. Overall agreement was computed as the mean agreement across all indicators. Agreement was scored early in the investigation process to identify ambiguous operational definitions. Subsequently, operational definitions for scoring were revised based on disagreements. Scorers were provided the opportunity to write questions and notes on their data sheets, which informed adjustments to operational definitions. This process was repeated until optimal interrater agreement was obtained (Northup et al., 1993). Overall average agreement across all indicator categories was 98.26% (range 92%–100%). The lowest agreement scores by category were participant information (92%), intervention implementation (92%), and social validity (92%). The remaining indicator categories yielded 100% agreement scores. After interrater agreement on the randomly selected sample was obtained, the remaining experiments were read and scored by the first author according to the observation code and scoring protocols.
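The agreement calculation can be illustrated with a short Python sketch. The text does not state the exact agreement formula, so the sketch assumes point-by-point agreement (the number of items on which both raters gave the same 0/1 score, divided by the number of items, times 100). The function names, category names, and scores are hypothetical and are not the study's data.

```python
from statistics import mean


def percent_agreement(rater_a, rater_b):
    """Point-by-point agreement (assumed formula): matching items / all items * 100."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("score lists must be non-empty and of equal length")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return 100.0 * matches / len(rater_a)


def agreement_by_category(scores_a, scores_b):
    """Percent agreement for each indicator category."""
    return {
        category: percent_agreement(scores_a[category], scores_b[category])
        for category in scores_a
    }


def overall_agreement(per_category):
    """Overall agreement as the mean of the per-category agreement scores."""
    return mean(list(per_category.values()))


# Hypothetical 0/1 scores ("yes" = 1; "no" or "no information" = 0).
rater_1 = {"consent": [1, 0, 0, 1], "social_validity": [0, 0, 1, 0]}
rater_2 = {"consent": [1, 0, 0, 1], "social_validity": [0, 1, 1, 0]}

per_category = agreement_by_category(rater_1, rater_2)
overall = overall_agreement(per_category)
```

In this hypothetical case the raters agree on all four consent items and on three of four social validity items, so the category scores are 100% and 75% and the overall mean is 87.5%.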

Results and Discussion

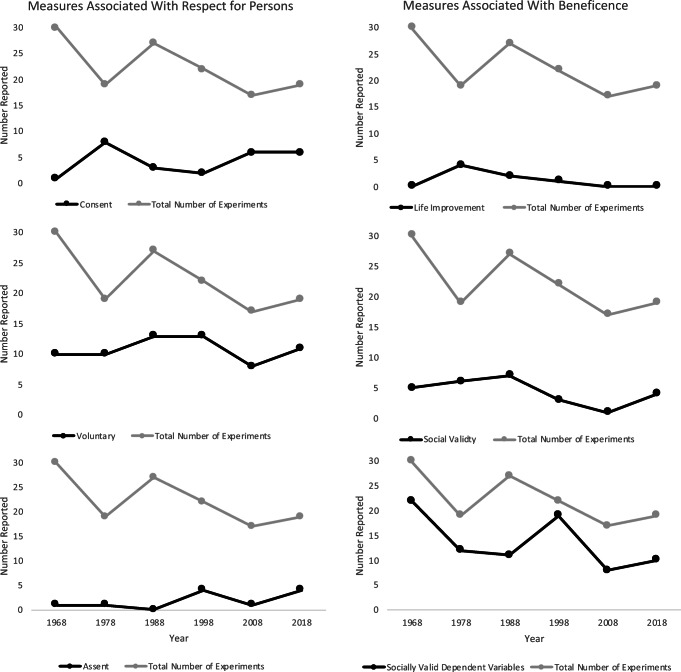

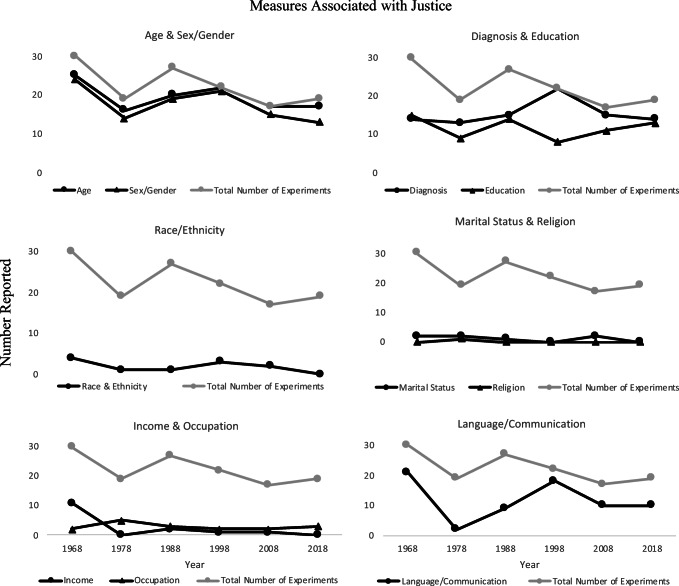

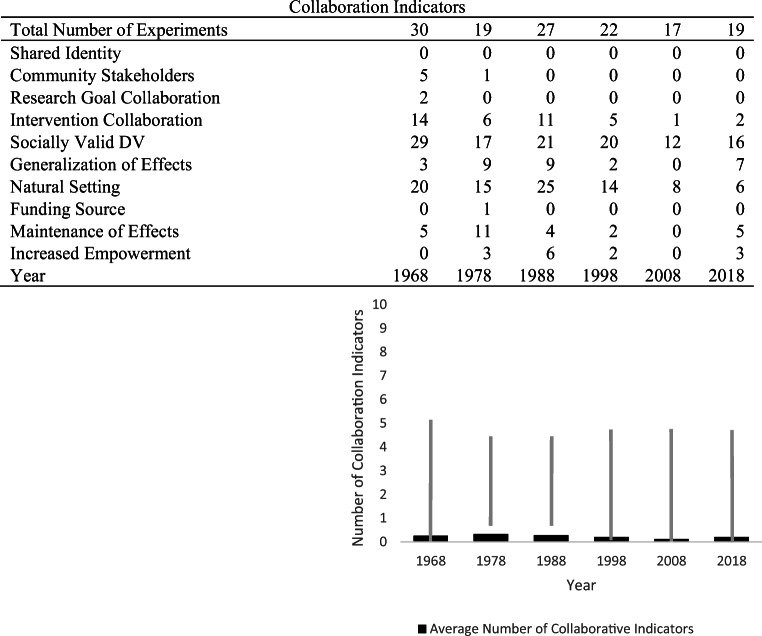

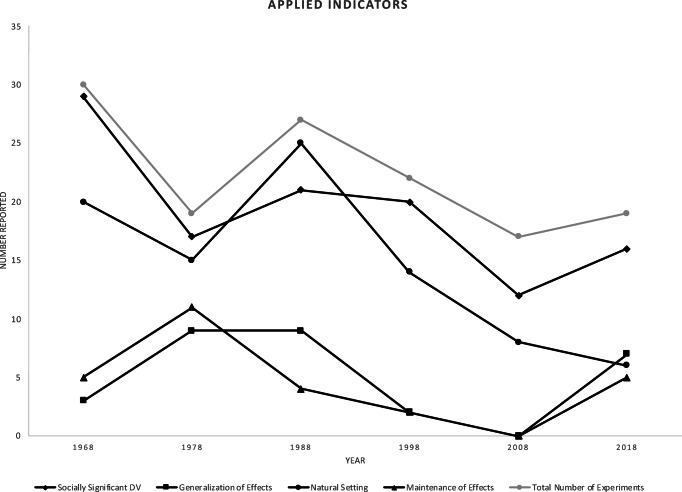

Figures 1, 2, 3, and 4 provide an analysis of trends for each of the questions posed in this study. Fig. 1 presents the data over time with regard to indicators of respect and beneficence, Fig. 2 addresses indicators of justice, Fig. 3 depicts indicators of collaboration, and Fig. 4 summarizes some of the most salient applied categories.

Fig. 1.

Experiments Reported Across 50 Years of JABA per Belmont Category. Note. Total number of experiments and number of experiments reported across 50 years of Journal of Applied Behavior Analysis (JABA) by two of the Belmont principles: respect for persons (left panels) and beneficence (right panels). The total number of measures and number of experiments by respect for persons categories are shown in the graphs for consent (upper left panel), voluntary (middle left panel), and assent (lower left panel). The total number of measures and number of experiments by beneficence categories are shown in the graphs for life improvement (upper right panel), social validity (middle right panel), and socially valid dependent variables (lower right panel)

Fig. 2.

Experiments Reported Across 50 Years of JABA per Justice Category. Note. Total number of experiments and number of experiments reported across 50 years of the Journal of Applied Behavior Analysis (JABA) by justice categories: age and sex/gender (upper left panel), race/ethnicity (middle left panel), income and occupation (lower left panel), diagnosis and education (upper right panel), marital status and religion (middle right panel), and language/communication (lower right panel).

Fig. 3.

Number and Range of Collaboration Indicators. Note. Total number of collaboration indicators (top panel) and average and range of number of collaboration indicators (bottom panel) reported across 50 years of the Journal of Applied Behavior Analysis.

Fig. 4.

Experiments Reported Across 50 Years of JABA by Applied Indicator. Note. Total number of experiments and number of experiments reported across 50 years of the Journal of Applied Behavior Analysis (JABA) by four applied indicators: socially valid dependent variables (DVs), generalization of research effects, research conducted in the natural setting, and maintenance of research effects.

The results indicate, in most cases, absences in information reported and data trends in unfavorable directions that are reflective of colonial, rather than collaborative, research practices. These data suggest that protections are either limited or underreported. Such patterns in the data suggest that we are contributing to a structure that is oppressive, commodified, and restrictive of the voices and participation of large segments of society. On the surface, it appears participants in our research have progressively become a means to an end.

A summary of the data reveals several salient themes. First, there is very limited reporting of the informed consent process. The total measures associated with consent reported were one in 1968, eight in 1978, three in 1988, two in 1998, six in 2008, and six in 2018 (see Fig. 1). Second, there is rarely an indication that the problems addressed were problems initiated or voiced by the community. Often, they are problems voiced by institutions or researchers. The total number of experiments that reported no information about voluntariness was twenty in 1968, nine in 1978, fourteen in 1988, nine in 1998, nine in 2008, and eight in 2018 (see Fig. 1). Third, there is little demographic information reported. For example, the total measures associated with race were four in 1968, one in 1978, one in 1988, two in 1998, two in 2008, and zero in 2018. The total measures associated with ethnicity were zero in 1968, zero in 1978, zero in 1988, one in 1998, zero in 2008, and zero in 2018 (see Fig. 2). Fourth, there is little indication that the dependent variables selected were changed in a manner that impacted the lives of the participants or that the outcomes resulted in sustainable improvements in participants’ lives outside of the research context. The total measures associated with life improvement reported were zero in 1968, four in 1978, two in 1988, one in 1998, zero in 2008, and zero in 2018 (see Fig. 1).

Perhaps the most troubling issue these data suggest is that it is difficult to understand who has elected to participate in our research and under what conditions. It is also not clear what communities we serve and whether we include the voices of the people in those communities. That is, until this information is systematically required, we have no way of understanding whether we have an overreliance on participants from certain populations and experimental questions (e.g., behavior problems with marginalized communities), and exclusion of other populations for certain change procedures (e.g., acquisition of skills for more privileged communities).

To facilitate discussion of these data, we offer several topics for consideration. We want to emphasize that this is one step toward examining our applied research paradigm. This is a deliberate move toward building a participatory science that includes all societal concerns through the empowerment of persons who experience societal marginalization (Benjamin, 2013). Examination is also a central point in disarming imbalanced power structures or kyriarchies (Fiorenza, 1992) and in eliminating racism, sexism, ableism, nationalism, and so on (Morgan, 1996). The rationale for such examination is that we must be constantly acting, examining, reflecting, and continuing in the struggle to ignite meaningful systemic changes (Benjamin, 2013; Roy, 2020). One group cannot dominate the process, as appears to be the current state of affairs, or the imbalances and resulting countercontrol and oppressive social systems are sustained (Holland, 1978). Practices that maintain coloniality are foundational to modern society (Quijano, 2000), and they are part of the science of applied behavior analysis. Here, we confront three features: (a) the commodification of behavior data (Benjamin, 2013), (b) the cultivation or “taking” of behavior data (Malott, 2002), and (c) the establishment and perpetuation of colonial relationships (Fawcett, 1991).

Commodification

A commodity is a good that can be exchanged within a particular market (Merriam-Webster, n.d.-a). In the context of applied behavior-analytic research, behavior data are the commodities. The commodification of behavior data is characterized by the exchange of behavior data for conditioned reinforcers (e.g., publications, disciplinary stature). Malott (2002) cautioned researchers about reinforcers that are more likely to benefit the student researcher, faculty member, or the institutions in which they operate than the participants themselves. The reinforcers include recognition, publications, citations, grant money, appointments to prestigious educational institutions, awards, fame, and elevated social status (Hull, 1978).

Taking Data

The findings and outcomes of research are valuable. Taking data is the process by which applied behavior-analytic researchers measure, count, and analyze behavior data in the context of the experiment (Cooper et al., 2019). With respect to “taking data,” the immediate concern is related to the word “taking.” This word shifts the measurement of behavior from a numerical form for analysis to an object that is extracted from the person and traded by the taker, to the taker’s benefit.

Behavior data are a visual representation of a particular part of a person’s state of being. If the researcher controls the data, the autonomy of the participant is threatened because the researcher is then in a position of power. In addition, when data are displayed, reprinted, and publicized, what rights does the person have with respect to the visual representation of their behavior? Are these the aspects of importance to that person and for that person? With respect to ownership and personal liberty, to whom do the data belong once the data have been transferred from the acts of the person to a permanent product (e.g., graphs, publications)? Preventing the participant from coming into contact with their behavior data robs them of the opportunity to make informed, personal decisions (Hilts, 1974) about their life in the context of what they value and the urgencies of their conditions.

Colonial Relationships: Systems for Taking Commodities

Colonial relationships are established at the outset of the research endeavor and have the potential to exploit participants to suit the agenda of the researcher. In other words, colonial relationships are established and maintained through coercive contingencies. These contingencies subjugate human participants due to conditions of deprivation or pain and rely on power and control wielded by persons of greater authority such as the researcher. As a result, the researcher–subject relationship is maintained through power imbalances that favor the agenda of the researcher over the needs of the participant (Chavis et al., 1983; Fawcett, 1991).

For example, in what is often described as a seminal study in our field, and one of the first publications in which operant conditioning was applied with humans, Operant Conditioning of a Vegetative Human Organism, Fuller (1949) described experimental operant conditioning sessions. In these sessions, a “sugar-milk solution” was delivered as a reinforcer to shape arm-raising responses. Sessions were conducted following a 15-hr food deprivation period. That is, the young man was deprived of all sustenance for 15-hr periods to increase the value of the solution used as a reinforcer. There is no indication that the conditions of his life were improved in any way. In fact, he may have suffered more. This experiment demonstrated, contrary to the belief at the time, that through operant conditioning a person admitted to an institution for the feeble-minded [sic] could learn. Fuller concluded, “Perhaps by beginning at the bottom of the human scale the transfer from rat to man can be effected” (p. 590). This understanding advanced knowledge, and the world profited from this unnamed person, much like Ms. Henrietta Lacks.

One motivation for stakeholders and participants to volunteer for research is the potential for relief from suffering (i.e., the removal or lessening of aversive stimuli or conditions). Negative reinforcement contingencies may set the occasion for seeking help. Termination or avoidance of aversive conditions may or may not lead to an improved quality of life. Applied behavior-analytic researchers, however, are presumably operating under different contingencies—that is, they are typically seeking reinforcers or avoiding aversive events related to the pursuit of knowledge and position advancement. Those differing contingencies threaten equality and collaboration. To encourage collaboration and equalization of relationships, Fawcett (1991) offered a broader set of values rooted in CBPR practices with an overall goal to improve participant and community well-being, counteract colonial research practices, and address more complex social problems that advance the meaningfulness and impact of applied research in behavior analysis.

Are Meaningful and Collaborative Dependent Variables Currently a Critical Feature of an Applied Science of Behavior?

In 1978, the Journal of Applied Behavior Analysis included socially valid dependent variables such as teaching individuals with intellectual disabilities vocational skills (Cuvo et al., 1978) and how to use public transportation (Neef et al., 1978). Later, between 2008 and 2018, a sharp decrease in socially valid dependent variables is seen. The use of “proxy” (Fawcett, 1991) dependent measures (see Fig. 4) appears to increase over time. These include responses such as button pressing, inserting poker chips into a cylinder, or tacting arbitrary stimuli. The purpose of such stimuli and responses is to test procedures and to eliminate “noise” in the experimental process, and they are intentionally designed to have no relationship to the participant’s life. This, however, leaves the participants with little demonstrated benefit from their participation in applied research.

This shift may be attributed to several factors, such as trends in funding, calls for increased translational research (Critchfield & Reed, 2017), changes in training emphasis in university programs (e.g., rapid thesis production), or increased emphasis on internal validity at the expense of social validity. Regardless, beneficence is a basic protection and is the aim of applied research, for both the participant and for the generalized knowledge produced. The aims and protections of translational and basic research are different. Our core concerns are centered specifically on applied research practices as displayed by our disciplinary flagship applied journal.

Overall, the trends are moving in an unfavorable direction (see Fig. 1): There is less reporting of social validity, less discussion of life improvement/beneficence, and an increase in “proxy,” or arbitrary, dependent variables. With respect to social validity reporting, it is important to note that these findings support other reviews in our field that have revealed that social validity has historically been underreported (e.g., Ferguson et al., 2019; Kennedy, 1992; Snodgrass et al., 2018). Beneficence is the aim of applied research, for both the participant and for the production of generalized knowledge, and should be both cherished and protected.

Just to be clear, we in no way wish to diminish the conceptual and procedural advances that have occurred in our field. Fuller (1949) allowed us to imagine that a person with severe disabilities could learn and progress. Studies that use “proxy,” or arbitrary, dependent variables allow us to understand basic mechanisms involved in learning. Our field has benefited, and we have developed progressively deeper understandings of how behavior works and under what conditions change is most likely to occur. At the same time, given the current state of race and power in the United States, while acknowledging our progress and the amount that we have learned, we propose that there is an urgency in assessing the nature of our dependent measures and learning to increase collaboration, especially with people who are marginalized. We can develop methods, as anthropology has striven to do, so that the degree to which participants and stakeholders are involved in applied research is strengthened. This would include collaboration to develop goals and procedures and monitor satisfaction with the outcomes, and to develop measures that allow us to understand what meaning and benefit the dependent variables have to participants’ lives.

Are Our Goals Paternalistic “Buy-In” or Collaboration and Empowerment?

There was little evidence of informed consent, let alone collaboration (see Fig. 3). The current sample suggests that few applied behavior-analytic researchers report conditions for obtaining consent, and the trend suggests that this is becoming even less common. These data could be interpreted in several ways. First, it is possible that researchers are not obtaining consent or that participants are not giving assent. This may indicate that consent is implied because of the context in which the research is conducted (e.g., in residential treatment facilities or state hospitals). Alternatively, editors and reviewers may ask authors to eliminate, or may not ask them to include, consent information given past concerns regarding the cost associated with printing paper journals. Behind the scenes, there have been recent efforts that require authors to confirm the research was approved by an institutional review board when submitting articles for publication. But this information is not included in the articles. Regardless of whether authors or editors may be accountable for this grave oversight, the virtual absence of this information in our studies indicates that our procedures for obtaining consent and assent are not peer reviewed. One may wonder why the procedures for adhering to such an important standard, respect for participants, are not valuable enough to undergo peer review along with our experimental procedures.

For the most part, all measures associated with respect for persons (consent, voluntariness, and assent) are low and remain low over time (see Fig. 1). In other words, either researchers are not reporting the conditions of consent, or participants are not giving consent, are not free to assent, or are not voluntarily participating. This is a most basic protection, and its absence in reporting is troubling. It is implicitly suggesting that we know what is best (paternalism) for participants and that our goal is “buy-in” to our (hegemonic) practices.

At a minimum, if we are engaging in these practices (e.g., informed consent and participatory collaboration regarding goals and desired outcomes) and not explicitly reporting them, we can start doing so. This is important for three reasons. First, it provides a model for others to follow. Second, it decreases the likelihood that new researchers will omit these processes. There is a strong possibility that there is some type of consent process, only that it is not reported. There is also a strong likelihood that the researchers are people who care deeply for the populations they serve—that is why most of us enter the field. The fact that we do not report and explain the type of relationship we have with participants leads to the third reason for inclusion. That is, if we describe and reflect on information about practices for obtaining consent, we can begin to improve and start to build a disciplinary knowledge base on how to do it better.

Who Is Applied Research Benefiting? The Scientist or Society?

It is hard to tell who the main beneficiary of applied behavior-analytic research is. Measures associated with justice are about the fairness, vulnerability, and equitable distribution of research burdens and benefits across social groups (National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research, 1979). These data indicate high, stable rates of reporting the age and sex of participants across all decades; however, participant gender was never reported (see Fig. 2). The lack of reporting of participant gender may be attributed to the years in which articles were published and the psychological theories related to gender beliefs and their relation to sexuality over time. For example, from 1968 through 1974, homosexuality was defined by the American Psychiatric Association as a sexual deviation disorder. It was not until the 1990s that gender, sex, and sexuality constructs shifted away from pathological characterizations (Drescher, 2015). Despite this shift, there is not an increase in reporting in 2008 and 2018, which is an example of the socially irresponsive nature of applied behavior-analytic research practices related to reporting participant gender. The lack of reporting may also be due to the notion that such variables do not affect processes or outcomes, but if that were the case, then no participant characteristics would be described. Furthermore, to ignore demographic information risks perpetuating racist and potentially discriminatory research practices (Benjamin, 2019) and hinders the process of evidence-based practice (Spencer et al., 2012) and practices of cultural humility (Tervalon & Murray-Garcia, 1998; Wright, 2019).

Reports of participant diagnosis were low and stable from 1968 through 1988 until reports peaked in 1998 (see Fig. 2). In 1998, every article in the sample reported the diagnosis of the participant. Since 1998, reports of participant diagnosis have decreased slightly. Reports of participant education level were also low and stable from 1968 through 1988 and then decreased to their lowest across all decades in 1998. Trends from 2008 through 2018 reflect increases in reporting participant education level and decreases in reporting participant diagnosis. This decreased emphasis on reporting participant diagnosis and increased emphasis on reporting education level are likely related to factors such as the shift of research focus in the mid-1990s toward functional analyses for problem behaviors, increased funding for providing services for populations with particular diagnoses, and calls for more translational research (Critchfield & Reed, 2017). Also reflected in this change are factors such as the editorial boards and authorship during these years, as there are large numbers of largely analogue-style research experiments. Variables related to author and editorial trends have also been discussed along other dimensions (see, e.g., Dunlap et al., 1998; Mathews, 1997).

Reports of language and communication are variable across decades (see Fig. 2). The highest reporting of language and communication was in 1968, followed by zero reports in 1978. Rates of reports increased steadily from 1978 to 1998 and have decreased slightly from 2008 to 2018. In 1968, reports of English speakers were most common (e.g., Hart & Risley, 1968), whereas in 1998, reports of expressive and receptive language skills were more common and were typically reported in conjunction with the diagnosis of the participant and the goals of the research project. This finding is problematic because central to the principle of justice are protections against the overselection of participants from homogeneous cultural and ethnic groups.

Let us take communication as an example. People communicate in many different ways and in many different languages. As a field, we do not know whether we are meeting the communication needs of a range of cultural and communication groups because these data are largely absent. Moreover, when we do report communication, we focus on particular change techniques and outcomes that are divorced from their meaning and improvements in participant and stakeholder social contexts, values, and goals. A common technique is to teach, for example, mands and to show that a particular prompting procedure produces a change in the frequency of manding under experimental conditions. This is a concern because this type of information does not let us know whether the person’s life was changed in a meaningful way or whether the participants are from culturally and ethnically diverse communities—or are we as a field only producing communication for one sector of society and in only a few modalities or topographies? In those cases in which we might be working in culturally diverse contexts, we have little indication of whether that form of communication fits and sustains within the cultural context. This may be related to such things as research trends in verbal behavior and an increased emphasis on funding and publishing fidelity research in autism. In any case, it does not tell us whether the prompting procedure produced a change that mattered to the participant. Altogether, the emphasis on reporting communication is more likely because it is directly related to the dependent variable under investigation. For example, Schepis et al. (1998) conducted an experiment with four children with autism with expressive and receptive communication delays to evaluate the effects a voice output communication aid and naturalistic teaching procedures would have on communication skills. 
This shift in reporting is most likely influenced by communication skills being selected as the dependent variable to change in an experiment, not by an effort to ensure protections against over- or underselection of persons with vulnerabilities (e.g., persons who speak English as a second language).

Reports of race were low and stable across all decades, with the greatest number of studies reporting participant race in 1968 and in 1998, and with a decreasing trend from 2008 to 2018 (see Fig. 2). Ethnicity was reported once: “Carl was a 14-year-old Hispanic boy” (Ervin et al., 1998, p. 68). The glaring absence of reported measures of race and ethnicity across all decades is of concern. The underreporting of race and ethnicity indicates a bias against identifying potential racial inequities (Benjamin, 2019) in applied behavior-analytic research. This absence of data prevents researchers from being able to assess and make necessary adjustments to potentially mitigate participant vulnerability. To think that it does not matter, again, perpetuates biased research practices and creates barriers to evidence-based practice and cultural humility.

Just as is seen in reports of race and ethnicity, the same low and stable rates of reporting marital status, religion, income, and occupation are shown from 1968 through 2018 (see Fig. 2). For example, marital status was reported for two participants as “widow” (Leitenberg et al., 1968) or “married” (Hollandsworth et al., 1978; Sturgis et al., 1978). Later trends indicate zero reports of marital status in 1998 and two reports of married participants in 2008 (Donlin et al., 2008; Ledgerwood et al., 2008), and marital status again was not reported in 2018. Religion is reported only once in the sample in a description of the participant’s position as a lay reader at his church (Hollandsworth et al., 1978). Reports of participant income level were highest in 1968 and decreased to very low and stable rates from 1978 through 2008. For example, Hart and Risley (1968), Phillips (1968), and Risley and Hart (1968) all reported participants with low income levels. The lack of reports of marital status, religion, income, and occupation is likely related to the same structural biases found in the underreporting of race and ethnicity and indicates indifference toward issues of vulnerability in the larger context of societal discriminations.

The lack of participant demographic information reported (e.g., measures associated with justice) in the Journal of Applied Behavior Analysis is alarming. The risk that persons with vulnerabilities are overselected for the ease and benefit of the researchers’ agenda is too high. There are two issues. One, these factors are not reported. Two, there is no disciplinary monitoring of the issue. Both issues present barriers to human rights–oriented data-based design and decision making dedicated to the protection and betterment of marginalized persons (Benjamin, 2019).

What Stereotypes and Biases Are Evident in Applied Research?

Scientific research publications are permanent products that demonstrate what the science values and how the science is practiced to researchers, participants, and members of society. The context in which we name and frame our participants matters as it is a demonstration of their position in society and creates a relational frame (Hayes et al., 2016; Matsuda et al., 2020). There is an obligation to do so with responsibility. Yet, this is not always the case. For example, one group of researchers at Johns Hopkins described two participants with severe behavior problems by the pseudonyms “Ike” and “Tina.” The authors describe Ike as

a 13-year-old boy who had been diagnosed with mild to moderate mental retardation, attention deficit hyperactivity disorder, oppositional defiant disorder, and obesity. He was referred primarily for the treatment of physical aggression, but he also displayed verbal aggression, disruption, and dangerous behaviors. He was ambulatory, could follow two- to three-step instructions (e.g., “Stand up, push your chair under the table, and stand by me”), and generally spoke in complete sentences.

Tina was described as

a 14-year-old girl who had been diagnosed with pervasive developmental disorder, severe mental retardation, and bipolar Type II disorder who had been referred for the treatment of physical aggression. Tina was ambulatory, could follow simple one-step instructions, and had an expressive vocabulary of approximately 50 words. (Fisher et al., 1998, pp. 341–342)

In 1993, a popular film depicted the horrific domestic violence between Tina and Ike Turner (Gibson, 1993). The exposé of trauma became part of the tabloid culture of the mid- to late 1990s, also the period in which the study was published. Naming and describing the participants in this way also became part of a racial characterization of Black children who had a limited ability to communicate, to access reinforcers, and to exert control over their environments.

This is an example of nuanced racial stereotypes. The race of the participants was reported, and they were subsequently disrespected through their stereotyped pseudonyms. This establishes and contributes to a set of stimulus conditions that are likely to occasion and perpetuate erroneous and degrading racial stereotypes. The problem is exacerbated when the outcomes of the study do not report improvements in quality of life within or beyond the experiment, measures of social validity, or evidence of teaching skills to improve agency or self-determination. These two children with vulnerabilities were mocked in an inappropriate tabloid-style joke. Other fields recognize that the naming of participants comes with responsibility and effects (Allen & Wiles, 2016; Lahman et al., 2015).

Moving Away From Coloniality and Toward a Participatory Science: Searching for Cusps

Human life is to be universally cherished and valued “without distinction of any kind, such as race, colour, sex, language, religion, political or other opinion, national or social origin, property, birth or other status” (UN General Assembly, 1948). Moreover, humanity is united and interdependent in dynamic ways, and the human species–environment relationship is characterized by individual and collective evolution (Karlberg & Farhoumand-Simms, 2006).

Applied behavior analysis was developed within a Western hegemonic structure. It is a discipline that operates within a larger structure that is racist, sexist, and ableist. Our work is to understand how that happens, to imagine how that can change, to produce changes, and to learn how the change process works. The present analysis suggests that we begin by including more people and that we collaborate with them as opposed to experiment on them.

Change requires commitment, resilience, and courage. Such work is effortful and exhausting. Thankfully, our science is responsive, progressive, and amenable to change (Leaf et al., 2016). Applied behavior analysis is a problem-solving science with an aim to address concerns of social significance (D. M. Baer et al., 1968, 1987). Social problems are systems problems that require analyses and interventions at the cultural level alongside the individual level (Skinner, 1961). In the context of applied behavior-analytic research, our humanitarian orientation suggests that systems of oppression must be identified and changed to improve the human condition. If not, the cultural practices that sustain suffering will persist (Holland, 1978).

We suggest that policies, strategies, and research practices within our field be interwoven with a commitment to social justice, including racial justice, for all. The science of applied behavior analysis seeks not only to understand the processes by which behavior change occurs at the individual operant level but also to improve the human condition (D. M. Baer et al., 1968) and to ultimately help save the world from destruction (Skinner, 1987). We offer recommendations to neutralize and diffuse power imbalances to ensure the applied spirit of the science is actualized and to respond to the urgency of our times. Each is intertwined and recursive and includes an examination of oppressive systems, perspective taking, and cultural humility.

Oppressive systems are perpetuated through coercive control (Sidman, 2001; Skinner, 1953). In order to investigate and change systems of contingencies that devalue and potentially harm research participants, we must first examine the system. For example, Holland (1978) discussed the importance of conducting contingency analyses to examine societal structures and systems that set the occasion for (and reinforce) the continuation of coercive and oppressive behaviors that maintain stratified societal systems:

Our contingencies are largely programmed in our social institutions and it is these systems of contingencies that determine our behavior. If the people of a society are unhappy, if they are poor, if they are deprived, then it is the contingencies embodied in institutions, in the economic system, and in the government which must change. It takes changed contingencies to change behavior. If social equality is a goal, then all the institutional forms that maintain stratification must be replaced with forms that assure equality of power and equality of status. If exploitation is to cease, institutional forms that assure cooperation must be developed. Thus, experimental analysis provides a supporting rationale for the reformer who sets out to change systems. (p. 170)

The question then becomes, how do we accomplish this? We offer three potential pathways intended to serve as behavioral cusps, in that they are offered with the hope of producing nonlinear and system-altering changes (Rosales-Ruiz & Baer, 1997).

Context and Understanding: Perspectives, Social Empathy, and Dialogue

Perspective taking is critical in the neutralization of power imbalances because it allows space for historically oppressed persons to share their narratives and lived experiences. This process requires the listener to actively engage with the speaker in order to experience the perspective of the other person while gaining a better understanding of their emotional responses to their experiences (Taylor et al., 2019).

Perspective taking is a skill that requires a sincere commitment to learning about and being shaped by the experiences of another person. Development of this skill requires thoughtful practice and engagement with a variety of persons. Establishing and improving a perspective-taking repertoire requires cultivating the courage to speak to people outside of your typical comfort circle. This means making a concerted effort to encounter people who have a different worldview and engaging in meaningful (rather than superficial) conversations. Last, engaging in empathetic conversation requires being present and listening to the individual needs and feelings of the person with whom you are conversing. This requires vulnerability and actively exchanging personal narratives through storytelling and sharing similar life experiences with different people (Krznaric, 2012). Ideally, throughout this process, personal biases and prejudices are replaced with the identification of shared reinforcers.

Cultural humility is an orientation that can prevent the perpetuation of coercive cycles that are likely to result from disruptions of systems of privilege. The spirit of the science of applied behavior analysis can be executed through acts of cultural humility and collaborative research practices that allow space for the voices, and the subsequent empowerment, of members of our communities who experience marginalization (Wright, 2019). Behavior analysts can demonstrate cultural humility by engaging in purposeful acts of servitude in which researchers humbly work alongside participants to formulate every aspect of the research endeavor. Cultural humility extends beyond cultural competence by incorporating a “lifelong commitment to self-evaluation and critique, to redressing the power imbalances in the physician-patient dynamic, and to developing mutually beneficial and non-paternalistic partnerships with communities on behalf of individuals and defined populations” (Tervalon & Murray-Garcia, 1998, p. 117).

Intentional Values and Alignment of Behavior With Values

Developing a common value system based on love, compassion, human rights, and advancement of the well-being of humanity requires a realignment of and collective agreement on values. Values are forged through collective learning, action, and reflection. This requires a rejection of the Western normative orientation to goal development in applied behavior-analytic research. By collectively developing and articulating our values, our research culture and monitoring will encourage a reflective and accountable process of aligning our behavior and values (Binder, 2016).

It may seem out of place to talk about love in the context of scientific practices. However, love is the animating force that propels us to move forward in all of our actions with compassion and care. Loving is a skill and a fluid set of contextual actions; we have to form a common foundation and an ongoing dialogue about what it means to be loving in the context of scientific practice. As one entry point, we consider the conceptualizations offered by Yuille et al. (2020) about notions of love as they relate to justice and the Black community, and a second notion of love offered by Maparyan (2012) as it relates to womanism and our spiritual interconnectedness with the universe. In both cases, affection, care, concern, and empathy are related to actions, and those actions are related to how we choose to live our personal lives, how we practice in our disciplines, and how we collectively shape our policies and laws. Our reinforcers matter. Love and its related emotions and events are strong reinforcers. We suggest, as have others, that they be an important part of our narratives and our discussions about how to move forward.

The evaluation of the incongruences between the stated mission of the science of applied behavior analysis and applied behavior-analytic research practices is critical for systems change. If an organization decides something is important to them (their values), then there are ways for that organization to come to a consensus or to design a plan to set the occasion for behaviors that reflect said values (Binder, 2016). This will allow us to envision and intentionally design participatory-based research environments that include and reflect the values that allow all humans justice and love (Benjamin, 2013).

Third Ways: The Radical Shifting of Paradigms

We struggled with writing this article in a way that would be palatable to our colleagues, while agitating the system enough to ignite functional (rather than topographical) changes. As authors, and as members and friends of the discipline, we acknowledge that we were and are impacted by its scientific practices. Our aim is to improve our world through the science by asking pertinent and difficult questions. As we move forward, we have to acknowledge the oppressive systems in our world and that these oppressive systems contribute to the inequities experienced by members of society with vulnerabilities who do not have a voice in the process of applied behavior science. For this reason, our comfort is not primary to this effort. Social justice is difficult labor, and we value discomfort because it is a symptom of change and progress. We will keep moving forward.

As members of a science that constantly demands “actionable steps,” we suggest that the most important step is not something that is easy to check off on a checklist. Instead, we should adopt a humble posture of learning. This requires continual, effortful engagement in understanding and learning about persons who have been historically marginalized, underrepresented, oppressed, and silenced. Checklists and “token, auxiliary attempts,” such as selecting one person of color to sit at the decision-making table, do not solve the problems of diversity; they amplify them. Moreover, such attempts signal to persons who have been historically marginalized that the system is neither inclusive nor participatory:

To celebrate diversity without engaging in the broader concerns of subordinate social groups is invariably in the best interests only of the “inclusive” institution involved, and maybe of those token “diversity entrepreneurs” who are willing to rubber-stamp the institution’s agenda without vigorous engagement and critique. (Benjamin, 2013, p. 52)

Solidarity with others who are also working in this cause to figure out solutions brings us to third ways.

Third ways are solutions we have not yet discovered. We find third ways by taking multiple perspectives of a problem and working together toward solutions despite potential conflicting values and actions. Third ways are ways of discovering values that diffuse dichotomous, imbalanced power differentials and evoke unity toward stated values. Finding third ways creates space for and empowers marginalized voices, which allows persons to harmonize together toward common goals (Barrera & Kramer, 2009).

It is time. Applied behavior analysis is driven by a steadfast orientation toward the enhancement of human life and the amelioration of suffering. Applied research “is not determined by the research procedures used but by the interest which society shows in the problems being studied” (D. M. Baer et al., 1968, p. 92). Applied behavior analysts are members of the social systems within which they conduct research. As applied behavior analysts often engage in research motivated by the improvement of the human condition at the individual level, they are simultaneously operating under the contingencies in place in the social systems within which they operate (Goldiamond, 1978, 1984). Applied behavior-analytic researchers are obligated to be responsive to both the research participant and society. This can only be accomplished through a steadfast orientation toward the amelioration of the types of human suffering often experienced by members of society who are marginalized (Fawcett, 1991). Social justice is not a special project, an auxiliary venture, or a specialty area of the science of applied behavior analysis. It is the applied spirit of the science.

Conclusion

To proceed in ignorance or without acknowledgment of marginalization, trauma, and injustice is unwise. As a discipline, let us step back and assess, even if we do it for no other reason than that the majority of the analyzers are White and that the majority of those suffering are marginalized. Research on disciplinary trends of protections and benefits matters. Who conducts the self-evaluation research, and why they conduct it, matters (Benjamin, 2013). These questions are part of the way forward. The modern sciences emerged from a colonial structure. Applied behavior analysis is no exception.

The extreme conditions of suffering in our world have provided an opening, a source of aversive stimulation, to change the way we do things, to increase opportunity, and to seek just access to reinforcing environments. Roy (2020) stated,

Historically, pandemics have forced humans to break with the past and imagine their world anew. This one is no different. It is a portal, a gateway between one world and the next. We can choose to walk through it, dragging the carcasses of our prejudice and hatred, our avarice, our data banks and dead ideas, our dead rivers and smoky skies behind us. Or we can walk through lightly, with little luggage, ready to imagine another world. And ready to fight for it.

Let us take this opening of interest, a special issue on racism, and use it as leverage to reflect on our own disciplinary values and practices and find third ways that eliminate colonial structures that perpetuate human suffering.

Author Note

We wish to extend our gratitude to Josef Harris and Melody Jones for their gracious assistance with data collection that contributed to the development of this manuscript.

This article is based on the doctoral dissertation completed by Malika Pritchett (2020).

Compliance with Ethical Standards

Conflict of interest

We have no conflicts of interest to disclose.

References

- Allen RE, Wiles JL. A rose by any other name: Participants choosing research pseudonyms. Qualitative Research in Psychology. 2016;13(2):149–165. doi: 10.1080/14780887.2015.1133746.