Abstract

Evidence suggests that cyberbullying among school-age children is related to problem behaviors and other adverse school performance constructs. As a result, numerous school-based programs have been developed and implemented to decrease cyberbullying perpetration and victimization. Given the extensive literature and variation in program effectiveness, we conducted a comprehensive systematic review and meta-analysis of programs to decrease cyberbullying perpetration and victimization. Our review included published and unpublished literature, utilized modern, transparent, and reproducible methods, and examined confirmatory and exploratory moderating factors. A total of 50 studies and 320 effect sizes spanning 45,371 participants met the review protocol criteria. Results indicated that programs significantly reduced cyberbullying perpetration (g = −0.18, SE = 0.05, 95% CI [−0.28, −0.09]) and victimization (g = −0.13, SE = 0.04, 95% CI [−0.21, −0.05]). Moderator analyses, however, yielded only a few statistically significant findings. We interpret these findings and provide implications for future cyberbullying prevention policy and practice.

Supplementary Information

The online version contains supplementary material available at 10.1007/s11121-021-01259-y.

Introduction

Prior to the pandemic caused by COVID-19, survey research indicated that 93% of teens aged 13–17 had Internet access and 91% reported accessing online content from a mobile device (Lenhart et al., 2015). It is not surprising then, given the access to information and communication technology, that in the same survey, 4 out of 5 teens reported using the Internet “almost constantly” or “several times a day.” Throughout the pandemic, and once the pandemic subsides, youth and teens will continue to use technology on a regular basis for school, extracurricular activities, and to engage with friends.

One of the unfortunate consequences of pervasive and prolonged technology use is the cyberbullying phenomenon. Cyberbullying is the act of inflicting (perpetration) or receiving (victimization) negative, damaging, or abusive language or harassment through information and communications technology (Pearce et al., 2011). A victim of cyberbullying may be intentionally excluded from information sharing, online interactions, or in-person interactions shared online; have their passwords or personal information stolen; or receive harassing or threatening messages in multiplayer gaming rooms (Bauman, 2015). The most common cyberbullying definition includes aspects of traditional bullying: repetition, power imbalance, and intention to harm (Hinduja & Patchin, 2008; Nocentini et al., 2010). Participant roles in traditional bullying and cyberbullying are similar; they include bullies, victims, and several types of bystanders ranging from passive to active (Salmivalli, 2014). The sometimes anonymous nature of cyberbullying enables perpetrators to feel more comfortable bullying someone they perceive to be as strong as or stronger than themselves (Vandebosch & Van Cleemput, 2008). Cyberbullying occurs on many information and communication technology platforms, including but not limited to text messages, email, instant messages, chatrooms, public or private social media platforms (e.g., Facebook, Twitter, Snapchat, Instagram, TikTok), and videos on YouTube or other platforms (Whittaker & Kowalski, 2015). Cyberbullying can also occur at any time of day in a wide variety of locations (Wong-Lo et al., 2011), which may make it more pervasive than traditional bullying, which is largely limited to in-person contexts. Not surprisingly, traditional bullying victimization is also highly correlated with cyberbullying victimization (Espelage et al., 2013a, 2013b).

Over the past decade, prevalence rates for cyberbullying involvement among youth between the ages of 10 and 17 years (as a victim, bully, or bully/victim) have been reported between 14 and 21% (Kowalski & Limber, 2007; Wang et al., 2009; Ybarra & Mitchell, 2004). The most recent National Center for Education Statistics (NCES) report from the School Crime Supplement to the National Crime Victimization Survey in 2017 estimated that 15% of students ages 12 through 18 were victims of cyberbullying during the 2016–2017 school year (Zhang et al., 2019). Meta-analytic findings revealed that approximately 15% of US students reported being the victim or perpetrator of cyberbullying in the past 30 days (Modecki et al., 2014). Prevalence rates vary widely in other countries, from a low of 5.0% in Australia to a high of 23.8% in Canada (Brochado et al., 2017). A recent small-scale survey further suggests cyberbullying perpetration and victimization may have increased following the pandemic, perhaps as a result of students’ increased technology use (Jain et al., 2020).

Evidence suggests that cyberbullying involvement is associated with several negative outcomes, including problem behavior (e.g., substance use, peer aggression) and poorer school performance, attachment, and satisfaction (Arslan et al., 2012; Kowalski & Limber, 2013; Marciano et al., 2020; Schneider et al., 2012). More specifically, victims of cyberbullying have been shown to experience anxiety more often and to demonstrate lower academic achievement than those not victimized (Kowalski & Limber, 2013; Marciano et al., 2020; Schneider et al., 2012). Several longitudinal studies observed a statistically significant relation between cyberbullying victimization and later depressive symptoms (Gámez-Guadix et al., 2013; Hemphill et al., 2015; Landoll et al., 2015). Relatedly, victims of cyberbullying have been found to be more likely than their non-involved counterparts to report suicidal ideation (Hinduja & Patchin, 2010). Similarly, perpetrators of cyberbullying have been shown to use drugs and alcohol more regularly, suffer from depression more often, report more suicidal ideation, have lower self-esteem, and demonstrate lower academic achievement compared with students who are not involved in cyberbullying (Hinduja & Patchin, 2010; Kowalski & Limber, 2013; Kowalski et al., 2014; Marciano et al., 2020). These associations between cyberbullying involvement, as a victim or a perpetrator, and negative outcomes have also been supported by meta-analyses (Guo, 2016; Kowalski et al., 2014; Marciano et al., 2020). In a recent meta-analytic review of 56 longitudinal studies, Marciano and colleagues (2020) investigated predictors and consequences of cyberbullying perpetration and victimization and found a positive relation between involvement in cyberbullying, as a perpetrator or as a victim, and internalizing and externalizing outcomes.

Researchers, practitioners, and policymakers have attempted to reduce cyberbullying through school-based interventions (Mishna et al., 2011). Cyberbullying is a global concern, and researchers in Europe (e.g., Serbia), Australia, Korea, Taiwan, and China have also studied cyberbullying prevention efforts in their countries (Aricak et al., 2008; Bhat, 2008; Cassidy et al., 2013; Del Rey et al., 2015; Huang & Chou, 2010; Ortega et al., 2012a, 2012b; Popović-Ćitić et al., 2011; Yang et al., 2013; Yilmaz, 2011; Zhou et al., 2013). Existing interventions either target cyberbullying specifically or address it more generally within bullying or school violence prevention programs. Given the emerging evidence, researchers have attempted to synthesize the available literature on the efficacy of anti-cyberbullying programs. These reviews differ from the current review in that they are out of date (Mishna et al., 2011), did not conduct moderator analyses or investigate programming components (Van Cleemput et al., 2014), or did not use modern meta-analytic techniques (Gaffney et al., 2019a). In addition, several researchers have conducted reviews related to cyberbullying, but these reviews synthesized correlational or prevalence effect sizes and therefore do not provide evidence of program effectiveness (Chen et al., 2017; Gardella et al., 2017; Guo, 2016; Marciano et al., 2020; Modecki et al., 2014; Zych et al., 2015).

Given the wide-ranging and pervasive problems caused by cyberbullying, the extensive resources devoted to it, and the lack of a comprehensive and up-to-date review of programs to prevent it, the purpose of this study was to conduct a systematic review and meta-analysis synthesizing the effects of school-based programs on cyberbullying perpetration or victimization outcomes. We conducted an extensive literature search; used reliable screening protocols, data extraction procedures, and effect size estimation; and applied modern meta-analytic modeling techniques such as random-effects modeling with robust variance estimation. We also performed confirmatory moderator analyses, in addition to exploratory multiple-predictor meta-regression analyses, to investigate how sample, measurement, and program characteristics moderate program effectiveness. A supplementary goal was to advance the field of meta-analysis; as such, we prepared and made our data and statistical code publicly available, which allows complete reproducibility of study findings. We conclude with recommendations for applying these results to school policy as well as to future meta-analytic endeavors.

Methods

Prior to conducting the meta-analysis, we created a review protocol that articulated the inclusion/exclusion criteria, search strategy, screening procedures, data extraction codebook, and pre-analysis plan. When writing the protocol, we adhered to well-established standards and reporting guidelines outlined in the Campbell Collaboration’s Methodological Expectations of Campbell Collaboration Intervention Reviews checklist. We pre-registered the protocol and pre-analysis plan on the Open Science Framework (OSF) [https://osf.io/dzn2p/]. To meet the highest possible reproducibility standards (Polanin et al., 2020), we also published the extracted and analytical datasets as well as the accompanying statistical R code to our OSF page. The published R code allows users to transform the raw dataset into the analytical dataset; reproduce all models conducted for the analysis; reproduce all results, tables, and figures presented in the main text; and reproduce all exploratory analyses and supplemental findings.

To efficiently warehouse all extracted information, we constructed a relational database using FileMaker Pro (Apple Inc., 2016). We stored data collected at each phase of the project (i.e., search, screening, data extraction, and effect size estimation) to organize the results and maintain a fully reproducible PRISMA flowchart. The result of this data management was a spreadsheet documenting whether and when we screened out each citation. We used the R package PRISMAstatement to create the reproducible flowchart (Wasey, 2019). We also used the R packages metafor (Viechtbauer, 2010), clubSandwich, and tidyverse (Wickham et al., 2019) to clean and pre-process data, run meta-analytic and meta-regression models, and create tables and plots.

Inclusion/Exclusion Criteria

Primary research studies were selected based on the following inclusion and exclusion criteria. Studies must have included only students in K-12 settings and must have tested the effects of an intervention on cyberbullying. We did not exclude studies based on the type of intervention tested; that is, we included studies of interventions specifically intended to reduce cyberbullying perpetration and victimization as well as any school-based social interventions, such as general violence prevention programs. We included both studies that randomly assigned participants to conditions and studies that did not. Included studies could have assigned participants, classrooms, schools, or school districts to conditions.

Although the interventions in included studies did not need to target cyberbullying directly, studies must have measured a cyberbullying perpetration or victimization outcome to be included in the review. We also coded bullying-related outcomes for analysis regardless of whether they were cyber-specific; that is, we coded traditional, in-person bullying perpetration or victimization outcome measures when available. Two related rationales guided the inclusion of traditional in-person bullying. First, recent meta-analytic research indicated that traditional in-person bullying perpetration and victimization are correlated with cyberbullying perpetration and victimization (Marciano et al., 2020); we therefore sought to test for differences in program impacts across related but conceptually different outcome domains. Second, our original proposal and review protocol called for the extraction of these outcomes.

Because the terms cyberbullying, internet bullying, and computer bullying first appeared in the literature in the mid-1990s, we included any study published in or after 1995. We included all types of study reports, published or unpublished, to capture every available study and to reduce the well-known upward bias in effects reported by studies published in peer-reviewed journals (Polanin et al., 2016). Studies must have been published in English, Spanish, or Turkish, languages that members of our team spoke fluently. We did not exclude studies based on country of origin (i.e., we included all studies, regardless of where a study’s sample was drawn).

Literature Search

We conducted several complementary search procedures to ensure a comprehensive review. We first performed an electronic bibliographic search of the following online databases, which include both published and unpublished studies: Academic Search Complete, Education Full Text, ERIC, National Criminal Justice Reference Service Abstracts, ProQuest Criminal Justice, ProQuest Dissertations and Theses, ProQuest Education Journals, ProQuest Social Science Journals, PsycINFO, PubMed (Medline), Social Sciences Abstracts, CrimDoc, Grey Literature Database (Canadian), Social Care Online (UK), and the Social Science Research Network eLibrary. The search string was tailored to the requirements of each database. We conducted two rounds of electronic database searching: the first on April 23, 2018, and a follow-up on Aug. 5, 2019, to capture additional material published in the intervening period. An example set of search terms and search string are included in the Supplemental Appendix.

We also conducted auxiliary searches to locate all available studies. We hand-searched Prevention Science, Child Development, and Aggressive Behavior from 1995 through 2018 because these journals had published a considerable number of studies on school violence, bullying, and cyberbullying. We also hand-searched the Journal of Interpersonal Violence and Computers in Human Behavior because these journals had yielded the largest number of screened-in abstracts. We conducted backward and forward reference harvesting of all included studies. We implemented several author query processes as well. If study authors evaluated a general violence or bullying prevention program, for example, but did not report a cyberbullying measure, we queried the primary study author and asked whether cyberbullying had been measured but not reported. In addition to these direct author queries, we identified general violence prevention studies that may have collected, but did not report, an eligible outcome measure. Polanin and colleagues (2020) articulated this process and the rationale for conducting it. We emailed 600 primary authors asking for cyberbullying outcome data; 75 authors responded. After reviewing authors’ responses, we added three studies that otherwise would not have been included, bringing the total to 50 studies.

Screening

The large number of citations identified in the search required the use of the best-practice abstract screening methods articulated by Polanin and colleagues (2019). We developed an abstract screening tool and screened the abstracts using Abstrackr (Wallace et al., 2012), a free, open-source, web-based tool. All review team members screened abstracts, including the principal and co-principal investigators, the research coordinator, graduate and undergraduate research assistants, and professional research assistants (10 individuals in total). We attempted to retrieve the full-text PDFs for all citations marked as eligible.

We conducted full-text screening of all retrieved PDFs using a full-text screening tool guided by the inclusion/exclusion criteria described above. As with abstract screening, screeners received extensive training led by the first author and completed pilot screening. To ensure accuracy, senior review team members validated all final screening decisions.

Data Extraction

A data extraction codebook detailed all information extracted from each study. We extracted study-level information (e.g., sample demographics and how individuals were assigned to groups), characteristics of the intervention and comparison conditions (e.g., who developed or implemented the intervention; implementation fidelity), construct-level information (e.g., scale, reliability, and timing of measurements), and summary data that could be used to estimate effect sizes (e.g., means and standard deviations for the intervention and comparison conditions). We also captured information about study design quality using the rating system in the What Works Clearinghouse (WWC) Standards and Procedures Handbooks, version 4.1 (What Works Clearinghouse, 2020). Each included study was eligible to receive one of three WWC ratings: meets WWC standards without reservations (highest), meets WWC standards with reservations (medium), or does not meet WWC standards (lowest). Finally, based on the extracted core intervention components, we grouped similar components into intervention categories and reported how many interventions used each category.

Data Handling and Effect Size Estimation

We exported each study’s extracted data from FileMaker into R, where we recoded and cleaned all analytical variables (see “01_clean_data.R” for details). We estimated all effect sizes using metafor (Viechtbauer, 2010). Most outcome data were continuous, so we estimated a standardized mean difference adjusted for small-sample bias, commonly referred to as Hedges’ g (Hedges, 1981). Whenever possible, we accounted for potential selection bias due to pretest differences between conditions using the difference-in-differences effect size estimation procedure outlined in the WWC version 4.1 Procedures Handbook (p. E-5; WWC, 2020). For dichotomous outcomes, we estimated the log odds ratio and then transformed it into Hedges’ g using the equations suggested by the WWC (p. E-7). When study authors assigned clusters but did not account for the clustering of students within higher-level groups such as classrooms or schools, we estimated the effective sample size, which shrinks the analytic sample size to approximate the precision a multilevel model would yield (Higgins et al., 2020).
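The conversions described above can be illustrated with a short sketch. This is a Python illustration, not the authors’ R code (they used metafor), and all function names are hypothetical. It assumes the WWC conventions as we understand them: the small-sample factor ω = 1 − 3/(4N − 9), a difference-in-differences effect equal to the posttest g minus the pretest g, a Cox index of ω·ln(OR)/1.65 for dichotomous outcomes, and an effective sample size equal to N divided by the design effect 1 + (m − 1)·ICC, where m is the average cluster size and the ICC must be supplied by the analyst. The variance formula is one common large-sample approximation, not necessarily the one used in the review.

```python
import math


def correction_factor(n_total):
    """Small-sample factor omega = 1 - 3 / (4N - 9), with N = n_t + n_c."""
    return 1.0 - 3.0 / (4.0 * n_total - 9.0)


def hedges_g(m_t, m_c, sd_t, sd_c, n_t, n_c):
    """Bias-corrected standardized mean difference (treatment minus comparison).

    Negative values mean the treatment group scored lower, e.g., less cyberbullying.
    """
    pooled_var = ((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / (n_t + n_c - 2)
    return correction_factor(n_t + n_c) * (m_t - m_c) / math.sqrt(pooled_var)


def hedges_g_variance(g, n_t, n_c):
    """One common large-sample approximation to the sampling variance of g."""
    return (n_t + n_c) / (n_t * n_c) + g ** 2 / (2.0 * (n_t + n_c))


def difference_in_differences_g(post, pre):
    """Pretest-adjusted effect: posttest g minus pretest g.

    `post` and `pre` are (m_t, m_c, sd_t, sd_c, n_t, n_c) tuples (hypothetical layout).
    """
    return hedges_g(*post) - hedges_g(*pre)


def cox_index(p_t, p_c, n_t, n_c):
    """Convert two proportions to a d-type effect: omega * ln(OR) / 1.65."""
    log_or = math.log((p_t * (1.0 - p_c)) / (p_c * (1.0 - p_t)))
    return correction_factor(n_t + n_c) * log_or / 1.65


def effective_sample_size(n, avg_cluster_size, icc):
    """Shrink n by the design effect 1 + (m - 1) * ICC for unmodeled clustering."""
    return n / (1.0 + (avg_cluster_size - 1.0) * icc)


# Usage with made-up numbers (not data from the review):
g = hedges_g(m_t=1.8, m_c=2.2, sd_t=1.0, sd_c=1.0, n_t=60, n_c=60)
print(round(g, 3), round(hedges_g_variance(g, 60, 60), 4))
print(round(effective_sample_size(n=500, avg_cluster_size=25, icc=0.05), 1))
```

Because g is computed as treatment minus comparison, a program that lowers cyberbullying scores produces a negative effect, matching the sign convention of the pooled estimates reported in the abstract.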

We handled missing data using the “infer, initiate, impute” method suggested by Pigott and Polanin (2020). We first sought to limit missingness by inferring data from the information provided. Participants’ average age is one example: some studies provided the exact average age, whereas others provided only the grades sampled. When the exact average was not available, we used the typical age at those grade levels to infer the study average. Failing this method, we next initiated contact with the primary study author, emailing the author directly to request the missing information. A very high proportion of study authors responded: of the 14 authors emailed, 12 (86%) provided at least some of the information requested. As a last step, when the infer and initiate stages failed, we multiply imputed the remaining missing information. Five potential moderators had missing values: percent of males (missing for 6% of studies), school setting (38%), school type (32%), socioeconomic status (36%), and outcome reliability (52%). We conducted multilevel multiple imputation, assuming a two-level model and a normal distribution (i.e., the “2l.glm.norm” method), using the mice R package (van Buuren, 2020). Our imputation model contained all variables with missingness along with 11 additional variables, including condition type, effect size type, and effect size (see Supplemental Material for the complete list of variables). We generated five completed datasets, each with a maximum of 30 iterations; visual inspection of the summary statistics at the iteration end points indicated sufficient convergence. We conducted sensitivity analyses using listwise deletion to ensure results were robust to the imputation methodology.
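The text does not state how estimates from the five imputed datasets were combined. A common choice is Rubin’s rules, sketched below in Python under that assumption (the function name is hypothetical): the pooled estimate is the mean across datasets, and the total variance adds the average within-imputation variance to the between-imputation variance inflated by (1 + 1/m).

```python
from statistics import mean, variance


def pool_rubin(estimates, variances):
    """Combine one estimate per imputed dataset using Rubin's rules.

    estimates: point estimates (e.g., a meta-regression coefficient), one per dataset.
    variances: squared standard errors of those estimates.
    Returns (pooled estimate, total variance).
    """
    m = len(estimates)
    if m < 2 or m != len(variances):
        raise ValueError("need at least two imputations with matching variances")
    q_bar = mean(estimates)   # pooled point estimate
    u_bar = mean(variances)   # average within-imputation variance
    b = variance(estimates)   # between-imputation variance (n - 1 denominator)
    total = u_bar + (1.0 + 1.0 / m) * b
    return q_bar, total


# Usage with five made-up coefficients, one per imputed dataset:
est, var = pool_rubin([-0.12, -0.10, -0.15, -0.11, -0.13], [0.002] * 5)
print(round(est, 3), round(var ** 0.5, 4))
```

When the imputed moderator barely changes across datasets, the between-imputation term is near zero and the pooled standard error collapses to the ordinary one. A large between-imputation term signals that conclusions depend on the imputed values, which is what the listwise-deletion sensitivity analysis probes.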

Meta-analysis Methods

First, we fit separate meta-analytic models to estimate the average effects of programs on cyberbullying perpetration, cyberbullying victimization, bullying perpetration, and bullying victimization. We used a multilevel random-effects model with robust variance estimation (Hedges et al., 2010) to produce a weighted average of the effect sizes. Effect sizes were weighted inversely according to their variances and covariances as implied by the multilevel model. We used this specification to account for non-independent sampling errors arising from the inclusion of multiple effect sizes from the same study (Moeyaert et al., 2017). We translated the overall average effects into the probability of positive impact (PPI), a relatively new metric defined by Mathur and VanderWeele (2020). The metric estimates the proportion of true effects beyond the null in the beneficial direction (here, below zero, because negative values of g indicate reductions); in other words, it approximates the probability that a randomly selected and implemented program would reduce cyberbullying perpetration or victimization.
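To make the weighting and the PPI concrete, the Python sketch below implements the simpler correlated-effects form of robust variance estimation described by Hedges et al. (2010), not the multilevel specification the review actually fit with metafor and clubSandwich. It assumes a between-study variance τ² supplied by the user and gives each study a total weight of 1/(v̄ⱼ + τ²), split evenly across its kⱼ effect sizes. It also uses the basic sandwich estimator without the small-sample (CR2) correction that is advisable when there are few studies. The PPI function uses a normal approximation, Φ(−μ/τ), for the share of true effects below zero. Mathur and VanderWeele (2020) also describe calibrated, nonparametric estimators, which this sketch does not implement. Function names are hypothetical.

```python
import math
from collections import defaultdict


def rve_correlated_effects(effects, tau2):
    """Weighted mean and robust SE for an intercept-only correlated-effects model.

    effects: iterable of (study_id, g, v) tuples, where v is the sampling variance.
    tau2: between-study variance, assumed known here (a simplifying assumption).
    """
    by_study = defaultdict(list)
    for study, g, v in effects:
        by_study[study].append((g, v))

    weighted = []  # (study_id, g, weight)
    for study, rows in by_study.items():
        k = len(rows)
        v_bar = sum(v for _, v in rows) / k
        w = 1.0 / (k * (v_bar + tau2))  # study weight split evenly across its k effects
        weighted.extend((study, g, w) for g, _ in rows)

    total_w = sum(w for _, _, w in weighted)
    mu = sum(w * g for _, g, w in weighted) / total_w

    # Sandwich variance: sum weighted residuals within each study, then square.
    score = defaultdict(float)
    for study, g, w in weighted:
        score[study] += w * (g - mu)
    robust_var = sum(s ** 2 for s in score.values()) / total_w ** 2
    return mu, math.sqrt(robust_var)


def prob_beneficial(mu, tau):
    """Share of true effects below zero if true effects ~ Normal(mu, tau^2)."""
    return 0.5 * (1.0 + math.erf(-mu / (tau * math.sqrt(2.0))))


# Usage with made-up effect sizes (study "A" contributes two dependent effects):
data = [("A", -0.30, 0.02), ("A", -0.20, 0.02), ("B", -0.05, 0.03), ("C", -0.15, 0.01)]
mu, se = rve_correlated_effects(data, tau2=0.01)
print(round(mu, 3), round(se, 3), round(prob_beneficial(mu, math.sqrt(0.01)), 2))
```

Splitting a study’s weight across its effect sizes keeps a study that reports many outcomes from dominating the average, and summing residuals within a study before squaring lets the robust variance absorb the unknown correlation among those outcomes.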

Given substantial effect size heterogeneity, we also conducted confirmatory and exploratory moderator analyses. Our analysis plan specified six substantive moderators of interest; for these, we fit six separate meta-regression models, performing ANOVA-like moderator analyses for the categorical variables and single-variable meta-regressions for the continuous variables. The six moderators were country of origin (USA vs. non-USA), whether the program targeted cyberbullying (yes vs. no), measurement timepoint (immediate posttest vs. follow-up), original effect size type (continuous vs. dichotomous), percent of males in the sample, and percent of students identifying as nonwhite in the sample. We conducted two types of exploratory models. The first set examined two moderators of substantive interest: (1) risk of bias, using the WWC’s rating system, and (2) presence or absence of each of the seven program categories. We opted to use ANOVA-like moderator analyses because of these variables’ substantive importance. The second set implemented meta-regression models with 26 variables for each of the four outcome bins. We grouped these exploratory moderators into four thematic categories and fit one meta-regression model per category, resulting in four sets of models. Any statistically significant moderator was retained and added to a final, combined model. Note that these meta-regression models may have low statistical power, so their results should be interpreted with caution.
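An ANOVA-like moderator test asks whether subgroup means differ by more than sampling error would allow. The Python sketch below computes the classic between-group Q statistic for a categorical moderator. It assumes independent effect sizes and a common τ² supplied by the user. The review’s own tests used robust variance estimation, so this illustrates the logic rather than reproducing those models. For a two-level moderator such as USA vs. non-USA, Q_between has one degree of freedom, and its chi-square p-value equals erfc(√(Q/2)). Function names are hypothetical.

```python
import math


def q_between(groups, tau2=0.0):
    """Between-subgroup heterogeneity statistic for a categorical moderator.

    groups: mapping from moderator level to a list of (g, v) pairs.
    Returns (Q_between, degrees of freedom).
    """
    summaries = []
    for rows in groups.values():
        weights = [1.0 / (v + tau2) for _, v in rows]
        w_sum = sum(weights)
        sub_mean = sum(w * g for w, (g, _) in zip(weights, rows)) / w_sum
        summaries.append((sub_mean, w_sum))
    total_w = sum(w for _, w in summaries)
    grand_mean = sum(m * w for m, w in summaries) / total_w
    q = sum(w * (m - grand_mean) ** 2 for m, w in summaries)
    return q, len(summaries) - 1


def chi2_sf_df1(q):
    """Upper-tail p-value of a chi-square statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(q / 2.0))


# Usage with made-up effect sizes for a binary moderator:
data = {
    "USA": [(-0.25, 0.02), (-0.10, 0.03)],
    "non-USA": [(-0.05, 0.02), (0.02, 0.04)],
}
q, df = q_between(data, tau2=0.01)
print(round(q, 3), df, round(chi2_sf_df1(q), 3))
```

The one-degree-of-freedom shortcut works because a chi-square variable with one degree of freedom is the square of a standard normal variable. Moderators with more than two levels would need a general chi-square survival function instead.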

To ensure the models’ results were robust to potential sources of bias, we also conducted publication bias and sensitivity analyses. We tested for effect size differences between published and unpublished studies in our meta-regression models. We implemented Duval and Tweedie’s (2000) trim and fill method both at the effect size level and at the study level, after aggregating to a single effect size per study. We also implemented Egger’s regression test with robust variance estimation, which accounts for effect size dependency, as suggested by Rodgers and Pustejovsky (2019). Additional sensitivity analyses assessed the robustness of our findings to multiple imputation and to potential risk of bias due to pretest differences between conditions in the included studies. To this end, we listwise deleted effect sizes from studies with missing data and re-estimated the confirmatory moderator models to compare against the original models with imputed data. We also included baseline equivalence testing as a meta-regression moderator to see whether findings differed depending on whether studies tested for pretest imbalance (and, if they did, whether they found pretest differences between conditions).
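Egger’s test asks whether smaller, less precise studies report systematically different effects. The Python sketch below is the classic version: it regresses the standardized effect g/SE on precision 1/SE by ordinary least squares and treats every effect size as independent. The review instead paired the test with robust variance estimation, following Rodgers and Pustejovsky (2019), to handle dependence, which this simplified sketch does not do. Trim and fill is not shown, and the function name is hypothetical.

```python
import math


def egger_test(effects, std_errors):
    """Classic Egger regression: regress g/SE on 1/SE by ordinary least squares.

    Returns (intercept, slope, standard error of the intercept). An intercept far
    from zero suggests small-study effects (funnel plot asymmetry).
    """
    n = len(effects)
    if n < 3 or n != len(std_errors):
        raise ValueError("need at least three effect sizes with matching SEs")
    x = [1.0 / se for se in std_errors]                 # precision
    y = [g / se for g, se in zip(effects, std_errors)]  # standardized effect
    x_bar, y_bar = sum(x) / n, sum(y) / n
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = s_xy / s_xx
    intercept = y_bar - slope * x_bar
    resid_ss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    se_intercept = math.sqrt(resid_ss / (n - 2) * (1.0 / n + x_bar ** 2 / s_xx))
    return intercept, slope, se_intercept


# Usage with made-up effect sizes and standard errors:
b0, b1, se_b0 = egger_test([-0.40, -0.25, -0.18, -0.10, -0.08], [0.30, 0.20, 0.15, 0.10, 0.08])
print(round(b0, 2), round(b1, 2), round(b0 / se_b0, 2))
```

In this parameterization the slope estimates the effect size of an infinitely precise study, and the intercept captures the extra effect attributable to imprecision. With dependent effect sizes, the intercept’s standard error from this ordinary least squares fit would be too small, which is why a robust-variance version is preferred.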

Results

Cyberbullying Prevention and Intervention Programming

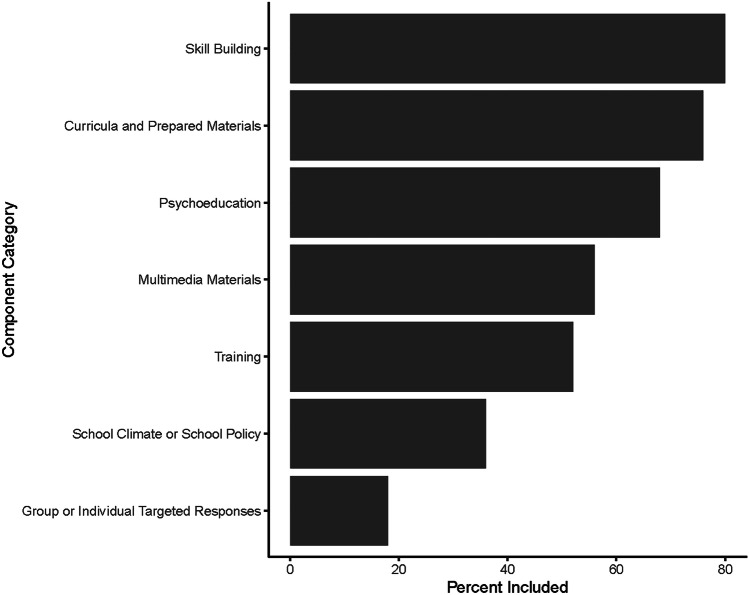

Programming to decrease cyberbullying perpetration and victimization takes various forms and includes numerous core components. To organize and synthesize the components of programs from studies included in our review, we extracted programming information from each study and grouped related components into seven categories (Supplemental Table S1). The categories do not represent mutually exclusive programming types. Instead, they represent groupings of similar programming components. An individual program could use multiple components and therefore be represented within multiple categories.

We defined the seven programming categories as follows: (1) skill-building, (2) curricula and prepared materials, (3) psychoeducation, (4) multimedia materials, (5) training, (6) school climate or school policy, and (7) group or individual targeted responses. Supplemental Table S1 delineates the components that constitute each category. A component was considered part of a program if it was referenced in the program description. We visualized the usage of each category by plotting its frequency (Fig. 1). Nearly 80% of the programs included some form of skill-building, and many also used curricula or other prepackaged materials (67%) or multimedia materials (65%). We describe programs within each component category below.

Fig. 1. Program component categories

Skill-Building

Skill-building broadly refers to teaching or training students to develop a competency that they can apply independently, in real time, when appropriate. Skill-building is especially relevant to cyberbullying prevention: students must learn how to respond safely and appropriately during or after situations that give rise to cyberbullying, especially because adults or other peers are likely not present during those interactions (Mishna et al., 2009).

Among skill-building programs, delivery modalities vary widely. Some programs, such as Skills for Life (Fekkes et al., 2016) and Relationships to Grow (Guarini et al., 2019), use tools such as role-play exercises, video and live modeling demonstrations, vignette discussion, and feedback embedded in a broader curriculum. NoTrap! is an example of a peer-led program that trains peer leaders in communication skills so that they can deliver awareness-raising messages to peers and model bullying conflict management and coping (Palladino et al., 2016).

Curricula and Prepared Materials

The curricula and prepared materials category broadly refers to uniform, prepared content that guides the delivery of the intervention by teachers, counselors, school staff, and sometimes students’ family members. Some programs also train students to deliver content. Two examples of programs in this category are WebQuest (Lee et al., 2013) and PREDEMA (Programa de Educación Emocional para Adolescentes, or Program of Emotional Education for Adolescents; Taylor et al., 2017). WebQuest is an online curriculum composed of eight 45-min sessions (Lee et al., 2013). It is delivered as a series of interactive “missions” about the dangers of cyberbullying that students solve together in small teams. The curriculum also gives students access to resources they can consult on their own as needed. PREDEMA is a curriculum that teaches social-emotional learning skills, that is, the constellation of competencies that foster self-regulation and awareness of one’s interactions with others (Taylor et al., 2017). The program seeks to improve social-emotional skills to enhance the quality of students’ interpersonal relationships.

Curricula identified in this review also included workbooks, homework, and other materials designed to help students engage with the content. The Cyberbullying Prevention Program is an 8-week curriculum that combines psychoeducation and social-emotional skill building. Students complete weekly homework assignments that focus on self-reflection in the context of the material covered in class, and parents receive reference materials describing the curriculum content and how to support their children. The ConRed program is similar but also incorporates family and staff training (Del Rey et al., 2016). All training sessions (i.e., student, teacher, and parent) use in-class quizzes on the material in addition to debates and discussions.

Psychoeducation

The term psychoeducation emerged from the mental health field and is defined as a form of intervention focused on developing awareness, understanding, and effective coping strategies to manage an illness (Lukens & McFarlane, 2004). Psychoeducational programs are centered on the premise that participants’ increases in knowledge about a condition will lead them to improved condition-related outcomes (Lukens & McFarlane, 2004). In cyberbullying prevention, programs with a psychoeducational component are aimed at raising awareness of cyberbullying and increasing participants’ knowledge of cyber-safety and strategies to avoid or combat cyberbullying.

The ViSC (Viennese Social Competence) program takes a psychoeducational approach by implementing a “train the trainer cascade,” in which scientists train “multipliers” and the multipliers train teachers how to recognize bullying cases, how to tackle acute cases, and how to implement prevention strategies in the school (Gradinger et al., 2015). Educators are also trained to review school policies about cyberbullying and to provide parents and students with cyberbullying-related training. Students then receive training in social competence skills to create a positive school environment in which bullying and cyberbullying are less likely to occur (Gradinger et al., 2015). The Cyber Friendly Schools program is also psychoeducational (Cross et al., 2016). Rather than eliminating the use of information and communication technologies at school, the program educates youth about the potential risks of being online and strategies to increase online safety (Cross et al., 2016). The program motivates students to reflect on their rights and responsibilities online and includes modules on the nature and prevalence of cyberbullying, safety in social networks, positive cyber-relations or “netiquette,” managing online privacy, and legal issues associated with reporting, among other topics (Cross et al., 2016).

Multimedia Materials

The creative use of media materials in cyberbullying prevention programs is designed to enhance student engagement and participation, which may in turn improve prevention outcomes. Several programs use video technology to deliver prevention curricula; video can deliver content and demonstrate skills on a large scale while keeping implementation costs low. One example of a program that uses video to deliver its curriculum is Second Step (Espelage et al., 2015a, 2015b). Second Step lessons are accompanied by a media-rich DVD that includes interviews with students and demonstrations of skills. The videos reinforce skill acquisition during program delivery and support other prevention strategies (Espelage et al., 2015a, 2015b). Ingram and colleagues (2019) used virtual reality technology to deliver interactive prevention content to middle school students. Virtual reality is designed to provide an immersive experience that reduces students’ sense of psychological distance from the displayed scenarios and thereby enhances the effectiveness of intervention messaging.

Finally, some cyberbullying programs use online or web-based instruction to deliver content and create interactive experiences for students that extend beyond the classroom. The Italian program Noncadiamointrappola (Let’s not fall into a trap; Menesini et al., 2012) is a web-based forum in which online educators post discussion threads, answer questions, and moderate conversations with students. Four online instructors take turns moderating the forum, each for a 2-week period, and the forum is supplemented by face-to-face instruction.

Training

Providing participants in any intervention program with the knowledge, skills, and practice they need to accomplish a specific goal is a widely accepted best practice (Nation et al., 2003). Several cyberbullying interventions include a training component, though they differ somewhat in the skills they target. The Tabby Improved Prevention and Intervention Program (TIPIP) includes both teacher and student training components (Sorrentino et al., 2018). The developers train teachers in four sessions on ways to recognize, prevent, and manage cyberbullying among their students, and they train students in four sessions on how to recognize and avoid becoming involved in risky online behaviors, including cyberbullying (Sorrentino et al., 2018). Additionally, several programs include a parental training component that provides parents with skills to support their children in navigating cyberbullying (e.g., Cross et al., 2016; Del Rey et al., 2016; Wölfer et al., 2014).

School Climate or School Policy

School climate refers to the sum of individuals’ (often students’) perceptions of the educational structures, values, practices, and relationships that create their school experiences (Thapa et al., 2013). The Olweus Bullying Prevention Program (OBPP) proposes a logic model in which bullying is reduced by strengthening components of school climate (Olweus, 1991; Olweus & Limber, 2010a, 2010b). Developed in Norway in the mid-1980s, it is the oldest and arguably the most researched anti-bullying program. To reduce existing bullying, prevent new bullying, and improve general peer relations, school personnel restructure the school environment to build a sense of community that reduces opportunities and rewards for bullying (Olweus et al., 2019). The multi-level program, composed of community-level, whole school-level, classroom-level, and individual-level components, targets bullying in general but does not target cyberbullying in particular.

Similarly, the Restorative Practices Intervention, developed in 1999 by the International Institute for Restorative Practices and evaluated by Acosta and colleagues (2019), trains all school staff to develop a supportive school environment through 11 restorative practices (“Essential Elements”). These elements range along an informal-to-formal continuum and include using affective statements to communicate feelings, holding restorative “circles” or “conferences,” and using restorative practices during interactions with family members (Acosta et al., 2019). Like the OBPP, the Restorative Practices Intervention does not include components that specifically target cyberbullying; instead, it aims to reduce negative interactions in general by building a supportive environment.

Group or Individual Targeted Responses

Although most programs in the review use a universal prevention approach, some interventions target specific groups of students. Some programs, such as the Sensibility Development Program against Cyberbullying, target students at risk of exposure to bullying behaviors (Tanrıkulu et al., 2015). Others, such as Cyberprogram 2.0, are designed to be implemented with classroom-sized groups of students who are not explicitly identified as high risk (Garaigordobil & Martínez-Valderrey, 2015a, 2015b).

Cyberprogram 2.0 (Garaigordobil & Martínez-Valderrey, 2015a, 2015b), implemented in Spain, is a targeted anti-cyberbullying program that aims to increase adolescents’ anti-bullying skills by building interpersonal conflict resolution skills and self-esteem. The intervention consisted of 19 one-hour sessions with groups of approximately 20 adolescents and used group dynamic strategies “to stimulate the performance of the activity and the debate,” such as role-playing, brainstorming, case studies, and guided discussion through questions (p. 231). Another example, the Sensibility Development Program against Cyberbullying (Tanrıkulu et al., 2015), implemented in Istanbul, specifically targeted adolescents at risk of exposure to cyberbullying behaviors. The eight youth in the treatment group participated in five sessions, each of which included psychologically based group activities to increase cyberbullying awareness, computer-based lectures to increase technical knowledge about cyberspace, and a discussion facilitated by a technology expert.

Systematic Review and Meta-analysis Results

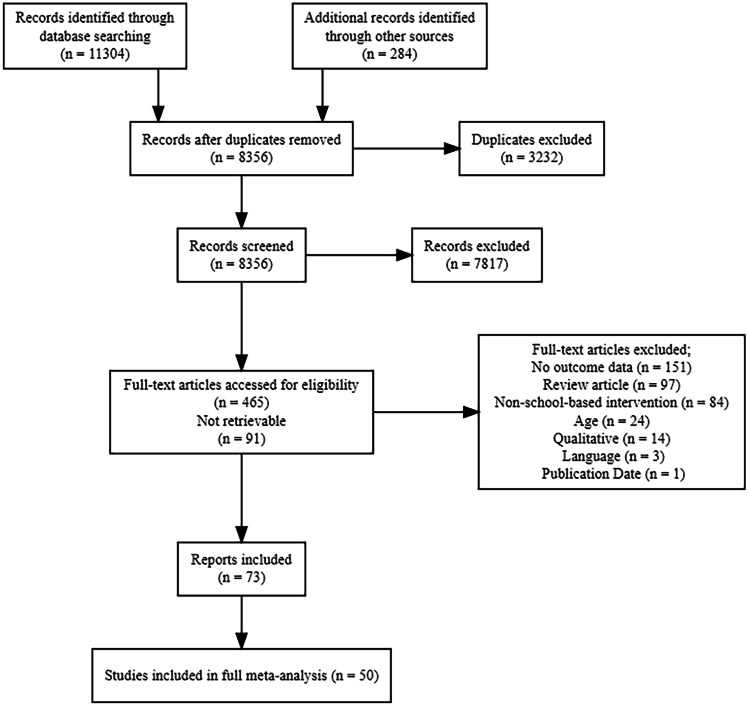

The full results of our search, screening, and data extraction procedures can be found in the PRISMA flowchart (Fig. 2). Our search yielded 11,588 citations, of which 3,232 were identified as duplicates and removed. Of the 8,356 remaining citations, 7,817 were excluded during abstract screening, yielding 483 citations for full-text review. Of the 465 full-text PDFs retrieved and reviewed, 73 met our eligibility criteria and were retained for analysis. These full-text articles were grouped according to whether they used the same sample, yielding a total of 50 independent studies for our review and synthesis. Characteristics of each included study can be found in Supplemental Table 2.

Fig. 2.

PRISMA flow diagram

Of the 50 studies (Table 1), most were conducted outside the USA (64%), published in a peer-reviewed journal (76%), and received funding (54%). A small majority of studies used random assignment (54%), yet only 18% met the WWC’s highest rating (meets WWC standards without reservations). Fewer than half of the included studies sampled students from low or low-middle socioeconomic status (24%), and on average, about a third of the students in the included studies identified as nonwhite (35%). Slightly fewer than half of the students were identified as male (48%), and the mean age was 13.0 years (SD = 1.73). Most programs directly targeted cyberbullying (76%), and the average program lasted 22 weeks.

Table 1.

Summary characteristics of included studies

| Characteristic | Summary statistic |
|---|---|
| Studies (effect sizes) | 50 (320) |
| Participants (M, SD) | 45,371 (1600, 4624) |
| Publication status | |
| Published | 38 (76%) |
| Unpublished | 12 (24%) |
| Funding | |
| Funded | 27 (54%) |
| Not funded | 23 (46%) |
| Program target | |
| Cyberbullying | 38 (76%) |
| Not cyberbullying | 12 (24%) |
| Design | |
| NR-Cls | 5 (10%) |
| NR-Ind | 7 (14%) |
| NR-Scl | 11 (22%) |
| R-Cls | 10 (20%) |
| R-Scl | 11 (22%) |
| R-Ind | 6 (12%) |
| WWC rating | |
| MSWoR | 9 (18%) |
| MSWR | 19 (38%) |
| DNMS | 22 (44%) |
| Location | |
| USA | 18 (36%) |
| Non-USA | 32 (64%) |
| SES | |
| Low | 11 (22%) |
| Low-middle | 9 (18%) |
| Middle | 12 (24%) |
| High-middle | 13 (26%) |
| High | 5 (10%) |
| Mean percentage male | 51% |
| Mean percentage nonwhite | 35% |
| Mean age in years (SD) | 13 (1.73) |
| Mean length of intervention in weeks (SD) | 22 (25.5) |

Study-level summary statistics presented

NR-Cls non-random assignment at the classroom level, NR-Ind non-random assignment at the individual level, NR-Scl non-random assignment at the school level, R-Cls random assignment at the classroom level, R-Ind random assignment at the individual level, R-Scl random assignment at the school level, MSWoR meets WWC standards without reservations, MSWR meets WWC standards with reservations, DNMS does not meet WWC standards

A total of 45,371 students participated in the identified evaluations. Separate analyses were conducted for each of the four outcome categories: (1) cyberbullying perpetration (44 studies, 96 effect sizes), (2) cyberbullying victimization (39 studies, 75 effect sizes), (3) traditional bullying perpetration (22 studies, 67 effect sizes), and (4) traditional bullying victimization (24 studies, 82 effect sizes). Across all 50 included studies, we estimated 320 effect sizes.
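For readers less familiar with the effect size metric, the sketch below shows the standard textbook computation of Hedges’ g and its standard error from two-group summary statistics. It is a minimal illustration under stated assumptions, not the review’s extraction code: the function name and inputs are hypothetical, it assumes independent treatment and comparison groups with a continuous outcome, and it ignores clustering (many included studies assigned whole classrooms or schools), which would require further adjustment. Negative values indicate less cyberbullying or bullying in the treatment group, matching the sign convention used in the results.

```python
from math import sqrt


def hedges_g(mean_t, sd_t, n_t, mean_c, sd_c, n_c):
    """Return (g, se) for a treatment-minus-comparison standardized mean difference.

    Hypothetical helper: assumes two independent groups and no clustering.
    """
    df = n_t + n_c - 2
    # Pooled within-group standard deviation
    sd_pooled = sqrt(((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / df)
    d = (mean_t - mean_c) / sd_pooled            # Cohen's d
    j = 1 - 3 / (4 * df - 1)                     # Hedges' small-sample correction factor
    var_d = (n_t + n_c) / (n_t * n_c) + d ** 2 / (2 * (n_t + n_c))
    return j * d, sqrt(j ** 2 * var_d)           # g and its standard error


# Hypothetical posttest scores on a cyberbullying perpetration scale
g, se = hedges_g(mean_t=1.0, sd_t=1.0, n_t=50, mean_c=1.2, sd_c=1.0, n_c=50)
print(f"g = {g:.2f}, SE = {se:.2f}")
```

The correction factor shrinks d slightly toward zero, which matters most for the small samples found in some of the targeted-group evaluations.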

The results of the meta-analytic models revealed that school-based prevention or intervention programs were associated with significant reductions in all four outcome variables (Table 2). The programs were associated with significant reductions in cyberbullying perpetration (k = 44, m = 96, g = −0.18, SE = 0.05, p = 0.0001, 95% CI [−0.28, −0.09]) and cyberbullying victimization (k = 39, m = 75, g = −0.13, SE = 0.04, p = 0.0012, 95% CI [−0.21, −0.05]). On average, the programs were also associated with significant reductions in traditional bullying perpetration (k = 22, m = 67, g = −0.18, SE = 0.05, p = 0.0008, 95% CI [−0.28, −0.08]) and traditional bullying victimization (k = 24, m = 82, g = −0.16, SE = 0.05, p = 0.0039, 95% CI [−0.27, −0.05]). For all four outcomes, the results indicated moderate to high heterogeneity (τ²: cyberbullying perpetration = 0.06; cyberbullying victimization = 0.02; traditional bullying perpetration = 0.03; traditional bullying victimization = 0.05). Based on these findings, the programs included in our synthesis have an estimated 76% probability of reducing cyberbullying perpetration and a 73% probability of reducing cyberbullying victimization.
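The pooled estimates above come from random-effects models. The review’s own models account for multiple, dependent effect sizes per study (hence the separate between- and within-study heterogeneity in Table 2 and the sandwich estimator mentioned in the publication bias analyses), which the sketch below does not. As a simpler stand-in that shows the core idea, it implements the classic DerSimonian–Laird random-effects estimator for independent effect sizes: estimate the between-study variance τ² from Cochran’s Q, then re-weight each effect by 1/(vᵢ + τ²). The function name and example inputs are hypothetical.

```python
from math import sqrt


def random_effects_dl(effects, variances):
    """DerSimonian-Laird random-effects meta-analysis of independent effects.

    Returns (pooled estimate, standard error, tau-squared).
    """
    k = len(effects)
    if k < 2:
        raise ValueError("need at least two effect sizes")
    w = [1 / v for v in variances]                                  # inverse-variance weights
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w      # fixed-effect mean
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))   # Cochran's Q
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - (k - 1)) / c)                              # truncated at zero
    w_re = [1 / (v + tau2) for v in variances]                      # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    return pooled, sqrt(1 / sum(w_re)), tau2


# Hypothetical study-level effects (g) and their sampling variances
est, se, tau2 = random_effects_dl([-0.5, 0.1, -0.3, 0.2], [0.01, 0.01, 0.01, 0.01])
print(f"g = {est:.3f}, SE = {se:.3f}, tau^2 = {tau2:.3f}")
```

Adding τ² to every sampling variance flattens the weights, so small studies carry relatively more influence than under a fixed-effect model, and the pooled standard error widens to reflect heterogeneity.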

Table 2.

Overall meta-analysis results

| Outcome domain | Number of studies | Number of effect sizes | Average effect size (SE) | 95% CI | Tau-squared (between) | I-squared (between, within) | 95% PI | PPI (%) |
|---|---|---|---|---|---|---|---|---|
| Cyberbullying perpetration | 44 | 96 | −0.18 (0.05) | −0.28, −0.09 | 0.06 | 79.71, 9.78 | −0.67, 0.30 | 76.08 |
| Cyberbullying victimization | 39 | 75 | −0.13 (0.04) | −0.21, −0.05 | 0.02 | 34.90, 53.77 | −0.40, 0.14 | 72.61 |
| Bullying perpetration | 22 | 67 | −0.18 (0.05) | −0.28, −0.08 | 0.03 | 55.20, 37.44 | −0.54, 0.17 | 77.94 |
| Bullying victimization | 24 | 82 | −0.16 (0.05) | −0.27, −0.05 | 0.05 | 63.21, 28.97 | −0.59, 0.26 | 73.19 |

SE standard error, CI confidence interval, PI prediction interval, PPI probability of positive impact
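The prediction interval (PI) and probability of positive impact (PPI) columns in Table 2 both extend the average effect to the distribution of true effects across settings. A minimal sketch, assuming true effects are normally distributed with mean g and between-study variance τ²: the 95% PI is approximately g ± 1.96·√(τ² + SE²), and, following the parametric approach of Mathur and VanderWeele (2020), the PPI is the estimated share of true effects below zero, Φ(−g/τ). The function names are hypothetical, the normal critical value is an assumption (a t critical value is a common, slightly wider alternative), and because the inputs are the rounded values in Table 2 while the authors’ fitted models also partition heterogeneity within studies, the outputs only approximate the tabled values.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: mean 0, SD 1


def prediction_interval(g, se, tau2, level=0.95):
    """Approximate PI for the true effect in a new setting (normal critical value)."""
    z = STD_NORMAL.inv_cdf(0.5 + level / 2)
    half_width = z * sqrt(tau2 + se ** 2)      # combines heterogeneity and estimation error
    return g - half_width, g + half_width


def prob_reduction(g, tau2):
    """Share of true effects below zero, assuming they are Normal(g, tau2)."""
    if tau2 <= 0:
        raise ValueError("tau2 must be positive")
    return STD_NORMAL.cdf((0 - g) / sqrt(tau2))


# Cyberbullying perpetration inputs from Table 2 (rounded)
lo, hi = prediction_interval(-0.18, 0.05, 0.06)
print(f"95% PI: [{lo:.2f}, {hi:.2f}]")
print(f"PPI: {prob_reduction(-0.18, 0.06):.2f}")
```

For cyberbullying perpetration the sketch gives a PI of roughly [−0.67, 0.31] and a PPI of about 0.77, close to the [−0.67, 0.30] and 76.08% reported in Table 2. The PI crossing zero while the PPI stays well above 50% is the pattern the table shows for every outcome: most, but not all, settings are expected to see reductions.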

We conducted two sets of moderator analyses: confirmatory and exploratory. For both cyberbullying perpetration and victimization, the confirmatory moderator results indicated that none of the six variables was significantly related to intervention effectiveness (Tables 3 and 4, respectively). Programs that specifically targeted cyberbullying did, however, show numerically larger average effects than programs focused on general violence prevention for both cyberbullying perpetration and victimization, although these differences were not statistically significant. The confirmatory moderator results for traditional bullying perpetration and victimization were similar for most moderators (Supplemental Tables 3 and 4). One notable exception was the targeting of cyberbullying: for both traditional bullying perpetration and victimization, programs that included a component specifically targeting cyberbullying were more effective than general prevention programs (perpetration: targeting = −0.26, non-targeting = −0.02, p = 0.01; victimization: targeting = −0.25, non-targeting = −0.01, p = 0.02).

Table 3.

Confirmatory moderator analyses for cyberbullying perpetration

| Variable | Number of studies | Number of effects | Coef. or mean | Standard error | 95% CI lower | 95% CI upper | T-statistic | df | p-value |
|---|---|---|---|---|---|---|---|---|---|
| Country of origin | | | | | | | 0.87 | 23.28 | 0.39 |
| Non-USA | 30 | 66 | −0.22 | 0.04 | −0.31 | −0.13 | | | |
| USA | 14 | 30 | −0.11 | 0.11 | −0.33 | 0.10 | | | |
| Focus of program | | | | | | | −0.53 | 12.57 | 0.61 |
| No cyber target | 9 | 26 | −0.15 | 0.08 | −0.30 | 0.01 | | | |
| Cyberbullying targeted | 35 | 70 | −0.20 | 0.06 | −0.30 | −0.09 | | | |
| Timepoint | | | | | | | 0.10 | 3.05 | 0.92 |
| Posttest | 42 | 79 | −0.18 | 0.05 | −0.28 | −0.09 | | | |
| Follow-up | 8 | 17 | −0.18 | 0.06 | −0.29 | −0.07 | | | |
| Effect size type | | | | | | | 2.21 | 2.94 | 0.12 |
| Continuous | 36 | 80 | −0.20 | 0.05 | −0.29 | −0.11 | | | |
| Dichotomous | 9 | 16 | −0.05 | 0.08 | −0.20 | 0.11 | | | |
| Percent males | 44 | 96 | 0.03 | 0.03 | −0.03 | 0.10 | 0.96 | 1.20 | 0.49 |
| Percent nonwhite | 44 | 96 | −0.11 | 0.12 | −0.34 | 0.12 | −0.94 | 19.66 | 0.36 |

df degrees of freedom
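The fractional degrees of freedom in Tables 3 and 4 suggest that the categorical contrasts were tested with robust variance estimation and small-sample corrections. As a rough back-of-the-envelope check, not the authors’ method, the sketch below applies a simple Wald z-test to two subgroup means, treating them as independent and using a normal reference distribution. The function name is hypothetical, and because it ignores the covariance and small-sample corrections built into the authors’ models, its results will not match the tables exactly.

```python
from math import sqrt
from statistics import NormalDist


def subgroup_contrast(mean_a, se_a, mean_b, se_b):
    """Approximate Wald test of (mean_b - mean_a) for two independent subgroups.

    Returns (difference, z statistic, two-sided p-value).
    """
    diff = mean_b - mean_a
    se_diff = sqrt(se_a ** 2 + se_b ** 2)          # assumes zero covariance
    z = diff / se_diff
    p = 2 * (1 - NormalDist().cdf(abs(z)))         # two-sided normal p-value
    return diff, z, p


# Country of origin, cyberbullying perpetration (Table 3): non-USA vs USA
diff, z, p = subgroup_contrast(-0.22, 0.04, -0.11, 0.11)
print(f"difference = {diff:.2f}, z = {z:.2f}, p = {p:.2f}")
```

Applied to the country-of-origin rows, it gives a difference of 0.11 with z ≈ 0.94 and p ≈ 0.35, similar in direction and size to the reported T = 0.87 (p = 0.39); neither suggests a reliable difference between USA and non-USA studies.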

Table 4.

Confirmatory moderator analyses for cyberbullying victimization

| Variable | Number of studies | Number of effects | Coef. or mean | Standard error | 95% CI lower | 95% CI upper | T-statistic | df | p-value |
|---|---|---|---|---|---|---|---|---|---|
| Country of origin | | | | | | | 0.87 | 24.22 | 0.39 |
| Non-USA | 24 | 47 | −0.15 | 0.05 | −0.26 | −0.04 | | | |
| USA | 15 | 28 | −0.11 | 0.06 | −0.21 | 0.01 | | | |
| Focus of program | | | | | | | −0.50 | 16.79 | 0.62 |
| No cyber target | 12 | 27 | −0.11 | 0.06 | −0.23 | 0.02 | | | |
| Cyberbullying targeted | 27 | 48 | −0.15 | 0.05 | −0.24 | −0.05 | | | |
| Timepoint | | | | | | | 0.55 | 6.12 | 0.60 |
| Posttest | 36 | 57 | −0.14 | 0.04 | −0.22 | −0.05 | | | |
| Follow-up | 8 | 18 | −0.11 | 0.04 | −0.18 | −0.04 | | | |
| Effect size type | | | | | | | 1.21 | 9.85 | 0.26 |
| Continuous | 29 | 53 | −0.16 | 0.04 | −0.24 | −0.09 | | | |
| Dichotomous | 10 | 22 | 0.00 | 0.13 | −0.25 | 0.25 | | | |
| Percent males | 39 | 75 | −0.38 | 0.17 | −0.71 | −0.05 | −2.23 | 2.89 | 0.12 |
| Percent nonwhite | 39 | 75 | −0.13 | 0.10 | −0.32 | 0.07 | −1.28 | 14.28 | 0.22 |

df degrees of freedom

We conducted two sets of exploratory moderator analyses. The first set investigated whether the WWC rating or a program’s core component category explained variation between included studies; we conducted these analyses across all four outcome domains. Studies meeting WWC standards had slightly larger average effects in each domain, but the differences were not statistically significant (Supplemental Table 5). The analyses of program core components did not reveal any statistically significant or substantively important trends (Supplemental Tables 6–9). The second set of exploratory analyses used multiple-predictor meta-regression and revealed two statistically significant findings: intervention effects on cyberbullying victimization were larger in samples with a higher proportion of males (b = −0.43, p = 0.01) and in samples of higher socioeconomic status (b = −0.12, p = 0.04). Because the proportion of males was not significant as a standalone moderator in the confirmatory analyses, we are cautious not to overinterpret its significance in the multivariable exploratory models, which controlled for other covariates. The full results from these models can be found in Supplemental Tables S10–S13.

We conducted several publication bias analyses. First, we ran a meta-regression with publication status as a predictor; published studies had slightly smaller intervention effects, but the difference was not statistically significant (b = 0.03, SE = 0.15, t(14) = 0.22, p = 0.83). Second, we conducted two trim and fill analyses (Duval & Tweedie, 2000) for each of cyberbullying perpetration and victimization, one ignoring effect size dependency and one aggregating effects to the study level. None of these analyses indicated publication bias (Figures S1–S4). Third, we conducted Egger’s regression test (Egger et al., 1997) with a sandwich estimator, which accounted for the dependency among effect sizes. The results again revealed no evidence of publication bias (b = −0.48, SE = 0.29, t(1.4) = 1.62, p = 0.30). Finally, we conducted sensitivity analyses to investigate the potential impact of our missing data technique (Supplementary Tables S14 and S15); they did not reveal statistically significant findings that would alter our main conclusions. We are therefore confident in the results and the conclusions drawn from them.
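The logic of Egger’s test can be illustrated with its classic version for independent effect sizes: regress each standardized effect (g/SE) on its precision (1/SE) by ordinary least squares; an intercept far from zero indicates funnel-plot asymmetry, as would arise if small studies systematically reported larger effects. The sketch below is that classic test only. It does not implement the sandwich-estimator variant used in this review, which additionally accounts for multiple effect sizes per study, and the function name and toy inputs are hypothetical.

```python
from math import sqrt


def egger_test(effects, ses):
    """Classic Egger regression test for funnel-plot asymmetry.

    Regresses effect/SE on 1/SE by ordinary least squares and returns
    (intercept, SE of intercept, t statistic with n - 2 df).
    Assumes independent effect sizes.
    """
    n = len(effects)
    if n < 3:
        raise ValueError("need at least three effect sizes")
    x = [1 / s for s in ses]                          # precision
    y = [e / s for e, s in zip(effects, ses)]         # standardized effect
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("standard errors must vary across studies")
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    resid = [yi - intercept - slope * xi for xi, yi in zip(x, y)]
    sigma2 = sum(r ** 2 for r in resid) / (n - 2)     # residual variance
    se_int = sqrt(sigma2 * (1 / n + x_bar ** 2 / sxx))
    t = intercept / se_int if se_int > 0 else float("nan")
    return intercept, se_int, t


# Hypothetical effects in which smaller studies (larger SE) report larger reductions
ses = [0.05, 0.08, 0.10, 0.15, 0.20, 0.30]
effects = [-0.12, -0.14, -0.17, -0.22, -0.30, -0.45]
print(egger_test(effects, ses))
```

Because the slope absorbs the average effect, a symmetric funnel yields an intercept near zero regardless of how large that average effect is; in the toy data above the intercept is negative, mirroring small studies that show larger reductions.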

Discussion

The purpose of this project was to understand the effectiveness of prevention programs at reducing cyberbullying perpetration and victimization. To do so, we conducted a comprehensive systematic review and meta-analysis, locating and synthesizing published and unpublished studies. We identified 50 primary studies, extracting 320 total effect sizes, spanning multiple countries and 45,371 participants. All of these evaluations were conducted within the last 15 years, and most (36 studies) within the last 5 years.

The results of the meta-analysis indicated that the prevention programs were effective in reducing both cyberbullying perpetration and victimization. Program effects were slightly larger for cyberbullying perpetration (g = −0.18) than for cyberbullying victimization (g = −0.13). Translated into the probability of positive impact (PPI; Mathur & VanderWeele, 2020), we estimated that the average program would have a 76% and 73% probability of reducing cyberbullying perpetration and victimization, respectively. We are therefore relatively confident that future implementation of one of the included programs that showed statistically significant intervention effects will reduce cyberbullying.

These findings align with previous meta-analyses and systematic reviews that found evidence for the effectiveness of cyberbullying prevention programs in reducing cyber victimization and perpetration. For example, our findings were consistent with recent meta-analyses by Van Cleemput and colleagues (2014) and Gaffney and colleagues (2019a), which found that cyberbullying prevention programs were associated with significant reductions of both cyberbullying perpetration and victimization. Mishna and colleagues (2011), however, found that cyber abuse prevention programs were only associated with an increase in internet safety knowledge. The discrepancies with Mishna and colleagues (2011) could be due to differences in the scope of their inclusion criteria or due to our use of more advanced meta-analytic techniques. Overall, our findings strengthen the support for the effectiveness of cyberbullying prevention programs.

Implications for Prevention Policy and Practice

Results from our confirmatory moderator analyses suggest that programs specifically targeting cyberbullying behavior tended to be more effective in reducing cyberbullying than general violence prevention programs, although these differences were not statistically significant for the cyberbullying outcomes. The pattern is clearer in the synthesis of traditional bullying outcomes, where programs that included a cyberbullying component were significantly more effective than programs that did not. We suspect this finding could be a time-driven effect (i.e., violence prevention programs have improved over time, and because cyberbullying emerged only recently, programs that address it would have leveraged more state-of-the-art techniques), but we were unable to test this in our analysis because of the restricted publication date range of the included studies. It could also result from the common overlap between the two forms of bullying (Espelage et al., 2013a, 2013b). Some caution is needed because traditional bullying outcomes were reviewed only when they were measured in the same studies as cyberbullying outcomes; therefore, the results of the traditional bullying meta-analysis are not comprehensive. Most meta-analyses of bullying prevention programs, however, find average effects of about 0.15–0.20 (Gaffney et al., 2019b; Ttofi & Farrington, 2011), which is smaller in magnitude than the average effect for programs including a cyberbullying component in this meta-analysis (0.25). Future research should confirm whether traditional bullying could be reduced simply by including a cyberbullying component within a prevention program. Conversely, traditional anti-bullying programs should not be expected to also serve as anti-cyberbullying programs.

Also notable, although a small majority of studies used random assignment (54%), only 18% met the highest WWC rating, and more than 4 in 10 (44%) did not meet WWC standards. These descriptive findings suggest that evaluations of cyberbullying and related programs require greater attention to study design and quality. The WWC’s rating system is designed to ensure that only the most rigorously designed and conducted studies contribute to assessments of program effectiveness. Although the moderator results revealed no statistically significant differences between studies that did and did not meet WWC standards, the field should be cautious about relying on future evaluations that lack a rigorous study design.

The remainder of our moderator results were also not statistically significant; thus, we can offer practitioners and policymakers only tentative guidance. One variable that did trend in the hypothesized direction, however, was country of origin. As in previous work (Yeager et al., 2015), studies conducted outside the USA produced larger average effects. While it is not clear why these differences have consistently emerged, they could be due in part to European scientists’ implicit and explicit focus on understanding and preventing cyberbullying, and on developing prevention programs, from 2008 through 2012. During this time, an intergovernmental framework for European Cooperation in Science and Technology ([COST], Välimäki et al., 2013) included 28 participating European countries (the USA was not a participating country) and allowed the coordination of nationally funded European research on cyberbullying. The COST collaboration helps reduce the fragmentation of European research investments, opens the European Research Area to cooperation worldwide, and has led to the development of numerous prevention programs targeting cyberbullying. We are not aware of a similar collaboration in North America.

The results show promise for reducing cyberbullying and traditional bullying behaviors. Our recommendation to those seeking guidance on cyberbullying prevention is to select a program in our review that was evaluated using random assignment, met the WWC’s highest rating, and produced a treatment effect greater than 0.10 in magnitude. Selecting one of these programs means there is a high probability that the intervention will lead to a lower incidence of cyberbullying than a known-neutral alternative or no program at all. Several of the included studies fit these criteria. We stop short of recommending specific programs because multiple factors, such as school culture and classroom needs, may weigh differently when deciding whether to adopt a program. Schools must also carefully consider the resources needed for program implementation. Although we attempted to collect cost information, none of the studies reported program or per-pupil expenditures.

Using Transparent and Reproducible Meta-analytic Practices

Conducting a high-quality systematic review and meta-analysis has historically meant using transparent methods and following comprehensive reporting guidelines (APA Publications and Communications Board Working Group on Journal Article Reporting Standards, 2008). Several recent meta-review results indicate, however, that some meta-analysts have failed to transparently report all elements of the review process (Polanin et al., 2020). Furthermore, few meta-analysts share their statistical scripts and complete datasets, despite their own reliance on the reporting practices of primary researchers. Part of the purpose of this manuscript, therefore, was to continue the push for transparent reporting and to reflect on this process.

As articulated in the “Methods” section, we made available all datasets, scripts, codebooks, and other materials necessary to completely reproduce the analyses and results. Doing so is challenging: it requires forethought and the experience to know what to focus on and what not to, which is difficult to achieve during the early stages of the review process. We found the most challenging aspects to be “data wrangling” (especially moving from the extracted dataset to the analytic dataset) and producing tables from the software’s output. The former problem is one that many data scientists and statisticians struggle with and simply requires continued education and experience. The latter will require continued support from R package maintainers and developers. Our attempt to conduct a fully reproducible analysis, including the final tables, is at the forefront of this movement. We suspect that, with time, software developers and maintainers will make it easy for meta-analysts to produce well-formatted final tables from their analyses. Until then, we encourage meta-analysts to use our reporting as a guide: produce reproducible scripts, publish the datasets, and ensure that the analyses are labeled and correspond to those reported in the manuscript.

Conclusions

Our work reflects the most comprehensive systematic review and meta-analysis to date examining the effectiveness of K-12 school-based prevention programs in reducing both cyberbullying and traditional bullying outcomes. Results indicated that when programs explicitly target cyberbullying, reductions are achieved for both cyberbullying and traditional bullying. We strongly encourage developers of bullying prevention programs, and those revising their programs, to include specific and elaborate content on cyberbullying, given its rising prevalence and associations with other forms of aggression. The results of this systematic review and meta-analysis should help guide future developers, education practitioners, and administrators in reducing cyberbullying perpetration and victimization, with the hope of increasing the success and well-being of students.


Acknowledgements

The authors would like to thank Qing Li, Amy Bellmore, and Sandra Jo Wilson for their guidance throughout the project; Fran Harmon, Hannah Lyden, Tyler Hatchel, Yuanhong Huang, and Sarah Young for their help on screening and coding; and Sean Grant, Emily Tanner-Smith, and Evan Mayo-Wilson for their helpful feedback during the editorial process. These individuals, and numerous others who assisted, made this project a success.

Funding

This project was supported by award no. 2017-CK-BX-0009, awarded by the National Institute of Justice, Office of Justice Programs, US Department of Justice; additional support was received through the Methods of Synthesis and Integration Center (MOSAIC) at AIR.

Declarations

Ethics Approval

This project received approval from the Development Services Group, Inc. Institutional Review Board (DSG IRB #IORG0002047, IRB00002564).

Conflict of Interest

The authors declare no competing interests.

Disclaimer

The opinions, findings, and conclusions or recommendations expressed in this publication are those of the authors and do not necessarily reflect those of the Department of Justice.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Acosta J, Chinman M, Ebener P, Malone PS, Phillips A, Wilks A. Evaluation of a whole-school change intervention: Findings from a two-year cluster-randomized trial of the restorative practices intervention. Journal of Youth and Adolescence. 2019;48:876–890. doi: 10.1007/s10964-019-01013-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- APA Publications and Communications Board Working Group on Journal Article Reporting Standards Reporting standards for research in psychology: Why do we need them What might they be. The American Psychologist. 2008;63:839–851. doi: 10.1037/0003-066X.63.9.839. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Apple Inc. (2016). Filmmaker Pro [Computer software]. Santa Clara, CA: Apple, Inc.

- Aricak T, Siyahhan S, Uzunhasanoglu A, Saribeyoglu S, Ciplak S, Yilmaz N, Memmedov C. Cyberbullying among Turkish adolescents. Cyberpsychology & Behavior. 2008;11:253–261. doi: 10.1089/cpb.2007.0016. [DOI] [PubMed] [Google Scholar]

- Arslan S, Savaser S, Hallett V, Balci S. Cyberbullying among primary school students in Turkey: Self-reported prevalence and associations with home and school life. Cyberpsychology Behavior and Social Networking. 2012;15:527–533. doi: 10.1089/cyber.2012.0207. [DOI] [PubMed] [Google Scholar]

- Bauman, S. (2015). Types of cyberbullying. Cyberbullying: What counselors need to know, 53–58. American Counseling Association. 10.1002/9781119221685

- Bhat CS. Cyber bullying: Overview and strategies for school counsellors, guidance officers, and all school personnel. Journal of Psychologists and Counsellors in Schools. 2008;18:53–66. doi: 10.1375/ajgc.18.1.53. [DOI] [Google Scholar]

- Brochado S, Soares S, Fraga S. A scoping review on studies of cyberbullying prevalence among adolescents. Trauma, Violence, & Abuse. 2017;18:523–531. doi: 10.1177/1524838016641668. [DOI] [PubMed] [Google Scholar]

- Cassidy W, Faucher C, Jackson M. Cyberbullying among youth: A comprehensive review of current international research and its implications and application to policy and practice. School Psychology International. 2013;34:575–612. doi: 10.1177/0143034313479697. [DOI] [Google Scholar]

- Chen L, Ho SS, Lwin MO. A meta-analysis of factors predicting cyberbullying perpetration and victimization: From the social cognitive and media effects approach. New Media & Society. 2017;19:1194–1213. doi: 10.1177/1461444816634037. [DOI] [Google Scholar]

- Cross D, Shaw T, Hadwen K, Cardoso P, Slee P, Roberts C, Barnes A. Longitudinal impact of the Cyber Friendly Schools program on adolescents’ cyberbullying behavior. Aggressive Behavior. 2016;42:166–180. doi: 10.1002/ab.21609. [DOI] [PubMed] [Google Scholar]

- Del Rey R, Casas JA, Ortega R. Impact of the ConRed program on different cyberbullying roles. Aggressive Behavior. 2016;42:123–135. doi: 10.1002/ab.21608. [DOI] [PubMed] [Google Scholar]

- Del Rey R, Casas JA, Ortega-Ruiz R, Schultze-Krumbholz A, Scheithauer H, Smith P, Guarini A. Structural validation and cross-cultural robustness of the European Cyberbullying Intervention Project Questionnaire. Computers in Human Behavior. 2015;50:141–147. doi: 10.1016/j.chb.2015.03.065. [DOI] [Google Scholar]

- Duval S, Tweedie R. Trim and fill: A simple funnel-plot–based method of testing and adjusting for publication bias in meta-analysis. Biometrics. 2000;56:455–463. doi: 10.1111/j.0006-341X.2000.00455.x. [DOI] [PubMed] [Google Scholar]

- Egger M, Smith GD, Schneider M, Minder C. Bias in meta-analysis detected by a simple, graphical test. British Medical Journal. 1997;315:629–634. doi: 10.1136/bmj.315.7109.629. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Espelage DL, Low S, Polanin J, Brown E. The impact of a middle-school program to reduce aggression, victimization, and sexual violence. Journal of Adolescent Health. 2013;53:180. doi: 10.1016/j.jadohealth.2013.02.021. [DOI] [PubMed] [Google Scholar]

- Espelage DL, Low S, Van Ryzin MJ, Polanin JR. Clinical trial of second step middle school program: Impact on bullying, cyberbullying, homophobic teasing, and sexual harassment perpetration. School Psychology Review. 2015;44:464–479. doi: 10.17105/spr-15-0052.1. [DOI] [Google Scholar]

- Espelage DL, Low S, Polanin J, Brown E. Clinical trial of Second Step© middle-school program: Impact on aggression & victimization. Journal of Applied Developmental Psychology. 2015;37:52–63. doi: 10.1016/j.appdev.2014.11.007. [DOI] [Google Scholar]

- Espelage DL, Rao MA, Craven R. Relevant theories for cyberbullying research. In: Bauman S, Walker J, Cross D, editors. Principles of cyberbullying research: Definition, methods, and measures. Routledge; 2013.

- Fekkes M, van de Sande MCE, Gravesteijn JC, Pannebakker FD, Buijs GJ, Diekstra RFW, Kocken PL. Effects of the Dutch Skills for Life program on the health behavior, bullying, and suicidal ideation of secondary school students. Health Education. 2016;116:2–15. doi: 10.1108/HE-05-2014-0068.

- Gaffney H, Farrington DP, Espelage DL, Ttofi MM. Are cyberbullying intervention and prevention programs effective? A systematic and meta-analytical review. Aggression and Violent Behavior. 2019;45:134–153. doi: 10.1016/j.avb.2018.07.002.

- Gaffney H, Ttofi MM, Farrington DP. Evaluating the effectiveness of school-bullying prevention programs: An updated meta-analytical review. Aggression and Violent Behavior. 2019;45:111–133. doi: 10.1016/j.avb.2018.07.001.

- Gámez-Guadix M, Orue I, Smith PK, Calvete E. Longitudinal and reciprocal relations of cyberbullying with depression, substance use, and problematic internet use among adolescents. Journal of Adolescent Health. 2013;53:446–452. doi: 10.1016/j.jadohealth.2013.03.030.

- Garaigordobil M, Martínez-Valderrey V. Effects of Cyberprogram 2.0 on “face-to-face” bullying, cyberbullying, and empathy. Psicothema. 2015;27:45–51.

- Garaigordobil M, Martínez-Valderrey V. The effectiveness of Cyberprogram 2.0 on conflict resolution strategies and self-esteem. Journal of Adolescent Health. 2015;57:229–234. doi: 10.1016/j.jadohealth.2015.04.007.

- Gardella JH, Fisher BW, Teurbe-Tolon AR. A systematic review and meta-analysis of cyber-victimization and educational outcomes for adolescents. Review of Educational Research. 2017;87:283–308. doi: 10.3102/0034654316689136.

- Gradinger P, Yanagida T, Strohmeier D, Spiel C. Prevention of cyberbullying and cyber victimization: Evaluation of the ViSC Social Competence Program. Journal of School Violence. 2015;14:87–110. doi: 10.1080/15388220.2014.963231.

- Guarini A, Menin D, Menabò L, Brighi A. RPC teacher-based program for improving coping strategies to deal with cyberbullying. International Journal of Environmental Research and Public Health. 2019;16:948–961. doi: 10.3390/ijerph16060948.

- Guo S. A meta-analysis of the predictors of cyberbullying perpetration and victimization. Psychology in the Schools. 2016;53:432–453. doi: 10.1002/pits.21914.

- Hedges LV. Distribution theory for Glass's estimator of effect size and related estimators. Journal of Educational Statistics. 1981;6(2):107–128.

- Hedges LV, Tipton E, Johnson MC. Robust variance estimation in meta-regression with dependent effect size estimates. Research Synthesis Methods. 2010;1:39–65. doi: 10.1002/jrsm.5.

- Hemphill SA, Kotevski A, Heerde JA. Longitudinal associations between cyber-bullying perpetration and victimization and problem behavior and mental health problems in young Australians. International Journal of Public Health. 2015;60:227–237. doi: 10.1007/s00038-014-0644-9.

- Higgins JPT, Eldridge S, Li T. Chapter 23: Including variants on randomized trials. In: Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA, editors. Cochrane handbook for systematic reviews of interventions version 6.1. Cochrane; 2020. Available from www.training.cochrane.org/handbook

- Hinduja S, Patchin JW. Cyberbullying: An exploratory analysis of factors related to offending and victimization. Deviant Behavior. 2008;29:129–156. doi: 10.1080/01639620701457816.

- Hinduja S, Patchin JW. Bullying, cyberbullying, and suicide. Archives of Suicide Research. 2010;14:206–221. doi: 10.1080/13811118.2010.494133.

- Huang YY, Chou C. An analysis of multiple factors of cyberbullying among junior high school students in Taiwan. Computers in Human Behavior. 2010;26:1581–1590. doi: 10.1016/j.chb.2010.06.005.

- Ingram KM, Espelage DL, Merrin GJ, Valido A, Heinhorst J, Joyce M. Evaluation of a virtual reality enhanced bullying prevention curriculum pilot trial. Journal of Adolescence. 2019;71:72–83. doi: 10.1016/j.adolescence.2018.12.006.

- Jain O, Gupta M, Satam S, Panda S. Has the COVID-19 pandemic affected the susceptibility to cyberbullying in India? Computers in Human Behavior Reports. 2020;2:100029. doi: 10.1016/j.chbr.2020.100029.

- Kowalski RM, Giumetti GW, Schroeder AN, Lattanner MR. Bullying in the digital age: A critical review and meta-analysis of cyberbullying research among youth. Psychological Bulletin. 2014;140:1073. doi: 10.1037/a0036634.

- Kowalski RM, Limber SP. Electronic bullying among middle school students. Journal of Adolescent Health. 2007;41:S22–S30. doi: 10.1016/j.jadohealth.2007.08.017.

- Kowalski RM, Limber SP. Psychological, physical, and academic correlates of cyberbullying and traditional bullying. Journal of Adolescent Health. 2013;53:S13–S20. doi: 10.1016/j.jadohealth.2012.09.018.

- Landoll RR, La Greca AM, Lai BS, Chan SF, Herge WM. Cyber victimization by peers: Prospective associations with adolescent social anxiety and depressive symptoms. Journal of Adolescence. 2015;42:77–86. doi: 10.1016/j.adolescence.2015.04.002.

- Lee MS, Zi-Pei W, Svanström L, Dalal K. Cyber bullying prevention: Intervention in Taiwan. PLoS One. 2013;8.

- Lenhart A, Duggan M, Perrin A, Stepler R, Rainie L, Parker K. Pew Research Center. Pew Internet & American Life Project; 2015.

- Lukens EP, McFarlane WR. Psychoeducation as evidence-based practice: Considerations for practice, research, and policy. Brief Treatment & Crisis Intervention. 2004;4:205–225. doi: 10.1093/brief-treatment/mhh019.

- Marciano L, Schulz PJ, Camerini AL. Cyberbullying perpetration and victimization in youth: A meta-analysis of longitudinal studies. Journal of Computer-Mediated Communication. 2020;25:163–181. doi: 10.1093/jcmc/zmz031.

- Mathur MB, VanderWeele TJ. Robust metrics and sensitivity analyses for meta-analyses of heterogeneous effects. Epidemiology. 2020;31(3):356–358.

- Menesini E, Nocentini A, Palladino BE. Empowering students against bullying and cyberbullying: Evaluation of an Italian peer-led model. International Journal of Conflict and Violence. 2012;6:313–320. doi: 10.4119/ijcv-2922.

- Mishna F, Cook C, Saini M, Wu MJ, MacFadden R. Interventions to prevent and reduce cyber abuse of youth: A systematic review. Research on Social Work Practice. 2011;21:5–14. doi: 10.1177/1049731509351988.

- Mishna F, Saini M, Solomon S. Ongoing and online: Children and youth’s perceptions of cyber bullying. Children and Youth Services Review. 2009;31:1222–1228. doi: 10.1016/j.childyouth.2009.05.004.

- Modecki KL, Minchin J, Harbaugh AG, Guerra NG, Runions KC. Bullying prevalence across contexts: A meta-analysis measuring cyber and traditional bullying. Journal of Adolescent Health. 2014;55:602–611. doi: 10.1016/j.jadohealth.2014.06.007.

- Moeyaert M, Ugille M, Beretvas SN, Ferron J, Bunuan R, Van den Noortgate W. Methods for dealing with multiple outcomes in meta-analysis: A comparison between averaging effect sizes, robust variance estimation and multilevel meta-analysis. International Journal of Social Research Methodology. 2017;20:559–572. doi: 10.1080/13645579.2016.1252189.

- Nation M, Crusto C, Wandersman A, Kumpfer KL, Seybolt D, Morrissey-Kane E, Davino K. What works in prevention: Principles of effective prevention programs. American Psychologist. 2003;58:449–456. doi: 10.1037/0003-066X.58.6-7.449.

- Nocentini A, Calmaestra J, Schultze-Krumbholz A, Scheithauer H, Ortega R, Menesini E. Cyberbullying: Labels, behaviours and definition in three European countries. Australian Journal of Guidance and Counselling. 2010;20:129–142. doi: 10.1375/ajgc.20.2.129.

- Olweus D. Bully/victim problems among schoolchildren: Basic facts and effects of a school based intervention program. In: Pepler DJ, Rubin KH, editors. The development and treatment of childhood aggression. Erlbaum; 1991. pp. 411–448.

- Olweus D, Limber SP. Bullying in school: Evaluation and dissemination of the Olweus Bullying Prevention Program. American Journal of Orthopsychiatry. 2010;80:124–134. doi: 10.1111/j.1939-0025.2010.01015.x.

- Olweus D, Limber SP. The Olweus Bullying Prevention Program: Implementation and evaluation over two decades. In: Jimerson SR, Swearer SM, Espelage DL, editors. Handbook of bullying in schools: An international perspective. Routledge; 2010. pp. 377–401.

- Olweus D, Limber SP, Breivik K. Addressing specific forms of bullying: A large scale evaluation of the Olweus bullying prevention program. International Journal of Bullying Prevention. 2019;1:70–84. doi: 10.1007/s42380-019-00009-7.

- Ortega R, Del Rey R, Casas JA. Knowing, building and living together on internet and social networks: The ConRed cyberbullying prevention program. International Journal of Conflict and Violence. 2012;6:302–312.

- Ortega R, Elipe P, Mora-Merchán JA, Genta ML, Brighi A, Guarini A, Tippett N. The emotional impact of bullying and cyberbullying on victims: A European cross-national study. Aggressive Behavior. 2012;38:342–356. doi: 10.1002/ab.21440.

- Palladino BE, Nocentini A, Menesini E. Evidence-based intervention against bullying and cyberbullying: Evaluation of the NoTrap! program in two independent trials. Aggressive Behavior. 2016;42:194–206. doi: 10.1002/ab.21636.

- Pearce N, Cross D, Monks H, Waters S, Falconer S. Current evidence of best practice in whole-school bullying intervention and its potential to inform cyberbullying interventions. Journal of Psychologists and Counsellors in Schools. 2011;21:1–21. doi: 10.1375/ajgc.21.1.1.

- Pigott TD, Polanin JR. Methodological guidance paper: High-quality meta-analysis in a systematic review. Review of Educational Research. 2020;90:24–46. doi: 10.3102/0034654319877153.

- Polanin JR, Hennessy EA, Tsuji S. Transparency and reproducibility of meta-analyses in psychology: A meta-review. Perspectives on Psychological Science. 2020. doi: 10.1177/1745691620906416.

- Polanin JR, Espelage DL, Grotpeter JK, Valido A, Ingram KM, Torgal C, ..., Robinson LE. Locating unregistered and unreported data for use in a social science systematic review and meta-analysis. Systematic Reviews. 2020;9:1–9.

- Polanin JR, Pigott TD, Espelage DL, Grotpeter JK. Best practice guidelines for abstract screening large-evidence systematic reviews and meta-analyses. Research Synthesis Methods. 2019;10(3):330–342. doi: 10.1002/jrsm.1354.

- Polanin JR, Tanner-Smith EE, Hennessy E. Estimating the difference between published and unpublished effect sizes: A meta-review. Review of Educational Research. 2016;86:207–236. doi: 10.3102/0034654315582067.

- Popović-Ćitić B, Djurić S, Cvetković V. The prevalence of cyberbullying among adolescents: A case study of middle schools in Serbia. School Psychology International. 2011;32:412–424. doi: 10.1177/0143034311401700.

- Rodgers MA, Pustejovsky JE. Evaluating meta-analytic methods to detect selective reporting in the presence of dependent effect sizes. 2019 Dec 3. doi: 10.31222/osf.io/vqp8u.

- Salmivalli C. Participant roles in bullying: How can peer bystanders be utilized in interventions? Theory Into Practice. 2014;53(4):286–292.

- Schneider SK, O’Donnell L, Stueve A, Coulter RW. Cyberbullying, school bullying, and psychological distress: A regional census of high school students. American Journal of Public Health. 2012;102:171–177. doi: 10.2105/AJPH.2011.300308.

- Sorrentino A, Baldry AC, Farrington DP. The efficacy of the Tabby improved prevention and intervention program in reducing cyberbullying and cybervictimization among students. International Journal of Environmental Research and Public Health. 2018;15:2536. doi: 10.3390/ijerph15112536.

- Tanrıkulu T. The analysis of variables about cyber bullying and the effect of an intervention program with tendency to reality therapy on cyber bullying behaviors [Unpublished dissertation]. Sakarya University; 2013.

- Tanrıkulu T, Kınay H, Arıcak OT. Sensibility development program against cyberbullying. New Media & Society. 2015;17:708–719. doi: 10.1177/1461444813511923.