Abstract

Two types of neural circuits contribute to legged locomotion: central pattern generators (CPGs) that produce rhythmic motor commands (even in the absence of feedback, termed “fictive locomotion”), and reflex circuits driven by sensory feedback. Each circuit alone serves a clear purpose, and the two together are understood to cooperate during normal locomotion. The difficulty is in explaining their relative balance objectively within a control model, as there are infinite combinations that could produce the same nominal motor pattern. Here we propose that optimization in the presence of uncertainty can explain how the circuits should best be combined for locomotion. The key is to re-interpret the CPG in the context of state estimator-based control: an internal model of the limbs that predicts their state, using sensory feedback to optimally balance competing effects of environmental and sensory uncertainties. We demonstrate use of optimally predicted state to drive a simple model of bipedal, dynamic walking, which thus yields minimal energetic cost of transport and best stability. The internal model may be implemented with neural circuitry compatible with classic CPG models, except with neural parameters determined by optimal estimation principles. Fictive locomotion also emerges, but as a side effect of estimator dynamics rather than an explicit internal rhythm. Uncertainty could be key to shaping CPG behavior and governing optimal use of feedback.

Subject terms: Computer modelling, Control theory, Oscillators, Computational models, Computational neuroscience, Computational neuroscience, Motor control, Sensorimotor processing

Introduction

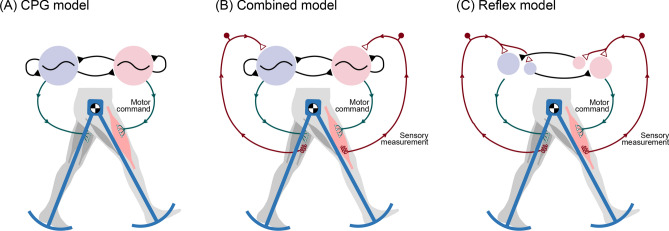

A combination of two types of neural circuitry appears responsible for the basic locomotory motor pattern. One type is the central pattern generator (CPG; Fig. 1A), which generates pre-programmed, rhythmically timed, motor commands1–3. The other is the reflex circuit, which produces motor patterns triggered by sensory feedback (Fig. 1C). Although they normally work together, each is also capable of independent action. The intrinsic CPG rhythm patterns can be sustained with no sensory feedback and only a tonic, descending input, as demonstrated by observations of fictive locomotion4,5. Reflex loops alone also appear capable of controlling locomotion1, particularly with a hierarchy of loops integrating multiple sensory modalities for complex behaviors such as stepping and standing control6,7. We refer to the independent extremes as pure feedforward control and pure feedback control. Of course, within the intact animal, both types of circuitry work together for normal locomotion (Fig. 1B)8. However, this cooperation also presents a dilemma, of how authority should optimally be shared between the two9.

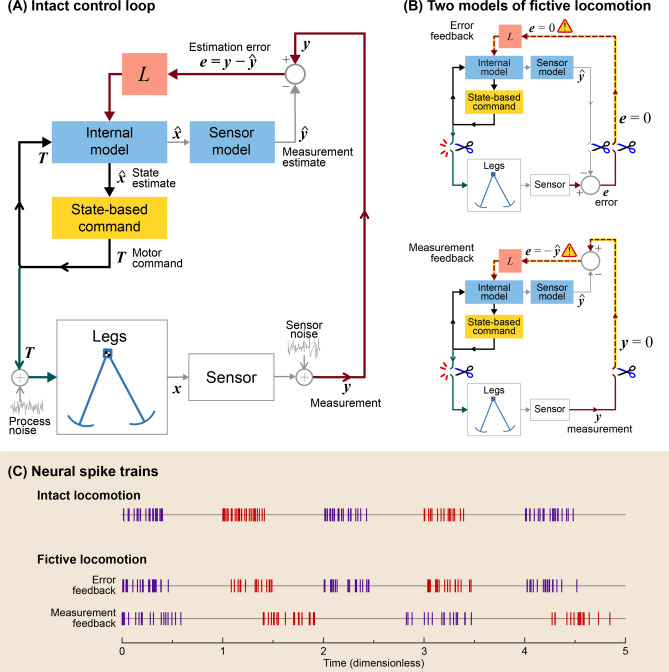

Figure 1.

Three ways to control bipedal walking. (A) The central pattern generator (CPG) comprises neural oscillators that can produce rhythmic motor commands, even in the absence of sensory feedback. Rhythm can be produced by mutually inhibiting neural half-center oscillators (shaded circles). (B) In normal animal locomotion, the CPG is thought to combine an intrinsic rhythm with sensory feedback, so that the periphery can influence the motor rhythm. (C) In principle, sensory feedback can also control and stabilize locomotion through reflexes, without need for neural oscillators. The extreme of (A) CPG control without feedback is referred to here as pure feedforward control, and the opposite extreme (C) with no oscillators as pure feedback control. Any of these schemes could potentially produce the same nominal locomotion pattern, but some (B) combination of feedforward and feedback appears advantageous.

The combination of central pattern generators with sensory feedback has been explored in computational models. For example, some models have added feedback10–13 to biologically-inspired neural oscillators e.g.,14, which employ networks of mutually inhibiting neurons to intrinsically produce alternating bursts of activity. Sensory input to the neurons can change network behavior based on system state, such as foot contact and limb or body orientation, to help respond to disturbances. The gain or weight of sensory input determines whether it slowly entrains the CPG15, or whether it resets the phase entirely16,17. Controllers of this type have demonstrated legged locomotion in bipedal18 and quadrupedal robots19,20, and even swimming and other behaviors21. A general observation is that feedback improves robustness such as against uneven terrain22. And the addition of feedforward into feedback-based control has been used to vary walking speed23, adjust interlimb coordination24, or enhance stability25. However, a disadvantage is that the means of combining CPG and feedback is often designed ad hoc. This makes it challenging to extend the findings from one CPG model to another or to other gaits or movement tasks.

Optimization principles offer a means for a model to be uniquely defined by quantitative and objective performance measures26. Indeed, CPG models have long used optimization to determine parameter values23,27,28. However, the most robust and capable models to date have tended not to use CPGs or intrinsic timing rhythms. For example, human-like optimization models can traverse highly uneven terrain29–31 using only state-based control, where the control command is a function of system state (e.g., positions and velocities of limbs). In fact, reinforcement learning and other robust optimization approaches (e.g., dynamic programming32,33) are typically expressed solely in terms of state, and do not even have provision for time as an explicit input. They have no need for, nor even benefit from, an internally generated rhythm. But feedforward is clearly important in biological CPGs, suggesting that some insight is missing from these optimal control models.

There may be a principled reason for a biological controller not to rely on state, as measured, alone. Realistically, a system's state can only be known imperfectly, due to noisy and imperfect sensors. The solution is state estimation33, in which an internal model of the body is used to predict the expected state and sensory information, and feedback from actual sensors is used to correct the state estimate. The sensory feedback gains may be optimized for minimum estimation error separate from control. The separation principle33 of control systems shows that state-based control may be optimized for control performance without regard to noisy sensors, and nevertheless combine well with state estimation33. In practice, actual robots (e.g., bipedal Atlas34 and quadrupedal BigDog35) gain high performance and robustness through such a combination of state estimation driving state-(estimator)-based control. In fact, state-estimator control may be optimized for a noisy environment33, and has been proposed as a model for biological systems36–38. The internal model for state estimation is not usually regarded as relevant to the biological CPG’s feedforward, internal rhythm, but we have proposed that it can potentially produce a CPG-like rhythm under conditions simulating fictive locomotion39. Here the rhythmic output is interpreted not as the motor command per se, but as a state estimate that drives the motor command. We demonstrated this concept with a simple model of rhythmic leg motions39 and a preliminary walking model40. This suggests that a walking model designed objectively with state estimator-based control might produce CPG-like rhythms that objectively contribute to locomotion performance.

The purpose of the present study was to test an estimator-based CPG controller with a dynamic walking model. We devised a simple state-based control scheme to produce a stable and economical nominal gait, producing stance and swing leg torques as a function of the leg states. Assuming a noisy system and environment, we devised a state estimator for leg states. A departure from other CPG models is that we optimize sensory feedback and associated gains not for walking performance, but for accurate state estimation. The combination of control and estimation define our interpretation of a CPG controller that incorporates sensory feedback in a noisy environment. Moreover, this same controller may be realized in the form of a biologically-inspired half center oscillator14, with neuron-like dynamics. Because the control scheme depends on accurate state information for its stability and economy, we expected that minimizing state estimation error (and not explicitly walking performance) would nonetheless allow this model to achieve better walking performance. Scaling the sensory feedback either higher or lower than theoretically optimal would be expected to yield poorer performance. Such a model may conceptually explain how CPGs could optimally incorporate sensory feedback.

Results

Central pattern generator controls a dynamic walking model

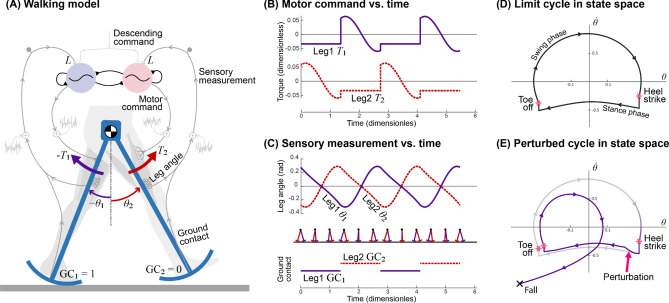

The CPG controller produced a periodic gait with a model of human-like dynamic walking (Fig. 2A). The model was inspired by the mechanics and energetics of humans41, whose legs have pendulum-like passive dynamics42 (the swing leg as a pendulum, the stance leg as inverted pendulum), modulated by active control. Model parameters such as mass distribution and foot curvature, and the resultant walking speed and step length were similar to human walking. The nominal step length for a given walking speed also minimized the model’s energy expenditure, as also observed in humans43. Acting on the legs were torque commands ( and , Fig. 2B) from the CPG, designed to yield a periodic gait (Fig. 2C), by restoring energy dissipated with each step’s ground contact collision44,45. The leg angles and the ground contact condition (“GC”, 1 for contact, 0 otherwise; Fig. 2C) were treated as measurements to be fed back to the CPG. Each leg’s states () described a periodic orbit or limit cycle (Fig. 2D), which was locally stable for zero or mild disturbances, but could easily be perturbed enough to make it fall (Fig. 2E).

Figure 2.

Dynamic walking model controlled by CPG controller with feedback. (A) Pendulum-like legs are controlled by motor commands for hip torques and , with sensory feedback of leg angle and ground contact “GC” relayed back to controller. (B) Controller produces alternating motor commands versus time, which drive (C) leg movement . Sensory measurements of leg angle and ground contact in turn drive the CPG. (D) Resulting motion is a nominal periodic gait (termed a “limit cycle”) plotted in state space versus . (E) Discrete perturbation to the limit cycle can cause model to fall.

The resulting nominal (undisturbed) gait had approximately human-like walking speed and step length. The nominal walking speed was equivalent to 1.25 m/s with step length 0.55 m (or normalized 0.4 and 0.55 , respectively; is gravitational constant, is leg length). The corresponding mechanical cost of transport was 0.053, comparable to other passive and active dynamic walking models (e.g.,43,46,47).

This controller had four important features for the analyses that follow. First, the gait had dynamic, pendulum-like leg behavior similar to humans41,42. Second, the controller stabilized walking, meaning ability to withstand minor perturbations due to its state-based control. Third, the information driving control was produced by the controller including CPG, whose entire dynamics and feedback gains were objectively designed by optimal estimation principles, with no ad hoc design. And fourth, the overall amount of feedback could be varied continuously between either extreme of pure feedforward and pure feedback (sensory feedback gain ranging zero to infinity), while always producing the same nominal gait under noiseless conditions. This was to facilitate study of how parametric variation of sensory feedback affects performance, particularly under noisy conditions.

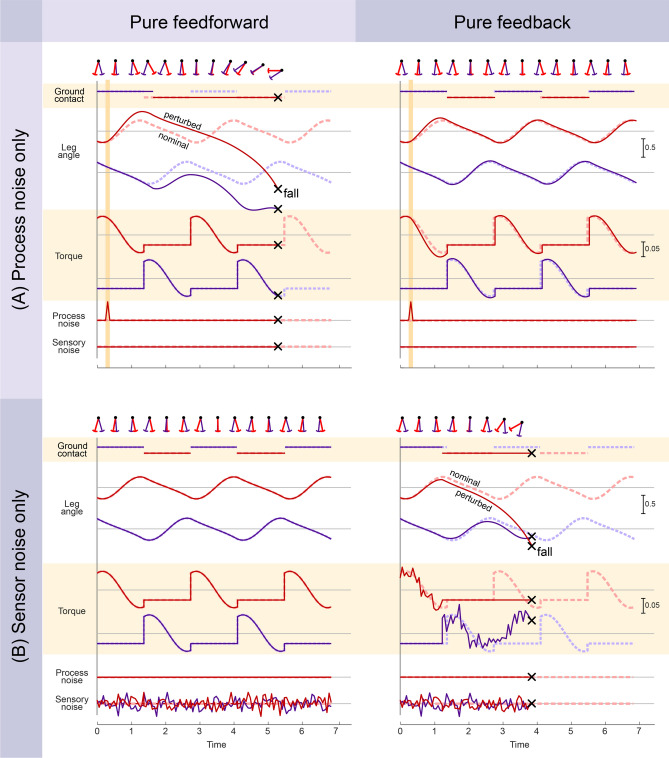

Pure feedforward and pure feedback are both susceptible to noise

The critical importance of sensory feedback was demonstrated with a disturbance acting on the legs (Fig. 3A). Termed process noise, it represents not only disturbances but also any uncertainty in the environment or internal model. For this demonstration, the disturbance consisted of a single impulsive swing leg angular acceleration (amplitude 5 at 15% of nominal stride time). The pure feedforward controller failed to recover (Fig. 3A left), and would fall within about two steps. Its perturbed leg and ground contact states became mismatched to the nominal rhythm, which in pure feedforward does not respond to state deviations. In contrast, the feedback controller could recover from the same perturbation (Fig. 3A right) and return to the nominal gait. Feedback control is driven by system state, and therefore automatically alters the motor command in response to perturbations. Our expectation is that even if a feedforward control is stable under nominal conditions (with zero or mild disturbances), a feedback controller could generally be designed to be more robust.

Figure 3.

Pure feedforward and pure feedback (left and right columns, respectively) are adversely affected by (A) process and (B) sensor noise. Process noise refers to disturbances from the environment or imperfect actuation, and sensor noise refers to imperfect sensing. Plots show ground contact condition, leg angles, commanded leg torques, and noise levels versus time, including both the nominal condition without noise (dashed lines), and the perturbed condition with noise (solid lines). With an impulsive, process noise disturbance, pure feedforward control tended to fall, whereas pure feedback was quite stable. With sensor noise alone, pure feedforward was unaffected, but pure feedback tended to fall.

We also applied an analogous demonstration with sensor noise (Fig. 3B). Adding continuous sensor noise (standard deviation of for each independent leg) to sensory measurements had no effect on pure feedforward control (Fig. 3B left), which ignores sensory signals entirely. But pure feedback was found to be sensitive to noise-corrupted measurements, and would fall within a few steps (Fig. 3B right). This is because erroneous feedback would trigger erroneous motor commands not in accordance with actual limb state. The combined result was that both pure feedforward and pure feedback control had complementary weaknesses. They performed identically without noise, but each was unable to compensate for its particular weakness, either process noise or sensor noise. Feedback control can be robust, but it needs accurate state information.

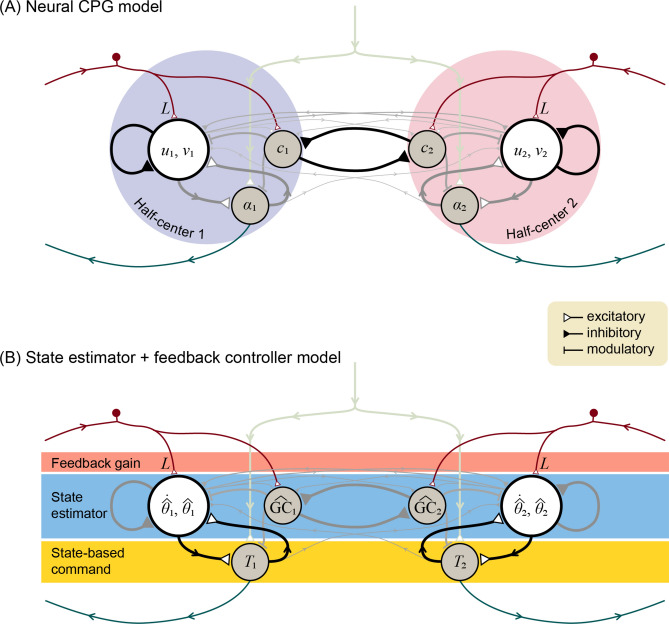

Neural half-center oscillators are re-interpreted as state estimator

We determined two apparently different representations for the same CPG model. This first was a biologically inspired, neural oscillator (Fig. 4A) representation, intended to resemble previous CPG models10–13 demonstrating incorporation of sensory feedback. As with such models, the intrinsic rhythm was produced with two mutually inhibiting half-center oscillators, one driving each leg ( for left leg, for right leg). Each half-center had a total of three neurons, one a primary neuron with standard second-order dynamics (states and ). Its output drove the second neuron () producing the motor command to the ipsilateral leg. The third neuron was responsible for relaying ground contact (“”) sensory information, to both excite the ipsilateral primary neuron and inhibit the contralateral one. Although this architecture is superficially similar to previous ad hoc models, the present CPG rhythmic dynamics were determined objectively by optimal estimation principles.

Figure 4.

Locomotion control circuit interpreted in two representations: (A) Neural central pattern generator with mutually inhibiting half-center oscillators, and as (B) state estimator with feedback control. Each half-center has a primary neuron with two states ( and , respectively), an auxiliary neuron for registering ground contact, and an alpha motoneuron driving leg torque commands. Inputs include a tonic descending drive, and afferent sensory data with gain . State estimator acts as second-order internal model of leg dynamics to estimate leg states (hat symbol denotes estimate) and ground contact , which drive state-based command . The estimator dynamics and estimator parameters including sensory feedback , and thus the corresponding neural connections and weights, are designed for minimum mean-square estimation error. Leg dynamics have nonlinear terms (see “Methods” section) of small magnitude (thin grayed lines).

The same CPG architecture was then re-interpreted in a second, control systems and estimation framework (Fig. 4B), while changing none of the neural circuitry. Here, the structure was not treated as half-center oscillators, but rather as three neural stages from afferent to efferent. The first stage receiving sensory feedback signal was interpreted as a feedback gain (upper rectangular block, Fig. 4B), modulating the behavior of the second stage, interpreted as a state estimator (middle rectangular block, Fig. 4B) acting as an internal model of leg dynamics. Its output was interpreted as the state estimate, which was fed into the third, state-based motor command stage (lower rectangular block, Fig. 4B). In this interpretation, the three stages correspond with a standard control systems architecture for a state estimator driving state feedback control. In fact, the neural connections and weights of the half-center oscillators were determined by, and are therefore specifically equivalent to, a state estimator driving motor commands to the legs.

The two representations provide complementary insights. The half-center model shows how sensory information can be incorporated into and modulate a CPG rhythm. Half-center models have previously been designed ad hoc and tuned for a desired behavior, and have generally lacked an objective and unique means to determine the architecture (e.g., number of neurons and interconnections) and neural weights. The state estimator-based model offers a means to determine the architecture, neural weights, and parameters for the best performance. This half-center model with feedback could thus be regarded as optimal, for producing accurate state estimates despite the presence of noise.

Optimized sensory feedback gain L yields accurate state estimates

We next examined walking performance in the presence of both process and sensor noise, while varying sensory feedback gain above and below optimal (Fig. 5; result from 20 simulation trials per condition, 100 steps per trial). We intentionally applied a substantial amount of noise (with fixed covariances), sufficient to topple the model. This was to demonstrate how walking performance can be improved with appropriate sensory feedback gain , unlike the noiseless case where the model always walks perfectly.

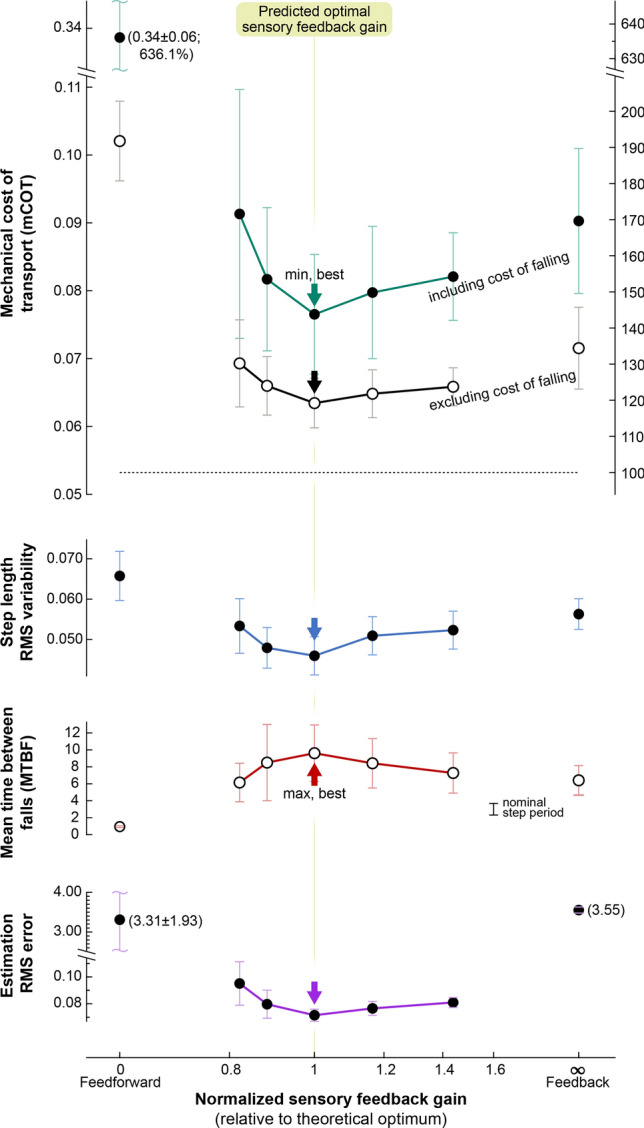

Figure 5.

State estimation accuracy and walking performance under noisy conditions, as a function of sensory feedback gain. The theoretically optimal sensory feedback gain (normalized gain of 1) yielded best performance, in terms of mechanical cost of transport (mCOT), step length variability, mean time between falls (MBTF), and state estimator error. Normalized sensory feedback gain varies between extremes of pure feedforward (to the left) and pure feedback (to the right), with 1 corresponding to theoretically predicted optimum . Formally, normalized gain is defined as where denotes matrix norm. Vertical arrow indicates best performance (minimum for all measures except maximum for MTBF). For all gains, model was simulated with a fixed combination of process and sensor noise as input to multiple trials, yielding ensemble average measures. Each data point is an average of 20 trials of 100 steps each, and errorbar indicates standard deviation of the trials. Mechanical cost of transport (mCOT) was defined as positive work divided by body weight and distance travelled, and step variability as root-mean-square (RMS) variability of step length. Falling takes time and dissipates mechanical energy, and so mCOT was computed both including and excluding losses from falls (work, time, distance).

As expected of optimal estimation, best estimation performance was achieved for the gain equal to theoretically predicted optimum (Fig. 5, normalized sensory feedback gain of 1). We had designed with linear quadratic estimation (LQE) based on the covariances of process and sensor noise. Applying that gain in nonlinear simulation with added noise resulted in minimum estimation error (Fig. 5, bottom). This suggests that a linear gain was sufficient to yield a good state estimate despite system nonlinearities.

The optimal sensory feedback gain also yielded best walking performance. Even though the same state-based motor command function ( in Fig. 4) was applied in all conditions, that function was dependent on accurate state information. As a result, the optimal gain yielded greater economy, less step length variability, and fewer falls (Fig. 5). This was actually a side-effect of optimal state estimation, because our state-based motor command was not explicitly designed to optimize any of these performance measures. The minimal mechanical cost of transport was 0.077 under noisy conditions, somewhat higher than the nominal 0.053 without noise. Step length variability was 0.046 , and the model experienced occasional falls, with MTBF (mean time between falls) of about 9.61 (or about 7.1 steps). This optimal case served as a basis for comparisons with other values for gain .

Accurate state estimates yield good walking performance

Applying sensory feedback gains either lower or higher than theoretically predicted optimum generally resulted in poorer walking performance (Fig. 5). We expected that any state-based motor command would be adversely affected by poorer state estimates. The effect of reducing sensory feedback gain (normalized gain less than 1) was to make the system (and particularly its state estimate) more reliant on its feedforward rhythm, and less responsive to external perturbations. The effect of increasing sensory feedback gain was to make the system more reliant and responsive to noisy feedback. Indeed, the direct effect of selecting either too low or too high a sensory feedback gain was an increased error in state estimate. The consequences of control based on less accurate state information were more falls, more step length variability, and greater cost of transport. Over the range of gains examined (normalized sensory feedback gain ranging 0.82–1.44), the performance measures worsened on the order of about 10% (Fig. 5). This suggests that, in a noisy environment, a combination of feedforward and feedback is important for achieving precise and economical walking, and for avoiding falls. Moreover, the optimal combination can be designed using control and estimation principles. This testing condition was referred as “reference condition” for the following demonstration.

Amount of noise determines optimal sensory feedback gain L

We next evaluated how walking performance and feedback gain would change with different amounts of noise. The theoretically calculated optimum sensory feedback gain depends on the ratio of process noise to sensor noise covariances48. Relatively more process noise favors higher sensory feedback gain, and relatively more sensor noise favors a lower sensory feedback gain and thus greater reliance on the feedforward internal model. We demonstrated this by applying different amounts of process noise (low, medium, and high) with a fixed amount of sensor noise, evaluating the optimal sensory feedback gain, and performing walking simulations with gains varied about the optimum. Noise covariances were set to multiples of the reference conditions (Fig. 5), of 0.36, 1.15, and 2.06 respectively for low, medium, high process noise; sensor noise was 1.15 of reference condition. The ratio of process to sensor noise covariance was thus smaller (low), the same (medium), and larger (high) compared to the reference condition, as was the theoretically optimal gain obtained using linear quadratic estimation (LQE) equation (Fig. 6).

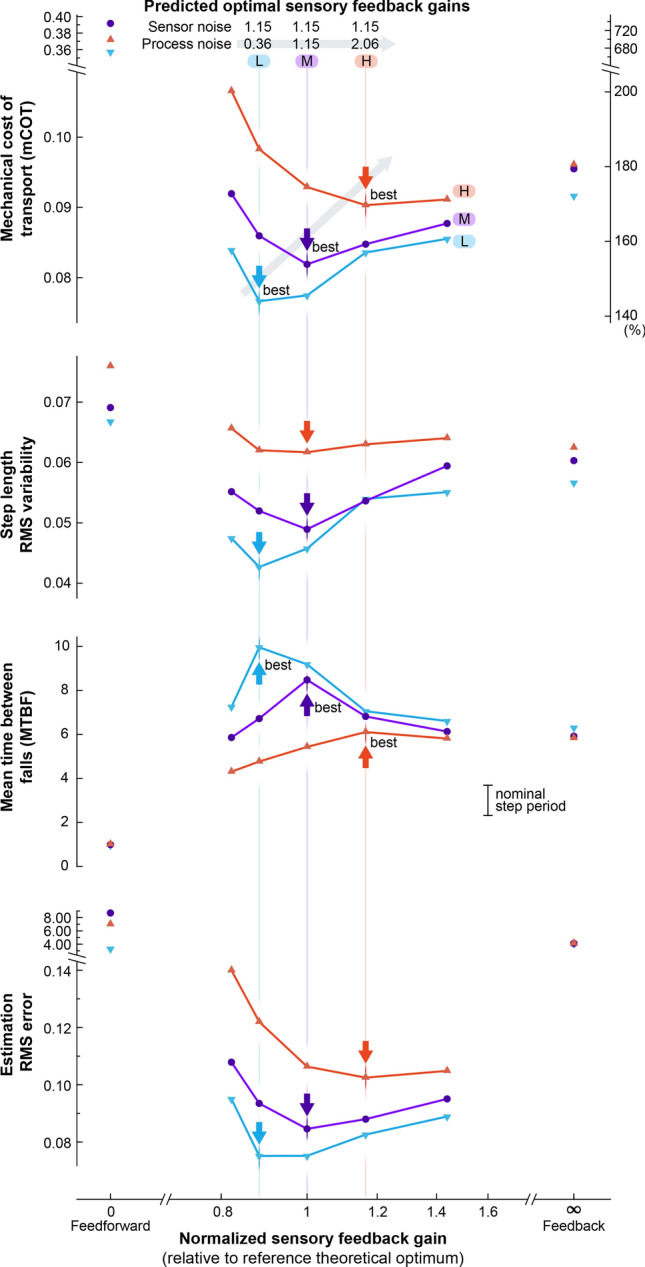

Figure 6.

Theoretically optimal sensory feedback gains increase with greater process noise. Effect of three conditions of increasing process noise (L low, M medium, H high) on walking performance as a function of sensory feedback gain. The theoretically optimal gains (vertical lines) led to best performance, as quantified by mechanical cost of transport (mCOT, including falls), step length variability, mean time between falls (MBTF), and state estimator error. (An exception was step length variability, which had a broad and indistinct minimum.) The predicted optimal sensory feedback gains for each noise condition are indicated with vertical lines. Arrows indicate best performance for each measure for each noise condition. Performance is plotted with normalized sensory feedback gain ranging between extremes of pure feedforward (to the left) and pure feedback (to the right), with 1 corresponding to theoretical optimum of the previous testing condition (Fig. 5). The process noise covariance was set to multiples of the previous reference values: 0.36 for L, 1.15 for M, and 2.06 for H. Sensor noise covariance was set to 1.15 of previous value.

With varying noise, good performance was still achieved with the corresponding, theoretically optimal gain (Fig. 6). The theoretically predicted optimal gain increased with greater process noise, and simulation trials yielded minimum state estimation error at that gain (Fig. 6, Estimation RMS error). Accurate state estimation also contributed to walking performance, with a trend of minimum cost of transport and step variability, and maximum time between falls at the corresponding theoretical optimum. An exception was step length variability in the high process noise condition, which had a broad minimum, with simulation optimum at a slightly lower gain than theoretically predicted. Overall, the linear state estimator predicted the best performing gain well, in terms of state estimation error, energy economy, and robustness to falls. These results are consistent with the expectation that accurate state estimation contributes to improved control.

Removal of sensory feedback causes emergence of fictive locomotion

Although the CPG model normally interacts with the body, it was also found to produce fictive locomotion with peripheral feedback removed (Fig. 7). Here we considered two types of biological sensors, referred to as “error feedback” and “measurement feedback” sensors. Error feedback refers to sensors that can distinguish unexpected perturbations from intended movements49. For example, some muscle spindles and fish lateral lines50 receive corollary efferent signals (e.g. gamma motor neurons in mammals, alpha in invertebrates51) that signify intended movements, and could be interpreted as effectively computing an error signal within the sensor itself50. Measurement feedback sensors refers to those without efferent inputs (e.g., nociceptors, golgi tendon organs, cutaneous skin receptors, and other muscle spindles52), that provide information more directly related to body movement. Both types of sensors are considered important for locomotion, and so we examined the consequences of removing either type.

Figure 7.

Emergence of fictive locomotion from CPG model. (A) Block diagram of intact control loop, where sensory measurements and estimation error are fed into internal model. Motor command drives the legs and (through efference copy) the internal model of legs. (B) Two models of fictive locomotion, starting with the intact system but with sensory feedback removed in two ways. Error feedback refers to sensors that receive efferent copy as inhibitory drive (e.g., some muscle spindles). Removal of error (dashed line) results in sustained fictive rhythm, due to feedback between internal model and state-based command. Measurement feedback refers to other, more direct sensors of limb state . Removal of such feedback can also produce sustained rhythm from internal model of legs and sensors, interacting with state-based command. (C) Simulated motor spike trains show how fictive locomotion can resemble intact. Measurement feedback case produces slower and weaker rhythm than error feedback.

These cases were modeled by disconnecting the periphery in two different ways. This is best illustrated by redrawing the CPG (Fig. 4) more explicitly as a traditional state estimator block diagram (Fig. 7A). The state estimator block diagram could be rearranged into two equivalent forms (Fig. 7B) by relocating the point where the error signal is calculated. In the Error feedback model (Fig. 7B, top), the error is treated as a peripheral comparison associated with the sensor, and in the Measurement feedback model (Fig. 7B, bottom), as a more central comparison. The two block diagrams are logically equivalent when intact, but they differ in behavior with the periphery disconnected.

The case of fictive locomotion with Error feedback sensors (Fig. 7B, top) was modeled by disconnecting error signal , so that the estimator would run in an open-loop fashion, as if the state estimate were always correct. Despite this disconnection, there remained an internal loop between the estimator internal model and the state-based command generator, that could potentially sustain rhythmic oscillations. The case of fictive locomotion with measurement feedback sensors (Fig. 7C Measurement feedback) was modeled by disconnecting afferent signal y, and reducing estimator gain by about half, as a crude representation of highly disturbed conditions. There remained an internal loop, also potentially capable of sustained oscillations. We tested whether either case would yield a sustained fictive rhythm, illustrated by transforming the motor command into neural firing rates using a Poisson process.

We found that removal of both types of sensors still yielded sustained neural oscillations (Fig. 7C), equivalent to fictive locomotion. In the case of Error feedback (Fig. 7B), the motor commands from the isolated CPG were equivalent to the intact case without noise in terms of frequency and amplitude. In the case of Measurement feedback (Fig. 7B), the state estimator tended to drive estimate toward zero, and simulations still produced periodic oscillations, albeit with slower frequency and reduced amplitude compared to intact. This is not unlike observations of fictive locomotion in animals53, although our model’s response depends on manner of disconnection. Here, with both types of disconnection, the resulting fictive locomotion should not be interpreted as evidence of an intrinsic rhythm, but rather a side effect of incorrect state estimation.

Discussion

We have examined how central pattern generators could optimally integrate sensory information to control locomotion. Our CPG model offers an adjustable gain on sensory feedback, to allow for continuous adjustment between pure feedback control to pure feedforward control, all with the same nominal gait under perfect conditions. The model is compatible with previous neural oscillator models, while also being designed through optimal state estimation principles. Simulations reveal how sensory feedback becomes critical under noisy conditions, although not to the exclusion of intrinsic, neural dynamics. In fact, a combination of feedforward and feedback is generally favorable, and the optimal combination can be designed through standard estimation principles. Estimation principles apply quite broadly, and could be readily applied to other models, including ones far more complex than examined here. The state estimation approach also suggests new interpretations for the role of CPGs in animal or robot locomotion.

Feedforward and feedback may be combined optimally for state estimation

One of our most basic findings was that the extremes of pure feedforward or pure feedback control each performed relatively poorly in the presence of noise (Fig. 3). Pure feedforward control, driven solely by an open-loop rhythm, was highly susceptible to falling as a result of process noise. The general problem with a feedforward or time-based rhythm is that a noisy environment can disturb the legs from their nominal motion, so that the nominal command pattern is mismatched for the perturbed state. Under noisy conditions, it is better to trigger motor commands based on feedback of actual limb state, rather than time. But feedback also has its weaknesses, in that noisy sensory information can lead to noisy commands.

Better than these extremes is to combine both feedforward and feedback together, modulated by sensory feedback gain . The relative trade-off between process and measurement noise, described by the ratio of their covariances, determines the theoretically optimal gain. That gain was found to yield the least estimation error in simulation (normalized sensory feedback gain = 1 in Fig. 5). Moreover, variations in covariance produced predictable shifts in the theoretical optimum, which again yielded least estimation error in simulation (Fig. 6). We demonstrated this using relatively simple, but rigorously defined33 linear estimator dynamics, which worked well despite nonlinearities in the motor command and walking dynamics. Still better performance would be expected with nonlinear estimation techniques such as extended Kalman filters and particle filters54.

State estimation may be separated from state-based control

A unique aspect of our approach is the separation of sensory processing from control. We treat sensory information as inherently noisy, and treat the CPG as the optimal filter of noisy sensory information yielding the best state estimate. The control is then driven by that estimate, and may be designed independently of sensors and for arbitrary objectives. In fact, the present state-based motor command (Eq. 6) was designed ad hoc for reasonable performance, without explicitly optimizing any performance measures. Nevertheless, measures such as cost of transport and robustness against falls showed best performance with the theoretically optimal sensory feedback gain (Figs. 5, 6).

There are several advantages to this combination of control and estimation. First, accurate state estimation contributes to good state-based control, and poor estimation to poor control. For example, imprecise visual information can induce variability in foot placement55. Increased variability in walking has been associated with poorer walking economy56 and increased fall risk57. Second, this is manifested more rigorously as “the separation principle” of control systems design, where state-based control and state estimation may be optimized separately and then combined for good performance33,48. Third, the same state estimator (and therefore most of the CPG) can be paired with a variety of different state-based motor control schemes, such as commands for different gaits, for non-rhythmic movements including gait transitions, or to achieve different objectives such as balance and agility. This differs from ad hoc approaches, where different tasks are generally expected to require re-design of the entire CPG.

Central pattern generators may be re-interpreted as state estimators

Our model also explains how neural oscillators can be interpreted as state estimators (Fig. 4). Previous CPG oscillator models have incorporated sensory feedback for locomotion15–18,20–22,58, but have not generally defined an optimal feedback gain based on mechanistic control principles. We have re-interpreted neural oscillator circuits in terms of state estimation (Fig. 4), and shown how the gain can be determined in a principled manner, to minimize estimation error (Fig. 5) in the presence of noise. Here, the entire optimal state estimator architecture is defined objectively by the dynamics of the body and environment (including noise parameters), with no ad hoc parameters or architecture. This makes it possible to predict how feedback gains should be altered for different disturbance characteristics (Fig. 6). The nervous system has long been interpreted in terms of internal models, for example in central motor planning and control59–61 and in peripheral sensors49. Here we apply internal model concepts to CPGs, for better locomotion performance.

This interpretation also explains fictive locomotion as an emergent behavior. We observed persistent CPG activity despite removal of sensors (and either error or measurement feedback; Fig. 7), but this was not because the CPG was in any way intended to produce rhythmic timing. Rather, fictive locomotion was a side effect of a state-based motor command, in an internal feedback loop with a state estimator, resulting in an apparently time-based rhythm (Fig. 7B). Others have cautioned that CPGs should not be interpreted as generating decisive timing cues62–64, especially given the critical role of peripheral feedback in timing9,65,66. In normal locomotion, central circuits and periphery act together in a feedback loop, and so neither can be assigned primacy. The present model operationalizes this interaction, demonstrates its optimality for performance, and shows how it can yield both normal and fictive locomotion.

Optimal control principles may be compatible with neural control

This study argues that it is better to control with state rather than time. The kinematics and muscle forces of locomotion might appear to be time-based trajectories driven by an internal clock. But another view is that the body and legs comprise a dynamical system dependent on state (described e.g. by phase-plane diagrams, Fig. 2), such that the motor command should also be a function of state. That control could be continuous as examined here, or include discrete transitions between circuits (e.g.,8), and could be optimized or adapted through a variety of approaches, such as optimal control33, dynamic programming32, iterative linear quadratic regulators67, and deep reinforcement learning31). Such state-based control is capable of quite complex tasks, including learning different gaits and their transitions, avoiding or climbing over obstacles, and kicking balls31,68. But with noisy sensors34,35, state-based control typically also requires state estimation34,54, which introduces intrinsic dynamics and the possibility of sustained internal oscillations. We therefore suggest that models should be controlled by state-based control (e.g. deep reinforcement learning) coupled with state estimation for noisy environments to achieve advanced capability and performance. The resulting combination of state-driven control and estimation might also exhibit CPG-like fictive behavior, despite having no explicit time-dependent controls.

State estimation may also be applicable to movements other than locomotion. The same circuitry employed here (Fig. 4) could easily contribute a state estimate for any state-dependent movements. For example, a different rhythmic39 movement might be produced with a different state-based motor command; a non-rhythmic postural stabilization might employ a reflex-like (proportional-derivative) control; and a point-to-point movement might be produced by a descending command, supplemented by local stabilization. All of these would be equivalent to substituting different gains and interconnections to the state-based motor command ( or in Fig. 4), while still relying on all of the remaining circuitry (of Fig. 4). In our view, persistent oscillations could be the outcome of state estimation with an appropriate state-based command for the motoneuron (see “Methods” section). But the same half-center circuitry could be active and contribute to other movements that use non-locomotory, state-based commands. It is certainly possible that biological CPGs are indeed specialized purely for locomotion alone, but the state estimation interpretation suggests the possibility of a more general, and perhaps previously unrecognized, role in other movements.

The present optimization approach may offer insight on neural adaptation. Although we have explicitly designed a state estimator here, we would also expect a generic neural network, given an appropriate objective function, to be able to learn the equivalent of state estimation. The learning objective could be to minimize error of predicted sensory information, or simply locomotion performance such as cost of transport. Moreover, our results suggest that the eventual performance and control behavior should ultimately depend on body dynamics and noise. A neural system adapting to relatively low process noise (and high sensor noise) would be expected to learn and rely heavily on an internal model. Conversely, relatively high process noise (and low sensor noise) would rely more heavily on sensory feedback. A limitation of our model is that it places few constraints on neural representation, because there are many ways (or “state realizations”48) to achieve the same input–output function for estimation. But the importance and effects of noise on adaptation are hypotheses that might be testable with artificial neural networks or animal preparations.

Limitations of the study

There are, however, cases where state estimation is less applicable. State estimation applies best to systems with inertial dynamics or momentum. Examples include inverted pendulum gaits with limited inherent (or passive dynamic46) stability and pendulum-like leg motions39. The perturbation sensitivity of such dynamics makes state estimation more critical. But other organisms and models may have well-damped limb dynamics and inherently stable body postures, and thus benefit less from state estimation. Others have proposed that intrinsic CPG rhythms may have greater importance in lower than higher vertebrates, potentially because of differences in inherent stability69. There may also be task requirements that call for fast reactions with short synaptic delays, or organismal, energetic, or developmental considerations that limit the complexity of neural circuitry. Such concerns might call for reduced-order internal models48, or even their elimination altogether, in favor of faster and simpler pure feedforward or feedback. At the same time, actual neural circuitry is considerably more complex than the half-center model depicted here, and animals have far more degrees of freedom than considered here. There are some aspects of animal CPGs that could be simpler than full state estimation, and others that encompass very complex dynamics. A more holistic view would balance the principled benefits of internal models and state estimation against the practicality, complexity, and organismal costs.

There are a number of other limitations to this study. The “Anthropomorphic” walking model does not capture three-dimensional motion and multiple degrees of freedom in real animals. We used such a simple model because it is unlikely to have hidden features that could produce the same results for unexpected reasons. We also modeled extremely simple sensors, without representing the complexities of actual biological sensors. The estimator also used a constant, linear gain, and could be improved with nonlinear estimator variants. However, more complex body dynamics could be incorporated quite readily, because the state estimator (Fig. 4) consists of an internal model of body dynamics (Eq. 7) and a feedback loop with appropriate gain ( designed by estimation principles), and no ad hoc parameters. Nonlinearities might also be accommodated by methods of Bayesian estimation70, of which optimal state estimation or Kalman filtering is a special case.

Another limitation is that we used a particularly simple, state-based command law, which was designed more for robustness than for economy. Better economy could be achieved by powering gait with precisely-triggered, trailing-leg push-off39, rather than the simple hip torque applied here. However, the timing is so critical that feedforward conditions (low sensory feedback gains, Fig. 5) would fall too frequently to yield meaningful economy or step variability measures. We therefore elected for more robust control to allow a range of feedforward through feedback to be compared (Fig. 5). But even with more economical control or other control objectives, we would still expect best performance to correspond with optimal sensory gain, due to the advantages of accurate state information. We also used a relatively simple model of walking dynamics, for which more complex models could readily be substituted (Eq. 7) to yield an appropriate estimator. But more complex dynamics also imply more complex state-based locomotion control, for which there are few principled approaches. The present study interprets the CPG as a means to produce an accurate state estimate, which could be considered helpful for state-based control of any complexity.

Conclusion

Our principal contribution has been to reconcile optimal control and estimation with biological CPGs. Evidence of fictive locomotion has long shown that neural oscillators produce timing and amplitude cues. But pre-determined timing is also problematic for optimal control in unpredictable situations63, leading some to question why CPG oscillators should dictate timing62,64. To our knowledge, previous CPG models have not included process or sensor noise in control design. Such noise is simply a reality of non-uniform environments and imperfect sensors. But it also yields an objective criterion for uniquely defining control and estimation parameters. The resulting neural circuits resemble previous oscillator models and can produce and explain nominal, noisy, or fictive locomotion. In our interpretation, there is no issue of primacy between CPG oscillators and sensory feedback, because they interact optimally to deal with a noisy world.

Method

Details of the model and testing are as follows. The CPG model is first described in terms of neural, half-center circuitry, which is then paired with a walking model with pendulum-like leg dynamics. The walking gait is produced by a state-based command generator, which governs how state information is used to drive motor neurons. The model is subjected to process and sensor noise, which tend to cause the gait to be imprecise and subject to falling. The CPG is then re-interpreted as an optimal state estimator, for which sensory feedback gain and internal model parameters may be designed, as a function of noise characteristics. The model is then simulated over multiple trials to computationally evaluate its walking performance as a function of sensory feedback gain. It is also simulated without sensory feedback, to test whether it produces fictive locomotion.

CPG architecture based on Matsuoka oscillator

The CPG consists of two, mutually-inhibiting half-center oscillators, receiving a tonic descending input (Fig. 4A). Each half-center has second-order dynamics, described by states and . This is equivalent to a primary Matsuoka neuron with states for a membrane potential and adaptation or fatigue14. Locomotion requires relatively longer time constants than is realistic for a single biological neuron, and so each model neuron here should be regarded as shorthand for a network of biological neurons with adaptable time constants and synaptic weights, that in aggregate produce first- or second-order dynamics of appropriate time scale. The state produces an output that can be fed to other neurons. In addition, we included two types of auxiliary neurons (for a total of three neurons per half-center): one for accepting the ground contact input (, with value 1 when in ground contact and 0 otherwise for leg ), and the other to act as an alpha () motoneuron to drive the leg. We used a single motoneuron to generate both positive and negative (extensor and flexor) hip torques, as a simplifying alternative to including separate rectifying motoneurons.

Each half-center receives a descending command and two types of sensory feedback. The descending command is a tonic input , which determines the walking speed. Sensory input from the corresponding leg includes continuous and discrete information. The continuous feedback contains information about leg angle from muscle spindles and other proprioceptors71, which could be modeled as leg angle for measurement feedback, or error for error feedback sensors. The discrete information is about ground contact sent from cutaneous afferents72.

The primary neuron’s second-order dynamics are described by two states. The state is mainly affected by its own adaptation, a mutually inhibiting connection from other neurons, sensory input, and efference copy of the motor commands. The second state has a decay term, and is driven by the same neuron’s as well as sensory input. This is described by the following equations, inspired by14 and previous robot controllers designed for rhythmic arm movements (e.g.,73 and walking22):

| 1 |

| 2 |

| 3 |

where there are several synaptic weightings: decays and , adaptation gain , mutual inhibition strength (weighting of neuron ’s input from neuron ’s output, where ), an output function (set to identity here), sensory input gains and , and efference copy strength . The neuron also receives efference copy of its associated motor command , which depends on neuron state, descending drive and ground contact. There are also secondary, higher-order influences summarized by the function , which have a relatively small effect on neural dynamics but are part of the state estimator as described below (see “Theoretical equivalence” section). The network parameters for such CPG oscillators are traditionally set through a combination of design rules of thumb and hand-tuning, but here nearly all of the parameters will be determined from an optimal state estimator, as described below.

Walking model with pendulum dynamics

The system being controlled is a simple bipedal model walking in the sagittal plane (Fig. 2A). The model features pendulum-like leg dynamics46, with 16% of body mass at each leg (of length ) and 68% to pelvis/torso, which is modeled as a point mass. The leg’s center of mass was located at 0.645 from the feet, radius of gyration of 0.326 , and curved foot with radius 0.3 . The legs are also actively actuated by added torque inputs (the "Anthropomorphic Model,"43), and energy is dissipated mainly with the collision of leg with ground in the step-to-step transition. The dissipation determines the amount of positive work required each step. In humans, muscles perform much of that work, which in turn accounts for much of the energetic cost of walking44.

The walking model is described mathematically as follows. The equations of motion may be written in terms of vector as

| 4 |

where M is the mass matrix, C describes centripetal and Coriolis effects, G contains position-dependent moments such as from gravity, contains ground contact, and contains hip torques exerted on the legs (State-based control, below). The equations of motion depend on ground contact because each leg alternates between stance and swing leg behaviors, inverted pendulum and hanging pendulum, respectively. We define each matrix to switch the order of elements at heel-strikes, so that equation of motion can be expressed in the same form.

At heelstrike, the model experiences a collision with ground affecting the angular velocities. This is modeled as a perfectly inelastic collision. Using impulse-momentum, the effect may be summarized as the linear transformation

| 5 |

where the plus and minus signs (‘ + ’ and ‘–’) denote just after and before impact, respectively. The ground contact states are switched such that the previous stance leg becomes the swing leg, and vice versa. The simulation avoided inadvertent ground contact of the swing legs by ignoring collisions until the stance leg reached a threshold (set as 10% of nominal stance leg angle at heel strike), as is common in simple 2D walking models with rigid legs46.

The resulting gait has several characteristics relevant to the CPG. First, the legs have pendulum-like inertial dynamics, which allow much of the gait to occur passively. For example, each leg swings passively, and its collision with ground automatically induces the next step of a periodic cycle46. Second, inertial dynamics integrate forces over time, such that disturbances can disrupt timing and cause falls. This sensitivity could be reduced with overdamped joints and low-level control, but humans are thought to have significant inertial dynamics42. And third, inertial dynamics are retained in most alternative models with more degrees of freedom and more complexity (e.g.,29,31). We believe most other dynamical models would also benefit from feedback control similar in concept to presented here.

State-based motor command generator

The model produces state-dependent hip torque commands to the legs. Of the many ways to power a dynamic walking model (e.g.,43,74–76), we apply a constant extensor hip torque against the stance leg, for its parametric simplicity and robustness to perturbations. The torque normally performs positive work (Fig. 2B) to make up for collision losses, and could be produced in reaction to a torso leaned forward (not modeled explicitly here;46). The swing leg experiences a hip torque proportional to swing leg angle (Fig. 2B,C), with the effect of tuning the swing frequency39. This control scheme is actually suboptimal for economy, and is selected for its robustness. Optimal economy actually requires perfectly-timed, impulsive forces from the legs45, and has poor robustness to the noisy conditions examined here. The present non-impulsive control is much more robust, and can still have its performance optimized by appropriate state estimation.

The overall torque command for leg is used as the motor command , and may be summarized as

| 6 |

where the stance phase torque is increased from the initial value by the amount proportional to the descending command with gain . The swing phase torque has gain for the proportionality to leg angle .

There are also two higher level types of control acting on the system. One is to regulate walking speed, by slowly modulating the tonic, descending command (Eq. 6). An integral control is applied on , so keep attain the same average walking speed despite noise, which would otherwise reduce average speed. The second type of high-level control is to restart the simulation after falling. When falling is detected (as a horizontal stance leg angle), the walking model is reset to its nominal initial condition, except advanced one nominal step length forward from the previous footfall location. No penalty is assessed for this re-set process, other than additional energy and time wasted in the fall itself. We quantify the susceptibility to falling with a mean time between falls (MTBF), and report overall energetic cost in two ways, including and excluding failed steps. The wasted energy of failed steps is ignored in the latter case, resulting in lower reported energy cost.

Noise model with process and sensor noise

The walking dynamics are subject to two types of disturbances, process and sensor noise (Fig. 3). Both are modeled as zero-mean, Gaussian white noise. Process noise (with covariance ) acts as an unpredictable disturbance to the states, due to external perturbations or noisy motor commands. Sensor or measurement noise (with covariance ) models imperfect sensors, and acts additively to the sensory measurements . The errors induced by both types of noise are unknown to the central nervous system controller, and so both tend to reduce performance.

The noise covariances were set so that the model would be significantly affected by both types of noise. We sought levels sufficient to cause significant risk of falling, so that good control would be necessary to avoid falling while also achieving good economy. Process noise was described by covariance matrix , with diagonals filled with variances of noisy angular accelerations, which had standard deviations of for stance leg, for swing leg for the reference testing condition. Sensor noise covariance was also set as a diagonal matrix with both entries of standard deviation for the reference testing condition. For the later demonstrations to test the effect of the different amount of noise, we multiplied 1.15 to the sensor noise covariance, and 0.36, 1.15, 2.06 to the process noise covariance. Noise was implemented as a spline interpolation of discrete white noise sampled at frequency of 16 (well above pendulum bandwidth) and truncated to no more than ± 3 standard deviations.

State estimator with internal model of dynamics

A state estimator is formed from an internal model of the leg dynamics being controlled (see block diagram in Fig. 4), to produce a prediction of the expected state and sensory measurements (with the hat symbol ‘^’ denoting an internal model estimate). Although the actual state is unknown, the actual sensory feedback is known, and the expectation error may be fed back to the internal model with negative feedback (gain ) to correct the state estimate. Estimation theory shows that regulating error toward zero also tends to drive the state estimate towards actual state (assuming system observability, as is the case here; e.g.,48). This may be formulated as an optimization problem, where gain is selected to minimize the mean-square estimation error. Here we interpret the half-center oscillator network as such an optimal state estimator, the design of which will determine the network parameters.

The estimator equations may be described in state space. The estimator states are governed by the same equations of motion as the walking model (Eqs. 4, 5), with the addition of the feedback correction. Again using hat notation for state estimates, the nonlinear state estimate equations are

| 7 |

We used standard state estimator equations to determine a constant sensory feedback gain . This was done by linearizing the dynamics about a nominal state, and then designing an optimal estimator based on process and sensor noise covariances ( and ) using standard procedures (“lqe” command in Matlab, The MathWorks, Natick, MA). This yields a set of gains that minimize mean-square estimation error (), for an infinite horizon and linear dynamics. The constant gain was then applied to the nonlinear system in simulation, with the assumption that the resulting estimator would still be nearly optimal in behavior. Another sensory input to the system is ground contact , a boolean variable. The state estimator ignores measured for pure feedforward control (zero feedback gain ), but for all other conditions (non-zero ), any sensed change in ground contact overrides the estimated ground contact . When the estimated ground contact state changes, the estimated angular velocities are updated according to the same collision dynamics as the walking model (Eq. 5 except with estimated variables).

The state estimate is applied to the state-based motor command (Eq. 6). Although the walking control was designed for actual state information (), for walking simulations it uses the state estimate instead:

| 8 |

As with the estimator gain, this also requires an assumption. In the present nonlinear system, we assume that the state estimate may replace the state without ill effect, a proven fact only for linear systems (certainty-equivalence principle,33,77). Both assumptions, regarding gain and use of state estimate, are tested in simulation below.

Theoretical equivalence between neural oscillator and state estimator

Having fully described the walking model in terms of control systems principles, the equivalent half-center oscillator model may be determined (Fig. 4B). The identical behavior is obtained by re-interpreting the neural states in terms of the dynamic walking model states,

| 9 |

along with the neural output function defined as identity,

| 10 |

In addition, motor command and ground contact state are defined to match state-based variables (Eq. 6):

| 11 |

The synaptic weights and higher-order functions (Eqs. 1–3) are defined according to the internal model equations of motion (Eq. 7),

| 12 |

| 13 |

| 14 |

| 15 |

| 16 |

Because the mass matrix and other variables are state dependent, the weightings above are state dependent as well. The functions and are higher-order terms, which could be considered optional; omitting them would effectively yield a reduced-order estimator.

The result of these definitions is that the half-center neuron equations (Eqs. 1–3) may be rewritten in terms of and , to illustrate how the network models the leg dynamics and receives inputs from sensory feedback and efference copy:

| 17 |

| 18 |

The above may be interpreted as an internal model of the stance and swing leg as pendulums, with pendulum phasing modulated by error feedback and efference copy of the motor command (plus small nonlinearities due to inertial coupling of the two pendulums).

The result is that the entire neural circuitry and parameters are fully specified by the control systems model. In Eqs. (17–18), all of the quantities except motor command are determined by a state estimator (Eq. 7) with optimal gains (determined by single Matlab command ‘lqe’.) For example, higher-order terms are defined by Eq. (13). The only aspect of the system not determined by optimal estimation was the motor command , equal to the state-based motor command (Eq. 8). This was designed ad hoc to produce alternating stance and swing phases with high robustness to perturbations.

Parametric effect of varying sensory feedback gain L

The sensory feedback gain is selected using state estimation theory, according to the amount of process noise and sensor noise. High process noise, or uncertainty about the dynamics and environment, favors a higher feedback gain, whereas high sensor noise favors a lower feedback gain. The ratio between the noise levels determines the optimal linear quadratic estimator gain (Matlab function “lqe”). A constant gain was determined based on a linear approximation for the leg dynamics, an infinite horizon for estimation, and a stationarity assumption for noise. In simulation, the state estimator was implemented with nonlinear dynamics, assuming this would yield near-optimal performance.

It is thus instructive to evaluate walking performance for a range of feedback gains. Setting too low or too high would be expected to yield poor performance. Setting equal to the optimal LQE gain would be expected to yield approximately the least estimation error, and therefore the most precise control (e.g.78). In terms of gait, more precise control would be expected to reduce step variability and mechanical work, both of which are related to metabolic energy expenditure in humans (e.g.,56). The walking model is also prone to falling when disturbed by noise, and optimal state estimation would be expected to reduce the frequency of falling.

We performed a series of walking simulations to test the effect of varying the feedback gain. The model was tested with 20 trials of 100 steps each, subjected to pseudorandom process and sensor noise of fixed covariance ( and , respectively). In each trial, walking performance was assessed with mechanical cost of transport (mCOT, defined as positive mechanical work per body weight and distance travelled; e.g.,47), step length variability, and mean time between falls (MTBF) as a measure of walking robustness (also referred to as Mean First Passage Time79). The sensory feedback gain was first designed in accordance with the experimental noise parameters, and then the corresponding walking performance was evaluated. Additional trials were performed, varying sensory feedback gain with lower and higher than optimal values to test for a possible performance penalty. These sub-optimal gains were determined by re-designing the estimator with process noise ( between 10^-4 and 10^0.8, with smaller values tending toward pure feedforward and larger toward pure feedback). This procedure guarantees stable closed-loop estimator dynamics, which would not be the case if the matrix were simply scaled higher or lower. For all trials, the redesigned was tested in simulations using the fixed process and sensor noise levels. The overall sensory feedback gain was quantified with a scalar, defined as the L2 norm (largest singular value) of matrix , normalized by the L2 norm of .

We expected that optimal performance in simulation would be achieved with gain close to the theoretically optimal LQE gain, . With too low a gain (, feedforward Fig. 1A), the model would perform poorly due to sensitivity to process noise, and with too high a gain (, feedback Fig. 1C), it would perform poorly due to sensor noise. And for intermediate gains, we expected performance to have an approximately convex bowl shape, centered about a minimum at or near . These differences were expected from noise alone, as the model was designed to yield the same nominal gait regardless of gain . Simulations were necessary to test the model, because its nonlinearities do not admit analytical calculation of performance statistics.

Evaluation of fictive locomotion

We tested whether the model would produce fictive locomotion with removal of sensory feedback. Disconnection of feedback in a closed-loop control system would normally be expected to eliminate any persistent oscillations. But estimator-based control actually contains two types of inner loops (Fig. 7A), both of which could potentially allow for sustained oscillations in the absence of sensory feedback. However, the emergence of fictive locomotion and its characteristics depend on what kind of sensory signal is removed. We considered two broad classes of sensors, referred to producing error feedback and measurement feedback, with different expectations for the effects of their removal.

Some proprioceptors relevant to locomotion, including some muscle spindles and fish lateral lines50, could be regarded as producing error feedback. They receive corollary discharge of motor commands, and appear to predict intended movements, so that the afferents are most sensitive to unexpected perturbations. The comparison between expected and actual sensory output largely occurs within the sensor itself, yielding error signal (Fig. 7B). Disconnecting the sensor would therefore disconnect error signal , and would isolate an inner loop between state-based command and internal model. The motor command normally sustains rhythmic movement of the legs for locomotion, and would also be expected to sustain rhythmic oscillations within the internal model. Fictive locomotion in this case would be expected to resemble the nominal motor pattern.

Sensors that do not receive corollary discharge could be regarded as direct sensors, in that they relay measurement feedback related to state. In this case, disconnecting the sensor would be equivalent to removing measurement . This isolates two inner loops, both the command-and-internal-model loop above, as well as a sensory prediction loop between sensor model and internal model. The interaction of these loops would be expected to yield a more complex response, highly dependent on parameter values. Nonetheless, we would expect that removal of would substantially weaken the sensory input to the internal model, and generally result in a weaker or slower fictive rhythm.

We tested for the existence of sustained rhythms for both extremes of error feedback and measurement feedback. Of course, actual biological sensors within animals are vastly more diverse and complex than this model. But the existence of sustained oscillations in extreme cases would also indicate whether fictive locomotion would be possible with some combination of different sensors within these extremes.

Acknowledgements

This work was supported in part by NSERC (Discovery Award and CRC Tier I), Dr. Benno Nigg Research Chair, and University of Calgary BME Graduate Program.

Author contributions

All authors contributed to the conceptualization and design of the study, interpretation of the simulation results, writing and reviewing the manuscript. H.X.R. wrote simulation code and prepared figures.

Code availability

The source code for the simulation, supplementary table & video are available in a public repository at: https://github.com/hansolxryu/CPG_biped_walker_Ryu_Kuo (https://doi.org/10.5281/zenodo.4739744).

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Brown TG. On the nature of the fundamental activity of the nervous centres; together with an analysis of the conditioning of rhythmic activity in progression, and a theory of the evolution of function in the nervous system. J. Physiol. 1914;48:18–46. doi: 10.1113/jphysiol.1914.sp001646. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Wilson DM. The central nervous control of flight in a locust. J. Exp. Biol. 1961;38:471–490. doi: 10.1242/jeb.38.2.471. [DOI] [Google Scholar]

- 3.Wilson DM, Wyman RJ. Motor output patterns during random and rhythmic stimulation of locust thoracic Ganglia. Biophys. J. 1965;5:121–143. doi: 10.1016/S0006-3495(65)86706-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Grillner S. Locomotion in vertebrates: central mechanisms and reflex interaction. Physiol. Rev. 1975;55:247–304. doi: 10.1152/physrev.1975.55.2.247. [DOI] [PubMed] [Google Scholar]

- 5.Feldman AG, Orlovsky GN. Activity of interneurons mediating reciprocal 1a inhibition during locomotion. Brain Res. 1975;84:181–194. doi: 10.1016/0006-8993(75)90974-9. [DOI] [PubMed] [Google Scholar]

- 6.Sherrington CS. Flexion-reflex of the limb, crossed extension-reflex, and reflex stepping and standing. J. Physiol. 1910;40:28–121. doi: 10.1113/jphysiol.1910.sp001362. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Pringle JWS. The Reflex Mechanism of the Insect Leg. J. Exp. Biol. 1940;17:8–17. doi: 10.1242/jeb.17.1.8. [DOI] [Google Scholar]

- 8.Büschges A. Sensory control and organization of neural networks mediating coordination of multisegmental organs for locomotion. J. Neurophysiol. 2005;93:1127–1135. doi: 10.1152/jn.00615.2004. [DOI] [PubMed] [Google Scholar]

- 9.Bässler U, Büschges A. Pattern generation for stick insect walking movements–multisensory control of a locomotor program. Brain Res. Brain Res. Rev. 1998;27:65–88. doi: 10.1016/S0165-0173(98)00006-X. [DOI] [PubMed] [Google Scholar]

- 10.Liu, C. J., Fan, Z., Seo, K., Tan, X. B. & Goodman, E. D. Synthesis of Matsuoka-Based Neuron Oscillator Models in Locomotion Control of Robots. in 2012 Third Global Congress on Intelligent Systems 342–347 (2012). 10.1109/GCIS.2012.99.

- 11.Habib, M. K., Watanabe, K. & Izumi, K. Biped locomotion using CPG with sensory interaction. in 2009 IEEE International Symposium on Industrial Electronics 1452–1457 (2009). 10.1109/ISIE.2009.5219063.

- 12.Auddy, S., Magg, S. & Wermter, S. Hierarchical Control for Bipedal Locomotion using Central Pattern Generators and Neural Networks. in 2019 Joint IEEE 9th International Conference on Development and Learning and Epigenetic Robotics (ICDL-EpiRob) 13–18 (2019). 10.1109/DEVLRN.2019.8850683.

- 13.Cristiano J, García MA, Puig D. Deterministic phase resetting with predefined response time for CPG networks based on Matsuoka’s oscillator. Robot. Auton. Syst. 2015;74:88–96. doi: 10.1016/j.robot.2015.07.004. [DOI] [Google Scholar]

- 14.Matsuoka K. Mechanisms of frequency and pattern control in the neural rhythm generators. Biol. Cybern. 1987;56:345–353. doi: 10.1007/BF00319514. [DOI] [PubMed] [Google Scholar]

- 15.Iwasaki T, Zheng M. Sensory feedback mechanism underlying entrainment of central pattern generator to mechanical resonance. Biol. Cybern. 2006;94:245–261. doi: 10.1007/s00422-005-0047-3. [DOI] [PubMed] [Google Scholar]

- 16.Nassour J, Hénaff P, Benouezdou F, Cheng G. Multi-layered multi-pattern CPG for adaptive locomotion of humanoid robots. Biol. Cybern. 2014;108:291–303. doi: 10.1007/s00422-014-0592-8. [DOI] [PubMed] [Google Scholar]

- 17.Tsuchiya, K., Aoi, S. & Tsujita, K. Locomotion control of a biped locomotion robot using nonlinear oscillators. in Proceedings 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003) (Cat. No.03CH37453) vol. 2 1745–1750 (IEEE, 2003).

- 18.Morimoto, J. et al. Modulation of simple sinusoidal patterns by a coupled oscillator model for biped walking. in Proceedings 2006 IEEE International Conference on Robotics and Automation, 2006. ICRA 2006. 1579–1584 (2006). 10.1109/ROBOT.2006.1641932.

- 19.Kimura H, Fukuoka Y, Cohen AH. Adaptive dynamic walking of a quadruped robot on natural ground based on biological concepts. Int. J. Rob. Res. 2007;26:475–490. doi: 10.1177/0278364907078089. [DOI] [Google Scholar]

- 20.Righetti, L. & Ijspeert, A. J. Pattern generators with sensory feedback for the control of quadruped locomotion. in 2008 IEEE International Conference on Robotics and Automation 819–824 (IEEE, 2008). 10.1109/ROBOT.2008.4543306.

- 21.Bliss T, Iwasaki T, Bart-Smith H. Central pattern generator control of a tensegrity swimmer. IEEE/ASME Trans. Mechatron. 2013;18:586–597. doi: 10.1109/TMECH.2012.2210905. [DOI] [Google Scholar]

- 22.Endo, G., Morimoto, J., Nakanishi, J. & Cheng, G. An empirical exploration of a neural oscillator for biped locomotion control. in IEEE International Conference on Robotics and Automation, 2004. Proceedings. ICRA ’04. 2004 vol. 3 3036–3042 Vol.3 (2004).

- 23.Dzeladini F, van den Kieboom J, Ijspeert A. The contribution of a central pattern generator in a reflex-based neuromuscular model. Front Hum. Neurosci. 2014;8:371. doi: 10.3389/fnhum.2014.00371. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Owaki D, Ishiguro A. A quadruped robot exhibiting spontaneous gait transitions from walking to trotting to galloping. Sci. Rep. 2017;7:277. doi: 10.1038/s41598-017-00348-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Daley MA, Felix G, Biewener AA. Running stability is enhanced by a proximo-distal gradient in joint neuromechanical control. J. Exp. Biol. 2007;210:383–394. doi: 10.1242/jeb.02668. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Alexander RM. Optima for Animals. Princeton University Press; 1996. [Google Scholar]

- 27.Kimura S, Yano M, Shimizu H. A self-organizing model of walking patterns of insects. Biol. Cybern. 1993;69:183–193. doi: 10.1007/BF00198958. [DOI] [Google Scholar]

- 28.Taylor A, Cottrell GW, Kristan WB. A model of the leech segmental swim central pattern generator. Neurocomputing. 2000;32–33:573–584. doi: 10.1016/S0925-2312(00)00214-9. [DOI] [Google Scholar]

- 29.Geyer H, Herr H. A muscle-reflex model that encodes principles of legged mechanics produces human walking dynamics and muscle activities. IEEE Trans. Neural Syst. Rehabil. Eng. 2010;18:263–273. doi: 10.1109/TNSRE.2010.2047592. [DOI] [PubMed] [Google Scholar]

- 30.Heess, N. et al. Emergence of Locomotion Behaviours in Rich Environments. http://arxiv.org/abs/1707.02286 (2017).

- 31.Peng, X. B., Berseth, G., Yin, K. & Van De Panne, M. DeepLoco: Dynamic Locomotion skills using hierarchical deep reinforcement learning. ACM Trans. Graph.36, 41:1–41:13 (2017).

- 32.Bellman R. The theory of dynamic programming. Bull. Am. Math. Soc. 1954;60:503–515. doi: 10.1090/S0002-9904-1954-09848-8. [DOI] [Google Scholar]

- 33.Bryson AE. Applied Optimal Control: Optimization, Estimation and Control. CRC Press; 1975. [Google Scholar]

- 34.Kuindersma S, et al. Optimization-based locomotion planning, estimation, and control design for the atlas humanoid robot. Auton. Robot. 2016;40:429–455. doi: 10.1007/s10514-015-9479-3. [DOI] [Google Scholar]

- 35.Wooden, D. et al. Autonomous navigation for BigDog. in 2010 IEEE International Conference on Robotics and Automation 4736–4741 (2010). 10.1109/ROBOT.2010.5509226.

- 36.Kuo AD. An optimal state estimation model of sensory integration in human postural balance. J. Neural. Eng. 2005;2:S235–249. doi: 10.1088/1741-2560/2/3/S07. [DOI] [PubMed] [Google Scholar]

- 37.Kuo AD. An optimal control model for analyzing human postural balance. IEEE Trans. Biomed. Eng. 1995;42:87–101. doi: 10.1109/10.362914. [DOI] [PubMed] [Google Scholar]

- 38.Todorov E. Stochastic optimal control and estimation methods adapted to the noise characteristics of the sensorimotor system. Neural. Comput. 2005;17:1084–1108. doi: 10.1162/0899766053491887. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Kuo AD. The relative roles of feedforward and feedback in the control of rhythmic movements. Mot. Control. 2002;6:129–145. doi: 10.1123/mcj.6.2.129. [DOI] [PubMed] [Google Scholar]

- 40.O’Connor SM. The Relative Roles of Dynamics and Control in Bipedal Locomotion. University of Michigan; 2009. [Google Scholar]

- 41.Kuo AD, Donelan JM, Ruina A. Energetic consequences of walking like an inverted pendulum: step-to-step transitions. Exerc. Sport Sci. Rev. 2005;33:88–97. doi: 10.1097/00003677-200504000-00006. [DOI] [PubMed] [Google Scholar]

- 42.Alexander RM. Simple models of human motion. Appl. Mech. Rev. 1995;48:461–469. doi: 10.1115/1.3005107. [DOI] [Google Scholar]

- 43.Kuo AD. A simple model of bipedal walking predicts the preferred speed-step length relationship. J. Biomech. Eng. 2001;123:264–269. doi: 10.1115/1.1372322. [DOI] [PubMed] [Google Scholar]

- 44.Donelan JM, Kram R, Kuo AD. Mechanical work for step-to-step transitions is a major determinant of the metabolic cost of human walking. J. Exp. Biol. 2002;205:3717–3727. doi: 10.1242/jeb.205.23.3717. [DOI] [PubMed] [Google Scholar]

- 45.Kuo AD. Energetics of actively powered locomotion using the simplest walking model. J. Biomech. Eng. 2002;124:113–120. doi: 10.1115/1.1427703. [DOI] [PubMed] [Google Scholar]

- 46.McGeer T. Passive dynamic walking. Int. J. Robot. Res. 1990;9:62. doi: 10.1177/027836499000900206. [DOI] [Google Scholar]

- 47.Collins S, Ruina A, Tedrake R, Wisse M. Efficient bipedal robots based on passive-dynamic walkers. Science. 2005;307:1082–1085. doi: 10.1126/science.1107799. [DOI] [PubMed] [Google Scholar]

- 48.Kailath T. Linear Systems. Prentice-Hall; 1980. [Google Scholar]

- 49.Dimitriou M, Edin BB. Human muscle spindles act as forward sensory models. Curr. Biol. 2010;20:1763–1767. doi: 10.1016/j.cub.2010.08.049. [DOI] [PubMed] [Google Scholar]

- 50.Straka H, Simmers J, Chagnaud BP. A new perspective on predictive motor signaling. Curr. Biol. 2018;28:R232–R243. doi: 10.1016/j.cub.2018.01.033. [DOI] [PubMed] [Google Scholar]