Abstract

The problem of reconstructing a string from its error-prone copies, the trace reconstruction problem, was introduced by Vladimir Levenshtein two decades ago. While there has been considerable theoretical work on trace reconstruction, practical solutions have only recently started to emerge in the context of two rapidly developing research areas: immunogenomics and DNA data storage. In immunogenomics, traces correspond to mutated copies of genes, with mutations generated naturally by the adaptive immune system. In DNA data storage, traces correspond to noisy copies of DNA molecules that encode digital data, with errors being artifacts of the data retrieval process. In this paper, we introduce several new trace generation models and open questions relevant to trace reconstruction for immunogenomics and DNA data storage, survey theoretical results on trace reconstruction, and highlight their connections to computational biology. Throughout, we discuss the applicability and shortcomings of known solutions and suggest future research directions.

I. Introduction

TWO decades ago, Vladimir Levenshtein introduced the Trace Reconstruction Problem, reconstructing an unknown seed string from a set of its error-prone copies, which are referred to as traces [1]. In information-theoretic terminology, the seed string is observed by passing it through a noisy channel multiple times. Levenshtein set forth the challenge of developing efficient algorithms to infer the seed string and characterizing the number of traces needed for its reconstruction [2], [3]. He succeeded in solving these problems in the case of the substitution channel, where random symbols in the seed string are mutated independently, and demonstrated that a small number of deletions or insertions may be tolerated. A few years later, Batu et al. [4] analyzed the trace reconstruction problem in the deletion channel, where random symbols are deleted from the seed string independently so that a trace is a random subsequence of the seed string.

After these seminal papers [1]-[4], trace reconstruction has received a lot of attention, especially in the last few years [5]-[20]. However, despite a wealth of theoretical work, there is a surprising lack of practical trace reconstruction algorithms. Although Batu et al., [4] and many follow-up studies motivated trace reconstruction by the multiple alignment problem in computational biology [21], we are not aware of any software tools that use trace reconstruction for constructing multiple alignments and applying them for follow-up biological analysis.

Transforming a biological problem into a well-defined algorithmic problem comes with many challenges. An attempt to model all aspects of a biological problem often results in an intractable algorithmic problem while ignoring some of its aspects (like in the initial formulation of the Trace Reconstruction Problem) may lead to a solution that is inadequate for practical applications. Computational biologists try to find a balance between these two extremes and typically use a simplified (albeit inadequate) problem formulation to develop algorithmic ideas that eventually lead to practical (albeit approximate) solutions of a more complex biological problem.

The first applications of trace reconstruction emerged only recently in the context of two rapidly developing research areas: personalized immunogenomics [22], [23] and DNA data storage [24]-[33]. In this survey paper, we identify a variety of open trace reconstruction problems motivated by immunogenomics and DNA data storage, describe several practically motivated objectives for trace reconstruction, and discuss the applicability and shortcomings of known solutions. Our goal is to introduce information theory experts to emerging practical applications of trace reconstruction, and, at the same time, introduce computational biology experts to recent theoretical results in trace reconstruction.

A. Trace Reconstruction in Computational Immunology

How have we survived an evolutionary arms race with pathogens?:

Humans are constantly attacked by pathogens that reproduce at a much faster rate than humans do. How have we survived an evolutionary arms race with pathogens that evolve a thousand times faster than us?

All vertebrates have an adaptive immune system that uses the VDJ recombination to develop a defensive response against pathogens at the time-scale at which they evolve. It generates a virtually unlimited variety of antibodies, proteins that recognize a specific foreign agent (called antigen), bind to it, and eventually neutralize it. There are ≈ 108 antibodies circulating in a human body at any given moment (unique for each individual!) and this set of antibodies is constantly changing. How can a human genome (only ≈ 20, 000 genes) generate such a diverse defense system?

VDJ recombination:

In 1987, Susumu Tonegawa received the Nobel Prize for the discovery of the VDJ recombination [34]. The immunoglobulin locus is a 1.25 million-nucleotide long region in the human genome that contains three sets of short segments known as V, D, and J genes (40 V, 27 D, and 6 J genes). Figure 1 illustrates the VDJ recombination process that selects one V gene, one D gene, and one J gene and concatenates them, thus generating an immunoglobulin gene that encodes an antibody. In our discussion, we hide some details to make the paper accessible to information theorists without immunology background. For example, although there are multiple immunoglobulin loci in the human genome, we limit attention to the 1.25 million-nucleotide long immunoglobulin heavy chain locus. Although we stated above that an immunoglobulin gene encodes an antibody, in reality it encodes only the heavy chain of an antibody (antibodies are formed by both heavy and light chains).

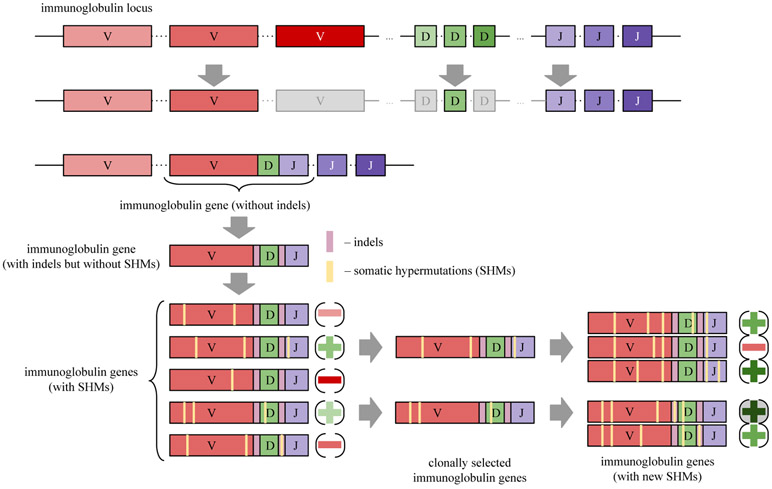

Fig. 1:

Generation of an antibody repertoire. The VDJ recombination affects the immunoglobulin locus that includes three sets of genes: V (variable), D (diversity), and J (joining). It randomly selects one gene from each set and concatenates them. The resulting sequence represents a potential immunoglobulin gene that encodes an antibody. However, this simple representation of an immunoglobulin gene is unrealistic since real immunoglobulin genes have indels at the V-D and D-J junctions. Somatic hypermutations (SHMs) further change the sequence of an immunoglobulin gene and thus affect its affinity. While some mutations increase affinity (sequences marked by the green ‘+’ signs), other mutations reduce it (sequences marked by the red ‘−’ signs). The clonal selection process iteratively retains antibodies with increased affinities and filters out antibodies with reduced affinities, thus launching an evolutionary process that eventually generates a high-affinity antibody able to neutralize an antigen (an antibody marked by a circled dark green ‘+’ sign).

Since the described process can generate only 40 × 27 × 6 = 6480 antibodies, it cannot explain the astonishing diversity of human antibodies. However, the VDJ recombination is more complex than this: it deletes some nucleotides at the start and/or the end of V, D, J genes and inserts short stretches of randomly generated nucleotides (non-genomic insertions) between V-D and D-J junctions. Such insertions and deletions (indels) greatly increase the diversity of antibodies generated through the VDJ recombination process. But this is only the beginning of the molecular process that further diversifies the set of antibodies.

Somatic hypermutations and clonal selection:

Indels greatly increase the diversity of antibodies but even this diversity is insufficient for neutralizing a myriad of antigens that the organism might face. However, the VDJ recombination generates sufficient diversity to achieve an important goal—some of the generated antibodies in this huge collection bind to a specific antigen, albeit with low affinity (i.e., the strength of antibody-antigen binding) that is insufficient for neutralizing the antigen. The adaptive immune system uses an ingenious evolutionary mechanism for gradually increasing the affinity of binding antibodies and thus eventually neutralizing an antigen [34].

Once an antibody binds to an antigen (even an antibody with a low affinity), the corresponding immunoglobulin gene undergoes random mutations (referred to as somatic hypermutations or SHMs) that can both increase and reduce the affinity of an antibody. To enrich the pool of antibodies with high affinity, these mutations are iteratively accompanied by the clonal selection process that eliminates antibodies with low affinity (Figure 1). The iterative somatic hypermutations and clonal selection are not unlike an extremely fast evolutionary process that generates a huge variety of antibodies from a single initial antibody and eventually leads to generating a new high-affinity antibody able to neutralize an antigen.

Personalized immunogenomics:

Modern DNA sequencing technologies sample the set of antibodies by generating sequences of millions of randomly selected immunoglobulin genes (antibody repertoire) out of ≈ 108 distinct antibodies circulating in our body. Analysis of antibody repertoires across various patients opens new horizons for developing antibody-based drugs, designing vaccines, and finding associations between genomic variations in the immunoglobulin loci and diseases. The emergence of antibody repertoire datasets in the last decade raised new algorithmic problems that remain largely unsolved.

The immunoglobulin locus is a highly variable region of the human genome—the sets of V, D, and J genes (referred to as germline genes) differ from individual to individual. Identifying germline V, D, and J genes in an individual is important since variations in these genes have been linked to various diseases [35], differential response to infection, vaccination, and drugs [36], and disease susceptibility [35], [37]. The ImMunoGeneTics (IMGT) database of variations in germline genes remains incomplete even in the case of well-studied human genes [38]. In the case of immunologically important model organisms, such as camels or sharks, the germline genes remain largely unknown. Unfortunately, since assembling the sequence of the highly repetitive immunoglobulin locus faces challenges [39] and does not provide one with information on how various germline genes contribute to an antibody repertoire, the efforts like the 1,000 Genomes Project have resulted only in limited progress toward inferring the population-wide census of human germline genes [40], [41].

Since the information about germline genes in an individual (personalized immunogenomics data) is typically unavailable, researchers use the reference genes instead of personal germline genes, thus limiting various immunogenomics applications. Personalized immunogenomics studies attempt to derive the germline genes by analyzing antibody repertoires. Each antibody can be viewed as a trace generated from the three sets of unknown seed strings (all V genes, all D genes, and all J genes in an individual) through the VDJ recombination and somatic hypermutations (Figure 1). Hence, one can reformulate reconstruction of germline genes from an antibody repertoire as a novel Trace Reconstruction Problem. In Section III, we describe a series of problems with gradually increasing complexity that model antibody generation from the germline genes.

B. Trace Reconstuction in DNA Data Storage

DNA has emerged as a potentially viable storage medium for large quantities of digital data [24]-[33]. A digital file can be encoded by a collection of DNA sequences where each individual sequence represents a small part of the data. One application is archival storage, where DNA promises to have orders of magnitude improved data density and durability as compared to existing storage media (e.g., magnetic tapes or solid state). The field is rapidly growing, and current DNA data storage systems can store and retrieve hundreds of megabytes of data, with many additional features such as random data access [30]. We provide an overview of DNA data storage and highlight the role that trace reconstruction plays in the data retrieval process [30], [33]. Figure 2 depicts the core components of the storage and retrieval pipeline.

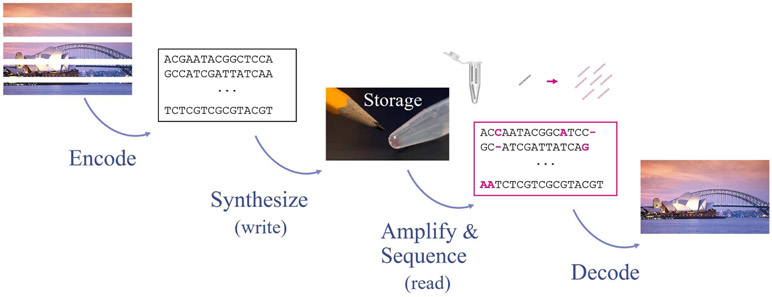

Fig. 2:

The DNA data storage and retrieval pipeline. Trace reconstruction problems come into play just before the Decode step.

Storing the data:

Storing a file in DNA involves several steps. First, the digital file is compressed and partitioned into small, non-overlapping blocks. Then, each individual block is either encoded using an error-correcting code or is randomized using an independent pseudo-random sequence. This provides a set of strings that encode the content of the digital file. To store the location of each block, an address is added to each string. Finally, a global error-correcting code is applied to the resulting set of strings, and the strings are translated into the {A, C, G, T} alphabet. If multiple files are stored together, then a file identifier is also added in the form of a DNA primer (a short nucleotide string). This process results in a large collection of short strings (for example, millions of strings, each containing hundreds of characters). This set of strings, which we call seed strings, are then synthesized into real DNA molecules and stored in a tube until the file is ready to be retrieved. The synthesis process generates many copies of each seed string.

Retrieving the data:

The stored information is read using standard DNA sequencing machines such as DNA sequencers produced by Illumina. A small amount of DNA is extracted from a tube so that the remaining DNA may be used for other retrieval attempts later on. Since this amount may be insufficient for reading DNA (sequencing machines have limitations with respect to the minimal amount of DNA they can sequence), the extracted DNA is amplified using polymerase chain reaction (PCR) to generate multiple copies (e.g., 5–30) of each DNA molecule in a sample. The PCR step enables random access retrieval—to access a subset of files, it suffices to copy and sequence the subset of seed strings with primers (file IDs) corresponding to these files.

However, the PCR process introduces additional errors in each of the amplified copies. Since DNA sequencing machines are not able to identify the error-free sequence of nucleotides in a DNA molecule, they add extra errors to the previously introduced amplification errors. The combination of amplification and sequencing errors typically results in ≈ 1–2% error rate (substitutions and indels). However, there is some debate about the rate and the most common type of error [42], [43]. The output of sequencing is a set of strings that contains several error-prone copies (called reads) of each originally synthesized seed string. Much longer seed strings (tens of thousands of nucleotides versus hundreds of nucleotides in existing applications) can be sequenced using the recently emerged long-read sequencing technologies but the current error rate of such technologies is ≈ 10%, with a large proportion of indels [30], [33], [44], [45].

DNA data retrieval as a trace reconstruction problem:

After the sequencing reads are generated, the goal is to recover the seed strings from the observed error-prone reads that have indels and substitutions. The first challenge is to determine which reads correspond to which seed strings by clustering reads so that each cluster contains the error-prone copies derived from a single seed string [46]. In some DNA data storage systems, the seed strings are randomized or encoded in a such a way that they have large pairwise edit distance [30], [47]-[49]. This property simplifies the clustering problem because the underlying clusters are well-separated. In this context, clustering algorithms have been developed that scale to billions of reads [46].

Recovering the seed strings from the reads can be formulated as a trace reconstruction problem. Each seed string is observed a small number of times, where the error-prone copies (traces) correspond to the reads in a cluster. The objective is to recover as many seed strings as possible. A small number of missing or erroneous seed strings may be tolerated because of the error correction methods. Consequently, it suffices to ensure that a reconstruction algorithm recovers a seed string with probability ReconstructionRate, where the exact success probability depends on the amount of redundancy in the error-correcting code (e.g., the default value may be ReconstructionRate = 0.95). There is a trade-off where having more traces leads to lower error rate in reconstruction, but it incurs a higher sequencing cost and time. In practice, it is typical to use clusters that contain 5–30 reads (traces) [30].

While we focus on trace reconstruction problems in DNA data storage, there are many other challenges and recent results, including better automation methods [50], [51], alternative synthesis schemes [52], [53], improved density and robustness using codes [27], [44], [54]-[66], and more realistic error models and fundamental limits [16], [67]-[70]. For more details about DNA data storage, see the following surveys and references therein [24], [32].

C. Similarities and differences of the two applications

The trace reconstruction problems for immunogenomics and DNA data storage are distinct, both in terms of the trace generation models and how well the models have been studied in the literature.

In immunogenomics, the traces contain important mutations that are introduced during the VDJ recombination and somatic hypermutagenesis. While sequencing and amplification technologies also introduce errors in sequenced antibody repertoires, their rate is much lower compared to the mutations introduced at the antibody generation step. Therefore, we ignore sequencing and amplification errors in immunogenomics applications and focus on the mutations. In contrast, the errors in the DNA data storage applications are only because of the artifacts of the process used to access the data stored in DNA and thus cannot be ignored.

The seed strings in immunogenomics represent real genetic data, whereas the DNA data storage sequences are synthesized to represent information in a digital file. While there has been a considerable amount of work in trace reconstruction problems motivated by DNA data storage, trace reconstruction studies in immunogenomics have only started to emerge [22], [23].

D. Outline

The rest of the paper is organized as follows. In Section II, we introduce the algorithmic and information-theoretic formulations of trace reconstruction. Section III describes trace generation in computational immunogenomics. In Sections III-A and III-B, we introduce the D genes trace reconstruction problem. In Section III-C, we introduce a more complex problem of reconstructing V, D, and J genes that are concatenated together to form antibodies. Section IV describes the theoretical formulation of trace reconstruction problems for DNA data storage. In Section V, we survey theoretical results and practical solutions to the trace reconstruction problem for the deletion channel, along with open problems relevant to developing DNA data storage. Finally, in Section VI we propose several directions for future work.

II. Algorithmic and information-theoretic formulations

In this section, we formalize the algorithmic goals of the trace reconstruction problems. We begin by considering an abstract model, where a single, unknown seed string s generates a random trace c with probability Pr(c ∣ s). For each possible trace c and seed string s, the model specifies Pr(c ∣ s). To recover the seed string s, the reconstruction algorithm receives a collection of traces generated from s, which we refer to as a trace-set C = {c1, c2,…, cT}. For simplicity, we assume that the traces are independent and identically distributed, and hence,

Given an integer T, we use to denote the collection of all possible sets of T strings over a fixed alphabet, and we note that .

We also consider cases where the generation process involves sets of seed strings. In these cases, one string is sampled at a time from a set, and the traces are independently generated from the sampled strings (sometimes concatenating groups of traces to obtain the final trace-set). For example, we can consider the two step process where a seed string s is uniformly randomly selected from an unknown seed-set S, and then s generates a trace. Given a seed-set S = {s1,…, sM}, the probability of a trace-set C is

In other words, in the multiple seed string case, we can still define the probability of a trace-set C in terms of the probability of generating a single trace from a single seed string. The goal is to recover all or most of the strings in S by using a trace-set generated via this two step process.

We next discuss how to evaluate a reconstruction algorithm A that takes as input the trace-set C and outputs a string A(C). We assume that the algorithm knows the trace generation model, that is, for any trace c and seed string s, it knows the probability Pr(c ∣ s). The goal is to reconstruct the seed string using the traces. The fact that the traces themselves are random means that there are at least two ways to evaluate a reconstruction algorithm.

The maximum likelihood estimate (MLE) is a string that maximizes among all seeds. As the probabilities are known to the algorithm, the MLE can always be computed by exploring all strings (i.e., brute-force search) as long as the set of possible candidate strings is finite. The trace generation models that we consider have the property that the maximum length of the seed string can be inferred from the trace-set with high probability. Therefore, the brute-force search can be taken over a finite set of strings. For some models, an efficient algorithm computing the MLE is known, with running time that is polynomial in the number T of traces and the length ∣s∣ of the seed string (see Section III-A). However, for many trace generation models, computing the MLE in polynomial time is currently an open question (i.e., the only known solution is brute-force search).

To circumvent the difficulty of the maximum likelihood objective, previous work instead measures the probability that an algorithm outputs the seed string s used to generate the traces. The trace-set is viewed as a random input, and the probability is taken over the randomness in the trace generation process. We start with definitions for a fixed, but unknown, seed string s, and we later also consider s itself being random. Define the success probability of an algorithm A and a seed string s as

where 1{A(C)=s} is the indicator function for the event {A(C) = s} that the algorithm outputs the seed string s. It is straightforward to extend the definition of PA(s, T) to randomized algorithms; the output A(C) would also be a random variable, and the term 1{A(C)=s} would be replaced with Pr(A(C) = s).

Let be a universe of possible seed strings (e.g., all strings of a certain length over a binary or quaternary alphabet). We define the worst-case success probability of algorithm A for trace-sets of size T over universe as

Then, the worst-case trace reconstruction problem is to develop an efficient algorithm that maximizes . The definition above guarantees that the algorithm succeeds with probability at least when s is an arbitrary seed string from the universe and the trace-set has size T.

We also consider the average-case trace reconstruction problem, where the seed string s is chosen uniformly at random from the universe (instead of being arbitrary, as in the worst-case version). More precisely, the goal is to develop an efficient algorithm A that maximizes the average-case success probability, which is defined as

Notice that the probability here is taken over both the seed string s and the trace-set C. The average-case formulation leads to a nice connection to the MLE. Expanding PA(s, T), we have that

Therefore, the inner sum over is maximized when A outputs the string maximizing , or in other words, when the algorithm outputs the MLE.

We note that algorithm does not know the seed string, and hence, it cannot determine whether it outputs s or some other string s′ that could have generated the trace-set. In contrast, the MLE is always rigorously defined because it allows the algorithm to output any string that maximizes the likelihood. To rigorously reason about the maximum success probability formulation, we assume that the trace-set is large enough so that a unique seed string must have generated the traces with high probability, and hence, the algorithm can recover this string with high probability. Later on, we also discuss how to empirically determine the success probability with a benchmark dataset.

In summary, when the seed string is random (i.e., the average-case version), then the maximum likelihood solution also maximizes the success probability . In particular, the ideal solution to the average-case trace reconstruction problem would be an efficient algorithm that computes the MLE, with running time that is polynomial in the number of traces and the seed string length. For the new models that we introduce, we remain hopeful that such an algorithm can be found. However, the only presently known algorithm for all but one of the models is to perform brute-force search. Moreover, in the worst-case version, the MLE may not maximize the success probability PA(s, T), and these two formulations may lead to different optimal algorithms.

In Section III, we introduce various trace generation models in computational immunogenomics. For each model, we provide a problem statement that asks for an MLE solution, i.e., an algorithm that outputs a seed string (or a seed-set) that maximizes the likelihood of a given trace-set. However, we also note that it would be valuable to develop an algorithm with high success probability when the input is viewed as a random trace-set. While both of these are valid and important formulations, the MLE version is a long-standing tradition in bioinformatics that is widely used in such areas as computing phylogenetic trees [71] and genetic linkage analysis [72]. In immunogenomics, MLE was used for computing antibody clonal trees [73], modeling VDJ recombination [74], [75], and modeling antibody-antigen interactions [76], [77]. On the other hand, information theory and computer science researchers may prefer to develop (approximation) algorithms that are evaluated based on their success probability. Therefore, we briefly discuss evaluation metrics before introducing the models.

A. Approximation Algorithms and Empirical Success Probability

As for many other bioinformatics problems, since brute-force solutions are prohibitively slow, the goal is to develop fast approximation or heuristic algorithms that are practical for typical input sizes. For an analogy, although the edit distance problem between two sequences can be solved in polynomial time [78], the closely related sequence alignment problem between multiple sequences is NP-hard [79]. Nevertheless, since the multiple sequence alignment problem is at the heart of sequence comparison in bioinformatics, hundreds of heuristic algorithms have been developed for solving it [80]. The ultimate goal of these algorithms is to generate biological insights, and hence, they are often benchmarked on datasets with known solutions [81].

Turning back to trace reconstruction problems, it would often suffice to output the MLE on most trace-sets, instead of all of them (e.g., failing with vanishingly small probability). Alternatively, when it is difficult to find the entire seed string s maximizing Pr(C ∣ s), it may be possible to find a sufficiently long substring instead. Doing so could further enable finding the entire seed string through a complementary experimental approach. For example, a seed string reconstructed by an approximation or heuristic algorithm can be later validated and error-corrected by using genomics data that complements the immunogenomics data [23].

We also mention one more choice: is the number of traces fixed in advance or not? For a fixed number of traces T, the goal is to design an algorithm with highest possible success probability. Alternatively, since the success probability increases as T increases, we consider an additional input parameter ReconstructionRate, where 0 ≤ ReconstructionRate ≤ 1 and the goal is to design an algorithm with success probability surpassing ReconstructionRate using as few traces as possible. Formally, we want to determine the minimum value T* such that the trace reconstruction problem with T traces is feasible for a given ReconstructionRate as long as T ≥ T*. This value T* is called the trace complexity, and we discuss it further in Section IV. We also note that the success probability can be driven to one by using more traces, assuming it starts above 0.5. Indeed, taking the majority vote over O(log(1/β)) trials for any value 0 < β < 1 will lead to success probability 1 – β, which follows via a Chernoff bound. Both algorithmic formulations are relevant for practical applications.

For the immunology models, we consider a fixed number of traces. The reason is that the number of traces depends on multiple factors—such as the reconstruction of clonal trees during antibody development [82] or selecting the best candidate for follow-up antibody engineering efforts [83]—and accurate reconstruction of germline genes is only one of them. For the DNA data storage models, we consider an information-theoretical perspective and focus on determining the minimum number of traces that suffice for a certain success probability.

The average-case success probability can be empirically calculated by choosing the seed string s at random and testing whether A(C) = s when the trace-set is generated at random from s. For the worst-case success probability, it is infeasible to compute the minimum over all possible length n strings. Instead, it would be easier to use seed strings from a benchmark dataset. For example, if the ReconstructionRate is 0.95, then the algorithm will likely output A(C) = s at least 95 times over 100 randomly generated trace-sets, and this should hold for each seed string s from the dataset. In the DNA data storage application, the seed strings are constructed synthetically during the storage process, and therefore, they may be used as a benchmark.

III. Trace Generation in Computational Immunogenomics

Reconstructing D genes is more difficult than reconstructing V and J genes:

Inferring the sequences of germline genes using immunosequencing data obtained from an individual antibody repertoire is an important problem [22], [23], [84]-[87]. In the case of V and J genes, this challenge was addressed by [85]-[88]. Reconstruction of shorter D genes is a more challenging task [88]. D genes contribute to the complementarity determining region 3 (CDR3) that spans the V-D and D-J junctions and represents an important and highly divergent part of antibodies that accumulates many SHMs. Since D genes typically get truncated on both sides during VDJ recombination, the CDR3 typically contains a truncated D gene. Each CDR3 also contains some random insertions at the V-D and D-J junctions. These truncations and insertions, combined with the fact that D genes are much shorter than V and J genes, make the task of aligning various CDR3s (and thus aligning segments of D genes that survive within these CDR3s) more difficult than alignment of longer and typically less mutated fragments of immunoglobulins that originated from V and J genes.

The biologically adequate problem formulations in immunogenomics are rather complex, making it difficult to develop and test algorithmic ideas for solving these problems. That is why the usual path toward solving such problems is to start from simple and often inadequate formulations that however shed light on algorithmic ideas that can be used for solving more complex problems [89]. We follow this path by starting with a simple formulation for the problem of inferring D genes from CDR3s extracted from an antibody repertoire. Although efficient algorithms for the complex biologically adequate problems remain unknown, the recently developed MINING-D heuristic [23] led to the discovery of previously unknown D genes across multiple species. After describing open problems relevant to finding new D genes, we formulate more difficult problems relevant to inferring the sets of V, D, and J genes (rather than D genes only).

Generating CDR3 from a D gene:

We denote the length of a string s as ∣s∣ and the concatenation of strings s1 and s2 as s1 * s2. We refer to a random string of length l (each symbol is generated uniformly at random from a fixed alphabet ) as rl. Given an integer t, we define a random string Random≤t as rl, where an integer l is sampled uniformly at random from [0, t]. In this paper, .

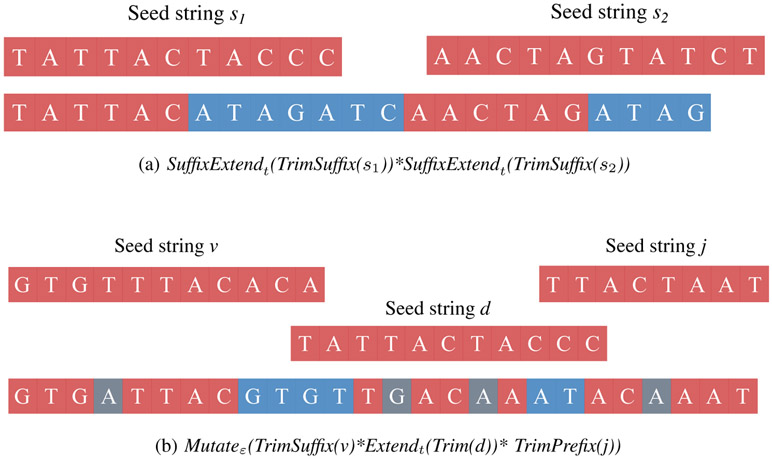

Below we describe various models for generating traces from a seed string or from a seed-set. In all models, we assume that each trace is generated independently. To model generation of a CDR3 (trace) from a D gene (seed) in the models below, we describe the following operations on a string s (Figure 3):

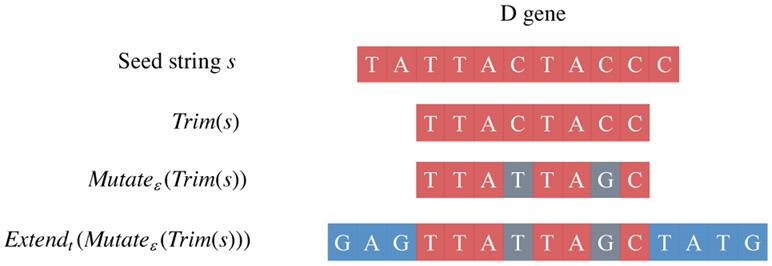

Fig. 3:

Trim, Mutate, and Extend operations model the process of generating a CDR3 of an immunoglobulin gene from a D gene using somatic hypermutations (shown in green) and random insertions (shown in blue).

Trim(s): A pair of integers l and k are sampled uniformly at random from the set of all pairs of non-negative integers (i, j) satisfying the condition i + j ≤ ∣s∣. The prefix of length l and the suffix of length k of s are trimmed.

Mutateε(s): Each letter in s is independently mutated with probability ε in such a way that mutations into all symbols (differing from the symbol in s) are equally likely.

Extendt(s): a string R1 * s * R2 where R1 and R2 are independent instances of Random≤t.

Figure 3 illustrates the Extendt(Mutateε(Trim)) model for generating a CDR3 from a D gene using random deletions/insertions and somatic hypermutations. Before considering this rather complex model, we will consider a series of simpler (albeit less adequate) models for generating CDR3s (Figure 4) that use the operations listed below.

Fig. 4:

Trace generation for various trace reconstruction problems motivated by analysis of immunosequencing data. Insertions (i.e., random strings of random length) are shown in blue. Hypermutations are shown in green.

TrimSuffix(s): an integer k is sampled uniformly at random from [0, ∣s∣] and the suffix of s of length k is trimmed.

TrimSuffixAndExtend(s): an integer k is sampled uniformly at random from [0, ∣s∣], the suffix of s of length k is trimmed, and the resulting string is concatenated with rk.

SuffixExtendt(s): a string s * Random≤t.

TrimAndExtend(s): a pair of integers l and k are sampled uniformly at random from the set of all pairs of non-negative integers (i, j) satisfying the condition i+j ≤ ∣s∣. The prefix of length l and the suffix of length k of s are trimmed resulting in a string Trim(s). TrimAndExtend(s) is defined as rl * Trim(s) * rk.

We will start with a simple TrimSuffixAndExtend model where the seed string and the modified strings are of equal lengths. The next SuffixExtendt(TrimSuffix) model relaxes the assumption that the lengths of all modified strings generated from a seed string are the same since the same D gene can produce CDR3s of different lengths in the VDJ recombination process. In the next SuffixExtendt(Mutateε(TrimSuffix)) model, we further allow mutations to occur in the seed string. This is important because the immune system introduces random somatic hypermutations to increase the affinity towards an antigen.

In the above models, only the suffix of the seed string gets trimmed in the first step. In the real VDJ recombination process, however, D genes get trimmed on both sides. To incorporate this fact in the above models, we next present the TrimAndExtend model that allows trimming on both sides while keeping the lengths of the modified strings the same. This is analogous to the TrimSuffixAndExtend model and the only difference between the two models is that the former gets trimmed on both sides whereas in the latter, only the suffix is trimmed. To introduce mutations in this model, where the seed string gets trimmed on both sides, we then present the Mutateε(TrimAndExtend)) model, while still keeping the lengths of all modified strings the same. Finally, to allow for the possibility of different lengths of modified strings, while keeping intact the trimming from both sides and the random mutations, we introduced the Extendt(Mutateε(Trim)) model which is the most biologically adequate model for VDJ recombination among all introduced models.

All models presented in the next subsection can be extended to the multiple seed strings case where a seed string is chosen randomly from a seed-set, a trace is then generated from the chosen seed string according to a model, and the process is independently repeated a number of times to generate a set of traces. In Section V-A, we will discuss the population recovery problem, which also concerns reconstructing multiple seed strings under a different trace generation model.

The average length and the number of D genes varies among species—for humans and many immunologically important mammals (e.g., mice and rats), the length of D genes does not exceed 40 nucleotides and the number of D genes varies from 20 to 40. In contrast, other immunologically important mammals (e.g., cows) have long (150 nucleotides) and very repetitive D genes. Since future personalized immunogenomics studies may involve thousands or even millions of individuals, the D gene reconstruction algorithms must scale accordingly, e.g., the running time should not exceed a few hours.

A. A Simple but biologically inadequate model for D gene reconstruction

TrimSuffixAndExtend:

Although this model (Figure 4a) does not adequately reflect the realities of VDJ recombination, the trace reconstruction problem for this model can be efficiently solved. A seed string may generate the same trace for different values of the trimming integer k in the TrimSuffixAndExtend model. The probability Pr(c ∣ s) that a seed string s generates a trace c depends only on the length m of their longest shared prefix and is given by

where is constant given the length of the seed string and the alphabet size. The probability that a seed string s generates a trace-set C = {c1, c2,…, cT} is computed as

| (1) |

Trace Reconstruction Problem in the TrimSuffixAndExtend model

Input: A trace-set C generated from an unknown seed string according to the TrimSuffixAndExtend model.

Output: A string s maximizing Pr(C ∣ s).

Solving Trace Reconstruction Problem in the TrimSuffixAndExtend model:

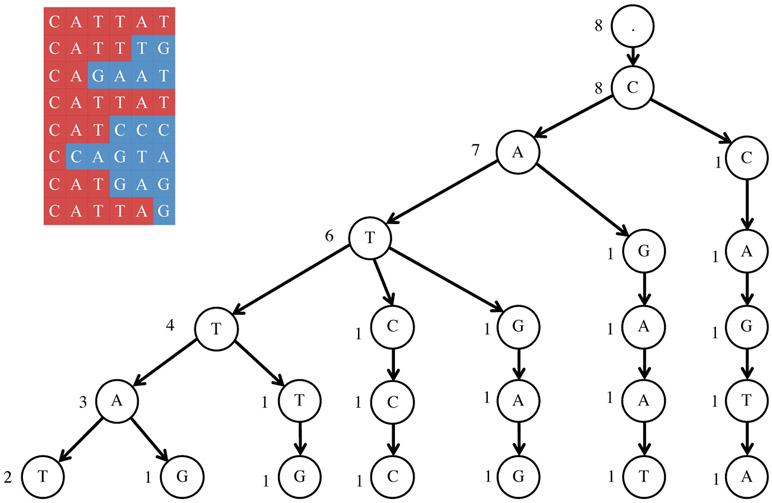

Pr(C ∣ s) is maximized by one of the traces. This observation leads to an algorithm for solving the String Reconstruction Problem (with complexity O(∣s∣ · T2)) that simply computes Pr(C ∣ s) for each of the T traces. We describe an improved algorithm for solving this problem with a running time of O(∣s∣ · T), which is linear in the input size.

Maximizing Pr(C ∣ s) is equivalent to maximizing , where mi is the length of the longest shared prefix between s and ci [23]. Since is a constant, it is equivalent to finding a string s that maximizes

We denote and search for a string s that maximizes where mi is the length of the longest shared prefix between s and ci. We denote a t-symbol prefix (t-prefix) of a string c as ct and the set of all t-prefixes of strings from C as Ct. Given a string s and an integer t, we say that a string c is t-similar to s if t-prefixes of s and c coincide. The number of strings in C that are t-similar to s is denoted as simt(C, s). Given a string s,

| (2) |

We use this recurrence to efficiently compute score (C ∣ s) for each string s from C using dynamic programming on a trie constructed from all traces in C [90]. Each vertex in the trie is a t-prefix st of a string from C, and we recursively compute score(Ct ∣ st) in each vertex of the trie using the above recurrence assuming that the score of the root is . The optimal string corresponds to the leaf node with the maximum score.

For all strings in C and all values of t, the quantities simt(C, s) can be computed during the construction of the trie as follows. Traces are added sequentially to construct the trie. In addition to t-prefixes, each vertex also stores simt(C, s) which is initialized to 1 for a new vertex. For example, in Figure 5, we start with an empty trie and first add the trace “CATTAT” by creating six new vertices, each representing one of the six t-prefixes. At this point, the trie contains only one string, and for all vertices, we have simt(C, s) = 1. Then, we add the next trace “CATTTG”. For t ≤ 4, the t-prefixes of “CATTAT” and “CATTTG” coincide. In other words, they share the first four vertices in the trie. For all vertices that are traversed while inserting a new trace, the values of simt(C, s) are updated by adding 1 to the current values. For new vertices, like before, the values of simt(C, s) are initialized to 1. In this example, for the vertices representing t-prefixes “C”, “CA”, “CAT”, and “CATT”, the value of simt(C, s) will be updated to 2, whereas for the two new vertices representing t-prefixes “CATTT” and “CATTTG”, the values of simt(C, s) will be 1. All traces are inserted to the trie in this manner. We can thus compute all simt(C, s) values during the construction of the trie with complexity O(∣s∣ · T). After the construction of the trie, all quantities score(Ct ∣ st) can then be computed by a single Depth-First Search using Eq. (2).

Fig. 5:

Illustration of the algorithm for solving the String Reconstruction Problem in the TrimSuffixAndExtend model. The set of traces is shown on the left, and their trie is shown on the right. The string associated with each vertex is the one that is formed by traversing the trie from the root node to the vertex. The values of simt(C, s) for all vertices are shown.

TrimSuffixAndExtend model with multiple seeds:

Next, we consider a modified TrimSuffixAndExtend model with a seed-set S = {s1, s2,…, sM}. Traces are generated via a two step approach. First, a string si ∈ S is chosen uniformly randomly from S. Then, si is modified to generate a trace c according to the TrimSuffixAndExtend model. We note that S can either be an arbitrary set of M strings (worst-case) or the strings in S can be chosen independently and uniformly from the universe of possible strings (average-case). Note that the above model is described for a uniform distribution over the seed strings. In the real VDJ recombination process, various D genes contribute to immunoglobulin genes with varying propensities. To incorporate this fact, the above model can be reformulated by considering an arbitrary distribution on the seed strings.

Trace Reconstruction with Multiple Seeds Problem in the TrimSuffixAndExtend model

Input: A trace-set C generated from an unknown set of M seed strings of the same length according to the TrimSuffixAndExtend model.

Output: A set of strings S = {s1, s2,…, sM} maximizing Pr(C ∣ S).

The MINING-D heuristic algorithm:

Although the trace reconstruction problem can be efficiently solved in the TrimSuffixAndExtend model, it is unclear how to generalize the algorithm for the more complex models with multiple D genes and varying lengths of modified strings. Bhardwaj et. al. [23] propose a practical greedy heuristic for this model that, while being suboptimal, motivates practical algorithms for more complex models.

For the TrimSuffixAndExtend model, the algorithm starts with an empty string and at step j extends it on the right by the most abundant symbol in C at position j and discards from C the strings that have symbols that are not the most abundant symbols at position j. This procedure repeats until the length of the resulting string equals the length of the seed string s. This greedy algorithm, however, cannot be directly used in practice because (a) the CDR3s are formed by multiple D genes, (b) the number of D genes is unknown a priori, (c) the D genes have different lengths that are unknown, (d) CDR3s generated by the same D gene can have different lengths.

The MINING-D algorithm [23], inspired by the above greedy algorithm, considers the complexities of the real immunogenomics data. It uses the observation that, although D genes typically get truncated on both sides during the VDJ recombination process, their truncated substrings are often present in the newly recombined genes, and, hence, the CDR3s. Therefore, we expect the truncated substrings of D genes to be highly abundant in a CDR3 dataset. MINING-D starts by finding the most abundant k-mers (a k-mer is a string of length k). It then extends them on both sides using the greedy algorithm to recover entire D genes that contain highly abundant k-mers as substrings. MINING-D defines a probabilistic stopping rule as the lengths of the D genes are not known a priori. This stopping rule also allows us to recover D genes of different lengths. Since some abundant k-mers can be substrings of multiple D genes, MINING-D allows multiple extensions from each k-mer in the extension procedure.

We next introduce models that incorporate more complexities of the VDJ recombination process, leading up to the model that mimics the real formation of an immunoglobulin gene from a set of V, D, and J genes. To the best of our knowledge, these models have not been studied in the literature and brute-force search is the only known exact solution to trace reconstruction in these models.

B. Toward a biologically adequate model for D gene reconstruction

SuffixExtendt(TrimSuffix):

Unlike the TrimSuffixAndExtend model, the SuffixExtendt(TrimSuffix(s)) model (Figure 4b) generates traces of varying lengths from a single seed string s. Let strim be the substring of s that remains after the operation TrimSuffix is applied on s. Then, Pr(c ∣ s) is given by

Let m be the length of the longest shared prefix between c and s, as before. Then, Pr(c ∣ s, ∣strim∣ = k) is non-zero only if ∣c∣ – t ≤ k ≤ m and can be written as

Thus Pr(c ∣ s) is zero if m < ∣c∣ – t. Otherwise,

| (3) |

where x+ = max(x, 0).

Trace Reconstruction Problem in the SuffixExtendt(TrimSuffix(s)) model

Input: A trace-set C generated from an unknown seed string according to the SuffixExtendt(TrimSuffix(s)) model.

Output: A string maximizing Pr(C ∣ s).

SuffixExtendt(Mutateε(TrimSuffix)):

We now consider a slightly more realistic model for trace generation that incorporates somatic hypermutations (Figure 4c). The probability Pr(c ∣ s) that a seed string s generates a trace c is given by

where dk is the Hamming distance between the prefixes of c and s of length k.

Trace Reconstruction Problem in the SuffixExtendt(Mutateε(TrimSuffix)) model

Input: A trace-set C generated from an unknown seed string according to the SuffixExtendt(Mutateε(TrimSuffix)) model.

Output: A string maximizing Pr(C ∣ s).

TrimAndExtend:

In all the models above, only the suffix of the seed string gets trimmed in the first step. In contrast, during the VDJ recombination process, the D gene gets trimmed from both sides. We will thus consider the TrimAndExtend model (Figure 4d) for generating a trace c from a seed string s.

Since strings s and c have the same length, their comparison results in a binary comparison vector where 1s (0s) correspond to the match (mismatch) positions. Let t(i) denote the length of the continuous run of 1s starting at position i + 1 in the comparison vector. The probability that a seed string s generates a trace c is given by

Trace Reconstruction Problem in the TrimAndExtend model

Input: A trace-set C generated from an unknown seed string according to the TrimAndExtend model.

Output: A string maximizing Pr(C ∣ s).

Mutateε(TrimAndExtend):

We now consider a model that incorporates mutations in the TrimAndExtend model (Figure 4e). Let substringl,k(s) be the substring of the seed string s where the prefix of length l and the suffix of length k have been trimmed. The probability that a seed string s generates a trace c in the Mutateε(TrimAndExtend) model is given by

where dl,k is the Hamming distance between substringl,k(c) and substringl,k(s).

Trace Reconstruction Problem in the Mutateε(TrimAndExtend) model

Input: A trace-set C generated from an unknown seed string according to the Mutateε(TrimAndExtend) model.

Output: A string maximizing Pr(C ∣ s).

Extendt(Mutateε(Trim)):

The biologically adequate model for generating traces from a seed string is the Extendt(Mutateε(Trim)) model illustrated in Figure 3. This model is more complex than the previous ones as it requires consideration of all possible pairs of equally sized substrings of the seed string and the trace. Note that in all previous models, the traces either had the same length as the seed string, or were aligned with the seed string on the left. Let subl(s) denote all the substrings of s of length l and denote all substrings of c of length l such that the number of symbols in c before or after the substring do not exceed t. Then, the probability that a seed string s generates a trace c in the Extendt(Mutateε(Trim)) model is given by

| (4) |

where ds1,s2 is the Hamming distance between strings s1 and s2.

Trace Reconstruction Problem in the Extendt(Mutateε(Trim)) model

Input: A trace-set C generated from an unknown seed string according to the Extendt(Mutateε(Trim)) model.

Output: A string maximizing Pr(C ∣ s).

C. Trace Reconstruction of V, D, and J genes

Above, we considered the trace reconstruction problems that are relevant to generating a CDR3 from a D gene. We will now consider more complex trace reconstruction problems that model concatenation of V, D, and J genes to form an entire immunoglobulin gene (Figure 6). We will start from the simplest problem when each trace represents a concatenation of just two traces generated by two different seed strings.

Fig. 6:

Trace generation that involves concatenation of multiple seed strings. Insertions are shown in light blue, hypermutations are shown in green. The most general model for the VDJ recombination is shown in (b).

SuffixExtendt(TrimSuffix)*SuffixExtendt(TrimSuffix):

We first consider a model when two seed strings s1, s2 of equal length n generate a single trace c according to the SuffixExtendt(TrimSuffix(s1))*SuffixExtendt(TrimSuffix(s2)) model (Figure 6a). Let prefixl(s) and suffixl(s) be the prefix and suffix of string s of length l. The probability that the seed strings s1 and s2 generate a trace c is given by

| (5) |

where Pr(prefixl(c) ∣ s1) is defined according to the SuffixExtendt(TrimSuffix) model (Eq. 3) if l ≤ n + t and 0 otherwise. Pr(suffix∣c∣–l(c) ∣ s2) is defined similarly.

Trace Reconstruction Problem in the SuffixExtendt(TrimSuffix)*SuffixExtendt(TrimSuffix) model

Input: A trace-set C generated from two unknown seed strings according to the SuffixExtendt(TrimSuffix)*SuffixExtendt(TrimSuffix) model.

Output: Strings s1 and s2 maximizing Pr(C ∣ s1, s2).

SuffixExtendt(TrimSuffix)*SuffixExtendt(TrimSuffix) model with multiple seeds:

Next, we consider a modification of the above model where each trace is generated by two sets of seed strings of the same length n, and , rather than a pair of seed strings. Seed strings s1 and s2 are randomly chosen (from the sets S1 and S2 according to a uniform distribution) and the chosen strings generate a trace according to the SuffixExtendt(TrimSuffix)*SuffixExtendt(TrimSuffix) model.

Trace Reconstruction with Multiple Seeds Problem in the SuffixExtendt(TrimSuffix)*SuffixExtendt(TrimSuffix) model

Input: A trace-set C generated from two unknown sets containing M1 and M2 seed strings according to the SuffixExtendt(TrimSuffix)*SuffixExtendt(TrimSuffix) model.

Output: A set of M1 seed strings and a set of M2 seed strings maximizing Pr(C ∣ S1, S2).

VDJ recombination model (single v, d, and j seed strings):

We now consider a model when three strings v, d, and j of length nv, nd, and nj respectively generate a trace c according to the Mutateε(TrimSuffix(v)*Extendt(Trim(d))* TrimPrefix(j)) model (Figure 6b). Here, TrimPrefix(s) is defined similarly to TrimSuffix(s), where an integer k is sampled uniformly from [0, ∣s∣], and the prefix of s of length k is trimmed. However, like the Extendt(Mutateε(Trim))) model, it is a complicated model because one must consider all triples of substrings of the trace c. The probability Pr(c ∣ v, d, j) that the seed strings v, d, and j generate a trace c is given by

where P1 (prefixi(c) ∣ v) is given by

where di is the Hamming distance between prefixi(c) and prefixi(v). P2(substringi,j(c) ∣ d) is defined as in Eq. (4). P3 is defined similarly to P1.

Trace Reconstruction Problem in the VDJ recombination (single v, d, and j seed strings) model

Input: A trace-set C generated from three unknown seed strings according to the VDJ recombination model.

Output: Three strings s1 , s2, and s3 maximizing Pr(C ∣ v, d, j).

VDJ recombination model (multiple v, d, and j seed strings):

We will now consider a model when three seed-sets V = {v1, v2, …, vMv}, D = {d1, d2,…, dMd}, and J = {j1, j2,… , jMj} generate a trace c according to the following model. One string from each of the sets V, D, and J is uniformly randomly chosen and the chosen strings v, d, and j generate a trace according to the VDJ recombination model. The probability that a trace c is generated by seed strings in V, D, and J is given by

Trace Reconstruction Problem in the VDJ recombination (multiple v, d, and j seed strings) model

Input: A trace-set C generated from three unknown seed-sets (containing Mv, Md, and Mj strings respectively) according to the VDJ recombination model.

Output: Set S1 with Mv strings, set S2 with Md strings, and set S3 with Mj strings maximizing Pr(C ∣ S1, S2, S3).

IV. Trace Reconstruction problems for DNA Data storage

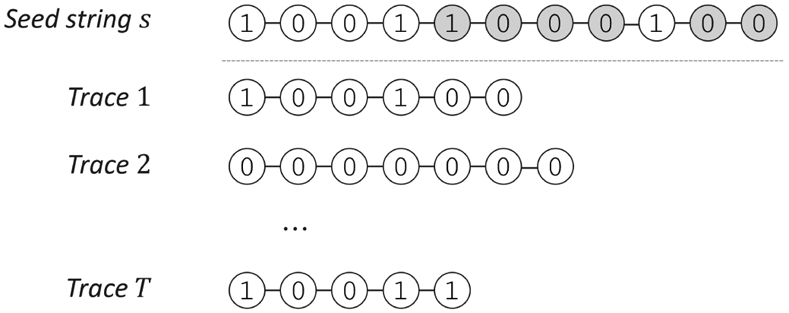

A popular formulation of trace reconstruction considers the deletion channel, where random symbols in the seed string s are deleted independently with probability q and 0 < q < 1 is the deletion probability. This produces a trace c representing a random subsequence of s. This process is repeated independently T times to produce a random trace-set C (Figure 7). The trace reconstruction algorithm takes the traces (without any information about which symbols were deleted from the seed string), the length of the seed string, and the deletion probability as an input. For simplicity, we focus on binary seed strings, while the definitions can be extended to larger alphabets.

Fig. 7:

Seed string and example traces from the deletion channel. Gray circles indicate the deleted bits to generate the bottom trace.

The maximum likelihood solution would output the string s that maximizes Pr(C ∣ s) for the given trace-set C. We first consider the probability Pr(c ∣ s) for a single trace c. Let Ns(c) denote the number of times c appears as a subsequence of s. For example, if s = 11010 then c = 110 appears Ns(c) = 4 times, corresponding to the subsequences {110••, 11•• 0, 1•• 10, • 1 • 10}, where • denotes a deleted symbol. The value of Ns(c) can be computed using dynamic programming [21]. Recalling that ∣s∣ denotes the length of a string, the probability Pr(c ∣ s) can be computed as follows

Since each trace in C is produced independently, we have that

The value Pr(C ∣ s) can be calculated for any fixed s. However, the optimization problem that determines the optimal s is challenging. Designing an efficient algorithm (with time polynomial in ∣C∣ and ∣s∣) that outputs a string s maximizing Pr(C ∣ s) is an open question. Partial results are known when ∣C∣ is very small [19], [91]–[94].

We focus on the success probability in this section, and we also restrict to length n seed strings. We define the worst-case success probability of an algorithm A over all binary strings of length n as

Similarly, the average-case success probability of A over all binary strings of length n is

Trace Complexity:

Most previous work provides information-theoretic results in terms of the trace complexity, which is the minimum value of T such that there exists an algorithm with success probability at least the given ReconstructionRate. This will depend on the deletion probability q. For any fixed ReconstructionRate, the number of input traces must be at least the trace complexity for the algorithmic problem to be feasible. It is often convenient to fix the ReconstructionRate to a default value, such as ReconstructionRate = 0.95. This does not affect the trace complexity too much because arbitrarily large ReconstructionRate can be achieved by increasing the number of traces by a logarithmic factor (taking a majority vote over several trials). Therefore, we define the worst-case trace complexity as

and the average-case trace complexity as

The trace complexity may depend on the error rate. Certain algorithms only succeed when the deletion probability decreases as a function of the length n of the seed string. Historically, the initial results assume that the deletion probability scales inversely with n, e.g., or q = O(1/log n) [4], [12], [20]. These results have been later strengthened to handle a constant rate of deletions, e.g., q = 0.5 [7], [13], [18]. The extent to which the deletion probability impacts the trace complexity remains unknown in general.

For simplicity, we restrict our attention to the deletion channel, but many of the results that we discuss also extend to a more general error model that includes insertions and substitutions [7], [13], [18], [20]. We refer the reader to the following surveys for other error models and related theoretical open questions [91], [95].

V. Theoretical Results on Trace Reconstruction

We survey theoretical results for reconstructing a seed string s of length n. We begin with three variants depending on the nature of the unknown string: it can be arbitrary (worstcase); it can be chosen uniformly at random (average-case); or, it can be chosen from a predefined set of encoded strings (coded trace reconstruction). For each variant, we first present a formal problem statement. The information-theoretic goal is to determine the values of the parameters T, q, n, and ReconstructionRate for which the problem is solvable. The next step is to design an efficient algorithm for such cases. In the latter half of this section, we also mention generalizations to multiple strings and to higher-order structures (such as trees). We conclude with a brief description of some recent practical developments. Throughout, we use to abbreviate the output of a reconstruction algorithm A on an input trace-set C.

Worst-case trace reconstruction:

We first describe the case where the seed string s is arbitrary, and the success probability is calculated over the randomness in generating the trace-set C.

Worst-Case Trace Reconstruction Problem for the Deletion Channel

Input: A random trace-set C of size T generated from a seed string s of length n according to the deletion channel model with deletion probability q, as well as the ReconstructionRate.

Output: A string such that with success probability at least ReconstructionRate.

The current best trace complexity for worst-case strings is Tq(n) = exp(O(n1/5 log5 n)) when the deletion probability q is at most 1/2 [96]. When q ∈ (1/2, 1), then the known result is Tq(n) = exp(O(n1/3)) [7], [18]. The latter result uses a mean-based algorithm that first pads each trace with trailing zeros so that the length equals the seed length n (here, we consider a binary alphabet). Then, the mean of the traces is computed by summing the padded traces coordinate-wise and normalizing by the number of traces (i.e., this computes the fraction of ones in each position). It is known that when the number of traces is at least exp(O(n1/3)) then these means suffice to determine the unknown string with high success probability [7], [18]. The improvement to Tq(n) = exp(O(n1/5 log5 n)) when q ≤ 1/2 uses a similar algorithm, with the subtle difference and important difference that certain substring frequencies are approximated instead of single bits [96].

An intriguing aspect of the worst-case result is the use of techniques from complex analysis. The elegant argument involves expressing the mean-based statistics (from averaging the padded traces) in terms of a complex-valued generating function (whose coefficients are determined by the seed string and deletion probability). The aim is to lower bound the statistical distance between trace-sets that are generated from distinct seed strings. It is fairly easy to show that the maximum modulus of this function in a certain arc of the complex unit disk provides such a lower bound. Then, to complete the proof, the authors use prior results on Littlewood polynomials [97], [98]. This argument serves as the basis of a trace reconstruction algorithm with running time proportional to the number of traces. Surprisingly, the bound is tight for mean-based algorithms, in the sense that exp(Ω(n1/3)) traces are necessary if an algorithm uses only the coordinate-wise means [7], [18]. These results have further inspired the use of related generating functions to derive improved bounds for other statistical learning problems [99], [100].

Improvements to the trace complexity are known for a very small deletion probability; if each bit is deleted with probability less than n−1/2–δ for a small constant δ, then a nearly-linear number of traces suffice [12]. We note that mean-based algorithms extend to handle insertions and substitutions as well [7], [18]. It is an open question to determine the smallest deletion probability such that a polynomial number of traces suffice. When the deletion probability does not decrease with n (e.g., q = 0.5), then lower bounds on the trace complexity are known. Previous work shows traces are necessary [8], [11], where the notation hides polylog factors.

The central open problem is to close the exponential gap between upper and lower bounds on the worst-case trace complexity. A first step could be to better understand which seeds strings are the most challenging to reconstruct. For many algorithms, simple strings demonstrate that the current analysis is tight. However, other methods readily reconstruct these strings. For example, the lower bound is derived for the task of distinguishing a pair of alternating strings with two flipped bits, e.g..

1010 ⋯ 101010 ⋯ 1010

1010 ⋯ 100110 ⋯ 1010

Telling apart these strings using traces is straightforward, and an algorithm using traces is known. Hence, the lower bound for this pair is nearly tight [8], [11]. Another futile attempt comes from considering a uniformly random string. In many areas, the probabilistic method suffices to identify difficult instances [101], [102]. For reconstruction problems, the opposite is often true: random objects can be reconstructed with less information than worst-case instances [103]-[105]. In particular, random strings are easier to reconstruct, as we will now see.

Average-case trace reconstruction:

We move on to consider the case when the seed string s is a uniformly random length n string. In this case, the seed string is chosen randomly before generating each set of traces, and the success probability is calculated with respect to both the trace-set generation and the choosing of the seed string.

Average-Case Trace Reconstruction Problem for the Deletion Channel

Input: A random trace-set C of size T generated from a uniformly random seed string s of length n according the deletion channel model with deletion probability q, as well as the ReconstructionRate.

Output: A string such that with success probability at least ReconstructionRate.

The current best upper bound on the trace complexity is for uniformly random strings, and this holds for any deletion probability q bounded away from one [13]. This upper bound is exponentially better than the result for worst-case strings [7], [18]. The lower bound for average-case reconstruction shows that traces are necessary to reconstruct a random string with constant deletion probability, where here the notation hides log log n factors [8], [11]. When the deletion probability scales inverse-logarithmically with n, then logarithmic upper bounds on the average-case trace complexity are known [4], [20].

The algorithms for average-case reconstruction are much more involved than the current methods for worst-case reconstruction. Instead of relying only on statistical quantities, the algorithm iteratively reconstructs the seed string one character at a time. At the beginning, a small number of traces are used to learn a short prefix exactly. This partial reconstruction then serves as an anchoring method to approximately align the traces. When the seed string is random, its short substrings are locally unique with high probability, and therefore, such alignments can be reliable. The algorithm moves left-to-right and employs a worst-case algorithm to reconstruct the next bit. This general approach, along with a careful analysis of the alignment process, led to an algorithm that requires traces when the deletion probability is less than 0.5 [106], building on a similar approach that uses poly(n) traces [12]. Subsequent work extends this idea with a more sophisticated alignment method and many technical developments, leading to the best known algorithm for average-case trace reconstruction that achieves a trace complexity of for any deletion probability q bounded away from one [13]. Recently, an algorithm has also been proposed that achieves a polynomial number of traces in a smoothed-analysis setting that interpolates between the worst-case and average-case reconstruction problems; more specifically, in this model, a worst-case seed string is first randomly perturbed, where each bit is flipped with some probability less than 1/2, and then the traces are all generated from this randomized string [107].

Coded trace reconstruction:

The next variation assumes that the seed string s is chosen from a predefined set of possible strings (e.g., these may be codewords from a suitable code, where it is desirable for these codewords to have an efficient construction procedure as well). For example, in DNA data storage, there is flexibility to encode the seed strings. The definition of success probability can either be the minimum over all predefined seed strings (worst-case) or the expectation over a uniformly random predefined seed string (average-case).

Coded Trace Reconstruction Problem for the Deletion Channel

Input: A random trace-set C of size T generated from a seed string s of length n according to the deletion channel model with deletion probability q, where s is guaranteed to be from a predefined set of possible strings, as well as the ReconstructionRate.

Output: A string such that with success probability at least ReconstructionRate.

Compared to reconstructing worst-case strings, better trace complexity upper bounds are known. The improvement depends on the number of possible encoded strings, i.e., the rate of the code [5], [6], [9]. We mention a few results that exemplify different regimes. For this discussion, we consider worst-case reconstruction, where the success probability guarantee holds for all predefined strings. It will also be convenient to frame the encoding process as adding redundancy to an arbitrary seed string. The code maps the unknown seed string s of length n to a new string s′ of larger length n′ > n. Applying this mapping to all possible strings generates the predefined seed strings in the coded trace reconstruction problem. The objective is to simultaneously minimize n′ while developing an efficient reconstruction algorithm with small trace complexity.

We say the code has redundancy n′ – n equal to the number of extra characters in the encoding. When the redundancy is small, such as O(n/log n), algorithms are known with trace complexity polylog(n), which is sublinear in seed string length [9]. The high-level strategy is to create the new string s′ by concatenating many codewords. The added redundancy comes from padding the codewords with a run of zeros followed by a run of ones. For example, the codewords could have length Θ(log2 n) and runs have length Θ(log n). This implies that none of the padded portions are deleted in a trace with high probability. The padding enables the algorithm to align the codeword portions in each trace. The redundancy for such a scheme is O(n/log n). After identifying the padded and codeword portions, the encoded seed string s′ can be reconstructed from polylog(n) traces.

In the larger redundancy regime, such as redundancy εn with ε ∈ (0, 1) being a constant, an improved trace complexity of exp(O(log1/3 (1/ε))) is achievable [6]. Recent work also more thoroughly studies coded trace reconstruction in the insertion/deletion channel when there are a constant number of errors or a constant number of traces [5], [92], [108]-[110]. Before integrating these results into a DNA data storage system, certain ulterior constraints should be addressed as well. The synthesis process imposes limitations on the seed string length, and hence, the redundancy must be relatively small [24], [30]. Trace reconstruction is also only one part of the pipeline. The encoding and decoding schemes may need to satisfy other properties, such as error-correction capabilities [30] and enough separation between seed strings to enable clustering [46].

Non-uniform error rate:

The deletion channel model assumes that the deletion probability q is fixed for all characters in the seed string. A biologically relevant modification considers varying deletion probabilities, where the position or value of each character may affect the error probability [10]. For certain assumptions on the deletion probabilities, the current best algorithm is the same as for worst-case strings with constant deletion probability (i.e., a mean-based algorithm), and the trace complexity is asymptotically the same exp(O(n1/3)) as well. It is an important open question to extend current theoretical results to more realistic error models.

A. Reconstruction of multiple seed strings

In many applications, the goal is to reconstruct a set of unknown seed strings (rather than a single seed string) given a set of their traces. For example, in DNA data storage, the original set of short seed strings is stored together as an unordered collection in a tube. Recovering the data results in a set of traces arising from these seed strings and involves accurately determining a large fraction of the seed strings. Storing and retrieving a set of strings leads to interesting coding-theoretic problems as well [56], [59], [61], [63], [64], [68], [69].

Trace reconstruction for multiple strings has been explored recently [111]-[113]. Historically, this originates in the area of population recovery, determining an unknown distribution over a set of strings [114], [115]. In the language of trace reconstruction, the population recovery model can be described as follows. There is an unknown set S of seed strings, where only the number of strings in S is given as an input. The traces are generated using a two-step process. First, a string s is chosen randomly from the set of seed strings S based on the uniform distribution over S. Then, a trace is produced from s. This process repeats T times, leading to a trace-set C. The goal is to reconstruct at least a 1 – δ fraction of the strings in S for a given accuracy parameter 0 < δ < 1. In other words, the algorithm outputs a candidate set with , and we require that . The success probability is defined as the probability that , calculated over the random trace-set.

Analogous to the single string problems, there are variations depending on whether a set of seed strings is an arbitrary (worst-case) or random (average-case) set of strings [111]-[113]. For the worst-case version, we define the success probability over the randomness in the trace-set generation. For the average-case version, we also include the probability of choosing random set S of length n strings where ∣S∣ is fixed. We remark that prior work actually considers a more intricate population recovery model for a non-uniform distribution over S [111], [112], [114], [115]. However, we use the uniform distribution because it seems more relevant to practical applications (e.g., in DNA data storage, the seed strings are chosen from S with approximately equal probability).

Multiple String Trace Reconstruction Problem for the Deletion Channel

Input: A random trace-set C of size T generated from a set of unknown seed strings S of length n according the Deletion Channel model with deletion probability q and an accuracy parameter δ, as well as the ReconstructionRate.

Output: A set of strings with such that with success probability at least ReconstructionRate.

The output is verifiable when the original set of strings S is known. In DNA data storage, the set S corresponds to the set of strings that store the data, which may be used to benchmark a reconstruction algorithm.

Average-case population recovery problem has a straightforward reduction to the single string case, both in theory [112] and in practice [30], [46]. When the seed strings are sufficiently long, they are also far apart geometrically because they have pairwise edit distance scaling linearly with their length [47]-[49]. This ensures a clear separation between groups of traces that come from one seed string rather than another. Clustering methods can accurately partition the trace-set into subsets that are generated from each individual seed string [46], [112]. Then, algorithms for the average-case problem will succeed in exactly reconstructing most of the seed strings from the clusters. When there are ∣S∣ = M seed strings, the trace complexity is poly(M) · exp(O(log1/3 n)) [112].

Reconstructing a worst-case collection of seed strings is more challenging. The first approach to do so rigorously relied on subsequence statistics, and their method uses traces [111]. Subsequent work improved this bound by showing how to extend the mean-based analysis for the worst-case reconstruction of a single seed string [113]. The resulting algorithm uses only exp(O(M3 · n1/3)) traces. Notice that when M = 1, then this matches the best known bound for a single worst-case string [7], [18].

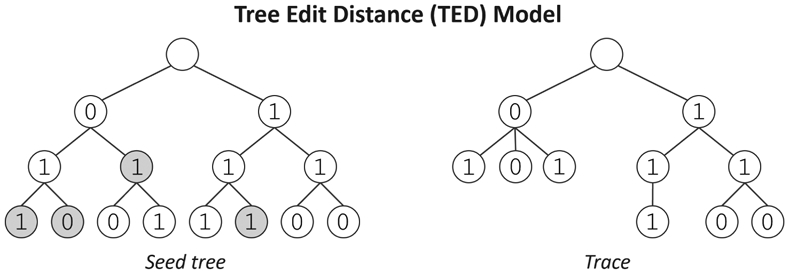

B. Reconstructing Higher-Order Structures

Recent work proposes a generalization of string trace reconstruction, known as tree trace reconstruction [116]. The goal is to reconstruct a node-labeled tree using traces from a channel that deletes nodes. The tree topology is known ahead of time, and learning the unknown node labels is the sole objective. They propose two deletion models that differ from each other based on how the children of a deleted node move in the tree. Figure 8 depicts an example tree and trace for one of the models, which is derived from the notion of tree edit distance. When a node is deleted, its children move up to become children of the deleted node’s parent. In particular, deletions still result in a connected tree. For technical reasons, the root is never deleted. The model assumes a left-to-right ordering of every level, and hence, the trees are presented in a consistent way. The tree reconstruction problem in this model generalizes string reconstruction from the deletion channel, coinciding when the tree is a path.

Fig. 8:

Labeled seed tree and example trace from the Tree Edit Distance deletion channel. Gray circles indicate deleted nodes.

The tree reconstruction problem provides a vantage point to study the complexity of reconstructing higher-order structures. Perhaps surprisingly, for many classes of trees, such as complete k-ary trees and multi-arm stars (a.k.a. spider trees), a polynomial number of traces suffice for worst-case reconstruction [116]. This is in contrast to the string case, where the current algorithms use exponentially many traces [7], [18]. The algorithms for reconstructing complete k-ary trees also differ significantly from the known methods for string reconstruction. As there is more structure in the tree, combinatorial methods can be used to identify the location of certain subtrees. The algorithms make heavy use of traces that contain a root-to-leaf path of the same length as the depth of the seed tree. If the deletion probability is constant, and the tree has depth O(log n), then such a path survives with inverse-polynomial probability. Under certain conditions, the nodes in such paths suffice to recover the corresponding labels. The algorithm for reconstructing spider trees proceeds via a mean-based approach (analogous to the worst-case reconstruction results [7], [10], [18]). This involves generalizing the complex-analytic techniques to capture mean-based statistics for spider trees. It also is known that paths (a.k.a. strings) are the most difficult tree because any tree can be reconstructed using a string reconstruction algorithm with the same asymptotic trace complexity. Related endeavors study reconstructing matrices from a channel that deletes rows and columns [14] or circular seed strings from a channel that applies a random circular permutation before deleting characters [117].

Biological motivation for the tree trace reconstruction problem can be loosely attributed to the goal of identifying certain molecules that inherently have a tree-like structure. For example, recent advances have shown that tree-structured DNA is useful for bio-sensing applications [118], [119] and storing digital information [120]. In these applications, a variety of tree topologies have been studied. The DNA molecule could take a star-shaped form, with multiple arms connected to a shared center. The arms may be single- or double-stranded DNA, and each arm of the star contains roughly 50–100 nucleotides. Such nanostructures have been developed in the context of DNA-based nanomaterials [121], using building blocks such as a 4-arm star, known as a Holliday junction [122].