Abstract

The orbitofrontal cortex (OFC) plays a prominent role in signaling reward expectations. Two important features of rewards are their value (how good they are) and their specific identity (what they are). Whereas research on OFC has traditionally focused on reward value, recent findings point toward a pivotal role of reward identity in understanding OFC signaling and its contribution to behavior. Here, we review work in rodents, non-human primates, and humans on how the OFC represents expectations about the identity of rewards, and how these signals contribute to outcome-guided behavior. Moreover, we summarize recent findings suggesting that specific reward expectations in OFC are learned and updated by means of identity errors in the dopaminergic midbrain. We conclude by discussing how OFC encoding of specific rewards complements recent proposals that this region represents a cognitive map of relevant task states, which forms the basis for model-based behavior.

Introduction

In order to make adaptive decisions, organisms must be able to learn associations between rewards experienced in the environment and the sensory cues and actions that predict their occurrence. Knowledge about these associations allows one to choose optimally amongst various options, and to make appropriate approach and consummatory responses. This necessarily involves the formation of internal representations of expected rewards that are evoked by predictive cues. A large body of work has identified the orbitofrontal cortex (OFC) as a key substrate involved in signaling cue-reward associations (O’Doherty, 2007; Rolls, 2000; Rushworth, Noonan, Boorman, Walton, & Behrens, 2011). What remains unclear, however, is what type of information about expected rewards is represented in OFC, and how that information contributes to decision-making.

Traditionally, most research on human and animal OFC has focused on identifying neural correlates of subjective value that are invariant to the specific type or modality of the reward (Chib, Rangel, Shimojo, & O’Doherty, 2009; Chikazoe, Lee, Kriegeskorte, & Anderson, 2014; Critchley & Rolls, 1996; Gross et al., 2014; Kim, Shimojo, & O’Doherty, 2011; D. J. Levy & Glimcher, 2011; McNamee, Rangel, & O’Doherty, 2013; Padoa-Schioppa & Assad, 2006; Schoenbaum & Eichenbaum, 1995). According to economic theories, such general value signals would allow disparate options to be compared on a common scale so that the best option can be chosen (D. J. Levy & Glimcher, 2012; Montague & Berns, 2002; Padoa-Schioppa, 2011). However, value is not the only relevant attribute of rewards. For instance, in order to appropriately guide behavior toward essential rewards such as food, shelter, or mates, behavioral control mechanisms must also have access to predictive information about the identity of rewards (Cardinal, Parkinson, Hall, & Everitt, 2002; Rudebeck & Murray, 2014). Here we use ‘reward identity’ to refer to the holistic representation of a specific outcome. Importantly, this conceptualization of identity does not imply an explicit representation of the sensory or perceptual features of a reward; rather, it treats each specific outcome as a point in an abstract state space that may change with task demands. Such representations of specific rewards are the cornerstone of so-called goal-directed (i.e., outcome-guided or model-based) behaviors, which enable us to flexibly adapt decision-making according to current motivational states or environmental contingencies, without the need for tedious trial-and-error learning (Balleine & Dickinson, 1998; Daw, Niv, & Dayan, 2005; O’Doherty, Cockburn, & Pauli, 2017).

While the importance of reward identity is immediately obvious for the complex behavior of primates and rodents, responding that is sensitive to specific reward expectations is found even in much simpler organisms. A previous experiment in larval Drosophila exemplifies how specific reward expectations are incorporated into even the most basic neural circuitry (Schleyer, Miura, Tanimura, & Gerber, 2015). Larvae were trained to approach an odor source that had previously been paired with a sugar reward. When tested in the presence of the original sugar reward, approach to the odor source was abolished. In contrast, when tested in the presence of an equally valued but qualitatively different aspartic acid reward, larvae continued to approach the odor source. This demonstrates that a portion of learned responding, even in organisms as simple as larval Drosophila, can be attributed to the specific identity of predicted rewards.

This review synthesizes recent work demonstrating that the OFC is a key region for signaling expectations about specific rewards. We first discuss studies in humans, non-human primates, and rodents that demonstrate how reward identity information is encoded in OFC in response to predictive cues. We then consider how specific reward representations dynamically contribute to decision-making, and review how OFC damage or inactivation impairs behavior that requires representations of specific outcomes. Next, we examine how limbic and midbrain regions interact with OFC to learn and update specific reward expectations. Finally, we discuss how reward identity is a fundamental feature of cognitive maps in OFC, which form the basis for our ability to use planning and inference to efficiently solve a variety of complex cognitive tasks. This review focuses on studies that investigate anticipatory representations of specific primary rewards and that experimentally control for the sensory features of predictive cues. We note, however, that the ubiquity of higher-order conditioning, for example involving money, can blur the distinction between cues and rewards.

It is worth noting at the outset that the OFC is a large and heterogeneous region, covering much of the ventral surface of the frontal lobe. As such, the specific functions of OFC subregions, defined based on cytoarchitecture (Henssen et al., 2016), anatomical connections (Carmichael & Price, 1996), and functional connectivity (Kahnt, Chang, Park, Heinzle, & Haynes, 2012), remain a topic of active investigation. While several excellent review papers discuss cross-species comparisons of OFC subregions (Rudebeck & Murray, 2011; Wallis, 2011), we do not make such detailed considerations a focus of the present review. However, signals related to reward identity tend to be found in the central/lateral OFC (areas 13, 11l, and 12/47) in humans and non-human primates (J. D. Howard, Gottfried, Tobler, & Kahnt, 2015; J. D. Howard & Kahnt, 2018; McNamee et al., 2013; Padoa-Schioppa & Assad, 2006), and in lateral (as opposed to medial) OFC in rodents (McDannald et al., 2014; Stalnaker et al., 2014). This region corresponds roughly to the “orbital” network identified by Carmichael and Price (1996), and receives projections from sensory cortices that ostensibly contain information about specific reward identities. Conversely, medial OFC (areas 14 and 11m) and adjacent ventromedial prefrontal cortex (vmPFC) primarily show correlations with outcome-general value in human imaging studies (Bartra, McGuire, & Kable, 2013; Clithero & Rangel, 2014; D. J. Levy & Glimcher, 2012). Both the medial OFC and vmPFC are part of the “medial” network in primates and are connected primarily to limbic and visceromotor areas (Carmichael & Price, 1996). However, exceptions to this strict functional distinction between medial and central/lateral OFC can be found (e.g., Klein-Flugge, Barron, Brodersen, Dolan, & Behrens, 2013; McNamee et al., 2013), indicating that the precise functional organization of OFC subregions requires further investigation.

Representations of specific rewards in OFC

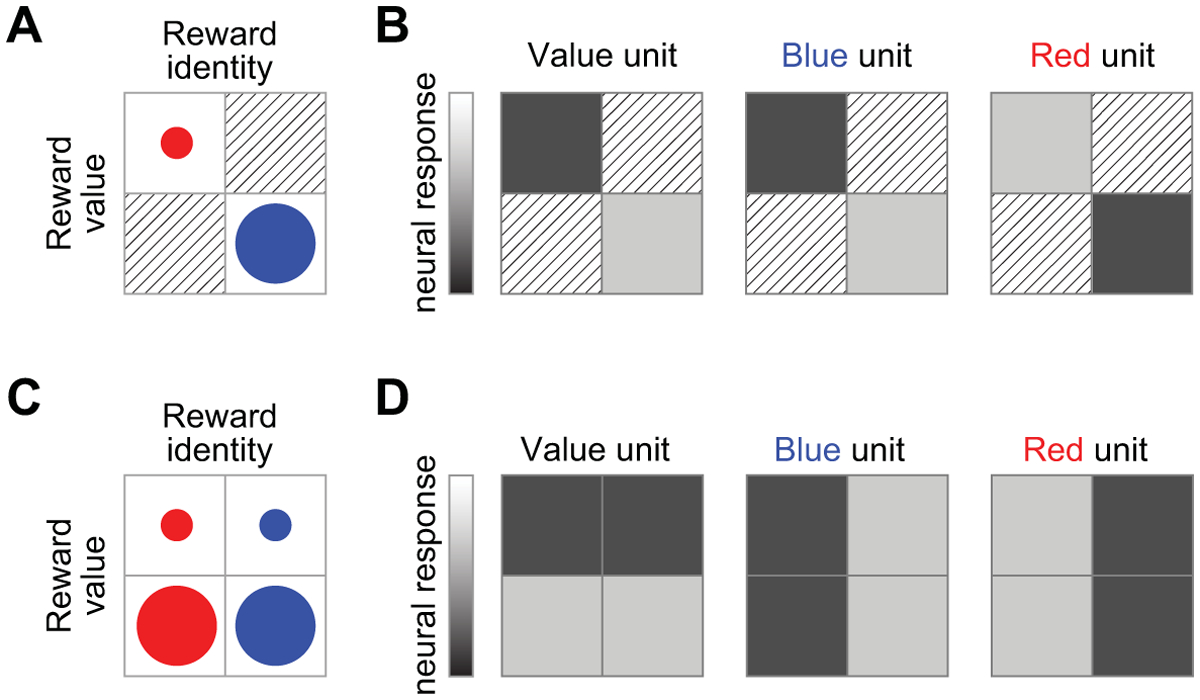

A challenge to establishing whether or not OFC signals contain information about specific rewards is that identity often covaries with value. For example, chocolate cake and strawberry ice cream differ not only in their identity, but one is also likely to have a higher subjective value than the other. In turn, a neuronal unit (e.g., a single neuron or a voxel that samples thousands of neurons) whose activity appears to discriminate between the two identities may actually respond to their difference in value, and vice versa (Fig. 1A–B). Furthermore, other shared features such as perceptual attributes may also covary with value and identity. Studies testing for neural correlates of reward identity must therefore carefully control for value (Fig. 1C–D), or use experimental procedures such as devaluation to dissociate these factors.

Figure 1. Responses of hypothetical neural units to rewards.

A) In a hypothetical experiment, two outcomes differ in value, as indicated by the relative size of the circles, and also have distinct identities, as indicated by color. B) In this scenario, a hypothetical neural unit that codes for value (i.e., responds more to the higher-value reward than to the lower-value reward) would be indistinguishable from a neural unit that responds specifically to “blue”. C) In another hypothetical experiment, each of the two reward identities comes in a small-value (small circles) and a large-value (large circles) version, with values matched across identities, which may be achieved by carefully manipulating reward magnitude. D) In this case, a value-coding unit has a response pattern distinct from those of the “blue” and “red” units.
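The confound illustrated in Figure 1 can be made concrete with a short Python sketch. It assumes two idealized, noise-free units, a hypothetical `value_unit` that responds in proportion to value and a hypothetical `blue_unit` that responds only to the blue reward, and compares their response profiles across the conditions of each design. Values are arbitrary illustrative units, not data from any study.

```python
import numpy as np

# Each condition is (identity, value); values are arbitrary illustrative units.
# Confounded design (Fig. 1A and B): the blue reward is always the higher-value one.
CONFOUNDED = [("blue", 2.0), ("red", 1.0)]
# Value-matched design (Fig. 1C and D): each identity has a small and a large version.
MATCHED = [("blue", 1.0), ("blue", 2.0), ("red", 1.0), ("red", 2.0)]


def value_unit(identity: str, value: float) -> float:
    """Hypothetical unit whose response scales with value, ignoring identity."""
    return value


def blue_unit(identity: str, value: float) -> float:
    """Hypothetical unit that responds only to the blue reward, ignoring value."""
    return 1.0 if identity == "blue" else 0.0


def profile(unit, design) -> np.ndarray:
    """Response of one unit across all conditions of a design."""
    return np.array([unit(identity, value) for identity, value in design])


def profile_correlation(design) -> float:
    """Pearson correlation between the value unit and the blue unit."""
    return float(np.corrcoef(profile(value_unit, design), profile(blue_unit, design))[0, 1])


print(f"confounded design:    r = {profile_correlation(CONFOUNDED):+.2f}")
print(f"value-matched design: r = {profile_correlation(MATCHED):+.2f}")
```

In the confounded design the two profiles are perfectly correlated; with only two conditions, any two non-constant profiles correlate at plus or minus one, which is why such a design cannot separate value from identity. In the value-matched design the correlation drops to zero, so the two coding schemes make distinguishable predictions.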

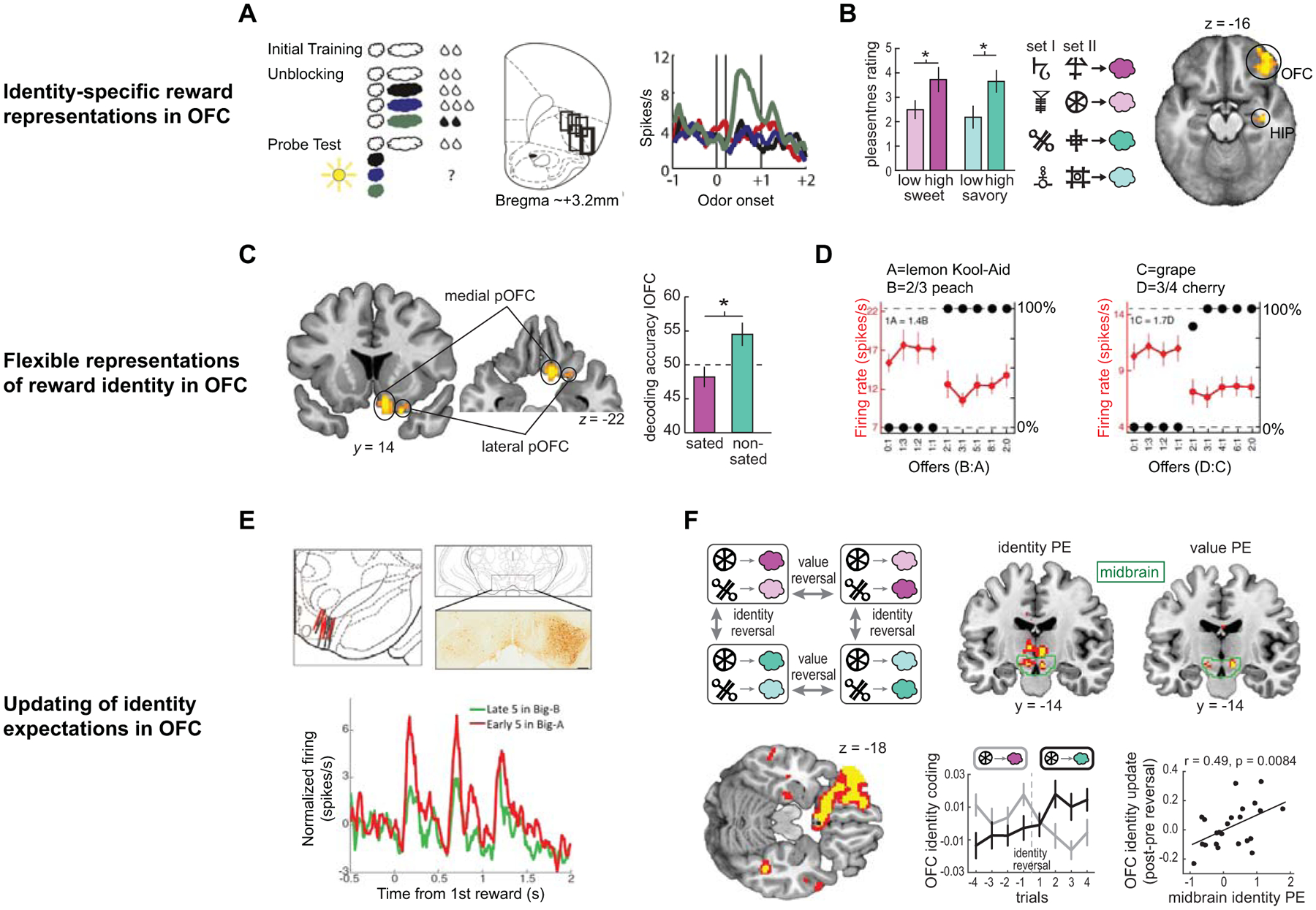

A previous electrophysiological recording study in rats dissociated identity from value by implementing an elegant unblocking paradigm to test whether OFC responses reflect the specific identity of expected rewards (McDannald et al., 2014; Fig. 2A). In this experiment, rats were initially trained to associate an odor cue with a flavored milk reward (e.g., vanilla). The rats were then given this same cue in compound with novel odor cues in three conditions: one paired with the original reward flavor and amount (i.e., “blocked”), one paired with the same reward flavor but an increased amount (i.e., “value unblocked”), and one paired with a different reward flavor (e.g., chocolate) but the same amount (i.e., “identity unblocked”). Following basic principles of associative learning, any feature of the rewards delivered in the compound conditions that is already predicted by the pretrained cue is “blocked”: that feature does not become associated with the novel cue (Kamin, 1969). In line with this, in a subsequent probe session, rats responded more to the novel odors corresponding to the “value unblocked” and “identity unblocked” conditions than to the odor corresponding to the “blocked” condition. Critically, neurons in lateral OFC acquired predictive responses to both the “value unblocked” and “identity unblocked” cues, and a portion of those neurons responded exclusively to “identity unblocked” cues. Together with recording studies in monkeys and rats reporting OFC responses to cues predicting specific flavors of food rewards (Padoa-Schioppa & Assad, 2006; Stalnaker et al., 2014), these results demonstrate that the specific identity of expected rewards is represented independently from value in OFC neurons.
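The logic of this unblocking design can be sketched with a Rescorla-Wagner learner whose prediction is a vector over outcome identities rather than a single scalar value. This is a textbook-style illustration under simplifying assumptions, not the model used by McDannald et al. (2014): outcomes are coded on hypothetical flavor dimensions (`vanilla`, `chocolate`) scaled by amount, the learning rate and trial counts are arbitrary, and each condition is simulated separately with its own pretrained cue `A` and novel cue `X`.

```python
import numpy as np

# Hypothetical outcome-identity dimensions; an outcome is coded as the amount
# delivered on the dimension corresponding to its flavor.
FEATURES = ["vanilla", "chocolate"]


def outcome(flavor: str, amount: float) -> np.ndarray:
    vec = np.zeros(len(FEATURES))
    vec[FEATURES.index(flavor)] = amount
    return vec


def rw_train(weights, cues, target, alpha=0.2, n_trials=200):
    """Rescorla-Wagner updates with a vector-valued outcome.

    All cues present on a trial share one prediction error, computed from
    their summed prediction, and each present cue is updated by alpha * error.
    """
    for _ in range(n_trials):
        prediction = sum(weights[c] for c in cues)
        error = target - prediction
        for c in cues:
            weights[c] = weights[c] + alpha * error
    return weights


def run_condition(flavor: str, amount: float) -> np.ndarray:
    """Pretrain cue A with 1 unit of vanilla, then train the compound A + X."""
    weights = {"A": np.zeros(len(FEATURES)), "X": np.zeros(len(FEATURES))}
    rw_train(weights, ["A"], outcome("vanilla", 1.0))
    rw_train(weights, ["A", "X"], outcome(flavor, amount))
    return weights["X"]


results = {
    "blocked": run_condition("vanilla", 1.0),
    "value unblocked": run_condition("vanilla", 2.0),
    "identity unblocked": run_condition("chocolate", 1.0),
}
for name, w_x in results.items():
    print(f"{name:20s} X weights (vanilla, chocolate) = {np.round(w_x, 2)}, net = {w_x.sum():+.2f}")
```

In the blocked condition the novel cue acquires essentially no weight. In the identity-unblocked condition its weights sum to zero, so a learner that tracked only a scalar value would treat this cue as blocked, yet the cue carries a positive chocolate weight (and a negative vanilla weight) that only an identity-sensitive representation could exploit.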

Figure 2. Representations of specific reward identity in OFC.

A) Adapted from (McDannald et al., 2014). Left: schematic of the unblocking paradigm showing predictive odor cues (clouds) and flavored milk rewards (drops). Middle: Boxes show location of single unit recording in rat lateral OFC and agranular insula. Right: A portion of single units in lateral OFC responded to “flavor unblocked” odor cues. B) Adapted from (J. D. Howard et al., 2015). Left: Odors of distinct identity (pink=sweet, teal=savory) were individually chosen and matched for rated pleasantness (i.e., value) at two different intensity levels. Middle: two sets of visual cues were paired with odor rewards in a Pavlovian conditioning task while subjects underwent fMRI. Right: Decoding of patterns of fMRI activity evoked by predictive cues revealed significant information about reward identity encoded in right lateral OFC and hippocampus (HIP). C) Adapted from (J. D. Howard & Kahnt, 2017). Left: Information about specific reward identity was decoded in medial and lateral posterior OFC (pOFC) in the pre-satiety phase of a reinforcer devaluation task. Right: After one reward was selectively devalued, decoding of identity information for cues predicting the sated (i.e., devalued) reward was no longer significant in the post-satiety phase, whereas identity decoding remained intact for the non-sated (i.e., non-devalued) reward. D) Adapted from (Xie & Padoa-Schioppa, 2016). An example neuron recorded in macaque OFC showing preferential responding to the value of a specific reward identity in one block of binary choice trials (left: kiwi punch), and the same neuron responding preferentially to the value of a different identity (right: apple juice) in a subsequent block of binary choice trials. E) Adapted from (Takahashi et al., 2017). Top: location of single unit recording electrodes targeting midbrain dopaminergic neurons in the rat. Bottom: Response of midbrain dopamine neurons to violations in expected reward identity. F) Adapted from (J. D. Howard & Kahnt, 2018). Top left: Schematic of reversal learning task, in which associations periodically changed to induce violations in either reward value (i.e., a “value reversal”) or reward identity (i.e., an “identity reversal”). Top right: violations in both reward value and reward identity evoked prediction error responses in overlapping regions of the human midbrain. Bottom left: Pattern-based analysis of cue-evoked activity revealed information about expected reward identity encoded in left lateral OFC. Bottom middle: After an identity reversal, information in OFC activity patterns changed to reflect associations with the new reward identity. Bottom right: The change in OFC identity coding after an identity reversal (i.e., the “OFC identity update”) was directly related to the magnitude of the identity prediction error signal across subjects.

Efforts to control for value while testing for specific reward representations have also been made in human neuroimaging studies. One study implemented an fMRI adaptation design in which visual cues that had been previously paired with qualitatively distinct, but value-matched, food pictures were presented in rapid succession during scanning (Klein-Flugge et al., 2013). Successive presentation of two different cues associated with distinct rewards evoked a greater adaptation effect in caudal OFC compared to the presentation of two different cues predicting the same food reward. In such designs, adaptation that depends on a particular feature, such as reward identity, indicates that the region represents that feature. In our own work, we utilized multivoxel pattern analysis (MVPA) of fMRI data acquired during a Pavlovian conditioning task in which hungry subjects viewed visual cues that were paired with appetitive food odor rewards (J. D. Howard et al., 2015; Fig. 2B). MVPA of cue-evoked activity patterns showed that cues predicting qualitatively distinct but value-matched odor rewards evoked discriminable patterns of activity in lateral OFC. Similar pattern-based methods have been used to show that OFC encodes the value of specific reward categories such as food vs. trinket (McNamee et al., 2013), the value of specific elemental features of food rewards (Suzuki, Cross, & O’Doherty, 2017), and stimulus-stimulus associations (Pauli, Gentile, Collette, Tyszka, & O’Doherty, 2019; F. Wang, Schoenbaum, & Kahnt, 2020). Thus, depending on task demands, specific outcome representations in OFC can incorporate information at multiple hierarchical levels, from individual features to broad categories.

Together, these human imaging studies demonstrate that predictive information about specific rewards can be readily identified in OFC ensemble activity. Importantly, both fMRI adaptation (Barron, Garvert, & Behrens, 2016) and MVPA (Kahnt, 2018) techniques are attuned to detecting representations encoded in distributed patterns of activity, and thus serve as an alternative to univariate methods, in which signals across relatively large swaths of cortex are spatially smoothed to test for global signal fluctuations related to conditions of interest. Because MVPA techniques query ensemble activity, they are ideally suited to uncover specific reward signals in the OFC (Kahnt, 2018), which are encoded by both positive and negative responses in individual neurons and do not show obvious spatial clustering within areas (Kennerley, Dahmubed, Lara, & Wallis, 2009; Morrison & Salzman, 2011; Saez et al., 2018; Stalnaker et al., 2014).
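A minimal simulation shows why pattern-based analyses can succeed where region-averaged univariate tests fail when identity-selective units respond with both positive and negative changes and are spatially intermixed. The sketch below uses purely synthetic data under stated assumptions (two hypothetical value-matched reward identities, 50 voxels, 8 runs, arbitrary signal and noise levels) and a leave-one-run-out, correlation-based nearest-centroid classifier, one common MVPA approach. It is not the analysis pipeline of any study cited here.

```python
import numpy as np

rng = np.random.default_rng(0)
n_runs, trials_per_run, n_voxels = 8, 4, 50
signal, noise_sd = 0.5, 1.0

# Hypothetical identity-specific patterns: voxels respond positively to one
# identity and negatively to the other, with no spatial clustering. Patterns are
# made zero-mean across voxels, so the ROI-average response is matched.
prototypes = rng.normal(size=(2, n_voxels))
prototypes -= prototypes.mean(axis=1, keepdims=True)

X, y, runs = [], [], []
for run in range(n_runs):
    for label in (0, 1):
        for _ in range(trials_per_run):
            X.append(signal * prototypes[label] + rng.normal(scale=noise_sd, size=n_voxels))
            y.append(label)
            runs.append(run)
X, y, runs = np.array(X), np.array(y), np.array(runs)


def correlation_classifier_accuracy(X, y, runs):
    """Leave-one-run-out decoding: assign each test trial to the class whose
    training-set centroid pattern it correlates with most strongly."""
    correct = 0
    for test_run in np.unique(runs):
        train, test = runs != test_run, runs == test_run
        centroids = [X[train & (y == k)].mean(axis=0) for k in (0, 1)]
        for x, label in zip(X[test], y[test]):
            r = [np.corrcoef(x, c)[0, 1] for c in centroids]
            correct += int(np.argmax(r) == label)
    return correct / len(y)


accuracy = correlation_classifier_accuracy(X, y, runs)
roi_mean_difference = X[y == 0].mean() - X[y == 1].mean()
print(f"decoding accuracy: {accuracy:.2f} (chance = 0.50)")
print(f"univariate ROI-mean difference: {roi_mean_difference:.3f}")
```

Because the simulated identity patterns are zero-mean across voxels, the ROI-averaged responses to the two identities are essentially identical, while cross-validated decoding accuracy is well above the 50% chance level. Holding out whole runs keeps training and test trials independent, as is standard in fMRI decoding.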

Flexible representations of specific rewards

How are reward identity expectations incorporated into decisions? In other words, what are they good for? Most importantly, they allow organisms to generate outcome predictions that can be updated on the fly to reflect moment-to-moment changes in value, context, or motivational state. A classic assay of such behavior is the devaluation task. In this task, subjects first learn associations between predictive cues and specific food (or food-related) rewards, and then make choices between those cues, establishing a measure of baseline preference. One reward is then selectively devalued, either through satiation or by pairing its consumption with illness. After devaluation, choices for the predictive cues are again evaluated in a probe session, typically conducted in extinction. When first encountering the predictive cues in this probe session, intact animals and humans redirect choices away from cues predicting the devalued reward and toward cues predicting the non-devalued reward. Causal studies in rodents (Bradfield, Dezfouli, van Holstein, Chieng, & Balleine, 2015; Gallagher, McMahan, & Schoenbaum, 1999; Gardner, Conroy, Shaham, Styer, & Schoenbaum, 2017; Parkes et al., 2018; Pickens, Saddoris, Gallagher, & Holland, 2005), non-human primates (Izquierdo, Suda, & Murray, 2004; Murray, Moylan, Saleem, Basile, & Turchi, 2015; Rudebeck, Saunders, Prescott, Chau, & Murray, 2013; West, DesJardin, Gale, & Malkova, 2011), and humans (J. D. Howard et al., 2020; Reber et al., 2017) demonstrate that lesioning, inactivating, or otherwise disrupting OFC impairs behavior in this task, such that subjects continue to choose cues that predict devalued rewards, even though their preferences for the rewards themselves are not affected (J. D. Howard & Kahnt, 2021).

The implication of these causal studies is that OFC is necessary to access the updated representation of the specific reward that has been devalued. However, while OFC activity has previously been associated with changes in behavior using this task (Gottfried, O’Doherty, & Dolan, 2003; Gremel & Costa, 2013; Valentin, Dickinson, & O’Doherty, 2007), definitive evidence linking identity-specific expectations to outcome-guided behavior was lacking. To address this issue, we previously conducted a study in which human subjects made choices between predictive visual cues to receive food odors while undergoing fMRI in two sessions: one prior to and one immediately after selective satiety-induced devaluation of one of the food odors (J. D. Howard & Kahnt, 2017; Fig. 2C). We found that in the lateral posterior OFC, identity-specific representations of the devalued reward were diminished, while representations of the non-devalued reward remained intact. This suggests that devaluation altered the representation of the expected reward identity, or a conjunction between the specific reward and its current value, without new stimulus-outcome learning.

Other studies have also shown that dynamic changes in identity-specific OFC representations contribute to flexible decision-making. One study in monkeys demonstrated that an OFC neuron encoding a specific outcome in one choice set consisting of two distinct juices remaps its response to another juice in a different choice set (Xie & Padoa-Schioppa, 2016; Fig. 2D). Moreover, when rats trained on an identity-based reversal learning task receive a reward of a different identity from what was expected in one food well, OFC neurons change their firing to reflect predictive information about the newly expected reward identity in the other well without new learning (Stalnaker et al., 2014). These studies indicate that OFC representations adapt to reflect specific rewards as part of the overall task structure that is relevant at a given moment. Another recording study in non-human primates suggests that outcome-related adaptations in OFC responses can take place during the deliberation process of a single choice (Rich & Wallis, 2016). Macaque monkeys made a series of binary choices between visual stimuli that were paired with differing amounts of a juice reward. After cue presentation, but prior to choice, ensemble OFC activity fluctuated between patterns that resembled the eventually chosen and eventually unchosen options. Strikingly, stronger representations of the eventually chosen option were associated with faster choices. The available options in this task differed in juice amount (i.e., value), but not necessarily in identity, and thus future studies are needed to determine whether OFC activity during the decision process also fluctuates according to differences in reward identity.

How are specific reward expectations learned and updated?

The studies discussed above suggest that OFC represents specific reward expectations, but what are the mechanisms by which these expectations are learned? We know that learning value expectations involves a prediction error-based process in the dopaminergic midbrain (Schultz, Dayan, & Montague, 1997). A recent study in rats found that the same midbrain dopamine neurons that respond to classical value prediction errors also respond to violations in expected reward identity when value is held constant (Takahashi et al., 2017; Fig. 2E). This finding has been corroborated by human imaging studies, which further link identity-based error signals to subsequent learning. One study found that error signals related to specific reward type in OFC were correlated with repetition suppression effects in hippocampus reflecting newly learned stimulus-outcome associations (Boorman, Rajendran, O’Reilly, & Behrens, 2016). In a study design similar to that used by Takahashi et al., we showed that the human midbrain encodes a reward identity prediction error signal, and that the magnitude of this signal is related to subsequent updating of specific reward expectations in lateral OFC activity patterns (J. D. Howard & Kahnt, 2018; Fig. 2F). Interestingly, identity prediction error signals appear to be independent of the perceptual distance between expected and received rewards (Suarez, Howard, Schoenbaum, & Kahnt, 2019), suggesting that the comparison underlying the identity error takes place in an abstract state space rather than in a space defined by perceptual similarity.
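The distinction between value and identity prediction errors can be written down directly. The sketch below assumes three hypothetical, value-matched rewards, a one-hot (abstract, non-perceptual) identity code, and a simple delta-rule update with an arbitrary learning rate `alpha`; the reward names and the assumption that `sweet_b` is perceptually close to `sweet_a` are purely illustrative. Under these assumptions the value error is zero whenever expectations are violated only in identity, while the vector-valued identity error is not.

```python
import numpy as np

# Hypothetical, value-matched rewards; "sweet_b" is assumed to be perceptually
# close to "sweet_a", and "savory" perceptually distant.
REWARDS = ["sweet_a", "sweet_b", "savory"]
VALUE = {name: 1.0 for name in REWARDS}  # value is held constant


def one_hot(name: str) -> np.ndarray:
    """Abstract identity code: one dimension per reward, no perceptual similarity."""
    vec = np.zeros(len(REWARDS))
    vec[REWARDS.index(name)] = 1.0
    return vec


def prediction_errors(expected_identity, expected_value, received):
    """Return (scalar value error, vector identity error) for one outcome."""
    value_error = VALUE[received] - expected_value
    identity_error = one_hot(received) - expected_identity
    return value_error, identity_error


def update_expectation(expected_identity, identity_error, alpha=0.3):
    """Delta-rule update of the identity expectation, scaled by the error."""
    return expected_identity + alpha * identity_error


expected = one_hot("sweet_a")
for received in REWARDS:
    v_err, id_err = prediction_errors(expected, 1.0, received)
    print(f"{received:8s} value error = {v_err:+.2f}  |identity error| = {np.linalg.norm(id_err):.2f}")

_, err = prediction_errors(expected, 1.0, "savory")
updated = update_expectation(expected, err)
print("updated identity expectation:", np.round(updated, 2))
```

Because each reward occupies its own dimension, the identity error has the same magnitude whether the unexpected reward is perceptually similar (`sweet_b`) or dissimilar (`savory`), which is one way to express a comparison made in an abstract state space. A perceptual code with overlapping dimensions would instead yield smaller errors for similar rewards.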

Moreover, these identity prediction errors contain specific information about the mis-predicted reward (Stalnaker et al., 2019). For example, in both rats and humans, expecting a sweet reward but receiving a savory reward evokes a distinct pattern of ensemble activity in the dopaminergic midbrain compared to expecting a savory reward but receiving a sweet reward. This implies that the error signal is not merely a “surprise” signal indicating, in a non-specific way, that something has changed; rather, it may confer specificity on downstream targets, such as OFC, that update expectations. Together with prior studies showing that the midbrain responds to value-less errors in sensory expectations (Iglesias et al., 2019; Schwartenbeck, FitzGerald, & Dolan, 2016), these findings point toward an expanded role for dopamine in associative learning, one that includes stimulus features beyond reward value (Gardner, Schoenbaum, & Gershman, 2018).
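The difference between a non-specific surprise signal and an identity-specific error can be illustrated with a two-dimensional identity code. In this sketch, which assumes a hypothetical `[sweet, savory]` code and an arbitrary learning rate, the two mismatch conditions described above produce the same surprise magnitude but opposite error vectors, and only the signed vector tells a downstream learner which expectation to strengthen.

```python
import numpy as np

# Hypothetical identity code with one dimension per reward type: [sweet, savory].
SWEET = np.array([1.0, 0.0])
SAVORY = np.array([0.0, 1.0])


def identity_error(expected: np.ndarray, received: np.ndarray) -> np.ndarray:
    """Signed, vector-valued identity prediction error."""
    return received - expected


def surprise(expected: np.ndarray, received: np.ndarray) -> float:
    """Non-specific surprise: the magnitude of the mismatch only."""
    return float(np.linalg.norm(received - expected))


sweet_then_savory = identity_error(SWEET, SAVORY)  # expected sweet, got savory
savory_then_sweet = identity_error(SAVORY, SWEET)  # expected savory, got sweet
print("error vectors:", sweet_then_savory, savory_then_sweet)
print("surprise:", surprise(SWEET, SAVORY), surprise(SAVORY, SWEET))

# Only the signed vector specifies the direction of the update.
alpha = 0.5
print("expectation after sweet->savory error:", SWEET + alpha * sweet_then_savory)
```

A learner that received only the scalar surprise could, at most, adjust how much it learns; it could not determine from that signal alone which specific reward to expect next time.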

In addition to the midbrain, several studies suggest that interactions between OFC and amygdala are critical for behaviors that require representations of specific outcomes. Monkeys with surgical disconnection of amygdala and OFC are impaired in the devaluation task (Baxter, Parker, Lindner, Izquierdo, & Murray, 2000), and amygdalectomy results in decreased encoding of specific stimulus-outcome associations in OFC (Rudebeck, Ripple, Mitz, Averbeck, & Murray, 2017). In rats, inactivation of projections from basolateral amygdala to OFC impairs animals’ ability to use specific reward expectations to guide behavior, suggesting that input from amygdala to OFC is necessary for adaptive decision making (Lichtenberg et al., 2017). This is in line with work in humans showing that fMRI activity in the amygdala during Pavlovian conditioning is better explained by a model-based algorithm than various model-free algorithms (Prevost, McNamee, Jessup, Bossaerts, & O’Doherty, 2013). Together, these studies suggest that OFC works in close concert with amygdala to use information conveyed by predictive cues to appropriately guide decisions for specific rewards.

Reward identity as a key component of cognitive maps

The precise contribution of OFC to learning and decision-making has undergone many formulations over the past several decades (Stalnaker, Cooch, & Schoenbaum, 2015). An influential recent theory postulates that OFC represents a cognitive map of task space (Wilson, Takahashi, Schoenbaum, & Niv, 2014), defined as an abstract representation of any relevant information needed to plan and perform a given task. Consider the example of the reinforcer devaluation task in which a subject first learns associations between predictive cues and specific food rewards before one of the rewards is selectively devalued. When presented with a choice between cues after devaluation, the subject has not yet directly experienced that selecting one of the cues leads to an outcome with a new, lower value. The subject must therefore use a representation of the associations between the cues and the specific rewards to infer the new value of the cues and to choose the cue predicting the non-devalued reward. When subjects are unable to access this map (e.g., when OFC is damaged), they can only rely on what they have previously learned about the value of the cues, and may therefore choose the cue predicting the now devalued reward. Importantly, while reward identity is thus a key component of the cognitive maps that underlie flexible behavior, within this conceptual framework any task-relevant variable may be represented in OFC. Indeed, a wide variety of reward-related variables have been observed in OFC activity, including reward timing, probability, and context (Farovik et al., 2015; Rushworth et al., 2011; Saez et al., 2018; M. Z. Wang & Hayden, 2017).
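The inference described above can be sketched as the difference between a learner that evaluates cues through an explicit cue-to-reward map and one that relies only on cached cue values. The cue names, reward names, and values below are hypothetical, and devaluation is modeled simply as lowering the current value of one reward. The cached learner stands in, qualitatively, for a subject that cannot access the map; it is not a specific model from the cited work.

```python
# Hypothetical cognitive map: each cue predicts a specific reward identity.
CUE_TO_REWARD = {"cue_1": "food_a", "cue_2": "food_b"}

# Current reward values (arbitrary units); food_a is initially preferred.
reward_value = {"food_a": 1.2, "food_b": 1.0}

# Cached cue values learned during training, before devaluation.
cached_cue_value = {cue: reward_value[r] for cue, r in CUE_TO_REWARD.items()}


def choose_with_map(cues):
    """Infer each cue's current value via the specific reward it predicts."""
    return max(cues, key=lambda cue: reward_value[CUE_TO_REWARD[cue]])


def choose_with_cache(cues):
    """Use only previously learned cue values (no access to reward identity)."""
    return max(cues, key=lambda cue: cached_cue_value[cue])


cues = ["cue_1", "cue_2"]
before = (choose_with_map(cues), choose_with_cache(cues))

# Selective devaluation (e.g., satiety): only food_a loses value, and no new
# cue-outcome pairings are experienced.
reward_value["food_a"] = 0.2
after = (choose_with_map(cues), choose_with_cache(cues))

print("before devaluation (map, cache):", before)
print("after devaluation  (map, cache):", after)
```

Both learners prefer `cue_1` at baseline. After devaluation, the map-based learner immediately switches to `cue_2` without any new cue-outcome experience, whereas the cached learner keeps choosing the cue that predicts the devalued reward, qualitatively mirroring the behavior described for subjects with OFC damage.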

Further evidence supporting the idea that OFC represents a cognitive map of task space comes from recent neuroimaging experiments. In one study, subjects needed to use recently experienced, but currently unobservable, information to perform a task featuring 16 unique states (Schuck, Cai, Wilson, & Niv, 2016). Ensemble fMRI activity in OFC encoded the current state in this task, and the fidelity of these state representations predicted task performance. Another study utilized a coin collection task to show that OFC activity tracks transitions between latent task states (Nassar, McGuire, Ritz, & Kable, 2019). While performance in these tasks required mental representations of recently experienced or inferred states to make optimal decisions, another recent study found that activity patterns in OFC and vmPFC encode states predicted up to 30 s in the future (Wimmer & Büchel, 2019). Taken together, these findings suggest that OFC is important both for integrating information about past decisions to make inferences about the current location (or state) within a cognitive map, and for simulating the consequences of actions on future states. A dichotomy between these two functions has recently been proposed based on rodent data, whereby lateral OFC represents one’s initial location in a cognitive map, while medial OFC represents potential future locations based on available actions (Bradfield & Hart, 2020). It remains to be tested whether a similar anatomical distinction can be made along these medial/lateral lines in human OFC.

Summary

Causal studies in animals and humans demonstrate that the OFC is necessary for a range of behaviors that require representations of specific rewards. Until recently, however, it was unknown whether and how specific rewards are reflected in OFC activity. Key innovations in recording and analyzing ensemble activity, at levels ranging from single units to fMRI voxels, alongside carefully controlled experimental designs, have revealed that specific reward expectations are represented in OFC ensemble activity. These representations readily undergo re-mapping to reflect changes in motivational state or associative contingencies, enabling flexible decision-making that can re-orient behavior on the fly. Learning and updating these representations in OFC may involve an error-based mechanism that depends on the dopaminergic midbrain. Together with relevant information about reward context, timing, and probability, identity information may be integrated into cognitive maps in OFC to support decision-making in which knowledge of future or currently unobservable states is required. These recent advances in our understanding of how OFC contributes to learning and decision-making help to clarify how OFC dysfunction may contribute to disorders such as substance use disorder (Franklin et al., 2002; Goldstein et al., 2007; Volkow & Fowler, 2000), obsessive compulsive disorder (Menzies et al., 2008; Nakao, Okada, & Kanba, 2014), and depression (Cheng et al., 2016; Drevets, 2007).

Acknowledgements

Research described in this review was supported by grants from the National Institute on Deafness and other Communication Disorders (NIDCD, R01DC015426) and the National Institute on Drug Abuse (NIDA, R03DA040668) to T.K.

References

- Balleine BW, & Dickinson A (1998). Goal-directed instrumental action: contingency and incentive learning and their cortical substrates. Neuropharmacology, 37(4–5), 407–419.

- Barron HC, Garvert MM, & Behrens TE (2016). Repetition suppression: a means to index neural representations using BOLD? Philos Trans R Soc Lond B Biol Sci, 371(1705). doi: 10.1098/rstb.2015.0355

- Bartra O, McGuire JT, & Kable JW (2013). The valuation system: a coordinate-based meta-analysis of BOLD fMRI experiments examining neural correlates of subjective value. Neuroimage, 76, 412–427. doi: 10.1016/j.neuroimage.2013.02.063

- Baxter MG, Parker A, Lindner CC, Izquierdo AD, & Murray EA (2000). Control of response selection by reinforcer value requires interaction of amygdala and orbital prefrontal cortex. J Neurosci, 20(11), 4311–4319.

- Boorman ED, Rajendran VG, O’Reilly JX, & Behrens TE (2016). Two Anatomically and Computationally Distinct Learning Signals Predict Changes to Stimulus-Outcome Associations in Hippocampus. Neuron, 89(6), 1343–1354. doi: 10.1016/j.neuron.2016.02.014

- Bradfield LA, Dezfouli A, van Holstein M, Chieng B, & Balleine BW (2015). Medial Orbitofrontal Cortex Mediates Outcome Retrieval in Partially Observable Task Situations. Neuron, 88(6), 1268–1280. doi: 10.1016/j.neuron.2015.10.044

- Bradfield LA, & Hart G (2020). Rodent medial and lateral orbitofrontal cortices represent unique components of cognitive maps of task space. Neurosci Biobehav Rev, 108, 287–294. doi: 10.1016/j.neubiorev.2019.11.009

- Cardinal RN, Parkinson JA, Hall J, & Everitt BJ (2002). Emotion and motivation: the role of the amygdala, ventral striatum, and prefrontal cortex. Neurosci Biobehav Rev, 26(3), 321–352.

- Carmichael ST, & Price JL (1996). Connectional networks within the orbital and medial prefrontal cortex of macaque monkeys. J Comp Neurol, 371(2), 179–207.

- Cheng W, Rolls ET, Qiu J, Liu W, Tang Y, Huang CC, … Feng J (2016). Medial reward and lateral non-reward orbitofrontal cortex circuits change in opposite directions in depression. Brain, 139(Pt 12), 3296–3309. doi: 10.1093/brain/aww255

- Chib VS, Rangel A, Shimojo S, & O’Doherty JP (2009). Evidence for a common representation of decision values for dissimilar goods in human ventromedial prefrontal cortex. J Neurosci, 29(39), 12315–12320. doi: 10.1523/JNEUROSCI.2575-09.2009

- Chikazoe J, Lee DH, Kriegeskorte N, & Anderson AK (2014). Population coding of affect across stimuli, modalities and individuals. Nat Neurosci, 17(8), 1114–1122. doi: 10.1038/nn.3749

- Clithero JA, & Rangel A (2014). Informatic parcellation of the network involved in the computation of subjective value. Soc Cogn Affect Neurosci, 9(9), 1289–1302. doi: 10.1093/scan/nst106

- Critchley HD, & Rolls ET (1996). Olfactory neuronal responses in the primate orbitofrontal cortex: analysis in an olfactory discrimination task. J Neurophysiol, 75(4), 1659–1672.

- Daw ND, Niv Y, & Dayan P (2005). Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat Neurosci, 8(12), 1704–1711. doi: 10.1038/nn1560

- Drevets WC (2007). Orbitofrontal cortex function and structure in depression. Ann N Y Acad Sci, 1121, 499–527. doi: 10.1196/annals.1401.029

- Farovik A, Place RJ, McKenzie S, Porter B, Munro CE, & Eichenbaum H (2015). Orbitofrontal cortex encodes memories within value-based schemas and represents contexts that guide memory retrieval. J Neurosci, 35(21), 8333–8344. doi: 10.1523/JNEUROSCI.0134-15.2015

- Franklin TR, Acton PD, Maldjian JA, Gray JD, Croft JR, Dackis CA, … Childress AR (2002). Decreased gray matter concentration in the insular, orbitofrontal, cingulate, and temporal cortices of cocaine patients. Biol Psychiatry, 51(2), 134–142. doi: 10.1016/s0006-3223(01)01269-0

- Gallagher M, McMahan RW, & Schoenbaum G (1999). Orbitofrontal cortex and representation of incentive value in associative learning. J Neurosci, 19(15), 6610–6614.

- Gardner MPH, Conroy JS, Shaham MH, Styer CV, & Schoenbaum G (2017). Lateral Orbitofrontal Inactivation Dissociates Devaluation-Sensitive Behavior and Economic Choice. Neuron, 96(5), 1192–1203.e4. doi: 10.1016/j.neuron.2017.10.026

- Gardner MPH, Schoenbaum G, & Gershman SJ (2018). Rethinking dopamine as generalized prediction error. Proc Biol Sci, 285(1891). doi: 10.1098/rspb.2018.1645

- Goldstein RZ, Tomasi D, Rajaram S, Cottone LA, Zhang L, Maloney T, … Volkow ND (2007). Role of the anterior cingulate and medial orbitofrontal cortex in processing drug cues in cocaine addiction. Neuroscience, 144(4), 1153–1159. doi: 10.1016/j.neuroscience.2006.11.024

- Gottfried JA, O’Doherty J, & Dolan RJ (2003). Encoding predictive reward value in human amygdala and orbitofrontal cortex. Science, 301(5636), 1104–1107. doi: 10.1126/science.1087919

- Gremel CM, & Costa RM (2013). Orbitofrontal and striatal circuits dynamically encode the shift between goal-directed and habitual actions. Nat Commun, 4, 2264. doi: 10.1038/ncomms3264

- Gross J, Woelbert E, Zimmermann J, Okamoto-Barth S, Riedl A, & Goebel R (2014). Value signals in the prefrontal cortex predict individual preferences across reward categories. J Neurosci, 34(22), 7580–7586. doi: 10.1523/JNEUROSCI.5082-13.2014

- Henssen A, Zilles K, Palomero-Gallagher N, Schleicher A, Mohlberg H, Gerboga F, … Amunts K (2016). Cytoarchitecture and probability maps of the human medial orbitofrontal cortex. Cortex, 75, 87–112. doi: 10.1016/j.cortex.2015.11.006

- Howard JD, Gottfried JA, Tobler PN, & Kahnt T (2015). Identity-specific coding of future rewards in the human orbitofrontal cortex. Proc Natl Acad Sci U S A, 112(16), 5195–5200. doi: 10.1073/pnas.1503550112

- Howard JD, & Kahnt T (2017). Identity-Specific Reward Representations in Orbitofrontal Cortex Are Modulated by Selective Devaluation. J Neurosci, 37(10), 2627–2638. doi: 10.1523/JNEUROSCI.3473-16.2017

- Howard JD, & Kahnt T (2018). Identity prediction errors in the human midbrain update reward-identity expectations in the orbitofrontal cortex. Nat Commun, 9(1), 1611. doi: 10.1038/s41467-018-04055-5

- Howard JD, Reynolds R, Smith DE, Voss JL, Schoenbaum G, & Kahnt T (2020). Targeted Stimulation of Human Orbitofrontal Networks Disrupts Outcome-Guided Behavior. Curr Biol, 30(3), 490–498.e4. doi: 10.1016/j.cub.2019.12.007

- Howard JD, & Kahnt T (2021). Causal investigations into orbitofrontal control of human decision making. Curr Opin Behav Sci, 38, 14–19. doi: 10.1016/j.cobeha.2020.06.013

- Iglesias S, Mathys C, Brodersen KH, Kasper L, Piccirelli M, den Ouden HEM, & Stephan KE (2019). Hierarchical Prediction Errors in Midbrain and Basal Forebrain during Sensory Learning. Neuron, 101(6), 1196–1201. doi: 10.1016/j.neuron.2019.03.001

- Izquierdo A, Suda RK, & Murray EA (2004). Bilateral orbital prefrontal cortex lesions in rhesus monkeys disrupt choices guided by both reward value and reward contingency. J Neurosci, 24(34), 7540–7548. doi: 10.1523/JNEUROSCI.1921-04.2004

- Kahnt T (2018). A decade of decoding reward-related fMRI signals and where we go from here. Neuroimage, 180(Pt A), 324–333. doi: 10.1016/j.neuroimage.2017.03.067

- Kahnt T, Chang LJ, Park SQ, Heinzle J, & Haynes JD (2012). Connectivity-based parcellation of the human orbitofrontal cortex. J Neurosci, 32(18), 6240–6250. doi: 10.1523/JNEUROSCI.0257-12.2012

- Kamin LJ (1969). Predictability, surprise, attention, and conditioning. In Church RM & Campbell BA (Eds.), Punishment and aversive behavior. New York: Appleton-Century-Crofts.

- Kennerley SW, Dahmubed AF, Lara AH, & Wallis JD (2009). Neurons in the frontal lobe encode the value of multiple decision variables. J Cogn Neurosci, 21(6), 1162–1178. doi: 10.1162/jocn.2009.21100

- Kim H, Shimojo S, & O’Doherty JP (2011). Overlapping responses for the expectation of juice and money rewards in human ventromedial prefrontal cortex. Cereb Cortex, 21(4), 769–776. doi: 10.1093/cercor/bhq145

- Klein-Flugge MC, Barron HC, Brodersen KH, Dolan RJ, & Behrens TE (2013). Segregated encoding of reward-identity and stimulus-reward associations in human orbitofrontal cortex. J Neurosci, 33(7), 3202–3211. doi: 10.1523/JNEUROSCI.2532-12.2013

- Levy DJ, & Glimcher PW (2011). Comparing apples and oranges: using reward-specific and reward-general subjective value representation in the brain. J Neurosci, 31(41), 14693–14707. doi: 10.1523/JNEUROSCI.2218-11.2011

- Levy DJ, & Glimcher PW (2012). The root of all value: a neural common currency for choice. Curr Opin Neurobiol, 22(6), 1027–1038. doi: 10.1016/j.conb.2012.06.001

- Lichtenberg NT, Pennington ZT, Holley SM, Greenfield VY, Cepeda C, Levine MS, & Wassum KM (2017). Basolateral Amygdala to Orbitofrontal Cortex Projections Enable Cue-Triggered Reward Expectations. J Neurosci, 37(35), 8374–8384. doi: 10.1523/JNEUROSCI.0486-17.2017

- McDannald MA, Esber GR, Wegener MA, Wied HM, Liu TL, Stalnaker TA, … Schoenbaum G (2014). Orbitofrontal neurons acquire responses to ‘valueless’ Pavlovian cues during unblocking. Elife, 3, e02653. doi: 10.7554/eLife.02653

- McNamee D, Rangel A, & O’Doherty JP (2013). Category-dependent and category-independent goal-value codes in human ventromedial prefrontal cortex. Nat Neurosci, 16(4), 479–485. doi: 10.1038/nn.3337

- Menzies L, Chamberlain SR, Laird AR, Thelen SM, Sahakian BJ, & Bullmore ET (2008). Integrating evidence from neuroimaging and neuropsychological studies of obsessive-compulsive disorder: the orbitofronto-striatal model revisited. Neurosci Biobehav Rev, 32(3), 525–549. doi: 10.1016/j.neubiorev.2007.09.005

- Montague PR, & Berns GS (2002). Neural economics and the biological substrates of valuation. Neuron, 36(2), 265–284.

- Morrison SE, & Salzman CD (2011). Representations of appetitive and aversive information in the primate orbitofrontal cortex. Ann N Y Acad Sci, 1239, 59–70. doi: 10.1111/j.1749-6632.2011.06255.x

- Murray EA, Moylan EJ, Saleem KS, Basile BM, & Turchi J (2015). Specialized areas for value updating and goal selection in the primate orbitofrontal cortex. Elife, 4. doi: 10.7554/eLife.11695

- Nakao T, Okada K, & Kanba S (2014). Neurobiological model of obsessive-compulsive disorder: evidence from recent neuropsychological and neuroimaging findings. Psychiatry Clin Neurosci, 68(8), 587–605. doi: 10.1111/pcn.12195

- Nassar MR, McGuire JT, Ritz H, & Kable JW (2019). Dissociable Forms of Uncertainty-Driven Representational Change Across the Human Brain. J Neurosci, 39(9), 1688–1698. doi: 10.1523/JNEUROSCI.1713-18.2018

- O’Doherty JP (2007). Lights, camembert, action! The role of human orbitofrontal cortex in encoding stimuli, rewards, and choices. Ann N Y Acad Sci, 1121, 254–272. doi: 10.1196/annals.1401.036

- O’Doherty JP, Cockburn J, & Pauli WM (2017). Learning, Reward, and Decision Making. Annu Rev Psychol, 68, 73–100. doi: 10.1146/annurev-psych-010416-044216

- Padoa-Schioppa C (2011). Neurobiology of economic choice: a good-based model. Annu Rev Neurosci, 34, 333–359. doi: 10.1146/annurev-neuro-061010-113648

- Padoa-Schioppa C, & Assad JA (2006). Neurons in the orbitofrontal cortex encode economic value. Nature, 441(7090), 223–226. doi: 10.1038/nature04676

- Parkes SL, Ravassard PM, Cerpa JC, Wolff M, Ferreira G, & Coutureau E (2018). Insular and Ventrolateral Orbitofrontal Cortices Differentially Contribute to Goal-Directed Behavior in Rodents. Cereb Cortex, 28(7), 2313–2325. doi: 10.1093/cercor/bhx132

- Pauli WM, Gentile G, Collette S, Tyszka JM, & O’Doherty JP (2019). Evidence for model-based encoding of Pavlovian contingencies in the human brain. Nat Commun, 10(1), 1099. doi: 10.1038/s41467-019-08922-7

- Pickens CL, Saddoris MP, Gallagher M, & Holland PC (2005). Orbitofrontal lesions impair use of cue-outcome associations in a devaluation task. Behav Neurosci, 119(1), 317–322. doi: 10.1037/0735-7044.119.1.317

- Prevost C, McNamee D, Jessup RK, Bossaerts P, & O’Doherty JP (2013). Evidence for model-based computations in the human amygdala during Pavlovian conditioning. PLoS Comput Biol, 9(2), e1002918. doi: 10.1371/journal.pcbi.1002918

- Reber J, Feinstein JS, O’Doherty JP, Liljeholm M, Adolphs R, & Tranel D (2017). Selective impairment of goal-directed decision-making following lesions to the human ventromedial prefrontal cortex. Brain, 140(6), 1743–1756. doi: 10.1093/brain/awx105

- Rich EL, & Wallis JD (2016). Decoding subjective decisions from orbitofrontal cortex. Nat Neurosci, 19(7), 973–980. doi: 10.1038/nn.4320

- Rolls ET (2000). The orbitofrontal cortex and reward. Cereb Cortex, 10(3), 284–294. doi: 10.1093/cercor/10.3.284

- Rudebeck PH, & Murray EA (2011). Balkanizing the primate orbitofrontal cortex: distinct subregions for comparing and contrasting values. Ann N Y Acad Sci, 1239, 1–13. doi: 10.1111/j.1749-6632.2011.06267.x

- Rudebeck PH, & Murray EA (2014). The orbitofrontal oracle: cortical mechanisms for the prediction and evaluation of specific behavioral outcomes. Neuron, 84(6), 1143–1156. doi: 10.1016/j.neuron.2014.10.049

- Rudebeck PH, Ripple JA, Mitz AR, Averbeck BB, & Murray EA (2017). Amygdala Contributions to Stimulus-Reward Encoding in the Macaque Medial and Orbital Frontal Cortex during Learning. J Neurosci, 37(8), 2186–2202. doi: 10.1523/JNEUROSCI.0933-16.2017

- Rudebeck PH, Saunders RC, Prescott AT, Chau LS, & Murray EA (2013). Prefrontal mechanisms of behavioral flexibility, emotion regulation and value updating. Nat Neurosci, 16(8), 1140–1145. doi: 10.1038/nn.3440

- Rushworth MF, Noonan MP, Boorman ED, Walton ME, & Behrens TE (2011). Frontal cortex and reward-guided learning and decision-making. Neuron, 70(6), 1054–1069. doi: 10.1016/j.neuron.2011.05.014

- Saez I, Lin J, Stolk A, Chang E, Parvizi J, Schalk G, … Hsu M (2018). Encoding of Multiple Reward-Related Computations in Transient and Sustained High-Frequency Activity in Human OFC. Curr Biol, 28(18), 2889–2899.e3. doi: 10.1016/j.cub.2018.07.045

- Schleyer M, Miura D, Tanimura T, & Gerber B (2015). Learning the specific quality of taste reinforcement in larval Drosophila. Elife, 4. doi: 10.7554/eLife.04711

- Schoenbaum G, & Eichenbaum H (1995). Information coding in the rodent prefrontal cortex. I. Single-neuron activity in orbitofrontal cortex compared with that in pyriform cortex. J Neurophysiol, 74(2), 733–750.

- Schuck NW, Cai MB, Wilson RC, & Niv Y (2016). Human Orbitofrontal Cortex Represents a Cognitive Map of State Space. Neuron, 91(6), 1402–1412. doi: 10.1016/j.neuron.2016.08.019

- Schultz W, Dayan P, & Montague PR (1997). A neural substrate of prediction and reward. Science, 275(5306), 1593–1599.

- Schwartenbeck P, FitzGerald THB, & Dolan R (2016). Neural signals encoding shifts in beliefs. Neuroimage, 125, 578–586. doi: 10.1016/j.neuroimage.2015.10.067

- Stalnaker TA, Cooch NK, McDannald MA, Liu TL, Wied H, & Schoenbaum G (2014). Orbitofrontal neurons infer the value and identity of predicted outcomes. Nat Commun, 5, 3926. doi: 10.1038/ncomms4926

- Stalnaker TA, Cooch NK, & Schoenbaum G (2015). What the orbitofrontal cortex does not do. Nat Neurosci, 18(5), 620–627. doi: 10.1038/nn.3982

- Stalnaker TA, Howard JD, Takahashi YK, Gershman SJ, Kahnt T, & Schoenbaum G (2019). Dopamine neuron ensembles signal the content of sensory prediction errors. Elife, 8. doi: 10.7554/eLife.49315

- Suarez JA, Howard JD, Schoenbaum G, & Kahnt T (2019). Sensory prediction errors in the human midbrain signal identity violations independent of perceptual distance. Elife, 8. doi: 10.7554/eLife.43962

- Suzuki S, Cross L, & O’Doherty JP (2017). Elucidating the underlying components of food valuation in the human orbitofrontal cortex. Nat Neurosci, 20(12), 1780–1786. doi: 10.1038/s41593-017-0008-x

- Takahashi YK, Batchelor HM, Liu B, Khanna A, Morales M, & Schoenbaum G (2017). Dopamine Neurons Respond to Errors in the Prediction of Sensory Features of Expected Rewards. Neuron, 95(6), 1395–1405.e3. doi: 10.1016/j.neuron.2017.08.025

- Valentin VV, Dickinson A, & O’Doherty JP (2007). Determining the neural substrates of goal-directed learning in the human brain. J Neurosci, 27(15), 4019–4026. doi: 10.1523/JNEUROSCI.0564-07.2007

- Volkow ND, & Fowler JS (2000). Addiction, a disease of compulsion and drive: involvement of the orbitofrontal cortex. Cereb Cortex, 10(3), 318–325. doi: 10.1093/cercor/10.3.318

- Wallis JD (2011). Cross-species studies of orbitofrontal cortex and value-based decision-making. Nat Neurosci, 15(1), 13–19. doi: 10.1038/nn.2956

- Wang F, Schoenbaum G, & Kahnt T (2020). Interactions between human orbitofrontal cortex and hippocampus support model-based inference. PLoS Biol, 18(1), e3000578. doi: 10.1371/journal.pbio.3000578

- Wang MZ, & Hayden BY (2017). Reactivation of associative structure specific outcome responses during prospective evaluation in reward-based choices. Nat Commun, 8, 15821. doi: 10.1038/ncomms15821

- West EA, DesJardin JT, Gale K, & Malkova L (2011). Transient inactivation of orbitofrontal cortex blocks reinforcer devaluation in macaques. J Neurosci, 31(42), 15128–15135. doi: 10.1523/JNEUROSCI.3295-11.2011

- Wilson RC, Takahashi YK, Schoenbaum G, & Niv Y (2014). Orbitofrontal cortex as a cognitive map of task space. Neuron, 81(2), 267–279. doi: 10.1016/j.neuron.2013.11.005

- Wimmer GE, & Buchel C (2019). Learning of distant state predictions by the orbitofrontal cortex in humans. Nat Commun, 10, 2554. doi: 10.1038/s41467-019-10597-z

- Xie J, & Padoa-Schioppa C (2016). Neuronal remapping and circuit persistence in economic decisions. Nat Neurosci, 19(6), 855–861. doi: 10.1038/nn.4300