Abstract

This paper introduces and studies a class of evolutionary dynamics—pairwise interact-and-imitate dynamics (PIID)—in which agents are matched in pairs, engage in a symmetric game, and imitate the opponent with a probability that depends on the difference in their payoffs. We provide a condition on the underlying game, named supremacy, and show that the population state in which all agents play the supreme strategy is globally asymptotically stable. We extend the framework to allow for payoff uncertainty, and check the robustness of our results to the introduction of some heterogeneity in the revision protocol followed by agents. Finally, we show that PIID can allow the survival of strictly dominated strategies, leads to the emergence of inefficient conventions in social dilemmas, and makes assortment ineffective in promoting cooperation.

Subject terms: Population dynamics, Social evolution, Human behaviour

Introduction

In evolutionary game-theoretic models, it is standard practice to assume that agents make decisions according to short-sighted adaptive rules. These include avoidance of strategies that performed poorly in the past, best response to the empirical distribution of opponents’ strategies, and imitation of successful peers1,2. The last has been shown to be common in both humans and animals, and is generally recognized as a cognitively parsimonious social heuristic3–5. An important aspect of imitative dynamics is the relation between the structure of interactions and agents’ reference groups. The interaction structure specifies how agents are matched, e.g. in a purely random manner or assortatively in some respect6–11; an individual’s reference group consists instead of those agents whom that individual observes and takes as a reference for comparison purposes. This paper examines the case where agents compare their payoff to that of their opponent, obtaining clear-cut and perhaps surprising results in a variety of games. In doing so, it shows that the interplay of interaction structure and reference groups, which so far has received little attention in the literature, plays a fundamental role in determining evolutionary outcomes.

Distinctions among imitative rules can be made as to what drives behavior, who is imitated, and how much information is needed for decision making12,13. For example, rules of the kind ‘copy the first person you see’ make actions depend only on their popularity, whereas other rules consider actions to be a function of observed payoffs. The target of comparisons may consist of either a single agent or a (possibly large) group of individuals, and information requirements can range from very low to extremely high levels, yielding a wide range of different behavioral rules14–21. Often, these rules treat interaction structure and reference groups as separate entities: whenever an agent receives a revision opportunity, she randomly selects another individual as reference, observes this individual’s strategy, and switches to it with a probability that depends on relative payoffs22–24. This is most plausible in the case of games against nature or when agents cannot observe their opponents’ payoff. However, cases also exist in which the decoupling of interaction structure and reference groups does not hold, as often people can only observe, and act upon, the behavior of those with whom they interact. This idea is recurrent in the literature on games on networks, where typically agents play with and imitate their nearest or next-nearest neighbors25–30.

Building on this insight, this paper introduces and studies a class of evolutionary dynamics in which interaction structure and reference groups overlap, that is, where those whom one interacts with are also those with whom she compares herself. When given a revision opportunity, an agent playing strategy i against an opponent playing strategy j will switch to j with positive probability if the payoff from j against i is greater than the payoff from i against j. We name this revision protocol Pairwise Interact-and-Imitate. Intuitively, this appears to be a reasonable criterion for strategy updating in situations where interacting with another agent suffices to make that agent salient as a comparison reference, which may occur, for instance, when interaction and observation opportunities are constrained by the same factors, be they physical, social or cultural. In such cases an overlap between interaction structure and reference groups is established indirectly, as the result of both interaction and observation being determined by the same factors.

Our work is close in spirit to pairwise comparative models of traffic dynamics, where changes from one route to another occur at a frequency that depends on differences in traveling costs31. It is also related to local replicator dynamics32, in which agents are uniformly matched at random in groups of size n, engage in pairwise interactions with members of their group, and imitate each other depending on the difference in their payoffs; when $n = 2$, these models yield a Pairwise Interact-and-Imitate dynamic with uniform random matching (while here we also consider matching processes that are not uniformly random).

The purpose of our paper is twofold. First, we introduce the Pairwise Interact-and-Imitate revision protocol and study the resulting dynamics in symmetric games. We give a condition on the stage game, named supremacy, and show that the population state in which all agents choose the supreme strategy is globally asymptotically stable. Roughly speaking, a strategy is supreme if it always yields a payoff higher than the payoff received by an opponent playing a different strategy. We then generalize the framework to allow for payoff uncertainty, we check the robustness of our results to the introduction of some heterogeneity in revision protocols, and we show that PIID can allow the survival of strictly dominated strategies. Second, we apply the revision protocol to social dilemmas, showing that PIID causes the emergence of inefficient conventions and makes assortment ineffective in facilitating cooperation.

Results

The model

Consider a unit-mass population of agents who repeatedly interact in pairs to play a symmetric stage game. The set of strategies available to each agent is finite and denoted by $S = \{1, \dots, n\}$. A population state is a vector $x \in X = \{x \in \mathbb{R}^n_{+} : \sum_{i \in S} x_i = 1\}$, with $x_i$ the fraction of the population playing strategy $i \in S$. Payoffs are described by a function $F : S \times S \to \mathbb{R}$, where $F(i, j)$ is the payoff received by an agent playing strategy i when the opponent plays strategy j. As a shorthand, we refer to an undirected pair of individuals, one playing i and the other playing j, as an ij pair. The set of all possible undirected pairs is denoted by $\mathcal{P}$.

The interaction structure is modeled as a function $p : X \times \mathcal{P} \to \mathbb{R}_{+}$ subject to $\sum_{ij \in \mathcal{P}} p_{ij}(x) = 1/2$ (since the mass of pairs is half the mass of agents), with $p_{ij}(x)$ indicating the mass of ij pairs formed in state x. Note that the mass of ij pairs can never exceed $\min\{x_i, x_j\}$, that is, $p_{ij}(x) \leq \min\{x_i, x_j\}$ for all x. We assume that p is continuous in X, and that $p_{ij}(x) > 0$ if and only if $x_i > 0$ and $x_j > 0$, meaning that the probability of an ij pair being formed is strictly positive if and only if strategies i and j are played by someone. In the case of uniform random matching, $p_{ii}(x) = x_i^2 / 2$ and $p_{ij}(x) = x_i x_j$ for any i and $j \neq i$.
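As a sanity check on the matching function, the following minimal sketch computes the mass of each undirected pair under uniform random matching and confirms that the masses add up to one half. The function name `uniform_matching` and the population state are illustrative assumptions, not part of the model's notation.

```python
from itertools import combinations_with_replacement

def uniform_matching(x):
    """Mass of each undirected ij pair under uniform random matching.

    x is a list of strategy frequencies summing to 1. The result is a dict
    keyed by (i, j) with i <= j: x_i**2 / 2 for ii pairs, x_i * x_j otherwise.
    """
    n = len(x)
    return {
        (i, j): (x[i] ** 2 / 2 if i == j else x[i] * x[j])
        for i, j in combinations_with_replacement(range(n), 2)
    }

x = [0.5, 0.3, 0.2]            # hypothetical population state
p = uniform_matching(x)
total_mass = sum(p.values())   # pairs are half the mass of agents
```

In this example no pair mass exceeds the frequency of either strategy involved, in line with the constraint stated above.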

The revision protocol is modeled as a function $\phi : X \times S \times S \to [-1, 1]$, where $\phi_{ij}(x)$ is the probability that an ij pair will turn into an ii pair minus the probability that it will turn into a jj pair, conditional on the population state being x and an ij pair being formed. We assume that $\phi$ is continuous in X. We note that by construction $\phi_{ij}(x) = -\phi_{ji}(x)$ for all $i, j \in S$, and hence $\phi_{ii}(x) = 0$ for all $i \in S$. Our main assumption on the revision protocol is the following, which is met, among others, by pairwise proportional imitative and imitate-if-better rules22.

Assumption 1

For every $x \in X$, $\phi_{ij}(x) > 0$ if $F(i, j) > F(j, i)$.

In what follows we consider a dynamical system in continuous time with state space X, characterized by the following equation of motion.

Definition 1

(Pairwise interact-and-imitate dynamics, PIID) For every $x \in X$ and every $i \in S$:

$$\dot{x}_i = \sum_{j \in S} p_{ij}(x)\, \phi_{ij}(x) \tag{1}$$
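The equation of motion can be explored numerically. The sketch below is one possible discretization, assuming uniform random matching, the imitate-if-better rule, a forward Euler scheme, and a hypothetical three-strategy payoff matrix in which strategy 0 earns more than any different opponent; none of these choices is prescribed by the model.

```python
def piid_rhs(x, F, p, phi):
    """Net inflow into each strategy: sum over j != i of p_ij(x) * phi_ij(x)."""
    n = len(x)
    return [sum(p(x, i, j) * phi(x, F, i, j) for j in range(n) if j != i)
            for i in range(n)]

def p_uniform(x, i, j):
    """Mass of ij pairs (i != j) under uniform random matching."""
    return x[i] * x[j]

def phi_imitate_if_better(x, F, i, j):
    """+1 if i out-earns j within an ij pair, -1 if it earns less, 0 if tied."""
    return (F[i][j] > F[j][i]) - (F[i][j] < F[j][i])

def simulate(x, F, p, phi, dt=0.01, steps=5000):
    """Forward Euler integration of the dynamics from initial state x."""
    for _ in range(steps):
        dx = piid_rhs(x, F, p, phi)
        x = [xi + dt * dxi for xi, dxi in zip(x, dx)]
    return x

# Hypothetical payoffs: F[i][j] is the payoff to i against j
F = [[1, 3, 3],
     [0, 2, 1],
     [0, 4, 2]]
x_final = simulate([0.1, 0.5, 0.4], F, p_uniform, phi_imitate_if_better)
```

Because the revision protocol is antisymmetric, inflows and outflows cancel in the aggregate, so the simulated state stays on the simplex up to floating-point error.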

Main findings

Global asymptotic convergence

In any purely imitative dynamics, if $x_i(t) = 0$, then $x_i(t') = 0$ for every $t' > t$. This implies that we cannot hope for global asymptotic convergence in a strict sense. Thus, to assess convergence towards a certain state x in a meaningful way, we restrict our attention to those states where all strategies that have positive frequency in x have positive frequency as well. We denote by $X_x$ the set of states whose support contains the support of x.

Definition 2

(Supremacy) Strategy $i \in S$ is supreme if $F(i, j) > F(j, i)$ for every $j \in S \setminus \{i\}$.

We note that under PIID, the concept of supremacy is closely related to that of asymmetry33,34, in that $F(i, j) > F(j, i)$ implies that agents can only switch from strategy j to strategy i.

Proposition 1

If $i \in S$ is a supreme strategy, then the state $e_i$ in which all agents play i is globally asymptotically stable for the dynamical system with state space $X_{e_i}$ and PIID as equation of motion.

Relation to replicator dynamics

To further characterize the dynamics induced by the pairwise interact-and-imitate protocol, we make two additional assumptions. First, matching is uniformly random, meaning that everyone in the population has the same probability of interacting with everyone else; formally, $p_{ii}(x) = x_i^2 / 2$ and $p_{ij}(x) = x_i x_j$ for all i and $j \neq i$. Second, the probability that an agent has to imitate the opponent is proportional to the difference in their payoffs if the opponent’s payoff exceeds her own, and is zero otherwise. As a consequence, $\phi_{ij}(x) = F(i, j) - F(j, i)$ up to a proportionality factor. Let

$F_i(x) = \sum_{j \in S} x_j F(i, j)$,

$F_{-i}(x) = \sum_{j \in S} x_j F(j, i)$, and

$\bar{F}(x) = \sum_{i \in S} x_i F_i(x)$.

Under these assumptions, at any point in time, the motion of $x_i$ is described by:

$$\dot{x}_i = x_i \left[ F_i(x) - F_{-i}(x) \right] \tag{2}$$

which is a modified replicator equation. According to (2), for every strategy i chosen by one or more agents in the population, the rate of growth of the fraction of i-players, $\dot{x}_i / x_i$, equals the difference between the expected payoff from playing i in state x and the average payoff received by those who are matched against an agent playing i. In contrast, under standard replicator dynamics35, the fraction of agents playing i varies depending on the excess payoff of i with respect to the current average payoff in the whole population, i.e., $\dot{x}_i / x_i = F_i(x) - \bar{F}(x)$.
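The contrast between the two growth rates can be made concrete with a short numerical sketch. The payoff matrix, the state, and all function names below are hypothetical choices made for illustration; matching is taken to be uniformly random.

```python
def expected_payoff(x, F, i):
    """Expected payoff of an i-player under uniform random matching."""
    return sum(x[j] * F[i][j] for j in range(len(x)))

def opponents_payoff(x, F, i):
    """Average payoff earned by those who are matched against an i-player."""
    return sum(x[j] * F[j][i] for j in range(len(x)))

def piid_growth(x, F, i):
    """Growth rate of strategy i under the modified replicator equation."""
    return expected_payoff(x, F, i) - opponents_payoff(x, F, i)

def replicator_growth(x, F, i):
    """Growth rate of strategy i under the standard replicator equation."""
    average = sum(x[k] * expected_payoff(x, F, k) for k in range(len(x)))
    return expected_payoff(x, F, i) - average

# Hypothetical 2x2 game; F[i][j] is the payoff to i against j
F = [[1, 0],
     [2, -1]]
x = [0.2, 0.8]
piid = [piid_growth(x, F, i) for i in range(2)]
repl = [replicator_growth(x, F, i) for i in range(2)]
```

In this state the two dynamics push in opposite directions: strategy 0 shrinks under the modified equation but grows under the standard one, because the former compares an agent with her opponent rather than with the population average.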

A noteworthy feature of replicator dynamics is that they are always payoff monotone: for any $i, j \in S$, the proportions of agents playing i and j grow at rates that are ordered in the same way as the expected payoffs from the two strategies36. In the case of PIID, this result fails.

Proposition 2

Pairwise-Interact-and-Imitate dynamics need not satisfy payoff monotonicity.

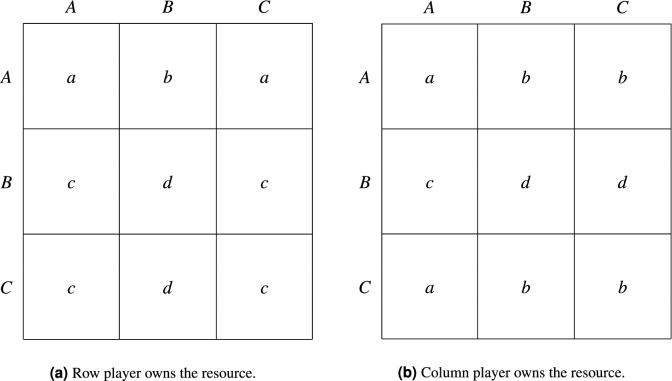

To verify this, it is sufficient to consider any symmetric game where $F(i, j) > F(j, i)$ but $\sum_{k \in S} x_k F(j, k) > \sum_{k \in S} x_k F(i, k)$ for some $x \in X$, meaning that i is the supreme strategy but j yields a higher expected payoff in state x. See Fig. 1 for an example where, in the case of uniform random matching, the above inequalities hold for any x; if strategies are updated according to the interact-and-imitate protocol, then this game only admits switches from j to i (that is, towards the supreme strategy), therefore violating payoff monotonicity. Proposition 2 can have important consequences, including the survival of pure strategies that are strictly dominated.

Figure 1.

A game where the supreme strategy is strictly dominated.

Survival of strictly dominated strategies

A recurring topic in evolutionary game theory is the extent to which support exists for the idea that strictly dominated strategies will not be played. It has been shown that if strategy i does not survive the iterated elimination of pure strategies strictly dominated by other pure strategies, then the fraction of the population playing i will converge to zero in all payoff monotone dynamics37,38. This result does not hold in our case, as PIID is not payoff monotone.

More precisely, under PIID, a strictly dominated strategy may be supreme and, therefore, not only survive but even end up being adopted by the whole population. This suggests that from an evolutionary perspective, support for the elimination of dominated strategies may be weaker than is often thought. Our result contributes to the literature on the conditions under which evolutionary dynamics fail to eliminate strictly dominated strategies in some games, examining a case which has not yet been studied39.

To see that a strictly dominated strategy may be supreme, consider the simple example shown in Fig. 1. Here each agent has a strictly dominant strategy to play A; however, since the payoff from playing B against A exceeds that from playing A against B, strategy B is supreme. Thus, by Proposition 1, the population state in which all agents choose B is globally asymptotically stable.
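The coexistence of strict dominance and supremacy is easy to verify mechanically. The sketch below uses a hypothetical payoff matrix chosen to have the same qualitative features as the example (it is not taken from Fig. 1), and the helper names are illustrative.

```python
def is_supreme(F, i):
    """True if i earns more than any different opponent strategy it meets."""
    return all(F[i][j] > F[j][i] for j in range(len(F)) if j != i)

def strictly_dominates(F, i, k):
    """True if i earns strictly more than k against every opponent strategy."""
    return all(F[i][j] > F[k][j] for j in range(len(F)))

A, B = 0, 1
# Hypothetical payoffs: F[i][j] is the payoff to i against j
F = [[4, 2],
     [3, 1]]

a_dominates_b = strictly_dominates(F, A, B)   # 4 > 3 and 2 > 1
b_is_supreme = is_supreme(F, B)               # F[B][A] = 3 > F[A][B] = 2
```

Dominance compares the rows of the payoff matrix entry by entry, whereas supremacy compares each off-diagonal entry with its mirror image, which is why the two properties can point to different strategies.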

Figure 1 can also be used to comment on the relation between a supreme strategy and an evolutionarily stable strategy, which is a widely used concept in evolutionary game theory40,41. Indeed, while B is the supreme strategy, A is the unique evolutionarily stable strategy because it is strictly dominant. However, if F(B, A) were reduced below 2, holding everything else constant, then B would become both supreme and evolutionarily stable. We therefore conclude that no particular relation holds between evolutionary stability and supremacy: neither property implies the other, nor are they incompatible.

Applications

Having obtained general results for the class of finite symmetric games, we now restrict the discussion to the evolution of behavior in social dilemmas. We show that if the conditions of Proposition 1 are met, then inefficient conventions emerge in the Prisoner’s Dilemma, Stag Hunt, Minimum Effort, and Hawk–Dove games. Furthermore, this result holds both without and with the assumption that agents interact assortatively.

Ineffectiveness of assortment

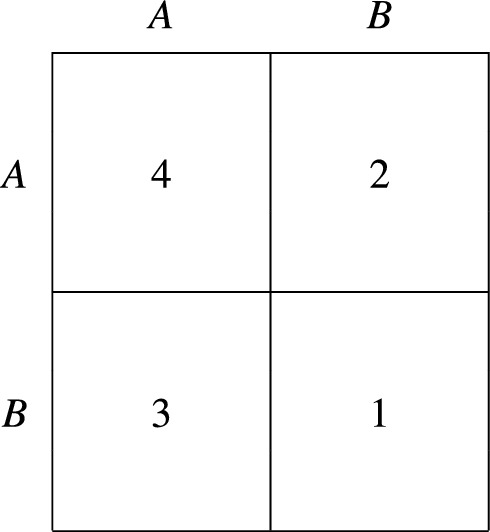

Consider the game represented in Fig. 2. If $F(B, A) > F(A, A) > F(B, B) > F(A, B)$, then mutual cooperation is Pareto superior to mutual defection but agents have a dominant strategy to defect. The resulting stage game is the Prisoner’s Dilemma, whose unique Nash equilibrium is (B, B). Moreover, since $F(B, A) > F(A, B)$, B is the supreme strategy and the population state in which all agents defect is globally asymptotically stable.

Figure 2.

A stage game.

We stress that defection emerges in the long run for every matching rule satisfying our assumptions, and therefore also in the case of assortative interactions. Assortment reflects the tendency of similar people to clump together, and can play an important role in the evolution of cooperation42–45. Intuitively, when agents meet assortatively, the risk of cooperating in a social dilemma may be offset by a higher probability of playing against other cooperators. However, under PIID, this is not the case: the decision whether to adopt a strategy or not is independent of expected payoffs, and like-with-like interactions have no effect except to reduce the frequency of switches from A to B.
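The role of assortment can be illustrated with a small simulation. The sketch assumes a simple parametric matching rule in which a share r of matches is like-with-like, so that the mass of AB pairs is (1 - r) times its value under uniform random matching; this rule, the parameter r, and the imitate-if-better protocol are assumptions made for illustration only.

```python
def p_assortative(x_a, r):
    """Mass of AB pairs when a share r (0 <= r < 1) of matches is like-with-like."""
    return (1 - r) * x_a * (1 - x_a)

def share_cooperators(x_a, r, phi_ab, dt=0.01, steps=20000):
    """Forward Euler integration of dx_A/dt = p_AB(x) * phi_AB."""
    for _ in range(steps):
        x_a += dt * p_assortative(x_a, r) * phi_ab
    return x_a

# In a Prisoner's Dilemma a defector (B) out-earns the cooperator (A) she meets,
# so under imitate-if-better every AB pair turns into a BB pair.
phi_ab = -1.0
final = {r: share_cooperators(0.9, r, phi_ab) for r in (0.0, 0.5, 0.9)}
```

Higher assortment only slows the decline of cooperation by making mixed pairs rarer; it does not change the direction of the flow within those pairs.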

Emergence of the maximin convention

If $F(A, A) > F(B, A) > F(A, B)$ and $F(A, A) > F(B, B) > F(A, B)$, then the game in Fig. 2 becomes a Stag Hunt game, which contrasts risky cooperation and safe individualism. The payoffs are such that both (A, A) and (B, B) are strict Nash equilibria, that (A, A) is Pareto superior to (B, B), and that B is the maximin strategy, i.e., the strategy which maximizes the minimum payoff an agent could possibly receive. We also assume that $F(A, A) - F(B, A) \neq F(B, B) - F(A, B)$, so that one of A and B is risk dominant46. If $F(A, A) - F(B, A) > F(B, B) - F(A, B)$, then A (Stag) is both payoff and risk dominant. When the opposite inequality holds, the risk dominant strategy is B (Hare).

Since $F(B, A) > F(A, B)$, B is supreme independently of whether or not it is risk dominant to cooperate. This can result in large inefficiencies because, in the long run, the process will converge to the state in which all agents play the riskless strategy regardless of how rewarding social coordination is. As in the case of the Prisoner’s Dilemma, this holds for all matching rules satisfying our assumptions.

Evolution of effort exertion

In a minimum effort game, agents simultaneously choose a strategy i, usually interpreted as a costly effort level, from a finite subset S of $\mathbb{R}_{+}$. An agent’s payoff depends on her own effort and on the minimum effort in the pair:

$$F(i, j) = \beta \min\{i, j\} - \gamma i,$$

where $\gamma > 0$ and $\beta > \gamma$ are the cost and benefit of effort, respectively. From a strategic viewpoint, this game can be seen as an extension of the Stag Hunt to cases where there are more than two actions. The best response to a choice of j by the opponent is to choose j as well, and coordinating on any common effort level gives a Nash equilibrium. Nash outcomes can be Pareto-ranked, with the highest-effort equilibrium being the best possible outcome for all agents. Thus, choosing a high i is rationalizable and potentially rewarding but may also result in a waste of effort.

Under PIID, any $i < j$ implies $\phi_{ij}(x) > 0$ by Assumption 1, meaning that agents will tend to imitate the opponent when the opponent’s effort is lower than their own. The supreme strategy is therefore to exert as little effort as possible, and the population state in which all agents choose the minimum effort level is the unique globally asymptotically stable state.
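A quick check of this claim is sketched below. It assumes the standard linear form of the minimum effort payoff (benefit times the minimum effort, minus cost times own effort), with hypothetical parameter values and effort levels.

```python
def min_effort_payoff(i, j, benefit=2.0, cost=1.0):
    """Payoff to effort i against effort j: benefit * min(i, j) - cost * i."""
    return benefit * min(i, j) - cost * i

efforts = [1, 2, 3, 4]   # hypothetical effort levels

# Effort levels that out-earn every different effort level they are paired with
supreme = [i for i in efforts
           if all(min_effort_payoff(i, j) > min_effort_payoff(j, i)
                  for j in efforts if j != i)]
```

Within a mixed pair both agents obtain the same benefit, since it depends only on the minimum effort, so the payoff gap equals the cost of the extra effort borne by the higher-effort agent.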

Emergence of aggressive behavior

Consider again the payoff matrix shown in Fig. 2. If $F(B, A) > F(A, A) > F(A, B) > F(B, B)$, then the stage game is a Hawk–Dove game, which is often used to model the evolution of aggressive and sharing behaviors. Interactions can be framed as disputes over a contested resource. When two Doves (who play A) meet, they share the resource equally, whereas two Hawks (who play B) engage in a fight and suffer a cost. Moreover, when a Dove meets a Hawk, the latter takes the entire prize. Again we have that $F(B, A) > F(A, B)$, implying that B is the supreme strategy and that the state where all agents play Hawk is the sole asymptotically stable state.

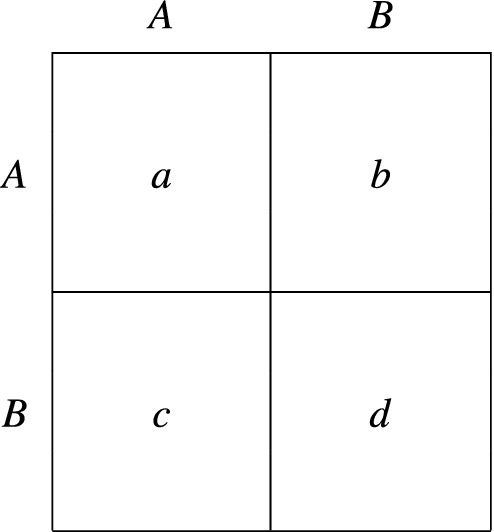

The inefficiency that characterizes the (B, B) equilibrium in the Hawk–Dove game arises from the cost that Hawks impose on one another. This can be viewed as stemming from the fact that neither agent owns the resource prior to the interaction or cares about property. A way to overcome this problem may be to introduce a strategy associated with respect for ownership rights, the Bourgeois, who behaves as a Dove or Hawk depending on whether or not the opponent owns the resource41. If we make the standard assumption that each member of a pair has a probability of 1/2 to be an owner, then in all interactions where a Bourgeois is involved there is a 50 percent chance that she will behave hawkishly (i.e., fight for control over the resource) and a 50 percent chance that she will act as a Dove.

Let R and C denote the agent chosen as row and column player, respectively, and consider the two states of the world in which R and C, respectively, owns the resource. The payoffs of the resulting Hawk–Dove–Bourgeois game are shown in Fig. 3. If agents behave as expected payoff maximizers, then All Bourgeois can be singled out as the unique asymptotically stable state. Under PIID, this is not so; depending on who owns the resource, an agent playing strategy C (Bourgeois) against an opponent playing strategy B (Hawk) may either fight or avoid conflict and let the opponent have the prize. It is easy to see that the payoff from playing C against B, conditional on owning the resource, equals the payoff from playing B against C conditional on not being an owner. In contrast, the payoff from playing C against B, conditional on not owning the resource, is always worse than that of the opponent. Thus, in every state of the world, B (Hawk) yields a payoff that is greater than or equal to that from C (Bourgeois). Moreover, since B earns a strictly higher payoff than A whenever the two are matched, in both states of the world, strategy B is weakly supreme by Definition 4, and play unfolds as an escalation of hawkishness and fights.

Figure 3.

The Hawk–Dove–Bourgeois game.
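The weak supremacy of Hawk can be checked by enumerating pairs and ownership states. The sketch below assumes the textbook parametrization of the game, with a prize V and a fight cost C whose values are hypothetical, and encodes each state of the world by whether the focal agent owns the resource; it is an illustration of the argument rather than a transcription of Fig. 3.

```python
V, C = 2.0, 4.0   # hypothetical prize value and cost of a fight

def fights(strategy, owns):
    """B (Hawk) always fights, A (Dove) never, C (Bourgeois) only when owner."""
    return strategy == "B" or (strategy == "C" and owns)

def payoff(me, other, me_owns):
    """Payoff to `me`; exactly one member of the pair owns the resource."""
    i_fight, they_fight = fights(me, me_owns), fights(other, not me_owns)
    if i_fight and they_fight:
        return (V - C) / 2     # both fight: split the prize net of the cost
    if i_fight:
        return V               # only `me` fights: takes the whole prize
    if they_fight:
        return 0.0             # only the opponent fights
    return V / 2               # nobody fights: share equally

def weakly_supreme(s, strategies=("A", "B", "C")):
    """Never earns less than a different opponent, and strictly more in some state."""
    for other in strategies:
        if other == s:
            continue
        gaps = [payoff(s, other, owns) - payoff(other, s, not owns)
                for owns in (True, False)]
        if min(gaps) < 0 or max(gaps) <= 0:
            return False
    return True
```

With these values, `weakly_supreme("B")` holds while the same check fails for Dove and Bourgeois: against a Bourgeois owner, Hawk ties because both fight, and in every other mixed pairing Hawk takes the prize.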

Discussion

We have studied a novel class of evolutionary dynamics, named pairwise interact-and-imitate dynamics, in which agents choose whether or not to change strategy by comparing their payoff with that of their opponent. Our main result is that under PIID, if there exists a supreme strategy (that is, a strategy that always yields a payoff higher than the payoff received by an opponent playing a different strategy), then the state in which the whole population chooses the supreme strategy is globally asymptotically stable. Importantly, the supreme strategy may be a dominated strategy, and the strategy profile played in the asymptotically stable state may not be a Nash equilibrium.

Under PIID, externalities have an important role. Whenever a strategy, say i, generates an increase (decrease) in the payoff of an opponent playing a different strategy, say j, it is more (less) likely that i will be updated in favor of j. Moreover, this is so regardless of the payoff received when using the same strategy as the opponent. Thus, ceteris paribus, strategies that impose negative (positive) externalities are more (less) likely to be selected by evolution, possibly leading to inefficient outcomes.

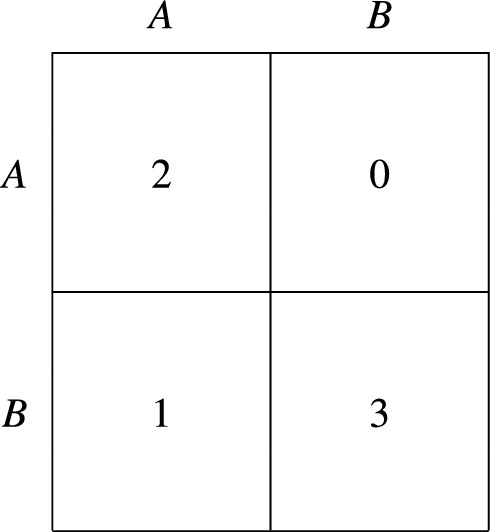

However, it is worth noting that PIID do not necessarily lead to inferior outcomes as compared to other evolutionary dynamics. The simple example of Fig. 4 shows this. For instance, under Pairwise Proportional Imitation12,22, if the fraction of agents playing A is sufficiently large, then the system will move to the state where the whole population plays A—which, however, is Pareto dominated by everyone playing B. Conversely, under PIID, the system always moves to the state where everyone plays B (since B is supreme). This result holds in general, i.e., even without the additional assumptions required to represent evolution by means of the modified replicator equation.

Figure 4.

A game where PIID selects an efficient outcome.

Overall, our findings provide a case for why individual behaviors may direct evolution towards outcomes that do not meet Pareto efficiency and strategy dominance criteria. Rather, our dynamics depend on which strategy, if any, is supreme, i.e. systematically outperforms other strategies when these are chosen by one’s opponents. This implies that the outcome of evolution can be either very undesirable or very desirable, depending on how large the payoff from the supreme strategy is when this strategy is chosen by everyone in the population. Moreover, since the structure of interactions plays no role in determining which strategy is supreme, the long-run equilibrium selected by PIID is not affected by institutions and other factors that influence how agents interact, such as those generating assortment.

These results may help explain previous findings in the literature showing that local interactions favor the evolution of cooperation when considering death-birth processes, but not when considering birth-death processes47. This can be interpreted as originating from differences in the relation between the interaction structure and agents’ reference groups: death-birth processes assume a distinction between matching and comparisons, whereas birth-death processes make them coincide (as is the case in our model), thereby causing cooperation to be selected against in the long run.

We have shown that when applied to the evolution of behavior, pairwise interact-and-imitate dynamics lead to clear-cut and sometimes surprising results in a variety of games. However, not all classes of games are suited to our revision protocol; in this paper we have considered only symmetric games, leaving aside those cases where agents can choose among different strategies or have different payoff functions. When agents’ strategy sets differ from one another, it does not seem very reasonable to assume that choices are updated according to a pairwise imitative rule based on payoff differentials. Nevertheless, we believe that an imitative protocol like ours may still be applied in a meaningful way to those cases in which agents have the same strategy set but differ in some other respect. For instance, in a setting where agents differ in wealth, a poor individual may be driven to imitate the strategy chosen by a rich individual earning a high payoff, even if this is due to differences in wealth rather than in strategy.

An extension of the model developed here would be to consider the case of a finite population of agents. This would facilitate comparisons with some of the literature32, but would come at the cost of hindering the analysis when introducing payoff uncertainty and studying how PIID relate to replicator dynamics. Another extension would be to move from two-player to n-player symmetric games, which would require defining the class of Groupwise Interact-and-Imitate Dynamics and adjusting the notion of supremacy to consider the relative performance of a strategy towards profiles of others’ strategies.

Finally, a question that may be worthy of further investigation is how the dynamics will behave when no supreme strategy exists. To answer this question, one may define a binary relation $\succ$ on S such that $i \succ j$ if and only if $F(i, j) > F(j, i)$. One may then define $\succ^{*}$ as the transitive closure of $\succ$, and let $S^{*}$ be the set of supremal strategies. Our conjecture is that, under PIID, all strategies that do not belong to $S^{*}$ will die out independently of the structure of interactions; however, the precise characterization of limit sets may depend on details of the payoff structure, the interaction structure, and the revision protocol.

Methods

Lyapunov’s method

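To prove Proposition 1 we use Lyapunov’s second method for global stability. We want to show that $f : X_{e_i} \to \mathbb{R}$, with

$$f(x) = 1 - x_i,$$

is a strict Lyapunov function. It is easy to see that f is of class $C^1$, that $f(x) > 0$ for every $x \in X_{e_i} \setminus \{e_i\}$, and that $f(e_i) = 0$. We are left to show that (i) $\dot{f}(e_i) = 0$ and (ii) $\dot{f}(x) < 0$ for every $x \in X_{e_i} \setminus \{e_i\}$. Taking the time derivative of f and using Definition 1, we can write:

$$\dot{f}(x) = -\dot{x}_i = -\sum_{j \in S} p_{ij}(x)\, \phi_{ij}(x).$$

We observe that $p_{ij}(e_i) = 0$ for every $j \neq i$ and that $\phi_{ii}(x) = 0$, which implies $\dot{f}(e_i) = 0$. For every $x \in X_{e_i} \setminus \{e_i\}$, there exists $j \neq i$ such that $x_j > 0$. Since $x_i > 0$ and $x_j > 0$, we therefore have that $p_{ij}(x) > 0$. Moreover, since $F(i, j) > F(j, i)$ for every $j \neq i$, we have that $\phi_{ij}(x) > 0$ by Assumption 1. To see that $\dot{f}(x) < 0$ for every $x \in X_{e_i} \setminus \{e_i\}$, we finally note that $p_{ik}(x)$ is always non-negative and that $\phi_{ik}(x)$ is positive for all $k \neq i$ by the definition of supremacy and Assumption 1.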
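The sign conditions in this argument can be probed numerically. The sketch below assumes the candidate function f(x) = 1 - x_i, uniform random matching, the imitate-if-better rule, and a hypothetical payoff matrix in which strategy 0 is supreme; it samples interior states at random and evaluates the time derivative of f.

```python
import random

def sign(a, b):
    """+1 if a > b, -1 if a < b, 0 otherwise."""
    return (a > b) - (a < b)

def f_dot(x, F, i):
    """Time derivative of f(x) = 1 - x_i, i.e. minus the net inflow into i."""
    return -sum(x[i] * x[j] * sign(F[i][j], F[j][i])
                for j in range(len(x)) if j != i)

# Hypothetical payoffs: F[i][j] is the payoff to i against j
F = [[1, 3, 3],
     [0, 2, 1],
     [0, 4, 2]]

random.seed(0)
samples = []
for _ in range(1000):
    w = [random.random() + 1e-9 for _ in F]
    x = [wi / sum(w) for wi in w]      # interior state, so every x_j > 0
    samples.append(f_dot(x, F, 0))

at_rest = f_dot([1.0, 0.0, 0.0], F, 0)  # the state where everyone plays 0
```

A random sample is of course no substitute for the proof; it only illustrates that the derivative is negative away from the monomorphic state and zero at it.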

Supremacy under uncertainty

So far we have only considered games in which payoffs are not subject to any uncertainty in their realization. Here we extend our analysis by allowing for this possibility, which is relevant for a variety of applications (e.g., the Hawk–Dove and Hawk–Dove–Bourgeois games).

Let $\Omega$ be a finite set of states of the world and $\mu_{\omega}$ be the probability of state $\omega \in \Omega$ occurring. We write $F_{\omega}(i, j)$ to denote the payoff received by an agent playing strategy i against an opponent playing j when state of the world $\omega$ occurs. For every $x \in X$ and every $\omega \in \Omega$, let $\Phi^{\omega}(x)$ be the $n \times n$ matrix with typical element $\phi^{\omega}_{ij}(x)$, the latter being the net inflow from j to i when the population state is x, the state of the world is $\omega$, and an ij pair is formed.

We replace Assumption 1 with the following.

Assumption 2

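For every $x \in X$ and every $\omega \in \Omega$, $\phi^{\omega}_{ij}(x) > 0$ if $F_{\omega}(i, j) > F_{\omega}(j, i)$.

We also assume that, at any point in time, the net population inflow from j to i is obtained by averaging over the set of states of the world, i.e.:

$$\phi_{ij}(x) = \sum_{\omega \in \Omega} \mu_{\omega}\, \phi^{\omega}_{ij}(x) \tag{3}$$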

The convergence result of Proposition 1 can be extended to the case of uncertainty if we define supremacy as follows.

Definition 3

(Supremacy under uncertainty) In the presence of uncertainty, strategy $i \in S$ is supreme if $F_{\omega}(i, j) > F_{\omega}(j, i)$ for every $j \in S \setminus \{i\}$ and every $\omega \in \Omega$.

To see that the result holds, note that if strategy i is supreme by Definition 3 and Assumption 2 holds, then $\phi_{ij}(x) > 0$ for every $x \in X$ and every $j \in S \setminus \{i\}$. Moreover, $\phi_{ij}$ is continuous in X if $\phi^{\omega}_{ij}$ is continuous in X for every $\omega \in \Omega$. By a reasoning analogous to that used in the proof of Proposition 1, we therefore have that state $e_i$ is globally asymptotically stable for the dynamical system with state space $X_{e_i}$ and PIID as equation of motion.

A less restrictive definition of supremacy under uncertainty is given below.

Definition 4

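(Weak supremacy under uncertainty) In the presence of uncertainty, strategy $i \in S$ is weakly supreme if $F_{\omega}(i, j) \geq F_{\omega}(j, i)$ for every $j \in S \setminus \{i\}$ and every $\omega \in \Omega$, and if for every $j \in S \setminus \{i\}$ there exists $\omega \in \Omega$ such that $F_{\omega}(i, j) > F_{\omega}(j, i)$.

Under the conditions of Definition 4, our convergence result holds if we strengthen Assumption 2 as follows.

Assumption 3

For every $x \in X$ and every $\omega \in \Omega$, $\phi^{\omega}_{ij}(x) > 0$ if and only if $F_{\omega}(i, j) > F_{\omega}(j, i)$.

Here the ‘only if’ is required to deal with those states of the world where $F_{\omega}(i, j) = F_{\omega}(j, i)$, which are not ruled out by Definition 4.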

An even weaker definition of supremacy can be given when focusing on a specific revision protocol. For instance, consider the case where the probability that agents have to imitate the opponent is proportional to the difference in their payoffs if the opponent’s payoff exceeds their own, and is zero otherwise. Under this protocol, letting the expected payoff from playing i against j be $\hat{F}(i, j) = \sum_{\omega \in \Omega} \mu_{\omega} F_{\omega}(i, j)$, we can define the following.

Definition 5

(Supremacy in expectation under uncertainty) In the presence of uncertainty, strategy $i \in S$ is supreme in expectation if $\hat{F}(i, j) > \hat{F}(j, i)$ for every $j \in S \setminus \{i\}$.

It can now be seen that, up to a proportionality factor:

$$\phi_{ij}(x) = \sum_{\omega \in \Omega} \mu_{\omega} \left[ F_{\omega}(i, j) - F_{\omega}(j, i) \right] = \hat{F}(i, j) - \hat{F}(j, i),$$

meaning that the net population inflow from j to i is positive if and only if i is supreme in expectation. This suffices to replicate the result of Proposition 1.
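The difference between state-by-state supremacy and supremacy in expectation can be seen in a small example. The two states, their probabilities, and the payoff matrices below are hypothetical, and the helper names are illustrative.

```python
def expected_matrix(payoffs_by_state, probs):
    """Probability-weighted average of the state-contingent payoff matrices."""
    n = len(next(iter(payoffs_by_state.values())))
    return [[sum(probs[w] * payoffs_by_state[w][i][j] for w in probs)
             for j in range(n)] for i in range(n)]

def is_supreme(F, i):
    """True if i earns more than any different opponent strategy it meets."""
    return all(F[i][j] > F[j][i] for j in range(len(F)) if j != i)

# Hypothetical states of the world and payoffs; F[i][j] is the payoff to i against j
payoffs_by_state = {
    "good": [[0, 3], [0, 0]],   # strategy 0 out-earns strategy 1
    "bad":  [[0, 0], [1, 0]],   # strategy 1 out-earns strategy 0
}
probs = {"good": 0.5, "bad": 0.5}

F_hat = expected_matrix(payoffs_by_state, probs)
supreme_in_expectation = is_supreme(F_hat, 0)
supreme_in_every_state = all(is_supreme(F, 0) for F in payoffs_by_state.values())
```

Here strategy 0 loses the pairwise comparison in one state, so it is neither supreme nor weakly supreme state by state, yet its average advantage is positive and it is supreme in expectation.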

Heterogeneous revision protocols

Although we believe the interact-and-imitate protocol to be reasonable in many circumstances, it may well be the case that agents also rely on other revision protocols occasionally. If our results crucially hinged on the assumption that agents always follow the interact-and-imitate rule, they would be of little interest.

Let the set of possible states of the world be $\Omega = \{\omega_1, \omega_2\}$. Suppose that agents follow the pairwise interact-and-imitate protocol in state $\omega_1$ and a different revision protocol in state $\omega_2$. Now let us define a continuous function $\psi : X \times S \times S \to [-1, 1]$, where $\psi_{ij}(x)$ is the probability that an ij pair will turn into an ii pair minus the probability that it will turn into a jj pair, conditional on the population state being x, an ij pair being formed, and the state of the world being $\omega_2$. Note that $\psi$ may also reflect the fact that members of a pair interact after, rather than before, having updated their strategies, in which case the interact-and-imitate protocol cannot be applied.

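We define:

$$\tilde{\phi}_{ij}(x) = (1 - \epsilon)\, \phi_{ij}(x) + \epsilon\, \psi_{ij}(x) \tag{4}$$

with $\epsilon \in [0, 1]$ denoting the probability of state $\omega_2$. The equation of motion for this dynamical system is the following.

Definition 6

(Quasi pairwise interact-and-imitate dynamics, QPIID) For every $x \in X$ and every $i \in S$:

$$\dot{x}_i = \sum_{j \in S} p_{ij}(x)\, \tilde{\phi}_{ij}(x) \tag{5}$$

Note that Assumption 1 concerns $\phi$ only, and that we have no analogous assumption for $\psi$. This notwithstanding, a convergence result in the spirit of Proposition 1 can be obtained if agents follow the protocol $\psi$ rarely enough.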

Proposition 3

If $i \in S$ is supreme, then there exists $\bar{\epsilon} > 0$ such that, for every $\epsilon < \bar{\epsilon}$, state $e_i$ is globally asymptotically stable for the dynamical system with state space $X_{e_i}$ and QPIID as equation of motion.

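We want to show that if $F(i, j) > F(j, i)$ for every $j \neq i$, then there exists $\bar{\epsilon} > 0$ such that, for every $\epsilon < \bar{\epsilon}$, $\tilde{\phi}_{ij}(x) > 0$ for every $x \in X$ and every $j \neq i$. Moreover, since both $\phi$ and $\psi$ are continuous in X, $\tilde{\phi}$ is continuous in X as well. As a consequence, the statement in Proposition 3 can be proven by replicating the argument used in the proof of Proposition 1.

We consider the worst case to have $\tilde{\phi}_{ij}(x)$ positive. For every $j \neq i$, we have that $\min_{x \in X} \phi_{ij}(x)$ exists, since $\phi_{ij}$ is a continuous function on a compact set. Moreover, by continuity of $\phi_{ij}$ and noting that $\phi_{ij}(x) > 0$ due to $F(i, j) > F(j, i)$ and Assumption 1, we also have that $\min_{x \in X} \phi_{ij}(x) > 0$. We define $\underline{\phi} = \min_{j \neq i} \min_{x \in X} \phi_{ij}(x)$, and note that $\underline{\phi} > 0$. Also, we define:

$$\bar{\epsilon} = \frac{\underline{\phi}}{1 + \underline{\phi}} \tag{6}$$

Since $\psi_{ij}(x)$ cannot be smaller than $-1$, if $\epsilon < \bar{\epsilon}$ then we have that $\tilde{\phi}_{ij}(x) \geq (1 - \epsilon)\, \underline{\phi} - \epsilon > 0$ for every $x \in X$ and every $j \neq i$, as can be easily checked from (4).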

We stress that the bound on $\epsilon$ given in (6) is independent of which protocol $\psi$ is being considered. A more precise value for the bound can be obtained by making specific assumptions about the strategy revision process. For example, suppose that in state of the world $\omega_2$, agents imitate the opponent with unit probability whenever the latter receives a higher payoff than they do, so that $\psi_{ij}(x) = 1$ if $F(i, j) > F(j, i)$. In this case, we can have the maximum possible amount of heterogeneity in revision protocols (i.e., $\epsilon = 1$) and still have global asymptotic stability of the state in which the whole population chooses the supreme strategy.
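The worst-case reasoning behind the bound can be sketched in a few lines. The code assumes that the overall net inflow is a convex combination of the interact-and-imitate protocol and an alternative protocol that can be as adverse as -1; the function names and the numerical value of the smallest interact-and-imitate inflow are hypothetical.

```python
def epsilon_bar(phi_min):
    """Worst-case bound on the probability of the alternative protocol.

    phi_min is the smallest net inflow towards the supreme strategy under the
    interact-and-imitate protocol, assumed strictly positive.
    """
    return phi_min / (1 + phi_min)

def mixed_flow(phi, psi, eps):
    """Net inflow when the alternative protocol is followed with probability eps."""
    return (1 - eps) * phi + eps * psi

phi_min = 0.25                  # hypothetical smallest inflow under the main protocol
bound = epsilon_bar(phi_min)    # 0.25 / 1.25

below = mixed_flow(phi_min, -1.0, 0.9 * bound)   # adverse protocol used rarely
above = mixed_flow(phi_min, -1.0, 1.1 * bound)   # adverse protocol used too often
benign = mixed_flow(phi_min, 1.0, 1.0)           # alternative also favours the supreme strategy
```

Just below the bound the net inflow towards the supreme strategy remains positive even against the most adverse alternative, whereas just above it the sign can flip; when the alternative protocol itself favours the supreme strategy, the inflow stays positive for any mixing probability.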

Acknowledgements

The authors gratefully acknowledge financial support from the Italian Ministry of Education, University and Research (MIUR) through the PRIN project Co.S.Mo.Pro.Be. “Cognition, Social Motives and Prosocial Behavior” (Grant n. 20178293XT) and from the IMT School for Advanced Studies Lucca through the PAI project Pro.Co.P.E. “Prosociality, Cognition, and Peer Effects”.

Author contributions

E.B., L.B., and N.C. contributed equally to setting the model, developing theoretical results and finding applications, as well as to writing the paper.

Competing interests

The authors declare no competing interests.

