Abstract

Transparency in decision modelling is an evolving concept. Recently, discussion has moved from reporting standards to open source implementation of decision analytic models. However, in the debate about the supposed advantages and disadvantages of greater transparency, there is a lack of definition. The purpose of this article is not to present a case for or against transparency, but rather to provide a more nuanced understanding of what transparency means in the context of decision modelling and how it could be addressed. To this end, we review and summarise the discourse to date, drawing on our collective experience. We outline a taxonomy of the different manifestations of transparency, including reporting standards, reference models, collaboration, model registration, peer review, and open source modelling. Further, we map out the role and incentives for the various stakeholders, including industry, research organisations, publishers, and decision-makers. We outline the anticipated advantages and disadvantages of greater transparency with respect to each manifestation, as well as the perceived barriers and facilitators to greater transparency. These are considered with respect to the different stakeholders and with reference to issues including intellectual property, legality, standards, quality assurance, code integrity, health technology assessment processes, incentives, funding, software, access and deployment options, data protection, and stakeholder engagement. For each manifestation of transparency, we discuss the ‘what’, ‘why’, ‘who’, and ‘how’: specifically, what each manifestation means, why the community might (or might not) wish to embrace it, whose engagement as stakeholders is required, and how relevant objectives might be realised. We identify current initiatives aimed at improving transparency to exemplify efforts in current practice and for the future.

1. INTRODUCTION

Transparency is a defining characteristic of all scientific endeavours. Without it, the integrity and validity of research findings cannot be independently tested and verified, which is necessary to ensure the reliable use of evidence in decision making.

Daniels and Sabin [1] have argued that transparency is critical for a public agency “to be accepted as [a] legitimate moral authority for distributing health care fairly” [2]. Bodies such as the National Institute for Health and Care Excellence (NICE) in England and the Canadian Agency for Drugs and Technologies in Health (CADTH) require formal decision analysis as part of health technology assessment (HTA) submissions, which inform their recommendations for the adoption of health technologies. Thus, if we accept the arguments of Daniels and Sabin, models used to inform public decision-making ought to be transparent.

Having established the principle that transparency is desirable, we take an instrumental approach in this paper to consider how transparency might effectively be achieved through different mechanisms including the use of reporting standards, open source models, model registration, and peer review. For each, we consider how they can best be employed to promote transparency and the degree to which they can deliver benefits. We consider how these different mechanisms interact with the objective functions of various agents involved in the development and use of models, such as analysts (both for-profit and non-profit), decision-makers, and academics.

There are numerous potential benefits to greater transparency. Transparency can facilitate replication and reduce the prevalence of technical errors, leading to more reliable information being available to decision-makers. More effective sharing of information and model concepts could aid incremental improvements in model structure, improving external validity. Together, these factors should improve the credibility of models, leading to them being more influential in decision-making. In turn, this should lead to better-informed decisions regarding resource allocation to existing technologies, more appropriate access and pricing of newly introduced technologies, and more appropriate price signals to developers and investors.

It is for these reasons that numerous attempts to identify good practices in decision modelling have incorporated transparency to a greater or lesser extent [3,4]. While there has been discussion in the literature about the benefits of greater transparency, the potential disadvantages and unintended consequences are poorly described. It is essential that all stakeholders understand the implications of greater transparency (in its various forms) in order to mitigate any risks and ensure that the benefits outweigh the costs.

Yet, recommendations for achieving transparency in HTA and academic publications have been vague. Models are routinely reported in a way that does not facilitate interrogation or replication. Part of the problem, we believe, is that the concept of transparency in the context of decision modelling has not been adequately explored. There are a variety of ways in which research can be transparent, and any given piece of research may be transparent in some respects and opaque in others. Furthermore, transparency is an evolving concept that needs to be revised as new practices develop.

In this article, we provide a detailed consideration of transparency. We outline the various manifestations of transparency that have been demonstrated or hypothesised in the literature (‘what’). Based on this taxonomy, we reflect on the associated benefits and risks (‘why’) and explore the role of different stakeholders (‘who’). Finally, we consider the means by which effective transparency in the context of decision modelling might be achieved and the research that could guide this transition (‘how’).

2. WHAT? MANIFESTATIONS OF TRANSPARENCY

The International Society for Pharmacoeconomics and Outcomes Research and Society for Medical Decision Making (ISPOR-SMDM) Modelling Good Research Practices Task Force characterised transparency as “clearly describing the model structure, equations, parameter values, and assumptions to enable interested parties to understand the model” [3]. This definition allows for endless interpretations. What constitutes a clear description? What duty of ‘enablement’ rests with the modeller? Who should qualify as an interested party? And, importantly, what constitutes understanding? It is inevitable that the satisfaction of these requirements is subjective, and also that the achievement of transparency cannot be demonstrated in any consistent manner. Alternative definitions of transparency could extend beyond this definition, requiring replicability, for example.

In this section, we do not seek to define transparency or to support or refute previous definitions. Rather, we assert that there is a variety of manifestations of transparency, which could satisfy the ISPOR-SMDM Task Force definition in diverse ways and to varying degrees. These manifestations vary in respect to which aspects of the modelling process are transparent and to whom.

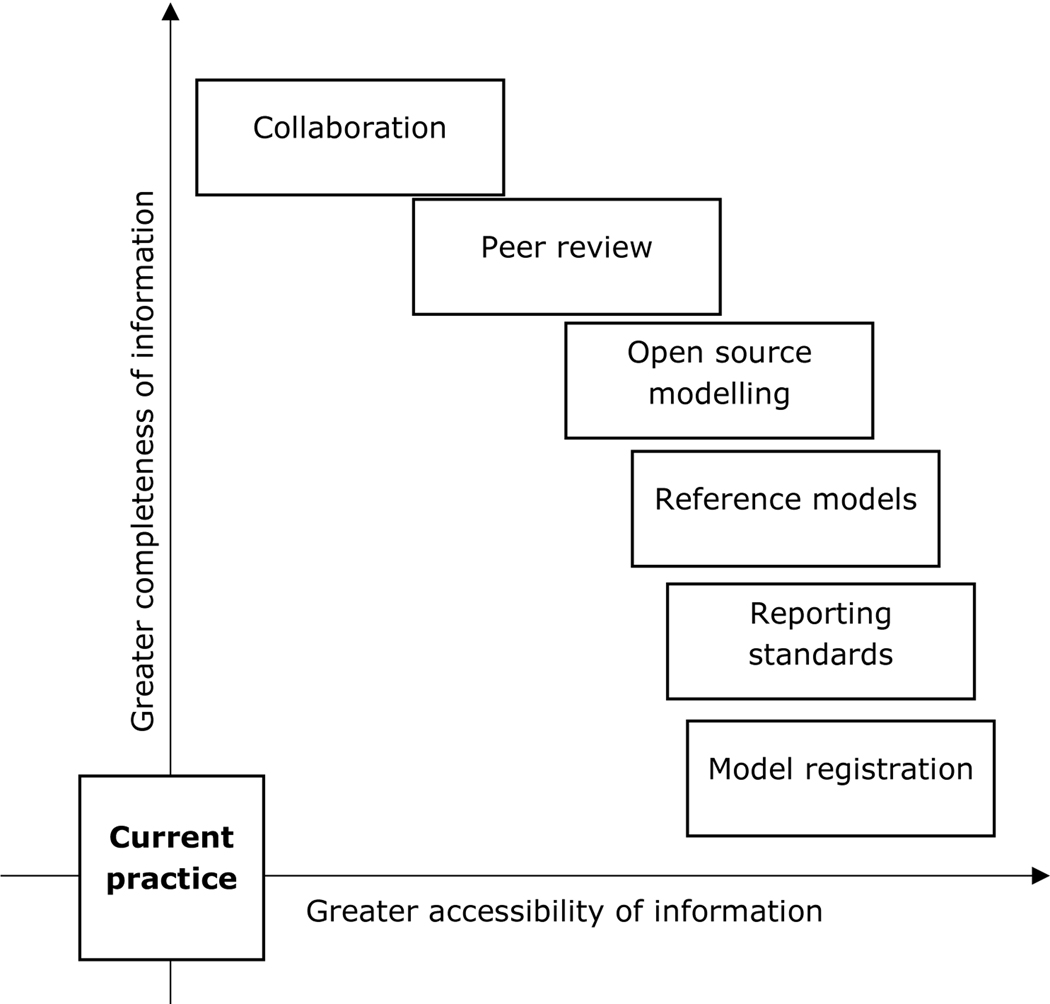

Figure 1 specifies the manifestations of transparency that we discuss in this paper, recognising that these are not mutually exclusive. Transparency can be increased (or decreased) along two dimensions. First, research reporting is more transparent if it provides a greater amount of information relating to the research, which we characterise as ‘depth’. Second, research is more transparent if the information provided is accessible to a greater number of people, which we characterise as ‘breadth’. Each manifestation may increase transparency along one or both of these dimensions and to varying extents. For example, each manifestation could be highly accessible or highly restrictive. There may be an inherent trade-off between completeness (depth) and accessibility (breadth), because ensuring the clarity and accessibility of information may become more challenging as the amount of information shared is increased. The positioning of the items in Figure 1 is therefore illustrative only. We observe that no single manifestation of transparency is sufficient to achieve complete transparency.

Figure 1: Manifestations of transparency.

Note: the positioning of the manifestations is illustrative and not a generalisable ranking of the expected impact of any manifestation of transparency in the context of a given model.

2.1. Model registration

Analysts and decision-makers do not routinely know about modelling studies that are currently planned or underway. Nor do they know about modelling studies that have been conducted but not reported. Thus, there is a lack of transparency in the process and wider ecosystem of modelling.

Sampson and Wrightson proposed the creation of a generic model registry with a linked database of decision models [5]. Such a registry would be analogous to clinical trial registries and would serve some of the same purposes. In particular, it could help to reduce publication bias, which may be common in cost-effectiveness modelling [6,7].

The inclusion of a model in a registry would involve the sharing of a small amount of information with a wide audience. Of all the forms of transparency discussed in this article, a model registry would provide the least amount of information. The impact of such a registry would depend on the information recorded therein. Nevertheless, the sharing of details about studies previously completed, currently underway, or planned for the future, is a form of transparency that could create value. A model registry could extend transparency and encourage collaboration by complementing other initiatives and by recording the history of the development and application of a model. Disease-specific registries and databases have been created in diabetes [8] and cancer [9], and generic databases are also in development [10].
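As an illustration of how little information a registry entry might need to hold, the following is a minimal sketch of a registry record. The field names, the permitted status values, and the `find_by_disease` helper are all hypothetical assumptions made for this example; they do not describe the registry proposed in [5] or any existing database.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical status values mirroring "planned", "underway", and "completed" studies.
VALID_STATUSES = {"planned", "underway", "completed"}


@dataclass
class ModelRegistryEntry:
    """Hypothetical minimal record for a decision model registry."""
    registry_id: str
    title: str
    disease_area: str
    status: str
    contact: str
    # Identifiers of earlier registered models that this model adapts or extends,
    # so that the history of a model's development can be traced.
    linked_models: List[str] = field(default_factory=list)

    def __post_init__(self):
        # Reject records whose status is not one of the assumed categories.
        if self.status not in VALID_STATUSES:
            raise ValueError(f"Unknown status: {self.status!r}")


def find_by_disease(entries, disease_area):
    """Return all entries in a disease area (case-insensitive match)."""
    wanted = disease_area.lower()
    return [e for e in entries if e.disease_area.lower() == wanted]
```

Even a record this small would let an analyst check whether an equivalent model is already planned or underway in a disease area before starting a new one.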

2.2. Reporting standards

Perhaps the most widely used and long-standing approach to achieving transparency has been the adoption of reporting guidelines and standards. The Consolidated Health Economic Evaluation Reporting Standards (CHEERS) is one of the best-known and regularly used reporting guidelines for economic evaluations [11]. Insofar as they support fuller and more consistent reporting, methodological standards can also facilitate transparency. Methodological standards exist for the specific context of decision modelling in health care, notably the Philips checklist [4] and the ISPOR-SMDM Modeling Good Research Practices guidelines [12].

Reporting standards, guidelines, and checklists are principally of value in facilitating transparency with respect to the ‘soft’ aspects of the model development process. They encourage transparency in describing the theoretical basis for the model structure and the rationale for all aspects of model development. Transparency in this respect can only be achieved by a full description in writing. Reporting standards can help to ensure that the overall purpose of a modelling study is clear. They can also ensure that interested parties understand any assumptions inherent in the design or execution of the model. In discussing transparency, Philips and colleagues [4] focus particularly on the importance of describing structural assumptions inherent in the model and the means by which data are identified and used.

Providing a greater amount of information in order to achieve transparency creates a risk of obfuscation, as key details may be lost in fuller reporting. Clarity of communication is necessary to ensure transparency with respect to accessibility, which can be supported by the use of guidelines. Reporting standards can also guide visual representation of model structures and processes, which can increase transparency with respect to accessibility.

No reporting guideline can be perfect for every study. For example, it has been suggested that CHEERS may be insufficient for the reporting of cost-benefit analyses [13]. Reporting guidelines can quickly become outdated and are not usually a sufficient basis for achieving transparent reporting. The CHEERS authors themselves acknowledged that economic evaluation methods are evolving over time and that CHEERS should be reviewed for updating after several years of use [14]. Moreover, even if a guideline is well-suited and used in the reporting of a study, there is always room for different interpretations and subjectivity in its application. Clear and complete communication of the intricacies of a complex model can be very difficult to achieve within the confines of a journal article, even with the use of extensive supplementary appendices. This limits the scope of reporting standards in facilitating complete and transparent reporting.

2.3. Reference models

In some cases, there may be disparate solutions to modelling disease pathways, which could be reconciled and applied in a generalised way to numerous decision problems. Models developed on this basis have been described as reference models or policy models [15]. A framework for the development of reference models has been proposed, which the authors suggest would enhance transparency [16].

Reference models are typically the results of dedicated efforts, with more resources available for better modelling of the natural history of the disease and more complete evidence synthesis. They have been proposed as a way to ensure consistency in the development of models that represent the natural history of specific diseases [17]. Reference or policy models have been created for a variety of conditions, including cardiovascular disease [18], AIDS [19], cancer [20], and population health [21], among others. These models can be used to describe clinical pathways overlaying natural history, which may be jurisdiction-specific but still generalisable.

We consider the development of reference models as a step towards transparency because, by their nature, reference models separate the model building activity from specific applications. As such, they provide an opportunity for transparent reporting of model structure and validation steps, independent of the nuances of individual policy questions that may create barriers to transparency. Individual applications of reference models will have more space (within the confines of the standards of contemporary publications) to elaborate on the incremental approaches they have made for the particular question at hand, enabling greater completeness of reporting. Reference models can be made available through the sharing of code or web-based interfaces (e.g. [22]).

2.4. Open source modelling

A growing number of decision models are developed using statistical programming languages. In this case, the characteristics of a model’s structure and execution can be fully described within source code. Models can be made open source if this source code is made publicly available with a suitable licence that enables full interrogation and adaptation of the source code. Thus, open source models have the potential to provide any interested stakeholder with the necessary information to precisely replicate a modelling study. Whilst there are examples of open source models (e.g. [22–24]), they remain scarce. Several initiatives have begun to operate in this space, including the Open-Source Model Clearinghouse, hosted by Tufts Medical Center [25], and the Open-Source Value Platform, hosted by the Innovation and Value Initiative [26].

Open source modelling is a significant step towards complete transparency. However, it should not be seen as a panacea because information other than the source code is required to fully understand a model. For many models, provision of the code alone would not enable replication because the code depends on data. Some source code might require specialist software to run, meaning that, while the code can be read by an evaluator, the results cannot easily be replicated.

Evaluating complex model code without detailed documentation can be challenging. Therefore, while open source modelling may allow for the disclosure of a large amount of information relating to the execution of a model, it is not sufficient for all interested parties to understand the model.
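To illustrate how source code can describe both a model's structure and its execution, the following is a minimal sketch of a generic discrete-time Markov cohort model. It is not drawn from any of the models cited here; the function name, its arguments, and the convention that the whole cohort starts in the first state are assumptions made for the example.

```python
def run_markov_cohort(transition_matrix, state_costs, state_utilities,
                      n_cycles, discount_rate=0.0):
    """Run a simple Markov cohort model and return (cost, QALYs, final cohort).

    transition_matrix[i][j] is the per-cycle probability of moving from
    state i to state j. The whole cohort is assumed to start in state 0.
    """
    n_states = len(transition_matrix)
    for row in transition_matrix:
        # Structural check: each row must be a probability distribution.
        if len(row) != n_states or abs(sum(row) - 1.0) > 1e-9:
            raise ValueError("Each row must have one entry per state and sum to 1")

    cohort = [1.0] + [0.0] * (n_states - 1)
    total_cost = 0.0
    total_qalys = 0.0
    for cycle in range(n_cycles):
        discount = 1.0 / (1.0 + discount_rate) ** cycle
        # Accumulate discounted costs and QALYs for the current state occupancy.
        total_cost += discount * sum(p * c for p, c in zip(cohort, state_costs))
        total_qalys += discount * sum(p * u for p, u in zip(cohort, state_utilities))
        # Move the cohort forward by one cycle.
        cohort = [
            sum(cohort[i] * transition_matrix[i][j] for i in range(n_states))
            for j in range(n_states)
        ]
    return total_cost, total_qalys, cohort


# Illustrative placeholder inputs only; these values come from no study.
example_transitions = [[0.9, 0.1],   # alive -> alive, alive -> dead
                       [0.0, 1.0]]   # dead is an absorbing state
example_result = run_markov_cohort(example_transitions, [100.0, 0.0], [1.0, 0.0], n_cycles=2)
```

The sketch also shows the limitation noted above: the code fixes the structure, but the results depend entirely on the transition probabilities, costs, and utilities supplied, so sharing code without the underlying data does not enable replication.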

2.5. Peer review

Peer review is often conducted under conditions of confidentiality. It therefore provides an opportunity for analysts to be wholly transparent with a selection of peers who have agreed to review the work in detail, while still protecting the analysts’ intellectual property.

Transparency and peer review have a bidirectional relationship, with each enhancing the other. On the one hand, a manuscript providing a clear and complete report of the study allows for a more meaningful peer review, whereas a reviewer cannot comment on a particular methodological aspect of a study if it is not clear what was done. On the other hand, peer review often leads to a more complete study report in the final published paper (e.g. if a reviewer requests that the authors clarify or elaborate on their methods).

In practice, reviewers cannot typically review a model in detail, either due to lack of expertise or lack of time. This may limit the value of transparency in peer review. Authors are often blind to the identities of reviewers and sometimes vice versa, and readers of the final published paper are usually blind to the reviewer comments and revisions. As such, matters relating to model development during the peer review process are not widely accessible, limiting transparency in model development.

Here, we consider peer review, as currently practised, as a manifestation of transparency. It is important to note that there is a lively ongoing discussion around the role of peer review and its future in scholarly communication. In future, peer review processes may be adapted and decoupled from other publication processes [27], which could facilitate the review of models separately from their application to specific decision problems or description in research papers.

2.6. Collaboration

Arnold and Ekins proposed multi-stakeholder collaboration across various sectors, which would involve greater transparency between members of the modelling community [28]. Decision models in many disease areas are necessarily complex, which can mean that peer reviewers are not adequately equipped to fully understand the work. A prime example is health economics models to simulate diabetes and its complications [29]. While models like the UK Prospective Diabetes Study Outcomes Model have been reported in a high level of detail to promote transparency and reproducibility [30], their complexity can mean that only a highly specialised audience is able to interrogate them.

One way to extend transparency is through the development of networks of health economists that are working on a particular disease area. There are examples of collaborative initiatives in a variety of conditions, including diabetes (the Mount Hood meetings) [31], muscular dystrophy (Project HERCULES) [32], chronic obstructive pulmonary disease [33], and cancer [20]. One of the most well-established networks is the Mt Hood Diabetes Challenge, which involves most groups that have developed diabetes simulation models [34]. The Mt Hood network meets every two years to undertake a series of pre-defined simulations known as challenges. Such meetings provide an opportunity for groups to test and cross-validate their models against each other as well as validate them using real-world data. The Fifth Mt Hood Challenge involved eight modelling groups, who were given four challenges such as replicating the results of major diabetes trials including ADVANCE and ACCORD [35]. Model outcomes for each challenge were compared with the published findings of the respective trials. The discussion of such challenges promotes scrutiny of models by other groups and thereby promotes transparency.
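The comparison step in such a challenge can be sketched as follows. This is only an illustration of the general idea of comparing model outputs with published trial findings; it is not the Mt Hood protocol. The function name, the choice of relative error as the comparison metric, the endpoint names, and all numbers are hypothetical placeholders rather than results from ADVANCE, ACCORD, or any other trial.

```python
def compare_to_trial(model_predictions, trial_results):
    """Return the relative error of each model's prediction for each endpoint.

    model_predictions: {model_name: {endpoint: predicted_value}}
    trial_results:     {endpoint: observed_value}
    """
    comparison = {}
    for model_name, predictions in model_predictions.items():
        errors = {}
        for endpoint, observed in trial_results.items():
            # Skip endpoints a model does not report, or that cannot be scaled.
            if endpoint not in predictions or observed == 0:
                continue
            errors[endpoint] = (predictions[endpoint] - observed) / observed
        comparison[model_name] = errors
    return comparison


# Placeholder values for illustration only; not taken from any trial or model.
placeholder_trial = {"endpoint_a": 0.10, "endpoint_b": 0.05}
placeholder_models = {
    "model_1": {"endpoint_a": 0.11, "endpoint_b": 0.04},
    "model_2": {"endpoint_a": 0.09},
}
placeholder_comparison = compare_to_trial(placeholder_models, placeholder_trial)
```

Tabulating results in this way, with every group running the same pre-defined simulations, is what allows discrepancies between models to be discussed openly.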

Collaboration is a limited form of transparency in that only a key group of collaborators have full access to materials. However, by establishing a small specialised group, it is more feasible to ensure complete transparency of content.

3. WHY? BENEFITS AND RISKS

The importance of evidence-based medicine for tangible improvements in quality and length of life over the last century and across jurisdictions is well known, and is illustrated by the ongoing work of the Cochrane Collaboration [36]. The merit of a similarly evidence-based approach to the creation of HTA tools to inform decision-making has long been recognised [37]. There are many potential benefits to transparency that have been proposed by commentators [3,38].

Transparency in modelling is essential for truly evidence-based decision making, just as clinical trial standards and rules have become a requisite component of evidence-based medicine. In the remainder of this section, we specify different aspects of the multifaceted benefits and risks of transparency in modelling, though we by no means claim to provide a complete account.

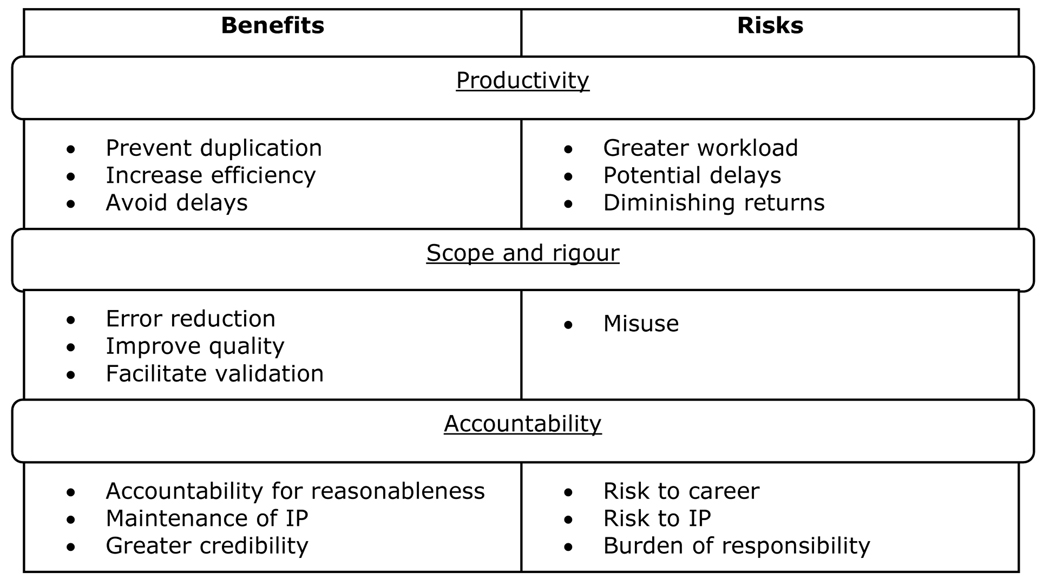

We believe that the main potential benefits and risks of greater transparency are related to three broad areas, as shown in Figure 2: productivity, scope and rigour, and accountability.

Figure 2: The benefits and risks of greater transparency.

3.1. Productivity

Greater transparency in modelling could reduce waste that arises from the duplication of efforts. Full documentation of process has been characterised as a means of achieving efficiencies in model development [39]. Model registration and disease-specific collaborations could prevent separate groups of analysts from unnecessarily commencing equivalent modelling studies [5].

Models are often created for a single purpose and then languish in journal archives, health authority databases, or various proprietary settings. Rather than using or updating a pre-existing model, new models are often created for new medications or new indications at considerable time and financial expense [28]. The availability of reference models could help to avoid this situation, thus freeing up analysts’ time. Similarly, existing models could be ‘reused’ or ‘recycled’ with different data.

A transparently reported model has the potential to be reused or recycled to answer new questions, either by incorporating additional elements or simply revising a decision analysis once new data become available. A model in a similar disease area or related decision problem provides a valuable resource, saving time on decisions over the model structure and key data sources. The analyst is able to review the decisions taken by the previous author(s) and judge whether they are appropriate for their own analysis. Furthermore, they can review the literature searches for parameter values to determine whether they are fit for purpose or require modifying or updating.

With greater transparency, models could be continually revised as new information becomes available. One example where this approach has been applied is for the Birmingham Rheumatoid Arthritis Model (BRAM), which has subsequently been extended by several groups [40]. To do so, however, these groups have been required to each first rebuild the original model, which incurs large costs before new work can be done.

With greater information sharing between different stakeholders, transparency may also lead to a reduction in delays in the modelling process or in health technology assessment. This is especially likely in cases where de novo models are built as part of an appraisal process in lieu of being able to access existing models.

However, the achievement of transparency could greatly increase the time required for model development, with no discernible benefit. For example, if an analyst is to publish their model open source, it is likely that more time will be dedicated to annotation and otherwise making the code understandable to other users. Yet, if the model is never replicated, validated, recycled, or otherwise made use of, this time is wasted. There is also a lack of specific guidance on best practice in sharing model files in a transparent and accessible way, meaning that time expended in facilitating transparency could be ineffective.

Concurrent with a greater workload, ensuring transparency may delay the release of decision models. We might also expect there to be diminishing marginal returns to greater transparency, implying that ‘complete’ transparency could be a suboptimal strategy. There is little evidence available to inform this productivity trade-off, though the time demands of open source modelling have been documented [24].
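The reasoning behind this trade-off can be sketched formally. The notation is purely illustrative and rests on assumptions not made elsewhere in this article: a single index of transparency $t \in [0, 1]$, with $t = 1$ denoting ‘complete’ transparency, a benefit function $B(t)$ that is increasing and concave (diminishing marginal returns), and a cost function $C(t)$ that is increasing and convex.

$$
\max_{t \in [0,1]} \; N(t) = B(t) - C(t), \qquad B'(t) > 0,\; B''(t) < 0,\; C'(t) > 0,\; C''(t) \ge 0 .
$$

An interior optimum $t^{*}$ satisfies the first-order condition

$$
B'(t^{*}) = C'(t^{*}),
$$

so that complete transparency is suboptimal whenever $B'(1) < C'(1)$, that is, whenever the last increment of transparency costs more than it returns. Under these assumptions, the scarcity of evidence noted above amounts to not knowing the shapes of $B$ and $C$.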

3.2. Scope and rigour

In addition to facilitating more efficiency in current practice, transparency could also extend the scope and rigour of cost-effectiveness modelling. In particular, the quality of decision models could be improved if greater transparency facilitates more comprehensive testing. Replication and validation are means of distinguishing ‘good’ models from those that are ‘bad’, due to the presence of errors, bias, or poor representation of the decision problem.

Transparency in peer review can allow for a fuller assessment of the validity of the analysis ahead of publication, which may be especially valuable if it provides greater opportunity for refinement of models. This has been recognised with respect to the statistical code used for analyses published in medical journals (see, for example [41]) and economic journals (see, for example [42]). In the experience of one of the authors (TW), who is an editor of health economics journals that routinely request authors to provide a copy of their model for peer review, the practice helps confirm model validity, often results in model improvements, and sometimes identifies major errors.

Improving the validity of models via increased transparency should lead to better decision making. This could result not only from having more accurate information from better models but also from decision-makers being more likely to use the evidence due to greater confidence in the models [43]. Greater transparency about the validity tests that have already been performed on a model can increase confidence in the accuracy of the model [44]. Greater transparency could also facilitate a meta-analytic approach to decision modelling, with meta-modelling representing a more robust source of evidence for decision making [45].

However, there is no guarantee that the replication, validation, or other reuse of models will lead to improvements in quality. If models are published open source, there is a risk that individuals may (intentionally or otherwise) misuse them. A fully transparent modelling infrastructure could allow for misuse to be identified but, without ubiquity in transparency, it creates a greater opportunity (or risk) for models to be taken ‘off-the-shelf’ and applied inappropriately and with opacity.

3.3. Accountability

Registering models and reporting them fully could reduce publication bias and help to make plain any bias in the reporting or methods [46]. Transparency can increase accountability for both the analysts developing the models and the decision-makers using them.

From an analyst’s perspective, greater accountability may be beneficial if models are explicitly reused with appropriate credit, rather than being an implicit influence. Reuse and reapplication of a model can signal the quality of an analyst’s work and bring career benefits. Where models are not transparently reported, but influence future modelling work, intellectual property cannot be easily identified. From a decision-maker’s perspective, in line with the notion of accountability for reasonableness (the idea that decision-making processes with respect to the rationing of health care must be public [2]), greater transparency could enable decision-makers to more clearly justify their decisions.

Greater transparency would facilitate the identification of errors in published work, which, while good for the development of the evidence base, could be damaging to analysts whose errors are identified. A system that mandates transparency could encourage analysts to be less transparent as a result of their concern about the potential for embarrassment or a negative career impact.

It is not clear how accountability can or should be maintained after the transparent publication of a model. Once a model is in the public domain, it is not clear who should be responsible for the fidelity of the underlying model or any results derived from it. Analysts may (reasonably) deny responsibility for the results derived by others from a model that they have developed.

4. WHO? STAKEHOLDERS

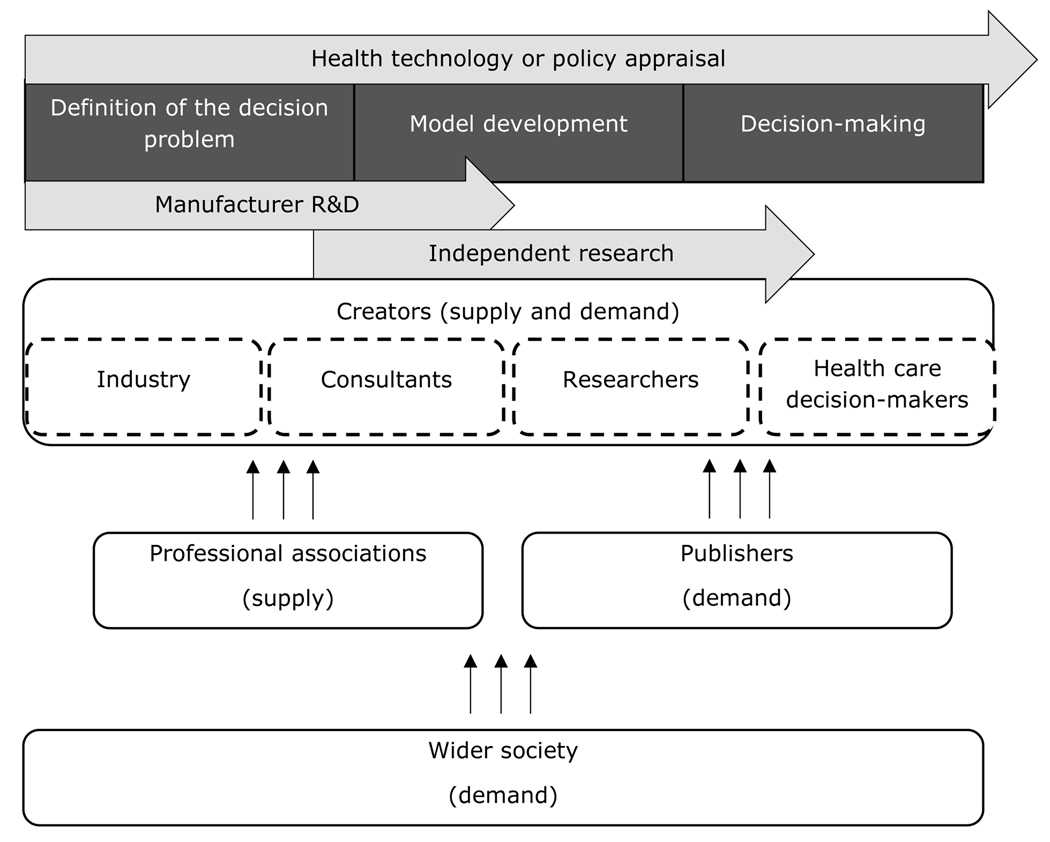

To understand how and whether transparency should be extended in different respects, it is important to identify the motivations of different stakeholders and how they might be affected by transparency. There is a broad array of stakeholders in decision modelling. First, there are those who create, manage, and evaluate decision models. This includes industry, consultancies, research organisations, and HTA agencies. Second, there are stakeholders not directly involved in the development of models, but who are in a strong position to influence (and be affected by) the level of transparency in decision modelling. These include professional associations – for both individuals and organisations – and publishers. Third, there is wider society, which includes policymakers outside the health care resource allocation process and the public at large. Figure 3 illustrates the role of these stakeholders and links between them, as described in the remainder of this section. We refer to appraisals in the broadest sense of evidence-based policymaking, which need not apply to HTA for drugs or devices. Each stakeholder’s influence can be summarised as operating on the supply-side, demand-side, or both.

Figure 3: Stakeholders in decision modelling.

4.1. Industry

Decision problems often arise as a result of new health technologies becoming available and decision-makers having to appraise their costs and benefits to inform reimbursement. In this context, decision models are routinely employed. Many HTA gatekeeper bodies require technology owners to submit the evidence for their reimbursement-seeking technologies, including NICE in England through their Single Technology Appraisal process. Many decision models are thus developed, sponsored, and owned by pharmaceutical companies and other manufacturers.

In an environment where the profit-maximising price of a patented health technology is partly or wholly determined by perceived payer-relevant value in terms of health outcomes in each jurisdiction, and profit-driven firms own the patents, these firms have an incentive to develop (or, as considered in 4.2, pay a consultancy to develop) and control models estimating the payer-relevant value of their patented technologies. In the current environment, commanding a profit-maximising price across different jurisdictions often means agreeing a confidential discount to the list price with each payer who is not willing to meet that list price, with the aim of achieving the maximum price each payer is willing to pay. As such, pharmaceutical companies and agents therein may be incentivised to ensure confidentiality, rather than transparency.

However, there may be countervailing drivers towards greater transparency. A more transparent model may be compelling for decision-makers. In addition, it is important to recognise that companies are not monolithic enterprises and the motives of individuals working in companies may act towards greater transparency [48].

4.2. Consultants

Whilst pharmaceutical companies are responsible for models that are submitted to HTA bodies, the reality is that the majority are built by consultancies staffed with experts in modelling. Each consultancy will have different capabilities and views of appropriate methods. As the size of consultancies varies from departments in large publicly listed companies to privately held organisations with a handful of staff, it is also unlikely that a consensus view will be held amongst companies as to the appropriate level of transparency for modelling.

The defining feature of modelling work conducted by consultancies is that it is done primarily to satisfy a client’s requirements. In this sense, consultancies might be considered rule-takers with respect to modelling standards and guidelines. Should guidelines mandate a level of transparency, it would effectively motivate companies to comply.

Models to support HTA submissions are likely to be transparent for the parties involved, with the level of transparency being defined by the HTA agency (see section 4.5). Conversely, internal decision-making or pricing models, though built according to modelling standards, are likely to remain highly confidential with no documented evidence of their existence made available outside of the department for which they are developed. In this case, transparency may only extend to the client.

Where models are used publicly, consultancies may want access to the code to be restricted to relevant stakeholders, and not made widely available. Even where HTA submission reports are provided in the public domain, model code is not published, and often model input data are redacted, which hampers replication. Whilst inefficient, this is driven by the desire of companies to keep their intellectual property secret and to make it difficult for other companies to copy the elements that they see as key to their differentiation.

Within the competitive bidding process to provide economic modelling services, there is currently little or no incentive for a profit-incentivised consultancy to include clauses for open source dissemination within proposals for work. To insert such a clause would typically lower the expected probability of the proposal bid being accepted, unless the industry procurement agent is incentivised by transparency. As described in 4.1, there is little to suggest this is likely to be the case.

However, we should also recognise that revenue may be generated in different ways. For example, the development of a model may act as a ‘loss-leader’ with revenue being generated through add-on consultancy services. This may incentivise consultancies to develop open source models as a driver for subsequent consulting services. Likewise, as mentioned previously, if model transparency improves the likelihood that a model is viewed as unbiased, consultants and clients may support transparency.

4.3. Researchers

Universities, independent research organisations, and individual researchers all conduct decision modelling in the context of health care. It is important to note that there is not necessarily a clear distinction between research and consultancy, with some organisations (including university groups) conducting both on similar terms.

Nevertheless, grant-funded or salaried researchers are likely to have the freedom to be rule-makers to a greater extent than consultants. In this respect, they may face different incentives regarding the use of the various manifestations of transparency. Researchers and research institutions may prioritise reputation over short-term profitability. The implications of this for transparency are ambiguous; researchers may benefit from greater publicity associated with transparency but may also fear the increased scope for criticism.

As outlined in 3.3, greater accountability for model development may be associated with clearer identification of intellectual property, which could incentivise researchers to report models with greater transparency. However, the value of the intellectual property associated with the development of a model can extend beyond the immediate purposes of the model and its initial publication. Researchers may be able to derive further publications and credit from new applications of, or extensions to, a model that only they can fully implement. Thus, the incentives faced by researchers are likely to differ depending on the specifics of the work being conducted.

There is considerable variation across academic organisations, especially looking internationally. There are examples of health economics researchers and teams working collaboratively with the health care system and driven primarily to ensure evidence and analysis-informed policy decision making. In such situations, open source and transparent modelling is likely to be embraced and nurtured, as exemplified by the work of Sadatsafavi and colleagues on the EPIC COPD reference model [22].

4.4. Publishers

The dissemination of model reports is facilitated primarily via the publication of journal articles, and journals can have a positive influence on model transparency. For example, journals are often involved in the development and dissemination of research reporting standards, including those applicable to models, such as CHEERS. Being strong advocates for – and even enforcers of – reporting standards, journals have contributed to an improvement in reporting over time. Moreover, standard journal processes such as editorial assessment and peer review can routinely improve the completeness of a research report. Some journals have recognised the importance of transparency in achieving reproducible research and have created policies accordingly (see, for example [49]).

In addition to improvements in the ‘depth’ of transparency via more comprehensive research articles, the open access movement has resulted in a marked increase in the ‘breadth’ of transparency via wider access to articles when they are published with open access.

4.5. Health care decision-makers

HTA agencies, such as NICE and CADTH, are major beneficiaries and drivers of model transparency, and will likely be a major determinant of the fate of model transparency initiatives. HTA agencies play crucial roles in the approval of new health technologies for market entry in their respective jurisdictions. As part of submissions for approval of new health technologies, such agencies receive HTA reports, including economic evaluations and budget impact analyses, from the technology manufacturer, and embark on an internal or external assessment of the models. Transparency in the models will greatly enhance the capacity of such agencies to evaluate the claims of cost-effectiveness made by the manufacturer.

Transparency in reporting and documentation can also enhance the value of one HTA agency’s work for other HTA agencies. The vast documentation made publicly available online for each NICE appraisal allows other agencies to learn from NICE’s own critique if subsequently appraising the same technology in the same indication, for different jurisdictions. For the most part, HTA agencies already enforce certain transparency standards, such as requiring the manufacturer to submit the model source code. Transparency in the HTA process should also be bidirectional. Agencies commission the development of models, and these models should be transparently shared with companies.

In theory, it is already a requirement to base practice on a systematic review of the evidence. CADTH guidelines suggest that “Researchers should consider any existing well-constructed and validated models that appropriately capture the clinical or care pathway for the condition of interest when conceptualizing their model.” Agencies could push the transparency agenda further by, for example, requiring the manufacturer to use reference models instead of their own in-house models. This should lead to the use (or improvement) of reference models. The European Network for Health Technology Assessment (EUnetHTA) is supporting a movement towards greater transparency in HTA submissions by providing a core HTA model framework and a guide to best practices [50,51].

4.6. Professional associations

Seeing potential benefits associated with transparency, a variety of organisations have articulated their support, including the International Society for Pharmacoeconomics and Outcomes Research (ISPOR), Health Technology Assessment International (HTAi), the Academy of Managed Care Pharmacy (AMCP) [52], and the Society for Medical Decision Making (SMDM). Professional associations for individuals involved in the development of decision models can facilitate collaboration within and between different stakeholder groups. Trade associations, such as the European Federation of Pharmaceutical Industries and Associations (EFPIA), might also play a role in enabling collaboration and providing infrastructure.

It will be in the interest of professional associations to extend transparency if this reflects well on the profession or industry that they represent. For example, if transparency improves the legitimacy of modelling, the influence of relevant associations may be extended. Similarly, if transparency is seen to serve the public good, trade associations may be able to use it as a platform for positive publicity.

4.7. Wider society

A large part of the justification for the broader open science movement is that it serves society in a positive way. Especially when supported by public funding, research is often seen as a public good. Wider society can influence modelling practices through funders, policymakers, or via any other stakeholders, and could be affected by the level of transparency.

Non-specialist audiences are unlikely to utilise information that provides greater transparency with respect to the depth of reporting. However, initiatives such as model registries could facilitate the work of patient groups and other stakeholders, who may wish to understand the nature of modelling work. This may be especially relevant to the allocation of research funding, which should be guided by the public’s priorities and by the prevailing research landscape.

5. HOW? ACHIEVING TRANSPARENCY

Having identified what transparency is, why we might want it, and who needs to be involved, we outline some of the areas in which changes need to be made in order to benefit from greater transparency. Given the range of stakeholders and interests related to increased transparency of decision models, achieving the benefits while managing the risks requires careful consideration. It is also worth considering that the achievement of transparency may be more or less difficult depending on the context and the nature of any given model. For example, there may be a trade-off between transparency and complexity, as more complex models consist of more information. We discuss three broad strategies that require attention: i) removing barriers, ii) creating incentives, and iii) establishing infrastructure.

5.1. Removing barriers

5.1.1. Legal

Legal challenges can arise with respect to several manifestations of transparency. Collaboration may be particularly difficult for manufacturers, who are subject to antitrust legislation. This is an area where companies will need to tread carefully if they are to work together. In practice, it may mean working through a third party (such as a patient organisation or consultancy company) whereby such concerns are minimised because the project is hosted by the third party. Efforts should be focussed towards facilitating direct collaboration between companies on the development of an evidence base, without risk of legal proceedings.

One example of collaboration which has managed to navigate this area is economic modelling in Duchenne Muscular Dystrophy. In this area, multiple companies developing assets have come together to jointly develop evidence that will be required for market access, including data on the natural history of the disease, burden of illness, and an economic model. Whilst there were numerous issues in the project being set up, the role of the patient organisation in holding the contracts and providing leadership was key [53].

The current flux in privacy law, particularly the introduction of the General Data Protection Regulation (GDPR) in the European Union, also complicates transparency in modelling. Analysts and agents need to be particularly careful regarding potentially identifiable patient data and how they are shared. This is an active area of research; how to anonymise data is particularly problematic in rare diseases, where even the most basic information needed for modelling potentially makes it possible to identify patients [54].

It is vital that model transparency initiatives are able to manage data protection requirements. The rise of open source statistical software such as R [55] and Python [56], and adoption of standard practices in structuring code, will increase accessibility and readability. Patient-level data can be replaced with either summary distributions or pseudo-patient level data (samples drawn from the respective joint summary distributions).
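As a minimal sketch of the pseudo-patient approach, assuming that only the means, standard deviations, and correlation of two patient characteristics can be shared, the following Python code draws pseudo-patient level data from a bivariate normal distribution. The function names, variables (age and annual cost), and all numerical values are hypothetical and chosen purely for illustration; a real application would need distributions appropriate to the data (a normal distribution can, for instance, generate negative costs).

```python
import math
import random
import statistics


def simulate_pseudo_patients(n, mean_age, sd_age, mean_cost, sd_cost, rho, seed=0):
    """Draw n pseudo-patients (age, cost) from a bivariate normal summary.

    Only the summary statistics are needed, so no real patient record is shared.
    """
    rng = random.Random(seed)  # fixed seed so that the sample is reproducible
    patients = []
    for _ in range(n):
        z1 = rng.gauss(0.0, 1.0)
        z2 = rng.gauss(0.0, 1.0)
        # Combine two independent standard normals so that age and cost
        # have correlation rho (a 2x2 Cholesky decomposition written out).
        age = mean_age + sd_age * z1
        cost = mean_cost + sd_cost * (rho * z1 + math.sqrt(1.0 - rho ** 2) * z2)
        patients.append((age, cost))
    return patients


def pearson(xs, ys):
    """Sample Pearson correlation, used here to check the simulated data."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical summary statistics standing in for a confidential data set.
pseudo_patients = simulate_pseudo_patients(
    n=5000, mean_age=65.0, sd_age=8.0, mean_cost=1200.0, sd_cost=300.0, rho=0.4, seed=42
)
ages = [age for age, _ in pseudo_patients]
costs = [cost for _, cost in pseudo_patients]
print(round(statistics.fmean(ages), 1), round(statistics.fmean(costs), 1))
print(round(pearson(ages, costs), 2))
```

The simulated sample reproduces the published summary statistics approximately, so a model built on it can be shared and re-run without exposing any individual record. Whether such a sample is adequate depends on how well the chosen joint distribution represents the original data.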

5.1.2. Software

Software plays an important role in the definition of model components and in stakeholders’ understanding of their function. Some modelling software is more suited to sharing and collaboration than others. Purpose-built software, such as TreeAge Pro, may have the benefit of allowing easy model development and use but can lack transparency. Microsoft Excel can be used to generate models that are transparent in the sense that the calculations are presented in the worksheets, and spreadsheet models are sometimes recommended on this basis [52]. However, for a reader to understand the model structure in more complex models, they may be required to trace the model calculations cell-by-cell. Thus, the choice of software can be a barrier to transparency or to the cost of deriving value from that transparency. Furthermore, the statistical limitations of Excel mean that often more sophisticated calculations are conducted in specialist statistical software such as Stata, which may be a further hindrance to transparency.

5.1.3. Knowledge

There are at least two respects in which a lack of knowledge may prevent analysts from achieving transparency. First, there is limited specific guidance on making decision models transparent. Second, many analysts (and those reviewing models) are not trained in the use of software that facilitates transparency.

If reference models, open source modelling, or model registries are to be part of the model development process, then standards need to be developed to guide analysts in achieving best practice. The availability of guidance can help to create confidence in researchers to pursue transparency. Yet, selecting the best standards to use can also be challenging [57]. Wider uptake – and appropriate use – of standards, by both modellers and journals, should be encouraged, and research should evaluate the suitability of guidance relating to transparency.

As outlined above, the use of particular software packages can hinder transparency. There is increasing use of script-based languages, such as R, to both generate statistical calculations for models and build models directly [58]. Such an approach can facilitate the sharing of models, either publicly as open source or within the context of peer review or collaboration. However, transparency depends on the code being well-structured and clearly commented and a user or reviewer is required to have knowledge of the language in order to validate, use, or adapt the model. There is a steep learning curve for analysts unfamiliar with script-based models (many of whom principally use Microsoft Excel), which can act as a barrier to transparency. There may also be barriers in the communication of models due to a lack of knowledge for end-users. However, this can be substantially mitigated with the use of open access interface packages, like the R package Shiny, which can be used to turn an R model into an interactive web application [59]. This allows a user without any R knowledge to use or, in part, validate the model. Research should identify why analysts use particular software packages and the implications of this for transparency.
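To illustrate what a script-based model can look like, the following is a minimal sketch of a three-state Markov cohort model. It is written in Python purely for illustration (the same structure could be written in R), and every state name, transition probability, cost, utility value, and the discount rate is a hypothetical assumption rather than a value taken from any published model.

```python
# Hypothetical three-state model; all numbers are illustrative only.
STATES = ("Healthy", "Sick", "Dead")
TRANSITIONS = [
    [0.85, 0.10, 0.05],  # from Healthy
    [0.00, 0.70, 0.30],  # from Sick
    [0.00, 0.00, 1.00],  # from Dead (absorbing state)
]
COST_PER_CYCLE = [0.0, 2000.0, 0.0]
QALY_PER_CYCLE = [1.0, 0.6, 0.0]


def run_cohort(transitions, costs, qalys, n_cycles, discount_rate=0.035):
    """Return (trace, total discounted cost, total discounted QALYs).

    The whole cohort starts in the first state. Costs and QALYs are
    accrued at the end of each cycle and discounted accordingly.
    """
    n_states = len(transitions)
    state = [1.0] + [0.0] * (n_states - 1)
    trace = [state]
    total_cost = 0.0
    total_qalys = 0.0
    for cycle in range(1, n_cycles + 1):
        # Move the cohort forward by one cycle (row vector times matrix).
        state = [
            sum(state[i] * transitions[i][j] for i in range(n_states))
            for j in range(n_states)
        ]
        trace.append(state)
        discount = 1.0 / (1.0 + discount_rate) ** cycle
        total_cost += discount * sum(p * c for p, c in zip(state, costs))
        total_qalys += discount * sum(p * q for p, q in zip(state, qalys))
    return trace, total_cost, total_qalys


trace, total_cost, total_qalys = run_cohort(
    TRANSITIONS, COST_PER_CYCLE, QALY_PER_CYCLE, n_cycles=20
)
print(f"Discounted cost per patient: {total_cost:.2f}")
print(f"Discounted QALYs per patient: {total_qalys:.3f}")
```

Every input and every calculation step is visible in a few dozen lines of text, which is what makes such a model straightforward to share, review, and version. The same brevity shows the knowledge barrier: a reviewer still needs to be able to read the language.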

Training and education are key to addressing knowledge barriers, and should be directed towards the aforementioned challenges: knowledge of good practice and technical expertise. Further training opportunities need to be created to facilitate a transition to more transparent practices in the use of software.

5.2. Creating incentives

5.2.1. Monetary

All stakeholders involved in the development of decision models are potentially motivated by financial incentives. If intellectual property rights are maintained when models are transparent, there is an opportunity to monetise models. Such an approach could potentially be adopted by consulting companies or researchers. Using script-based models, proprietary sections of code could be attributed to the developers and any updates vetted and versioned. In the research sector, policymakers could reward transparency with financial support. Such a scheme could be analogous (or contiguous) to existing national research assessments, such as the Research Excellence Framework in the UK.

5.2.2. Non-monetary

At present, analysts are, in general, not incentivised to ensure transparency in the development of models. In order to facilitate increases in transparency, a non-monetary incentive structure should be created. This may take the form of explicit sources where models can be cited (as has occurred with The Journal of Statistical Software) or forms of ‘kudos’ or credibility as seen on systems like GitHub [60]. Whilst incentives may help in promoting transparency, a change in culture to one where transparency is expected from all parties is more likely to have an effect than more ephemeral rewards, which may not apply equally across sectors (publications, for instance, are not a performance indicator in the commercial sector).

5.2.3. Quality assurance

Incentives to use (as well as create) transparent models should also be implemented. If more models are reported transparently, quality assurance will become increasingly important. Users of models are more likely to express demand for open source models or reference models if their quality is assured. This may involve testing of code integrity by a third party or other forms of validation.
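One form that third-party testing of code integrity could take is a set of automated checks that any reviewer can run against a shared model. The following is a minimal sketch in Python, assuming a Markov cohort model whose transition probabilities are stored as a square matrix (a list of rows). The function name and the example matrices are hypothetical, and such checks cover only basic internal consistency, not the clinical or structural validity of a model.

```python
import math


def check_transition_matrix(matrix, tol=1e-9):
    """Return a list of human-readable problems; an empty list means none found."""
    problems = []
    n_states = len(matrix)
    for i, row in enumerate(matrix):
        if len(row) != n_states:
            problems.append(f"row {i} has {len(row)} entries, expected {n_states}")
            continue
        for j, p in enumerate(row):
            if not 0.0 <= p <= 1.0:
                problems.append(f"entry ({i}, {j}) = {p} is not a probability")
        # Each row describes where the cohort in state i goes, so it must sum to 1.
        if not math.isclose(sum(row), 1.0, abs_tol=tol):
            problems.append(f"row {i} sums to {sum(row)}, expected 1")
    return problems


# Hypothetical matrices: one internally consistent, one with a row that sums to 1.1.
valid_matrix = [[0.85, 0.10, 0.05], [0.00, 0.70, 0.30], [0.00, 0.00, 1.00]]
invalid_matrix = [[0.90, 0.20], [0.00, 1.00]]

print(check_transition_matrix(valid_matrix))
print(check_transition_matrix(invalid_matrix))
```

Checks of this kind could be run automatically whenever a model is updated, giving users some assurance that basic errors have not been introduced between versions.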

5.3. Establishing infrastructure

5.3.1. Mandates

As an alternative or complement to creating incentives, analysts could be mandated to make models transparent in specified ways. HTA agencies, in particular, are in a strong position to encourage transparency. For example, HTA agencies could tighten the requirements for confidentiality marking. Currently, HTA bodies provide guidance for companies to protect their confidential information but provide little incentive for companies to be as open as possible in doing so. Much of the documentation made available for each NICE technology appraisal – for example – contains redacted data. As noted in 4.5, HTA agencies could build on requirements for the manufacturer to base their model on a systematic review of evidence and submit the model source code, towards an open source model registry, noting confidentiality issues.

As we see movement towards less emphasis on technology adoption and more discussion on ‘technology management’ by agencies and health care systems, the case is strengthened for ongoing maintenance of models, especially reference or policy models. The argument here is for policy models to be used as analytic infrastructure for health system management, with health economists supporting the search for efficiency (setting the agenda for policy changes) rather than reacting to a flow of technologies and guiding one-off yes/no decisions [61,62]. Further, technology management implies an ongoing assessment of technologies through their life-course, and models again represent the obvious analytic vehicle for this. If the health care system sees ongoing value in policy models then the funding for maintenance and development of such models could flow from the system itself.

5.3.2. Platforms

Bertagnolli and colleagues [63] have discussed data sharing for clinical trial results—a feat more than 60 years in the making—which has been hampered by similar forces to those facing the sharing of decision models, namely a lack of systems technology to facilitate the sharing of information. Some of the challenges can be overcome by using gatekeeper models (a central repository overseen by an independent expert committee), active open source data sharing models [63] and federated data models (whereby the data requester’s analyses are run from the data owner’s computer) [64].

There is an increasing number of online tools that are viable for storing and sharing model source code and related content, including GitHub, FigShare (Digital Science), and Mendeley (Elsevier). Code for models published in peer-reviewed journals can be provided as supplementary material and therefore available for further adaptation by other researchers.

R models in particular lend themselves to being shared via a version-controlled code repository like GitHub. A repository can be archived and assigned a Digital Object Identifier, making it citable. All changes are tracked, which means the model can evolve beyond the initial publication and the differences between versions can be easily determined. This is particularly helpful for validation. Conflicts which may occur when collaborators are working on the same part of the code are flagged and can then be resolved to preserve code integrity. Anyone wishing to use the model can pull the code onto their own machine and amend accordingly. The effects of environment discrepancies can be averted by deploying via a software container platform such as Docker. The code and the dependencies required for it to run are packaged up together ensuring that model functionality and outcomes are the same on different machines.

The information provided may not be an adequate basis on which to judge the appropriateness of the model, which will likely require an explanation. Several of us are involved in the development of an open source model exchange platform that would initially require manual model vetting via an advisory panel using the CHEERS checklist; this would eventually be replaced with an algorithm to automate the process [65].

Thus, there are many options already available (and more in development) that could constitute platforms for making models more transparent. Yet, the lack of an accepted platform and standards for easily sharing models means that very few are ever made available to peer reviewers, let alone expert readers, public health officials, or decision-makers. A lack of knowledge in the use of existing platforms may be a barrier, and so training for decision modellers will be necessary. Concerns about intellectual property may also be a barrier to model transparency, for both individuals and organisations. Thus, it is important to create an infrastructure in which intellectual property is not threatened by initiatives to increase transparency.

The infrastructure associated with maintaining any transparent modelling initiative will require funding. Stakeholders need to come together to identify who will sustainably fund the practical and technical support required.

5.3.3. Stakeholder networks

There are many stakeholders in the development and use of decision models in health care and an ongoing dialogue needs to be maintained. Analysts require reassurance that there are no serious risks associated with making models more transparent.

Despite the importance of models for comparative effectiveness and cost-effectiveness research and guideline development, there is no efficient process for creating, disseminating, sharing, evaluating, and updating these models. These issues must be evaluated and debated in an open forum to facilitate collaboration while honestly exploring how best to afford protections where they are required or desired. Publishers may also play an intermediary role in the debate between different researchers, companies, and other stakeholders. Greater transparency can raise issues of copyright and access, so there is a need to define how model sharing can be achieved in a fair and equitable manner [3,28,66]. We have developed a special interest group (SIG) within ISPOR to curate an ongoing dialogue around the creation, dissemination, sharing, evaluation, and updating of open source cost-effectiveness models.

There is a clear direction in health research internationally for a stronger patient orientation, with all research about patients being guided and driven by those with the lived experience of the illness in question (e.g. in Canada [67], the US [68], and the UK [69]). This case has been made in the specific context of decision modelling by van Voorn et al [70]. A key benefit of transparency, therefore, is that it potentially allows for the fuller engagement of patients and caregivers as stakeholders. Some groups are moving strongly in this direction, notably the Innovation and Value Initiative.

6. CONCLUSION

We have outlined six distinct (though connected) manifestations of transparency: i) model registration, ii) reporting standards, iii) reference models, iv) open source modelling, v) peer review, and vi) collaboration. Each of these involves different processes, promoting transparency in distinct ways. Any given initiative may involve the promotion or implementation of multiple manifestations of transparency, but it is important to recognise the unique role of each. This is because no single manifestation is a sufficient tool for the achievement of transparency, and each is subject to its own limitations, challenges, and benefits. Greater transparency in modelling has the potential to support wider and more effective scrutiny of models, which could lead to a reduction in technical errors and facilitate incremental improvements to support external validity. However, the implementation of transparency initiatives does not guarantee these goals and, in order to be successful, changes are required within the modelling community. Legal, practical, and intellectual barriers prevail, which could hamper transparency initiatives. It is vital that stakeholders work together to identify risks and opportunities in increasing transparency to develop a supportive and sustainable infrastructure.

KEY POINTS FOR DECISION-MAKERS

There is a variety of manifestations of transparency in decision modelling, ranging in the amount of information and accessibility of information that they tend to provide.

There is a broad array of stakeholders who create, manage, influence, evaluate, use, or are otherwise affected by, decision models.

Given the range of stakeholders and interests related to increased transparency of decision models, achieving the benefits while managing the risks requires careful consideration. Issues such as intellectual property, legal matters, funding, use, and sharing of software need to be addressed.

ACKNOWLEDGEMENTS

SE kindly acknowledges his colleagues at Collaborations Pharmaceuticals.

FUNDING

SE acknowledges funding from NIH/NIGMS R43GM122196, R44GM122196-02A1.

COMPLIANCE WITH ETHICAL STANDARDS

No specific funding was received to support the preparation of this manuscript. CS is an employee of the Office of Health Economics, a registered charity, research organisation, and consultancy, which receives funding from a variety of sources including the Association of the British Pharmaceutical Industry and the National Institute for Health Research (NIHR). CS has received honoraria from the NIHR in relation to peer review activities. CS has received travel support from Duke University for attendance at a meeting related to the content of this manuscript. SB receives salary support from the University of British Columbia, Vancouver Coastal Health and the British Columbia Academic Health Science Network. He previously chaired CADTH’s Health Technology Expert Review Panel and provides consultancy advice to CADTH, for which he received honoraria and travel support. All of his decision model project work is supported through public sources, primarily the CIHR and the BC Ministry of Health. PC has received payment for workshops run alongside Mt Hood conferences. SE is CEO and Owner of Collaborations Pharmaceuticals, Inc. SE has received a grant from the National Institutes of Health to build machine learning models and software. DM is employed by the University of Calgary, with a salary funded by Arthur J.E. Child Chair in Rheumatology and Canada Research Chair in Health Systems and Services Research. DM’s research is funded through multiple funding organisations including the Canadian Institutes of Health Research (CIHR), Arthritis Society, CRA, and AAC in peer-reviewed funding competitions, none of which relate to this work. DM has received reimbursement for travel from Illumina and Janssen for attendance and presentation at scientific meetings not related to this work. RA, PC, AH, NH, SL, MS, WS, EW, and TW declare that they have no conflicts of interest.

Contributor Information

Christopher James Sampson, The Office of Health Economics, 7th Floor, Southside, 105 Victoria Street, London, SW1E 6QT, United Kingdom.

Renée Arnold, Arnold Consultancy & Technology, LLC, 15 West 72nd Street - 23rd Floor, New York, NY 10023-3458, United States.

Stirling Bryan, University of British Columbia, 701-828 West 10th Avenue, Research Pavilion, Vancouver, BC V5Z 1M9, Canada.

Philip Clarke, University of Oxford, Richard Doll Building, Old Road Campus, Oxford OX3 7LF, United Kingdom.

Sean Ekins, Collaborations Pharmaceuticals Inc., 840 Main Campus Drive, Lab 3510, Raleigh, NC 27606, United States.

Anthony Hatswell, Delta Hat, 212 Tamworth Road, Nottingham, NG10 3GS, United Kingdom.

Neil Hawkins, University of Glasgow, 1 Lilybank Gardens, Glasgow G12 8RZ, United Kingdom.

Sue Langham, Maverex Limited, 5 Brooklands Place, Brooklands Road, Sale, Cheshire, M33 3SD, United Kingdom.

Deborah Marshall, University of Calgary, 3280 Hospital Drive NW, Calgary, Alberta T2N 4Z6, Canada.

Mohsen Sadatsafavi, University of British Columbia, 2405 Wesbrook Mall, Vancouver, BC, V6T1Z3, Canada.

Will Sullivan, BresMed Health Solutions, Steel City House, West Street, Sheffield, S1 2GQ, United Kingdom.

Edward C F Wilson, Health Economics Group, Norwich Medical School, University of East Anglia, Norwich, NR4 7TJ, United Kingdom.

Tim Wrightson, Adis Publications, 5 The Warehouse Way, Northcote 0627, Auckland, New Zealand.

REFERENCES

- 1. Daniels N, Sabin J. Limits to Health Care: Fair Procedures, Democratic Deliberation, and the Legitimacy Problem for Insurers. Philosophy & Public Affairs. 1997;26:303–50.

- 2. Daniels N, Sabin JE. Accountability for Reasonableness. Setting Limits Fairly: Can we learn to share medical resources? Oxford University Press; 2002.

- 3. Eddy DM, Hollingworth W, Caro JJ, Tsevat J, McDonald KM, Wong JB. Model Transparency and Validation: A Report of the ISPOR-SMDM Modeling Good Research Practices Task Force–7. Medical Decision Making. 2012;32:733–43.

- 4. Philips Z, Ginnelly L, Sculpher M, Claxton K, Golder S, Riemsma R, et al. Review of guidelines for good practice in decision-analytic modelling in health technology assessment. Health Technology Assessment. 2004;8:iii–iv, ix–xi, 1–158.

- 5. Sampson CJ, Wrightson T. Model Registration: A Call to Action. PharmacoEconomics - Open. 2017;1:73–7.

- 6. Freemantle N, Mason J. Publication Bias in Clinical Trials and Economic Analyses. PharmacoEconomics. 1997;12:10–6.

- 7. Sacristán JA, Bolaños E, Hernández JM, Soto J, Galende I. Publication bias in health economic studies. PharmacoEconomics. 1997;11:289–92.

- 8. Mt Hood Database [Internet]. Mt Hood Diabetes Challenge Network. [cited 2019 Feb 18]. Available from: https://www.mthooddiabeteschallenge.com/registry

- 9. CISNET Model Registry Home [Internet]. [cited 2019 Feb 18]. Available from: https://resources.cisnet.cancer.gov/registry

- 10. Global Health CEA - Open-Source Model Clearinghouse [Internet]. [cited 2019 Feb 18]. Available from: http://healtheconomics.tuftsmedicalcenter.org/orchard/open-source-model-clearinghouse

- 11. Husereau D, Drummond M, Petrou S, Carswell C, Moher D, Greenberg D, et al. Consolidated Health Economic Evaluation Reporting Standards (CHEERS) Statement. PharmacoEconomics. 2013;31:361–7.

- 12. Caro JJ, Briggs AH, Siebert U, Kuntz KM. Modeling Good Research Practices—Overview: A Report of the ISPOR-SMDM Modeling Good Research Practices Task Force–1. Medical Decision Making. 2012;32:667–77.

- 13. Sanghera S, Frew E, Roberts T. Adapting the CHEERS Statement for Reporting Cost-Benefit Analysis. PharmacoEconomics. 2015;33:533–4.

- 14. Husereau D, Drummond M, Petrou S, Carswell C, Moher D, Greenberg D, et al. Consolidated Health Economic Evaluation Reporting Standards (CHEERS)—Explanation and Elaboration: A Report of the ISPOR Health Economic Evaluation Publication Guidelines Good Reporting Practices Task Force. Value in Health. 2013;16:231–50.

- 15. Haji Ali Afzali H, Karnon J. Addressing the Challenge for Well Informed and Consistent Reimbursement Decisions: The Case for Reference Models. PharmacoEconomics. 2011;29:823–5.

- 16. Afzali HHA, Karnon J, Merlin T. Improving the Accuracy and Comparability of Model-Based Economic Evaluations of Health Technologies for Reimbursement Decisions: A Methodological Framework for the Development of Reference Models. Medical Decision Making. 2013;33:325–32.

- 17. Frederix GWJ, Haji Ali Afzali H, Dasbach EJ, Ward RL. Development and Use of Disease-Specific (Reference) Models for Economic Evaluations of Health Technologies: An Overview of Key Issues and Potential Solutions. PharmacoEconomics. 2015;33:777–81.

- 18.Lewsey JD, Lawson KD, Ford I, Fox K a. A, Ritchie LD, Tunstall-Pedoe H, et al. A cardiovascular disease policy model that predicts life expectancy taking into account socioeconomic deprivation. Heart. 2015;101:201–8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Rydzak CE, Cotich KL, Sax PE, Hsu HE, Wang B, Losina E, et al. Assessing the Performance of a Computer-Based Policy Model of HIV and AIDS. PLOS ONE. 2010;5:e12647. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Cancer Intervention and Surveillance Modeling Network (CISNET) [Internet]. [cited 2019 Feb 18]. Available from: https://cisnet.cancer.gov/

- 21.Hennessy DA, Flanagan WM, Tanuseputro P, Bennett C, Tuna M, Kopec J, et al. The Population Health Model (POHEM): an overview of rationale, methods and applications. Popul Health Metr. 2015;13:24. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Sadatsafavi M, Ghanbarian S, Adibi A, Johnson K, FitzGerald JM, Flanagan W, et al. Development and Validation of the Evaluation Platform in COPD (EPIC): A Population-Based Outcomes Model of COPD for Canada. Medical Decision Making. 2019;39:152–67. [DOI] [PubMed] [Google Scholar]

- 23.Sullivan W, Hirst M, Beard S, Gladwell D, Fagnani F, López Bastida J, et al. Economic evaluation in chronic pain: a systematic review and de novo flexible economic model. Eur J Health Econ. 2016;17:755–70. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Incerti D, Curtis JR, Shafrin J, Lakdawalla DN, Jansen JP. A Flexible Open-Source Decision Model for Value Assessment of Biologic Treatment for Rheumatoid Arthritis. PharmacoEconomics. 2019; [DOI] [PubMed] [Google Scholar]

- 25.Global Health CEA - About the Clearinghouse [Internet]. [cited 2019 Feb 14]. Available from: http://healtheconomics.tuftsmedicalcenter.org/orchard/about-the-clearinghouse

- 26.Open-Source Value Platform [Internet]. Innovation and Value Initiative. [cited 2019 Feb 14]. Available from: https://www.thevalueinitiative.org/open-source-value-project/

- 27.Priem J, Hemminger BM. Decoupling the scholarly journal. Front Comput Neurosci [Internet]. 2012. [cited 2019 Jun 6];6. Available from: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3319915/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Arnold RJG, Ekins S. Time for Cooperation in Health Economics among the Modelling Community: PharmacoEconomics. 2010;28:609–13. [DOI] [PubMed] [Google Scholar]

- 29.American Diabetes Association Consensus Panel. Guidelines for Computer Modeling of Diabetes and Its Complications. Diabetes Care. 2004;27:2262–5. [DOI] [PubMed] [Google Scholar]

- 30.Hayes AJ, Leal J, Gray AM, Holman RR, Clarke PM. UKPDS outcomes model 2: a new version of a model to simulate lifetime health outcomes of patients with type 2 diabetes mellitus using data from the 30 year United Kingdom Prospective Diabetes Study: UKPDS 82. Diabetologia. 2013;56:1925–33. [DOI] [PubMed] [Google Scholar]

- 31.Palmer AJ, Si L, Tew M, Hua X, Willis MS, Asseburg C, et al. Computer Modeling of Diabetes and Its Transparency: A Report on the Eighth Mount Hood Challenge. Value in Health. 2018;21:724–31. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Project HERCULES [Internet]. Duchenne UK. [cited 2019 Feb 14]. Available from: https://www.duchenneuk.org/project-hercules

- 33.Hoogendoorn M, Feenstra TL, Asukai Y, Briggs AH, Hansen RN, Leidl R, et al. External Validation of Health Economic Decision Models for Chronic Obstructive Pulmonary Disease (COPD): Report of the Third COPD Modeling Meeting. Value in Health. 2017;20:397–403. [DOI] [PubMed] [Google Scholar]

- 34.Mount Hood 4 Modeling Group. Computer modeling of diabetes and its complications: a report on the Fourth Mount Hood Challenge Meeting. Diabetes Care. 2007;30:1638–46. [DOI] [PubMed] [Google Scholar]

- 35.Palmer AJ. Computer Modeling of Diabetes and Its Complications: A Report on the Fifth Mount Hood Challenge Meeting. Value in Health. 2013;16:670–85. [DOI] [PubMed] [Google Scholar]

- 36.About Cochrane | Cochrane Library [Internet]. [cited 2019 Feb 14]. Available from: https://www.cochranelibrary.com/about/about-cochrane

- 37.Goeree R, Levin L. Building Bridges Between Academic Research and Policy Formulation: The PRUFE Framework - an Integral Part of Ontario’s Evidence-Based HTPA Process. PharmacoEconomics. 2006;24:1143–56. [DOI] [PubMed] [Google Scholar]

- 38.Drummond M, Sorenson C. Use of pharmacoeconomics in drug reimbursement in Australia, Canada and the UK: what can we learn from international experience? In: Arnold RJG, editor. Pharmacoeconomics: From Theory to Practice [Internet]. Florida, USA: CRC Press; 2009. [cited 2019 Jan 17]. p. 175–96. Available from: http://www.taylorandfrancis.com/default.asp [Google Scholar]

- 39.Cooper NJ, Sutton AJ, Ades AE, Paisley S, Jones DR, on behalf of the working group on the ‘use of evidence in economic decision models.’ Use of evidence in economic decision models: practical issues and methodological challenges. Health Economics. 2007;16:1277–86. [DOI] [PubMed] [Google Scholar]

- 40.Barton P Development of the Birmingham Rheumatoid Arthritis Model: past, present and future plans. Rheumatology (Oxford). 2011;50:iv32–8. [DOI] [PubMed] [Google Scholar]

- 41.Localio AR, Goodman SN, Meibohm A, Cornell JE, Stack CB, Ross EA, et al. Statistical Code to Support the Scientific Story. Annals of Internal Medicine. 2018;168:828. [DOI] [PubMed] [Google Scholar]

- 42.Christensen G, Miguel E. Transparency, Reproducibility, and the Credibility of Economics Research. Journal of Economic Literature. 2018;56:920–80. [Google Scholar]

- 43.Merlo G, Page K, Ratcliffe J, Halton K, Graves N. Bridging the Gap: Exploring the Barriers to Using Economic Evidence in Healthcare Decision Making and Strategies for Improving Uptake. Appl Health Econ Health Policy. 2015;13:303–9. [DOI] [PubMed] [Google Scholar]

- 44.Vemer P, Corro Ramos I, van Voorn GAK, Al MJ, Feenstra TL. AdViSHE: A Validation-Assessment Tool of Health-Economic Models for Decision Makers and Model Users. PharmacoEconomics. 2016;34:349–61. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Hua X, Lung TW-C, Palmer A, Si L, Herman WH, Clarke P. How Consistent is the Relationship between Improved Glucose Control and Modelled Health Outcomes for People with Type 2 Diabetes Mellitus? a Systematic Review. PharmacoEconomics. 2017;35:319–29. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Goodacre S Being economical with the truth: how to make your idea appear cost effective. Emergency Medicine Journal. 2002;19:301–4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Herndon T, Ash M, Pollin R. Does high public debt consistently stifle economic growth? A critique of Reinhart and Rogoff. Cambridge J Econ. 2014;38:257–79. [Google Scholar]

- 48.Dunlop WCN, Mason N, Kenworthy J, Akehurst RL. Benefits, Challenges and Potential Strategies of Open Source Health Economic Models. PharmacoEconomics. 2017;35:125–8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Laine C, Goodman SN, Griswold ME, Sox HC. Reproducible Research: Moving toward Research the Public Can Really Trust. Annals of Internal Medicine. 2007;146:450. [DOI] [PubMed] [Google Scholar]

- 50.Lampe K, Mäkelä M, Garrido MV, Anttila H, Autti-Rämö I, Hicks NJ, et al. The HTA Core Model: A novel method for producing and reporting health technology assessments. International Journal of Technology Assessment in Health Care. 2009;25:9–20. [DOI] [PubMed] [Google Scholar]

- 51.EUnetHTA. HTA Core ModelVersion 3.0 [Internet]. 2016. January. Report No.: JA2 WP8. Available from: https://www.eunethta.eu/wp-content/uploads/2018/03/HTACoreModel3.0-1.pdf

- 52.Academy of Managed Care Pharmacy. The AMCP Format for Formulary Submissions [Internet]. 2012. Report No.: Version 3.1. Available from: http://amcp.org/practice-resources/amcp-format-formulary-submisions.pdf

- 53.Hatswell AJ, Chandler F. Sharing is Caring: The Case for Company-Level Collaboration in Pharmacoeconomic Modelling. PharmacoEconomics. 2017;35:755–7. [DOI] [PubMed] [Google Scholar]

- 54.Cole A, Towse A. Legal Barriers to the Better Use of Health Data to Deliver Pharmaceutical Innovation [Internet]. Office of Health Economics; 2018. December. Report No.: 002096. Available from: https://ideas.repec.org/p/ohe/conrep/002096.html [Google Scholar]

- 55.R Core Team. R: A language and environment for statistical computing [Internet]. Vienna, Austria: R Foundation for Statistical Computing; 2018. Available from: https://www.R-project.org/ [Google Scholar]

- 56.Python Software Foundation. Python Language Reference [Internet]. 2008. Available from: http://www.python.org

- 57.Frederix GWJ. Check Your Checklist: The Danger of Over- and Underestimating the Quality of Economic Evaluations. PharmacoEconomics - Open. 2019; [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Jalal H, Pechlivanoglou P, Krijkamp E, Alarid-Escudero F, Enns E, Hunink MGM. An Overview of R in Health Decision Sciences. Medical Decision Making. 2017;37:735–46. [DOI] [PubMed] [Google Scholar]

- 59.Chang W, Cheng J, Allaire JJ, Xie Y, McPherson J. shiny: Web Application Framework for R [Internet]. 2018. Available from: https://CRAN.R-project.org/package=shiny [Google Scholar]

- 60.Dabbish L, Stuart C, Tsay J, Herbsleb J. Social Coding in GitHub: Transparency and Collaboration in an Open Software Repository. Proceedings of the ACM 2012 Conference on Computer Supported Cooperative Work [Internet]. New York, NY, USA: ACM; 2012 [cited 2019 Feb 19]. p. 1277–1286. Available from: http://doi.acm.org/10.1145/2145204.2145396 [Google Scholar]

- 61.Bryan S, Mitton C, Donaldson C. Breaking the Addiction to Technology Adoption. Health Economics. 2014;23:379–83. [DOI] [PubMed] [Google Scholar]

- 62.Scotland G, Bryan S. Why Do Health Economists Promote Technology Adoption Rather Than the Search for Efficiency? A Proposal for a Change in Our Approach to Economic Evaluation in Health Care. Med Decis Making. 2017;37:139–47. [DOI] [PubMed] [Google Scholar]

- 63.Bertagnolli MM, Sartor O, Chabner BA, Rothenberg ML, Khozin S, Hugh-Jones C, et al. Advantages of a Truly Open-Access Data-Sharing Model. New England Journal of Medicine. 2017;376:1178–81. [DOI] [PubMed] [Google Scholar]

- 64.Sotos JG, Huyen Y, Borrelli A. Correspondence: Data-Sharing Models. New England Journal of Medicine. 2017;376:2305–6. [DOI] [PubMed] [Google Scholar]

- 65.Ekins S, Arnold RJG. From Machine Learning in Drug Discovery to Pharmacoeconomics. In: Arnold RJG, editor. Pharmacoeconomics: from theory to practice. 2nd ed. CRC Press; 2019. [Google Scholar]

- 66.Arnold RJG, Ekins S. Ahead of Our Time: Collaboration in Modeling Then and Now. PharmacoEconomics. 2017;35:975–6. [DOI] [PubMed] [Google Scholar]

- 67.Government of Canada CI of HR. Strategy for Patient-Oriented Research [Internet]. 2018. [cited 2019 Feb 18]. Available from: http://www.cihr-irsc.gc.ca/e/41204.html

- 68.PCORI [Internet]. [cited 2019 Feb 18]. Available from: https://www.pcori.org/

- 69.INVOLVE | INVOLVE Supporting public involvement in NHS, public health and social care research [Internet]. [cited 2019 Feb 18]. Available from: https://www.invo.org.uk/

- 70.van Voorn GAK, Vemer P, Hamerlijnck D, Ramos IC, Teunissen GJ, Al M, et al. The Missing Stakeholder Group: Why Patients Should be Involved in Health Economic Modelling. Appl Health Econ Health Policy. 2016;14:129–33. [DOI] [PMC free article] [PubMed] [Google Scholar]