Abstract

Objective

The spread of misinformation about COVID‐19 severely undermines governments' ability to address the COVID‐19 pandemic. This study explores the predictors of accurate beliefs about COVID‐19 and their influence on COVID‐related policy preferences and behavior.

Methods

Data from an original survey fielded by Lucid in July 2020 are used. Ordinary least squares (OLS) regression is used to predict accurate beliefs about COVID‐19. Ordered logistic regression models are estimated to examine the relationship between COVID‐19 knowledge, policy preferences, and health behavior intentions.

Results

Liberal ideology and education were found to have a positive effect on knowledge about COVID‐19. Moreover, low levels of knowledge about COVID‐19 were found to reduce support for a mandatory vaccination policy and willingness to get a coronavirus vaccine when one becomes available.

Conclusion

These findings can help policymakers develop strategies for communicating with the public about coronavirus vaccination.

Since March 2020, the COVID‐19 pandemic has devastated populations worldwide, causing considerable health, economic, and social costs. By November 2020, over 55 million individuals had contracted the virus, and over 1.3 million had died (COVID‐19 Dashboard, 2020). A disproportionate share of this burden has fallen on the United States, with over 11 million confirmed cases and over 250,000 deaths; some studies suggest that the actual number of cases could be between 6 and 24 times higher than the reported count.

Since the outbreak began in the United States, politicians and health experts have disagreed substantially about COVID‐19. Even as the United States set daily records for cases, hospitalizations, and deaths, President Trump and some Republican governors continuously downplayed the virus, claiming that the country was rounding the corner or that the virus was no worse than the common flu (Maxouris, 2020). Politicians' downplaying of the virus has public health implications and politicizes the issue, making the virus more difficult to control.

Ending the COVID‐19 pandemic in the United States will require a tremendous undertaking by government at both the state and federal levels. Downplaying the virus undermines mitigation efforts and hampers public health efforts to educate the public on how the virus spreads. Recent research has shown not only that knowledge gaps about the coronavirus emerged among the public at the beginning of the outbreak (McCormack et al., 2020) but also that these gaps influenced perceptions of mortality rates (Gollust et al., 2020), incidence rates (Alsan et al., 2020), and the effectiveness of masks (Nagler et al., 2020). While much has been written about how partisanship and conservative news media have driven conspiratorial beliefs about COVID‐19 (Motta, Stecula, and Farhart, 2020; Pickup, Stecula, and Van Der Linden, 2020; Uscinski et al., 2020), less is known about how the prevalence of misinformation influences knowledge about COVID‐19 and subsequent health behaviors.

In this article, I examine the factors shaping coronavirus knowledge and argue that politics influences it. As with other political issues, individuals view coronavirus information through a political lens, drawing on prior knowledge that reinforces their ideological predispositions and ignoring contradictory information (Lodge and Taber, 2013). I find evidence that overall coronavirus knowledge is influenced by both ideology and education level. Liberals are much more likely than conservatives to answer coronavirus questions correctly; however, education moderates this relationship. Prior work suggests that education facilitates motivated reasoning (Kahan et al., 2012), but I find a notable ideological gap at lower education levels: conservatives with lower education levels are more likely than liberals with similar levels of education to answer questions about coronavirus incorrectly.

Contrary to existing research on motivated reasoning, however, I show that conservatives with higher education levels answer coronavirus knowledge questions correctly at rates similar to those of liberals with both higher and lower education levels. Like Redlawsk, Civettini, and Emmerson (2010), I argue that the media environment surrounding coronavirus may have created a “tipping point” for highly educated conservatives, potentially mitigating motivated reasoning. Finally, I demonstrate that coronavirus knowledge is consequential for policy preferences: correct beliefs about coronavirus significantly increase support for mandatory COVID‐19 vaccination policies and willingness to get a COVID‐19 vaccine.

The Politicization of COVID‐19

The COVID‐19 pandemic captivated the attention of not only the United States but also the world. In the early days of the pandemic, individuals were glued to the news, trying to make sense of how to navigate it (Molla, 2020). In any crisis, the chance that it becomes politicized increases as more individuals are affected. With COVID‐19, however, politicization happened almost immediately: on March 16, 2020, then‐President Trump referred to COVID‐19 as the “Chinese Virus” in a tweet, fueling a series of violent crimes against Asians and Asian Americans, including an attack on a 91‐year‐old man in the Chinatown area of Oakland, CA (Yancy‐Bragg, 2021). Further fueling the politicization of COVID‐19, many governors openly defied the Centers for Disease Control and Prevention (CDC) by disparaging the use of face masks and not fully implementing social distancing measures (Groves and Kolpack, 2020; Siemaszko, 2020).

While COVID‐19 continues to pose significant risks to the country, perceptions of it in the United States have become politicized, with individuals viewing the pandemic through a political lens (Milligan, 2020; Roberts, 2020). Given research on how politicized news coverage influences public attitudes and exacerbates political divides, this raises questions about the role politicians and the media play in amplifying the politicization of COVID‐19 (Bolsen, Druckman, and Cook, 2014a; Brulle, Carmichael, and Jenkins, 2012; Druckman, Peterson, and Slothuus, 2013). Indeed, a wide array of literature suggests that both politicians and the media have shaped public opinion toward COVID‐19. For example, Hart, Chinn, and Soroka (2020) found that both network news and newspaper coverage of COVID‐19 were highly polarized. Politicians appeared more often than scientists in newspaper coverage and about as often as scientists in network news coverage. Similarly, Green et al. (2020) found that elite cues from political leaders about COVID‐19 were also polarized: when discussing emerging threats caused by the pandemic, Democrats focused on the threat to public health and American workers, while Republican leaders primarily focused on China and businesses.

The politicization of COVID‐19 by both the media and politicians severely hampers health officials' ability to contain the spread of COVID‐19 by discounting social distancing efforts and facilitating the spread of misinformation in right‐wing media circles (Motta, Stecula, and Farhart, 2020). Gadarian, Goodman, and Pepinsky (2020) found that political differences were the single most important factor determining health behaviors and policy preferences: Democrats were much more likely to report having adopted a number of health behaviors in response to the pandemic and much more likely to approve of policies to prevent the spread of COVID‐19. Likewise, Stecula and Pickup (2021) found that individuals who believe conspiracy theories about COVID‐19 are less likely to follow public health recommendations.

Given these findings and the politicization of the pandemic by both the media and politicians, it is reasonable to expect that individuals will default to information that aligns with their political priors. Motivated reasoning thus provides a valuable framework for evaluating how knowledge about COVID‐19 influences policy preferences and health behaviors.

Theory and Hypotheses

Misinformation in politics did not begin with COVID‐19; it has long been a staple of the American political system, with real consequences. Misinformation about public health, however, directly threatens public health and can change how individuals interact with the health system (Goldacre, 2009; Vogel, 2017). Scholars have long studied individuals' tendency to believe inaccurate news stories on a range of policy issues, including climate change, public health, and gun control (Aronow and Miller, 2016). One explanation scholars have offered for why individuals hold inaccurate beliefs is partisan motivated reasoning (Kahan, 2012; Lodge and Taber, 2013). Motivated reasoning posits that two distinct goals become activated when an individual processes information (Kunda, 1990). The first is directional: it motivates individuals to reach a specific conclusion. Individuals seek out information that reinforces their political preferences, allowing them to rationalize away conflicting information and remain consistent with their partisan beliefs (Lauderdale, 2016). The second is accuracy: when asked a question of fact, individuals want to answer it correctly. This motivation leads individuals to assess information objectively by carefully considering all of the information presented (Baumeister and Newman, 1994). Both goals shape how people search for and integrate information to reach conclusions, which makes motivated reasoning a useful framework for assessing how the public processes information about the coronavirus.

Previous research in political science has established that, across various policy issues, partisanship and prior beliefs about policies strongly influence how individuals process information (Bolsen, Druckman, and Cook, 2014b; Johnston, 2006; Taber and Lodge, 2006). For example, Gaines, Kuklinski, and Quirk (2007) found that Democrats and Republicans interpreted death statistics from the Iraq War differently. Similarly, Joslyn and Haider‐Markel (2014) showed that Republicans and Democrats disagreed sharply on global warming and evolution, despite broad scientific consensus on these issues.

Partisan motivated reasoning does not influence everyone equally, however, and contextual factors may shape how individuals process information. One such factor is elite messaging. Druckman, Peterson, and Slothuus (2013) found that highly polarized environments significantly influence how individuals process information: a polarized environment decreases the impact of substantive information and increases confidence in stories less grounded in reality. Another factor that could influence how individuals process information is a perceived identity threat. Research has shown that individuals are heavily influenced by their peers and social contacts (Bollinger and Gillingham, 2012; Bond et al., 2012; Gerber, Green, and Larimer, 2008; Gerber and Rogers, 2009; Meer, 2011; Paluck, 2011; Paluck and Shepherd, 2012) and may feel pressured to think in ways that conform to their existing group identities (Kahan et al., 2017; Sinclair, Stecula, and Pickup, 2012). Given the political environment created by President Trump and the intense loyalty he demanded of political figures, it would make sense for individuals to feel pressure to remain consistent with their existing group identities.

Research on how individuals process information involving matters of science proves particularly useful when examining coronavirus. When scientific information conflicts with existing predispositions, individuals find it difficult to reconcile scientific consensus with their existing beliefs. One reason for the difficulty is that real political divides exist toward science in general and experts specifically (Funk et al., 2019; Mooney, 2005, 2012). For example, Blank and Shaw (2015) found that ideology and religion influence attitudes toward science: when scientific information conflicts with individual predispositions, individuals are much less likely to accept scientific research.

Similarly, Schuldt, Roh, and Schwarz (2015) found that using the term “global warming” rather than “climate change” decreased belief in climate science among Republicans, but not among Democrats. A similar trend exists within public health, particularly regarding vaccines. Rabinowitz et al. (2016) found that liberals were more likely than conservatives to support pro‐vaccine statements, and Joslyn and Sylvester (2019) found that Republicans were more likely to believe that the MMR vaccine given to children causes autism.

In sum, the research shows that individuals are willing to go to great lengths to defend their political predispositions. With right‐leaning media coverage facilitating the spread of coronavirus misinformation and President Trump continuing to downplay the coronavirus (Garcia‐Roberts, 2020), we should expect conservatives to have difficulty correctly answering coronavirus knowledge questions when exposed to information that may contradict their predispositions.

Hypothesis 1: Conservatives will be less likely than liberals to answer questions on COVID‐19 correctly.

How, then, is directionally motivated reasoning moderated? One of the most critical moderators is cognitive sophistication, which can include education. According to the “John Q. Public” model, perceptions of political and policy issues are rooted in quick decisions. These decisions arise from what Lodge and Taber (2013) call System 1 (directional) responses, while System 2 reasoning does the “dirty work” of allowing individuals to rationalize their perceptions. One unfortunate implication of this model, however, is that individuals with higher levels of cognitive sophistication are better equipped to process information in a manner consistent with their prior beliefs.

Indeed, Lodge and Taber (2013) found that directionally motivated reasoning occurred more often among individuals with high levels of education and knowledge. Similarly, Kahan (2012) found that individuals with higher levels of cognitive sophistication were more likely to show ideologically motivated cognition. Finally, Nyhan, Reifler, and Ubel (2013) found that correcting misperceptions about the “death panels” linked to the Affordable Care Act backfired among the people scoring highest on political knowledge. In essence, among those with higher levels of sophistication, misinformation is harder to correct once it becomes ingrained, leading scholars to refer to the “paradox” of political knowledge: while a democratic citizenry needs to be informed, more informed citizens are often better able to rationalize away conflicting information.

Educational attainment is admittedly a crude indicator of cognitive sophistication, but in the absence of a Cognitive Reflection Test (CRT), a wide array of literature has found that educational attainment works as an adequate proxy for the CRT. For example, U.S. survey data on the causes of climate change have consistently shown the largest partisan disagreements among the most educated individuals (Bolin and Hamilton, 2018; Drummond and Fischhoff, 2017; Ehret, Sparks, and Sherman, 2017; McCright and Dunlap, 2011; van der Linden, Leiserowitz, and Maibach, 2018).

Beyond climate change, the same pattern among those with higher education levels appears when research examines more specific cognitive indicators, including science literacy and intelligence, numeracy, and measures of open‐minded and analytic thinking (Bolsen, Druckman, and Cook, 2015; Drummond and Fischhoff, 2017; Hamilton, Cutler, and Schaefer, 2012; Kahan et al., 2012; Kahan et al., 2017). Individuals with higher levels of education are better equipped to challenge information that conflicts with their prior attitudes, whereas individuals with lower levels of education are either unprepared to defend their predispositions or unaware of facts that threaten their political identity. This is particularly relevant to coronavirus knowledge, as even simple facts about the benefits of wearing a mask have become politicized.

Hypothesis 2: The ideological gap in knowledge about COVID‐19 should be greatest among individuals with higher levels of education.

While it is essential to understand the various predictors of COVID‐19 knowledge, it is also necessary to understand the implications for individual policy preferences. Research has found that incorrect beliefs about issues do influence policy attitudes. For example, Joslyn and Sylvester (2019) found that individuals who held inaccurate beliefs about a link between the MMR vaccine and autism were less likely to support mandatory vaccination policies and allowing unvaccinated children to attend school. Research has also shown that inaccurate beliefs lead individuals to participate less in the health system. Rabinowitz et al. (2016) argue that inaccurate beliefs about the human papillomavirus (HPV) vaccine lead to lower vaccination rates. Finally, Baumgaertner, Carlisle, and Justwan (2018) found that conservatives were less likely to express pro‐vaccination beliefs and that these beliefs directly affect vaccine propensity. We should expect to see a similar relationship between coronavirus knowledge, policy preferences, and health behavior intentions.

Hypothesis 3: Individuals with low levels of coronavirus knowledge will be less likely to support mandatory coronavirus vaccine policies compared to those with more knowledge.

Hypothesis 4: Individuals with low levels of coronavirus knowledge will be less willing to receive a coronavirus vaccine when available compared to those with more COVID‐19 knowledge.

Data and Methods

To test the expectations laid out above, I used a demographically representative survey of 7,064 U.S. adults fielded in July 2020. Respondents were invited to participate via the Lucid Theorem tool, a large online opt‐in panel that uses quota sampling to ensure representativeness across key demographics (age, gender, race, education, income, and region). Lucid initially invited 10,020 individuals to participate in this study, yielding a completion rate of 70 percent. Despite concerns about online opt‐in panels, Lucid samples have been found to be more nationally representative than other traditional convenience samples on various demographic, political, and psychological factors (Coppock and McClellan, 2019). Articles using Lucid data have previously been published in political science and public health (Callaghan et al., 2019, 2020; Haeder, Sylvester, and Callaghan, 2021; Lunz Trujillo et al., 2020). The data were weighted to population benchmarks drawn from the U.S. Census 2018 Current Population Survey (CPS). Although the unweighted data are not far off from these benchmarks, Table 1 shows that the weights improve representativeness.

TABLE 1.

Comparison of Raw and Weighted Data to National Benchmarks

| Variable | Survey Data (Raw) | Survey Data (Weighted) | Benchmark | Benchmark Source |
|---|---|---|---|---|
| Female | 51% | 51% | 51% | CPS 2018 |
| College Degree | 42% | 34% | 31% | CPS 2018 |
| Black | 11% | 13% | 13% | CPS 2018 |
| White | 67% | 62% | 62% | CPS 2018 |
| Hispanic | 13% | 17% | 18% | CPS 2018 |
| Democrat | 43% | | 34% | ANES (Wgt.) |
| Republican | 39% | | 28% | ANES (Wgt.) |
| Independent | 17% | | 32% | ANES (Wgt.) |
| Mean Age | 44 | 45 | 47 | ANES (Wgt.) |
| Median Income | $35,000–49,999 | $50,000–74,999 | $55,000–59,999 | ANES (Wgt.) |

Note: Comparison of the survey data to known population benchmarks. CPS = Current Population Survey (U.S. Census, 2018). ANES = American National Election Study (2016). CPS benchmarks are preferred given the CPS's sample size and representativeness; weighted ANES data are used whenever CPS data are unavailable (e.g., the CPS does not ask about party identification). The weights adjust for gender, education, race, age, and income. Party ID is not included in the weighting formula and is shown only for the potential interest of those who might use or otherwise consume these data. N (Survey Data) = 7,073.
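Beyond listing the variables they adjust for, the article does not describe how the weights were computed. Raking (iterative proportional fitting) is one common way to make weighted sample margins match benchmarks such as those in Table 1, so the sketch below assumes raking. The ten toy respondents are hypothetical, the two targets borrow the CPS college and female shares from Table 1, and this is not the author's weighting code.

```python
from collections import defaultdict


def rake(rows, targets, max_iter=100, tol=1e-10):
    """Raking (iterative proportional fitting) sketch.

    rows: one dict per respondent, mapping variable name -> category.
    targets: variable name -> {category: population share}; shares sum to 1.
    Returns one weight per respondent, rescaled to a mean of 1.
    """
    weights = [1.0] * len(rows)
    for _ in range(max_iter):
        largest_adjustment = 0.0
        for var, shares in targets.items():
            # Current weighted total in each category of this variable.
            totals = defaultdict(float)
            for w, row in zip(weights, rows):
                totals[row[var]] += w
            grand_total = sum(totals.values())
            # Rescale respondents so this variable's weighted margin hits its target.
            for i, row in enumerate(rows):
                factor = shares[row[var]] * grand_total / totals[row[var]]
                largest_adjustment = max(largest_adjustment, abs(factor - 1.0))
                weights[i] *= factor
        if largest_adjustment < tol:
            break
    mean_weight = sum(weights) / len(weights)
    return [w / mean_weight for w in weights]


def weighted_share(rows, weights, var, category):
    """Weighted proportion of respondents in `category` of `var`."""
    hit = sum(w for w, r in zip(weights, rows) if r[var] == category)
    return hit / sum(weights)


# Hypothetical toy sample in which college graduates are over-represented (50%).
sample = (
    [{"college": "yes", "female": "yes"}] * 3
    + [{"college": "yes", "female": "no"}] * 2
    + [{"college": "no", "female": "yes"}] * 2
    + [{"college": "no", "female": "no"}] * 3
)
targets = {
    "college": {"yes": 0.31, "no": 0.69},  # CPS share from Table 1
    "female": {"yes": 0.51, "no": 0.49},   # CPS share from Table 1
}
w = rake(sample, targets)
print(round(weighted_share(sample, w, "college", "yes"), 3))  # 0.31
print(round(weighted_share(sample, w, "female", "yes"), 3))   # 0.51
```

Raking only matches each variable's marginal distribution, not the joint distribution, which is why it remains practical when, as in Table 1, benchmarks for several variables come from separate sources.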

The key outcome variable in the analysis is Coronavirus Knowledge, measured with an 11‐item true/false battery (with a “Don't know” option) based on facts from the CDC, including questions on symptoms, prevention recommendations, and treatments. The variable is the proportion of items answered correctly, ranging from 0 to 1: a score of 0.50 indicates that the respondent answered 50 percent of the questions correctly, and a score of 1 indicates that the respondent answered every question correctly.¹ Full question wording and the distribution of responses to each question are shown in Table 2. Respondents were most likely to answer correctly about the clinical symptoms of coronavirus (77 percent), how coronavirus is commonly spread (81 percent), and standard social distancing practices (80 percent). Conversely, there was less agreement about whether coronavirus has symptoms similar to those of the common cold (49 percent), whether drugs such as remdesivir and hydroxychloroquine prevent coronavirus (51 percent), and whether coming into contact with or eating a wild animal could result in infection with coronavirus (44 percent). On average, respondents answered 68 percent of the questions correctly, and 6 percent of respondents answered every question correctly.
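A minimal sketch of the scoring rule just described follows. Two assumptions are labeled: the 11‐item answer key below is hypothetical and is not the survey's key, and a “Don't know” response is scored as incorrect.

```python
from typing import Sequence


def knowledge_score(responses: Sequence[str], key: Sequence[str]) -> float:
    """Proportion of items answered correctly (0 = none, 1 = all).

    Any response that does not match the key, including "dont_know",
    counts as incorrect; that treatment is an assumption.
    """
    if len(responses) != len(key):
        raise ValueError("exactly one response per item is required")
    correct = sum(r == k for r, k in zip(responses, key))
    return correct / len(key)


# Hypothetical 11-item answer key, for illustration only.
KEY = ["true", "false"] * 5 + ["true"]

# A respondent who gets the first six items right and answers "don't know" to the rest.
resp = KEY[:6] + ["dont_know"] * 5
print(round(knowledge_score(resp, KEY), 2))  # 0.55
```

Because the score is a proportion, it already runs from 0 to 1, matching the coding of the predictors.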

TABLE 2.

COVID‐19 Knowledge Battery and Descriptive Statistics

| Question | True | False | Don't know |
|---|---|---|---|
| Question #1: The main clinical symptoms of COVID‐19 are fever, fatigue, and a dry cough. | 77% | 11% | 12% |
| Question #2: Similar to the common cold, a stuffy nose, runny nose, and sneezing are common in individuals infected with the COVID‐19 virus. | 49% | 31% | 20% |
| Question #3: Drugs like Hydroxychloroquine and Remdesivir prevent individuals from contracting and spreading COVID‐19. | 19% | 51% | 30% |
| Question #4: Not all individuals with COVID‐19 will develop severe cases. Most deaths are occurring in individuals who are elderly and have underlying health issues. | 72% | 17% | 11% |
| Question #5: Coming into contact with or eating wild animals could result in individuals becoming infected with COVID‐19. | 25% | 44% | 30% |
| Question #6: Individuals with COVID‐19 cannot infect other individuals when a fever is not present. | 14% | 72% | 14% |
| Question #7: COVID‐19 commonly transmits through respiratory droplets (i.e., a cough or sneeze) from infected individuals. | 81% | 9% | 9% |
| Question #8: Individuals can wear masks to reduce the chance of becoming infected with COVID‐19. | 76% | 14% | 9% |
| Question #9: It is not necessary for children and young adults to take measures to prevent becoming infected with COVID‐19. | 17% | 74% | 9% |
| Question #10: To prevent becoming infected with COVID‐19, individuals should avoid going to crowded places such as train stations and avoid taking public transportation. | 80% | 11% | 9% |
| Question #11: People who have had contact with someone infected with COVID‐19 can reduce the chance of spreading the virus to others if they immediately isolate for 14 days. | 76% | 13% | 11% |

Note: Correct answers are in bold.

The primary independent variables in the analysis are ideology and education. Conservatism is measured by asking respondents to place themselves on the political spectrum, from “Extremely Liberal” to “Extremely Conservative.” The variable was recoded to range from 0 to 1, such that a score of 1 reflects individuals who identified themselves as “Extremely Conservative.” Education is an ordinal measure of the respondent's highest earned degree, recoded to range from 0 to 1, such that a score of 1 reflects a respondent who earned a college degree or higher.

Finally, I also included control variables commonly used in assessing motivated reasoning, all coded to range from 0 to 1: gender (a dichotomous indicator of whether the respondent is male or female); age (rescaled so that a score of 1 reflects the oldest respondent in the dataset); race (dichotomous indicators of whether the respondent is Black or Hispanic); and total yearly household income (a six‐point scale ranging from less than $14,000 to more than $75,000). These variables are well known to be associated with knowledge about political issues (Delli Carpini and Keeter, 1996; Delli Carpini, 2000).
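Every predictor is rescaled to run from 0 to 1. A minimal min‐max sketch follows, assuming a hypothetical 7‐point ideology item (the article does not state the number of scale points). Dividing by the observed range matches the description of age, where 1 is the oldest respondent; for ideology it assumes both endpoints of the scale appear in the data.

```python
def rescale_01(values):
    """Min-max rescale a list of numbers: the minimum maps to 0, the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("cannot rescale a constant variable")
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical 7-point item: 1 = Extremely Liberal ... 7 = Extremely Conservative.
ideology = [1, 4, 7, 2]
print(rescale_01(ideology))  # [0.0, 0.5, 1.0, 0.16666666666666666]
```

One practical consequence of this coding is that each OLS coefficient in Table 3 equals the predicted change in knowledge when that predictor moves from its minimum to its maximum.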

Results

Table 3, column 1, displays OLS estimates for the full model. Positive coefficients indicate higher knowledge scores (a larger share of questions answered correctly); negative coefficients indicate lower scores. Ideology was strongly and significantly (at the p < 0.05 level, two‐tailed) associated with coronavirus knowledge: conservatives were less likely than liberals to answer questions about COVID‐19 correctly (H1). The performance of the other variables, most notably education, increases confidence in the validity of the knowledge measure. Consistent with the literature on political knowledge, those with higher levels of educational attainment are significantly more likely than those with lower levels to answer coronavirus knowledge questions correctly (b = 0.05, p < 0.001).
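The Table 3 note states that the estimates are OLS on weighted data. A minimal sketch of weighted least squares with an ideology × education interaction follows, using NumPy on synthetic data only: the outcome is generated from the rounded interaction‐model coefficients in Table 3 plus noise, and the weights are hypothetical. It is not the author's estimation code and omits the standard errors reported in the table.

```python
import numpy as np


def weighted_ols(X, y, w):
    """Weighted least squares: minimize sum_i w_i * (y_i - X_i @ beta)**2.

    X must already include a column of ones for the intercept. Multiplying
    each row of X and y by sqrt(w_i) turns the problem into ordinary least
    squares, which np.linalg.lstsq then solves.
    """
    sw = np.sqrt(np.asarray(w, dtype=float))
    beta, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)
    return beta


# Synthetic data only: these are NOT the survey responses.
rng = np.random.default_rng(42)
n = 5000
cons = rng.uniform(0.0, 1.0, n)             # conservatism, rescaled 0-1
educ = rng.integers(0, 2, n).astype(float)  # education, 0 or 1
w = rng.uniform(0.5, 1.5, n)                # hypothetical survey weights
# Outcome built from the rounded Table 3 interaction-model coefficients plus noise.
y = 0.53 - 0.11 * cons - 0.01 * educ + 0.11 * cons * educ + rng.normal(0.0, 0.05, n)

X = np.column_stack([np.ones(n), cons, educ, cons * educ])
beta = weighted_ols(X, y, w)
print(np.round(beta, 3))  # intercept, conservatism, education, interaction
```

The estimates should land close to the values used to generate the data, which is a quick way to confirm that the design matrix and weighting are set up correctly before running on real data.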

TABLE 3.

Determinants of Knowledge About COVID‐19

| Variable or Statistic | Full Model | Interaction Model |
|---|---|---|
| Gender | 0.05*** | 0.05*** |
| | (0.007) | (0.007) |
| Age | 0.30*** | 0.30*** |
| | (0.015) | (0.015) |
| Black | −0.07*** | −0.07*** |
| | (0.013) | (0.013) |
| Hispanic | −0.04*** | −0.03*** |
| | (0.012) | (0.012) |
| Income | 0.09*** | 0.09*** |
| | (0.013) | (0.013) |
| Conservatism | −0.05*** | −0.11*** |
| | (0.012) | (0.028) |
| Education | 0.05*** | −0.01 |
| | (0.015) | (0.023) |
| Conservatism × Education | | 0.11*** |
| | | (0.037) |
| Constant | 0.50*** | 0.53*** |
| | (0.014) | (0.018) |
| Observations | 7,001 | 7,001 |
| R² | 0.18 | 0.18 |

Note: Tabled are OLS coefficients (and their standard errors). The outcome variable is overall knowledge about COVID‐19. Increased scores on this scale translate to increased knowledge about COVID‐19. Data are weighted.

***p < 0.01; **p < 0.05; *p < 0.10 (two‐tailed).

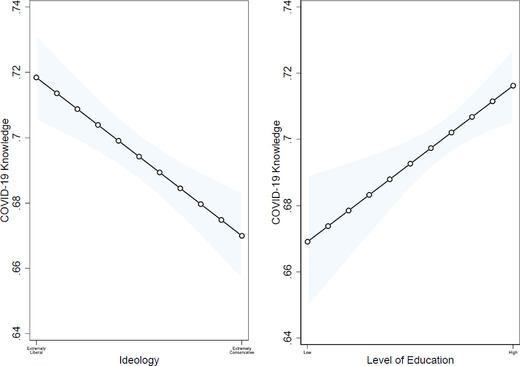

Figure 1 plots predicted coronavirus knowledge across ideology (left panel) and educational attainment (right panel), holding all other covariates constant. Recall that a score of 0.50 on the knowledge scale indicates that a respondent answered about 50 percent of the questions correctly. Individuals with lower levels of education were predicted to score 0.67, indicating that on average they answered 67 percent of the questions correctly, compared with 72 percent for individuals with higher levels of education. Ideology shows a similar pattern: liberals were predicted to score 0.72 (72 percent of questions correct), compared with 0.67 (67 percent correct) for conservatives.
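Because ideology and education are both scaled 0 to 1 and the model is linear, the gap in predicted knowledge between a predictor's minimum and maximum equals its coefficient, whatever values the other covariates are held at. The short check below uses the rounded full‐model coefficients from Table 3 and the predicted scores reported above, so it reproduces the reported gaps only up to that rounding.

```python
# Full-model OLS coefficients from Table 3 (rounded as published).
COEF = {"conservatism": -0.05, "education": 0.05}


def min_to_max_change(coef, lo=0.0, hi=1.0):
    """Change in predicted knowledge when one predictor moves from lo to hi,
    holding every other covariate fixed (exact in a linear model)."""
    return coef * (hi - lo)


liberal_pred = 0.72     # predicted score for liberals, from the text
low_educ_pred = 0.67    # predicted score for the least educated, from the text

conservative_pred = liberal_pred + min_to_max_change(COEF["conservatism"])
high_educ_pred = low_educ_pred + min_to_max_change(COEF["education"])
print(round(conservative_pred, 2))  # 0.67
print(round(high_educ_pred, 2))     # 0.72
```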

FIGURE 1.

The Predicted Effects of Ideology and Education on COVID‐19 Knowledge

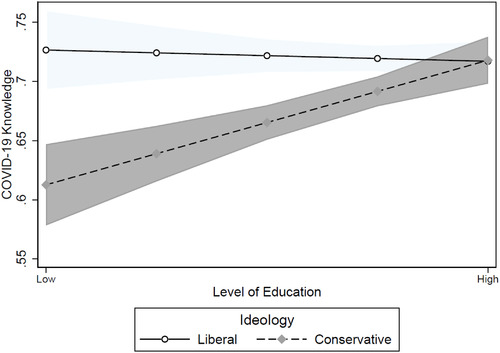

To investigate the moderating influence of education, I re‐estimated the model in Table 3 with an interaction term between political ideology and education. The interaction effect is robust (b = 0.11, p < 0.002) and indicates ideological differences among respondents with lower levels of education. Figure 2 makes this clear: it maps the effects of education for liberals and conservatives while controlling for the other predictors. As Figure 2 shows, ideological differences between liberals and conservatives appear at the lowest levels of education. These findings are inconsistent with the expectations laid out in H2. Contrary to the existing literature, which finds more partisan motivated reasoning among those with higher education levels (Kahan, 2012; Lodge and Taber, 2013), the results here show that ideological differences are more pronounced at lower levels of education. As education increases, however, conservatives begin to answer coronavirus questions correctly at rates similar to those of liberals at both lower and higher levels of education.
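With the interaction term, the effect of conservatism depends on education: it equals the conservatism coefficient plus the interaction coefficient times the education score. A sketch of that arithmetic with the rounded interaction‐model estimates from Table 3 follows. It gives point estimates only; a standard error for the conditional effect would also require the coefficient covariance, which the table does not report.

```python
# Interaction-model OLS coefficients from Table 3 (rounded as published).
B_CONS = -0.11        # conservatism main effect
B_CONS_X_EDUC = 0.11  # conservatism x education interaction


def conservatism_effect(education):
    """Liberal-to-conservative change in predicted knowledge at a given
    education score (0 = lowest, 1 = college degree or higher)."""
    return B_CONS + B_CONS_X_EDUC * education


for e in (0.0, 0.5, 1.0):
    print(e, round(conservatism_effect(e), 3))
# 0.0 -0.11
# 0.5 -0.055
# 1.0 0.0
```

The gap is largest at the lowest education level and shrinks to zero at the highest, which is the pattern described for Figure 2.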

FIGURE 2.

The Predicted Effects of Education by Ideology on COVID‐19 Knowledge

Policy Consequences of Inaccurate Knowledge of Coronavirus

Thus far, the analysis has identified the influence of both ideology and educational attainment on coronavirus knowledge. I now turn to the critical question of whether accurate beliefs about coronavirus have significant policy implications. Research has shown that factual knowledge about an issue influences political preferences and that exposure to misinformation affects how individuals view policies (Berinsky, 2017; Gilens, 2001).

Since the beginning of the pandemic, public health officials have pursued strategies to slow the spread of the disease, including educating the public on proper handwashing techniques, mask wearing, social distancing, and the symptoms of COVID‐19. A distinct strategy discussed for combating the coronavirus, however, is the development of a safe and effective vaccine. Unfortunately, mounting evidence suggests that up to half of the country is either unsure whether they will get a COVID‐19 vaccine or plans not to get one at all (Callaghan et al., 2020; Cornwall, 2020). While large segments of the population trust vaccines in general, many of these individuals are also concerned about the safety of a COVID‐19 vaccine (Lunz Trujillo and Matt, 2020).

In the survey, I asked respondents two policy questions. The first asked, “When a vaccine for the novel coronavirus (COVID‐19) becomes widely available, how likely are you to request to be vaccinated?” Responses were coded to range from 1 to 4, such that a 4 reflects an individual who is very likely to get a coronavirus vaccine when one becomes available.² The second asked, “Do you support or oppose requiring all individuals to receive a vaccination against COVID‐19 once it becomes available?” Responses were coded from 1 to 4, with 4 reflecting a respondent who strongly supports requiring individuals to get a COVID‐19 vaccine. Both models include the coronavirus knowledge scale and all of the control variables listed in Table 3.

The results of the ordered logistic regressions appear in Table 4. Positive coefficients indicate a greater probability of supporting a requirement that individuals get a coronavirus vaccine or of being willing to get one. In both models, greater coronavirus knowledge increased support for mandating a coronavirus vaccine (b = 1.21, p < 0.001) and willingness to get a coronavirus vaccine (b = 1.49, p < 0.001). Controlling for the other covariates, the influence of knowledge is relatively strong. The probability of supporting a mandatory vaccine increases by approximately 31 percent when respondents have higher levels of knowledge about coronavirus (H3). By comparison, liberals are 21 percent more likely than conservatives to support mandating a coronavirus vaccine. Results are similar for willingness to get a coronavirus vaccine: the probability of being willing to get one increases by approximately 31 percent if a respondent correctly answers the coronavirus knowledge questions (H4), and liberals are 21 percent more willing than conservatives to get a coronavirus vaccine when one is released.
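The coefficients in Table 4 are on the log‐odds scale, so statements about probabilities require converting them with the cutpoints. The sketch below shows that conversion for the first column of Table 4, assuming the parameterization Stata's `ologit` uses, which the `/cut` labels suggest: P(Y ≤ k) = logistic(cut_k − xb). The reference respondent, with every other covariate set to 0, is hypothetical, so these probabilities are illustrative and are not expected to reproduce the percentages reported above, whose averaging method the text does not state.

```python
import math


def logistic(z: float) -> float:
    """Standard logistic CDF."""
    return 1.0 / (1.0 + math.exp(-z))


def ordered_logit_probs(xb: float, cuts: list) -> list:
    """Category probabilities for an ordered logit in which
    P(Y <= k) = logistic(cut_k - xb). Returns one probability per category."""
    cumulative = [logistic(c - xb) for c in cuts] + [1.0]
    probs, previous = [], 0.0
    for c in cumulative:
        probs.append(c - previous)
        previous = c
    return probs


# Mandatory COVID Vaccine model, first column of Table 4 (rounded as published).
CUTS_MANDATORY = [-1.19, -0.12, 1.30]
B_KNOWLEDGE = 1.21

# Hypothetical reference respondent: every other covariate set to 0.
for knowledge in (0.0, 1.0):
    p = ordered_logit_probs(B_KNOWLEDGE * knowledge, CUTS_MANDATORY)
    # Order: strongly oppose, somewhat oppose, somewhat support, strongly support.
    print(knowledge, [round(x, 3) for x in p])
```

Moving knowledge from 0 to 1 shifts probability mass from the opposition categories toward "Strongly support", which is the direction the positive coefficient implies.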

TABLE 4.

The Influence of COVID‐19 Knowledge on COVID‐Related Policy and Behavior

| Variable or Statistic | (1) Mandatory COVID Vaccine | (2) Willingness to Vaccinate | (3) Mandatory COVID Vaccine | (4) Willingness to Vaccinate |
|---|---|---|---|---|
| COVID‐19 Knowledge | 1.21*** | 1.49*** | 2.33*** | 2.70*** |
| | (0.141) | (0.143) | (0.247) | (0.269) |
| Conservatism | −1.15*** | −0.97*** | 0.35 | 0.64* |
| | (0.110) | (0.113) | (0.316) | (0.356) |
| Education | 0.48*** | 0.67*** | 0.49*** | 0.67*** |
| | (0.108) | (0.109) | (0.108) | (0.110) |
| Gender | −0.24*** | −0.30*** | −0.25*** | −0.32*** |
| | (0.059) | (0.060) | (0.059) | (0.061) |
| Age | 0.63*** | 0.83*** | 0.65*** | 0.86*** |
| | (0.134) | (0.136) | (0.135) | (0.136) |
| Black | −0.41*** | −0.58*** | −0.41*** | −0.59*** |
| | (0.102) | (0.102) | (0.103) | (0.102) |
| Hispanic | −0.10 | −0.21** | −0.08 | −0.20** |
| | (0.089) | (0.089) | (0.089) | (0.088) |
| Income | 0.11 | 0.24** | 0.12 | 0.25** |
| | (0.099) | (0.099) | (0.100) | (0.099) |
| Knowledge × Conservatism | | | −2.24*** | −2.40*** |
| | | | (0.445) | (0.492) |
| /cut1 | −1.19*** | −1.09*** | −0.44** | −0.28 |
| | (0.127) | (0.131) | (0.188) | (0.203) |
| /cut2 | −0.12 | 0.02 | 0.64*** | 0.83*** |
| | (0.127) | (0.129) | (0.188) | (0.202) |
| /cut3 | 1.30*** | 1.46*** | 2.06*** | 2.28*** |
| | (0.128) | (0.131) | (0.189) | (0.204) |
| Observations | 7,001 | 7,001 | 7,001 | 7,001 |
| Pseudo R‐Squared | 0.04 | 0.06 | 0.04 | 0.06 |
| Log Pseudolikelihood | −8288.29 | −7751.99 | −8265.97 | −7727.00 |

Ordered logistic regression coefficients presented; standard errors in parentheses. Questions: Mandatory COVID Vaccine: “Do you support or oppose requiring all individuals to receive a vaccination against COVID‐19 once it becomes available?” (1 = Strongly oppose, 2 = Somewhat oppose, 3 = Somewhat support, 4 = Strongly support). Willingness to Vaccinate: “When a vaccine for the novel coronavirus (COVID‐19) becomes widely available, how likely are you to request to be vaccinated?” (1 = Not likely at all, 2 = Not too likely, 3 = Somewhat likely, 4 = Very likely).

***p < 0.01, **p < 0.05, *p < 0.10 (two‐tailed).

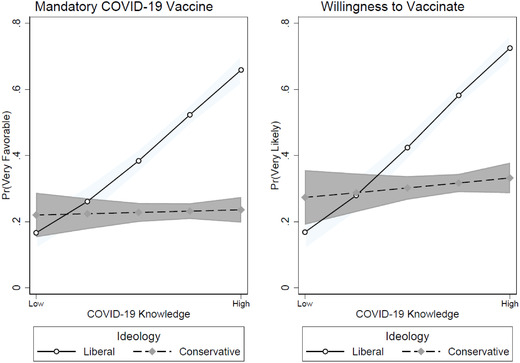

Given that knowledge and ideology consistently predict both outcomes, one would expect ideology to moderate the relationship between knowledge and vaccine attitudes. To test this, I re‐estimated both models with an interaction term between political ideology and coronavirus knowledge. The interaction terms are negative and statistically significant for both support for a mandatory vaccine (b = −2.24, p < 0.001) and willingness to get a coronavirus vaccine (b = −2.40, p < 0.001). Figure 3 graphs the predicted probabilities for both questions. Regardless of the respondent's level of knowledge about coronavirus, conservatives are less likely than liberals to support mandating a coronavirus vaccine (approximately a 42 percent decrease) and are approximately 40 percent less likely than liberals to be willing to get a coronavirus vaccine when it becomes available. In sum, the results in Table 4 and Figure 3 show that coronavirus knowledge is a consistent and relatively strong predictor of both policy preferences and health behavior.
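The moderation described above can be read directly from the Model 3 estimates in Table 4. In an interaction model, the effect of knowledge on the latent index is the knowledge coefficient plus the interaction coefficient multiplied by conservatism, so at the most conservative end of a 0 to 1 scale it would be 2.33 + (−2.24)(1) = 0.09 instead of 2.33. The Python sketch below illustrates this under loudly stated assumptions: knowledge and conservatism are both scored from 0 to 1, all other covariates sit at a hypothetical value of 0, and the cutpoints follow the Stata-style parameterization. The helper names are hypothetical, and this is an illustration of the technique, not the author's procedure for producing Figure 3.

```python
import math

# Model 3 (Mandatory COVID Vaccine) estimates copied from Table 4.
B_KNOWLEDGE = 2.33
B_CONSERVATISM = 0.35
B_INTERACTION = -2.24  # Knowledge x Conservatism
CUT3 = 2.06            # threshold between "Somewhat support" and "Strongly support"


def logistic(z):
    """Standard logistic CDF."""
    return 1.0 / (1.0 + math.exp(-z))


def knowledge_slope(conservatism):
    """Hypothetical helper: effect of knowledge on the latent index (log-odds scale)."""
    return B_KNOWLEDGE + B_INTERACTION * conservatism


def pr_strongly_support(knowledge, conservatism):
    """Pr(Y = 4) with all other covariates held at a hypothetical value of 0.

    Assumes Stata-style cutpoints: Pr(Y = 4) = 1 - F(cut3 - xb) = F(xb - cut3).
    """
    xb = (B_KNOWLEDGE * knowledge
          + B_CONSERVATISM * conservatism
          + B_INTERACTION * knowledge * conservatism)
    return logistic(xb - CUT3)


def knowledge_effect(conservatism):
    """Change in Pr(Y = 4) when knowledge moves from 0 to 1 (assumed 0-1 scale)."""
    return pr_strongly_support(1.0, conservatism) - pr_strongly_support(0.0, conservatism)


for cons in (0.0, 0.5, 1.0):
    print(f"conservatism={cons:.1f}  slope={knowledge_slope(cons):.2f}  "
          f"effect on Pr(strongly support)={knowledge_effect(cons):.3f}")
```

Under these assumptions, the negative interaction term shrinks the payoff from greater knowledge as conservatism rises, which is the pattern the interaction models are designed to detect.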

FIGURE 3.

The Predicted Effects of COVID‐19 Knowledge by Ideology on COVID‐Related Policy and Behavior

Conclusion

As public health officials continue to struggle to communicate the devastating effects of coronavirus to the public, it is crucial to understand the factors that explain coronavirus knowledge. As this paper shows, motivated reasoning provides a valuable framework for understanding why individuals would dismiss basic coronavirus facts. However, contrary to existing literature (Kahan, 2012; Lodge and Taber, 2013), the results show a more pronounced ideological difference in respondents' ability to answer coronavirus questions among those with low education levels. Although this finding was unexpected, it is less surprising in light of the political rhetoric surrounding coronavirus. Necessary health measures have become politicized and turned into symbols of political identity that individuals want to protect, allowing strong ideological motivations to bias information processing and factual understanding. Individuals with strong ideological leanings tend to absorb the information that matters most to them, and correct beliefs may or may not match their ideological identity; this pattern was most often exhibited among the least educated. Several important conclusions can be drawn from the analysis.

First, the analysis demonstrated significant ideological differences between liberals and conservatives in coronavirus knowledge. These results are not surprising, as research has shown that conservatives are more likely to accept false beliefs, especially about coronavirus (Jamieson and Albarracin, 2020; Motta, Stecula, and Farhart, 2020). Nevertheless, while conservatives are more likely to accept misinformation concerning COVID‐19, the results here indicate that conservatives still answered most coronavirus questions correctly. Given the politicized coverage of COVID‐19 and the misinformation disseminated in right‐wing media, it is remarkable that the percentage of questions answered correctly was not lower. One possible explanation is that the knowledge questions focused less on misinformation than on widely available, easily consumed information. Future research should examine how acceptance of conspiracy theories regarding COVID‐19 influences willingness to receive a COVID vaccine.

The data also yielded an intriguing result: contrary to existing literature finding that the highly educated are more likely to engage in directionally motivated reasoning (Joslyn and Sylvester, 2019; Kahan et al., 2012; Lodge and Taber, 2013), the results here suggest that directionally motivated reasoning occurred among individuals with lower levels of education. I had expected that education would more readily reinforce directionally motivated reasoning when the scientific evidence is consistent with ideological priors. This was the case for liberals, as facts about coronavirus fit well with their existing viewpoints. However, the results showed that highly educated conservatives could wrestle with their ideological priors and still correctly answer coronavirus questions. These findings may be specific to coronavirus; however, Redlawsk, Civettini, and Emmerson (2010) suggest that individuals exposed to information contrary to their priors may reach what they call a “tipping point,” where they become more willing to reconsider their views, thereby mitigating the potential effects of motivated reasoning. Despite the spread of misinformation through right‐leaning media sources (Motta, Stecula, and Farhart, 2020), coronavirus is covered so extensively in the media that individuals are consistently exposed to coronavirus knowledge, potentially creating a “tipping point” for highly educated conservatives. While not discounting past research on motivated reasoning, future research should consider whether the types of news sources individuals consume influence overall coronavirus knowledge and whether the results here are specific to coronavirus.

Second, the analysis showed that coronavirus knowledge influences policy preferences and willingness to get a coronavirus vaccine. Low coronavirus knowledge decreased support for a mandatory coronavirus vaccine and decreased willingness to get a coronavirus vaccine when one becomes available. While it is essential to understand the predictors of coronavirus knowledge, perhaps more important is the influence knowledge has on policy preferences and willingness to get a vaccine. Overcoming the coronavirus pandemic will require individuals to resist individualistic instincts. Having correct knowledge about coronavirus could encourage individuals to incorporate the societal benefits of following science into their decision‐making calculus. Conversely, incorrect beliefs about coronavirus may lead individuals to take actions that undermine efforts to overcome the pandemic and may encourage irresponsible governance and poor policy decisions (Hochschild and Einstein, 2015). The winter surge currently being witnessed in the United States offers a cautionary tale about the devastating impacts incorrect coronavirus beliefs can have on individuals and communities.

Despite the importance of these findings, it is necessary to acknowledge the limitations of the study. First, the coronavirus knowledge battery does not capture all aspects of coronavirus knowledge. Most notably, it does not tap into some of the misinformation circulating in right‐wing media circles, such as the claim that the virus was created in a lab in China. Future research should examine where respondents receive most of their coronavirus information; individuals who get coronavirus information from social media may be less likely to answer knowledge questions correctly than individuals who do not. Second, the cross‐sectional data provide only a snapshot at a single moment in time. As such, it is impossible to account for how knowledge about coronavirus changes over time and its subsequent impact on health behavior and policy preferences. It is also important to acknowledge that no vaccine had been released when the survey was conducted, and complete information about the vaccine's safety and efficacy was not yet available to the public. Finally, while the data‐collection platform Lucid is widely used in social science research, it is nonetheless an Internet‐based survey platform, and its opt‐in sampling frame limits the representativeness of the sample.

Ultimately, even with these limitations, the results have important implications for understanding communication about COVID‐19 and science communication more broadly. The findings here show evidence of motivated reasoning consistent with Kahan et al. (2012). High‐knowledge conservatives appear to use their knowledge of coronavirus to downplay concerns, remaining less supportive than we might expect relative to high‐knowledge liberals, while low coronavirus knowledge is associated with opposition among all respondents. These findings indicate that public health officials will struggle to communicate the benefits of receiving a coronavirus vaccine to individuals who already have a propensity to distrust the government and science more broadly, even when those individuals have a high level of knowledge about coronavirus. This has implications not only for the COVID‐19 pandemic but for any future health emergency that relies on the government conveying information to the public. Adding to these frustrations, public health officials will continue to compete with politicians who see electoral incentives in politicizing health emergencies (Bolsen and Druckman, 2015). Future research should examine what communication strategies public health officials may use to counter misinformation about coronavirus and encourage individuals to get a vaccine when available.

Footnotes

It is possible that answering “Don't know” is a way for a respondent to express coronavirus skepticism, or that respondents are in fact ambivalent, simultaneously holding conflicting beliefs about coronavirus. In any event, these respondents failed to answer the questions correctly.

While no state or employer is currently mandating the COVID‐19 vaccine, researchers have stated that it is likely that individuals will need a booster shot (Lovelace, 2021). Given this, it is important to understand how individuals feel about a mandatory coronavirus vaccine policy: a COVID‐19 vaccine could potentially be needed every year, COVID‐19 has been shown to be more deadly than the flu, and many employers already require employees to receive a flu vaccine to maintain employment. In addition, universities such as Rutgers, Duke University, the University of Notre Dame, two Ivy League universities (Brown and Cornell), and Northeastern University in Massachusetts are among those requiring the vaccine for the fall semester.

REFERENCES

- Alsan, Marcella, Stefanie Stantcheva, David Yang, and David Cutler. 2020. “Disparities in Coronavirus 2019 Reported Incidence, Knowledge, and Behavior Among US Adults.” JAMA Network Open 3(6):e2012403.

- Aronow, Peter M., and Benjamin T. Miller. 2016. “Policy Misperceptions and Support for Gun Control Legislation.” The Lancet 387(10015):223.

- Baumeister, Roy F., and Leonard S. Newman. 1994. “Self‐Regulation of Cognitive Inference and Decision Processes.” Personality and Social Psychology Bulletin 20(1):3–19.

- Baumgaertner, Bert, Juliet E. Carlisle, and Florian Justwan. 2018. “The Influence of Political Ideology and Trust on Willingness to Vaccinate.” PLoS One 13(1):e0191728.

- Berinsky, Adam J. 2017. “Rumors and Health Care Reform: Experiments in Political Misinformation.” British Journal of Political Science 47(2):241–62.

- Blank, Joshua M., and Daron Shaw. 2015. “Does Partisanship Shape Attitudes Toward Science and Public Policy? The Case for Ideology and Religion.” The ANNALS of the American Academy of Political and Social Science 658:18–35.

- Bolin, Jessica L., and Lawrence C. Hamilton. 2018. “The News You Choose: News Media Preferences Amplify Views on Climate Change.” Environmental Politics 27(3):455–76.

- Bollinger, Bryan, and Kenneth Gillingham. 2012. “Peer Effects in the Diffusion of Solar Photovoltaic Panels.” Marketing Science 31(6):900–12.

- Bolsen, Toby, and James N. Druckman. 2015. “Counteracting the Politicization of Science.” Journal of Communication 65(5):745–69.

- Bolsen, Toby, James N. Druckman, and Fay Lomax Cook. 2014a. “How Frames Can Undermine Support for Scientific Adaptations: Politicization and the Status‐Quo Bias.” Public Opinion Quarterly 78(1):1–26.

- Bolsen, Toby, James N. Druckman, and Fay Lomax Cook. 2014b. “The Influence of Partisan Motivated Reasoning on Public Opinion.” Political Behavior 36(2):235–62.

- Bolsen, Toby, James N. Druckman, and Fay Lomax Cook. 2015. “Citizens', Scientists', and Policy Advisors' Beliefs about Global Warming.” The ANNALS of the American Academy of Political and Social Science 658(1):271–95.

- Bond, Robert M., Christopher J. Fariss, Jason J. Jones, Adam D.I. Kramer, Cameron Marlow, Jaime E. Settle, and James H. Fowler. 2012. “A 61‐Million‐Person Experiment in Social Influence and Political Mobilization.” Nature 489(7415):295–8.

- Brulle, Robert J., Jason Carmichael, and J. Craig Jenkins. 2012. “Shifting Public Opinion on Climate Change: An Empirical Assessment of Factors Influencing Concern Over Climate Change in the US, 2002–2010.” Climatic Change 114(2):169–88.

- Callaghan, Timothy, Ali Moghtaderi, Jennifer A. Lueck, Peter J. Hotez, Ulrich Strych, Avi Dor, Erika Franklin Fowler, and Matt Motta. 2020. “Correlates and Disparities of Covid‐19 Vaccine Hesitancy.” SSRN Electronic Journal. 〈10.2139/ssrn.3667971〉

- Callaghan, Timothy, Matthew Motta, Steven Sylvester, Kristin Lunz Trujillo, and Christine Crudo Blackburn. 2019. “Parent Psychology and the Decision to Delay Childhood Vaccination.” Social Science & Medicine 238:112407.

- Coppock, Alexander, and Oliver A. McClellan. 2019. “Validating the Demographic, Political, Psychological, and Experimental Results Obtained from a New Source of Online Survey Respondents.” Research & Politics 6(1):1–14.

- Cornwall, Warren. 2020. “Just 50% of Americans Plan to Get a Covid‐19 Vaccine. Here's How to Win Over the Rest.” Science June 30. Available at 〈https://www.sciencemag.org/news/2020/06/just‐50‐americans‐plan‐get‐covid‐19‐vaccine‐here‐s‐how‐win‐over‐rest〉

- COVID‐19 Dashboard. 2020. Johns Hopkins University & Medicine, Coronavirus Resource Center. Available at 〈https://coronavirus.jhu.edu/map.html〉

- Delli Carpini, Michael X. 2000. “In Search of the Informed Citizen: What Americans Know About Politics and Why It Matters.” The Communication Review 4(1):129–64.

- Delli Carpini, Michael X., and Scott Keeter. 1996. What Americans Know About Politics and Why It Matters. New Haven, CT: Yale University Press.

- Druckman, James N., Erik Peterson, and Rune Slothuus. 2013. “How Elite Partisan Polarization Affects Public Opinion Formation.” American Political Science Review 107(1):57–79.

- Drummond, Caitlin, and Baruch Fischhoff. 2017. “Individuals with Greater Science Literacy and Education Have More Polarized Beliefs on Controversial Science Topics.” Proceedings of the National Academy of Sciences 114(36):9587–92.

- Ehret, Phillip J., Aaron C. Sparks, and David K. Sherman. 2017. “Support for Environmental Protection: An Integration of Ideological‐Consistency and Information‐Deficit Models.” Environmental Politics 26(2):253–77.

- Funk, Cary, Meg Hefferon, Brian Kennedy, and Courtney Johnson. 2019. “Trust and Mistrust in Americans' Views of Scientific Experts.” Pew Research Center.

- Gadarian, Shana, Sara Wallace Goodman, and Thomas B. Pepinsky. 2020. “Partisanship, Health Behavior, and Policy Attitudes in the Early Stages of the COVID‐19 Pandemic.” PLoS One. 〈10.1371/journal.pone.0249596〉

- Gaines, Brian J., James H. Kuklinski, and Paul J. Quirk. 2007. “The Logic of the Survey Experiment Reexamined.” Political Analysis:1–20.

- Garcia‐Roberts, Gus. 2020. “Willful Denial: Top Republicans Follow Trump's Lead in Ignoring Coronavirus Wake‐up Call.” USA Today October 6.

- Gerber, Alan S., Donald P. Green, and Christopher W. Larimer. 2008. “Social Pressure and Voter Turnout: Evidence from a Large‐Scale Field Experiment.” American Political Science Review 102(1):33–48.

- Gerber, Alan S., and Todd Rogers. 2009. “Descriptive Social Norms and Motivation to Vote: Everybody's Voting and So Should You.” The Journal of Politics 71(1):178–91.

- Gilens, Martin. 2001. “Political Ignorance and Collective Policy Preferences.” American Political Science Review 95(2):379–96.

- Goldacre, Ben. 2009. “Media Misinformation and Health Behaviours.” The Lancet Oncology 10(9):848.

- Gollust, Sarah E., Rachel I. Vogel, Alexander Rothman, Marco Yzer, Erika Franklin Fowler, and Rebekah H. Nagler. 2020. “Americans' Perceptions of Disparities in Covid‐19 Mortality: Results from a Nationally‐Representative Survey.” Preventive Medicine 141:106278.

- Green, Jon, Jared Edgerton, Daniel Naftel, Kelsey Shoub, and Skyler J. Cranmer. 2020. “Elusive Consensus: Polarization in Elite Communication on the COVID‐19 Pandemic.” Science Advances 6(28):eabc2717.

- Groves, Stephen, and Dave Kolpack. 2020. “Dakotas Lead US in Virus Growth as Both Reject Mask Rules.” ABC News September 13.

- Haeder, Simon F., Steven Sylvester, and Timothy Callaghan. 2021. “Lingering Legacies: Public Attitudes about Medicaid Beneficiaries and Work Requirements.” Journal of Health Politics, Policy and Law 46(2):305–55.

- Hamilton, Lawrence C., Matthew J. Cutler, and Andrew Schaefer. 2012. “Public Knowledge and Concern about Polar‐Region Warming.” Polar Geography 35(2):155–68.

- Hart, P. Sol, Sedona Chinn, and Stuart Soroka. 2020. “Politicization and Polarization in COVID‐19 News Coverage.” Science Communication 42(5):679–97.

- Hochschild, Jennifer L., and Katherine Levine Einstein. 2015. Do Facts Matter? Information and Misinformation in American Politics. Norman, OK: University of Oklahoma Press.

- Jamieson, Kathleen Hall, and Dolores Albarracin. 2020. “The Relation between Media Consumption and Misinformation at the Outset of the SARS‐CoV‐2 Pandemic in the US.” The Harvard Kennedy School Misinformation Review. 〈10.37016/mr-2020-012〉

- Johnston, Richard. 2006. “Party Identification: Unmoved Mover or Sum of Preferences?” Annual Review of Political Science 9:329–51.

- Joslyn, Mark R., and Donald P. Haider‐Markel. 2014. “Who Knows Best? Education, Partisanship, and Contested Facts.” Politics and Policy 42(6):919–47.

- Joslyn, Mark R., and Steven M. Sylvester. 2019. “The Determinants and Consequences of Accurate Beliefs about Childhood Vaccinations.” American Politics Research 47(3):628–49.

- Kahan, Dan M. 2012. “Ideology, Motivated Reasoning, and Cognitive Reflection: An Experimental Study.” Judgment and Decision Making 8:407–24.

- Kahan, Dan M., Kathleen Hall Jamieson, Asheley Landrum, and Kenneth Winneg. 2017. “Culturally Antagonistic Memes and the Zika Virus: An Experimental Test.” Journal of Risk Research 20(1):1–40.

- Kahan, Dan M., Ellen Peters, Maggie Wittlin, Paul Slovic, Lisa Larrimore Ouellette, Donald Braman, and Gregory Mandel. 2012. “The Polarizing Impact of Science Literacy and Numeracy on Perceived Climate Change Risks.” Nature Climate Change 2(10):732–5.

- Kunda, Ziva. 1990. “The Case for Motivated Reasoning.” Psychological Bulletin 108(3):480–98.

- Lauderdale, Benjamin E. 2016. “Partisan Disagreements Arising from Rationalization of Common Information.” Political Science Research and Methods 4(3):477–92.

- Lodge, Milton, and Charles S. Taber. 2013. The Rationalizing Voter. Cambridge, UK: Cambridge University Press.

- Lovelace, Berkeley, Jr. 2021. “Top FDA Official Says Fully Vaccinated People Could Need COVID Booster Shots Within 12 Months.” CNBC April 15.

- Lunz Trujillo, Kristin, and Matt Motta. 2020. “Many Vaccine Skeptics Plan to Refuse a COVID‐19 Vaccine, Study Suggests.” U.S. News May 4.

- Lunz Trujillo, Kristin, Matthew Motta, Timothy Callaghan, and Steven Sylvester. 2020. “Correcting Misperceptions about the MMR Vaccine: Using Psychological Risk Factors to Inform Targeted Communication Strategies.” Political Research Quarterly. 〈10.1177/1065912920907695〉

- Maxouris, Christina. 2020. “US is ‘Rounding the Corner into a Calamity,’ Expert Says, with Covid‐19 Deaths Projected to Double Soon.” CNN November 29.

- McCormack, Lauren A., Linda Squiers, Alicia M. Frasier, Molly Lynch, Carla M. Bann, and Pia D.M. MacDonald. 2020. “Gaps in Knowledge About COVID‐19 Among US Residents Early in the Outbreak.” Public Health Reports 136:107–16.

- McCright, Aaron M., and Riley E. Dunlap. 2011. “The Politicization of Climate Change and Polarization in the American Public's Views of Global Warming, 2001–2010.” The Sociological Quarterly 52(2):155–94.

- Meer, Jonathan. 2011. “Brother, Can You Spare a Dime? Peer Pressure in Charitable Solicitation.” Journal of Public Economics 95(7‐8):926–41.

- Milligan, Susan. 2020. “The Political Divide Over the Coronavirus: From the Origins to the Response, Republicans and Democrats Have Very Different Ideas About the Coronavirus.” U.S. News March 18.

- Molla, Rani. 2020. “It's Not Just You. Everybody Is Reading the News More Because of Coronavirus.” Vox March 17.

- Mooney, Chris. 2005. The Republican War on Science. New York: Basic Books.

- Mooney, Chris. 2012. The Republican Brain: The Science of Why They Deny Science‐and Reality. Hoboken, NJ: Wiley.

- Motta, Matt, Dominik Stecula, and Christina Farhart. 2020. “How Right‐Leaning Media Coverage of COVID‐19 Facilitated the Spread of Misinformation in the Early Stages of the Pandemic in the US.” Canadian Journal of Political Science/Revue canadienne de science politique:1–8.

- Nagler, Rebekah H., Rachel I. Vogel, Sarah E. Gollust, Alexander J. Rothman, Erika Franklin Fowler, and Marco C. Yzer. 2020. “Public Perceptions of Conflicting Information Surrounding COVID‐19: Results from a Nationally Representative Survey of US Adults.” PLoS One 15(10):e0240776.

- Nyhan, Brendan, Jason Reifler, and Peter A. Ubel. 2013. “The Hazards of Correcting Myths About Health Care Reform.” Medical Care:127–32.

- Paluck, Elizabeth Levy. 2011. “Peer Pressure Against Prejudice: A High School Field Experiment Examining Social Network Change.” Journal of Experimental Social Psychology 47(2):350–8.

- Paluck, Elizabeth Levy, and Hana Shepherd. 2012. “The Salience of Social Referents: A Field Experiment on Collective Norms and Harassment Behavior in a School Social Network.” Journal of Personality and Social Psychology 103(6):899.

- Pickup, Mark, Dominik Stecula, and Clifton van der Linden. 2020. “Novel Coronavirus, Old Partisanship: COVID‐19 Attitudes and Behaviours in the United States and Canada.” Canadian Journal of Political Science/Revue canadienne de science politique 53(2):357–64.

- Rabinowitz, Mitchell, Lauren Latella, Chadly Stern, and John T. Jost. 2016. “Beliefs About Childhood Vaccination in the United States: Political Ideology, False Consensus, and the Illusion of Uniqueness.” PLoS One 11(7):e0158382.

- Redlawsk, David P., Andrew J.W. Civettini, and Karen M. Emmerson. 2010. “The Affective Tipping Point: Do Motivated Reasoners Ever ‘Get It’?” Political Psychology 31(4):563–93.

- Roberts, David. 2020. “Partisanship is the Strongest Predictor of Coronavirus Response.” UCI News March 31.

- Schuldt, Jonathon P., Sungjong Roh, and Norbert Schwarz. 2015. “Questionnaire Design Effects in Climate Change Surveys: Implications for the Partisan Divide.” The ANNALS of the American Academy of Political and Social Science 658(1):67–85.

- Siemaszko, Corky. 2020. “Florida's Coronavirus Stay‐At‐Home Order Doesn't Bar Churches from Holding Services.” NBC News April 7.

- Sinclair, Betsy. 2012. The Social Citizen: Peer Networks and Political Behavior. Chicago, IL: University of Chicago Press.

- Stecula, Dominik A., and Mark Pickup. 2021. “How Populism and Conservative Media Fuel Conspiracy Beliefs About COVID‐19 and What It Means for COVID‐19 Behaviors.” Research & Politics 8(1). 〈10.1177/2053168021993979〉

- Taber, Charles S., and Milton Lodge. 2006. “Motivated Skepticism in the Evaluation of Political Beliefs.” American Journal of Political Science 50(3):755–69.

- Uscinski, Joseph E., Adam M. Enders, Casey Klofstad, Michelle Seelig, John Funchion, Caleb Everett, Stefan Wuchty, Kamal Premaratne, and Manohar Murthi. 2020. “Why Do People Believe COVID‐19 Conspiracy Theories?” Harvard Kennedy School Misinformation Review 1(3):1–12.

- van der Linden, Sander, Anthony Leiserowitz, and Edward Maibach. 2018. “Scientific Agreement Can Neutralize Politicization of Facts.” Nature Human Behaviour 2(1):2–3.

- Vogel, Lauren. 2017. “Viral Misinformation Threatens Public Health.” Canadian Medical Association Journal 189. 〈10.1503/cmaj.109-5536〉

- Yancy‐Bragg, N'dea. 2021. “‘Stop Killing Us’: Attacks on Asian Americans Highlight Rise in Hate Incidents Amid Covid‐19.” USA Today February 25.