Abstract

Objective.

Holographic mixed reality (HMR) allows for the superimposition of computer-generated virtual objects onto the operator’s view of the world. Innovative solutions can be developed to enable the use of this technology during surgery. The authors developed and iteratively optimized a pipeline to construct, visualize, and register intraoperative holographic models of patient landmarks during spinal fusion surgery.

Methods.

The study was carried out in two phases. In phase 1, the custom intraoperative pipeline to generate patient-specific holographic models was developed over 7 patients. In phase 2, registration accuracy was optimized iteratively for 6 patients in a real-time operative setting.

Results.

In phase 1, an intraoperative pipeline was successfully employed to generate and deploy patient-specific holographic models. In phase 2, the registration error with the native hand-gesture registration was 20.2 ± 10.8 mm (n = 7 test points). Custom controller-based registration significantly reduced the mean registration error to 4.18 ± 2.83 mm (n = 24 test points, P < .01). Accuracy improved over time (B = −.69, P < .0001) with the final patient achieving a registration error of 2.30 ± .58 mm. Across both phases, the average model generation time was 18.0 ± 6.1 minutes (n = 6) for isolated spinal hardware and 33.8 ± 8.6 minutes (n = 6) for spinal anatomy.

Conclusions.

A custom pipeline is described for the generation of intraoperative 3D holographic models during spine surgery. Registration accuracy dramatically improved with iterative optimization of the pipeline and technique. While significant improvements and advancements need to be made to enable clinical utility, HMR demonstrates significant potential as the next frontier of intraoperative visualization.

Keywords: augmented reality, holographic mixed reality, HoloLens™, 3D visualization, spine surgery

Introduction

Despite the fact that medical imaging is often acquired in three dimensions (3D), the majority of preoperative data is visualized in two dimensions (2D). During an operation, surgeons are tasked with coalescing this 2D information into an internal representation of the patient’s 3D anatomy. This internal model is what guides intraoperative decisions.

In spine fusion surgery, intraoperative navigation systems have facilitated visualization of these otherwise internal representations and have improved spine surgery morbidity by decreasing operation time, facilitating less invasive procedures, and reducing hardware misplacement rates from 20–30% down to 0–4%.1–3 However, these traditional navigation systems all involve 2D displays of patient anatomy. Moreover, these data are primarily displayed on monitors outside of the operative field, or more recently, on a heads-up display–capable headset.4–7

Holographic mixed reality (HMR), a specific type of augmented reality (AR), allows the superimposition of computer-generated virtual objects onto the operator’s view of the real world. Seeking to capitalize on the ability of AR to project a 3D model of patient anatomy directly onto the surgical field, several experimental systems for neurosurgical applications have been developed.8–12 Li et al11 utilized pre- and postoperative CT scans to calculate deviation from the target in hologram-guided external ventricular drain (EVD) placement in comparison to classic freehand EVD insertion.11 Tabrizzi and Mahvash used calipers to manually measure a projection error between actual and projected tumor borders to the same set of fiducial markers.9 Van Doormal et al employed an iterative closest point algorithm to register holograms to anatomic models of the head as well as on three patients and calculated fiducial registration error.12 The best accuracy measurements in these initial studies were highly variable, with errors as low as 2–4 mm. Further, many of the accuracy measurements were made with relatively crude measurement tools such as rulers, calipers, and manual drawings. Finally, errors can range between 1 and 4 cm when gesture-based manual registration is performed with manual measurements.10 Despite these limitations, these initial studies highlight the potential ability of AR-based systems to register holographic models to patient anatomy.

During spine fusion surgery, visualization methods for pedicle screw placement primarily include fluoroscopy-guided or optical navigation techniques. In both of these methods, pertinent anatomical landmarks are visualized on 2D monitors away from the surgical field. HMR visualization during spinal fusion surgery would enable the direct projection of these pertinent bony landmarks (ie, pedicle boundaries, facet joints, and vertebral body end plates) onto the patient’s actual anatomy and, thus, transform the surgeon’s previously held internal representation of the patient’s 3D anatomy into an accurate mixed reality projection. For intraoperative visualization during spine fusion surgery, preoperative imaging is not always reliable due to differences in bony alignment between the supine (preoperative imaging) and prone (intraoperative) positioning. This scenario would also apply for numerous other surgeries where target structure and anatomical positioning could change between preoperative and intraoperative setups. Further, the ability to purchase expensive proprietary equipment will vary among hospitals. Thus, it is imperative to design a generalizable, scalable pipeline to enable the use of readily accessible intraoperative imaging modalities to generate, deploy, and register 3D HMR patient models for this cutting-edge intraoperative visualization. The initial steps and challenges of developing such a prototype system are reported.

A generalizable pipeline was developed for intraoperative spinal 3D model generation, deployment, and registration using intraoperative low-resolution O-arm™ (Medtronic, Inc, Minneapolis, Minnesota) imaging. This pipeline was iteratively improved over time with registration initially performed using a native hand-gesture technique followed by a custom controller-based application. Accuracy testing was performed using validation test points (spinal hardware) on these intraoperatively generated and manually registered 3D models with measurements obtained in a common reference space using a standard neurosurgical navigation system.

Methods

Overview

This study was approved by the institutional review board (IRB#828346). Informed consent was obtained for each patient. An intraoperative pipeline to generate, deploy, and register a position-specific anatomic 3D model was developed, tested, and iterated over a total of 13 patients undergoing spine fusion surgery across the two phases of the study.

Two primary types of surface-rendered 3D models were generated. The model type used for testing accuracy of registration was generated by isolating the spinal hardware and the radiopaque fiducial marker (patient-fixed spinous process clamp or bed-fixed metallic retractor arm). In these models, focus was placed on the metallic components only as these were the best source of reliable test points. Models were also generated to test the pipeline for spinal anatomy, reflecting what would actually be used during surgery. In these models, the anatomy of interest (spinous process, facet joints, pedicles, and vertebral bodies) of clinically relevant spinal levels was targeted along with the metallic fiducial marker as described above. The 3D models were built using a series of processing steps including segmentation, data reduction, and deployment to an AR-capable headset (HoloLens™, Microsoft Corp, Redmond, Washington) for rendering. This series of steps used three open-source software packages that can be downloaded to any laptop and is discussed further in the next section.

Phase 1: Development of HMR Model Reconstruction Pipeline

Phase 1 of this study (n = 7 patients) focused on developing a fully intraoperative pipeline to generate a holographic model of patient-specific and position-specific anatomy from low-resolution intraoperative imaging. For the initial patients, bony anatomy along with metallic fiducials was used as the region of interest for the hologram, as would be required for clinical utility (patients A-E). For the final two patients in this phase, the holographic models were generated from isolated spinal hardware (patients F and G) to qualitatively assess the difference in the rendering of metallic elements and to compare model generation time. Reconstruction times were recorded based on time stamps from import into image segmentation software to export as a 3D holographic model (Table 1).

Table 1.

Summary of Patients, Procedures, Indications, and Results for Phase 1: Development of Reconstruction Pipeline.

| Patient | Procedure | Indication | Hologram Type | Reconstruction Time (min) |
|---|---|---|---|---|
| A | T11-L2 percutaneous fusion with pedicle screws at all levels bilaterally | Dislocation of T12-L1 thoracic vertebra | Bone + fiducials | 29 |
| B | T1-7 fusion with bilateral pedicle screws at T1-3 and T5-7; use of spinal neuronavigation; use of local autograft; use of morselized allograft; T4 laminectomy; bilateral T4 transpedicular decompression of T4 burst fracture | T4 burst fracture | Bone + fiducials | 23 |
| C | L3 laminectomy; reduction of L3 vertebral body fracture; L2-5 fusion with bilateral pedicle screws at L2, L4, and L5; use of spinal neuronavigation | Closed compression fracture of third lumbar vertebra | Bone + fiducials | 39 |
| D | Left L4-5 hemilaminectomy, left L5 gill procedure, L4-S1 pedicle screw fusion using O-arm and Stealth, lateral fusion L4-S1 using allograft and local autograft | Left leg sciatica L4-S1 stenosis, L5 pars defects | Bone + fiducials | 33 |
| E | T12 lateral extracavitary resection of T12 vertebral body, cage reconstruction; T9-L3 instrumented fusion using O-arm and Stealth navigation, allograft fusion T9-L3, sectioning of T11 and T12 spinal nerves | T12 primary bone tumor and pathologic fracture | Bone + fiducials | 31 |
| F | L4-5 laminectomy, L4-5 instrumented fusion with local autograft, allograft, O-arm/Stealth | L4-5 stenosis | Isolated hardware | 21 |
| G | Removal of previous hardware from T12 to L4; pedicle screw fusion from T11 to the pelvis using O-arm/Stealth navigation, allograft fusion from T11 to the pelvis | Previous failed fusion T12 to S1 with prior removal of the L5 and S1 screws and L5-S1 ALIF | Isolated hardware | 11 |

Integration of HMR Pipeline into Intraoperative Workflow

The patients were placed under general endotracheal anesthesia and positioned prone on a Jackson table with pressure points well padded. The operative site was prepped, draped, and anesthetized locally. Subperiosteal dissection was used to expose laminae, facets, and transverse processes of interest. A spinous process marker was placed per usual clinical protocol (patient-fixed). In some cases, a Greenberg retractor set fixed to the bed was placed near the operative field (bed-fixed) to serve as a radiopaque fiducial marker. Consistent with common clinical practice, an intraoperative low-resolution CT scan was obtained using an O-arm™ (512 × 512 matrix, .833-mm slice thickness) after initial bony exposure. Using Stealth navigation, pedicle screws were then placed in the standard fashion. A second post-instrumentation scan was then taken with the O-arm imaging system to confirm satisfactory placement of hardware as per standard clinical protocol. Intraoperatively, one or both of these O-arm images were de-identified and downloaded from our institution’s PACS in standard DICOM format to a portable workstation (Microsoft Surface Book™ laptop, Microsoft Corp, Redmond, Washington). A surface-rendered holographic model was then reconstructed using the pipeline detailed below.

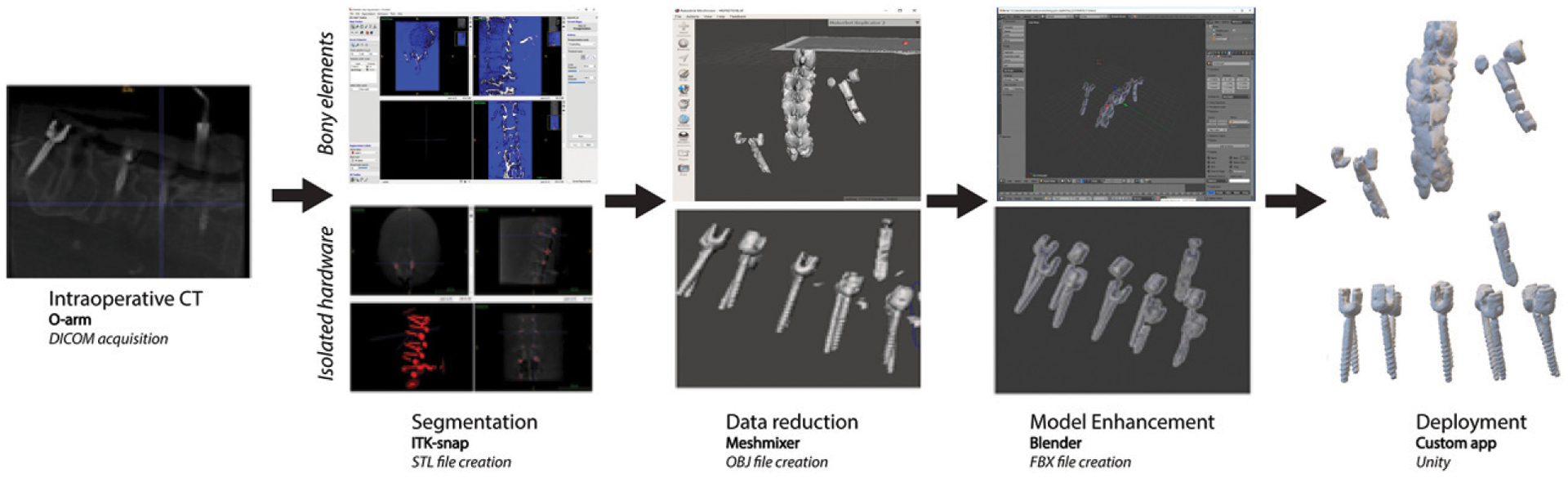

Intraoperative Hologram Reconstruction Using Surface Rendering

Once de-identified and downloaded to the portable workstation, the images were processed in ITK-SNAP (ITK-SNAP v.3.6, www.itksnap.org) for semiautomated segmentation. This segmentation was performed in a two-step process using threshold masking followed by iterative region growing.13 The parameters for the threshold masking and iterative region growing were optimized manually so that bone densities and/or metallic hardware (including the fiducial markers) could be segmented efficiently (Figure 1). In models that included bony elements in addition to metallic hardware elements, the two categories were segmented in series. The metallic elements were segmented through detection of image density contrast between metal and all other materials on the low-resolution CT. The bony elements were segmented by supervised threshold masking and iterative region growing.
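The two-step idea of threshold masking followed by region growing can be illustrated with a minimal sketch. This is a simplified stand-in written for illustration, not ITK-SNAP's implementation: the 2D grid, the intensity values, the threshold ranges, and the function names `threshold_mask` and `region_grow` are all assumptions, and the region growing here is a plain 4-connected flood fill from a user-chosen seed.

```python
from collections import deque


def threshold_mask(image, lower, upper):
    """Return a boolean mask of pixels whose intensity lies in [lower, upper]."""
    return [[lower <= value <= upper for value in row] for row in image]


def region_grow(mask, seed):
    """Collect the 4-connected region of True pixels in `mask` containing `seed`."""
    rows, cols = len(mask), len(mask[0])
    if not mask[seed[0]][seed[1]]:
        return set()
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and mask[nr][nc] and (nr, nc) not in region:
                region.add((nr, nc))
                queue.append((nr, nc))
    return region


# Hypothetical slice: ~3000 stands in for metal, ~700 for bone, ~50 for soft tissue.
slice_2d = [
    [50, 50, 700, 700, 50],
    [50, 3000, 3000, 700, 50],
    [50, 3000, 50, 50, 50],
    [50, 50, 50, 3000, 50],
]

# Assumed threshold ranges; in practice these would be tuned by the operator.
metal_mask = threshold_mask(slice_2d, 2000, 4000)
bone_mask = threshold_mask(slice_2d, 500, 1500)

# Metal first, then bone, mirroring the serial segmentation described above.
screw = region_grow(metal_mask, seed=(1, 1))
bone = region_grow(bone_mask, seed=(0, 2))
```

In this toy slice the isolated bright pixel at (3, 3) passes the metal threshold but is not connected to the seed, so region growing excludes it; this is the reason a connectivity step is useful after thresholding.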

Figure 1.

Workflow of 3D model generation from intraoperative O-arm scan.

The de-identified 3D segmentations were then exported to Meshmixer (Autodesk, Inc, San Rafael, California), and mesh decimation was performed with Meshmixer’s reduce brush tool.14 This enabled data reduction without compromising clinically relevant contours and was required to achieve smooth rendering performance on HoloLens. The decimated meshes were exported to Blender (Blender Foundation, Amsterdam, Netherlands) to define the color, transparency, scale, and orientation of the mesh (Figure 2).15 The model was then exported as a de-identified Filmbox (FBX) file for deployment. Depending on the registration method, the deployment method differed. These are detailed below.

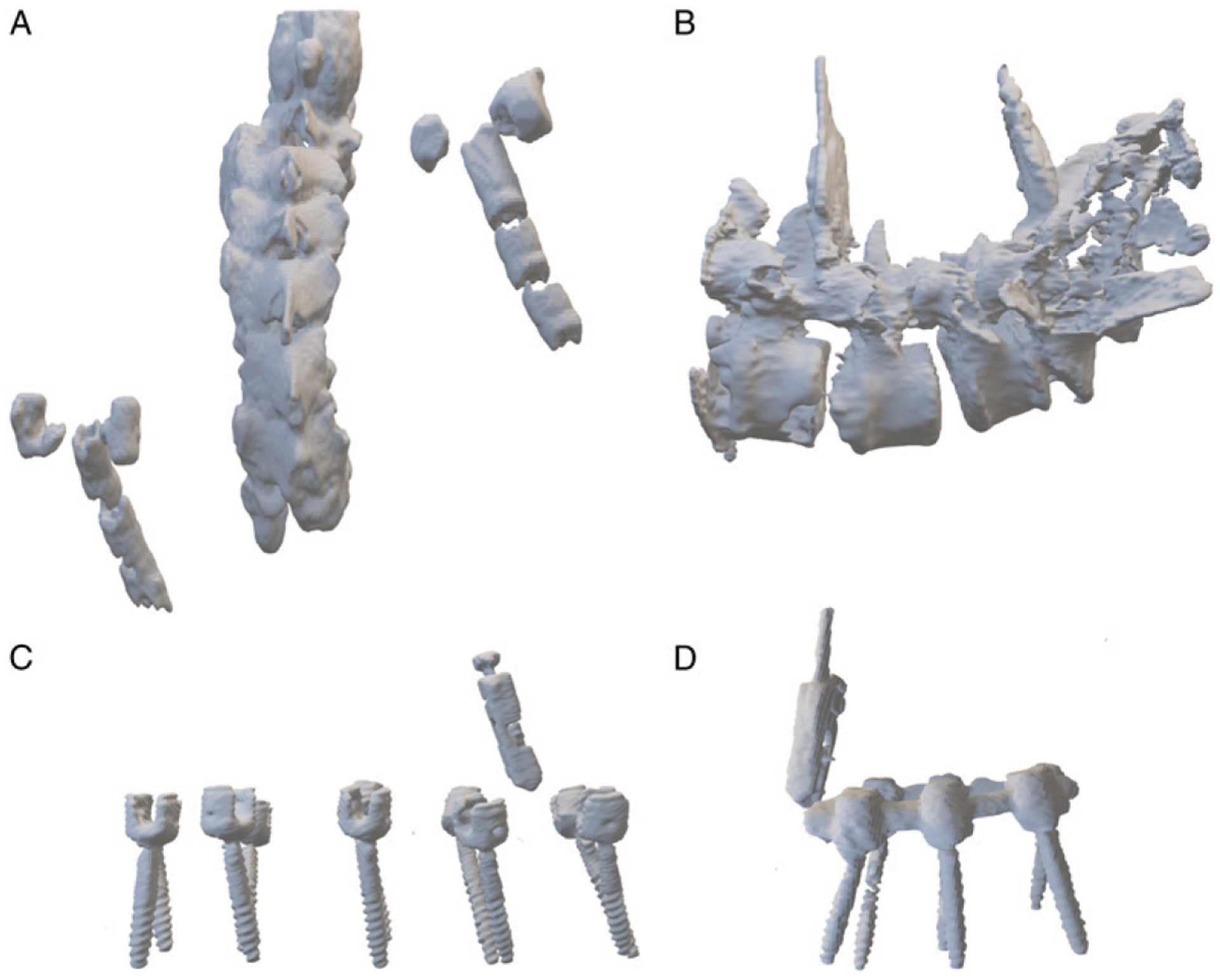

Figure 2.

Example models for (A, B) bony elements with fiducial markers and (C, D) isolated spinal instrumentation.

Phase 2: Development and Optimization of HMR Spatial Registration Pipeline

In phase 2 of the study, the manual registration accuracy was assessed in 6 patients (patients 1–6, Table 2). All registrations across the patients were performed by the same experienced user wearing the HoloLens. This user was not the primary or assistant surgeon, and thus, none of the holographic information was seen by the operative team. The user aligned the metallic fiducial marker on the projected 3D model to the actual marker on the surgical field. To assess registration accuracy, the position of test points (pedicle screw crown) on the patient and on the model was acquired in a common reference space using StealthStation Neuronavigation™ (Medtronic Sofamor Danek, Memphis, Tennessee, USA). The number of test points per patient differed based on the number of spinal levels being fused. The Stealth navigation probe was used by the surgeon (attending or senior resident) to acquire the location of the pedicle screw crown in anatomical space, while the location of the HMR model’s homologous pedicle screw crown in holographic space was acquired by the experienced HoloLens user (nonoperative team member). These two individual points acquired in the same reference space enabled Euclidean distance error calculations between homologous points in anatomical vs holographic space. For patients 1 and 2, registration was performed using a hand-gesture technique that is native to the HoloLens. The hand-gesture technique limited the user's ability to make fine adjustments in multiple planes. These challenges prompted the implementation of a custom controller-based application for patients 3–6. Further, the models generated for patients 1 and 2 combined bone and hardware. For patients 3–6, this was switched to isolated hardware alone due to clinical time limitations during the surgery. Model reconstruction times were also collected for the second phase except for patient 2, in whom the final FBX file did not export to the encrypted drive due to a software error, and thus an accurate reconstruction time was not available.

Table 2.

Summary of Patients, Procedures, Indications, and Results for Phase 2: Optimization of Registration Pipeline.

| Patient | Procedure | Indication | Hologram Type | Reconstruction Time (min) | Registration Method | Accuracy (mm) |
|---|---|---|---|---|---|---|
| 1 | L2-L5 laminectomy; L1-L5 instrumented screw fusion using allograft, autograft, Stealth navigation | L3 burst fracture, lumbar stenosis | Bone + hardware | 48 | Hand gesture | 7.3 |
| 2 | Removal of segmental hardware L4-S1; laminectomy L3-L4; pedicle screw and allograft fusion L3-L5 using O-arm/Stealth navigation | L3-L4 junctional stenosis above level of previous L4-S1 fusion | Bone + hardware | N/A | Hand gesture | 22.3 ± 10.0 |
| 3 | L4-L5 laminectomy; L4-L5 instrumented fusion using allograft, autograft, Stealth navigation | L4-L5 stenosis | Isolated hardware | 28 | Controller-based | 7.3 ± 3.4 |
| 4 | L4-L5 laminectomy; L4-L5 instrumented fusion using allograft, autograft, Stealth navigation | L4-L5 stenosis | Isolated hardware | 19 | Controller-based | 7.9 ± 2.8 |
| 5 | Lateral extra-cavitary T10 corpectomy; T8-T12 instrumented fusion with allograft, titanium cage and O-arm/Stealth navigation, sectioning of left T10 nerve root | T10 metastatic tumor | Isolated hardware | 16 | Controller-based | 3.2 ± 1.5 |
| 6 | L4-L5 laminectomy; L4-L5 instrumented fusion using allograft, autograft, Stealth navigation | L4-5 stenosis | Isolated hardware | 13 | Controller-based | 2.3 ± .6 |

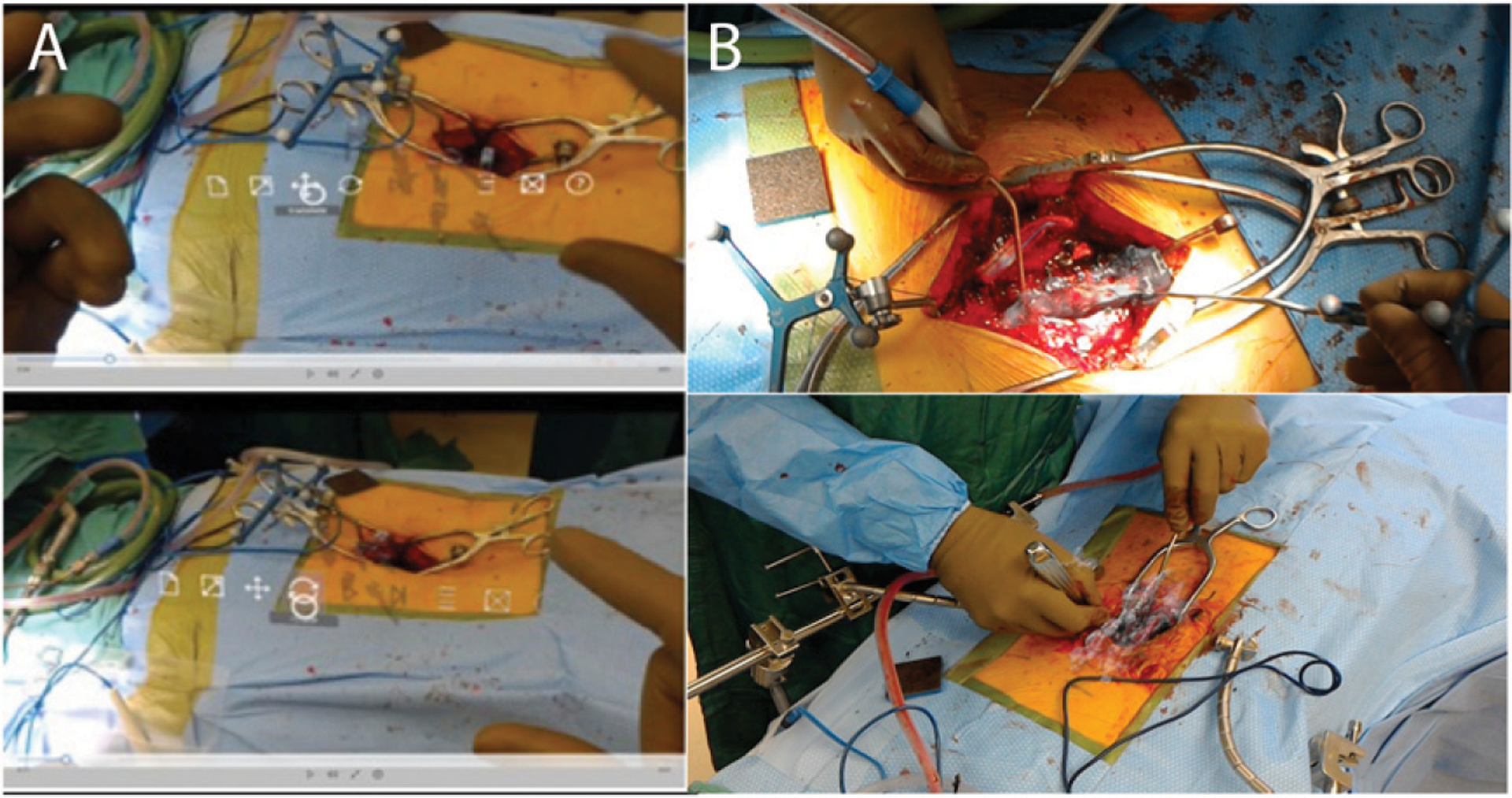

Hand-Gesture Registration

For the hand-gesture registration method, the FBX file was uploaded to a password-protected Microsoft OneDrive over the hospital’s secure wireless network. Using the Microsoft HoloLens, the uploaded FBX file was opened in the native 3D Viewer Beta application on the device to project and render the patient-specific HMR model. The size, position, and orientation of the 3D model were manipulated using native hand gestures by the HoloLens user. Registration focused on matching the size, position, and orientation of the modeled fiducial markers to the actual fiducial markers in the operative field. Manipulation of the HMR model was performed using hand gestures until registration was as accurate as visually possible (Figure 3).

Figure 3.

HoloLens hand-gesture registration and controls for Unity. (A) Hand-gesture control. (B) Manual registration.

Controller Registration

For the controller-based registration method, a custom application was developed for HoloLens using the Unity development platform (Unity Technologies, San Francisco, California). This custom application allowed the hologram to be manipulated with a wireless Xbox™ gaming controller (Microsoft Corp, Redmond, Washington). Translation, rotation, and magnification of the holographic model were mapped to the buttons and joysticks of the controller. The controller was then placed in a sterile bag for use intraoperatively (Figure 4). Again, registration focused on matching the size, position, and orientation of the modeled fiducial markers to the actual fiducial markers in the operative field. Manipulation of the HMR model was performed using the controller until registration was as accurate as visually possible.
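The mapping of controller inputs to model translation, rotation, and magnification might be sketched as follows. This is an illustrative Python sketch only, not the Unity application used in the study: the `HologramPose` class, the step sizes, and the assignment of sticks, d-pad, triggers, and bumpers to particular axes are all hypothetical. The point it shows is that every input axis is read in the same update, so several degrees of freedom can change at once.

```python
from dataclasses import dataclass, field

# Assumed increments applied per unit of input per update.
TRANSLATE_STEP_M = 0.001   # 1 mm
ROTATE_STEP_DEG = 0.5
SCALE_STEP = 0.01          # 1% magnification change


@dataclass
class HologramPose:
    """Hypothetical pose of the holographic model."""
    position: list = field(default_factory=lambda: [0.0, 0.0, 0.0])      # x, y, z in metres
    rotation_deg: list = field(default_factory=lambda: [0.0, 0.0, 0.0])  # pitch, yaw, roll
    scale: float = 1.0


def apply_controller_input(pose, left_stick=(0.0, 0.0), right_stick=(0.0, 0.0),
                           dpad_vertical=0.0, trigger_axis=0.0, bumper_axis=0.0):
    """Update the pose in place from one frame of controller input.

    All axes are applied in the same call, so translation, rotation,
    and scale can change together within a single update.
    """
    pose.position[0] += left_stick[0] * TRANSLATE_STEP_M     # left/right
    pose.position[2] += left_stick[1] * TRANSLATE_STEP_M     # forward/back
    pose.position[1] += dpad_vertical * TRANSLATE_STEP_M     # up/down
    pose.rotation_deg[1] += right_stick[0] * ROTATE_STEP_DEG  # yaw
    pose.rotation_deg[0] += right_stick[1] * ROTATE_STEP_DEG  # pitch
    pose.rotation_deg[2] += trigger_axis * ROTATE_STEP_DEG    # roll
    pose.scale *= 1.0 + bumper_axis * SCALE_STEP              # magnification
    return pose


# One update that nudges the model 1 mm along x, pitches it by -0.5 degrees,
# and enlarges it by 1%, all at once.
pose = HologramPose()
apply_controller_input(pose, left_stick=(1.0, 0.0), right_stick=(0.0, -1.0),
                       bumper_axis=1.0)
```

Small fixed increments per update are one way to obtain the fine control that the study found hand gestures did not provide.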

Figure 4.

Button commands on Xbox controller for Unity and intraoperative deployment for controller-based registration.

Accuracy Measurements

In addition to assessing hologram reconstruction times, accuracy was also assessed for the patients in phase 2 of the study (patients 1–6, Table 2). For these patients, accuracy was determined by measuring the distance between the holographic representation of test points to the position of the same points in real space (n = 31; 25 implanted screw heads and 6 spinous process clamp screw heads). Each marker was numbered chronologically with 1 being the first marker measured (patient 1) and 31 being the last marker measured (patient 6). Distances were obtained using StealthStation Neuronavigation™. Using the Stealth navigation probe, the holographic model pedicle screw crown positions were marked by a single user wearing the HoloLens. Then, using the same navigation probe, the surgeon marked the location of the anatomical pedicle screw crown in real space. The Euclidean distance between the virtual and physical pedicle screw crowns was calculated by the StealthStation navigation system. For each patient, the mean and standard deviation was calculated across the distribution of Euclidean distances for each marker. For the first patient in this pipeline, only one test point was available, and therefore, it was reported as a single value.
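As a minimal sketch of the error calculation described above, the distance between each pair of homologous points can be computed directly from their coordinates and then summarized per patient. In the study the distances were calculated by the StealthStation system; the coordinates below are hypothetical values in millimetres chosen only to illustrate the arithmetic.

```python
import math
from statistics import mean, stdev


def euclidean_distance(anatomical_point, holographic_point):
    """Straight-line distance between two 3D points in the same reference space."""
    return math.dist(anatomical_point, holographic_point)


# Hypothetical (anatomical, holographic) coordinate pairs for one patient, in mm.
test_points = [
    ((10.0, 20.0, 30.0), (13.0, 24.0, 30.0)),
    ((0.0, 0.0, 0.0), (1.0, 2.0, 2.0)),
]

errors_mm = [euclidean_distance(a, h) for a, h in test_points]

# Per-patient summary: mean and sample standard deviation of the distances.
patient_mean = mean(errors_mm)
patient_sd = stdev(errors_mm)
```

With a single test point, as for the first patient, the sample standard deviation is undefined (`statistics.stdev` raises an error), which is consistent with that patient being reported as a single value.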

Statistical Analysis

Mean values ± SDs were calculated and reported for registration errors between native hand-gesture–based and controller-based techniques as well as reconstruction times for isolated spinal hardware and spinal anatomical models. An unpaired two-sample Welch’s t test was used to compare the distribution of errors between the native and controller-based registration techniques. This test was chosen because of the unequal variances between the two distributions. To estimate the linear trend in error over time, a linear regression model was fit to the chronological dataset. The formula for the linear regression model was

$$y = B_0 + B_1 X_1 + \varepsilon$$

where y was a vector with length n corresponding to the Euclidean distance error measurements for each test point across subjects, X1 was an ordinal vector from 1:n corresponding to each test point across time, B0 was the intercept, B1 was the regression coefficient for the trend over time, and ε was the residual error. All statistical analyses were performed using MATLAB statistics and regression toolbox (Mathworks, Inc, Natick, Massachusetts).
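The two analyses can be sketched in a few lines. The study used MATLAB; the Python below is only an illustration of the same calculations, with hypothetical error values and the helper names `welch_t` and `fit_line` introduced here. It computes Welch's t statistic with the Welch-Satterthwaite degrees of freedom and an ordinary least-squares line through the errors in chronological order. Converting t and the degrees of freedom to a P value requires a t-distribution function from a statistics library and is omitted.

```python
import math
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va = variance(sample_a) / len(sample_a)  # squared standard error of mean, group A
    vb = variance(sample_b) / len(sample_b)  # squared standard error of mean, group B
    t = (mean(sample_a) - mean(sample_b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(sample_a) - 1) + vb ** 2 / (len(sample_b) - 1))
    return t, df


def fit_line(x, y):
    """Ordinary least-squares intercept and slope for y = b0 + b1 * x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope


# Hypothetical registration errors in mm, listed in chronological order.
hand_gesture_errors = [30.0, 20.0, 10.0]
controller_errors = [4.0, 5.0, 6.0, 5.0]

t_stat, dof = welch_t(hand_gesture_errors, controller_errors)

# Ordinal predictor 1..n over all test points across time.
all_errors = hand_gesture_errors + controller_errors
order = list(range(1, len(all_errors) + 1))
intercept, slope = fit_line(order, all_errors)
```

A negative slope in this setup corresponds to error decreasing with each successive test point, which is how the regression coefficient B is interpreted in the Results.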

Results

Phase 1: Development of HMR Model Reconstruction Pipeline

Low-density, high-fidelity 3D holograms of anatomical regions of interest were successfully generated from the intraoperative O-arm CT scans using a low-cost custom pipeline. This reconstruction pipeline was developed over 7 patients (Table 1). In the first five patients, HMR models of anatomical regions of interest, that is, bony elements of the spinal column along with radiopaque fiducial markers, were generated to mimic bony models potentially usable for intraoperative HMR visualization (Figures 2A and 2B). For the other 2 patients, the same reconstruction pipeline was used to create 3D holographic models of just the isolated spinal hardware. These models included the fiducial marker as well as the pedicle screws (Figures 2C and 2D). This was done to assess for any qualitative difference in rendering quality of the hardware, which would ultimately be used as validation points, and to compare reconstruction times. Qualitatively, there was no substantial difference in the visible metallic elements in the bone + fiducials vs isolated hardware models. However, because the bone + fiducial models required serial segmentation of metallic followed by bony elements, and because of the lower resolution of these bony elements, model generation for isolated hardware was, as expected, approximately 50% faster (31 ± 5.8 minutes for patients A-E vs 16 ± 7 minutes for patients F-G).

Phase 2: Development and Optimization of HMR Spatial Registration Pipeline

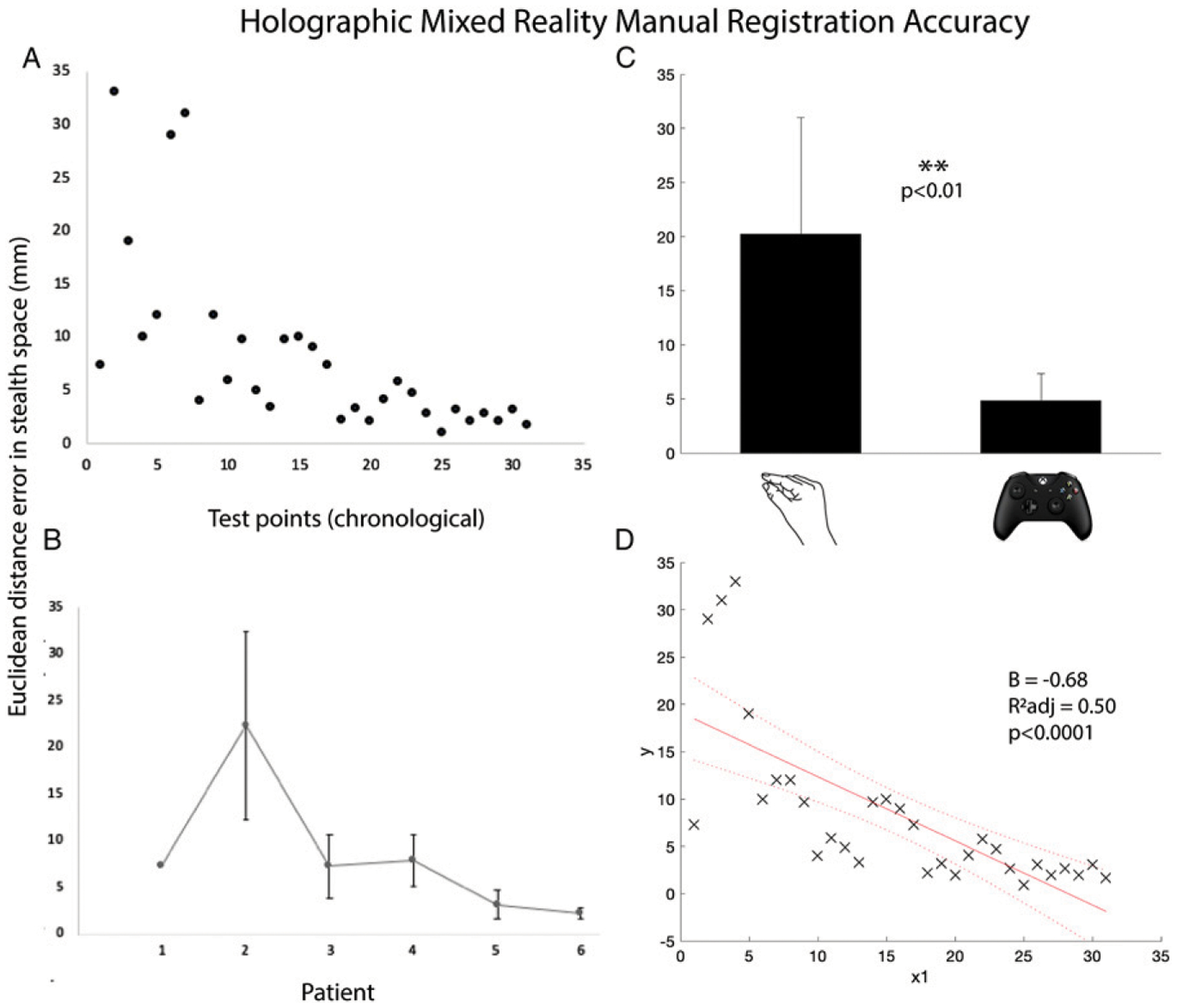

A custom, generalizable pipeline was developed to enable intraoperative rendering and manual registration of 3D HMR patient-specific models from the intraoperative O-arm scan. This manual registration pipeline was iterated and optimized over 6 patients. Registration was performed initially with HoloLens native hand-gesture methods (patients 1–2). This resulted in highly variable Euclidean distance errors between anatomical and holographic space due to the inability to make multiplanar fine adjustments with hand-gesture control. Therefore, a custom controller-based registration method was developed and employed (patients 3–6). The mean registration error for hand-gesture registration data points was 20.2 ± 10.8 mm (n = 7 test points). After development of the custom controller-based registration, the mean registration error decreased significantly to 4.18 ± 2.83 mm (n = 24 test points, t(6.3) = 3.7, P = .009, Figure 5C, Table 3). Overall, there was a steady improvement in accuracy over time with the final patient achieving a registration error of 2.30 ± .58 mm (regression coefficient B = −.68, adjusted R2 = .50, P = 5.2 × 10−6, Figure 5D).

Figure 5.

Holographic mixed reality registration accuracy. (A) Euclidean distance in stealth space between anatomical and holographic test points across time. (B) Average residual error per patient. (C) Mean error for hand-gesture vs controller-based registration. (D) Linear regression model fit for estimating linear trend in error over time.

Table 3.

Location and Euclidean Distance Between Anatomical and Holographic Test Points (mm).

| Level | Side | Pt 1* | Pt 2 | Pt 3 | Pt 4 | Pt 5 | Pt 6 |
|---|---|---|---|---|---|---|---|
| T8 | Left | | | | | 5.8 | |
| T8 | Right | | | | | 2.2 | |
| T9 | Left | | | | | 4.7 | |
| T9 | Right | | | | | 3.2 | |
| T11 | Left | | | | | | |
| T11 | Right | | | | | 2.0 | |
| T12 | Left | | | | | 2.7 | |
| T12 | Right | | | | | 4.1 | |
| L4 | Left | | 33.0 | 4.0 | 10.0 | | 2.0 |
| L4 | Right | | 29.0 | 12.0 | 3.3 | | 2.0 |
| L5 | Left | | 19.0 | 5.9 | 9.0 | | 3.1 |
| L5 | Right | | 31.0 | 9.7 | 9.7 | | 2.7 |
| Marker 1 | | 7.3 | 10.0 | 4.9 | 7.3 | .9 | 1.7 |
| Marker 2 | | | 12.0 | | | 3.1 | |
| Average | | 7.3 | 22.3 | 7.3 | 7.9 | 3.2 | 2.3 |

*Initial patient tested with only one validation marker.

In phase 2, model reconstruction times were also calculated to supplement the data from phase 1. Across both phases, total time for segmentation, rendering, and deployment for bone + hardware models took 33.8 ± 8.6 minutes (n = 6: patients A-E, phase 1; and patient 1, phase 2). As expected, for isolated hardware models, the total time was reduced to 18 ± 6.1 minutes (n = 6: patients F-G, phase 1 and patients 3–6, phase 2). As described, for the primary purpose of determining registration accuracy of this pipeline and to decrease the amount of intraoperative time required during phase 2, model generation for patients 3–6 was switched from bone + hardware to isolated hardware reconstruction (Table 2).
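The pooled reconstruction times can be recomputed from the per-patient values listed in Tables 1 and 2. The short sketch below does this with the sample standard deviation; the dictionary names are introduced here for illustration, and patient 2 is omitted because no reconstruction time was available.

```python
from statistics import mean, stdev

# Reconstruction times in minutes, keyed by patient label (Tables 1 and 2).
bone_model_times = {"A": 29, "B": 23, "C": 39, "D": 33, "E": 31, "1": 48}
hardware_model_times = {"F": 21, "G": 11, "3": 28, "4": 19, "5": 16, "6": 13}

bone_mean = mean(bone_model_times.values())
bone_sd = stdev(bone_model_times.values())

hardware_mean = mean(hardware_model_times.values())
hardware_sd = stdev(hardware_model_times.values())

# Ratio of isolated-hardware to bone-model generation time.
time_ratio = hardware_mean / bone_mean
```

The recomputed means match the reported 33.8 and 18 minutes, and the standard deviations agree with the reported values to within about 0.1 minute; the ratio of roughly 0.53 is consistent with isolated hardware models taking about half as long to generate.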

Discussion

In this study, the initial development and iterative improvement of a custom, generalizable, accessible, and low-cost pipeline are described. The pipeline enables 3D model rendering and registration of intraoperative imaging obtained during live spinal fusion surgery with a low-resolution portable CT (O-arm) that is readily available in both community and academic hospital systems. The attractiveness of AR as a tool to help with surgical planning, education, and intraoperative visualization has entered the forefront of discussions about the future of surgery.2,16 In one study assessing the impact of AR on the surgical experience when inserting pedicle screws in cadavers, surgeons consistently rated an AR-enhanced system as “excellent” on the qualities of attractiveness, perspicuity, efficiency, dependability, stimulation, and novelty. Beyond the ergonomic advantages of the AR system, screw placement accuracy was also improved over a conventional surgical approach.17 Additionally, with equipment for robot-assisted surgery costing up to $1 million in addition to annual costs of disposables, AR systems are significantly cheaper tools with similar potential to radically improve spinal surgery.18,19 These studies used preoperative volume-rendering or cadaveric imaging to generate 3D models. However, preoperative imaging may not adequately account for changes in anatomical orientation, alignment, or position of regions of interest between the preoperative and intraoperative state. Further, volume-rendered models are data intensive as they utilize a 3D reconstruction of an entire image acquisition. Such models can distract surgeons because the excess information may disrupt their workflow. Surface rendering, on the other hand, enables targeted reconstruction of only anatomical regions of interest. HMR is a specific type of AR that allows the superimposition of computer-generated virtual objects onto the operator’s view of the real world. HMR surface-rendered 3D models enable the superimposition of targeted virtual objects onto real-world space. Therefore, an accurate and efficient pipeline to generate, deploy, and register HMR surface-rendered models using intraoperative patient anatomy could significantly enhance the scope of this technology.

The pipeline for this nascent technology was developed and improved iteratively through trial and error. In phase 1, the initial subset of patients was used to learn how to generate, deploy, and register intraoperative HMR models. For these patients, registration accuracy was not measured as the team established the novel intraoperative process. For the subset of patients in phase 2, registration accuracies were measured, and registration technique was iteratively improved. In the initial two patients (patients 1–2), hand-gesture registration was used. Hand-gesture control required individual, sequential movements in X, Y, and Z planes, as well as separate rotation and scaling functions. This means that from deployment to registration, multiplanar manipulation required iterative movements made in each plane one after another. As a result, native hand-gesture control of the HMR model did not afford precision or fine control, resulting in large registration errors (mean 20.2 ± 10.8 mm, Figures 5A–5C). Subsequently, a custom application was designed in the Unity development platform that used an external wireless gaming controller as a mechanism for HMR model manipulation. With this controller package, X, Y, Z, rotation, and scale manipulation could all be made simultaneously using the different buttons (Figure 4). The introduction of controller-based registration significantly reduced manual registration error to 4.18 ± 2.83 mm (P < .01, Figures 5A–5C). With iterative improvements in technique and experience, registration accuracy also showed a continuous improvement over time (P < .0001), achieving a minimum mean error of 2.3 mm in the final patient (Figure 5D). This improvement over time was a result of both controller-based registration and the learning curve of HMR registration itself. However, given that the HoloLens user was an experienced user of the headset, the learning curve alone would not account for the dramatic shift between the performance of the two registration techniques.

HMR model reconstruction times were nearly half for isolated hardware (18 ± 6.1 minutes) compared to spinal anatomy (33.8 ± 8.6 minutes). This difference stems from the segmentation ability of the two-step semiautomated segmentation algorithm13 with metallic objects vs anatomical structures using low-resolution O-arm™ imaging. For anatomical structures, the low resolution of the O-arm™ creates a more challenging segmentation problem compared to normal CT scans. In spinal fusion surgery, intraoperative model generation time can be longer because other components of the surgery can be performed prior to utilizing the model for spinal hardware placement. In other surgery types though, that time may be too long for clinical feasibility. In this pipeline, bed-fixed fiducial markers were able to be employed. Therefore, once optimized and validated, the low-resolution intraoperative scan could come after positioning the patient but prior to making incision. This, in combination with improving the segmentation algorithm, means that HMR models could be reconstructed during the initial surgical exposure and, therefore, deployed and ready by the early stages of the surgery.

Several components of this pipeline must be improved prior to validation testing for clinical utility. Many of the processes were performed manually but have the potential to be automated. This includes decreasing model generation time, improving ease of deployment, and developing automated or semiautomated registration techniques. Nevertheless, the proposed pipeline demonstrates the attractiveness and potential utility for this technology, which may feasibly lead to a novel visualization method for the clinical operative setting.

Limitations

This proof-of-concept study was performed on a small number of patients. More testing is required to confirm clinically adequate registration accuracy across multiple patients and users with the single most optimized protocol, in addition to a robust evaluation of the learning curve. Because of the small sample size, we did not evaluate error measurements as a function of distance from the fiducial marker or by specific anatomical level. Further, because the registration techniques were employed sequentially, the comparison of registration error between the two techniques was partially confounded by the natural learning curve of HMR registration. In addition, points in space cannot be accurately captured with the HoloLens camera because it lacks stereoscopic still-picture and video recording. Therefore, the pictures and videos recorded from the HoloLens are not an accurate reflection of what is seen by the user. This makes it difficult to visually document each holographic validation point in space and introduces the potential for user bias. However, the next-generation HoloLens 2 will implement stereoscopic still and video recording to better capture the vantage point of the user.
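One way to examine the learning-curve confound noted above would be to estimate the trend in error within a single registration technique, so that the slope is not driven by the switch from hand gestures to the controller. The sketch below fits an ordinary least-squares line to error vs test-point order. It is an illustration only: the function name `ols_slope` and the values are hypothetical, and the source does not specify the regression model behind its reported slope.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope and intercept of ys regressed on xs."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equally sized samples with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("xs must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical controller-only test points in chronological order (not study data).
order = [1, 2, 3, 4, 5]
error_mm = [8.0, 7.0, 6.0, 5.0, 4.0]

slope, intercept = ols_slope(order, error_mm)
print(f"change in error per test point: {slope:.2f} mm")
```

A negative slope within one technique would point to a learning effect that is independent of the change in registration method.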

Additionally, there are known concerns in the AR realm with the stability of holographic models. Intermittent shifts or jitter, known as drift, can occur when the headset user changes his or her gaze.20 This is likely a result of background sensing and tracking that leads to frequent position recalculations, as well as limitations of the HoloLens’s built-in sensors. These issues are known and are expected to improve with software and hardware updates in the near future. Moreover, automated registration tools, such as marker-based tracking with computer vision, would allow automated, continuous reregistration of the hologram, which should further improve registration accuracy and reliability.
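The idea behind marker-based continuous reregistration can be sketched as a composition of rigid transforms: the model's offset relative to the marker is fixed once, and the model's pose in the world is recomputed from the marker's tracked pose on every frame. The code below is a simplified illustration under stated assumptions. It calls no real tracking library, the poses are hypothetical, and rotation is restricted to the vertical axis to keep the example short.

```python
import math


def pose(yaw_deg, tx, ty, tz):
    """4x4 homogeneous transform: rotation about the z axis plus a translation."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def compose(a, b):
    """Matrix product a @ b for 4x4 transforms (apply b first, then a)."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def apply(transform, point):
    """Map a 3D point through a 4x4 homogeneous transform."""
    v = (point[0], point[1], point[2], 1.0)
    return tuple(sum(transform[i][k] * v[k] for k in range(4)) for i in range(3))


# Hypothetical fixed offset of the model relative to the marker (mm),
# determined once at initial registration.
MODEL_IN_MARKER = pose(0.0, 100.0, 0.0, 0.0)


def reregister(marker_in_world):
    """Recompute the model pose in world space from the current marker pose."""
    return compose(marker_in_world, MODEL_IN_MARKER)


# Hypothetical marker poses reported by a tracker on two successive frames.
frames = [pose(0.0, 0.0, 0.0, 0.0), pose(90.0, 10.0, 0.0, 0.0)]
origins = [apply(reregister(m), (0.0, 0.0, 0.0)) for m in frames]
for i, origin in enumerate(origins):
    print(f"frame {i}: model origin at {tuple(round(c, 3) for c in origin)}")
```

Because the model pose is derived from the marker on every frame, any apparent shift in the headset's world estimate would be corrected at the next marker detection rather than accumulating.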

Conclusion

A custom pipeline is described for the generation of intraoperative 3D holographic models during spine surgery. The pipeline uses intraoperatively acquired, low-resolution imaging to generate, deploy, and register holographic models onto patients on the operating table. Registration accuracy dramatically improved with optimization of the pipeline and technique. While significant improvements and advancements need to be made to enable clinical utility, HMR demonstrates significant potential as the next frontier of intraoperative visualization.

Funding

The author(s) received no financial support for the research, authorship, and/or publication of this article.

Footnotes

Declaration of Conflicting Interests

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

References

- 1. Holly LT, Foley KT. Intraoperative spinal navigation. Spine. 2003;28(15 Suppl):S54–S61. doi: 10.1097/01.BRS.0000076899.78522.D9

- 2. Linsler S, Antes S, Senger S, Oertel J. The use of intraoperative computed tomography navigation in pituitary surgery promises a better intraoperative orientation in special cases. Journal of Neurosciences in Rural Practice. 2016;7(4):598–602. doi: 10.4103/0976-3147.186977

- 3. Soliman MAR, Kwan BYM, Jhawar BS. Minimally invasive unilateral percutaneous transfracture fixation of a hangman’s fracture using neuronavigation and intraoperative fluoroscopy. World Neurosurgery. 2019;122:90–95. doi: 10.1016/j.wneu.2018.10.140

- 4. Diaz R, Yoon J, Chen R, Quinones-Hinojosa A, Wharen R, Komotar R. Real-time video-streaming to surgical loupe mounted head-up display for navigated meningioma resection. Turkish Neurosurgery. 2017. Epub ahead of print. doi: 10.5137/1019-5149.JTN.20388-17.1

- 5. Yoon JW, Chen RE, Han PK, Si P, Freeman WD, Pirris SM. Technical feasibility and safety of an head-up display device during spine instrumentation. The International Journal of Medical Robotics and Computer Assisted Surgery. 2017;13(3):e1770. doi: 10.1002/rcs.1770

- 6. Yoon JW, Chen RE, ReFaey K, et al. Technical feasibility and safety of image-guided parieto-occipital ventricular catheter placement with the assistance of a wearable head-up display. The International Journal of Medical Robotics and Computer Assisted Surgery. 2017;13(4):e1836. doi: 10.1002/rcs.1836

- 7. Yoon JW, Richter K, Vivas-Buitrago T, et al. Technical feasibility and safety of ultrasound-guided supraclavicular nerve block with assistance of a wearable head-up display. Regional Anesthesia and Pain Medicine. 2018;43(5):559–561. doi: 10.1097/AAP.0000000000000803

- 8. Badiali G, Ferrari V, Cutolo F, et al. Augmented reality as an aid in maxillofacial surgery: validation of a wearable system allowing maxillary repositioning. Journal of Cranio-Maxillofacial Surgery. 2014;42(8):1970–1976. doi: 10.1016/j.jcms.2014.09.001

- 9. Tabrizi LB, Mahvash M. Augmented reality–guided neurosurgery: Accuracy and intraoperative application of an image projection technique. Journal of Neurosurgery. 2015;123(1):206–211. doi: 10.3171/2014.9.JNS141001

- 10. Frantz T, Jansen B, Duerinck J, Vandemeulebroucke J. Augmenting Microsoft’s HoloLens with Vuforia tracking for neuronavigation. Healthcare Technology Letters. 2018;5(5):221–225. doi: 10.1049/htl.2018.5079

- 11. Li Y, Chen X, Wang N, et al. A wearable mixed-reality holographic computer for guiding external ventricular drain insertion at the bedside. Journal of Neurosurgery. 2019;131:1599–1606. doi: 10.3171/2018.4.JNS18124

- 12. van Doormaal TPC, van Doormaal JAM, Mensink T. Clinical accuracy of holographic navigation using point-based registration on augmented-reality glasses. Operative Neurosurgery. 2019;17(6):588–593. doi: 10.1093/ons/opz094

- 13. Yushkevich PA, Piven J, Hazlett HC, et al. User-guided 3D active contour segmentation of anatomical structures: Significantly improved efficiency and reliability. Neuroimage. 2006;31(3):1116–1128. doi: 10.1016/j.neuroimage.2006.01.015

- 14. Schmidt R, Singh K. Meshmixer: An interface for rapid mesh composition. In: ACM SIGGRAPH 2010 Talks. SIGGRAPH ’10. New York, NY: ACM; 2010;Vol. 6:1–6. doi: 10.1145/1837026.1837034

- 15. Rodrigues AM, Bardella F, Zuffo MK, Leal Neto RM. Integrated approach for geometric modeling and interactive visual analysis of grain structures. Computer-Aided Design. 2018;97:1–14. doi: 10.1016/j.cad.2017.11.001

- 16. Vávra P, Roman J, Zonča P, et al. Recent development of augmented reality in surgery: A review. Journal of Healthcare Engineering. 2017;2017:1. doi: 10.1155/2017/4574172

- 17. Molina CA, Theodore N, Ahmed AK, et al. Augmented reality-assisted pedicle screw insertion: a cadaveric proof-of-concept study. Journal of Neurosurgery: Spine. 2019;31:139–146. doi: 10.3171/2018.12.SPINE181142

- 18. Galetta MS, Leider JD, Divi SN, Goyal DKC, Schroeder GD. Robotics in spinal surgery. Annals of Translational Medicine. 2019;7(Suppl 5):S165. doi: 10.21037/atm.2019.07.93

- 19. Staub BN, Sadrameli SS. The use of robotics in minimally invasive spine surgery. Journal of Spine Surgery. 2019;5(Suppl 1):S31–S40. doi: 10.21037/jss.2019.04.16

- 20. Franzini L, Ribble JC, Keddie AM. Understanding the Hispanic paradox. Ethnicity & Disease. 2001;11(3):496–518.