Abstract

Integration of social cues to initiate adaptive emotional and behavioral responses is a fundamental aspect of animal and human behavior. In humans, social communication includes prominent nonverbal components, such as social touch, gestures and facial expressions. Comparative studies investigating the neural basis of social communication in rodents have historically been centered on olfactory signals and vocalizations, with relatively less focus on non-verbal social cues. Here we outline two exciting research directions. First, we will review recent observations pointing to a role of social facial expressions in rodents. Second, we will outline observations that point to a role of “non-canonical” rodent body language: body posture signals beyond stereotyped displays in aggressive and sexual behavior. In both sections, we will outline how social neuroscience can build on recent advances in machine learning, robotics and microengineering to push these research directions forward towards a holistic systems neurobiology of rodent body language.

Introduction

Many social cues are nonverbal (a smile, a raised eyebrow, a shrug). A failure to correctly process and interpret social cues is thought to underlie social dysfunction in many neuropsychiatric conditions, from negatively biased interpretations of social signals in depression (Weightman et al., 2014) to a near-complete breakdown of social understanding in some individuals with autism spectrum disorder (Klin et al., 2002). A comparative investigation in rodents – where we have advanced tools for monitoring and manipulating neural activity during behavior – could be a powerful way to advance our understanding of the evolution and function of neural circuits for processing social cues (Anderson and Adolphs, 2014).

In general, we know little about the use of posture and gesture in orchestrating social group behavior. A comparative study of body language is an old idea (Darwin, 1872), but the systems neuroscience of rodent body language is still in early days. It is clear that rodents make use of stereotyped body postures and movements in sexual courtship (e.g., female rats darting) and in aggression and dominance (e.g., rat boxing) (Schweinfurth, 2020). However, compared to our detailed knowledge about the processing of socially significant olfactory signals in aggressive (Anderson, 2016), sexual (Lenschow and Lima, 2020) and parental (Kohl, 2020) behaviors, we know much less about how body language signals (touch, movement, postures) are integrated by the rodent brain.

Facial expressions and whisking

Mice and rats display a variety of facial expressions. Both mice (Langford et al., 2010) and rats (Sotocina et al., 2011) make stereotyped expressions (‘grimaces’) with their facial musculature in response to pain and stress: tightening of orbital muscles, squinting eyes and retraction of the ears (Figure 1a). Rats also make facial expressions (forward movement and blushing of the ears) (Finlayson et al., 2016) (Figure 1b) and jumps (Ishiyama and Brecht, 2016) when experiencing positive emotions, such as after tickling. In wild mice, ear posture correlates with their behavior in tests that are thought to measure the animals’ emotional state: approaching a novel odor and exploring the open arms in an elevated plus-maze. Mice with retracted ears behave more cautiously than mice with their ears in an upright, forward position (Lecorps and Féron, 2015). Rats also display different facial expressions when presented with tastants that evoke different emotional responses (e.g. bitter, unpleasant quinine, and sweet and palatable sucrose) (Grill and Norgren, 1978a, 1978b).

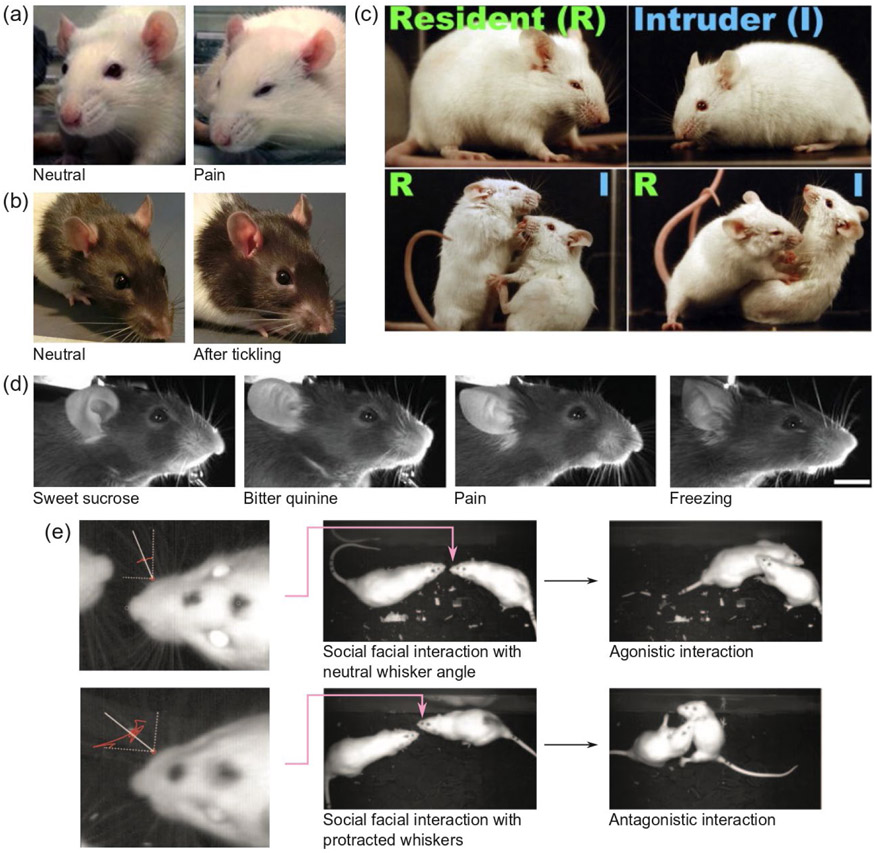

Figure 1: Rodent facial expressions.

(a) Pain grimace in rats: Orbital tightening, cheek flattening, folded, curled ears angled forwards or outwards (Sotocina et al., 2011) (b) Altered facial expression after tickling: Ear blushing and ears angled backwards (Finlayson et al., 2016) (c) In a mouse resident-intruder paradigm, the resident and intruder mice display two different facial expressions maintained during fighting: The resident displays tightened eyes and flattened ears, while the intruder displays widened eyes and erect ears (Defensor et al., 2012) (d) Examples of distinguishable facial expressions in mice: expressions after drinking sweet and bitter liquid, pain and freezing behavior (Dolensek et al., 2020) (e) In rats, whiskers are more protracted in social facial interactions before an aggressive interaction than in social facial interactions before nonaggressive interactions. (Wolfe et al., 2011). Figure permissions pending. Permissions: (a) Reproduced from (Sotocina et al., 2011) under a CC BY 2.0 license, (b) reproduced from (Finlayson et al., 2016) under a CC BY 4.0 license, (c) reproduced from (Defensor et al., 2012) with permission from Elsevier (d) reproduced from (Dolensek et al., 2020) with permission from AAAS (e) reproduced from (Wolfe et al., 2011) with permission from APA.

If rodent facial expressions differ between emotional states, that raises the possibility that these facial cues could be perceived by conspecifics and play a role in social communication. Increasing evidence across species suggests that facial expressions are displayed in social situations, and are distinguishable by conspecifics. For example, ear wiggling is a social signal displayed by female rats during courtship (Erskine, 1989; Vreeburg and Ooms, 1985). Naked mole rats – a eusocial rodent species – have an extensive vocabulary of non-verbal body language, including elaborate facial interactions (e.g. head-on pushing, mouth gaping and tooth fencing) (Lacey et al., 2017). These facial interactions are involved in the control of ‘lazy’ workers (Reeve, 1992) and help maintain reproductive suppression (Clarke and Faulkes, 1997). A landmark study found that when an intruder mouse was placed into the cage of the resident mouse, the two mice displayed two different facial expressions, which they maintained, even during fighting: The resident displayed tightened eyes and flattened ears, while the intruder displayed widened eyes, erect ears and an open mouth (Defensor et al., 2012) (Figure 1c). A recent study found that it was possible to train an image classifier to distinguish between facial expressions in head-fixed mice in a wide range of situations (aversive and palatable tastants, LiCl-induced nausea, painful electric shock, freezing) (Dolensek et al., 2020) (Figure 1d).

Rats are nocturnal (Barnett, 1975), have modest visual acuity (Prusky et al., 2000), and often encounter conspecifics head-on in burrows (Blanchard et al., 2001). This suggests that ethologically they would more often sense the faces of conspecifics with the whiskers rather than by vision. Beyond palpating the face of a social interaction partner, the whiskers themselves might also convey information, social or otherwise. During rat social facial interactions, whiskers are more protracted in aggressive than in nonaggressive interactions (Figure 1e), and female rats whisk with a lower amplitude when meeting a male conspecific than when meeting a female conspecific (Wolfe et al., 2011). During social facial interactions, cessation of sniffing by a subordinate rat decreases the likelihood that a dominant rat will initiate antagonistic behaviors (Wesson, 2013a). It is still unclear what aspects of rat behavior communicate subordination during such a facial interaction: The cessation of sniffing itself (Wesson, 2013b), altered patterns of ultrasonic vocalizations (Assini et al., 2013; Rao et al., 2014), whisking (Wolfe et al., 2011), body posture (Barnett, 1975) or – perhaps – some combination of the recently described, sniff-locked nose-twitching and head-bobbing (Kurnikova et al., 2017).

Positioning, motion, and asymmetry of the mouth, nose and whiskers (Dominiak et al., 2019; Towal and Hartmann, 2006) and rapid whisker twitches (whisker ‘pumps’) (Wallach et al., 2020) are predictive of upcoming motor behavior (e.g., running, turning). Whisker pumps might serve as a social cue during facial interactions. Rats have also been shown to display contagious yawning (Moyaho et al., 2014). Yawning is a social signal in many species (Guggisberg et al., 2010), but we know little about if and how yawning functions as a social signal in rats (Moyaho et al., 2017).

Mice also spontaneously engage in social facial whisker touch (Heckman et al., 2017) and neonatal whisker trimming leads to social behavior deficits in adults (Soumiya et al., 2016). Mice also perform an interesting whisking-related social dominance behavior referred to as ‘whisker barbering’: dominant mice will pin down subordinates, grab their vibrissae by the teeth and pull them out by the roots with a hard tug (Sarna, 2000; Strozik and Festing, 1981).

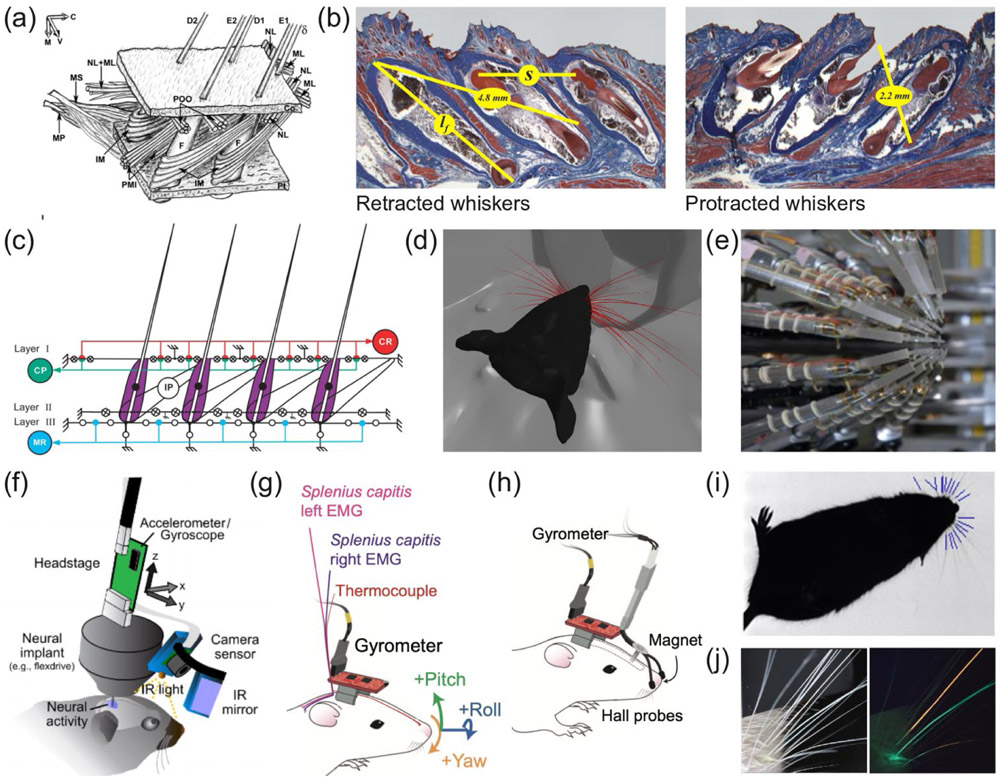

Detailed knowledge about the facial musculature (Haidarliu et al., 2010, 2011, 2012, 2013, 2014; Hill et al., 2008), 3D facial anatomy (Belli et al., 2018; Huet and Hartmann, 2014; Knutsen et al., 2008), and whisker biomechanics (Yang et al., 2019; Zweifel et al., 2019) (Figure 2a-d) might together provide an understanding of the topology of facial expression space, and predict the range of facial expressions that a rodent can produce (Hill et al., 2008; Luo et al., 2020; Sherman et al., 2013; Simony et al., 2010). This would enable the description of a kind of ‘natural scene statistics’ of facial expressions, a powerful analytical framework pioneered in classic investigations of visual cortex (Geisler, 2008). Building on recent approaches in robotic methods of delivering complex, 3D sensory stimuli to whiskers (Goldin et al., 2018; Jacob et al., 2010; Ramirez et al., 2014) (Figure 2e), it might be possible to present complex, naturalistic, and/or full-field patterns of whisker stimulation to estimate facial expression receptive fields in the whisker system. Such an approach would allow us to understand if and how the neural encoding of socially significant whisker stimuli (Bobrov et al., 2014; Ebbesen et al., 2017, 2019; Lenschow and Brecht, 2015; Rao et al., 2014) differs from the encoding of non-social stimuli, such as objects and textures (Maravall and Diamond, 2014; Petersen, 2019).

Figure 2: Towards a holistic systems neuroscience of social facial expressions.

(a) Anatomical drawing of the rat whisker motor plant (Haidarliu et al., 2010) (b) Estimating the functional anatomy of the rat whisker musculature (Hill et al., 2008) (c) Biomechanical model of the rat whisker motor plant (Haidarliu et al., 2011) (d) A computational model of a realistic rat whisker field interacting with a complex scene (Zweifel et al., 2019) (e) A robotic system for simultaneous stimulation of 24 individual whiskers (Goldin et al., 2018) (f) A head-mounted camera system for mice (Meyer et al., 2018) (g,h) Head-mounted, physiological sensor systems for recording of sniffing, nose movements and head-bobbing in rats (Kurnikova et al., 2017) (i) Automatic whisker tracking in a freely moving mouse (Gillespie et al., 2019) (j) Using fluorescent dye to visualize single whiskers within the whisker field (Rigosa et al., 2017). Permissions: (a) reproduced from (Haidarliu et al., 2010) with permission from Wiley and Sons (b) reproduced from (Hill et al., 2008) with permission, copyright 2008 Society for Neuroscience, (c) reproduced from (Haidarliu et al., 2011) with permission from Wiley and Sons, (d) With permission from N. Zweifel and M. J. Z. Hartmann, (e) reproduced from (Goldin et al., 2018) under a CC BY 4.0 license, (f) reproduced from (Meyer et al., 2018) under a CC BY 4.0 license, (g-h) reproduced from (Kurnikova et al., 2017) with permission from Elsevier, (i) reproduced from (Gillespie et al., 2019) with permission from Elsevier, (j) reproduced from (Rigosa et al., 2017) under a CC BY 4.0 license.

Engineering advances in miniaturization now make it possible to record gaze direction and eye movements – and likely also whisker movements – by head-mounted cameras in freely-moving rats (Wallace et al., 2013) and mice (Meyer et al., 2018; Sattler and Wehr, 2020) (Figure 2f). Using this approach, it was recently reported that mice close their eyes when a conspecific is within close distance (Meyer et al., 2020) – an unexpected and interesting observation in the context of making and recognizing facial expressions. With miniaturized, head-mounted thermocouples, accelerometers, gyrometers, and Hall-effect probes, it is possible to quantify sniffing patterns, nose movements, and head-bobbing in freely-moving animals (Kurnikova et al., 2017; Wesson, 2013a) (Figure 2g-h). It remains unclear how these aspects of facial behavior vary during social interactions. As an alternative to using head-mounted cameras to record facial behavior in freely moving animals, a recently described method combines real-time tracking with motorized cameras to capture high-resolution ‘close-up’ images of animals moving in a large 3D arena (Nourizonoz et al., 2020).

Quantifying whisker movements during social facial interactions remains a challenge. Whisker tracking of solitary animals has reached high levels of accuracy. In head-fixed mice with most whiskers trimmed, simultaneous measurements of the three-dimensional shapes and kinematics of eight whiskers can be obtained automatically (Petersen et al., 2020). However, in socially interacting animals (with full, un-trimmed whisker fields), overlapping and occluded whiskers remain a major problem, and thus far social whisking patterns have either been tracked manually (Bobrov et al., 2014; Lenschow and Brecht, 2015; Wolfe et al., 2011) or approximated by automatically tracking the average movement of the whisker field as a whole (Ebbesen et al., 2017). A promising path towards automatic whisker tracking in socially-interacting animals is to combine recent advances in automatic whisker tracking in freely-moving animals (Gillespie et al., 2019) (Figure 2i) with techniques for tracking the movement of single whiskers despite overlaps and occlusions by painting single whiskers with a fluorescent dye (Nashaat et al., 2017; Rigosa et al., 2017) (Figure 2j).

Posture and movement as body language signals

A role of body language in signaling distress

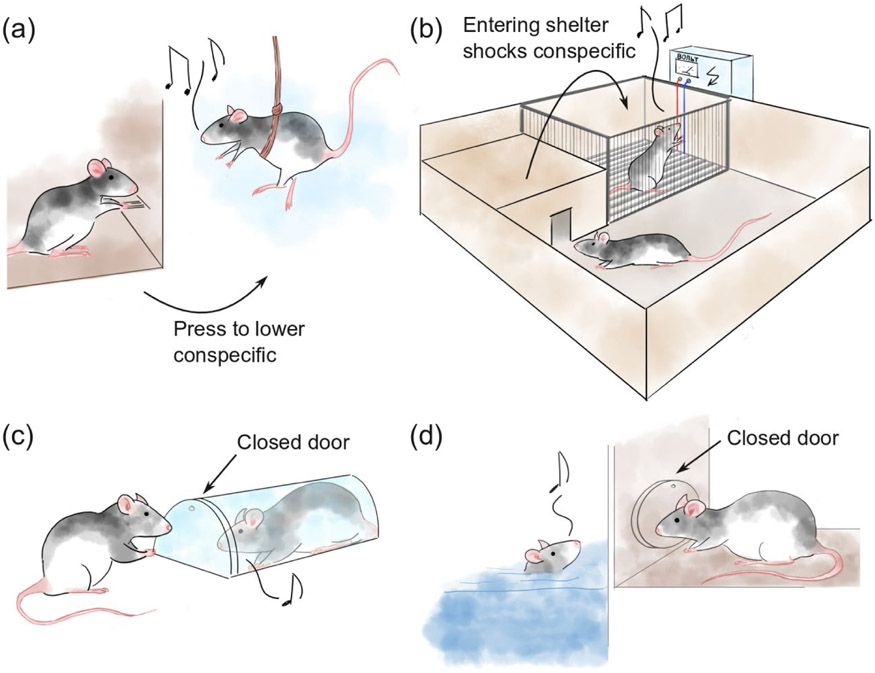

Several studies have shown that rats will actively help conspecifics in distress. Rats will press a lever to lower a distressed and wriggling rat dangling in a harness (prodded with a sharp pencil if it did not exhibit sufficient signs of discomfort) (Rice and Gainer, 1962) (Figure 3a), rats will press a lever to remove a conspecific from a water tank (Rice, 1965), and rats will leave a dark and comforting hiding place and stay in a brightly lit, open arena to ensure that a nearby conspecific does not receive a painful shock (Preobrazhenskaya and Simonov, 1974) (Figure 3b). More recent studies have shown that rats (Bartal et al., 2011) and mice (Ueno et al., 2019a) will open a door to release a conspecific trapped in a small plastic tube (Figure 3c), and that rats will open a door that lets a cagemate escape a pool of water (Sato et al., 2015) (Figure 3d).

Figure 3: Investigating body language signals of distress.

(a) A classic paradigm to investigate active helping in rats: Aversive dangling from a harness. (Rice and Gainer, 1962) (b) Another classic paradigm: One rat has to decide between a brightly lit arena and a dark and comforting shelter. Entering the shelter delivers a shock to a nearby conspecific. (Preobrazhenskaya and Simonov, 1974). (c) The aversive-restraint-tube paradigm: One rat can open a door that lets a conspecific escape a transparent tube. (Bartal et al., 2011) (d) The aversive-swimming-pool paradigm: One rat can open a door that lets a conspecific escape a pool of water. (Sato et al., 2015).

What drives the behavior of the helper animal? In rats, restraint-tube-opening behavior depends on familiarity with the strain of rat in distress (Bartal et al., 2014). Behavioral changes occur after drugging the helper rat, with benzodiazepine sedation leading to longer opening latency (Bartal et al., 2016) and heroin abolishing opening (Tomek et al., 2019). Door-opening latency changes if there are multiple potential helpers, and depends on whether these ‘bystanders’ are sedated (Havlik et al., 2020). In voles, oxytocin receptor knockout delays door-opening for a soaked conspecific (Kitano et al., 2020). Multiple studies have varied the rescue paradigms to clarify what emotional states might motivate door opening and helping behavior. Helpers might be motivated by empathic concern for the distressed, may desire rewarding social interactions, open the door out of curiosity or boredom, or might be irritated by aversive cues from the trapped animal, among other hypotheses (Blystad et al., 2019; Carvalheiro et al., 2019; Cox and Reichel, 2020; Hachiga et al., 2018; Hiura et al., 2018; Schwartz et al., 2017; Silberberg et al., 2014; Silva et al., 2020; Ueno et al., 2019b; Vasconcelos et al., 2012).

Relatedly, the behaviors of the distressed animal might also be an important factor. Trapped animals produce lower-frequency distress calls in the first restraint sessions (Bartal et al., 2011, 2014), but might also display other signs or signals of stress, such as seen in pain and sickness (Barnett, 1975; Kolmogorova et al., 2017). This raises the possibility that other signals such as olfactory cues (Bredy and Barad, 2009; Kiyokawa et al., 2006) or elements of body language such as gesture and posture could be used to signal distress and solicit help. Several studies have found rodents are indeed sensitive to body language signals of distress, such as freezing (Atsak et al., 2011; Cruz et al., 2020), and rats prefer a room decorated with images of conspecifics in a neutral pose rather than a room decorated with images of conspecifics in pain (i.e., facial grimaces and hunched posture) (Nakashima et al., 2015).

Controlled experiments involving robotic animals (Abdai et al., 2018) or virtual animals (Naik et al., 2020) are a powerful way to probe the sensitivity of animals to visual social stimuli. Some studies have simulated body language distress signals by robotic animals (Abdai et al., 2018). Rats will work to release a moderately rat-like robot from a restraint tube, and rats seem to discriminate between robots based on behavior (Quinn et al., 2018). There is ongoing work to develop more complex rat robots, capable of realistic postures and movement patterns (Ishii et al., 2013; Li et al., 2020; Shi et al., 2015).

Instructing social partners through body language

Several studies have investigated the behavior of rats in artificial social games which also might involve body language. Rats will cooperate at rates above chance level in iterated prisoner’s dilemma games (Gardner et al., 1984; Viana et al., 2010; Wood et al., 2016), but the interpretation of such games is complex, since an iterated prisoner’s dilemma can be dominated without any theory of mind (Press and Dyson, 2012). Body language is usually not quantified, but a classic study reported that cooperation would break down if the animals could not see each other, and that rats would engage in specific left- or right-turning feint behaviors apparently to influence the behavior of the partner animal (Gardner et al., 1984). The importance of visual observation has been highlighted in another social coordination nose-poke task (Łopuch and Popik, 2011).
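
To illustrate why above-chance cooperation alone does not require a theory of mind, the following minimal sketch simulates an iterated prisoner’s dilemma between memory-one strategies, which react only to the partner’s previous move. The payoff values and function names are illustrative assumptions, not taken from the cited studies, and the strategies shown are simple textbook rules rather than the strategies analyzed by Press and Dyson (2012).

```python
# Illustrative payoffs (assumed): temptation 5 > reward 3 > punishment 1 > sucker 0.
PAYOFF = {("C", "C"): (3, 3), ("C", "D"): (0, 5),
          ("D", "C"): (5, 0), ("D", "D"): (1, 1)}


def tit_for_tat(partner_last):
    """Cooperate first, then copy the partner's previous move."""
    return "C" if partner_last is None else partner_last


def always_defect(partner_last):
    """Defect regardless of what the partner did."""
    return "D"


def play(strategy_a, strategy_b, n_rounds):
    """Return (score_a, score_b, fraction of cooperative moves)."""
    last_a = last_b = None
    score_a = score_b = n_coop = 0
    for _ in range(n_rounds):
        move_a = strategy_a(last_b)
        move_b = strategy_b(last_a)
        pay_a, pay_b = PAYOFF[(move_a, move_b)]
        score_a += pay_a
        score_b += pay_b
        n_coop += (move_a == "C") + (move_b == "C")
        last_a, last_b = move_a, move_b
    return score_a, score_b, n_coop / (2 * n_rounds)


print(play(tit_for_tat, tit_for_tat, 100))    # sustained mutual cooperation
print(play(tit_for_tat, always_defect, 100))  # cooperation collapses after round 1
```

Two players following the purely reactive rule cooperate on every round, which is why cooperation rates by themselves are hard to interpret without additional behavioral measurements such as body language.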

Multiple studies have found that rats will work to deliver food to conspecifics (Dolivo and Taborsky, 2015; Rutte and Taborsky, 2007, 2008; Schneeberger et al., 2012; Schweinfurth and Taborsky, 2018a, 2018b), and that – when given the option to donate food to conspecifics at no extra cost to themselves – rats will prefer that conspecifics receive food also (Hernandez-Lallement et al., 2015; Kentrop et al., 2020; Márquez et al., 2015; Oberliessen et al., 2016). Many of these studies report observations that are in line with the supposition that rats use body language signals to communicate what they want the ‘chooser’ animal to do, and that the chooser animal is sensitive to these signals. In one study, the likelihood that the chooser rat donated food, at no extra cost to itself, was modulated by the display of food-seeking behavior by the prospective recipients, expressed as poking a nose port and by social interactions through a mesh (Márquez et al., 2015). In another study, where rats could work to deliver food to a conspecific only, the food provided by subject rats correlated with the intensity of movements and body postures displayed by the prospective recipients. These putative body language signals included stretching their paws towards the food, sniffing through the mesh in the direction of the food, and other attention-grabbing behaviors directed at the subject rat (Schweinfurth and Taborsky, 2018a).

Studies investigating the behavior of groups of mice in complex environments have found marked individual differences in displays of social postures and movements (Forkosh et al., 2019; Torquet et al., 2018), and patterns in social interaction partnering (König et al., 2015; Peleh et al., 2019; Shemesh et al., 2013; Weissbrod et al., 2013). However, while postures and movement patterns correlate with social dominance (Forkosh et al., 2019; Wang et al., 2014), it is still unclear if and how body language cues might help establish, maintain, or adjust the dominance hierarchy (Forkosh et al., 2019) or social network of co-habituation (König et al., 2015).

Individual differences in movement and postures during co-housing or colony dynamics might mirror the observation that animals tend to take on different behavioral roles. When rats are moving together in dyads, some become ‘leaders’ and some become ‘followers’ (Weiss et al., 2015). In a test where rats have to dive underwater to collect morsels of food, some become ‘divers’ (swimming and collecting food), and other rats become non-divers which wait for the other animal to bring them food (Grasmuck and Desor, 2002; Krafft et al., 1994). In wild mice performing collective nest building, some mice will become nest-builders (carrying out the vast majority of the work in collecting nesting material) and some mice will only participate weakly or not at all (Serra et al., 2012).

One important set of behavioral roles in group-housed animals is co-parenting and caretaking of infant rodent pups. Parenting behavior and active care for pups is orchestrated by innate circuits to some degree (Kohl, 2020). In the context of body language, however, maternal female mice can solicit the help of sexually experienced males (Liang et al., 2014; Liu et al., 2013; Tachikawa et al., 2013) and virgin females (Ehret et al., 1987; Krishnan et al., 2017; Marlin et al., 2015) for aspects of pup caretaking (e.g., nest building, pup retrieval, crouching, and pup grooming). The parental behaviors expressed by males and female virgins develop with exposure or experience with pups (concaveation) and during co-housing with a dam and litter. The presence of experienced dams accelerates concaveation (Carcea et al., 2020; Marlin et al., 2015) indicating that dams engage in some behaviors or interactions that affect the emergence of co-parenting abilities in males or virgin females. Olfactory and auditory cues from the dam play a role. Blockade of these signals delays the development of co-parenting in males and – even without visual input from the dam – replay of dam vocalizations or dam odors can induce parenting in males (Liu et al., 2013). Body language and motor activity of the dam also contribute, as dams will actively engage virgins in maternal care by ‘shepherding’ the virgins to the nest and pups. Furthermore, dams demonstrate maternal behavior in spontaneous pup retrieval episodes that allow virgin females to learn by observation (Carcea et al., 2020). Active social engagement and demonstration by dams might be a key driver in facilitating fast social learning of co-parenting. Free-living, wild dams selectively choose to communally nurse (Ferrari et al., 2018; Harrison et al., 2018), but we do not yet know the role of body language signals in coordinating co-parenting between dams in outdoor colonies.

The apparently active demonstration of parenting is in contrast to other studies reporting that wild rats do not rapidly acquire new foraging techniques by observation, even if they are performed by conspecifics (Galef, 1982). It is, however, in line with other reported examples of rodents learning by observation (Petrosini et al., 2003). For example, rats can learn to solve a Morris water maze by observing a trained conspecific swim to the hidden platform (Leggio et al., 2000), mice can learn to solve a complex ‘puzzle box’ by observing conspecifics (Carlier and Jamon, 2006), and rats will imitate joystick movements that they have seen a conspecific make to receive a food reward (even though their joystick movements do not actually affect their reward at all) (Heyes et al., 1994).

Pose estimation and quantitative analysis of body language

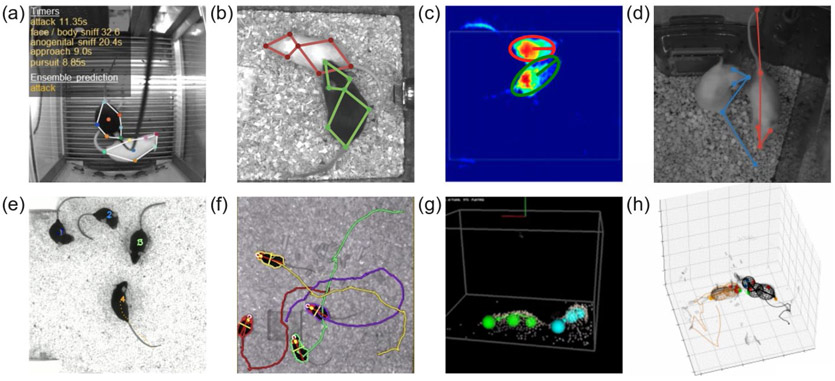

Methodological advances in computer vision and machine learning provide new ways to monitor and analyze body language signals for social behavior. Multiple open source packages for machine-learning based markerless tracking of posture and body parts in single animals have recently been developed, including DeepLabCut (Mathis et al., 2018), LEAP (Pereira et al., 2020), DeepPoseKit (Graving et al., 2019), OptiFlex (Liu et al., 2020), DeepGraphPose (Wu et al., 2020) and others (von Ziegler et al., 2020). However, translating single animal tracking to multiple animals is not straightforward, for at least two reasons. First, the camera view on a specific animal might be occluded by other animals (especially if any have neural implants). Second, even if all body parts are visible, the body parts have to be ‘grouped’ correctly and assigned to the correct animal.

One straightforward way to distinguish two interacting animals is to use animals that are physically marked or of a different coat color (Hong et al., 2015; Nilsson et al., 2020; Segalin et al., 2020) (Figure 4a-c). This is a robust method, but excludes some use-cases (e.g., studies of behavioral genetics that require a specific background or where it is important that animals come from the same litter). Another method uses deep neural networks to recognize body parts and metrics of spatiotemporal continuity to group body parts and maintain tracking of animal identities, in unmarked animals of the same coat color (Pereira et al., 2020) (Figure 4d). Another approach maintains the identities of multiple animals by training a network to recognize subtle differences in the appearance of individual animals (Pérez-Escudero et al., 2014; Romero-Ferrero et al., 2019; Walter and Couzin, 2020) (Figure 4e). Another approach combines the use of implanted RFID chips (Kritzler et al., 2007; Peleh et al., 2019) and the use of depth videography (Aguilar-Rivera et al., 2018; Gerós et al., 2020; Hong et al., 2015; Matsumoto et al., 2013; Sheets et al., 2013; Wiltschko et al., 2015) to track movement patterns and body postures in multiple mice, in real time (Chaumont et al., 2019) (Figure 4f). The RFID-based identity tracking provides a robust cross-validation of animal position (when sufficiently separated), but may interfere with electrophysiological recordings. We have taken a related approach, building on pioneering work in tracking by physical modeling in rats (Aguilar-Rivera et al., 2018; Matsumoto et al., 2013) (Figure 4g), that combines deep learning-based keypoint detection and depth videography in a robust tracking algorithm capable of automatically tracking a 3D model of the posture of interacting mice. This method is compatible with electrophysiology (robust to occlusions and camera artifacts due to wires and a neural recording implant carried by the mouse on the right) (Figure 4h) (Ebbesen and Froemke, 2020).
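
As a rough illustration of how spatiotemporal continuity can be used to maintain animal identities, the sketch below matches the body-center detections in a new video frame to the animals tracked in the previous frame by minimizing the total displacement. This is a deliberately simplified, brute-force stand-in and not the algorithm of any of the cited packages; the function name, the coordinates and the assumption that every animal is detected in every frame are all hypothetical.

```python
from itertools import permutations
from math import dist


def assign_identities(prev_positions, detections):
    """Reorder `detections` so that entry i belongs to animal i.

    prev_positions: (x, y) of each tracked animal in the previous frame.
    detections: unordered (x, y) detections in the current frame.
    Assumes at least as many detections as animals (no full occlusion).
    """
    n_animals = len(prev_positions)
    if len(detections) < n_animals:
        raise ValueError("fewer detections than animals; occlusion not handled")
    best_order, best_cost = None, float("inf")
    # Try every assignment; feasible only for a handful of animals.
    for order in permutations(range(len(detections)), n_animals):
        cost = sum(dist(prev_positions[i], detections[j])
                   for i, j in enumerate(order))
        if cost < best_cost:
            best_order, best_cost = order, cost
    return [detections[j] for j in best_order]


previous = [(10.0, 10.0), (50.0, 50.0)]      # animal 0, animal 1
current = [(52.0, 49.0), (11.0, 12.0)]       # detections in arbitrary order
print(assign_identities(previous, current))  # [(11.0, 12.0), (52.0, 49.0)]
```

A purely distance-based rule of this kind fails exactly where social behavior is most interesting, namely when animals are in close contact or occlude each other, which is why the approaches described above add appearance cues, RFID tags, depth information or physical body models.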

Figure 4: New computational methods for automatically estimating body postures in socially interacting rodents.

(a,b) Disambiguating body parts of two mice by their coat color (a: (Nilsson et al., 2020), b: (Segalin et al., 2020)). (c) Imaging mice of different coat colors and estimating their body postures by approximating the animals as ellipses in a simultaneously acquired depth image (only depth image shown) (Hong et al., 2015). (d) Tracking animals of the same coat color by using a spatiotemporal loss function to assign detected body parts to the correct animals (Pereira et al., 2020) (e) Tracking the identity of multiple animals by training a network to recognize subtle differences in each individual animal’s appearance (Romero-Ferrero et al., 2019) (f) Combining depth videography with implanted RFID-chips to track and disambiguate multiple mice in real time (Chaumont et al., 2019) (g) Combined depth videography and physical modeling in a computational tool for semi-automatic tracking of body postures in interacting rats (Aguilar-Rivera et al., 2018; Matsumoto et al., 2013). (h) Combining deep learning, physical modeling and a particle-filter based tracking algorithm with spatiotemporal constraints to automatically track the body postures of interacting mice, compatible with electrophysiology (robust to occlusions and camera artifacts due to wires and a neural recording implant carried by the mouse on the right) (Ebbesen and Froemke, 2020). Permissions: (a) With permission from S. R. O. Nilsson & S. A. Golden. (b) With permission from A. Kennedy. (c) reproduced from (Hong et al., 2015) with permission from National Academy of Sciences (d) With permission from T. Pereira & J. Shaevitz. (e) With permission from the idtracker.ai team, (f) reproduced from (Chaumont et al., 2019) with permission from Springer Nature, (g) reproduced from (Matsumoto et al., 2013) under a CC BY license.

Beyond recording raw postural and movement data, machine learning methods have also provided new ways to segment raw tracking data into behavioral categories in a principled and objective manner, and to discover behavioral structure – the building blocks of body language – in a purely data-driven way. The latter is especially promising, because it could allow discovery of new postures and movement patterns, purely from statistical properties in the behavioral kinematics and agnostic to potential observer bias.

A very effective way of automatically segmenting raw tracking data is to use a supervised approach and train a classifier to reproduce human annotation of behavioral categories (Hong et al., 2015; Nilsson et al., 2020; Segalin et al., 2020). When using modern, deep-learning-based classifiers and large training sets (Nilsson et al., 2020; Segalin et al., 2020), this approach provides a precise way to automatically annotate behavioral data. Unsupervised approaches, by contrast, learn the behavioral categories from the data itself. Tracked behavioral features from an animal (e.g., 3D coordinates of many body parts) form a high-dimensional time series. To find structure in such data, it is possible to draw on recent work in laboratory studies of worm and insect behavior (Brown and Bivort, 2018; Calhoun and Murthy, 2017) and field ethology (Patterson et al., 2017; Smith and Pinter-Wollman, 2020).
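
The logic of the supervised approach can be sketched with a toy example: a classifier is fit to frames that a human has labeled, and is then used to label new frames. The sketch below uses a nearest-centroid rule as a minimal stand-in for the deep-learning-based classifiers used in the cited studies; the two per-frame features (inter-animal distance and running speed), their values and the behavior names are hypothetical.

```python
from math import dist


def fit_centroids(features, labels):
    """Average the feature vectors of each human-annotated category."""
    sums, counts = {}, {}
    for x, y in zip(features, labels):
        acc = sums.setdefault(y, [0.0] * len(x))
        for k, value in enumerate(x):
            acc[k] += value
        counts[y] = counts.get(y, 0) + 1
    return {y: tuple(v / counts[y] for v in acc) for y, acc in sums.items()}


def predict(centroids, x):
    """Assign a frame to the category with the closest centroid."""
    return min(centroids, key=lambda y: dist(centroids[y], x))


# Hypothetical training frames: (inter-animal distance in cm, speed in cm/s).
train_features = [(2.0, 1.0), (3.0, 2.0), (30.0, 20.0), (28.0, 24.0)]
train_labels = ["social contact", "social contact", "locomotion", "locomotion"]

centroids = fit_centroids(train_features, train_labels)
print(predict(centroids, (4.0, 3.0)))    # social contact
print(predict(centroids, (25.0, 18.0)))  # locomotion
```

By construction, a classifier of this kind can only reproduce the categories that the annotator defined, which is the main motivation for the unsupervised approaches discussed next.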

One approach to discover behavioral categories is to look for ‘building blocks’ of the observed behaviors that re-occur. To this end, an elegant and robust approach is to perform a nonlinear projection from the high-dimensional space of all tracked body part coordinates (often augmented with derived features, such as time derivatives and spectral components) down to a low-dimensional 2D (Berman et al., 2014, 2016; Braun et al., 2010; Johnson et al., 2020; Klibaite and Shaevitz, 2019; Klibaite et al., 2017; Pereira et al., 2019; Werkhoven et al., 2019; York et al., 2020) or 3D manifold (Hsu and Yttri, 2019; Mearns et al., 2020) in a manner that preserves local similarity (e.g. t-SNE (Maaten and Hinton, 2008)). On this low-dimensional manifold, similar behaviors will form clusters that can be identified by density-based clustering algorithms. The generated clusters are manually inspected and curated (e.g. merged or split) and assigned names (e.g., ‘locomotion’, ‘grooming’, etc.).
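
The clustering step of this pipeline can be sketched as follows, assuming that the nonlinear projection (e.g. by t-SNE) has already been computed and that each video frame is represented by a point in the 2D embedding. The sketch implements a minimal DBSCAN-style procedure as one example of a density-based clustering algorithm; the cited studies may use other density-based procedures, and the embedding coordinates and parameter values are hypothetical.

```python
from math import dist


def dbscan(points, eps, min_pts):
    """Return one cluster label per point; -1 marks low-density points."""
    labels = [None] * len(points)

    def neighbors(i):
        # Indices of all points within distance eps of point i (including i).
        return [j for j, q in enumerate(points) if dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # provisionally noise; may become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point of the current cluster
            if labels[j] is not None:
                continue
            labels[j] = cluster
            reachable = neighbors(j)
            if len(reachable) >= min_pts:  # j is a dense 'core' point
                queue.extend(reachable)
    return labels


# Hypothetical 2D embedding: two dense groups of frames and one isolated frame.
embedding = [(0, 0), (0, 1), (1, 0), (1, 1),
             (10, 10), (10, 11), (11, 10), (11, 11),
             (5, 5)]
print(dbscan(embedding, eps=1.5, min_pts=3))  # [0, 0, 0, 0, 1, 1, 1, 1, -1]
```

The resulting labels are arbitrary integers; assigning them names such as ‘locomotion’ or ‘grooming’ still requires the manual inspection and curation described above.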

Another approach to discover behavioral categories is to define a generative model – e.g., some flavor of state space model – and fit this model to the high-dimensional time series of tracked body features (Adam et al., 2019; Calhoun et al., 2019; DeRuiter et al., 2016; Ebbesen and Froemke, 2020; Heiligenberg, 1973; Katsov et al., 2017; Macdonald and Raubenheimer, 1995; Markowitz et al., 2018; Tao et al., 2019; Wiltschko et al., 2015, 2020). This approach is attractive, because it is highly expressive: It is possible to define very complex models, e.g., by adding autoregressive terms (Wiltschko et al., 2015), by allowing for complex hidden dynamics (Linderman et al., 2019), by incorporating nested structures (Tao et al., 2019), and by including nonlinear transformations (Calhoun et al., 2019). It is also possible to explicitly incorporate knowledge about the animal’s anatomy, by writing a full generative model of the animal’s body itself, akin to (Johnson et al., 2020; Merel et al., 2019). However, these methods also have drawbacks. First, complex models quickly become prohibitively computationally expensive to fit to data. Fitting can be accelerated, e.g., by using fast and efficient modern sampling algorithms (Leos-Barajas and Michelot, 2018) or GPU-accelerated variational inference (Ebbesen and Froemke, 2020), but even these methods often show poor mixing/convergence for complex models. Second, even if a model is well fit to data, there is no principled way to discover what the ‘true’ latent structure is (e.g., the true number of hidden states or transition graph structure) (Adam et al., 2019; Fox et al., 2010; Li and Bolker, 2017; Pohle et al., 2017). Thus, for example, the number of hidden states in a state space model of behavior – i.e., the number of different behavioral categories – has to be set using a heuristic, e.g., by fitting a model with the number of latent states as a free parameter and then choosing a cutoff (Markowitz et al., 2018; Wiltschko et al., 2015, 2020), by fixing the number of states based on inspection of raw data and the desired coarseness of the model (Ebbesen and Froemke, 2020; Katsov et al., 2017; Tao et al., 2019), or – in a very elegant approach – by comparing models with different latent structure according to their ability to capture multiple aspects of the observed data, such as both the most likely state and transitions between states (Calhoun et al., 2019).
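To make the model-comparison problem concrete, the following is a minimal sketch of how the likelihood of a behavioral sequence under a hidden Markov model can be computed with the scaled forward algorithm. It is an illustration only and not the method of any of the cited studies: the function name `hmm_log_likelihood`, the discrete observation symbols (0 = 'still', 1 = 'moving') and all parameter values are assumptions chosen for the example.

```python
import math

def hmm_log_likelihood(obs, start, trans, emit):
    """Log-likelihood of a discrete observation sequence under an HMM,
    computed with the scaled forward algorithm.

    obs   : list of integer observation symbols
    start : start[k]    = P(state_0 = k)
    trans : trans[j][k] = P(state_t = k | state_{t-1} = j)
    emit  : emit[k][s]  = P(obs = s | state = k)
    """
    n_states = len(start)
    # Forward variable at t = 0 (not yet normalized)
    alpha = [start[k] * emit[k][obs[0]] for k in range(n_states)]
    log_lik = 0.0
    for t in range(len(obs)):
        if t > 0:
            # Propagate through the transition matrix, then weight by emission
            alpha = [
                emit[k][obs[t]] * sum(alpha[j] * trans[j][k] for j in range(n_states))
                for k in range(n_states)
            ]
        # The normalizer is P(obs_t | obs_0..t-1); its log accumulates the likelihood
        scale = sum(alpha)
        log_lik += math.log(scale)
        alpha = [a / scale for a in alpha]
    return log_lik

# Hypothetical toy sequence: a bout of 'still' (0) followed by a bout of 'moving' (1)
sequence = [0, 0, 0, 0, 1, 1, 1, 1]

# One-state model: both symbols equally likely at every time step
ll_one = hmm_log_likelihood(sequence, [1.0], [[1.0]], [[0.5, 0.5]])

# Two-state 'sticky' model: each state prefers one symbol and tends to persist
ll_two = hmm_log_likelihood(
    sequence,
    [0.5, 0.5],
    [[0.9, 0.1], [0.1, 0.9]],
    [[0.9, 0.1], [0.1, 0.9]],
)
```

In this toy case the two-state model assigns the sequence a higher likelihood than the one-state model. For fitted nested models, adding states can never lower the maximized likelihood, which is one reason why the raw likelihood alone cannot determine the number of behavioral categories and a heuristic of the kind described above is needed.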

Machine learning based approaches for behavioral tracking and analysis are in continual development along several directions that are of particular interest to the analysis of socially-interacting animals. For example, there are several methods for estimating 3D locations of body parts by triangulation of multiple simultaneous 2D views of the animal (Bala et al., 2020; Günel et al., 2019; Nath et al., 2019; Zimmermann et al., 2020), but such triangulation methods are sensitive to occlusions and thus difficult to use in interacting animals. A recent report showed that after having collected one good ‘ground-truth’ multi-view 3D dataset, it was possible to train a network to predict the 3D posture of an animal from a single 2D view only (Gosztolai et al., 2020). Building upon work in humans, it might even be possible to learn 3D body skeletons from only 2D views, i.e., without the need to capture ground-truth 3D data from multiple cameras in the first place (Novotny et al., 2019). Such methods for estimating the 3D posture from a single 2D view could be a very powerful way to deal with camera occlusions in studies of interacting animals.
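The sensitivity of triangulation to occlusions follows directly from the geometry. As a minimal sketch, assuming calibrated pinhole cameras described by 3x4 projection matrices, a body part can be triangulated with the standard linear (direct linear transform) method shown below. The function names `triangulate` and `project`, the camera matrices and the test point are all hypothetical and are not taken from the cited toolboxes.

```python
import numpy as np

def triangulate(proj_mats, points_2d):
    """Linear (DLT) triangulation of one 3D point from two or more views.

    proj_mats : list of 3x4 camera projection matrices
    points_2d : list of (u, v) image coordinates, one per camera
    """
    if len(proj_mats) < 2:
        # A single view only constrains the point to a ray, not a location
        raise ValueError("triangulation needs at least two unoccluded views")
    rows = []
    for P, (u, v) in zip(proj_mats, points_2d):
        P = np.asarray(P, dtype=float)
        # Each view contributes two linear constraints on the homogeneous point
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    # The solution is the right singular vector with the smallest singular value
    _, _, vt = np.linalg.svd(np.stack(rows))
    X = vt[-1]
    return X[:3] / X[3]

def project(P, point_3d):
    """Project a 3D point into a camera (helper for the toy example)."""
    x = P @ np.append(point_3d, 1.0)
    return x[:2] / x[2]

# Hypothetical two-camera rig: identical orientation, second camera shifted along x
cam1 = np.hstack([np.eye(3), np.zeros((3, 1))])
cam2 = np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])

true_point = np.array([0.2, -0.1, 2.0])  # e.g., the tip of the snout
views = [project(cam1, true_point), project(cam2, true_point)]
estimate = triangulate([cam1, cam2], views)
```

If one animal blocks a body part of the other in all but one camera, the linear system above becomes underdetermined, which is why single-view 3D estimation is attractive for interacting animals.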

An elegant way to improve unsupervised behavioral clustering is to do everything in a single operation, whereby a deep neural net simultaneously learns to project the data onto a low-dimensional manifold and estimate an optimal number of latent clusters according to a single objective function (Graving and Couzin, 2020; Luxem et al., 2020). Another promising direction is to use a dictionary-based approach to identify behavioral categories as sequence ‘motifs’ in the raw tracking data (Reddy et al., 2020).

When analyzing the behavior of single animals, some studies have eschewed body part tracking altogether and identified behavioral categories by fitting state space models directly to video data (Batty et al., 2019; Markowitz et al., 2018; Wiltschko et al., 2015, 2020) or by training a network to replicate human labeling directly from raw video (Bohnslav et al., 2020). It would be very useful if these approaches could be modified to handle multiple animals in the same video. This challenge is difficult, not just because of occlusions, but because multiple animals are interacting and thus will have complicated between-animal statistics. Writing a generative model of two animals is more challenging than utilizing two copies of a generative model of a solitary animal. In fact, to understand the structure of rodent body language, and its neural basis, these between-animal statistics are critical to document in high resolution. For example, running towards a conspecific and running away from a conspecific have very different social “meanings”, but may be identical in the kinematic space of the single animal, if that animal is modeled in isolation.
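The running example can be illustrated with a small sketch. Assuming 2D positions and velocities from any tracking method, one simple between-animal feature is the component of an animal's velocity that points towards its partner. The function name `approach_speed` and the coordinates below are hypothetical.

```python
import math

def approach_speed(pos_a, vel_a, pos_b):
    """Component of animal A's velocity directed towards animal B.

    Positive values mean A is approaching B, negative values mean A is
    moving away. Positions and velocities are (x, y) tuples.
    """
    dx, dy = pos_b[0] - pos_a[0], pos_b[1] - pos_a[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        return 0.0  # direction to partner is undefined when positions coincide
    # Project the velocity onto the unit vector pointing from A to B
    return (vel_a[0] * dx + vel_a[1] * dy) / dist

# Animal A runs with the same velocity in both scenarios
pos_a, vel_a = (0.0, 0.0), (1.0, 0.0)
own_speed = math.hypot(*vel_a)

towards = approach_speed(pos_a, vel_a, pos_b=(5.0, 0.0))   # partner ahead
away = approach_speed(pos_a, vel_a, pos_b=(-5.0, 0.0))     # partner behind
```

Here `own_speed` is identical in both scenarios, whereas `towards` and `away` have opposite signs. A model that only sees the single animal's kinematics cannot separate the two cases.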

Mathematical methods for understanding the behavior of interacting animals are still in active development, with many important and open questions to work on. Recent reports have used unsupervised methods to elucidate how the behavior of interacting Drosophila depends on the relative spatial location of the interacting animals (Klibaite et al., 2017) and the animals’ behavioral state (e.g., courting or not) (Klibaite and Shaevitz, 2019). In ethology, there is related work in the use of information theory (Pilkiewicz et al., 2020), modeling (Sumpter et al., 2012) and network theory (Weiss et al., 2020) to understand the role of social interactions in determining collective movement, e.g., in fish (Rosenthal et al., 2015) and baboons (Strandburg-Peshkin et al., 2015). One recent study combined information theory and presentation of robotic conspecifics to understand the statistics of dyadic interactions in zebrafish (Karakaya et al., 2020) and another study used purely statistical methods to discover that rats rely on social information from conspecifics when exploring a maze (Nagy et al., 2020).

Conclusions

As outlined above, some methods for automated behavioral analysis – e.g., those that discover behavioral structure directly from raw video (Batty et al., 2019; Bohnslav et al., 2020; Wiltschko et al., 2015) – do not return an explicit physical ‘body model’ of the animal; only discrete behavioral categories. For many biological questions, such an “ethogram-centric” view has no drawbacks, but when relating neural data to behavior, continuous information about movement and posture kinematics can be critical. Neural activity is modulated by motor signals (Georgopoulos et al., 1982; Kropff et al., 2015; Parker et al., 2020) and vestibular signals (Angelaki et al., 2020; Kalaska, 1988; Mimica et al., 2018) in many brain areas. To understand how neural circuits process body language cues during social interactions, these “low-level” motor and posture related confounds must be regressed out. For example, it is in principle not enough to know that activity in a brain region is different during mutual allogrooming than during boxing to conclude that a neural region is responding to a difference in social ‘meaning’ (e.g., aggressive, but not agonistic behaviors). Differences in neural activity between behavioral categories might just as well be related simply to “low-level” differences in movements and postures made by the animals in those different behavioral categories.

Regressing out confounding low-level motor and postural signals is a difficult task. Without a body model, it is possible to regress out some variance by regressing the neural activity onto variance in the raw video itself, e.g., regressing out activity related to face movement by regressing onto principal components of a video of the face (Musall et al., 2019; Stringer et al., 2019). However, movement and posture signals are generally aligned to the animal’s own body in some form of egocentric frame of reference, e.g., to muscles, posture or movement trajectories (Omrani et al., 2017). The transformation from body to video is highly non-linear and difficult to discover automatically.
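As a minimal sketch of the video-based strategy, the snippet below regresses a neural signal onto the leading principal components of a video and keeps the residual. It is a simplified linear illustration in the spirit of the cited work, not a reimplementation of it: the function name `regress_out`, the synthetic 'video', the 'social' signal and all parameter values are assumptions.

```python
import numpy as np

def regress_out(neural, video, n_components=10):
    """Remove the part of a neural signal that is linearly predictable
    from the leading principal components of a video.

    neural : array of shape (T,) or (T, n_neurons)
    video  : array of shape (T, n_pixels), one flattened frame per row
    """
    video_centered = video - video.mean(axis=0)
    # PCA via SVD: the columns of u * s are the PC time courses
    u, s, _ = np.linalg.svd(video_centered, full_matrices=False)
    pcs = u[:, :n_components] * s[:n_components]
    # Ordinary least squares with an intercept term
    design = np.column_stack([np.ones(len(pcs)), pcs])
    beta, *_ = np.linalg.lstsq(design, neural, rcond=None)
    return neural - design @ beta

# Synthetic data: two latent 'movement' signals drive every pixel of the video
rng = np.random.default_rng(0)
n_frames, n_pixels = 500, 20
movement = rng.standard_normal((n_frames, 2))
video = movement @ rng.standard_normal((2, n_pixels))

# The simulated neuron responds to movement and to an independent 'social' signal
social = rng.standard_normal(n_frames)
neural = 3.0 * movement[:, 0] + social + 0.1 * rng.standard_normal(n_frames)

residual = regress_out(neural, video, n_components=2)
corr_movement = np.corrcoef(residual, movement[:, 0])[0, 1]
corr_social = np.corrcoef(residual, social)[0, 1]
```

In this idealized case the video is an exactly linear function of the movement signals, so the residual is uncorrelated with movement while the 'social' component survives. With real video the mapping from body to pixels is non-linear, so a linear regression of this kind can only remove part of the movement-related variance.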

There are related complexities when interpreting differences in neural activity in social situations that are associated with sensory input, e.g., social events that include vocalizations and social touch. Social touch widely modulates the brain from the hypothalamus (Tang et al., 2020) to frontal and sensory cortices (Ebbesen et al., 2019). Moreover – in the context of understanding the structure of body language – it is likely essential to know if a close contact between animals included a social touch or not. While behavioral tracking methods that can estimate the animal’s 3D posture as a “skeleton” of body points are suited for regressing out signals due to the animal’s own posture and movement, a full, deformable 3D surface model of the animal is required to measure social body touch. To this end, there are also promising machine learning methods on the horizon. For example, starting from a detailed, deformable 3D model of the animal’s shape and color, it is possible to extract a detailed 3D model of an animal’s body surface from a single 2D view, even in complex images (Badger et al., 2020; Biggs et al., 2018; Kearney et al., 2020; Zuffi et al., 2017, 2018, 2019).

Rodents display a wide range of facial expressions (grimaces and whisker movements), including during social interactions. Multiple observations suggest that body postures and movements of conspecifics function as important social signals. Recent major advances in machine-learning methods for behavioral analysis and in microengineering of behavioral sensors are making it possible to quantify facial expressions and body postures during complex social interactions. These data will raise new questions about the neural basis of social cognition in rodents and help us understand the comparative neurobiology of body language.

Acknowledgements

This work was supported by The Novo Nordisk Foundation (C.L.E.), the BRAIN Initiative (NS107616 to R.C.F.) and a Howard Hughes Medical Institute Faculty Scholarship (R.C.F.).

Footnotes

Conflict of interest statement

The authors declare no conflicts of interest.

References

- Abdai J, Korcsok B, Korondi P, and Miklósi Á (2018). Methodological challenges of the use of robots in ethological research. Anim. Behav. Cogn 5, 326–340.

- Adam T, Griffiths CA, Leos-Barajas V, Meese EN, Lowe CG, Blackwell PG, Righton D, and Langrock R (2019). Joint modelling of multi-scale animal movement data using hierarchical hidden Markov models. Methods Ecol. Evol 10, 1536–1550.

- Aguilar-Rivera M, Gygi E, Thai A, Matsumoto J, Nishijo H, Coleman TP, Quinn LK, and Chiba AA (2018). Real-time tools for the classification of social behavior and correlated brain activity in rodents. In Program No. 520.03. 2018, (San Diego, CA: Society for Neuroscience), p.

- Anderson DJ (2016). Circuit modules linking internal states and social behaviour in flies and mice. Nat. Rev. Neurosci 17, 692–704.

- Anderson DJ, and Adolphs R (2014). A Framework for Studying Emotions across Species. Cell 157, 187–200.

- Angelaki DE, Ng J, Abrego AM, Cham HX, Asprodini EK, Dickman JD, and Laurens J (2020). A gravity-based three-dimensional compass in the mouse brain. Nat. Commun 11, 1855.

- Assini R, Sirotin YB, and Laplagne DA (2013). Rapid triggering of vocalizations following social interactions. Curr. Biol 23, R996–R997.

- Atsak P, Orre M, Bakker P, Cerliani L, Roozendaal B, Gazzola V, Moita M, and Keysers C (2011). Experience modulates vicarious freezing in rats: A model for empathy. PLoS ONE 6.

- Badger M, Wang Y, Modh A, Perkes A, Kolotouros N, Pfrommer BG, Schmidt MF, and Daniilidis K (2020). 3D Bird Reconstruction: a Dataset, Model, and Shape Recovery from a Single View. ArXiv200806133 Cs.# Uses a combination of deep learning and physical modeling to estimate the 3D body pose of single birds from a single camera view and multiple, socially interacting birds from multi-camera views.

- Bala PC, Eisenreich BR, Yoo SBM, Hayden BY, Park HS, and Zimmermann J (2020). OpenMonkeyStudio: Automated Markerless Pose Estimation in Freely Moving Macaques. BioRxiv 2020.01.31.928861.

- Barnett SA (1975). The rat: a study in behavior (Chicago: University of Chicago Press).

- Bartal IB-A, Decety J, and Mason P (2011). Empathy and Pro-Social Behavior in Rats. Science 334, 1427–1430.

- Bartal IB-A, Rodgers DA, Bernardez Sarria MS, Decety J, and Mason P (2014). Pro-social behavior in rats is modulated by social experience. ELife 3, 1–16.

- Bartal IBA, Shan H, Molasky NMR, Murray TM, Williams JZ, Decety J, and Mason P (2016). Anxiolytic treatment impairs helping behavior in rats. Front. Psychol 7, 1–14.

- Batty E, Whiteway M, Saxena S, Biderman D, Abe T, Musall S, Gillis W, Markowitz J, Churchland A, Cunningham JP, et al. (2019). BehaveNet: nonlinear embedding and Bayesian neural decoding of behavioral videos. In Advances in Neural Information Processing Systems 32, Wallach H, Larochelle H, Beygelzimer A, d'Alché-Buc F, Fox E, and Garnett R, eds. (Curran Associates, Inc.), pp. 15706–15717.

- Belli HM, Bresee CS, Graff MM, and Hartmann MJZ (2018). Quantifying the three-dimensional facial morphology of the laboratory rat with a focus on the vibrissae. PloS One 13, e0194981.

- Berman GJ, Choi DM, Bialek W, and Shaevitz JW (2014). Mapping the stereotyped behaviour of freely moving fruit flies. J. R. Soc. Interface 11, 20140672.## Pioneered the use of wavelet filtering and nonlinear embedding for analysis of animal behavior.

- Berman GJ, Bialek W, and Shaevitz JW (2016). Predictability and hierarchy in Drosophila behavior. Proc. Natl. Acad. Sci 113, 1–6.

- Biggs B, Roddick T, Fitzgibbon A, and Cipolla R (2018). Creatures great and SMAL: Recovering the shape and motion of animals from video. ArXiv181105804 Cs.

- Blanchard RJ, Dulloog L, Markham C, Nishimura O, Nikulina Compton J, Jun A, Han C, and Blanchard DC (2001). Sexual and aggressive interactions in a visible burrow system with provisioned burrows. Physiol. Behav 72, 245–254.

- Blystad MH, Andersen D, and Johansen EB (2019). Female rats release a trapped cagemate following shaping of the door opening response: Opening latency when the restrainer was baited with food, was empty, or contained a cagemate. PLOS ONE 14, e0223039.

- Bobrov E, Wolfe J, Rao RP, and Brecht M (2014). The representation of social facial touch in rat barrel cortex. Curr. Biol 24, 109–115.

- Bohnslav JP, Wimalasena NK, Clausing KJ, Yarmolinksy D, Cruz T, Chiappe E, Orefice LL, Woolf CJ, and Harvey CD (2020). DeepEthogram: a machine learning pipeline for supervised behavior classification from raw pixels (Neuroscience).

- Braun E, Geurten B, and Egelhaaf M (2010). Identifying Prototypical Components in Behaviour Using Clustering Algorithms. PLOS ONE 5, e9361.

- Bredy TW, and Barad M (2009). Social modulation of associative fear learning by pheromone communication. Learn. Mem. Cold Spring Harb. N 16, 12–18.

- Brown AEX, and de Bivort B (2018). Ethology as a physical science. Nat. Phys 14, 653–657.

- Calhoun AJ, and Murthy M (2017). Quantifying behavior to solve sensorimotor transformations: advances from worms and flies. Curr. Opin. Neurobiol 46, 90–98.

- Calhoun AJ, Pillow JW, and Murthy M (2019). Unsupervised identification of the internal states that shape natural behavior. Nat. Neurosci 22, 2040–2049.## Used an elegant permutation test to estimate the number of hidden states when fitting a state-space model of drosophila behavior to data.

- Carcea I, Caraballo NL, Marlin BJ, Ooyama R, Riceberg JS, Navarro JMM, Opendak M, Diaz VE, Schuster L, Torres MIA, et al. (2020). Oxytocin Neurons Enable Social Transmission of Maternal Behavior. BioRxiv 845495.# Found that nursing mouse dams appear to actively display pup-retrieval to cohabiting virgin female animals, and coax the virgins to be near the nest with pups.

- Carlier P, and Jamon M (2006). Observational learning in C57BL/6j mice. Behav. Brain Res 174, 125–131.

- Carvalheiro J, Seara-cardoso A, Mesquita AR, Sousa LD, Oliveira P, Summavielle T, and Magalhães A (2019). Helping Behavior in Rats (Rattus norvegicus) When an Escape Alternative Is Present.

- Chaumont F. de, Ey E, Torquet N, Lagache T, Dallongeville S, Imbert A, Legou T, Sourd AML, Faure P, Bourgeron T, et al. (2019). Real-time analysis of the behaviour of groups of mice via a depth-sensing camera and machine learning. Nat. Biomed. Eng. 3, 930–942.# Uses a combination of depth videography and implanted RFID-chips to allow real-time tracking of multiple mice, with RFID-based detection and correction of tracking errors.

- Clarke FM, and Faulkes CG (1997). Dominance and queen succession in captive colonies of the eusocial naked mole–rat, Heterocephalus glaber. Proc. R. Soc. Lond. B Biol. Sci 264, 993–1000.

- Cox SS, and Reichel CM (2020). Rats display empathic behavior independent of the opportunity for social interaction. Neuropsychopharmacology 45, 1097–1104.

- Cruz A, Heinemans M, Márquez C, and Moita MA (2020). Freezing Displayed by Others Is a Learned Cue of Danger Resulting from Co-experiencing Own Freezing and Shock. Curr. Biol 30, 1128–1135.e6.

- Darwin C (1872). The Expression of the Emotions in Man and Animals (London: John Murray, Albemarle Street).

- Defensor EB, Corley MJ, Blanchard RJ, and Blanchard DC (2012). Facial expressions of mice in aggressive and fearful contexts. Physiol. Behav 107, 680–685.## Described facial expression in socially interacting mice.

- DeRuiter SL, Langrock R, Skirbutas T, Goldbogen JA, Chalambokidis J, Friedlaender AS, and Southall BL (2016). A multivariate mixed hidden Markov model to analyze blue whale diving behaviour during controlled sound exposures. ArXiv160206570 Q-Bio Stat.

- Dolensek N, Gehrlach DA, Klein AS, and Gogolla N (2020). Facial expressions of emotion states and their neuronal correlates in mice. Science 368, 89–94.#Investigated the neural correlates and visual discriminability of a wide range of mouse facial expressions.

- Dolivo V, and Taborsky M (2015). Cooperation among Norway Rats: The Importance of Visual Cues for Reciprocal Cooperation, and the Role of Coercion. Ethology 121, 1071–1080.

- Dominiak SE, Nashaat MA, Sehara K, Oraby H, Larkum ME, and Sachdev RNS (2019). Whisking Asymmetry Signals Motor Preparation and the Behavioral State of Mice. J. Neurosci. 39, 9818–9830.

- Ebbesen CL, and Froemke RC (2020). Automatic tracking of mouse social posture dynamics by 3D videography, deep learning and GPU-accelerated robust optimization. BioRxiv 2020.05.21.109629.# Uses deep learning, physical modeling and a particle-filter based tracking algorithm with spatiotemporal constraints to automatically track the body postures of interacting mice, compatible with electrophysiology (occlusions and camera artifacts due to wires and a neural recording implant

- Ebbesen CL, Doron G, Lenschow C, and Brecht M (2017). Vibrissa motor cortex activity suppresses contralateral whisking behavior. Nat. Neurosci 20, 82–89.

- Ebbesen CL, Bobrov E, Rao RP, and Brecht M (2019). Highly structured, partner-sex- and subject-sex-dependent cortical responses during social facial touch. Nat. Commun 10, 1–16.

- Ehret G, Koch M, Haack B, and Markl H (1987). Sex and parental experience determine the onset of an instinctive behavior in mice. Naturwissenschaften 74, 47.

- Erskine MS (1989). Solicitation behavior in the estrous female rat: A review. Horm. Behav 23, 473–502.

- Ferrari M, Lindholm AK, and König B (2018). Fitness Consequences of Female Alternative Reproductive Tactics in House Mice (Mus musculus domesticus). Am. Nat 193, 106–124.

- Finlayson K, Lampe JF, Hintze S, Würbel H, and Melotti L (2016). Facial indicators of positive emotions in rats. PLoS ONE 11, 1–24.

- Forkosh O, Karamihalev S, Roeh S, Alon U, Anpilov S, Touma C, Nussbaumer M, Flachskamm C, Kaplick PM, Shemesh Y, et al. (2019). Identity domains capture individual differences from across the behavioral repertoire. Nat. Neurosci 22, 2023–2028.

- Fox E, Sudderth E, Jordan M, and Willsky A (2010). Bayesian Nonparametric Methods for Learning Markov Switching Processes. IEEE Signal Process. Mag 5563110.

- Galef BG (1982). Studies of social learning in norway rats: A brief review. Dev. Psychobiol 15, 279–295.

- Gardner RM, Corbin TL, Beltramo JS, and Nickell GS (1984). The Prisoner’s Dilemma Game and Cooperation in the Rat. Psychol. Rep 55, 687–696.

- Geisler WS (2008). Visual Perception and the Statistical Properties of Natural Scenes. Annu. Rev. Psychol 59, 167–192.

- Georgopoulos AP, Kalaska JF, Caminiti R, and Massey JT (1982). On the relations between the direction of two-dimensional arm movements and cell discharge in primate motor cortex. J. Neurosci 2, 1527–1537.

- Gerós A, Magalhães A, and Aguiar P (2020). Improved 3D tracking and automated classification of rodents’ behavioral activity using depth-sensing cameras. Behav. Res. Methods.

- Gillespie D, Yap MH, Hewitt BM, Driscoll H, Simanaviciute U, Hodson-Tole EF, and Grant RA (2019). Description and validation of the LocoWhisk system: Quantifying rodent exploratory, sensory and motor behaviours. J. Neurosci. Methods 328, 108440.

- Goldin MA, Harrell ER, Estebanez L, and Shulz DE (2018). Rich spatio-temporal stimulus dynamics unveil sensory specialization in cortical area S2. Nat. Commun 9, 4053.# Used a multi-whisker stimulator system that can move 24 whiskers independently to investigate how spatiotemporal features of whisker stimuli are encoded in secondary somatosensory cortex.

- Gosztolai A, Gunel S, Abrate MP, Morales D, Rios VL, Rhodin H, Fua P, and Ramdya P (2020). LiftPose3D, a deep learning-based approach for transforming 2D to 3D pose in laboratory animals. BioRxiv 2020.09.18.292680.## Showed that it is possible to train a network to predict the 3D posture of a mouse from a single 2D view in laboratory conditions.

- Grasmuck V, and Desor D (2002). Behavioural differentiation of rats confronted to a complex diving-for-food situation. Behav. Processes 58, 67–77.

- Graving JM, and Couzin ID (2020). VAE-SNE: a deep generative model for simultaneous dimensionality reduction and clustering. BioRxiv 2020.07.17.207993.# Combines machine learning methods for low-dimensional manifold embedding and a distance-based loss function on that manifold to perform unsupervised discovery of behavioral categories in a single step.

- Graving JM, Chae D, Naik H, Li L, Koger B, Costelloe BR, and Couzin ID (2019). DeepPoseKit, a software toolkit for fast and robust animal pose estimation using deep learning. ELife 8, e47994.

- Grill HJ, and Norgren R (1978a). Chronically decerebrate rats demonstrate satiation but not bait shyness. Science 201, 267–269.

- Grill HJ, and Norgren R (1978b). The taste reactivity test. I. Mimetic responses to gustatory stimuli in neurologically normal rats. Brain Res. 143, 263–279.

- Guggisberg AG, Mathis J, Schnider A, and Hess CW (2010). Why do we yawn? Neurosci. Biobehav. Rev 34, 1267–1276.

- Günel S, Rhodin H, Morales D, Campagnolo J, Ramdya P, and Fua P (2019). DeepFly3D, a deep learning-based approach for 3D limb and appendage tracking in tethered, adult Drosophila. ELife 8, e48571.

- Hachiga Y, Schwartz LP, Silberberg A, Kearns DN, Gomez M, and Slotnick B (2018). Does a rat free a trapped rat due to empathy or for sociality? J. Exp. Anal. Behav 110, 267–274.

- Haidarliu S, Simony E, Golomb D, and Ahissar E (2010). Muscle architecture in the mystacial pad of the rat. Anat. Rec 293, 1192–1206.

- Haidarliu S, Simony E, Golomb D, and Ahissar E (2011). Collagenous Skeleton of the Rat Mystacial Pad. Anat. Rec 294, 764–773.

- Haidarliu S, Golomb D, Kleinfeld D, and Ahissar E (2012). Dorsorostral Snout Muscles in the Rat Subserve Coordinated Movement for Whisking and Sniffing. Anat. Rec 295, 1181–1191.

- Haidarliu S, Kleinfeld D, and Ahissar E (2013). Mediation of Muscular Control of Rhinarial Motility in Rats by the Nasal Cartilaginous Skeleton. Anat. Rec 296, 1821–1832.

- Haidarliu S, Kleinfeld D, Deschênes M, and Ahissar E (2014). The Musculature That Drives Active Touch by Vibrissae and Nose in Mice. Anat. Rec 00, n/a-n/a.

- Harrison N, Lindholm AK, Dobay A, Halloran O, Manser A, and König B (2018). Female nursing partner choice in a population of wild house mice (Mus musculus domesticus). Front. Zool. 15, 4.## Use a complex multi-nest tracking system to show that free-living female house mice are ‘choosy’ in their decision to co-nest and communally nurse.

- Havlik JL, Sugano YYV, Jacobi MC, Kukreja RR, Jacobi JHC, and Mason P (2020). The bystander effect in rats. Sci. Adv 6, eabb4205.

- Heckman JJ, Proville R, Heckman GJ, Azarfar A, Celikel T, and Englitz B (2017). High-precision spatial localization of mouse vocalizations during social interaction. Sci. Rep 7, 3017.

- Heiligenberg W (1973). Random processes describing the occurrence of behavioural patterns in a cichlid fish. Anim. Behav 21, 169–182.## A landmark work in the use of statistical methods to understand the structure of animal behavior in a data-driven manner.

- Hernandez-Lallement J, Van Wingerden M, Marx C, Srejic M, and Kalenscher T (2015). Rats prefer mutual rewards in a prosocial choice task. Front. Neurosci 9, 1–9.

- Heyes CM, Jaldow E, Nokes T, and Dawson GR (1994). Imitation in rats (Rattus norvegicus): The role of demonstrator action. Behav. Processes 32, 173–182.

- Hill DN, Bermejo R, Zeigler HP, and Kleinfeld D (2008). Biomechanics of the vibrissa motor plant in rat: rhythmic whisking consists of triphasic neuromuscular activity. J. Neurosci. Off. J. Soc. Neurosci 28, 3438–3455.

- Hiura LC, Tan L, and Hackenberg TD (2018). To free, or not to free: Social reinforcement effects in the social release paradigm with rats. Behav. Processes 152, 37–46.

- Hong W, Kennedy A, Burgos-Artizzu XP, Zelikowsky M, Navonne SG, Perona P, and Anderson DJ (2015). Automated measurement of mouse social behaviors using depth sensing, video tracking, and machine learning. Proc. Natl. Acad. Sci 112, E5351–E5360.

- Hsu AI, and Yttri EA (2019). B-SOiD: An Open Source Unsupervised Algorithm for Discovery of Spontaneous Behaviors (Neuroscience).

- Huet LA, and Hartmann MJZ (2014). The search space of the rat during whisking behavior. J. Exp. Biol 217, 3365–3376.

- Ishii H, Shi Q, Fumino S, Konno S, Kinoshita S, Okabayashi S, Iida N, Kimura H, Tahara Y, Shibata S, et al. (2013). A novel method to develop an animal model of depression using a small mobile robot. Adv. Robot 27, 61–69.

- Ishiyama S, and Brecht M (2016). Neural correlates of ticklishness in the rat somatosensory cortex. Science 354, 757–760.# Found that rats would jump for joy during tickling. This is one of the few known displays of positive emotion in rats.

- Jacob V, Estebanez L, Le Cam J, Tiercelin J-Y, Parra P, Parésys G, and Shulz DE (2010). The Matrix: A new tool for probing the whisker-to-barrel system with natural stimuli. J. Neurosci. Methods 189, 65–74.

- Johnson RE, Linderman S, Panier T, Wee CL, Song E, Herrera KJ, Miller A, and Engert F (2020). Probabilistic Models of Larval Zebrafish Behavior Reveal Structure on Many Scales. Curr. Biol 30, 70–82.e4.

- Kalaska JF (1988). The representation of arm movements in postcentral and parietal cortex. Can. J. Physiol. Pharmacol 66, 455–463.

- Karakaya M, Macrì S, and Porfiri M (2020). Behavioral Teleporting of Individual Ethograms onto Inanimate Robots: Experiments on Social Interactions in Live Zebrafish. IScience 23.## Transferred the social behavior of real zebrafish onto robotic replicas of zebrafish, to be able to vary a social cue (the animals size) while keeping the behavioral kinematics constant.

- Katsov AY, Freifeld L, Horowitz M, Kuehn S, and Clandinin TR (2017). Dynamic structure of locomotor behavior in walking fruit flies. ELife 6.

- Kearney S, Li W, Parsons M, Kim KI, and Cosker D (2020). RGBD-Dog: Predicting Canine Pose from RGBD Sensors. ArXiv200407788 Cs.

- Kentrop J, Kalamari A, Danesi CH, Kentrop JJ, van IJzendoorn MH, Bakermans-Kranenburg MJ, Joëls M, and van der Veen R (2020). Pro-social preference in an automated operant two-choice reward task under different housing conditions: Exploratory studies on pro-social decision making. Dev. Cogn. Neurosci 45, 100827.

- Kitano K, Yamagishi A, Horie K, Nishimori K, and Sato N (2020). Helping Behavior in Prairie Voles: A Model of Empathy and the Importance of Oxytocin. BioRxiv 2020.10.20.347872.

- Kiyokawa Y, Shimozuru M, Kikusui T, Takeuchi Y, and Mori Y (2006). Alarm pheromone increases defensive and risk assessment behaviors in male rats. Physiol. Behav 87, 383–387.

- Klibaite U, and Shaevitz JW (2019). Interacting fruit flies synchronize behavior. bioRxiv 545483. ## Used unsupervised behavioral analysis to investigate how the movements and postures of socially interacting Drosophila depend on the relative spatial location of the interacting animals and on the animals’ behavioral state (e.g., courting or not).

- Klibaite U, Berman GJ, Cande J, Stern DL, and Shaevitz JW (2017). An unsupervised method for quantifying the behavior of paired animals. Phys. Biol 14, 015006.

- Klin A, Jones W, Schultz R, Volkmar F, and Cohen D (2002). Defining and Quantifying the Social Phenotype in Autism. Am. J. Psychiatry 159, 895–908.

- Knutsen PM, Biess A, and Ahissar E (2008). Vibrissal Kinematics in 3D: Tight Coupling of Azimuth, Elevation, and Torsion across Different Whisking Modes. Neuron 59, 35–42.

- Kohl J (2020). Parenting – a paradigm for investigating the neural circuit basis of behavior. Curr. Opin. Neurobiol 60, 84–91.

- Kolmogorova D, Murray E, and Ismail N (2017). Monitoring Pathogen-Induced Sickness in Mice and Rats. Curr. Protoc. Mouse Biol 7, 65–76.

- König B, Lindholm AK, Lopes PC, Dobay A, Steinert S, and Buschmann FJ-U (2015). A system for automatic recording of social behavior in a free-living wild house mouse population. Anim. Biotelemetry 3, 39.

- Krafft B, Colin C, and Peignot P (1994). Diving-for-Food: A New Model to Assess Social Roles in a Group of Laboratory Rats. Ethology 96, 11–23.

- Krishnan K, Lau BYB, Ewall G, Huang ZJ, and Shea SD (2017). MECP2 regulates cortical plasticity underlying a learned behaviour in adult female mice. Nat. Commun 8, 14077.

- Kritzler M, Lewejohann L, and Krüger A (2007). Analysing Movement and Behavioural Patterns of Laboratory Mice in a Semi Natural Environment Based on Data collected via RFID-Technology.

- Kropff E, Carmichael JE, Moser M-B, and Moser EI (2015). Speed cells in the medial entorhinal cortex. Nature 523, 419–424.

- Kurnikova A, Moore JD, Liao SM, Deschênes M, and Kleinfeld D (2017). Coordination of Orofacial Motor Actions into Exploratory Behavior by Rat. Curr. Biol 27, 688–696. ## Used a combination of behavioral and physiological sensors to discover and characterize temporal patterns of synchronization among nose movement, sniffing, whisking and head-bobbing. A major step towards a holistic understanding of rodent facial behavior.

- Lacey EA, Alexander RD, Braude SH, Sherman PW, and Jarvis JUM (2017). 8. An Ethogram for the Naked Mole-Rat: Nonvocal Behaviors. In The Biology of the Naked Mole-Rat, Sherman PW, Jarvis JUM, and Alexander RD, eds. (Princeton: Princeton University Press), pp. 209–242.

- Langford DJ, Bailey AL, Chanda ML, Clarke SE, Drummond TE, Echols S, Glick S, Ingrao J, Klassen-Ross T, Lacroix-Fralish ML, et al. (2010). Coding of facial expressions of pain in the laboratory mouse. Nat. Methods 7, 447–449.

- Lecorps B, and Féron C (2015). Correlates between ear postures and emotional reactivity in a wild type mouse species. Behav. Processes 120, 25–29.

- Leggio MG, Molinari M, Neri P, Graziano A, Mandolesi L, and Petrosini L (2000). Representation of actions in rats: The role of cerebellum in learning spatial performances by observation. Proc. Natl. Acad. Sci 97, 2320–2325.

- Lenschow C, and Brecht M (2015). Barrel Cortex Membrane Potential Dynamics in Social Touch. Neuron 85, 718–725.

- Lenschow C, and Lima SQ (2020). In the mood for sex: neural circuits for reproduction. Curr. Opin. Neurobiol 60, 155–168.

- Leos-Barajas V, and Michelot T (2018). An Introduction to Animal Movement Modeling with Hidden Markov Models using Stan for Bayesian Inference. arXiv:1806.10639 [q-bio, stat].

- Li M, and Bolker BM (2017). Incorporating periodic variability in hidden Markov models for animal movement. Mov. Ecol 5, 1.

- Li C, Shi Q, Gao Z, Ma M, Ishii H, Takanishi A, Huang Q, and Fukuda T (2020). Design and optimization of a lightweight and compact waist mechanism for a robotic rat. Mech. Mach. Theory 146, 103723. # Recent progress towards a robotic rat model, capable of biomechanically realistic 3D body postures.

- Liang M, Zhong J, Liu H-X, Lopatina O, Nakada R, Yamauchi A-M, and Higashida H (2014). Pairmate-dependent pup retrieval as parental behavior in male mice. Front. Neurosci 8.

- Linderman S, Nichols A, Blei D, Zimmer M, and Paninski L (2019). Hierarchical recurrent state space models reveal discrete and continuous dynamics of neural activity in C. elegans. bioRxiv 621540. ## This study demonstrates the use of a recurrent Switching State Space Model for analyzing neural activity. This is an elegant model, with characteristics suitable for modeling behavior: the model includes continuous latent states with linear dynamics, and the probability of transitioning to a new latent state depends on the location in these continuous states.

- Liu H-X, Lopatina O, Higashida C, Fujimoto H, Akther S, Inzhutova A, Liang M, Zhong J, Tsuji T, Yoshihara T, et al. (2013). Displays of paternal mouse pup retrieval following communicative interaction with maternal mates. Nat. Commun 4, 1346.

- Liu X, Yu S, Flierman N, Loyola S, Kamermans M, Hoogland TM, and Zeeuw CID (2020). OptiFlex: video-based animal pose estimation using deep learning enhanced by optical flow. bioRxiv 2020.04.04.025494.

- Łopuch S, and Popik P (2011). Cooperative Behavior of Laboratory Rats (Rattus norvegicus) in an Instrumental Task. J. Comp. Psychol 125, 250–253.

- Luo YF, Bresee CS, Rudnicki JW, and Hartmann MJZ (2020). Constraints on the deformation of the vibrissa within the follicle. bioRxiv 2020.04.20.050757.

- Luxem K, Fuhrmann F, Kürsch J, Remy S, and Bauer P (2020). Identifying Behavioral Structure from Deep Variational Embeddings of Animal Motion. bioRxiv 2020.05.14.095430. # Used unsupervised training of an encoder-decoder network to automatically estimate behavioral ‘motifs’ (latent clusters), and used the transition probability between these motifs to estimate their hierarchical structure.

- van der Maaten L, and Hinton G (2008). Visualizing Data using t-SNE. J. Mach. Learn. Res 9, 2579–2605.

- Macdonald IL, and Raubenheimer D (1995). Hidden Markov Models and Animal Behaviour. Biom. J 37, 701–712.

- Maravall M, and Diamond ME (2014). Algorithms of whisker-mediated touch perception. Curr. Opin. Neurobiol 25, 176–186.

- Markowitz JE, Gillis WF, Beron CC, Neufeld SQ, Robertson K, Bhagat ND, Peterson RE, Peterson E, Hyun M, Linderman SW, et al. (2018). The Striatum Organizes 3D Behavior via Moment-to-Moment Action Selection. Cell 174, 44–58.e17.

- Marlin BJ, Mitre M, D’amour JA, Chao MV, and Froemke RC (2015). Oxytocin enables maternal behaviour by balancing cortical inhibition. Nature 520, 499–504.

- Márquez C, Rennie SMM, Costa DFF, and Moita MAA (2015). Prosocial Choice in Rats Depends on Food-Seeking Behavior Displayed by Recipients. Curr. Biol 25, 1736–1745. # Found that rats are sensitive to behavioral displays by the conspecific in a paradigm in which one rat could deliver food to a conspecific.

- Mathis A, Mamidanna P, Cury KM, Abe T, Murthy VN, Mathis MW, and Bethge M (2018). DeepLabCut: markerless pose estimation of user-defined body parts with deep learning. Nat. Neurosci 21, 1281–1289. ## A well-maintained and user-friendly library for pose estimation in laboratory animals.

- Matsumoto J, Urakawa S, Takamura Y, Malcher-Lopes R, Hori E, Tomaz C, Ono T, and Nishijo H (2013). A 3D-Video-Based Computerized Analysis of Social and Sexual Interactions in Rats. PLOS ONE 8, e78460. ## A pioneering study that combined depth videography and physical modeling in a computational tool for semi-automatic tracking of body postures in socially interacting rats.

- Mearns DS, Donovan JC, Fernandes AM, Semmelhack JL, and Baier H (2020). Deconstructing Hunting Behavior Reveals a Tightly Coupled Stimulus-Response Loop. Curr. Biol 30, 54–69.e9.

- Merel J, Aldarondo D, Marshall J, Tassa Y, Wayne G, and Ölveczky B (2019). Deep neuroethology of a virtual rodent. arXiv:1911.09451 [q-bio]. ## This very elegant study analyzed the emergence of structure in a ‘virtual rodent’ – a generative model of a rodent-like body – trained by reinforcement learning to solve various motor tasks.

- Meyer AF, Poort J, O’Keefe J, Sahani M, and Linden JF (2018). A Head-Mounted Camera System Integrates Detailed Behavioral Monitoring with Multichannel Electrophysiology in Freely Moving Mice. Neuron 100, 46–60.e7.

- Meyer AF, O’Keefe J, and Poort J (2020). Two Distinct Types of Eye-Head Coupling in Freely Moving Mice. Curr. Biol 30, 2116–2130.e6. ## Used a head-mounted miniature camera to record eye movements during social interactions in mice.

- Mimica B, Dunn BA, Tombaz T, Bojja VPTNCS, and Whitlock JR (2018). Efficient cortical coding of 3D posture in freely behaving rats. Science 362, 584–589.

- Moyaho A, Rivas-Zamudio X, Ugarte A, Eguibar JR, and Valencia J (2014). Smell facilitates auditory contagious yawning in stranger rats. Anim. Cogn 18, 279–290.

- Moyaho A, Flores Urbina A, Monjaraz Guzmán E, and Walusinski O (2017). Yawning: a cue and a signal. Heliyon 3, e00437.

- Musall S, Kaufman MT, Juavinett AL, Gluf S, and Churchland AK (2019). Single-trial neural dynamics are dominated by richly varied movements. Nat. Neurosci 22, 1677–1686.

- Nagy M, Horicsányi A, Kubinyi E, Couzin ID, Vásárhelyi G, Flack A, and Vicsek T (2020). Synergistic Benefits of Group Search in Rats. Curr. Biol 0. # Used analysis and modeling of behavior in a clever paradigm to discover that rats rely on cues from conspecifics when exploring a maze.

- Naik H, Bastien R, Navab N, and Couzin I (2020). Animals in Virtual Environments. IEEE Trans. Vis. Comput. Graph 26, 2073–2083.

- Nakashima SF, Ukezono M, Nishida H, Sudo R, and Takano Y (2015). Receiving of emotional signal of pain from conspecifics in laboratory rats. R. Soc. Open Sci 2, 140381. ## Investigated behavioral responses to images of distressed conspecifics.

- Nashaat MA, Oraby H, Peña LB, Dominiak S, Larkum ME, and Sachdev RNS (2017). Pixying Behavior: A Versatile Real-Time and Post Hoc Automated Optical Tracking Method for Freely Moving and Head Fixed Animals. eNeuro 4, ENEURO.0245-16.2017.

- Nath T, Mathis A, Chen AC, Patel A, Bethge M, and Mathis MW (2019). Using DeepLabCut for 3D markerless pose estimation across species and behaviors. Nat. Protoc 14, 2152–2176.

- Nilsson SRO, Goodwin NL, Choong JJ, Hwang S, Wright HR, Norville Z, Tong X, Lin D, Bentzley BS, Eshel N, et al. (2020). Simple Behavioral Analysis (SimBA): an open source toolkit for computer classification of complex social behaviors in experimental animals. bioRxiv 2020.04.19.049452.

- Nourizonoz A, Zimmermann R, Ho CLA, Pellat S, Ormen Y, Prévost-Solié C, Reymond G, Pifferi F, Aujard F, Herrel A, et al. (2020). EthoLoop: automated closed-loop neuroethology in naturalistic environments. Nat. Methods 17, 1052–1059. # Uses real-time tracking to steer motorized ‘close-up’ cameras that capture zoomed-in images of mouse lemurs moving freely in a large 3D behavioral arena.

- Novotny D, Ravi N, Graham B, Neverova N, and Vedaldi A (2019). C3DPO: Canonical 3D Pose Networks for Non-Rigid Structure From Motion. arXiv:1909.02533 [cs]. # Showed a method for estimating the 3D shape and reconstructing the ‘pose’ of deformable objects from the motion of keypoints in a single monocular view.

- Oberliessen L, Hernandez-Lallement J, Schäble S, van Wingerden M, Seinstra M, and Kalenscher T (2016). Inequity aversion in rats, Rattus norvegicus. Anim. Behav 115, 157–166.

- Omrani M, Kaufman MT, Hatsopoulos NG, and Cheney PD (2017). Perspectives on classical controversies about the motor cortex. J. Neurophysiol 118, jn.00795.2016.

- Parker PRL, Brown MA, Smear MC, and Niell CM (2020). Movement-Related Signals in Sensory Areas: Roles in Natural Behavior. Trends Neurosci. 43, 581–595.

- Patterson TA, Parton A, Langrock R, Blackwell PG, Thomas L, and King R (2017). Statistical modelling of individual animal movement: an overview of key methods and a discussion of practical challenges. arXiv:1603.07511 [q-bio, stat].

- Peleh T, Bai X, Kas MJH, and Hengerer B (2019). RFID-supported video tracking for automated analysis of social behaviour in groups of mice. J. Neurosci. Methods 325, 108323.

- Pereira TD, Aldarondo DE, Willmore L, Kislin M, Wang SS-H, Murthy M, and Shaevitz JW (2019). Fast animal pose estimation using deep neural networks. Nat. Methods 16, 117–125.

- Pereira TD, Tabris N, Li J, Ravindranath S, Papadoyannis ES, Wang ZY, Turner DM, McKenzie-Smith G, Kocher SD, Falkner AL, et al. (2020). SLEAP: Multi-animal pose tracking. bioRxiv 2020.08.31.276246. ## Combines deep learning with a combination of ‘bottom-up’ and ‘top-down’ spatiotemporal regularization to track body parts of interacting animals, agnostic to species.