Abstract

Sign language phonological parameters are somewhat analogous to phonemes in spoken language. Unlike phonemes, however, there is little linguistic literature arguing that these parameters interact at the sublexical level. This situation raises the question of whether such interaction in spoken language phonology is an artifact of the modality or whether sign language phonology has not been approached in a way that allows one to recognize sublexical parameter interaction. We present three studies in favor of the latter alternative: a shape‐drawing study with deaf signers from six countries, an online dictionary study of American Sign Language, and a study of selected lexical items across 34 sign languages. These studies show that, once iconicity is considered, handshape and movement parameters interact at the sublexical level. Thus, considering iconicity makes similarities in grammar across the two modalities transparent, allowing us to maintain certain key findings of phonological theory as evidence of cognitive architecture.

Keywords: Sign languages, Phonology, Manual movement, Iconicity, Depiction

1. Introduction

In models of sign language phonology, the manual articulations consist of discrete, contrastive units known as the manual parameters: movement, location, handshape, and orientation (though some analyze orientation as a feature of handshape—see Brentari, 1998; Emmorey, 2002; Sandler, 1989). The handshape and movement parameters are generally treated as independent of one another. However, across a series of three studies we have found a range of correlations between the two, which come to light once one considers depictive possibilities within these parameters.

Study 1 is a shape‐drawing task in which deaf signers were presented with pictures of two‐dimensional shapes and asked to communicate them in their sign language, as though conversing with a friend. When “drawing” in the air, signers used three types of handshapes that we compare to paintbrushes. Geometric properties of the movement path correlate with whether one draws with one moving hand or two, and the correlation is strongest with one type of paintbrush. Studies 2 and 3 are dictionary studies. Study 2 examines signs in an ASL online dictionary whose movement path draws the shape of (part of) the signified entity. Here both flat entities (effectively two‐dimensional) and thick entities (three‐dimensional) are drawn. This wider range of data called for a readjustment of our initial categorization of handshapes (in Study 1) into two broader categories: edge‐drawing handshapes (ED‐handshapes) and surface‐drawing handshapes (SD‐handshapes), where ED‐handshapes are more commonly used with flat entities and SD‐handshapes with thick entities. Again, geometric properties of the movement path correlate with whether the sign is articulated with one or two moving hands, and the correlation is strongest with ED‐handshapes. Study 3 compares 16 lexical items across 34 sign languages, examining the handshapes of those signs whose movement path draws the shape of (part of) the signified entity. Study 3 offers strong confirmation of the correlations found in Study 2. These findings are critical in allowing us to maintain assumptions of phonological theory that have been foundational to various endeavors in the cognitive sciences.

2. Background on phonological parameter interactions

The information here will be important for understanding later discussion of the lexicon. Sign languages have two types of signs: frozen/conventionalized ones and productive ones (created spontaneously in discourse; Brennan, 2001). Frozen signs are found in dictionaries and include such items as mouse, chew, happy 1 (Bellugi & Klima, 1976; Russo, 2004; Supalla, 1986). Productive signs, by contrast, are typically called classifier constructions or classifier predicates, and are reported to occur in almost all sign languages researched to date. 2 , 3 They are often (but not exclusively) used to indicate the location, or change of location, of an entity (Morgan & Woll, 2007). For example, to express the proposition “the cat sits on the table” in ASL, one might articulate the frozen signs table and cat and point to locations to give them a spatial reference, then move the dominant hand from the spatial location of cat to perch on top of the nondominant hand in the spatial location of table. The dominant hand assumes the handshape used to indicate (most) animals, and the nondominant hand assumes the handshape used to indicate (most) flat surfaces. The handshapes in classifier constructions are called classifiers, 4 , 5 since the same handshape used to indicate the cat could be used in a different proposition to indicate a mouse (or a wolf, or a crow, or any other entity that meets the requirements for membership in the relevant class in the given language), and the same handshape used to indicate the table could be used in a different proposition to indicate a bed (or a shelf, or the ground, or any other entity that meets the requirements for membership in the relevant class in the given language; Engberg‐Pedersen, 1993).

Frozen signs are generally analyzed as composed of manual and nonmanual phonological parameters independent of the proposition they appear in. 6 Productive signs are generally analyzed as composed of a classifier handshape determined by the entity that moves, where the rest of the manual and nonmanual phonological parameters are determined by the particular proposition.

2.1. Phonological parameters

Signs consist of nonmanual and manual articulations. The lexical and prosodic interaction of these two types of articulations is complex; to handle it would require detailed discussion of phonological domains (Sandler, 2012a), of the different functions of nonmanual articulations (Crasborn, van der Kooij, Waters, Woll, & Mesch, 2008; Pfau & Quer, 2010), and of the integration of lexical nonmanual articulations into the phonological representation of lexical items (Pendzich, 2020). In the present study we focus only on the interaction of handshape and movement—two types of manual parameters—thus here we outline the relevant literature only with respect to the manual parameters.

The manual parameters are often treated as analogous to phonemes in spoken languages (Sandler, 2012a). Unlike phonemes, however, there is little scholarship arguing that the parameters interact within a sign. 7

An early argument for parameter interaction is found in Mandel (1981, p. 83). Mandel shows that the features of handshape are not entirely independent from the features of location and movement. In particular, only the selected fingers of a handshape can make contact with a body location, and only the selected fingers can move (but see a range of complications for the notion of selected fingers in van der Kooij, 1998, and others since).

Another argument for parameter interaction is based on Brentari and Poizner (1994). For able‐bodied signers (in contrast to parkinsonian signers), handshape change within a lexical item happens continuously throughout the movement of the sign; the timing of handshape change is linked to the duration of path movement (where path movement involves shoulder and/or elbow articulation; other movement is secondary and non‐path). But when one sign is followed by another with a different handshape, the change from the first sign's handshape to the following sign's handshape can occur at any point in the transitional movement between the two signs (where transitional movement is not phonologically part of either sign—but is more like a ligature between letters in script). 8

Neither of these examples involves anything like the robust interaction of features seen at the sublexical level in spoken languages in assimilation (spreading) or dissimilation. We do, however, find spreading of manual features in morphologically complex lexical items, such as roots with affixes (Sandler, Aronoff, Meir, & Padden, 2011) and compounds (Brentari, 2019, especially section 8.3). As a compound becomes lexicalized (conventionalized), a given parameter (such as handshape, orientation, or both) can spread across both compound elements without affecting or being affected by the other parameters of the compound elements. 9 , 10 That is, we have replacement of a full parameter.

2.2. Phonological models with respect to interaction at the supra‐ and sublexical levels

Given the paucity of literature on phonological parameter interaction, one might conclude that the robust effects seen between phonemes at the sublexical level in spoken languages are largely absent in sign languages. This may not seem surprising, given that in spoken language the effects of one phoneme on another are usually stated in terms of feature spreading. Vowels and consonants have some features in common (such as ±voice, ±back, or ±nasal), thus spreading of feature values can easily occur between segments within a word. In contrast, manual parameters in sign languages have few obvious features in common. Where is the potential for feature interaction?

While a number of models have been proposed for the representation and organization of sign language phonology, they generally agree on the features each parameter involves, and these features are largely (but not entirely) distinct from one parameter to the next (Brentari, 1998, 2019; Sandler, 2012a). The movement parameter has a path with beginning and end, shape and direction, iteration or not, and dynamics. Handshape involves the shape the fingers produce via thumb position and spreading and/or flexing and extending of digits. Orientation involves where the palm and/or fingertips point. And location involves points or areas in space or on the signer's body.

One obvious potential source of feature interaction is between the parameter of location and the parameter of movement with respect to the endpoints of the path (the setting feature in the prosodic model of sign language phonology). Additionally, any joint articulation other than at the shoulder or elbow affects either orientation (by radioulnar articulation, which corresponds to the orientation change feature of the movement parameter in the prosodic model, or by wrist flexion/extension) or handshape (by knuckle articulation, which corresponds to the aperture change feature of the movement parameter in the prosodic model). If such a change in orientation or handshape occurs during a movement, there is potential for feature interaction between the orientation/handshape and movement parameters. Still, sign language phonology does not seem to exploit that potential. We do not find reports of instances within a lexeme, for example, where a starting point of A and an ending point of B requires that the movement path be an arc. Nor do we find instances where the fact that the radioulnar joint articulates requires the movement to be iterated, or where the fact that the interphalangeal knuckles flex requires the movement to be abrupt. This kind of phonotactics does not seem to occur at the sublexical level (though, again, we find it in compounds; see, e.g., Sandler, 1999, 2012b). Most important for us, the present models allow no potential for interaction between the features of an unchanging handshape (a handshape that stays fixed throughout the articulation of the sign) and the features of movement.

Certainly, there are rules and processes in spoken languages that affect phonology and apply only at a supralexical level (Hayes, 1990). With respect to rules that apply at the phrasal level, the debate is ongoing as to whether domain‐sensitive phenomena of this type have direct access to syntactic structure or have access to a distinct prosodic structure within the phonological representation of the sentence (Selkirk, 2011). It is theoretically possible, then, that the manual phonological parameters in sign languages interact only at the supralexical level—and that we see exceptional behavior in the two noted phenomena: the correlations noted by Mandel (1981) regarding handshape and contact, and the correlations noted by Brentari and Poizner (1994) between handshape change (and, note, this is not a fixed handshape) and movement duration.

However, since many phonological rules apply across varying domains, from the sublexical to the supralexical (Rice, 1990), it is also possible that the manual phonological parameters within a sign can, in fact, interact, but in ways that linguists have not yet dealt with and perhaps have not even noticed. This may be because the features included in prevalent models of phonology make these interactions difficult to accommodate or notice.

Crasborn and van der Kooij (1997) argue for precisely that position with respect to the parameters of orientation and location. They point out that there are two ways to think about orientation. The prevalent one is in terms of the absolute direction in space that the palm/fingers face (Stokoe, 1978, and most works since), and the other is in terms of the relationship of some part of the hand to the place of articulation (Friedman, 1976; Mandel, 1981). The relative orientation approach subsumes what has been called focus (the part of the hand that points in the direction of movement), facing (the part of the hand oriented toward the location), and point of contact (the part of the hand that touches the location). Adopting relative orientation instead of absolute orientation as a parameter allows sign phonology to acknowledge sublexical correlations between orientation and location. In particular, Crasborn and van der Kooij note that this approach allows generalizations about handshape variation in signs: The same handshapes show up as variants of each other (such as the B‐handshape and the 1‐handshape) when certain parts of them (such as the fingertips) contact the location. They also note that absolute orientation variants are allowed in signs in which the index finger makes contact with a certain location (such as the ipsilateral temple); in other words, only relative orientation matters here. Further, only relative orientation enters into the description of agreement phenomena (Meir, 1995). Crasborn and van der Kooij claim that absolute orientation is useful only in classifier constructions and in describing the weak hand in “unbalanced” two‐handed signs, that is, two‐handed signs in which only the dominant/strong hand moves and the handshape and orientation of the weak hand differ from those of the strong hand (van der Hulst, 1996).

Prevalent models of phonology may be keeping us from seeing correlations between the parameters at the sublexical level in another possible area: iconicity. Sign languages are highly iconic in that there are a number of ways in which the relationship between form and meaning in sign language grammar is not arbitrary but, rather, depictive (Cuxac & Sallandre, 2007; Hoiting & Slobin, 2007; Malaia & Wilbur, 2012; Perniss & Özyürek, 2008; among many others). For example, in British Sign Language (BSL), signs involving cognitive processes (idea, ponder, realize, think, wonder, etc.) are made on the temple, while many signs involving emotional processes are made on the trunk (Kyle & Woll, 1985, p. 114); this observation holds for various other sign languages, as a quick check on the website spreadthesign.com verifies. However, the perception of iconicity depends greatly on an individual's language and sociocultural experience (Occhino, Anible, Wilkinson, & Morford, 2017, p. 104; Pizzuto & Volterra, 2000; Taub, 2001; Wilcox, 2000), which defies any easy circumscription of the role that iconicity might play in phonology.

While some have argued that iconicity is not “sufficient to predict grammaticization and stabilization of form” (Brentari, 2007, p. 69), others have proposed that [±iconic] should be a feature of handshape (Boyes Braem, 1981) and of location (Friedman, 1976) that can be appealed to in accounting for grammatical forms, and van der Kooij (2002) argues that signs for which any parameter is iconic should be handled separately in order to allow for a constrained model of phonology.

In fact, although Crasborn and van der Kooij (1997) do not explicitly invoke iconicity, we suspect it is at the heart of their suggestions for a different definition of the orientation parameter. We might then ask whether iconicity is the culprit behind the correlations between selected fingers and point of contact that Mandel (1981) first noted. That is, iconic signs are messy for phonological theory. But, as more recent studies have shown, iconicity's prevalence means it cannot be ignored if one is to offer an adequate model of sign language grammar (Perniss, Thompson, & Vigliocco, 2010; Russo, 2004).

3. Our hypotheses

Handshape and path movement would seem to be independent of one another in the sense that any handshape can move with equal ease (allowing for anatomically needed adjustments in orientation and facing) along any path, in any direction, with any dynamics, with or without iteration. Thus, neither hand physiology nor movement factors of geometry or energy consumption (regarding dynamics or iteration) lead one to expect correlations between these two parameters. Nevertheless, such correlations have been noted. In a study of Adamorobe Sign Language, Nyst (2016) looks at how information about the size and shape of an entity is conveyed (as have many others, starting with Klima & Bellugi, 1979; see in particular the discussion in Taub, 2001). Nyst proposes that movement in these signs “either signals extent (when combined with shape for shape depiction) or a change in it (when combined with distance for size depiction)” (2016, p. 75). That is, when the movement of handshapes traces the outline of an entity (what Mandel, 1977, calls “drawing” and what Streeck, 2008, calls “sketching”), the length of the movement depicts the extent of the entity. Nyst warns that the analysis of containers (bowls, barrels, and so on), in particular, can be ambiguous because curved hands could embody the sides of the curved entity or could depict the handling of those sides. These studies consistently focus on meaning associated with phonological parameters, that is, on iconicity.

We build on such insights as well as on findings from Ferrara and Napoli (2019), which reported on two studies. In the first, pictures of two‐dimensional shapes were presented to signing deaf participants, who were asked to communicate those shapes with their hands. The expectation was that participants would draw the shapes in the air using either one moving hand (Method One) or two moving hands (Method Two). The study sought to determine whether method choice was related to mathematical properties of the shapes. This was, in fact, the case: If a shape (a) is bilaterally symmetrical across the Y‐axis (labeled +YSym, adopting terminology from the Cartesian plane) and (b) has only straight edges, with no curved edge(s) (labeled −Curve), it is highly likely to be rendered via Method Two. All other shapes are more likely to be rendered via Method One. Thus the shapes in Fig. 1A–C are more likely to be drawn with two moving hands (moving in reflexive symmetry across the vertical line that bisects the shape, indicated here), while the shapes in Fig. 1D–F are more likely to be drawn with only one moving hand (the dominant hand). 11

Fig. 1.

Shapes and number of moving hands used to draw them.

Ferrara and Napoli point out that it is the use of two hands in drawing shapes in the air that needs to be accounted for, since people draw on paper with only one hand. Why would signers expend the extra effort involved in two‐handed drawing? They found an account in their second study, which examined the full lexicon (as of summer 2018) of one ASL online dictionary (the “main dictionary” at aslpro.com 12 ). Attending to lexical items whose movement path drew (part of) an entity's shape, they asked whether mathematical properties of the movement path correlated with whether a sign used one moving hand or two. Again, the answer was yes. They concluded that a lexical principle was at play, and that when signers drew shapes in the air, they were not simply drawing but signing, and so they followed that lexical principle. The findings of both studies are summed up in the following two principles:

Lexical Drawing Principle: If the primary movement path of a lexical item is iconic of an entity's shape, and if that path is +YSym and −Curve, the lexical item is more likely to involve two moving hands (a bimanual sign); otherwise, it is more likely that only one hand will move (a unimanual sign).

Shape Drawing Principle: When drawing a shape in the air, signers apply the Lexical Drawing Principle.

In other words, Method Two is favored under certain conditions; Method One is favored elsewhere (in the sense of Kiparsky, 1973).
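Because the Lexical Drawing Principle is stated as a special case plus an elsewhere case, it can be paraphrased as a small decision procedure. The Python sketch below is illustrative only: the names Method, PathShape, and favored_method are hypothetical, encoding a path as two Boolean features is our simplification, and the function returns only the favored method, since the principle describes a tendency (“more likely”) rather than a categorical outcome.

```python
from dataclasses import dataclass
from enum import Enum


class Method(Enum):
    ONE = "Method One (unimanual)"
    TWO = "Method Two (bimanual)"


@dataclass(frozen=True)
class PathShape:
    """Hypothetical encoding of a movement path (illustration only)."""
    y_symmetric: bool              # +YSym: bilaterally symmetrical across the Y-axis
    has_curve: bool                # +Curve: at least one curved edge
    iconic_of_shape: bool = True   # the path draws (part of) the entity's shape


def favored_method(path: PathShape) -> Method:
    """Return the method the Lexical Drawing Principle favors.

    The principle states a tendency, so this is the more likely
    outcome, not a prediction for every sign.
    """
    # Special case: an iconic path that is +YSym and -Curve favors two moving hands.
    if path.iconic_of_shape and path.y_symmetric and not path.has_curve:
        return Method.TWO
    # Elsewhere case (in the sense of Kiparsky, 1973): one moving hand is favored.
    return Method.ONE


# A rectangle (like the second element of the compound blackboard) versus a circle.
rectangle = PathShape(y_symmetric=True, has_curve=False)
circle = PathShape(y_symmetric=True, has_curve=True)
print(favored_method(rectangle).value)  # Method Two (bimanual)
print(favored_method(circle).value)     # Method One (unimanual)
```

Per the Shape Drawing Principle, the same function would apply unchanged when signers draw shapes in the air.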

The use of one versus two moving hands in drawing shapes/objects has important communicative benefits. If two hands are moving, we know the shape/object is unlikely to have curves and is likely to be symmetrical across the Y‐axis; in other words, we know a lot from the very moment the hands begin to move. When one hand is moving, the shape/object might have curves (where the slope is constantly changing) or might be irregular in any number of ways; the viewer is alerted to pay extra attention because this line may change direction in unpredictable ways. These clues can be particularly helpful in fast signing. If someone signs the lexical item blackboard (in which the second element of the compound is a rectangle drawn in the air) so quickly that the corners are rounded, we still know there are angular corners because the two moving hands assure us that the shape/object has no curves. The Lexical Drawing Principle, then, is an example of the linguistic principle of Contrast Preservation (Łubowicz, 2003).

Importantly, the shape of all movement paths in Ferrara and Napoli's study was necessarily iconic of the shape of the entity conveyed. Hence, the movement parameter and the handshape parameter were shown to have features that interact (for movement, path shape; for handshape, number of moving hands), an observation that emerged only via considering iconic paths. This fact led us to explore the possibility of a relationship between path movement and handshape where either one is iconic (not only movement) in any of the various ways that paths or handshapes can be iconic.

Path movement can be iconic in at least two ways. First, it might draw the shape of the entity the sign signifies (as in Ferrara & Napoli, 2019). Second (and not mutually exclusive), it might perform an action associated with the sense of the sign, typically in a reduced representational or metaphorical form (Taub, 2001; Wilcox, 2000).

Handshape can be iconic in many ways, two of which seem to have the potential to interact with the first way we noted that path movement can be iconic. First, the hands can assume the shape of a drawing tool, with one or more points. Indeed, several have noted handshapes that draw perimeters of a shape (Liddell & Johnson, 1987; and others since), where the extended fingers are, in our terms, drawing tools.

Second, the hands can assume a shape that indicates physical properties of a substance. Many have noted this for entity classifiers, where handshape can indicate that a surface is bumpy, for example (Corazza, 1990; Supalla, 1986). Since classifier(‐like) handshapes can be part of lexical signs (Lepic & Occhino, 2018), the hands might also assume a shape with characteristics of the surface of the signified entity, such as whether the entity is flat or curved, thick or thin, etc. Emmorey, Nicodemus, and O'Grady (forthcoming, p. 19) conclude that for signs signifying three‐dimensional entities, “ASL signers produced two‐handed classifier signs in which the configuration and movement of the hands depict the shape.” They give examples like sphere (in which the fingertips of the Claw handshape come together twice) and cylinder (in which the C‐handshapes move to show the length of the sides of the cylinder). In their dictionary study, by contrast, Ferrara and Napoli (2019) find that both one‐handed and two‐handed signs can signify three‐dimensional entities. For this reason, we began the current study with the most rudimentary of hypotheses:

Dimension Principle (first approximation): If path movement is iconic in that it draws (part of) the signified entity, handshapes for drawing two‐dimensional entities should have a recognizable point or points, whereas handshapes for drawing three‐dimensional entities should allow representation of surface features.

We conducted three studies to test this hypothesis: (1) a shape‐drawing study, (2) a dictionary study of ASL, and (3) a cross‐linguistic lexical study.

4. Shape‐drawing study

Our shape‐drawing study uses the data in Ferrara and Napoli (2019), which we now describe. Deaf signing participants were individually presented with pictures of two‐dimensional shapes and asked to record themselves communicating each shape to someone else in their deaf community. This study was conducted to investigate whether signers would use one moving hand or two, and whether and which mathematical factors influence that choice. When the study was repeated with non‐signing hearing participants, all participants opted to trace the shapes in the air with only one hand. 13 Additionally, we gathered data from a hearing participant from a deaf family who grew up using sign (a child of deaf adults, CODA). Had her data been distinguishable from those of our deaf participants, we would have run the survey with additional CODAs; they were not. We did not include her in our statistics, but had we done so, she would have fallen within the interquartile range in all our boxplots of the data from deaf participants. Ferrara and Napoli (2019) therefore reported only on the study of the deaf participants. Since the non‐signing hearing participants all used only the 1‐handshape (i.e., they drew in the air with their index finger), and since we had only one CODA participant, we have nothing more to say about either group regarding the present questions. So here, as well, we report only on the study of the deaf participants, turning our attention to the relationship of handshape to path movement.

4.1. The stimuli and coding

The shape‐drawing task was designed to be completed in approximately 5 min. Initial test runs therefore led us to limit the task to 49 shapes (trials). All 49 shapes were presented to each participant (shape stimuli are in Appendix S1, with information on how many clips were produced of each shape). The choice of shapes was dictated by our expectations of which mathematical properties of the shapes might be relevant for determining the choice between using one hand (Method One) and two (Method Two).

Importantly, the selection of shapes was not influenced by expectations about handshape, since we anticipated that drawing would be done with the 1‐handshape (an index finger extended from a fist, shown in Fig. 2 along with the i‐handshape). This is the handshape demonstrated in instructional resources (textbooks and online) for teaching how to sign shapes, and it can be used for both hands regardless of method, including for a hand that may (optionally) serve as a point buoy (a fixed hand that holds the initial starting point for movement of the other hand; see Liddell, Vogt‐Svendsen, & Bergman, 2007). That is, we anticipated signers would use the index fingertip like a paintbrush tip, as all hearing participants did and as is done in many lexical items. As Nyst (2016, p. 86) says, there are some signs where only the fingertips trace a shape on the body or in space, leaving a “virtual trace or imprint.”

Fig. 2.

Two precise‐tip paintbrush handshapes.

4.2. Participants

Seventeen deaf signers (eight men, nine women), ranging in age from their 20s to their 60s, participated in the shape‐drawing study. Twelve of the participants use ASL (five men, seven women); 10 come from different areas of the United States and two from Canada, but the Canadians' signing was indistinguishable in this study from that of the Americans. The remaining five use the sign languages of Brazil (1), Italy (1), the Netherlands (2), and Turkey (1). All but one reported a sign language to be the language (or one of the languages) they were most comfortable communicating in. The remaining participant, who was late‐deafened (in early adult years), reported ASL as her second most comfortable language after English, but her signing was indistinguishable in this study from that of the other signers. None of the participants use a sign language exclusively; all have received a university‐level education and interact with non‐signers frequently.

We did not collect information on participants with respect to their signing history for three reasons. First, signing shapes is a task based on iconicity and aspects of signing that rely heavily on iconicity tend to be resilient (Goldin‐Meadow, 2003); signers master them reliably regardless of the age at which they began signing. Second, in many deaf communities it is not acceptable protocol to collect such information (Napoli, Sutton‐Spence, & Quadros, 2017); doing so might have unnecessarily limited who was willing to participate. Finally, collecting such data might have “a negative effect on deaf communities by exalting the language of those who were privileged enough to acquire a firm foundation in signing during the sensitive period for language development and discounting the language of others” (Fisher, Mirus, & Napoli, 2019, pp. 152–153).

Participants were recruited through friends and acquaintances, who shared the invitation with other deaf people who primarily use a sign language. Participants were instructed to video‐record themselves while communicating the list of shapes (all used a webcam). They positioned themselves so that the frame extended from about the lower chest to about the top of the head, which captured the full range of movement of the manual articulators. The instructions allowed participants to offer as many responses as they deemed necessary, but asked them to provide their preferred rendering first and any alternative renderings after that (see Appendix S2).

4.3. Design

Participants were given a link to a Qualtrics‐based web survey with the task instructions. Each participant received a randomized list of the 49 shapes, each large enough to fill the screen. Participants could therefore scroll ahead to later trials if they chose, but otherwise they would see only their current shape; judging from the order and pacing of their responses, no participant appears to have scrolled ahead. Although the stimulus shapes are given labels in this paper for ease of reference, participants never saw these labels. Participants were free to spend as much time as they needed and, overall, completed the task within the expected time frame (the full video recordings were 5.7 min long on average: 5.4 min for ASL signers and 6.4 min for non‐ASL signers). All signers completed the task in one sitting and in a sufficiently lit setting.
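The reported overall mean is consistent with the two group means once they are weighted by group size (12 ASL signers and 5 non‐ASL signers; Section 4.2). A minimal Python check, assuming that each participant contributed exactly one full recording:

```python
# Group means (minutes) and group sizes as reported in Sections 4.2 and 4.3.
# Assumption: one full recording per participant.
groups = {
    "ASL": (5.4, 12),
    "non-ASL": (6.4, 5),
}

# Participant-weighted mean: sum(mean_i * n_i) / sum(n_i).
total_minutes = sum(mean * n for mean, n in groups.values())
total_participants = sum(n for _, n in groups.values())
overall_mean = total_minutes / total_participants

print(f"{overall_mean:.2f} min")  # 5.69 min, i.e., 5.7 when rounded to one decimal
```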

4.4. Total videos

Eight responses could not be included in our analysis: One participant inadvertently skipped a shape, and another signer's video froze on seven shapes. Of the analyzable data, six signers gave only one attempt for each shape. All others produced multiple attempts for at least one shape, and some produced multiple attempts for most shapes. The maximum number of video clips any one participant provided was 95. The total number of clips was 1,009.

Responses were characterized by several strategies. Four discrete non‐drawing strategies were observed: (a) comments (where a signer described the shape by saying, e.g., that it looked like a window), (b) fingerspelling, (c) hand‐as‐shape (where a signer made [part of] the shape by configuring their hands in a certain way and did not, then, move the hand[s]), and (d) name (such as “star” or “box”). Sometimes signers combined non‐drawing strategies with each other and with drawing. The vast majority of clips, however, consisted solely of drawing in the air: 784 total, of which 545 are by ASL signers. These 784 clips are analyzed with respect to method choice in Ferrara and Napoli (2019), and these are the clips we now report on with respect to handshape and path movement.
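The bookkeeping behind these counts can be reconstructed with simple arithmetic. The Python sketch below assumes that each of the eight excluded responses corresponds to one participant‐shape trial; the derived quantities (trials, analyzable responses, clips per response, and the share of pure‐drawing clips) are computed here from the reported figures rather than taken from the study's records.

```python
participants = 17
shapes = 49
excluded = 1 + 7  # one skipped shape + seven shapes lost to a frozen video

trials = participants * shapes   # participant-shape trials
analyzed = trials - excluded     # analyzable responses (assumes one trial per excluded response)

total_clips = 1009
drawing_clips = 784              # clips consisting solely of drawing in the air
asl_drawing_clips = 545

print(trials, analyzed)                       # 833 825
print(round(total_clips / analyzed, 2))       # 1.22 clips per analyzable response
print(f"{drawing_clips / total_clips:.1%}")   # 77.7% of clips are pure drawing
print(drawing_clips - asl_drawing_clips)      # 239 pure-drawing clips by non-ASL signers
```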

4.5. Results

Since our ASL and non‐ASL signer data did not significantly differ, we analyze them as a whole. Of the 784 clips that were pure drawing, all but 133 of them used the 1‐handshape. With respect to handshapes in these 133 clips, when there were enough data to make comparisons, the ASL and non‐ASL signer data again did not significantly differ, so we again report on them as one data set. These 133 clips used a total of eight distinct handshapes: baby‐C‐, C‐, B‐, i‐, 5‐, flat‐O‐, claw, and flat‐B‐ (all shown below as they are discussed).

In Table 1, each row is headed by one of the nine handshapes used in drawing shapes. Under the column “Method One” is the number of clips that used only one moving hand, whether or not there was also a point buoy (in all but seven instances the handshape of the buoy was the same handshape as the moving hand). 14 Under the column “Method Two” is the number of clips that used two moving hands (in all of these the two handshapes were identical). Under the column “Mix” is the number of clips that used some combination of the two methods—usually starting with two moving hands and then changing to just one, or vice versa.

Table 1.

Raw counts of method choice by handshape

| Handshape | Method One | Method Two | Mix | Total No. of Clips |
|---|---|---|---|---|
| 1‐handshape | 391 | 203 | 57 | 651 (83%) |
| baby‐C‐handshape | 29 | 46 | 10 | 85 (<11%) |
| C‐handshape | 3 | 10 | 2 | 15 (<2%) |
| i‐handshape | 8 | 2 | 4 | 14 (<2%) |
| B‐handshape | 2 | 7 | 3 | 12 (1.5%) |
| 5‐handshape | 0 | 3 | 0 | 3 |
| flat‐O | 2 | 0 | 0 | 2 |
| Claw | 0 | 1 | 0 | 1 |
| flat‐B | 0 | 1 | 0 | 1 |
| Total for special handshapes only | 44 | 70 | 19 | 133 |

From Table 1, we conclude that the 1‐handshape is the norm. In support, we note that the 1‐handshape is unique in being used to draw all 49 shapes: It is used always for some shapes and at least a few times for each shape. The other eight, then, are special, and their use is triggered by factors pertinent only to signers, given that non‐signers used only the 1‐handshape. Our first question was whether the eight special handshapes fall into subgroups that might give us better insight into their use in these clips.

Since in all these clips people drew shapes in the air, it stands to reason that the heavily favored handshape is the 1‐handshape (in Fig. 2; recall that hearing non‐signers used the 1‐handshape). That is, the 1‐handshape acts analogously to a paintbrush with a precise tip. Another handshape analogous to a precise‐tip paintbrush is the i‐handshape (also in Fig. 2). Like the 1‐handshape, it has a single extended finger, the tip of which traces the shape in the air.

A third handshape seems to be like a precise‐tip paintbrush, if not quite so precise as 1‐ and i‐: the flat‐O. All fingertips line up together and meet the thumb tip (with full extension of the interphalangeal knuckles), so that the fingertips and thumb tip together form a point, as seen in Fig. 3. We call these three the PT‐handshapes (for “precise‐tip”).

Fig. 3.

Another precise‐tip paintbrush handshape.

While we grouped 1‐, i‐, and flat‐O‐ as PT‐handshapes on the basis of their ability to act like a point tracing a shape in the air, these three handshapes are distinguished in our study from the other six in another way as well: Flat‐O‐ is used only with Method One, and both 1‐ and i‐ are more heavily used with Method One than with Method Two or Mix. In contrast, all other special handshapes are used more with Method Two than with Method One or Mix. In other words, the other six special handshapes are used with the method that is conditioned (Method Two), while the PT‐handshapes are used with the elsewhere method (Method One). That means the PT‐handshapes behave as a unit and in contrast to the other six handshapes with respect to geometric properties of the movement paths (which are iconic of the shapes drawn).

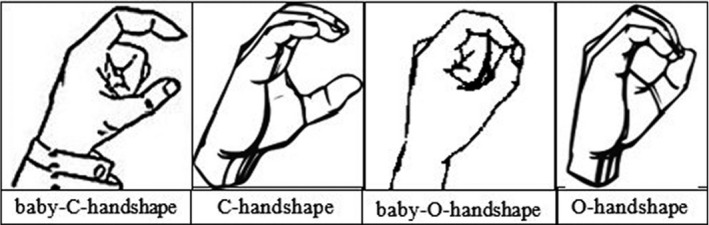

A second subgrouping among the eight special handshapes involves the baby‐C‐handshape and the C‐handshape. These two, shown in Fig. 4, are distinguished by the fact that the thumb tip acts like one drawing tool while the fingertip(s) act like a separate drawing tool, almost as if two hands were drawing at once. This characterization seems apt when we consider that in language after language these handshapes are used as perimeter classifiers (Collins‐Ahlgren, 1990; Corazza, 1990; Liddell & Johnson, 1987). Hence, we call them MA‐handshapes (for “multiple‐articulator”). In all instances in which MA‐handshapes were used, the tips of the fingers that draw the shape began and/or ended touching the thumb tip (i.e., in baby‐O‐ or O‐handshape), so the handshape changes from [±contact] to [∓contact].

Fig. 4.

Two multiple‐articulator handshapes with their [+contact] counterparts.

Notice that in the [+contact] handshapes, baby‐O‐ and O‐, the selected fingertips (one or all four) bunch up to meet the thumb tip. So, like the flat‐O‐handshape in Fig. 3, these look like they are precise‐tip paintbrushes. Here, however, these [+contact] handshapes do not trace a shape. Rather, they indicate a starting point that opens up into the (baby‐)C‐handshape multiple‐articulator and/or they indicate a final point that the (baby‐)C‐handshape closes to. Thus, we do not classify them with respect to being drawing tools (and we return to consideration of them in Appendix S6).

In the remaining four special handshapes used in drawing shapes, that is, B‐, 5‐, claw, and flat‐B‐, multiple digits line up beside each other to trace the shape, and these can be considered variations on a single basic handshape (Brentari, 2011; Whitworth, 2011). These handshapes vary among themselves by whether or not the thumb is opposed to the fingers, whether the digits are abducted (spread) or not, and whether the interphalangeal knuckles are flexed or not (Keane, Sevcikova Sehyr, Emmorey, & Brentari, 2017). They are shown in Fig. 5.

Fig. 5.

Four thick‐tip paintbrush handshapes.

In the B‐ and flat‐B‐handshapes, the digits touch, but in a line (and, importantly, they do not make contact with the thumb tip, in contrast to flat‐O), so using the tips of these handshapes to draw seems more like using a thick‐tip paintbrush than like using either a PT‐handshape or an MA‐handshape. With the 5‐handshape and claw, the digits do not touch, so using these handshapes to draw a shape opens the possibility that each fingertip might act as a separate articulator, but they might just as well act like a thick‐tip paintbrush. Because we have so little data on these two (only three instances of the 5‐handshape and one of claw), we go forward keeping track of the four handshapes in Fig. 5 as a group, separate from both the PT‐handshapes and the MA‐handshapes. We call these the TT‐handshapes (for “thick‐tip”).

We now have three sets of handshapes: precise‐tip paintbrushes (1‐, i‐, flat‐O‐), multiple articulators (baby‐C‐ and C‐, which always begin and/or end closed into baby‐O‐ or O‐), and thick‐tip paintbrushes (B‐, flat‐B‐, 5‐, claw). The data from Table 1 are reconfigured under this organization in Table 2. Fig. 6 shows the segmented standardized bar graphs corresponding to Table 2.

Table 2.

Raw data on method choice for handshape type

| Handshape type | Method One | Method Two | Mix | Total No. of Clips |
|---|---|---|---|---|
| PT (1‐, i‐, flat‐O‐) | 401 | 205 | 61 | 667 |
| MA (baby‐C‐, C‐) | 32 | 56 | 12 | 100 |
| TT (B‐, flat‐B‐, 5‐, claw) | 2 | 12 | 3 | 17 |
| Total | 435 | 273 | 76 | 784 |

Fig. 6.

Chart for method choice for handshape type.
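The regrouping of Table 1 into Table 2 is a plain sum over rows. The Python sketch below re‐derives the Table 2 counts from the Table 1 counts. The dictionary layout and the names `TABLE_1`, `HANDSHAPE_TYPE`, and `aggregate_by_type` are our own and serve only as illustration. The flat‐B counts (0, 1, 0) are the values implied by the column totals of Table 1.

```python
# Raw counts from Table 1: (Method One, Method Two, Mix) per handshape.
TABLE_1 = {
    "1": (391, 203, 57),
    "baby-C": (29, 46, 10),
    "C": (3, 10, 2),
    "i": (8, 2, 4),
    "B": (2, 7, 3),
    "5": (0, 3, 0),
    "flat-O": (2, 0, 0),
    "claw": (0, 1, 0),
    "flat-B": (0, 1, 0),  # implied by the column totals of Table 1
}

# Grouping of handshapes into types, as described in the text.
HANDSHAPE_TYPE = {
    "1": "PT", "i": "PT", "flat-O": "PT",
    "baby-C": "MA", "C": "MA",
    "B": "TT", "flat-B": "TT", "5": "TT", "claw": "TT",
}


def aggregate_by_type(counts, grouping):
    """Sum per-handshape method counts into per-type method counts."""
    totals = {}
    for handshape, row in counts.items():
        group = grouping[handshape]
        previous = totals.get(group, (0, 0, 0))
        totals[group] = tuple(a + b for a, b in zip(previous, row))
    return totals


table_2 = aggregate_by_type(TABLE_1, HANDSHAPE_TYPE)
for group, (one, two, mix) in table_2.items():
    print(group, one, two, mix, one + two + mix)
# PT 401 205 61 667
# MA 32 56 12 100
# TT 2 12 3 17
```

Because every handshape maps to exactly one type, the row totals of Table 2 (667, 100, 17) necessarily add back up to the 784 pure‐drawing clips.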

We cannot simply run a χ² test here to confirm that these sets are significantly distinct, since the individual data points in our corpus are not independent of each other: Many data points come from the same participant, and many are in response to the same stimulus. Still, we notice something interesting: Setting aside the Mix method, the PT‐handshapes and the MA‐handshapes are near‐inverse partitions with respect to the use of Method One or Method Two (PT: 401 vs. 205 clips; MA: 32 vs. 56 clips). Further, the few TT‐handshapes appear to stand alone.

In Fig. 7 we see pie charts for the three methods according to type of handshape. The PT‐handshapes were used the most by far in all methods because this type includes the 1‐handshape, the norm. Overall, 85.1% of the 784 clips used a PT‐handshape. The PT‐handshapes appeared in 92.2% of the clips that used Method One, 80.3% of the clips that used Mix, and 75.1% of the clips that used Method Two. Overall, 12.8% of the 784 clips used an MA‐handshape. The MA‐handshapes appeared in 20.5% of the clips that used Method Two, 15.8% of the clips that used Mix, and 7.4% of the clips that used Method One. Finally, 2.2% of the 784 clips used a TT‐handshape. The TT‐handshapes appeared in 4.4% of the clips that used Method Two, 3.9% of the clips that used Mix, and barely 0.5% of the clips that used Method One. In the following subsections, we search for correlations between handshape and primary movement among these types of handshapes.
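The percentages in this paragraph are column shares of Table 2: the clips of each method divided among the three handshape types. The minimal Python sketch below reproduces them from the raw counts. The names `TABLE_2` and `column_shares` are hypothetical, introduced only for illustration.

```python
# Table 2 counts: (Method One, Method Two, Mix) per handshape type.
TABLE_2 = {
    "PT": (401, 205, 61),
    "MA": (32, 56, 12),
    "TT": (2, 12, 3),
}


def column_shares(table):
    """For each method (column), the percentage of its clips per type (row)."""
    column_totals = [sum(col) for col in zip(*table.values())]
    shares = {}
    for group, row in table.items():
        shares[group] = [100 * n / total for n, total in zip(row, column_totals)]
    return column_totals, shares


totals, shares = column_shares(TABLE_2)
print(totals)  # [435, 273, 76]
for group, row in shares.items():
    print(group, [round(p, 1) for p in row])
# PT [92.2, 75.1, 80.3]
# MA [7.4, 20.5, 15.8]
# TT [0.5, 4.4, 3.9]
```

Each column's shares sum to 100%. The MA share of Method One clips, for example, is 32/435, which rounds to 7.4%.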

Fig. 7.

Use of handshape type for each method type.

4.5.1. Accounting for the use of PT‐handshapes

Since the 1‐handshape is used when no factors favor a special handshape, we set it aside for now and note details about the two special PT‐handshapes: i‐ and flat‐O‐. Four of our ASL signers produced the 14 instances of the i‐handshape (seven for one of them, five for another, and one for each of the other two). One signer (from the Netherlands) produced both instances of flat‐O‐. Of the 16 combined instances of use of i‐ and flat‐O‐, only two were for shapes that enclosed a unified space, with starting point and endpoint meeting (in mathematical terms, these shapes are walkable as Hamiltonian cycles, which we denote with +HamC 15; details are in Appendix S3). We suggest very tentatively (since the data are so few) that when a shape consists of a line (straight or curved, wiggly, zigzag, or looping) with starting and final vertices that do not meet (in mathematical terms, these shapes are walkable as open trails), the signer's attention is drawn to those non‐meeting endpoints. If those endpoints are relatively close to each other, the shape looks like it is missing a segment (the gap between the final vertex and the initial vertex), and the very absence of that segment is important in recognizing and, thus, in communicating the shape. This matters because the eye tends to fill in a small gap in a line (Boudewijnse, 1996), particularly if the gap seems to be the absence of something that somehow “belongs” in a figure (Yazdanbakhsh & Mingolla, 2019). So the signer chooses a special paintbrush to signal that attention should be paid to this important detail, and both i‐ and flat‐O‐ are special in that they are marked (Boyes Braem, 1990) and, thus, noticeable. The i‐, in particular, is the most fine‐tipped of the PT‐handshapes; thus, it may be the most apt for signaling that fine‐grained details are involved in drawing this shape.
We may, then, have exposed a tendency regarding handshape and movement path: When the line itself is iconic, the i‐handshape may indicate a line that does not close.
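The +HamC property can be made concrete by treating a shape as a small graph whose vertices are its corners or cusps and whose edges are the drawn segments. The Python sketch below checks by brute force whether such a graph has a Hamiltonian cycle, that is, a closed walk visiting every vertex exactly once. This graph encoding is our own assumption for illustration and need not match the coding detailed in Appendix S3; a curved shape such as a circle would first have to be approximated by sample points on its outline, which is likewise an assumption of the sketch. Brute force is adequate only because the stimulus shapes have a handful of vertices.

```python
from itertools import permutations


def has_hamiltonian_cycle(num_vertices, edges):
    """Brute-force test: is there a closed walk visiting every vertex once?

    Vertices are 0..num_vertices-1; edges are undirected (u, v) pairs.
    Vertex 0 is fixed as the starting point, so only orderings of the
    remaining vertices are enumerated.
    """
    if num_vertices < 3:
        return False
    adjacent = {frozenset(edge) for edge in edges}
    for order in permutations(range(1, num_vertices)):
        path = (0,) + order + (0,)
        if all(frozenset(pair) in adjacent for pair in zip(path, path[1:])):
            return True
    return False


# A square: four corners joined in a loop, so the line closes (+HamC).
square = [(0, 1), (1, 2), (2, 3), (3, 0)]
# A V shape: two segments meeting at a vertex; the endpoints never meet (open trail).
vertex_shape = [(0, 1), (1, 2)]

print(has_hamiltonian_cycle(4, square))        # True
print(has_hamiltonian_cycle(3, vertex_shape))  # False
```

The V shape fails because no ordering of its vertices can return from the final vertex to the starting one: exactly the missing closing segment the paragraph above describes.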

4.5.2. Accounting for the use of MA‐handshapes

In MA‐handshapes the thumb and the finger(s) act as separate articulators, almost like two mini‐hands. In a sign in which two hands move, each hand usually has its own movement path (Napoli & Wu, 2003), and the relationship of the two hands can tell us something about the relative positions of parts of described entities (as in drawing shapes) or of whole described entities (as in classifier constructions; Brozdowski, Secora, & Emmorey, 2019). Thus, in MA‐handshapes we might expect the extended thumb to trace one part of a shape and the other finger(s) to trace another part. When two hands move in signing a lexical item (Battison, 1978) or a shape (Ferrara & Napoli, 2019), they generally move in a reflexively symmetrical way across a plane, typically the midsagittal plane that vertically divides the body into two halves. With the baby‐C‐ or C‐handshape, however, the thumb and finger(s) of a single hand should be expected to move (close to) reflexively symmetrically to each other across the plane that bisects the space between them (cutting through the web of the thumb), regardless of the orientation of the hand. Thus, the MA‐articulators are freer, in a sense, than two moving hands: They are not wedded to the bilateral symmetry of the two manual articulators, which are anchored in the trunk of the body; instead, they are wedded to a symmetry internal to one manual articulator (the hand) as it moves through the air. So, for example, an MA‐handshape could draw any (relatively) parallel lines; it could also draw any angle of 90 degrees or smaller by starting with the tip(s) of the fingers and the thumb tip separated by the appropriate amount, so that the web between the thumb and the fingers forms the desired angle, and then moving the hand away from that angle while closing those tips together toward the point in space that the web of the hand originally occupied (see also Emmorey, Nicodemus, & O'Grady, forthcoming, their table 2 and fig. 4, where they discuss the L‐handshape changing to a G in drawing the angle of a triangle, which is almost certainly a variation on the baby‐C‐ changing to a baby‐O‐ in our shape‐drawing study). As Nyst (2016, p. 90) points out, the aperture between the fingers and the opposing thumb can indicate a stretch of space between two edges or lines in the same way that distance between the two hands can.

We expect these two handshapes (C‐ and baby‐C‐) to be able to render shapes that are narrow enough for the distance between the thumb and the finger(s) to span the shape easily. For example, it would be easy to draw the perimeter of the exaggeratedly narrow shapes in Fig. 8 with baby‐C‐ (opening from a point indicated by baby‐O‐ at the start of drawing and closing to another point indicated by baby‐O‐ at the end), using Method One.

Fig. 8.

Two shapes easy to draw with the baby‐C‐handshape.

Appendix S4 shows the shapes that were drawn with an MA‐handshape at least once. All but one are +HamC (the exception is CRESCENT_COMPO). So the MA‐handshapes contrast with the i‐handshape, which, as noted in the subsection above, is only rarely used with +HamC shapes. We arrive at another tendency regarding handshape and movement path: The space between the internal articulators (the tips of finger[s] and thumb) in the MA‐handshapes may be iconic of the space enclosed in a shape defined by the movement path, while the internal articulators themselves may be iconic of the edges of that space.

In support of this tendency, we note that three of the 49 shapes were only rarely signed with the 1‐handshape and usually signed with an MA‐handshape, and a fourth was signed with an MA‐handshape only one time fewer than with the 1‐handshape, as shown in Table 3.

Table 3.

Shapes often rendered with an MA‐handshape, compared with the 1‐handshape (number of clips)

| Shape | 1‐Handshape | MA‐Handshape |
|---|---|---|
| RECTANGLE_THIN | 1 | 14 |
| EYE_HORIZ | 1 | 13 |
| CRESCENT | 5 | 12 |
| ARROW_LEFT | 4 | 3 |

These four shapes are canonical with respect to eliciting the MA‐handshapes: They are narrow, +HamC shapes, with a point (or something close to a point in the case of RECTANGLE_THIN) at one or both ends (recall that drawings with MA‐handshapes begin and/or end with the tips of the selected fingers touching, i.e., [+contact]).

More information about possible tendencies comes from consideration of method choice. The MA‐handshapes are used with all three methods (see Fig. 9). In fact, the MA‐handshapes occur more frequently with Method Two (56%) than with Method One (32%). This fact at first seems an anomaly: These handshapes are already multiple‐articulators, so why would anyone use both hands?

Fig. 9.

Segmented bar chart for frequency of shapes drawn with MA‐handshapes, given in Appendix S4.

Fourteen shapes were rendered at least once with an MA‐handshape and Method One, and all have two clear vertices (whether angles formed by straight lines or points of exaggerated curvature or cusps—see Appendix S4) that could be taken as the endpoints of the shape, exactly as expected. Seven of these shapes were rendered exclusively with Method One when an MA‐handshape was used.

Thirteen shapes were rendered at least once with an MA‐handshape and Method Two. These are the shapes we need to account for, which we do in Appendix S4 by considering details of each shape. In brief, MA‐handshapes can more efficiently convey shapes that spread narrowly along the horizontal axis and that are symmetrical across that axis (i.e., +XSym). Further, the use of MA‐handshapes with Method Two can allow one to get around the constraint against using Method Two with curves, since these MA‐handshapes can convey the curves via hand‐internal movement, maintaining a straight primary movement path.

Eight shapes were rendered at least once with an MA‐handshape and Mix. We find no single explanation for these eight shapes. Five of them were also rendered with one or both of the other methods; only three were not: EGGS_2, EGGS_3, and STAR. That we find no coherent pattern is not disturbing: Mix itself is not a coherent method. We include these data as a service, in case others see a pattern we have not.

In Fig. 9 we give a segmented bar chart for these data, where the horizontal axis gives the number of each shape as listed in Appendix S4 Table 1 and the vertical axis gives the number of clips. Shape 12 (ARROW_LEFT), for example, was drawn with an MA‐handshape three times, using only Method One, while shape 1 (ARROW_RIGHT) was drawn with an MA‐handshape five times, using Method One three times and Methods Two and Mix one time each.

4.5.3. Accounting for the use of TT‐handshapes

Only 17 clips exhibited TT‐handshapes, with only nine shapes rendered, so generalizations are hard to come by. Detailed information is given in Appendix S5.

Twelve of the 17 clips use Method Two, two use Method One, and three use Mix. That is, the TT‐handshapes are used six times as frequently with Method Two (12/17 = 70.6%) as with Method One (2/17 = 11.8%). In this way the TT‐handshapes contrast sharply with the PT‐handshapes, which appear with Method One almost twice as often (401/667 = 60.1%) as with Method Two (205/667 = 30.7%). Interestingly, while Mix is the least used method for the PT‐handshapes and the MA‐handshapes, for the TT‐handshapes it is used more than Method One. This fact might be of no significance, since the numbers are so small, or it might reflect confusion or difficulty that signers felt in rendering the relevant shapes. We also note that only four clips with TT‐handshapes involve shapes that are −Curve, and all four use Method Two (TRIANGLE_DOWN, VERTEX_UP, and VERTEX_DOWN [twice]). So the correlation of TT‐handshapes with Method Two seems to be independent of whether a shape is +Curve.
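Because the two proportions in each comparison share a denominator, the ratios reduce to ratios of raw counts from Table 2:

$$
\frac{12/17}{2/17} = \frac{12}{2} = 6, \qquad \frac{401/667}{205/667} = \frac{401}{205} \approx 1.96
$$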

Although we have nothing firm to say about these uses of TT‐handshapes, we have suspicions that will prove pertinent in analyzing the results of our dictionary studies in later sections, suspicions that involve relating shapes to entities in the world. While we selected our shapes without any intention of making them “look like” anything, many of them can be seen as resembling three‐dimensional entities, particularly ones where the third dimension is minimal, so that they are, essentially, flat. A rectangle, for example, could look like a flat‐screen TV or a blackboard or a poster or many other things. Shapes that have no conventional name are particularly prone to being seen as resembling three‐dimensional entities (even if the third dimension is minimal); when Sevcikova Sehyr, Nicodemus, Petrich, and Emmorey (2018) asked people to refer to non‐nameable figures, people started out referring to the figures' geometric properties (their shape), but after several iterations they shifted to describing the figures by resemblance to nameable entities. We note, in particular, that five of the shapes that are non‐nameable in ordinary (non‐mathematical) talk and were rendered at least once with TT‐handshapes bear resemblance to robustly three‐dimensional entities: VERTEX_DOWN (a valley?); PARABOLA (a hill?); VERTEX_UP (a volcano?); CRESCENT_COMPO (a [basket]ball?); and SQUARE_ARC (one signer stated that it looked like an arched window). Perhaps in these instances the participants in our shape‐drawing study used the handshapes they would have used in the lexical signs for those entities (all TT‐handshapes). If that were the case, it might account for 10 of the 17 clips.

4.6. Conclusions from the shape‐drawing study

We can now use the shape‐drawing study as a test of the Dimension Principle, restated here:

Dimension Principle (first approximation): If path movement is iconic in that it draws (part of) the signified entity, handshapes for drawing two‐dimensional entities should have a recognizable point or points, whereas handshapes for drawing three‐dimensional entities should allow representation of surface features.

Fully 85% of the clips used PT‐handshapes, the most used handshape type regardless of method, because this type includes the 1‐handshape. Since all shapes in the study were two‐dimensional and were not intended to be iconic of anything three‐dimensional, this prevalence is predicted by the Dimension Principle.

PT‐handshapes were used most frequently with Method One. However, that is an accidental product of the experimental design: Of the 49 shapes, only eight were +YSym, −Curve, which turned out to be the combination of factors that favors Method Two.

Nearly 13% of the clips (100 out of 784) used an MA‐handshape. This also is consistent with the Dimension Principle: In each instance, the tips of the selected fingers were drawing (nearly) reflexively symmetrical edges of the shape.

The remaining 2% of the clips used TT‐handshapes, for which no mathematics‐based explanation emerged. However, if our suspicion is correct that in some instances the participants saw a three‐dimensional entity in the image we presented and used the handshape appropriate for signing that entity, only seven clips used TT‐handshapes to render two‐dimensional shapes. In that case, more than 99% of the clips were consistent with the Dimension Principle.
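Under that suspicion, and counting every PT‐ and MA‐handshape clip as consistent with the principle (as the two preceding paragraphs argue), the tally works out as follows (667 PT clips, 100 MA clips, and the 10 reinterpreted TT clips):

$$
\frac{667 + 100 + 10}{784} = \frac{777}{784} \approx 0.991
$$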

Other conclusions from this study that relate to conclusions in one or both of the other studies described in Sections 5 and 6 are postponed until Section 7.

5. Dictionary study of ASL

As pointed out in Section 2, sign languages have a productive lexicon and a frozen lexicon, and only the latter is found in dictionaries. The productive lexicon consists of classifier constructions, in which the phonological parameters convey meaning (Benedicto & Brentari, 2004; Janis, 1992; Kegl, 1990; Liddell, 2003; Schembri, 2003; Supalla, 1982, 1986; among many others). Classifier constructions have been analyzed as examples of noun incorporation (Meir, 2001) and of inflected verbs (Glück & Pfau, 1998). Experimental work has found that handshape in these constructions is categorically produced and perceived (although it can display gradient properties; Emmorey & Herzig, 2003), just as it is in homesign systems (Goldin‐Meadow, Mylander, & Franklin, 2007). Handshapes in classifier constructions encode argument structure (Benedicto & Brentari, 2004) and largely adhere to phonological rules (Liddell, 2003), displaying phonological patterns not found in the gestures hearing individuals produce in gesture tasks (Brentari, Coppola, Mazzoni, & Goldin‐Meadow, 2012; see also Goldin‐Meadow, Brentari, Coppola, Horton, & Senghas, 2015).

Nevertheless, we here limit our discussion to the frozen lexicon, because we see no reason to expect a correlation between handshape and path movement in classifier constructions and every reason not to. Handshape in these predicates is chosen independently of movement, since it is the appropriate handling or entity classifier for the arguments in the event. The path of movement in classifier constructions is likewise chosen independently of handshape: It is dictated by the aim of presenting an analogy between the path the manual articulators trace and the movement of an argument in the real world. Since any entity can move or be moved along any shaped path (generally speaking), neither handshape nor the shape of the path movement should be constrained by or correlated with the other in classifier constructions.

Caution is in order here, though: The line between the productive lexicon and the frozen lexicon can be crossed. Sandler and Lillo‐Martin (2006, p. 103) point out that if a given classifier handshape is frequently combined with a certain kind of movement, the classifier construction can be reanalyzed as an item in the frozen lexicon. As an example they give the verb iron in ASL: A particular classifier handshape (here a handling one, for how one grips the handle of the iron) moves side to side in front of the signer. They claim (quite reasonably) that, out of context, this productive classifier construction would have meant something like “slide a narrow‐handled object back and forth along a surface.” But context, utility, and frequency conspired to lexicalize this classifier construction, so much so that it can inflect for temporal aspect, like other frozen lexicon verbs but unlike productive classifier constructions. Still, the line between frozen lexicon items and classifier constructions is generally clear synchronically, and we return to this question in Section 7.

From here on, then, when we talk about lexical items, we mean only frozen lexical items. 16

5.1. Older study and new study

In spring 2018 we considered the entire inventory of signs in the online dictionary aslpro.com, with random checks in two other online dictionaries: handspeak.com and signingsavvy.com. This study was done to test whether the same constraints operative in the shape‐drawing study with respect to method choice are operative in the lexicon for signs whose primary movement path draws (part of) the outline of the signified entity's shape. In particular, we did not consider metaphorical signs (such as the hands moving away from each other forming a large V for general(ly)), or signs whose movement mimics an action associated with the sense of the sign (such as the hour hand of a clock moving in a circle for hour). Limiting our study to only this one kind of movement iconicity, we found that 82% of the dictionary entries were consistent with the Lexical Drawing Principle. An additional 7% had movement paths that corresponded to disconnected graphs—which we also found to be unruly in the shape‐drawing study, hence our earlier warning that we suspect that the Lexical Drawing Principle applies only to connected movement paths.

In March 2019 we did a second dictionary study, gathering the relevant data from aslpro.com all over again, on the chance that the dictionary had added or replaced items (a common occurrence for online sign dictionaries). We here give findings from only that one online dictionary (to make it easier for our reader to confirm the articulations we discuss), although we compare to other dictionaries when helpful. We focus on correlations between handshape types and movement paths in signs in which the movement path draws (part of) the signified entity.

5.2. Data gathered

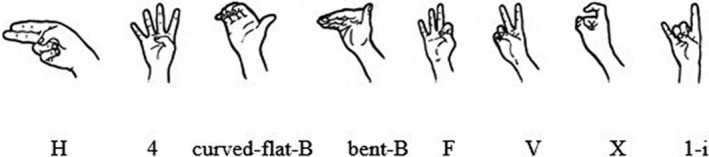

In Appendix S6 we organize the 120 dictionary entries we found pertinent. A glance at the table there shows the inclusion of eight handshapes that did not appear in our shape‐drawing study. These new handshapes are shown in Fig. 10.

Fig. 10.

Handshapes in Study 2 that did not appear in Study 1.

The number of signs using each handshape type in Study 2 is shown in bar graphs in Fig. 11 (based on data in Appendix S6 Table 1), where they are categorized according to the geometric properties of their path movement that were found to be relevant to method choice in Study 1. For purposes of analyzing our results, initially we maintain the H‐handshape and the 4‐handshape as their own types. All other handshapes are conflated under the rubric “other,” since there are so few signs that use each one. Note that we cannot use the symbols + (plus) and − (minus) in the legend of this chart, so the absence of a symbol here means plus, and we write “no” for minus. An asterisk indicates signs that did not behave as predicted by the Lexical Drawing Principle. Thus, signs marked “Curve” used Method One as predicted, and signs marked “*Curve” used Method Two; signs marked “YSym, no Curve” used Method Two as predicted, and signs marked “*YSym, no Curve” used Method One; and signs marked “noYSym, no Curve” used Method One as predicted, which was all of the −YSym, −Curve signs.
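The predictions encoded in this legend can be written as a small decision function. The Python sketch below is our own rendering of the pattern stated in this paragraph (+Curve paths take Method One; +YSym, −Curve paths take Method Two; −YSym, −Curve paths take Method One), applied, as the principle is, only to connected movement paths. The function names, the `Sign` record, and the glosses are hypothetical placeholders, not entries from Appendix S6.

```python
from dataclasses import dataclass


def predicted_method(ysym: bool, curve: bool) -> str:
    """Method predicted from the geometric properties of a connected path."""
    if curve:
        return "One"
    return "Two" if ysym else "One"


@dataclass
class Sign:
    gloss: str    # hypothetical label, for illustration only
    ysym: bool    # +YSym if True
    curve: bool   # +Curve if True
    method: str   # observed method: "One" or "Two"


def conforms(sign: Sign) -> bool:
    """True if the observed method matches the predicted one."""
    return predicted_method(sign.ysym, sign.curve) == sign.method


# Hypothetical entries, not taken from the dictionary data.
examples = [
    Sign("SIGN_A", ysym=True, curve=False, method="Two"),   # conforms
    Sign("SIGN_B", ysym=True, curve=True, method="Two"),    # would be marked "*Curve"
    Sign("SIGN_C", ysym=False, curve=False, method="One"),  # conforms
]
print([conforms(s) for s in examples])  # [True, False, True]
```

A sign flagged `False` here corresponds to one marked with an asterisk in the legend.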

Fig. 11.

Bar graphs for frequency of handshape types in Study 2 according to characteristics of movement path.

Because our chi‐square calculator does not accept cells containing zero, we substituted 1 for each zero. The first five sets of handshapes (excluding the “others”) are not significantly different from one another (Pearson's χ² test: p = .050849). Thus, we need to take a closer look at handshape types and consider possible complications for the Lexical Drawing Principle.
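The zero‐substitution step and the Pearson statistic can be sketched in a few lines of Python. The function name `pearson_chi_square` is ours and is not the calculator used in the study, and the table below is hypothetical (the Fig. 11 counts are not reproduced here), so the sketch illustrates only the mechanics. A p‐value would then be read off the χ² distribution with the returned degrees of freedom, for instance with SciPy's `scipy.stats.chi2.sf`.

```python
def pearson_chi_square(table, zero_replacement=1):
    """Pearson chi-square statistic for an r x c contingency table.

    Zero cells are first replaced by `zero_replacement`, mirroring the
    substitution described in the text. Returns (statistic, degrees of freedom).
    """
    cells = [[n if n != 0 else zero_replacement for n in row] for row in table]
    row_totals = [sum(row) for row in cells]
    col_totals = [sum(col) for col in zip(*cells)]
    grand = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(cells):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand
            statistic += (observed - expected) ** 2 / expected
    dof = (len(cells) - 1) * (len(cells[0]) - 1)
    return statistic, dof


# Hypothetical 2 x 2 counts, for illustration only (not the study's data).
stat, dof = pearson_chi_square([[10, 0], [0, 10]])
print(round(stat, 3), dof)  # 14.727 1
```

With the substitution, the table actually tested is [[10, 1], [1, 10]], whose expected counts are all 5.5.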

5.3. Reorganization of handshapes into two major types

In Appendix S7 we give details on the diverse array of handshapes used in our study and how they pattern with method choice. On the basis of those observations, we suggest a reorganization of the signs in Appendix S6 Table 1 into two groups: ED‐handshapes and SD‐handshapes.

The ED‐handshapes contain the PT‐handshapes 1‐ and i‐, as well as baby‐C‐, 4‐, V‐, and 1‐i‐. These handshapes form a natural class in that not all fingers are selected (here, selected fingers are extended, though they may curve) and the selected fingers do not touch one another. In ED‐handshapes the tips of the extended fingers are separate from one another, and each draws an edge.

The SD‐handshapes contain the TT‐handshapes (various B handshapes, 5‐, claw), as well as C‐ and O‐. These handshapes also form a natural class in that all five digits behave the same way with regard to extension or flexion and spreading; they can be viewed as variants of one another (see general discussion in studies from Stokoe, Casterline, & Croneberg, 1965, to Whitworth, 2011). While the fingers might or might not touch, they do not separately draw edges. Instead, if there is space between the fingers, it indicates a wider—but continuous—surface. In SD‐handshapes, the entire handshape is a surface used to draw a three‐dimensional entity.

Flat‐O‐ appeared in our shape‐drawing study, and we analyzed it as a PT‐handshape. However, flat‐O‐ was not used for drawing in the ASL dictionary study. At this point we set it aside, without gathering it into either of the two new handshape super‐types.

We also leave out F‐, in which only two digits are [+contact] but which behaves more like a surface‐drawing handshape (which typically has all five digits in the same position: extended or flexed, spread or not). This might seem a regrettable omission, since the use of F‐, while infrequent in the frozen lexicon (Henner, Geer, & Lillo‐Martin, 2013), is common in conversation as a classifier. However, we found only one (distinct) lexical sign that used it in drawing. Its frequent use for drawing in conversation is almost certainly due to classifier constructions. Therefore, the omission seems justified. We also omit baby‐O‐ and X‐, since these handshapes occurred with only one example each.

We sum up our new organization of the data in Table 4, which reports the total number of signs in each cell; the reader is referred to Appendix S6 Table 1 for the lexical items. A number followed by an asterisk gives the number of signs that did not conform to the Lexical Drawing Principle. These data are arranged in bar graphs in Fig. 12. The two groups are significantly different (Pearson's χ² test: p = .012644).

Table 4.

ASL signs classified by handshape super‐types ED‐ and SD‐

| Handshape | +YSym, −Curve | +Curve | −YSym, −Curve | Total |

|---|---|---|---|---|

| Edge‐drawing‐handshapes | ||||

| 1 | 8 | 11 | 5 | 24 |

| 1* | 1* | |||

| i | 3 | 3 | ||

| 1* | 1* | |||

| H | 4 | 1 | 5 | |

| baby‐C | 8 | 7 | 3 | 18 |

| 1* | 2* | 3* | ||

| 4 | 1 | 2 | 4 | 7 |

| V | 1 | 1 | ||

| 1‐i | 1 | 1 | ||

| Total | 26 | 21 | 12 | 59 |

| 2* | 3* | 5* | ||

| Surface‐drawing‐handshapes | ||||

| Flat‐B | 13 | 3 | 2 | 18 |

| 4* | 4* | |||

| Curved or bent flat‐B | 1 | 3 | 1 | 5 |

| 1* | 1* | |||

| B | 2 | 2 | ||

| claw | 3 | 1 | 4 | |

| 4* | 4* | |||

| 5 | 3 | 3 | ||

| 1* | 1* | |||

| C | 1 | 1 | ||

| 5* | 2* | 7* | ||

| O | 2 | 1 | 3 | |

| Total | 19 | 13 | 4 | 36 |

| 5* | 12* | 17* | ||

| Grand Total | 45 | 34 | 16 | 95 |

| 7* | 15* | 22* | ||

Fig. 12.

Bar graphs for frequency of handshape super‐types in Study 2.

5.4. Conclusions from the ASL dictionary study

Signs with ED‐handshapes conform strongly to the Lexical Drawing Principle (92.2% [59/64] of those in this dictionary obey it). Signs with SD‐handshapes mostly obey the principle but are more lax (67.9% [36/53] obey it). We defer our conjectures about why until Section 7.
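The two conformity rates follow directly from the Total rows of Table 4 (conforming signs versus asterisked, non‐conforming ones). A minimal Python sketch of that arithmetic; the names `TABLE4_TOTALS`, `conformity_rate`, and `summarize` are illustrative and not part of the study's materials:

```python
# Total rows of Table 4: (signs that conform, signs that do not conform)
# to the Lexical Drawing Principle, per handshape super-type.
TABLE4_TOTALS = {
    "ED": (59, 5),
    "SD": (36, 17),
}


def conformity_rate(conforming: int, violating: int) -> float:
    """Percentage of signs that obey the Lexical Drawing Principle."""
    return 100 * conforming / (conforming + violating)


def summarize(totals: dict[str, tuple[int, int]]) -> dict[str, str]:
    """Format each super-type's rate as 'conforming/total = xx.x%'."""
    out = {}
    for super_type, (ok, bad) in totals.items():
        out[super_type] = f"{ok}/{ok + bad} = {conformity_rate(ok, bad):.1f}%"
    return out


if __name__ == "__main__":
    for super_type, line in summarize(TABLE4_TOTALS).items():
        print(super_type, line)
```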

Now let us return to the Dimension Principle, which we reframe given our new organization of handshapes:

Dimension Principle (second approximation): If path movement is iconic in that it draws (part of) the signified entity, then ED‐handshapes should be used for two‐dimensional entities, whereas SD‐handshapes should be used for three‐dimensional entities.

To evaluate this hypothesis, we need to interpret it in a way that makes sense given the realities of entities in the world. Nearly all entities have three dimensions, but some are flat or “thin” 17 (like award ribbons, and even televisions, since the screen is what really matters to us). When drawing in the air, signers might treat such flat entities as two‐dimensional. Other entities are thicker (like planets and mountains, but also containers like boxes and baskets), and when drawing in the air, signers might treat these thick entities as three‐dimensional. In Appendix S8 we classify all the signs in Appendix S6 Table 1 according to whether the entity they signify is more likely (in our judgment) to be viewed as flat or thick. ED‐handshapes were used in 54 flat signs (85.7% of the ED‐handshape signs) and only nine thick signs (14.3%), whereas SD‐handshapes were used in only 12 flat signs (22.6% of the SD‐handshape signs) and 41 thick signs (77.4%). Thus, this study supports the Dimension Principle.
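The test of the Dimension Principle above reduces to row proportions in a 2 × 2 table of handshape super‐type by entity dimension. The Python sketch below uses the counts from the preceding paragraph; treating "most signs of a super‐type go with the predicted dimension" as a pass is our own simplifying assumption, not a criterion stated by the study:

```python
# Signs in the ASL dictionary study, by handshape super-type and by whether
# the signified entity was judged flat or thick (counts from the text).
COUNTS = {
    "ED": {"flat": 54, "thick": 9},
    "SD": {"flat": 12, "thick": 41},
}

# The dimension that the Dimension Principle predicts for each super-type.
PREDICTED = {"ED": "flat", "SD": "thick"}


def row_percentages(row: dict[str, int]) -> dict[str, float]:
    """Convert one row of counts into percentages of that row's total."""
    total = sum(row.values())
    return {dim: 100 * n / total for dim, n in row.items()}


def check_dimension_principle(counts, predicted) -> dict[str, bool]:
    """True for a super-type if most of its signs go with the predicted dimension."""
    result = {}
    for super_type, row in counts.items():
        pct = row_percentages(row)
        result[super_type] = max(pct, key=pct.get) == predicted[super_type]
    return result


if __name__ == "__main__":
    for super_type, row in COUNTS.items():
        pct = row_percentages(row)
        print(super_type, {dim: round(p, 1) for dim, p in pct.items()})
    print(check_dimension_principle(COUNTS, PREDICTED))
```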

Further conclusions from this ASL dictionary study that bear on the findings of one or both of the other two studies (Sections 4 and 6) are taken up in Section 7.

6. Cross‐linguistic lexical study

The findings of our shape‐drawing study held cross‐linguistically. To test whether the findings of our follow‐up dictionary study of ASL also hold cross‐linguistically, we conducted a second lexical study, this time across multiple languages.

6.1. The stimuli and coding

To look across languages, we used the spreadthesign.com dictionary, downloading videos in July 2018. 18 Our first task was to choose the lexical items to compare, since comparing entire dictionaries was beyond our capacities. We chose lexical items for which we expected signers might use the movement parameter to draw the signified entity. 19 We then grouped them according to whether they signify two‐dimensional (i.e., flat) or three‐dimensional (i.e., thick) entities, first using our own judgments and then checking these with three other hearing people (adults: one man and two women, all native speakers of American English). We found 15 signs for which these three people agreed with our judgments as to whether the entities signified by the English words were (thought of by them as) thick or thin. Eight of those words signified flat entities: baking pan, belt, door, face, sash, sheet, television, and window. The other seven signified thick entities: ball, banana, clam, elephant, hill, pipe, and tyre. 20

We added the sign moon because it posed an interesting question. We authors had classified the moon as thick, but our three hearing consultants classified it as flat, basing their classification on visual perception rather than on knowledge of the moon. Recall that in Appendix S8 we analyze moon crescent as “flat,” since people tend to think of the crescent moon only as they see it in the sky: a flat object up there. However, the moon as a whole differs from the crescent in that people talk about the moon's rotation and about astronauts and spacecraft landing on it and exploring its volcanoes and craters. So other people (unlike our three consultants, but like the authors of this article) might judge moon to signify a thick entity. Our predictions for handshape choice differ depending on how moon is classified. Further, how signers treat the sign moon can indicate whether perception or knowledge is more influential in classifying entities for the purpose of drawing (part of) them in sign languages. For the moment, then, we leave the classification of moon undetermined.

There are 34 sign languages for which there is a video for at least one of these 16 signs: sign languages used in Argentina, Austria, Belarus, Brazil, Bulgaria, China, Croatia, Cuba, Czech Republic, England, Estonia, Finland, France, Germany, Greece, Iceland, India‐English, Italy, Japan, Latvia, Lithuania, Mexico, Pakistan (labeled Urdu on the website), Poland, Portugal, Romania, Russia, Spain, Sweden, Syria, Turkey, Uganda, Ukraine, and the United States. Some languages had more than one variant for a sign. 21

Each of our 16 signs yielded between 14 and 30 video clips, for a total of 415 clips. Of these, 231 (55.7%) drew (part of) the signified entity. The remaining clips rendered the sign via other strategies (some iconic), like those seen in our shape‐drawing study.

6.2. Coding the data

The 231 pure‐drawing clips use a total of 14 handshapes, where we merge the various B‐handshapes. Sometimes a handshape begins and/or ends with contact of the selected fingers (see also Mandel, 1981); in one case (drawing sheet), F‐ changes to 5‐. These changes thus obey the Handshape Sequence Constraint (Sandler, 1987, p. 93), which limits handshape change in monomorphemic signs to handshape‐internal movement of precisely this sort (see also the revisions in Brentari, 1990). When useful, we mark a handshape that begins in its +contact/−contact counterpart with a preceding “→” and one that ends in its counterpart with a following “→”. Thus “→C” indicates that the handshape started as O‐ and then changed to C‐; “C→” indicates that it started as C‐ and then changed to O‐; and “→baby‐C→” indicates that it started as baby‐O‐, changed to baby‐C‐, and then changed back to baby‐O‐.
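The arrow convention is mechanical enough to state as a small function that expands a coded handshape into the sequence of handshapes articulated. The Python sketch below assumes a hypothetical counterpart table containing only the pairs named in the text (C‐/O‐ and baby‐C‐/baby‐O‐) and writes handshape labels with ASCII hyphens; it illustrates the notation and is not the study's coding tool:

```python
ARROW = "\u2192"  # the "→" used in the coding notation

# Hypothetical lookup of +contact/-contact counterparts; only the pairs
# mentioned in the text (C/O and baby-C/baby-O) are listed here.
COUNTERPART = {
    "C": "O",
    "O": "C",
    "baby-C": "baby-O",
    "baby-O": "baby-C",
}


def expand(code: str) -> list[str]:
    """Expand a coded handshape such as '→C', 'C→' or '→baby-C→' into the
    sequence of handshapes articulated over the course of the sign."""
    begins_in_counterpart = code.startswith(ARROW)  # leading arrow
    ends_in_counterpart = code.endswith(ARROW)      # trailing arrow
    core = code.strip(ARROW)                        # the coded handshape itself
    sequence = [core]
    if begins_in_counterpart:
        sequence.insert(0, COUNTERPART[core])
    if ends_in_counterpart:
        sequence.append(COUNTERPART[core])
    return sequence


if __name__ == "__main__":
    for code in (f"{ARROW}C", f"C{ARROW}", f"{ARROW}baby-C{ARROW}", "1"):
        print(code, expand(code))
```

A handshape with no arrows, such as 1‐, expands to itself.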

Ten of the handshapes in our cross‐linguistic study also appeared in our ASL dictionary study, so we classify those 10 as ED‐ or SD‐handshapes according to their behavior there. Three handshapes did not appear in our ASL dictionary study; we keep them in a separate list so that we can determine which type (ED‐ or SD‐), if either, they belong to. We also keep the F‐handshape separate from the others, since our ASL dictionary study led us to believe that F‐ is used primarily in ways more like classifier constructions than frozen lexical items (see also the comments at the end of Appendix S7). All the handshapes used for drawing in the cross‐linguistic study are listed by type in Table 5.

Table 5.

Drawing handshapes for the 34 languages

| Edge‐drawing | 1‐, baby‐C‐, (spread‐thumb)H‐, 1‐i‐, V‐ |

| Surface‐drawing | 5‐, all the various B‐, C‐, claw, O‐ |

| New | open‐F‐, S‐, 10‐ (drawing with thumb) |

| Classifier? | F‐ |

We included only signs with path movement. Some signs are compounds; for these we give information on only the relevant part of the compound (just as we did in the ASL dictionary study). Further details on coding appear in Appendix S9.

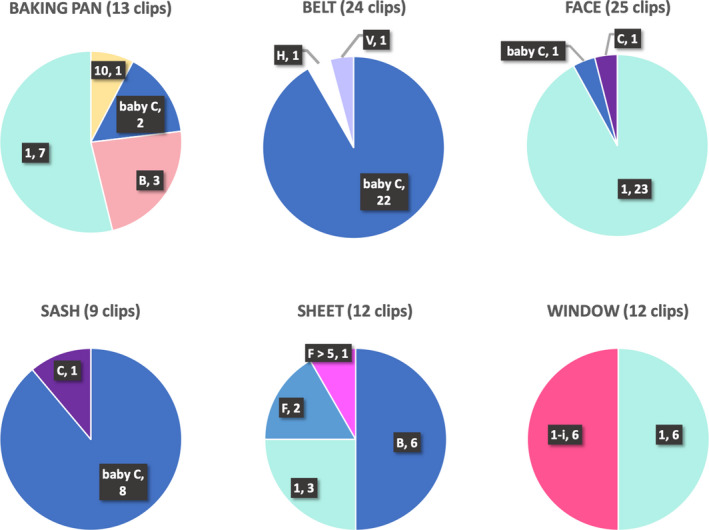

Of our 16 signs, four were never or rarely rendered purely via drawing: door, clam, television, banana. (Details appear in Appendix S10.) Of the remaining 12 signs, six are flat, five are thick, and moon remains undetermined. These 12 signs were rendered via drawing in several clips, with varying handshapes, as shown in the following pie charts. The total number of clips in the pie charts here is 224 (i.e., 231 minus the two drawings of clam, the two drawings of television, and the three drawings of banana described in Appendix S10). In Fig. 13 we see the percentages for the five signs signifying thick entities according to handshape used. In Fig. 14 we see the percentages for the six signs signifying thin entities according to handshape used. In Fig. 15 we see the percentages for moon according to handshape used.

Fig. 13.

Handshapes for five thick entities.

Fig. 14.

Handshapes for six flat entities.

Fig. 15.

Handshapes for moon.

To the 112 clips of thick entities in Fig. 13 we add five more (two for clam and three for banana), for a total of 117 clips. To the 95 clips of flat entities in Fig. 14 we add two more (both for television), for a total of 97 clips. Table 6 shows the distribution of handshapes by type of entity signified; it omits information on handshape changes (recall that all handshape changes make or break contact of the selected fingertips at the start and/or end of the sign). Table 6 covers all 231 clips and all 14 handshapes.

Table 6.

Handshapes used in 15 of our signs

| | | Edge‐drawing | | | | | | | | | Surface‐drawing | | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Entity type | Sign | 1 | baby‐C | H | 1‐i | V | 10 | open‐F | F | S | 5 | B | C | O | claw |

| Thick | Ball | 6 | 14 | ||||||||||||

| Banana | 1 | 2 | |||||||||||||

| Clam | 1 | 1 | |||||||||||||

| Elephant | 1 | 5 | 14 | 3 | |||||||||||

| Hill | 5 | 19 | 2 | ||||||||||||

| Pipe | 1 | 1 | 2 | 7 | 3 | 13 | 2 | ||||||||

| Tyre | 5 | 9 | |||||||||||||

| Undetermined | Moon | 15 | 2 | ||||||||||||

| Thin | Baking pan | 7 | 2 | 1 | 3 | ||||||||||

| Belt | 22 | 1 | 1 | ||||||||||||

| Face | 23 | 1 | 1 | ||||||||||||

| Sash | 8 | 1 | |||||||||||||

| Sheet | 3 | 3 | 6 | ||||||||||||

| Television | 2 | ||||||||||||||

| Window | 6 | 6 | |||||||||||||

| Total | | 44 | 55 | 3 | 6 | 1 | 1 | 2 | 10 | 3 | 5 | 42 | 38 | 2 | 19 |
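The clip totals cited for Table 6 and Figs. 13 to 15 (117 thick, 97 flat, and 17 for moon, 231 in all; 224 once banana, clam, and television are left out of the pie charts) can be recovered by summing each row of Table 6. A minimal Python sketch; because only row sums are needed, it records each row's cell values without assigning them to handshape columns (the data structure and names are our own):

```python
# Cell values of each row of Table 6, in order of appearance. Only the
# row sums are used, so the handshape column of each value does not matter.
TABLE6_ROWS = {
    "thick": {
        "ball": [6, 14], "banana": [1, 2], "clam": [1, 1],
        "elephant": [1, 5, 14, 3], "hill": [5, 19, 2],
        "pipe": [1, 1, 2, 7, 3, 13, 2], "tyre": [5, 9],
    },
    "undetermined": {"moon": [15, 2]},
    "flat": {
        "baking pan": [7, 2, 1, 3], "belt": [22, 1, 1], "face": [23, 1, 1],
        "sash": [8, 1], "sheet": [3, 3, 6], "television": [2],
        "window": [6, 6],
    },
}

# Signs left out of the pie charts (rarely rendered purely by drawing).
EXCLUDED_FROM_PIES = {"banana", "clam", "television"}


def clips_per_category(rows, exclude=frozenset()):
    """Total drawing clips per entity category, skipping any excluded signs."""
    return {
        category: sum(sum(cells) for sign, cells in signs.items() if sign not in exclude)
        for category, signs in rows.items()
    }


if __name__ == "__main__":
    all_clips = clips_per_category(TABLE6_ROWS)
    pie_clips = clips_per_category(TABLE6_ROWS, EXCLUDED_FROM_PIES)
    print("Table 6:", all_clips, sum(all_clips.values()))
    print("Pie charts:", pie_clips, sum(pie_clips.values()))
```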

6.3. Results

We now consider the results with regard to the Dimension Principle and the Lexical Drawing Principle.

6.3.1. Testing the Dimension Principle

Of the 109 (44 + 55 + 3 + 6 + 1) uses of ED‐handshapes, 82 were in signs signifying flat entities and only 12 were in signs signifying thick entities. Additionally, 15 of the 17 clips for moon used ED‐handshapes. We conclude that our consultants were right and we were wrong: moon is treated as a flat entity. It appears that, at least in this instance, visual perception trumps knowledge, a point to which we return in our conclusions. Thus, fully 97 of the 109 uses (89%) of ED‐handshapes were in signs signifying flat entities, and only 12 were in signs signifying thick entities. Of the 106 (5 + 42 + 38 + 2 + 19) uses of SD‐handshapes, 93 (88%) were in signs signifying thick entities and only 13 were in signs signifying flat entities (now counting moon among the flat signs).
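Reclassifying moon amounts to moving its 15 ED‐handshape clips into the flat column before computing proportions. A minimal Python sketch with the counts given in the paragraph above (the helper names are our own, and percentages are rounded to whole numbers as in the text):

```python
# Uses of ED-handshapes by entity type, with moon still kept separate.
ED_COUNTS = {"flat": 82, "thick": 12, "moon": 15}
# Uses of SD-handshapes, reported in the text with moon already counted as flat.
SD_COUNTS = {"flat": 13, "thick": 93}


def merge_moon_into_flat(counts: dict[str, int]) -> dict[str, int]:
    """Return a copy of counts in which any moon clips are added to 'flat'."""
    merged = dict(counts)
    merged["flat"] = merged.get("flat", 0) + merged.pop("moon", 0)
    return merged


def share(counts: dict[str, int], key: str) -> int:
    """Whole-number percentage of all clips in counts that fall under key."""
    return round(100 * counts[key] / sum(counts.values()))


if __name__ == "__main__":
    ed = merge_moon_into_flat(ED_COUNTS)
    print("ED flat:", ed["flat"], "of", sum(ed.values()), f"({share(ed, 'flat')}%)")
    print("SD thick:", SD_COUNTS["thick"], "of", sum(SD_COUNTS.values()),
          f"({share(SD_COUNTS, 'thick')}%)")
```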

We turn now to the four handshapes that we have not designated as either ED‐ or SD‐. 10‐ occurs only once, in a sign signifying a flat entity; the drawing is done with the thumb, the selected finger. S‐ occurs three times, always for pipe (tube/conduit), a sign signifying a thick entity. Since 10‐ has a single selected finger (the thumb) and S‐ is a handshape in which all fingers act the same, it appears that our physiological basis for classifying handshapes as ED‐ or SD‐ is correct: handshapes that select anything other than all fingers are edge‐drawing, and handshapes in which all fingers do the same thing are surface‐drawing. Accordingly, we fold the single example using 10‐ into the ED‐handshapes and the three examples using S‐ into the SD‐handshapes. We now find that 98 of the 110 clips (89%) that use ED‐handshapes are of signs that signify flat entities, while 96 of the 109 clips (88%) that use SD‐handshapes are of signs that signify thick entities.
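The classification rule and the resulting fold can be sketched in Python. The selected‐digit specifications below are a deliberately simplified, hypothetical stand‐in rather than a phonological feature system from the literature, and "all fingers" is read as the four fingers with the thumb ignored. Under those assumptions the rule reproduces the ED‐ and SD‐groupings of Table 5, places 10‐ with the ED‐handshapes and S‐ with the SD‐handshapes, and yields the updated counts reported above:

```python
# "All fingers" is read here as the four fingers; the thumb is ignored.
FOUR_FINGERS = frozenset({"index", "middle", "ring", "pinky"})
ALL_DIGITS = FOUR_FINGERS | {"thumb"}

# Hypothetical, simplified selected-digit specifications (illustration only).
SELECTED = {
    "1": {"index"},
    "baby-C": {"index", "thumb"},
    "H": {"index", "middle"},
    "1-i": {"index", "pinky"},
    "V": {"index", "middle"},
    "10": {"thumb"},
    "S": FOUR_FINGERS,
    "B": FOUR_FINGERS,
    "5": ALL_DIGITS,
    "C": ALL_DIGITS,
    "O": ALL_DIGITS,
    "claw": ALL_DIGITS,
}


def super_type(handshape: str) -> str:
    """'SD' if all four fingers are selected (and so act alike), else 'ED'."""
    return "SD" if FOUR_FINGERS <= SELECTED[handshape] else "ED"


def fold(tallies, new_clips):
    """Add clips of not-yet-classified handshapes to the super-type the rule assigns."""
    result = {st: dict(row) for st, row in tallies.items()}  # leave input untouched
    for handshape, clips in new_clips.items():
        target = result[super_type(handshape)]
        for dimension, n in clips.items():
            target[dimension] += n
    return result


# Counts from the text, with moon already counted as flat.
TALLIES = {"ED": {"flat": 97, "thick": 12}, "SD": {"flat": 13, "thick": 93}}
NEW_CLIPS = {"10": {"flat": 1}, "S": {"thick": 3}}

if __name__ == "__main__":
    folded = fold(TALLIES, NEW_CLIPS)
    for st, predicted in (("ED", "flat"), ("SD", "thick")):
        total = sum(folded[st].values())
        pct = round(100 * folded[st][predicted] / total)
        print(f"{st}: {folded[st][predicted]}/{total} ({pct}%) {predicted}")
```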

The exceptions in our data are not more frequent for any particular sign or for any particular language. In conclusion, it appears that the Dimension Principle holds cross‐linguistically.

6.3.2. Testing the Lexical Drawing Principle

We now consider what our data from the cross‐linguistic lexical study tell us about the Lexical Drawing Principle. Of our 231 drawing clips (117 in signs signifying thick entities and 114 in signs signifying flat entities), 12 use F‐ or open‐F‐, and all of these obey the Lexical Drawing Principle. But since we cannot rule out that these two handshapes reflect classifier uses, we remove them from our calculations 22 and return to them briefly in Section 7. We thus work with a total of 219 clips.