Abstract

We present a new, computationally efficient framework to perform forward uncertainty quantification (UQ) in cardiac electrophysiology. We consider the monodomain model to describe the electrical activity in the cardiac tissue, coupled with the Aliev‐Panfilov model to characterize the ionic activity through the cell membrane. We address a complete forward UQ pipeline, including both: (i) a variance‐based global sensitivity analysis for the selection of the most relevant input parameters, and (ii) a way to perform uncertainty propagation to investigate the impact of intra‐subject variability on outputs of interest depending on the cardiac potential. Both tasks exploit stochastic sampling techniques, thus implying overwhelming computational costs because of the huge amount of queries to the high‐fidelity, full‐order computational model obtained by approximating the coupled monodomain/Aliev‐Panfilov system through the finite element method. To mitigate this computational burden, we replace the full‐order model with computationally inexpensive projection‐based reduced‐order models (ROMs) aimed at reducing the state‐space dimensionality. Resulting approximation errors on the outputs of interest are finally taken into account through artificial neural network (ANN)‐based models, enhancing the accuracy of the whole UQ pipeline. Numerical results show that the proposed physics‐based ROMs outperform regression‐based emulators relying on ANNs built with the same amount of training data, in terms of both numerical accuracy and overall computational efficiency.

Keywords: artificial neural network regression, cardiac electrophysiology, reduced basis method, reduced order modeling, sensitivity analysis, uncertainty quantification

We rely on physics‐based ROMs to speed up sensitivity analysis and forward uncertainty quantification in cardiac electrophysiology. We account for the approximation error with respect to the full order model by means of inexpensive ANN regression models. We show quantitatively that the duration of the refractory period is a key factor for the sustainment of the reentry.

Abbreviations

- ANN

artificial neural network

- FE

finite element

- FOM

full order model

- GSA

global sensitivity analysis

- MLP

multilayer perceptron

- ODE

ordinary differential equation

- PDE

partial differential equation

- POD

proper orthogonal decomposition

- RB

reduced basis

- ROM

reduced order model

- UQ

uncertainty quantification

1. INTRODUCTION

High‐performance computing has enhanced, in the past decade, organ‐level numerical simulations of the heart, integrating complex multi‐scale phenomena – ranging from the subcellular to the tissue scale – with multi‐physics interactions, such as the electromechanical coupling. 1 Computational cardiology is nowadays a recognized tool of clinical utility regarding risk stratification, decisions' support and personalized medicine. It relies on physics‐based mathematical models and accurate discretization techniques: the former usually depend on several inputs, that can be either directly measured or indirectly estimated according to experimental data; the latter often requires extremely small mesh sizes and time steps.

Model inputs such as physical and/or geometrical parameters – and more generally speaking problem data – cannot be treated, in this context, as completely known quantities, because of both (i) intrinsic randomness affecting physical processes, and (ii) intra‐subject variability – that is, differences between individuals. 2 , 3 , 4 , 5 , 6 Variability and lack of knowledge are the two main causes 1 of uncertainty – this latter being commonly defined as the confidence by which a quantity can be assigned a value. Whenever interested to move towards data‐model integration, embedding uncertainties carried by subject‐specific features into the computational models, and quantifying their impact on the computed results are crucial steps, thus motivating the application of global sensitivity analysis (GSA) and uncertainty quantification (UQ) techniques to cardiovascular problems. 7

In this paper we focus on cardiac electrophysiology, that is, the description of the cardiac electrical activity, 8 , 9 , 10 , 11 using the monodomain system coupled with the Aliev‐Panfilov ionic model. Despite the input parameters of these models often have a direct physical interpretation, setting their values can be extremely troublesome. For instance, electrical conductivities are tensor fields depending on tissue anisotropy, induced by the presence of fibers and sheets 12 ; on the other hand, repolarization properties are not easily measurable and ionic models are extremely sensitive to the values of some of their parameters, thus making some pathological conditions hard to reproduce accurately. More generally speaking, uncertainty might affect any input parameter related to the geometrical configuration of the domain where the model is set, model coefficients, sources, initial and boundary conditions. As a result, quantifying the sources of variability and uncertainty in model inputs is of paramount importance to obtain reliable outputs of clinical interest, such as voltage or activation maps, through the numerical approximation of the cardiac models. The task of forward UQ is that of providing a statistical model consisting of a probability distribution of model outputs as a function of uncertain model inputs, and related statistics of interest. Sensitivity analysis can be used instead, prior to forward UQ, to identify model inputs that have either a dominant influence (and so should be measured as precisely as possible), or a mild effect (in which case uncertainty in those inputs may be neglected) on a given output. Even if this work focuses on UQ, we highlight that this latter is only one of the three pillars – verification, validation, and uncertainty quantification – providing the so‐called VVUQ framework, aiming at improving processes in computational science. Verification and validation of models in cardiac electrophysiology, however, are beyond the scope of the present work.

UQ and sensitivity analysis entail tremendous computational costs because of the need to rely on stochastic sampling (e.g., Monte Carlo or quasi Monte Carlo) techniques, 13 requiring a large number of queries to the state problem (given in our case by a nonlinear, time‐dependent, coupled PDE‐ODEs system). To improve the performances we can follow different strategies such as, (i) replacing computationally expensive high‐fidelity full‐order models (FOMs) with computationally inexpensive surrogate or reduced‐order models (ROMs), (ii) to improve sampling procedures exploiting, for example, (adaptive) sparse grid, 14 , 15 , 16 multi‐level or multi‐fidelity 17 Monte Carlo techniques 18 , 19 or (iii) to adopt different stochastic procedures, 20 such as stochastic Galerkin and stochastic collocation methods. 21 , 22 In this paper we focus on the first strategy for its ease of implementation and flexibility.

Among surrogate models, several options are available, such as (i) data fits or emulators, obtained via artificial neural network (ANN) regression, 23 polynomial chaos expansions 24 or Gaussian process regression, 25 that directly approximates the input–output mapping by fitting an emulator to a set of training data – that is, a set of inputs and corresponding outputs obtained from model runs; (ii) lower‐fidelity models, introducing modeling simplifications (e.g., coarser meshes or simplified physics, such as the Eikonal or reaction‐Eikonal models 26 ); and (iii) ROMs obtained through a projection process on the equations governing the FOM to reduce the state‐space dimensionality. Although typically more intrusive to implement, ROMs often yield more accurate approximations than data fits and usually generate more significant computational gains than lower‐fidelity models, requiring less training data. Since we are interested to deal with complex depolarization and repolarization patterns including sustained or non‐sustained reentries, we avoid using simplified physical models, because they might fail in describing such complex patterns. 26 , 27 We instead compare data fits and projection‐based ROMs when dealing with sensitivity analysis and forward UQ. In particular, we assess the effect of parameters (and related uncertainty) on tissue activation patterns and tissue refractoriness, leading to sustained and non‐sustained reentry waves. Despite several works have proposed computational models to enhance understanding of cardiac arrhythmias, focusing on both two‐dimensional geometries 28 , 29 , 30 , 31 , 32 and three‐dimensional, patient‐specific configurations, 33 , 34 , 35 , 36 uncertainty propagation in these applications has been seldom taken into account systematically; we mention, for example, Reference 6 for the estimation of the local tissue excitability of a cardiac electrophysiological model and Reference 37 for the quantification of the uncertainty about the shape of the left atrium derived from cardiac magnetic resonance images. On the other hand, several works have focused on the way uncertainty can be quantified and propagated within single‐cell models. 4 , 38

In this paper, we propose a novel, physics‐based computational pipeline to perform global sensitivity analysis and forward UQ in cardiac electrophysiology, aiming at investigating the effect of model parameters (related with both the stimulation protocol and the ionic activity) on complex patterns including spiral waves reentry, simulating the presence of tachycardia. Our framework features several novelties compared to existing literature:

First of all, we exploit efficient and accurate ROMs built through the reduced basis (RB) method for parametrized PDEs as physics‐based surrogate models to speed up our UQ analysis, rather than data fits or emulators. In this way, we reduce the computational complexity entailed by stochastic sampling approaches by relying on less expensive queries to the full‐order state problem, still preserving the fidelity of an accurate model such as the monodomain equation.

Moreover, we properly account for the approximation error with respect to the FOM – which can also be seen as a form of simulator uncertainty – by means of inexpensive ANN regression models. Then, we perform a variance‐based global sensitivity analysis, taking into account the simultaneous variation of multiple parameters and their possible interactions.

Furthermore, we propagate uncertainty from the most relevant inputs (among several parameters affecting the ionic model, the coefficients and data of the monodomain model) to outputs of interest related with the activation map.

Finally, we show that a purely data‐driven emulator of the input–output map, built through an ANN regression model, does not ensure the same accuracy reached by the proposed physics‐based ROM strategy.

The structure of the paper is as follows. In Section 2 we formulate the monodomain system coupled with the Aliev‐Panfilov ionic model, the high‐fidelity FOM, and an efficient ROM based on the POD‐Galerkin method. Moreover, we show how to take advantage of the proposed ANN and ROM strategies to perform variance‐based GSA and forward UQ. In Section 3 we assess the computational performances of the proposed methods on a two‐dimensional benchmark problem, where the parameters of interest are related to the stimulation protocol and the ionic activity. A discussion on the obtained numerical results, and a comparison with existing literature, are reported in Section 4, followed by some Conclusions in Section 5.

2. METHODS

After formulating the problem we focus on, we show how to solve it efficiently through an efficient ROM. Then, we recall some fundamentals in sensitivity analysis and forward UQ, showing how reduction errors propagate through the forward UQ process.

2.1. State problem, input uncertainties and outputs of interest

Mathematical models of cardiac electrophysiology describe the action‐potential mechanism of depolarization and repolarization of cardiac cells, which consists in a rapid variations of the cell membrane electric potential with respect to a resting potential. Indeed, the generation of ionic currents at the microscopic scale through the cellular membrane produces a local action potential, which is propagated, at the macroscopic scale, from cell to cell, in the form of a trans‐membrane potential. This latter is described by means of PDEs – the bidomain model, or the simplified monodomain model – suitably coupled with ODEs modeling the ionic currents in the cells. Several ionic models have been investigated in the past decades, either providing a phenomenological description of the action potential disregarding sub‐cellular processes (such as the Rogers‐McCulloch, Aliev‐Panfilov, Bueno‐Orovio models), or allowing explicit description of the kinetics of different ionic currents (see, e.g., 8 , 10 , 39 ).

Throughout the paper, will denote an input parameter vector, whose components might represent physical and/or geometrical features affecting the coupled ODE‐PDE model; will denote the parameter space. Here we are interested to quantify the uncertainty in the evolution of the electric potential for a range of physical parameters affecting both electric conductivities at the tissue level and the ionic dynamics at the cellular scale. The state problem is obtained by coupling the monodomain model for the (dimensionless 2 ) transmembrane potential u(μ) with a ionic model – here involving a single gating variable w(μ) – in a domain Ω ⊂ ℝd, d = 2, 3, representing, for example, a portion of the myocardium.

This results in the following time‐dependent nonlinear diffusion–reaction problem: for each t ∈ (0, T),

| (1) |

Here t denotes a rescaled time, I app is an applied current providing the (initial) activation of the tissue, while the reaction term I ion and the function g both depend on u and w, thus making the PDE and the ODEs system two‐ways coupled. The diffusivity tensor D depends on the fibers‐sheets structure of the tissue, and affects conduction velocities and directions. Among several possible choices of ionic models, we consider the Aliev‐Panfilov model, for which

| (2) |

the coefficients K, a, b, ε 0, c 1, c 2 are related to the cell.

2.2. High‐fidelity, full‐order model

We consider the Galerkin finite element (FE) method as high‐fidelity FOM. To this goal, problem (1) is first discretized in space using linear finite elements for the transmembrane potential; the number of degrees of freedom related to the spatial discretization is denoted by N h and corresponds in this case to the number of mesh vertices. The time interval [0, T] is partitioned in N t = T/Δt time steps, t (k) = kΔt, k = 0, …, N t, and a semi‐implicit, first order, one‐step scheme is then used for time discretization 41 ; the nonlinear term at each time t (k + 1) is then evaluated at the solution already computed at time t (k). At each time step t (k), k = 1, …, N t, a system of N h (independent) nonlinear equations must then be solved, arising from the backward (implicit) Euler method: given w 0(μ) = w 0(μ), solve

| (3) |

The so‐called ionic current interpolation strategy is used to evaluate the ionic current term, so that only the nodal values are used to build a (piecewise linear) interpolant of the ionic current. This yields a sequence in time of μ‐dependent linear systems,

| (4) |

where and denote the FOM vector representation of the transmembrane potential and the state variables, respectively, at time t (k). The vectors , provide instead the initial conditions. Here denotes the mass matrix, encodes the diffusion operator appearing in the monodomain equation, whereas encodes the applied current at time t (k + 1).

The major computational costs are entailed by assembling the terms I ion and g at each time step and by the solution of the linear system (4); indeed, extremely small spatial mesh sizes h and time steps Δt must be chosen to capture the fast propagation of sharp (and, possibly, μ‐dependent) moving fronts correctly, 29 , 42 , 43 thus yielding an extremely large dimension N h.

2.3. Reduced‐order models for parametrized systems

To speed up the solution of the state problem (1) and make both GSA and UQ feasible for the application at hand, we rely on the reduced basis (RB) method for parametrized PDEs. This technique performs a Galerkin projection onto low‐dimensional subspaces built from a set of snapshots of the high‐fidelity FOM, by, for example, the Proper Orthogonal Decomposition (POD) technique. We refer to the resulting projection‐based ROM as to POD‐Galerkin ROM. In this case, snapshots are obtained by FOM solutions calculated for different values of the parameters (selected through Latin hypercube sampling), at different time steps. Then, suitable hyper‐reduction techniques, such as the Discrete Empirical Interpolation Method (DEIM) 44 and its matrix version MDEIM, 45 allow us to efficiently handle nonlinear and parameter‐dependent terms. 46 , 47

For the sake of brevity, here we only sketch the main aspects involved in the RB approximation of the problem at hand. Regarding the PDE system (4), we assume that the RB approximation of the transmembrane potential at time t (k) is expressed by a linear combination of the RB basis functions,

| (5) |

where collects the (degrees of freedom of the) reduced basis functions. In the case of POD, is made by the first n singular vectors of the snapshot matrix .

After updating the state variables to its current value w (k + 1)(μ) at time t (k + 1) by solving (3), the Galerkin‐RB problem reads as:

where and . Since the μ‐dependence shown by these matrices is nonaffine, we rely on MDEIM to get an approximate affine expansion. Then, we can take advantage of DEIM to avoid the evaluation of the full‐order array and preserve the overall ROM efficiency. We thus approximate

once m ≪ N h μ‐independent vectors , 1 ≤ q ≤ m basis vectors have been calculated, from a set of N snap ⋅ N t snapshots ; the μ‐dependent weights are then computed by imposing m interpolation constraints. Basis vectors are computed by means of POD, 44 whereas the set of points (in the physical domain) where interpolation constraints are imposed are iteratively selected by employing the so‐called magic points algorithm. 48 , 49 The ionic term in the potential equation can be thenapproximated by

where and , with , being ℐ the set of m interpolation indices ℐ ⊂ {1, ⋯, N h}, with ∣ℐ ∣ = m. Note that the matrix is μ‐independent and can be assembled once for all. This way of proceeding also enhances the solution of the ODE system (3). Indeed, only m components ℐ1, …, ℐm must be advanced in time, thus resulting in a reduced ODEs system for the vector .

Finally, the ROM for the monodomain system (1) reads as: given , find such that u 0(μ), , and, for k = 0, …, N t − 1,

Despite several works have exploited POD‐Galerkin ROMs for the simulation of the cardiac function, 50 , 51 , 52 , 53 , 54 for the sake of computational efficiency here we consider a generalization of the usual POD approach, requiring the construction of local RB spaces, as proposed in Reference 47. In this respect, clustering algorithms, such as the k‐means algorithm, are employed, prior to performing POD, to partition snapshots (of both the solution to the parametrized coupled monodomain‐ionic model (1), and the nonlinear terms) into N c clusters, for a chosen number N c ≥ 1; then, a local reduced basis is built for each cluster through POD. 55

For instance, in the case of solution snapshots, we employ k‐means to partition the columns of S into N c submatrices in order to minimize the distance between each vector in the cluster and the cluster sample mean. In other words, the objective is to find:

before computing the POD basis. Here, are the so‐called centroids (i.e., the cluster centers) selected by the k‐means algorithm. Then, when solving the ROM, the local basis is selected at each time step k = 0, …, N t − 1 with respect to the current solution of the system by minimizing the distance between and the centroids, that is, . It is possible to show (see Reference 47) that this latter task can be performed inexpensively only relying on the ROM arrays. A similar procedure is then applied for the construction of local bases to treat nonlinear terms through the DEIM.

We highlight that more sophisticated bioelectrical activity models (e.g., ten Tusscher‐Panfilov, O'Hara‐Rudy, or others) with many state variables would not impact on the construction of the ROM dramatically. In those cases, the ROM would result even more efficient compared to the FOM, due to the large number of ODEs this latter would involve. This is due to the use of the ionic current interpolation, and to the fact that the DEIM only requires to evaluate ionic variables at a set of few, selected points in the domain, thus requiring the solution of few ODEs during the online stage.

2.4. Sensitivity analysis and forward uncertainty quantification

Hereon, we assume that μ is a vector‐valued random variable (or random vector), whose support is , being , i = 1, …, p, enabling us to parametrize uncertain inputs of the ODE‐PDE system; we denote by π(μ) the probability density function (pdf) of μ. For the sake of simplicity, we consider all the parameters normalized in the range [0, 1]. Therefore, the (approximated) solution of this latter system, u h(t; μ), is itself a random function of a random vector, besides spatial coordinates and time. In addition, we denote by some (random) output Quantities of Interest (QoI) we want to evaluate, which depends by the random input μ through the state variable u h.

Because of the dependence on u h, the pdf of y h cannot be determined in closed form; therefore, we need to draw samples from its distribution, and to compute statistics such as its expected value and its variance

With this aim, we rely on Monte Carlo (MC) methods, which provide an approximation to and Var(y h) exploiting a random sample {μ q}, q = 1, …, N mc, drawn from the distribution of μ, as follows:

| (5) |

We also need to compute conditional expectations and conditional variances when dealing with variance‐based sensitivity analysis, since the sensitivities of the output with respect to the parameters are measured by looking at the amount of variance caused by the parameter μ i, i = 1, …, p. Indeed, assume to fix the parameter μ i at a particular value , and let be the resulting variance of y h, taken over μ ∼i (all parameters but μ i). We call this a conditional variance, as it is conditional on μ i being fixed to , and can use it as a measure of the relative importance of μ i – the smaller , the greater the influence of μ i on the QoI. To make this measure independent of , we average it over all possible values , obtaining

| (6) |

which we also refer to as residual variance. According to the law of total variance (or variance decomposition formula),

| (7) |

The first term at the right hand side is the so‐called explained variance, that is, the variance (with respect to μ i) of the conditional expectation

that is,

| (8) |

This term thus represents the reduction of the variance in the output QoI due to the knowledge of μ i. We have denoted by the conditional pdf of μ given μ i, defined as , having assumed that the parameter components μ 1, …, μ p are independent; here π i(μ i) denotes the marginal pdf of μ i. Also to compute the conditional expectation and its variance we will rely on MC methods, see Section 2.4.1.

2.4.1. Variance‐based global sensitivity analysis

Sensitivity analysis quantifies the effects of parameter variation on the output QoI, providing a criterium to rank the most influential input parameters. 56 In this work we consider a variance‐based global sensitivity analysis (GSA) which describes the amount of output variance generated from the variation of any single parameter, and also from interactions among parameters. 57 In this setting, input parameters ranking is based on which input, if fixed to its true value, yields the largest expected reduction in output QoI uncertainty. Compared to the elementary effect method, which computes the effect associated with changes in the ith parameter by changing one parameter at a time, variance‐based GSA enables us to take into account interactions among parameters on the output QoI. While several applications of the elementary effect method related to cardiac electrophysiology can be found in literature, only few works have exploited variance‐based GSA methods in this context. 3

In variance‐based GSA, the sensitivities of the output with respect to the parameters are measured in terms of the variance caused by each parameter μ i. According to definitions (6) and (8), the first‐order sensitivity index (or first Sobol index) of μ i on y h can be defined as the ratio between the explained variance (by μ i) and the total variance:

| (9) |

This quantity measures the effect (on the variance of the output QoI) of varying μ i alone, averaged over variations of the remaining input parameters, normalized over the total variance of y h. Hence, S i enables to determine which parameter μ i, i = 1, …, p, leads on average to the greatest reduction in the variance of the output y h. If the total variance of y h cannot be explained by superimposing the first‐order effects – that is, if – interactions among parameters are present. In this case, Var(y h) can be decomposed through the so‐called ANOVA decomposition into first‐order effects and interaction effects, which are used to construct the interaction indices

between any couple (μ i, μ j) of parameters. However, evaluating all possible interaction indices S i,j, i, j = 1, …, p would become soon impractical even for moderate values of p. It is then preferable to construct a total effect (or total sensitivity) index, given by

To derive a direct formula for avoiding the calculation of higher‐order effects due to interactions, we can consider again the variance decomposition formula (7), this time rewritten as follows:

Indeed, the residual quantity

| (10) |

is the remaining variance of y h that would be left if we could determine the true values of μ j for all j ≠ i. The total effect (or total sensitivity) index is then obtained by dividing the residual quantity (10) by the total variance Var(y h):

| (11) |

The total effect index (11) is much more informative than the first‐order index (9), except when there are no interaction effects, in which case they coincide. Large values of correspond to influential parameters μ i for the output QoI; instead, if , μ i is a non‐influential parameter and can be fixed to any value in its range without affecting the value of Var(y h).

We rely on the so‐called Sobol method, 58 a quasi MC method based on Sobol sequences of quasi‐random numbers, to numerically approximate the first‐order and total effect indices (9)–(11). Given the desired sample size N s > 0 of the MC estimates,

generate a N s × 2p matrix of numbers (where N s is the Sobol sequence sample size), obtained as input realizations from a Sobol’ quasi‐random sequence (through, e.g., the Matlab function sobolset);

define two matrices each containing half of the samples

-

3.

construct p matrices , i = 1, …, p, using all columns of B except the ith column taken from A

-

4.

compute the output QoI for all the vectors of parameters given by the rows of A, B and C i

The results are respectively p + 2 vectors of output QoIs y A, y B and of dimension N s, which can be employed to compute the following MC estimates of the first‐order sensitivity and total‐effect indices S i and , i = 1, …, p 57 :

| (12) |

where is the (sample) mean of the components of y A, and

| (13) |

The main drawback of this procedure is the need of evaluating the output QoI (and thus solving the state system) (p + 2)N s times, to compute all the indices. Since N s must be large enough to minimize the statistical error generated by MC sampling, surrogate or reduced order models are necessary to avoid repeated queries to the FOM, and make this procedure feasible.

2.4.2. Uncertainty propagation

The goal of uncertainty propagation is to quantify the impact of input uncertainties on the output QoI y h(μ), by computing the empirical distribution of y h through sampling techniques, or some statistics – its mean and its variance being the most common indicators. Regarding this latter task, MC sampling 13 represents the standard approach 3 : a large number N mc of independent samples are drawn from π(μ), then the output QoI is evaluated yielding the sample , to approximate the expected value and the variance according to (5). This approach has been successfully adopted in a variety of applications, but suffers from slow performance in terms of convergence rate: since the statistical error scales as , a large number N mc of samples is required. Instead of enhancing MC convergence by relying on common variance reduction techniques 64 or more recent multi‐level Monte Carlo, 18 , 19 we enhance the MC sampling techniques by replacing the high‐fidelity FOM with surrogate models, and still relying on sufficiently large sample sizes N mc.

2.5. Reduced‐order and ANN‐based models for uncertainty quantification

To speed up the MC sampling for both variance‐based GSA and forward UQ, we introduce two surrogate models:

a physics‐based surrogate model consisting of a ROM built though the RB method;

a data‐driven emulator of the input–output map μ ↦ y h(μ), built through an ANN regression,

and compare their performances. In the case a ROM is used, the high‐fidelity FOM solution u h(t; μ) of the state problem is replaced by a cheaper yet accurate approximation u n(t; μ) built through the RB method, as shown in Section 2.3. As a result, is the resulting output QoI, which depends by the random input μ through the ROM approximation u n(t; μ). Hereon, we will refer to y n as to the ROM output QoI.

Regarding GSA, the calculation of the indices S i and , i = 1, …, p through the Sobol method requires the computation of the ROM output QoI for all the vectors of parameters given by the rows of the matrices A, B and C i, i = 1, …, p, see Section 2.4.1. The resulting vectors y n,A, y n,B and , i = 1, …, p will then replace y A, y B and , respectively, in the formulas (12) and (13), thus enabling a more efficient evaluation of S i and , i = 1, …, p.

On the other hand, when dealing with uncertainty propagation, empirical distributions of the output QoI, as well as statistics like the expected value and the variance, will be built by sampling y n instead of y h. For instance, given a set of random samples , the expected value and the variance of the ROM output QoI will be estimated, respectively, by

Alternatively, we could directly replace the output QoI y h(μ) by the prediction y MLP(μ; θ *) of a NN regression model, built by training an artificial neural network which emulates the input–output map μ ↦ y h(μ). In this work we focus on the multilayer perceptron (MLP), which is a feedforward neural network such that there are no cycles in the connections between the nodes. A MLP is made by a set of hidden layers (each layer being an array of neurons) and an output layer; between each couple of layers, a nonlinear activation function is applied. 23 Due to its form, a MLP can be viewed as a forward map from the input to the output, which depends on a set of parameters θ, namely a set of weights and biases; these latter are estimated during the training phase, starting from a training set , formed by inputs and output QoIs evaluated at each , where is a selected training sample of cardinality .

During the training phase, the optimal weights and biases θ * are determined by minimizing a (mean squared error) loss function given by the sum of the squared misfit between the target output y h and the output y MLP predicted by the MLP:

The minimization of the loss function is performed through iterative procedures, such as the stochastic gradient descent, the L‐BFGS method, or the so‐called ADAM optimizer, to mention the most popular options, exploiting mini‐batch learning. 23 Once trained, the network is then used during the testing phase to provide evaluations of the output QoI for any . For instance, given a set of random samples , the expected value and the variance of the MLP output QoI will be estimated, respectively, by (omitting the dependence on θ * in the expression of y MLP)

We highlight that when performing UQ through ANN‐based surrogate models, reliability and accuracy of the results strongly depend on the quality and amount of training data. We will compare, in the following sections, a projection‐based ROM (POD‐Galerkin ROM) and a MLP emulator, in terms of training costs, efficiency and accuracy of computed indices.

Another option to enhance the evaluation of those indices would be to rely on a multi‐fidelity framework, where models of different fidelity (such as, e.g., FOMs and ROMs) are combined to compute sensitivity analysis and uncertainty measures, as shown in Reference 65. However, multiple queries to a FOM in cardiac electrophysiology would be extremely demanding, thus compromising the overall performance of the methodology. For this reason, we still rely on a crude MC approach, exploiting an offline‐online splitting, to avoid to query the FOM multiple times when sensitivity indices (or uncertainty measures) are computed.

2.6. Reduced‐order error propagation

A relevant issue, arising when either MLP emulators or ROMs are exploited for the sake of sensitivity analysis and forward UQ, is related to the propagation of approximation errors, that is, the error between the FOM output QoI and the MLP or ROM output QoI. Approximation errors can also be seen as a form of model uncertainty, see, for example, Reference 66. As a matter of fact, neglecting this additional error source might easily yield biased sensitivity indices or skewed distributions of the output QoIs.

wHere we focus on the physics‐based POD‐Galerkin ROM since it proves to be efficient and, compared to the MLP emulator, provides an approximation of the whole potential field – rather than the single output QoI – whose accuracy can be more easily controllable. Indeed, ROM error on the potential field – and, ultimately, the error on the output QoI – can be related to the neglected POD modes, ordered by decreasing importance. To enhance the ROM accuracy and correct the possible output bias, we equip the ROM with a suitable error surrogate. In this way, we expect to improve the overall accuracy of the complete UQ workflow. In this Section we analyze the effect that the ROM error on the output QoI y h(μ) − y n(μ) has on uncertainty indices such as the output QoI expected value and its variance; indeed, these quantities are also relevant when dealing with the evaluation of the sensitivity indices. Further details about the impact that the use of a surrogate model such as a ROM has on uncertainty indices can be found, for example, in Reference 67.

In particular, we introduce a new, ANN‐based surrogate model to correct the ROM output QoI, so that the impact of the ROM error y h(μ) − y n(μ) on the output QoI expected value and its variance is mitigated. Once the ROM has been built, we replace the ROM output QoI y n(μ) by the prediction of a NN regression model, obtained by training an artificial neural network which emulates the map (μ, y n(μ)) ↦ y h(μ). Also in this case, we rely on a MLP architecture, whose parameters (that is, weights and biases) are denoted by θ c. These latter are estimated during the training phase, starting from a training set , formed by FOM and ROM output QoIs evaluated at each , where is a selected training sample of cardinality . Hence, after building the ROM, we compute both the ROM and the FOM output QoIs at N offline,c points in the parameter space, and minimize the following loss function:

Hereon, we refer to as to the corrected ROM output QoI and, for the sake of notation, we omit the dependence of y n,c on θ c. This procedure can be seen as a more general way to perform a correction of the ROM output, than the (additive) ROM error surrogate model proposed by the authors in Reference 68.

Let us now first consider the error on the expected value of the output QoI,

which can be expressed as the combination of two terms. The former is the expected value of the ROM error on the output QoI – that is, the bias introduced, on average, on the output QoI when replacing the FOM with the ROM – and depends on the ROM accuracy; the latter is the statistical error, depending exclusively on the MC sample size N mc.

Hence, provided that the corrected ROM output is such that

| (14) |

for a given tolerance , the overall error between the expected value of the output QoI and its MC approximation relying on the corrected ROM output can be bounded as follows:

On the other hand, the overall error between the variance of the output QoI and its MC approximation relying on the corrected ROM output is given by

The approximation error can be further decomposed as

where, according to Cauchy‐Schwarz inequality, the last term can be bounded as

so that

Hence, provided that the corrected ROM output is such that

| (15) |

for a given tolerance , we obtain that the overall error between the variance of the output QoI and its MC approximation relying on the corrected ROM output can be bounded as follows:

Therefore, the purpose of correcting the ROM output QoI is to ensure that assumptions (14) and (15) are verified, and in particular that

so that approximation errors are as small as possible. Assumptions (14) and (15) are crucial for both sensitivity analysis 4 and uncertainty propagation, to mitigate the effect of approximation errors. Note that an estimate of and Var(y h − y n,c) can be obtained by directly inspecting the performance of the ANN on an additional test set during the training procedure of the ANN which emulates the map (μ, y n(μ)) ↦ y h(μ) and returns y n,c(μ).

3. NUMERICAL RESULTS

To show the effectivity of the proposed strategy, in terms of both efficiency and accuracy, we consider a modified version of the pinwheel experiment proposed by Winfree, 69 consisting of a first rightward propagating planar wave generated by exciting the entire left edge of a square portion of the tissue (S1), followed by a second stimulus (S2) at the center of the square. The effect of the S2 stimulus depends on the following parameters:

‐. the time interval μ 1 = t 2 ∈ [480,520] ms, between S1 and S2 stimuli;

‐. the radius μ 2 = r ∈ [2.5,8.5] mm of the circular region in which S2 is applied;

‐. the tissue recovery properties, modeled by the coefficient μ 3 = ε 0 ∈ [0.005,0.02] appearing in (2).

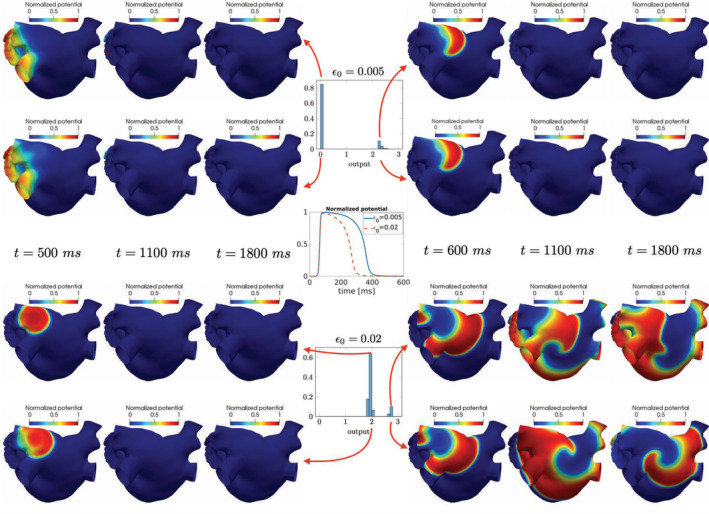

Three different scenarios might then arise as possible outcomes (see Figure 1):

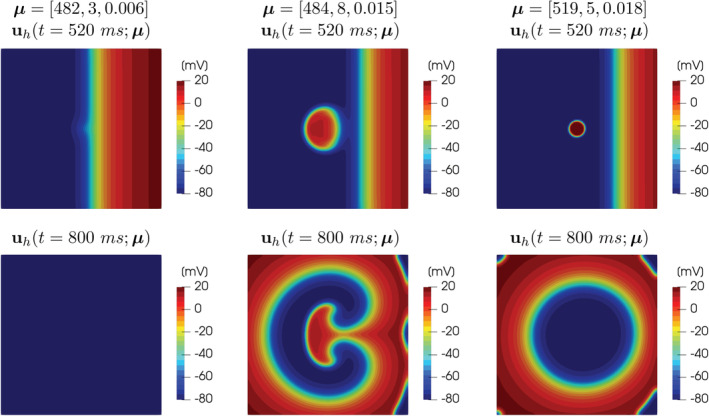

FIGURE 1.

FOM solutions of the monodomain system coupled with the Aliev‐Panfilov ionic model for different values of the parameters, at t = 520 ms and t = 800 ms. The great variability of the solution with respect to the parameters μ 1, μ 2, μ 3 is clearly visible: from left to right, tissue refractoriness, sustained reentry and non‐sustained reentry cases are reported

tissue refractoriness: S2 is delivered when the tissue is still in a refractory state and is not yet excitable (see Figure 1, left);

sustained reentries: S2 is delivered in the vulnerable window, such that the propagation of the circular depolarization wave is blocked rightward when it encounters refractory tissue, but not leftward where the tissue has already recovered its excitability after the passage of the first wave. This mechanism results in two reentrant circuits around each singularity, forming the so‐called figure of eight (see Figure 1, center);

non‐sustained reentry: S2 is delivered after the vulnerability window, such that the propagation of the circular depolarization wave is not blocked rightward, but only slowed down. This mechanism results in a second activation of the tissue, however without producing reentrant circuits (see Figure 1, right).

In order to construct a classifier of this three possible outcomes, we consider as output QoI the deviation between the activation map from a reference value,

Here AT(x; μ) is the activation time, which provides information about the last recorded time when the electric wavefront has reached a given point of the computational domain. Indeed, given the electric potential , the (unipolar) activation map at a point x ∈ Ω is evaluated as the minimum time at which the AP peak reaches x; from a practical standpoint, we can evaluate the activation time as the time at which the time derivative of the transmembrane potential is maximized, that is,

being (T 1, T 2) the latest time depolarization‐polarization interval in which the potential exceeded a certain threshold.

In our case, AT ref is a reference activation map, obtained for μ 3 = 0.0125, when no S2 stimulus is delivered. By construction, values of the output QoI y(μ) ≈ 0 indicate that the tissue is refractory; instead, reentries are induced when y(μ) > 0. In our numerical tests, we observed that for values of the output close to 2 the reentry was not sustained, while for values of the output larger than 2.5 the reentry was sustained. Figure 2 shows a summary of the considered input–output relationship.

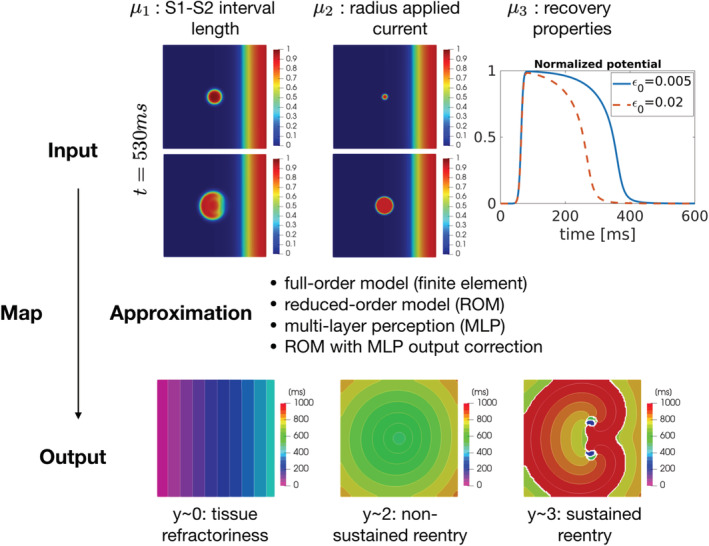

FIGURE 2.

Graphical sketch of the input–output map. Input parameters (top) are related with the time interval between S1 and S2 stimuli (μ 1), the radius of the circular region in which S2 is applied (), and the tissue recovery properties, modeled by the coefficient ε 0 affecting the ionic model (μ 3). The output quantity of interest y(μ) represents the deviation of the activation map from a reference value, evaluated as a scalar index and acts as a classifier: small values of the output are related with tissue refractoriness, intermediate values with non‐sustained reentry, large values with sustained reentry. Being dependent of the transmembrane potential u(μ), the output y(μ) can be evaluated exploiting one of the proposed models: a reduced order model (ROM), an ANN‐based emulator (MLP), a ROM corrected with an ANN‐based emulator for the ROM error (ROM+MLP)

All computational timings shown below are obtained by performing calculations on an Intel(R) Core i7‐8700 K CPU with 64 Gb DDR4 2666 MHz RAM using Matlab(R). The physical coefficients considered for the monodomain (1) and the ionic (2) models are reported in Table 1.

TABLE 1.

Values (taken from Reference 70) of the model physical coefficients employed in the numerical simulations

| K | a | c 1 | c 2 | b | Dii(mm2/ms) |

|---|---|---|---|---|---|

| 8 | 0.01 | 0.2 | 0.3 | 0.15 | 0.2 |

3.1. Full order model

We introduce on a square domain of size (0, 10) × (0, 10) cm2 a structured triangular grid made by 20,000 triangular elements, and N h = 10, 201 vertices, resulting by choosing a maximum element size equal to h = 1.0 mm and consider over the time interval [0, 1000] ms a time step equal to Δt = 0.5 ms. We build the FOM (3) and (4) through the finite element method, using linear finite elements; a single query to the FOM takes 2 min 25 s to be computed.

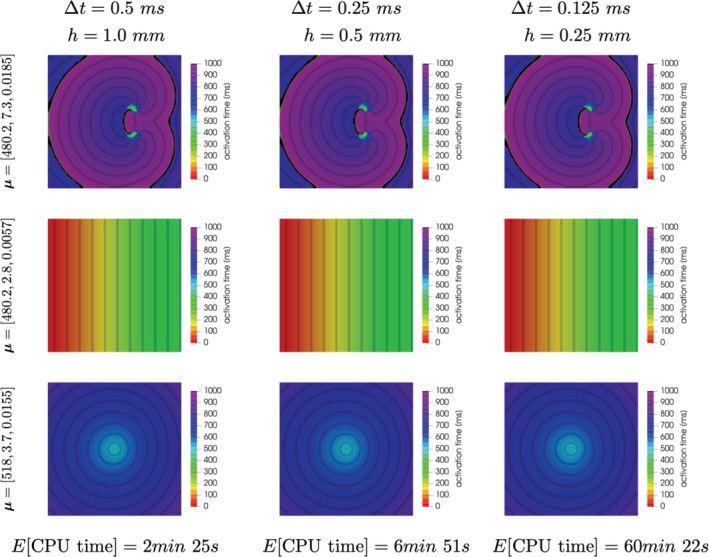

In this respect, a comment about mesh resolution is in order. Indeed, we compare the activation times computed for different choices of (h, Δt) = (1.0 mm,0.5 ms), (0.5 mm,0.25 ms), and (0.25 mm,0.125 ms). Results obtained for different choices of the parameters (representative of the different scenarios that will be discussed later) are reported in Figure 3. Differences are almost negligible in terms of activation times among the three different setting, thus motivating the use of a discretization with (h, Δt) = (1.0 mm,0.5 ms) for the case at hand. However, we highlight how this choice could reveal less appropriate when dealing with cardiac electrophysiology on realistic geometries – and, more importantly, when using realistic cell models and tissue anisotropic and inhomogeneous conductivities; here, our focus is on a benchmark problem involving a simple two‐dimensional slab. Another reason motivating our choice is the need to compare the results obtained with the proposed reduced order (or surrogate) models with the ones provided by the FOM. Indeed, the computational cost entailed by a single query to the FOM would increase to 6 min 51 s (resp., 60 min 22 s) when considering (h, Δt) = (0.5 mm,0.25 ms) (resp., (h, Δt) = (0.25 mm,0.125 ms)), thus making the numerical comparisons impracticable.

FIGURE 3.

Activation maps computed from the FOM solution for different choices of (h, Δt) = (1.0 mm,0.5 ms), (0.5 mm,0.25 ms), and (0.25 mm,0.125 ms) (from left to right) and different parameter values describing sustained reentry, tissue refractoriness, or non‐sustained reentry (from top to bottom)

3.2. Physics‐based ROMs

We then assess the computational performance and the accuracy of the ROM built by considering the k‐means algorithm for the construction of local ROMs in the state space, according to the strategy proposed in Reference 47. We start from a training sample of 48 parameter vectors selected through Latin hypercube sampling to compute N c = 6 centroids and the transformation matrices for the PDE solution on each cluster. With a prescribed POD tolerance of 10−2, we obtain a maximum (over the N c clusters) number of basis functions of 205 and a minimum of 51. Then, DEIM is used to approximate the nonlinear term using a training sample of 66 random parameter vectors and exploiting the same local reduced basis structure. In this case, we prescribed a tolerance of 10−6, resulting in a maximum (over the clusters) number of basis functions of 2585 and a minimum of 950.

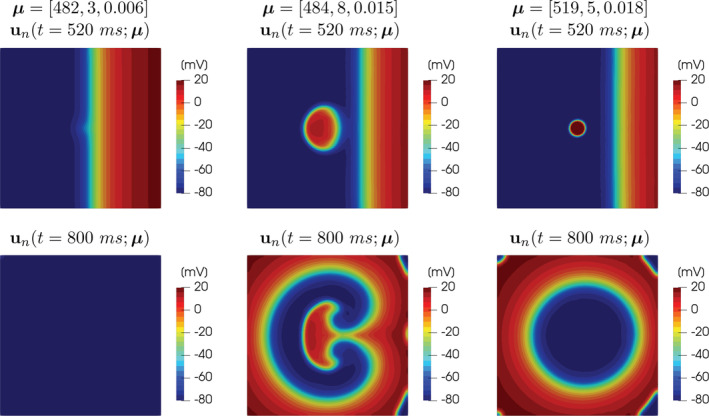

Compared to the FOM, the ROM computes a solution to the problem for any new selected parameter instance with a speed‐up of 25 times; see Figure 4 for the comparison between FOM and ROM solutions. The resulting ROM output QoI y n(μ) approximates the FOM output QoI y h(μ) with a mean square error of 0.0308 evaluated over a test set formed by 300 random parameter samples.

FIGURE 4.

ROM solutions of the monodomain system coupled with the Aliev‐Panfilov ionic model for different values of the parameters, at t = 520 ms and t = 800 ms

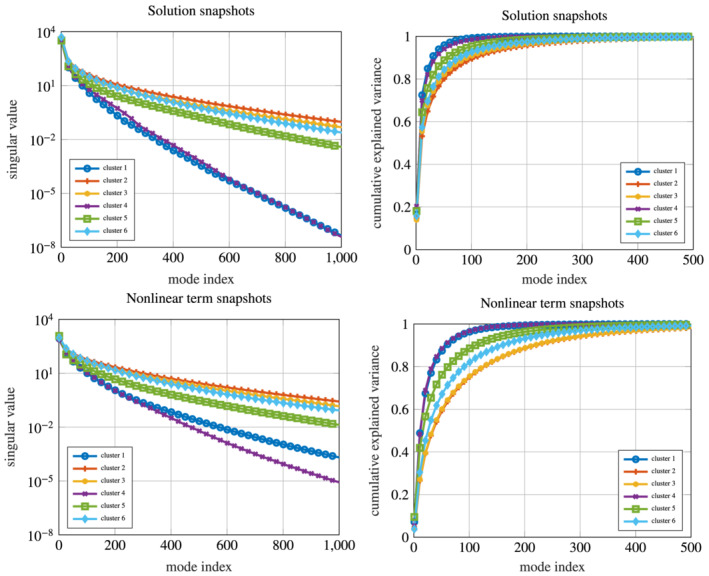

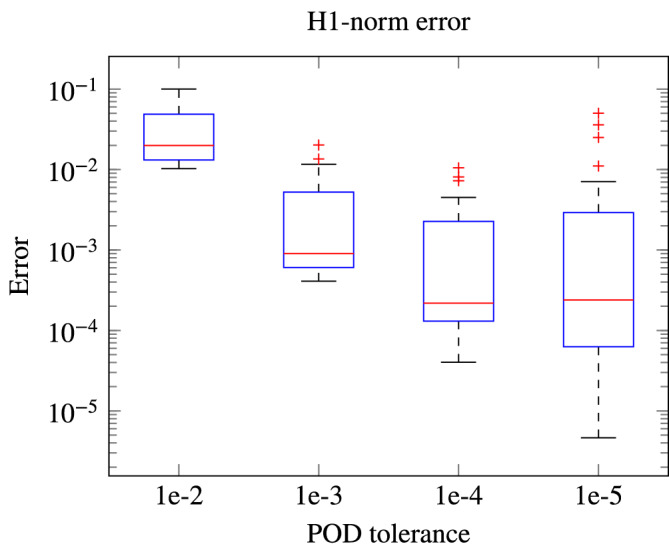

To have a better insight on the ROM accuracy, we report in Figure 5 the singular values of both the solution and the nonlinear term snapshots set, after their partitioning into N c clusters. The decay of the singular values is directly related with the projection error of the snapshots onto the low‐dimensional subspace spanned by the first singular vectors; this can be seen (up to some constant) as an estimate of the error between the ROM approximation and the FOM solution. Moreover, we report the errors between the ROM and the FOM solutions, obtained on a test set of dimension N test = 25 sampled from , for decreasing POD tolerances, in Figure 6, reflecting an increasing accuracy of the ROM for smaller POD tolerances, and showing an almost exponential convergence of the error.

FIGURE 5.

Singular values decay and cumulative expressed variance for the solution (top) and the nonlinear term (bottom) snapshot sets, after their clustering obtained through the k‐means algorithm. The query to extremely accurate ROM might be computationally demanding due to the high number of retained POD modes

FIGURE 6.

Relative errors on solution obtained with ROMs of increasing accuracy. For decreasing POD tolerances, ROMs of increasing dimensions allow us to obtain decreasing error norms on the solution. The (H 1(Ω)) computed norm for the solution error sums errors on both the solution and its spatial derivatives. Note that the ROMs with POD tolerances 10−5 (resp., 10−4, 10−3) are 5 (resp., 4, 1.7) times slower than the ROM with POD tolerance 10−2

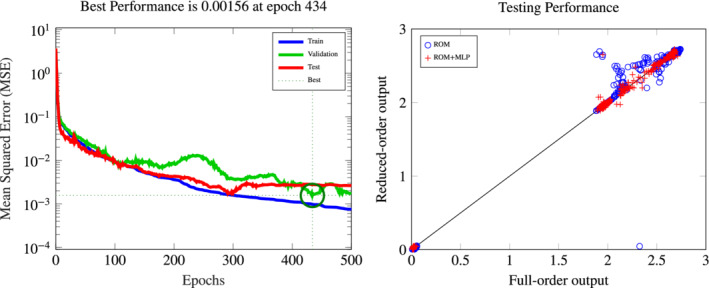

To correct the ROM output QoI through a NN regression model, we adopt a MLP formed by two hidden layers with 12 nodes each and sigmoids activation functions; the corrected ROM output QoI is denoted by y n,c(μ). The net is trained using the BFGS quasi‐Newton method to reproduce the FOM output QoI starting from a training set of output values. In particular, we precompute 2000 output realizations from the FOM and the ROM; the first 1400 realizations are used for the MLP training, 300 are used for the validation and the last 300 for testing (so that N offline,c = 1400 + 300 = 1700). In this setting the best performance on the validation set is reached at epoch 434 with an MSE of 1.56 ⋅ 10−3 and a MSE of 2.7 ⋅ 10−3 on the test set (see Figure 7).

FIGURE 7.

MLP construction of the correction of the ROM output QoI. Left: convergence of the training in terms of MSE over the epochs on the training, validation, and test sets. Right: testing performance of the MLP correction of the ROM output

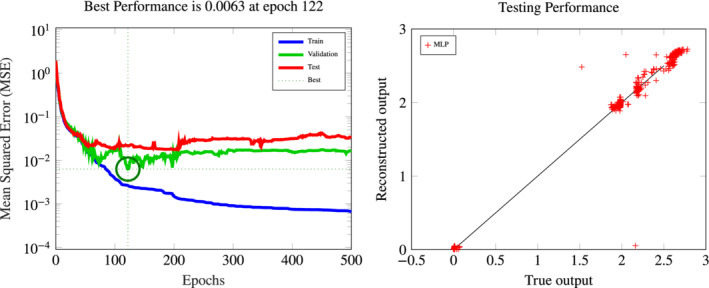

3.3. Black‐box MLP model

As alternative to the (corrected) ROM output, we also consider the prediction y MLP(μ) of a NN regression model, built by training a net which emulates the input–output map μ ↦ y h(μ). Also in this case, we consider a MLP formed by two hidden layers with 12 nodes each and sigmoids activation functions. The training dataset is formed by input–output samples ; also in this case, the training set (including validation points) has cardinality . The absence of the ROM output QoI as additional input to the net affects the accuracy of the output QoI approximation: the best performance on the validation set is reached at epoch 122 with an MSE of 6.3 ⋅ 10−3 and a corresponding MSE of 2.8 ⋅ 10−2 on the testing set (see Figure 8). Computational costs related to the evaluation of the MLP output QoI are negligible: for each new , the computation of the output requires only a small number of operations, since there are only 12 neurons per layer.

FIGURE 8.

MLP construction of the ANN‐based input–output surrogate. Left: convergence of the training procedure in terms of MSE over the epochs on the training, validation, and test sets. Right: testing performance of the MLP output

However, the computational bottleneck arising when training this MLP model is dataset generation, which requires 1700 FOM queries. Such amount of FOM evaluations is feasible in this setting, but would be out of reach when dealing with patient‐specific 3D simulations with finer meshes and smaller time steps. On the other hand, as the number of training samples decreases, we observe an increase in the MSE error on the test set (see Figure 9) – hence, a MLP surrogate model can be reliable, for the case at hand, only provided it has been trained on a sufficiently large dataset. Indeed, if we consider only N offline = 100 training samples, the error is an order of magnitude larger than the one given by the same MLP model trained on N offline = 1700 data. On the other hand, the information provided by a physics‐based ROM helps in improving, by at least one order of magnitude, the accuracy of the black‐box MLP, hence giving the possibility to use also small samples for the MLP training (and, as a consequence, to reduce the cost of the training phase dramatically).

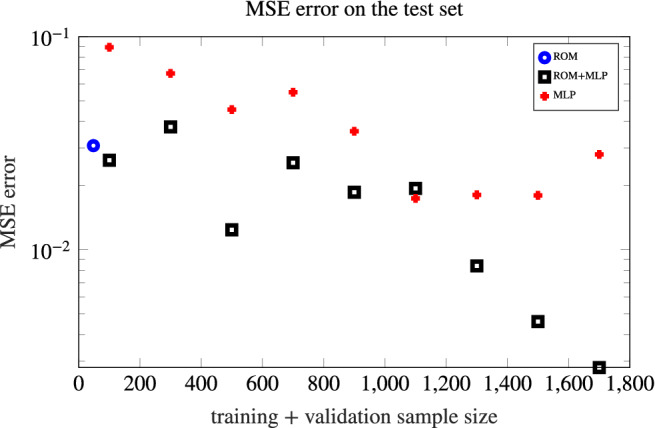

FIGURE 9.

Accuracy of the MLP and ROM+MLP models in terms of mean squared error (MSE) over the test set, plotted as a function of the size N offline of training+validation sample. The ROM+MLP is more accurate than the MLP (in terms of MSE error), and definitely outperforms the MLP for N offline > 1000. The MSE error provided by the ROM is also reported

From Figure 9 we also highlight that the ROM+MLP is more accurate than the MLP (in terms of MSE error), and definitely outperforms the MLP for N offline > 1000. Hence, provided a huge snapshots set from the FOM is available, the ROM+MLP strategy (involving a physics‐based ROM) is preferable with respect to the MLP (which is purely data‐driven), for the same online computational cost. Moreover, keeping the (offline) computational effort comparable, using a MLP to obtain a corrected ROM output, rather than the output itself, is a better option. On the other hand, compared to the ROM, a corrected ROM provides significant improvements only provided the number of FOM solutions used for its training is sufficiently large.

3.4. Sensitivity analysis

We now perform the variance‐based GSA for the case at hand, using the Sobol’ method with N s = 104, comparing the following options for the input–output map:

the FOM output QoI μ ↦ y h(μ);

ROM, the ROM output QoI μ ↦ y n(μ);

MLP (100), the MLP output QoI μ ↦ y MLP(μ), with N offline = 100;

MLP (1700), the MLP output QoI μ ↦ y MLP(μ), with N offline = 1700;

ROM + MLP (100), the corrected ROM output QoI through a MLP model, (μ, y n(μ)) ↦ y n,c(μ), with N offline,c = 100;

ROM + MLP (1700), the corrected ROM output QoI through a MLP model, (μ, y n(μ)) ↦ y n,c(μ), with N offline,c = 1700.

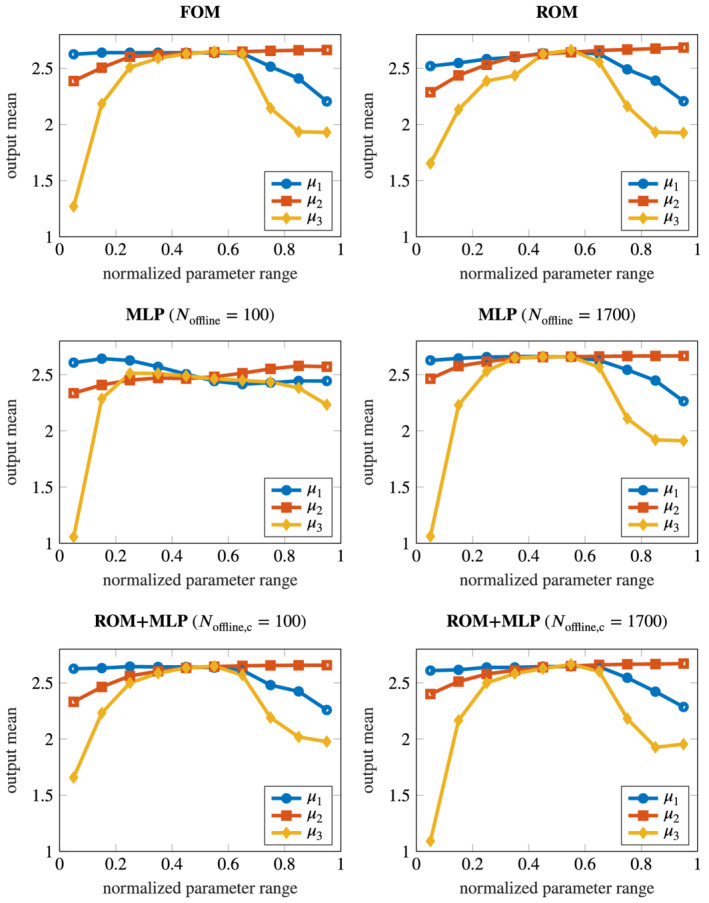

Regarding the ROM, the corrected ROMs and the MLP outputs, we group these options in two classes, depending on the number of FOM snapshots used to build the corresponding ROM/surrogate model: the ROM, MLP (100) and ROM+MLP(100) are characterized by a small dataset size, while MLP(100) and ROM+MLP (1700) are characterized by a large dataset size. For each option, we report the main effect plots and compute the sensitivity indices defined in (9) and (11).The so‐called main effect plots, showing the output mean (with respect to μ ∼i) as a function of each input μ i, i = 1,2,3, are useful to visualize, intuitively, if different levels of an input affect the output differently. For the case at hand, main effect plots are reported in Figure 10 considering 10 levels for each input and show that, in general, the output QoI is strongly influenced by changes in the third parameter (modeling the recovery property of the tissue). Moreover, the output is close to 1 only for small values of the third parameter, thus indicating that there are several scenarios of tissue refractoriness. From these results, we can also observe that when the stimulus is released later in the time window spanned by the first parameter (corresponding to high values of μ 1), it is less likely that the reentry is sustained. All the models allow to observe these main characteristics, except for the MLP (100), which shows remarkable distortions of the curves with respect to the FOM ones (used as reference), hence leading to different conclusions regarding the impact of parameter variations on the tissue response. Instead, the ROM yields only a significant distortion of the third curve (yellow one). However, this error is only slightly reduced by the ROM‐MLP (100), but is almost eliminated by the ROM‐MLP (1700), which is the closest one to the FOM results in terms of accuracy.

FIGURE 10.

Main effect plots obtained with the six considered models, showing the output mean (with respect to μ ∼i) as a function of each input μ i, i = 1,2,3. From top to bottom, from left to right: FOM, ROM; MLP (100); MLP (1700); ROM+MLP (100), ROM+MLP (1700). Main effect plots are useful to visualize if different levels of each μ i affect the output differently

The analysis of the first‐order sensitivity and the total effect indices (see Table 2) shows that:

TABLE 2.

First order sensitivity indices S i and total effect indices of the parameters μ i, i = 1,2,3, on the output computed with the six considered models

| N s | S 1 | S 2 | S 3 |

|

|

|

||||

|---|---|---|---|---|---|---|---|---|---|---|

| FOM | 103 | .0209 | .0539 | .4383 | .4305 | .2940 | .9091 | |||

| Small dataset size | ||||||||||

| ROM | 103 | .0109 | .0623 | .3649 | .4562 | .3616 | .9012 | |||

| ROM+MLP (100) | 103 | .0015 | .0575 | .4095 | .4261 | .3284 | .9213 | |||

| MLP (100) | 103 | .0355 | .0737 | .5243 | .3676 | .2936 | .9094 | |||

| Large dataset size | ||||||||||

| ROM+MLP (1700) | 103 | .0315 | .0663 | .4351 | .4249 | .2939 | .9275 | |||

| MLP (1700) | 103 | .0213 | .0585 | .4530 | .4185 | .2892 | .9310 | |||

Note: In all cases, Monte Carlo (MC) estimates have been considered according to formulas (12) and (13), in which N s MC samples have been generated (for a total number of (p + 2)N s queries to the model.

fixing the value of the tissue recovery property does not reduce considerably the output variance (in fact, S 3 is close to .45, while S 1 and S 2 are much smaller than S 3);

the sum of first‐order sensitivity indices S i is much lower than one, indicating the presence of interactions among parameters;

ε 0 is the most influential among the three parameters, as shown by the computed value of , which is approximatively twice as the value of or ;

the sum of the total effect indices is greater than 1, meaning that the model is nonadditive. 5

Note that the approximation error introduced by efficient input–output models impacts with some bias on the resulting indices: for instance, S 3 is overestimated using the MLP (100) (0.5243 instead of 0.4383), and underestimated using the ROM (0.3649 instead of 0.4383). As remarked for the main effects plots, the corrected ROM‐MLP (1700) is the most accurate one when compared to the FOM (S 3 = 0.4351). For this specific example, the observed bias on the indices does not lead to different conclusions on the effects of the parameters on the output. However, when a larger number of parameters is involved, biased sensitivity indices might lead to wrong analysis and conclusions about the role of the parameters with respect to the output QoI.

3.5. Forward uncertainty quantification

We now consider uncertainty propagation on the output of interest by using Monte Carlo sampling based on N mc = 1000 evaluations of the input–output map. In a first test case, we consider six different configurations with uniform distributions, characterized by the following supports:

Case 1: {480} × [2.5,8.5] × [0.005,0.02], to describe scenarios with fixed early ectopic impulse;

Case 2: {520} × [2.5,8.5] × [0.005,0.02], to describe scenarios with fixed late ectopic impulse;

Case 3: [480,520] × {2.5} × [0.005,0.02], to describe scenarios with fixed small radius of the impulse;

Case 4: [480,520] × {8.5} × [0.005,0.02], to describe scenarios with fixed large radius of the impulse;

Case 5: [480,520] × [2.5,8.5] × {0.005}, to describe scenarios with fixed long APD;

Case 6: [480,520] × [2.5,8.5] × {0.02}, to describe scenarios with fixed short APD.

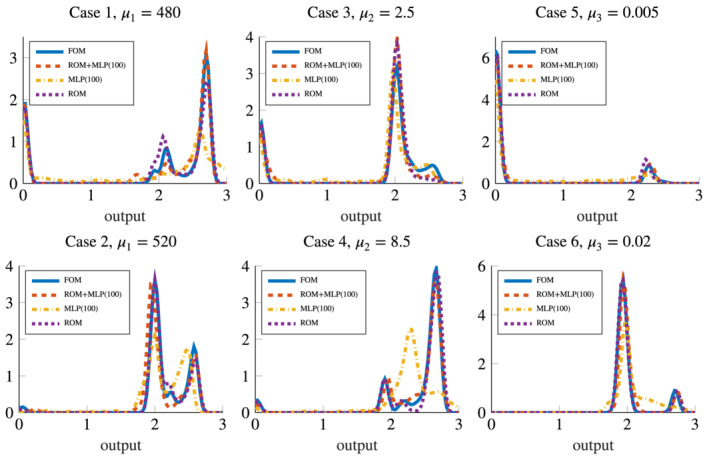

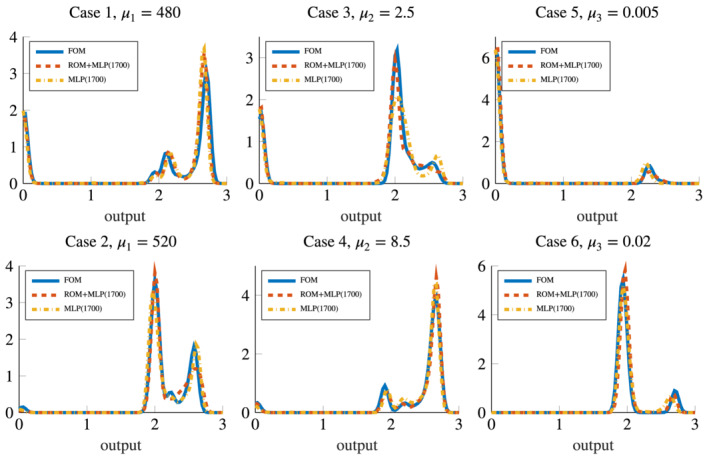

The resulting distributions of the output QoI obtained with the six options for the input–output map previously described are reported in Figures 11 and 12; sample means, sample variances and resulting coefficient of variations are reported in Table 3. We obtain that case (4), involving an impulse with a fixed large radius, is the one yielding to the larger probability of having a sustained reentry. This result is shared by all the considered approximations of the input–output map except for the MLP (100). In this latter case (see Figure 11, bottom‐center plot) we obtain an output distribution less skewed and with smaller mean. In general, the effect of the approximation error impacts on the output QoI distributions: indeed, using less accurate models might result in distributions showing larger skewness or variances, and in means affected by some discrepancies compared to the results obtained with the FOM.

FIGURE 11.

Output QoI distributions resulting from forward propagation obtained with the ROM, the ROM+MLP (100) and the MLP (100) models (hence, in presence of a small dataset size), compared with the FOM output QoI distribution

FIGURE 12.

Output QoI distributions resulting from forward propagation obtained with the ROM+MLP (1700) and the MLP (1700) models (hence, in presence of a large dataset size), compared with the FOM output QoI distribution

TABLE 3.

Uncertainty propagation: sample mean, standard deviation and coefficient of variation of the output QoI computed with the different models, in cases 1, …, 6

| Case 1 | Case 2 | Case 3 | Case 4 | Case 5 | Case 6 | ||

|---|---|---|---|---|---|---|---|

| μ 1 = 480 | μ 1 = 520 | μ 2 = 2.5 | μ 2 = 8.5 | μ 3 = 0.005 | μ 3 = 0.02 | ||

| FOM | Mean | 1.8581 | 2.1607 | 1.6635 | 2.3927 | .3588 | 2.0281 |

| Standard deviation | 1.1238 | .3933 | .8983 | .5810 | .8180 | .2521 | |

| Coeff. of variation | .6048 | .1820 | .54 | .2428 | 2.2798 | .1243 | |

| Small dataset size | |||||||

| ROM | Mean | 1.8580 | 2.1593 | 1.6273 | 2.4289 | .4179 | 2.0207 |

| Standard deviation | 1.0830 | .3455 | .8482 | .5598 | .8553 | .2550 | |

| Coeff. of variation | .5829 | .16 | .5212 | .2305 | 2.0466 | .1261 | |

| ROM+MLP (100) | Mean | 1.8851 | 2.1305 | 1.6088 | 2.4229 | .4041 | 2.0510 |

| Standard deviation | 1.1075 | .3526 | .8705 | .4873 | .8444 | .2497 | |

| Coeff. of variation | .5875 | .1655 | .5411 | .2011 | 2.0896 | .1214 | |

| MLP (100) | Mean | 1.9099 | 2.1297 | 1.6234 | 2.2949 | .554 | 2.129 |

| Standard deviation | 1.1768 | .3680 | .8376 | .4743 | .8478 | .3195 | |

| Coeff. of variation | .6161 | .1727 | .5159 | .2067 | 1.5303 | .15 | |

| Large dataset size | |||||||

| ROM+MLP (1700) | Mean | 1.9336 | 2.2045 | 1.6141 | 2.4196 | .3265 | 2.0376 |

| Standard deviation | 1.0773 | .3367 | .9246 | .5595 | .7778 | .2188 | |

| Coeff. of variation | .5571 | .1527 | .5728 | .2312 | 2.3822 | .1074 | |

| MLP (1700) | Mean | 1.8598 | 2.1848 | 1.6489 | 2.4247 | .3693 | 2.0288 |

| Standard deviation | 1.1170 | .3599 | .9342 | .4976 | .8125 | .2154 | |

| Coeff. of variation | .6006 | .1647 | .5665 | .2052 | 2.2001 | .1062 | |

In a second test case, we consider simultaneous variations of all parameters, and restrict the support of the input parameter (uniform) distribution, after normalizing each parameter to the [0, 1] range, for the sake of variance shrinking, thus aiming at reducing the effects of sampling variation. Denoting by

we consider four different options, by sampling uniformly in the set

with α = 0.1,0.2,0.3,0.4, respectively. For each input distribution, we compute the output mean and standard deviation (see Table 4) using all the approximations of the input–output map previously described. The most significant bias in the mean is observed when using the MLP output QoI trained with only N offline = 100 input–output observations, and when the input distribution support decreases. Regarding the variance, we observe that the largest discrepancies arise from the use of the MLP output QoI, with a recurrent underestimation of the output variance when the MLP is trained on the smallest dataset. On the other hand, a slightly greater variance is obtained when using the ROM output QoI, compared to the FOM case: the cause of the mild variance increase might be related to the propagation of the ROM approximation error in the output. Once again, correcting the ROM output QoI with a MLP model seems the best option, yielding the closest result to what we would obtain if we relied on the FOM output QoI to perform forward uncertainty propagation.

TABLE 4.

Uncertainty propagation: sample mean, standard deviation and coefficient of variation of the output QoI computed with the different models, in cases 1, …, 6 (variance shrinking scenario)

| U([0.1,0.9]3) | U([0.2,0.8]3) | U([0.3,0.7]3) | U([0.4,0.6]3) | ||

|---|---|---|---|---|---|

| FOM | Mean | 2.2877 | 2.4545 | 2.5612 | 2.6369 |

| Standard deviation | .5701 | .2875 | .1943 | .0161 | |

| Coeff. of variation | .2492 | .1171 | .0759 | .0061 | |

| Small dataset size | |||||

| ROM | Mean | 2.2658 | 2.4031 | 2.5177 | 2.6365 |

| Standard deviation | .5277 | .2935 | .2218 | .0461 | |

| Coeff. of variation | .2329 | .1221 | .0881 | .0175 | |

| ROM+MLP (100) | Mean | 2.2941 | 2.4517 | 2.5554 | 2.6382 |

| Standard deviation | .528 | .2617 | .1839 | .0221 | |

| Coeff. of variation | .2301 | .1067 | .072 | .0084 | |

| MLP (100) | Mean | 2.2911 | 2.4542 | 2.4916 | 2.4764 |

| Standard deviation | .5163 | .1965 | .0737 | .0421 | |

| Coeff. of variation | .2253 | .0801 | .0296 | .017 | |

| Large dataset size | |||||

| ROM+MLP (1700) | Mean | 2.2880 | 2.4542 | 2.5642 | 2.6445 |

| Standard deviation | .5669 | .2890 | .2004 | .0245 | |

| Coeff. of variation | .2478 | .1178 | .0781 | .0093 | |

| MLP (1700) | Mean | 2.2906 | 2.4579 | 2.5725 | 2.6586 |

| Standard deviation | .5592 | .2912 | .2027 | .0106 | |

| Coeff. of variation | .2441 | .1184 | .0788 | .004 | |

4. DISCUSSION

4.1. Computational efficiency

The numerical results of the previous section show that, whenever available, a physics‐driven reduced order model approximating the primal variables of the problem can have a remarkable impact on the accuracy of an ANN‐based model, when used for the sake of sensitivity analysis and forward uncertainty quantification.

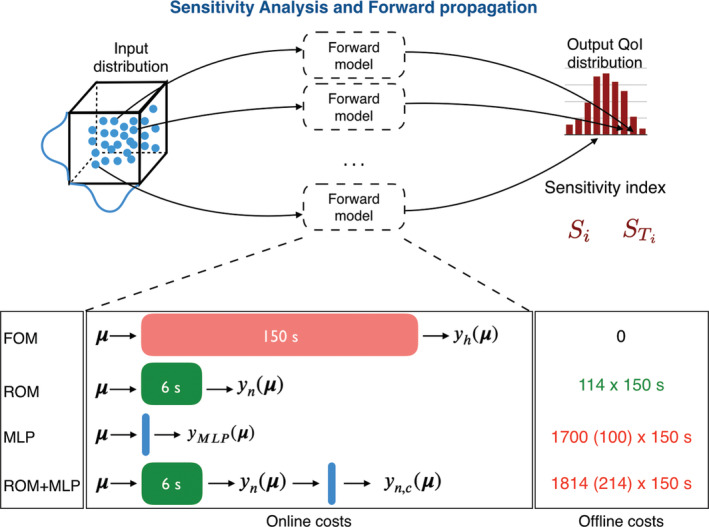

Regarding the online computational costs, the ROM provides a speedup of about 25 times compared to the FOM; hence, sensitivity analysis can be performed in a CPU time of 8.3 h – rather than 208 h if relying on the FOM – while forward UQ only requires a CPU time of 1.5 h, rather than 83 h. The same analyses performed with the MLP models are even more faster, requiring their online evaluation less than 1 s. Similarly, the online CPU time required by the ROM+MLP models is almost equal to the one employed by the ROM. See Figure 13 for the comparison between online and offline costs entailed by a single query to the proposed models.

FIGURE 13.

Comparison between online and offline costs entailed by the proposed models. Performing forward UQ requires N mc evaluations of the output QoI, while GSA requires (p + 2)N s evaluations of the output QoI. For the case at hand, N mc = 1000, p = 3 and N s = 104

Regarding instead the offline computational costs, we remark that the proposed ROM only requires 114 evaluations of the FOM for the sake of POD basis construction, resulting in a CPU time of about 5 h. MLP and ROM+MLP models require instead many more FOM evaluations, with a CPU time of about 4 h for MLP (100), 9 h for ROM+MLP (100), 70 h for MLP (1700), 76 h for ROM+MLP (1700).

From the computed results, we have shown that ROM+MLP models are definitely better than MLP models, with a remarkable gain in terms of accuracy, and one order of magnitude more accurate than the ROM model. Increasing the ROM accuracy is of course possible by considering a large number n of basis functions. However, this option would increase the online computational costs, yielding to a potentially smaller speedup compared with the use of a FOM. We underline that the tradeoff between accuracy and efficiency is problem‐dependent, and what really makes the difference is the computational speedup between the ROM and the FOM, rather than the relationship between the ROM dimension and the corresponding online time. Otherwise said, for the case at hand, the speedup between the FOM and the ROM is about 25, and a factor 5 extra computational cost for the ROM (that would ensure a factor 103 better error) would drop the speedup to only 5, thus making the overall cost of the analysis extremely large.

On the other hand, the online evaluation of an ANN‐based model for the error is almost inexpensive. Indeed, we can obtain the same accuracy of a higher dimensional ROM (which is, however, computationally demanding online, due to the high number of retained POD modes) by using a corrected ROM+MLP model built with a small ROM dimension, provided a large solution dataset is available. On the other hand, provided the same number of FOM problems is solved offline, an approach involving a ROM like the ROM+MLP model, is definitely more convenient than a purely data‐driven model, like the MLP, in terms or resulting accuracy. For these reasons, we propose to rely on a sufficiently accurate ROM with preferably smaller dimension, and to correct its outcome with an inexpensive ANN‐based model, rather than increasing the ROM dimension too much.

We also highlight that the purpose of our work is to set, analyze and apply physics‐based and/or data‐driven techniques in order to enable UQ in cardiac electrophysiology; the other two pillars of VVUQ analysis – verification and validation – do not fit among the goals of this work. The former task, verification, establishes how accurately a computer code solves the equations of a mathematical model; the latter, validation, determines how well a mathematical model represents the real world phenomena it is intended to predict. Nevertheless, results reported in Section 3 provide some insights into: the FOM calculation verification, since spatio‐temporal discretization convergence has been performed on the FOM solution (Section 3.1); the ROM calculation verification, having assessed the ROM convergence with respect to the number of basis functions for both the primal variable and the output quantity of interest (Section 3.2); the validation of the proposed framework against the results obtained with the FOM, here treated as truth high‐fidelity solution (Sections 3.4 and 3.5).

4.2. Further extension to more realistic scenarios

The most common atrial arrhythmias, also called supraventricular arrhythmias, are atrial fibrillation and atrial flutter. In the former, the atria are activated with a disorganized pattern, while in the latter the activation follows a regular, but self‐sustained and accelerated, pattern. The ectopic beats are considered as a common trigger of atrial arrhythmias. In order to be effective, the ectopic foci must find a combination of factors, mainly related to the electrophysiological characteristics of the tissue, such as conduction properties and refractory period duration. 71 As shown in the simplified case we considered, impulses persist to re‐excite the tissue autonomously only provided that suitable conditions are verified – that is, whenever there is an appropriate substrate for re‐entry. The numerical results confirm that the duration of the refractory period (controlled in our case by the physical coefficient of the ionic equation ɛ0) is a key factor for the sustainment of the reentry: the longer the refractory periods, the less likely is the re‐entry sustainment, due to the head‐tail interaction of the impulse in the reentry circuit. This kind of interaction can be also modified by considering different dimensions of the isthmus of the figure of eight reentry, which is in our examples determined by the dimension of the ectopic foci.

Our study shows how to extract meaningful indices related to sustainability of reentry in a context of uncertain inputs. The proposed framework, merging physics‐based reduced‐order models and artificial neural networks, could enhance the quantitative description of a range of possible (physiological and pathological) phenomena, possibly dealing with more complex scenarios, where also anisotropy in electric signal conduction and specific ionic concentration variations are taken into account. We have realized a proof of concept showing a possible way to translate our results into a more realistic scenario, where numerical simulations have been run on a left atrium geometry, see Figure 14. As realistic geometry, we use the Zygote solid 3D heart model, 72 a complete heart geometry reconstructed from high‐resolution CT‐scans representing an average healthy heart. As shown in Reference 73, atrial ectopic beats can be found mostly in the pulmonary veins (with the highest incidence in the left superior pulmonary vein); a possible strategy to avoid that arrhythmias are induced consists of the electrical isolation of the veins with radio‐frequency ablation. 73 , 74 Hence, we consider the case where the ectopic beat is located on the left superior pulmonary veins, and explore different scenarios on the basis of the numerical results obtained in the previous, two‐dimensional case.

FIGURE 14.

Electric potential computed on a 3D template left atrium geometry for different parameter values. The input–output setting described for the two‐dimensional case can represent a first step towards the classification of different conditions also on more complex configurations

In Figure 14 we have reported the (normalized) electric potential computed on a template left atrium geometry, over time, by fixing a value of μ 3 = ε 0 and thus considering two different scenarios (ε 0 = 0.005 and ε 0 = 0.02, respectively) regarding the tissue recovery property. Since in these two cases the values of the output QoI are clustered around two opposite values (see, e.g., the results obtained for Cases 5 and 6 in Figures 11 and 12), we randomly sample from the distributions of μ 1 and μ 2 and compute the corresponding electric potential over time. As highlighted in Figure 14, the conclusions reached for the two‐dimensional case prove to be useful to classify possible scenarios occurring in a left atrium geometry. Also in this case, we indeed obtain a sustained reentry provided that ε 0 = 0.02, and the output QoI takes larger values. In this respect, the analysis performed on the simplified two‐dimensional test case might be helpful in view of a systematic investigation of the effect of physical parameters (related with both the stimulation protocol and the ionic activity) on complex patterns including spiral waves reentry.

4.3. Comparison with other existing approaches

The analysis we carried out is the first attempt of integrating a physics‐based surrogate model built through rigorous reduced‐order modeling techniques such as the POD‐Galerkin method, and an ANN regression model properly accounting for the approximation error between the FOM and the ROM.

So far, only few papers have addressed a systematic uncertainty quantification (UQ) and propagation in cardiac electrophysiology models, despite the very rapid growth of cardiac modeling and the need to deal with multiple scenarios, towards model personalization. For instance, the role of infarct scar dimensions, border zone repolarization properties and anisotropy in the origin and maintenance of cardiac reentry has been considered in Reference 75; the evaluation of cardiac mechanical markers (such as longitudinal and circumferential strains) to estimate the electrical activation times has been addressed in Reference 76. See, for example References 2, 77, for a review of the possible sources of uncertainty in these models at different spatial scales.

A systematic uncertainty quantification and sensitivity analysis in cardiac electrophysiology models must necessarily rely on surrogate models, because of the computational bottlenecks entailed by the need to query the input–output map repeatedly. Usual paradigms for the construction of surrogate models have relied on either lower‐fidelity models or emulators:

regarding the use of lower‐fidelity models, forward UQ has been performed on a simplified Eikonal model in Reference 78, where statistics of the activation map (e.g., activation time at a specific point of the left ventricle) have been computed given the uncertainty associated with the conductivity tensor modeling the fiber orientation, exploiting Bayesian multi‐fidelity methods combining the Eikonal model and a physics‐based simplification of this latter. Although possible – see, for example, 79 , 80 , 81 – the use of the Eikonal model to describe re‐entrant activity is not straightforward, if one aims at taking properly into account both activation and depolarization phases. A simplified one‐dimensional surrogate model providing a cable representation of a three‐dimensional transmural section across the ventricular wall has been considered in Reference 38, where a multi‐fidelity Gaussian process (GP) regression has been exploited to assess the effect of ion channel blocks on the QT interval;

several papers have focused on the use of emulators such as GP regression or generalized polynomial chaos expansions (PC) of the input–output map. For instance, the forward UQ problem of propagating the uncertainty in maximal ion channel conductances to suitable outputs of interest, such as the action potential duration, has been addressed in Reference 3 by means of a Monte Carlo approach exploiting GP emulators. Similar techniques have been used in Reference 5 to quantify the effect of uncertainty on input parameters like fiber rotation angle, ischemic depth, blood conductivity and six bidomain conductivities on outputs characterizing the epicardial potential distribution. A GP surrogate of the exact posterior probability density function has been exploited in Reference 6 for the sake of parameter estimation and model personalization, where the estimation of local tissue excitability in a 3D cardiac electrophysiological model has been performed using input data from simulated 120‐lead electrocardiographic (ECG) data. See also, for example, Reference 82 for model parameter inference based on GP emulation on whole‐heart mechanics, Reference 83 for interpolation of uncertain local activation times on human atrial manifolds and References 84, 85 for the analysis of the effect of wall thickness uncertainties on left ventricle mechanics.

Our technique represents a promising trade‐off between the use of lower‐fidelity models and emulators. Indeed, the ANN regression model can be seen as an emulator, applied to the discrepancy between the FOM and the ROM; this latter might be seen as a lower‐fidelity model, however keeping into account physical complexity (yet featuring a lower computational cost) being built through a projection‐based approach.