Highlights

• Reducing learning inequality in foundational literacy is possible.
• Interventions focusing on foundational skills may reduce learning inequality even without specific inequality targeting.
• Measurement of learning inequality in foundational literacy is possible using existing tools familiar to economics.
• Measuring learning at the bottom of the pyramid can lead to insights otherwise not observable through sample averages.
• Improvements in means and in learning equity are not only compatible but indeed tend to happen simultaneously.

Keywords: Learning inequality, Foundational literacy, Learning poverty at bottom of pyramid, Learning measurement, Distribution of impact, Learning outcomes

Abstract

This paper uses measurements of learning inequality to explore whether learning interventions that are aimed at improving means also reduce inequality, and if so, under what conditions. There is abundant evidence that learning levels are generally low in low- and middle-income countries (LMIC), but there is less knowledge about how learning achievement is distributed within these contexts, and especially about how these distributions change as mean levels increase. We use child-level data on foundational literacy outcomes to quantitatively explore whether and how learning inequality using metrics borrowed from the economics and inequality literature can help us understand the impact of learning interventions. The paper deepens recent work in several ways. First, it extends the analysis to six LMIC, displaying which measures are computable and coherent across contexts and baseline levels. This extension can add valuable information to program evaluation, without being redundant with other metrics. Second, we show the large extent to which the disaggregation of inequality of foundational skills between- and within-schools and grades varies by context and language. Third, we present initial empirical evidence that, at least in the contexts of analysis of foundational interventions, improving average performance can reduce inequality as well, across all levels of socioeconomic status (SES). The data show that at baseline, the groups with the highest internal inequality tend to be the groups with lowest SES and lowest reading scores, as inequality among the poor themselves is higher than among their wealthier counterparts. Regardless of which SES groups benefit more in terms of a change in mean levels of reading, there is still a considerable reduction in inequality by baseline achievement as means increase. 
These results have policy implications for the targeting of interventions: much can be achieved in terms of simultaneously improving averages and increasing equality. This seems particularly true when the initial learning levels are as low as they currently are in the developing world.

1. Motivation

The learning crisis, estimated to affect about half of the children in low- and middle-income countries, is a well-documented phenomenon (World Bank, 2017; UNESCO Institute for Statistics, 2017). In spite of the impressive recent gains in enrollment, many pupils around the world spend a large portion of their childhood and teenage years in school without substantially improving their foundational literacy and numeracy skills. As a result, large swaths of children exit the educational system functionally illiterate and innumerate. The learning crisis is therefore a policy issue deserving attention from policymakers and citizens. From the governmental perspective, large amounts of public funds are invested in educational systems with relatively little learning to show in return. From the household perspective, the opportunity cost of schooling is high for parents and children, especially those in extreme poverty. Increasing the return on all this sacrifice through the delivery of higher cognitive and noncognitive skills, which in turn can enable higher standards of living in the future, ought to be a core function of education systems. This is especially true in low- and middle-income countries (LMIC) today where, unlike during the colonial past, mere credentialing, or actual education but only of a minority, is unlikely to ensure jobs or higher earnings. These severe educational deficits reinforce and perpetuate intra-country inequalities in terms of educational achievement and mobility. In order to address these cognitive gaps and inequalities, informed research and policy require deeper knowledge of the distribution of foundational skills within countries and sub-populations, beyond the more aggregated reports that support the existence of a learning crisis or that show that interventions can improve mean learning levels. 
In this study, we leverage detailed, individual-level foundational literacy data across six LMIC to characterize the distribution of foundational skills in detail, to show how that distribution is affected by progression in the school system and by factors such as socioeconomic status, and by certain programs aimed only at improving means, and to display what has happened to inequality when mean performance has increased.

This paper responds explicitly to three strands of current literature. Chronologically, the first strand of the literature is work on “learning at the bottom of the pyramid”, mostly associated with Wagner et al. (2018). The second line of research is the literature on inequality of learning, flat learning profiles and learning at the right level associated with Pritchett and others at the RISE Programme (see Pritchett and Beatty, 2015; Kaffenberger and Pritchett, 2020; Crouch et al., 2020). The third body of knowledge is the literature mostly associated with the World Bank around “learning poverty” (World Bank, 2019). A discussion of these literatures follows, interweaving their various strands. We foreshadow one important distinction among these three strands, subtly following what we say in the paper. The term “bottom of the pyramid” is usually associated with a concept of material deprivation or marginalization, and refers to populations who are not normally well reached by large, formal, “modern,” standardized systems (be it private sector corporations or education). The implied targeting criterion is by income or other vulnerability factors. The other two literatures, especially the learning poverty strand, refer to inequality of learning outcomes and emphasize cut points in learning as a way of targeting. Obviously, cut points in learning will tend to correlate with cut points in the income distribution or socioeconomic status (SES), but as this paper will show in detail, they are definitely not the same construct. The RISE literature tries to bring the various strands together, more recently with an emphasis on pedagogical coherence. This paper tries to work with all these distinctions but, given the provenance of the paper, it tries to work mostly with the strand on pedagogical coherence. Our use of terms such as “bottom of the pyramid,” “learning poverty,” and others is meant to link to these literatures. 
It should be noted that these literatures are themselves not always cognizant of how, precisely, they talk to each other.

Beyond the overall low learning outcomes in LMIC, a volume of recent research has documented the existence of persistent inequalities and inequities in educational achievement (Crouch et al., 2020). Glewwe et al. (2009) describe the strong elite-bias of the Kenyan educational system inherited from colonial educational structures, and the skewed incentives that teachers have to cater their education towards the top achieving students. Similarly, Muralidharan et al. (2019) describe how the entry of a large number of first-generation primary school students into the Indian educational system as a result of the increase in overall enrollment has also boosted within-school and within-class inequalities, with students from a more diverse background now going to school. These first-generation students are more likely to drop out of school, and perform worse in school (Portela and Atherton, 2020), which ultimately increases within-class inequality. On top of this, there is work, such as that of Pritchett and Beatty (2015), which describes the deep mismatch between fast-moving and wide-spanning curricula, and the baseline achievement of children. In contexts where pupil-teacher ratios are very large, catering to the full range of achievement becomes an extremely challenging task (Duflo et al., 2011), effectively forcing teachers to choose who to teach. The combination of “overambitious curricula”, large classes with heterogenous age and skill distributions, instruction in colonial languages, and structural incentives that reward “teaching to the top” can yield low and unequal learning levels. All these factors together can result in already high-achieving students benefiting even more from schooling, possibly exacerbating existing inequalities at the time of entry into school. This type of detailed analysis of inequality and its progression throughout the different grades needs to be more carefully developed, as we do in the current paper.

In light of the learning crisis, researchers and policymakers have started to shift their focus from increasing enrollment and years of schooling to raising learning for all children. Furthermore, some of these efforts have also pivoted towards measuring equity and equality, beyond just tracking average performance and access within specific geographic regions, as required by Sustainable Development Goal 4, with its distributional emphasis (United Nations and Economic and Social Council. Statistical Commission, 2016).1 Important work has developed a better understanding of the types of educational policies that enable higher overall learning, as we review below. Most of this work has focused on identifying the local constraints for learning, and relieving these barriers through scalable approaches. For instance, one strand of work has focused on better matching between class instruction and students’ starting level and pace of learning, such as the Teach at the Right Level interventions in India and Sub-Saharan Africa (for instance, Banerjee et al., 2017). By tailoring instruction to what students already know, and how quickly each student moves to higher achievement levels, schools can ensure that students are never too far behind or ahead. Furthermore, this type of tailored instruction can reduce classroom inequality through approaches that group students by achievement rather than by age, enabling teachers to better cater to more homogenous classes. Approaches in the same spirit such as Room to Read in India (Joddar and Cooper, 2017; Joddar, 2018), or PRIMR and later on Tusome in Kenya (Piper et al., 2015, 2017, 2018a, 2018b), have also provided evidence that by relieving the constraint of poorly targeted instruction, children’s learning can improve significantly.

Other comprehensive interventions, such as Eble et al. (2019) in Gambia, implement improvements to instruction delivery via teacher coaching, tutoring, and teacher scripts. The promising findings of this study support the hypothesis that improvements in instruction quality can significantly increase learning levels. Furthermore, improving instruction can take many forms and must be tailored to the local context. Work in Pakistan by Beg et al. (2019) shows that videos with expertly delivered content that fill gaps in teachers’ knowledge can be an effective and scalable approach to improving instruction. Finally, there have been many attempts to relieve constraints via traditional input interventions (e.g., more books, more teacher training), or more traditional “governance” interventions such as school-based management or more parental involvement, but, on average, these “governance” interventions have not been as effective at raising learning outcomes as those that target instructional and pedagogical approaches (Crouch and DeStefano, 2017; Evans and Yuan, 2019). Even in terms of reducing inequalities, Zuze and Leibbrandt (2011) find that under the right conditions, policies that promote physical resource availability could yield equity gains but also that, in general, equalizing access to education does not guarantee more equitable outcomes. Even within this category of interventions, appropriately designed policies can still be plausible avenues. For instance, work in Tanzania by Mbiti et al. (2019) shows that combining teaching incentives with school capitation grants, to ensure that teachers are motivated and have the physical inputs to deliver quality instruction, can improve learning outcomes. The current body of impact evaluation research briefly reviewed above has been extremely valuable, as these new solutions start informing broader policy plans, such as the large-scale implementation of Teach at the Right Level in Botswana (TaRL, 2020).

Unfortunately, a blind spot in this general body of work is the little attention paid to thoroughly characterizing the changes in the full underlying distribution of foundational skills induced by these policies (Gruijters and Behrman, 2020). Research studying the previous implementations of these programs which mostly targeted, and were successful at, improving the average results, did not necessarily focus on descriptions of the initial distributions or their shifts post intervention. To be fair to the current literature, there is substantial focus on analyses by sub-groups of policy interest through specifications that test for heterogeneous effects by baseline performance, gender, region, grade, among others. However, the main focus of these analyses was some version of aggregated treatment effects by these sub-groups, rather than on analyses of the complete distributions.

Some work has started to explore cross-sectional changes in inequality, but more thorough and longitudinal studies of inequality still need to be better developed. As an exception to the lack of longitudinal analyses, a paper by Crouch and Rolleston (2017) puts forth evidence from regional learning assessments and special longitudinal studies that measure learning in the same group of children as they grow older (studied through SACMEQ and Young Lives). Similarly, Crouch et al. (2006) use household surveys from most of Latin America to look at intergenerational changes in inequality, finding that the Gini coefficient for years of education improved significantly from 0.58 to 0.36. Other studies (Crouch and Rolleston, 2017; Crouch et al., 2006) provide some initial evidence that countries that go from very poor mean performance to middle mean performance do so by reducing the percent of students at very low levels. In this sense, work such as Gruijters and Behrman (2020) and Oketch et al. (2020) has pointed out that raising overall outcomes and decreasing dispersion are desirable, as long as this shift comes from “raising the floor”, and not at the expense of higher performers. Even earlier work by Willms (1999) identified socioeconomic gradients in literacy, where countries with the highest literacy rates also had the flattest gradients. Taken together, these papers highlight that relative literacy equality tends to benefit students across the full distribution. In fact, these studies provide initial evidence that countries that experience increases in learning from low average levels to middle average levels do so by shifting the distribution from the left to the middle, without significantly affecting the right tail of the distribution. Beyond these studies, not much research explores long-term changes in the inequality coefficients. 
Therefore, in spite of how entrenched and critical intra-country educational inequality may be for educational systems and labor markets, researchers have not yet explored a set of harmonized tools to characterize the underlying skill distributions.

There have been some calls to pay attention to educational inequality, particularly at the “bottom of the pyramid”, from scholars such as Wagner et al. (2018), who propose a Gini coefficient for education in the context of the more foundational skills. Unfortunately, this work has not yet reached the mainstream of impact evaluation education research in LMIC. Looking at broader descriptive work, international assessments like ASER or Uwezo have been instrumental in providing evidence for the magnitude of the learning crisis. In fact, PAL and EGRA2 sorts of measures have now been used in hundreds of country/language/script contexts.3 The rapid and basic nature of these assessments has been pivotal in scaling them to the country level. However, it is important to note that these assessments focus on emerging or foundational literacy, and not as much on the deeper comprehension tasks that are more commonly measured towards the end of primary schooling.

Understanding the intra-country distribution of skills in depth is also critical for policy design. Countries aiming to raise standards must ensure that the median or modal individual student gains knowledge, in addition to students collectively gaining knowledge on average. The distinction here is that improvement in a small portion of exceptional students may drive an average improvement, even if the median or modal student does not see any improvement. It is not a mathematical necessity that improving averages would reduce inequality. Thus, if efforts that focus solely or mostly on improving means can also drive reductions of inequality, that is a meaningful or non-trivial result. After all, raising outcomes for students at the low end of the distribution will result in the same overall average gain as raising outcomes by the same amount for a similarly sized group at the top of the distribution. Therefore, understanding the relative sizes of these portions of the distribution, and how they change with interventions that are successful at raising the mean, will illuminate the extent to which differentially targeted policies can raise average country-wide outcomes. Furthermore, understanding the distribution of skills not only by baseline skills purely, but also by other important characteristics like socioeconomic status, gender, and geographic clusters allows for a better-informed set of policies. In particular, understanding how inequality manifests in the broader population can inform whether average-improving policies should be targeted at specific groups, schools and regions, or whether broader reform is needed to have a wider reach. Finally, there is evidence that not all gaps in achievement are a result of differential school experiences, but also that home and community environments can play an important role in shaping school performance (Chetty and Hendren, 2018a, b; Doyle et al., 2017; Heckman and Karapakula, 2019). 
Given the recent influx of first-generation students, the distribution of achievement at the time of entry into the formal educational systems has likely widened. By understanding how learning is initially distributed and how it evolves during the first years of schooling, policymakers can design effective policies that fill in the resource gaps which end up compounding achievement gaps for different groups of students.
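The arithmetic behind the distinction between mean and median gains can be illustrated with a minimal sketch. The ten scores below are hypothetical values chosen for illustration (they are not drawn from the data used in this paper), and the helper `add_gain` is likewise an illustrative name. Under these assumptions, adding the same gain to the two lowest scorers or to the two highest scorers produces an identical change in the mean, while the median and the spread respond very differently.

```python
from statistics import mean, median


def add_gain(scores, positions, gain):
    """Return a copy of scores with `gain` added at the given positions."""
    boosted = list(scores)
    for i in positions:
        boosted[i] += gain
    return boosted


def spread(scores):
    """Absolute distance between the highest and lowest score."""
    return max(scores) - min(scores)


# Hypothetical ORF scores (correct words per minute), sorted from low to high.
baseline = [0, 0, 5, 10, 15, 20, 30, 40, 60, 80]

# Scenario A: the two lowest scorers each gain 20 words per minute.
raise_floor = add_gain(baseline, [0, 1], 20)
# Scenario B: the two highest scorers each gain 20 words per minute.
raise_top = add_gain(baseline, [8, 9], 20)

for label, data in (("baseline", baseline),
                    ("raise floor", raise_floor),
                    ("raise top", raise_top)):
    print(label, mean(data), median(data), spread(data), data.count(0))
```

In this toy example both scenarios raise the mean by the same amount, but only the first removes the zero scores and narrows the spread; the second leaves the median child exactly where they started.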

The paper proceeds as follows: Section 2 introduces the broader concept of inequality and the methodological approaches to measure it; Sections 3-5 describe certain types of quantitative analyses across six countries which can be performed to characterize inequality; Section 6 discusses some limitations of our methods and contributions; and Section 7 concludes by exploring some of the underlying pedagogical causes of such inequality, mostly as suggestions for further research.

2. An understanding of inequality for the purposes of this paper

As previously noted, the large volume of research on interventions that improved average educational outcomes has not focused as much on the distributional changes that may have happened during the course of each intervention. Similarly, little is currently known about how foundational skills are distributed throughout the most disadvantaged populations in LMIC. Understanding inequality in learning outcomes is key to designing policies which boost overall learning while also improving equity. Given these relatively large gaps in the foundational learning literature within LMIC, we will consider different angles from which inequality can be described. These different approaches, which include various measures of inequality and dispersion, will allow us to be more precise about where outcomes are most unequal, and how these might evolve over time as average skills improve.

2.1. Definition

There is still a need for the literature to consider more rigorously the distributional changes of interventions. In order to understand these distributional changes, and resulting inequality, we must be clear about the working definition of “inequality.” From a positivist point of view, we will define inequality as the extent to which children with a common characteristic (e.g., grade, school, or even country of origin) perform differently in a given task, which in our case will be foundational literacy, as measured by oral reading fluency. Inherently, any measure of inequality quantifies dispersion of the overall outcome data, as opposed to the computation of statistics like the median or the mean. Instead, inequality is concerned with how far apart similar children’s learning levels are, especially relative to the mean level. Within this definition, there are several substantive mathematical tools to quantify the actual distance among children.

From a normative point of view, much policy and philosophical discussion has been geared towards addressing whether inequality per se is a worrying outcome, especially in terms of material well-being (e.g. income or wealth). In this sense, inequality must not be confused with inequity. Inequity relates to the concept of social justice, and carries negative implications of unfairness. Instead, inequality may or may not be a product of inequity, but it may also arise due to other circumstances such as random chance (e.g. testing issues), weak application of quality standards and quality assurance, or innate differences in ability. While part of the inequalities that we describe do indeed come from inequities in human capital development along lines of gender (Jayachandran and Pande, 2017), ethnicity and regionality (Ejdemyr et al., 2017), or language of instruction (Glewwe et al., 2009), among others, disentangling what portion of a given measure of inequality comes from which inequities is a challenging and messy task, which we will not attempt. Still, some of these may be more obvious than others: for example, gender inequality is a more recognizable and quantifiable form of inequity than inequality based on poor standards (e.g., the bad luck of having a “bad” teacher in a system that does not effectively guarantee a minimum to teacher—or teaching—quality).

Our only prescriptive argument regarding inequality is that, for educational purposes in the earlier grades, ceteris paribus, less inequality is better than more inequality. Fig. 1 illustrates this point by also layering an additional lens of mean achievement onto inequality, as measured by different degrees of dispersion. The argument we make for the top row is that conditional on having the same mean, a narrower distribution is preferable as it allows teachers and schools to target instruction and resources for the more specific level where children are.

Fig. 1.

Simulated distributions with variations of high/low achievement and high/low dispersion.

Notes: the data shown is the result of four different simulations with n=5,000 each, for illustrative purposes only. Each simulation comes from a random number generator drawing from a normal distribution, with the mean and standard deviation specified above each panel.
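For readers who wish to reproduce an illustration of this kind, the following is a minimal sketch of the simulation described in the notes. The means and standard deviations in `PANELS` are hypothetical placeholders, since the values shown above each panel are not reproduced in the text; the function name `simulate_scores` and the seeds are also our own assumptions.

```python
import random
from statistics import mean, stdev


def simulate_scores(mu, sigma, n=5000, seed=0):
    """Draw n values from a normal distribution with mean mu and SD sigma."""
    rng = random.Random(seed)
    return [rng.gauss(mu, sigma) for _ in range(n)]


# Hypothetical (mean, SD) pairs for the four high/low combinations.
PANELS = {
    "high achievement, high dispersion": (60, 15),
    "high achievement, low dispersion": (60, 5),
    "low achievement, high dispersion": (20, 15),
    "low achievement, low dispersion": (20, 5),
}

simulated = {
    name: simulate_scores(mu, sigma, seed=i)
    for i, (name, (mu, sigma)) in enumerate(PANELS.items())
}

for name, data in simulated.items():
    print(f"{name}: mean={mean(data):.1f}, sd={stdev(data):.1f}")
```

Note that draws from a normal distribution can be negative, unlike real reading scores, which is one reason such simulations serve for illustration only.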

This is consistent with the literature on teaching at the right level and “over-ambitious curricula” noted above. The normative case for which of the two cases on the bottom row is preferable is tougher, as there could be advantages for a country of having high performers even if the overall mean is low. However, from the point of view of intervening to raise outcomes, the case with low means and low dispersion also provides an opportunity to target a level that will reach many children. In the case of low mean and high inequality, as displayed by the bottom left quadrant, policymakers might have to make an explicit or implicit decision to target the top or bottom portions of the distribution, unless it is the case that certain types of programs are in some sense self-targeting to the left hand side, an issue that we hope to better inform through some of our findings in this paper.

2.2. Types of inequality

For analytical purposes, we also need to distinguish between two key types of inequality: “pure” inequality and inequality by other characteristics. Pure inequality refers to the inequality that stems from different achievement levels. It is typically quantified by identifying features of the underlying distribution of achievement, such as measures of dispersion or the comparison of arbitrary points on the distribution. Pure inequality is assumed to be at least partly generated through randomly allocated factors such as innate skills at the time of birth or indifferent teaching (where some children might be in luck and get a fairly good teacher, and others are out of luck). Mathematically speaking, it represents the largest possible inequality, as any other sub-group analysis is by definition a sub-sample of the broad distribution described by pure inequality. Pure inequality is also, from a pedagogical point of view, the micro-level reality of many teachers on the ground that need to cater to students within a class with its own level of pure inequality.

The second type of inequality refers to inequality by individual or community-level characteristics, such as socioeconomic status, gender, language, ethnicity, or province. This type of inequality is usually reported through sub-group analyses, and comparisons of summary statistics across sub-populations of interest. Furthermore, this type of inequality often deeply molds the distribution described by pure inequality measures. This type of inequality has received more attention in the literature for at least two key reasons. First, it may be more actionable than pure inequality. For example, identifying the students for large-scale interventions intended to narrow gaps between, for instance, ethnic groups may be more actionable and pragmatic than identifying interventions aimed at the bottom 20 % of students nationally. Second, this type of inequality is much more often associated with connotations of inequity than pure inequality. Still, policy-makers should have strong theoretical and practical reasons to pay attention to both types of inequality.

2.3. Measures of inequality

Several descriptive metrics are available to researchers who wish to describe inequality quantitatively. For the most part, these measures are borrowed from the economics literature interested in inequality and variability. The specific metrics we explore are the Gini coefficient, the coefficient of variation (CV), different Px to Py ratios, and the percent of children scoring at zero.4 Table 1 presents further details of what these measures are, and their respective strengths and weaknesses. Furthermore, it is important to clarify that this is by no means a comprehensive list of measures of inequality. We purposefully curated a list of measures that could both reliably describe the shape and position of the underlying distribution and be intuitively understood by thoughtful policymakers and researchers in fields that do not use these measures as often. For example, the “generalized index of entropy with α=x” has desirable theoretical properties, such as more control over which portions of the distribution have a heavier weight on the measure of inequality at the time of producing one summary figure. However, it is not a well-known measure, nor does it have an intuitive “natural connection” to the data in the way that other metrics such as Px to Py ratios might. Therefore, we decided not to include measures such as this one.

Table 1.

Inequality measures explored in this paper.

| Measure | Description | Strengths | Weaknesses |
|---|---|---|---|
| Gini coefficient | A measure of relative inequality expressed as a coefficient ranging from 0 to 1. A value of 0 represents a situation in which all individuals own an equal amount of the good in question (in this case, “learning” as represented by ORF). A value of 1 represents a situation where one individual owns all of the good in question (and no others own any). If all ORF scores increase by the same absolute amount, the Gini coefficient decreases even if the absolute distance between the highest- and lowest-scoring individuals is the same as before, as it is computed relative to the overall level. | The measure is well-known to both economists and non-economists, and has been the most used measure in education thus far (albeit generally applied to years of schooling rather than reading outcomes). Any Gini coefficient has a graphical equivalent in the Lorenz curve, and the Lorenz curve, in turn, can be used to visualize where in the distribution the inequality comes from. | No “natural” translation to the magnitude of the measure. Particularly sensitive to high outliers, particularly when the overall mean is low. |
| Coefficient of variation (CV) | Standard deviation over the mean. It is bounded below at zero and has no theoretical upper bound. As with the Gini coefficient, an equal absolute increase in ORF scores for all individuals would drive the measure lower. | Easy to calculate. No specialized substantive or computational knowledge required. It has a “natural” interpretation in the data. | Breaks down when the mean is zero (for instance, with normalized data). Being a ratio of two numbers, a change in CV does not immediately reveal which of the two (or both) moved. No well-established general bounds. |
| Ratio of Px to Py, typically 90th to 10th or 75th to 25th. | Ratio of the score recorded by the person(s) at the xth percentile of the outcome distribution to the score recorded by the person(s) at the yth percentile of the same distribution. It has a lower bound of 1, no upper bound, and is a relative measure in the same way as the others. | Intuitively appealing and commonly used. Analogous to the popular economic and political literature around “% of wealth possessed by the 1 percent-ers.” | Breaks down when the lower Py is 0. Even if ratio is flipped to avoid dividing by zero, uninformative as the result would always be zero regardless of denominator. No well-established general bounds. |
| Percent scoring zero | This is not strictly a measure of inequality; rather, it is the proportion of students unable to read a single word. It is a stark, visceral indicator of poor learning outcomes that is both intuitive and effective in catalyzing institutional effort to remedy. | Very easy to interpret. Commonly used in EGRA reports as a metric that can be appropriately compared across languages and scripts without encountering the dangers of comparing ORF scores across scripts or language families. | Only provides a snapshot of two portions of the distribution, hence does not characterize the full distribution. |

Notes: table adapted from Crouch and Slade (2020).
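To make the four measures in Table 1 concrete, the sketch below shows one way they could be computed from a list of ORF scores. It is illustrative only and is not the code used for the analyses in this paper: the function names, the nearest-rank percentile rule, the use of the population standard deviation, and the example scores are all our own assumptions. The final loop also illustrates the property noted in the table that a uniform absolute gain lowers the relative measures.

```python
import math
from statistics import mean, pstdev


def gini(scores):
    """Gini coefficient from the sorted-rank formula (0 = perfect equality)."""
    xs = sorted(scores)
    n, total = len(xs), sum(xs)
    if n == 0 or total == 0:
        raise ValueError("Gini is undefined for empty data or a zero total")
    ranked_sum = sum(rank * x for rank, x in enumerate(xs, start=1))
    return 2 * ranked_sum / (n * total) - (n + 1) / n


def coefficient_of_variation(scores):
    """Population standard deviation divided by the mean."""
    m = mean(scores)
    if m == 0:
        raise ValueError("CV is undefined when the mean is zero")
    return pstdev(scores) / m


def percentile(scores, p):
    """Nearest-rank percentile for an integer p between 1 and 100."""
    xs = sorted(scores)
    k = max(1, math.ceil(p * len(xs) / 100))
    return xs[k - 1]


def percentile_ratio(scores, upper=90, lower=10):
    """Px-to-Py ratio; returns None when the lower percentile is zero."""
    low = percentile(scores, lower)
    return None if low == 0 else percentile(scores, upper) / low


def share_scoring_zero(scores):
    """Proportion of children who read no words correctly."""
    return sum(1 for s in scores if s == 0) / len(scores)


# Hypothetical ORF scores (correct words per minute), not data from the paper.
scores = [0, 0, 5, 10, 15, 20, 30, 40, 60, 80]
# The same children after a uniform absolute gain of 10 words per minute.
shifted = [s + 10 for s in scores]

for label, data in (("baseline", scores), ("shifted", shifted)):
    print(label, round(gini(data), 3), round(coefficient_of_variation(data), 3),
          percentile_ratio(data), share_scoring_zero(data))
```

In the baseline example the 10th percentile is zero, so the P90 to P10 ratio cannot be computed, which is the weakness listed for that measure in Table 1; the percent scoring zero remains informative in exactly that situation.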

The importance of at least initially exploring the issue of inequality through more than one “preferred” metric arises from two fronts. Firstly, no single measure can be expected to reliably describe all features of a distribution which could be of interest to researchers and policymakers. In this sense, none of these measures is a perfect reflection of learning inequality. Hence, analyzing inequality through diverse lenses, each with their own strengths and weaknesses, is likely to provide a more well-rounded understanding of skills at the bottom of the pyramid (in an income sense) or among the learning poor (in terms of learning thresholds). Secondly, to the best of our knowledge, these measures have not been fully tested on the type of data we use to describe learning at the bottom of the pyramid. Hence, exploring more than one measure across different settings and datasets can provide a better sense of the empirical characteristics of each of these metrics on this type of data.

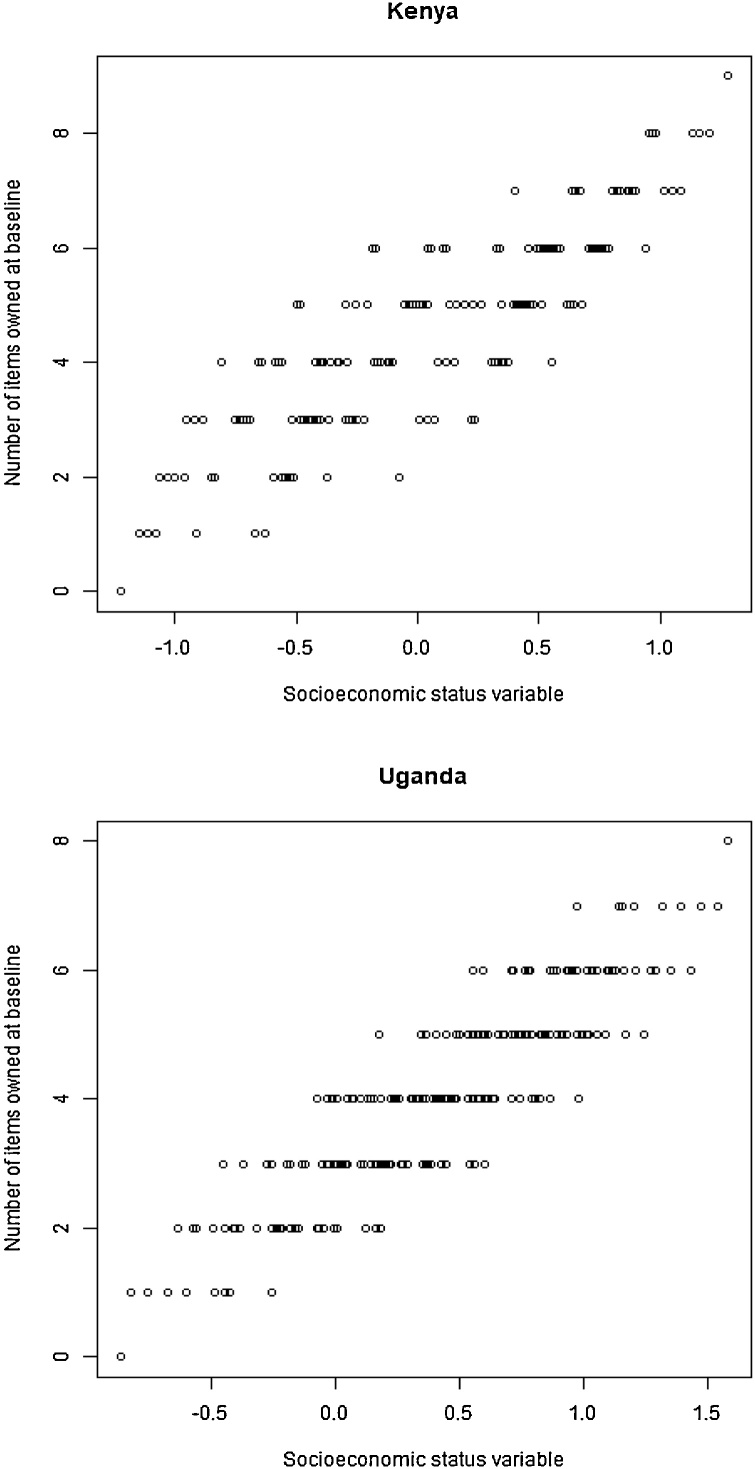

2.4. Instrument and data used, context for the data-gathering

The inequality analyses presented in this paper are based upon the oral reading fluency (ORF) subtask of the early grade reading assessment (EGRA) battery of measures. The EGRA is a one-on-one, face-to-face, live oral reading assessment, conducted by an assessor having a child read in response to a set of stimuli (familiar words, a connected text passage, letters to be sounded out, etc.) and then recording the child’s responses on a paper or electronic response form.5 The assessment focuses on skills that are foundational, such as knowledge of the alphabet and letter sounds, ability to read familiar words, non-familiar or invented words that follow the orthographic rules of the language in question (to assess decoding rather than memorization), ability to read a connected text passage, and answer a few comprehension questions. The assessment takes about 20 minutes. It is in the public domain and therefore not all applications are well documented. Still, more or less “official” applications with known quality control features include some 150 country/year/language combinations, usually in two or three grades per case (most often grades 2 and 3, but sometimes including grades 1, 4, and 6), in dozens of languages (the assessment is applied in the language of instruction in the foundation grades) and many non-Latin scripts. For the purposes of this paper, we leverage EGRA data from six different countries. These six countries were chosen because the datasets from the countries had data on SES, had both baseline and end-line measures from an intervention, and had many languages, or some important combination of those factors. Table 2 provides a description of the data sets used.

Table 2.

Description of data used for this study.

| Country | Number of students (schools) | Type of data (panel / repeated cross-section / snapshot) | Grades | Language(s) |
|---|---|---|---|---|
| Democratic Republic of Congo | 2346 (230); 7081 (290) | Unique round; Baseline/Endline | 3; 4, 6 | Lingala, Tshiluba, Kiswahili; French |
| Egypt | 2118 (56) | Baseline/Endline | 2 | Arabic |
| Kenya | 12,769 (302) | Baseline/Midline/Endline | 1, 2 | English, Kiswahili |
| Malawi | 5120 (173) | Baseline/Unique rounds | 1, 2, 3 | Chichewa |
| Philippines | 6414 (308) | Baseline/Endline | 1, 2 | Cebuano, Ilokano, Hiligaynon, Maguindanaoan |
| Uganda | 12,146 (620) | Baseline/Endline | 1–6 | English, and 12 local languages like Luganda, Acoli, and Lugwere |

Notes: the data from the Democratic Republic of Congo comes from two different projects. The details for each project are separated by a semi-colon.

One advantage of the EGRA is that, similar to those assessments in the PAL network such as ASER and UWEZO (and PISA for that matter), the same assessment is used in all grades, so one can observe the flatness of the grade-wise learning profile, and therefore differential performance by grade is attributable to something about the learning levels and processes, not a difference in the assessment. The pedagogical and policy uses of EGRA are explained in the literature (see, e.g., Dubeck and Gove, 2015; Gove et al., 2015). A toolkit that explains sampling approaches and application procedures is available (RTI International, 2016). Gove et al. (2017) describe how the assessment has been used in impact evaluation of reading programs similar to those used for this paper, and the nature of such programs. Several psychometric descriptions exist; a fairly typical and thorough one (using Spanish as a case in point but generalizing thoroughly) is Jiménez et al. (2014).6 As a way of characterizing the sorts of interventions and their usage of EGRA or similar assessments, Graham and Kelly (2017) summarize more than two dozen such interventions and document a median effect size on ORF of 0.45, equivalent to boosting learning (in these terms) by about 2 grade-equivalents. Thus, these are significantly impactful interventions in terms of mean effects, which is useful background that prompts us to ask whether they might also have an impact on equality. Piper et al. (2018a, 2018b) characterize successful interventions as typically consisting of structured lesson plans, teacher coaching, better, plentiful, and inexpensive books, appropriate assessment (such as, for these purposes, EGRA or something like it), and time on task, all with a focus on reading per se, not “language” or language arts. Crouch and DeStefano (2015) note how these various inputs have to be very tightly and intensely managed and coordinated, and how such management needs to be based on reading outcomes data.

2.5. Outcome of interest

Among all the sub-skills measured by the EGRA, we chose “oral reading fluency” (ORF) as the main outcome of interest. ORF is the closest analogue in the current EGRA battery to the key skill of independent reading of narrative text, and to the more colloquial meaning of “being literate.” Similarly, ORF is usually the skill that is most correlated with the other skills in the assessment, and has the highest loading on a principal component analysis (Jiménez et al., 2014) of the assessment. ORF scores are quantified in correct words per minute (cwpm) and are calculated by tallying the number of words the student has correctly read aloud and dividing that sum by the time elapsed, expressed as a fraction of a minute. Specifically, correct words per minute is measured as follows:

$$\text{cwpm} = \frac{\text{number of words read correctly}}{\text{seconds elapsed} / 60}$$

In particular, ORF exhibits several useful characteristics which make it well-suited for these analyses. First and most importantly, it is a continuous measure with a large range, typically characterized by an absolute minimum of 0 and no theoretical maximum.7 Second, it is easily interpretable: an ORF of 60 cwpm represents a child reading one word per second, a cadence which is easy for audiences to model and evocative to listeners. Third, it is empirically meaningful: fluency is highly correlated with reading comprehension, which is the most salient reading skill for broader educational achievement.8 Note that this paper does not assert that reading and learning are synonymous.

We also do not claim that oral reading fluency is the best measure of whether a child is truly reading, nor that ORF is the best measure to estimate the metrics that we explore. We select ORF as a reasonable proxy for reading skill because it is well correlated with comprehension, relatively straightforward to measure with fidelity (as compared to, say, silent reading), and available for a wide range of languages in a wide variety of contexts since reading-skill assessments in low- and middle-income countries began to grow in popularity around 2010 (RTI International, 2015).9 ORF as a metric also poses certain methodological challenges. Unlike alphabetical knowledge or phonological awareness, ORF is beyond the earliest of “emergent” literacy skills and as such, depending on the grade-targeted and context, a more advanced measure like ORF could yield “floor effects” by placing many children at 0. These floor effects would hinder the researcher’s ability to distinguish amongst these students (even if, for instance, the number of letters they can recognize is indeed different). This clearly has implications for the “percent scoring zero” metric as well: even within the same sample, more advanced literacy tasks like ORF will yield higher shares of children at zero than emergent literacy skills like alphabetic knowledge. We also acknowledge that had we chosen another more emergent skill, the opposite could have happened by reaching ceiling effects in certain sub-populations (in an extreme example, measuring letter names among 12th graders). Ultimately, we do not advocate for the universal use of ORF as the key measure to compute inequality metrics, as this is a highly context-dependent decision. Our use of ORF partly reflects a need to choose a common metric to showcase our analysis across all samples used in this paper. 
Similarly, the potential presence of floor or ceiling effects in any of our samples is not necessarily a weakness of the metric in question, but rather of the choice of skills used to estimate the inequality metrics.

Note that an issue with measuring inequality with this type of achievement data is that they do not necessarily follow as simple and clear-cut a distribution as other educational outcome data, such as PISA or TIMSS, do by circumstance or by design. Therefore, the simple characterization of the distribution of early literacy or numeracy skills through the computation of a mean and a variance may not be enough to understand what the distribution looks like, as it would be for more regular distributions. There is a clear need to explore and utilize other (and varied) methodological tools and metrics to understand inequality in these contexts, as we do in this paper.

3. The performance of different measures of inequality

Using data on oral reading fluency across six countries, we empirically test the different metrics described in Table 1, as shown in Table 3. Specifically, Table 3 is meant as an illustration of how these indicators perform, and not as a comprehensive display of all possible sub-populations in these data sets.10 Instead, this table should serve as a pattern-seeing tool to evaluate the appropriateness of these metrics to measure what students in these samples and contexts know, and how this knowledge is distributed. Specifically, through this table we would like to understand how these metrics “behave”, i.e. whether they yield interesting and meaningful values when applied to these data. Below we go through a description of how each metric behaves, and how they behave together—do they cohere to give a fuller picture?

Table 3.

Computation of inequality measures for select subsamples across six LMIC.

| Country | Language | Grade | Phase | Mean | Gini | CV | p90/p10 | p75/p25 | % zero |
|---|---|---|---|---|---|---|---|---|---|
| Democratic Republic of Congo | French | 4 | Baseline | 8.1 | 0.755 | 1.7 | . | . | 59.4 |
| Democratic Republic of Congo | French | 4 | Endline | 9.0 | 0.749 | 1.7 | . | . | 54.4 |
| Democratic Republic of Congo | French | 6 | Baseline | 27.1 | 0.467 | 0.8 | . | 12.8 | 23.3 |
| Democratic Republic of Congo | French | 6 | Endline | 32.5 | 0.458 | 0.8 | . | 5.7 | 16.4 |
| Democratic Republic of Congo | Kiswahili | 3 | Unique round | 1.6 | 0.895 | 2.9 | . | . | 80.5 |
| Democratic Republic of Congo | Lingala | 3 | Unique round | 1.8 | 0.899 | 3.0 | . | . | 78.7 |
| Democratic Republic of Congo | Tshiluba | 3 | Unique round | 2.5 | 0.875 | 2.6 | . | . | 77.2 |
| Egypt | Arabic | 2 | Baseline | 10.3 | 0.701 | 1.6 | . | . | 47.6 |
| Egypt | Arabic | 2 | Endline | 18.7 | 0.661 | 1.4 | . | . | 34.2 |
| Kenya PRIMR | English | 1 | Baseline | 6.5 | 0.799 | 2.0 | . | . | 62.4 |
| Kenya PRIMR | English | 1 | Midline | 26.4 | 0.543 | 1.0 | . | 21.5 | 23.9 |
| Kenya PRIMR | English | 1 | Endline | 29.1 | 0.522 | 1.0 | . | 16.7 | 21.6 |
| Kenya PRIMR | English | 2 | Baseline | 25.1 | 0.546 | 1.0 | . | . | 25.8 |
| Kenya PRIMR | English | 2 | Midline | 46.1 | 0.411 | 0.7 | . | 3.3 | 10.1 |
| Kenya PRIMR | English | 2 | Endline | 54.5 | 0.363 | 0.6 | 25.3 | 2.7 | 8.5 |
| Kenya PRIMR | Kiswahili | 1 | Baseline | 4.4 | 0.809 | 2.0 | . | . | 68.9 |
| Kenya PRIMR | Kiswahili | 1 | Midline | 18.6 | 0.482 | 0.9 | . | 9.7 | 21.9 |
| Kenya PRIMR | Kiswahili | 1 | Endline | 18.9 | 0.499 | 0.9 | . | . | 25.8 |
| Kenya PRIMR | Kiswahili | 2 | Baseline | 17.8 | 0.522 | 0.9 | . | . | 30.7 |
| Kenya PRIMR | Kiswahili | 2 | Midline | 30.1 | 0.359 | 0.6 | . | 2.4 | 10.4 |
| Kenya PRIMR | Kiswahili | 2 | Endline | 32.6 | 0.338 | 0.6 | 14.3 | 2.3 | 8.9 |
| Malawi | Chichewa | 1 | Baseline | 0.2 | 0.980 | 6.7 | . | . | 97.3 |
| Malawi | Chichewa | 2 | Unique round | 1.0 | 0.948 | 4.2 | . | . | 91.0 |
| Malawi | Chichewa | 3 | Unique round | 2.7 | 0.900 | 2.8 | . | . | 83.8 |
| Philippines | Cebuano | 1 | Baseline | 21.1 | 0.535 | 1.0 | . | 17.5 | 22.2 |
| Philippines | Cebuano | 1 | Endline | 21.9 | 0.495 | 0.9 | . | 35.0 | 23.4 |
| Philippines | Cebuano | 2 | Baseline | 39.8 | 0.338 | 0.6 | 23.6 | 2.5 | 7.8 |
| Philippines | Cebuano | 2 | Endline | 44.7 | 0.285 | 0.5 | 9.1 | 1.9 | 4.6 |
| Philippines | Ilokano | 1 | Baseline | 14.7 | 0.574 | 1.1 | . | . | 33.3 |
| Philippines | Ilokano | 1 | Endline | 17.7 | 0.497 | 0.9 | . | 9.7 | 17.4 |
| Philippines | Ilokano | 2 | Baseline | 30.1 | 0.375 | 0.7 | . | 3.7 | 12.9 |
| Philippines | Ilokano | 2 | Endline | 33.4 | 0.329 | 0.6 | 28.7 | 2.3 | 9.9 |
| Philippines | Hiligaynon | 1 | Baseline | 12.9 | 0.660 | 1.3 | . | . | 44.2 |
| Philippines | Hiligaynon | 1 | Endline | 13.7 | 0.639 | 1.2 | . | . | 35.6 |
| Philippines | Hiligaynon | 2 | Baseline | 31.6 | 0.451 | 0.8 | . | 12.5 | 21.7 |
| Philippines | Hiligaynon | 2 | Endline | 27.0 | 0.519 | 0.9 | . | 45.0 | 24.6 |
| Philippines | Maguindanaoan | 1 | Baseline | 6.9 | 0.782 | 1.8 | . | . | 66.3 |
| Philippines | Maguindanaoan | 1 | Endline | 8.2 | 0.751 | 1.6 | . | . | 60.4 |
| Philippines | Maguindanaoan | 2 | Baseline | 20.9 | 0.547 | 1.0 | . | . | 38.0 |
| Philippines | Maguindanaoan | 2 | Endline | 22.8 | 0.485 | 0.9 | . | . | 28.6 |
| Uganda | English | 1 | Baseline | 0.2 | 0.984 | 8.3 | . | . | 96.3 |
| Uganda | English | 1 | Endline | 0.7 | 0.950 | 4.5 | . | . | 89.1 |
| Uganda | English | 2 | Baseline | 3.0 | 0.874 | 2.8 | . | . | 72.8 |
| Uganda | English | 2 | Endline | 5.7 | 0.835 | 2.2 | . | . | 67.3 |
| Uganda | Luganda | 1 | Baseline | 0.2 | 0.988 | 9.0 | . | . | 97.7 |
| Uganda | Luganda | 1 | Endline | 2.1 | 0.905 | 2.9 | . | . | 86.3 |
| Uganda | Luganda | 2 | Baseline | 6.2 | 0.788 | 1.8 | . | . | 65.4 |
| Uganda | Luganda | 2 | Endline | 10.4 | 0.672 | 1.3 | . | . | 48.5 |
| Uganda | Acoli | 1 | Baseline | 0.0 | 0.994 | 17.0 | . | . | 99.2 |
| Uganda | Acoli | 1 | Endline | 0.3 | 0.969 | 5.6 | . | . | 95.8 |
| Uganda | Acoli | 2 | Baseline | 0.5 | 0.975 | 6.2 | . | . | 93.8 |
| Uganda | Acoli | 2 | Endline | 3.3 | 0.907 | 3.0 | . | . | 84.1 |

Notes: “Mean”: average number of correct words per minute. “CV”: coefficient of variation. “% zero”: percent of children at 0 correct words per minute. A dot in a cell means “undefined.”

3.1. The performance of different metrics

The first interesting feature of these data is the wide diversity, especially across samples, in terms of oral reading fluency (ORF). Mean scores are close to zero for the earlier grades in the Uganda and Malawi samples, but they are closer to 40 words per minute for grade 2 Cebuano in the Philippines sample. Therefore, it is important to keep in mind that given that the outcome variable (correct words per minute) is bounded on the left by zero, the shape of each distribution will be invariably affected by the proximity of its mean to zero. Having said this, the direction in which the relative position of the mean will influence inequality is ambiguous.11 It could be the case that means closer to zero represent a distribution where everyone is equally low, or, instead, it could be that averages which are further from zero allow more individuals to have at least some of the “good” (oral reading fluency) in question, decreasing inequality. We therefore also aim to understand the relationship between mean achievement and inequality for this type of foundational literacy outcome.

Note that the mean, here, is not a measure of inequality and is in the table only to indicate where the distribution is “centered,” an important issue to consider in interpreting the inequality measures. Aside from these summary measures in Table 3, one could also utilize tools that do not require the collapse of different distributional features into a single feature. For example, Fig. 2 shows a visual analysis which describes certain properties of the kernel distribution while maintaining interpretability, for two different contexts. Specifically, the column with panels on the left simply shows the distribution of normalized scores at baseline and at endline (using the baseline parameters) for two studies. Therefore, if a given intervention had positive and equal distributional effects across the full sample, the endline density would appear shifted to the right, relative to the baseline density. In practice, changes do not need to happen equally across the full distribution (i.e., through perfect rightward shifts), as the Kenya panel (bottom left) shows. Instead, these changes are driven by a large improvement across a large section of the middle of the distribution. This approach also allows one to see whether no substantial distributional (or even average) effects happen, such as in the case of Malawi. These plots can then be translated into cumulative distributions that show the underlying values at each percentile. Interestingly, the two examples we show display very different characteristics: in Malawi, over 90 % of all children achieve 0 cwpm, and there is no change across testing rounds, clearly not a very successful intervention on just about any score. In Kenya, instead, a little over one in three of all children achieve only 0 cwpm at baseline, compared to fewer than one in five at endline. Furthermore, the cumulative distributions are furthest apart at the lower levels, emphasizing that the largest changes happened at the bottom of the distribution. 
While these visual analyses provide a very informative grasp of distributional differences, it is hard to systematically compare many sub-populations and contexts by individually and graphically analyzing their distributions, and hence the need for more collapsed statistics.
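
The normalization behind the left-hand panels can be sketched as follows. This is a minimal illustration, assuming that "baseline parameters" means the baseline mean and standard deviation; the function name and the scores are hypothetical and are not taken from the study data.

```python
from statistics import mean, pstdev


def standardize_to_baseline(baseline, endline):
    """Scale both rounds by the baseline mean and standard deviation."""
    mu = mean(baseline)
    sigma = pstdev(baseline)  # population SD; the sample SD is an equally plausible choice
    if sigma == 0:
        raise ValueError("baseline scores have no variation")
    z_base = [(x - mu) / sigma for x in baseline]
    z_end = [(x - mu) / sigma for x in endline]
    return z_base, z_end


# Hypothetical ORF scores in cwpm, for illustration only.
baseline = [0, 0, 10, 20, 30]
endline = [0, 10, 20, 30, 40]
z_base, z_end = standardize_to_baseline(baseline, endline)
```

Because both rounds are scaled by the same baseline parameters, a positive mean in the endline z-scores reads directly as a gain measured in baseline standard deviations.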

Fig. 2.

Distributional comparisons of oral reading fluency across countries.

Notes: the left column displays the baseline and endline distributions, normalized using the baseline data means and standard deviations. The right column displays the mean oral reading fluency (as measured by correct words per minute) by percentile.

The second key metric shown in Table 3 is the Gini coefficient, which overall seems to behave well. The values observed lie between 0.285 and 0.994. Across all samples and sub-populations, the mean for the Gini coefficient is 0.646 and the standard deviation is 0.221, with values roughly following a normal distribution. As an interesting benchmark or point of comparison, note that these values are higher than those observed for goods like income. For instance, the latest World Bank data on the national-level income per capita Gini index ranges from 0.242 in Slovenia to 0.630 in South Africa (World Development Indicators). The lowest Gini in Table 3, 0.285, corresponds to the Philippines in grade 2, when tested in Cebuano at endline. This specific sub-population also has one of the highest mean ORF values in the table (44.7 cwpm). By contrast, the six highest Gini coefficients in Table 3, representing the most unequal sub-populations, come from the Uganda and Malawi samples, and also correspond to the six highest percent-zero scores across all samples. Notably, these subpopulations have extremely low achievement levels. Note that Gini coefficients this high can quickly devolve into “degenerate” metrics as they approach 1, in the sense that they are not very informative (similar to the previous discussion of floor effects). Therefore, measures like the Gini, which are relative to the mean, tend to be less useful as the mean is closer to zero, given how sensitive the metric becomes to changes in the upper tail.12 In fact, the issue of degenerate metrics due to prevalence of zeros is related to the fact that the choice of ORF as the main metric may be methodologically unfitting for this sub-sample, but also to the pedagogical implication that ORF is possibly a frontier to cross over for any intervention that aims to raise literacy outcomes in countries similar to those sampled here.
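
To make the link between zeros and very high Gini values concrete, the sketch below computes the Gini coefficient from the mean absolute difference between all pairs of scores. It is one standard formulation and not necessarily the estimator used for Table 3; the function name and the toy scores are illustrative assumptions.

```python
def gini(scores):
    """Gini coefficient from the sum of absolute differences over all pairs."""
    n = len(scores)
    total = sum(scores)
    if n == 0 or total == 0:
        return None  # undefined when nobody holds any of the "good"
    abs_diff_sum = sum(abs(a - b) for a in scores for b in scores)
    # Equivalent to abs_diff_sum / (2 * n**2 * mean), since n * mean == total.
    return abs_diff_sum / (2 * n * total)


# Hypothetical samples: everyone equal, versus 9 of 10 children at zero.
equal_sample = [5, 5, 5, 5]
floor_sample = [0] * 9 + [10]
gini_equal = gini(equal_sample)
gini_floor = gini(floor_sample)
```

With this formulation, if a share z of children score zero and everyone else scores the same positive value, the Gini equals z, and any spread among the non-zero scores only pushes it higher. This is consistent with the pattern in Table 3, where the Gini is never below the share of children at zero.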

Moving on to the coefficient of variation (CV), this indicator also seems to behave well. The correlation coefficient between the CV and the Gini is 0.81 across all cohorts and samples. Hence, these two measures of inequality move together well, as they did in studies for PRIMR and Tusome (Crouch and Slade, 2020). Across all samples, the mean value of the CV decreases from 4.2 at baseline to 2.5 at endline, a statistically significant decrease (p-value of 0.00). Much like the Gini coefficient, the value of the CV is highly dependent on the overall mean. This factor could enter through two different channels. First, if inequality indeed decreases as means increase, then it is also natural to expect the CV to reflect lower levels of inequality given higher means. However, this could also be due to the mathematical fact that the CV is calculated by dividing the standard deviation by the mean. Two populations with the same standard deviation but different means will have different CVs, and in particular the population with the higher mean will have the lower CV. In this case, all the empirical considerations regarding the expected value of the Gini coefficient conditional on the average seem to also apply to the CV, as mathematically they are both computed, either explicitly or implicitly, factoring in the sample mean.
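
The mechanical dependence of the CV on the mean can be illustrated with a small sketch. The two samples below are hypothetical and were chosen only because they share the same spread; the function name is an assumption, and the population standard deviation is used for simplicity.

```python
from statistics import mean, pstdev


def coefficient_of_variation(scores):
    """Standard deviation divided by the mean; None when the mean is zero."""
    mu = mean(scores)
    if mu == 0:
        return None
    return pstdev(scores) / mu


# Same spread (each sample is the other shifted by 30 cwpm), different means.
low_mean_sample = [0, 10, 20]
high_mean_sample = [30, 40, 50]
cv_low = coefficient_of_variation(low_mean_sample)
cv_high = coefficient_of_variation(high_mean_sample)
```

Here the standard deviations are identical, so the lower CV of the second sample comes entirely from its higher mean rather than from any change in dispersion.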

The most problematic metric reported in Table 3 is the ratio of Px to Py. In general, a worrying feature of any ratio is how it behaves around values near or at 0. Particularly in the type of foundational literacy data from LMIC that we are using, it is very common for children to score 0. Taking P90 to P10 as an example, dividing whatever number the 90th percentile is achieving by 0, which is what the 10th percentile tends to achieve, yields a mathematically undefined expression. On the flip side, calculating P10 to P90 yields a 0 regardless of what the value of P90 is, which makes for a fully uninformative metric. In fact, for 53 % of subpopulations in our data, the P90 to P10 cannot be calculated because more than 10 percent of the children assessed recorded a score of 0. While P75 to P25 can be calculated more frequently, it is available for only approximately 63 % of those sub-populations. Interestingly, across these datasets these ratios are more often undefined for colonial languages such as French and English than for mother tongue languages, partly reflecting the lack of general mastery of these colonial languages by the left tail of the distribution (and a clear disadvantage of early instruction in these languages). In theory, Px to Py ratios could be intuitive metrics due to the simple interpretation that they could yield (e.g. “the Xth percentile performs n times higher than the Yth percentile”). However, this is less relevant as this ratio does not necessarily translate into policy recommendations in the form that “students in the Yth percentile need n times more resources and instruction than students in the Xth percentile”. Furthermore, unlike measures like the Gini, there are fewer benchmarks or limits that can put into perspective a given value of the Px to Py ratio, making it harder to use as a tool to compare across countries and samples. In general, the nature of foundational literacy data does not make these ratios suitable metrics.
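
The way these ratios break down can be sketched in a few lines. The example assumes a nearest-rank percentile definition, which may differ from the one used to produce Table 3, and uses hypothetical scores in which 20 % of children score zero; all names are illustrative.

```python
def percentile(scores, p):
    """Nearest-rank percentile for an integer p between 1 and 100."""
    ordered = sorted(scores)
    rank = max(1, (p * len(ordered) + 99) // 100)  # integer ceiling of p * n / 100
    return ordered[rank - 1]


def percentile_ratio(scores, upper, lower):
    """Return P_upper / P_lower, or None when the lower percentile is zero."""
    denominator = percentile(scores, lower)
    if denominator == 0:
        return None  # undefined, shown as a dot in Table 3
    return percentile(scores, upper) / denominator


# Hypothetical sample in which 2 of 10 children score zero.
scores = [0, 0, 5, 10, 15, 20, 25, 30, 35, 40]
p90_p10 = percentile_ratio(scores, 90, 10)  # undefined: the 10th percentile is 0
p75_p25 = percentile_ratio(scores, 75, 25)  # defined: the 25th percentile is above 0
```

In this toy sample the P90 to P10 ratio is undefined while the P75 to P25 ratio can still be computed, which mirrors the pattern in Table 3 where the latter is available only once fewer than roughly a quarter of children score zero.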

The last key metric displayed in Table 3 is the percent of children reading at 0 correct words per minute (“% zero”). This measure, most closely linked conceptually (in that it is a percentage at a minimum) with “learning poverty” as defined by the World Bank (World Bank, 2019), displays interesting features not captured by the other metrics. First of all, as expected, it “behaves well”, in the sense that it can actually be calculated for all sub-populations. Furthermore, it displays enough variation across sub-samples to make it an interesting point of comparison for different contexts. This metric has a correlation with the Gini coefficient across all of our sub-populations of 0.71, which is high enough to suggest that they tend to move together, but low enough that they still convey somewhat different information. Furthermore, the positive correlation indicates again that the higher the number of low performers in a given sample (as measured by the number of children performing at 0 cwpm), the higher the Gini tends to be. This measure is highly intuitive and actionable, making it easier for policymakers to set learning goals for the bottom of the pyramid. A significant disadvantage of the “percent at zero” metric is that it “dichotomizes” a distribution by splitting it into those above or below an arbitrary threshold. While this is valuable if there is a strong theoretical or empirical motivation for choosing a specific threshold, it could also oversimplify the description of a distribution, perhaps even excluding those just above the selected threshold. Thus, this metric works best when complemented by other measures that also inform about the distribution of skills above the threshold. 
Note that as a result of early observations on the behavior of this indicator, interventions by INGOs and governments started to do two things: a) target a reduction in the percentage of children who could not read at all as a meaningful intermediate benchmark, and b) start to track the percentage of children who would meet a more ambitious but reasonable benchmark such as 30 or 40 correct words per minute (for example, see a goal setting exercise in Ghana described in USAID, 2014). While the World Bank’s target is not to drive the percentage of children who cannot read down to zero, a target of halving the percentage of children who are below some minimum by age 10 (i.e., in “learning poverty”) is similar in spirit to driving to 0 the percentage of children who cannot read at all, but more useful later in the grade structure. The fact that some implementers have found the “% zero” to be a useful benchmark suggests that “% reaching a minimum at age 10” would also be. But one must make note of the caveats in this paragraph.
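
The two tracking indicators described above, and the dichotomization caveat, can be illustrated with a short sketch. The scores are hypothetical, the function names are assumptions, and the 30 cwpm benchmark is used only because it is one of the example values mentioned in the text.

```python
def percent_at_zero(scores):
    """Share of children (in percent) who read 0 correct words per minute."""
    return 100 * sum(1 for s in scores if s == 0) / len(scores)


def percent_meeting_benchmark(scores, benchmark):
    """Share of children (in percent) at or above a fluency benchmark."""
    return 100 * sum(1 for s in scores if s >= benchmark) / len(scores)


# Two hypothetical samples with the same share at zero but very
# different distributions above the threshold.
sample_a = [0, 0, 1, 2, 3, 4, 5, 6, 7, 8]
sample_b = [0, 0, 25, 31, 38, 44, 50, 55, 60, 70]

zero_a = percent_at_zero(sample_a)
zero_b = percent_at_zero(sample_b)
bench_a = percent_meeting_benchmark(sample_a, 30)
bench_b = percent_meeting_benchmark(sample_b, 30)
```

Both samples report the same percent at zero, yet only the second has any children at the higher benchmark, which is why the text recommends pairing this metric with measures that describe the distribution above the threshold.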

Beyond the comparison of the different metrics, Table 3 also highlights some interesting features of the data. A salient pattern is that even within country samples from the same project, different languages have different mean achievement levels and inequality results. As an example of this, the baseline performance in grade 1 in the Philippines ranges from 6.9 to 21.1 cwpm, and the Gini coefficient ranges from 0.535 to 0.782. Similarly, the changes from baseline to endline are not uniform. The CV for grade 2 increases in Hiligaynon, decreases in Ilokano, and remains almost constant for Cebuano. These changes are not insignificant, as they may have equity consequences across ethnic groups down the line, were the interventions yielding these changes in inequality to be taken to scale without better understanding these distributional issues. Looking at the data cannot tell us the precise sociological or pedagogical reasons for this, but even merely looking at the data can alert us that there is something that needs to be looked at more substantively. One hypothesis is that, while part of these differences could be due to differences in how foundational literacy develops across different languages (for examples of this see Spaull, 2016 and Spaull et al., 2020 in South Africa), part could also be due to differential levels of investment and quality of education across ethnic groups.

3.2. The Gini coefficient for oral reading fluency interpreted via Lorenz curves

The Gini coefficient is one of the most well-known measures of inequality, typically used by economists to quantify income or wealth inequality.13 Given the prominence of this measure, we present a more detailed discussion of its potential applications and features for the type of data describing foundational literacy. In particular, a nice feature of the Gini coefficient is that it has a visual equivalent through the plotting of “Lorenz curves.” A Lorenz curve is a representation of the cumulative distribution of a certain “good” on the vertical axis (“wealth” when measuring “wealth inequality”, or oral reading fluency, in our illustration of learning measures), graphed against the ordered percentiles of the same good on the horizontal axis. Continuing with the wealth example, any particular point on the Lorenz curve with the coordinates (x, y) shows that all individuals up to the xth percentile on wealth for this sample cumulatively have y percent of the total wealth. Similarly, the Lorenz curve is usually graphed alongside the “line of perfect equality” (i.e., the 45-degree line), which would be the hypothetical Lorenz curve of a population where everyone has the same amount of the good in question. Empirically, the closer the Lorenz curve is to the line of perfect equality, the more equal the underlying distribution is. Visually, the Gini coefficient represents the area between the Lorenz curve and the line of perfect equality as a share of the total area under the line of perfect equality. In this paper, we are treating oral reading fluency, as measured by the number of correct words per minute, as the “good” to be accumulated. We analogize that there is a total amount of “oral reading fluency”, and we study how it is distributed across the population. Of course, ORF is much less of a “zero-sum game” in the short term than goods like wealth, particularly because ORF cannot be redistributed across children the way wealth can. 
In other words, the only way to change the distribution is by “creating” more of the good, or increasing children’s ORF.14
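
The construction of a Lorenz curve for ORF, and the reading of the Gini as an area, can be sketched as follows. This is a minimal illustration on hypothetical scores, using the trapezoid rule for the area under the curve; it is not necessarily the routine used for the figures in this paper, and the function names are assumptions.

```python
def lorenz_curve(scores):
    """Return (population share, cumulative ORF share) points from (0, 0) to (1, 1)."""
    ordered = sorted(scores)
    n = len(ordered)
    total = sum(ordered)
    if n == 0 or total == 0:
        raise ValueError("Lorenz curve is undefined when all scores are zero")
    points = [(0.0, 0.0)]
    running = 0
    for i, score in enumerate(ordered, start=1):
        running += score
        points.append((i / n, running / total))
    return points


def gini_from_lorenz(points):
    """Area between the equality line and the curve, as a share of the area under the line."""
    area_under_curve = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area_under_curve += (x1 - x0) * (y0 + y1) / 2  # trapezoid rule
    # The area under the 45-degree line is 0.5, so (0.5 - A) / 0.5 = 1 - 2A.
    return 1 - 2 * area_under_curve


# Hypothetical sample: 9 of 10 children read nothing, one reads 10 cwpm.
points = lorenz_curve([0] * 9 + [10])
gini_value = gini_from_lorenz(points)
```

In this toy case the curve stays at zero until the 90th percentile and then jumps to one, so almost all of the area under the line of perfect equality lies between the two curves.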

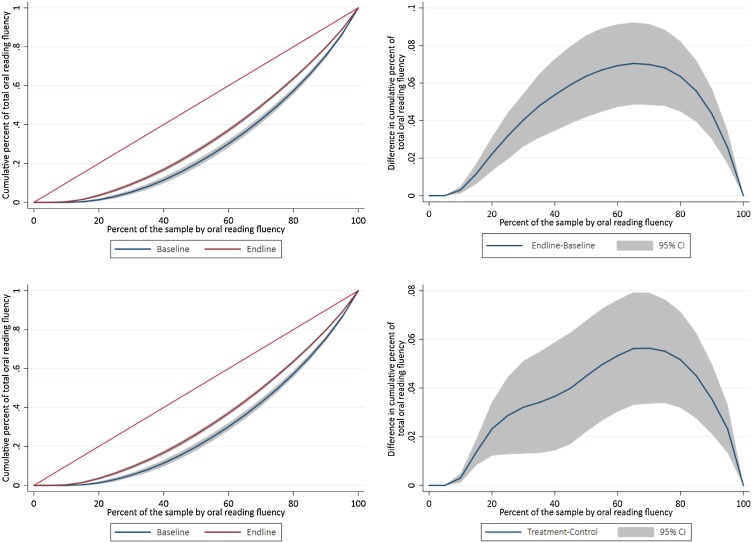

Given the connection between Gini coefficients and the visual representation of Lorenz curves, this metric can be a valuable tool not only to understand distributions better, but also to pinpoint the particular differences between them. Furthermore, Lorenz curves allow for the creation of “contrast plots”, as displayed in the panels in the right column of Fig. 3. Contrast plots simply compute the difference between two Lorenz curves at each percentile, regardless of the absolute achievement level behind each curve. An additional advantage is that both Lorenz curves and contrast plots allow for the computation of confidence intervals, which in turn allow for formal testing of differences across two sub-populations for a given portion of the distribution.
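
A contrast between two Lorenz curves can be sketched as below. The sign convention (endline minus baseline, so that positive values mean the endline distribution is more equal at that percentile) is an assumption made for this illustration, as are the function names, the percentile grid, and the scores. Confidence intervals are not computed here; they would require an additional resampling or analytical step.

```python
def lorenz_at(scores, p):
    """Cumulative share of total ORF held by the bottom fraction p (0 <= p <= 1),
    with linear interpolation between observed points."""
    ordered = sorted(scores)
    n = len(ordered)
    total = sum(ordered)
    if total == 0:
        raise ValueError("Lorenz curve is undefined when all scores are zero")
    position = p * n
    whole = int(position)          # number of children fully included
    partial = position - whole     # fraction of the next child included
    cumulative = sum(ordered[:whole])
    if whole < n:
        cumulative += partial * ordered[whole]
    return cumulative / total


def contrast(baseline, endline, grid=(0.1, 0.25, 0.5, 0.75, 0.9)):
    """Difference between the endline and baseline Lorenz curves on a percentile grid."""
    return [(p, lorenz_at(endline, p) - lorenz_at(baseline, p)) for p in grid]


# Hypothetical scores in cwpm, for illustration only.
baseline = [0, 0, 5, 10, 15, 20, 30, 40, 60, 80]
endline = [5, 10, 15, 20, 25, 30, 35, 40, 50, 60]
differences = contrast(baseline, endline)
```

Because each curve is expressed as a share of its own total, the contrast compares how ORF is distributed in each round, not how much of it there is.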

Fig. 3.

Lorenz curves and contrast plots for the grade 2 experimental sample of the PRIMR intervention in Kenya.

Notes: the sample for the top row consists of only the treatment group for grade 2, while the sample for the bottom row is both the control and the treatment groups. The outcome used was the oral reading fluency in English. The Gini coefficients for the top row panels (the treatment group at baseline and endline) are 0.419 at baseline and 0.332 at endline. The Gini coefficients for the bottom row panels (the treatment and control groups at endline) are 0.332 for the treatment group and 0.400 for the control group.

We showcase the utility of Lorenz curves and Gini coefficients in analyzing learning inequality and the relationship between inequality and a threshold or “learning poverty” (à la World Bank) concept through two examples. The first example is displayed in Fig. 3, and it uses the grade 2 experimental group from the PRIMR intervention in Kenya. The top row panels of Fig. 3 effectively compare the distribution for the treatment group before and after the intervention, therefore showing what portions of the distribution contributed the most to the improvement in equality for this specific subgroup. A similar interpretation can be given to the bottom row panels: the contrast plot shows what portions of the distributions displayed on the bottom left contributed the most to the improvement in equality for this specific subsample, comparing treatment and control groups. Both of these contrast plots depict a situation in which indeed the underlying distribution became more equal across most of the full range of ability post-intervention.

Neither the shape of the contrast plots, nor their signs at any point of the distribution are mere artifacts of the data. For instance, for the top right panel, the short left-tail of the contrast plot mimics the short, left tail of the Lorenz curves that are precisely at 0 for the cumulative amount of oral reading fluency. This left tail represents the approximately 1 in 10 children that could not read a single word in English at baseline and at endline, and ties to a “learning poverty” threshold concept, but shows the relationship between that and a summary of the distribution such as a Gini. Following the contrast plot, inequality starts to decrease from baseline to endline as percentiles of achievement increase, reaching its maximum around the 65th percentile, where the endline distribution became the most equal compared to the baseline distribution. As mentioned before, it is significant that the contrast plot is always positive, and almost always in a statistically significant manner. It could be that there are cases or countries where it is always negative, or that it is negative in some portions, displaying true heterogeneity in how the distribution changes. In this case, either explicitly or implicitly, the intervention served a wide range of children in this context, and this is something that needs to be examined jointly with the pedagogical features of the program that made this possible.

Importantly, this analysis is not at the individual level, but rather at the distribution level. When we say that the distribution changed at some percentile X, we do not mean that the change happened for the specific child who was at the Xth percentile at baseline, but rather for this percentile at baseline and endline, regardless of whether this is the same child or not. This has implications for the analysis of the curves. Consider a hypothetical toy example of an intervention with only 100 children, in which only the median child at baseline (say, Ana) gains from the intervention, and by the endline round Ana scores higher than the top percentile of the baseline. This change would translate to a contrast plot in the following manner. For the top percentile, it would compare the baseline performance of whichever child was in the top percentile at baseline to Ana’s endline performance. Similarly, the change at the median would compare Ana’s performance at baseline to the endline performance of whichever child was in the 51st percentile at baseline (as that child now becomes the new median, given Ana’s shift in the distribution). This example is meant to reinforce that the Lorenz curve, the resulting contrast plots, and Gini coefficients are powerful tools to compare distributions, but not specific individuals’ performance.
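
The toy example can be sketched numerically as follows. The scores (1 to 100 cwpm at baseline, 150 cwpm for Ana at endline) are hypothetical values chosen only to make the ranks easy to follow.

```python
import numpy as np

# Hypothetical baseline: 100 children with distinct scores 1..100 (cwpm).
baseline = np.arange(1, 101, dtype=float)
ana = 49  # position of the median child (score 50); the index is illustrative

endline = baseline.copy()
endline[ana] = 150.0  # only Ana gains, ending above the baseline top score

# Child-level comparison: follow the same child across rounds.
child_change = endline - baseline

# Distribution-level comparison: sort each round separately, compare rank by rank.
rank_change = np.sort(endline) - np.sort(baseline)

print("Children whose own score changed:", int(np.count_nonzero(child_change)))
print("Ranks at which the distribution changed:", int(np.count_nonzero(rank_change)))
```

Only one child's score changes, yet the rank-by-rank comparison shows a change at every rank from the median upward, because each child above Ana at baseline moves down one rank. This is why a change at a given percentile of a Lorenz curve or contrast plot should not be read as a gain for the child who occupied that percentile at baseline.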

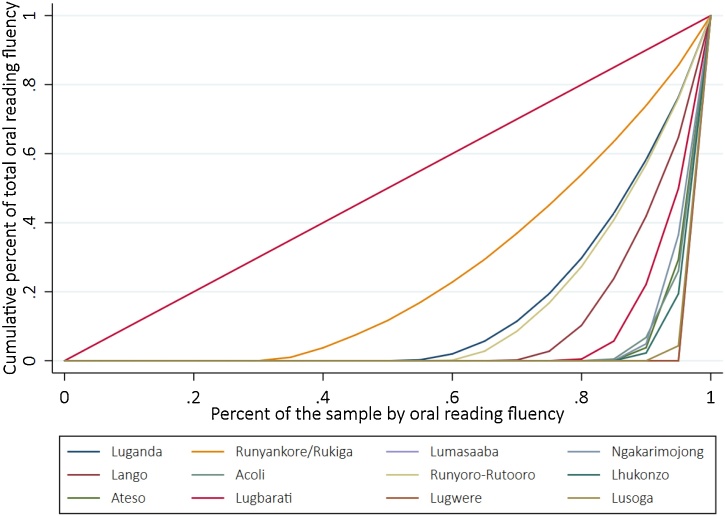

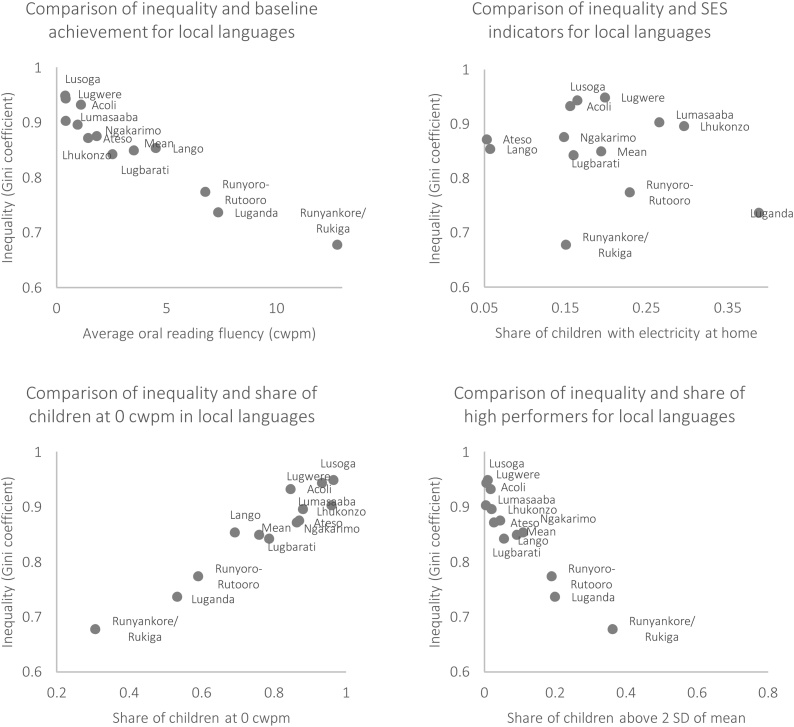

The second insight that can be derived from the visual depiction of Lorenz curves is inter-group comparisons of inequality at a given point of time. Unlike the previous example, this use has less to do with “pure inequality”, and more to do with inequalities across sub-populations linked to their demographic characteristics. To exemplify this approach, Fig. 4 plots the Lorenz curves for the oral reading fluency of a specific sample of grade 3 Ugandan children in their mother tongue. There are stark differences between each of these curves and the line of perfect equality. Languages such as Runyankore-Rukiga or Luganda are relatively closer to the line of perfect equality, with underlying Gini coefficients of 0.679 and 0.737 respectively. Interestingly, Runyankore-Rukiga is a south-western language spoken in the district of origin of the Ugandan president Yoweri Museveni and Luganda is the most commonly-spoken non-colonial language in the capital, Kampala. On the other extreme, languages such as Lhukonzo or Lugwere are much further from the line of perfect equality, with Gini coefficients of 0.897 and 0.949 respectively.15 In this sense, inequality, mean performance, and the language of each sub-population within countries are strongly correlated, likely also with other “unobservable” characteristics linked to other types of inequality like political or economic inequality.

Fig. 4.

Lorenz curves for oral reading fluency in native language in Uganda.

Notes: the sub-sample of interest was grade 3 students at baseline. The outcome of interest was oral reading fluency in the mother tongue of each child.

In spite of the striking differences in inequality across languages for Ugandan children shown in Fig. 4, one cannot infer the relationship between inequality and average performance from these Lorenz plots. This is an important feature to explore, as high inequality could be driven by a few very high performers even though most children still do well. That would be inequality among relatively good performers, which is indeed inequality but less worrisome than a situation driven by fat left tails of children with very low achievement levels. Fig. 5 displays the relationship between the underlying Gini coefficients for each of the Lorenz plots in Fig. 4 and other variables that matter when describing inequality in outcomes. First, the top left panel shows a negative correlation between the Gini coefficient and mean achievement. In other words, in this specific context, language groups in which the average child scores higher on oral reading fluency have lower overall inequality. This is an interesting result, as it sheds some light on the question of whether moving overall means can have effects on inequality, which is not obvious a priori. In contrast, the top right panel correlates the Gini coefficient with an indicator of socioeconomic status, household-level access to electricity. Here the relationship is much weaker than the correlation between inequality and mean outcome. In this sense, we see that to move unequal results, targeting performance can be a more proximate input to the desired outcome than interventions targeted based on the socioeconomic status of children.

Fig. 5.

Comparison of Gini coefficient across different Ugandan languages, and different metrics of performance.

Notes: the sub-sample of interest was grade 3 students at baseline. The outcome of interest was oral reading fluency in the mother tongue of each child. The “share of electricity at home” is defined as the number of children who report having electricity at home divided by the total number of children within each language group. The share of children at 0 correct words per minute (cwpm) is defined as the number of children who could not read any words divided by the total number of children within each language group. The share of children above 2 standard deviations (SD) is defined as the number of children performing at least 2 standard deviations higher than the mean (a threshold of ∼17 cwpm) divided by the total number of children.

Another interesting result that stems from Fig. 5 is the high correlation between the Gini and the presence of outliers at either end of the distribution. On the bottom row, we show that a lower Gini is indeed strongly correlated with a lower share of low performers (measured as the percent of students at 0 cwpm) and a higher share of high performers (measured as the percent of students at least two standard deviations above the mean). The Lorenz curves shown in Fig. 4 are characterized by long left tails (or rather an intercept with the horizontal axis), with many children reading 0 correct words per minute. The correlation between lower shares of low performers and the Gini coefficients is therefore not surprising, as pushing children from the left tails towards higher levels should indeed result in more equitable distributions. The fact that a lower Gini is correlated with larger shares of high performers is perhaps more surprising in isolation, but not in this context. A larger number of high performers, as we are measuring it, is a reflection of an underlying sub-distribution that is more centered towards the right (i.e. higher mean), and hence a larger proportion of the right tail makes the cut for “higher performers.”

From these two correlations, one could hypothesize that targeting either high or low performers might decrease inequality at the same rate, but this argument would miss two key points. First, as previously discussed, a larger number of high performers is just an artifact of where each language distribution is generally placed, not of a special group of high-performing students. Furthermore, targeting an intervention only at high-performing students (however they might be defined in different contexts), without moving the rest of the distribution, is likely to increase inequality instead. The second key issue is that the share of low-performing students is much higher than the share of high-performing students for all languages. Even languages with relatively high means such as Luganda have 53 % of students performing at 0 cwpm, and have only 20 % as “high performers.” Other extreme cases such as Lugwere have 96 % of students at 0 cwpm and 0 % at the high-performing echelons. Therefore, inequality-reducing policies that target the critically low-performing students are likely to find a much larger audience than those aiming at relatively high-performing students. Mathematically, a policy targeting the mean performance of the higher-performing students would need to be several times more effective at moving the mean than its counterpart targeting low-performing students to move the overall average by the same amount.
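
The arithmetic behind this last statement can be sketched under a simplifying assumption that is ours and not part of the analysis above: an intervention raises the score of each targeted child by an average amount and leaves all other children unchanged. Let $s_L$ and $s_H$ be the shares of low and high performers in a language group, and $\delta_L$ and $\delta_H$ the average gain per targeted child (these symbols are introduced here for illustration). The change in the overall mean is then the share times the gain, so equal changes in the overall mean require

$$s_H \, \delta_H = s_L \, \delta_L \quad\Longrightarrow\quad \frac{\delta_H}{\delta_L} = \frac{s_L}{s_H}.$$

With the Luganda shares reported above ($s_L = 0.53$, $s_H = 0.20$), this gives

$$\frac{\delta_H}{\delta_L} = \frac{0.53}{0.20} = 2.65,$$

so the gain per high-performing child would need to be roughly 2.65 times the gain per low-performing child. For Lugwere, where $s_H = 0$, no finite gain among high performers could move the overall mean.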

4. How is inequality distributed within countries?

Beyond the different determinants of inequality within certain language, country, or grade subgroups, it is also critical to understand how inequality is distributed within a country. In the particular case of education, inequality can be clustered around a “natural unit” of education delivery among the population: schools. This is a key empirical question particularly for the bottom of the pyramid, as it is pivotal to an understanding of whether the low-performing students are concentrated in certain schools or whether low performance is widespread across the whole geographic area covered by the data. In fact, previous work such as Brunner et al. (2018) has revealed that the between-school vs. within-school variation in learning, and in other measures like motivation or affect, can differ greatly by country. To explore this question in our context, we will follow a simple variance decomposition of the kind that is typically applied to TIMSS or PIRLS data. We base our approach on the method proposed by Foy (2005). In particular, we will understand the “between-school” variance as the intraclass correlation (ICC) within schools (a measure more familiarly used in determining ideal sample size in clustered random sampling, but useful also in this context), which is the between-school variance as the proportion of the sum of the two variance components, described in the following equation (Foy, 2005):

$$\mathrm{ICC} = \frac{\sigma^2_B}{\sigma^2_B + \sigma^2_W}$$

where $\sigma^2_B$ is the between-school variance, and $\sigma^2_W$ is the within-school variance. This approach is particularly enticing given the straightforward interpretation of the outcome: in this case, ICC is always between 0 and 1, and it represents the share of the total variance in the outcome (oral reading fluency) that can be explained by differences between schools. Furthermore, very similar approaches have been implemented in reports for international tests such as PISA (OECD, 2017), allowing us to compare our results with the results found in typically more developed countries (and much later grades).16 We display the variance decomposition for different data sets in Table 4 below, by testing round. One way to interpret these numbers is that the higher the values in the “between” columns, the more concentrated oral reading fluency is within certain schools, as differences in the outcome would be largely explained by differences between schools.
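
As an illustration of the between-school share described above, the following minimal sketch splits the total sum of squares of a score into a between-school and a within-school part. It is a plain, unweighted decomposition with made-up data and a function name of our choosing. It is not necessarily the estimator in Foy (2005), which may rely on variance-component estimates and sampling weights.

```python
import numpy as np

def variance_shares(scores, school_ids):
    """Return (between, within) shares of the total variation in scores."""
    scores = np.asarray(scores, dtype=float)
    school_ids = np.asarray(school_ids)
    grand_mean = scores.mean()
    between = 0.0
    within = 0.0
    for school in np.unique(school_ids):
        group = scores[school_ids == school]
        # Between: squared distance of the school mean from the grand mean,
        # counted once per child in the school.
        between += len(group) * (group.mean() - grand_mean) ** 2
        # Within: squared distance of each child from their own school mean.
        within += np.sum((group - group.mean()) ** 2)
    total = between + within
    if total == 0:
        raise ValueError("shares are undefined when all scores are identical")
    return between / total, within / total

# Hypothetical oral reading fluency scores (cwpm) for children in two schools.
scores = [0, 4, 6, 10]
schools = ["A", "A", "B", "B"]
between_share, within_share = variance_shares(scores, schools)
print(f"between: {between_share:.0%}, within: {within_share:.0%}")
```

If every child in a school had the same score, the between share would be 100 %. If all schools had the same mean, it would be 0 %.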

Table 4.

Variance decomposition of oral reading fluency between/within schools.

| Country | Language | Grade | Baseline: Between | Baseline: Within | Endline: Between | Endline: Within |
|---|---|---|---|---|---|---|
| Malawi | English | 1 | 11 % | 89 % | 13 % | 87 % |
| Malawi | English | 3 | 18 % | 82 % | 17 % | 83 % |
| DRC | Tshiluba | 3 | 19 % | 81 % | 24 % | 76 % |
| DRC | Kiswahili | 3 | 36 % | 64 % | 12 % | 88 % |
| DRC | Lingala | 3 | 34 % | 66 % | 19 % | 81 % |
| DRC | French | 4 | 20 % | 80 % | 28 % | 72 % |
| DRC | French | 5 | 32 % | 68 % | 27 % | 73 % |
| DRC | French | 6 | 31 % | 69 % | 43 % | 57 % |
| Egypt | Arabic | 2 | 35 % | 65 % | . | . |
| Kenya PRIMR | English | 1 | 34 % | 66 % | 47 % | 53 % |
| Kenya PRIMR | English | 2 | 39 % | 61 % | 43 % | 57 % |
| Kenya PRIMR | Kiswahili | 1 | 27 % | 73 % | 29 % | 71 % |
| Kenya PRIMR | Kiswahili | 2 | 32 % | 68 % | 31 % | 69 % |
| Philippines | Cebuano | 2 | 38 % | 62 % | 26 % | 74 % |
| Philippines | Ilokano | 2 | 36 % | 64 % | 36 % | 64 % |
| Philippines | Hiligaynon | 2 | 33 % | 67 % | 42 % | 58 % |
| Philippines | Maguindanaoan | 2 | 31 % | 69 % | 33 % | 67 % |
| Uganda | English | 1 | 8 % | 92 % | 35 % | 65 % |
| Uganda | English | 2 | 40 % | 60 % | 39 % | 61 % |
| Uganda | English | 3 | 39 % | 61 % | 31 % | 69 % |
| Uganda | English | 4 | 37 % | 63 % | 35 % | 65 % |
| Uganda | English | 5 | 37 % | 63 % | 35 % | 65 % |
| Uganda | Acoli | 1 | 4 % | 96 % | 9 % | 91 % |
| Uganda | Acoli | 2 | 19 % | 81 % | 19 % | 81 % |
| Uganda | Acoli | 3 | 13 % | 87 % | 19 % | 81 % |
| Uganda | Acoli | 4 | 11 % | 89 % | . | . |
| Uganda | Acoli | 5 | 25 % | 75 % | . | . |
| Uganda | Luganda | 1 | 6 % | 94 % | 25 % | 75 % |
| Uganda | Luganda | 2 | 36 % | 64 % | 17 % | 83 % |
| Uganda | Luganda | 3 | 22 % | 78 % | 13 % | 87 % |
| Uganda | Luganda | 4 | 10 % | 90 % | 11 % | 89 % |
| Uganda | Luganda | 5 | 21 % | 79 % | . | . |

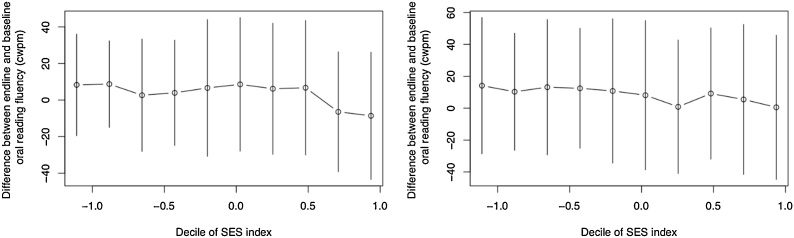

Table 4 displays three interesting patterns.17 First, the between-school variance in foundational literacy in LMIC has a wide range. The ICC for English in the Malawi sample for grade 1 was only 11 %, while it was 34 % for the Kenyan sample, also in grade 1 and also tested in English. In other words, performance is much more clustered by schools in the Kenya sample than in the Malawi sample (again, at least for these two samples). The second interesting feature in Table 4 is the typically low between-school variation for grade 1 across contexts, with the exception of Kenya. In countries where preschool penetration is low and uncommon even among the relatively wealthy sub-groups, it is natural to expect that children across all schools enter the first grade with close to no oral reading fluency. This means that the low between-school variation is due to the fact that there are many children at 0 during the baseline round, the modal experience across all schools. This is typically reversed by the endline, where Uganda provides a stark contrast: first grade Luganda goes from an ICC of 6 % to 25 %, and English from 8 % to 35 %. To contextualize this change, in the 2015 OECD PISA data only Iceland had an ICC of less than 8 %, and only 3 out of 35 OECD countries had an ICC higher than 35 %. In other words, achievement in Uganda goes from being as little clustered as the least concentrated country in the OECD to as clustered as the most concentrated countries in the OECD in just one year of schooling.