Abstract

A previous functional magnetic resonance imaging (fMRI) study by Weiss et al. (Weiss et al., Human Brain Mapping, 2018, 39, 4334–4348) examined brain specialization for phonological and semantic processing of spoken words in 5‐ to 6‐year‐old children and found evidence for specialization in the temporal but not the frontal lobe. According to a prominent neurocognitive model of language development (Skeide & Friederici, Nature Reviews Neuroscience, 2016, 17, 323–332), the frontal lobe matures later than the temporal lobe. Thus, the current study examined whether brain specialization in the frontal lobe can be observed in a slightly older cohort of 7‐ to 8‐year‐old children, using the same experimental and analytical approach as Weiss et al. (2018). One hundred and ten typically developing children were recruited and performed a sound judgment task, tapping into phonological processing, and a meaning judgment task, tapping into semantic processing, in the MRI scanner. Direct task comparisons showed that these children exhibited language specialization in both the temporal and the frontal lobes: the left posterior dorsal inferior frontal gyrus (IFG) showed greater activation for the sound than the meaning judgment task, whereas the left anterior ventral IFG and the left posterior middle temporal gyrus (MTG) showed greater activation for the meaning than the sound judgment task. These findings demonstrate that 7‐ to 8‐year‐old children have already begun to develop language‐related specialization in the frontal lobe, suggesting that early elementary schoolers rely on both specialized linguistic manipulation and representation mechanisms to perform language tasks.

Keywords: frontal lobe, language specialization, phonology, semantic

We designed an auditory sound judgment task tapping into phonological processing and an auditory meaning judgment task tapping into semantic processing. We found that 7‐ to 8‐year‐old children exhibited language specialization in both the temporal and the frontal lobes, with the left posterior dorsal inferior frontal gyrus (IFG) showing greater activation for the sound than the meaning judgment task, and the left anterior ventral IFG and the left posterior middle temporal gyrus (MTG) showing greater activation for the meaning than the sound judgment task. Our findings suggest that early elementary schoolers rely on both specialized linguistic manipulation and representation mechanisms to perform language tasks.

1. INTRODUCTION

According to the interactive specialization (IS) framework, cortical regions begin with broad functionality, but over the course of development, interactions between regions sharpen their functionality and thus lead to cortical specialization (Johnson, 2011). Language processing, a skill that takes many years to master, is crucial for success in daily life. Although the IS account has been supported in many domains, such as face processing, social cognition, reading, and cognitive control (Johnson, 2011), how the “language brain” specializes in developing children is less clear. In addition, several developmental disorders have been characterized by delayed or atypical patterns of specialization (Johnson, 2011). Thus, it is important to study language specialization in typically developing children: not only can it inform the IS account within the domain of language processing, but it can also serve as a reference for children with developmental language disorders.

Semantic and phonological processing are two critical components of language comprehension and serve as foundations for later reading acquisition (e.g., Frost, Madsbjerg, Niedersøe, Olofsson, & Sørensen, 2005; Wang, Joanisse, & Booth, 2020). According to neurocognitive models of language processing (e.g., Binder, Desai, Graves, & Conant, 2009; Friederici & Gierhan, 2013; Hickok & Poeppel, 2007), the left posterior superior temporal gyrus (STG), supramarginal gyrus (SMG), inferior parietal lobule (IPL), and posterior dorsal inferior frontal gyrus (dIFG) support phonological processing. In contrast, the left middle temporal gyrus (MTG), angular gyrus (AG), anterior fusiform gyrus (FG), and anterior ventral inferior frontal gyrus (vIFG) are associated with semantic processing. Language specialization, or language‐related activation that is specific to a particular task, is best supported by evidence from double dissociations, in which linguistic processes are directly compared to isolate the brain regions that specifically support each one. Using this technique, previous studies have consistently shown that adults have established specialized phonological and semantic processing networks consisting of these frontal and temporoparietal regions (e.g., Booth et al., 2006; Devlin, Matthews, & Rushworth, 2003; McDermott, Petersen, Watson, & Ojemann, 2003; Poldrack et al., 1999; Price & Mechelli, 2005; Price, Moore, Humphreys, & Wise, 1997). However, it is less clear when such specialization is established in developing children.

Many previous studies have separately examined phonological or semantic processing in children (e.g., Balsamo, Xu, & Gaillard, 2006; Brennan, Cao, Pedroarena‐Leal, McNorgan, & Booth, 2013; Cao et al., 2017; Cone, Burman, Bitan, Bolger, & Booth, 2008; Dębska et al., 2016; Desroches et al., 2010; Kovelman et al., 2012; Paquette et al., 2015; Raschle, Zuk, & Gaab, 2012; Weiss‐Croft & Baldeweg, 2015). However, because only one task was examined in each of these studies, questions about specialization could not be addressed. To date, only a few studies have directly contrasted phonological and semantic processing in children (Landi, Mencl, Frost, Sandak, & Pugh, 2010; Liu et al., 2018; Mathur, Schultz, & Wang, 2020). Landi et al. (2010) recruited children who were 9 to 19 years old and asked them to perform a cross‐modal (auditory and visual) categorical meaning judgment task and a visual rhyme judgment task. They found that children engaged the left STG and AG more in the meaning task than in the rhyming task. While greater recruitment of the AG during semantic processing is consistent with prior work on language specialization in adults, greater engagement of the STG, a region implicated in phonological processing, was unexpected. However, given that the stimuli in the meaning task were presented both visually and auditorily, whereas the stimuli in the rhyming task were presented only visually, this unexpected finding could be due to the modality differences across tasks. In contrast to their findings for the meaning task, Landi et al. (2010) did not find evidence that children engaged any regions more in the rhyming task than in the meaning task, possibly because the rhyming task was too easy for the children examined. Liu et al. (2018) recruited children aged 11 to 13 years and compared their brain activation during a rhyming and a meaning association task on visually presented Chinese characters. Unlike Landi et al. (2010), the authors did not find any significant differences between the two tasks. A lack of evidence for specialization was also observed in a study using a univariate analysis approach to examine processing in 5‐ to 7‐year‐old children during a rhyme judgment and a meaning judgment task on visually presented English words (Mathur et al., 2020). However, using a multi‐voxel pattern analysis (MVPA) searchlight approach, Mathur et al. (2020) did find that the posterior dIFG was sensitive to the rhyme task and the anterior vIFG was sensitive to the semantic task. Although the MVPA results suggest a sub‐division of function within the IFG, this interpretation was based on visual inspection of the brain activation maps within each task and not on a direct comparison across tasks. Therefore, whether these cortical regions within the frontal lobe are specialized for, or merely support, semantic and phonological processing remains unclear.

The overall lack of evidence for language‐related specialization in children observed across these studies may in part be driven by the use of visual stimuli. The visual presentation of the words places demands on decoding skills before phonological or semantic judgments can be made. According to the connectionist model of reading proposed by Harm and Seidenberg (2004), orthography, phonology, and semantics are interconnected and are automatically co‐activated during reading. Thus, semantic and phonological processing regions are more likely to be recruited during both the semantic and phonological tasks when visual words are used, thereby reducing the likelihood of finding a double dissociation. Weiss et al. (2018) were among the first to examine language specialization using auditory sound and meaning judgment tasks. The authors found a double dissociation in 5‐ to 6‐year‐old children, such that the left STG showed greater activation for the sound than the meaning task, and the left MTG exhibited greater activation for the meaning than the sound task. However, no evidence for specialization in the frontal lobe was observed, suggesting that this lobe has not yet matured in these young children. This interpretation is in accordance with the neurocognitive model of language development proposed by Skeide and Friederici (2016), which argues that the temporal lobe develops earlier than the frontal lobe. Given that adults show language‐related specialization in the left IFG (e.g., Booth et al., 2006; Devlin et al., 2003; McDermott et al., 2003; Poldrack et al., 1999; Price & Mechelli, 2005), it is clear that frontal regions do eventually become part of the specialized phonological and semantic networks. However, when this frontal lobe specialization for language processing emerges remains unclear.

The goal of the current study was to examine whether phonological and semantic specialization in the frontal lobe can be observed in slightly older children, aged 7 to 8 years, using the same auditory experimental paradigm and analytical approach as Weiss et al. (2018). Consistent with Weiss et al. (2018), three levels of analysis were used to examine language specialization. First, we directly contrasted activation elicited by an auditory sound judgment task and an auditory meaning judgment task to examine the double dissociation between phonological and semantic processing. Second, we contrasted a hard and an easy condition within each task (onset vs. rhyme and weak vs. strong association) to examine whether the specialized regions for each task were also sensitive to within‐task difficulty. Third, we examined the correlation between neural specialization for phonological or semantic processing and two standardized behavioral measures, assessing phonological awareness and semantic association skill, to determine whether higher skill was associated with greater specialization.

Based on Weiss et al. (2018), which found evidence for specialization in the temporal lobe in 5‐ to 6‐year‐old children, we predicted that language specialization in the temporal lobe would persist in 7‐ to 8‐year‐old children. More specifically, we predicted that the left STG would show greater activation for the sound than the meaning judgment task, and the left MTG would show greater activation for the meaning than the sound judgment task. While Weiss et al. (2018) did not find evidence of frontal specialization, Mathur et al. (2020) showed a sub‐division of phonological and semantic processing in the frontal lobe during visual word judgment tasks in 5‐ to 7‐year‐old children using an MVPA searchlight approach. Thus, we expected to observe language specialization in the frontal lobe in 7‐ to 8‐year‐old children during our auditory sound and meaning tasks. We predicted that the anterior vIFG would be more engaged for the meaning than the sound judgment task, and the posterior dIFG would be more engaged for the sound than the meaning judgment task. In addition, Weiss et al. (2018) found further evidence for phonological and semantic specialization in the temporal lobe using a parametric modulation approach and brain‐behavioral correlations. Thus, as further evidence for phonological specialization in our 7‐ to 8‐year‐old children, we predicted that phonological processing regions, such as the left STG and posterior dIFG, would show greater activation for the onset than the rhyme condition and that brain activation for the sound minus the meaning judgment task in these regions would be correlated with phonological awareness skill. Finally, as further evidence for semantic specialization in our 7‐ to 8‐year‐old children, we predicted that semantic processing regions, such as the left MTG and anterior vIFG, would show greater activation for the weak than the strong association condition and that brain activation for the meaning minus the sound judgment task in these regions would be correlated with semantic association skill.

2. METHODS

2.1. Participants

Two hundred and four children were originally enrolled in this study. Three were excluded for not completing the MRI scanning sessions, 25 for excessive movement during the fMRI tasks (see Section 2.4 for criteria), 55 for low fMRI task accuracy (see Section 2.2 for criteria), one for left‐handedness, six for not being mainstream English speakers, one for a missing phonological skill measure, and three for a nonverbal IQ score lower than 80. Thus, 110 children (65 females; mean age = 7.38 years, SD = 0.29, range = 7.05–8.27 years) were included in the final sample for this study. All participants were recruited in the Austin, Texas, metropolitan area. The Institutional Review Board at the University of Texas at Austin approved all experimental procedures. Consent was obtained from participants' parents or guardians, and assent was obtained from children before participation in our study.
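As a quick arithmetic check, the exclusion counts reported above can be tallied against the final sample. The dictionary keys are shorthand labels of our own; only the counts come from the text.

```python
# Exclusion counts as reported in Section 2.1 (labels are our own shorthand).
ENROLLED = 204
EXCLUSIONS = {
    "incomplete MRI session": 3,
    "excessive movement": 25,
    "low in-scanner accuracy": 55,
    "left-handed": 1,
    "not a mainstream English speaker": 6,
    "missing phonological measure": 1,
    "nonverbal IQ below 80": 3,
}

def final_sample_size(enrolled: int, exclusions: dict) -> int:
    """Return the number of children remaining after all exclusions."""
    return enrolled - sum(exclusions.values())

print(final_sample_size(ENROLLED, EXCLUSIONS))  # 110
```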

Parents or guardians were asked to complete an exclusionary survey and a developmental history questionnaire. Participants then completed several screening tests, which included the Diagnostic Evaluation of Language Variation (DELV) Part I Language Variation Status subtest (Seymour, Roeper, & De Villiers, 2003) and five handedness tasks in which the child had to pretend to write, erase, pick, open, and throw something. Participants were included if they met the following criteria: (a) right‐handed, defined as completing at least 3 of the 5 handedness tasks with their right hand; (b) a mainstream American English speaker, defined as having 9 of 15 responses (7‐year‐olds) or 11 of 15 responses (8‐year‐olds) categorized as mainstream English on the DELV Part I Language Variation Status subtest, based on the scoring manual for this test; (c) no learning, neurological, or psychiatric disorders, according to the developmental history questionnaire; and (d) normal hearing and normal or corrected‐to‐normal vision, as reported by each child's parent or guardian.

Children also completed a series of standardized tests to assess their language skills and nonverbal IQ. General language skill was measured using the Core Language Scale (CLS) score of the Clinical Evaluation of Language Fundamentals, Fifth Edition (CELF‐5, Wiig, Secord, & Semel, 2013). Nonverbal IQ was measured using the Kaufman Brief Intelligence Test, Second Edition (KBIT‐2, Kaufman & Kaufman, 2004). All children included in the analyses had normal IQ, as indexed by a standardized score of 80 or higher on the KBIT‐2, and typical language abilities, as indexed by a standardized CLS score of 80 or higher on the CELF‐5. Children's phonological skill was measured using the phoneme isolation subtest of the Comprehensive Test of Phonological Processing, Second Edition (CTOPP‐2, Wagner, Torgesen, & Rashotte, 2013). This subtest requires participants to produce part of a word, answering questions such as “What is the first sound in the word man?” It was selected because it resembles the in‐scanner phonological task and is similar to the sound matching subtest used with younger children in Weiss et al. (2018). Children's semantic association skill was measured using the word classes subtest of the CELF‐5, in which children are asked to choose two out of three or four words that go together semantically. This subtest was selected because it was also used by Weiss et al. (2018). Descriptive statistics for the standardized measures are shown in Table 1.
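The screening criteria in this section can be summarized as a single inclusion check. The sketch below is illustrative only: `ScreeningResult`, `meets_criteria`, and `DELV_MIN_MAINSTREAM` are hypothetical names, the DELV cutoffs are read as minimum counts (our assumption), and criteria (c) and (d), which rest on parent report, are not modeled.

```python
from dataclasses import dataclass

@dataclass
class ScreeningResult:
    right_hand_tasks: int      # handedness tasks (of 5) performed with the right hand
    mainstream_responses: int  # DELV Part I responses (of 15) scored as mainstream English
    age_years: int             # 7 or 8
    kbit2_nonverbal: int       # KBIT-2 nonverbal standard score
    celf5_cls: int             # CELF-5 Core Language Scale standard score

# Assumption: the DELV cutoffs are minimums ("at least 9" / "at least 11").
DELV_MIN_MAINSTREAM = {7: 9, 8: 11}

def meets_criteria(s: ScreeningResult) -> bool:
    """Hypothetical check of criteria (a), (b), and the IQ/language score cutoffs."""
    right_handed = s.right_hand_tasks >= 3
    mainstream = s.mainstream_responses >= DELV_MIN_MAINSTREAM[s.age_years]
    normal_iq = s.kbit2_nonverbal >= 80
    typical_language = s.celf5_cls >= 80
    return right_handed and mainstream and normal_iq and typical_language

print(meets_criteria(ScreeningResult(5, 10, 7, 105, 98)))
```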

TABLE 1.

Descriptive statistics for the standardized tests

| Tests | Raw scores, Mean (SD) | Raw scores, Range | Standardized scores, Mean (SD) | Standardized scores, Range |
|---|---|---|---|---|
| KBIT‐2 nonverbal IQ | 27.3 (6.4) | 15–41 | 112.4 (16.2) | 80–147 |
| CELF‐5 core language scale | 44.5 (8.4) | 27–63 | 107.3 (13.8) | 81–139 |
| CTOPP‐2 phoneme isolation | 24.4 (4.7) | 9–31 | 10.5 (2.5) | 5–16 |
| CELF‐5 word classes | 24.1 (4.6) | 7–37 | 12.7 (3.3) | 3–19 |

2.2. Procedure

2.2.1. The sound judgment task

The sound judgment task taps into children's phonological processing of spoken words. In this task, participants heard, through earphones, two sequentially presented one‐syllable words and were asked to judge whether the two words shared any of the same sounds. The task included three experimental conditions: Rhyme, Onset, and Unrelated (see Table 2). Children were expected to press the “yes” button for both the onset and rhyme conditions and the “no” button for the unrelated condition. In addition to the three experimental conditions, the task included a perceptual control condition in which participants heard two sequentially presented frequency‐modulated sounds (i.e., “shh‐shh”) and were asked to press the “yes” button. Participants completed two 48‐trial runs with 12 trials per condition per run, for a total of 96 trials (24 trials for each of the four conditions). Each auditory word had a duration ranging from 439 to 706 ms. The second word was presented approximately 1,000 ms after the onset of the first word. Overall, the stimulus duration in each trial (i.e., the two words with a brief pause in between) ranged from 1,490 to 1,865 ms and was followed by a jittered response interval ranging from 1,500 to 2,736 ms. A blue circle appeared simultaneously with the auditory presentation of the stimuli to help maintain attention on the task. The blue circle changed to yellow to provide a 1,000 ms warning for participants to respond, if they had not already done so, before the next trial began. The total trial duration ranged from 3,000 to 4,530 ms. Each run lasted ~3 min.
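To illustrate how the trial counts and timing parameters fit together, the sketch below generates a hypothetical schedule for the two 48‐trial runs of the sound task. It assumes a fully randomized trial order, uniformly distributed response intervals, and a trial duration equal to stimulus duration plus response interval; per‐trial stimulus durations are drawn at random as placeholders for the durations of the actual recordings. None of this reflects the authors' actual randomization scheme, and `build_run` is a hypothetical name.

```python
import random

CONDITIONS = ("Onset", "Rhyme", "Unrelated", "Perceptual")
TRIALS_PER_CONDITION_PER_RUN = 12
N_RUNS = 2

def build_run(seed, stim_range=(1490, 1865), jitter_range=(1500, 2736)):
    """Return a list of (onset_ms, condition) pairs for one hypothetical run."""
    rng = random.Random(seed)
    trials = [c for c in CONDITIONS for _ in range(TRIALS_PER_CONDITION_PER_RUN)]
    rng.shuffle(trials)  # assumption: fully randomized order
    schedule, t = [], 0
    for condition in trials:
        schedule.append((t, condition))
        stim_ms = rng.randint(*stim_range)      # placeholder for the recorded word-pair duration
        jitter_ms = rng.randint(*jitter_range)  # assumption: uniform jitter
        t += stim_ms + jitter_ms
    return schedule

runs = [build_run(seed) for seed in range(N_RUNS)]
print(sum(len(run) for run in runs))  # 96
```

With trial durations of roughly 3.0 to 4.5 s, 48 trials take about 2.4 to 3.6 min, in line with the reported run length of ~3 min. For the meaning task, the ranges would change to 1,500–1,865 ms and 1,800–2,701 ms.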

TABLE 2.

Experimental conditions in the sound and meaning judgment tasks

| Task | Condition | Response | Brief explanation | Example |
|---|---|---|---|---|
| Sound task | Onset | Yes | Two words share the first sound | Coat‐cup |
| | Rhyme | Yes | Two words share the final sound | Wide‐ride |
| | Unrelated | No | Two words do not share sounds | Zip‐cone |
| | Perceptual | Yes | Frequency‐modulated noise | Shh‐shh |
| Meaning task | Low | Yes | Two words are weakly associated in meaning | Save‐keep |
| | High | Yes | Two words are strongly associated in meaning | Dog‐cat |
| | Unrelated | No | Two words are not related in meaning | Map‐hut |
| | Perceptual | Yes | Frequency‐modulated noise | Shh‐shh |

Note: In the sound judgment task, children were asked: “Do the two words share any of the same sounds?” In the meaning judgment task, children were asked: “Do the two words go together?”

The auditory word conditions were designed according to the following standards. For the onset condition, the word pairs shared only the initial phoneme (corresponding to one letter of their written form). For the rhyme condition, the word pairs shared the same final vowel and final phoneme or cluster (corresponding to 2 to 3 letters at the end of their written form). For the unrelated condition, the word pairs shared no phonemes (or letters in their written form). All words were monosyllabic, and no word pair had a semantic association according to the University of South Florida Free Association Norms (Nelson, McEvoy, & Schreiber, 2004). There were no significant differences between conditions in word length, number of phonemes, written word frequency, orthographic neighbors, phonological neighbors, semantic neighbors, or number of morphemes for either the first or the second word in a trial, either within a run (Rhyme vs. Onset: ps > .123; Rhyme or Onset vs. Unrelated: ps > .123) or across runs (Rhyme: ps > .162; Onset: ps > .436; Unrelated: ps > .436; linguistic characteristics were obtained from the English Lexicon Project https://elexicon.wustl.edu/, Balota et al., 2007). There were also no significant differences between conditions in phonotactic probabilities, including both phoneme and biphone probabilities, for either the first or the second word in a trial, either within a run (Rhyme vs. Onset: ps > .400; Rhyme or Onset vs. Unrelated: ps > .456) or across runs (Rhyme: ps > .068; Onset: ps > .225; Unrelated: ps > .206; phonotactic probabilities were obtained from a phonotactic probability calculator https://calculator.ku.edu/phonotactic/English/words, Vitevitch & Luce, 2004).
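The text reports that the conditions did not differ on lexical and phonotactic properties but does not name the statistical test used. As a hedged illustration of one way such a matching check can be run, the sketch below swaps in a two‐sided permutation test on the difference in condition means; `permutation_p` is a hypothetical name, and the word‐length values in the usage example are made up.

```python
import random
from statistics import mean

def permutation_p(a, b, n_perm=10_000, seed=0):
    """Two-sided permutation p-value for the difference in means between a and b."""
    rng = random.Random(seed)
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # random relabeling of items to the two conditions
        diff = abs(mean(pooled[:len(a)]) - mean(pooled[len(a):]))
        if diff >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)  # add-one correction keeps p above 0

# Hypothetical word lengths (letters) for the first word in Onset vs. Rhyme pairs.
onset_lengths = [4, 3, 4, 5, 4, 3]
rhyme_lengths = [4, 4, 3, 5, 4, 4]
print(permutation_p(onset_lengths, rhyme_lengths))
```

A large p‐value here only indicates no detectable difference; it does not by itself establish that the conditions are equivalent.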

2.2.2. The meaning judgment task

The meaning judgment task taps into children's semantic processing of spoken words. In this task, participants heard, through earphones, two sequentially presented one‐ to two‐syllable words and were asked to determine whether the two words went together semantically. The task included three experimental conditions: Low association, High association, and Unrelated (see Table 2). Children were expected to press the “yes” button for both the low and high association conditions and the “no” button for the unrelated condition. In addition to the three experimental conditions, the task included a perceptual control condition in which participants heard two sequentially presented frequency‐modulated sounds (i.e., “shh‐shh”) and were asked to press the “yes” button. Participants completed two 48‐trial runs with 12 trials per condition per run, for a total of 96 trials (24 trials for each of the four conditions). Each auditory word had a duration ranging from 500 to 700 ms. The second word was presented ~1,000 ms after the onset of the first word. Overall, the stimulus duration in each trial (i.e., the two words with a brief pause in between) ranged from 1,500 to 1,865 ms and was followed by a jittered response interval ranging from 1,800 to 2,701 ms. A blue circle appeared simultaneously with the auditory presentation of the stimuli to help maintain attention on the task. The blue circle changed to yellow to provide a 1,000 ms warning for participants to respond, if they had not already done so, before the next trial began. The total trial duration ranged from 3,300 to 4,565 ms. Each run lasted ~3 min.

The auditory word conditions were designed according to the following standards. The low association condition was defined as word pairs having a weak semantic association with an association strength between 0.14 and 0.39 (M = 0.27, SD = 0.07). The high association condition was defined as word pairs having a strong semantic association with an association strength between 0.40 and 0.85 (M = 0.64, SD = 0.13). The unrelated condition was defined as word pairs that shared no semantic association. Associative strength was derived from Forward Cue‐to‐Target Strength (FSG) values reported by the University of South Florida Free Association Norms (Nelson, McEvoy, & Schreiber, 2004). There were no significant differences in association strength between the two runs of the meaning judgment task (ps > .425). There were also no significant differences between conditions in word length, number of phonemes, number of syllables, written word frequency, orthographic neighbors, phonological neighbors, semantic neighbors, and number of morphemes for either the first or the second word in a trial either within runs (High vs. Low: ps > .167; High or Low vs. Unrelated: ps > .068) or across runs (High: ps > .069; Low: ps > .181; Unrelated: ps > .097; linguistic characteristics were obtained from the English Lexicon Project https://elexicon.wustl.edu/, Balota et al., 2007).
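The association‐strength bands above translate directly into a labeling rule. In this sketch (the function name is hypothetical), pairs with no recorded FSG value or an FSG of 0 are treated as unrelated, and values that fall between or outside the reported bands are flagged rather than assigned; both choices are our assumptions.

```python
from typing import Optional

def association_condition(fsg: Optional[float]) -> str:
    """Label a word pair by its forward cue-to-target strength (FSG)."""
    if fsg is None or fsg == 0:
        return "Unrelated"  # assumption: no recorded free-association link
    if 0.14 <= fsg <= 0.39:
        return "Low"
    if 0.40 <= fsg <= 0.85:
        return "High"
    return "outside reported bands"  # e.g., 0.05, 0.395, or 0.90

for value in (None, 0.27, 0.64, 0.90):
    print(value, association_condition(value))
```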

To ensure that participants understood the tasks and to acclimate them to the scanner environment, all children completed the fMRI tasks, with different stimuli, in a mock scanner and on a computer before the fMRI scanning session.

Participants who scored within an acceptable accuracy range and showed no response bias on the fMRI tasks were included in the final analysis. Specifically, to be included, children had to score at least 50% on the perceptual and rhyme/strong conditions (to ensure that they were engaged in and capable of performing the tasks), and the accuracy difference between the rhyme/strong condition (requiring a “yes” response) and the unrelated condition (requiring a “no” response) had to be lower than 40% (to ensure that there was no apparent response bias during the tasks). The mean, SD, and range of accuracies and reaction times for each condition of the sound and meaning judgment tasks for the final sample are shown in Table 3. Average reaction times for each condition were based only on correct trials and were calculated from the onset of the second word for the three experimental word conditions and from the onset of the trial for the perceptual control condition. Reaction times more than 3 SD below or above the mean of all correct trials within a run, or shorter than 250 ms, were excluded.
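The inclusion and trimming rules can be written as two small functions. This is a sketch under stated assumptions: accuracies are proportions, the bias criterion uses the absolute difference between the rhyme/strong and unrelated conditions, and the 3‐SD bounds are computed from all correct trials in a run (sample SD) before the 250 ms floor is applied. The function names are hypothetical; in practice the accuracy check would be applied to each task separately.

```python
from statistics import mean, stdev

def passes_accuracy_criteria(perceptual: float, related: float, unrelated: float) -> bool:
    """`related` is the rhyme (sound task) or strong/high (meaning task) accuracy."""
    engaged = perceptual >= 0.50 and related >= 0.50
    no_bias = abs(related - unrelated) < 0.40  # assumption: absolute difference
    return engaged and no_bias

def trim_rts(correct_rts_ms, sd_cutoff=3.0, floor_ms=250.0):
    """Drop correct-trial RTs beyond +/- sd_cutoff SD of the run mean, or below floor_ms."""
    m, s = mean(correct_rts_ms), stdev(correct_rts_ms)
    return [rt for rt in correct_rts_ms
            if rt >= floor_ms and abs(rt - m) <= sd_cutoff * s]

print(passes_accuracy_criteria(0.92, 0.88, 0.81))
print(trim_rts([200, 1000, 1100, 1200]))  # [1000, 1100, 1200]
```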

TABLE 3.

Behavioral performance during the sound and the meaning judgment tasks

| Tasks | Conditions | Accuracy (%), Mean (SD) | Accuracy (%), Range | Reaction time (ms), Mean (SD) | Reaction time (ms), Range |
|---|---|---|---|---|---|
| Sound task | Onset | 69.7 (14.6) | 25–96 | 1,329 (199) | 982–1,873 |
| | Rhyme | 87.9 (10.0) | 58–100 | 1,253 (165) | 905–1,701 |
| | Unrelated | 84.4 (10.6) | 46–100 | 1,360 (174) | 953–1,851 |
| | Perceptual | 94.6 (6.6) | 67–100 | 1,286 (428) | 639–2,306 |
| Meaning task | Low | 84.6 (11.4) | 46–100 | 1,330 (192) | 841–1,743 |
| | High | 90.0 (7.1) | 67–100 | 1,234 (168) | 835–1,645 |
| | Unrelated | 84.2 (10.1) | 58–100 | 1,389 (180) | 996–1,817 |
| | Perceptual | 96.1 (4.9) | 75–100 | 1,284 (450) | 529–2,396 |

2.3. Data acquisition

Participants lay in the scanner with a response button box placed in their right hand. To keep participants focused on the task, visual stimuli were projected onto a screen and viewed via a mirror attached to the head coil. Participants wore earphones to hear the auditory stimuli, and two pads placed between the earphones and the head coil were used to attenuate scanner noise.

Images were acquired using a Siemens 3.0 T Skyra scanner with a 64‐channel head coil. The blood oxygen level dependent (BOLD) signal was measured using a susceptibility‐weighted single‐shot echo planar imaging (EPI) method. Functional images were acquired with multiband EPI using the following parameters: TE = 30 ms, flip angle = 80°, matrix size = 128 × 128, FOV = 256 × 256 mm², slice thickness = 2 mm without gaps, number of slices = 56, TR = 1,250 ms, multiband acceleration factor = 4, voxel size = 2 × 2 × 2 mm. A high‐resolution T1‐weighted MPRAGE scan was acquired with the following parameters: TR = 1,900 ms, TE = 2.34 ms, matrix size = 256 × 256, FOV = 256 × 256 mm², slice thickness = 1 mm, number of slices = 192.
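The acquisition parameters are internally consistent, as a few lines of arithmetic show: a 256 mm field of view over a 128 × 128 matrix gives 2 mm in‐plane voxels, 56 contiguous 2 mm slices cover 112 mm, and a multiband factor of 4 means the 56 slices are acquired in 14 groups of 4 simultaneously excited slices per TR. The volume count per run is approximate, since it assumes a run of exactly 180 s; all function names are our own.

```python
def inplane_voxel_mm(fov_mm: float, matrix: int) -> float:
    """In-plane voxel size from field of view and matrix size."""
    return fov_mm / matrix

def slab_coverage_mm(n_slices: int, slice_mm: float, gap_mm: float = 0.0) -> float:
    """Through-plane coverage of contiguous (or gapped) slices."""
    return n_slices * slice_mm + (n_slices - 1) * gap_mm

def slice_groups_per_tr(n_slices: int, mb_factor: int) -> int:
    """Number of simultaneous-excitation groups needed per TR."""
    if n_slices % mb_factor:
        raise ValueError("slice count must be divisible by the multiband factor")
    return n_slices // mb_factor

def approx_volumes(run_s: float, tr_s: float) -> int:
    """Approximate number of volumes in a run of the given length."""
    return round(run_s / tr_s)

print(inplane_voxel_mm(256, 128), slab_coverage_mm(56, 2.0), slice_groups_per_tr(56, 4))  # 2.0 112.0 14
print(approx_volumes(180, 1.25))  # 144 (assuming a 3.0 min run)
print(inplane_voxel_mm(256, 256))  # 1.0 (T1-weighted MPRAGE)
```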

2.4. Data analysis

Statistical Parametric Mapping 12 (SPM12, http://www.fil.ion.ucl.ac.uk/spm) was used to analyze the MRI data. First, all functional images were realigned to their mean functional image across runs. The anatomical image was segmented and warped to a pediatric tissue probability map template to obtain the transformation field. An anatomical brain mask was created by combining the segmented tissue classes (i.e., gray matter, white matter, and cerebrospinal fluid) and then applied to the original anatomical image to produce a skull‐stripped anatomical image. All functional images, including the mean functional image, were then co‐registered to the skull‐stripped anatomical image. Next, all functional images were normalized to the pediatric template by applying the transformation field and were resampled to a voxel size of 2 × 2 × 2 mm. The pediatric tissue probability map template was created using CerebroMatic (Wilke et al., 2017), a tool that generates SPM12‐compatible pediatric templates for user‐defined age, sex, and magnetic field strength parameters. The unified segmentation parameters estimated from 1,919 participants (Wilke et al., 2017, downloaded from https://www.medizin.uni‐tuebingen.de/kinder/en/research/neuroimaging/software/) were used. To obtain our age‐appropriate pediatric template, we specified a magnetic field strength of 3.0 T, an age range of 7 to 9 years in 1‐month intervals, and two females and two males at each age interval, resulting in a sample of 100 participants. After normalization, all functional images were smoothed with a 6 mm full‐width‐at‐half‐maximum (FWHM) isotropic Gaussian kernel.
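Two pieces of arithmetic from this paragraph can be made explicit. Reading the CerebroMatic age range as inclusive (7 years 0 months through 9 years 0 months, our assumption), 1‐month steps give 25 age points, and two females plus two males per point give the reported 100 participants. Separately, a Gaussian kernel specified by its FWHM has a standard deviation of FWHM / (2√(2 ln 2)). The sketch only enumerates the demographic specification; it does not call CerebroMatic or SPM, and the function names are hypothetical.

```python
import math

def cerebromatic_spec(start_years=7, end_years=9, step_months=1, females=2, males=2):
    """Enumerate hypothetical (age_in_years, sex) entries for the template specification."""
    spec = []
    # Assumption: both endpoints (7y0m and 9y0m) are included.
    for months in range(start_years * 12, end_years * 12 + 1, step_months):
        age = months / 12
        spec += [(age, "F")] * females + [(age, "M")] * males
    return spec

def fwhm_to_sigma(fwhm_mm: float) -> float:
    """Standard deviation of a Gaussian with the given full width at half maximum."""
    return fwhm_mm / (2 * math.sqrt(2 * math.log(2)))

spec = cerebromatic_spec()
print(len(spec))                     # 100
print(round(fwhm_to_sigma(6.0), 3))  # 2.548 (mm), i.e., about 1.27 voxels at 2 mm
```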

To reduce movement effects on the brain signal, Art‐Repair (Mazaika, Hoeft, Glover, & Reiss, 2009) was used to identify outlier volumes, which were defined as those with volume‐to‐volume head movement exceeding 1.5 mm in any direction, head movements greater than 5 mm in any direction from the mean functional image across runs, or deviations of more than 4% from the mean global signal intensity. These outlier volumes were then repaired using interpolation based on the nearest non‐outlier volumes. Participants included in our study had no more than 10% of the volumes and no more than six consecutive volumes repaired within each run. Six motion parameters estimated during realignment were entered during first level modeling as regressors of no interest, and the repaired volumes were deweighted.
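The outlier and inclusion rules described above can be sketched as follows. This is not ArtRepair's implementation: it simplifies head motion to three translation parameters per volume, measures drift from the mean position within a run rather than from the mean functional image across runs, expresses global signal deviation as a percentage of the run mean, and omits the interpolation step. All names are hypothetical.

```python
from statistics import mean

def flag_outliers(translations, global_signal, vv_mm=1.5, from_mean_mm=5.0, gs_pct=4.0):
    """Flag volumes exceeding the movement or global-signal thresholds (simplified)."""
    mean_pos = [mean(t[axis] for t in translations) for axis in range(3)]
    gs_mean = mean(global_signal)
    flags = []
    for i, pos in enumerate(translations):
        jump = i > 0 and any(abs(pos[a] - translations[i - 1][a]) > vv_mm for a in range(3))
        drift = any(abs(pos[a] - mean_pos[a]) > from_mean_mm for a in range(3))
        gs_dev = abs(global_signal[i] - gs_mean) / gs_mean * 100 > gs_pct
        flags.append(bool(jump or drift or gs_dev))
    return flags

def longest_run(flags):
    """Length of the longest stretch of consecutive flagged volumes."""
    best = current = 0
    for flagged in flags:
        current = current + 1 if flagged else 0
        best = max(best, current)
    return best

def run_is_usable(flags, max_fraction=0.10, max_consecutive=6):
    """At most 10% of volumes and at most six consecutive volumes flagged for repair."""
    return sum(flags) / len(flags) <= max_fraction and longest_run(flags) <= max_consecutive

# Hypothetical run: a single 2 mm jump at volume 5 flags volumes 5 and 6.
translations = [(0.0, 0.0, 0.0)] * 20
translations[5] = (2.0, 0.0, 0.0)
flags = flag_outliers(translations, [100.0] * 20)
print(sum(flags), run_is_usable(flags))
```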

Statistical analyses at the first level were calculated using an event‐related design. A high‐pass filter with a cutoff of 128 s and the SPM default mask threshold of 0.5 were applied. All experimental trials were included as individual events and modeled using a canonical hemodynamic response function (HRF). All four conditions of each task in each run (i.e., Onset, Rhyme, Unrelated, and Perceptual in the sound judgment task, and Low, High, Unrelated, and Perceptual in the meaning judgment task) were entered into the general linear model (GLM) as regressors of interest. We compared the Related conditions (i.e., Onset + Rhyme) with the Perceptual condition during the sound judgment task to obtain the brain activation map for phonological processing within each participant. We compared the Related conditions (i.e., Low + High) with the Perceptual condition during the meaning judgment task to obtain the brain activation map for semantic processing within each participant. To examine neural specialization within each participant, we compared brain activation between the two tasks (the sound task [Related > Perceptual] > the meaning task [Related > Perceptual], or the meaning task [Related > Perceptual] > the sound task [Related > Perceptual]). To examine the parametric modulation effect within each participant, we contrasted the two Related conditions within each task (Onset > Rhyme in the sound task, and Low > High in the meaning task).
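The first‐level contrasts can be expressed as weight vectors over the condition regressors. The sketch assumes a hypothetical design‐matrix layout with two sound runs followed by two meaning runs, each contributing four condition columns in the order listed; the six motion regressors and run constants that SPM would also include receive zero weight and are omitted. Weighting the two Related conditions at 0.5 each against the Perceptual condition at -1 is our choice of a balanced contrast, not necessarily the authors'.

```python
SOUND = ("Onset", "Rhyme", "Unrelated", "Perceptual")
MEANING = ("Low", "High", "Unrelated", "Perceptual")
# Hypothetical column order: two sound runs, then two meaning runs (condition columns only).
RUNS = [("sound", SOUND), ("sound", SOUND), ("meaning", MEANING), ("meaning", MEANING)]

def contrast(weights_by_task):
    """Flatten per-task condition weights into one weight per condition column."""
    vector = []
    for task, conditions in RUNS:
        weights = weights_by_task.get(task, {})
        vector.extend(weights.get(cond, 0.0) for cond in conditions)
    return vector

def negate(weights):
    return {cond: -w for cond, w in weights.items()}

SOUND_RELATED = {"Onset": 0.5, "Rhyme": 0.5, "Perceptual": -1.0}
MEANING_RELATED = {"Low": 0.5, "High": 0.5, "Perceptual": -1.0}

CONTRASTS = {
    "sound: Related > Perceptual": contrast({"sound": SOUND_RELATED}),
    "meaning: Related > Perceptual": contrast({"meaning": MEANING_RELATED}),
    "sound > meaning": contrast({"sound": SOUND_RELATED, "meaning": negate(MEANING_RELATED)}),
    "meaning > sound": contrast({"meaning": MEANING_RELATED, "sound": negate(SOUND_RELATED)}),
    "Onset > Rhyme": contrast({"sound": {"Onset": 1.0, "Rhyme": -1.0}}),
    "Low > High": contrast({"meaning": {"Low": 1.0, "High": -1.0}}),
}

for name, vec in CONTRASTS.items():
    print(f"{name:30s}", vec)
```

Each vector sums to zero, so every contrast compares conditions rather than testing overall signal level.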

In the second‐level analyses, task comparison contrast maps from each individual (i.e., the sound task > the meaning task, or the meaning task > the sound task) were entered into a one‐sample t test to generate a brain specialization map at the group level for either phonological or semantic processing. Contrast maps for the parametric modulations from each individual (i.e., onset > rhyme or low > high) were also entered into a one‐sample t test to generate a parametric modulation map at the group level for either phonological or semantic processing. Finally, the task comparison contrast maps from each participant and behavioral performance on the phoneme isolation test from the CTOPP‐2 or the word classes test from the CELF‐5 were entered into a regression model to generate a brain‐behavior correlation map at the group level for either phonological or semantic processing.
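At a single voxel, the two group‐level models reduce to familiar statistics. The sketch below assumes one contrast value per child and a simple regression with the behavioral score as the only predictor (the text does not mention covariates); it computes the one‐sample t statistic, the Pearson correlation, and the equivalent t statistic for the regression slope. SPM computes these voxel‐wise over whole maps; the function names here are hypothetical.

```python
import math
from statistics import mean, stdev

def one_sample_t(values):
    """t statistic for H0: mean contrast value = 0 at one voxel (df = n - 1)."""
    return mean(values) / (stdev(values) / math.sqrt(len(values)))

def pearson_r(x, y):
    """Pearson correlation between contrast values and behavioral scores."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def slope_t_from_r(r, n):
    """t for the behavioral slope in a simple regression (df = n - 2)."""
    return r * math.sqrt((n - 2) / (1 - r * r))

# Hypothetical sound > meaning contrast values and CTOPP-2 raw scores for five children.
contrast_values = [0.8, 1.1, 0.4, 0.9, 1.3]
phoneme_isolation = [22, 27, 18, 25, 29]
r = pearson_r(contrast_values, phoneme_isolation)
print(one_sample_t(contrast_values), r, slope_t_from_r(r, len(contrast_values)))
```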

We first examined brain activation for Related > Perceptual within each task and for the task comparisons at the whole‐brain level. Statistical significance for the group‐level analysis within the whole‐brain mask (135,398 voxels) was determined with Monte Carlo simulations in AFNI's 3dClustSim program (see http://afni.nimh.nih.gov/). 3dClustSim runs a 10,000‐iteration Monte Carlo simulation of random noise activations at a given voxel‐wise alpha level within a masked brain volume. Following Eklund, Nichols, and Knutsson (2016), who showed that some software packages yield inflated false‐positive rates for cluster‐wise inference, we used 3dFWHMx to estimate the smoothness of each participant's data with a spatial autocorrelation function (ACF) and then averaged these smoothness estimates across all participants (ACF = 0.48, 4.60, 13.00). This average smoothness estimate was then entered into 3dClustSim to calculate the cluster size needed for significance. Within the whole‐brain mask, the minimum significant cluster size was 77 voxels at a voxel‐wise threshold of p < .001 uncorrected and a cluster‐wise threshold of p < .05 corrected.
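For reference, the three ACF numbers from 3dFWHMx are the parameters (a, b, c) of a mixed Gaussian plus mono-exponential model, ACF(r) = a·exp(−r²/(2b²)) + (1 − a)·exp(−r/c), with r in mm. The sketch below evaluates that model, averages hypothetical per-participant triples parameter by parameter (as the text describes for the group average), and finds the full width at half maximum of the resulting curve by bisection. It only illustrates the smoothness model; the cluster-size simulation itself is performed by 3dClustSim and is not reproduced here. The function names and the 100 mm search bound are assumptions.

```python
import math
import statistics


def acf(r: float, a: float, b: float, c: float) -> float:
    """Mixed Gaussian + mono-exponential spatial autocorrelation at distance r (mm)."""
    return a * math.exp(-r * r / (2 * b * b)) + (1 - a) * math.exp(-r / c)


def average_acf(params: list[tuple[float, float, float]]) -> tuple[float, float, float]:
    """Average per-participant (a, b, c) triples one parameter at a time."""
    a, b, c = (statistics.fmean(p[i] for p in params) for i in range(3))
    return a, b, c


def acf_fwhm(a: float, b: float, c: float, upper: float = 100.0, tol: float = 1e-6) -> float:
    """Full width at half maximum of the ACF curve, found by bisection on the radius.

    For 0 <= a <= 1 the curve falls monotonically from ACF(0) = 1, so there is a
    unique radius with ACF(r) = 0.5; the width is twice that radius.
    """
    if acf(upper, a, b, c) > 0.5:
        raise ValueError("upper search bound is below the half-maximum radius")
    lo, hi = 0.0, upper
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if acf(mid, a, b, c) > 0.5:
            lo = mid
        else:
            hi = mid
    radius = (lo + hi) / 2
    return 2 * radius
```

Calling `acf_fwhm(0.48, 4.60, 13.00)` with the averaged triple reported above gives the half-maximum width implied by that model; the long exponential tail (c = 13 mm) is why ACF-based estimates tend to differ from a purely Gaussian FWHM.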

Then, we focused on our specific predictions regarding specialization within the left‐hemisphere language network using a functional mask. The functional mask reflects the union of activation for Related > Perceptual in the sound and meaning judgment tasks within a literature‐based anatomical mask. The anatomical mask was created by combining brain regions associated with phonological and semantic processing in prior literature: the left IFG, STG, MTG, SMG, AG, IPL, and FG (Binder, Desai, Graves, & Conant, 2009; Friederici & Gierhan, 2013; Hickok & Poeppel, 2007). This anatomical mask is consistent with the mask used by Weiss et al. (2018) with younger children. Statistical significance for the group‐level analysis within the functional mask (5,458 voxels) was determined using AFNI's 3dClustSim program. Within the functional language mask, the minimum significant cluster size was 17 voxels at a voxel‐wise threshold of p < .001 uncorrected and a cluster‐wise threshold of p < .05 corrected. At a more lenient voxel‐wise threshold of p < .005 (cluster‐wise p < .05 corrected), 3dClustSim indicated that 29 voxels were required for a cluster to be significant.
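The mask construction and the cluster-extent rule can be sketched with voxels represented as (i, j, k) index tuples. This is a minimal illustration under stated assumptions: the activation maps are taken as already thresholded at the voxel level, and clusters are formed from face-connected (6-neighbour) voxels. The connectivity rule actually used with 3dClustSim is not stated in the text, so that choice is an assumption; the default minimum size of 17 voxels is the value reported for the functional mask. All names are hypothetical.

```python
from collections import deque

Voxel = tuple[int, int, int]
FACE_NEIGHBOURS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def functional_mask(sound: set[Voxel], meaning: set[Voxel], anatomical: set[Voxel]) -> set[Voxel]:
    """Union of the two Related > Perceptual maps, restricted to the anatomical mask."""
    return (sound | meaning) & anatomical


def face_connected_clusters(voxels: set[Voxel]) -> list[set[Voxel]]:
    """Group voxels into clusters whose members share a face (6-neighbour connectivity)."""
    remaining = set(voxels)
    clusters = []
    while remaining:
        seed = remaining.pop()
        cluster, queue = {seed}, deque([seed])
        while queue:  # breadth-first flood fill from the seed voxel
            x, y, z = queue.popleft()
            for dx, dy, dz in FACE_NEIGHBOURS:
                neighbour = (x + dx, y + dy, z + dz)
                if neighbour in remaining:
                    remaining.remove(neighbour)
                    cluster.add(neighbour)
                    queue.append(neighbour)
        clusters.append(cluster)
    return clusters


def surviving_clusters(suprathreshold: set[Voxel], mask: set[Voxel], min_size: int = 17) -> list[set[Voxel]]:
    """Keep in-mask clusters with at least min_size voxels (cluster-extent threshold)."""
    return [c for c in face_connected_clusters(suprathreshold & mask) if len(c) >= min_size]
```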

3. RESULTS

3.1. Behavioral results

Table 3 shows the mean and SD of the accuracies and reaction times (RTs) for the sound and meaning judgment tasks performed inside the scanner. Accuracies and RTs were compared between the two tasks using paired‐samples t tests. Children had higher overall accuracy for the meaning task than for the sound task (t[109] = −7.475, p < .001) but showed no significant difference in RTs (t[109] = −0.202, p = .840). Spearman correlations showed that accuracies on both the sound and meaning judgment tasks were correlated with raw scores on the phoneme isolation (sound: r[110] = .220, p = .021; meaning: r[110] = .210, p = .028) and word classes (sound: r[110] = .253, p = .008; meaning: r[110] = .195, p = .041) subtests. Pearson correlations showed no significant correlations between RTs and raw scores on the phoneme isolation subtest (ps > .255) but did show significant correlations between RTs and raw scores on the word classes subtest (sound: r[110] = .219, p = .021; meaning: r[110] = .217, p = .023).

To examine the parametric modulation effect, paired‐samples t tests were used to compare the onset and rhyme conditions within the sound judgment task and the low and high association conditions within the meaning judgment task. Accuracy in the onset condition was significantly lower than in the rhyme condition (t[109] = −12.211, p < .001), and RTs in the onset condition were significantly longer than in the rhyme condition (t[109] = 5.350, p < .001), indicating that the onset condition was more difficult than the rhyme condition in the sound judgment task. Similarly, in the meaning judgment task, accuracy in the low association condition was significantly lower than in the high association condition (t[109] = −6.720, p < .001), and RTs in the low association condition were significantly longer than in the high association condition (t[108] = 9.398, p < .001), indicating that the low association condition was more difficult than the high association condition.

3.2. fMRI results

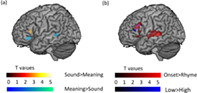

The univariate voxel‐wise results for each task and for the task comparisons within the whole‐brain mask are shown in Table 4 and Figure 1. As shown in Figure 1a,b, similar language regions were activated in both tasks for the contrast of Related > Perceptual, including the bilateral STG, left MTG, left IFG, and bilateral fusiform gyri. In the direct task comparisons, the sound judgment task elicited greater activation than the meaning judgment task in the left insula, left precentral gyrus, and left supramarginal gyrus, as well as in the right precuneus (see Figure 1c and Table 4). The meaning judgment task elicited greater activation than the sound judgment task in a single cluster at the whole‐brain level, located in the left middle temporal gyrus (see Figure 1d).

TABLE 4.

Voxel‐wise analysis significant results within the whole‐brain mask

| Brain regions | Brodmann area | Peak coordinate (MNI) | Number of voxels | T value |
|---|---|---|---|---|
| Related (onset + rhyme) > perceptual in the sound task | | | | |
| Left superior+middle temporal gyrus/left inferior frontal gyrus | 22/21/44/45 | −62 −14 6 | 5,438 | 18.38 |
| Right superior+middle temporal gyrus | 22/21 | 64 −4 −2 | 2,721 | 16.38 |
| Left fusiform gyrus | 37 | −44 −44 −16 | 743 | 9.52 |
| Left caudate | ‐ | −12 10 10 | 488 | 6.48 |
| Right fusiform gyrus | 37 | 42 −38 −18 | 108 | 5.37 |
| Right caudate | ‐ | 16 10 0 | 80 | 4.54 |
| Related (low + high) > perceptual in the meaning task | | | | |
| Left middle+superior temporal gyrus/left inferior frontal gyrus | 21/22/45/47 | −62 −10 −2 | 4,747 | 19.13 |
| Right superior+middle temporal gyrus/right fusiform gyrus | 22/21/37 | 66 −6 0 | 2,259 | 18.20 |
| Left fusiform gyrus | 37 | −42 −42 −16 | 552 | 9.17 |
| Sound task (related > perceptual) > meaning task (related > perceptual) | | | | |
| Left insula | 13 | −18 12 4 | 105 | 5.52 |
| Left precentral gyrus | 6 | −64 6 24 | 606 | 5.37 |
| Right precuneus | 23 | 18 −54 28 | 82 | 3.92 |
| Left supramarginal gyrus | 2 | −68 −20 36 | 122 | 3.84 |
| Meaning task (related > perceptual) > sound task (related > perceptual) | | | | |
| Left middle temporal gyrus | 21 | −48 −42 2 | 90 | 4.39 |

FIGURE 1.

Voxel‐wise significant activation within the whole‐brain mask for the contrast of: (a) Related (Onset + Rhyme) > Perceptual in the Sound Judgment Task, (b) Related (Low + High) > Perceptual in the Meaning Judgment Task, (c) Sound Judgment Task (Related > Perceptual) > Meaning Judgment Task (Related > Perceptual), (d) Meaning Judgment Task (Related > Perceptual) > Sound Judgment Task (Related > Perceptual). All clusters greater than 77 voxels are shown, thresholded at a voxel‐wise p < .001 uncorrected and cluster‐wise p < .05 corrected.

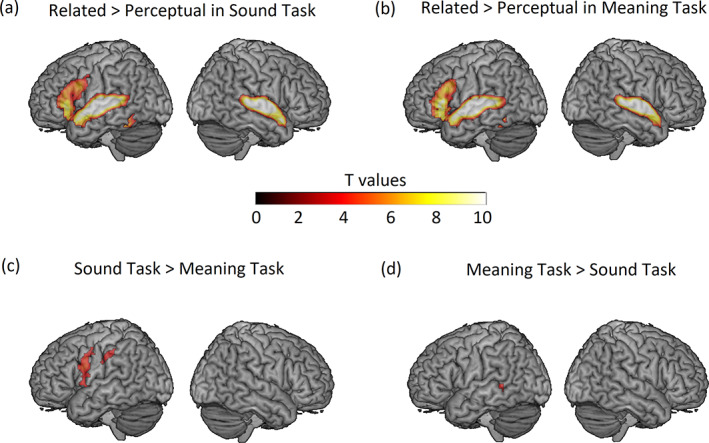

The univariate voxel‐wise results within the combined functional and literature‐based anatomical mask are shown in Table 5 and Figure 2. The direct comparisons between the sound and meaning judgment tasks (see Figure 2a) revealed significantly greater activation for the sound judgment task in the left triangular part of the IFG and significantly greater activation for the meaning judgment task in the left orbitalis part of the IFG and the left MTG. For the sound judgment task, the comparison between the onset and rhyme conditions showed significant clusters in the left STG/MTG and the left opercular and triangular parts of the IFG (see Figure 2b). These regions did not overlap with the phonologically specialized clusters found in the direct task comparison (Sound > Meaning). Similarly, for the meaning judgment task, the comparison between the low and high association conditions showed significant clusters in the left triangular and opercular parts of the IFG (see Figure 2b). These regions also did not overlap with the semantically specialized clusters found in the direct task comparison (Meaning > Sound). The comparison of parametric modulations across tasks ([Onset > Rhyme] > [Low > High] and [Low > High] > [Onset > Rhyme]) revealed no significant clusters. Finally, the regression analyses did not reveal any significant clusters in which specialization‐related brain activation correlated with phonological awareness or semantic association skill as measured by the standardized tests. The same pattern of results for the direct and parametric modulation comparisons was found at a more lenient threshold of voxel‐wise p < .005 uncorrected and cluster‐wise p < .05 corrected (see Table S1 and Figure S1), with one exception: at the more lenient threshold, the left STG also showed greater activation for the sound judgment task than for the meaning judgment task.

TABLE 5.

Voxel‐wise analysis significant results within the combined functional and literature‐based anatomical mask

| Brain regions | Brodmann area | Peak coordinate (MNI) | Number of voxels | T value |
|---|---|---|---|---|
| Sound task (related > perceptual) > meaning task (related > perceptual) | | | | |
| Left inferior frontal gyrus (triangular) | 45 | −36 28 10 | 42 | 5.43 |
| Meaning task (related > perceptual) > sound task (related > perceptual) | | | | |
| Left middle temporal gyrus | 21 | −48 −42 2 | 69 | 4.39 |
| Left inferior frontal gyrus (orbitalis) | 47 | −52 30 −6 | 47 | 4.20 |
| Onset > rhyme in the sound task | | | | |
| Left inferior frontal gyrus (opercular) | 44 | −54 18 32 | 327 | 5.73 |
| Left superior+middle temporal gyrus | 22/21 | −68 −34 4 | 644 | 5.47 |
| Left inferior frontal gyrus (triangular) | 45/47 | −54 20 0 | 26 | 4.18 |
| Left inferior frontal gyrus (triangular) | 45/44 | −46 14 12 | 25 | 3.76 |
| Low > high in the meaning task | | | | |
| Left inferior frontal gyrus (triangular) | 45 | −40 30 14 | 53 | 4.46 |
| Left inferior frontal gyrus (opercular) | 44 | −52 18 28 | 44 | 3.90 |

FIGURE 2.

Voxel‐wise significant activation within the combined functional and literature‐based anatomical mask for the contrast of: (a) task comparisons: Sound Task (Related > Perceptual) > Meaning Task (Related > Perceptual) in hot colors, Meaning Task (Related > Perceptual) > Sound Task (Related > Perceptual) in cool colors; (b) parametric modulations: Onset > Rhyme within the Sound Task in red, Low > High within the Meaning Task in blue, overlap in purple. All clusters greater than 17 voxels are shown, thresholded at a voxel‐wise p < .001 uncorrected and cluster‐wise p < .05 corrected

4. DISCUSSION

The current study aimed to examine phonological and semantic specialization during spoken language processing in 7‐ to 8‐year‐old children. The same experimental paradigm and analytical approach used in Weiss et al. (2018), which explored specialization in 5‐ to 6‐year‐old children, was used to investigate whether a more adult‐like pattern of both frontal and temporal specialization could be observed in older children. Consistent with the results of Weiss et al. (2018), we found that 7‐ to 8‐year‐old children continued to show semantic specialization in the left posterior MTG and phonological specialization in the left STG, although the STG effect was evident only at a more lenient threshold. In addition, the current results extend previous research by showing that 7‐ to 8‐year‐old children exhibited a double dissociation in the frontal lobe, with the left posterior dIFG (i.e., the triangular part of the IFG) showing greater activation for the sound than the meaning judgment task and the left anterior vIFG (i.e., the orbitalis part of the IFG) showing greater activation for the meaning than the sound judgment task. This finding of phonological and semantic specialization in both the temporal and frontal lobes is consistent with previous studies in adults (Booth et al., 2006; Devlin et al., 2003; McDermott et al., 2003; Poldrack et al., 1999; Price et al., 1997; Price & Mechelli, 2005), suggesting that 7‐ to 8‐year‐old children have developed adult‐like language specialization for semantic and phonological processing. Given that Weiss et al. (2018), using the same experimental and analytical approach, found a double dissociation in the temporal lobe but not in the frontal lobe in 5‐ to 6‐year‐old children, our finding suggests a developmental progression of language specialization from temporal to frontal regions.
This developmental progression is consistent with a prominent neurocognitive model of language development (Skeide & Friederici, 2016), which argues that the frontal lobe matures later than the temporal lobe. However, because the current study utilized a cross‐sectional design, future longitudinal studies should investigate how specialization changes over time to confirm this developmental progression.

According to the Memory, Unification, and Control (MUC) model (Hagoort, 2016), regions in the temporal cortex subserve knowledge representations that have been laid down in memory during acquisition. These regions store information including phonological word forms, orthographic word forms, word meanings, and the syntactic templates associated with nouns, verbs, and adjectives. Previous studies have shown that the left MTG appears to store semantic information (e.g., Binder & Desai, 2011), whereas the left STG appears to store phonological information (e.g., Mesgarani, Cheung, Johnson & Chang, 2014). In contrast, frontal regions, which are structurally and functionally connected to temporal regions, support memory retrieval, decomposition, and unification operations. Compared with 5‐ to 6‐year‐olds, 7‐ to 8‐year‐old children have already received 1 or 2 years of formal education with extensive training in phonological awareness (e.g., Bus & Van IJzendoorn, 1999) and vocabulary (e.g., Marulis & Neuman, 2010), which tap into children's metalinguistic skills in phonological and semantic processing. Therefore, the observed double dissociation in the frontal lobe in 7‐ to 8‐year‐old children likely reflects the maturation of mechanisms supporting access to and manipulation of phonological and semantic information. The finding of a strong phonological specialization effect in the frontal lobe but a weaker effect in the temporal lobe in 7‐ to 8‐year‐old children suggests a mechanistic transition in how children perform phonological awareness tasks. Whereas younger 5‐ to 6‐year‐old children mainly rely on the quality of their phonological representations, older children may have developed mature phonological representations and instead rely on the efficiency of phonological access and manipulation to fine‐tune their task performance.

It was predicted that, in addition to the direct task comparisons, the parametric modulation and brain‐behavior correlation analyses would provide further evidence for specialization. In terms of the parametric modulation analyses, although the opercular part of the left IFG and the left STG/MTG were more active for the onset than the rhyme condition in the sound judgment task, the opercular part of the left IFG was also more active for the low than the high association condition in the meaning judgment task. In fact, as shown in Figure 2b, the parametric effects in the opercular part of the left IFG partially overlap across tasks. Furthermore, direct comparisons of these parametric modulations showed no significant differences across tasks. These results suggest that the greater activation for the harder than the easier condition in each task was not task‐specific but instead reflects a general difficulty effect. In the broader literature, the opercular part of the left IFG (or Broca's area, BA44) has often been associated with cognitive control and is recruited to promote a nonautomatic but appropriate response in lieu of a more automatic or dominant response (e.g., Novick, Trueswell, & Thompson‐Schill, 2010). In the present study, participants may initially have been inclined to respond “no” on trials in the harder conditions and thus had to inhibit this initial response in order to respond “yes” correctly. On this interpretation, the difficulty effect found for both tasks in the opercular part of the left IFG reflects the greater cognitive control demands of the harder conditions.

Unlike the opercular part of the left IFG, which showed parametric effects in both tasks, the left STG/MTG showed greater activation for the onset than the rhyme condition only in the sound judgment task. Weiss et al. (2018) also showed a parametric effect in the left STG for the sound judgment task. The left STG is associated with phonological representations (e.g., Boets et al., 2013; Myers, Blumstein, Walsh, & Eliassen, 2009) and, as the findings of both the current study and Weiss et al. (2018) show, is specialized for phonological processing. Thus, the parametric effect in the left STG for the sound judgment task in the current study is likely due to the greater demand for phonemic representational precision in the harder condition. Given that the left MTG is thought to store semantic information (e.g., Binder & Desai, 2011), this region was not predicted to show a parametric effect for the sound judgment task. However, part of the left MTG showed greater activation for the onset than the rhyme condition within the sound judgment task. Several behavioral studies have suggested that newly acquired words are more phonologically robust in verbal short‐term memory when they have well‐defined semantic representations and that semantic representations can compensate for weak phonological processing (e.g., Savill, Cornelissen, Whiteley, Woollams, & Jefferies, 2019; Savill, Ellis, & Jefferies, 2017). Thus, it is possible that the 7‐ to 8‐year‐old children in this study engaged additional semantic representations to support their phonological processing in the harder condition of the phonological task.

Unlike Weiss et al. (2018), we did not find a parametric effect for the meaning judgment task in the left MTG in 7‐ to 8‐year‐old children. Although accuracy differed significantly between the low and high association conditions in the meaning judgment task, this difference was smaller than that observed by Weiss et al. (2018) in their younger sample (7‐ to 8‐year‐olds: 90% for high vs. 84% for low; 5‐ to 6‐year‐olds: 85% for high vs. 76% for low). It was also smaller than the difference observed on the sound judgment task in the current study (87% for rhyme vs. 69% for onset). Therefore, any difficulty‐driven effects in the left MTG for the meaning judgment task may have been attenuated in the present study, leading to the null result. In addition, accuracies for both the low and high association conditions were near ceiling, indicating relatively mature semantic processing in 7‐ to 8‐year‐old children. Thus, although the harder condition required greater cognitive control, as reflected by greater recruitment of the opercular part of the IFG for the low than the high association condition, the maturity of semantic representations may have led to similar activation in the left MTG across conditions.
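The accuracy gaps behind this argument can be made explicit from the condition means quoted above (easier minus harder condition, in percentage points). This is only arithmetic on reported group means, not a test of whether the gaps differ reliably:

$$
\begin{aligned}
\text{Meaning task, 7- to 8-year-olds:}\quad & 90\% - 84\% = 6 \text{ points}\\
\text{Meaning task, 5- to 6-year-olds (Weiss et al., 2018):}\quad & 85\% - 76\% = 9 \text{ points}\\
\text{Sound task, 7- to 8-year-olds:}\quad & 87\% - 69\% = 18 \text{ points}
\end{aligned}
$$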

Finally, we did not find any significant clusters that showed a correlation with phonological awareness or semantic association skills. This is inconsistent with the previous study of 5‐ to 6‐year‐old children (Weiss et al., 2018) and with the IS account (Johnson, 2011), according to which greater brain specialization should be associated with better skill. However, this account also argues for a dynamic mapping between the brain and cognition: when a new computation is acquired, connections between brain regions are reorganized. The previous study (Weiss et al., 2018) with younger children found a double dissociation only in the temporal lobe, suggesting that 5‐ to 6‐year‐old children mainly relied on phonological and semantic representations to perform the tasks. In contrast, the current study with 7‐ to 8‐year‐old children found a double dissociation in both the temporal and frontal lobes, suggesting that older children relied not only on phonological and semantic representations but also on decomposition and unification operations to perform the tasks. Thus, a simple voxel‐wise linear regression on the activation difference between tasks may not be a sensitive enough index of individual differences in language specialization in older children, who rely on more complex strategies.

An alternative hypothesis for the lack of a linear brain‐behavior relation in our study is that brain specialization and skill have a nonlinear relationship, such that only children with high skills show specialization. To examine this hypothesis, we partitioned our total sample into three groups: the 30 participants with the highest semantic association skills, the 30 with the lowest, and 30 with skills in the middle. We then examined the semantic specialization effect within each group to see whether specialization differed across groups. Only children with high skill showed significant semantic specialization in both the frontal and temporal lobes, whereas children with low and middle skill did not (see Table S2 and Figure S2a). Similarly, we formed three groups of children with high, low, and middle phonological awareness skills and examined the phonological specialization effect within each group. Surprisingly, children with both high and low skill showed phonological specialization in the frontal lobe, whereas children with middle skill did not (see Table S2 and Figure S2b). A similar U‐shaped brain‐behavior relationship in the frontal lobe of developing children was reported by a previous study of sentence comprehension (Booth et al., 2000), whose authors suggested that their participants who performed poorly were trying extremely hard to answer the questions correctly. Thus, we speculate that, in low‐skill children, the greater engagement of the left dIFG for the sound than the meaning judgment task could be due to the greater cognitive control needed for the sound than the meaning judgment task.
In fact, we found that low‐skill children struggled more with phonological than semantic processing (scaled score of phoneme isolation: 7.6; scaled score of word classes: 10.9; discrepancy: −2.3), whereas high‐skill children did not (scaled score of phoneme isolation: 13.5; raw score of word classes: 14.1; discrepancy: −0.8). An independent‐samples t test showed that the between‐task discrepancy score was significantly lower in low‐skill than in high‐skill children (t[58] = −2.869, p = .006). Therefore, while we interpret the greater engagement of the left dIFG in high‐skill children as reflecting phonological specialization, the greater activation in the left dIFG for the sound than the meaning judgment task in low‐skill children was likely due to greater cognitive demand rather than greater phonological specialization.

While the current study shows promising support for both frontal and temporal language‐related specialization in 7‐ to 8‐year‐old children, a few limitations should be noted. First, although our results, taken together with those of Weiss et al. (2018), suggest a developmental progression in language specialization from the temporal to the frontal lobe, the current study is only cross‐sectional. Without a longitudinal design, it is difficult to determine whether the pattern of results observed across the current and previous study (Weiss et al., 2018) reflects an age or a cohort effect. Second, in line with the analytic approach used by Weiss et al. (2018), the current study focused only on specialization in distinct regions. However, brain regions do not work independently; in fact, a key component of the Interactive Specialization account proposed by Johnson (2011) is that region‐to‐region interactions drive the development of brain specialization. Thus, to provide further support for the Interactive Specialization account within the domain of language development, future research should also investigate how connectivity between brain regions within the language network changes over development and whether these changes drive the development of language‐related specialization.

In sum, the current study examined 7‐ to 8‐year‐old children's phonological and semantic specialization using an auditory sound judgment task and an auditory meaning judgment task. Phonological and semantic specialization was observed in both the temporal and frontal lobes, with the left posterior dIFG showing greater activation for the sound than the meaning judgment task and the left anterior vIFG and the left posterior MTG showing greater activation for the meaning than the sound judgment task. These findings indicate that 7‐ to 8‐year‐old children have already begun to develop language specialization in the frontal lobe. Together with previous work with 5‐ to 6‐year‐old children (Weiss et al., 2018), these findings suggest a progression from the temporal to the frontal lobe in the development of language‐related neural specialization.

CONFLICT OF INTEREST

All authors declare no conflicts of interest.

AUTHOR CONTRIBUTIONS

Jin Wang, hypothesis formation, data analysis, result interpretation, manuscript writing.

Brianna L. Yamasaki, result interpretation, extensive manuscript revision.

Yael Weiss, manuscript revision.

James R. Booth, experimental design, hypothesis formation, manuscript revision.

Supporting information

Appendix S1. Supporting Information.

ACKNOWLEDGMENT

This study was supported by the National Institutes of Health (R01 DC013274) to James R. Booth.

Wang J, Yamasaki BL, Weiss Y, Booth JR. Both frontal and temporal cortex exhibit phonological and semantic specialization during spoken language processing in 7‐ to 8‐year‐old children. Hum Brain Mapp. 2021;42:3534–3546. 10.1002/hbm.25450

Funding information National Institutes of Health, Grant/Award Number: R01 DC013274

DATA AVAILABILITY STATEMENT

We are in the process of making the data from the entire project available on openNeuro, and will have it posted at the latest by September 2021. We will also supply the data to individual researchers before that time upon request. The exact participants, fMRI run names, and the imaging data analyses codes used in the current study are also shared and available on GitHub https://github.com/wangjinvandy/PhonSem_Specialization_7‐8

REFERENCES

- Balota, D. A. , Yap, M. J. , Hutchison, K. A. , Cortese, M. J. , Kessler, B. , Loftis, B. , … Treiman, R. (2007). The English lexicon project. Behavior Research Methods, 39(3), 445–459. [DOI] [PubMed] [Google Scholar]

- Balsamo, L. M. , Xu, B. , & Gaillard, W. D. (2006). Language lateralization and the role of the fusiform gyrus in semantic processing in young children. NeuroImage, 31(3), 1306–1314. [DOI] [PubMed] [Google Scholar]

- Binder, J. R. , & Desai, R. H. (2011). The neurobiology of semantic memory. Trends in cognitive sciences, 15(11), 527–536. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Binder, J. R. , Desai, R. H. , Graves, W. W. , & Conant, L. L. (2009). Where is the semantic system? A critical review and meta‐analysis of 120 functional neuroimaging studies. Cerebral Cortex, 19(12), 2767–2796. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Boets, B. , de Beeck, H. P. O. , Vandermosten, M. , Scott, S. K. , Gillebert, C. R. , Mantini, D. , … Ghesquière, P. (2013). Intact but less accessible phonetic representations in adults with dyslexia. Science, 342(6163), 1251–1254. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Booth, J. R. , Lu, D. , Burman, D. D. , Chou, T. L. , Jin, Z. , Peng, D. L. , … Liu, L. (2006). Specialization of phonological and semantic processing in Chinese word reading. Brain Research, 1071(1), 197–207. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Booth, J. R. , MacWhinney, B. , Thulborn, K. R. , Sacco, K. , Voyvodic, J. T. , & Feldman, H. M. (2000). Developmental and lesion effects in brain activation during sentence comprehension and mental rotation. Developmental Neuropsychology, 18(2), 139–169. [DOI] [PubMed] [Google Scholar]

- Brennan, C. , Cao, F. , Pedroarena‐Leal, N. , McNorgan, C. , & Booth, J. R. (2013). Reading acquisition reorganizes the phonological awareness network only in alphabetic writing systems. Human Brain Mapping, 34(12), 3354–3368. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bus, A. G. , & Van IJzendoorn, M. H. (1999). Phonological awareness and early reading: A meta‐analysis of experimental training studies. Journal of Educational Psychology, 91(3), 403–414. [Google Scholar]

- Cao, F. , Yan, X. , Wang, Z. , Liu, Y. , Wang, J. , Spray, G. J. , & Deng, Y. (2017). Neural signatures of phonological deficits in Chinese developmental dyslexia. NeuroImage, 146, 301–311. [DOI] [PubMed] [Google Scholar]

- Cone, N. E. , Burman, D. D. , Bitan, T. , Bolger, D. J. , & Booth, J. R. (2008). Developmental changes in brain regions involved in phonological and orthographic processing during spoken language processing. NeuroImage, 41(2), 623–635. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dębska, A. , Łuniewska, M. , Chyl, K. , Banaszkiewicz, A. , Żelechowska, A. , Wypych, M. , … Jednoróg, K. (2016). Neural basis of phonological awareness in beginning readers with familial risk of dyslexia—Results from shallow orthography. NeuroImage, 132, 406–416. [DOI] [PubMed] [Google Scholar]

- Desroches, A. S. , Cone, N. E. , Bolger, D. J. , Bitan, T. , Burman, D. D. , & Booth, J. R. (2010). Children with reading difficulties show differences in brain regions associated with orthographic processing during spoken language processing. Brain Research, 1356, 73–84. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Devlin, J. T. , Matthews, P. M. , & Rushworth, M. F. (2003). Semantic processing in the left inferior prefrontal cortex: A combined functional magnetic resonance imaging and transcranial magnetic stimulation study. Journal of Cognitive Neuroscience, 15(1), 71–84. [DOI] [PubMed] [Google Scholar]

- Eklund, A. , Nichols, T. E. , & Knutsson, H. (2016). Cluster failure: Why fMRI inferences for spatial extent have inflated false‐positive rates. Proceedings of the National Academy of Sciences, 113(28), 7900–7905. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Friederici, A. D. , & Gierhan, S. M. (2013). The language network. Current Opinion in Neurobiology, 23(2), 250–254. [DOI] [PubMed] [Google Scholar]

- Frost, J. , Madsbjerg, S. , Niedersøe, J. , Olofsson, Å. , & Sørensen, P. M. (2005). Semantic and phonological skills in predicting reading development: From 3–16 years of age. Dyslexia, 11(2), 79–92. [DOI] [PubMed] [Google Scholar]

- Hagoort, P. (2016). MUC (Memory, Unification, Control): A model on the neurobiology of language beyond single word processing. Neurobiology of language (pp. 339–347). Cambridge, MA: Academic Press. [Google Scholar]

- Harm, M. W., & Seidenberg, M. S. (2004). Computing the meanings of words in reading: Cooperative division of labor between visual and phonological processes. Psychological Review, 111(3), 662–720.

- Hickok, G., & Poeppel, D. (2007). The cortical organization of speech processing. Nature Reviews Neuroscience, 8(5), 393–402.

- Johnson, M. H. (2011). Interactive specialization: A domain‐general framework for human functional brain development? Developmental Cognitive Neuroscience, 1(1), 7–21.

- Kaufman, A. S., & Kaufman, N. L. (2004). Kaufman brief intelligence test (2nd ed.). Bloomington, MN: Pearson Assessments.

- Kovelman, I., Norton, E. S., Christodoulou, J. A., Gaab, N., Lieberman, D. A., Triantafyllou, C., … Gabrieli, J. D. (2012). Brain basis of phonological awareness for spoken language in children and its disruption in dyslexia. Cerebral Cortex, 22(4), 754–764.

- Landi, N., Mencl, W. E., Frost, S. J., Sandak, R., & Pugh, K. R. (2010). An fMRI study of multimodal semantic and phonological processing in reading disabled adolescents. Annals of Dyslexia, 60(1), 102–121.

- Liu, X., Gao, Y., Di, Q., Hu, J., Lu, C., Nan, Y., … Liu, L. (2018). Differences between child and adult large‐scale functional brain networks for reading tasks. Human Brain Mapping, 39(2), 662–679.

- Marulis, L. M., & Neuman, S. B. (2010). The effects of vocabulary intervention on young children's word learning: A meta‐analysis. Review of Educational Research, 80(3), 300–335.

- Mathur, A., Schultz, D., & Wang, Y. (2020). Neural bases of phonological and semantic processing in early childhood. Brain Connectivity, 10, 5.

- Mazaika, P. K., Hoeft, F., Glover, G. H., & Reiss, A. L. (2009). Methods and software for fMRI analysis of clinical subjects. NeuroImage, 47(Suppl 1), S58.

- McDermott, K. B., Petersen, S. E., Watson, J. M., & Ojemann, J. G. (2003). A procedure for identifying regions preferentially activated by attention to semantic and phonological relations using functional magnetic resonance imaging. Neuropsychologia, 41(3), 293–303.

- Mesgarani, N., Cheung, C., Johnson, K., & Chang, E. F. (2014). Phonetic feature encoding in human superior temporal gyrus. Science, 343(6174), 1006–1010.

- Myers, E. B., Blumstein, S. E., Walsh, E., & Eliassen, J. (2009). Inferior frontal regions underlie the perception of phonetic category invariance. Psychological Science, 20(7), 895–903.

- Nelson, D. L., McEvoy, C. L., & Schreiber, T. A. (2004). The University of South Florida free association, rhyme, and word fragment norms. Behavior Research Methods, Instruments, & Computers, 36(3), 402–407.

- Novick, J. M., Trueswell, J. C., & Thompson‐Schill, S. L. (2010). Broca's area and language processing: Evidence for the cognitive control connection. Language and Linguistics Compass, 4(10), 906–924.

- Paquette, N., Lassonde, M., Vannasing, P., Tremblay, J., González‐Frankenberger, B., Florea, O., … Gallagher, A. (2015). Developmental patterns of expressive language hemispheric lateralization in children, adolescents and adults using functional near‐infrared spectroscopy. Neuropsychologia, 68, 117–125.

- Poldrack, R. A., Wagner, A. D., Prull, M. W., Desmond, J. E., Glover, G. H., & Gabrieli, J. D. (1999). Functional specialization for semantic and phonological processing in the left inferior prefrontal cortex. NeuroImage, 10(1), 15–35.

- Price, C. J., & Mechelli, A. (2005). Reading and reading disturbance. Current Opinion in Neurobiology, 15(2), 231–238.

- Price, C. J., Moore, C. J., Humphreys, G. W., & Wise, R. J. (1997). Segregating semantic from phonological processes during reading. Journal of Cognitive Neuroscience, 9(6), 727–733.

- Raschle, N. M., Zuk, J., & Gaab, N. (2012). Functional characteristics of developmental dyslexia in left‐hemispheric posterior brain regions predate reading onset. Proceedings of the National Academy of Sciences, 109(6), 2156–2161.

- Savill, N., Cornelissen, P., Whiteley, J., Woollams, A., & Jefferies, E. (2019). Individual differences in verbal short‐term memory and reading aloud: Semantic compensation for weak phonological processing across tasks. Journal of Experimental Psychology: Learning, Memory, and Cognition, 45(10), 1815–1831.

- Savill, N., Ellis, A. W., & Jefferies, E. (2017). Newly‐acquired words are more phonologically robust in verbal short‐term memory when they have associated semantic representations. Neuropsychologia, 98, 85–97.

- Seymour, H. N., Roeper, T. W., & De Villiers, J. (2003). Diagnostic evaluation of language variation: Screening test. Cambridge, MA: NCS Pearson, Inc.

- Skeide, M. A., & Friederici, A. D. (2016). The ontogeny of the cortical language network. Nature Reviews Neuroscience, 17(5), 323–332.

- Vitevitch, M. S., & Luce, P. A. (2004). A web‐based interface to calculate phonotactic probability for words and nonwords in English. Behavior Research Methods, Instruments, & Computers, 36(3), 481–487.

- Wagner, R., Torgesen, J., & Rashotte, C. (2013). Comprehensive test of phonological processing (2nd ed.). Austin, TX: PRO‐ED.

- Wang, J., Joanisse, M. F., & Booth, J. R. (2020). Neural representations of phonology in temporal cortex scaffold longitudinal reading gains in 5‐ to 7‐year‐old children. NeuroImage, 207, 116359.

- Weiss, Y., Cweigenberg, H. G., & Booth, J. R. (2018). Neural specialization of phonological and semantic processing in young children. Human Brain Mapping, 39(11), 4334–4348.

- Weiss‐Croft, L. J., & Baldeweg, T. (2015). Maturation of language networks in children: A systematic review of 22 years of functional MRI. NeuroImage, 123, 269–281.

- Wiig, E. H., Secord, W. A., & Semel, E. (2013). Clinical evaluation of language fundamentals: CELF‐5. San Antonio, TX: Pearson.

- Wilke, M., Altaye, M., Holland, S. K., & CMIND Authorship Consortium. (2017). CerebroMatic: A versatile toolbox for spline‐based MRI template creation. Frontiers in Computational Neuroscience, 11, 5.

Associated Data

Supplementary Materials

Appendix S1. Supporting Information.

Data Availability Statement

We are in the process of making the data from the entire project available on OpenNeuro and will have it posted by September 2021 at the latest. Before then, we will supply the data to individual researchers upon request. The exact participant list, fMRI run names, and imaging data analysis code used in the current study are also shared on GitHub: https://github.com/wangjinvandy/PhonSem_Specialization_7‐8