Abstract

BACKGROUND:

Evaluation is one of the most important tools for determining the quality of any educational program, and it can lead to the reform, revision, or termination of programs. Quality in higher education requires assessment and judgment of goals and strategies, executive policies, operational processes, products, and outcomes. The Context, Input, Process, and Product (CIPP) model is a comprehensive framework that provides information for making sound decisions about each of these four areas of a program. Given the importance of this topic, the present study examined the application of the CIPP model in the evaluation of medical education programs through a systematic review.

MATERIALS AND METHODS:

In this systematic review, Persian databases including ISC, SID, Mag Iran, Civilica, and Noormags and English databases including PubMed, Web of Science, Scopus, ProQuest Dissertations, Embase, CINAHL, ERIC, and Google Scholar were searched using relevant keywords, such as evaluation, program evaluations, outcome and process assessment, educational assessment, and educational measurements. The search had no time limits and yielded 41 papers up to May 22, 2020. The review followed the steps of data extraction and quality assessment of the studies and findings. The Critical Appraisal Skills Programme and Mixed-Methods Appraisal Tool checklists were used to check the quality of the papers.

RESULTS:

This systematic review was conducted on 41 studies, 40 of which were research papers and one was a review paper. From the perspective of the CIPP model of evaluation, most papers showed quite a good level of evaluation of educational programs although some studies reported poor levels of evaluation. Moreover, factors such as modern teaching methods, faculty members, financial credits, educational content, facilities and equipment, managerial and supervisory process, graduates’ skills, produced knowledge, and teaching and learning activities were reported as the factors that could influence the evaluation of educational programs.

CONCLUSION:

Due to the important role of evaluation in improvement of the quality of educational programs, policymakers in education should pay special attention to the evaluation of educational programs and removal of their barriers and problems. To promote the quality of educational programs, policymakers and officials are recommended to make use of the CIPP model of evaluation as a systemic approach that can be used to evaluate all stages of an educational program from development to implementation.

Keywords: Evaluation, outcome and process assessment, program evaluations

Introduction

Today, improving the quality of higher education is the most important and fundamental tool for the sustainable and comprehensive growth and development of a country.[1] The system of higher education is effective and useful when its activities are implemented based on appropriate and acceptable standards, and achieving such quality in higher education entails using appropriate research and evaluation.[1] Because the quality of an educational program is a multidimensional and complex concept, it is very difficult to judge a program. Hence, evaluation as a means of judging and documenting quality is of paramount importance.[2] Evaluation also makes it possible to assess the development and implementation of programs as well as the achievement of educational goals and aspirations. By evaluating an educational program, it is possible to understand the degree of compatibility and harmony of that program with the needs of individuals and the target community and to determine the effective factors in the development of the program.[3] Principled evaluation, while reinforcing the strengths and minimizing the weaknesses, can be the foundation for many educational decisions and plans and can provide the required tools for improving universities’ academic levels.[4] Evaluation transforms education from a static state to a dynamic one. One of the most important factors influencing effective evaluation is certainly the existence of an effective tool and model that can properly evaluate educational programs.[5] There are several models for evaluating educational programs. One of these is the CIPP evaluation model, whose name is an acronym of Context, Input, Process, and Product and which evaluates educational programs in these four areas.[6] Evaluation of the context aims to provide a logical ground for setting educational goals. It also attempts to identify problems, needs, and opportunities in a context or educational situation. 
The purpose of input evaluation is to facilitate the implementation of the program designed in the context stage. In addition, it focuses on human and financial resources, policies, educational strategies, barriers, and limitations of the education system. Process evaluation refers to identification or prediction of performance problems during educational activities and determining the desirability of the implementation process. In the process stage, the implementation of the program and the effect of the educational program on learners are discussed. Output evaluation is done in order to judge the appropriateness and efficiency of educational activities. In fact, the results of the program are compared to the goals of the program, and the match between the expectations and the actual results is determined.[7] The most important goal of evaluation based on the CIPP model is to improve the performance of the program. Stufflebeam and Zhang referred to the CIPP evaluation model as a cyclical process that focuses more on the process than on the product, and the most important goal of the evaluation, they maintained, is to improve the curriculum or the educational program.[8] In addition, studies have indicated that the CIPP evaluation model covers all stages of revising an educational program, which is consistent with the complex nature of medical education programs. This model provides constructive information required to improve educational programs and to make informed decisions.[8] The CIPP model not only emphasizes answering clear questions but also focuses on the general and systematic determination of the competencies of an educational program.

To the best of the researchers’ knowledge, most studies in medical sciences have been done to prove the achievement of predetermined goals in an educational program, while the CIPP model aims to help improve the quality of an educational program rather than to document the achievement of goals.[9] This orientation of the CIPP model and the need to examine how researchers have used it in the evaluation of educational programs prompted the researchers to conduct a systematic review of the scope and manner of research on the application of the CIPP evaluation model in medical sciences.

Materials and Methods

In this systematic review, 14 international and national databases were systematically searched from April 22, 2020, to May 22, 2020. The research population included all domestic and foreign papers that used the CIPP evaluation model to evaluate educational programs in medical sciences. Because the number of papers in this domain was limited, the search was not limited temporally. All steps of evaluating the papers for inclusion in the study were done separately by two independent researchers. In case of discrepancy between the two researchers, a third expert was asked to evaluate the papers and the final decision was made based on the agreement among the three evaluators.

Search strategy

The papers were searched for with a specific strategy and no limit on publication date; the search itself was run from April 22, 2020, to May 22, 2020. The search was carried out in Persian databases including SID, Mag Iran, Civilica, Iran Medical Articles Bank, Noormags, and ISC and English databases including Scopus, PubMed, Web of Science, ProQuest Dissertations, Embase, CINAHL, and ERIC. The Google Scholar search engine was used in both English and Persian. The search was performed separately in each database based on the relevant keywords. An example of the search method in the PubMed database is given in Table 1.

Table 1.

PubMed search query

| Step | Query |
|---|---|
| 1 | SEARCH ((((((EVALU*[TITLE/ABSTRACT]) OR ASSESS* [TITLE/ABSTRACT]) OR PROGRAM EVALUATION[TITLE/ABSTRACT]) OR (OUTCOME[TITLE/ABSTRACT] AND PROCESS ASSESSMENT[TITLE/ABSTRACT])) OR PROGRAM EFFECTIVNESS[TITLE/ABSTRACT]) OR EDUCATIONAL ASSESSMENT[TITLE/ABSTRACT]) OR EDUCATIONAL MEASUREMENT*[TITLE/ABSTRACT] |
| 2 | SEARCH (((CIPP MODEL[TITLE/ABSTRACT]) OR CIPP MODEL[MESH TERMS]) OR (CONTEXT INPUT PROCESS[TITLE/ABSTRACT] AND PRODUCT EVALUATION[TITLE/ABSTRACT])) OR (CONTEXT INPUT PROCESS AND PRODUCT EVALUATION[MESH TERMS]) |
| #1 AND #2 | |
| 3 | CONTEXT[ALL FIELDS] AND INPUT[ALL FIELDS] AND PROCESS[ALL FIELDS] AND PRODUCT[ALL FIELDS] AND (“EVALUATION”[JOURNAL] OR “EVALUATION (LOND)”[JOURNAL] OR “EVALUATION”[ALL FIELDS]) AND MODEL[ALL FIELDS]) AND ((“FACULTY, NURSING”[MESH TERMS] OR (“FACULTY”[ALL FIELDS] AND “NURSING”[ALL FIELDS]) OR “NURSING FACULTY”[ALL FIELDS] OR (“CLINICAL”[ALL FIELDS] AND “FACULTY”[ALL FIELDS]) OR “CLINICAL FACULTY”[ALL FIELDS]) AND PROGRAM[ALL FIELDS] |
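The way the two keyword blocks in Table 1 are combined (terms joined with OR inside a block, blocks joined with AND) can be illustrated with a short sketch. This is a simplified illustration, not the exact query used in the study: the term lists are abbreviated from Table 1, and the helper names `tag_terms` and `build_query` are hypothetical.

```python
# Simplified sketch of a two-block Boolean search string.
# Assumption: terms within a block are ORed, and the two blocks are ANDed,
# mirroring the "#1 AND #2" row of Table 1. Term lists are abbreviated.

def tag_terms(terms, field="Title/Abstract"):
    """Tag each term with a PubMed-style field tag and join the terms with OR."""
    return "(" + " OR ".join(f"{term}[{field}]" for term in terms) + ")"


def build_query(block_a, block_b):
    """Combine two OR-blocks with AND."""
    return f"{tag_terms(block_a)} AND {tag_terms(block_b)}"


# Hypothetical, shortened term lists based on the keywords in Table 1.
evaluation_terms = [
    "evalu*",
    "assess*",
    "program evaluation",
    "educational assessment",
    "educational measurement*",
]
model_terms = ["CIPP model", "context input process product evaluation"]

query = build_query(evaluation_terms, model_terms)
print(query)
```

Keeping the term lists separate from the string-building step makes it easier to adapt the same keywords to databases that use a different field syntax.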

A multistage approach was adopted in the selection of studies. To retrieve relevant studies, a wide range of keywords listed in MeSH, such as evaluation, program evaluations, outcome and process assessment, educational assessment, and educational measurements, were searched first. To increase the likelihood of finding relevant studies, the terms “medical” and “education” were searched both as separate words and in combination. It should be noted that there was no other equivalent for the CIPP model in the list of MeSH terms. The studies were reviewed and selected in three steps. In the first step, citation information and abstracts of the papers extracted from the databases were transferred to EndNote. Then, the titles of the papers were reviewed, and papers that were duplicates or irrelevant to the main topic of the research were removed. In the second step, the abstracts of the remaining papers were read, and those related to the main purpose of the research were selected. In the third step, the full texts of the papers were analyzed based on the inclusion and exclusion criteria [Table 2].

Table 2.

Inclusion and exclusion criteria for the studies

| Inclusion criteria | Exclusion criteria |
|---|---|
| Studies published in English and Persian | Studies published in languages other than English and Persian |
| Availability of full texts | Unavailability of full texts |
| Related to an evaluation in the medical field | Evaluation in areas other than medical sciences |
| Evaluation based on the CIPP model | Evaluation based on other evaluation models |

CIPP=Context, Input, Process, and Product

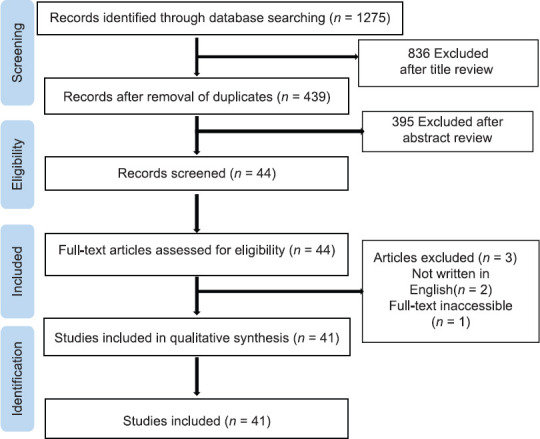

Finally, 41 studies that were in line with the purpose of the study, were written in English or Persian, and had full texts available to the researchers were selected and qualitatively analyzed [Figure 1].

Figure 1.

The process of selection of final articles

Data extraction and synthesis

For the selected papers, two researchers extracted the relevant information independently using a standard data-extraction form. They discussed any mismatches in the extracted data, after which a third researcher carried out a complementary analysis to ensure the precision of the extracted information. The form included the following items: first author's name, year, geographical area, research design, and objectives. After the form was completed, the results obtained from the analysis of the papers were summarized and reported.

Quality assessment

The Critical Appraisal Skills Programme (CASP) checklist, which is a standard tool for evaluating the quality of papers, was used to check the quality of the papers.[10] The checklist used in the present study included 18 items, and each item was given a score of 1 (indicating that the item was addressed in the paper) or 0 (indicating that the item was not addressed in the paper). These items were divided into four areas: participant characteristics (five items), attitude assessment tools (three items), study design (five items), and results (five items). The total score of this checklist could range from 0 to 18.[11] After a thorough study of the full text of each article, the first researcher completed the quality checklist and scored the items. The second researcher followed the same procedure to re-evaluate each paper. In case of disagreement in scoring the items, a final score was agreed on in a joint session. Next, based on the scores obtained from this checklist, the reviewed papers were divided into three categories of good, moderate, and poor quality. The cutoff points were determined based on those reported in similar papers and on experts’ judgments. Accordingly, total scores of 75% and above were classified as good quality (scores 13 and above), total scores between 25% and 75% were classified as moderate quality (scores 6–12), and total scores lower than 25% (scores 5 and below) were classified as poor quality.[11] To assess the quality of mixed-methods papers, the Mixed-Methods Appraisal Tool (MMAT) was used in this study.[12,13] The four areas of the qualitative criteria used in the MMAT are as follows: (1) eligibility of participants and appropriateness of sampling procedure; (2) data analysis process including data collection procedure, data format, and data analysis; (3) attention to the effect of setting on data collection; and (4) attention to the impact of the researchers’ ontological and epistemological beliefs. 
The critical appraisal of mixed-methods studies also included three areas, namely relevance of the mixed-methods design, synthesis of data, and attention to methodological limitations. Each study was given an overall quality score (unclassified, 25%, 50%, 75%, or 100%) based on the MMAT scoring system.[12,13]
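The three-way CASP classification described above can be expressed as a small function. The following is a minimal sketch, assuming the raw-score bands given in the text (13 and above, 6 to 12, 5 and below, out of 18) are the operative cutoffs; the function name `classify_casp` is hypothetical and does not come from the CASP tool itself.

```python
# Minimal sketch of the quality banding for the 18-item checklist.
# Assumption: the raw-score bands stated in the text are used directly
# (>= 13 good, 6-12 moderate, <= 5 poor).

MAX_SCORE = 18  # 18 items, each scored 0 or 1


def classify_casp(score: int) -> str:
    """Return the quality category for a total checklist score."""
    if not 0 <= score <= MAX_SCORE:
        raise ValueError(f"score must be between 0 and {MAX_SCORE}")
    if score >= 13:
        return "good"
    if score >= 6:
        return "moderate"
    return "poor"


# Example with three illustrative totals (not tied to specific studies).
for total in (15, 10, 5):
    print(total, classify_casp(total))
```

Working with the raw-score bands avoids rounding questions at the boundaries, since 25% and 75% of 18 are not whole numbers.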

Results

In the first step, the titles of the 1275 papers obtained in the initial search were examined, and duplicate titles were removed either using EndNote or manually. At this stage, 836 papers with duplicate titles were removed and 439 papers remained. In the second step, the abstracts were studied by the researcher and an expert colleague. As a result, 395 papers unrelated to the main research topic were removed and 44 papers related to the main objective of the project were selected. In the third step, after reading the full texts of the 44 papers, three studies were excluded and 41 studies using the CIPP model in medical sciences were selected [Figure 1].
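The counts reported for the three screening steps can be checked with simple subtraction. The sketch below uses only the numbers stated in the text; the function name `screening_flow` and the step labels are illustrative.

```python
# Sketch: running totals for the three screening steps.
# All counts are taken from the text; labels are illustrative.

def screening_flow(identified, exclusions):
    """Return (label, removed, remaining) for each screening step."""
    remaining = identified
    steps = []
    for label, removed in exclusions:
        if removed > remaining:
            raise ValueError(f"cannot remove {removed} from {remaining} records")
        remaining -= removed
        steps.append((label, removed, remaining))
    return steps


steps = screening_flow(
    1275,
    [
        ("duplicate titles", 836),
        ("abstracts unrelated to the topic", 395),
        ("full texts excluded", 3),
    ],
)
for label, removed, remaining in steps:
    print(f"{label}: removed {removed}, remaining {remaining}")
```

The running totals are 439, 44, and 41, which match the figures reported for each step.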

The results showed that quantitative methodology was used more often than any other method, accounting for 29 of the 41 studies [Table 3].

Table 3.

Types of studies

| Language | Quantitative studies | Qualitative studies | Review articles | Mixed methods |
|---|---|---|---|---|
| English | 13 | 2 | 1 | 8 |
| Persian | 16 | 0 | 0 | 1 |

All studies aimed at examining the attitudes of students, instructors, and those involved in the quality of educational programs based on the CIPP evaluation model. In addition, most studies examined students’ perspectives on educational programs. A large number of papers (n = 29) were descriptive, cross-sectional studies and evaluated educational programs using researcher-made questionnaires. In addition, nine studies used a mixed-methods design where the authors used questionnaires and individual interviews to examine the participants’ attitudes. In two studies, qualitative methodology and individual interviews were used to evaluate educational programs. Finally, one study included a review of other papers that had used the CIPP model [Table 3]. Most studies (n = 29) on the evaluation of curricula based on the CIPP model were conducted in Iran [Table 4].

Table 4.

The summary of the studies’ results

| Author | Language | Year | Country | Study type | Aim | Statistical population | Data collection tool | Overall quality score (%) |
|---|---|---|---|---|---|---|---|---|
| Zadeh and Far[14] | English | 2012 | Iran | Quantitative study | Evaluation of midwifery in Islamic Azad University of Mahabad | Students | Questionnaire | 5 (27%) |
| Ehsanpour[15] | Persian | 2006 | Iran | Quantitative study | Achieving minimum learning requirements from the viewpoints of midwifery students in Isfahan | Students | Questionnaire | 10 (56%) |
| AbdiShahshahani et al.[16] | English | 2015 | Iran | Quantitative study | Evaluation of reproductive health PhD program in Iran | Students, faculty members, managers, graduates | Questionnaire | 14 (78%) |
| AbdiShahshahani et al.[17] | English | 2014 | Iran | Quantitative study | Evaluation of the input indicators of reproductive health PhD program in Iran | Students, faculty members, managers, graduates | Questionnaire | 15 (83%) |
| Kool et al.[18] | English | 2017 | New Zealand | Mixed methods study | The aim of this study was to evaluate if the revised program provided an important experiential learning opportunity for medical students without imposing an unsustainable burden on clinical services | Supervisors, students, academic administrators, hospital staff | Questionnaire, interview, focus group | * (25%) |
| Mohebbia et al.[19] | English | 2011 | Iran | Quantitative study | Evaluating the medical records education course at MSc level in Iranian universities of medical sciences | Students, faculty members, graduates | Questionnaire | 14 (78%) |
| Maqbool Alia et al.[20] | English | 2018 | Australia | Quantitative study | Evaluation of an interactive case-based learning system for medical education | Students | Questionnaire | 11 (61%) |
| Lee et al.[21] | English | 2019 | Korea | Mixed methods study | Evaluation of medical humanities course in the college of medicine | Students, faculty members | Questionnaire, interview, focus group | *** (75%) |
| Phattharayuttawat et al.[22] | English | 2009 | Thailand | Mixed methods study | Evaluation of the curriculum of a graduate program in clinical psychology | Students, faculty members, graduates | Questionnaire, interview | 1* (25%) |
| Bazrafshan et al.[23] | English | 2015 | Iran | Mixed methods study | Synthesis and development of a framework to evaluate the quality of the HSM program at Kerman University of Medical Sciences | Students, faculty members, graduates | Questionnaire, interview | *** (75%) |
| Powell and Conrad[24] | English | 2015 | USA | Quantitative study | To examine the enhancement of a university health course through the utilization of the CIPP model as a means to develop an integrated service learning component | Students | Questionnaire | 10 (56%) |
| Mirzazadeh et al.[25] | English | 2016 | Iran | Mixed methods study | Evaluation of a new undergraduate medical education program in a period of 8 years | Students, faculty members, graduates | Questionnaire, interview, focus group, expert panel | *** (75%) |
| Yarmohammadian and Mohebbi[26] | English | 2015 | Iran | Quantitative study | Review and development of the evaluation criteria of health information technology course at MSc level in Tehran, Shahid Beheshti, Isfahan, Shiraz, and Kashan | Students, faculty members, librarians | Questionnaire | 13 (72%) |
| Rooholamini et al.[27] | English | 2017 | Iran | Mixed methods study | Evaluation of an integrated basic science medical curriculum in Shiraz Medical School | Students, faculty members, graduates | Questionnaire, interview, focus group | * (25%) |
| Adham and Diala[28] | English | 2014 | Pakistan | Quantitative study | Evaluation project for (FPDP) in order to provide empirical evidence on its importance as a way to smooth the transition to the (BL) | Faculty members | Questionnaire | 9 (50%) |
| Kim et al.[29] | English | 2010 | Korea | Qualitative study | Quality of faculty, students, curriculum, and resources for nursing doctoral education in Korea | Students, faculty members, managers, graduates | Focus group | *** (75%) |
| Lippe and Carter[30] | English | 2017 | USA | Mixed methods study | Evaluation of the quality and merit of end-of-life care education within a nursing program | Students, faculty members | Questionnaire, interview, focus group | * (25%) |
| Ashghali-Farahani et al.[31] | English | 2018 | Iran | Qualitative study | Evaluation of the challenges of neonatal intensive care nursing curriculum based on the CIPP evaluation model | Students, faculty members, graduates | Interview | *** (75%) |
| Souto et al.[32] | English | 2018 | Brazil | Mixed methods study | To evaluate the teaching-learning methodologies adopted by teachers of a nursing course from the perspective of students | Students, faculty members | Questionnaire, focus group | *** (75%) |
| Neyazi et al.[33] | English | 2016 | Iran | Quantitative study | Evaluation of selected faculties at Tehran University of Medical Sciences using the CIPP model from the perspective of students and graduates | Students, graduates | Questionnaire | 13 (72%) |
| Nagata et al.[34] | English | 2012 | Japan | Quantitative study | Evaluation of doctoral nursing education in Japan by students, graduates, and faculty members | Students, faculty members, graduates | Questionnaire | 15 (83%) |
| Young et al.[35] | English | 2019 | Korea | Review study | How to execute CIPP evaluation model in medical health education | Articles | Checklists | 15 (83%) |
| Akhlaghi et al.[36] | Persian | 2011 | Iran | Quantitative study | Evaluating the quality of educational programs in higher education using the CIPP model | Students, faculty members, graduates, librarians | Questionnaire | 11 (61%) |
| Okhovati et al.[37] | Persian | 2014 | Iran | Quantitative study | Evaluating the Bachelor’s degree program of HSM at Kerman University of Medical Sciences | Students, faculty members, graduates | Questionnaire | 10 (56%) |
| Yazdani and Moradi[38] | Persian | 2017 | Iran | Quantitative study | Evaluation of the quality of undergraduate nursing education program in Ahvaz based on the CIPP evaluation model | Students | Questionnaire | 13 (72%) |
| Tabari et al.[39] | English | 2016 | Iran | Quantitative study | Evaluation of educational programs of pediatrics, orthodontics, and restorative departments of Babol dental school from the perspective of the students based on the CIPP model | Students | Questionnaire | 13 (72%) |
| Pakdaman et al.[40] | Persian | 2011 | Iran | Quantitative study | Evaluation of the achievement of educational objectives of the Community Oral Health and Periodontics Departments using the CIPP model of evaluation from the students’ perspective | Students | Questionnaire | 14 (87%) |
| Tabari et al.[41] | English | 2018 | Iran | Quantitative study | Evaluation of educational programs in endodontics, periodontics, oral, and maxillofacial surgery departments of Babol Dental School based on the CIPP model from the students’ perspective | Students | Questionnaire | 15 (83%) |
| Jannati et al.[42] | Persian | 2017 | Iran | Quantitative study | Evaluating Educational Program of Bachelor of Sciences in Health Services Management Using CIPP Model in Tabriz | Students, faculty members, graduates | Questionnaire | 15 (83%) |
| Makarem et al.[43] | Persian | 2013 | Iran | Quantitative study | Evaluation of the educational status of Oral Health and Community Dentistry Department at Mashhad Dental School | Students, faculty members | Questionnaire | 14 (78%) |
| Alimohammadi et al.[44] | Persian | 2013 | Iran | Quantitative study | Evaluation of the Medical School Faculty of Rafsanjan University of Medical Sciences | Students, faculty members, graduates | Questionnaire | 13 (72%) |
| Hemati et al.[45] | Persian | 2018 | Iran | Quantitative study | Evaluating the neonatal intensive care nursing (MSc) program based on the CIPP model in Isfahan University of Medical Sciences | Students, faculty members, managers, graduates | Questionnaire | 16 (84%) |
| Tezakori et al.[46] | Persian | 2010 | Iran | Mixed methods study | Evaluation of Nursing PhD curriculum in Iran based on CIPP- model evaluation | Students, graduates | Questionnaire, interview | * (25%) |
| Saberian et al.[47] | Persian | 2003 | Iran | Quantitative study | A pattern for internal evaluation of the nursing school | Students, faculty members | Questionnaire | 6 (33%) |
| Esfeden et al.[48] | Persian | 2020 | Iran | Quantitative study | Evaluation of the realization of clinical nursing students’ learning objectives using the CIPP evaluation model | Students | Checklists | 12 (67%) |
| Mahram et al.[49] | Persian | 2012 | Iran | Quantitative study | Achievement of educational goals from the perspectives of undergraduate nursing students and head nurses | Students, head nurses | Questionnaire | 15 (83%) |
| Olia and Nejad[50] | Persian | 2018 | Iran | Quantitative study | Comprehensive evaluation of the Anatomy Department of Shahid Sadoughi University of Medical Sciences | Students, faculty members, managers, graduates | Questionnaire | 12 (67%) |
| Mohebbi and Yarmohammadian[51] | Persian | 2013 | Iran | Quantitative study | Developing evaluation indicators for the health information technology course at Master’s degree in selected universities of medical sciences | Students, faculty members, managers, library staff | Questionnaire | 8 (44%) |
| Mazloomy Mahmoudabad et al.[52] | Persian | 2018 | Iran | Quantitative study | Evaluation of the externship curriculum for the public health course in Yazd University of Medical Sciences | Students, faculty members | Questionnaire | 15 (83%) |
| Shayan et al.[53] | Persian | 2010 | Iran | Quantitative study | Designing the internal evaluation indicators of educational planning in postgraduate program (input, process, and outcome domains) in the Faculty of Public Health, Isfahan | Faculty members | Questionnaire | 10 (56%) |
| Siswadi et al.[54] | English | 2019 | Indonesia | Quantitative study | Evaluation of the nursing schools’ performance in (INNCT) using the CIPP evaluation model | Faculty members, graduates | Questionnaire | 12 (67%) |

HSM=Health Services Management, CIPP=Context, Input, Process, and Product, FPDP= Faculty Professional Development Program, BL= Blended Learning program, INNCT= Indonesian National Nursing Competency Test, MSc= Master of Science

Examining the quality of the studies based on the CASP indicators showed that 23 studies had good quality, 13 had moderate quality, and only five had poor quality. The results of the quality assessment of the studies are displayed in Table 4. Moreover, the largest number of studies assessed the nursing curriculum based on the CIPP model, while the smallest number was conducted on medical records [Table 5].

Table 5.

Frequency distribution of context, input, process, and product-based evaluation of educational programs in medical sciences

| Education disciplines | n (%) |
|---|---|
| Midwifery | 4 (10) |
| Nursing | 14 (34) |
| Dentistry | 4 (10) |
| Medicine | 11 (27) |
| Health and well-being services | 7 (17) |
| Medical records | 1 (2) |
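The percentages in Table 5 follow from dividing each count by the 41 included studies. A brief sketch of that calculation is given below; it assumes rounding to the nearest whole percent, and the variable names are illustrative.

```python
# Sketch: share of the 41 included studies per discipline (counts from Table 5).
# Assumption: percentages are rounded to the nearest whole number.

counts = {
    "Midwifery": 4,
    "Nursing": 14,
    "Dentistry": 4,
    "Medicine": 11,
    "Health and well-being services": 7,
    "Medical records": 1,
}

total = sum(counts.values())  # number of included studies
shares = {name: round(100 * n / total) for name, n in counts.items()}

for name, n in counts.items():
    print(f"{name}: {n} ({shares[name]}%)")
```

Under this rounding assumption, the computed shares agree with the percentages listed in the table.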

Discussion

This systematic review examined the scope of research conducted in medical sciences based on the CIPP model. The CIPP model evaluates the context, input, process, and output of educational programs and curricula using a systematic approach. By identifying their weaknesses and strengths, it can help policymakers at the macro level to plan expert actions and decide whether to continue, stop, or revise an educational program, ultimately promoting satisfaction with the implementation of the program. Various factors can influence satisfaction with educational programs.[55] Factors such as experienced professors, suitable facilities and equipment, educational and research budgets, appropriate educational content, and a proper educational environment, which are measured in the CIPP model, can affect satisfaction with educational programs. Although most studies have evaluated satisfaction with educational programs as relatively high,[18,19,22,26,36,38,47,51] some other studies have reported moderate or low satisfaction levels.[33,45,46,48,54]

Due to the nature of the CIPP model, educational programs are evaluated in four areas (context, input, process, and output). Context evaluation involves identifying the relevant elements in the educational environment as well as identifying problems, needs, and opportunities in a context or educational situation. Through this evaluation, it is possible to judge the appropriateness of predetermined goals. In context evaluation, factors such as needs, facilities, and problems are examined in a specific and defined environment. At this stage, the education system is evaluated in terms of goals and the target population.[7] Context has been evaluated in different studies. For instance, Okhovati et al. evaluated the curriculum of health services management in Kerman University of Medical Sciences. Evaluation of context showed that the mean score obtained in the domain of goals had a poor situation, whereas the mean score obtained in providing scientific and specialized services indicated that the situation was relatively satisfactory. The overall mean score of context evaluation of the curriculum was reported as relatively high.[37] Consistently, Akhlaghi et al. evaluated the Master's curriculum in medical records at Iran University of Medical Sciences and revealed that the context was relatively desirable.[36] Yazdani and Moradi also reported a desirable evaluation of the context of the undergraduate nursing curriculum at Ahvaz University.[38] In the same line, Mohebbi and Yarmohammadian studied the undergraduate curriculum of medical records and found that the context was satisfactory.[51] In another study by Kool et al., the context of the gynecology curriculum was desirable in achieving the goals.[18] The results of the study by AbdiShahshahani et al. 
also showed that the context of the Iranian doctoral curriculum in reproductive health was desirable.[17] However, the results of a study conducted by Lee et al. on a medical humanities course in a college of medicine showed that there were problems with the context of the curriculum. Although the educational goals were clearly stated in the curriculum, the results of content analysis indicated that the goals of the curriculum were not clear and that the students wanted them to be stated clearly.[21] Moreover, the results of another study performed by Neyazi et al. on selected faculties of Tehran University of Medical Sciences demonstrated that the context was not desirable and that the students believed that they were not adequately informed about the goals and policies of the department.[33] In general, problems related to the contexts of curricula can be due to the lack of periodic review of program goals, incompatibility of goals with the job needs of the target population, incomprehensive goals, vagueness in the goals, expectations, and capabilities that students must learn, and different structures of educational environments.

In the input dimension, the use of the resources and strategies to achieve the goals of an educational program or system is evaluated. Input includes all individuals and human resources, including students, professors, principals, financial resources, and scientific resources that are connected to an educational program. At this stage of evaluation, the required information is collected on how the resources are used to achieve the goals of the educational program.[7] The main purpose of input evaluation is to help develop a program that can bring about educational changes to achieve the goals set in the context evaluation stage so that the consequences and outputs of the educational system have high utility and value.[7] The study by Okhovati et al. showed that there were major weaknesses in the input dimension of the curriculum. It seemed that the management curriculum was not up to date and needed to be reviewed and revised. The facilities and equipment were not satisfactory, as well.[37] In Yazdani and Moradi's study, the evaluation of input showed that educational resources were available, but theoretical and practical courses were not proportionate, nor were educational facilities and equipment appropriate.[38]

In Mohebbi and Yarmohammadian's study, input evaluation showed that the educational budget and financial resources were not satisfactory.[51] Similarly, Alimohammadi et al. evaluated the School of Medicine at Rafsanjan University of Medical Sciences and reported that input, students’ abilities, educational content, facilities, and equipment were not desirable.[44] Hemati et al. also reported that the input evaluation of the Master's program in neonatal intensive care nursing was unsatisfactory.[45] Furthermore, Phattharayuttawat et al. evaluated the curriculum of a master's program in clinical psychology and indicated that educational resources were available for learning and teaching and were quite appropriate. Although the input was appropriate in terms of students, professors, and educational content, some educational resources, such as clinical wards and availability of patients, were not adequate.[22]

Nagata et al. studied the nursing doctoral curriculum in Japan and found that, in terms of input, the number of professors and the facilities and equipment, such as the library and computer systems, were not appropriate.[34] So Young Lee stated that in order to improve the input of curricula, their educational contents had to be improved.[35]

Process focuses on the way the program is implemented and determines the effect of the educational program on learners. Process evaluation involves evaluation of teaching–learning activities as well as instructors’ behaviors, knowledge, and experiences and examines the management and supervision procedures. In other words, process refers to all activities that take place during the implementation of the program. It also provides an opportunity to simultaneously apply the results of the two previous stages of evaluation to improve the implementation of the educational program.[7]

Output evaluates and determines the effects of the educational program on graduates, compares the results of the educational program to the goals of the program, and determines the relationship between expectations and actual results. Output refers to all graduates, newly produced knowledge, and achievements of the program. This type of evaluation is performed to judge the desirability and effectiveness of educational activities.[7] In a study carried out by Tezakori et al. based on the CIPP model, it was found that the Iranian nursing doctoral program was free of basic defects and flaws in terms of history, philosophy, mission, vision, and aims. In addition, course specifications and contents were in accordance with the philosophy and goals of the program. However, the evaluation results showed that there were major problems in the process and implementation of the program, and that the output was affected by the poor implementation of the process.[46]

Ehsanpour conducted a study in the School of Nursing and Midwifery of Isfahan University of Medical Sciences in order to evaluate undergraduate midwifery students’ achievement of the minimum requirements of midwifery learning. Based on the results, the students did not have enough experience with rare cases in clinical education.[15] Pakdaman et al. also examined the achievement of educational goals of periodontics and oral health programs at the University of Tehran based on the CIPP model. They concluded that students were more satisfied with the content, but believed that instructors were not sufficiently motivated and skilled. Overall, the students were not very satisfied with the process and assessed the output of some courses as poor.[40] The study by Okhovati et al. showed that the process was relatively satisfactory in terms of students’ activities, teaching–learning activities, and research activities. However, evaluation of the input of the curriculum showed that the graduates’ specialized skills were not satisfactory.[37] In contrast to the results of the abovementioned studies, the findings of the study by Phattharayuttawat et al. showed that in terms of context, the goals of the curriculum were clearly stated and matched social needs. The structure of the curriculum was also well designed. In addition, input evaluation showed that educational resources were available for learning and teaching, but they were not quite adequate. The results also showed that the process and educational performance were very good and the evaluation of the output showed that the graduates had achieved the general and specialized competencies stated in the goals of the program.[22]

Based on the comprehensive and systematic CIPP model, it is expected that all elements of the education system be consistently interconnected, as it is assumed that education is an ongoing process and the educational system is designed based on these processes. However, the findings of the present study showed that such an interconnection has not been fully established between the components of the educational system in different studies, and there have been discrepancies in some cases. The results of some studies also showed that students did not achieve the intended educational goals. Therefore, revision of educational programs and systems and provision of guidelines were found to be necessary.[15,22,35,37,40,46]

A noteworthy finding of the present study was that many studies tended to adopt a quantitative approach to the evaluation of educational programs. However, in order to conduct a comprehensive evaluation, both quantitative and qualitative data must be analyzed. A careful and comprehensive examination of the methods and results of numerous domestic and international evaluation studies, especially those conducted in medical sciences education, demonstrated that most of these studies focused on answering explicit and clear questions rather than on viewing and measuring the overall value and competence of an educational program. While such studies have often been conducted to determine the success or failure of educational programs in achieving predetermined goals, the most important goal of CIPP evaluation is to improve the quality of the program and not to prove its quality.[9] Although the underlying assumption of the CIPP model is that evaluation is a prognostic phenomenon and is done gradually along with the development of a program,[56] most published papers have confined themselves to a cross-sectional study using a questionnaire covering the four components of the CIPP model.
Therefore, using questionnaires with items on the context, input, process, and output does not necessarily mean using the CIPP evaluation model.[9] Studies by Makarem et al., Pakdaman et al., Hemati et al., and others have all examined some aspects of programs or the views of some program beneficiaries through a quantitative, questionnaire-based approach. They are consequently subject to the same criticism because they have adopted a goal-oriented approach and have evaluated the achievement of the final results.[40,43,45] In contrast, the systematic evaluation process should formatively evaluate all aspects of the program according to the views of all stakeholders and parties involved in the educational program, and the results of each stage should be used simultaneously to enhance the program.[9] In terms of study participants, most studies have evaluated educational programs from the viewpoint of a particular group and have failed to take qualitative approaches and the viewpoints of different parties into account. Evaluating educational programs from the perspective of different people involved in the program can help discover different aspects of the program or weaknesses that have been less addressed. Paying attention to the views of other people involved in the educational program, in light of the cultural conditions prevailing in each society, can also help reform and revise educational programs. In this way, a holistic approach to the educational program makes it possible to provide a framework for interventions that can be implemented in educational programs.

Limitations

One limitation of this systematic review was the potential for incomplete retrieval of studies because the search was restricted to articles published in English.

Innovation

This was the first systematic review examining the CIPP model of evaluation in medical education.

Conclusion

The results of this review emphasized the need for formative evaluation through a systematic CIPP model with a holistic approach during the implementation of educational programs. Using the quantitative and qualitative results of such studies, various aspects of educational programs should be revised to improve their competence. This review examined previous studies with a focus on the practical use of the CIPP evaluation model. The results showed that evaluations using the CIPP model, although they may be considered rather difficult, could provide the basis for improving education. Specifically, quantitative evaluations are more vulnerable to omitting aspects of a program that were not specified in advance. Qualitative materials can contribute a diverse range of opinions that cannot be explained by quantitative materials. Furthermore, rather than relying on a single group such as students as the source of evaluation material, obtaining a balanced perspective from the various parties with an interest in education can improve the reliability and validity of an evaluation, which can then be utilized as a convincing database.

Financial support and sponsorship

This study was financially supported by Tehran University of Medical Sciences.

Conflicts of interest

There are no conflicts of interest.

Acknowledgment

This paper was extracted from a PhD dissertation in Reproductive Health (IR.TUMS.FNM.REC.1398.057) approved by Tehran University of Medical Sciences. The authors would like to thank Ms. A. Keivanshekouh at the Research Improvement Center of Shiraz University of Medical Sciences for improving the use of English in the manuscript.

References

- 1. Mosleh Amirdehi H, Neyestani MR, Jahanian I. The role of external evaluation on upgrading the quality of higher education system: Babol University of Medical Sciences case. IRPHE. 2017;22:99–111.

- 2. Pazargadi M, Azadi Ahmadabadi G. Quality and Quality Assessment in Universities and Higher Education Institutions. Tehran: Boshra Publishers; 2008. pp. 45–9.

- 3. Leverenz L. Allied Health Education Program Accreditation-what does it mean? [Last accessed on 2014 May 19]. Available from: http://www.medical-colleges.net/alliedhealth.htm

- 4. Thawabieh AM. Students evaluation of faculty. Int Educ Stud. 2017;10:35–43.

- 5. Amini R, Vanaki Z, Emamzadeh Ghassemi H. The validity and reliability of an evaluation tool for nursing management practicum. Iran J Med Educ. 2005;5:23–31.

- 6. Stufflebeam DL, Zhang G. The CIPP Evaluation Model: How to Evaluate for Improvement and Accountability. 1st ed. New York: Guilford Publications; 2017.

- 7. Saif AA. Educational Measurement, Assessment and Evaluation. 7th ed. Tehran: Dowran Publisher; 2017.

- 8. Frye AW, Hemmer PA. Program evaluation models and related theories: AMEE guide no. 67. Med Teach. 2012;34:288–99. doi: 10.3109/0142159X.2012.668637.

- 9. Gandomkar R, Mirzazadeh A. CIPP evaluation model. Supporting planners and implementers of educational programs. Developmental steps in medical education. J Med Educ Dev Dev Center. 2014;11:401–2.

- 10. CASP Checklists-Critical Appraisal Skills Programme. [Last accessed on 23 Oct 2020]. Available from: https://casp-uk.net/casp-tools-checklists

- 11. Bahri N, Latifnejad Roudsari R. A Critical Appraisal of Research Evidence on Iranian Women's Attitude towards Menopause. IJOGI. 2016;18:1–11.

- 12. Hong QN, Fabregues S, Bartlett G, et al. The Mixed Methods Appraisal Tool (MMAT) version 2018 for information professionals and researchers. Education for Information. 2018;34:285–291.

- 13. Hong Q, Gonzalez-Reyes A, Pluye P. Improving the usefulness of a tool for appraising the quality of qualitative, quantitative and mixed methods studies, the Mixed Methods Appraisal Tool (MMAT). J Eval Clin Pract. 2018;24:459–67. doi: 10.1111/jep.12884.

- 14. Habib Zadeh S, Far YM. Internal evaluation of Midwifery in Islamic Azad University of Mahabad. Eur J Exp Biol. 2012;2:1175–80.

- 15. Ehsanpour S. Achieving minimum learning requirements from the viewpoints of midwifery students in Isfahan School of Nursing and Midwifery. Iran J Med Educ. 2006;6:17–24.

- 16. AbdiShahshahani M, Ehsanpour S, Yamani N, Kohan S, Hamidfar B. The Evaluation of Reproductive Health PhD Program in Iran. A CIPP Model Approach. 2015;197:88–97.

- 17. AbdiShahshahani M, Ehsanpour S, Yamani N, Kohan S. The evaluation of reproductive health PhD program in Iran: The input indicators analysis. Iran J Nurs Midwifery Res. 2014;19:620–8.

- 18. Kool B, Michelle R. Is the delivery of a quality improvement education programme in obstetrics and gynaecology for final year medical students feasible and still effective in a shortened time frame? BMC Med Educ. 2017;17:2–9. doi: 10.1186/s12909-017-0927-y.

- 19. Mohebbi N, Akhlaghi F, Yarmohammadian MH, Khoshgam M. Application of CIPP model for evaluating the medical records education course at master of science level at Iranian medical sciences universities. Procedia Soc Behav Sci. 2011;15:3286–90.

- 20. Ali M, Han SC, Bilal HSM, Lee S, Kang MJY, Kang BH, et al. iCBLS: An interactive case-based learning system for medical education. Int J Med Inform. 2018;109:55–69. doi: 10.1016/j.ijmedinf.2017.11.004.

- 21. Lee SY, Lee SH, Shin JS. Evaluation of medical humanities course in college of medicine using the context, input, process, and product evaluation model. J Korean Med Sci. 2019;34:1–16. doi: 10.3346/jkms.2019.34.e163.

- 22. Phattharayuttawat S, Chantra Y, Chaiyasit W. An evaluation of the curriculum of a graduate programme in Clinical Psychology. South East Asian J Med Educ. 2009;3:14–9.

- 23. Bazrafshan A, Haghdoost AA, Rezaei H, Beigzadeh A. A practical framework for evaluating health services management educational program: The application of the mixed-method sequential explanatory design. Res Dev Med Educ. 2015;4:47–54.

- 24. Powell BB, Conrad E. Utilizing the CIPP model as a means to develop an integrated service-learning component in a university health course. J Health Educ Teach. 2015;6:21–32.

- 25. Mirzazadeh A, Gandomkar R, Mortaz Hejri S, Hassanzadeh G, Emadi Koochak H, Golestani A. Undergraduate medical education programme renewal: A longitudinal context, input, process and product evaluation study. Perspect Med Educ. 2016;5:15–23. doi: 10.1007/s40037-015-0243-3.

- 26. Yarmohammadian MH, Mohebbi N. Review evaluation indicators of health information technology course of master's degree in medical sciences universities’ based on CIPP Model. J Edu Health Promot. 2015;4:1–8. doi: 10.4103/2277-9531.154122.

- 27. Rooholamini A, Amini M, Bazrafkan L, Dehghani MR, Esmaeilzadeh Z, Nabeiei P, et al. Program evaluation of an integrated basic science medical curriculum in Shiraz Medical School, using CIPP evaluation model. J Adv Med Educ Prof. 2017;5:148–54.

- 28. Adham A, Diala AH. The transition to blended learning in a school of nursing at a developing country: An evaluation. J Educ Tech. 2014;11:16–21.

- 29. Kim MJ, Lee H, Kim HK, Ahn YH, Kim E, Yun SN, et al. Quality of faculty, students, curriculum and resources for nursing doctoral education in Korea: A focus group study. Int J Nurs Stud. 2010;47:295–306. doi: 10.1016/j.ijnurstu.2009.07.005.

- 30. Lippe M, Carter P. Using the CIPP model to assess nursing education program quality and merit. Teach Learn Nurs. 2017;3:1–5.

- 31. Ashghali-Farahani M, Ghaffari F, Hoseini SS. Neonatal intensive care nursing curriculum challenges based on context, input, process, and product evaluation model: A qualitative study. Iran J Nurs Midwifery Res. 2018;23:111–8. doi: 10.4103/ijnmr.IJNMR_3_17.

- 32. Souto RQ, Pereira Linhares FM, De Melo Canêjo MI, Solange F, Tourinho V, Cavalcanti Cordeiro R, et al. Teaching-learning methodologies from the perspective of nursing students. Rev Rene. 2018;19:3408.

- 33. Neyazi N, Arab PM, Farzianpour F, Mahmoudi Majdabadi M. Evaluation of selected faculties at Tehran University of Medical Sciences using CIPP model in students and graduates point of view. Eval Program Plann. 2016;59:88–93. doi: 10.1016/j.evalprogplan.2016.06.013.

- 34. Nagata S, Gregg MF, Miki Y, Arimoto A, Murashima S, Kim MJ. Evaluation of doctoral nursing education in Japan by students, graduates, and faculty: A comparative study based on a cross-sectional questionnaire survey. Nurse Educ Today. 2012;32:361–7. doi: 10.1016/j.nedt.2011.05.019.

- 35. Lee SY, Shin JS, Lee SH. How to execute Context, Input, Process, and Product evaluation model in medical health education. J Educ Eval Health Prof. 2019;16(40):1–8. doi: 10.3352/jeehp.2019.16.40.

- 36. Akhlaghi F, Yarmohammadian MH, Khoshgam M, Mohebbi N. Evaluating the Quality of Educational Programs in Higher Education Using the CIPP Model. Health Inform Manage. 2011;8:629.

- 37. Okhovati M, YazdiFeyzabadi V, Beigzadeh A, Shokoohi M, Mehrolhassani M. Evaluating the program of bachelor degree in health services management at Kerman University of Medical Sciences, Iran, using the CIPP model (Context, Input, Process, Product). Strid Dev Med Educ. 2014;11:101–13.

- 38. Yazdani N, Moradi M. Evaluation of the quality of undergraduate nursing education program in Ahvaz based on CIPP evaluation model. Sadra Med Sci J. 2017;5:159–72.

- 39. Tabari M, Nourali Z, Khafri S, Gharekhani S, Jahanian I. Evaluation of educational programs of pediatrics, orthodontics and restorative departments of Babol dental schools from the perspective of the students based on the CIPP model. Caspian J Dent Res. 2016;5:8–16.

- 40. Pakdaman A, Soleimani Shayesteh Y, Kharazi fard MJ, Kabosi R. Evaluation of the achievement of educational objectives of the Community Oral Health and Periodontics Departments using the CIPP model of evaluation: Students’ perspective. J Dent Tehran Univ Med Sci. 2011;24:20–2.

- 41. Tabari M, Nourali Z, Jahanian I, Khafri S. Evaluation of educational programs in endodontics, periodontics and oral & maxillofacial surgery departments of Babol dental school from students’ perspective based on CIPP model. Caspian J Dent Res. 2018;7:8–15.

- 42. Jannati A, Gholami M, Narimani M, Gholizadeh M, Kabiri N. Evaluating educational program of bachelor of sciences in health services management using CIPP model in Tabriz. Depict Health. 2017;8:104–10.

- 43. Makarem A, Movahed T, Sarabadani J, Shaker M, Asadian Lalim T, Neda Eslam. Evaluation of educational status of oral health and community dentistry department at Mashhad dental school using CIPP evaluation model in 2013. Mash Dent Sch. 2015;38:347–62.

- 44. Alimohammadi T, Rezaeian M, Bakhshi H, VaziriNejad R. The Evaluation of the Medical School Faculty of Rafsanjan University of Medical Sciences Based on the CIPP Model in 2010. J Rafsanjan Univ Med Sci. 2013;12:205–18.

- 45. Hemati Z, Irajpour A, Allahbakhshian M, Varzeshnejad M, AbdiShahshahani M. Evaluating the neonatal intensive care nursing MSc program based on CIPP model in Isfahan University of Medical Sciences. Iran J Med Educ. 2018;18:324–32.

- 46. Tezakori Z, Mazaheri E, Namnebat M, Torabizadeh K, Fathi S, Ebrahimibil F. The evaluation of PhD nursing in Iran (Using the CIPP Evaluation Model). J Faculty Nurs Midwifery. 2010;12:44–51.

- 47. Saberian M, Asgari M, Asadi AA, Nobahar M, Atashnafas E, Ghods AA, et al. The pattern for internal evaluation of nursing school. J Med Semnan Med Sci Univ Special Med Educ. 2003;5:59–68.

- 48. Esfeden ZB, Dashtgard A, Ebadinejad Z. Evaluation of the realization of clinical nursing students’ learning objectives using CIPP evaluation model. Iran J Nurs Res. 2020;14:66–73.

- 49. Mahram B, Vahidi M, Areshtanab N. Achievement of educational goals from the perspectives of undergraduate nursing students and head nurses. J Nurs Educ. 2012;1:29–35.

- 50. Owlia Z, MotahariNejad H. Comprehensive evaluation of the anatomy department of the Shahid Sadoughi University of Medical Sciences, Yazd, based on CIPP model. The second international conference on innovation and research in educational sciences, management and psychology. 2018. [Last accessed on 23 Oct 2020]. pp. 1–6. Available from: https://www.civilica.com/Paper-HPCONF02-HPCONF02_192.html

- 51. Mohebbi N, Yarmohammadian M. Develop evaluation indicators of health information technology course at master's degree in selected medical sciences universities. Health Inf Manage. 2013;10:570. doi: 10.4103/2277-9531.154122.

- 52. Mazloomy Mahmoudabad SS, Moradi L. Evaluation of Externship curriculum for public health Course in Yazd University of Medical Sciences using CIPP model. J Educ Strategies Med Sci. 2018;11:27–36.

- 53. Shayan S, Mohammadzadeh Z, Entezari MH, Falahati M. Designing the internal evaluation indicators of educational planning in postgraduate program (input, process, outcome domains) in public health faculty. Isfahan. Iran J Med Educ. 2010;10:994–1005.

- 54. Siswadi Y, Houghty GS, Agustina T. Implementation of the CIPP evaluation model in Indonesian nursing schools. J Ners. 2019;14:126–31.

- 55. Danaei SM, Mazareie E, Hosseininezhad S, Nili M. Evaluating the clinical quality of departments as viewed by juniors and seniors of Shiraz dental school. J Edu Health Promot. 2015;4:75:1–7. doi: 10.4103/2277-9531.171788.

- 56. Fitzpatrick JL, Sanders JR, Worthen BR. Program Evaluation: Alternative Approaches and Practical Guidelines. 4th ed. Washington: Pearson Publishers; 2011.