Abstract

We design two‐stage confirmatory clinical trials that use adaptation to find the subgroup of patients who will benefit from a new treatment, testing for a treatment effect in each of two disjoint subgroups. Our proposal allows aspects of the trial, such as recruitment probabilities of each group, to be altered at an interim analysis. We use the conditional error rate approach to implement these adaptations with protection of overall error rates. Applying a Bayesian decision‐theoretic framework, we optimize design parameters by maximizing a utility function that takes the population prevalence of the subgroups into account. We show results for traditional trials with familywise error rate control (using a closed testing procedure) as well as for umbrella trials in which only the per‐comparison type 1 error rate is controlled. We present numerical examples to illustrate the optimization process and the effectiveness of the proposed designs.

Keywords: Bayesian optimization, conditional error function, subgroup analysis, utility function

1. INTRODUCTION

It is increasingly common to integrate subgroup identification and confirmation into a clinical development program. Biomarker‐guided clinical trial designs have been proposed to close the gap between the exploration and confirmation of subgroup treatment effects. Numerous statistical considerations (eg, multiplicity issues, consistency of treatment effects, trial design) need to be taken into account to ensure a proper interpretation of study findings, as outlined in recent reviews. 1 , 2 , 3 , 4

Several study designs are available for the investigation of subgroups in clinical trials. These include all‐comers designs where biomarker status or subgroup are not considered for enrolment but only in the trial analysis, and stratified designs where the trial prevalences for each subgroup, that is the proportion of patients recruited from each subgroup, are chosen initially and maintained throughout the trial. 5 , 6 Adaptive enrichment designs have been proposed to increase the efficiency of these trials. 7 , 8 , 9 , 10 , 11 These designs allow subgroups to be dropped for futility at interim analyses with the rest of the trial being conducted with subjects from the remaining groups only. The U.S. Food and Drug Administration guidance on adaptive designs highlights the use of adaptive enrichment designs as a means to increase the chance to detect a true drug effect over that of a fixed sample design. 12

Master protocols provide an infrastructure for efficient study of newly developed compounds or biomarker‐defined subgroups. 13 , 14 Such studies simultaneously evaluate more than one investigational drug or more than one disease type within the same overall trial structure. 15 , 16 , 17 An umbrella trial is a particular type of master protocol in which enrolment is restricted to a single disease but the patients are screened and assigned to molecularly defined subtrials. Each subtrial may have different objectives, endpoints or design characteristics. An example of an umbrella trial is the ALCHEMIST trial, in which patients with nonsmall cell lung cancer are screened for EGFR mutation or ALK rearrangement and assigned accordingly to subtrials with different treatments. 18

In this paper, we study confirmatory trials that allow the investigation of the treatment effect in prespecified nonoverlapping subgroups. In particular, we focus on adaptive clinical trials that allow the modification of design elements without compromising the integrity of the trial. 19 We propose a class of adaptive enrichment designs that use a Bayesian decision framework to optimize the design parameters, such as the trial prevalences of the subgroups, the weights for multiple hypotheses testing, and adaptation rules. A similar framework has been used in References 20, 21, 22, 23, 24, 25, 26, 27 for adaptive enrichment trials.

We consider two types of problem. In the first case, we study designs that preserve the familywise error rate (FWER) of the trial using a closed testing procedure to test the null hypotheses of no treatment effect in the two subgroups. This is what is typically required in adaptive enrichment trials where a single treatment is evaluated against a control. In the second case, we show results for umbrella trial designs without multiplicity adjustment. Here, we consider studies made up of separate simultaneous trials, for which it has been argued that no control of multiplicity is needed. 28 Our work, therefore, provides an overarching framework for both adaptive enrichment designs and umbrella trials.

The manuscript is organized as follows: In Section 2, we introduce the designs and distinguish between single‐stage designs (Section 2.2) and two‐stage designs (Section 2.3), and in Section 2.4 we discuss how to adapt our proposed designs to umbrella trials. In Sections 3 and 4 we present numerical examples. We describe how our methods may be extended to designs with more than two stages in Section 5 and we end with conclusions and a discussion in Section 6.

2. BAYES OPTIMAL DESIGNS

2.1. The class of trial designs

Consider a confirmatory parallel‐group clinical trial comparing a new treatment and a control with respect to a pre‐defined primary endpoint. We assume the patient population may be divided into disjoint, biomarker‐defined subgroups. Given a maximum achievable sample size, n, we aim to optimize the trial design by maximising a specific utility function.

Suppose two biomarker‐defined subgroups have been identified before commencing the trial. Let be the prevalence of the first subgroup in the underlying patient population and the prevalence of the second subgroup. Let and be the treatment effects, denoting the difference in the mean outcome between treatment and control, in the first and second subgroups, respectively. We consider trials to investigate the null hypotheses H01: and H02: with corresponding alternative hypotheses H11: and H12: . In Sections 2.2 and 2.3 we consider confirmatory trials in which strong control of the FWER is imposed. 29 In our discussion of umbrella trials in Section 2.4, we assume multiplicity control is not required.

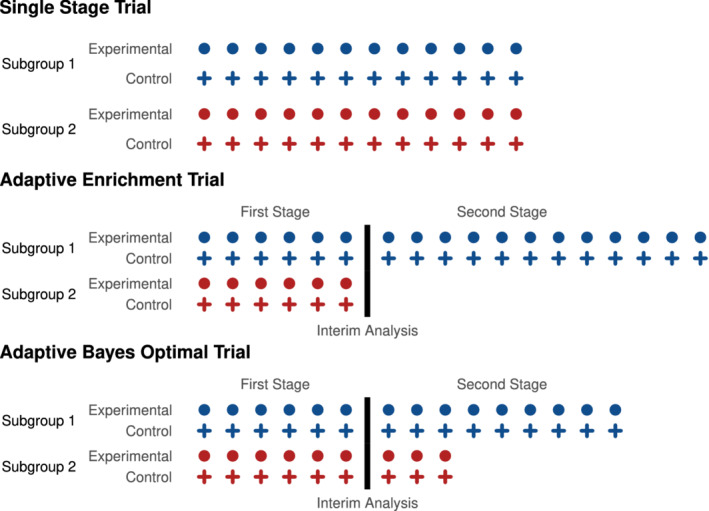

We consider optimization within a class of designs that have a single interim analysis at which adaptation can take place. The total sample size is fixed at n with s(1)n patients in the first stage and s(2)n patients in the second stage, where s(1) > 0, s(2) ≥ 0 and s(1) + s(2) = 1. In the first stage, patients are recruited from subgroup 1 and from subgroup 2, where , and . In the second stage, patients are recruited from subgroup 1 and from subgroup 2, where , and , and the values of and may depend on the first stage data. Within each stage and subgroup, we assume equal allocation to the two treatment arms (this assumption is not strictly necessary and could be relaxed). Figure 1 gives a schematic representation of the trial design.

FIGURE 1.

Schematic representation of the three types of trial design. In the single‐stage trial, the sampling prevalences of the subgroups are fixed throughout the trial. In standard adaptive enrichment trials, patients are recruited with predefined subgroup prevalences until the interim analysis, at which point a decision is taken to continue with the same prevalences or to sample from a single subgroup. In the Bayes optimal adaptive trial designs that we consider, the sampling prevalences may be changed at the interim analysis [Colour figure can be viewed at wileyonlinelibrary.com]

The definition of a particular design in is completed by specifying the multiple testing procedure to be used and the method for combining data across stages when adaptation occurs. We use a closed testing procedure to control FWER, applying a weighted Bonferroni procedure to test the intersection hypothesis. In this procedure, weights are initially set as and but these may be modified in the second stage if adaptation occurs. The error rate for each hypothesis test is controlled by preserving the conditional type I error rate when an adaptation is made. Thus, while we use a Bayesian approach to optimize the design, the trial is analyzed using frequentist procedures that control error rates at the desired level, adhering to conventional regulatory standards.

We follow a Bayesian decision theoretic approach to optimize over trial designs in the class . In assessing each design, we assume a prior distribution for the treatment effects in each subgroup and a utility function 30 that quantifies the value of the trial's outcome. We shall optimize designs with respect to the timing of the interim analysis, the proportion of patients recruited from the two subgroups at each stage of the trial, the weights in the weighted Bonferroni test, and the rule for updating these weights given the interim data.

We summarize the data observed during the trial by the symbol , noting that this summary should contain information about the numbers of observations from each subgroup and weights to be used in the weighted Bonferroni test at each stage, as well as estimates of and obtained from observations before and after the interim analysis. We define our utility function to be

| (1) |

where is the indicator function. By definition, the data summary contains the information needed to determine if each of the hypotheses H01 and H02 is rejected.

The utility (1) involves the size of the underlying subgroups as well as the rejection of the corresponding hypotheses. Thus, rejection of the null hypothesis for a larger subgroup is given greater weight. If the population prevalence of the two subgroups is not known, a prior on may be added. We note that terms in the function (1) are positive when a null hypothesis is rejected but the associated treatment effect is very small or even negative: this issue could be addressed by multiplying each term by an indicator variable which takes the value 1 if the relevant parameter, or , is larger than zero or above a clinically relevant threshold (eg, Stallard et al 31 where a similar approach is used for treatment selection).

Since the trial design is optimized with respect to the stated utility, it is important to choose a utility function that reflects accurately the relative importance of possible trial outcomes. Furthermore, the definition of utility can be adapted to reflect the interest of different stakeholders, for example, Ondra et al 21 and Graf, Posch and König 24 propose utility functions that represent the view of a sponsor or take a public health perspective.

Let denote the prior distribution for . Then, the Bayes expected utility for a trial design is

where we have taken the expectation over the sampling distribution of the trial data given the true treatment effects , with an outer integral over the prior distribution .

When choosing the prior , it is important to remember that represents the expected utility, averaged over . If an “uninformative” prior is chosen, this will place weight on extreme scenarios, such as large negative treatment effects, which have little credibility. Thus, when considering the Bayes optimal design, it is important to use subjective, informative priors. In some cases, pilot studies or historic observational data may be available to construct the prior distribution.

In this paper, we assume the prior distribution to be bivariate normal,

| (2) |

Here, the correlation coefficient reflects the belief about the existence of common factors that contribute to the treatment effects in the two subgroups.

2.2. Bayes optimal single‐stage design

2.2.1. Patient recruitment and estimation

Suppose we wish to conduct a single‐stage trial, which is the special case where s(2) = 0, usually referred to as a stratified design. For simplicity of notation in this section, we write rjand rather than and for j = 1 and 2. We assume patients can be recruited at these rates regardless of the true proportions and in the underlying patient population. In addition, we assume that patients are randomised between the new treatment and the control with a 1 : 1 allocation ratio in each subgroup.

During the trial we observe a normally distributed endpoint for each patient and we assume a constant variance for all observations. For patient i from subgroup j on the new treatment we have , i = 1, … , rjn/2, and for patient i from subgroup j on the control treatment we have , i = 1, … , rjn/2. The estimate of the treatment effect in subgroup j, is

| (3) |

2.2.2. Hypothesis testing in the single‐stage design

Consider the case s(2) = 0 and 0 < r1 < 1. Then

and the corresponding Z‐values

follow standard normal distributions under the null hypotheses H01 and H02.

We use a closed testing procedure to ensure strong control of the FWER at level. 32 To construct this, we require level tests of H01: , H02: and H01 ∩ H02: . We reject H01 globally if the level tests reject H01 and H01 ∩ H02. Similarly, we reject H02 globally if the level tests reject H02 and H01 ∩ H02.

For the individual tests we reject H01 if and H02 if . To test the intersection hypothesis, we use a weighted Bonferroni test: given predefined weights and , where , we reject H01 ∩ H02 if or . The resulting closed testing procedure is equivalent to the weighted Bonferroni‐Holm test and will be generalised to adaptive tests in Section 2.3.

We note that the choice of a closed testing procedure is not restrictive in this setting since any procedure that gives strong control of the FWER may be written as a closed testing procedure. 22 , 23 Furthermore in the special cases r1 = 1 and r2 = 1, where the trial recruits from only one of the subgroups, just one subgroup is tested and only the test of the individual hypothesis is required. These cases are accommodated in our general class of designs by setting when r1 = 1 and when r2 = 1.

2.2.3. Bayesian optimization

In the single‐stage trial we wish to optimize the trial prevalences of each subgroup, r1 and r2, and the weights in the Bonferroni‐Holm procedure, and . Given the constraints r1 + r2 = 1 and , we denote the set of parameters to optimize by .

Let denote the conditional distribution of given for design parameters a. The Bayes expected utility is given by

The Bayes optimal design is given by the pair that maximises the Bayes expected utility of the trial, that is

Given our simple choices for the prior distribution and the utility function this integral may be computed directly (see Section S1.2 of Appendix S1). We find the Bayes optimal single‐stage trial by a numerical search over possible values of a.

2.3. Bayes optimal two‐stage adaptive design

2.3.1. Adding a second stage

Consider now a two‐stage design in which data from the first stage inform adaptations in the second stage. The estimate of for subgroup j based on data collected in stage k is

| (4) |

where and are the mean responses in subgroup j in stage k for the treatment arm and control arm, respectively. Given the value of , the first stage estimates are independent with distributions

The trial prevalences, and , of the two subgroups in the second stage are dependent on and but, conditional on and , the second‐stage estimates are independent and conditionally independent of and with

2.3.2. Hypothesis testing in the two‐stage adaptive design

There is a variety of approaches to test multiple hypotheses in a two‐stage adaptive design. 33 , 34 , 35 , 36 We shall use a closed testing procedure to ensure strong control of the FWER at level , as we did for the single‐stage design in Section 2.2.2. In constructing level tests of the null hypotheses H01, H02 and H01 ∩ H02 we employ the conditional error rate approach. 37 , 38 Based on a reference design and its predefined tests, we calculate the conditional error rate for each hypothesis and define adaptive tests which preserve this conditional error rate, thereby controlling the overall type I error rate.

Consider a reference design in which the trial prevalences of subgroups 1 and 2 and the weights in the weighted Bonferroni test of H01 ∩ H02 remain the same across stages, so and for j = 1 and 2. In the reference design, tests are performed by pooling the stage‐wise data within each subgroup and treatment arm, and using the conventional test statistics, as for the single‐stage test. For j = 1 and 2, the pooled estimate of across the two stages of the trial is

with corresponding Z‐value

and the null hypothesis H0j is rejected at level if . Let

then the conditional distribution of given the interim data is

and the conditional error rates for the tests of H0j are

| (5) |

Similarly, the conditional error rate for the test of H01 ∩ H02 is

| (6) |

See Section S1.1 of Appendix S1 for further details on the derivations of the conditional distributions.

In the adaptive design, if no adaptations are made at the interim analysis we apply the tests as defined for the reference design. Suppose now that adaptations are made and the trial prevalences in stage 2 are set to be and with weights and for the weighted Bonferroni test. In this case, we calculate the conditional error rates A1, A2 and A12 prior to adaptation from Equations (5) and (6). We then define tests of H01, H02 and H01 ∩ H02 based on stage 2 data alone that have these conditional error rates as their type 1 error probabilities. Given the updated and ,

Thus, in our level tests, we reject H01 if , we reject H02 if and, applying a weighted Bonferroni test with weights and , we reject H01 ∩ H02 if . Finally, following the closed testing procedure, we reject H01 globally if the level tests reject H01 and H01 ∩ H02 and we reject H02 globally if the level tests reject H02 and H01 ∩ H02.

2.3.3. Two‐stage optimization

We denote the set of initial design parameters by and the second‐stage parameters by . Let and be the vectors of estimated treatment effects in each subgroup, based on the first and second‐stage data, respectively, as defined in Equation (4). Denote the conditional distributions of the estimated effects in each stage of the trial by and and the posterior distribution of given the stage 1 observations by . Then, the Bayes expected utility can be written as

| (7) |

We find the optimal combination of design parameters a1 before stage 1 and a2 before stage 2 using the backward induction principle. First we construct the Bayes optimal a2 for all possible and a1. Then we construct the Bayes optimal a1 given that the optimal a2 will be used in the second stage of the trial.

2.3.3.1. Optimizing the decision at the interim analysis

Denoting the marginal distribution of by , we have

and the right‐hand side of Equation (7) can be written as

Thus, given a1 and , the Bayes optimal decision for the second stage is the choice of a2 that maximises

For known values of and a1, we can find the conditional error rates A1, A2, and A12 used in hypothesis testing in stage 2, hence we may evaluate for given a1, , a2, and . Our choices for the prior distribution and utility function mean that it is quite straightforward to compute for given a1, a2 and . Thus, we are able to perform a numerical search seeking

to find the Bayes optimal a2.

2.3.3.2. Overall trial optimization

Having found the Bayes optimal parameters a2 for the second stage of the trial as a function of , we determine a1, the Bayes optimal choice for the initial parameters, as

We conduct a search over possible values of a1 to maximize the above integral and find the optimal choice of a1. Computing the integral for a given value of a1 by numerical integration is not straightforward. Instead, we have used Monte Carlo simulation to carry out this calculation for each value of a1.

2.4. Bayes optimal umbrella trials

We now consider the case of umbrella trials, where it has been argued that no multiplicity adjustment is required as the hypotheses to be tested concern different experimental treatments targeted to different molecular markers or subgroups. 28 Since each treatment is assessed separately, an umbrella trial can be viewed a set of independent trials even though they are run under a single protocol.

We consider umbrella trials with two subgroups, as in the previous sections. However, without multiplicity adjustment, the hypothesis testing procedure reduces to testing the elementary hypotheses H01 and H02 each at level . In applying the conditional error rate approach, only the computation of conditional error rates A1 and A2 from Equation (5) is required. Then, with and denoting the test statistics based on second‐stage data only, H01 is rejected if and H02 is rejected if . No test of the intersection hypothesis is performed.

Design parameters are optimized with respect to the utility function in Equation (1). To frame the optimization problem in the same way as in the previous sections, the interim decision in a two‐stage umbrella trial will optimize only the second‐stage subgroup trial prevalences, so , while in the first stage we optimize the subgroup trial prevalences and the timing of the interim analysis, so . In the case of a single‐stage umbrella trial, only the subgroup prevalences are optimized, so a = (r1). We have used a normal prior distribution, as defined in Equation (2), in optimizing the design parameters of single‐stage and two‐stage trials. In the case of two‐stage designs, the interim analysis uses the test statistics from the first stage and the prior distribution to perform adaptations and the final tests are performed using the conditional error rate approach.

3. NUMERICAL EXAMPLES AND COMPARISONS

In this section, we give numerical examples of optimized single‐stage and two‐stage designs in a range of scenarios. We show results for cases with and without multiplicity correction, referring to these as enrichment and umbrella trials, respectively. Additionally, we illustrate the optimization of the decision rule at the interim analysis. In Table 1, we provide an overview of the scenarios considered and the parameters that are optimized.

TABLE 1.

The scenarios considered in the numerical examples. The term “opt” indicates that parameters were optimized, while “N/A” means the parameters are not applicable. The parameters and are either specified by a prior distribution in which or specific values of and are given

|

|

|

|

|

|

s(1) |

|

|

|

|

|||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Figure 2 | Single‐stage | 0.3 | 0 to 0.3 | 0, 0.2 | 0.02 to 0.44 | 0.5 | N/A | opt | opt | prior | ||||||||||

| Figure S2 | Single‐stage | 0.3 | 0 to 0.3 | 0, 0.2 | 0.2 | −1 to 1 | N/A | opt | opt | prior | ||||||||||

| Figure 3 | Interim decision | 0.3 | 0.1 | 0 | 0.2 | −0.8, 0.5 | 0.25, 0.5 | 0.3 | 0.3 | prior | ||||||||||

| Figure 4 | Two‐stage | 0.3 | 0, 0.3 | 0, 0.2 | 0.2 | 0.5 | 0.1 to0.9 | opt | opt | prior | ||||||||||

| Figure 5 | Two‐stage | 0.3 | 0 to 0.3 | 0, 0.2 | 0.02 to 0.4 | 0.5 | opt | opt | opt | prior | ||||||||||

| Figure S10 | Two‐stage | 0.3 | 0 to 0.3 | 0, 0.2 | 0.2 | −0.8 to 0.8 | opt | opt | opt | prior | ||||||||||

| Figures 6 and S11 | Power | 0.3 | 0.1, 0.2 | 0 | 0.2 | 0.5 | opt | opt | opt | 0 to 0.3 | 0, 0.2 | |||||||||

3.1. Optimal single‐stage designs

In studying the impact of the prior distribution on optimized trial design parameters for single‐stage designs, we consider studies where the response variance is and the total sample size is fixed at n = 700. We assume a multivariate normal prior distribution for as defined in Equation (2) with parameters , , and , and we compute optimal designs for a variety of such priors. The FWER in enrichment designs and the per‐comparison error rate in umbrella designs is fixed at .

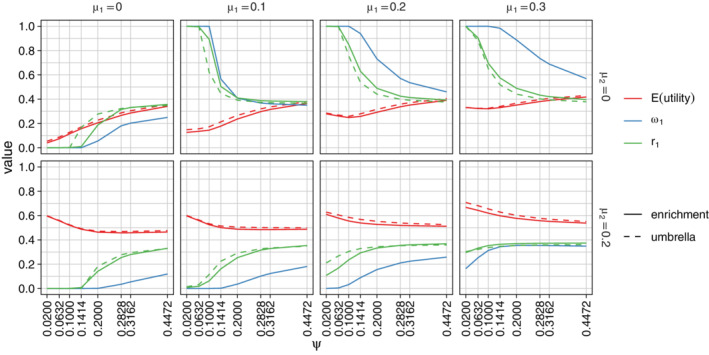

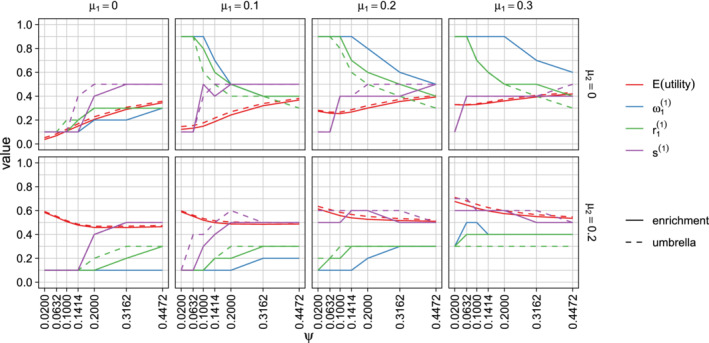

In Figure 2 we display the effect of the prior SD on the optimal design parameters when the population prevalence of subgroup 1 is . We considered prior SDs of 0.02, 0.0632, 0.1, 0.1414, 0.2, 0.3162, and 0.44, corresponding to information from studies with 10 000, 1000, 400, 200, 100, 40 and 20 subjects in each subgroup.

FIGURE 2.

Optimized design parameters for single‐stage designs and the expected utility, averaged over the prior. Parameters are for enrichment trials and a = (r1) for umbrella trials. Results are classified by and , the prior means of and , and the prior SD . The prior correlation between and is fixed at and the population prevalence of subgroup 1 is assumed to be [Colour figure can be viewed at wileyonlinelibrary.com]

The mean and variance of the prior distribution have a large impact on the optimal design parameters r1 and . The optimal values of r1 and and the expected utility of the resulting designs are very similar for enrichment and umbrella designs. If and , optimal values of r1 and are larger than 0.3, the population prevalence of subgroup 1, so the design over‐samples this subgroup. If and , the optimal design under‐samples subgroup 1. When both and are greater than zero, the optimal design has r1 < 0.5 and , reflecting the fact that it is advantageous to sample more subjects from subgroup 2 and allocate more type 1 error probability to the test of H02 since implies that P(Reject H02) has a greater weight than P(Reject H01) in the utility function.

In extreme cases where , and the prior variance is small, the optimal design has r1 = 0, so only subgroup 2 is sampled. When , and the prior variance is small, the optimal design has r1 = 1 and only subgroup 1 is sampled.

In Figure S2, we show the effect of the prior correlation on the design parameters when the prior SD is . We observe that the correlation has an impact on the optimal weight for testing the intersection hypothesis, in particular, when the treatment effects and have a high positive correlation, it is better to place most weight on one hypothesis rather than split the weight between the two hypotheses.

In Figures S3 and S4 we present further results for different values of , varying in Figure S3 and in Figure S4. Since the utility to be maximized depends on the population prevalences, the optimal design parameters vary considerably with . We see from Figure S3 that has only a small impact on the optimal value of r1 when adjusting for multiplicity and no impact at all in umbrella designs where no multiplicity adjustment is made. Figure S4 shows that the dependence of optimal design parameters on is similar to that seen in Figure 2: when the prior variance is large the optimal choices for r1 and are close to , while for smaller variances the optimal designs depend on the prior means and as well as .

3.2. Optimal two‐stage designs

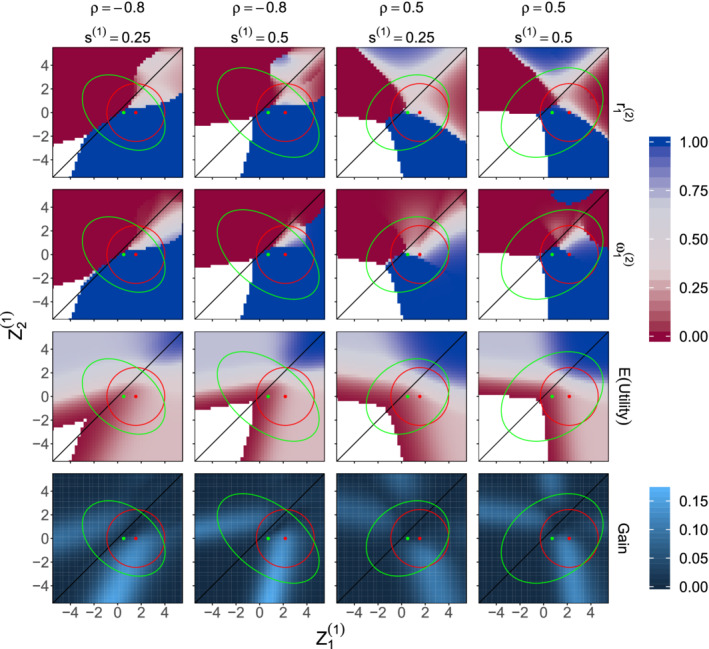

Figure 3 illustrates optimal adaptation rules for two‐stage designs. In these examples n = 700, , the population prevalence of subgroup 1 is , and the prior distribution for has parameters , , and or −0.8. The first‐stage design parameters have not been optimized and are set as with s(1) equal to 0.25 or 0.5. The FWER in enrichment designs and the per‐comparison error rate in umbrella designs is fixed at .

FIGURE 3.

Examples of optimal adaptation rules when , the prior distribution for has parameters , , and or −0.8, and first stage design parameters are set as and s(1) = 0.25 or 0.5. Optimized values of and are shown for each combination of first stage Z‐values and . Also shown are the conditional expected utility when the trial proceeds using the optimized values of and and the increase in conditional expected utility compared to continuing with no adaptation. In each plot, the red circle indicates the 95% highest density region for the distribution of when the true treatment effects are and and the green ellipse indicates the 95% highest density region for the prior predictive distribution of . The white regions contain values of for which the maximum conditional expected utility is below 0.01. In these cases the numerical optimization becomes unstable and optimal values for and are not displayed [Colour figure can be viewed at wileyonlinelibrary.com]

The adaptation rules specify the second‐stage design parameters that optimize the expected utility, as defined in Equation (1), given the first stage statistics and . The optimal and are calculated using the Hooke‐Jeeves derivative‐free minimization algorithm through the hjkb function in the dfoptim package 39 in R. 40 We also calculated the conditional expected utility if the trial continued with no adaptation, so and , and the plots in the bottom row of Figure 3 show the gain in the conditional expected utility due to the optimized adaptation. In Section S3 of Appendix S1, we present optimal interim rules for further values of .

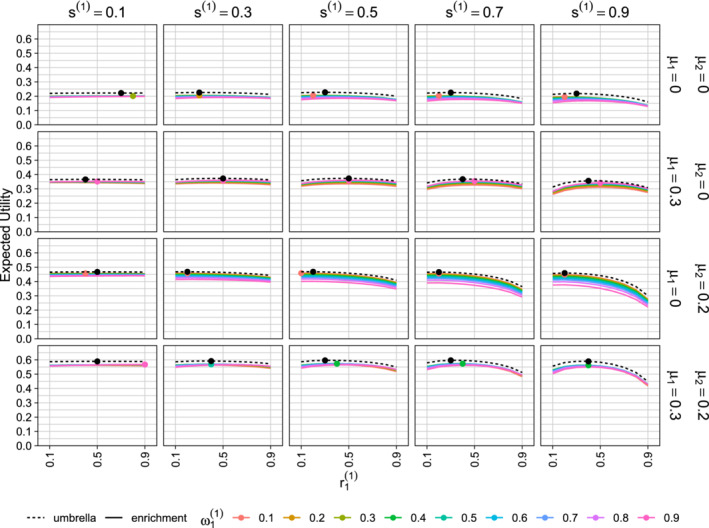

In Figure 4, we illustrate the procedure for optimizing first‐stage design parameters, for an enrichment design or for an umbrella design. For each combination of prior parameters and first‐stage design parameters a, we generated 1000 samples of first‐stage data under treatment effects drawn from the prior distribution. For each first‐stage dataset, we found the optimal second‐stage design parameters and noted the conditional expected utility using these optimal parameters. We took the average of the 1000 values of the optimized conditional expected utility as our simulation‐based estimate of the expected utility for this choice of a. The optimal first‐stage design parameters for a given prior distribution are those values of s(1), , and in the case of an enrichment design , that yield the highest expected utility.

FIGURE 4.

Optimization of first‐stage design parameters. The population prevalence of subgroup 1 is and the prior distribution for has parameters or 0.3, or 0.2, and . Each column shows results for a different value of . The plots show the expected utility as a function of , with coloured solid lines for different values of in an enrichment trial and black dashed lines for an umbrella trial with no multiplicity adjustment. In each panel, the colored dot indicates the combination of and that yields the maximum expected utility for an enrichment design and the black dot shows the optimum value of for an umbrella design [Colour figure can be viewed at wileyonlinelibrary.com]

Our results show the impact of the prior distribution on the optimized trial design parameters. The flat lines when s(1) = 0.1 indicate that the expected utility is hardly affected by the choice of and when the interim analysis is performed early in the trial. When the interim analysis is performed later, the choice of first‐stage design parameters is more important. It should be noted that for each pair of prior means , expected utility close to the overall optimum can be achieved using a wide range of first‐stage design parameters as long as the second‐stage design is optimized, given the first‐stage data.

In Figures 5 and S10 we present optimized values of the first‐stage design parameters, s(1), , and , given that optimal values of the second‐stage design parameters will be used following the interim analysis. The results are similar to those observed for optimal single‐stage designs. The prior variance has a large impact on the first‐stage optimal design: for smaller variances, interim analyses closer to the beginning of the trial yield a larger expected utility, while with larger variances, interim analyses after around 40% to 60% of the patients have been recruited are preferable. When the prior means are both 0 the optimal design parameters and are close to the subgroup 1 prevalence . However, if the prior suggests a benefit is more likely in subgroup 1, the optimal design over‐samples this subgroup, increasing its trial prevalence and testing weight. Figure S10 shows that, for enrichment designs, the prior correlation has a large impact on the choice of but little effect on the optimal trial prevalences.

FIGURE 5.

Optimized design parameters for two‐stage designs and the expected utility, averaged over the prior. Parameters are for enrichment trials and for umbrella trials. Results are classified by and , the prior means for and , and by the prior SD . The prior correlation between and is fixed at and the population prevalence of subgroup 1 is assumed to be [Colour figure can be viewed at wileyonlinelibrary.com]

As for single‐stage designs, the optimal values of are similar for enrichment and umbrella designs. A notable difference is that while the prior correlation has no effect at all on the optimal values of r1 in a single‐stage umbrella design, the optimal value of in a two‐stage umbrella design does show a small dependence on . In the case of a single‐stage umbrella design, the marginal distributions of and do not depend on and thus, with no multiplicity adjustment in testing H01 and H02, the expected value of the utility defined in Equation (1) does not depend on . However, in a two‐stage umbrella trial, the optimal choice of and the resulting conditional expected utility depends on both and and it is the joint distribution of , which depends on , that determines the optimal value of .

It should be noted that the procedures we have described impose a high computational burden. While it is relatively straightforward to optimize the decision at the interim analysis, the overall optimization of the trial is performed using simulations over a grid of values for the first‐stage design parameters. More rapid computation of the optimal values may be achieved by using approximations to the utility when extreme first‐stage values are observed, for example, if both and are large and negative, the expected utility is practically zero for all choices of and . In practice, one may wish to add the option of stopping the trial for futility if extreme negative results are observed at the interim analysis. The methods we have presented can be extended to find efficient designs that incorporate this option by working with a utility of the form

assigning a positive value k to each observation saved by early stopping.

3.3. Performance of the Bayes optimal design under specific alternative hypotheses

In this section we consider adaptive designs optimized for a particular prior distribution for but we evaluate their performance under specific values of . We consider trials with a total sample size n = 700, response variance , and population prevalence of subgroup 1 equal to . As a benchmark for comparison, we consider a nonoptimized, single‐stage design with and . We derive and assess the performance of single‐stage designs for which design parameters r1 and are optimized as described in Section 2.2, and we derive and assess two‐stage designs for which first‐stage design parameters and the adaptation rule are optimized as described in Section 2.3. In optimizing designs, we assume the normal prior distribution for presented in Equation (2) with or 0.2, , and . These priors reflects the belief that a treatment benefit is more likely in subgroup 1. The prior SD of 0.2 corresponds to information from a trial with 100 subjects in each subgroup.

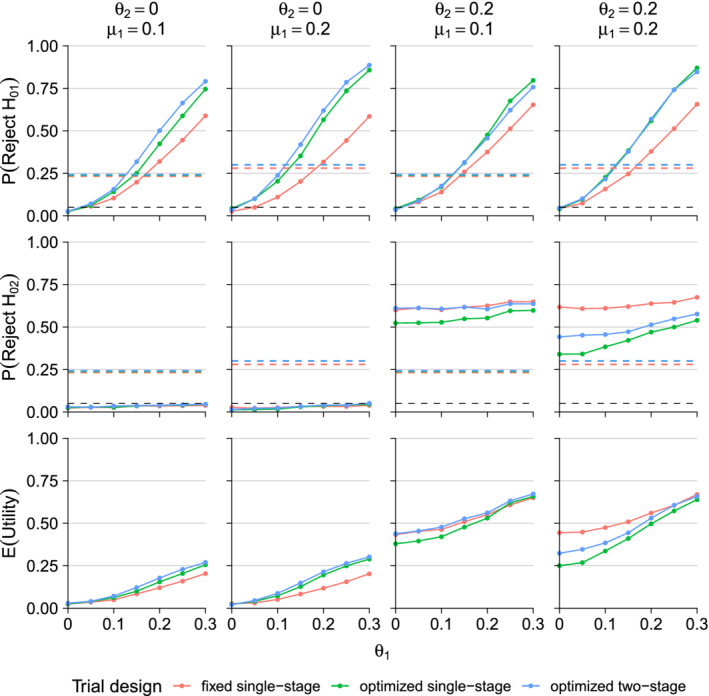

We evaluate the operating characteristics of the designs for values of ranging from 0 to 0.3 and or 0.2. This creates scenarios with a treatment effect in only one subgroup when or with a treatment effect in both subgroups when and . Figure 6 presents simulation results for enrichment trials and Figure S11 presents results for umbrella trials. The plots show the probabilities of rejecting H01 and H02 and the average utility at the end of the trial for a variety of combinations of , , , and . For the scenarios considered, we see that optimizing the trial for the assumed priors leads to a substantial increase in the power to reject H01 as compared to the nonoptimized, single‐stage design. However, the optimized designs have lower power to reject H02 when . The optimized designs have a higher average utility than the nonoptimized design when . If , the two‐stage design optimized for the prior with has similar average utility to the the nonoptimized design but average utility of the optimized one‐stage design is a little lower; both one‐stage and two‐stage designs optimized for the prior with have lower average utility than the the nonoptimized design. These results are in line with previous studies 41 , 42 which showed adaptive enrichment designs provide the greatest advantage when a treatment effect is present in only one subgroup.

FIGURE 6.

Operating characteristics of enrichment trials. The prior distribution for subgroup treatment effects is normal with means or 0.2 and , SDs and correlation . The total sample size is 700 and the population prevalence of subgroup 1 is . Results are given for ranging from 0 to 0.3 and or 0.2. The black dashed lines in the two top rows are placed at 0.05 as reference to the significance level, while the dashed lines in the third row indicates the expected utility of the trial given the initial design parameters [Colour figure can be viewed at wileyonlinelibrary.com]

4. WORKED EXAMPLE: IMPLEMENTING AN OPTIMIZED ADAPTIVE ENRICHMENT TRIAL

Suppose we wish to compare an experimental treatment to a control in a phase III clinical trial. We intend to use adaptive sample allocation as there is reason to believe the new treatment may only benefit a subgroup of patients. This trial will have a normally distributed endpoint with variance and, using information from a pilot study with 40 subjects from each subgroup, we construct a prior distribution for the treatment effects

The total sample size for the trial is planned to be n = 700 subjects. The population prevalence of subgroup 1 is and a FWER is to be used for the study.

Under the above assumptions, the results in Figure 5 for show the optimal first‐stage parameters to be s(1) = 0.5, and . Thus, we recruit 350 patients in the first stage of the trial with 40% of these from subgroup 1.

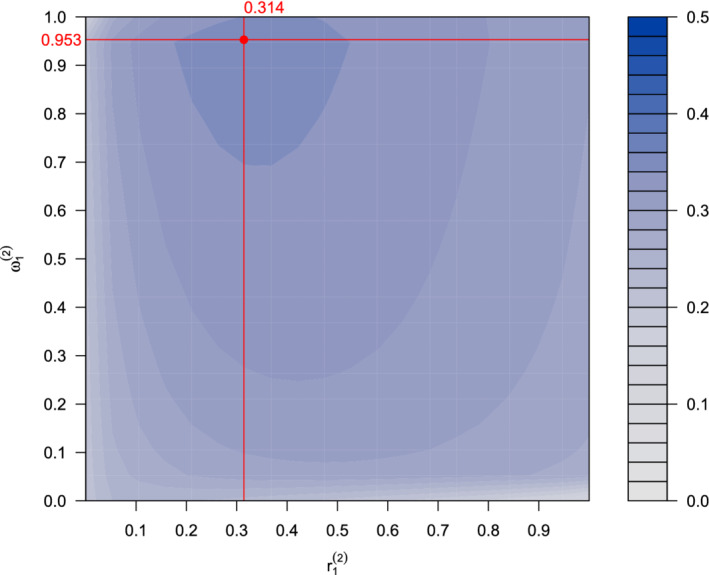

Now suppose we observe interim estimates and . These give Z‐values and and the conditional error rates, as defined in Equations (5) and (6), are A1 = 0.6140, A2 = 0.0184, and A12 = 0.3912. At this point, we optimize the second‐stage design parameters and . Figure 7 plots the conditional expected utility as a function of and on a color‐coded scale. The maximum conditional expected utility, obtained using the Hooke‐Jeeves algorithm, is at and . We therefore conduct the second stage of the trial using these parameter values.

FIGURE 7.

Interim optimization. The color indicates the expected utility given interim data for each combination of second‐stage prevalence for subgroup 1 and testing weight given the interim data [Colour figure can be viewed at wileyonlinelibrary.com]

Suppose, after recruiting the remaining subjects, the second‐stage estimates are and . The corresponding Z‐values are and , with P‐values and . Since and

we can globally reject H01. However, since we cannot reject H02.

5. EXTENDING THE DESIGNS

The methods we have described can be extended to trial designs with more than two stages or more than two subgroups. Suppose K disjoint subgroups S1, … , SK are specified and we wish to test the null hypotheses H0k: against the alternatives H1k: , where denotes the treatment effect in subgroup k. In a trial with J stages and a total sample size n, we recruit s(j)n patients in each stage, where s(1) + ⋯ + s(J ) = 1, and at stage j we recruit patients from subgroups k = 1, … , K, where . The data provide estimates , at each stage j, from which we obtain Z‐values . In an enrichment design where control of the FWER is required, a suitable closed testing procedure is defined in terms of the . Then, H0k is rejected globally at level if all intersection hypotheses involving H0k are rejected in local, level tests.

An adaptive design can be created by repeated application of the conditional error approach. An initial reference design is stated and when adaptation occurs, the modified testing procedure is defined so as to preserve the conditional error rate of each individual and intersection hypothesis test under the updated design for the remainder of the trial. This updated design becomes the new reference design under which conditional error rates will be calculated at any subsequent adaptation point.

We can consider optimizing the choice of the design parameters s(j) and or weights in the tests of intersection hypotheses. The generalization of our earlier approach requires a prior distribution for the treatment effects and a utility function whose expectation is to be maximised. If is the population prevalence of subgroup k, k = 1, … , K, a natural extension of Equation (1) is

In Section 2.3.3 we applied backwards induction to find the optimal design for a trial with two subgroups and two stages. Since the dimension of the state space grows with the number of subgroups and stages, such a direct application of backwards induction may not be feasible more generally. Other methods of optimization can be employed to find efficient, if not globally optimal, designs. For example, in a multistage design one may construct the adaptation rule at each interim analysis assuming the trial will continue without any further adaptation. We note that the optimization process is liable to be computationally intensive and it is important to commit resources to assess trial designs in a timely manner.

6. DISCUSSION

We have presented a Bayesian decision theoretic framework in which a clinical trial design can be optimized when two disjoint subgroups are under investigation. Our approach has both Bayesian and frequentist elements: the rules for hypothesis testing control the type I error rate and Bayesian decision tools are used to choose the design parameters within this scheme. This allows optimization of the sampling prevalence of each subgroup and weights in a weighted Bonferroni test of the intersection hypothesis, as well as optimal adaptation of these design parameters at the interim analysis. The optimal design maximizes the expected value of the specified utility function, averaged over the prior distribution assumed for the treatment effects in the two subgroups. After focusing on two‐stage trials with two subgroups in Sections 2 and 4, we outlined how our optimization framework may be extended to allow more subgroups or stages in the trial in Section 5.

Our results provide insights into how the mean and variance of the prior distribution affects the optimal timing of the interim analysis and the trial prevalences for each subgroup of patients. In practice, it is advisable to consider the sensitivity of the design's efficiency to modeling assumptions in order to create a trial design with robust efficiency.

In contrast to adaptive enrichment designs where recruitment is either from the full patient population or restricted to a single subgroup, we propose sampling from each subgroup at a specific rate which may differ from its population prevalence. We acknowledge that achieving the optimized prevalences in a trial may be challenging: additional screening will be required and over‐sampling a particular subgroup may delay a trial compared to an all‐comers design. 43 , 44 If logistical considerations imply that each subgroup is either dropped or sampled according to its population prevalence, our framework can still be used to optimize the other design parameters.

In Section 3.2 we discussed designs with the option of early stopping for futility and how the utility function might be modified to facilitate optimizing such designs. A similar approach could be followed to relax the requirement of a fixed total sample size and allow re‐assessment of future sample size at an interim analysis.

We have defined methods for normally distributed observations and a normal prior for treatment effects. While this has allowed us to demonstrate how to construct such designs, it is not a necessary restriction. With normally distributed responses, one could allow a separate response variance for each patient subgroup, placing prior distributions on these variances. In trials with other types of response distribution, including survival or categorical endpoints, standardized test statistics will still be approximately normally distributed if sample sizes are large enough, although nonnormal prior distributions may be appropriate. 45

We assumed the null hypotheses of interest are that there is no treatment effect in each subgroup. Our decision theoretic framework can accommodate other formulations, such as testing for treatment effects in the full population and in one particular subgroup, 8 , 20 , 22 , 23 , 24 , 46 , 47 , 48 in which case the stage‐wise test statistics for different subgroups are correlated. Care is required to ensure that enrichment designs control FWER when test statistics are correlated but this is not an issue in umbrella trials with separate level tests for each null hypothesis. 31

Although we have focused on hypothesis testing instead, estimating treatment effects after an adaptive trial is also important. 49 Simultaneous or marginal confidence regions for parameters, with or without multiplicity adjustment, can be constructed following a two‐stage design. 50 , 51 Point estimates may be obtained by a weighted average of the treatment effects observed in the first and second stages 11 , 52 but, due to the sample size adaptations and subgroup selection these estimators may be biased with the bias depending on the specific adaptation rules and the true parameter values. A thorough investigation of estimation for adaptive enrichment designs will be a topic of future research.

Software in the form of an R package is available at https://github.com/nicoballarini/OptimalTrial.

AUTHOR CONTRIBUTIONS

Dr Ballarini and Dr Burnett are the co‐primary authors and they contributed equally to this work.

Supporting information

Appendix S1. Technical appendices and additional simulation results.

ACKNOWLEDGEMENTS

Nicolás Ballarini is supported by the EU Horizon 2020 Research and Innovation Programme, Marie Sklodowska‐Curie grant No 633567. Thomas Jaki is supported by the National Institute for Health (NIHR‐SRF‐2015‐08‐001) and the Medical Research Council (MR/M005755/1). Franz König and Martin Posch are members of the EU Patient‐Centric Clinical Trial Platform (EU‐PEARL) which has received funding from the Innovative Medicines Initiative 2 Joint Undertaking, grant No 853966. This Joint Undertaking receives support from the EU Horizon 2020 Research and Innovation Programme, EFPIA, Children's Tumor Foundation, Global Alliance for TB Drug Development, and SpringWorks Therapeutics. The views expressed in this publication are those of the authors. The funders and associated partners are not responsible for any use that may be made of the information contained herein.

Ballarini NM, Burnett T, Jaki T, Jennison C, König F, Posch M. Optimizing subgroup selection in two‐stage adaptive enrichment and umbrella designs. Statistics in Medicine. 2021;40:2939–2956. 10.1002/sim.8949

Funding information H2020 Marie Skłodowska‐Curie Actions, 633567; Innovative Medicines Initiative, 853966; Medical Research Council, MR/M005755/1; National Institute for Health Research, NIHR‐SRF‐2015‐08‐001

Data Availability Statement

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

References

- 1. Dmitrienko A, Muysers C, Fritsch A, Lipkovich I. General guidance on exploratory and confirmatory subgroup analysis in late‐stage clinical trials. J Biopharm Stat. 2016;26(1):71‐98. [DOI] [PubMed] [Google Scholar]

- 2. Alosh M, Huque MF, Bretz F, D'Agostino RB Sr. Tutorial on statistical considerations on subgroup analysis in confirmatory clinical trials. Stat Med. 2017;36(8):1334‐1360. [DOI] [PubMed] [Google Scholar]

- 3. Ondra T, Dmitrienko A, Friede T, et al. Methods for identification and confirmation of targeted subgroups in clinical trials: a systematic review. J Biopharm Stat. 2016;26(1):99‐119. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4. Antoniou M, Jorgensen AL, Kolamunnage‐Dona R. Biomarker‐guided adaptive trial designs in phase II and phase III: a methodological review. PLoS One. 2016;11(2):e0149803. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5. Mandrekar SJ, Sargent DJ. Clinical trial designs for predictive biomarker validation: theoretical considerations and practical challenges. J Clin Oncol. 2009;27(24):4027. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6. Freidlin B, LM MS, Korn EL. Randomized clinical trials with biomarkers: design issues. J Natl Cancer Inst. 2010;102(3):152‐160. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7. Simon N, Simon R. Adaptive enrichment designs for clinical trials. Biostatistics. 2013;14(4):613‐625. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Brannath W, Zuber E, Branson M, et al. Confirmatory adaptive designs with Bayesian decision tools for a targeted therapy in oncology. Stat Med. 2009;28(10):1445‐1463. [DOI] [PubMed] [Google Scholar]

- 9. Friede T, Parsons N, Stallard N. A conditional error function approach for subgroup selection in adaptive clinical trials. Stat Med. 2012;31(30):4309‐4320. [DOI] [PubMed] [Google Scholar]

- 10. Sugitani T, Posch M, Bretz F, Koenig F. Flexible alpha allocation strategies for confirmatory adaptive enrichment clinical trials with a prespecified subgroup. Stat Med. 2018;37(24):3387‐3402. [DOI] [PubMed] [Google Scholar]

- 11. Chiu Y‐D, Koenig F, Posch M, Jaki T. Design and estimation in clinical trials with subpopulation selection. Stat Med. 2018;37(29):4335‐4352. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12. Food and Drug Administration Adaptive designs for clinical trials of drugs and biologics. guidance for industry; 2018.

- 13. Berry DA. The Brave new world of clinical cancer research: adaptive biomarker‐driven trials integrating clinical practice with clinical research. Mol Oncol. 2015;9(5):951‐959. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Meyer EL, Mesenbrink P, Dunger‐Baldauf C, et al. The evolution of master protocol clinical trial designs: a systematic literature review. Clin Ther. 2020;42(7):1330‐1360. [DOI] [PubMed] [Google Scholar]

- 15. Food and Drug Administration . Master protocols: efficient clinical trial design strategies to expedite development of oncology drugs and biologics. guidance for industry; 2018;.

- 16. Renfro LA, Sargent DJ. Statistical controversies in clinical research: basket trials, umbrella trials, and other master protocols: a review and examples. Ann Oncol. 2016;28(1):34‐43. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17. Woodcock J, LaVange LM. Master protocols to study multiple therapies, multiple diseases or both. New Engl J Med. 2017;377(1):62‐70. [DOI] [PubMed] [Google Scholar]

- 18. Govindan R, Mandrekar SJ, Gerber DE, et al. ALCHEMIST trials: a golden opportunity to transform outcomes in early‐stage non‐small cell lung cancer. Clin Cancer Res. 2015;21(24):5439‐5444. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19. European Medicines Agency Reflection paper on methodological issues in confirmatory clinical trials planned with an adaptive design; 2007.

- 20. Ondra T, Jobjörnsson S, Beckman RA, et al. Optimized adaptive enrichment designs. Stat Methods Med Res. 2019;28(7). 10.1177/0962280217747312. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21. Ondra T, Jobjörnsson S, Beckman RA, et al. Optimizing trial designs for targeted therapies. PLoS One. 2016;11(9):e0163726. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22. Burnett T. Bayesian Decision Making in Adaptive Clinical Trials [PhD thesis]. University of BathUK; 2017.

- 23. Burnett T, Jennison C. Adaptive enrichment trials: what are the benefits? Stat Med. 2021;40(3):690‐711. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24. Graf AC, Posch M, Koenig F. Adaptive designs for subpopulation analysis optimizing utility functions. Biom J. 2015;57(1):76‐89. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25. Beckman RA, Clark J, Chen C. Integrating predictive biomarkers and classifiers into oncology clinical development programmes. Nat Rev Drug Discov. 2011;10(10):735. [DOI] [PubMed] [Google Scholar]

- 26. Rosenblum M, Fang X, Liu H. Optimal, two stage, adaptive enrichment designs for randomized trials using sparse linear programming. Department of Biostatistics Working Papers. Working Paper 273, Johns Hopkins University; 2017.

- 27. Krisam J, Kieser M. Optimal decision rules for biomarker‐based subgroup selection for a targeted therapy in oncology. Int J Mol Sci. 2015;16(5):10354‐10375. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28. Stallard N, Todd S, Parashar D, Kimani PK, Renfro LA. On the need to adjust for multiplicity in confirmatory clinical trials with master protocols. Ann Oncol. 2019;30(4):506. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29. Dmitrienko A, D'Agostino RB Sr, Huque MF. Key multiplicity issues in clinical drug development. Stat Med. 2013;32(7):1079‐1111. [DOI] [PubMed] [Google Scholar]

- 30. Spiegelhalter DJ, Abrams KR, Myles JP. Bayesian Approaches to Clinical Trials and Health‐Care Evaluation. Hoboken, NJ: John Wiley & Sons; 2004. [Google Scholar]

- 31. Stallard N, Posch M, Friede T, Koenig F, Brannath W. Optimal choice of the number of treatments to be included in a clinical trial. Stat Med. 2009;28(9):1321‐1338. [DOI] [PubMed] [Google Scholar]

- 32. Marcus R, Peritz E, Gabriel KR. On closed testing procedures with special reference to ordered analysis of variance. Biometrika. 1976;63(3):655‐660. [Google Scholar]

- 33. Posch M, Koenig F, Branson M, Brannath W, Dunger‐Baldauf C, Bauer P. Testing and estimation in flexible group sequential designs with adaptive treatment selection. Stat Med. 2005;24(24):3697‐3714. [DOI] [PubMed] [Google Scholar]

- 34. Bauer P, Kieser M. Combining different phases in the development of medical treatments within a single trial. Stat Med. 1999;18(14):1833‐1848. [DOI] [PubMed] [Google Scholar]

- 35. Bretz F, Koenig F, Brannath W, Glimm E, Posch M. Adaptive designs for confirmatory clinical trials. Stat Med. 2009;28(8):1181‐1217. [DOI] [PubMed] [Google Scholar]

- 36. Bauer P, Bretz F, Dragalin V, König F, Wassmer G. Twenty‐five years of confirmatory adaptive designs: opportunities and pitfalls. Stat Med. 2016;35(3):325‐347. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37. Müller H‐H, Schäfer H. Adaptive group sequential designs for clinical trials: combining the advantages of adaptive and of classical group sequential approaches. Biometrics. 2001;57(3):886‐891. [DOI] [PubMed] [Google Scholar]

- 38. Müller H‐H, Schäfer H. A general statistical principle for changing a design any time during the course of a trial. Stat Med. 2004;23(16):2497‐2508. [DOI] [PubMed] [Google Scholar]

- 39. Varadhan R, Borchers HW. dfoptim: derivative‐free optimization. R package version 2018.2‐1; 2018.

- 40. R Core Team R: a language and environment for statistical computing; 2018.

- 41. Simon R, Maitournam A. Evaluating the efficiency of targeted designs for randomized clinical trials. Clin Cancer Res. 2004;10(20):6759‐6763. [DOI] [PubMed] [Google Scholar]

- 42. Hoering A, LeBlanc M, Crowley JJ. Randomized phase III clinical trial designs for targeted agents. Clin Cancer Res. 2008;14(14):4358‐4367. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43. Klauschen F, Andreeff M, Keilholz U, Dietel M, Stenzinger A. The combinatorial complexity of cancer precision medicine. Oncoscience. 2014;1(7):504. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44. Eichler H‐G, Bloechl‐Daum B, Bauer P, et al. “Threshold‐crossing”: a useful way to establish the counterfactual in clinical trials? Clin Pharmacol Ther. 2016;100(6):699‐712. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45. Brückner M, Burger HU, Brannath W. Nonparametric adaptive enrichment designs using categorical surrogate data. Stat Med. 2018;37(29):4507‐4524. [DOI] [PubMed] [Google Scholar]

- 46. Wang SJ, O'Neill RT, Hung HJ. Approaches to evaluation of treatment effect in randomized clinical trials with genomic subset. Pharm Stat J Appl Stat Pharm Ind. 2007;6(3):227‐244. [DOI] [PubMed] [Google Scholar]

- 47. Alosh M, Huque MF. A flexible strategy for testing subgroups and overall population. Stat Med. 2009;28(1):3‐23. [DOI] [PubMed] [Google Scholar]

- 48. Spiessens B, Debois M. Adjusted significance levels for subgroup analyses in clinical trials. Contemp Clin Trials. 2010;31(6):647‐656. [DOI] [PubMed] [Google Scholar]

- 49. Stallard N, Todd S, Whitehead J. Estimation following selection of the largest of two normal means. J Stat Plann Infer. 2008;138(6):1629‐1638. [Google Scholar]

- 50. Mehta CR, Bauer P, Posch M, Brannath W. Repeated confidence intervals for adaptive group sequential trials. Stat Med. 2007;26(30):5422‐5433. [DOI] [PubMed] [Google Scholar]

- 51. Magirr D, Jaki T, Posch M, Klinglmueller F. Simultaneous confidence intervals that are compatible with closed testing in adaptive designs. Biometrika. 2013;100(4):985‐996. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52. Kimani PK, Todd S, Stallard N. Estimation after subpopulation selection in adaptive seamless trials. Stat Med. 2015;34(18):2581‐2601. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Appendix S1. Technical appendices and additional simulation results.

Data Availability Statement

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.