Abstract

Coronavirus disease (COVID-19) and its new strains have caused massive damage to society and brought panic worldwide. Automated analysis of medical images such as X-ray, CT, and MRI offers excellent potential for early diagnosis, augmenting the traditional healthcare strategy to fight COVID-19. However, identifying COVID-infected lungs in X-rays is challenging due to the high variation in infection characteristics and the low intensity contrast between normal tissues and infections. To identify the infected areas, in this work we present a novel depth-wise dense network that uniformly scales all dimensions and performs multilevel feature embedding, resulting in richer feature representations. Including depth-wise components and squeeze-and-excitation improves performance by capturing a larger receptive field than a traditional convolutional layer with almost the same number of parameters. To enlarge the training set and improve performance, we combined three large-scale datasets. Extensive experiments on benchmark X-ray datasets demonstrate the effectiveness of the proposed framework, which achieves 96.17% accuracy and outperforms cutting-edge methods primarily based on transfer learning.

Keywords: COVID-19, Diagnosis, Management, Deep learning

Introduction

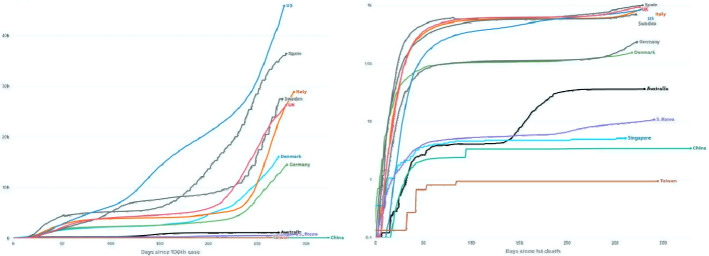

Coronavirus infection, a severe acute respiratory syndrome, is an ongoing pandemic that the world has been facing since December 2019. As of 31 October, 45.95 million COVID cases had been reported, with 1.19 million deaths (153.2/million), across 216 countries and territories worldwide. The US and India are the most affected countries, with 9.31 million and 8.13 million reported cases, respectively. Figure 2 shows the recent death and recovery rates. A new variant of COVID-19 is more aggressive than the original strain, and its rapid spread has been blamed for strict tier-four mixing rules for millions of people, much harsher restrictions and travel bans. COVID-19 is caused by a novel betacoronavirus that affects different people with different symptoms and shares similarities with the Middle East Respiratory Syndrome (MERS) and SARS viruses, which were responsible for epidemics in 2012 and 2003, respectively.

Fig. 2.

Cumulative known cases per million since 100th case and deaths per million since 1st death recorded. (https://www.abc.net.au/news/2020-05-13/coronavirus-numbers-worldwide-data-tracking-charts/12107500?nw=0)

Reverse Transcription Polymerase Chain Reaction (RT-PCR) is the standard practice for COVID screening. However, strict requirements for testing environments and equipment shortages limit the accurate and fast screening of suspected COVID patients. Additionally, RT-PCR has high false-negative rates. Radiological image analysis has emerged as an alternative for efficient and precise diagnosis of COVID. It not only supports diagnosis but also gives considerable insight into disease progression, evolution, and follow-up assessment. However, manual analysis of lung infections is labour-intensive, tedious, and time-consuming. In addition, it is a highly subjective task (with inter- and intra-radiologist variability), often influenced by radiologist experience and bias. Thus, automatic classification of affected areas is highly desirable for early diagnosis of COVID. However, identifying COVID-19-affected areas in radiology images is challenging for several reasons, e.g., large variations in the size and position of lesions. In addition, the infection may have different complex appearances, such as consolidation, reticulation, and ground-glass opacity. Finally, the ambiguous boundaries and irregular shapes of lesions make them difficult to segment.

Recently, deep learning has been extensively applied in medical image analysis. Several methods have been presented to analyze chest images (CT and MRI) to detect patients infected with COVID-19 (Fan et al., 2020; Razzak et al., 2020b; Wang et al., 2020a). Fan et al. (2020) aggregated high-level features using a parallel partial decoder and presented a semi-supervised lung infection segmentation deep network (Inf-Net) for segmentation of infected regions from CT images. To enhance the representation, explicit edge attention and implicit reverse attention are used to model the infection boundaries. Wang et al. (2020a) presented a noise-robust Dice loss-based pneumonia lesion segmentation network (COPLE-Net) for segmentation of COVID-affected areas. COPLE-Net and the noise-robust Dice loss are combined within a self-ensembling framework. The exponential moving average (EMA) of the student model serves as a teacher model, which is updated adaptively by suppressing the student's contribution to the EMA when the student network has a larger training loss. Li et al. (2020) presented a COVID-19 detection neural network (COVNet) for COVID lesion segmentation from CT exams. U-Net and its variants are the most widely used encoder–decoder networks for segmentation because they capture high- and low-level features through the encoder and semantic features through the decoder. Chen et al. (2020) applied UNet++ for the detection of suspected COVID lesions. Hu et al. (2018) presented squeeze-and-excitation blocks to improve the representational power of a network by modelling the interdependencies between the channels of its convolutional features. Similarly, Roy et al. (2018) fused spatial and channel SE block features by recalibrating the feature representation channel-wise and spatially (Figs. 1, 2).

Fig. 1.

COVID-19 Death rate and recovery rate in different countries. (www.worldometers.info)

In this work, we propose a depth-wise multilevel dense network for the diagnosis of COVID-19 lesions to address the aforementioned challenges. We fine-tuned the newly added convolutional layers of a pre-trained model using the pre-processed COVID-19 training dataset. After fine-tuning, we picked features from different layers of different dense blocks of DenseNet. The network consists of multiple blocks that uniformly scale all dimensions (depth, width and resolution) and perform multilevel feature embedding. Unlike traditional CNN methods, which scale these dimensions arbitrarily, the network scales them uniformly with a fixed set of scaling coefficients in each block, followed by multilevel feature fusion that aggregates the high-level features to combine contextual information. Including depth-wise components and squeeze-and-excitation improves performance by capturing a larger receptive field than a traditional convolutional layer with almost the same number of parameters.

The key contributions of this work are as follows:

We present depth-wise multilevel feature fusion that aggregates high-level features to combine contextual information; balancing the width, depth and resolution of the network results in better feature representation.

We introduce a novel multilevel depth-wise deep framework for accurate and efficient automated detection of COVID infection.

We performed extensive experiments on a benchmark X-ray dataset, which demonstrate the effectiveness of the proposed framework in comparison to cutting-edge methods primarily based on transfer learning.

The rest of the paper is organized as follows: in Sect. 2, we review recent developments in COVID lesion identification, and Sect. 3 presents the proposed depth-wise multilevel deep network. In Sect. 4, we present the experimental analysis and evaluation of the proposed framework on the benchmark dataset.

Related work

Recently, deep learning has been used extensively for medical image analysis, and several deep learning methods have been presented to analyze chest images (ultrasound, CT and MRI) for the detection of patients infected with COVID-19 (Born et al., 2020; Fan et al., 2020; Wang et al., 2020a). Born et al. (2020) presented a large-scale public lung ultrasound COVID-19 dataset consisting of three classes (bacterial pneumonia, COVID-19 and healthy). A deep convolutional neural network was applied to classify COVID-19 patients from ultrasound videos with high sensitivity. In addition, they used class activation maps for the spatiotemporal localization of pulmonary biomarkers.

Gozes et al. presented a framework for detecting COVID-19 from chest CT (Gozes et al., 2020) that includes lung segmentation, 2D slice classification, and fine-grained localization. The severity of the disease is graded through a COVID biomarker, and COVID-19 radiographic manifestations are identified by unsupervised clustering in the feature space. Fan et al. aggregated high-level features using a parallel partial decoder and presented a semi-supervised lung infection segmentation deep network (Inf-Net) for segmentation of infected regions from CT images (Fan et al., 2020). To enhance the representation, explicit edge attention and implicit reverse attention are used to model the infection boundaries. Yao et al. (2020) presented pixel-wise anomaly detection using a normalcy-converting network (NormNet) for CT image analysis, which distinguishes the affected area from the normal area. The framework converts abnormal areas back to normal, using lesions synthesized through simple operations. Amyar et al. presented a multitask deep framework that consists of a common encoder for disentangled feature representation and two additional decoders, used for image segmentation, classification and reconstruction (Amyar et al., 2020). The framework jointly identifies COVID-19 patients and segments COVID-19-affected lung areas from CT. Lesion segmentation, classification, and reconstruction are performed together on different datasets to leverage useful information across multiple related tasks, resulting in improved classification and segmentation performance. Li et al. (2020) applied a two-stage mapping-segmentation approach to segment the affected areas from CT images. An image-level domain-adaptive approach is used to transform the image into a target type, followed by segmentation of the affected areas.

Elharrouss et al. (2020) presented a multi-task deep-learning-based approach for segmenting lung infection in CT images. First, lung regions are segmented, followed by segmentation of the infected areas; this also allows the model to learn many features that can improve the results. Multi-task learning addresses the challenge of small labelled datasets. To perform multi-class segmentation, the network is trained using two-stream inputs. The framework segmented lung infections with a high degree of performance. Oulefki et al. designed an efficient tool for segmentation and measurement of lung infection in CT images (Oulefki et al., 2020). The lung region is extracted from the CT images, followed by separation of the left and right lungs. After extensive image enhancement, the authors applied a modified local contrast enhancement to bring out the details of the CT target. Experiments were performed on a publicly available benchmark dataset of 275 COVID-19-positive CT scans and on new data acquired from the EL-BAYANE centre for radiology and medical imaging. Structural relationships are critical for accurate detection of pulmonary lobes, especially for COVID-19. Xie et al. (2020) presented a relational non-local deep network that leverages these structured relationships. To produce self-attention weights, the framework learns both geometric and visual relationships among the convolutional features. The model was first trained on 5000 subjects from the COPDGene study and then retrained through transfer learning on 470 suspected COVID-19 cases.

Wang et al. (2020a) presented a noise-robust Dice loss-based pneumonia lesion segmentation network (COPLE-Net) for segmentation of COVID-affected areas. COPLE-Net and the noise-robust Dice loss are combined within a self-ensembling framework. The exponential moving average (EMA) of the student model serves as a teacher model, which is updated adaptively by suppressing the student's contribution to the EMA when the student network has a larger training loss. Li et al. (2020) presented a COVID-19 detection neural network (COVNet) for COVID lesion segmentation from CT exams. U-Net and its variants are the most widely used encoder-decoder networks for segmentation because they capture high- and low-level features through the encoder and semantic features through the decoder. Chen et al. (2020) applied UNet++ for the detection of suspected COVID lesions. Hu et al. (2018) presented squeeze-and-excitation blocks to improve the representational power of a network by modelling the interdependencies between the channels of its convolutional features. Similarly, Roy et al. (2018) fused spatial and channel SE block features by recalibrating the feature representation channel-wise and spatially. Saeedizadeh et al. (2020) presented a UNet-based framework with a 2D anisotropic total-variation term for segmentation of ground-glass regions from CT images. This regularization term is added to the loss function to promote pixel-level connectivity of the segmentation map for COVID-19-affected areas. To deal with small datasets and reduce the number of training parameters, capsule networks have also been applied to overcome limitations of CNN architectures (Afshar et al., 2020; Hinton et al., 2018). Afshar et al. (2020) applied a capsule network for diagnosis of COVID patients in X-ray images. Ma et al. (2020) presented an active-contour-regularized semi-supervised learning framework for segmentation of COVID infection on a small labelled dataset.
A region-scalable fitting model is embedded into the loss function for active contour regularization and for refinement of the pseudo labels of unlabelled data. A splitting method is designed to optimize the region-scalable fitting regularization and the segmentation loss. Xu et al. (2020) presented deep generative adversarial learning for weakly supervised segmentation of COVID-affected regions. The framework is optimized to segment COVID-affected areas through the segmenter and to replace the abnormal area with the normal appearance produced by the generator. Zheng et al. (2020) presented a multi-scale discriminative network that integrates a channel attention block, a pyramid convolution block and a residual refinement block for segmentation of lung areas affected by COVID. The pyramid convolution block uses different numbers of kernels with different sizes, which increases the receptive field. The channel attention block fuses the features of both stages and focuses on features from the segmented area only, and the residual refinement block refines the feature maps; together, these blocks strengthen the network's ability to segment COVID-affected areas of different sizes. Bizopoulos et al. (2020) combined LinkNet, UNet, FPN and PSPNet with 25 randomly initialized and pretrained encoders (such as ResNet, DenseNet, ResNeXt, VGG, DPN, MobileNet, Xception, EfficientNet, and Inception-v4) for lung segmentation and lesion segmentation.

Methodology

In this section, we first describe the components of the proposed depth-wise multilevel feature-concatenated deep neural network for identifying COVID-affected areas in lung X-ray images. In earlier work, scaling up ConvNets has been widely explored to achieve better accuracy. However, most earlier work scales only one of three dimensions: resolution (image size), depth or width. Although it is possible to scale all dimensions arbitrarily, doing so requires extensive manual tuning to achieve good performance. In this work, we scale up ConvNets and present a novel framework that balances the scaling factors. We present depth-wise multilevel concatenation as a learning approach that combines feature representations from different levels, improving the deep network's performance and generalization ability. The network consists of multiple blocks that uniformly scale all dimensions (depth, width and resolution) and perform multilevel feature embedding. Compound scaling of depth, width and resolution increases the receptive field and the number of channels; thus, the network captures more fine-grained patterns. Unlike traditional CNN methods, which scale these dimensions arbitrarily, the network scales them uniformly with a fixed set of scaling coefficients in each block, followed by multilevel feature fusion that aggregates the high-level features to combine contextual information. Including depth-wise components and squeeze-and-excitation improves performance by capturing a larger receptive field than a traditional convolutional layer with almost the same number of parameters.
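The text does not report its scaling coefficients, so the sketch below assumes the EfficientNet compound-scaling rule (Tan & Le, 2019), in which depth, width and resolution are multiplied by α^φ, β^φ and γ^φ for one compound coefficient φ, with α·β²·γ² ≈ 2 so that FLOPs roughly double per step. The values α = 1.2, β = 1.1, γ = 1.15 are EfficientNet's published base coefficients, not values from this work, and `compound_scale` is a hypothetical helper.

```python
import math

# Assumed EfficientNet-style base coefficients; this paper does not report its own.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width, resolution


def compound_scale(base_layers, base_channels, base_resolution, phi):
    """Scale depth, width and resolution together with one compound coefficient phi."""
    layers = math.ceil(base_layers * ALPHA ** phi)
    channels = int(round(base_channels * BETA ** phi))
    resolution = int(round(base_resolution * GAMMA ** phi))
    return layers, channels, resolution


def flops_multiplier(phi):
    """FLOPs grow roughly with depth * width^2 * resolution^2 = (alpha * beta^2 * gamma^2)^phi."""
    return (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi


for phi in range(4):
    print(phi, compound_scale(3, 32, 224, phi), round(flops_multiplier(phi), 2))
```

Fixing the three bases once and sweeping only φ is what replaces the arbitrary, per-dimension tuning described above.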

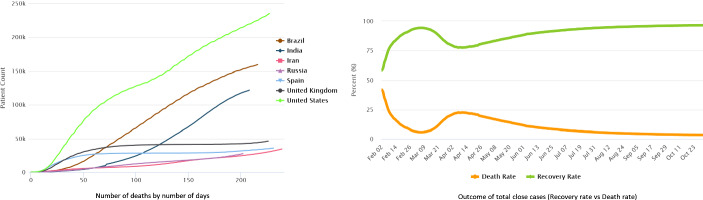

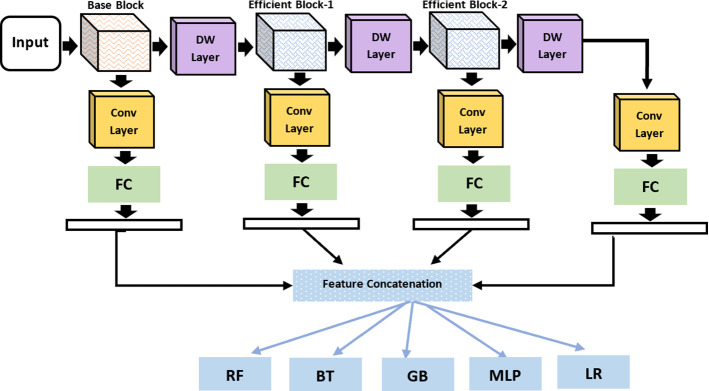

Figure 3 illustrates the proposed depth-wise multilevel concatenated deep neural network. The network consists of three main blocks: Base Block, Efficient Block-1 and Efficient Block-2. The network combines features from different blocks, which yields features of different depths and scales; this increases the receptive field and strengthens the network's ability to classify COVID-affected areas of various sizes. Concatenating features from blocks of varying depth therefore produces an efficient feature representation, which performs better than fine-tuned methods. The core building block of a CNN is the convolution operator, which enables convolutional neural networks to construct informative feature representations by fusing channel-wise and spatial information within each layer's local receptive fields. Squeeze-and-excitation (SE) is simple and can be embedded into any architecture by replacing a component with an SE block. It adaptively recalibrates channel-wise feature responses by modelling the interdependencies between channels, thus considerably improving performance. We used SE blocks in all three blocks. Each SE block uses global average pooling to squeeze spatial information and then recalibrates the channel-wise feature responses by explicitly modelling the interdependencies between channels.
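The multilevel concatenation can be sketched as follows. The text does not say how feature maps of different spatial sizes are aligned before concatenation, so this sketch assumes global average pooling of each block's output; the block names and shapes are hypothetical placeholders, not the network's real dimensions.

```python
import numpy as np


def global_avg_pool(feature_map):
    """Collapse a (C, H, W) feature map to a C-dimensional vector."""
    return feature_map.mean(axis=(1, 2))


def multilevel_concat(feature_maps):
    """Pool each block's output and concatenate them into one multilevel descriptor."""
    return np.concatenate([global_avg_pool(f) for f in feature_maps])


rng = np.random.default_rng(0)
# Hypothetical outputs of the Base Block, Efficient Block-1 and Efficient Block-2.
base_out = rng.standard_normal((24, 56, 56))
eff1_out = rng.standard_normal((40, 28, 28))
eff2_out = rng.standard_normal((112, 14, 14))
descriptor = multilevel_concat([base_out, eff1_out, eff2_out])
print(descriptor.shape)  # (176,)
```

Shallow blocks contribute high-resolution, low-level cues and deep blocks contribute contextual ones; pooling keeps the descriptor length equal to the total channel count (24 + 40 + 112 here).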

Fig. 3.

Proposed depth-wise multilevel deep network

Figure 3 illustrates the proposed depth-wise multilevel network. Features are extracted from different blocks and concatenated at different stages to obtain a compact and discriminative feature representation with wide coverage. The base block consists of four components: convolution, depth-wise convolution, squeeze-and-excitation and, finally, projection. Similarly, an efficient block consists of expansion convolution, depth-wise convolution, squeeze-and-excitation and projection. We applied depth-wise convolution in both the base block and the efficient blocks. In depth-wise convolution, each filter channel is applied to only one input channel. For example, given a 3-channel image and a 3-channel filter, we split both into three separate channels, convolve each image channel with its corresponding filter channel, and stack the three outputs back together. Including the depth-wise component in the proposed network improves performance by capturing a larger receptive field than a traditional convolutional layer with almost the same number of parameters.
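The 3-channel example above can be written out directly. This is a minimal numpy sketch of depth-wise convolution (stride 1, no padding, cross-correlation as CNN layers compute it), not the PyTorch layer used in the paper; in PyTorch the same operation corresponds to `Conv2d` with `groups` equal to the number of input channels. The kernel uses C·k·k weights (27 here) instead of the C_in·C_out·k·k (81) of a standard 3 × 3 convolution with three outputs.

```python
import numpy as np


def conv2d_valid(channel, kernel):
    """'Valid' 2-D cross-correlation of one channel with one kernel, as CNN layers compute it."""
    kh, kw = kernel.shape
    out_h = channel.shape[0] - kh + 1
    out_w = channel.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(channel[i:i + kh, j:j + kw] * kernel)
    return out


def depthwise_conv2d(image, filters):
    """Depth-wise convolution: filter channel c is applied to input channel c only.

    image:   (C, H, W) array
    filters: (C, kh, kw) array, one 2-D kernel per input channel
    returns: (C, H - kh + 1, W - kw + 1) array
    """
    if image.shape[0] != filters.shape[0]:
        raise ValueError("depth-wise convolution needs one kernel per input channel")
    # Split image and filter into channels, convolve each pair, then stack the results back.
    return np.stack([conv2d_valid(image[c], filters[c]) for c in range(image.shape[0])])


# Usage: a 3-channel 5x5 "image" and a 3-channel 3x3 filter.
image = np.arange(75, dtype=float).reshape(3, 5, 5)
filters = np.zeros((3, 3, 3))
filters[0, 1, 1] = 1.0   # channel 0: identity kernel (picks the centre pixel)
filters[1] = 1.0         # channel 1: box-sum kernel
out = depthwise_conv2d(image, filters)   # channel 2 keeps an all-zero kernel
print(out.shape)  # (3, 3, 3)
```

Because each output channel sees only its own input channel, cross-channel mixing is left to the 1 × 1 projection that follows in the block.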

In each block, we used different components with different numbers of layers for the classification of COVID-19. Figure 3 shows the basic proposed framework, which consists of three blocks (a base block and two efficient blocks). The efficient block consists of an expansion convolutional layer, a depth-wise convolutional layer, a squeeze-and-excitation unit and a projection convolutional layer, connected with skip connections. The expansion convolutional layer increases the number of feature maps, whereas the depth-wise convolutional layer reduces the spatial input size to lower the computational complexity. The projection layer, a 1 × 1 convolutional layer, then maps the expanded features to a different number of feature maps. In addition, skip connections allow gradients to flow smoothly during backpropagation.
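Whether such a block really has "almost the same" parameters as a plain convolution depends on settings the text does not report. The arithmetic below counts weights (biases and batch normalization ignored) for a standard 3 × 3 convolution and for an expansion, depth-wise, SE, projection block. The expansion factor of 6 and the SE reduction ratio of 16 are illustrative assumptions, and both helper functions are hypothetical.

```python
def standard_conv_params(c_in, c_out, k=3):
    """Weights of a standard k x k convolution (biases and batch-norm ignored)."""
    return k * k * c_in * c_out


def efficient_block_params(c_in, c_out, expand=6, k=3, se_reduction=16):
    """Weights of an expansion -> depth-wise -> SE -> projection block (biases ignored).

    `expand` and `se_reduction` are illustrative assumptions, not values from the paper.
    """
    hidden = c_in * expand
    expansion = c_in * hidden                     # 1x1 conv: c_in -> hidden channels
    depthwise = k * k * hidden                    # one k x k kernel per hidden channel
    squeezed = max(1, hidden // se_reduction)
    se = hidden * squeezed + squeezed * hidden    # SE: two fully connected layers
    projection = hidden * c_out                   # 1x1 conv: hidden -> c_out channels
    return {"expansion": expansion, "depthwise": depthwise, "se": se,
            "projection": projection,
            "total": expansion + depthwise + se + projection}


print(standard_conv_params(32, 32))             # 9216
print(efficient_block_params(32, 32))           # total 18624
print(standard_conv_params(192, 192), 9 * 192)  # 331776 vs 1728 for depth-wise alone
```

Most of the saving comes from the depth-wise layer: filtering 192 expanded channels costs 1728 weights instead of 331776 for a standard 3 × 3 convolution over the same channels, which is what makes the wide expanded representation affordable.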

Recently, several researchers have investigated the spatial component, improving the representational power of convolutional networks by enhancing spatial encoding through the feature hierarchy. The squeeze-and-excitation block instead adaptively recalibrates channel-wise feature responses by explicitly modelling the interdependencies between channels, improving the representational power of the network through dynamic channel-wise feature recalibration. Its structure is simple, so it can be embedded directly into existing state-of-the-art architectures by replacing a component with an SE unit, which effectively enhances performance. We embedded the squeeze-and-excitation component in all blocks, as shown in Fig. 3, which improves the discriminative power of the features. This yields a significant improvement in performance at very little computational cost.
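The squeeze, excitation and scale steps of an SE block (Hu et al., 2018) are sketched below in numpy for a single (C, H, W) feature map. The weights are random placeholders and the reduction ratio r = 4 is an assumption chosen to keep the toy example small; it is not the configuration used in this paper.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def squeeze_excitation(x, w1, b1, w2, b2):
    """Squeeze-and-excitation recalibration of a (C, H, W) feature map.

    w1: (C // r, C), b1: (C // r,)  reduction layer
    w2: (C, C // r), b2: (C,)       expansion layer
    Returns the recalibrated map and the per-channel gates.
    """
    z = x.mean(axis=(1, 2))             # squeeze: global average pooling -> (C,)
    s = np.maximum(0.0, w1 @ z + b1)    # excitation, step 1: fully connected + ReLU
    gates = sigmoid(w2 @ s + b2)        # excitation, step 2: fully connected + sigmoid, in (0, 1)
    return x * gates[:, None, None], gates  # scale: reweight every channel by its gate


rng = np.random.default_rng(0)
C, r = 8, 4
x = rng.standard_normal((C, 6, 6))
w1, b1 = rng.standard_normal((C // r, C)), np.zeros(C // r)
w2, b2 = rng.standard_normal((C, C // r)), np.zeros(C)
y, gates = squeeze_excitation(x, w1, b1, w2, b2)
print(y.shape, gates.round(3))
```

The only parameters are the two small fully connected layers (2·C²/r weights), which is why the block adds little computation relative to the convolutions it wraps.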

Experiments

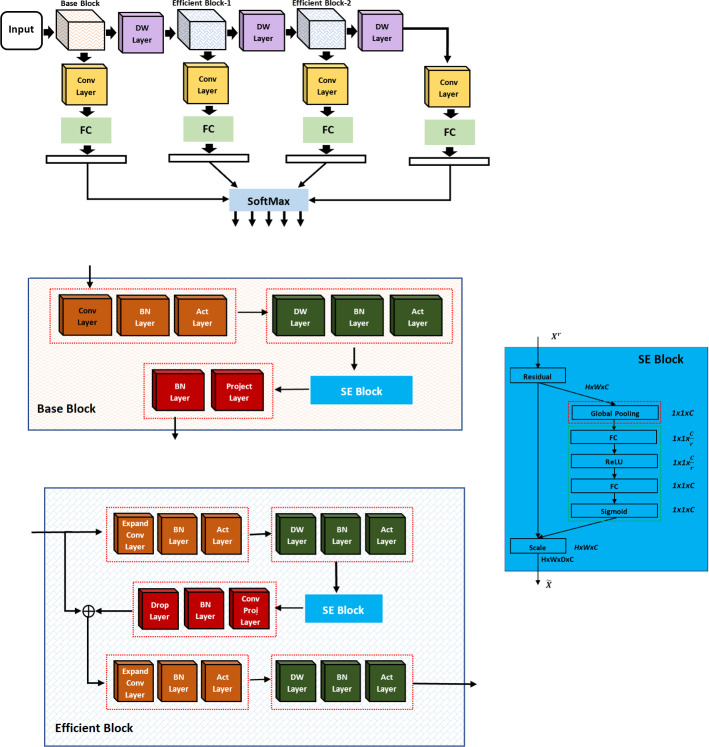

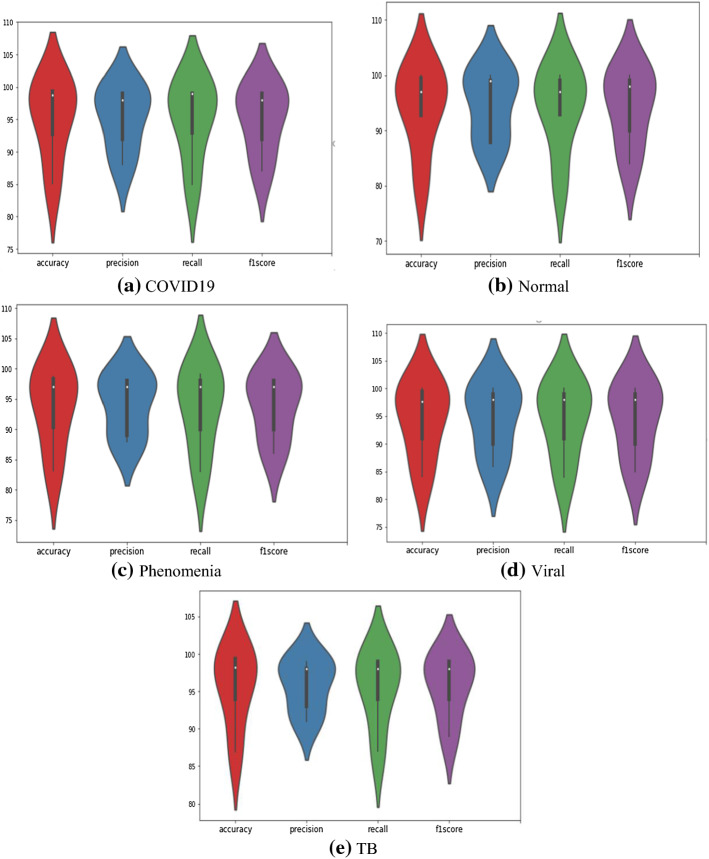

This section presents the experimental setup and evaluation of the proposed depth-wise multilevel concatenated deep neural network. We performed two case studies. In our first experiment, we applied the proposed framework as an end-to-end approach for COVID diagnosis, as shown in Fig. 3. In our second experiment, we used the proposed framework as a feature extractor and applied different classifiers, as shown in Fig. 4. To assess generalization, we performed tenfold cross-validation on the benchmark 5-class COVID-19 X-ray image dataset. Figure 5 illustrates the comparative analysis of the proposed methods on different classes. For evaluation, we performed extensive experiments and compared performance using precision, recall and accuracy. The experimental results show that the proposed framework considerably improves diagnostic performance for affected areas compared to the other methods.
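For reference, the reported metrics can be computed from a confusion matrix as follows. The paper does not state which averaging it uses for the multi-class scores, so this sketch assumes macro averaging over classes; the 2 × 2 matrix is a toy example, not a result from this study.

```python
import numpy as np


def classification_metrics(cm):
    """Accuracy plus macro-averaged precision, recall and F1 from a confusion matrix.

    cm[i, j] counts samples whose true class is i and predicted class is j.
    Assumes every class is predicted at least once (otherwise precision divides by zero).
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    precision = tp / cm.sum(axis=0)   # column sums: how often each class was predicted
    recall = tp / cm.sum(axis=1)      # row sums: how many samples truly belong to each class
    f1 = 2 * precision * recall / (precision + recall)
    return {
        "accuracy": tp.sum() / cm.sum(),
        "precision": precision.mean(),
        "recall": recall.mean(),
        "f1": f1.mean(),  # mean of per-class F1, not the F1 of the averaged P and R
    }


# Toy two-class confusion matrix (illustrative numbers, not results from this study).
metrics = classification_metrics([[8, 2], [1, 9]])
print({name: round(value, 4) for name, value in metrics.items()})
```

Because macro F1 averages per-class F1 scores, it generally differs from the harmonic mean of macro precision and macro recall, so the F1 columns in the result tables need not equal 2PR/(P + R) of their rows.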

Fig. 4.

Proposed depth-wise multilevel deep network as a feature extractor

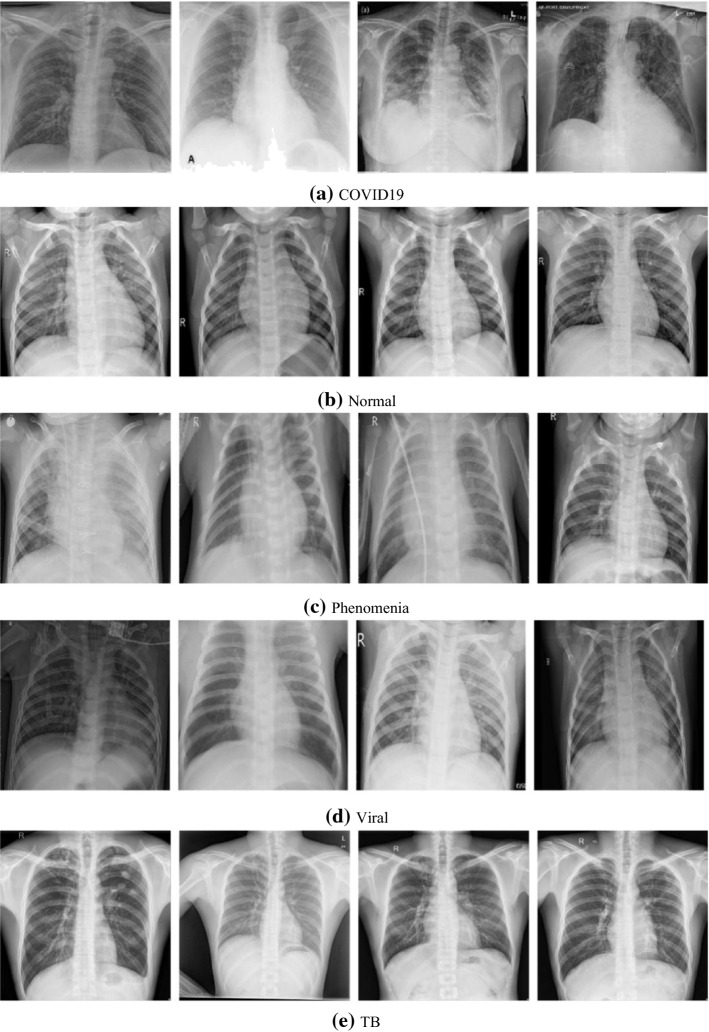

Fig. 5.

Sample dataset images: (a) COVID-19, (b) normal, (c) bacterial pneumonia, (d) viral pneumonia, (e) tuberculosis

Dataset

In this work, we constructed a larger five-class COVID-19 dataset from three main sources. We collected cases from Candemir et al. (2013), Cohen et al. (2020), Jaeger et al. (2013) and Kermany et al. (2018), which contain X-ray and CT images of pulmonary diseases, including COVID-19, SARS and MERS. The Cohen et al. (2020) dataset, covering 256 male and 136 female patients aged 54.6 ± 16.7 years (mean ± standard deviation), is a large COVID dataset of multiple pulmonary diseases, including COVID-19, SARS (severe acute respiratory syndrome) and MERS (Middle East respiratory syndrome), collected from many sources. The dataset is up to date and updated regularly. The dataset¹ consists of 752 X-ray images; of these, we considered 435 COVID-19 X-ray images in this study, excluding lateral X-rays and CT images. We also included the TB X-ray dataset² from the U.S. National Library of Medicine, which consists of two chest X-ray datasets. The TB dataset contains 394 infected images from two sources (58 images from the Montgomery County set and 336 images from the China set). As there are fewer TB X-ray images, we applied data augmentation by rescaling 40 randomly selected images. To further increase the dataset size, we also included 5863 X-ray images from three classes (normal, bacterial pneumonia and viral pneumonia) of the pneumonia and normal dataset³. Together with the COVID-19 and TB images, the newly composed dataset thus has five classes. We randomly selected 439 images of each class from the pneumonia dataset to construct a balanced five-class COVID-19 dataset (Johnson & Khoshgoftaar, 2019). Figure 5 shows samples from the five classes included in the dataset.
The new five-class COVID-19 dataset has a larger number of COVID-19 X-ray images than previous studies (Afshar et al., 2020; Khan et al., 2020; Narin et al., 2020; Razzak et al., 2020a; Sethy & Behera, 2020; Wang et al., 2020b) (Figs. 6, 7, 8, 9).
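The class-balancing step (439 random images per pneumonia-dataset class) can be sketched with the standard library. The pool sizes and file names below are hypothetical placeholders, not the real per-class counts of the pneumonia dataset, and the helper `balanced_subsample` is illustrative; the TB rescaling augmentation is not modelled here.

```python
import random


def balanced_subsample(pools, per_class, seed=0):
    """Draw `per_class` items without replacement from every class pool.

    pools: dict mapping class name -> list of image identifiers.
    Classes with fewer than `per_class` items are kept whole.
    """
    rng = random.Random(seed)  # fixed seed so the selection is reproducible
    return {name: rng.sample(items, min(per_class, len(items)))
            for name, items in pools.items()}


# Hypothetical pools standing in for the three classes of the pneumonia/normal dataset;
# the file names and pool sizes are placeholders, not the real per-class counts.
pools = {
    "normal": [f"normal_{i:04d}.jpeg" for i in range(1200)],
    "pneumonia_bacterial": [f"bacterial_{i:04d}.jpeg" for i in range(2500)],
    "pneumonia_viral": [f"viral_{i:04d}.jpeg" for i in range(1300)],
}
selection = balanced_subsample(pools, per_class=439)
print({name: len(files) for name, files in selection.items()})
```

Sampling without replacement keeps every selected image unique, and the fixed seed makes the balanced subset reproducible across runs.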

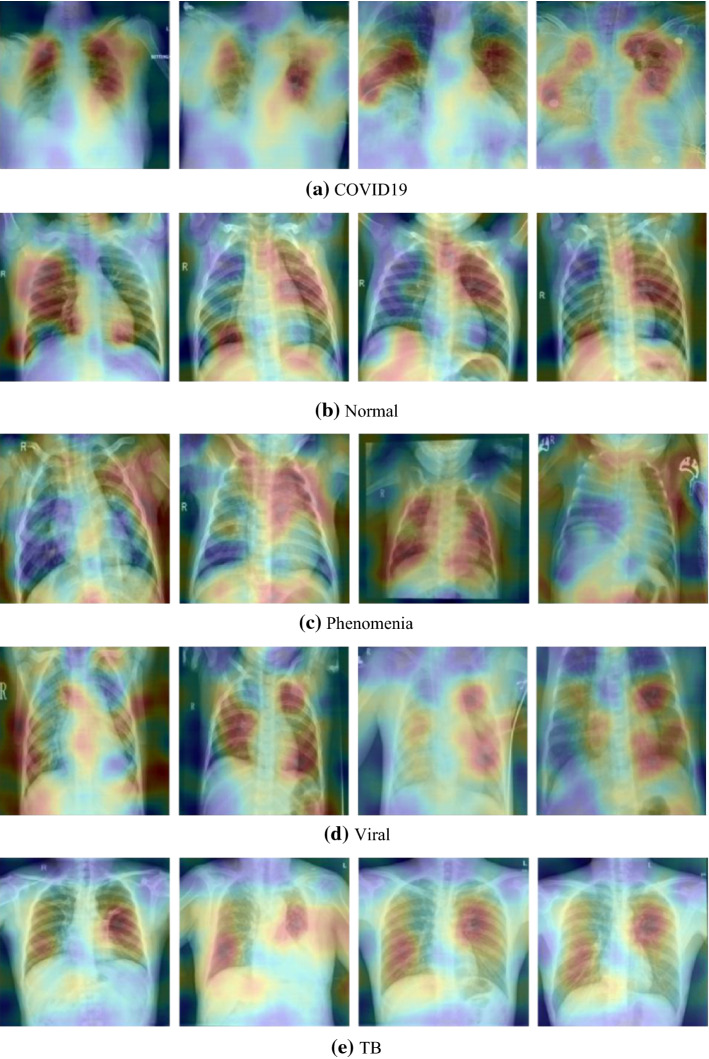

Fig. 6.

Visualization of some predicted samples from our proposed model using the RISE library

Fig. 7.

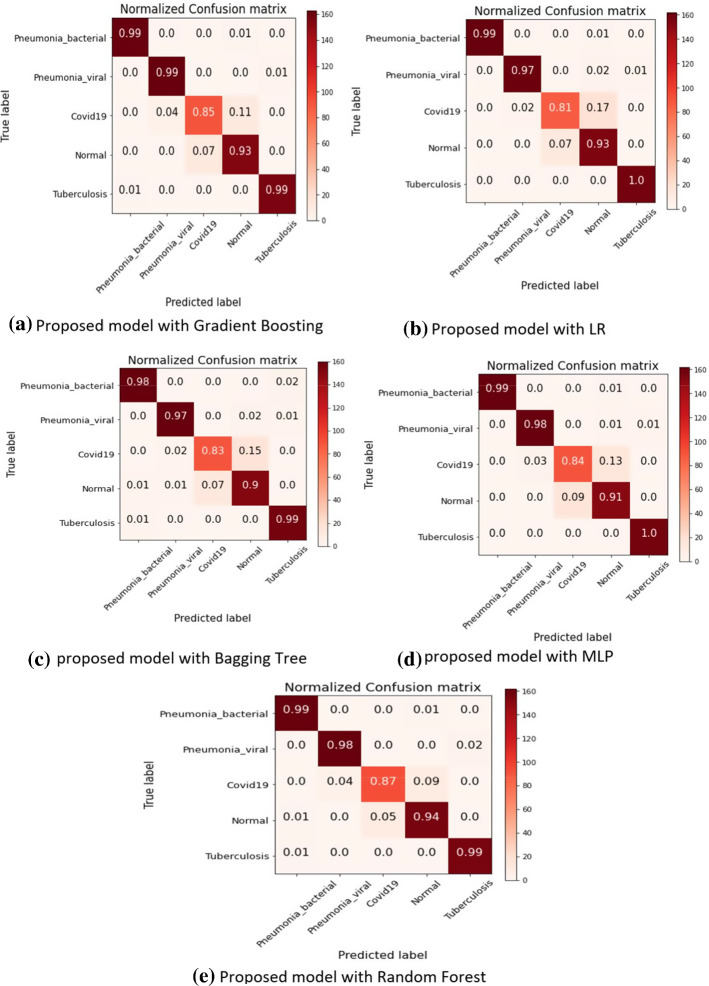

Confusion matrices of the proposed framework

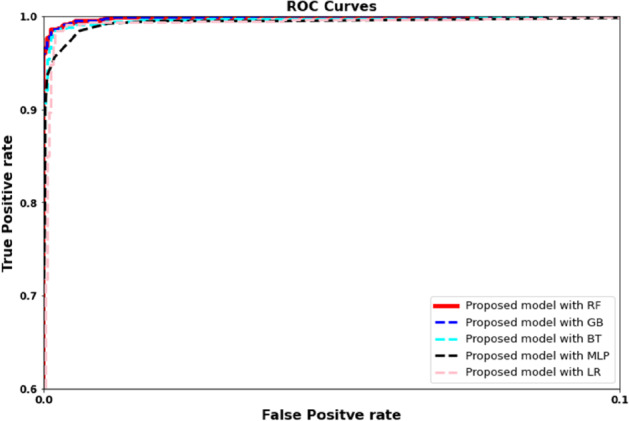

Fig. 8.

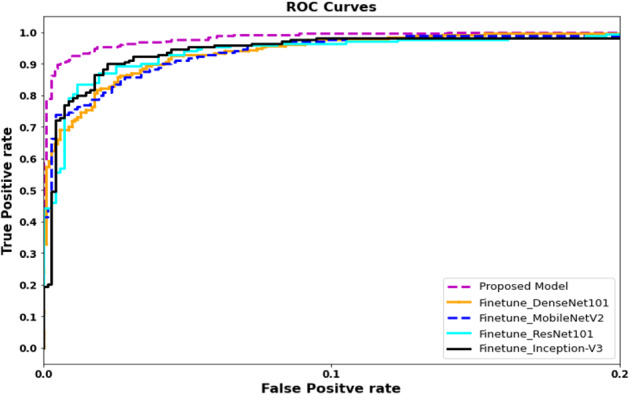

ROC curves for proposed and fine-tuned deep learning models

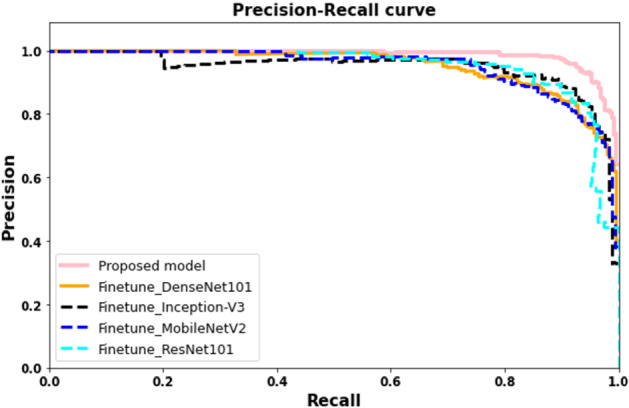

Fig. 9.

Precision recall curves for proposed and fine-tuned deep learning models

Parameters

To assess generalization, we performed tenfold cross-validation on the benchmark 5-class COVID-19 X-ray image dataset. The proposed model was trained using the PyTorch library. In depth-wise convolution, each filter channel is applied to only one input channel, e.g., a 3-channel filter and a 3-channel image: we split the image and the filter into three channels, convolve each image channel with the corresponding filter channel, and finally stack the results back together. The results showed that depth-wise convolution produced significantly better performance and captured a larger receptive field than standard convolutional layers with the same number of parameters. The proposed model was trained with the Adam optimizer, a learning rate of 0.0003 and a batch size of 12 for 500 epochs. Google Colab with a GPU and 12 GB of RAM was used for training and validation. The scikit-learn library was used for the machine learning classifiers and for performance evaluation and visualization, such as receiver operating characteristic (ROC) curves, accuracy, precision and recall (Table 1).
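The reported training settings and a tenfold split can be collected in a short sketch. The text says only "tenfold validation", so stratifying the folds by class is an assumption here; scikit-learn's `StratifiedKFold` provides comparable, maintained behaviour. `TrainConfig`, `stratified_folds` and `cross_validation_splits` are hypothetical names, and the class sizes are taken from Table 1.

```python
import random
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Training settings reported in the text (the field names are illustrative)."""
    optimizer: str = "adam"
    learning_rate: float = 3e-4
    batch_size: int = 12
    epochs: int = 500
    folds: int = 10


def stratified_folds(labels, k, seed=0):
    """Assign sample indices to k folds so that each fold keeps roughly the class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)  # deal each class round-robin over the folds
    return folds


def cross_validation_splits(folds):
    """Yield (train, test) index lists, holding out one fold at a time."""
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


# Class sizes from Table 1.
counts = {"COVID-19": 435, "Normal": 439, "Pneumonia-bacterial": 439,
          "Pneumonia-viral": 439, "Tuberculosis": 434}
labels = [name for name, n in counts.items() for _ in range(n)]
config = TrainConfig()
folds = stratified_folds(labels, config.folds)
print([len(f) for f in folds])
```

Stratification matters here because each fold is only about 219 images; dealing every class round-robin keeps each fold at 43 or 44 images per class.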

Table 1.

Details of the COVID-19 5-classes dataset

| Class | Number of X-ray images |
|---|---|
| COVID-19 | 435 |
| Normal | 439 |
| Pneumonia-bacterial | 439 |
| Pneumonia-viral | 439 |
| Tuberculosis | 434 (394 + 40 augmented) |
| Total | 2186 |

Results

In this paper, we performed two experiments for the classification of COVID-affected areas. Because we combined different datasets to increase the sample size, the current five-class COVID-19 study has a larger number of COVID-19 X-ray images than previous studies (Afshar et al., 2020; Khan et al., 2020; Narin et al., 2020; Razzak et al., 2020a; Sethy & Behera, 2020; Wang et al., 2020b). In our first experiment, we presented an end-to-end network consisting of three main blocks: Base Block, Efficient Block-1 and Efficient Block-2. Our second experiment used the same network to extract the best feature representation and forwarded it to different classifiers, such as random forest and SVM. Figures 10, 11 and 12 illustrate the detection of COVID-affected areas in detail. Table 2 and Table 3 describe the results of experiments 1 and 2, respectively. We further performed several experiments by fine-tuning state-of-the-art networks. Table 2 compares the proposed framework with the fine-tuned methods (Table 3).

Fig. 10.

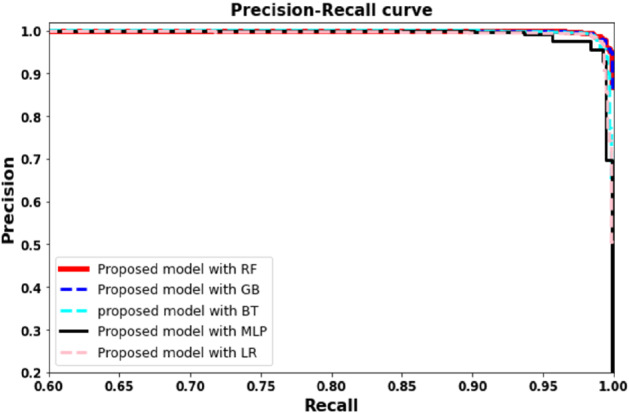

Precision recall curves based on the proposed model with RF, LR, GB, and BT algorithms

Fig. 11.

ROC plots based on proposed model with RF, LR, GB, and BT algorithms

Fig. 12.

COVID-19 diagnosis using proposed approach on five classes

Table 2.

Comparison of proposed model with fine-tuned deep learning models

| Algorithms | Accuracy | Precision | Recall | F1 score |
|---|---|---|---|---|
| Finetune + ResNet | 0.8579 | 0.8598 | 0.8530 | 0.8512 |
| Finetune + MobileNet | 0.8934 | 0.8828 | 0.8802 | 0.8795 |
| Finetune + InceptionV3 | 0.9053 | 0.9094 | 0.9123 | 0.9072 |
| Finetune + DenseNet | 0.8735 | 0.8654 | 0.8602 | 0.8596 |
| Proposed model | 0.9328 | 0.9371 | 0.9090 | 0.9169 |

Table 3.

Feature extraction using proposed model with traditional machine learning classifiers

| Proposed + classifier | Accuracy | Precision | Recall | F1 score |
|---|---|---|---|---|
| Random forest | 0.9617 | 0.9581 | 0.9556 | 0.9567 |
| Logistic regression | 0.9498 | 0.9457 | 0.9408 | 0.94288 |
| Gradient boosting | 0.9366 | 0.9302 | 0.9305 | 0.9304 |
| Bagging trees | 0.9419 | 0.9377 | 0.9347 | 0.9360 |
| Multilayer perceptron | 0.9511 | 0.9450 | 0.9440 | 0.9445 |

In our first experiment, we applied an end-to-end framework for the classification of COVID-affected areas. The proposed network combines features from different blocks, which yields features of different depths and scales; this increases the receptive field and strengthens the network's ability to classify COVID-affected areas of different sizes. The efficient blocks consist of expansion convolution, depth-wise convolution, squeeze-and-excitation and projection. Concatenating features from blocks of different depths yields an efficient feature representation. Figures 8 and 9 and Tables 2 and 4 illustrate the evaluation results. The proposed framework achieved an accuracy of 0.9328, compared with 0.9053, 0.8735, 0.8579 and 0.8934 for InceptionV3, DenseNet, ResNet and MobileNet, respectively. A similar trend holds for precision and F1 score; for recall, InceptionV3 (0.9123) is slightly higher than the proposed model (0.9090).

Table 4.

Comparison with state of the art methods

| Study | Dataset | Model description | Classification accuracy (%) |
|---|---|---|---|
| Narin et al. (2020) | 2-class: 50 COVID-19/50 normal | Transfer learning with ResNet50 and InceptionV3 | 91.13 |
| Panwar et al. (2020) | 2-class: 142 COVID-19/142 normal | nCOVnet CNN | 88 |
| Altan et al. (Altan & Karasu, 2020) | 3-class: 219 COVID-19/1341 normal/1345 pneumonia viral | 2D curvelet transform, chaotic salp swarm algorithm (CSSA), EfficientNet-B0 | 91 |
| Chowdhury et al. (2020) | 3-class: 423 COVID-19/1579 normal/1485 pneumonia viral | Transfer learning with ChexNet | 92.70 |
| Wang and Wong (Wang et al., 2020b) | 3-class: 358 COVID-19/5538 normal/8066 pneumonia | COVID-Net | 93.30 |
| Das et al. (2020) | 3-class: 62 COVID-19/1341 normal/1345 pneumonia | ResNet features and XGBoost classifier | 90 |
| Sethy and Behera (2020) | 3-class: 127 COVID-19/127 normal/127 pneumonia | ResNet50 features and SVM | 92.33 |
| Ozturk et al. (2020) | 3-class: 125 COVID-19/500 normal/500 pneumonia | DarkCovidNet CNN | 87.20 |
| Khan et al. (2020) | 4-class: 284 COVID-19/310 normal/330 pneumonia bacterial/327 pneumonia viral | CoroNet CNN | 89.60 |
| Mahmud et al. (2020) | 4-class: 305 COVID-19/305 normal/305 pneumonia viral/305 pneumonia bacterial | Stacked multi-resolution CovXNet | 90.30 |
| Al-Timemy et al. (2020) | 5-class: 435 COVID-19/439 normal/439 pneumonia bacterial/439 pneumonia viral/434 tuberculosis | ResNet50 features and ensemble of subspace discriminant classifier | 91.6 |
| Proposed framework | 5-class: 435 COVID-19/439 normal/439 pneumonia bacterial/439 pneumonia viral/434 tuberculosis | Multi-scale features CoVIRNet | 93.28 |
| Proposed framework + random forest | 5-class: 435 COVID-19/439 normal/439 pneumonia bacterial/439 pneumonia viral/434 tuberculosis | Multi-scale features CoVIRNet | 96.17 |

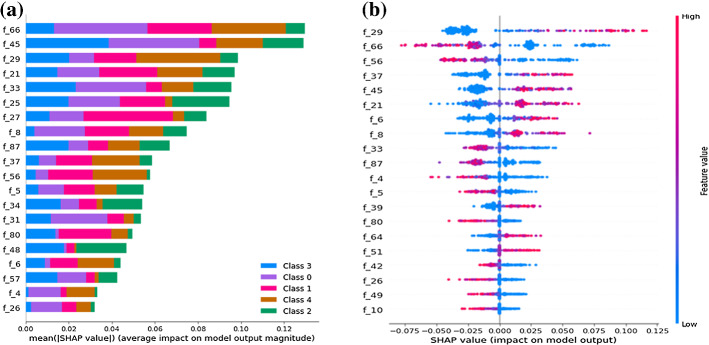

Our second experiment used the proposed framework as a feature extractor and applied SVM, random forest, multilayer perceptron, logistic regression, gradient boosting, and bagging trees as classifiers. Figures 10 and 11 and Tables 3 and 4 illustrate the comparative evaluation of the different classifiers. The proposed framework performed better when used as a feature extractor with a classifier than as an end-to-end framework (experiment 1). The random forest-based framework achieved 0.9617 accuracy, compared with 0.9511, 0.9419, 0.9366 and 0.9498 for the multilayer perceptron, bagging trees, gradient boosting and logistic regression, respectively. A similar trend holds for the other evaluation metrics. Figure 13 shows the confusion matrix: the proposed framework with random forest achieved comparatively higher detection performance (87%) for COVID infection. A similar trend can be seen in Fig. 12.

Fig. 13.

Confusion matrix for the proposed COVID-19 identification framework
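A per-class detection rate such as the 87% quoted for COVID-19 is read off a confusion matrix as that class's recall: the diagonal entry divided by its row total, assuming rows hold the true classes and columns the predictions. The sketch below uses hypothetical toy labels and the five class names from the comparison table to show that computation alongside overall accuracy (the trace divided by the total count). The numbers it produces are not the paper's results.

```python
CLASSES = ["covid", "normal", "bacterial", "viral", "tuberculosis"]


def confusion_matrix(y_true, y_pred, classes):
    """Rows = true class, columns = predicted class."""
    index = {c: i for i, c in enumerate(classes)}
    m = [[0] * len(classes) for _ in classes]
    for t, p in zip(y_true, y_pred):
        m[index[t]][index[p]] += 1
    return m


def per_class_recall(m):
    """Diagonal entry over row total (0.0 for classes absent from y_true)."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(m)]


def overall_accuracy(m):
    """Trace over total count."""
    return sum(m[i][i] for i in range(len(m))) / sum(sum(row) for row in m)


# Hypothetical toy predictions: one COVID-19 image is misread as viral pneumonia.
y_true = ["covid", "covid", "covid", "covid", "normal", "normal"]
y_pred = ["covid", "covid", "covid", "viral", "normal", "normal"]
m = confusion_matrix(y_true, y_pred, CLASSES)
print(per_class_recall(m)[0])  # recall of "covid": 3 of 4 -> 0.75
print(overall_accuracy(m))     # 5 correct out of 6
```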

Table 3 and Fig. 12 describe the comparative results of the proposed framework. The results show that Experiment 2 performed considerably better than Experiment 1. We used the SHAP Python library to further validate the results of the proposed model with the machine learning classifiers. Figure 7a shows the distribution of feature importance, computed with the random forest algorithm, for the features extracted by the proposed model, and Fig. 7b confirms this ranking, with features f66 and f29 having the highest importance values. We used a Python implementation of RISE (Randomized Input Sampling for Explanation) for a qualitative visual analysis of some input samples: it generates a saliency map from the proposed model's predictions on 1000 randomly masked versions of each X-ray image. Figure 6 shows that the important regions of the feature maps fall at the correct locations, and the class-specific activation maps show that the model attends to the relevant features for each class.
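RISE estimates the importance of each pixel by probing the model with randomly masked copies of the image and averaging the masks, weighted by the class score each masked copy receives. The sketch below is a minimal, pure-Python illustration of that Monte Carlo estimate, not the implementation the authors used. Two simplifying assumptions: masks are independent per-pixel Bernoulli draws (RISE proper upsamples small random grids with random shifts), and `toy_model` is a hypothetical classifier whose score depends only on the top-left pixel. The default of 1000 masks mirrors the number of masked versions mentioned in the text.

```python
import random


def rise_saliency(model, image, n_masks=1000, p_keep=0.5, seed=0):
    """Monte Carlo RISE estimate: S = (1 / (N * p)) * sum_i model(image * M_i) * M_i."""
    rng = random.Random(seed)
    h, w = len(image), len(image[0])
    saliency = [[0.0] * w for _ in range(h)]
    for _ in range(n_masks):
        # Simplification: independent per-pixel keep/drop mask instead of upsampled grids.
        mask = [[1 if rng.random() < p_keep else 0 for _ in range(w)] for _ in range(h)]
        masked = [[image[r][c] * mask[r][c] for c in range(w)] for r in range(h)]
        score = model(masked)  # class score for the masked input
        for r in range(h):
            for c in range(w):
                saliency[r][c] += score * mask[r][c]  # credit every pixel that was kept
    return [[v / (n_masks * p_keep) for v in row] for row in saliency]


def toy_model(img):
    """Hypothetical classifier whose score depends only on the top-left pixel."""
    return img[0][0]


image = [[1.0] * 4 for _ in range(4)]
sal = rise_saliency(toy_model, image)
print(round(sal[0][0], 2), round(sal[3][3], 2))
```

Because the toy score depends only on the top-left pixel, that pixel's saliency comes out near 1 while every other pixel lands near `p_keep`; on a real X-ray, the same weighted averaging highlights the regions that drive the prediction.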

Discussion

Researchers have found that some variants are much more transmissible or could present challenges for COVID-19 vaccines; for example, a UK-based variant of COVID-19 was identified as fast-spreading and 70% more transmissible than existing strains. The aggressive growth in COVID-19 patients is overwhelming healthcare systems across the world. Although test kits can detect infectious cases, detecting possible COVID-19 infections on chest X-rays may help assess the damage to the lungs and identify high-risk patients. X-ray and CT machines are already available in almost all healthcare systems, and modern X-ray systems do not require the patient to be transported. In this work, we propose using chest X-rays to detect lung damage, identify high-risk patients and prioritize patients for further RT-PCR testing. The proposed framework can also be used in an inpatient setting, where current systems struggle to identify COVID-affected and high-risk patients.

We presented a deep neural network with depth-wise multilevel feature concatenation to diagnose COVID-affected areas from lung X-ray images. We consider scaling up ConvNets and present a novel framework that balances the scaling factors. Depth-wise multilevel concatenation is a learning approach that combines feature representations from different levels to improve the performance of the deep network and its generalization ability. The network consists of multiple blocks that uniformly scale all dimensions (depth, width and resolution). To evaluate the proposed framework, we compared it with state-of-the-art methods such as Resnet50 (Narin et al., 2020), InceptionV3 (Narin et al., 2020), CNN (Panwar et al., 2020), curvelet transform (Altan & Karasu, 2020), chaotic salp swarm algorithm (CSSA) (Altan & Karasu, 2020), EfficientNet-B (Altan & Karasu, 2020), transfer learning with ChexNet (Chowdhury et al., 2020; Das et al., 2020; Razzak et al., 2020a), COVID-Net (Wang et al., 2020b), ResNet features and XGBoost (Das et al., 2020), Resnet50 features and SVM (Sethy & Behera, 2020), DarkCovidNet CNN (Ozturk et al., 2020), CoroNet (Khan et al., 2020), CovXNet (Mahmud et al., 2020) and Resnet50 features with an ensemble of subspace discriminant classifiers (Al-Timemy et al., 2020). Table 3 describes the comparative evaluation of the proposed framework against these methods. The results show that the proposed framework achieved a significant gain in performance, reaching 96.17% with the framework plus random forest and 93.28% with the end-to-end model. The confusion matrix in Fig. 13 shows that the proposed framework with random forest achieved a higher detection rate (87%) for COVID-19 infection than the other configurations.
This suggests that including depth-wise components and squeeze-and-excitation improves performance by capturing a larger receptive field than traditional convolutional layers while keeping the number of parameters almost the same; that is, balancing the width, depth and resolution of the network yields better feature representations. In addition, depth-wise multilevel feature fusion, which aggregates high-level features, combines contextual information from different levels.
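Three ideas in this paragraph can be made concrete with a little arithmetic: the squeeze-and-excitation gate (Hu et al., 2018), the parameter cost of depth-wise versus standard convolution, and scaling depth, width and resolution together. The sketch below is illustrative only and does not reproduce the authors' architecture. The SE weights are hypothetical toy values, the parameter counts ignore biases and normalization layers, and because the text does not state the proposed network's scaling rule, the compound-scaling part assumes the coefficients published with EfficientNet (α = 1.2, β = 1.1, γ = 1.15, chosen so that α·β²·γ² ≈ 2).

```python
import math


def se_block(feature_map, w1, w2):
    """Squeeze-and-excitation on a C x H x W feature map stored as nested lists.

    w1: (C // r) x C reduction weights; w2: C x (C // r) expansion weights.
    Biases are omitted to keep the sketch short.
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]
    # Excitation: FC -> ReLU -> FC -> sigmoid gives one gate in (0, 1) per channel.
    hidden = [max(0.0, sum(w * zi for w, zi in zip(row, z))) for row in w1]
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden)))) for row in w2]
    # Scale: reweight every channel by its gate.
    return [[[v * g for v in row] for row in ch] for ch, g in zip(feature_map, gates)]


def conv_params(k, c_in, c_out):
    """Weights of a standard k x k convolution."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """k x k depth-wise convolution followed by a 1 x 1 point-wise convolution."""
    return k * k * c_in + c_in * c_out


def se_params(c, r):
    """Two fully connected layers C -> C/r -> C."""
    return 2 * c * (c // r)


# Assumption: EfficientNet's compound-scaling coefficients, not values from this paper.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width and resolution bases


def compound_scale(base_depth, base_width, base_resolution, phi):
    """Scale depth, width and resolution together with a single coefficient phi."""
    depth = math.ceil(base_depth * ALPHA ** phi)            # number of layers
    width = math.ceil(base_width * BETA ** phi)             # channels per layer
    resolution = round(base_resolution * GAMMA ** phi)      # input side length in pixels
    flops_factor = (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi  # approximate FLOPs multiplier
    return depth, width, resolution, flops_factor


fmap = [[[1.0, 1.0], [1.0, 1.0]], [[3.0, 3.0], [3.0, 3.0]]]  # C = 2, H = W = 2
print(se_block(fmap, w1=[[1.0, 0.0]], w2=[[0.0], [0.0]]))   # zero logits give gates of 0.5
print(conv_params(3, 64, 64), depthwise_separable_params(3, 64, 64), se_params(64, 16))
print(compound_scale(10, 32, 224, 1))  # hypothetical baseline: 10 layers, 32 channels, 224 px
```

With 64 input and output channels, a standard 3 × 3 convolution has 36,864 weights, a depth-wise 3 × 3 plus point-wise 1 × 1 pair has 4,672, and an SE block with reduction ratio 16 adds 512. Savings of this kind are one way a network can afford larger kernels, and hence larger receptive fields, without a large increase in parameters.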

The encouraging results of the proposed depth-wise framework in detecting COVID-affected patients from X-ray images indicate that it could be used as an application for COVID-19 detection and that deep learning can play a significant role in fighting against COVID-19. Further in-depth analysis, and larger datasets, could improve the detection performance further.

Conclusion

The exponential increase in COVID-19 patients is overwhelming healthcare systems across the world. With limited testing kits, not every patient with a respiratory illness can be tested using conventional techniques. In this paper, we presented a novel framework for the classification of COVID-affected areas: a depth-wise deep neural network for detecting COVID-affected lung regions. Including the depth-wise component and squeeze-and-excitation improves performance by capturing a larger receptive field than traditional convolutional layers, while the number of parameters remains almost the same. Extensive experiments on the benchmark X-ray dataset demonstrated the effectiveness of the proposed framework, which achieved 93.28% and 96.17% in Experiments 1 and 2, respectively, outperforming cutting-edge methods primarily based on transfer learning. In future work, we plan to carry out an in-depth analysis of the network and to apply transfer learning to the proposed framework.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Abdul Qayyum, Email: abdul.qayum@Bourgogne.edu.fr.

Imran Razzak, Email: imran.razzak@deakin.edu.au.

M. Tanveer, Email: mtanveer@iit.edu.in

Ajay Kumar, Email: akumar@em-lyon.com.

References

- Afshar, P., Heidarian, S., Naderkhani, F., Oikonomou, A., Plataniotis, K. N., & Mohammadi, A. (2020). Covid-caps: A capsule network-based framework for identification of covid-19 cases from X-ray images. arXiv preprint arXiv:2004.02696

- Altan, A., & Karasu, S. (2020). Recognition of covid-19 disease from X-ray images by hybrid model consisting of 2d curvelet transform, chaotic salp swarm algorithm and deep learning technique. Chaos, Solitons & Fractals, 140, 110071. https://doi.org/10.1016/j.chaos.2020.110071

- Al-Timemy, A. H., Khushaba, R. N., Mosa, Z. M., & Escudero, J. (2020). An efficient mixture of deep and machine learning models for covid-19 and tuberculosis detection using X-ray images in resource limited settings. arXiv preprint arXiv:2007.08223

- Amyar, A., Modzelewski, R., Li, H., & Ruan, S. (2020). Multi-task deep learning based CT imaging analysis for covid-19 pneumonia: Classification and segmentation. Computers in Biology and Medicine, 126, 104037. https://doi.org/10.1016/j.compbiomed.2020.104037

- Bizopoulos, P., Vretos, N., & Daras, P. (2020). Comprehensive comparison of deep learning models for lung and covid-19 lesion segmentation in CT scans. arXiv preprint arXiv:2009.06412

- Born, J., Wiedemann, N., Brandle, G., Buhre, C., Rieck, B., & Borgwardt, K. (2020). Accelerating covid-19 differential diagnosis with explainable ultrasound image analysis. arXiv preprint arXiv:2009.06116

- Candemir, S., Jaeger, S., Palaniappan, K., Musco, J. P., Singh, R. K., Xue, Z., Karargyris, A., Antani, S., Thoma, G., & McDonald, C. J. (2013). Lung segmentation in chest radiographs using anatomical atlases with nonrigid registration. IEEE Transactions on Medical Imaging, 33(2), 577–590. https://doi.org/10.1109/TMI.2013.2290491

- Chen, J., Wu, L., Zhang, J., Zhang, L., Gong, D., Zhao, Y., Hu, S., Wang, Y., Hu, X., Zheng, B., Wu, H., Dong, Z., Xu, Y., Zhu, Y., Chen, X., Zhang, M., Yu, L., Cheng, F., & Yu, H. (2020). Deep learning-based model for detecting 2019 novel coronavirus pneumonia on high-resolution computed tomography: A prospective study. MedRxiv.

- Chowdhury, M. E. H., Rahman, T., Khandakar, A., Mazhar, R., Kadir, M. A., Mahbub, Z. B., Islam, K. R., Khan, M. S., Iqbal, A., Al-Emadi, N., Reaz, M. B. I., & Islam, M. T. (2020). Can AI help in screening viral and covid-19 pneumonia? arXiv preprint arXiv:2003.13145

- Cohen, J. P., Morrison, P., Dao, L., Roth, K., Duong, T. Q., & Ghassemi, M. (2020). Covid-19 image data collection: Prospective predictions are the future. arXiv preprint arXiv:2006.11988

- Das, N. N., Kumar, N., Kaur, M., Kumar, V., & Singh, D. (2020). Automated deep transfer learning-based approach for detection of covid-19 infection in chest X-rays. IRBM.

- Elharrouss, O., Subramanian, N., & Al-Maadeed, S. (2020). An encoder–decoder-based method for covid-19 lung infection segmentation. arXiv preprint arXiv:2007.00861

- Fan, D.-P., Zhou, T., Ji, G.-P., Zhou, Y., Chen, G., Fu, H., Shen, J., & Shao, L. (2020). Inf-Net: Automatic covid-19 lung infection segmentation from CT images. IEEE Transactions on Medical Imaging, 395, 497. https://doi.org/10.1109/TMI.2020.2996645

- Gozes, O., Frid-Adar, M., Sagie, N., Kabakovitch, A., Amran, D., Amer, R., & Greenspan, H. (2020). A weakly supervised deep learning framework for covid-19 CT detection and analysis. In J. Petersen, R. S. J. Estépar, A. Schmidt-Richberg, S. Gerard, B. Lassen-Schmidt, C. Jacobs, R. Beichel, & K. Mori (Eds.), Thoracic image analysis (pp. 84–93). Springer International Publishing.

- Hinton, G. E., Sabour, S., & Frosst, N. (2018). Matrix capsules with em routing. In International conference on learning representations.

- Hu, J., Shen, L., & Sun, G. (2018). Squeeze-and-excitation networks. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 7132–7141).

- Jaeger, S., Karargyris, A., Candemir, S., Folio, L., Siegelman, J., Callaghan, F., Xue, Z., Palaniappan, K., Singh, R. K., Antani, S., & Thoma, G. (2013). Automatic tuberculosis screening using chest radiographs. IEEE Transactions on Medical Imaging, 33(2), 233–245. https://doi.org/10.1109/TMI.2013.2284099

- Johnson, J. M., & Khoshgoftaar, T. M. (2019). Survey on deep learning with class imbalance. Journal of Big Data, 6(1), 1–54. https://doi.org/10.1186/s40537-018-0162-3

- Kermany, D. S., Goldbaum, M., Cai, W., Valentim, C. C. S., Liang, H., Baxter, S. L., McKeown, A., Yang, G., Wu, X., Yan, F., Dong, J., Prasadha, M. K., Pei, J., Ting, M. Y. L., Zhu, J., Li, C., Hewett, S., Dong, J., Ziyar, I., Shi, A., Zhang, R., Zheng, L., Hou, R., Shi, W., Fu, X., Duan, Y., Huu, V. A. N., Wen, C., Zhang, E. D., Zhang, C. L., Li, O., Wang, X., Singer, M. A., Sun, X., Xu, J., Tafreshi, A., Lewis, M. A., Xia, H., & Zhang, K. (2018). Identifying medical diagnoses and treatable diseases by image-based deep learning. Cell, 172(5), 1122–1131. https://doi.org/10.1016/j.cell.2018.02.010

- Khan, A. I., Shah, J. L., & Bhat, M. M. (2020). Coronet: A deep neural network for detection and diagnosis of covid-19 from chest X-ray images. Computer Methods and Programs in Biomedicine, 196, 105581. https://doi.org/10.1016/j.cmpb.2020.105581

- Li, L., Qin, L., Xu, Z., Yin, Y., Wang, X., Kong, B., Bai, J., Lu, Y., Fang, Z., Song, Q., & Cao, K. (2020). Artificial intelligence distinguishes covid-19 from community acquired pneumonia on chest CT. Radiology.

- Li, T., Wang, Z., Chen, Y., Zhang, L., Gao, Y., Shi, F., Qian, D., Wang, Q., & Shen, D. (2020). Two-stage mapping-segmentation framework for delineating covid-19 infections from heterogeneous CT images. In International workshop on thoracic image analysis (Vol. 65, pp. 3–13). Springer.

- Ma, J., Nie, Z., Wang, C., Dong, G., Zhu, Q., He, J., Gui, L., & Yang, X. (2020). Active contour regularized semi-supervised learning for covid-19 CT infection segmentation with limited annotations. Physics in Medicine & Biology, 65, 225034. https://doi.org/10.1088/1361-6560/abc04e

- Mahmud, T., Rahman, M. A., & Fattah, S. A. (2020). CovXNet: A multi-dilation convolutional neural network for automatic covid-19 and other pneumonia detection from chest X-ray images with transferable multi-receptive feature optimization. Computers in Biology and Medicine, 122, 103869. https://doi.org/10.1016/j.compbiomed.2020.103869

- Narin, A., Kaya, C., & Pamuk, Z. (2020). Automatic detection of coronavirus disease (covid-19) using X-ray images and deep convolutional neural networks. arXiv preprint arXiv:2003.10849

- Oulefki, A., Agaian, S., Trongtirakul, T., & Laouar, A. K. (2020). Automatic covid-19 lung infected region segmentation and measurement using CT-scans images. Pattern Recognition, 114, 107747. https://doi.org/10.1016/j.patcog.2020.107747

- Ozturk, T., Talo, M., Yildirim, E. A., Baloglu, U. B., Yildirim, O., & Acharya, U. R. (2020). Automated detection of covid-19 cases using deep neural networks with X-ray images. Computers in Biology and Medicine, 121, 103792. https://doi.org/10.1016/j.compbiomed.2020.103792

- Panwar, H., Gupta, P. K., Siddiqui, M. K., Morales-Menendez, R., & Singh, V. (2020). Application of deep learning for fast detection of covid-19 in X-rays using ncovnet. Chaos, Solitons & Fractals, 138, 109944. https://doi.org/10.1016/j.chaos.2020.109944

- Razzak, I., Naz, S., Rehman, A., Khan, A., & Zaib, A. (2020a). Improving coronavirus (covid-19) diagnosis using deep transfer learning. medRxiv.

- Razzak, M. I., Imran, M., & Xu, G. (2020b). Big data analytics for preventive medicine. Neural Computing and Applications, 32(9), 4417–4451. https://doi.org/10.1007/s00521-019-04095-y

- Roy, A. G., Navab, N., & Wachinger, C. (2018). Concurrent spatial and channel ‘squeeze and excitation’ in fully convolutional networks. In International conference on medical image computing and computer-assisted intervention (pp. 421–429). Springer.

- Saeedizadeh, N., Minaee, S., Kafieh, R., Yazdani, S., & Sonka, M. (2020). Covid TV-Unet: Segmenting covid-19 chest CT images using connectivity imposed U-net. arXiv preprint arXiv:2007.12303

- Sethy, P. K., & Behera, S. K. (2020). Detection of coronavirus disease (covid-19) based on deep features. Preprints, 2020030300.

- Wang, G., Liu, X., Li, C., Xu, Z., Ruan, J., Zhu, H., Meng, T., Li, K., Huang, N., & Zhang, S. (2020a). A noise-robust framework for automatic segmentation of covid-19 pneumonia lesions from CT images. IEEE Transactions on Medical Imaging, 39(8), 2653–2663. https://doi.org/10.1109/TMI.2020.3000314

- Wang, L., Lin, Z. Q., & Wong, A. (2020b). Covid-net: A tailored deep convolutional neural network design for detection of covid-19 cases from chest X-ray images. Scientific Reports, 10(1), 1–12. https://doi.org/10.1038/s41598-019-56847-4

- Xie, W., Jacobs, C., Charbonnier, J.-P., & van Ginneken, B. (2020). Relational modeling for robust and efficient pulmonary lobe segmentation in CT scans. IEEE Transactions on Medical Imaging, 39(8), 2664–2675. https://doi.org/10.1109/TMI.2020.2995108

- Xu, Z., Cao, Y., Jin, C., Shao, G., Liu, X., Zhou, J., Shi, H., & Feng, J. (2020). GASNet: Weakly-supervised framework for covid-19 lesion segmentation. arXiv preprint arXiv:2010.09456

- Yao, Q., Xiao, L., Liu, P., & Zhou, S. K. (2020). Label-free segmentation of covid-19 lesions in lung CT. arXiv preprint arXiv:2009.06456

- Zheng, B., Liu, Y., Zhu, Y., Yu, F., Jiang, T., Yang, D., & Xu, T. (2020). MSD-Net: Multi-scale discriminative network for covid-19 lung infection segmentation on CT. IEEE Access, 8, 185786–185795. https://doi.org/10.1109/ACCESS.2020.3027738