Abstract

Artificial intelligence (AI) is a rapidly developing technology that uses machine learning and neural networks to improve existing technologies or create new ones. This review introduces potential applications of AI in the fight against colorectal cancer (CRC). It covers how AI may affect the epidemiology of colorectal cancer through new methods of large-scale information gathering such as GeoAI, digital epidemiology and real-time information collection. It also examines how existing diagnostic tools, including CT/MRI, endoscopy, genetics and pathological assessment, have benefited from the implementation of deep learning. Finally, it discusses how treatment and treatment approaches to CRC can be enhanced by applying AI. The power of AI in therapeutic recommendation for colorectal cancer shows much promise in clinical and translational oncology, pointing toward better and more personalized treatments for those in need.

Keywords: AI technology, Prediction, Diagnosis, Treatment, Colorectal cancer

Introduction

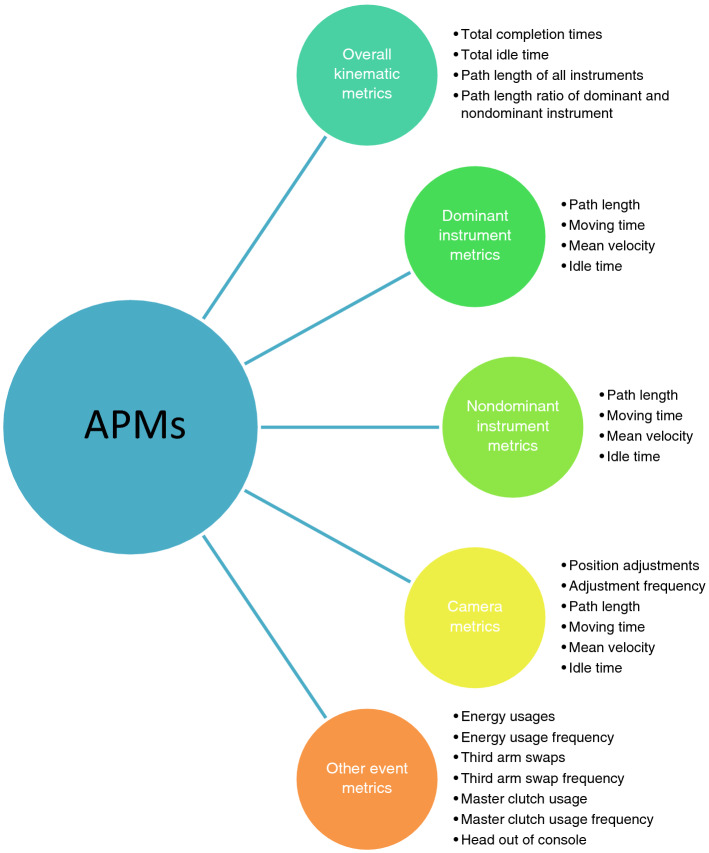

As artificial intelligence (AI) moves toward becoming a mainstay of daily life, researchers are finding that thinking systems have a plethora of potential applications. Weather forecasting, self-driving cars, and predicting the number of new customers for telecommunication companies are just some of the areas into which this technology has slowly made its way (Zheng June 2019; Sallab et al. 2017; Qureshi et al. 2013). Deep learning and machine learning are defined as the ability of AI to learn representations of data with multiple levels of abstraction, coupled with an algorithm that indicates how much the internal parameters should change relative to their previous values (LeCun et al. 2015) (Fig. 1). The ability of deep learning to detect and react to trends or patterns puts it in a category of its own, with strengths that come from categorizing, understanding, and predicting (Murphy 2012; Chan et al. 1994). Its power comes from the ability to train on large amounts of data and improve as more data become available. Its impact cannot yet be quantified, which leaves room to imagine how it could serve the general public’s mental and physical health needs. The application of intelligent systems in gastrointestinal health is no different. With AI, we find ourselves in the conundrum of having neither set boundaries for its application nor obvious starting points, though the lines are slowly becoming clearer (Russell et al. 2015).

Fig. 1.

Comparison between artificial intelligence (AI), machine learning (ML) and deep learning (DL)

AI for epidemiological prediction of colorectal cancer

AI can improve how doctors collect data for epidemiological purposes. Its predictive power is one of the most practical applications of this technology (Chen and Asch 2017). AI predictive models for public safety have been examined in environmental epidemiology, in cases such as forest fires and earthquakes (Sakr et al. 2010; Khoshemehr et al. 2019). These applications can be very useful for public health and safety (Rigano 2018), but they are dwarfed by the potential impact of AI on cancer research, treatment and prevention. According to the National Center for Health Statistics’ (NCHS) 10-year report, roughly 31,895 deaths were due to fires of any kind in the US from 2008 to 2017, while deaths from cancer in 2016 alone were estimated at 595,690 (Fire Administration and Agency 2019; Siegel et al. 2016). Colorectal cancer accounted for roughly 8.2%, or 49,190, of all cancer deaths in the US in 2016, and it remains the 3rd leading cause of cancer mortality and the 3rd most common cancer by number of new cases (Siegel et al. 2016). This raises the question of how AI can be, and is being, used to impact the epidemiology of colorectal cancer.

Deep learning has begun to be applied to epidemiological studies as a general means of interpreting research and data, but these efforts encounter difficulties because access to data, categorization, and the general boundaries of these new ideas are still being worked out (Thiébaut and Thiessard 2018, 2019; González et al. 2019; Denecke 2017). Several modalities and subspecialties of AI appear promising for predictive studies of the incidence and distribution of colorectal cancer.

A specialized facet of AI called GeoAI can potentially help healthcare providers in areas of particular need (Janowicz et al. 2020). GeoAI takes information and data from a specific geographical area and allows AI methods to compile and retrieve more specific information from these data, depending on the question being asked (Janowicz et al. 2020). It builds on spatial science, which allows a more targeted interpretation of science-based data within one specific area (VoPham et al. 2018). The available information can range from geographical (terrain) data to food consumption data, healthcare data, and many others (VoPham et al. 2018). The hope is that the areas that suffer most from colorectal cancer can benefit most from this type of information collection.

Countries like South Korea have a very high number of cases of gastrointestinal cancer. In 2015, the organ-specific cancer rate stood at 33.8 per 100,000 for gastric cancer and 30.4 per 100,000 for colorectal cancer, the 2nd and 3rd highest incidence rates respectively (Kweon 2018); classified together as gastrointestinal cancers rather than individually, they rank 1st. Colorectal cancer is a complex process that must take into consideration factors such as geographic location, genetic predisposition, nutrition, and habits (Kuipers et al. 2015). GeoAI can be tremendously useful in this space, as initial steps have already been taken to apply it in environmental health to environmental exposures, genetics, food consumption patterns, and the targeting of specific treatments to specific people in specific areas (VoPham et al. 2018; Kweon 2018; Kuipers et al. 2015; Kamel Boulos et al. 2019; KamelBoulos and Blond 2016). This type of data extraction can help define the future of colorectal cancer epidemiology with regard to how the effects of cancer are seen locally.

Another useful but potentially controversial application of AI comes from digital epidemiology (Salathé et al. 2012). Digital epidemiology addresses a different facet of public health: it gathers information that can be quantified by AI from digital sources such as social media and digital devices, giving more depth to the epidemiological information collected (Velasco 2018). This opens the door to a massive pool of information that was not previously available to physicians and can aid in early disease detection and health surveillance of the public at large (Eckmanns et al. 2019a). A major benefit of digital epidemiology is that the data are gathered entirely digitally; the big caveat is that the information was not generated with medical epidemiology in mind (Salathé 2018) but was volunteered by people online. This creates a serious problem for this type of data collection, which can directly infringe on patients’ privacy and raises general questions about information security and confidentiality (Denecke 2017; Kostkova 2018). An example of this grey area is the work by Sadilek et al., which surveilled Google, Twitter, and social media for keywords such as stomach illnesses and restaurant names, then applied machine learning to categorize and tabulate which restaurants were potentially exposing people to illness, locating those restaurants through geo-tagged searches (Sadilek et al. 2018). One can easily imagine how similar approaches could be used to gather information from people who may or may not have colorectal cancer but are openly discussing potential symptoms or issues they are experiencing, which can in turn be used to understand health needs (Table 1) (Eckmanns et al. 2019b; Tarkoma et al. 2020; Dion et al. 2015; Dodson et al. 2016; Hii et al. 2018).

Table 1.

Potential implementation of Artificial intelligence (AI) for epidemiology of colorectal cancer

| Tool | Definition | Function example | In colorectal cancer |
|---|---|---|---|
| GeoAI (Janowicz 2020) | Subfield of spatial data science to process geographic information using AI | Image classification, object detection, geo-enrichment | Etiological studies, such as food consumption, genetic predisposition, healthcare variance |
| Digital epidemiology: Global Public Health Intelligence Network (Tarkoma et al. 2020; Dion et al. 2015) | Increase situational (public health events) awareness and capability and global network links | Early detection of SARS | Increase global capacity to early-detect risks and tumor burdens |
| Digital epidemiology: HealthMap (Tarkoma et al. 2020; Dodson et al. 2016) | Utilizing online informal sources for disease surveillance | Real-time surveillance on COVID-19 | Achieve a comprehensive view of global tumor burden |
| Digital epidemiology: Program for Monitoring Emerging Diseases (Tarkoma et al. 2020; Hii et al. 2018) | Exploiting the Internet and serving as a warning system | Early reports on COVID-19 | Monitoring of emerging etiological factors associated with colorectal cancer |

SARS: Severe Acute Respiratory Syndrome; COVID-19: Coronavirus disease 2019

Although certain information sets may seem dull and essentially useless, data mining is a very helpful instrument for collecting and sorting this information to yield something relevant and useful (Hand et al. 2001). Data mining uses AI to take large quantities of information, simple or complex, related or unrelated, and sort through them to find facts, patterns and information (Han 2012). According to the book Data Mining: Concepts and Techniques by J. Han, the process includes “databases (datasets), cleaning and integration (of data), data warehouses (where all relevant data is stored), data mining (“knowledge mining from data”), patterns, evaluation and presentation (expressing it in an understandable way) and knowledge (“knowledge discovery from data”)” (Han 2012). This means that medical data on colorectal cancer can come from any type of source, whether rural hospitals, research centers, or even the aforementioned social media posts, and still yield a useful lesson or concept. Applied to the diagnosis of colorectal cancer, data mining can help uncover relationships, associations and a plethora of other previously unconsidered factors that may hold a large piece of the puzzle of what makes up colorectal cancer.
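The stages quoted from Han (integration, cleaning, mining, evaluation) can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the record layout, the factor names, the `clean` and `frequent_pairs` helpers and the support threshold are hypothetical and do not come from any cited study. It simply counts how often two recorded factors co-occur, one of the simplest forms of pattern discovery.

```python
from collections import Counter
from itertools import combinations


def clean(records):
    """Cleaning step: normalise factor names and drop records with no factors."""
    cleaned = []
    for record in records:
        factors = {f.strip().lower() for f in record.get("factors", []) if f and f.strip()}
        if factors:
            cleaned.append(factors)
    return cleaned


def frequent_pairs(transactions, min_support):
    """Mining step: keep factor pairs whose co-occurrence share meets min_support."""
    counts = Counter()
    for factors in transactions:
        counts.update(combinations(sorted(factors), 2))
    total = len(transactions)
    return {pair: n / total for pair, n in counts.items() if n / total >= min_support}


# Hypothetical records from two different sources (illustrative only).
hospital_records = [
    {"factors": ["Smoking", "red meat "]},
    {"factors": ["red meat", "smoking", "family history"]},
]
social_media_records = [
    {"factors": ["smoking"]},
    {"factors": []},  # an empty post is removed by the cleaning step
]

# Integration step: pool the sources, then clean and mine them.
integrated = hospital_records + social_media_records
transactions = clean(integrated)
patterns = frequent_pairs(transactions, min_support=0.5)

# Presentation step: print the surviving patterns with their support.
for pair, support in sorted(patterns.items()):
    print(pair, round(support, 2))
```

Real data mining systems use far more scalable algorithms and richer evaluation measures; the sketch only shows how heterogeneous sources can be pooled before patterns are extracted.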

AI for diagnosis of colorectal cancer

As more and more technologies look to AI as an aid, it is no surprise that diagnostic methods for detecting cancer have slowly been adopting it to diagnose patients with greater accuracy and precision (Huang et al. 2019). An added benefit is its ability to handle large amounts of data and extract information that experts simply cannot see (Huang et al. 2019). Attempts to improve medical imaging have begun by examining how deep learning can enhance the way cancer is found on imaging; they range from tools that scan and interpret images faster, to tools that assure better workflow and image quality, to methods that enhance image quality by incorporating 3D technologies into image extraction (Liu et al. 2018a; Topol 2019; Thompson et al. 2018; Li et al. 2018).

Though imaging modalities seem like a natural fit for AI, its potential use in pathology and genetic disease diagnosis seems equally promising and deserves equal focus. This could entail changes to current methods of medical testing or open up new ways of seeing diseases as they appear. Overall, improving current methods of imaging or testing could have transformative effects.

Endoscopy and MRI/CT imaging

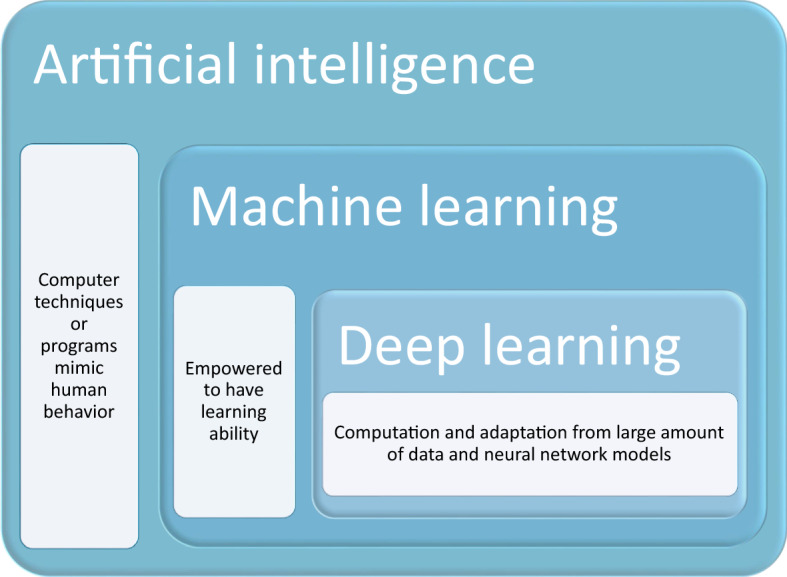

Understanding how AI is implemented in the interpretation of visual imagery begins with understanding another dimension of AI, namely convolutional neural networks (CNNs). These are artificial neural networks (ANNs) that deal exclusively with image data (Wu 2017) (Fig. 2). By taking in large image data sets, they can learn and find patterns to identify new things, and since they are a branch of AI, they allow for optimization and have the capacity to learn new things (O'Shea and Nash 2015). It is also worth noting that information taken in by computers and interpreted in real time by AI is referred to as computer vision, which includes any information coming from cameras or videos. Naturally, one makes the leap to how methods from CNNs and computer vision can be applied to one of the most powerful tools available in colorectal cancer, the endoscope. With regard to CNNs, the most popular approach to object detection is the replicated feature approach, which employs copies of the same feature detector at different positions (Le 2018). Other important properties are that it reduces the number of free parameters, is “able to replicate across scale and orientation” and employs feature types that each have their “own map of replicated detectors” (Le 2018). This is beneficial in two ways: by “not making neural networks invariant to translation” and by allowing features that are useful in one area to be useful in all areas, also referred to as invariant knowledge (Le 2018).
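The replicated feature idea can be made concrete with a small sketch in plain Python. It is an illustration under stated assumptions, not the method of any cited study: the 6×6 toy image, the 2×2 kernel and the helper names `correlate2d`, `make_image` and `peak` are all hypothetical. One small detector is applied at every position, so a pattern that moves in the input produces the same response at a correspondingly moved position in the feature map, while the number of free parameters stays at the size of the kernel.

```python
def correlate2d(image, kernel):
    """Apply one shared kernel at every valid position of a 2D image."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def make_image(size, top, left):
    """Toy image: zeros everywhere except a 2x2 bright blob at (top, left)."""
    image = [[0] * size for _ in range(size)]
    for r in range(top, top + 2):
        for c in range(left, left + 2):
            image[r][c] = 1
    return image


def peak(feature_map):
    """Return ((row, col), value) of the strongest response in a feature map."""
    value, row, col = max(
        (v, r, c)
        for r, row_vals in enumerate(feature_map)
        for c, v in enumerate(row_vals)
    )
    return (row, col), value


KERNEL = [[1, 1], [1, 1]]  # hypothetical "blob detector" with 4 shared weights

peak_original = peak(correlate2d(make_image(6, 1, 1), KERNEL))
peak_shifted = peak(correlate2d(make_image(6, 3, 2), KERNEL))

# Free parameters: one shared kernel versus a fully connected layer that maps
# the same 36 input pixels to the same 25 output positions.
shared_weights = len(KERNEL) * len(KERNEL[0])
dense_weights = (6 * 6) * (5 * 5)

print(peak_original, peak_shifted, shared_weights, dense_weights)
```

The detector responds with the same strength wherever the blob appears, and only the location of the peak changes. Practical CNNs stack many such learned kernels and add pooling and nonlinearities, which this sketch omits.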

Fig. 2.

Schematic of an artificial neural network (ANN) with input layer (I), hidden layer (H), bias layer (B) and output layer (O). Connections between nodes are dynamically adjusted according to feedback from the training process. Positive correlations are shown as black lines and negative correlations as grey lines. Line thickness is proportional to relative significance. Such an ANN accepts new input and generates a given output

Initial steps toward enhancing the endoscope have come from segmentation, the ability of computer vision to distinguish separate objects rather than treating the scene as one large object (Yuheng and Hao 2017). Ideally, segmentation would allow differentiation of anatomy and also differentiation between normal tissue and cancerous masses (Kayalibay et al. 2017). To determine what is “normal” and “abnormal”, pattern recognition may be incorporated to provide spatial input recognition, which, when coupled with neural network architectures like CNNs, allows some form of differentiation. Zhang et al. recently noted that imaging of hollow organs can suffer from unwanted artifacts (patient-related, signal-related, or equipment-related), because instruments are actively moving and possibly displacing structures, and these artifacts may interfere with a proper diagnosis (Zhang and Xie 2019). They examined different available datasets to refine methods for minimizing the number of artifacts picked up by the segmentation application. More recently, a similar but much larger project by Ali et al. compared 23 different algorithms on a common dataset for segmentation in endoscopy (Ali et al. 2020). They found that the algorithms performed similarly for the most part in detecting artifacts, but most struggled with larger artifacts, which is a significant issue because of the potential for misinterpretation or false-positive findings (Ali et al. 2020).

More direct methods have applied CNNs to endoscopy by attempting to detect polyps directly. In a first attempt, Misawa et al. created their own algorithm to identify colorectal polyps (Misawa et al. 2018). Video data sets were run through the algorithm, while 2 experts, treated as the gold standard, reviewed and annotated the same data sets; their findings were later compared with those of the AI algorithm. Computer-aided detection correctly identified 64.5% (100/155) of flat lesions, the most difficult to diagnose, and 94% of the test polyps (Misawa et al. 2018; Kudo et al. 2019; Liu et al. 2018b).

Building on these findings, Mori et al. applied the same algorithm used by Misawa et al. in a live experiment (Mori et al. 2019). This time they used an endocytoscope, which offers over 500-fold magnification while still functioning as a normal endoscope. Six patients were chosen and all underwent the same procedure. Lesions in all 6 patients were identified in real time and ranged from adenoma to hyperplastic polyp (Mori et al. 2019). Features added since the previous experiment included a color change in the corners of the screen to indicate a deviation from normal, a warning sound and, more importantly, the ability to determine in real time which lesions were neoplastic or non-neoplastic by evaluating the microscopic image (Mori et al. 2019).

Mori et al. postulate that the benefits of incorporating deep learning into colonoscopy, beyond the obvious one of increased diagnostic accuracy, include minimizing variation in detection rates, providing better teaching tools for endoscopists and trainees, and reducing the number of unnecessary polypectomies (Mori et al. 2017). Endoscopic studies involving AI have flourished (Table 2) (Ichimasa et al. 2018; Nakajima et al. 2020; Lai et al. 2021; Yamada et al. 2019; Chen et al. 2018; Repici et al. 2020; Kudo et al. 2020; Mori et al. 2018; Nguyen et al. 2020; Deding et al. 2020). Roadblocks to implementing these technologies include the state of technological progress, the lack of regulations, clinical trials, feasibility, the risk of misdiagnosis and others (Kudo et al. 2019; Mori et al. 2017). Potentially the largest issue lies in the nature of the system itself. Because all AI relies on data sets to subsist and grow, and the data sets available for this specialty are very scarce, the capacity to learn new things is also limited, which constrains the network overall (Mori et al. 2017).

Table 2.

Endoscopic studies involving artificial intelligence for diagnosis and prediction of colorectal cancer

| Study | AI algorithm/system | Study design | Sensitivity | Specificity | AUC | Accuracy |
|---|---|---|---|---|---|---|
| Ichimasa et al. (2018) | SVM | Prediction of lymph node metastasis post endoscopic resection of T1 colorectal cancer | 100% | 66% | – | 69% |
| Nakajima et al. (2020) | CNN | Automatic diagnosis system by computer-aided diagnosis (CAD) based on plain endoscopic images | 81% | 87% | 0.888 | 84% |
| Lai et al. (2021) | DNN | Improve polyp detection and discrimination by CAD | 100% | 100% | – | 74–95% |
| Yamada et al. (2019) | CNN | Develop a real-time detection system for colorectal neoplasm | 97.3% | 99% | 0.975 | Good and excellent* |
| Chen et al. (2019) | DNN | Develop a CAD diagnosis system to analyze narrow-band images | 96.3% | 78.1% | – | 90.1% |
| Repici et al. (2020) | CNN | To assess the safety and efficacy of a computer-aided detection (CADe) system | – | – | – | – |
| Kudo et al. (2020) | CNN | To determine diagnostic accuracy of EndoBRAIN | 96.9% | 100% | – | 98% |
| Mori et al. (2018) | SVM | Evaluate the performance of real-time CADe with endocytoscope | 91.3–95.2% | 65.6–95.9% | – | – |
| Nguyen et al. (2020) | CNN | To pre-classify the in vivo endoscopic images | 19.6–87.4% | 42.5–90.6% | – | 52.6–68.9% |
| Deding et al. (2020) | – | To investigate relative sensitivity of colon capsule endoscopies compared with computed tomography colonography | 2.67# | – | – | – |

SVM: support vector machines; CNN: convolutional neural network; DNN: deep neural network; AUC: area under the curve from the receiver operating characteristics; *shown using intersection over the union (IOU); #relative sensitivity
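For readers less familiar with the measures reported in Table 2, the sketch below shows how sensitivity, specificity and accuracy follow from a confusion matrix, and how intersection over union (IoU) is computed for two bounding boxes. The confusion counts and box coordinates are hypothetical values chosen for illustration and are not taken from any study in the table; the only figure from the text is the 100/155 flat-lesion count reported for Misawa et al.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Standard definitions from true/false positive and negative counts."""
    return {
        "sensitivity": tp / (tp + fn),  # share of diseased cases detected
        "specificity": tn / (tn + fp),  # share of healthy cases correctly cleared
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }


def intersection_over_union(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union else 0.0


# Hypothetical confusion counts (not from any cited study).
metrics = diagnostic_metrics(tp=90, fp=20, tn=80, fn=10)

# Hypothetical predicted and annotated polyp boxes.
overlap = intersection_over_union((0, 0, 2, 2), (1, 1, 3, 3))

# The flat-lesion detection rate quoted in the text for Misawa et al.
flat_lesion_rate = 100 / 155

print(metrics, round(overlap, 3), round(100 * flat_lesion_rate, 1))
```

Because sensitivity and specificity are computed on different subsets of patients, a system can score 100% on one while performing poorly on the other, which is why Table 2 reports them side by side.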

CT and MRI also benefit greatly from CNNs, though information about their implementation for colorectal cancer is very scarce. Thus far, the areas in which AI has had the strongest impact on this type of imaging are detection, segmentation and classification of images (Yamashita et al. 2018; Shan et al. 2019), which mirror the previously mentioned attempts but are applied to CT/MRI (Zhang and Xie 2019; Ali et al. 2020; Mori et al. 2019). Another often overlooked way in which technology can indirectly affect patient health was shown in a study by Shan et al., in which low-dose CT scans were reconstructed with the aid of machine learning to yield a valuable image (Sharma and Aggarwal 2010). This can be a major step toward reducing the amount of radiation patients receive during scans and alleviating unwanted side effects (Sharma and Aggarwal 2010). There have also been general attempts to improve attenuation correction of PET/MR images using an approach called deep MRAC (deep learning approaches for MR image-based AC), with the aim of improving image quality for better readings and improving segmentation technology (Pesapane et al. 2018; Lundervold and Lundervold 2019).

With both endoscopy and CT/MRI imaging, we are reminded that AI is not yet fully optimized or ready for complete implementation, and that technicians remain invaluable to the interpretation of imaging (Tajbakhsh et al. 2016). Pesapane et al. believe that machines will not replace radiologists, but underline the importance of combining the efforts of radiologists and computer scientists to make more effective tools, even at the cost of fewer jobs in the field (Tajbakhsh et al. 2016). Lundervold et al. hold that this technology is here to stay and stress the significance of integrating computational medicine into the mainstream (Ribeiro et al. 2016). The general direction appears to be toward overall improvement and incorporation of AI by improving colonoscopy, with an emphasis on the detection of polyps (Borkowski et al. 2019; Marley and Nan 2016). This includes improving how learning systems understand shapes and adjacent boundaries, suggesting that it is better to optimize and improve current technologies than to start from nothing (Ali et al. 2020; Misawa et al. 2018; Borkowski et al. 2019).

In general, certain limitations can be found when using CNNs for image or object recognition. Images may contain aberrations in how pixels appear, leading to misinterpretation due to lost or abnormal pixelation. Anatomy is not identical across individuals and can therefore be misinterpreted. Differences in patient positioning or anatomy can change how an image is collected, again leading to potential misinterpretation. There is also the issue of segmentation itself: how to differentiate relevant information from artifacts (e.g., bubbles or gas) that have no bearing on the diagnosis of a neoplasia.

Genetic and pathological diagnosis

Efforts toward pathological understanding and genetic diagnosis seek to enhance the current categorizing framework and to look for other pathologic signs or genetic causes of carcinogenesis. This can prove important since histopathology or genetics alone might not be enough for AI to diagnose CRC, as seen in the study by Borkowski et al., which compared different AI platforms for detecting adenocarcinoma in the veteran population and found that the machine learning tools had the most difficulty differentiating adenocarcinoma with KRAS mutation from adenocarcinoma without KRAS mutation (Germot and Maftah 2019). This means a more unified approach may be required.

Though the influence of environmental causes cannot be overstated (Hilario and Kalousis 2008), genetics also plays an invaluable role, as shown by studies of the part that tumor suppressor genes and oncogenes such as APC, KRAS, MTHFR and others play in the potential formation of colorectal cancer (Hilario and Kalousis 2008). This has led to an explosion in the number of potential biomarkers and indicators that may be linked to cancer growth, e.g. POFUT1 and PLAGL2 (Iizuka et al. 2020). A large problem arises, though: how can many genes and variables be extracted from a small sample size and then reapplied (Ciompi et al. 2017)?

Training AI to classify tumors based on histopathology alone has been done with some success. Iizuka et al. trained an algorithm to classify gastric and colonic epithelial tumors into adenocarcinoma, adenoma, or non-neoplastic (Komeda et al. 2017). Whole slide images were collected from datasets and input to the AI, where they were segmented into smaller tiles, organized, and compared to a standardized tile representing 1 of the 3 potential states, yielding an eventual classification (Komeda et al. 2017). They compared max pooling (MP), a type of CNN which uses smaller tile sizes, with a recurrent neural network (RNN) for evaluating and classifying the images. RNNs proved more accurate because of the information loss inherent to MP, though the difference was not statistically significant (Komeda et al. 2017). Both systems had identification issues when inflammatory tissue was included in the data sets. Ciompi et al. postulate that failures to recognize images may not be entirely the fault of AI, but rather that CNNs need better quality images to learn from, and that AI can be helped by stain normalization in H&E images, leading to increased accuracy (Dimitriou et al. 2018).
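The tiling and aggregation steps can be sketched as follows. This is a simplified illustration under explicit assumptions: it reads MP as taking, for each class, the maximum probability over all tiles of a slide, and the tile size, stride, per-tile probabilities, decision threshold and function names are all hypothetical rather than taken from the study.

```python
CLASSES = ("adenocarcinoma", "adenoma", "non-neoplastic")


def tile_origins(width, height, tile, stride):
    """Top-left corners of the tiles that fit inside a whole slide image."""
    return [
        (x, y)
        for y in range(0, height - tile + 1, stride)
        for x in range(0, width - tile + 1, stride)
    ]


def max_pool(tile_probs):
    """Slide-level score per class: the highest probability seen in any tile."""
    return {cls: max(p[cls] for p in tile_probs) for cls in CLASSES}


def classify_slide(tile_probs, threshold=0.5):
    """Hypothetical rule: report the most severe class whose pooled score passes."""
    pooled = max_pool(tile_probs)
    for cls in ("adenocarcinoma", "adenoma"):
        if pooled[cls] >= threshold:
            return cls
    return "non-neoplastic"


# A toy 1024 x 512 slide cut into non-overlapping 512-pixel tiles.
origins = tile_origins(width=1024, height=512, tile=512, stride=512)

# Hypothetical per-tile outputs of a tile classifier.
tile_probs = [
    {"adenocarcinoma": 0.05, "adenoma": 0.10, "non-neoplastic": 0.85},
    {"adenocarcinoma": 0.10, "adenoma": 0.70, "non-neoplastic": 0.20},
    {"adenocarcinoma": 0.02, "adenoma": 0.08, "non-neoplastic": 0.90},
]

pooled = max_pool(tile_probs)
label = classify_slide(tile_probs)
print(origins, pooled, label)
```

Under this reading, the pooled score keeps only the single strongest tile per class and discards how many tiles agreed or where they were located, which is one way to picture the information loss attributed to MP above.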

Diagnosing CRC late can lead to an abundance of problems. In a study by Komeda et al., a CNN model for distinguishing adenomatous from non-adenomatous polyps reached roughly 70% accuracy (Banerjee et al. 2020). This means we are far from optimized, but AI could aid in other ways in diagnosing later-stage CRC. One consideration for how AI can be incorporated in diagnosis comes from a study by Dimitriou et al., which sought to increase the accuracy of stage II CRC prognosis (Ferrari et al. 2019). Dimitriou et al. state that too much focus is placed on the characteristics of the tumor itself, and that more attention should be given to other traits such as textures, spatial relationships and morphology, all of which were handled by machine learning (Ferrari et al. 2019). Their model outperformed pathological T staging, achieving an area under the receiver operating characteristic curve (AUROC) above 77% and 94% for 5- and 10-year prognoses respectively, compared to 62% at both 5 and 10 years using pathological T stage (Ferrari et al. 2019). They attribute this to greater emphasis on the micro-environment, the intensity of DAPI (the fluorescence microscopy stain) from tumor cell buds, and irregularly shaped nuclei, insights brought by machine learning (Ferrari et al. 2019).

Though currently theoretical, pathological diagnosis of colorectal cancer seems a natural fit for fuzzy-based systems. By their nature, fuzzy systems allow a more detailed interpretation of information than forcing everything into the “0” or “1” category (i.e., “no” or “yes”), by allowing any number between 0 and 1. This approach can be applied to any form of pathology and can help identify aberrations or predict cancer likelihood based on set parameters. In the case of colorectal polyps, fuzzy parameters can easily be matched to characteristics deemed important, including the polyp stalk in pedunculated or mucosal lesions, whether serrations on hyperplastic polyps will become advanced lesions, and specific sizes or shapes that help pre-determine whether a growth falls in the lower-risk group or whether an adenomatous polyp will become cancer. An attempt to apply fuzzy logic concepts to cancer diagnosis was made by Banerjee et al., who employed the YOLO algorithm with deep convolutional neural networks (DCNNs) to evaluate images and identify melanoma (Martínez-Romero et al. 2010). Since early recognition of melanoma depends heavily on physical attributes of moles such as asymmetry, borders, color, diameter and evolution, it is reasonable to think that fuzzy logic concepts can be applied to colorectal cancer and the physical attributes of the lumen or polyps.
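A minimal sketch of the fuzzy idea follows. The membership thresholds (a ramp from 5 mm to 10 mm for “large”, and from 0.2 to 0.8 for an irregularity score), the irregularity score itself and the combining rule are purely hypothetical illustrations; they are not clinical cut-offs and do not come from the cited work.

```python
def ramp(value, low, high):
    """Membership that rises linearly from 0 at `low` to 1 at `high`."""
    if value <= low:
        return 0.0
    if value >= high:
        return 1.0
    return (value - low) / (high - low)


def fuzzy_and(a, b):
    """Common fuzzy conjunction: the weaker of the two memberships."""
    return min(a, b)


def fuzzy_or(a, b):
    """Common fuzzy disjunction: the stronger of the two memberships."""
    return max(a, b)


def polyp_concern(size_mm, irregularity):
    """Hypothetical rule: concern = 'large' AND 'irregularly shaped'."""
    is_large = ramp(size_mm, low=5.0, high=10.0)         # assumed thresholds
    is_irregular = ramp(irregularity, low=0.2, high=0.8)  # assumed score in [0, 1]
    return fuzzy_and(is_large, is_irregular)


small_smooth = polyp_concern(size_mm=4.0, irregularity=0.1)
mid_irregular = polyp_concern(size_mm=7.5, irregularity=0.8)
large_irregular = polyp_concern(size_mm=12.0, irregularity=0.9)
print(small_smooth, mid_irregular, large_irregular)
```

Instead of a yes/no answer, each polyp receives a degree of concern between 0 and 1, so a 7.5 mm lesion is treated as partly “large” rather than being forced to one side of a single cut-off.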

Treatment

AI for therapeutic assessment

Ferrari et al. reported that an AI model based on texture analysis of MR images could assess therapeutic complete response (CR) of rectal cancer to neoadjuvant chemotherapy (Biglarian et al. 2012). The area under the receiver operating characteristic curve (AUC) of the AI model was comparably high, 0.86, with a 95% confidence interval of 0.70–0.94. The AI model thus enables early distinction between CR and non-response (NR) to therapy in the treatment of rectal cancer.

Moreover, the significance of AI in the drug metabolism of CRC has also been recognized (Lisboa and Taktak 2006). AI enables a better understanding of the specific metabolism and drug-induced transformations that are closely associated with the progression of CRC. AI-assisted methods provide reliable characterization of the drug metabolic network of CRC, leading to the identification of distinguishing elements in the metabolic pathways (Lisboa and Taktak 2006). AI also dynamically describes the information-rich network of drug metabolism in CRC (Lisboa and Taktak 2006). The processing of complex networks of biological information has been considerably improved by AI (Lisboa and Taktak 2006).

In addition, the predictive power of AI in CRC using ANN algorithms is increasingly valued (Schöllhorn 2004). ANNs are characterized by nonlinear models that adapt flexibly to medical research and clinical practice (Kickingereder et al. 2019; Yin et al. 2019). Several advantages of ANNs have been recognized. First, they can improve the optimization process, resulting in flexible, cost-effective nonlinear models, particularly in large-data scenarios. Second, they provide accurate and reliable predictions for clinical decision making. Third, these models facilitate academic communication and knowledge dissemination (Kickingereder et al. 2019; Yin et al. 2019; Chen et al. 2019; Somashekhar et al. 2018; Mayo et al. 2017). In a systematic review of 27 studies (clinical trials or randomized controlled trials) using ANNs as diagnostic or prognostic tools, 21 showed improved benefits to healthcare provision and the remaining 6 showed outcomes similar to conventional approaches (Kickingereder et al. 2019). Meanwhile, Akbar et al. reported that ANN prediction of distant metastasis of CRC performed better (AUROC = 0.812) than logistic regression models (AUROC = 0.779) in 1219 CRC cases (Schöllhorn 2004).
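Since AUROC is the yardstick used to compare the ANN and logistic regression models, a short sketch of how it can be computed may be helpful. It uses the rank-based interpretation: the AUROC is the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half. The labels and scores below are hypothetical and unrelated to the cited data.

```python
def auroc(labels, scores):
    """AUROC as the share of positive/negative pairs ranked correctly."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUROC needs at least one positive and one negative case")
    correctly_ranked = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return correctly_ranked / (len(positives) * len(negatives))


# Hypothetical outcomes (1 = distant metastasis) and model risk scores.
labels = [1, 1, 0, 0, 1, 0]
scores = [0.9, 0.4, 0.3, 0.5, 0.8, 0.1]

area = auroc(labels, scores)
print(round(area, 3))
```

An AUROC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation, so the reported difference between 0.812 and 0.779 reflects a modest improvement in how well cases are ranked rather than a change in any single decision threshold.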

IBM Watson for Oncology, a new AI trend for therapeutic recommendation

Clinical decision-support systems (CDSSs) show huge potential for therapeutic management in oncology given the growing volume of clinical information. By simulating human reasoning, they enable the collection and processing of knowledge in a way that surpasses conventional human effort (Seroussi and Bouaud 2003). In general, the role of AI in healthcare is focused on processing structured data (machine learning) or unstructured data (natural language processing). Computational reasoning algorithms have been developed for in-depth curation and evaluation of clinical information. AI has been incorporated into several systems for oncology, including CancerLinQ of the American Society of Clinical Oncology and the OncoDoc system (Zhou et al. 2019; Somashekhar et al. 2017a).

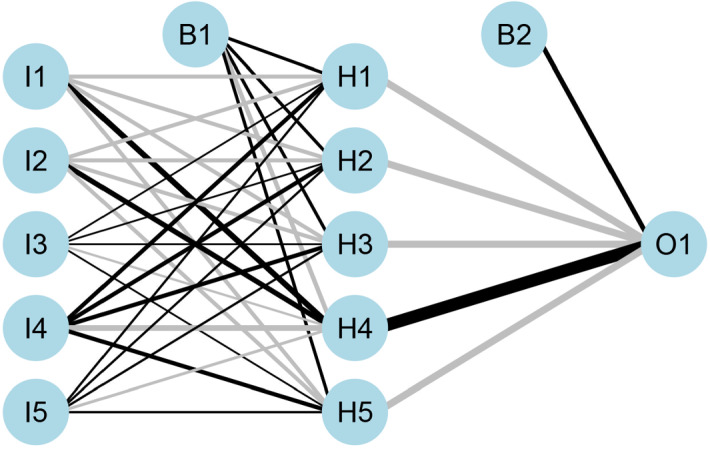

Intriguingly, it is a cognitive computing system, IBM Watson for Oncology (WFO), that accelerated the involvement of AI in oncology therapy selection. WFO, which features confidence-ranked, evidence-based recommendations, has been increasingly valued in cancer treatment (Somashekhar et al. 2017b; Meng et al. 2020) (Fig. 3). WFO was trained at Memorial Sloan Kettering Cancer Center (New York, United States). It uses natural language processing and clinical data from multiple sources (treatment guidelines, expert opinions, literature and medical records) to formulate recommendations (Somashekhar et al. 2017b). Doctors’ notes and medical records, among the most clinically valuable assets, remain obstacles to the clinical use of AI. Commonly, the training data for AI are supervised and labeled, as in winning or losing a board game: the connections between input and output values are clear and already known. However, it is much harder, and perhaps impossible, to assemble a supervised dataset of this kind in oncology practice, since a major part of a case record consists of doctors’ notes and other unstructured, individual-specific information that is hard to feed to WFO. Nonetheless, breakthroughs have been made in diagnosis. A concordance of 96% between WFO and the treatment decisions of selected oncologists was reported in ovarian cancer, whereas only 12% concordance was found in gastric cancer (Somashekhar et al. 2017b).

Fig. 3.

Typical flow diagram of WFO procedure; WFO: Watson for Oncology

Meanwhile, WFO has been implemented at Manipal Comprehensive Cancer Center (Bangalore, India) to compare cancer therapy recommendations in 250 CRC cases and 112 lung cancer cases (Yang et al. 2017). Of note, WFO and the local expert multidisciplinary tumor board gave concordant recommendations in 92.7% of rectal cancer cases and 81.0% of colon cancer cases (Yang et al. 2017).
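The concordance figures quoted above are, at their simplest, the share of cases in which two sources agree. The sketch below shows that calculation under the assumption that each case carries a single treatment label from each source. Published studies may define concordance more loosely, so the exact-match rule, the function name `concordance_rate` and the example labels are all illustrative assumptions.

```python
from typing import Sequence


def concordance_rate(system: Sequence[str], board: Sequence[str]) -> float:
    """Fraction of cases in which both sources chose the same treatment."""
    if len(system) != len(board) or not system:
        raise ValueError("inputs must be non-empty and of equal length")
    matches = sum(1 for s, b in zip(system, board) if s == b)
    return matches / len(system)


# Hypothetical recommendations for five cases (labels are made up).
system_choice = ["chemo", "surgery", "chemoradiation", "surgery", "chemo"]
board_choice = ["chemo", "surgery", "chemoradiation", "chemo", "chemo"]

rate = concordance_rate(system_choice, board_choice)
print(f"concordance: {rate:.1%}")
```

Reporting the rate separately per tumor type, as the studies above do, only requires calling the function on each subgroup of cases.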

Role of AI in surgery

Surgery is another promising field for AI. Ichimasa et al. reported that, compared with the clinical guidelines of the United States (National Comprehensive Cancer Network, NCCN), Europe (European Society for Medical Oncology, ESMO) and Japan (Japanese Society for Cancer of the Colon and Rectum, JSCCR), AI significantly reduced unnecessary additional surgery following endoscopic resection of T1 CRC (Ichimasa et al. 2018). Specifically, a support vector machine (SVM) was built for supervised machine learning to identify patients who required additional surgical intervention (Ichimasa et al. 2018).
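For readers unfamiliar with SVMs, the following is a minimal sketch of a linear SVM trained by subgradient descent on the regularized hinge loss. It is not the model of Ichimasa et al.: the two features, the toy data, the hyperparameters (`lam`, `lr`, `epochs`) and the function names are assumptions chosen only to show the supervised-learning idea.

```python
from typing import List, Sequence, Tuple


def train_linear_svm(
    X: Sequence[Sequence[float]],
    y: Sequence[int],
    lam: float = 0.01,
    lr: float = 0.1,
    epochs: int = 200,
) -> Tuple[List[float], float]:
    """Fit weights w and bias b on the L2-regularized hinge loss.

    Labels must be +1 or -1.
    """
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            # Regularization step: shrink the weights slightly.
            w = [wj * (1.0 - lr * lam) for wj in w]
            if margin < 1.0:
                # Sample lies inside the margin or is misclassified:
                # move the decision boundary towards classifying it correctly.
                w = [wj + lr * yi * xj for wj, xj in zip(w, xi)]
                b += lr * yi
    return w, b


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> int:
    """Return +1 or -1 depending on the side of the decision boundary."""
    score = sum(wj * xj for wj, xj in zip(w, x)) + b
    return 1 if score >= 0 else -1


# Toy, made-up data: two standardized risk features per patient (hypothetical).
# +1 = additional surgery indicated, -1 = follow-up only.
X_train = [(-2.0, -1.0), (-1.0, -2.0), (-2.0, -2.0),
           (2.0, 1.0), (1.0, 2.0), (2.0, 2.0)]
y_train = [-1, -1, -1, 1, 1, 1]

w, b = train_linear_svm(X_train, y_train)
print("prediction for a new high-risk patient:", predict(w, b, (3.0, 3.0)))
```

In practice a library implementation with cross-validation and a held-out test set would be preferable; the hand-written loop is used here only to keep the example self-contained.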

Recently, Meng et al. initiated a multicenter diagnostic study characterizing early CRC using AI techniques (Kalis et al. 2018). The study included 4390 images of intraepithelial neoplasm. The AI-assisted diagnostic accuracy was 0.963 in the internal validation set and 0.835 in the external datasets. The researchers concluded that this approach outperformed expert endoscopists in sensitivity and specificity. Moreover, according to the authors, early and effective prevention of CRC using this approach is also applicable and reliable (Kalis et al. 2018).
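Accuracy, sensitivity and specificity, as reported above, all derive from the same confusion matrix. The sketch below computes them from binary reference labels and binary predictions. The example labels are invented and the function name `diagnostic_metrics` is an assumption.

```python
from typing import Dict, Sequence


def diagnostic_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, sensitivity and specificity for binary labels (1 = disease)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one diseased and one healthy case")
    return {
        "accuracy": (tp + tn) / len(y_true),
        "sensitivity": tp / (tp + fn),  # share of diseased cases detected
        "specificity": tn / (tn + fp),  # share of healthy cases cleared
    }


# Hypothetical reference diagnoses and model outputs for ten images.
reference = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
predicted = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]

metrics = diagnostic_metrics(reference, predicted)
print(metrics)
```

Computing the same three values on an internal validation set and on external datasets, as in the study above, shows how well a model generalizes beyond the center where it was trained.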

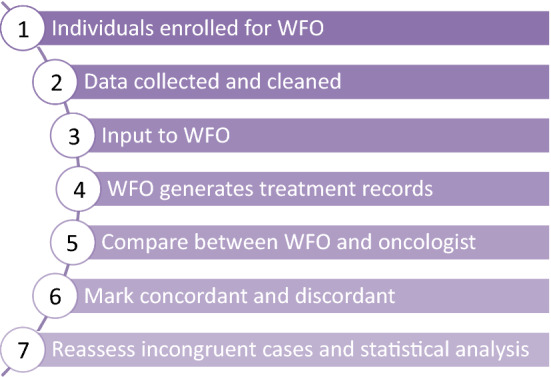

The role of AI in surgery remains superficial, perhaps because surgery itself is laborious, time-intensive and sometimes non-scalable. The results achieved with AI so far merely facilitate the decision-making process. The real value of AI lies in embedding it into surgical concepts, techniques and skills. Over the past several decades, surgery has advanced from open to minimally invasive approaches, such as laparoscopy and robotic assistance (Chang et al. 2021). In 2018, Kalis et al. highlighted 10 promising AI applications in the healthcare system (Hung et al. 2018a). Among them, robot-assisted surgery ranked as the most promising application, with a wide market across types of surgery. Robot-assisted surgery equipped with AI not only guides the surgeon in real time and improves performance, but also generates large quantities of data for subsequent surgical training and simulation. Specifically, multi-dimensional metrics of robotic surgery, such as instrument position, camera movement, grip force and vessel detection, can be invaluable, particularly when collected from expert surgeons (Hung et al. 2018a). Of note, considerable algorithmic progress has been made based on automated performance metrics (APMs) data (Ghani et al. 2016). APMs, which describe instrument motion tracking, are mainly generated from surgical video (Goldenberg et al. 2017) (Fig. 4).

Fig. 4.

Specific terms defined in automated performance metrics (APMs). They comprise five major categories: overall kinematic metrics, dominant instrument metrics, nondominant instrument metrics, camera metrics and other event metrics.
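As an illustration of what kinematic APMs can look like in practice, the sketch below derives path length, moving time, idle time and mean speed from a time-ordered track of instrument-tip positions. The input layout `(t, x, y, z)`, the `idle_speed` threshold and the metric names are assumptions for this example and are not the definitions used by any specific recording tool.

```python
import math
from typing import Dict, Sequence, Tuple

Sample = Tuple[float, float, float, float]  # (time in s, x, y, z)


def kinematic_metrics(samples: Sequence[Sample], idle_speed: float = 1.0) -> Dict[str, float]:
    """Summarize one instrument track.

    A segment counts as idle when its average speed is below `idle_speed`
    (same length unit as the coordinates, per second).
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    path_length = 0.0
    moving_time = 0.0
    idle_time = 0.0
    for (t0, x0, y0, z0), (t1, x1, y1, z1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        step = math.dist((x0, y0, z0), (x1, y1, z1))
        path_length += step
        if step / dt < idle_speed:
            idle_time += dt
        else:
            moving_time += dt
    total_time = samples[-1][0] - samples[0][0]
    return {
        "path_length": path_length,
        "total_time": total_time,
        "moving_time": moving_time,
        "idle_time": idle_time,
        "mean_speed": path_length / total_time,
    }


# Hypothetical track: move, pause for one second, then move again.
track = [(0.0, 0.0, 0.0, 0.0), (1.0, 3.0, 4.0, 0.0),
         (2.0, 3.0, 4.0, 0.0), (4.0, 3.0, 4.0, 12.0)]
apm = kinematic_metrics(track)
print(apm)
```

Running the same summary separately for the dominant instrument, the nondominant instrument and the camera would give per-category metrics that can then be fed to a classifier.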

The value of AI as an effective tool for assessing surgical skills is promising (Ghani et al. 2016). A previous study in the Michigan Urological Surgery Improvement Collaborative reported that crowd-sourced methodology could be of value for surgical skills assessment based on video clips of robot-assisted radical prostatectomy (RARP) (Law et al. 2017). Meanwhile, another study reported a possible correlation between surgical performance and functional outcome in RARP (Hung et al. 2018b). However, some challenges remain, including the comparatively long time required and subjective bias. Introducing AI into this field may make the assessment more objective.

An initial SVM classifier system has shown remarkable accuracy in assessing surgical performance or skills, even when trained on limited data (Ghani et al. 2016; Hung et al. 2018c). A growing number of AI algorithms and models have been developed (Table 3) (Hung et al. 2019; Zia et al. 2018, 2019; Schroerlucke et al. 2017; Rao et al. 2017; Guan et al. 2017). We believe video-based surgery, both robotic and laparoscopic, is fertile ground for AI.

Table 3.

AI algorithms/models utilized in surgery

| Algorithm/model | Categorization | Function | Training data |
|---|---|---|---|
| SVM (Law et al. 2017) | Deep learning | Categorize surgical performance into high/low based on video training data | Video data |
| dVLogger tool (Hung et al. 2018a) | NA | Recording data and synchronizing surgical footage and APMs | Video data |
| Random forest-50 model (Hung et al. 2018b) | Machine learning | Predict clinical outcomes in the form of hospital stay | Objective metrics |
| DeepSurv (Hung et al. 2019) | Deep learning | Predict postoperative urinary continence | Objective metrics |
| Random survival forest (Hung et al. 2019) | Deep learning | Predict postoperative urinary continence | Objective metrics |
| CNN RP-Net (Zia et al. 2018) | Deep learning | A methodology to recognize key steps in surgery | Objective metrics |
| CNN RP-Net-V2 (Zia et al. 2019) | Deep learning | Superior to RP-Net | Objective metrics |

SVM: support vector machine; APMs: automated performance metrics; CNN: convolutional neural network

Novel techniques or perspectives could be inspired as well (Hung et al. 2018a). Schroerlucke et al. reported that AI-empowered robotic surgery reduced hospital stay by 21% and led to fewer postoperative complications, saving around $40 billion each year (Guan et al. 2017). Integrating AI into surgical performance, from AI-assisted to AI-dominated, remains one of the ultimate goals.

Role of AI in molecular research

While a growing number of AI studies focus on the clinical practice of CRC, molecular research remains comparatively untouched. Notably, AI could address numerous issues here, including the growing interest in tumor mutational burden for immunotherapy in the oncology clinic. Currently, checkpoint blockade therapy, using anti-programmed cell death protein 1 (PD-1) and anti-PD-ligand 1 (PD-L1) agents, is one of the main avenues for improving the survival of cancer patients (Chan et al. 2019; Goodman et al. 2017). However, overall benefit remains difficult to achieve because of potential clinical and genomic heterogeneity. In fact, cancer itself is characterized by the accumulation of somatic mutations, which further highlights the role of tumor mutational burden in cancer treatment (Yarchoan et al. 2017). Of note, dramatic genetic alterations and variations have been found among individual cases, and tumor mutational burden is regarded as a significant biomarker for identifying immunotherapy-sensitive individuals (Goodman et al. 2017; Yarchoan et al. 2017). Promisingly, as the volume of genomic information grows rapidly, AI could accelerate the discovery of the knowledge and rules that underlie immunotherapy recommendations.
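Tumor mutational burden is commonly expressed as the number of somatic mutations per megabase of sequenced genome. The sketch below shows that arithmetic together with a simple high/low split. The cutoff of 10 mutations/Mb is only an illustrative default, and the function names and example panel size are assumptions, not values taken from the studies cited above.

```python
def tumor_mutational_burden(somatic_mutations: int, covered_bases: int) -> float:
    """Somatic mutations per megabase of sequenced territory."""
    if somatic_mutations < 0 or covered_bases <= 0:
        raise ValueError("mutation count must be >= 0 and coverage > 0")
    return somatic_mutations * 1_000_000 / covered_bases


def is_tmb_high(tmb: float, cutoff: float = 10.0) -> bool:
    """Flag a sample as TMB-high; the cutoff is an illustrative assumption."""
    return tmb >= cutoff


# Hypothetical samples sequenced on a 1.2 Mb gene panel.
tmb_a = tumor_mutational_burden(36, 1_200_000)
tmb_b = tumor_mutational_burden(6, 1_200_000)
print(tmb_a, is_tmb_high(tmb_a))
print(tmb_b, is_tmb_high(tmb_b))
```

A learned model could replace the fixed cutoff with a decision rule that also accounts for tumor type and other genomic features, which is the kind of rule discovery the paragraph above anticipates.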

Interestingly, Pham et al. studied P73 biomarker expression in rectal cancer using deep learning (Pham et al. 2019). Pairwise pattern comparison of P73 protein expression was carried out among normal mucosa, primary tumor and metastatic lymph node tissues using immunohistochemical slides. Color, edge and other morphological variables were extracted from the image data. Poor prognosis was observed in the group with relatively low dissimilarity between primary tumor and metastatic lymph node tissues (Pham et al. 2019). This novel application of machine learning extends AI to combined molecular and clinical analysis.

Implementation and limitations

Naturally, when dealing with new and exciting technologies, the question arises of when they can be fully implemented. With regard to AI and its implementation for daily medical use, this mainly depends on a few factors. Questions like “How valuable is it to incorporate AI into the diagnostic or treatment process?”, “Is it cost-effective to invest in this technology?” and “Is it appropriate for machines to diagnose and recommend treatments or is this a job solely for physicians?” are natural jumping-off points for establishing a basic understanding of how these tools should be used. Finding a fundamental approach to adding computer-aided diagnosis and understanding how much we should rely on the assistance of AI are key points that must be addressed. These, along with safety measures, must be carefully considered before true implementation becomes commonplace. At this point, establishing AI medical ethics guidelines seems the most basic first step. This would provide direction and guidance on when it is and is not appropriate to employ these technologies. A more unified and clear understanding of the rules and what they entail would mark an important step towards full use.

In addition to the steps needed to begin implementing this new technology, the data must be finely tuned and well understood before outright conclusions can be drawn from it. The main drawback of this technology is the lack of data pools large enough to support sound conclusions. Depending on the study, for example in studies of CT imaging and AI, the total number of input images ranges roughly from 10,000 to 40,000, which is plainly insufficient for the difficult task of producing definitive answers to treat or diagnose the population at large. Additionally, all images are gathered by the research parties themselves, except in studies that learn from databanks or information collectives. It seems preposterous and dangerous to allow life-altering decisions to be made with so little information. More data allows for better training and optimization. This requires an investment of time and money to build systems for better data collection, which in turn allow for better and more accurate decision making. As more institutions begin exploring this technology, the amount of data accrued by all will also increase. Public databanks for applicable information such as symptomatology, different imaging modalities or geographic distribution could be a great boon to researchers by giving them access to more data.

Another overlooked facet of application is general accessibility to the public. While this might not be an issue in certain countries, underdeveloped countries and those with fewer resources may not have access to this technology until it becomes more affordable. Ideally, as the technology becomes more common, its benefits can be felt by everyone and not just those in certain geographic areas. It is essential for the global health community that people in underdeveloped countries have access to technology that is tailored to the specific needs of their local communities, so that they can better treat and manage the pathologies in their area.

Conclusion

As with all relatively new forms of technology, incorporating AI into daily use takes time. Though the promise of artificial intelligence is exciting, its actual application remains in its infancy. Attempts to kick-start the application of these technologies are slowly moving in a good direction. It is very feasible that how we gather information, how we diagnose, and how we treat colorectal cancer will be dramatically enhanced by deep learning tools. Though methods for obtaining medical information may be contentious, there is little doubt that early detection of colorectal cancer will see a dramatic impact as soon as appropriate methods for collecting data can be found. Methods for finding maladies and associated patterns will also benefit greatly. Finally, the power of AI in therapeutic recommendation for colorectal cancer shows much promise in the clinical and translational fields of oncology, which means better and more personalized treatments for those in need.

Abbreviations

- AI: Artificial Intelligence

- ANN: Artificial Neural Networks

- CRC: Colorectal Cancer

- CNN: Convolutional Neural Network

- MP: Max Pooling

- RNN: Recurrent Neural Network

- WFO: Watson for Oncology

Author’s contribution

CY and EJH carried out literature and data analysis. CY and EJH drafted the manuscript. CY and EJH participated in study design and data collection. All authors read and approved the final manuscript.

Declaration

Conflict of interest

All authors declare no conflict of interest in this study.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Chaoran Yu, Email: chaoran_yu@yeah.net.

Ernest Johann Helwig, Email: ernjohelwig@gmail.com.

References

- Ali S, Zhou F, Braden B, et al. An objective comparison of detection and segmentation algorithms for artefacts in clinical endoscopy. Sci Rep. 2020;10:2748. doi: 10.1038/s41598-020-59413-5.

- Banerjee S, Singh SK, Chakraborty A, Das A, Bag R. Melanoma diagnosis using deep learning and fuzzy logic. Diagnostics (Basel, Switzerland) 2020;10(8):577. doi: 10.3390/diagnostics10080577.

- Biglarian A, Bakhshi E, Gohari MR, et al. Artificial neural network for prediction of distant metastasis in colorectal cancer. Asian Pac J Cancer Prev. 2012;13(3):927–930. doi: 10.7314/APJCP.2012.13.3.927.

- Borkowski AA, Wilson CP, Borkowski SA, Thomas LB, Deland LA, Grewe SJ, Mastorides SM. Comparing artificial intelligence platforms for histopathologic cancer diagnosis. Federal Practitioner: for the Health Care Professionals of the VA, DoD, and PHS. 2019;36(10):456–463.

- Chan TA, Yarchoan M, Jaffee E, et al. Development of tumor mutation burden as an immunotherapy biomarker: utility for the oncology clinic. Ann Oncol. 2019;30(1):44–56. doi: 10.1093/annonc/mdy495.

- Chan KCC, Wong AKC, Chiu DKY. Learning sequential patterns for probabilistic inductive prediction. IEEE Trans Syst Man Cybern. 1994;24(10):1532–1547. doi: 10.1109/21.310535.

- Chang TC, Seufert C, Eminaga O, et al. Current trends in artificial intelligence application for endourology and robotic surgery. Urol Clin. 2021;48(1):151–160. doi: 10.1016/j.ucl.2020.09.004.

- Chen JH, Asch SM. Machine learning and prediction in medicine—beyond the peak of inflated expectations. N Engl J Med. 2017;376(26):2507–2509. doi: 10.1056/NEJMp1702071.

- Chen PJ, Lin MC, Lai MJ, Lin JC, Lu HH, Tseng VS. Accurate classification of diminutive colorectal polyps using computer-aided analysis. Gastroenterology. 2018;154(3):568–575. doi: 10.1053/j.gastro.2017.10.010.

- Chen J, Remulla D, Nguyen JH, et al. Current status of artificial intelligence applications in urology and their potential to influence clinical practice. BJU Int. 2019;124(4):567–577. doi: 10.1111/bju.14852.

- Ciompi F, Geessink O, Bejnordi BE, De Souza GS, Baidoshvili A, Litjens G, van Ginneken B, Nagtegaal I, Van Der Laak J (2017) The importance of stain normalization in colorectal tissue classification with convolutional networks. In 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017), pp 160–163. IEEE.

- Deding U, Herp J, Havshoei AL et al (2020) Colon capsule endoscopy versus CT colonography after incomplete colonoscopy. Application of artificial intelligence algorithms to identify complete colonic investigations. United European Gastroenterol J 8(7):782–789.

- Denecke K. An ethical assessment model for digital disease detection technologies. Life Sci Society Policy. 2017;13(1):16. doi: 10.1186/s40504-017-0062-x.

- Dimitriou N, Arandjelović O, Harrison DJ, Caie PD. A principled machine learning framework improves accuracy of stage II colorectal cancer prognosis. NPJ Digital Med. 2018;1(1):1–9. doi: 10.1038/s41746-018-0057-x.

- Dion M, AbdelMalik P, Mawudeku A. Big data and the Global Public Health Intelligence Network (GPHIN). Can Commun Dis Rep. 2015;41(9):209–214. doi: 10.14745/ccdr.v41i09a02.

- Dodson S, Klassen KM, McDonald K, et al. HealthMap: a cluster randomised trial of interactive health plans and self-management support to prevent coronary heart disease in people with HIV. BMC Infect Dis. 2016;16:114. doi: 10.1186/s12879-016-1422-5.

- Eckmanns T, Füller H, Roberts SL. Digital epidemiology and global health security; an interdisciplinary conversation. Life Sci Soc Policy. 2019;15:2. doi: 10.1186/s40504-019-0091-8.

- Ferrari R, Mancini-Terracciano C, Voena C, et al. MR-based artificial intelligence model to assess response to therapy in locally advanced rectal cancer. Eur J Radiol. 2019;118:1–9. doi: 10.1016/j.ejrad.2019.06.013.

- Fire Administration US, Agency FEM. Fire in the United States 2008–2017. 20. MD: Emmitsburg; 2019.

- Germot A, Maftah A. POFUT1 and PLAGL2 gene pair linked by a bidirectional promoter: the two in one of tumour progression in colorectal cancer? EBioMedicine. 2019;46:25–26. doi: 10.1016/j.ebiom.2019.07.065.

- Ghani KR, Miller DC, Linsell S, et al. Measuring to improve: peer and crowd-sourced assessments of technical skill with robot-assisted radical prostatectomy. Eur Urol. 2016;69(4):547–550. doi: 10.1016/j.eururo.2015.11.028.

- Goldenberg MG, Goldenberg L, Grantcharov TP. Surgeon performance predicts early continence after robot-assisted radical prostatectomy. J Endourol. 2017;31(9):858–863. doi: 10.1089/end.2017.0284.

- González A, Zanin M, Menasalvas E. Public health and epidemiology informatics: can artificial intelligence help future global challenges? An overview of antimicrobial resistance and impact of climate change in disease epidemiology. Yearb Med Inform. 2019;28:224–231. doi: 10.1055/s-0039-1677910.

- Goodman AM, Kato S, Bazhenova L et al (2017) Tumor mutational burden as an independent predictor of response to immunotherapy in diverse cancers. Molecular Cancer Therapeutics 16(11):2598–2608.

- Guan J, Lim KS, Mekhail T, et al. Programmed death ligand-1 (PD-L1) expression in the programmed death receptor-1 (PD-1)/PD-L1 blockade: a key player against various cancers. Arch Pathol Lab Med. 2017;141(6):851–861. doi: 10.5858/arpa.2016-0361-RA.

- Han J (2012) Data mining: Concepts and techniques, 3rd edn. Morgan Kaufmann Publishers, Waltham

- Hand D, Mannila H, Smyth P (2001) Principles of data mining

- Hii A, Chughtai AA, Housen T, et al. Epidemic intelligence needs of stakeholders in the Asia-Pacific region. Western Pac Surveill Response J. 2018;9(4):28–36. doi: 10.5365/wpsar.2018.9.2.009.

- Hilario M, Kalousis A. Approaches to dimensionality reduction in proteomic biomarker studies. Brief Bioinform. 2008;9(2):102–118. doi: 10.1093/bib/bbn005.

- Huang S, Jie Y, Fong S, Zhao Qi (2019) Artificial intelligence in cancer diagnosis and prognosis: Opportunities and challenges. Cancer Lett 471. doi: 10.1016/j.canlet.2019.12.007.

- Hung AJ, Chen J, Che Z, et al. Utilizing machine learning and automated performance metrics to evaluate robot-assisted radical prostatectomy performance and predict outcomes. J Endourol. 2018;32(5):438–444. doi: 10.1089/end.2018.0035.

- Hung AJ, Chen J, Jarc A, et al. Development and validation of objective performance metrics for robot-assisted radical prostatectomy: a pilot study. J Urol. 2018;199(1):296–304. doi: 10.1016/j.juro.2017.07.081.

- Hung AJ, Chen J, Gill IS. Automated performance metrics and machine learning algorithms to measure surgeon performance and anticipate clinical outcomes in robotic surgery. JAMA Surg. 2018;153(8):770–771. doi: 10.1001/jamasurg.2018.1512.

- Hung AJ, Chen J, Ghodoussipour S et al (2019) A deep-learning model using automated performance metrics and clinical features to predict urinary continence recovery after robot-assisted radical prostatectomy. BJU Int 124(3):487–495.

- Ichimasa K, Kudo SE, Mori Y et al (2018) Artificial intelligence may help in predicting the need for additional surgery after endoscopic resection of T1 colorectal cancer [published correction appears in Endoscopy. 50(3):C2]. Endoscopy 50(3):230–240.

- Iizuka O, Kanavati F, Kato K, et al. Deep learning models for histopathological classification of gastric and colonic epithelial tumours. Sci Rep. 2020;10:1504. doi: 10.1038/s41598-020-58467-9.

- Janowicz K, Gao S, McKenzie G, Hu Y, Bhaduri B (2020) GeoAI: Spatially Explicit Artificial Intelligence Techniques for Geographic Knowledge Discovery and Beyond. Int J Geogr Inf Sci 1–13. 10.1080/13658816.2019.1684500

- Kalis B, Collier M, Fu R (2018) 10 promising AI applications in health care. Harvard Business Review.

- Kamel Boulos MN, Peng G, Vo Pham T. An overview of GeoAI applications in health and healthcare. Int J Health Geogr. 2019;18(1):7. doi: 10.1186/s12942-019-0171-2.

- Kamel Boulos MN, Le Blond J. On the road to personalized and precision geomedicine: medical geology and a renewed call for interdisciplinarity. Int J Health Geogr. 2016;15:5. doi: 10.1186/s12942-016-0033-0.

- Kayalibay B, Jensen G, van der Smagt P (2017) CNN-based segmentation of medical imaging data. arXiv preprint arXiv:1701.03056.

- Khoshemehr Gh, Asadzadeh, Bahadori H (2019) Predictive models of the dominant period of site using Artificial Neural Network and Microtremor Measurements: Application to Urmia, Iran. Int J Optim Civil Eng 9(3):395–410, http://ijoce.iust.ac.ir/article-1-397-en.pdf.

- Kickingereder P, Isensee F, Tursunova I, et al. Automated quantitative tumour response assessment of MRI in neuro-oncology with artificial neural networks: a multicentre, retrospective study. Lancet Oncol. 2019;20(5):728–740. doi: 10.1016/S1470-2045(19)30098-1.

- Komeda Y, Handa H, Watanabe T, Nomura T, Kitahashi M, Sakurai T, Okamoto A, Minami T, Kono M, Arizumi T, Takenaka M, Hagiwara S, Matsui S, Nishida N, Kashida H, Kudo M. Computer-aided diagnosis based on Convolutional Neural Network System for Colorectal Polyp Classification: preliminary experience. Oncology. 2017;93(suppl 1):30–34. doi: 10.1159/000481227.

- Kostkova P. Disease surveillance data sharing for public health: the next ethical frontiers. Life Sci Soc Policy. 2018;14(1):16. doi: 10.1186/s40504-018-0078-x.

- Kudo SE, Mori Y, Misawa M, Takeda K, Kudo T, Itoh H, Oda M, Mori K. Artificial intelligence and colonoscopy: current status and future perspectives. Dig Endosc. 2019;31(4):363–371. doi: 10.1111/den.13340.

- Kudo SE, Misawa M, Mori Y, et al. Artificial intelligence-assisted system improves endoscopic identification of colorectal neoplasms. Clin Gastroenterol Hepatol. 2020;18(8):1874–1881.e2. doi: 10.1016/j.cgh.2019.09.009.

- Kuipers EJ, Grady WM, Lieberman D, Seufferlein T, Sung JJ, Boelens PG, van de Velde CJ, Watanabe T. Colorectal cancer. Nat Rev Dis Primers. 2015;1:15065. doi: 10.1038/nrdp.2015.65.

- Kweon SS. Updates on cancer epidemiology in Korea, 2018. Chonnam Med J. 2018;54(2):90–100. doi: 10.4068/cmj.2018.54.2.90.

- Lai LL, Blakely A, Invernizzi M et al (2021) Separation of color channels from conventional colonoscopy images improves deep neural network detection of polyps. J Biomed Opt 26(1):015001.

- Law H, Ghani K, Deng J (2017) Surgeon technical skill assessment using computer vision based analysis. In: Machine Learning for Healthcare Conference. PMLR, pp 88–99.

- Le J (2018) The 8 Neural Network Architectures Machine Learning Researchers Need to Learn. Retrieved February 15, 2021, from https://www.kdnuggets.com/2018/02/8-neural-network-architectures-machine-learning-researchers-need-learn.html

- LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539.

- Li M, Shen S, Gao W, Hsu W, Cong J (2018) Computed Tomography Image Enhancement Using 3D Convolutional Neural Network: 4th International Workshop, DLMIA 2018, and 8th International Workshop, ML-CDS 2018, Held in Conjunction with MICCAI 2018, Granada, Spain, September 20, 2018, Proceedings. 10.1007/978-3-030-00889-5_33.

- Lisboa PJ, Taktak AFG (2006) The use of artificial neural networks in decision support in cancer: a systematic review. Neural Netw 19(4):408–415.

- Liu J, Pan Y, Li M, Chen Z, Tang L, Lu C, Wang J (2018). Applications of deep learning to MRI images: a survey.

- Liu F, Jang H, Kijowski R, Bradshaw T, McMillan AB. Deep learning MR imaging–based attenuation correction for PET/MR imaging. Radiology. 2018;286(2):676–684. doi: 10.1148/radiol.2017170700.

- Lundervold AS, Lundervold A. An overview of deep learning in medical imaging focusing on MRI. Zeitschrift für Medizinische Physik. 2019;29(2):102–127. doi: 10.1016/j.zemedi.2018.11.002.

- Marley AR, Nan H. Epidemiology of colorectal cancer. Int J Molecular Epidemiol Genet. 2016;7(3):105.

- Martínez-Romero M, Vázquez-Naya JM, Rabunal RJ, et al. Artificial intelligence techniques for colorectal cancer drug metabolism: ontologies and complex networks. Curr Drug Metab. 2010;11(4):347–368. doi: 10.2174/138920010791514289.

- Mayo RM, Summey JF, Williams JE, et al. Qualitative study of oncologists' views on the CancerLinQ rapid learning system. J Oncol Pract. 2017;13:e176–e184. doi: 10.1200/JOP.2016.016816.

- Meng S, Zheng Y, Su R et al (2020) Artificial intelligence assisted standard white light endoscopy accurately characters early colorectal cancer: a multicenter diagnostic study.

- Misawa M, Kudo SE, Mori Y, Cho T, Kataoka S, Yamauchi A, Nakamura H. Artificial intelligence-assisted polyp detection for colonoscopy: initial experience. Gastroenterology. 2018;154(8):2027–2029. doi: 10.1053/j.gastro.2018.04.003.

- Mori Y, Kudo SE, Berzin TM, Misawa M, Takeda K. Computer-aided diagnosis for colonoscopy. Endoscopy. 2017;49(8):813–819. doi: 10.1055/s-0043-109430.

- Mori Y, Kudo SE, Misawa M, et al. Real-time use of artificial intelligence in identification of diminutive polyps during colonoscopy: a prospective study. Ann Intern Med. 2018;169(6):357–366. doi: 10.7326/M18-0249.

- Mori Y, Kudo SE, Misawa M, Mori K. Simultaneous detection and characterization of diminutive polyps with the use of artificial intelligence during colonoscopy. VideoGIE. 2019;4(1):7. doi: 10.1016/j.vgie.2018.10.006.

- Murphy KP (2012) Machine learning: a probabilistic perspective. The MIT Press, Cambridge.

- Nakajima Y, Zhu X, Nemoto D et al (2020) Diagnostic performance of artificial intelligence to identify deeply invasive colorectal cancer on non-magnified plain endoscopic images. Endosc Int Open 8(10):E1341–E1348.

- Nguyen DT, Lee MB, Pham TD, Batchuluun G, Arsalan M, Park KR. Enhanced image-based endoscopic pathological site classification using an ensemble of deep learning models. Sensors (Basel) 2020;20(21):5982. doi: 10.3390/s20215982.

- O'Shea K, Nash R (2015). An introduction to convolutional neural networks. arXiv preprint arXiv:1511.08458.

- Pesapane F, Codari M, Sardanelli F. Artificial intelligence in medical imaging: threat or opportunity? Radiologists again at the forefront of innovation in medicine. Euro Radiol Exp. 2018;2(1):35. doi: 10.1186/s41747-018-0061-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pham T D, Fan C, Zhang H et al (2019) Deep learning Of P73 biomarker expression in rectal cancer patients//2019 International Joint Conference on Neural Networks (IJCNN). IEEE, New York, pp 1–8.

- Qureshi SA, Rehman AS, Qamar AM, Kamal A, Rehman A (2013) Telecommunication subscribers' churn prediction model using machine learning. In: Eighth International Conference on Digital Information Management (ICDIM 2013), Islamabad, 2013, pp 131–136.

- Rao M, Valentini D, Dodoo E, et al. Anti-PD-1/PD-L1 therapy for infectious diseases: learning from the cancer paradigm. Int J Infect Dis. 2017;56:221–228. doi: 10.1016/j.ijid.2017.01.028. [DOI] [PubMed] [Google Scholar]

- Repici A, Badalamenti M, Maselli R, et al. Efficacy of real-time computer-aided detection of colorectal neoplasia in a randomized trial. Gastroenterology. 2020;159(2):512–520.e7. doi: 10.1053/j.gastro.2020.04.062. [DOI] [PubMed] [Google Scholar]

- Ribeiro E, Uhl A, Häfner M (2016) Colonic polyp classification with convolutional neural networks. In: 2016 IEEE 29th International Symposium on Computer-Based Medical Systems (CBMS), pp 253–258. IEEE, New York.

- Rigano C (2018) Using artificial intelligence to address criminal justice needs. https://nij.ojp.gov/topics/articles/using-artificial-intelligence-address-criminal-justice-needs

- Russell SJ, Dewey D, Tegmark M (2015) Research priorities for robust and beneficial artificial intelligence. AI Magazine, 36.

- Sadilek A, Caty S, DiPrete L et al (2018) Machine-learned epidemiology: real-time detection of foodborne illness at scale. Digital Med 1:36. doi: 10.1038/s41746-018-0045-1.

- Sakr GE et al (2010) Artificial intelligence for forest fire prediction. In: 2010 IEEE/ASME International Conference on Advanced Intelligent Mechatronics, 6 July 2010. doi: 10.1109/aim.2010.5695809.

- Salathé M. Digital epidemiology: what is it, and where is it going? Life Sci Soc Policy. 2018;14(1):1. doi: 10.1186/s40504-017-0065-7.

- Salathé M, Bengtsson L, Bodnar TJ, Brewer DD, Brownstein JS, Buckee C, et al. Digital epidemiology. PLOS Comput Biol. 2012;8(7):e1002616. doi: 10.1371/journal.pcbi.1002616.

- Sallab A, Abdou M, Perot E, Yogamani S. Deep reinforcement learning framework for autonomous driving. Electronic Imaging. 2017;2017(19):70–76. doi: 10.2352/issn.2470-1173.2017.19.avm-023.

- Schöllhorn WI. Applications of artificial neural nets in clinical biomechanics. Clin Biomech. 2004;19(9):876–898. doi: 10.1016/j.clinbiomech.2004.04.005.

- Schroerlucke SR, Wang MY, Cannestra AF, et al. Complication rate in robotic-guided vs fluoro-guided minimally invasive spinal fusion surgery: report from MIS refresh prospective comparative study. Spine J. 2017;17(10):S254–S255. doi: 10.1016/j.spinee.2017.08.177.

- Seroussi B, Bouaud J. Using OncoDoc as a computer-based eligibility screening system to improve accrual onto breast cancer clinical trials. Artif Intell Med. 2003;29:153–167. doi: 10.1016/s0933-3657(03)00040-x.

- Shan H, Padole A, Homayounieh F, et al. Competitive performance of a modularized deep neural network compared to commercial algorithms for low-dose CT image reconstruction. Nat Mach Intell. 2019;1:269–276. doi: 10.1038/s42256-019-0057-9.

- Sharma N, Aggarwal LM. Automated medical image segmentation techniques. J Med Phys. 2010;35(1):3–14. doi: 10.4103/0971-6203.58777.

- Siegel RL, Miller KD, Jemal A (2016) Cancer statistics, 2016. CA Cancer J Clin 66:7–30. doi: 10.3322/caac.21332.

- Somashekhar SP, Kumar R, Rauthan A et al (2017) Abstract S6–07: Double blinded validation study to assess performance of IBM artificial intelligence platform, Watson for oncology in comparison with Manipal multidisciplinary tumour board–First study of 638 breast cancer cases.

- Somashekhar SP, Sepúlveda MJ, Norden AD et al (2017) Early experience with IBM Watson for Oncology (WFO) cognitive computing system for lung and colorectal cancer treatment

- Somashekhar SP, Sepúlveda MJ, Puglielli S, et al. Watson for Oncology and breast cancer treatment recommendations: agreement with an expert multidisciplinary tumor board. Ann Oncol. 2018;29(2):418–423. doi: 10.1093/annonc/mdx781.

- Tajbakhsh N, Shin JY, Gurudu SR, Hurst RT, Kendall CB, Gotway MB, Liang J. Convolutional neural networks for medical image analysis: Full training or fine tuning? IEEE Trans Med Imaging. 2016;35(5):1299–1312. doi: 10.1109/TMI.2016.2535302.

- Tarkoma S, Alghnam S, Howell MD (2020) Fighting pandemics with digital epidemiology. EClinicalMedicine. 26:100512.

- Thiébaut R, Cossin S. Artificial Intelligence for Surveillance in Public Health. IMIA Yearbook of Medical Informatics. 2019;2019(28):232–234. doi: 10.1055/s-0039-1677939.

- Thiébaut R, Thiessard F. Artificial Intelligence in Public Health and Epidemiology. IMIA Yearbook of Medical Informatics. 2018;2018(27):207–210. doi: 10.1055/s-0038-1667082.

- Thompson R, Valdes G, Fuller C, Carpenter C, Aneja S, Lindsay W, Aerts H, Agrimson B, Deville C, Rosenthal S, Yu J, Thomas C (2018) Artificial intelligence in radiation oncology: A specialty-wide disruptive transformation? Radiother Oncol 129. doi: 10.1016/j.radonc.2018.05.030.

- Topol E (2019) High-performance medicine: the convergence of human and artificial intelligence. Nat Med 25. doi: 10.1038/s41591-018-0300-7.

- Velasco E. Disease detection, epidemiology and outbreak response: the digital future of public health practice. Life Sci Soc Policy. 2018;14(1):7. doi: 10.1186/s40504-018-0071-4.

- VoPham T, Hart JE, Laden F, et al. Emerging trends in geospatial artificial intelligence (geoAI): potential applications for environmental epidemiology. Environ Health. 2018;17:40. doi: 10.1186/s12940-018-0386-x.

- Wu J (2017) Introduction to convolutional neural networks. National Key Lab for Novel Software Technology. Nanjing University. China, 5, 23.

- Yamada M, Saito Y, Imaoka H, et al. Development of a real-time endoscopic image diagnosis support system using deep learning technology in colonoscopy. Sci Rep. 2019;9(1):14465. doi: 10.1038/s41598-019-50567-5.

- Yamashita R, Nishio M, Do RKG, Togashi K. Convolutional neural networks: an overview and application in radiology. Insights Imaging. 2018;9(4):611–629. doi: 10.1007/s13244-018-0639-9.

- Yang SY, Roh KH, Kim YN, et al. Surgical outcomes after open, laparoscopic, and robotic gastrectomy for gastric cancer. Ann Surg Oncol. 2017;24(7):1770–1777. doi: 10.1245/s10434-017-5851-1.

- Yarchoan M, Hopkins A, Jaffee EM. Tumor mutational burden and response rate to PD-1 inhibition. N Engl J Med. 2017;377(25):2500. doi: 10.1056/NEJMc1713444.

- Yin M, Ma J, Xu J, et al. Use of artificial neural networks to identify the predictive factors of extracorporeal shock wave therapy treating patients with chronic plantar fasciitis. Sci Rep. 2019;9(1):1–8. doi: 10.1038/s41598-019-39026-3.

- Yuheng S, Hao Y (2017) Image segmentation algorithms overview. arXiv preprint arXiv:1707.02051.

- Zhang YY, Xie D. Detection and segmentation of multi-class artifacts in endoscopy. J Zhejiang Univ-Sci B. 2019;20(12):1014–1020. doi: 10.1631/jzus.B1900340.

- Zheng X, et al. Detecting comma-shaped clouds for severe weather forecasting using shape and motion. IEEE Trans Geosci Remote Sens. 2019;57(6):3788–3801. doi: 10.1109/TGRS.2018.2887206.

- Zhou N, Zhang CT, Lv HY, et al. Concordance study between IBM Watson for Oncology and clinical practice for patients with cancer in China. Oncologist. 2019;24(6):812–819. doi: 10.1634/theoncologist.2018-0255.

- Zia A, Hung A, Essa I et al (2018) Surgical activity recognition in robot-assisted radical prostatectomy using deep learning. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, Cham, pp 273–280.

- Zia A, Guo L, Zhou L, et al. Novel evaluation of surgical activity recognition models using task-based efficiency metrics. Int J Comput Assist Radiol Surg. 2019;14(12):2155–2163. doi: 10.1007/s11548-019-02025-w.