Abstract

Multisensory integration research has allowed us to better understand how humans integrate sensory information to produce a unitary experience of the external world. However, this field is often challenged by the limited ability to deliver and control sensory stimuli, especially when going beyond audio–visual events and outside laboratory settings. In this review, we examine the scope and challenges of new technology in the study of multisensory integration in a world that is increasingly characterized as a fusion of physical and digital/virtual events. We discuss multisensory integration research through the lens of novel multisensory technologies and, thus, bring research in human–computer interaction, experimental psychology, and neuroscience closer together. Today, for instance, displays have become volumetric so that visual content is no longer limited to 2D screens, new haptic devices enable tactile stimulation without physical contact, olfactory interfaces provide users with smells precisely synchronized with events in virtual environments, and novel gustatory interfaces enable taste perception through levitating stimuli. These technological advances offer new ways to control and deliver sensory stimulation for multisensory integration research beyond traditional laboratory settings and open up new experimentations in naturally occurring events in everyday life experiences. Our review then summarizes these multisensory technologies and discusses initial insights to introduce a bridge between the disciplines in order to advance the study of multisensory integration.

Keywords: multisensory integration, human–computer interaction, multisensory technology, interaction techniques, sensory stimulation

Introduction

We perceive the world through multiple senses by collecting different sensory cues that are integrated or segregated in our brain to interact with our environment (Shams and Beierholm, 2011). Integrating information across the senses is key to perception and action and influences a wide range of behavioral outcomes, including detection (Lovelace et al., 2003), localization (Nelson et al., 1998), and, more broadly, reaction times (Diederich and Colonius, 2004; Stein, 2012). Advancing the study of multisensory integration helps us to understand the organization of sensory systems, and in applied contexts, to conceive markers (based on deficits in integration) of disorders, such as autism spectrum disorder (Feldman et al., 2018) and schizophrenia (Williams et al., 2010). This, in turn, demonstrates the importance of assessing and quantifying multisensory integration (Stevenson et al., 2014).

Many studies have been conducted to quantify multisensory integration. However, several challenges are highlighted in the literature (Stein et al., 2009; Stevenson et al., 2014; Colonius and Diederich, 2017, 2020). One of the most notable challenges is the need to control timing, spatial location, and sensory quality and quantity during stimulus delivery (Spence et al., 2001). Another challenge is the complexity of studying integration involving the chemical senses (smell and taste). Many studies rely on audio–visual interactions (Stein, 2012; Noel et al., 2018) because, among other reasons, the technology to deliver audio–visual stimuli is relatively well-established and widely available (e.g., screens, headphones). Emerging multisensory technologies from computer science, engineering, and human–computer interaction (HCI) enable new ways to stimulate, replicate, and control sensory signals (touch, taste, and smell). Therefore, they could expand the possibilities for multisensory integration research. However, due to their recent emergence and rapid development, their potential to do so might be overlooked or underexplored.

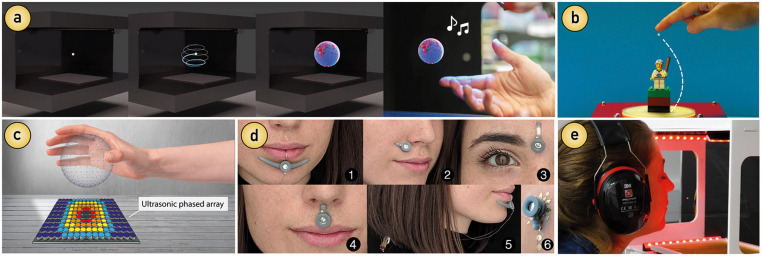

For example, as shown in Figure 1, acoustic levitation techniques are employed to display visual content (that can also be heard and felt), addressing the common limitations of 2D screens and stereoscopic displays typically employed to deliver visual stimuli (Figure 1a). Acoustic metamaterials are used to “bend” the sound so that auditory stimuli can be directed from a static source to a specific location while, at the same time, providing tactile sensations (Figure 1b). Moreover, it is now also possible to control and deliver tactile sensations to the skin without the need for additional attachments (e.g., gloves or physical actuators) using focused ultrasound (Figure 1c). With regard to smell and taste stimulation, we are seeing growing efforts to develop more flexible and portable solutions whose capabilities differ from those of established laboratory equipment, such as gustatometers and olfactometers. Importantly, emerging olfactory displays and smell-delivery technologies are becoming smaller, wearable, and more modular, enabling less invasive stimulation within and outside laboratory settings (Figure 1d). Similarly, novel gustatory stimulation approaches are emerging, such as taste levitation systems that exploit the principles of acoustics to deliver precisely controllable taste stimuli to the user’s tongue (Figure 1e).

FIGURE 1.

Emerging multisensory technologies exemplifying interface and device advancements. (a) Particle-based volumetric displays (Hirayama et al., 2019). (b) Acoustic metamaterials that bend the sound (Norasikin et al., 2018). (c) Mid-air haptic 3D shapes produced by focused ultrasound (Carter et al., 2013). (d) On-face olfactory interfaces (Wang et al., 2020). (e) Gustatory experiences based on levitated food (Vi et al., 2020).

In this review, we discuss the potential of these new and emerging multisensory technologies to expand the study of multisensory integration by examining opportunities to facilitate the control and manipulation of sensory stimuli beyond traditional methods and paradigms. The set of novel digital interfaces and devices that we review exemplifies technological advances in multisensory stimulation and their associated opportunities and limitations for research on multisensory integration. The ultimate aim of this review is to introduce a bridge between disciplines and encourage future development and collaboration between the engineers developing the technologies and scientists from psychology and neuroscience studying multisensory integration.

We close our review with a reflection on the growing multisensory human–computer integration symbiosis, in which technology becomes an integral part of everyday life and activities. This fast-growing integration raises a range of ethical questions and considerations regarding shared responsibility between humans and systems. One highly important question is related to the sense of agency (SoA), often referred to as the feeling of being in control (Haggard, 2017). We live in an increasingly digital world in which intelligent algorithms (e.g., autonomous systems and autocomplete predictors) assist us and influence our behavior. We are therefore not always aware of the extent to which technology makes decisions for us, which raises the question: who is in control now? While emerging technology can provide further multisensory signals to promote a SoA (“I am the one who is acting”), further discussion is needed in light of the rapid development of artificial intelligence systems. This review also aims to promote further discussion and reflection upon the role of the SoA and other relevant questions that emerge through the relationship between the senses and technology (Velasco and Obrist, 2020).

Expanding Multisensory Integration: Current Tools/Methods and Emerging Technology

Studying how multiple sources of sensory information are integrated into a unified percept, often referred to as the “unity assumption” (Chen and Spence, 2017), has been a subject of intense research for many years. Studies employ different perspectives to explore multisensory integration. For example, some use weighted linear combination theories consisting of linear sums of unimodal sensory signals, wherein certain sensory modalities become more dominant than others to produce a unified perception (Ernst and Banks, 2002; Ernst and Bülthoff, 2004). Others explore sensory integration at the level of a single neuron (Stanford and Stein, 2007; Stein and Stanford, 2008) and explain the integration of sensory information through neural circuitry.
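As an illustration of the weighted linear combination mentioned above, the maximum-likelihood form commonly associated with Ernst and Banks (2002) can be written as follows. The symbols are introduced here for illustration only: $\hat{S}_i$ is the estimate from modality $i$, $\sigma_i^2$ its noise variance, and the cues are assumed to be independent with Gaussian noise.

$$
\hat{S} = \sum_i w_i \hat{S}_i, \qquad
w_i = \frac{1/\sigma_i^2}{\sum_j 1/\sigma_j^2}, \qquad
\sigma_{\hat{S}}^2 = \frac{1}{\sum_j 1/\sigma_j^2}
$$

Under these assumptions, the modality with the lowest variance receives the largest weight, and the variance of the combined estimate never exceeds that of the most reliable single cue.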

One common aspect to the study of multisensory integration is the need for a carefully controlled stimulus delivery. Computational and psychophysical studies must precisely present subjects with multisensory cues that have carefully controlled properties. Many such studies build on the modulation of the reliability of sensory cues to weigh the influence of individual sensory modalities (Ernst and Banks, 2002; Fetsch et al., 2012). For example, in a visuo–tactile task (e.g., size estimation of an object), I rely on my vision and touch to estimate the size of the object. Then, to examine how both of my senses are integrated, the researchers modify the reliability of the sensory information I perceived from the object. They might alter the clarity of my vision (e.g., by means of a special screen) or my perceived object size (e.g., by means of a shape-changing object). This modulation implies changing and varying the stimulus properties (Burns and Blohm, 2010; Parise and Ernst, 2017) requiring precise computer-controlled delivery. However, it has been suggested that many behavioral studies on multisensory integration rely on “century-old measures of behavior—task accuracy and latency” (Razavi et al., 2020) and are commonly constrained by in-laboratory and desktop-based settings (Wrzus and Mehl, 2015).
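The reliability manipulation described above can be sketched numerically. The following is a minimal illustration, assuming independent Gaussian cues combined by inverse-variance (maximum-likelihood) weighting; the function name `combine_cues` and all numerical values are hypothetical and are not taken from any of the cited studies.

```python
def combine_cues(estimates, sigmas):
    """Inverse-variance weighted combination of independent Gaussian cues.

    estimates: single-cue estimates (e.g., object size in cm)
    sigmas:    standard deviation (noise) of each cue
    Returns (combined estimate, combined sigma, per-cue weights).
    """
    reliabilities = [1.0 / s ** 2 for s in sigmas]
    total = sum(reliabilities)
    weights = [r / total for r in reliabilities]
    combined = sum(w * e for w, e in zip(weights, estimates))
    combined_sigma = (1.0 / total) ** 0.5
    return combined, combined_sigma, weights


# Hypothetical size-estimation trial: vision reports 5.0 cm, touch reports 6.0 cm.
# Condition 1: clear vision (low visual noise).
clear = combine_cues([5.0, 6.0], [0.5, 1.0])
# Condition 2: degraded vision (visual noise increased, touch unchanged).
blurred = combine_cues([5.0, 6.0], [2.0, 1.0])

for label, (size, sigma, weights) in (("clear", clear), ("blurred", blurred)):
    print(f"{label}: size={size:.2f} cm, sigma={sigma:.2f}, "
          f"visual weight={weights[0]:.2f}")
```

In this sketch, degrading the visual cue shifts the combined estimate towards the haptic one, which is the pattern such experiments look for when they vary stimulus reliability.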

In the following sections, we present an overview of current challenges that can be overcome in light of new and emerging multisensory technologies. We particularly focus on technologies that illustrate the kinds of novel devices and methods emerging from HCI, which provide new functionalities for studying the human senses and which have not been used in multisensory integration research, although they can be of great interest and help in such research. Accordingly, we review novel, recently emerging technology that (1) is claimed to be multisensory/multimodal, (2) can be easily integrated with other multisensory technology, (3) allows naturalistic environments beyond laboratory settings, or (4) enables a move from physical to digital interactions.

We live in a time in which technology is ubiquitous, which means that delivering, measuring, and assessing multisensory signals in daily life can be facilitated. We have selected these particular technologies to highlight their potential to advance the study of multisensory integration not only by offering precision and controllability but also by enabling more natural study environments beyond desktop-based experiments. With this focus, we aim to examine opportunities that permit studies to take place over time (e.g., longitudinal studies) or outside a laboratory (e.g., at home) while still being precise. In the following sections, we describe the representative technological advancements for each of the main senses: vision, audition, touch, olfaction, and gustation (summarized in Table 1). We present a separate section for each of those senses to give focused information to readers with a particular interest (e.g., researchers interested in new olfactory technologies). In each section, we first introduce the emerging technology and its benefits for the individual sensory modality, then discuss and exemplify how it can aid multisensory integration research, and finally highlight how it can be integrated dynamically into multisensory paradigms, i.e., by capitalizing on the different technologies as modules to conduct studies involving multiple senses.

TABLE 1.

Key properties of emerging multisensory technologies for each sensory modality.

| Sensory modality | Emerging technology | Key stimulation opportunities | Multisensory integration flexibility | Main advantages for multisensory experiments | Primary reference |
| --- | --- | --- | --- | --- | --- |
| Vision | Volumetric displays | Depth | Visual content can be heard and felt simultaneously | No need for head-mounted displays while giving 3D cues in the real world | Hirayama et al., 2019 |
| Audition | Acoustic lenses and metamaterials | Directionality | Audio signals can be felt and used to levitate and direct objects that create visual displays | Enables directional sound stimulation while giving freedom to navigate | Norasikin et al., 2018, 2019 |
| Touch | Focused ultrasound | Un-instrumented 3D tactile sensations | The sound waves used to produce tactile sensation can also be heard and easily integrated into visual paradigms (e.g., virtual reality) | No need for physical actuators; opens opportunities to study the integration of mid-air touch with other senses | Carter et al., 2013; Martinez Plasencia et al., 2020 |
| Smell | Wearable smell technology | Portable and body-responsive | Its portability makes it easily integrated into other multisensory technologies | On-demand delivery outside a laboratory setting, enabling daily life testing and longitudinal studies | Amores et al., 2018; Wang et al., 2020 |
| Taste | Levitated food | Sterile, un-instrumented | Integration of levitated food with visual, olfactory, auditory, and tactile stimuli | Delivers actual food (multiple morsels) simultaneously in 3D, enabling the manipulation of food’s trajectories | Vi et al., 2017b, 2020 |

Visual Stimulation Beyond the Screen

In the well-studied audio–visual integration space, visual information is modulated by altering the frequency or localization of seen and heard stimuli (Rohe and Noppeney, 2018), often by employing the established McGurk paradigm (Gentilucci and Cattaneo, 2005). In another example, for visuo–haptic integration studies, visual information is modulated in size estimation or identification tasks through the manipulation of an object’s physical shape (Yalachkov et al., 2015) or the alteration of digital images through augmented (Rosa et al., 2016) and virtual reality (VR) headsets (Noccaro et al., 2020).

For these studies, visual stimulus presentation is typically limited to 2D screens that show visual cues (static or in movement) in a two-dimensional space. While high-frequency 2D screens offer good image presentation quality and low latency, they are still limited to 2D content, thus constraining depth perception. The stereoscopic displays used in VR headsets offer great advantages for 3D content visualization and full-body immersion, also allowing the study of visuo-vestibular and proprioceptive signals (Gallagher and Ferrè, 2018; Kim et al., 2020) and even visuo–gustatory interactions (Huang et al., 2019). However, it has been suggested that stereoscopic displays typically used in VR have disadvantages for psychology experiments. For example, people tend to consistently underestimate the size of the environment and their distance to objects (Wilson and Soranzo, 2015) even when motion parallax and stereoscopic depth cues are provided to the observer (Piryankova et al., 2013). This can be limiting for spatial tasks (e.g., in visuo–tactile interactions). Additionally, immersion in VR can cause cybersickness due to the brain receiving conflicting signals about the user’s position and its relation to the movement observed in the virtual environment (Gallagher and Ferrè, 2018).

The aforesaid challenges could be overcome through novel visual display technologies, such as advances in particle-based volumetric displays (PBDs) (Smalley et al., 2018). These displays provide a benefit over traditional 2D screens since they are not limited by two-dimensional content. PBDs show 3D images in mid-air, thus allowing depth perception, which could be integrated into traditional experimental paradigms, such as depth discrimination tasks (Deneve and Pouget, 2004; Rosa et al., 2016). Furthermore, PBDs also offer a benefit over VR headsets as these novel displays do not require wearing of a head-mounted display (HMD). That is, the user is not brought to a virtual world, but the 3D content is shown in the real world, avoiding cybersickness and the size and distance underestimations typical when using stereoscopic displays, while also avoiding user instrumentation.

These PBDs allow the creation of 3D visualizations by freely moving a particle in 3D space at such a high speed (e.g., ∼8 m/s) that visual content is revealed using the persistence of vision (POV) effect (Hardy, 1920), i.e., when an image is perceived as a whole by the human eye due to rapid movement succession (see Figure 1a). Particularly, the class of PBDs that uses acoustophoresis (Hirayama et al., 2019; Martinez Plasencia et al., 2020) is able to deliver visual stimuli that can be felt and heard simultaneously (spatially overlapping). For this reason, this technology is called a multimodal acoustic trap display (MATD) (see Figure 1a).

To produce an image that exists in real 3D space, the MATD uses sound waves (emitted from an array of speakers) to trap a lightweight particle (a polystyrene bead) in free space, which is called acoustic levitation. The position of this particle is updated at a very high update rate so that the POV effect occurs, and the observer perceives it as a full object. Since the particle is updated at such a high speed, the display can create audio (any sound that you could play with a traditional speaker) and tactile feedback (a gentle sensation of touch coming from the display) simultaneously. Since this new volumetric display technology offers multisensory stimulation, it could enable the study of multisensory integration beyond pairs of senses (e.g., visual, auditory, and haptic tasks), as it offers the flexibility to deliver and precisely control visual content alongside tactile stimuli and sound within the same setup. Therefore, this technology could be used in studies exploring multisensory distractor processing, where sensory targets and distractors often need to be placed and presented from the same location (Merz et al., 2019).

The spatio-temporal features of these displays can be taken into account when designing future multisensory integration experiments in which they replace 2D screens or HMDs. For instance, the MATD proposed by Hirayama et al. (2019) has two refresh rates, one for particle position and one for rendered images. The particle position refresh rate is ∼10 kHz, so each update of the particle’s position in 3D space takes ∼0.1 ms. Each image rendered with the MATD is composed of many updates of a single particle. The image refresh rate is ∼10 Hz, so a 3D image takes ∼100 ms to be fully rendered. The particles that this display can levitate and accelerate have a diameter between ∼1 mm and ∼2 mm. The size of the rendered images is ∼10 cm³, and the particle reaches a maximum velocity of ∼8 m/s. For instance, a sphere 2 cm in diameter takes ∼100 ms to be fully rendered using a single particle. When an image is rendered in ≤100 ms, it falls within POV time, i.e., a single object moving along a trajectory is perceived as a whole image and the human eye sees it without flicker.
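The relationship between these figures can be made explicit with a little arithmetic. The sketch below uses only the approximate values quoted above (∼10 kHz, ∼10 Hz, ∼8 m/s); the variable and function names are hypothetical, and the path-length figure is an upper bound that ignores the particle’s acceleration limits.

```python
# Approximate MATD figures quoted in the text (Hirayama et al., 2019).
PARTICLE_UPDATE_HZ = 10_000   # particle position refresh rate (~10 kHz)
IMAGE_REFRESH_HZ = 10         # image refresh rate (~10 Hz)
MAX_SPEED_M_S = 8.0           # maximum particle velocity (~8 m/s)


def period_ms(rate_hz):
    """Duration of one cycle, in milliseconds, for a given rate in Hz."""
    return 1000.0 / rate_hz


update_period_ms = period_ms(PARTICLE_UPDATE_HZ)   # time per position update
frame_period_ms = period_ms(IMAGE_REFRESH_HZ)      # time per rendered image

# Number of single-particle position updates that make up one image.
updates_per_frame = PARTICLE_UPDATE_HZ // IMAGE_REFRESH_HZ

# Upper bound on the path the particle can trace within one image,
# assuming it could travel at maximum speed for the whole frame.
max_path_per_frame_m = MAX_SPEED_M_S * frame_period_ms / 1000.0

# Largest distance the particle can move between two position updates.
max_step_mm = MAX_SPEED_M_S / PARTICLE_UPDATE_HZ * 1000.0

print(f"update period: {update_period_ms:.1f} ms")
print(f"frame period: {frame_period_ms:.0f} ms")
print(f"position updates per image: {updates_per_frame}")
print(f"max path per image: {max_path_per_frame_m:.1f} m")
print(f"max step per update: {max_step_mm:.1f} mm")
```

For experimental design, these numbers suggest a rough budget: a visual stimulus has to be traceable within one ∼100 ms frame to be perceived as a stable image, so longer or more detailed trajectories trade off against flicker.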

Similar volumetric displays use the principles of acoustic levitation, although they do not rapidly update the particle positions to render an image (using POV). Instead, they levitate particles attached to a piece of fabric in real space and project an image onto the fabric to create levitating displays. Recent work in HCI has shown that these levitating displays enable good control for interactive presentations (Morales et al., 2019; Morales González et al., 2020).

Other novel techniques that can offer benefits for visual stimulus modulation, particularly for visuo–tactile tasks, include retargeting techniques in mixed reality. These techniques deform the visual space (conflicting an observer’s sense of vision and touch) without the user noticing, thus creating different illusions that can modulate the reliability of visual and tactile interactions. For example, many studies on visuo–haptic integration are limited to haptic modulation through force feedback (using physical devices or motor actuators). Retargeting techniques instead can modulate the perception of touch by exploiting the dominance of the visual system (visual capture; Rock and Victor, 1964), reducing the use of physical haptic devices. They can, for instance, modulate the perception of the quantity of objects (Azmandian et al., 2016), of an object’s weight (Rietzler et al., 2018; Samad et al., 2019), of different textures (Cheng et al., 2017), or of different geometries (Zhao and Follmer, 2018) using limited physical elements (no motors or robots) and relying mainly on visual cues.

In other words, emerging visual image processing and mixed reality technology can enable the study of visuo–haptic integration by reducing the use of physical proxies (e.g., deformable surfaces; Drewing et al., 2009; Cellini et al., 2013), which can be inflexible and more complex to control. Instead, these novel techniques deform the visual space which can be more easily controlled by taking advantage of the visual capture, which is particularly present in spatial tasks (Kitagawa and Ichihara, 2002). Using translational gains, these techniques can even be extended to modulate visual perception involving more complex actions (beyond hand–object interaction in desktop-based experiments), such as walking (Razzaque et al., 2001). Some examples include techniques that modulate the perception of walking speed (Montano-Murillo et al., 2017), walking elevation (Nagao et al., 2017), and distance travelled (Sun et al., 2018). These technologies could open up opportunities to expand and facilitate the study of the integration between vision and proprioception (Van Beers et al., 1999) or between visual and vestibular stimuli (Gu et al., 2006), as well as extend the study of the body schema, which is usually studied for hand interactions (Maravita et al., 2003).

As retargeting techniques mainly employ HMDs to show visual content, other multisensory technologies can easily be combined, for example, headphones to present auditory stimuli, haptic devices, such as vibrational attachments (controllers, suits), and smell delivery devices (external or wearable), as have previously been used in VR settings [e.g., in the work by Ranasinghe et al. (2018)].

Auditory Stimulation Beyond Headphones

Studies exploring auditory integration commonly modulate sensory information by changing the frequency or synchrony of auditory cues in identification or speech recognition tasks, requiring audio–visual simultaneity (Fujisaki et al., 2004). In these experiments, auditory stimulus delivery is limited to the use of headphones and static sources of sound (speakers). To avoid extra confounding factors, noise control or canceling is also required. However, recent advances in sound manipulation offer new opportunities to deliver and control sound, enabling, for instance, the presentation of directional sound without wearing headphones in a controlled manner. These technological advances could not only help overcome existing limitations but also open up new experimental designs for multisensory integration studies.

Researchers in areas such as physics, engineering, computer science, and HCI are working on new concepts for controlling sound using ultrasonic manipulation and acoustic metamaterials (Norasikin et al., 2018; Prat-Camps et al., 2020), moving towards the ability to control sound just as we do with light (Memoli et al., 2019b). Advances in optics enable the modulation of users’ visual perception through the use of filters and lenses (e.g., cameras and VR headsets). Achieving the same for sound is more challenging, but researchers have already created acoustic lenses to control, filter, and manipulate sound. These techniques are possible thanks to ultrasound phased arrays integrated with acoustic lenses (also called metamaterials) that direct the sound by using acoustic bricks (Memoli et al., 2017). For example, in a theater, a spotlight can be directed at a single person while others around them remain in darkness. Now imagine that a spot of sound is delivered to a single person in the audience while others around that person cannot perceive it. In another example, in a cinema, the movie audio could be played in different languages and delivered to specific persons in the audience (Memoli et al., 2019a). Figure 1b is a simplified representation of sound “bending” around an object by Norasikin et al. (2018). This technique directs sound waves to avoid obstacles (represented by the dashed line in Figure 1b). At the same time, the directed sound is able to not only levitate a small bead above an object but also produce a tactile sensation above the bead on the user’s finger.

The aforesaid sound manipulation can benefit the study of multisensory integration in different ways. Since the direction of sound can be controlled with these acoustic lenses, it is possible to modulate the perceived position of the sound source, even when it is static (Graham et al., 2019). This technology could then be integrated into classical paradigms used in multisensory integration studies, such as temporal/spatial ventriloquism (Vroomen and de Gelder, 2004) and other experimental paradigms studying spatial localization and sound source location using multisensory signals (Battaglia et al., 2003).

Other benefits include the possibility to avoid instrumentation, i.e., avoiding the use of headphones for noise canceling; the ability to precisely modulate the perception of sound location, direction, and intensity inside a room in spatial and temporal tasks, even when the sound source is static; or the possibility to have multiple subjects in an experimental room while auditory stimuli are delivered individually and without causing distractions. Furthermore, through the use of body tracking sensors, this technology could identify a moving person in order to deliver an auditory stimulus while they are walking (Rajguru et al., 2019), which could be suitable for navigation and spatial localization tasks (Rajguru et al., 2020). This opens up opportunities for studies beyond desktop-based experiments and therefore allows navigation tasks that combine body movement signals, such as auditory–vestibular integration.

Additionally, some of these devices allow multimodal delivery, enabling interactions beyond pairs of senses. For example, the methods by Jackowski-Ashley et al. (2017) and Norasikin et al. (2018) allow integration with touch (i.e., mid-air tactile stimulation), while the approach by Norasikin et al. (2019) allows visual stimulation via reconfigurable mid-air displays. This technology controls directional sound while at the same time producing a haptic sensation on the skin due to the specific frequency of the emitted sound waves. This combination of signals can allow us to present sound and haptic sensations from the same location, which could benefit the study of haptic–auditory integration (Petrini et al., 2012).

Moreover, directional sound can be achieved with more traditional speakers (i.e., not involving ultrasound) using the principles of spatial sound reproduction, making it possible to “touch” the sound and interact with it (Müller et al., 2014) and enabling the study of audio-tactile interactions. This technology could be integrated into classical experimental paradigms involving audio–tactile judgments; for example, the audio–tactile loudness illusion (Yau et al., 2010) and other combinations of signals, such as tactile stimulation and music (Kuchenbuch et al., 2014).

While much of this research is still at an early stage (i.e., laboratory explorations), it already points to future opportunities in real environments with promising benefits for expanding research beyond the development of the technology itself and towards its use in psychology and neuroscience research.

From Physical to Contactless Tactile Stimulation

Much research on touch, in the context of multisensory integration, has focused on visuo–haptic integration (Stein, 2012), although several studies also focus on the integration between haptics and audio (Petrini et al., 2012) and smell (Castiello et al., 2006; Dematte et al., 2006). In most cases, however, haptic information is modulated in size estimation or identification tasks. Haptic sensation is usually modulated through deformable surfaces (Drewing et al., 2009), force feedback (Ernst and Banks, 2002), data gloves (Ma and Hommel, 2015; Schwind et al., 2019), or vibration actuators on skin (Maselli et al., 2016).

These studies rely on tangible objects, so findings on haptic integration with other senses have so far been based on physical touch achieved by using either mechanical actuators or user instrumentation. However, with the accelerated digitization of human experiences driven by social distancing restrictions, we see increasing contactless and remote interactions not only in light of the COVID-19 pandemic but also in light of the proliferation of mid-air interactions (Rakkolainen et al., 2020) and the digitalization of the senses (Velasco and Obrist, 2020).

Mid-air interactions allow subjects to control objects from a distance by means of hand gestures and without the need for physical contact. To provide a tactile sensation in mid-air, ultrasonic phased arrays composed of several speakers (see Figure 1c) can be computer-controlled to emit focused ultrasound over a distance (e.g., 20 cm) and enable a person to perceive tactile sensations in mid-air without the need for physical attachments, such as a glove. These tactile sensations can be single or multiple focal points on the hand, 3D shapes (Carter et al., 2013), or textures (Beattie et al., 2020). This unique combination is enabling novel interaction paradigms previously only seen in science fiction movies. For example, it is now possible to touch holograms (Kervegant et al., 2017; Frish et al., 2019), as well as levitate objects (Marzo et al., 2015) and interact with them (Freeman et al., 2018; Martinez Plasencia et al., 2020). We can now interact with computers, digital objects, and other people in immersive 3D environments in which we can not only see and hear but also touch and feel. This technology is also able to convey information (Paneva et al., 2020), with huge potential for mediating and studying emotions (Obrist et al., 2015). Furthermore, it has become wearable (Sand et al., 2015) and part of daily-life activities, suggesting promising potential for dynamic and more natural scenarios, such as online shopping (Kim et al., 2019; Petit et al., 2019), in-vehicle interactions (Large et al., 2019), and home environments (Van den Bogaert et al., 2019), where people can naturally integrate sensory information during daily tasks.
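To give a sense of what “computer-controlled” focusing involves, the following minimal sketch computes per-emitter phase offsets so that the waves from a flat array arrive in phase at a chosen focal point. It is an idealized illustration, not the method of any cited device: the 40 kHz carrier, the 16 × 16 layout, the 1 cm spacing, and all function names are assumptions, and amplitude fall-off and emitter directivity are ignored.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 °C
FREQUENCY = 40_000.0    # Hz; assumed carrier frequency, not given in the text
WAVENUMBER = 2 * math.pi * FREQUENCY / SPEED_OF_SOUND  # rad/m


def make_grid(n=16, pitch=0.01):
    """Hypothetical n x n emitter array in the z = 0 plane, centred on the origin."""
    half = (n - 1) * pitch / 2
    return [(i * pitch - half, j * pitch - half, 0.0)
            for i in range(n) for j in range(n)]


def focus_phases(emitters, focal_point):
    """Phase offset (radians) per emitter so all waves arrive in phase at focal_point."""
    return [(-WAVENUMBER * math.dist(e, focal_point)) % (2 * math.pi)
            for e in emitters]


def coherence(emitters, phases, point):
    """Magnitude of the normalised phasor sum at point (1.0 means fully in phase)."""
    total = sum(cmath.exp(1j * (p + WAVENUMBER * math.dist(e, point)))
                for e, p in zip(emitters, phases))
    return abs(total) / len(emitters)


emitters = make_grid()
focal_point = (0.0, 0.0, 0.20)  # 20 cm above the array, as in the example distance
phases = focus_phases(emitters, focal_point)

at_focus = coherence(emitters, phases, focal_point)
off_focus = coherence(emitters, phases, (0.02, 0.0, 0.20))  # 2 cm to the side
print(f"coherence at focus: {at_focus:.3f}, 2 cm to the side: {off_focus:.3f}")
```

Moving the focal point only requires recomputing the phases, which is what makes it feasible to place a tactile stimulus at a precisely defined location without touching or instrumenting the participant.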

Despite the rapid development of mid-air technologies, efforts to study haptic integration have so far been directed exclusively at physical touch, and it is therefore unknown how mid-air touch is integrated with the other senses. For instance, we do not know if the integration of vision, audio, or smell with mid-air touch is similar to what has been found with actual touch, as there are many factors that make physical and mid-air touch different (e.g., physical limits, force, ergonomics, and instrumentation). Here, we see an opportunity to expand the knowledge around mid-air interactions by applying the principles of multisensory integration from psychology and neuroscience. Bridging this gap could open up a wide range of new studies exploring the integration of multiple senses with mid-air touch using the technology recently developed in HCI and further taking advantage of the current knowledge generated in this area. For example, a number of studies have already provided insights that improve our understanding of mid-air haptic stimuli perception in terms of perceived strength (Frier et al., 2019), geometry identification (Hajas et al., 2020b), and tactile congruency (Pittera et al., 2019b), providing compelling evidence of the capability of mid-air haptics to convey information (Hajas et al., 2020a; Paneva et al., 2020).

Recent studies have used mid-air haptics to replicate traditional paradigms used in sensory experiments, such as the rubber hand illusion (Pittera et al., 2019b) and the apparent tactile motion effect (Pittera et al., 2019a). This suggests promising opportunities to use mid-air touch in other tasks involving visuo–tactile judgments, such as the cutaneous rabbit illusion (Geldard and Sherrick, 1972). In the future, it may even be possible to apply mid-air touch to tasks more complex than cutaneous sensations, such as force judgments (e.g., the force matching paradigm; Kilteni and Ehrsson, 2017).

Additionally, mid-air technology can be flexible enough to allow for multisensory experiences. Ultrasonic phased arrays, such as those developed by Hirayama et al. (2019), Shakeri et al. (2019), and Martinez Plasencia et al. (2020), combine mid-air tactile and auditory stimulation simultaneously. They employ speakers emitting sound waves that, at specific frequencies, can be both heard and felt on the skin. In particular, the methods introduced by Jackowski-Ashley et al. (2017) and Norasikin et al. (2018) deliver not only haptics but also parametric audio (i.e., allowing continuous control over every parameter) that can be directed by using acoustic metamaterials. Mid-air haptics has also been widely integrated with visual stimulus presentation via virtual and augmented reality (Koutsabasis and Vogiatzidakis, 2019) and multimedia interactions (Ablart et al., 2017; Vi et al., 2017a).

While most of the technology described above is still in the development phase, some devices able to provide mid-air haptics are commercially available. For example, STRATOS Explore and STRATOS Inspire are haptics development kits introduced by Ultraleap that are currently available on the market.

Emerging Smell Technologies and Olfactory Interfaces

The sense of smell is powerful, and research shows that the human nose has similar abilities to those of many animals (Porter et al., 2007; Gilbert, 2008). Therefore, the sense of smell has gained increasing attention in augmenting audio–visual experiences (Spence, 2020). Some studies have explored the integration of smell with vision (Gottfried and Dolan, 2003; Forscher and Li, 2012), audition (Seo and Hummel, 2011), and taste (Dalton et al., 2000; Small and Prescott, 2005). Common strategies to modulate and deliver olfactory cues are based on analog methods, such as smelling scented pens and jars of essential oils (Hummel et al., 1997; Stewart et al., 2010) that are limited by poor control over the scent stimulus delivery. More sophisticated clinical computer-controlled olfactometers have been employed but can often be bulky, static, and noisy (Pfeiffer et al., 2005; Spence, 2012).

Novel olfactory interfaces developed by researchers in the field of HCI can overcome some of those challenges. For example, today smell delivery technology, in addition to being precise and controllable (Maggioni et al., 2019), has become wearable (Yamada et al., 2006), small (Risso et al., 2018), and even fashionable (Amores and Maes, 2017; Wang et al., 2020; see Figure 1c). This portability can facilitate scent delivery in daily activities outside laboratory settings (e.g., home, work), offering opportunities to study smell stimulation in various contexts, such as longitudinal and field studies. For example, attention ability might differ between lab studies and daily-life settings, which can affect the study results (Park and George, 2018). Researchers studying the behavior of the sensory system during daily-life activities (e.g., Sloboda et al., 2001; Low, 2006) might benefit from wearable and miniaturized devices that can be easily carried. Some of these devices not only deliver sensory stimuli on demand but also record data that can be stored in a smartphone for further processing and analysis (Amores and Maes, 2017; Amores et al., 2018).

Furthermore, since wearable scent delivery systems are small and portable, they can easily be integrated with additional multisensory technology and other actuators. For example, Brooks et al. (2020) used a wearable smell delivery device attached to a VR headset to show visual stimuli as well. Moreover, Ranasinghe et al. (2018) added sensory stimulation, such as wind and thermal feedback, to provide a multisensory experience and thus induce a sense of presence. In another example, Amores and Maes (2017) and Amores et al. (2018) developed a wearable smell delivery system in the form of a necklace that can be combined with a VR headset and sensors to collect physiological data (e.g., EEG, heart rate), suggesting opportunities for using it while sleeping.

One interesting exploration of emerging wearable smell delivery systems is how to deliver scent stimuli which are released based on physiological data from the body, including moods and emotions (Tillotson and Andre, 2002), brain activity, or respiration (Amores et al., 2018). These new olfactory devices make use of advances in sensors (e.g., biometric and wearable sensors) and enable thinking beyond the constraints of unisensory stimulation. For example, wearable scent delivery systems have been used to modulate the perception of temperature (Brooks et al., 2020), which can enable the study of multisensory integration involving olfactory and somatosensory signals (de la Zerda et al., 2020).
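
As a purely illustrative sketch of such body-responsive release, the Python snippet below triggers a scent when heart rate rises a set amount above a resting baseline and then waits for a refractory period before releasing again. It is not the logic of any device cited above: the class name, the 15 bpm threshold, and the 60 s refractory period are hypothetical values chosen only for the example.

```python
from dataclasses import dataclass, field


@dataclass
class ScentTrigger:
    """Hypothetical closed-loop rule: release scent on elevated heart rate."""

    baseline_bpm: float           # wearer's resting heart rate, assumed known
    threshold_bpm: float = 15.0   # assumed rise above baseline that triggers release
    refractory_s: float = 60.0    # assumed minimum gap between two releases
    _last_release_s: float = field(default=float("-inf"), init=False)

    def update(self, t_s: float, heart_rate_bpm: float) -> bool:
        """Return True if a scent should be released for the sample at time t_s."""
        elevated = heart_rate_bpm - self.baseline_bpm >= self.threshold_bpm
        rested = t_s - self._last_release_s >= self.refractory_s
        if elevated and rested:
            self._last_release_s = t_s
            return True
        return False


trigger = ScentTrigger(baseline_bpm=60.0)
samples = [(0.0, 70.0), (1.0, 80.0), (30.0, 90.0), (61.0, 90.0)]
releases = [trigger.update(t, bpm) for t, bpm in samples]
print(releases)
```

Logging each `(time, heart rate, release)` triple alongside behavioral responses would give an experimenter a record of exactly when olfactory stimulation coincided with a given bodily state.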

Based on the same principles of directed sound, ultrasound can also be used to control and direct scent stimuli (Hasegawa et al., 2018). Current air-based scent delivery devices, such as those employing compressed air (Dmitrenko et al., 2017), fans (Hirota et al., 2013), and vortexes (Nakaizumi et al., 2006), allow great control over the temporal and spatial diffusion of scents (Maggioni et al., 2020). However, these air-based scent transportation systems produce a turbulent flow that disperses the scents with distance, decreasing their intensity. Sound-based smell delivery instead uses acoustic beams that produce a more laminar scent flow, promising additional control, particularly over the spatial distribution of scents, and thus increasing their intensity.

While these efforts are still in the early exploration stage, they again illustrate how technological advances can enable experimental studies to help advance our understanding of multisensory integration. While it may seem far-fetched and beyond current everyday life experiences, wearable and body-responsive technology (e.g., a device that releases a scent based on my heart rate) is in line with growing efforts to design and develop technology that promotes a paradigm shift from human–computer interaction to human–computer integration (Mueller et al., 2020), that is, a future in which technology becomes part of us (e.g., wearing a device that becomes part of my body and responds based on my body’s signals). As prior research has shown, the sense of one’s own body is highly plastic, with representations of body structure and size particularly sensitive to multisensory influences (Longo and Haggard, 2012). We are seeing initial efforts, sometimes from an artistic design perspective, to explore smell-based emotionally responsive wearable technology. For example, smell has been shown to influence how we feel about ourselves (Tillotson, 2017; Amores et al., 2018), affect our body image perception (BIP) (Brianza et al., 2019), and support sleep and dreaming (Carr et al., 2020). More opportunities around smell can be studied with respect to human sensory perception and integration due to these ongoing technological advances.

Emerging Gustatory Technologies and Interfaces

Unlike other sensory modalities that can be stimulated externally (e.g., vision, audio, and smell), taste stimulation occurs inside the body, and this can be more complex and invasive. A common area of study is around odor–taste integration (Dalton et al., 2000) given the multisensory nature of flavor perception (Prescott, 2015). However, since food perception is more broadly considered one of the most multisensory experiences in people’s everyday lives (Spence, 2012), different studies have also explored the integration of taste with vision (Ohla et al., 2012), audition (Yan and Dando, 2015), and touch (Humbert and Joel, 2012). In most cases, however, gustatory cues have been modulated by changing the concentration of taste stimuli in reaction and detection tasks (Overbosch and De Jong, 1989). For such tasks, precision is crucial, and while some studies use simple methods, such as glass bottles (Pfeiffer et al., 2005), precise control of taste stimulation can be achieved through well-established gustatometers based on either chemical or electric stimulation (Ranasinghe and Do, 2016; Andersen et al., 2019). However, controlling taste delivery through these methods can be unnaturalistic and is constrained to in-lab settings.

Novel interfaces from HCI may represent more naturalistic interactions and enable new contexts for studying the multisensory integration of taste with other senses. For example, mixed reality4 systems are also employed to modulate the perception of taste in augmented (Narumi et al., 2011b; Nishizawa et al., 2016) and virtual reality (Huang et al., 2019) suitable for visuo–gustatory interactions in wearable settings. Recent systems have also enabled the combination of multiple senses, for example, involving vision, olfaction, and gustation (Narumi et al., 2011a), which can facilitate studying integration beyond pairs of senses. These systems alter the visual attributes (e.g., color) of a seen physical item (e.g., cookie, tea) by means of image processing to vary its flavor perception.

Meanwhile, emerging tongue-mounted interfaces (Ranasinghe et al., 2012) do not use physical edible items but are able to produce, to a certain degree, sour, salty, bitter, and sweet sensations by electric and thermal stimulation without using chemical solutions, promising to be user-friendly (Ranasinghe and Do, 2016). These interfaces can be combined with other sensory modalities as well, such as smell and vision, using common objects for a more natural interaction, such as drinking a cocktail (Ranasinghe et al., 2017).

In another example, touch-related devices have enabled the study of taste perception by varying weight sensations (Hirose et al., 2015), biting force (Iwata et al., 2004), or vibrotactile stimuli (Tsutsui et al., 2016) suitable for studying a combination of gustatory and proprioceptive signals. Precise control of taste stimuli quantities can also be achieved through novel food 3D printing techniques (Khot et al., 2017; Lin et al., 2020), which permit the design and creation of physical food structures with controllable printing parameters, such as infill pattern and infill density (Lin et al., 2020). This control capability could be suitable for customizing and equalizing conditions during multisensory integration experiments; for example, giving the same concentration of taste stimuli across subjects while enabling a more natural taste stimulation (e.g., an actual cookie or chocolate treat), unlike using electrical stimulation (Spence et al., 2017), which can be invasive. Many other examples can be seen in the field of HCI for enhancing and modulating taste perception via different senses [e.g., see the work by Velasco et al. (2018) for a review of multisensory technology for flavor augmentation].

An emerging approach based on the principles of acoustic levitation is computer-controllable levitating food (Vi et al., 2017b). This technology consists of a contactless food delivery system able to deliver food morsels to the user’s tongue without the need of pipettes or electrodes. This contactless interaction can be suitable for delivering taste stimuli while maintaining a sterile and clean environment. Unlike electrical stimulation, levitating food techniques offer the possibility to deliver actual food, i.e., multiple morsels simultaneously in 3D, enabling the manipulation of the food’s trajectories. This technology has been extended to synchronized integration of levitated food with visual, olfactory, auditory, and tactile stimuli (Vi et al., 2020), enabling systematic investigations of multisensory signals around levitated food and eating experiences. For example, with this system, Vi et al. (2020) found that perceived intensity, pleasantness, and satisfaction regarding levitating taste stimuli are influenced by different lighting and smell conditions. This approach thus opens up experimentations into new tasting experiences (e.g., molecular gastronomy; Barham et al., 2010).

The aforesaid new approach can extend the study of multisensory integration in several ways. For example, studies exploring olfactory–gustatory integration can benefit from the multimodal functionalities of this technology. Different mixtures could be created in mid-air by levitating different droplets of different solutions with precision, allowing researchers to dynamically change experimental conditions (e.g., different tastes) while at the same time controlling smell stimulus presentation in terms of time (precise control of delivery duration) and location (directional delivery toward the subject’s nose). Additionally, since levitated food does not involve physical actuators, this could facilitate its implementation within VR environments (e.g., in visuo–gustatory interactions), avoiding the need to track additional elements (e.g., the subject’s hands, spoons) (Arnold et al., 2018). Finally, levitating food approaches can also facilitate the study of multisensory spatial interactions, given that food stimuli can be delivered to the subject’s mouth from different locations.

The multimodal properties of these new gustatory technologies and interfaces can be applied to classical paradigms used in the study of multisensory integration, for example, in studies involving gustatory and olfactory interactions, such as odor–taste learning (Small and Green, 2012) or involving gustatory and auditory interactions, such as the sonic chip paradigm (Spence, 2015). Overall, the technology described in this section is opening up a wide range of opportunities not only in multisensory integration research but also in the context of eating and human–food interaction (Velasco and Obrist, 2021).

Discussion, Conclusion, and Future Research

The aim of this review was to reflect upon the opportunities that advances in multisensory technology can provide for the study of multisensory integration. We have exemplified how researchers in the field of multisensory integration could derive inspiration and benefit from novel emerging technologies for visual, auditory, tactile, and also olfactory and gustatory stimulation. Apart from describing the level of control that new interfaces and devices offer, we have highlighted some of the new flexibility such technologies provide, such as how the different senses can be stimulated simultaneously and how the study of multisensory integration can be moved beyond the laboratory into more naturalistic and newly created settings, including physical/real and digital/virtual worlds.

While multisensory technology is advancing and revealing new opportunities for the study of multisensory integration, a major issue we would like to highlight is how responsibility is shared between humans and technology. Computing systems today have become ubiquitous and increasingly digital. An example of this is the evolution from human–computer interaction—a stimulus–response interplay between humans and technology (Hornbæk and Oulasvirta, 2017)—towards human–computer integration (Mueller et al., 2020)—a symbiosis in which humans and software act with autonomy (Farooq and Grudin, 2016). For example, multisensory technology becomes more connected to our body, emotions, and actions since sensors can be worn that allow mobile interactions (Wang et al., 2020). Responses from systems are mediated by the user’s biological responses and emotional states (Amores and Maes, 2017). Virtual environments allow one to embody virtual avatars, thus creating the feeling of body ownership and the sense of presence (i.e., the feeling of being there), with realistic environments no longer limited to audio–visual experiences but also including touch (Sand et al., 2015), smell (Ranasinghe et al., 2018), and taste experiences (Narumi et al., 2011b).

This increased symbiosis between humans and technology (Cross and Ramsey, 2020) leads to the challenge of a shared “agency” between humans and digital systems. Agency or, more precisely, the sense of agency (SoA) is crucial in our interaction with technology and refers to the feeling of “I did that” as opposed to “the system did that,” supporting a feeling of being in control (Haggard, 2017). The SoA arises through a combination of internal motoric signals and sensory evidence about our own actions and their effects (Moore et al., 2009). Therefore, increasing sensory evidence by giving the subjects multisensory cues during interactions can make technology users more aware of their actions and the consequences of these, thus promoting a feeling of responsibility (Haggard and Tsakiris, 2009). Since recent technology places the user in environments that are not fully real (e.g., virtual or augmented) and where users’ actions are sometimes influenced (e.g., autocompletion predictors) or even automated (e.g., autonomous driving), multisensory signals can help the users to feel agency during the interaction with technology, even though they are not the agent of the action (Banakou and Slater, 2014). Emerging research is examining how to improve the SoA during human–computer interaction, for example, by exploring motor actuation without diminishing the SoA (Kasahara et al., 2019), exploring appropriate levels of automation (Berberian et al., 2012), or exploring how the SoA can be improved through olfactory interfaces (Cornelio et al., 2020). Despite such efforts, it has been suggested that “the cognitive coupling between human and machine remains difficult to achieve” (Berberian, 2019), so further research is needed. However, in light of this review, we argue that a digital world in which users can see, hear, smell, touch, and taste just as they do in the real world can provide the sensory signals that they need to self-attribute events, thus facilitating the delegation of agency between humans and systems.

In summary, we believe that the SoA is a key concept that may become increasingly important to consider in the study of multisensory integration, especially when moving from laboratory to real-world environments. Despite the astonishing technological progress, it is worth acknowledging that some of the technologies (interfaces and devices) described in this review are still in the development phase, and although their principles are possible in theory and often demonstrated in proofs of concept, more testing is needed. Additionally, some of the devices discussed in our review lack studies with human participants. For example, the volumetric displays illustrated in Figure 1c have only been tested in laboratory settings with no further exploration of areas in which they could be useful (e.g., psychophysics studies). This highlights the main motivation underlying our review: to make researchers aware of these emerging technological opportunities for studying multisensory integration. While technological feasibility has been demonstrated, there is a lack of understanding of how these new devices can benefit the study of human sensory systems. We hope that this review sparks interest and curiosity among those working in other fields and opens up mutually beneficial research avenues to advance both engineering and computing and our understanding of the human sensory systems. Indeed, we believe that strengthening the collaborations between psychology, neuroscience, and HCI may prove fruitful for the study of multisensory integration.

Bringing these disciplines closer together may benefit the study of multisensory integration in a reciprocal fashion, that is, new technologies can easily be adapted to classical experimental paradigms used in neuroscience research. Similarly, principles and theories emerging from neuroscience research that have provided evidence of how the human sensory system works can be used to develop new technologies, contributing to a more accurate human–computer integration symbiosis.

Author Contributions

All authors listed have made a substantial, direct and intellectual contribution to the work, and approved it for publication.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Funding. This work was funded by the European Research Council (ERC) under the European Union’s Horizon 2020 Research and Innovation Program under Grant No: 638605.

We refer to human–computer interaction as a stimulus–response interplay between humans and any computer technology that can go beyond common devices, such as PCs or laptops (Dix et al., 2004).

We refer to human–computer integration as a partnership between humans and any computer technology that can go beyond common devices, such as PCs or laptops (Farooq and Grudin, 2016).

“Mixed reality is the merging of real and virtual worlds to produce new environments and visualizations, where physical and digital objects co-exist and interact in real time.” (Milgram and Kishino, 1994).

References

- Ablart D., Velasco C., Obrist M. (2017). “Integrating mid-air haptics into movie experiences,” in Proceedings of the 2017 ACM International Conference on Interactive Experiences for TV and Online Video (New York, NY), 10.1145/3077548.3077551

- Amores J., Hernandez J., Dementyev A., Wang X., Maes P. (2018). “Bioessence: a wearable olfactory display that monitors cardio-respiratory information to support mental wellbeing,” in Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) (Honolulu, HI: IEEE), 10.1109/EMBC.2018.8513221

- Amores J., Maes P. (2017). “Essence: olfactory interfaces for unconscious influence of mood and cognitive performance,” in Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (Denver, CO), 10.1145/3025453.3026004

- Andersen C. A., Alfine L., Ohla K., Höchenberger R. (2019). A new gustometer: template for the construction of a portable and modular stimulator for taste and lingual touch. Behav. Res. 51 2733–2747. 10.3758/s13428-018-1145-1

- Arnold P., Khot R. A., Mueller F. F. (2018). “‘You better eat to survive’: exploring cooperative eating in virtual reality games,” in Proceedings of the Twelfth International Conference on Tangible, Embedded, and Embodied Interaction (New York, NY), 10.1145/3173225.3173238

- Azmandian M., Hancock M., Benko H., Ofek E., Wilson A. D. (2016). “Haptic retargeting: dynamic repurposing of passive haptics for enhanced virtual reality experiences,” in Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems (San Jose, CA), 10.1145/2858036.2858226

- Banakou D., Slater M. (2014). Body ownership causes illusory self-attribution of speaking and influences subsequent real speaking. Proc. Natl. Acad. Sci. U.S.A. 111 17678–17683. 10.1073/pnas.1414936111

- Barham P., Skibsted L. H., Bredie W. L., Bom Frøst M., Møller P., Risbo J., et al. (2010). Molecular gastronomy: a new emerging scientific discipline. Chem. Rev. 110 2313–2365. 10.1021/cr900105w

- Battaglia P. W., Jacobs R. A., Aslin R. N. (2003). Bayesian integration of visual and auditory signals for spatial localization. J. Opt. Soc. Am. A 20 1391–1397. 10.1364/JOSAA.20.001391

- Beattie D., Frier W., Georgiou O., Long B., Ablart D. (2020). “Incorporating the perception of visual roughness into the design of mid-air haptic textures,” in Proceedings of the ACM Symposium on Applied Perception 2020 (New York, NY), 10.1145/3385955.3407927

- Berberian B. (2019). Man-Machine teaming: a problem of Agency. IFAC PapersOnLine 51 118–123. 10.1016/j.ifacol.2019.01.049

- Berberian B., Sarrazin J.-C., Le Blaye P., Haggard P. (2012). Automation technology and sense of control: a window on human agency. PLoS One 7:e34075. 10.1371/journal.pone.0034075

- Brianza G., Tajadura-Jiménez A., Maggioni E., Pittera D., Bianchi-Berthouze N., Obrist M. (2019). “As light as your scent: effects of smell and sound on body image perception,” in IFIP Conference on Human–Computer Interaction, eds Lamas D., Loizides F., Nacke L., Petrie H., Winckler M., Zaphiris P. (Cham: Springer), 10.1007/978-3-030-29390-1_10

- Brooks J., Nagels S., Lopes P. (2020). “Trigeminal-based temperature illusions,” in Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (New York, NY), 10.1145/3313831.3376806

- Burns J. K., Blohm G. (2010). Multi-sensory weights depend on contextual noise in reference frame transformations. Front. Hum. Neurosci. 4:221. 10.3389/fnhum.2010.00221

- Carr M., Haar A., Amores J., Lopes P., Bernal G., Vega T., et al. (2020). Dream engineering: simulating worlds through sensory stimulation. Conscious. Cogn. 83 102955. 10.1016/j.concog.2020.102955

- Carter T., Seah S. A., Long B., Drinkwater B., Subramanian S. (2013). “UltraHaptics: multi-point mid-air haptic feedback for touch surfaces,” in Proceedings of the 26th Annual ACM Symposium on User Interface Software and Technology (New York, NY), 10.1145/2501988.2502018

- Castiello U., Zucco G. M., Parma V., Ansuini C., Tirindelli R. (2006). Cross-modal interactions between olfaction and vision when grasping. Chem. Senses 31 665–671. 10.1093/chemse/bjl007

- Cellini C., Kaim L., Drewing K. (2013). Visual and haptic integration in the estimation of softness of deformable objects. Iperception 4 516–531. 10.1068/i0598

- Chen Y.-C., Spence C. (2017). Assessing the role of the ‘unity assumption’ on multisensory integration: a review. Front. Psychol. 8:445. 10.3389/fpsyg.2017.00445

- Cheng L.-P., Ofek E., Holz C., Benko H., Wilson A. D. (2017). “Sparse haptic proxy: touch feedback in virtual environments using a general passive prop,” in Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (New York, NY), 10.1145/3025453.3025753

- Colonius H., Diederich A. (2017). Measuring multisensory integration: from reaction times to spike counts. Sci. Rep. 7:3023. 10.1038/s41598-017-03219-5

- Colonius H., Diederich A. (2020). Formal models and quantitative measures of multisensory integration: a selective overview. Eur. J. Neurosci. 51 1161–1178. 10.1111/ejn.13813

- Cornelio P., Maggioni E., Brianza G., Subramanian S., Obrist M. (2020). “SmellControl: the study of sense of agency in smell,” in Proceedings of the 2020 International Conference on Multimodal Interaction (New York, NY), 10.1145/3382507.3418810

- Cross E. S., Ramsey R. (2020). Mind meets machine: towards a cognitive science of human–machine interactions. Trends Cogn. Sci. 25 200–212. 10.1016/j.tics.2020.11.009

- Dalton P., Doolittle N., Nagata H., Breslin P. (2000). The merging of the senses: integration of subthreshold taste and smell. Nat. Neurosci. 3 431–432. 10.1038/74797

- de la Zerda S. H., Netser S., Magalnik H., Briller M., Marzan D., Glatt S., et al. (2020). Social recognition in rats and mice requires integration of olfactory, somatosensory and auditory cues. bioRxiv [Preprint]. 10.1101/2020.05.05.078139

- Dematte M. L., Sanabria D., Sugarman R., Spence C. (2006). Cross-modal interactions between olfaction and touch. Chem. Senses 31 291–300. 10.1093/chemse/bjj031

- Deneve S., Pouget A. (2004). Bayesian multisensory integration and cross-modal spatial links. J. Physiol. Paris 98 249–258. 10.1016/j.jphysparis.2004.03.011

- Diederich A., Colonius H. (2004). Bimodal and trimodal multisensory enhancement: effects of stimulus onset and intensity on reaction time. Percept. Psychophys. 66 1388–1404. 10.3758/BF03195006

- Dix A., Finlay J., Abowd G. D., Beale R. (2004). Human–Computer Interaction. London: Pearson.

- Dmitrenko D., Maggioni E., Obrist M. (2017). “OSpace: towards a systematic exploration of olfactory interaction spaces,” in Proceedings of the 2017 ACM International Conference on Interactive Surfaces and Spaces, (New York NY: ), 10.1145/3132272.3134121 [DOI] [Google Scholar]

- Drewing K., Ramisch A., Bayer F. (2009). “Haptic, visual and visuo-haptic softness judgments for objects with deformable surfaces,” in World Haptics 2009-Third Joint EuroHaptics conference and Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems, IEEE, (Salt Lake City, UT: ), 10.1109/WHC.2009.4810828 [DOI] [Google Scholar]

- Ernst M. O., Banks M. S. (2002). Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415 429–433. 10.1038/415429a [DOI] [PubMed] [Google Scholar]

- Ernst M. O., Bülthoff H. H. (2004). Merging the senses into a robust percept. Trends Cogn. Sci. 8 162–169. 10.1016/j.tics.2004.02.002 [DOI] [PubMed] [Google Scholar]

- Farooq U., Grudin J. (2016). Human-computer integration. Interactions 23 26–32. 10.1145/3001896 [DOI] [Google Scholar]

- Feldman J. I., Dunham K., Cassidy M., Wallace M. T., Liu Y., Woynaroski T. G. (2018). Audiovisual multisensory integration in individuals with autism spectrum disorder: a systematic review and meta-analysis. Neurosci. Biobehav. Rev. 95 220–234. 10.1016/j.neubiorev.2018.09.020 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fetsch C. R., Pouget A., DeAngelis G. C., Angelaki D. E. (2012). Neural correlates of reliability-based cue weighting during multisensory integration. Nat. Neurosci. 15 146–154. 10.1038/nn.2983 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Forscher E. C., Li W. (2012). Hemispheric asymmetry and visuo-olfactory integration in perceiving subthreshold (micro) fearful expressions. J. Neurosci. 32 2159–2165. 10.1523/JNEUROSCI.5094-11.2012 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Freeman E., Williamson J., Subramanian S., Brewster S. (2018). “Point-and-shake: selecting from levitating object displays,” in Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, (New York NY: ), 10.1145/3173574.3173592 [DOI] [Google Scholar]

- Frier W., Pittera D., Ablart D., Obrist M., Subramanian S. (2019). “Sampling strategy for ultrasonic mid-air haptics,” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, (New York NY: ), 10.1145/3290605.3300351 [DOI] [Google Scholar]

- Frish S., Maksymenko M., Frier W., Corenthy L., Georgiou O. (2019). “Mid-air haptic bio-holograms in mixed reality,” in Proceedings of the 2019 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), IEEE, (Beijing: ), 10.1109/ISMAR-Adjunct.2019.00-14 [DOI] [Google Scholar]

- Fujisaki W., Shimojo S., Kashino M., Nishida S. Y. (2004). Recalibration of audiovisual simultaneity. Nat. Neurosci. 7 773–778. 10.1038/nn1268 [DOI] [PubMed] [Google Scholar]

- Gallagher M., Ferrè E. R. (2018). Cybersickness: a multisensory integration perspective. Multisens. Res. 31 645–674. 10.1163/22134808-20181293 [DOI] [PubMed] [Google Scholar]

- Geldard F. A., Sherrick C. E. (1972). The cutaneous “rabbit”: a perceptual illusion. Science 178 178–179. 10.1126/science.178.4057.178 [DOI] [PubMed] [Google Scholar]

- Gentilucci M., Cattaneo L. (2005). Automatic audiovisual integration in speech perception. Exp. Brain Res. 167 66–75. 10.1007/s00221-005-0008-z [DOI] [PubMed] [Google Scholar]

- Gilbert A. N. (2008). What the Nose Knows: The Science of Scent in Everyday Life. New York NY: Crown Publishers. [Google Scholar]

- Gottfried J. A., Dolan R. J. (2003). The nose smells what the eye sees: crossmodal visual facilitation of human olfactory perception. Neuron 39 375–386. 10.1016/S0896-6273(03)00392-1 [DOI] [PubMed] [Google Scholar]

- Graham T. J., Magnusson T., Rajguru C., Yazdan A. P., Jacobs A., Memoli G. (2019). “Composing spatial soundscapes using acoustic metasurfaces,” in Proceedings of the 14th International Audio Mostly Conference: A Journey in Sound, (New York NY: ), 10.1145/3356590.3356607 [DOI] [Google Scholar]

- Gu Y., Watkins P. V., Angelaki D. E., DeAngelis G. C. (2006). Visual and nonvisual contributions to three-dimensional heading selectivity in the medial superior temporal area. J. Neurosci. 26 73–85. 10.1523/JNEUROSCI.2356-05.2006 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Haggard P. (2017). Sense of agency in the human brain. Nat. Rev. Neurosci. 18:196. 10.1038/nrn.2017.14 [DOI] [PubMed] [Google Scholar]

- Haggard P., Tsakiris M. (2009). The experience of agency: Feelings, judgments, and responsibility. Curr. Direct. Psychol. Sci. 18 242–246. 10.1111/j.1467-8721.2009.01644.x [DOI] [Google Scholar]

- Hajas D., Ablart D., Schneider O., Obrist M. (2020a). I can feel it moving: science communicators talking about the potential of mid-air haptics. Front. Comput. Sci. 2:534974. 10.3389/fcomp.2020.534974 [DOI] [Google Scholar]

- Hajas D., Pittera D., Nasce A., Georgiou O., Obrist M. (2020b). Mid-air haptic rendering of 2D geometric shapes with a dynamic tactile pointer. IEEE Trans. Haptics 13 806–817. 10.1109/TOH.2020.2966445 [DOI] [PubMed] [Google Scholar]

- Hardy A. C. (1920). A study of the persistence of vision. Proc. Natl Acad. Sci. U.S.A. 6:221. 10.1073/pnas.6.4.221 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hasegawa K., Qiu L., Shinoda H. (2018). Midair ultrasound fragrance rendering. IEEE Trans. Vis. Comp. Grap. 24 1477–1485. 10.1109/TVCG.2018.2794118 [DOI] [PubMed] [Google Scholar]

- Hirayama R., Plasencia D. M., Masuda N., Subramanian S. (2019). A volumetric display for visual, tactile and audio presentation using acoustic trapping. Nature 575 320–323. 10.1038/s41586-019-1739-5 [DOI] [PubMed] [Google Scholar]

- Hirose M., Iwazaki K., Nojiri K., Takeda M., Sugiura Y., Inami M. (2015). “Gravitamine spice: a system that changes the perception of eating through virtual weight sensation,” in Proceedings of the 6th Augmented Human International Conference, (New York NY: ), 10.1145/2735711.2735795 [DOI] [Google Scholar]

- Hirota K., Ito Y., Amemiya T., Ikei Y. (2013). “Presentation of odor in multi-sensory theater,” in International Conference on Virtual, Augmented and Mixed Reality, ed. Shumaker R. (Berlin: Springer; ), 10.1007/978-3-642-39420-1_39 [DOI] [Google Scholar]

- Hornbæk K., Oulasvirta A. (2017). “What is interaction?,” in Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, (New York NY: ), 10.1145/3025453.3025765 [DOI] [Google Scholar]

- Huang F., Huang J., Wan X. (2019). Influence of virtual color on taste: Multisensory integration between virtual and real worlds. Comp. Hum. Behav. 95 168–174. 10.1016/j.chb.2019.01.027 [DOI] [Google Scholar]

- Humbert I. A., Joel S. (2012). Tactile, gustatory, and visual biofeedback stimuli modulate neural substrates of deglutition. Neuroimage 59 1485–1490. 10.1016/j.neuroimage.2011.08.022 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hummel T., Sekinger B., Wolf S. R., Pauli E., Kobal G. (1997). ‘Sniffin’ sticks’: olfactory performance assessed by the combined testing of odor identification, odor discrimination and olfactory threshold. Chem. Sens. 22 39–52. 10.1093/chemse/22.1.39 [DOI] [PubMed] [Google Scholar]

- Iwata H., Yano H., Uemura T., Moriya T. (2004). “Food simulator: a haptic interface for biting,” in Proceedings of the IEEE Virtual Reality 2004, IEEE, (Chicago, IL: ), 10.1109/VR.2004.1310055 [DOI] [Google Scholar]

- Jackowski-Ashley L., Memoli G., Caleap M., Slack N., Drinkwater B. W., Subramanian S. (2017). “Haptics and directional audio using acoustic metasurfaces,” in Proceedings of the 2017 ACM International Conference on Interactive Surfaces and Spaces, (New York NY: ), 10.1145/3132272.3132285 [DOI] [Google Scholar]

- Kasahara S., Nishida J., Lopes P. (2019). “Preemptive action: accelerating human reaction using electrical muscle stimulation without compromising agency,” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, (New York NY: ). [Google Scholar]

- Kervegant C., Raymond F., Graeff D., Castet J. (2017). “Touch hologram in mid-air,” in Proceedings of the ACM SIGGRAPH 2017 Emerging Technologies, (New York NY: ), 1–2. 10.1145/3084822.3084824 [DOI] [Google Scholar]

- Khot R. A., Aggarwal D., Pennings R., Hjorth L., Mueller F. F. (2017). “Edipulse: investigating a playful approach to self-monitoring through 3D printed chocolate treats,” in Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, (New York NY: ), 10.1145/3025453.3025980 [DOI] [Google Scholar]

- Kilteni K., Ehrsson H. H. (2017). Body ownership determines the attenuation of self-generated tactile sensations. Proc. Natl Acad. Sci. U.S.A. 114 8426–8431. 10.1073/pnas.1703347114 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kim J., Luu W., Palmisano S. (2020). Multisensory integration and the experience of scene instability, presence and cybersickness in virtual environments. Comp. Hum. Behav. 113:106484. 10.1016/j.chb.2020.106484 [DOI] [Google Scholar]

- Kim J. R., Chan S., Huang X., Ng K., Fu L. P., Zhao C. (2019). “Demonstration of refinity: an interactive holographic signage for new retail shopping experience,” in Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems, (New York NY: ), 10.1145/3290607.3313269 [DOI] [Google Scholar]

- Kitagawa N., Ichihara S. (2002). Hearing visual motion in depth. Nature 416 172–174. 10.1038/416172a [DOI] [PubMed] [Google Scholar]

- Koutsabasis P., Vogiatzidakis P. (2019). Empirical research in mid-air interaction: a systematic review. Int. J. Hum. Comp. Interact. 35 1747–1768. 10.1080/10447318.2019.1572352 [DOI] [Google Scholar]

- Kuchenbuch A., Paraskevopoulos E., Herholz S. C., Pantev C. (2014). Audio-tactile integration and the influence of musical training. PLoS One 9:e85743. 10.1371/journal.pone.0085743 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Large D. R., Harrington K., Burnett G., Georgiou O. (2019). Feel the noise: mid-air ultrasound haptics as a novel human-vehicle interaction paradigm. Appl. Ergon. 81:102909. 10.1016/j.apergo.2019.102909 [DOI] [PubMed] [Google Scholar]

- Lin Y.-J., Punpongsanon P., Wen X., Iwai D., Sato K., Obrist M., et al. (2020). “FoodFab: creating food perception illusions using food 3D printing,” in Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, (New York NY: ), 10.1145/3313831.3376421 [DOI] [Google Scholar]

- Longo M. R., Haggard P. (2012). What is it like to have a body? Curr. Direct. Psychol. Sci. 21 140–145. 10.1177/0963721411434982 [DOI] [Google Scholar]

- Lovelace C. T., Stein B. E., Wallace M. T. (2003). An irrelevant light enhances auditory detection in humans: a psychophysical analysis of multisensory integration in stimulus detection. Cogn. Brain Res. 17 447–453. 10.1016/S0926-6410(03)00160-5 [DOI] [PubMed] [Google Scholar]

- Low K. E. (2006). Presenting the self, the social body, and the olfactory: managing smells in everyday life experiences. Sociol. Perspect. 49 607–631. 10.1525/sop.2006.49.4.607 [DOI] [Google Scholar]

- Ma K., Hommel B. (2015). The role of agency for perceived ownership in the virtual hand illusion. Conscious. Cogn. 36 277–288. 10.1016/j.concog.2015.07.008 [DOI] [PubMed] [Google Scholar]

- Maggioni E., Cobden R., Dmitrenko D., Hornbæk K., Obrist M. (2020). SMELL space: mapping out the olfactory design space for novel interactions. ACM Trans. Comp. Hum. Interact. (TOCHI) 27 1–26. 10.1145/3402449 [DOI] [Google Scholar]

- Maggioni E., Cobden R., Obrist M. (2019). OWidgets: A toolkit to enable smell-based experience design. Int. J. Hum. Comp. Stud. 130 248–260. 10.1016/j.ijhcs.2019.06.014 [DOI] [Google Scholar]

- Maravita A., Spence C., Driver J. (2003). Multisensory integration and the body schema: close to hand and within reach. Curr. Biol. 13 R531–R539. 10.1016/S0960-9822(03)00449-4 [DOI] [PubMed] [Google Scholar]

- Martinez Plasencia D., Hirayama R., Montano-Murillo R., Subramanian S. (2020). GS-PAT: high-speed multi-point sound-fields for phased arrays of transducers. ACM Trans. Graph. 39:138. 10.1145/3386569.3392492 [DOI] [Google Scholar]

- Marzo A., Seah S. A., Drinkwater B. W., Sahoo D. R., Long B., Subramanian S. (2015). Holographic acoustic elements for manipulation of levitated objects. Nat. Commun. 6:8661. 10.1038/ncomms9661 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Maselli A., Kilteni K., López-Moliner J., Slater M. (2016). The sense of body ownership relaxes temporal constraints for multisensory integration. Sci. Rep. 6:30628. 10.1038/srep30628 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Memoli G., Caleap M., Asakawa M., Sahoo D. R., Drinkwater B. W., Subramanian S. (2017). Metamaterial bricks and quantization of meta-surfaces. Nat. Commun. 8:14608. 10.1038/ncomms14608 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Memoli G., Chisari L., Eccles J. P., Caleap M., Drinkwater B. W., Subramanian S. (2019a). “Vari-sound: A varifocal lens for sound,” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, (New York NY: ), 10.1145/3290605.3300713 [DOI] [Google Scholar]

- Memoli G., Graham T. J., Kybett J. T., Pouryazdan A. (2019b). “From light to sound: prisms and auto-zoom lenses,” in Proceedings of the ACM SIGGRAPH 2019 Talks, (Los Angeles CA: ), 1–2. 10.1145/3306307.3328206 [DOI] [Google Scholar]

- Merz S., Jensen A., Spence C., Frings C. (2019). Multisensory distractor processing is modulated by spatial attention. J. Exp. Psychol. 45:1375. 10.1037/xhp0000678 [DOI] [PubMed] [Google Scholar]

- Milgram P., Kishino F. (1994). A taxonomy of mixed reality visual displays. IEICE Trans. Inf. Syst. 77 1321–1329. [Google Scholar]

- Montano-Murillo R. A., Gatti E., Oliver Segovia M., Obrist M., Molina Masso J. P., Martinez-Plasencia D. (2017). “NaviFields: relevance fields for adaptive VR navigation,” in Proceedings of the 30th Annual ACM Symposium on User Interface Software and Technology, (New York NY: ), 10.1145/3126594.3126645 [DOI] [Google Scholar]

- Moore J. W., Wegner D. M., Haggard P. (2009). Modulating the sense of agency with external cues. Conscious. Cogn. 18 1056–1064. 10.1016/j.concog.2009.05.004 [DOI] [PubMed] [Google Scholar]

- Morales González R., Freeman E., Georgiou O. (2020). “Levi-loop: a mid-air gesture controlled levitating particle game,” in Proceedings of the Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, (Honolulu HI: ), 10.1145/3334480.3383152 [DOI] [Google Scholar]

- Morales R., Marzo A., Subramanian S., Martínez D. (2019). “LeviProps: animating levitated optimized fabric structures using holographic acoustic tweezers,” in Proceedings of the 32nd Annual ACM Symposium on User Interface Software and Technology, (New Orleans LA: ), 10.1145/3332165.3347882 [DOI] [Google Scholar]

- Mueller F. F., Lopes P., Strohmeier P., Ju W., Seim C., Weigel M., et al. (2020). “Next steps for human-computer integration,” in Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, (Honolulu HI: ), 10.1145/3313831.3376242 [DOI] [Google Scholar]

- Müller J., Geier M., Dicke C., Spors S. (2014). “The boomRoom: mid-air direct interaction with virtual sound sources,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, (Toronto ON: ), 10.1145/2556288.2557000 [DOI] [Google Scholar]

- Nagao R., Matsumoto K., Narumi T., Tanikawa T., Hirose M. (2017). “Infinite stairs: simulating stairs in virtual reality based on visuo-haptic interaction,” in Proceedings of the ACM SIGGRAPH 2017 Emerging Technologies, (Los Angeles CA: ), 1–2. 10.1145/3084822.3084838 [DOI] [Google Scholar]

- Nakaizumi F., Noma H., Hosaka K., Yanagida Y. (2006). “SpotScents: a novel method of natural scent delivery using multiple scent projectors,” in Proceedings of the IEEE Virtual Reality Conference (VR 2006), IEEE, (Hangzhou: ), 10.1109/VR.2006.122 [DOI] [Google Scholar]

- Narumi T., Kajinami T., Nishizaka S., Tanikawa T., Hirose M. (2011a). “Pseudo-gustatory display system based on cross-modal integration of vision, olfaction and gustation,” in Proceedings of the 2011 IEEE Virtual Reality Conference, IEEE, (Singapore: ), 10.1109/VR.2011.5759450 [DOI] [Google Scholar]

- Narumi T., Nishizaka S., Kajinami T., Tanikawa T., Hirose M. (2011b). “Meta cookie+: an illusion-based gustatory display,” in International Conference on Virtual and Mixed Reality, ed. Shumaker R. (Berlin: Springer; ), 10.1007/978-3-642-22021-0_29 [DOI] [Google Scholar]

- Nelson W. T., Hettinger L. J., Cunningham J. A., Brickman B. J., Haas M. W., McKinley R. L. (1998). Effects of localized auditory information on visual target detection performance using a helmet-mounted display. Hum. Factors 40 452–460. 10.1518/001872098779591304 [DOI] [PubMed] [Google Scholar]

- Nishizawa M., Jiang W., Okajima K. (2016). “Projective-AR system for customizing the appearance and taste of food,” in Proceedings of the 2016 Workshop on Multimodal Virtual and Augmented Reality, (New York NY: ), 10.1145/3001959.3001966 [DOI] [Google Scholar]