Abstract

Practicality was a valued attribute of academic psychological theory during its initial decades, but usefulness has since faded in importance to the field. Theories are now evaluated mainly on their ability to account for decontextualized laboratory data and not their ability to help solve societal problems. With laudable exceptions in the clinical, intergroup, and health domains, most psychological theories have little relevance to people’s everyday lives, poor accessibility to policymakers, or even applicability to the work of other academics who are better positioned to translate the theories to the practical realm. We refer to the lack of relevance, accessibility, and applicability of psychological theory to the rest of society as the practicality crisis. The practicality crisis harms the field in its ability to attract the next generation of scholars and maintain viability at the national level. We describe practical theory and illustrate its use in the field of self-regulation. Psychological theory is historically and scientifically well positioned to become useful should scholars in the field decide to value practicality. We offer a set of incentives to encourage the return of social psychology to the Lewinian vision of a useful science that speaks to pressing social issues.

Keywords: usefulness, practicality, relevance, accessibility, policy

Psychological theory, particularly in social psychology, was once viewed as an instrument to address issues of pressing societal importance. Kurt Lewin, a central figure in the foundation of social psychology, wrote that “there is nothing so practical as a good theory.” This view is still held by some, but many psychologists regard theory as an end in itself regardless of whether and how easily the theory addresses questions of practical significance. The prevalence of this view represents a practicality crisis that not only threatens the historical value that the field of psychology has placed on practicality but also risks undermining the field in the minds of the general public, policymakers, and the next generation of scholars in the field. We describe this crisis and illustrate how it has unfolded in the study of self-control.

What is a Practical Theory?

A practical theory is one that suggests actionable steps toward solving a problem that currently exists in a particular context in the real world. Practical theories can guide practitioners in changing psychological processes or behaviors and state the conditions under which and the people for whom the theoretical predictions apply. As such, practical theories will have familiar theoretical components such as causal predictions and hypothesized mediators and moderators. However, unlike an impractical theory, the structure and content of a practical theory lend themselves to realistic adaptation to a specific context by practitioners. Achieving this kind of theory involves a bi-directional relationship between practitioners, who are the consumers of theory when they use it in the field, and theory developers, who must engage with practical problems and feasible solutions for their work to be useful in the field (Giner-Sorolla, 2019).

For example, a problem in the field of self-regulation is that dieters often fail at self-control when they attempt to change their eating patterns. A practical theory of self-control describes not only why that failure happens, but also to whom it happens, when it is likely to happen, and what malleable psychological or behavioral processes could be targeted for intervention that would make it less likely to happen. The theory of ego depletion, for example, states that self-control draws upon a capacity-limited ego resource, so failure results from reductions in that resource following repeated exertion (Baumeister et al., 1998). The theory posits an underlying mechanism (variability in ego strength or energy) that accounts for success and failure in self-control, which is moderated only by previous self-regulatory effort. The theory is impractical because it presents no clear way to increase or replenish the ego resource, which is not specified with sufficient precision to easily measure or manipulate. Practitioners attempting to put the theory to use would be hampered by the theory’s omission of additional boundary conditions such as individual differences, situational factors, or cultural contexts that might moderate the effect.

A contrasting theory of self-regulation is the Theory of Planned Behavior (Ajzen, 1988) from the health psychology tradition. In this theory, failures of self-control are results of intentions and perceived control over the behavior, which themselves are influenced by various cognitive and social factors including attitudes about the behavior and its effects, subjective norms, and self-efficacy beliefs about control. This theory is relatively more practical because it centers variables that are measurable and malleable, such as beliefs and subjective norms, and specifies the conditions under which self-control is more or less likely (e.g., when attitudes or intentions are strong). Practitioners who have knowledge about the cultural context where a particular behavior occurs can derive from the theory predictions not only about expected behaviors (e.g., beliefs about the malleability of this outcome are low, so intentions and behavior change will also be low), but also about the factors that will change the behavior (e.g., changing efficacy beliefs will cause a change in intentions and behavior).

Practical theories exist in a space between basic and applied theory. The term “basic” in psychology connotes research that tests theory or uncovers mechanisms in a general way, whereas “applied” in psychology usually means research that solves problems in a specific context. There is no logical inconsistency between theory development and problem solving. Both can be done at once. The deeper tension between basic and applied work hinges on the question of universality. Psychological theory is often formulated as though it might apply to all humanity at all times. Third-century Roman peasants are just as likely to become ego depleted as eighteenth-century Qing royalty. Practical theory is not necessarily anchored to a specific time, place, and culture, but it needs to be contextualizable in a realistic way to be useful to practitioners. One of the reasons the Theory of Planned Behavior is so influential is that researchers have been able to apply (and test) it in a wide range of contexts and populations (Armitage & Conner, 2001).

An emphasis on practicality encourages psychologists to focus our theories in ways that are useful for addressing a problem in the real world. Prioritizing practicality is one way to ensure that theories remain substantive and do not become centered around higher-order theoretical questions that build out theoretical nuance but are not critical for application in the field. Prioritizing some types of theories over others parallels Meehl’s distinction between substantive theory and statistical hypothesis (Meehl, 1967; 1978). In Meehl’s view, research that advances theory is superior to research that only tests hypotheses because the former contributes far more to cumulative knowledge. For the same reason, we argue that theory structured to address practical problems is superior to theory that only identifies relations between psychological constructs. The theme in both cases is missed opportunity for broader impact. Superficial research fails to contribute to generalizable theory, and impractical theory fails to contribute to the work of practitioners in the world beyond the academy.

What Does Practical Theory Look Like in the Wild?

Many psychologists are already building practical theories. A reader interested in increasing the practicality of her theoretical work could draw inspiration from these examples.

Practical theory starts with a problem

The genesis of many theories is lack of explanatory knowledge about the nature of or relations among psychological processes. What is self-control? Why does self-control wane over time? These are good psychological questions and do eventually have relevance to people’s lives. Yet, a more practical theory could begin with an even more direct question: How can people get better at self-control? Reorienting toward a problem in the real world helps ground our theory and guides the empirical work that follows in a practical direction. The authors of a recent review of the self-control literature identified more than 21 practical, evidence-based ways to reduce the problem of self-control failure (Duckworth, Milkman, & Laibson, 2018). The solutions range from setting goals and planning, to consciously changing the environment, to binding one’s behavior by committing to certain acts in advance. Many of these solutions test and contribute to various theories of self-control and behavior change, but it is notable that the theory-building aspects of the research emerge from and are in service of answering the practical question and not vice versa.

Practical theory iteratively engages with practitioners and real people throughout the research cycle

Perhaps the most important step in practical theory building is identifying the right research question. From our removed position in the ivory tower, how do we know which research questions will lead to the most practical questions? We must collaborate from the very first step if we want our theories to speak to practical problems. We must allow our theories to be informed more substantially than they have been by people outside of the academy. Community-engaged participatory research has been used for decades in other fields (Minkler & Wallerstein, 2002), so there are ample resources available to help psychologists learn this family of methods. In self-regulation, for example, the question of how to build emotion regulation skills among adolescents who face persistent bullying is a practical question (Garner & Hinton, 2010). Models of emotion regulation could be modified to more readily lend themselves to direct application by practitioners.

An important consideration with community-based research is the heightened ethical stakes. To ask more of communities is to take on additional obligation to return something of value to them. Other fields are well ahead of psychology in grappling with these ethical issues and developing tools to responsibly conduct this kind of work (Anderson, Solomon, Heitman, DuBois, Fisher, Kost, et al., 2012; Mikesell, Bromley, & Khodyakov, 2013). The embrace of ethical community-based participatory research is one way to promote inclusion and begin to restore the trust of people who have been betrayed by scientists in the past.

Practical theory is grounded in high-quality descriptive data on real-world problems

Detailed knowledge about exactly what the practical problem is and the conditions under which it occurs is a prerequisite for practical theory. A call for more and better descriptive work is neither controversial nor novel; others have described the value of careful observational work to theory development as well as practical applications, not to mention its deep historical roots (Rozin, 2001). Obtaining clear observations of how a psychological phenomenon plays out in the real world - that is, in a particular and non-generalizable context - can provide insight into the conditions and contexts where a theory might apply and be most useful. Unfortunately, non-experimental observational studies tend to be devalued in psychology. We will address the issue of how to incentivize practicality later in this paper.

Some of the most theoretically powerful research in self-regulation in the last few years has grown from observational work. Studies that used longitudinal observation to sample the experiences of people as they pursue goals in their everyday lives and how those experiences fluctuate over time have transformed how scholars think about self-regulation. For example, it is because of this work that theories must account for substantial variability in self-regulatory success within people in addition to the variance between people (Werner, Milyavskaya, Foxen-Craft, & Koestner, 2016). Observational studies have also prompted theorists to focus on situational strategies (such as avoiding temptations) as a way of increasing the likelihood of self-regulation success (Duckworth, Gendler, & Gross, 2016; Hofmann et al., 2011).

The Historical Value of Practicality in Social Psychology

Several fields in psychology, especially social psychology, have long placed high value on practicality. Classic studies in that field were spurred by major societal events or movements. For example, Milgram’s studies on obedience were a direct attempt to understand why people obey authority figures (Milgram, 1963) and identify the conditions that afford disobedience (Milgram, 1965). Gordon Allport wrote The Nature of Prejudice (Allport, 1954) to provide a psychological account of the systemic discrimination during the decades of the Jim Crow era. Foundational work sought to identify ways to prevent prejudice, discrimination, and intergroup conflict and to mitigate their harmful effects (Aronson, 1978; Clark & Clark, 1939; Sherif, Harvey, White, Hood, & Sherif, 1961; Steele & Aronson, 1995). Theoretical explanations of bystander non-intervention during emergencies (Latane & Darley, 1968) were famously prompted by the murder of Kitty Genovese (even though the actual case might not have illustrated the bystander effect).

We focus here on social psychology, but we note that the historical importance of practicality is not exclusive to that discipline. Several of the largest branches of our field sprang out of problems faced by militaries during the 20th century. The roots of modern personality psychology can be traced back in part to the personnel problem faced by the US Army during and after World War I of optimally assigning soldiers to roles in the military (Koopman, 2019). Daniel Kahneman’s early work in judgment and decision making was inspired by a similar problem faced by the Israeli military during the Six-Day War (Lewis, 2016). Some of the most important scientific advances in understanding speech perception and synthesis arose from the challenge of encrypting and decoding spoken command messages during World War II (Greenberg, 1996). B. F. Skinner’s work on operant conditioning famously began as part of Project Orcon, a US Navy project to use pigeons to solve the problem of unmanned missile control before the availability of reliable electronic guidance (Capshew, 1993). We offer these examples not to suggest that we should all start working for the military, but rather to recall a time when scientists allowed their research priorities to be driven by events in the real world that overshadowed their other projects.

Others have pointed out the ways that psychology research has shifted over the last decade to prioritize laboratory work over applied work. Both kinds of research can test theory, but theories that are tested only in the lab miss the critical opportunity to adapt to feedback from their application by practitioners (Giner-Sorolla, 2019). Robert Cialdini left the field in a paper titled “We Have to Break Up,” (2009) citing the surging emphasis in the field on cognition over behavior, tidy multi-study packages over messy field studies, and mediational analyses over descriptions of real behavior. Others have issued similar critiques about the cognitive revolution, noting how the field at its height had been reduced to the study of “self-reports and finger movements” (Baumeister, Vohs, & Funder, 2007). Despite the subsequent and self-proclaimed “decade of behavior” from 2001–2010 (Association for Psychological Science, 2001), empirical psychology is still dominated by studies of people sitting at computers pushing buttons, only they are MTurk workers using their own computers instead of ours. Modern psychological theory is informed far more by data about how people interact with stimuli on a computer screen than with events in the real world.

This is not to imply that our field is entirely without practicality. Clinical psychology and intervention science, for example, are built around solving the problems that mental illness and social disadvantage can cause for quality of life and interpersonal functioning. Intergroup conflict, war, prejudice, discrimination, xenophobia, abuse of power, inequality, poverty, homophobia, and transphobia are only some of the world’s problems that continue to inspire research in psychology. Instead, the question at hand is: how practical is our science compared to how practical it could be?

The Current Status of Practicality in Social Psychological Theory

Our casual observation is that psychological theory has become unmoored from the guiding principle of practicality and is drifting toward more nuanced or myopic theoretical questions that are less relevant to helping solve the problems that people care about, such as predicting (Yarkoni & Westfall, 2017) and changing (Gainforth et al., 2015) behavior. We present data in this section as illustrative examples of how practicality has become devalued in psychological theory.

Publication criteria in the top ten psychology journals

One of the hallmarks of practical theory is that it will be useful to practitioners. Psychological theory might be developed in the lab, but an important purpose of this work is for the theories to be exported to other disciplines where they can be put to use. If our field valued this kind of relevance, then it would be reflected in our publication priorities. Top journals in other academic fields, such as medicine, human physiology, and clinical psychology, evaluate papers not only on methodological rigor and innovation, but also on their potential impact on practice. These journals have practitioners in their readership and are aware that these “importers” of the scientific knowledge are best positioned to make use of it.

We surveyed the criteria for publication in the top 10 multidisciplinary psychology journals as rated by their 2018 Impact Factor according to Journal Citation Reports (Clarivate Analytics) as one data point on this issue. Most of the journals mentioned contributing to scientific progress as a criterion and encouraged researchers to broaden the scope of the field in proposing new theories or advancing methods. However, very few journals mentioned anything about adjusting that scope beyond academic psychology. Of the ten journals, six mentioned readability as a criterion for submission. Out of those six, three of the journals (including Perspectives on Psychological Science) mentioned only that articles should be accessible to other scientists; one journal mentioned that articles should be intelligible to other scientists as well as the public; and the remaining two simply stated that any submissions should be accessible to a “wide audience,” failing to specify who makes up that audience. The implicit statement from the top psychology journals is that they are intended to be read by fellow scientists and not practitioners.

All of the journals mention valuing work that is “original” or “provocative.” Five of the journals focus on research methods and advancing “psychological science,” with no mention of improvements outside of academia. Two of the journals actively discourage submissions that are based on empirical findings or applied science. Of the ten journals, only two require any kind of description of the public significance (in the form of a brief statement), and only one even mentions applied theories, programs, and interventions. Psychological Science in the Public Interest does not require mention of public application or recommendations for practitioners. The submission criteria for that journal state that the issues reviewed should be “of direct relevance to the general public,” but provide no further instruction.

Placing greater value on novelty than relevance in our theory and research is not new. Meehl wrote that “…the profession highly rewards a kind of ‘cuteness’ or ‘cleverness’ in experimental design such as a hitherto untried method for inducing a desired emotional state, or a particularly ‘subtle’ gimmick for detecting its influence upon behavioral output” (1967, p. 114). In that passage, Meehl was contrasting the novelty of experimental methods with their robustness to address theoretical questions, but his critique of flashy methods can apply just as well to flashy theoretical maneuvers. “Cuteness or cleverness” in theory might work against practicality, which needs to be iteratively refined as ideas from the field are tested in the lab and vice versa. The Theory of Planned Behavior evolved in a notably non-gimmicky way from the Theory of Reasoned Action (Ajzen & Fishbein, 1980) based on years of feedback from laboratory and field data. Indeed, Meehl later admitted that some of what he characterized as “substantial” traditions within psychology (e.g., descriptive clinical psychiatry, psychometric assessment) were less “conceptually exciting” and yet “more than make up for that by their remarkable technological power” (1978, p. 817). In this, he draws a parallel contrast to the one we put forth here between theories that are cute and ones that are useful for solving problems.

Case study of practicality in a leading social psychology journal

Practicality might be reflected in social psychological research, even if it is not stated as a value in journals. We examined the content of one journal in depth as a case study of the practicality of the papers being published in a specific field. We chose the first two sections of the Journal of Personality and Social Psychology (JPSP) because it is the most cited journal in social psychology. In operationalizing practicality, we drew heavily from the work of Weiss and Weiss, who studied the gap between practitioners and social scientists (Weiss & Bucuvalas, 1980; Weiss & Weiss, 1996). The Weisses interviewed people in both groups about what they believe makes research useful and had their participants rate the importance of a number of characteristics (e.g., objectivity, scalability) in determining a study’s usefulness. The Weisses also had participants read through real studies and rate each on the individual characteristics and overall usefulness. With these data, the investigators calculated a “revealed importance” score for each characteristic, reflecting how strongly each one contributed to the decision maker’s classification of a study as useful or not. This procedure yielded a set of characteristics that both drove perceptions of usefulness in the revealed scores and were explicitly described by decision makers as qualities of useful research.

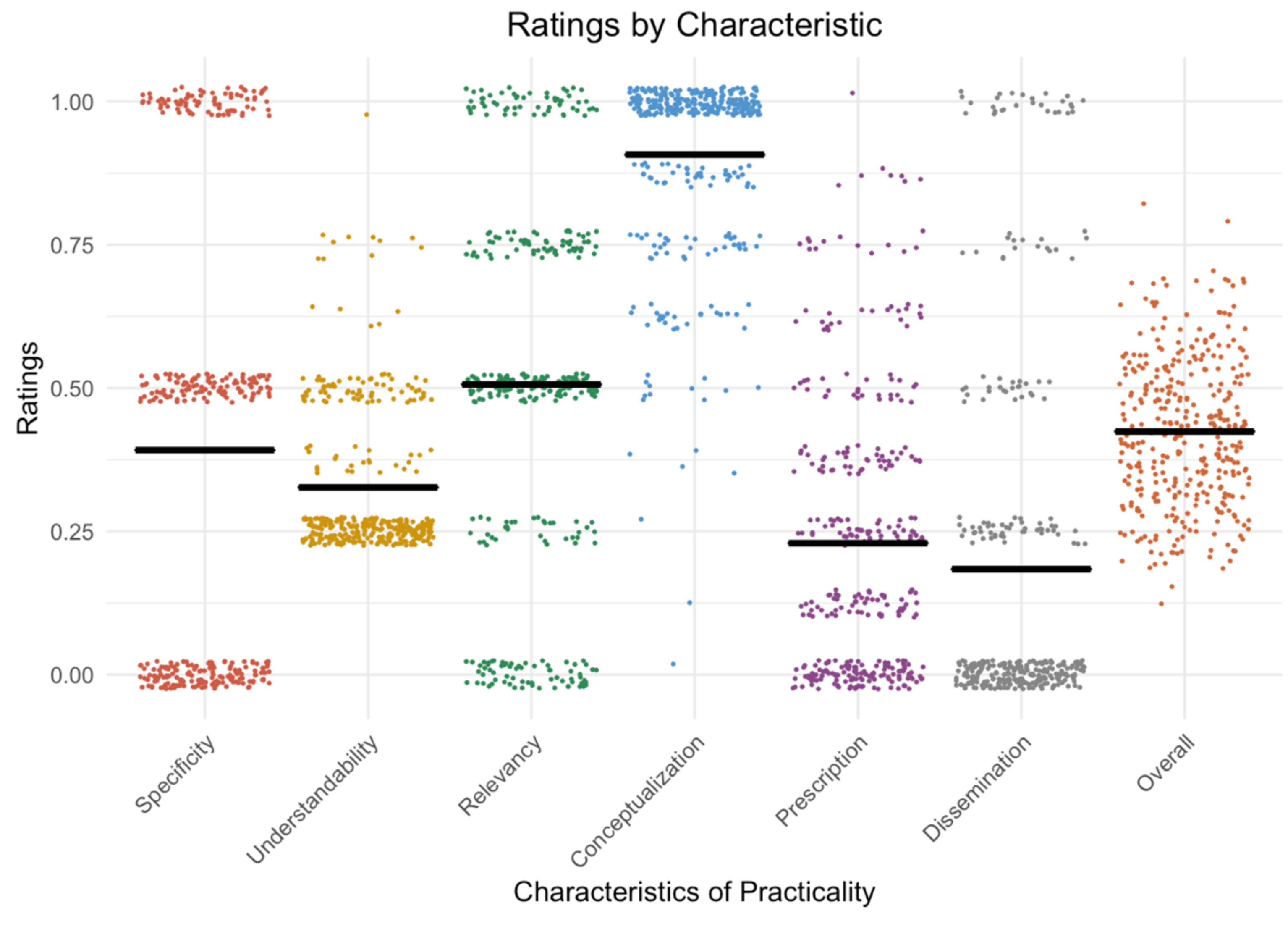

We identified six characteristics from the Weiss and Weiss (1996) list as reflective of practical research. Specificity refers to whether or not a research study addressed a specific social issue. This was the top response to the open-ended usefulness question. Understandability reflects how accessible an article is to the general public in terms of the level of the writing. Relevancy captures whether or not the participants are representative of the population most affected by the focal problem. Decision makers are savvy to the limitations of using samples that aren’t representative of the target population. Conceptualization indicates whether or not the theoretical model is sufficiently comprehensive to be useful to non-scientists. Decision makers indicated that models that accounted for more variables were more useful. Prescription identifies how well the study offers actionable next steps to address the focal problem. This criterion was tied with technical quality for the highest revealed importance score. Finally, dissemination serves as an index of the accessibility of the results and recommendations of the study. Policy makers recognize that studies are only useful insofar as they are available outside of academia. The first three criteria (specificity, understandability, and relevancy) are properties more of the research itself, whereas the final three criteria (conceptualization, prescription, and dissemination) are properties more of the theoretical framework at play.

Eight research assistants coded 360 articles from a 5-year span. Each article was rated by two raters, and the average of their ratings was used as the score on each of the characteristics. The average-rater random-effects ICCs for the individual characteristics ranged from 0.69 (conceptualization) to 1.00 (dissemination), and the ICC for the overall practicality score was 0.91, indicating that 91% of the rating variance was between articles. An overall practicality score was calculated by expressing each of the six characteristic scores as a percentage of its maximum possible value and averaging those percentages. These overall practicality scores are normalized on a scale from 0 to 1, where 0 can be considered 0% practical and 1 is 100% practical. Complete details of the preregistered methods and results can be found at https://osf.io/2qyru/. Figure 1 summarizes the results.

Figure 1.

Average coder scores for 360 articles from JPSP from 2012–2016 on each of six usefulness characteristics and an overall practicality score. Scores are normalized to percentages of the maximum possible score on each characteristic.
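The scoring procedure described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the coding scheme itself, which is documented in the preregistration. The function names and the `SCALE_MAX` table are hypothetical. The text confirms a 0–4 scale only for prescription and dissemination, so the maxima for the other four characteristics are placeholders. The ICC function assumes a one-way random-effects model for the mean of two ratings; the text does not specify which ICC form was used.

```python
from statistics import mean

# Hypothetical scale maxima. Only prescription and dissemination are
# confirmed in the text as 0-4; the other four values are assumptions.
SCALE_MAX = {
    "specificity": 4,
    "understandability": 4,
    "relevancy": 4,
    "conceptualization": 4,
    "prescription": 4,
    "dissemination": 4,
}


def practicality_score(rater_a, rater_b, scale_max=SCALE_MAX):
    """Overall practicality (0 to 1) for one article rated by two coders."""
    proportions = []
    for name, top in scale_max.items():
        # Average the two coders, then express as a share of the maximum.
        avg = (rater_a[name] + rater_b[name]) / 2
        proportions.append(avg / top)
    # The overall score is the unweighted mean across characteristics.
    return mean(proportions)


def icc_one_way_average(ratings):
    """One-way random-effects ICC for the mean of k ratings per article.

    `ratings` is a list with one inner list of k ratings per article.
    Assumes at least two articles whose mean ratings are not all equal.
    """
    n = len(ratings)
    k = len(ratings[0])
    grand = mean(x for row in ratings for x in row)
    row_means = [mean(row) for row in ratings]
    # Between-article and within-article mean squares.
    ms_between = k * sum((m - grand) ** 2 for m in row_means) / (n - 1)
    ms_within = sum(
        (x - m) ** 2 for row, m in zip(ratings, row_means) for x in row
    ) / (n * (k - 1))
    return (ms_between - ms_within) / ms_between


# Example with made-up ratings for a single article.
coder_1 = {"specificity": 1, "understandability": 3, "relevancy": 2,
           "conceptualization": 3, "prescription": 1, "dissemination": 0}
coder_2 = {"specificity": 1, "understandability": 3, "relevancy": 2,
           "conceptualization": 3, "prescription": 1, "dissemination": 2}
example_score = practicality_score(coder_1, coder_2)
```

Keeping the scale maxima in one table makes it easy to substitute the actual scale for each characteristic without touching the averaging logic.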

On average, articles from JPSP were 42.5% practical. Not a single article we reviewed received higher than 83%, with the modal score being 33%. Conceptualization was the only characteristic with a mean score above the midpoint. Average scores of four of the remaining characteristics, however, fell well short of even the 50% mark.

Notably, two of the most important characteristics of practicality as rated by policy makers, prescription and dissemination, received the lowest scores. The average score for prescription was 0.9 and the average score for dissemination was only 0.7 on the original 0–4 scale. Out of all 360 articles, only one was unanimously awarded a perfect score for prescription across raters: an article on bridging the gap for first-generation college students in American universities (Stephens et al., 2012). This was the only article from the first two sections of JPSP that clearly demonstrated implementable solutions to a social issue.

Potential Consequences to the Field of the Diminishment of Practicality

Academia is in possession of a large, highly educated, and incredibly capable workforce. An excessive focus on impractical theory in psychology journals can be interesting but is also an inefficient use of our intellectual resources. People outside the field have long recognized this reality about academia (consider, for example, the use of the phrase “it’s all academic to me” to signal something that has no practical value or importance). But a variety of factors that surfaced during the prolonged recovery from the Great Recession of 2008 have promoted the impracticality of psychological theory from an endearing, anachronistic quirk into an existential crisis for the field.

Perhaps the most pressing of these is that we risk discouraging bright, talented people from entering our field. Socially engaged people might not find the motivation to dedicate sustained attention to abstract theoretical questions when historical geopolitical events are unfolding around the globe. It is understandable that at these times talented young scholars would turn to other fields with more-relevant theories. Each year, nearly half a million students across hundreds of higher education institutions complete the National Survey of Student Engagement (NSSE), where first-year students and seniors answer questions about academic challenges and opportunities, peer and faculty interactions, campus environment, and civic engagement. A consistent result in recent years is that graduating students are highly interested in work that has social impact at a variety of levels. In the 2019 NSSE survey, for instance, 66% of seniors said they often or very often informed themselves about state, national, or global issues, 31% actively raised awareness about those issues, and 15% indicated they even organized others to work on them (Center for Postsecondary Research, 2019). This level of civic engagement appears to be part of a steady rise in interest in community service among college students since the 1990s (Syvertsen, Wray-Lake, Flanagan, Wayne Osgood, & Briddell, 2011).

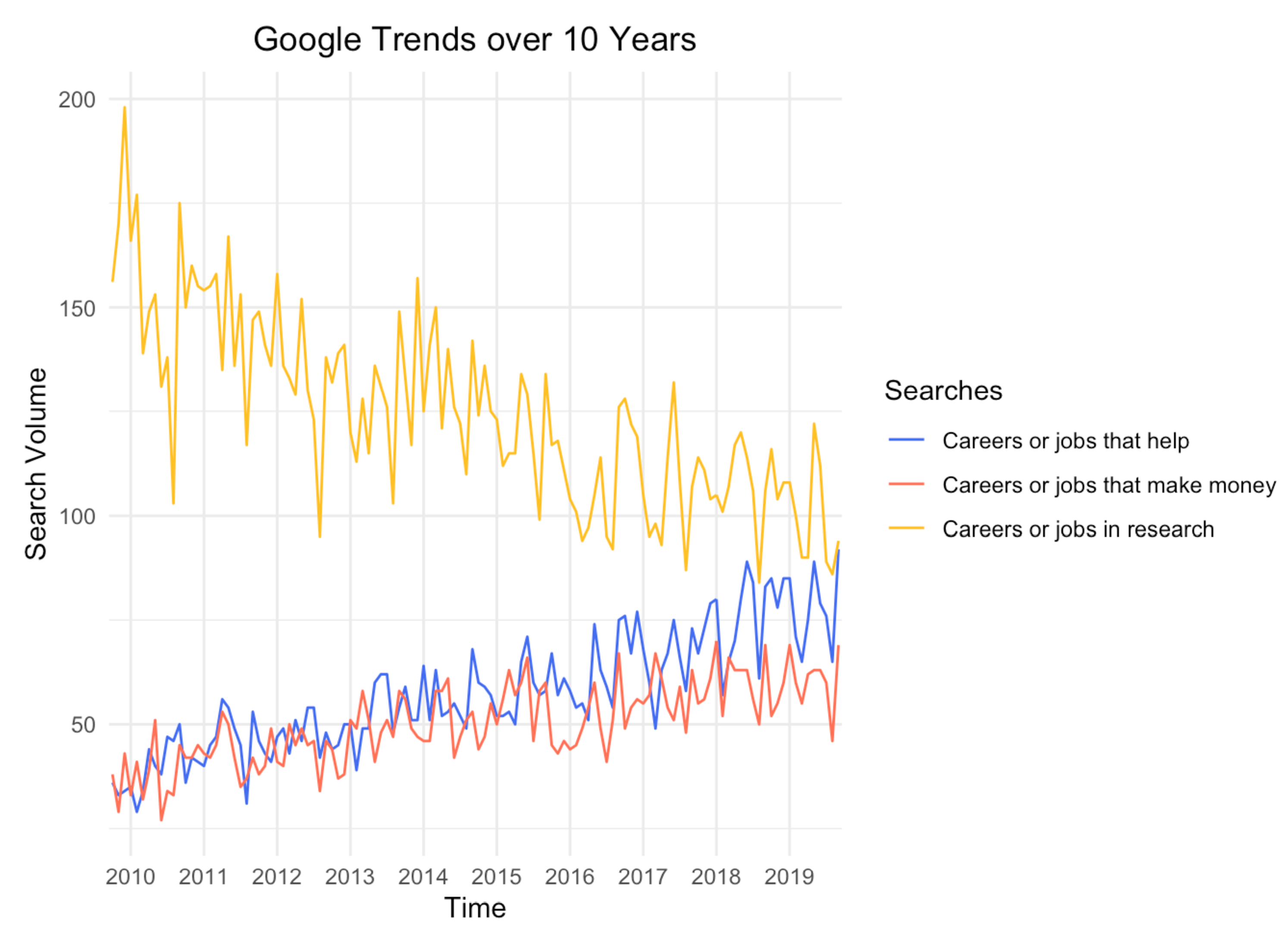

The interests and priorities of graduating seniors are particularly relevant to doctoral programs that hope to attract the most talented among them. And graduating seniors are not the only ones who are interested in the practicality of their careers. We used Google’s “Trends” feature to estimate the popularity of various types of careers in the United States in aggregate Google searches. We compared searches for “jobs (or careers) that help” to “jobs (or careers) that make money” as well as “jobs (or careers) in research” from January 2010 through January 2020 (Figure 2). Though searches for careers with the words “help” (blue line) and “make money” (red line) steadily increased across the ten years, searches for “help” careers have overtaken “money” careers in the last few years. Searches for careers “in research” (yellow line), though overall more frequent than “money” and “help” careers, have declined steeply over the past 10 years. People using Google to search for jobs are less interested in research and more interested in helping. Psychology could increase its appeal to the next generation by designing theories to address pressing social issues.

Figure 2.

Popularity of searches for careers that “help” (blue line), “make money” (red line) or “in research” (yellow line) as indexed by the Google Search Trend interest score, 2010 through 2020. Data are normalized by total search volume at each time point.

That psychological theory is not more practical is ironic given the inherent practicality of the topics that so many of us study. We study topics that can so easily be applied to everyday life, yet rarely do we go out of our way to make the case for our relevance to the lay public. Cialdini commented on this trend in his Dear John letter to the field (2009):

“As we have moved increasingly into the laboratory and away from the study of behavior, I believe we have been eroding the public’s perception of the relevance of our findings to their daily activities. One of the best aspects of field research into naturally occurring behavior is that such relevance is manifest. When my colleagues and I have studied which messages most spur citizens to reduce household energy usage, the results don’t have to be decoded or interpreted or extrapolated. The pertinence is plain. Truth be told, as a discipline, we’ve become lax in our responsibilities to the public in this regard. They deserve to know the pertinence of our research to their lives because, in any meaningful sense, they’ve paid for that research. They are entitled to know what we have learned about them with their money.”

(p. 6)

Scholars from other fields have stepped up to fill the void left by our absence in practical domains of behavioral science. The fields of behavioral medicine, behavioral economics, and now data science are populated in large part by people doing practical psychology who do not identify as psychologists. Advisory boards that consult with policymakers at all levels about behavioral science matters frequently are dominated by behavioral economists, medical doctors, business school professors, and lawyers. The occasional cognitive psychologist with expertise in judgment and decision making slips in (for example, Cialdini is now a member of the Advisory Board of the Behavioral Science Policy Association with Daniel Kahneman, Paul Slovic, Cass Sunstein, and Richard Thaler, among others), but for the most part, the academics that government officials turn to for advice on human behavior are not psychologists.

Support at the federal level is also vulnerable to concerns about practicality. Public skepticism about the value of science in general (Funk, Hefferon, Kennedy, & Johnson, 2019), and of randomized experiments on humans in particular (Meyer et al., 2019), is a significant barrier that we must overcome to convince federal policymakers of the value of psychological theory. Lawmakers might already be aware of the difficulties of scaling psychological theory to the population level (Al-Ubaydli, List, & Suskind, 2017) and therefore might be more inclined to defer to other fields that grapple more directly with scalability (e.g., economics, public health), when they listen to scientists at all. The cumulative effect of these trends is that federal funding agencies might become hesitant to make substantial investments in a field with questionable significance to the issues that motivate political leaders. Data on the totality of federal funding suggest overall declines in support for most branches of psychology across the past decade (National Science Foundation, 2018a).

We have every reason to believe that the trends of decreasing student interest, increasing competition for public trust, and decreasing federal support will continue for the foreseeable future. Even if it were not always the case, it is especially incumbent on psychologists right now to rediscover the lost value of theoretical practicality for the field (Berkman, 2018). The next section lays out an agenda for increasing practicality in psychological theory.

Incentives for Practical Theory

There are several clear steps we can take to increase the practicality of psychological theory, and many researchers within our field are already doing so. Following others (e.g., Giner-Sorolla, 2019), we focus on the role of incentives. Here, we describe incentives that would increase the practicality of the field.

Publication and peer review

Peer-reviewed articles in journals remain the coin of the academic psychology realm. There is no shortage of outlets for psychological theory, whether practical or impractical. As reviewed earlier, the criteria for acceptance into these journals focus on theoretical advances and innovation. In concept, then, a practical theory that is also innovative would fare just as well in review as a similarly innovative impractical one. However, in practice, practical theories are likely to be more incremental in nature because they build over years through feedback from practitioners and applications in the field. The current incentives for publication of theoretical pieces are therefore likely to work against practical theory.

A relatively straightforward change would be for editorial boards to revisit the expectations for publication in their journals and add some consideration of practicality. Changes to the author guidelines will be more effective if they are coupled with changes in editorial practices such as action editors triaging papers describing impractical theories and encouraging papers describing practical ones. Editors could also invite reviews of theoretical papers from at least one relevant practitioner. Reviewers can signal a commitment to practicality by highlighting how it plays out in their reviews of theoretical papers.

Hiring, tenure, and promotion

Written journal guidelines will only go so far on their own. Cultural inertia within the field will overcome new guidelines, and researchers will continue to work in the way they always have if it still produces the desired outcomes. Among those desired outcomes are being hired, receiving tenure, and getting promoted at academic institutions. Theory development, particularly if it results in journal publications, typically can help with all three. However, as in the peer review process, the evaluation of the merit of psychological theory by hiring, promotion, and tenure committees does not center on or necessarily include practicality. Instead, standards for quality are usually described in broad terms such as “meaningful contributions” or “substantial impact on the field.”

Practicality-minded institutions and departments could additionally specify the potential or actual “influence on practice” or “societal impact” among the criteria for hiring, tenure, and promotion. This impact could then be assessed as part of the review processes by requesting evidence from the applicant (e.g., in a section of the curriculum vitae or a written statement) about the practicality of their theoretical work. Referees could be asked about impact beyond the field, and it might even be possible to invite practitioners to evaluate the practicality of the theoretical work in an academic portfolio. These changes would provide powerful incentives for academic psychologists to create practical theories.

Research funding

Practicality is already a priority in how funding agencies, philanthropic donors, and private foundations evaluate the return on investment in grants, at least in the United States. For example, the main review criterion for National Institutes of Health (NIH) grant applications is the “Overall Impact” of the project, which is “the likelihood for the project to exert a sustained, powerful influence on the research field(s) involved,” considering five criteria: significance, innovation, approach, investigative team, and environment. After the quality of the approach, significance is the second biggest driver of the overall impact score (Rockey, 2011). Significance reflects how successfully executing the project will “change the concepts, methods, technologies, treatments, services, or preventative interventions” within the field (NIH, 2016). The National Science Foundation (NSF) has two evaluation criteria: “Intellectual Merit,” the potential to advance knowledge, and “Broader Impacts,” the potential to benefit society and to progress towards specific, socially desirable outcomes (NSF, 2018b). These funding agencies signal the value they place on research that can contribute to solving real-world problems by considering the practicality of research as co-equal with its methodological rigor.

More Research Is Needed?

This is the point in the academic paper where we would normally call for more research on this topic. However, without first pausing to consider the practical value of the knowledge to be gained by “further research,” we risk becoming the kind of scientists that Meehl (1967) wrote about with disdain:

“…a zealous and clever investigator can slowly wend his way through a tenuous nomological network, performing a long series of related experiments which appear to the uncritical reader as a fine example of ‘an integrated research program,’ without ever once refuting or corroborating so much as a single strand of the network…. Meanwhile our eager-beaver researcher, undismayed by logic-of-science considerations and relying blissfully on the ‘exactitude’ of modern statistical hypothesis-testing, has produced a long publication list and been promoted to a full professorship. In terms of his contributions to the enduring body of psychological knowledge, he has done hardly anything.”

(p. 114)

As easily as we can slip into a mindless over-reliance on hypothesis-testing to string together a career that contributes nothing to the literature, so too can we slide down a path of theory building that adds to abstract knowledge but has no discernable impact on the world.

But hope is not lost. We began this article by recalling Kurt Lewin’s claim that theory can be the most useful thing. Lewin provides a way through the practicality crisis by rejecting the dichotomy between “basic” science that develops and tests theory and “applied” science that uses theory for practical purposes. We join him in embracing practical theory that identifies new ways to address problems and, thereby, builds incremental knowledge. The tools and resources exist to do so. As with self-regulation, the change will depend on motivation.

Acknowledgements

The authors are grateful to Anna Agron, Gianna Andrade, Shirley Banh, Anastasia Browning, Vaughan Hooper, Amanda Johnson, Suma Mohamed, and Jake Mulleavey for their diligent work in coding the articles and to the Social and Affective Neuroscience Laboratory for helpful comments and guidance along the way. This work was supported by grants CA211224, CA240252, DA04856, and HD094831 from the National Institutes of Health.

References

- Ajzen I (1988). Attitudes, personality and behavior. Milton Keynes: Open University Press.

- Ajzen I, & Fishbein M (1980). Understanding Attitudes and Predicting Social Behavior. New Jersey: Prentice-Hall.

- Al-Ubaydli O, List J, & Suskind D (2017). What Can We Learn from Experiments? Understanding the Threats to the Scalability of Experimental Results. American Economic Review, 107(5), 282–286.

- Anderson EE, Solomon S, Heitman E, DuBois JM, Fisher CB, Kost RG, … & Ross LF (2012). Research ethics education for community-engaged research: A review and research agenda. Journal of Empirical Research on Human Research Ethics, 7(2), 3–19.

- Armitage CJ, & Conner M (2001). Efficacy of the theory of planned behaviour: A meta-analytic review. British Journal of Social Psychology, 40(4), 471–499.

- Aronson E (1978). The Jigsaw Classroom. Sage, Thousand Oaks, CA.

- Association for Psychological Science (2001, February). Decade of Behavior Initiative is Under Way. Observer. Retrieved from: https://www.psychologicalscience.org/observer/decade-of-behavior-initiative-is-under-way.

- Baumeister RF, Bratslavsky E, Muraven M, & Tice DM (1998). Ego depletion: Is the active self a limited resource? Journal of Personality and Social Psychology, 74(5), 1252–1265. 10.1037/0022-3514.74.5.1252.

- Baumeister RF, Vohs KD, & Funder DC (2007). Psychology as the science of self-reports and finger movements: Whatever happened to actual behavior? Perspectives on Psychological Science, 2(4), 396–403.

- Berkman E (2018, August 27). The Self-Defeat of Academia. Quillette. https://quillette.com/2018/08/27/the-self-defeat-of-academia/

- Capshew JH (1993). Engineering behavior: Project pigeon, World War II, and the conditioning of BF Skinner. Technology and Culture, 34(4), 835–857.

- Center for Postsecondary Research (2019). Summary Tables. NSSE: National Survey of Student Engagement. https://nsse.indiana.edu/html/summary_tables.cfm

- Cialdini R (2009). We have to break up. Perspectives on Psychological Science, 4(1), 5–6. 10.1111/j.1745-6924.2009.01091.x

- Clark KB, & Clark MK (1939). The development of consciousness of self and the emergence of racial identification in Negro preschool children. The Journal of Social Psychology, 10(4), 591–599.

- Duckworth AL, Gendler TS, & Gross JJ (2016). Situational strategies for self-control. Perspectives on Psychological Science, 11(1), 35–55.

- Duckworth A, Milkman K, & Laibson D (2019). Beyond willpower: Strategies for reducing failures of self-control. Psychological Science in the Public Interest, 19(3), 102–129.

- Funk C, Hefferon M, Kennedy B, & Johnson C (2019, August 2). Trust and Mistrust in Americans’ Views of Scientific Experts: Americans often trust practitioners more than researchers but are skeptical about scientific integrity. Pew Research Center. https://www.pewresearch.org/science/2019/08/02/americans-often-trust-practitioners-more-than-researchers-but-are-skeptical-about-scientific-integrity/

- Gainforth HL, West R, & Michie S (2015). Assessing connections between behavior change theories using network analysis. Annals of Behavioral Medicine, 49(5), 754–761.

- Garner PW, & Hinton TS (2010). Emotional display rules and emotion self-regulation: Associations with bullying and victimization in community-based after school programs. Journal of Community & Applied Social Psychology, 20(6), 480–496.

- Giner-Sorolla R (2019). From crisis of evidence to a “crisis” of relevance? Incentive-based answers for social psychology’s perennial relevance worries. European Review of Social Psychology, 30(1), 1–38.

- Greenberg S (1996, July). Understanding speech understanding: Towards a unified theory of speech perception. In Proceedings of the ESCA Tutorial and Advanced Research Workshop on the Auditory Basis of Speech Perception (pp. 1–8). Keele, England.

- Koopman C (2019). How we became our data: A genealogy of the informational person. University of Chicago Press.

- Latane B, & Darley JM (1968). Group inhibition of bystander intervention in emergencies. Journal of Personality and Social Psychology, 10(3), 215.

- Lewis M (2016). The undoing project: A friendship that changed the world. Penguin UK.

- Meehl PE (1967). Theory-testing in psychology and physics: A methodological paradox. Philosophy of Science, 34(2), 103–115.

- Meehl PE (1978). Theoretical risks and tabular asterisks: Sir Karl, Sir Ronald, and the slow progress of soft psychology. Journal of Consulting and Clinical Psychology, 46(4), 806–834.

- Meyer MN, Heck PR, Holtzman GS, Anderson SM, Cai W, Watts DJ, & Chabris CF (2019). Objecting to experiments that compare two unobjectionable policies or treatments. Proceedings of the National Academy of Sciences, 116(22), 10723–10728.

- Mikesell L, Bromley E, & Khodyakov D (2013). Ethical community-engaged research: A literature review. American Journal of Public Health, 103(12), e7–e14.

- Milgram S (1963). Behavioral study of obedience. The Journal of Abnormal and Social Psychology, 67(4), 371.

- Milgram S (1965). Some conditions of obedience and disobedience to authority. Human Relations, 18(1), 57–76.

- Minkler M, & Wallerstein N (2002). Community-Based Participatory Research for Health (1st edition). New York: John Wiley & Sons.

- National Institutes of Health (2016, January 28). Write Your Application. NIH: Grants & Funding. https://grants.nih.gov/grants/how-to-apply-application-guide/format-and-write/write-your-application.htm

- National Science Foundation (2018a). Recent Trends in Federal Support for U.S. R&D. https://www.nsf.gov/statistics/2018/nsb20181/report/sections/research-and-development-u-s-trends-and-international-comparisons/recent-trends-in-federal-support-for-u-s-r-d

- National Science Foundation (2018b, January 1). Proposal Preparation and Submission Guidelines: NSF Proposal Processing and Review. https://www.nsf.gov/pubs/policydocs/pappg18_1/pappg_3.jsp#IIIA

- Rockey S (2011, March 8). Correlation between overall impact scores and criterion scores [Blog post]. Retrieved from https://nexus.od.nih.gov/all/2011/03/08/overall-impact-and-criterion-scores/.

- Rozin P (2001). Social psychology and science: Some lessons from Solomon Asch. Personality and Social Psychology Review, 5(1), 2–14.

- Sherif M, Harvey OJ, White BJ, Hood WR, & Sherif CW (1961). Intergroup conflict and cooperation: The Robbers Cave experiment. Norman: University of Oklahoma Book Exchange.

- Steele CM, & Aronson J (1995). Stereotype threat and the intellectual test performance of African Americans. Journal of Personality and Social Psychology, 69(5), 797.

- Stephens NM, Fryberg SA, Markus HR, Johnson CS, & Covarrubias R (2012). Unseen disadvantage: How American universities’ focus on independence undermines academic performance of first-generation college students. Journal of Personality and Social Psychology, 102(6), 1178–1197.

- Syvertsen AK, Wray-Lake L, Flanagan CA, Wayne Osgood D, & Briddell L (2011). Thirty-year trends in US adolescents’ civic engagement: A story of changing participation and educational differences. Journal of Research on Adolescence, 21(3), 586–594.

- Weiss CH, & Bucuvalas MJ (1980). Social science research and decision-making. Columbia University Press.

- Weiss JA, & Weiss CH (1996). Social scientists and decision makers look at the usefulness of mental health research. In Lorion RP, Iscoe I, DeLeon PH, & VandenBos GR (Eds.), Psychology and Public Policy: Balancing Public Service and Professional Need (pp. 165–181). Washington, DC, US: American Psychological Association.

- Werner K, Milyavskaya M, Foxen-Craft E, & Koestner R (2016). Some goals just feel easier: Self-concordance leads to goal progress through subjective ease, not effort. Personality and Individual Differences, 96, 237–242.

- Yarkoni T, & Westfall J (2017). Choosing prediction over explanation in psychology: Lessons from machine learning. Perspectives on Psychological Science, 12(6), 1100–1122.
