Abstract

Numerous studies find associations between social media use and beliefs in conspiracy theories and misinformation. While such findings are often interpreted as evidence that social media causally promotes conspiracy beliefs, we theorize that this relationship is conditional on other individual-level predispositions. Across two studies, we examine the relationship between beliefs in conspiracy theories and media use, finding that individuals who get their news from social media and use social media frequently express more beliefs in some types of conspiracy theories and misinformation. However, we also find that these relationships are conditional on conspiracy thinking––the predisposition to interpret salient events as products of conspiracies––such that social media use becomes more strongly associated with conspiracy beliefs as conspiracy thinking intensifies. This pattern, which we observe across many beliefs from two studies, clarifies the relationship between social media use and beliefs in dubious ideas.

Supplementary Information

The online version contains supplementary material available at 10.1007/s11109-021-09734-6.

Keywords: Media effects, Social media, Conspiracy theory, Misinformation, Selective exposure

Introduction

Social media is a key player in the dissemination of conspiracy theories and misinformation.1 Dubious ideas about electoral fraud, COVID-19 vaccine safety, and Satanic pedophiles controlling the government, for example, spread swiftly across social media platforms, often evading censors while feeding the algorithms that further promote them (Marwick & Lewis, 2017; Vosoughi et al., 2018). The adoption of such ideas can have tangible consequences for political discourse and behavior (Jolley et al., 2020) and has therefore prompted serious concern about the impact of social media on individuals’ beliefs in dangerous falsehoods (Lazer et al., 2018). Indeed, approximately 75% of Americans believe that social media and the internet, more generally, are the primary mechanism by which conspiracy theories are spread.2

While a robust literature demonstrates an association between social media use and beliefs in conspiracy theories and misinformation (e.g., Jamieson & Albarracín, 2020; Stempel et al., 2007), parallel literatures on public opinion formation and media effects demonstrate that individual-level motivations to seek out and accept certain perspectives are critical to understanding these associations (e.g., Iyengar & Hahn, 2009). Just as partisan identities and ideological principles condition the acceptance of political information, conspiracy thinking, the predisposition to interpret salient events and circumstances as the product of malevolent conspiracies (e.g., Cassese et al., 2020; Enders et al., 2020b; Klofstad et al., 2019; Miller, 2020a), has been found to condition the acceptance of conspiratorial information (Uscinski et al., 2016).

In this paper, we extend recent work on the association between social media use and beliefs in conspiracy theories and misinformation by investigating the moderating role of conspiracy thinking. We hypothesize that while social media is likely to spread conspiracy theories and some misinformation, such information will be most likely to translate into beliefs for those already attracted to conspiratorial explanations for salient events. For those who exhibit the lowest levels of conspiracy thinking, we should observe only a very weak relationship, perhaps even an absence of one altogether, between social media use and beliefs in conspiracy theories and related misinformation––either because such individuals are not intentionally seeking out related ideas, or because they reject such ideas when incidentally exposed to them online. While the confluence of several disparate research strands provides suggestive evidence for these patterns, we are aware of no formal investigations in this vein, despite theoretical support for our expectations in literatures on public opinion formation and media effects.

To test our proposition, we first use a national survey (n = 2023) from March 2020 to examine the relationship between the form and frequency of social media use and beliefs in 15 conspiracy theories, as well as support for QAnon. We find that social media use and beliefs in conspiracy theories are, indeed, correlated; however, this relationship is conditional on individuals’ levels of conspiracy thinking, as hypothesized. Among those least prone to conspiracy thinking, we observe no relationship between social media use and the number of conspiracy beliefs one holds. We extend our analysis via a second study that employs a national survey (n = 1040) fielded in June 2020 that focused on the relationships between respondents’ social media use and beliefs in 7 COVID-19 conspiracy theories, 4 pieces of COVID-19 health misinformation, and 5 non-COVID-19 conspiracy theories. Even though we should not, and do not, treat misinformation and conspiracy theories as synonymous, previous work demonstrates that COVID-19 health misinformation shares a dimension of opinion with COVID-19 conspiracy theories (Miller, 2020a)––one frequently entails the other. We find that, even during a pandemic when people spent considerable time on social media, the relationship between social media use and dubious beliefs is conditional on conspiracy thinking.

The conditional relationship that we uncover suggests that the impact of social media on beliefs in conspiracy theories and misinformation is likely negligible unless individuals are attracted to, or otherwise predisposed to accepting, such ideas. These findings comport with a recent, growing body of literature demonstrating that the relationship between social media use and dubious beliefs is more nuanced and limited than previously assumed (e.g., Chen et al., 2021; Guess et al., 2020). Our findings add further nuance to our understanding of the adoption of beliefs in dubious ideas, and can contribute to the development of more targeted, efficacious approaches to limiting the pernicious impact of online conspiracy theories and misinformation. They also have the potential to inform the development of public policy regarding free speech and social media regulation, especially in a political context in which the U.S. Congress has increased the frequency and scope of hearings regarding the responsibilities of social media companies and Section 230 of the Communications Decency Act.

The Conditional Effects of Media Messages

The central media narrative regarding the impact of social media on beliefs in conspiracy theories and misinformation is structured something like, ‘social media turns people into conspiracy theorists and misinformation mongers.’ While some nuance is occasionally present, the narrative nonetheless tends to assume a powerful, direct effect whereby information exposure causally translates into belief and action (e.g., Collins, 2020). This narrative rests on two assumptions: first, that beliefs in conspiracy theories and misinformation are increasing, and second, that social media use is a causal factor in this increase.

While not the central focus of our current investigation, the first assumption is not supported by available evidence. National surveys in the U.S. do not show that the proportion of Americans endorsing specific conspiracy theories has increased over time. For example, belief in Kennedy assassination conspiracy theories has decreased 30 points from its high of nearly 80% in the 1970s (Swift, 2013). Likewise, beliefs in COVID-19 and other conspiracy theories remained stable during the pandemic (Enders et al., 2020b; Romer & Jamieson, 2020), despite a deluge of online misinformation.3 Even support for QAnon in the U.S. has not increased (Schaffner, 2020), despite numerous headlines suggesting it has gone “mainstream.”4 In short, there currently exists no compelling evidence for an average increase in conspiracy beliefs in the internet era.

The second assumption––that social media use causally promotes conspiracy beliefs––does find some empirical support and is often buttressed by observed correlations between social media use and beliefs. Conspiracy theories and misinformation are, indeed, widely available on social media (Allcott & Gentzkow, 2017; Dredze et al., 2016; Wang et al., 2019). Moreover, many studies find that social media use is positively associated with conspiratorial and misinformed beliefs (Allington et al., 2020; Bridgman et al., 2020; Jamieson & Albarracín, 2020; Stempel et al., 2007).

We have no reason to question the accuracy of these reported correlational relationships, but their substantive interpretation remains an open question. Robust literatures on media effects and opinion formation demonstrate that individual-level predispositions––partisan attachments, ideological principles, personality traits, and group identities, to name a few––bear more directly on specific beliefs than new information on its own (Finkel, 1993; Klapper, 1960; McGuire, 1986). Although these primary ingredients of opinion are often supplemented by elite communications (Zaller, 1992) and the information environment (Bartels, 1993), individual-level predispositions are necessary and, sometimes, sufficient for opinion formation (Van Bavel & Pereira, 2018). In short, numerous lines of research (e.g., Stroud et al., 2017) suggest that the connection between exposure to information online and subsequent belief adoption is more complicated than sometimes argued.

Predisposition-based models of opinion formation hold that individual-level traits constitute the “primary ingredients” of mass opinion (Kinder, 1998). Specific beliefs, as this perspective goes, are the products of individual-level motivations, not just information exposure. Processes guided by these predispositions, such as selective exposure (Garrett, 2009; Knobloch-Westerwick & Johnson, 2014; Stroud, 2010), motivated reasoning (Kunda, 1990; Lodge & Taber, 2013), and biased assimilation (Corner et al., 2012) disrupt media influence by guiding individuals to both seek out and accept or ignore and reject information based on its (in)congruence with their previously-established worldviews (e.g., Coe et al., 2008; Druckman & Bolsen, 2011; Kunda, 1990). Considering related social scientific theories of opinion formation, the relationship between social media use and beliefs in specific conspiracy theories and misinformation is likely contingent on psychological predispositions that would lead individuals to seek out and accept such content. Thus, we focus on one predisposition that is critical to the adoption of conspiracy theories: conspiracy thinking.

Conspiracy thinking is a latent predisposition to interpret events and circumstances as the product of malevolent conspiracies, a tendency to impose a conspiratorial narrative on salient affairs (e.g., Enders et al., 2020a; Miller, 2020b). Researchers across disciplines have theorized that such a predisposition underwrites beliefs in specific conspiracy theories and misinformation (Klofstad et al., 2019; Brotherton et al., 2013; Imhoff & Bruder, 2014; Atari et al., 2019; Lewandowsky et al., 2013a). Indeed, numerous observational studies find correlations between conspiracy thinking and the endorsement of specific conspiracy theories and misinformation (Cassese et al., 2020; Klofstad et al., 2019; Miller, 2020b). Particularly germane to the question at hand, several studies find that people who tend to employ conspiratorial explanations for salient events tend also to actively seek out such content online (Bessi et al., 2015; Del Vicario et al., 2016).

We also have good reason to believe that conspiracy thinking might condition the relationship between social media use and specific conspiracy beliefs. For example, Uscinski et al. (2016) find, using an experimental design with a conspiratorial information treatment, that only individuals exhibiting relatively high levels of conspiracy thinking are influenced by conspiratorial information. Similarly, Mancosu and Vegetti (2020), who manipulate online news stories in their study, find that those exhibiting high levels of conspiracy thinking are more likely than those exhibiting low levels to believe conspiracy theories promoted in online news. Thus, online conspiracy theories and misinformation may have little influence over individuals who are not predisposed to seek out or be attracted to such ideas. This is analogous to how liberal-conservative ideology and partisanship are thought to affect individuals’ choices of information sources and how they interpret that information (e.g., Arceneaux & Johnson, 2013; Iyengar & Hahn, 2009). Regardless, none of these previous findings demonstrate that the connection between social media use and conspiracy beliefs is moderated by conspiracy thinking. We believe it is critically important to theorize about and demonstrate the moderating effect of conspiracy thinking, especially given the tangible societal and public policy consequences of our understanding of the relationship between social media use and conspiracy beliefs.

Hypotheses

Combining the predictions of theories regarding media exposure and opinion formation, we argue that the strength of observed correlations between social media users’ opinions and conspiracy beliefs should be moderated by conspiracy thinking. Our argument builds upon previously identified associations between social media use and beliefs in conspiracy theories and misinformation (e.g., Jamieson & Albarracín, 2020; Stempel et al., 2007) in hopes of clarifying the substantive interpretation of those empirical relationships. Our first hypothesis is, therefore, designed to be confirmatory of past work:

H1: Those who use social media as their primary source for news and spend more time on social media will, all else equal, believe in more conspiracy theories and some misinformation than those who obtain news elsewhere and spend less time on social media platforms.

How these patterns should be interpreted remains unclear. Theories of minimal effects (Finkel, 1993), selective exposure (Stroud, 2010), and mass opinion formation (Zaller, 1992) lead us to suspect that a conditional relationship exists. Specifically, we expect to observe that the strength of the association between social media use and conspiracy beliefs is conditional on conspiracy thinking (Uscinski et al., 2016). Among those who exhibit low levels of conspiracy thinking, social media use will not be associated with beliefs in conspiracy theories and misinformation:

H2: The impact of social media use is conditional on conspiracy thinking, such that the association between social media use and conspiracy beliefs is weaker for those exhibiting low levels of conspiracy thinking compared to those exhibiting higher levels.

Study 1: Social Media and Conspiracy Beliefs

In Study 1, we investigate the relationship between the types and frequency of social media use and beliefs in a host of conspiracy theories.5 Data was collected from March 17–19, 2020 by Qualtrics. In total, 2023 responses were collected from individuals who matched 2010 U.S. Census records on sex, age, race, and income.6 See the Appendix for details about the sociodemographic composition of the sample.

Measures

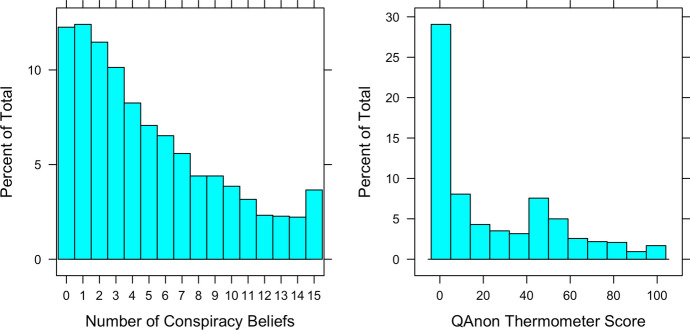

Respondents were presented with 15 conspiracy theories spanning various domains (e.g., science, government, individuals, health). Question wording and levels of support appear in Table 1. Respondents who “agreed” or “strongly agreed” with a conspiracy theory were counted as exhibiting a “conspiracy belief.” We use these beliefs to generate a count of the number of beliefs that each respondent holds––this is our dependent variable. The distribution (depicted in the left panel of Fig. 1) is skewed such that many more individuals hold few beliefs than hold many. The mean is 5 beliefs, and the median 4. Approximately 12% of respondents hold 1 conspiracy belief, with 11% holding 2 beliefs and 10% holding 3. About half of our respondents hold more than 4 conspiracy beliefs.
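The construction of this count can be sketched in a few lines. This is a minimal illustration rather than the code used for the study: it assumes each item is stored on a 1–5 scale with 4 = “agree” and 5 = “strongly agree,” and the function name and example responses are hypothetical.

```python
from typing import Sequence

# Assumption: items are coded 1-5, with 4 = "agree" and 5 = "strongly agree".
AGREE_THRESHOLD = 4


def count_conspiracy_beliefs(responses: Sequence[int]) -> int:
    """Count the items on which a respondent agrees or strongly agrees."""
    return sum(1 for r in responses if r >= AGREE_THRESHOLD)


# Hypothetical respondent answering the 15 items in Table 1.
example = [5, 4, 3, 2, 1, 4, 4, 2, 3, 1, 1, 5, 2, 2, 1]
print(count_conspiracy_beliefs(example))
```

Dichotomizing each item before summing means the count ranges from 0 to 15 and ignores the distinction between “agree” and “strongly agree.”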

Table 1.

Conspiracy belief questions and the percentage of respondents who “agree” or “strongly agree” with them

| Conspiracy belief question (label) | % Agree |
|---|---|
| 1.) The one percent (1%) of the richest people in the U.S. control the government and the economy for their own benefit | 54 |
| 2.) Jeffrey Epstein, the billionaire accused of running an elite sex trafficking ring, was murdered to cover-up the activities of his criminal network | 50 |
| 3.) The dangers of genetically-modified foods are being hidden from the public | 45 |
| 4.) President Kennedy was killed by a conspiracy rather than by a lone gunman | 44 |
| 5.) There is a “deep state” embedded in the government that operates in secret and without oversight | 43 |
| 6.) Regardless of who is officially in charge of governments and other organizations, there is a single group of people who secretly control events and rule the world together | 35 |
| 7.) Humans have made contact with aliens and this fact has been deliberately hidden from the public | 33 |
| 8.) Coronavirus was purposely created and released by powerful people as part of a conspiracy | 31 |
| 9.) The dangers of vaccines are being hidden by the medical establishment | 30 |
| 10.) A powerful family, the Rothschilds, through their wealth, controls governments, wars, and many countries’ economies | 29 |
| 11.) Businesses and corporations are purposely allowing foreigners into the country to replace American workers and culture | 29 |
| 12.) The dangers of 5G cellphone technology are being covered up | 26 |
| 13.) The AIDS virus was created and spread around the world on purpose by a secret organization | 22 |
| 14.) School shootings, like those at Sandy Hook, CT and Parkland, FL are false flag attacks perpetrated by the government | 17 |
| 15.) The number of Jews killed by the Nazis during World War II has been exaggerated on purpose | 15 |

Fig. 1.

Distribution of (A) the number of conspiracy beliefs people hold (0–15) and (B) feelings toward the “QAnon movement” (0–100). Study 1

We also include a second dependent variable that specifically regards QAnon, a conspiracy theory about a “deep state” of political elites who traffic children that has frequently been touted as an example of how social media can encourage conspiracy beliefs (Roose, 2020). Respondents reported their feelings about the “QAnon movement” using a 101-point feeling thermometer. The thermometer ranges from 0, representing very negative feelings, to 100, which signifies very positive feelings. The distribution of responses, pictured in the right panel of Fig. 1, reveals an even sharper skew than the 15-belief scale. The mean score is 25, and the median is 12. Approximately 21% of respondents rated the QAnon movement greater than 50. Although the proportion of Americans possessing enough knowledge of QAnon to provide it a rating (70% in our sample) has fluctuated over time, the general structure of QAnon beliefs remained stable between 2018 and 2020 (Enders et al., forthcoming), suggesting that our analysis of this conspiracy belief is robust to dynamics regarding salience and mainstream political and media attention to QAnon.

We have three central independent variables. The first captures which medium serves as respondents’ “primary source for finding news.” Respondents were able to select only one of the following options: national TV (22%), local TV (22%), radio (3%), newspaper (4%), (non-social media) internet news websites (24%), and social media websites (21%). The second independent variable asks respondents “how often in a typical week” they “visit or use”––on a five-point scale ranging from “not at all” (1) to “every day” (5)––each of the following social media websites: Facebook (M = 3.95, SD = 1.52), Twitter (M = 2.57, SD = 1.69), Instagram (M = 3.15, SD = 1.76), Reddit (M = 2.00, SD = 1.44), YouTube (M = 3.99, SD = 1.29), and 4chan/8chan (M = 1.33, SD = 0.93).

Our final independent variable captures conspiracy thinking, the psychological predisposition to interpret major events as the product of conspiracies. We employ a four-item measure developed by Uscinski and Parent (2014) and validated by others (Miller, 2020b). Respondents react––using five-point scales ranging from “strongly disagree” (1) to “strongly agree” (5)––to four statements, such as “Much of our lives are being controlled by plots hatched in secret places.” The items are then averaged into an index (Range = 1–5, M = 3.17, SD = 1.00, α = 0.84). The correlation between this measure of conspiracy thinking and the count of conspiracy beliefs detailed above is 0.71 (p < 0.001); its correlation with the QAnon thermometer is 0.26 (p < 0.001).7
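For readers unfamiliar with this kind of index, the averaging step and the reliability coefficient (Cronbach's α) can be sketched as follows. This is an illustrative sketch only: the function names and the example responses are hypothetical, and the formula is the standard one for α, not code from the study.

```python
from statistics import mean, variance
from typing import List, Sequence


def scale_index(items: Sequence[float]) -> float:
    """Average a respondent's item scores into a single index value."""
    return mean(items)


def cronbach_alpha(rows: List[Sequence[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items matrix of scores."""
    k = len(rows[0])  # number of items in the scale
    item_variances = [variance([row[j] for row in rows]) for j in range(k)]
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical responses from five people to four 1-5 items.
responses = [
    [4, 5, 4, 4],
    [2, 1, 2, 3],
    [3, 3, 4, 3],
    [5, 4, 5, 5],
    [1, 2, 1, 2],
]
print([scale_index(r) for r in responses])
print(round(cronbach_alpha(responses), 2))
```

Values of α closer to 1 indicate that the items move together closely enough to be treated as measures of a single underlying predisposition.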

In each of the multivariate models presented below, we control for partisanship, ideological self-identification, interest in politics, educational attainment, age, household income, gender, and race and ethnicity. Details about how these variables are coded appear in the Appendix.

Results

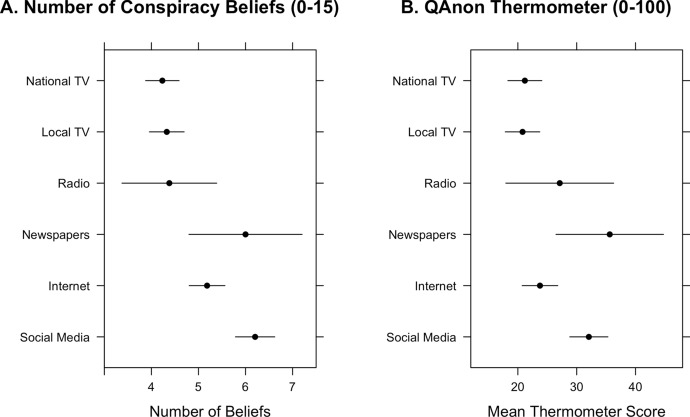

We begin by examining the average number of conspiracy beliefs individuals hold (panel A) and average feelings toward the QAnon movement (panel B) by preferred news medium in Fig. 2. Those who use social media as their primary source of news hold significantly more conspiracy beliefs, on average, than those who use any other medium (p < 0.01 in each case, two-tailed test), with one exception. While there is not a significant difference in conspiracy beliefs between social media users and newspaper consumers (p = 0.717), the error bars are considerably larger for newspaper consumers because of the low proportion of such consumers (4%). We observe no significant difference in mean QAnon feelings between social media users and either newspaper (p = 0.417) or radio (p = 0.305) consumers, presumably for the same reason. This analysis provides evidence that social media use is connected to conspiracy belief, though we reiterate that these patterns shed no light on the direction of the causal relationship in question.

Fig. 2.

(A) Number of conspiracy beliefs people hold (0–15) by news medium. (B) Mean of “QAnon movement” thermometer (0–100) by news medium. Horizontal bars reflect 95% confidence intervals. Study 1

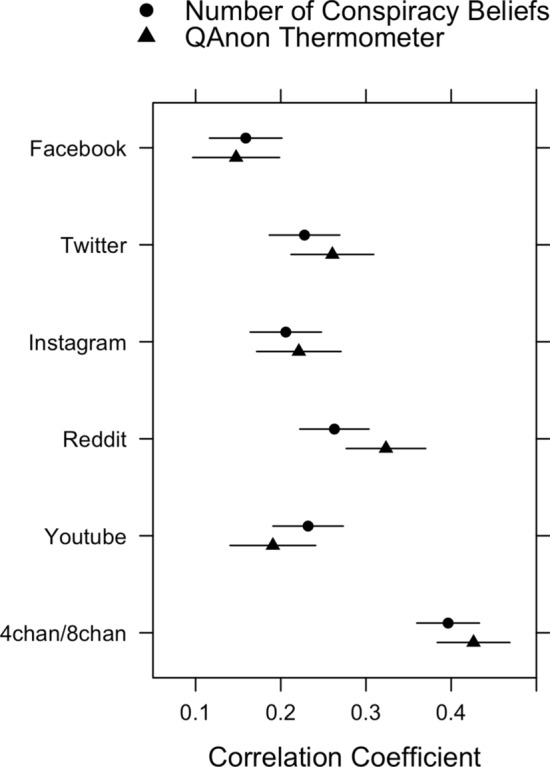

Next, we conduct a more granular examination of the relationship between conspiracy beliefs and social media, specifically. Rather than consider mere usage, we investigate the relationship between self-reported frequency of usage of several social media platforms and both conspiracy beliefs and feelings toward QAnon in Fig. 3. We observe statistically significant correlations in every instance, supporting the notion that frequency of social media usage––in general, across platforms––is positively related to conspiracy beliefs. However, we also find that the strength of this relationship varies across platforms. Despite the frequent blame the platform endures, Facebook usage exhibits the weakest correlation with conspiracy beliefs. This makes good sense given the prevalence of Facebook usage across all political, psychological, and sociodemographic factors. Twitter, YouTube, and Reddit usage are each significantly more strongly correlated with the number of conspiracy beliefs one holds than Facebook usage (p < 0.05 in each case), and 4chan/8chan (now 8kun) usage stands considerably above the pack with a correlation more than double that associated with Facebook usage (p < 0.001). This pattern is essentially the same for feelings toward QAnon, except we do not observe a statistically significant difference in the correlations with Facebook and YouTube use (p = 0.237).

Fig. 3.

Correlation between frequency of social media use and number of beliefs in conspiracy theories (0–15) and “QAnon movement” thermometer (0–100) by platform. Bars reflect 95% confidence intervals. Study 1

Thus far, our analyses suggest that those who primarily look to social media for news hold more conspiracy beliefs than those who consume traditional news media. Moreover, the more frequently one engages social media platforms––for news or otherwise––the more conspiracy beliefs they tend to hold. In our final analysis, we model these relationships, regressing conspiracy beliefs on social media consumption for news, frequency of social media consumption, conspiracy thinking, and a host of controls. Rather than incorporate each platform into the model, we generate a summated index of social media usage across platforms (Range = 1–5, M = 2.83, SD = 0.92, α = 0.70). Even though we lose some information about the variability of relationships between platforms and conspiracy beliefs, this approach is useful for reducing measurement error in self-reports (Ansolabehere et al., 2008) and not giving too much weight to any one platform.8

We consider the relationships outlined above in three steps. In the first model, we include only the dummy variable denoting whether one uses social media as their primary source of news and conspiracy thinking (Model 1). In the second model, we add the frequency of social media usage index (Model 2). We build the model in this way with an expectation that frequency of usage may wash away the effect of merely choosing social media over other platforms, even though the latter effect is important to establish in a multivariate framework with controls (for example, social media news consumption could be confounded by age, whereby older people are systematically less likely to consume news on social media than younger people). Finally, we add interactions between both types of social media use and conspiracy thinking in the third model (Model 3). Recall that we hypothesize that the relationship between social media use and specific conspiracy beliefs is contingent on conspiracy thinking.
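In equation form, the interactive specification (Model 3) can be written roughly as below. The notation is introduced here for illustration only and does not appear in the original analysis: $Y_i$ is the number of conspiracy beliefs, $N_i$ the social-media-for-news dummy, $F_i$ the frequency-of-use index, $C_i$ conspiracy thinking, and $\mathbf{X}_i$ the vector of controls.

$$
Y_i = \beta_0 + \beta_1 N_i + \beta_2 F_i + \beta_3 C_i + \beta_4 (F_i \times C_i) + \beta_5 (N_i \times C_i) + \mathbf{X}_i'\boldsymbol{\gamma} + \varepsilon_i
$$

Model 1 corresponds to the restriction $\beta_2 = \beta_4 = \beta_5 = 0$, and Model 2 to $\beta_4 = \beta_5 = 0$. Under this specification, the association between frequency of use and conspiracy beliefs at a given level of conspiracy thinking is $\partial Y_i / \partial F_i = \beta_2 + \beta_4 C_i$, so the conditional relationship we hypothesize implies $\beta_4 > 0$.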

We use OLS to estimate each of the models presented in Table 2. Because the distributions of the number of conspiracy beliefs and QAnon thermometer variables are skewed, we might also consider estimating these models using an estimator designed for count variables (e.g., Poisson, negative binomial) or censored variables (e.g., tobit). In all cases throughout this manuscript the dependent variables are over-dispersed (i.e., the conditional variance is statistically greater than the mean); thus, standard Poisson models are not appropriate. Moreover, three other concerns persist. First, as the QAnon variable is not a count, some assumptions of count models do not hold. Second, each of our count dependent variables is right-censored: no individual can express more than 15 conspiracy beliefs, in the case of Study 1 for example, even though they may believe in many more such ideas. Finally, count models assume that the probability of adding an additional number to the count is (roughly) equal across the distribution of the variable. Unfortunately, we have no reason to expect this is true. For example, someone who believes that coronavirus is being used to install tracking devices in our bodies is very likely to also believe that the coronavirus was released as part of a conspiracy by elites (both of these questions are employed in Study 2); in other words, conspiracy beliefs are dependent in many cases. Since OLS is remarkably robust to violations of assumptions, and because this model is most familiar to readers, we present OLS results below. We also estimated all models throughout the paper using a tobit regression model for censored dependent variables. Substantive results are identical across estimation strategies. Tobit replications of all models appear in the Appendix.9
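A rough first check for over-dispersion is to compare the variance of a count variable with its mean, since a Poisson variable has the two equal. The sketch below is a simplified, unconditional version of that idea with hypothetical data and a hypothetical function name; the statement above concerns the conditional variance, which would be assessed from a fitted count model rather than from raw counts.

```python
from statistics import mean, variance
from typing import Sequence


def dispersion_ratio(counts: Sequence[int]) -> float:
    """Sample variance divided by the mean; values well above 1 suggest over-dispersion."""
    return variance(counts) / mean(counts)


# Hypothetical counts of conspiracy beliefs (0-15) for ten respondents.
beliefs = [0, 0, 1, 1, 2, 3, 5, 8, 12, 15]
print(round(dispersion_ratio(beliefs), 2))
```

A ratio near 1 is what a Poisson process would produce; a ratio far above 1, as in skewed counts like these, is one reason to look beyond a standard Poisson model.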

Table 2.

OLS regressions of number of conspiracy beliefs (0–15) and feelings toward “QAnon movement” (0–100) on social media news and use, with controls. Study 1

| Independent variable (range) | Number of conspiracy beliefs: Model 1 | Number of conspiracy beliefs: Model 2 | Number of conspiracy beliefs: Model 3 | QAnon FT: Model 4 |
|---|---|---|---|---|
| Social media for news (0, 1) | 0.596*** (0.169) | 0.349* (0.167) | 0.503 (0.591) | 11.172 (6.335) |
| Frequency of social media use (1–5) | | 0.878*** (0.086) | −0.753** (0.238) | −4.214 (2.498) |
| Conspiracy thinking (1–5) | 3.028*** (0.069) | 2.909*** (0.068) | 1.493*** (0.203) | −6.431*** (2.184) |
| SM frequency × conspiracy thinking | | | 0.492*** (0.067) | 3.997*** (0.693) |
| SM for news × conspiracy thinking | | | −0.061 (0.168) | −2.426 (1.759) |
| Partisanship (1–5) | 0.122* (0.057) | 0.146** (0.056) | 0.137* (0.056) | 1.012 (0.581) |
| Ideology (1–7) | −0.011 (0.045) | 0.021 (0.044) | 0.028 (0.043) | −0.381 (0.450) |
| Interest in politics (0–4) | 0.384*** (0.070) | 0.269*** (0.069) | 0.260*** (0.069) | 0.294 (0.763) |
| Education (1–6) | 0.029 (0.049) | −0.058 (0.048) | −0.064 (0.048) | 0.880 (0.520) |
| Age (18–90) | −0.017*** (0.005) | 0.003 (0.005) | 0.000 (0.005) | −0.004 (0.054) |
| Household income (1–7) | 0.136** (0.042) | 0.081 (0.042) | 0.052 (0.041) | 0.617 (0.444) |
| Female (0, 1) | −0.525*** (0.137) | −0.372** (0.134) | −0.327* (0.132) | −1.813 (1.420) |
| Black (0, 1) | 0.141 (0.197) | −0.007 (0.192) | −0.021 (0.190) | −1.048 (1.977) |
| Hispanic (0, 1) | 0.157 (0.183) | 0.111 (0.179) | 0.151 (0.177) | −2.264 (1.842) |
| Constant | −5.846*** (0.440) | −8.122*** (0.483) | −3.292*** (0.811) | 13.020 (8.830) |
| R² | 0.534 | 0.557 | 0.569 | 0.213 |
| n | 2022 | 2022 | 2022 | 1418 |

***p < 0.001

**p < 0.01

*p < 0.05

OLS coefficients with standard errors in parentheses

Across models we observe a statistically significant effect of conspiracy thinking, and we always observe some significant effect of social media use. While social media use as a primary source of news is significant in the first and second model, it is not in the third model including interactions between (frequency of) use and conspiracy thinking. In Model 3, we observe a statistically significant interaction between conspiracy thinking and frequency of social media use, though we do not observe a significant interaction between conspiracy thinking and use of social media––rather than other media––for news.

Nearly all the aforementioned patterns hold for identical models of feelings toward the QAnon movement,10 although only the final interactive model (4) is depicted in Table 2 (see the Appendix for the equivalent of Models 1 and 2). Here, too, the interaction between frequency of social media use and conspiracy thinking is positive and statistically significant, while the interaction involving social media as a primary source of news is not.

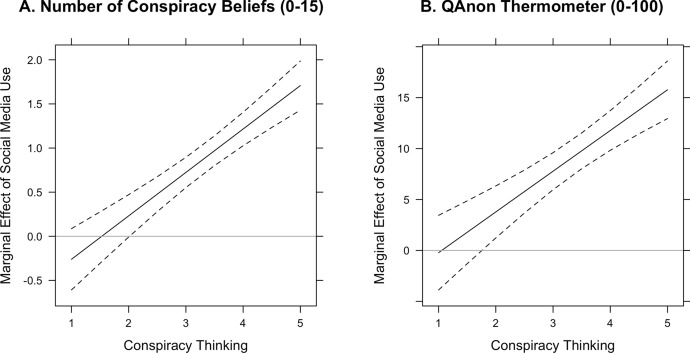

To facilitate interpretation of the interaction effects in Models 3 and 4, in Fig. 4 we plot the marginal effect of the frequency of social media use on conspiracy beliefs and feelings toward the QAnon movement across levels of conspiracy thinking.11 At the lowest levels of conspiracy thinking we observe no relationship between frequency of social media use and either the number of conspiracy beliefs one holds or feelings about QAnon. As the level of conspiracy thinking increases, however, this relationship increases in strength.

Fig. 4.

Marginal effect of social media use on the number of conspiracy beliefs one holds (0–15) and feelings about the QAnon movement (0–100) by level of conspiracy thinking. Dashed lines represent 95% confidence intervals. Study 1
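The point estimates behind these marginal effects follow directly from the interactive models: the slope on frequency of social media use equals its main coefficient plus the interaction coefficient multiplied by the level of conspiracy thinking. The sketch below uses the coefficients reported in Table 2; the function and variable names are hypothetical, and only point estimates are computed, because the confidence intervals shown in Fig. 4 also depend on the coefficient covariances, which are not reported in the table.

```python
# Point estimates copied from Table 2 (Study 1).
COEFS = {
    "beliefs (Model 3)": {"freq": -0.753, "freq_x_ct": 0.492},
    "QAnon FT (Model 4)": {"freq": -4.214, "freq_x_ct": 3.997},
}


def marginal_effect(freq: float, freq_x_ct: float, ct: float) -> float:
    """Slope on frequency of social media use at conspiracy-thinking level ct."""
    return freq + freq_x_ct * ct


for label, b in COEFS.items():
    for ct in (1, 3, 5):  # lowest, middle, and highest scale values
        effect = marginal_effect(b["freq"], b["freq_x_ct"], ct)
        print(label, ct, round(effect, 3))
```

For Model 3, the implied slope rises from about −0.26 at the lowest level of conspiracy thinking to about 1.71 at the highest; for Model 4, it rises from about −0.22 to about 15.77.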

This pattern can be interpreted in different ways. First, it seems reasonable to conclude that social media use alone does not cause one to believe in conspiracy theories––rather, some susceptibility to conspiratorial sentiments is required for this relationship to take hold. Second, this pattern may also be suggestive of selective exposure: the conditional marginal effect may indicate that people who exhibit higher levels of conspiracy thinking are more likely to use social media to seek out the conspiratorial ideas that reinforce their belief system. Of course, both possibilities are likely in operation. Importantly, however, neither of these possible explanations leaves open the additional possibility for a widespread increase in conspiracy beliefs due to systematic, albeit incidental, exposure to conspiratorial ideas on social media alone.

Study 2: Beliefs in COVID-19 Conspiracy Theories and Misinformation

In Study 2, we extend our findings involving a host of conspiracy beliefs to beliefs in both conspiracy theories and health misinformation regarding COVID-19. These analyses provide supportive evidence for the robustness of our initial findings and demonstrate that the patterns we uncovered in Study 1 are applicable within certain domains of belief and across conspiracy theories and misinformation. Even though misinformation should not be treated as synonymous with conspiracy theories, we do have good reason to expect that the relationship between belief in COVID-19 misinformation, in particular, and social media use is moderated by conspiracy thinking. Indeed, previous work finds that COVID-19 misinformation and conspiracy theories occupy the same dimension of opinion (Miller, 2020a). Similarly, work on the psychological antecedents of climate change beliefs finds that such beliefs––whether misinformed, conspiratorial, or otherwise––can be partially explained by conspiracy thinking (Lewandowsky et al., 2013a, b; Uscinski & Olivella, 2017). In other words, there are some topical domains for which misinformation tends to go hand-in-hand with conspiracy theories; COVID-19 seems to be such a domain.

Data for this study was collected between June 4–17, 2020 by Qualtrics. In total, 1040 responses were collected from individuals who matched 2010 U.S. Census records on sex, age, race, and income; see the Appendix for a comparison of sample characteristics to U.S. Census estimates.

Measures

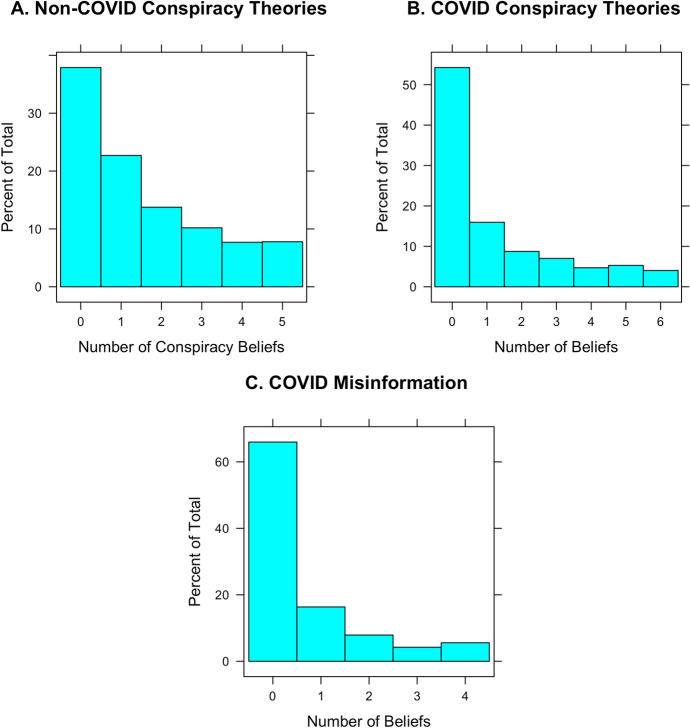

Our dependent variables are similar to those employed in Study 1, except that, in addition to a count of many different conspiracy beliefs, we also employ counts of beliefs in COVID-19 conspiracy theories and health misinformation, specifically.12 Question wording and levels of support for such ideas appear in Table 3; the distribution of each of the count variables appears in Fig. 5. We employ 5 non-COVID-19 conspiracy beliefs (Mean = 1.50, Median = 0), 6 COVID-19 conspiracy beliefs (Mean = 1.24, Median = 0), and 4 beliefs in COVID-19 misinformation (Mean = 0.67, Median = 0). As Fig. 5 shows, people appear more likely to believe non-COVID-19 conspiracy theories than those associated with the virus. Indeed, more than 50% of respondents reported no beliefs in COVID-19 conspiracy theories or misinformation. That said, at least 34% of respondents reported at least one belief in COVID-19 misinformation, which generally involves harmful self-medication practices such as ingesting disinfectant or using hydroxychloroquine.

Table 3.

Questions about beliefs in conspiracy theories and misinformation and the percentage of the mass public that either “agrees” or “strongly agrees.”

| Conspiracy/Misinformation belief question | % Agree |
|---|---|
| COVID-19 conspiracy beliefs | |
| 1.) The number of deaths related to the coronavirus has been exaggerated | 29 |
| 2.) Coronavirus was purposely created and released by powerful people as part of a conspiracy | 27 |
| 3.) The coronavirus is being used to force a dangerous and unnecessary vaccine on Americans | 25 |
| 4.) The coronavirus is being used to install tracking devices inside our bodies | 18 |
| 5.) Bill Gates is behind the coronavirus pandemic | 13 |
| 6.) 5G cell phone technology is responsible for the spread of the coronavirus | 11 |
| COVID-19 health misinformation | |
| 1.) Ultra-violet (UV) light can prevent or cure COVID-19 | 19 |
| 2.) Hydroxychloroquine can prevent or cure COVID-19 | 18 |
| 3.) COVID-19 can’t be transmitted in areas with hot and humid climates | 18 |
| 4.) Putting disinfectant into your body can prevent or cure COVID-19 | 12 |
| General conspiracy beliefs | |
| 1.) There is a “deep state” embedded in the government that operates in secret and without oversight | 45 |
| 2.) The dangers of genetically-modified foods are being hidden from the public | 40 |
| 3.) The dangers of vaccines are being hidden by the medical establishment | 30 |
| 4.) The AIDS virus was created and spread around the world on purpose by a secret organization | 19 |
| 5.) School shootings, like those at Sandy Hook, CT and Parkland, FL are false flag attacks perpetrated by the government | 16 |

Fig. 5.

Distribution of the number of beliefs in non-COVID conspiracy theories (0–5), COVID conspiracy theories (0–6), and COVID misinformation (0–4) that people hold. Study 2

Our independent variables are identical to those employed in Study 1. In this dataset, conspiracy thinking (Range = 1–5, M = 3.18, SD = 0.97, α = 0.84) is correlated 0.61 with non-COVID-19 conspiracy beliefs, 0.53 with COVID-19 conspiracy beliefs, and 0.38 with beliefs in COVID-19 misinformation. In each of the multivariate models presented, we control for partisanship, ideological self-identification, interest in politics, educational attainment, age, household income, gender, and race and ethnicity. Details about control variable coding appear in the Appendix.
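
For readers unfamiliar with the reliability coefficient reported above, Cronbach's α for a k-item scale is k/(k − 1) × (1 − Σ item variances / variance of the summed scale). A minimal sketch follows; the function name and the three-item example data are hypothetical and are not drawn from the survey.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    items: list of per-item response lists, one list per item,
    all of equal length (one entry per respondent).
    """
    k = len(items)
    # Summed scale score for each respondent.
    totals = [sum(vals) for vals in zip(*items)]
    item_var_sum = sum(variance(col) for col in items)
    return (k / (k - 1)) * (1 - item_var_sum / variance(totals))


# Hypothetical responses from five respondents to three items.
item_a = [1, 2, 3, 4, 5]
item_b = [2, 1, 4, 3, 5]
item_c = [1, 3, 2, 5, 4]
print(f"alpha = {cronbach_alpha([item_a, item_b, item_c]):.2f}")
```

Values closer to 1 indicate that the items move together and can reasonably be combined into a single scale.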

Results

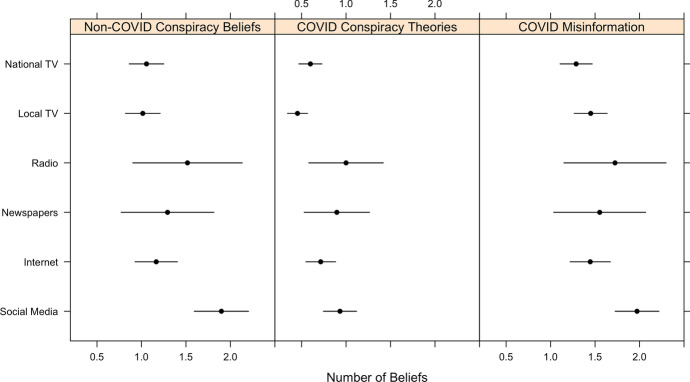

Our empirical tests in Study 2 follow the same pattern as those in Study 1. First, we examine the average number of beliefs in conspiracy theories and misinformation across primary sources of news, by dependent variable, in Fig. 6. Results are very similar to those reported in Study 1. For conspiracy beliefs both related and unrelated to COVID-19, we observe a greater mean for those who rely on social media than for those who rely on any other medium. This difference is significant (p < 0.01, two-tailed) in all cases except for newspaper (p = 0.112 for non-COVID, p = 0.051 for COVID beliefs) and radio (p = 0.453 for non-COVID, p = 0.343 for COVID beliefs) news consumers, for whom there is a high degree of uncertainty owing to the small proportion of respondents claiming to use those media as their primary sources of news (6% and 3%, respectively). When it comes to COVID-19 misinformation, we observe a slightly greater mean for radio listeners than for social media users, though this difference is nonsignificant (p = 0.785).

Fig. 6.

Number of beliefs in non-COVID conspiracy theories (0–5), COVID conspiracy theories (0–6), and COVID misinformation (0–4) that people hold by news medium. Bars reflect 95% confidence intervals. Study 2

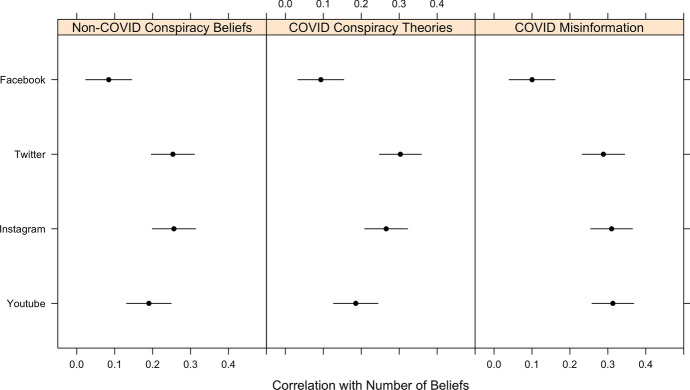

In Fig. 7, we plot the correlation between beliefs in conspiracy theories and misinformation and the frequency of usage of four different social media platforms. Across all types of beliefs and platforms we observe statistically significant, positive associations between the number of beliefs and social media usage. Moreover, as in Study 1, this relationship is weakest for Facebook users, who compose 60–80% of the adult U.S. population by most estimates. We observe the greatest correlations for Twitter and Instagram in this study, although YouTube is either close behind (COVID-19 misinformation) or statistically indistinguishable (both types of conspiracy theories; p > 0.11 in each case) from these two.

Fig. 7.

Correlation between frequency of social media use and number of beliefs in non-COVID conspiracy theories (0–5), COVID conspiracy theories (0–6), and COVID misinformation (0–4), by platform. Bars reflect 95% confidence intervals. Study 2
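
One common way to obtain a correlation and a 95% confidence interval of the kind shown in Fig. 7 is the Fisher z-transformation, sketched below. The text does not state how the intervals were computed, so this method is an assumption; the function names and example data are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def fisher_ci(r, n, crit=1.96):
    """Approximate confidence interval for r via the Fisher z-transformation.

    Requires |r| < 1 and n > 3.
    """
    z = math.atanh(r)
    se = 1 / math.sqrt(n - 3)
    return math.tanh(z - crit * se), math.tanh(z + crit * se)


# Hypothetical data: platform use frequency (1-5) and number of beliefs held.
use = [1, 2, 2, 3, 4, 4, 5, 5]
beliefs = [0, 1, 0, 2, 1, 3, 2, 4]
r = pearson_r(use, beliefs)
low, high = fisher_ci(r, len(use))
print(f"r = {r:.2f}, 95% CI = [{low:.2f}, {high:.2f}]")
```

Because the interval width depends on the sample size, correlations for rarely used platforms will tend to carry wider intervals.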

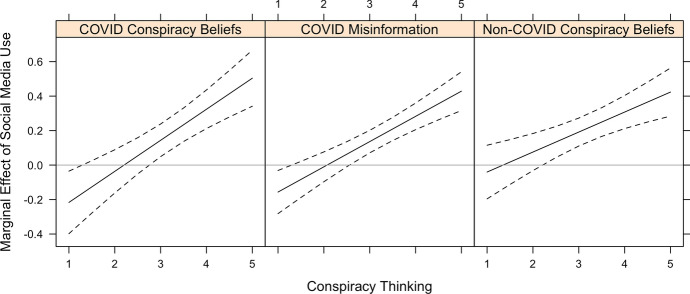

For each of the three dependent variables, we specified the same three models discussed in Study 1.13 We relegate full model estimates to the Appendix, though we note here that, once again, conspiracy thinking and frequency of social media use are statistically significant and positive across all models for each dependent variable. In Fig. 8, we plot the marginal effect of the frequency of social media use on the number of beliefs in conspiracy theories and misinformation, conditional on the level of conspiracy thinking. In each case, we observe the same pattern: among those exhibiting very low levels of conspiracy thinking, the relationship between social media use and conspiratorial and misinformed beliefs is very weak; as conspiracy thinking intensifies, so too does the relationship in question. Those demonstrating the strongest proclivity toward conspiracy thinking exhibit the strongest relationship between frequency of social media use and number of beliefs in dubious ideas.

Fig. 8.

Marginal effect of social media use on the number of beliefs in non-COVID conspiracy theories (0–5), COVID conspiracy theories (0–6), and COVID misinformation (0–4) that people hold by level of conspiracy thinking. Dashed lines represent 95% confidence intervals. Study 2

General Discussion

That the patterns we uncover consistently present themselves across various domains of belief, time, and sociopolitical contexts is telling of the relationship between social media usage and conspiracy beliefs. Even amid a crippling global pandemic in which many Americans were confined to their homes and limited to online contact with outsiders, social media usage alone appears incapable of promoting beliefs in conspiracy theories and misinformation. Rather, individuals must possess a belief system hospitable to conspiratorial information. The more likely one is to see conspiracies in all manner of cultural and political events, the stronger the relationship between social media use and beliefs in dubious ideas. Whether conspiracy theorists actively seek out conspiracy theories and misinformation online or people exhibiting higher levels of conspiracy thinking are simply more willing to accept the veracity of conspiratorial claims, some attraction to conspiratorial explanations appears to be a necessary ingredient of conspiracy belief.

These patterns situate nicely within a growing body of literature finding that the effects of online misinformation and conspiracy theories are likely smaller than commonly assumed and concentrated among audiences exhibiting particular characteristics (Nelson & Taneja, 2018; Guess et al., 2019; Bail et al., 2019; Guess et al., 2020; Benkler et al., 2020; Nyhan, 2020). They also complement studies finding that most “fake news” is shared (Lazer et al., 2018; Berriche & Altay, 2020) and adopted by a relatively small proportion of users (Metzger et al., 2020). However, our findings run counter to the prevailing journalistic narrative that social media widely spreads conspiracy theories and misinformation, exposing unwitting consumers to dubious ideas, which they adopt in short order. Although media narratives rarely make good scientific foils, they are consequential in this instance. Social media platforms have been experimenting with labeling conspiracy theories and misinformation, tweaking algorithms to avoid certain content, and even removing content. These actions require onerous judgements about the veracity of information––judgements that would be difficult even for the most astute philosophers of science, let alone tech developers and politicians (Uscinski & Butler, 2013).

That the connection between social media use and conspiracy beliefs is contingent on (at least) conspiracy thinking suggests additional avenues for social media companies and policymakers to pursue for reducing the pernicious effects of conspiracy theories and misinformation. For example, policymakers might do well to consider how trust in governmental and scientific institutions and processes can be bolstered––these changes focus on the toxic levels of conspiracy thinking that encourage beliefs in conspiracy theories and misinformation regardless of the medium by which one is exposed to such ideas. Targeting the predispositions that facilitate beliefs in dubious ideas can also be used to devise more effective strategies for correcting dubious beliefs (Vraga & Bode, 2017) and pre-bunking dubious ideas (Roozenbeek et al., 2020). Finally, policymakers and journalists might broaden their inquiries to include mainstream news sources––if predispositions matter, regular attention to conspiracy theories in mainstream outlets can do as much, if not more, to inflame conspiracy beliefs as social media platforms can (e.g., Papakyriakopoulos et al., 2020).

Our findings are not without limitations. Cross-sectional data does not allow us to empirically test the causal mechanism by which social media usage and conspiracy beliefs become related. As we note above, it strikes us as unlikely that either selective exposure or mere willingness to accept conspiratorial information accurately characterizes all social media users with some minimal level of conspiratorial predispositions (Mancosu & Vegetti, 2020), but knowing the extent to which one of the possibilities is present relative to the other would provide for a richer understanding of the causal process and better aid policymakers and social media platforms in devising effective strategies for mitigating the spread of conspiracy theories and misinformation. Experimental methods or longitudinal data may help with this, though external validity always threatens inference in experimental frameworks and longitudinal data can be difficult and expensive to collect. Hence, media effects scholars continue to debate the relative merits of selective exposure, selective avoidance, and incidental exposure in explaining relationships between media usage and attitudes, even after nearly a century of empirical investigation (see review in Valkenburg et al., 2016). Experimental designs might also be used to ensure that the patterns we uncover using self-reported media usage are accurate, in addition to directly exposing individuals to conspiratorial content online. We encourage future studies to engage the difficult task of simultaneously tracking actual media use and belief formation.

Acknowledgements

We thank Miles Armaly, as well as four anonymous reviewers, for their helpful suggestions.

Footnotes

While we oftentimes refer to both conspiracy theories and misinformation, we do not treat them as synonymous constructs. A conspiracy theory is “a proposed explanation of events that cites as a main causal factor a small group of persons (the conspirators) acting in secret for their own benefit, against the common good” (Uscinski et al., 2016, p. 58). Misinformation, on the other hand, is simply information that is false or misleading (Flynn et al., 2017). Misinformation often surrounds and buttresses conspiracy theories, but this is not a necessary condition.

See nationally representative polls (Quinnipiac University Polling Institute, 2021a, b).

That said, the number of conspiracy theories permeating political culture could be increasing, even if the number of believers or strength of belief is not.

For example, http://tiny.cc/byn0tz

We also replicated findings from Studies 1 and 2 in two additional studies: a representative survey of U.S. adults, and a representative survey of Floridians, both from 2020. Details of these analyses appear in the Appendix.

Although the data is representative, the sample is non-probability; the same is true for the data employed in Study 2.

See the Appendix for full item wordings.

This is especially important in the case of 4chan/8chan because very few respondents (7%) reported using the platform “everyday” or “several times a week” (for comparison, it is 72% for Facebook). Re-estimating the model for each platform separately, we find substantively identical results to those presented below, including significant interaction effects for each platform (p < 0.001 in each case).

As an additional robustness check, we re-estimated all models for which the dependent variable is a count, instead using a summated scale of beliefs in conspiracy theories or misinformation (i.e., we retained the variation in survey responses, “strongly disagree” to “strongly agree”). Substantive results are identical to those presented above; see the Appendix.

Social media as a primary source of news is not significant in the equivalent of model 2 for the QAnon thermometer, though frequency of social media use is. See the Appendix for details.

Other variables in the model are held at their mean values.

Although classifying misinformation as such––i.e., determining what is true and what is false––is an inherently tricky endeavor (Uscinski & Butler, 2013), we believe that each of the pieces of misinformation we employ is fairly straightforward. Indeed, there was never a lack of an expert medical consensus on the efficacy of ultraviolet light or household disinfectant in preventing or treating COVID-19, even at the beginning of the pandemic. Given that expert knowledge is a primary epistemological mechanism by which “truth” is deciphered, our labeling of the ideas we probe as “misinformation” is appropriate.

These results also replicate when using the tobit estimator instead of ordinary least squares. See the Appendix for a replication of Fig. 8.

Replication materials are available on the Harvard Dataverse: https://doi.org/10.7910/DVN/VSNQPI

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Adam M. Enders, Email: adam.enders@louisville.edu

Joseph E. Uscinski, Email: uscinski@miami.edu

Michelle I. Seelig, Email: mseelig@miami.edu

Casey A. Klofstad, Email: c.klofstad@miami.edu

Stefan Wuchty, Email: wuchtys@cs.miami.edu

John R. Funchion, Email: jfunchion@miami.edu

Manohar N. Murthi, Email: mmurthi@miami.edu

Kamal Premaratne, Email: kamal@miami.edu

Justin Stoler, Email: stoler@miami.edu

References

- Allcott H, Gentzkow M. Social media and fake news in the 2016 election. Journal of Economic Perspectives. 2017;31:211–236. doi: 10.1257/jep.31.2.211.

- Allington D, Duffy B, Wessely S, Dhavan N, Rubin J. Health-protective behaviour, social media usage and conspiracy belief during the COVID-19 public health emergency. Psychological Medicine. 2020. doi: 10.1017/S003329172000224X.

- Ansolabehere S, Rodden J, Snyder JM. The strength of issues: Using multiple measures to gauge preference stability, ideological constraint, and issue voting. American Political Science Review. 2008;102(2):215–232. doi: 10.1017/S0003055408080210.

- Arceneaux K, Johnson M. Changing minds or changing channels?: Partisan news in an age of choice. University of Chicago Press; 2013.

- Atari M, Afhami R, Swami V. Psychometric assessments of Persian translations of three measures of conspiracist beliefs. PLoS ONE. 2019;14:e0215202. doi: 10.1371/journal.pone.0215202.

- Bail C, Guay B, Maloney E, Combs A, Hillygus DS, Merhout F, Freelon D, Volfovsky A. Assessing the Russian Internet Research Agency’s impact on the political attitudes and behaviors of American Twitter users in late 2017. Proceedings of the National Academy of Sciences. 2019;117(1):243–250. doi: 10.1073/pnas.1906420116.

- Van Bavel JJ, Pereira A. The partisan brain: An identity-based model of political belief. Trends in Cognitive Sciences. 2018;22:213–224. doi: 10.1016/j.tics.2018.01.004.

- Bartels LM. Messages received: The political impact of media exposure. The American Political Science Review. 1993;87:267–285. doi: 10.2307/2939040.

- Benkler Y, Tilton C, Etling B, Roberts H, Clark J, Faris R, Kaiser J, Schmitt C. Mail-in voter fraud: Anatomy of a disinformation campaign. 2020. http://wilkins.law.harvard.edu/publications/Benkler-etal-Mail-in-Voter-Fraud-Anatomy-of-a-Disinformation-Campaign.pdf.

- Berriche M, Altay S. Internet users engage more with phatic posts than with health misinformation on Facebook. Palgrave Communications. 2020;6:71. doi: 10.1057/s41599-020-0452-1.

- Bessi A, Coletto M, Davidescu GA, Scala A, Caldarelli G, Quattrociocchi W. Science vs conspiracy: Collective narratives in the age of misinformation. PLoS ONE. 2015;10:e0118093. doi: 10.1371/journal.pone.0118093.

- Bridgman A, Merkley E, Loewen PJ, Owen T, Ruths D, Teichmann L, Zhilin O. The causes and consequences of COVID-19 misperceptions: Understanding the role of news and social media. The Harvard Kennedy School Misinformation Review. 2020. doi: 10.37016/mr-2020-028.

- Brotherton R, French CC, Pickering AD. Measuring belief in conspiracy theories: The generic conspiracist beliefs scale. Frontiers in Psychology. 2013;4:1–15. doi: 10.3389/fpsyg.2013.00279.

- Cassese EC, Farhart CE, Miller JM. Gender differences in COVID-19 conspiracy theory beliefs. Politics & Gender. 2020;16:1009–1018. doi: 10.1017/S1743923X20000409.

- Chen AY, Nyhan B, Reifler J, Robertson RE, Wilson C. Exposure to alternative & extremist content on YouTube. 2021. https://www.adl.org/media/15868/download.

- Coe K, Tewksbury D, Bond BJ, Drogos KL, Porter RW, Yahn A, Zhang Y. Hostile news: Partisan use and perceptions of cable news programming. Journal of Communication. 2008;58:201–219. doi: 10.1111/j.1460-2466.2008.00381.x.

- Collins B. How QAnon rode the pandemic to new heights — and fueled the viral anti-mask phenomenon. NBC News. 2020. https://www.nbcnews.com/tech/tech-news/how-qanon-rode-pandemic-new-heights-fueled-viral-anti-mask-n1236695. (8/15/2020).

- Corner A, Whitmarsh L, Xenias D. Uncertainty, scepticism and attitudes towards climate change: Biased assimilation and attitude polarisation. Climatic Change. 2012;114:463–478. doi: 10.1007/s10584-012-0424-6.

- Dredze M, Broniatowski DA, Hilyard KM. Zika vaccine misconceptions: A social media analysis. Vaccine. 2016;34:3441–3442. doi: 10.1016/j.vaccine.2016.05.008.

- Druckman J, Bolsen T. Framing, motivated reasoning, and opinions about emergent technologies. Journal of Communication. 2011;61:659–688. doi: 10.1111/j.1460-2466.2011.01562.x.

- Enders A, Uscinski J, Klofstad C, Wuchty S, Seelig M, Funchion J, et al. Who supports QAnon? A case study in political extremism. Journal of Politics. Forthcoming.

- Enders A, Uscinski J, Klofstad C, Stoler J. The different forms of COVID-19 misinformation and their consequences. Harvard Kennedy School Misinformation Review. 2020;1:1–21.

- Enders A, Smallpage SM, Lupton RN. Are all ‘birthers’ conspiracy theorists? On the relationship between conspiratorial thinking and political orientations. British Journal of Political Science. 2020;50:849–866. doi: 10.1017/S0007123417000837.

- Finkel SE. Reexamining the "minimal effects" model in recent presidential campaigns. The Journal of Politics. 1993;55:1–21. doi: 10.2307/2132225.

- Flynn DJ, Nyhan B, Reifler J. The nature and origins of misperceptions: Understanding false and unsupported beliefs about politics. Political Psychology. 2017;38:127–150. doi: 10.1111/pops.12394.

- Garrett RK. Politically motivated reinforcement seeking: Reframing the selective exposure debate. Journal of Communication. 2009;59:676–699. doi: 10.1111/j.1460-2466.2009.01452.x.

- Guess A, Nagler J, Tucker J. Less than you think: Prevalence and predictors of fake news dissemination on Facebook. Science Advances. 2019;5(1):eaau4586. doi: 10.1126/sciadv.aau4586.

- Guess A, Nyhan B, Reifler J. Exposure to untrustworthy websites in the 2016 US election. Nature Human Behaviour. 2020;4:472–480. doi: 10.1038/s41562-020-0833-x.

- Imhoff R, Bruder M. Speaking (Un-)truth to power: Conspiracy mentality as a generalised political attitude. European Journal of Personality. 2014;28:25–43. doi: 10.1002/per.1930.

- Quinnipiac University Polling Institute. Quinnipiac University Poll, Question 29 [31118230.00028]. Roper Center for Public Opinion Research, Cornell University, Ithaca, NY; 2021. https://ropercenter.cornell.edu/ipoll/study/31118230/questions#71e322a9-ef8b-4372-b37b-a70b3bc3f06a

- Iyengar S, Hahn KS. Red media, blue media: Evidence of ideological selectivity in media use. Journal of Communication. 2009;59:19–39. doi: 10.1111/j.1460-2466.2008.01402.x.

- Jamieson KH, Albarracín D. The relation between media consumption and misinformation at the outset of the SARS-CoV-2 pandemic in the US. The Harvard Kennedy School Misinformation Review. 2020;1(2):1–22. doi: 10.37016/mr-2020-012.

- Jolley D, Mari S, Douglas KM. Consequences of conspiracy theories. In: Butter M, Knight P, editors. Routledge Handbook of Conspiracy Theories. Routledge; 2020. pp. 231–241.

- Kinder DR. Opinion and action in the realm of politics. In: Gilbert DT, Fiske ST, Lindzey G, editors. The handbook of social psychology. 4th ed. McGraw-Hill; 1998. pp. 778–867.

- Klapper J. The effects of mass communications. Free Press; 1960.

- Klofstad CA, Uscinski JE, Connolly JM, West JP. What drives people to believe in Zika conspiracy theories? Palgrave Communications. 2019;5:36. doi: 10.1057/s41599-019-0243-8.

- Knobloch-Westerwick S, Johnson BK. Selective exposure for better or worse: Its mediating role for online news' impact on political participation. Journal of Computer-Mediated Communication. 2014;19:184–196. doi: 10.1111/jcc4.12036.

- Kunda Z. The case for motivated reasoning. Psychological Bulletin. 1990;108:480. doi: 10.1037/0033-2909.108.3.480.

- Lazer DMJ, Baum MA, Benkler Y, Berinsky AJ, Greenhill KM, Menczer F, Metzger MJ, Nyhan B, Pennycook G, Rothschild D, Schudson M, Sloman SA, Sunstein CR, Thorson EA, Watts DJ, Zittrain JL. The science of fake news. Science. 2018;359:1094–1096. doi: 10.1126/science.aao2998.

- Lazer D, Ruck DJ, Quintana A, Shugars S, Joseph K, Horgan L, Gitomer A, Bajak A, Baum M, Ognyanova K, Qu H, Hobbs WR, McCabe S, Green J. The state of the nation: A 50-state COVID-19 survey report #18: COVID-19 fake news on Twitter. 2020. https://news.northeastern.edu/wp-content/uploads/2020/10/COVID19_CONSORTIUM_REPORT_18_FAKE_NEWS_TWITTER_OCT_2020.pdf.

- Lewandowsky S, Gignac GE, Oberauer K. The role of conspiracist ideation and worldviews in predicting rejection of science. PLoS ONE. 2013;8:e75637. doi: 10.1371/journal.pone.0075637.

- Lewandowsky S, Oberauer K, Gignac G. NASA faked the moon landing—therefore (climate) science is a hoax: An anatomy of the motivated rejection of science. Psychological Science. 2013;24(5):622–633. doi: 10.1177/0956797612457686.

- Lodge M, Taber CS. The rationalizing voter. Cambridge University Press; 2013.

- Mancosu M, Vegetti F. “Is it the message or the messenger?”: Conspiracy endorsement and media sources. Social Science Computer Review. 2020. doi: 10.1177/0894439320965107.

- Marwick A, Lewis R. Media manipulation and disinformation online. Data & Society Research Institute; 2017.

- McGuire WJ. The myth of massive media impact: Savagings and salvagings. In: Comstock G, editor. Public communication and behavior. Academic Press; 1986. pp. 173–257.

- Metzger MJ, Flanagin AJ, Mena P, Jiang S, Wilson C. From dark to light: The many shades of sharing misinformation online. Media and Communication. 2020;9(1):134–143. doi: 10.17645/mac.v9i1.3409.

- Miller JM. Do COVID-19 conspiracy theory beliefs form a monological belief system? Canadian Journal of Political Science. 2020;53(2):319–326. doi: 10.1017/S0008423920000517.

- Miller JM. Psychological, political, and situational factors combine to boost COVID-19 conspiracy theory beliefs. Canadian Journal of Political Science. 2020;53:327–334. doi: 10.1017/S000842392000058X.

- Nelson JL, Taneja H. The small, disloyal fake news audience: The role of audience availability in fake news consumption. New Media & Society. 2018;20:3720–3737. doi: 10.1177/1461444818758715.

- Nyhan B. Facts and myths about misperceptions. Journal of Economic Perspectives. 2020;34:220–236. doi: 10.1257/jep.34.3.220.

- Papakyriakopoulos O, Serrano JCM, Hegelich S. The spread of COVID-19 conspiracy theories on social media and the effect of content moderation. The Harvard Kennedy School Misinformation Review. 2020. doi: 10.37016/mr-2020-034.

- Quinnipiac University Polling Institute. Quinnipiac University Poll, Question 27 [31118210.00026]. Roper Center for Public Opinion Research, Cornell University; 2021.

- Romer D, Jamieson KH. Conspiracy theories as barriers to controlling the spread of COVID-19 in the US. Social Science & Medicine. 2020;263:1–8. doi: 10.1016/j.socscimed.2020.113356.

- Roose K. Think QAnon is on the fringe? So was the Tea Party. The New York Times. 2020. https://www.nytimes.com/2020/08/13/technology/qanon-tea-party.html. (8/13/2020).

- Roozenbeek J, van der Linden S, Nygren T. Prebunking interventions based on “inoculation” theory can reduce susceptibility to misinformation across cultures. The Harvard Kennedy School Misinformation Review. 2020. doi: 10.37016/mr-2020-008.

- Schaffner B. QAnon and conspiracy beliefs. 2020. https://www.isdglobal.org/wp-content/uploads/2020/10/qanon-and-conspiracy-beliefs.pdf.

- Stempel C, Hargrove T, Stempel GH. Media use, social structure, and belief in 9/11 conspiracy theories. Journalism & Mass Communication Quarterly. 2007;84:353–372. doi: 10.1177/107769900708400210.

- Stroud NJ. Polarization and partisan selective exposure. Journal of Communication. 2010;60:556–576. doi: 10.1111/j.1460-2466.2010.01497.x.

- Stroud NJ, Thorson E, Young D. Making sense of information and judging its credibility. Understanding and addressing the disinformation ecosystem. First Draft; 2017. pp. 45–50.

- Swift A. Majority in U.S. still believe JFK killed in a conspiracy. Gallup.com. 2013. http://www.gallup.com/poll/165893/majority-believe-jfk-killed-conspiracy.aspx

- Uscinski J, Klofstad C, Atkinson M. Why do people believe in conspiracy theories? The role of informational cues and predispositions. Political Research Quarterly. 2016;69:57–71. doi: 10.1177/1065912915621621.

- Uscinski J, Parent JM. American conspiracy theories. Oxford University Press; 2014.

- Uscinski JE, Olivella S. The conditional effect of conspiracy thinking on attitudes toward climate change. Research & Politics. 2017;4:1–9. doi: 10.1177/2053168017743105.

- Uscinski JE, Butler RW. The epistemology of fact-checking. Critical Review. 2013;25(2):162–180. doi: 10.1080/08913811.2013.843872.

- Valkenburg PM, Peter J, Walther JB. Media effects: Theory and research. Annual Review of Psychology. 2016;67:315–338. doi: 10.1146/annurev-psych-122414-033608.

- Del Vicario M, Bessi A, Zollo F, Petroni F, Scala A, Caldarelli G, Stanley HE, Quattrociocchi W. The spreading of misinformation online. Proceedings of the National Academy of Sciences. 2016;113:554–559. doi: 10.1073/pnas.1517441113.

- Vosoughi S, Roy D, Aral S. The spread of true and false news online. Science. 2018;359:1146–1151. doi: 10.1126/science.aap9559.

- Vraga EK, Bode L. Using expert sources to correct health misinformation in social media. Science Communication. 2017;39:621–645. doi: 10.1177/1075547017731776.

- Wang Y, McKee M, Torbica A, Stuckler D. Systematic literature review on the spread of health-related misinformation on social media. Social Science & Medicine. 2019;240:112552. doi: 10.1016/j.socscimed.2019.112552.

- Zaller J. The nature and origins of mass opinion. Cambridge University Press; 1992.
